Abstract

Existing image processing and target recognition algorithms have limitations in complex, dynamically changing underwater environments, which makes it difficult to guarantee both real-time performance and precision. Multiple noise sources interfere with sonar signals and degrade both data precision and clarity. This article studies a dynamic display algorithm for sonar data based on a grayscale distribution model and computational intelligence. It constructs a grayscale distribution model for sonar images, analyzes the grayscale histogram to select the threshold for maximum entropy threshold segmentation, and uses this threshold to segment targets. The segmented images are then used to train the convolutional neural network (CNN) object recognition model constructed in this article. To verify the effectiveness of the proposed method, the trained recognition model was evaluated on a test set. It achieved a precision of 87.95%, a recall of 87.97%, and an F1 score of 0.8794, clearly higher than traditional models (e.g., Otsu- and SVM-based models, which score below 80%). The average recognition time was 37 ms per image, an improvement over the compared models.

1 Introduction

In the 21st century, rapid technological development has not only greatly promoted the progress of human society but also created unprecedented opportunities to develop and utilize marine resources. The ocean, the largest ecosystem on Earth, possesses rich biodiversity and harbors enormous energy and mineral resources. With continued global economic growth and population expansion, the gradual depletion of land resources has made the development and utilization of marine resources particularly important (Jia-lin et al., 2022; Sandoval-Castillo et al., 2022; Barendse et al., 2023). In marine resource development, sonar is an important detection technology and is widely used in marine terrain mapping, fishery resource surveys, and seabed mineral exploration (Zhang et al., 2022; Shen et al., 2024). Sonar data provide detailed information about the underwater environment through the reflection and scattering of sound waves. Dynamic display of sonar data delivers this information in real time, helping scientists and engineers make timely and accurate decisions in ocean resource development, underwater construction, and exploration. Real-time dynamic display enables early detection of underwater obstacles, but processing large volumes of sonar data in real time is computationally intensive. In addition, the complex underwater environment and multiple noise sources interfere with sonar signals, reducing data precision and clarity. Existing image processing and target recognition algorithms struggle to localize targets in complex, dynamic environments, which limits both real-time precision and sonar imaging resolution. Especially in deep-sea or long-range scenarios, target recognition precision is limited, and efficiently processing and displaying these data in real time has become an urgent problem (Ghavidel et al., 2022; Wang et al., 2023).

This article explores dynamic display algorithms for sonar data using grayscale distribution models and computational intelligence. The grayscale distribution model identifies echo signals of varying intensities by analyzing the distribution of grayscale values in sonar data. This simplifies complex data, enhances data contrast, and filters out noise, improving data quality (Mo and Pei, 2023; Zhao et al., 2022). Given the high-dimensional nature of sonar data, this model simplifies representation and extracts key features for more efficient processing. Integrating computational intelligence enables precise and efficient sonar data analysis, supporting marine resource development and utilization (Chen et al., 2022; Liu et al., 2022).

This article evaluates the sonar data dynamic display algorithm based on a grayscale distribution model and computational intelligence through a series of experiments. The results indicate that the proposed model outperforms traditional models, achieving an average precision of 87.95%, an average recall of 87.97%, an F1 score of 0.8794, and an average recognition time of 37 ms per image. These results contribute to research on sonar data processing, target recognition, and dynamic display algorithms, and offer new ideas and practical experience for related fields.

2 Related work

With the continued growth of marine resource development and underwater operations, underwater target recognition in sonar images has become an active research field. However, the complex underwater environment and the scarcity of samples make this task especially challenging. Huang Haining and colleagues (Haining et al., 2024) reviewed typical imaging sonar technologies and summarized the main problems of current target recognition: small sample sizes, class imbalance, weak target features, target diversity, and poor interpretability. Chen Peng and colleagues (Peng et al., 2020) proposed a speckle reduction method for side-scan sonar images based on adaptive 3D block-matching filtering, addressing the speckle noise introduced when side-scan sonar forms images from echo intensity. The method first applies power and logarithmic transformations to the side-scan sonar image and then uses the wavelet transform to estimate the overall noise level of the image. Meanwhile, the parameters of the adaptive 3D block-matching filter are continuously updated from local noise estimates; comparing the global and local noise estimates allows the most suitable parameters to be selected, which addresses uneven noise distribution. Experiments showed that the equivalent number of looks (ENL) increased by at least 6.83%. However, adaptive 3D block-matching filtering is itself computationally expensive, especially for high-resolution side-scan sonar images, which may slow processing and hinder real-time applications. Tueller et al. (2020) proposed a framework that predicts seabed types from the spatial distribution of features to support reliable object detection in sonar images. Their experiments demonstrated that feature-extraction-based detection methods achieve high adaptability and detection rates in sonar images, although further research is needed on adaptability, computational complexity, and false alarm control in practical applications.

The grayscale distribution model is a statistical model used in image processing and computer vision to describe the distribution of pixel grayscale values in images. It has important application value in the research of dynamic display of sonar data, and can be used to enhance image quality, improve object detection and classification, and improve image segmentation precision. Scholars such as Gu Ming (Gu and Yuan, 2023) designed a splitting detection algorithm based on grayscale distribution curves to detect the splitting condition of the ice spoon head. By calculating the average grayscale image of the ice spoon head in the vertical direction, and then performing grayscale correction, the influence of uneven lighting can be eliminated. Next, concave line segments can be extracted from the corrected grayscale image and their absolute amplitudes can be calculated. Finally, the feature values of the splitting position can be calculated based on preset criteria. The advantage of this algorithm lies in its ability to effectively handle uneven lighting and small split openings through grayscale correction and feature extraction, improving detection precision, reducing missed and false detections, and adapting to different lighting conditions and changes in split opening sizes, with strong robustness. Pan Xinyu et al. (Pan and Yan, 2023) proposed a gas leakage detection algorithm based on gas shape characteristics and grayscale distribution. Experimental results show that the algorithm can accurately detect gas leakage areas and is robust to interference factors in videos.

Computational intelligence techniques are commonly used for complex optimization, pattern recognition, data mining, decision support, and related tasks. They mainly include neural networks, fuzzy logic, evolutionary algorithms, simulated annealing, and deep learning. In research on dynamic display algorithms for sonar data, computational intelligence can help optimize the parameters of grayscale distribution models and improve the precision and efficiency of object detection and recognition. Fu et al. (2023) proposed an underwater small-target detection method that combines region extraction with an improved convolutional neural network; their experiments showed that it effectively improves the detection probability and correct alarm rate for small underwater targets, with better detection performance and generalization than other object detection methods. Zhang and Zhu (2022) proposed a CNN-based underwater target sonar detection system that optimizes the use of nonlinear activation functions. It enhances and extracts features from sonar images, simplifies training and image processing, and detects linear underwater targets conveniently, efficiently, and robustly. Computational intelligence techniques such as machine learning and deep learning can therefore process and analyze large amounts of sonar data, extract key features, and accurately identify and classify targets in the image, improving both the efficiency and precision of data processing.

3 Method

3.1 Image enhancement and optimization

Sonar systems are affected by various background noises in the marine environment, including natural underwater noise, environmental noise, reverberation, and self-noise. Among them, reverberation has a significant impact on sonar imaging (Kazimierski and Zaniewicz, 2021; Yuan et al., 2021; Jin et al., 2019). Reverberation mainly consists of reflection noise and scattering noise. Reflection noise arises when sound waves propagating underwater are reflected by obstacles such as the seabed, the sea surface, and underwater objects; scattering noise arises when sound waves are scattered by irregular objects or inhomogeneities in the medium during propagation, as shown in Figure 1. These background noises degrade the detection and positioning precision of sonar systems, so effective noise suppression and image enhancement techniques are needed to improve system performance and reliability.

FIGURE 1

Sonar imaging noise.

Current algorithms for removing noise from sonar images include mean filtering, non-local means filtering, median filtering, Gaussian filtering, the wavelet transform, and the Fourier transform (Elhoseny and Shankar, 2019; Sahu et al., 2019). To achieve better denoising, this article first applies Gaussian filtering for preliminary denoising and then uses the wavelet transform for further refinement. Gaussian filtering computes a weighted average of the image using weights drawn from the Gaussian distribution function, which smooths out small-scale noise (He et al., 2022; Gao et al., 2020). The weights of a two-dimensional Gaussian filter are given by:

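$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$

where (x, y) is the offset from the center of the filter window and σ is the standard deviation of the Gaussian function, which determines the degree of smoothing: a larger σ produces a smoother result.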
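The Gaussian smoothing step can be sketched in a few lines of NumPy. This is a minimal illustration, not the article's implementation: the kernel size and σ defaults are assumptions (the article does not state the values it used), the truncated kernel is renormalized to sum to 1, and image borders are handled by reflection padding.

```python
import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Sample G(x, y) = exp(-(x^2 + y^2) / (2 sigma^2)) / (2 pi sigma^2) on a size x size grid."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre pixel")
    r = size // 2
    x, y = np.meshgrid(np.arange(-r, r + 1), np.arange(-r, r + 1))
    g = np.exp(-(x**2 + y**2) / (2.0 * sigma**2)) / (2.0 * np.pi * sigma**2)
    # Renormalise: the truncated kernel must sum to 1 to preserve mean brightness.
    return g / g.sum()


def gaussian_filter(image: np.ndarray, size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Replace each pixel by the Gaussian-weighted average of its neighbourhood."""
    k = gaussian_kernel(size, sigma)
    r = size // 2
    img = image.astype(np.float64)
    padded = np.pad(img, r, mode="reflect")  # mirror the borders instead of zero-padding
    out = np.zeros_like(img)
    h, w = img.shape
    for dy in range(size):
        for dx in range(size):
            # The kernel is symmetric, so correlation and convolution coincide.
            out += k[dy, dx] * padded[dy : dy + h, dx : dx + w]
    return out
```

A larger `sigma` spreads the weights over more neighbours and smooths more aggressively; `size` is usually chosen to be about 6σ rounded up to an odd number so that little of the Gaussian is truncated.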

Wavelet transform has the ability of multi-resolution analysis, which can simultaneously process the local time-domain and frequency-domain characteristics of signals. It performs excellently in signal and image denoising, compression, and feature extraction (Guo et al., 2022; Rhif et al., 2019). Discrete wavelet transform uses discrete wavelet functions and scales for transformation, and decomposes the signal into coefficients of different scales and positions through multi-resolution analysis:

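$$f(x) = \sum_{k} c_{j_0,k}\,\varphi_{j_0,k}(x) + \sum_{j \ge j_0}\sum_{k} d_{j,k}\,\psi_{j,k}(x)$$

where $\varphi_{j,k}(x) = 2^{j/2}\varphi(2^{j}x - k)$ is the scale function, $\psi_{j,k}(x) = 2^{j/2}\psi(2^{j}x - k)$ is the wavelet function, and $c_{j_0,k}$ and $d_{j,k}$ are the scale (approximation) and wavelet (detail) coefficients, with $j_0$ the coarsest scale. Figure 2 shows the sonar image after Gaussian filtering and wavelet transform denoising.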

FIGURE 2

Sonar image denoising.
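A minimal sketch of the wavelet refinement stage follows. It assumes a single-level orthonormal Haar transform and soft thresholding of the detail coefficients with the universal threshold σ√(2 ln N), where σ is estimated from the median absolute diagonal coefficient. The article does not specify its wavelet basis, decomposition depth, or thresholding rule, so all of these are illustrative choices.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_dwt2(x):
    """One level of the orthonormal 2D Haar DWT (x needs even height and width)."""
    a = (x[0::2, :] + x[1::2, :]) / SQRT2  # low-pass along rows
    d = (x[0::2, :] - x[1::2, :]) / SQRT2  # high-pass along rows
    ll = (a[:, 0::2] + a[:, 1::2]) / SQRT2  # approximation coefficients
    lh = (a[:, 0::2] - a[:, 1::2]) / SQRT2  # detail coefficients (three bands)
    hl = (d[:, 0::2] + d[:, 1::2]) / SQRT2
    hh = (d[:, 0::2] - d[:, 1::2]) / SQRT2
    return ll, (lh, hl, hh)


def haar_idwt2(ll, details):
    """Exact inverse of haar_dwt2."""
    lh, hl, hh = details
    h, w = ll.shape
    a = np.empty((h, 2 * w))
    d = np.empty((h, 2 * w))
    a[:, 0::2], a[:, 1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    d[:, 0::2], d[:, 1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    x = np.empty((2 * h, 2 * w))
    x[0::2, :], x[1::2, :] = (a + d) / SQRT2, (a - d) / SQRT2
    return x


def wavelet_denoise(image):
    """Soft-threshold the detail bands with the universal threshold, then invert."""
    ll, (lh, hl, hh) = haar_dwt2(image.astype(np.float64))
    sigma = np.median(np.abs(hh)) / 0.6745          # robust noise estimate from the diagonal band
    t = sigma * np.sqrt(2.0 * np.log(image.size))   # universal threshold

    def shrink(c):
        # Soft thresholding: small (noise-dominated) coefficients go to zero.
        return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)

    return haar_idwt2(ll, (shrink(lh), shrink(hl), shrink(hh)))
```

In the pipeline described above, `wavelet_denoise` would be applied to the output of the Gaussian filter. A multi-level transform or a smoother wavelet (for example from the PyWavelets library) would normally give better results on real sonar data than a single Haar level.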

3.2 Building a grayscale distribution model

The grayscale distribution model describes the distribution of grayscale levels in sonar images, which helps identify targets (Sun et al., 2019; Wang et al., 2022). Therefore, after removing the noise from the image, a grayscale distribution model is established by statistically analyzing the grayscale value distribution of each pixel in the sonar image.

The detailed process for establishing a grayscale distribution model is as follows:

(1) Remove noise.

This article preprocesses the sonar images with the Gaussian filtering and wavelet transform denoising described in Section 3.1, which ensures the accuracy of the grayscale distribution model.

(2) Extract pixel grayscale value distribution.

Extracting grayscale values from denoised sonar images:

The grayscale value range for each pixel is [0, 255] (for an 8-bit grayscale image).

Count the gray values of all pixels in the entire image to obtain the gray histogram.

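Formula:

$$H(i) = \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} \delta\big(I(x, y), i\big), \quad i = 0, 1, \dots, 255$$

where H(i) is the number of pixels at gray level i, I(x, y) is the gray value of the pixel at (x, y) in an M × N image, and δ(a, b) equals 1 when a = b and 0 otherwise.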

(3) Establish a grayscale distribution model.

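Based on the histogram statistics, a gray-value distribution model is fitted, and the following feature values are calculated from the gray-level histogram, where $p(i) = H(i)/N$ is the fraction of pixels at gray level i and N is the total number of pixels:

①Mean: the average overall brightness, $\mu = \sum_{i} i\,p(i)$.

②Variance: the dispersion of gray values, $\sigma^{2} = \sum_{i} (i-\mu)^{2}\,p(i)$.

③Skewness: the asymmetry of the distribution, $\sum_{i} (i-\mu)^{3}\,p(i) / \sigma^{3}$.

④Kurtosis: the sharpness (peakedness) of the distribution, $\sum_{i} (i-\mu)^{4}\,p(i) / \sigma^{4}$.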
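The histogram H(i) and the four feature values above can be computed directly from an 8-bit image. The sketch below assumes NumPy and a `uint8` grayscale input; the function names are hypothetical.

```python
import numpy as np


def gray_histogram(image: np.ndarray) -> np.ndarray:
    """H(i): number of pixels at gray level i (i = 0..255) of an 8-bit image."""
    if image.dtype != np.uint8:
        raise TypeError("expected an 8-bit (uint8) grayscale image")
    return np.bincount(image.ravel(), minlength=256)


def histogram_features(hist: np.ndarray) -> dict:
    """Mean, variance, skewness and kurtosis of the gray-level distribution p(i) = H(i) / N."""
    levels = np.arange(len(hist), dtype=np.float64)
    p = hist / hist.sum()
    mean = np.sum(levels * p)
    var = np.sum((levels - mean) ** 2 * p)
    if var == 0:
        raise ValueError("constant image: skewness and kurtosis are undefined")
    std = np.sqrt(var)
    return {
        "mean": float(mean),
        "variance": float(var),
        "skewness": float(np.sum((levels - mean) ** 3 * p) / std**3),
        "kurtosis": float(np.sum((levels - mean) ** 4 * p) / std**4),
    }
```

For a sonar image dominated by a dark background with a few bright echoes, the skewness will be positive (a long tail toward high gray levels).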

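Then, the gray-level distribution model based on a mixture of Gaussian distributions is expressed as:

$$p(i) = \sum_{k=1}^{K} w_k \, \frac{1}{\sqrt{2\pi}\,\sigma_k} \exp\left(-\frac{(i-\mu_k)^{2}}{2\sigma_k^{2}}\right), \qquad \sum_{k=1}^{K} w_k = 1$$

where K is the number of Gaussian components and $w_k$, $\mu_k$, and $\sigma_k$ are the weight, mean, and standard deviation of the k-th component.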
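One common way to fit such a mixture to a histogram is expectation-maximization (EM), treating each gray level as a data point weighted by its count. The article does not say how its mixture is fitted or how many components it uses, so the EM procedure, the default of two components (for example, background and target), the quantile-based initialization, and the variance floor below are all assumptions for illustration.

```python
import numpy as np


def fit_gmm_to_histogram(hist, k=2, n_iter=300, min_var=1e-3):
    """EM fit of p(i) = sum_k w_k N(i; mu_k, sigma_k^2) to a gray-level histogram.

    Each gray level i is a data point weighted by its normalised count h(i).
    Returns (weights, means, variances) sorted by mean.
    """
    levels = np.arange(len(hist), dtype=np.float64)
    h = np.asarray(hist, dtype=np.float64)
    h = h / h.sum()
    # Initialise means at evenly spaced quantiles, variances at a share of the total.
    cdf = np.cumsum(h)
    mu = np.array([levels[np.searchsorted(cdf, (j + 0.5) / k)] for j in range(k)])
    total_mean = np.sum(levels * h)
    var = np.full(k, np.sum((levels - total_mean) ** 2 * h) / k)
    w = np.full(k, 1.0 / k)
    x = levels[:, None]  # shape (256, 1), broadcasts against the k components
    for _ in range(n_iter):
        # E-step: responsibilities, computed in log space to avoid underflow.
        log_r = np.log(w) - 0.5 * np.log(2.0 * np.pi * var) - (x - mu) ** 2 / (2.0 * var)
        log_r -= log_r.max(axis=1, keepdims=True)
        r = np.exp(log_r)
        r /= r.sum(axis=1, keepdims=True)
        # M-step: histogram-weighted updates of weights, means and variances.
        hr = h[:, None] * r
        nk = hr.sum(axis=0)
        w = nk  # sums to 1 because h sums to 1 and each row of r sums to 1
        mu = (hr * x).sum(axis=0) / nk
        var = np.maximum((hr * (x - mu) ** 2).sum(axis=0) / nk, min_var)
    order = np.argsort(mu)
    return w[order], mu[order], var[order]
```

The fitted component means indicate where the background and target gray levels are concentrated, which is useful for sanity-checking a threshold chosen by another criterion.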

To separate background and targets in sonar images, this article selects a suitable grayscale threshold based on the grayscale distribution information and compares each pixel's gray value with it to segment the targets. Threshold segmentation is a common image processing method and is divided into global and local segmentation (Pare et al., 2020; Abdel-Basset et al., 2021). Global threshold segmentation is simple, easy to use, and computationally efficient; its main variants are maximum entropy threshold segmentation, Otsu's method, and the optimal threshold method (Amiriebrahimabadi et al., 2024; Xie et al., 2019). This article processes sonar images with maximum entropy threshold segmentation, in which the optimal threshold is the one that maximizes the entropy of the segmented image. The algorithm proceeds as follows:

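Suppose the sonar image consists of a target region O, whose pixels have gray values less than or equal to a threshold x, and a background region P, whose pixels have gray values greater than x, with x ranging over 0–255. The background entropy is:

$$H_P(x) = -\sum_{i=x+1}^{255} \frac{p_i}{1 - P_x} \log_2 \frac{p_i}{1 - P_x}$$

The target entropy is:

$$H_O(x) = -\sum_{i=0}^{x} \frac{p_i}{P_x} \log_2 \frac{p_i}{P_x}$$

Here $p_i = H(i)/N$, i = 0, 1, …, 255, is the probability that a pixel has gray value i (N being the total number of pixels), $P_x = \sum_{i=0}^{x} p_i$ is the cumulative probability of the target region, terms with $p_i = 0$ are taken as 0, and $H_O$ and $H_P$ are measured in bits per pixel.

The entropy function is the sum of $H_O$ and $H_P$, and the optimal threshold is the one that maximizes it:

$$x^{*} = \arg\max_{0 \le x \le 255} \big[H_O(x) + H_P(x)\big]$$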
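The threshold search can be sketched as an exhaustive scan over the candidate thresholds, which is Kapur's maximum entropy criterion. This sketch assumes a 256-bin histogram as input and follows the convention in the text that the target class holds gray levels at or below the threshold; thresholds that leave one class empty are skipped because that class's entropy is undefined.

```python
import numpy as np


def max_entropy_threshold(hist):
    """Return (x*, H_O(x*) + H_P(x*)) for a 256-bin gray-level histogram.

    H_O: entropy of the target class (gray levels i <= x), in bits.
    H_P: entropy of the background class (gray levels i > x), in bits.
    """
    h = np.asarray(hist, dtype=np.float64)
    p = h / h.sum()                               # p_i
    best_x, best_h = None, -np.inf
    for x in range(len(p) - 1):
        p_o, p_b = p[: x + 1], p[x + 1 :]
        mass_o, mass_b = p_o.sum(), p_b.sum()     # P_x and 1 - P_x
        if mass_o <= 0.0 or mass_b <= 0.0:
            continue                              # an empty class has no defined entropy
        q_o = p_o[p_o > 0] / mass_o               # zero-probability terms contribute 0
        q_b = p_b[p_b > 0] / mass_b
        total = -np.sum(q_o * np.log2(q_o)) - np.sum(q_b * np.log2(q_b))
        if total > best_h:
            best_x, best_h = x, total
    return best_x, best_h
```

Given a denoised `uint8` image `img` and its histogram, the target mask would then be `img <= x_star`. The scan costs 256 entropy evaluations per image, which is cheap compared with CNN inference.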

Figure 3 shows the grayscale histogram of sonar data collected from the Internet.

FIGURE 3

Grayscale histogram.

The histogram shows the number of pixels at each gray level, and analyzing it distinguishes the grayscale distribution characteristics of the target and the background (Li et al., 2019; Guo et al., 2023). Histogram statistics support building grayscale distribution models, identifying the grayscale features of targets and backgrounds, and tasks such as noise suppression, image enhancement, and object detection. Maximum entropy threshold segmentation can then be performed based on the grayscale histogram; the results for several thresholds are shown in Figure 4.

FIGURE 4

Implementation diagram of maximum entropy threshold segmentation under different thresholds.

With a threshold of 50, the image retains too much background noise, and many noise points disturb the target area, so the target and background are poorly separated. With a threshold of 100, the target is well preserved and is effectively separated from the background. With a threshold of 120, background noise is reduced but not eliminated, and target details begin to be lost, especially in darker target areas, which become difficult to distinguish. With a threshold of 150, background noise is reduced further, but much of the information in the target area is also lost and target details can no longer be displayed clearly. A threshold of 100 therefore gives the best balance between target retention and background noise suppression: it suppresses background noise effectively while preserving target information, making the target most prominent and producing the best display.

3.3 Building a target recognition model

Convolutional neural networks are a type of deep learning model that excels in processing image data. By simulating the working principle of the human visual system, image features can be extracted layer by layer to achieve tasks such as image classification, object detection, and semantic segmentation (Li et al., 2021; Ketkar and Moolayil, 2021). Fully connected, pooling, and convolutional layers make up its fundamental structure. Among these, the pooling layer reduces and compresses the dimensionality of data, the convolutional layer uses convolutional operations to extract local information from the image, and the fully connected layer is utilized for tasks involving classification or regression. At present, CNN has achieved significant results in image classification, object detection, semantic segmentation, and other fields, greatly surpassing traditional methods in image processing and computer vision tasks (Krichen, 2023; Zhang et al., 2019; Huang et al., 2024).

In recent years, convolutional neural networks (CNN), as a crucial tool in deep learning, have played a significant role in the processing and application of sonar imaging. Due to its pivotal role in underwater detection, target recognition, and environmental modeling, sonar imaging, when combined with CNN algorithms, can notably enhance the accuracy of target recognition, segmentation capabilities, and the ability to adapt to complex environments. However, this technology also faces certain limitations, including high computational costs, strong data dependency, and a lack of interpretability regarding physical mechanisms (Krithika and Jayanthi, 2024; Banu et al., 2024; Ayaz et al., 2024; Lim et al., 2024).

This article chooses CNN as the basis for constructing a target recognition model, because CNN can automatically extract effective features from the original sonar images and perform accurate target classification, greatly improving the precision and efficiency of target detection. It has strong robustness to changes in various sonar images and can demonstrate good generalization ability on different sonar image datasets, suitable for different underwater detection environments.

Convolutional Neural Network (CNN) is a type of neural network widely used in deep learning for image processing and object recognition. By simulating the biological visual system, CNN can effectively extract features from images and complete object recognition tasks. The architecture of a standard CNN for object recognition tasks typically includes several main components: convolutional layers, pooling layers, fully connected layers, etc.

In this article, the VGG-16 CNN model is used to extract and analyze multi-level features of sonar images. VGG-16 is a typical CNN with 16 weight layers (13 convolutional layers and 3 fully connected layers) followed by a softmax output layer. Its network structure is relatively simple but deep, it uses a uniform convolution kernel size (3 × 3), and the number of feature-map channels increases with depth, which gives it good generalization ability and high recognition accuracy. Building on the basic theory of CNNs, the network is improved in its first two layers with dropout and L2 regularization to effectively suppress overfitting. For the first layer of VGG-16, the convolution operation can be expressed as:

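$$y^{(1)}_{i,j} = f\left(\sum_{m}\sum_{n} w_{m,n}\, x_{i+m,\, j+n} + b\right) \qquad (1)$$

In Formula 1, i and j index the pixel position in the image, $y^{(1)}_{i,j}$ is the output of the first layer, $w_{m,n}$ and b are the convolution kernel weights and bias, and f(·) is the activation applied after the convolution (ReLU in VGG-16). The max-pooling operation of the pooling layer can be expressed as: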

$$y_{i,j} = \max_{(m,n) \in R_{i,j}} x_{m,n} \qquad (2)$$

In Formula 2, $R_{i,j}$ is the pooling window (2 × 2 in VGG-16) associated with output position (i, j). After the convolution and pooling operations of the first few layers, a feature map of a certain size is obtained. This feature map is flattened into a high-dimensional vector that serves as input to the fully connected layers:

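$$\mathbf{v} = \operatorname{flatten}(F) = [v_1, v_2, \dots, v_n]^{\top} \qquad (3)$$

In Formula 3, F is the final feature map and n is its total number of elements. VGG-16 uses ReLU activations in its hidden layers and a softmax function at its output, and it is trained with the cross-entropy loss function. The softmax function is: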

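$$\hat{y}_k = \sigma(\mathbf{z})_k = \frac{e^{z_k}}{\sum_{j=1}^{C} e^{z_j}}, \quad k = 1, \dots, C \qquad (4)$$

In Formula 4, σ(·) denotes the softmax activation function, $z_k$ is the k-th output of the last fully connected layer, and C is the number of target classes. The cross-entropy loss function is expressed as: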

$$L = -\sum_{k=1}^{C} y_k \log \hat{y}_k \qquad (5)$$

Formula 5 is the loss function of VGG-16, where C is the number of classes, $\hat{y}_k$ is the predicted probability of class k, and $y_k$ is 1 for the true class and 0 otherwise. To extract sonar image features, the pre-trained VGG-16 model is first loaded and used as a feature extractor. Each sonar image is converted into a suitable format and preprocessed, and VGG-16 then extracts its features. The first few convolutional layers extract lower-level features such as edges and textures, while deeper layers extract higher-level features such as object shapes and contours. Each extracted feature vector is standardized and normalized so that all vectors share the same scale and distribution. Finally, dimensionality reduction is applied to each feature vector, and additional features (grayscale histograms, texture features, and shape features) are extracted from each sonar image.
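Formulas 1 to 5 can be illustrated with a tiny single-channel forward pass in NumPy. This is a toy sketch of the individual operations, not VGG-16 itself: it uses one "valid" convolution (implemented as cross-correlation, as deep learning libraries do) followed by ReLU, 2 × 2 max pooling, flattening, one fully connected layer, softmax, and cross-entropy. All sizes, weights, and function names are hypothetical.

```python
import numpy as np


def conv2d_relu(x, kernel, bias=0.0):
    """Formula 1: 'valid' single-channel convolution followed by ReLU.

    Implemented as cross-correlation, which is what CNN libraries compute.
    """
    kh, kw = kernel.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i : i + kh, j : j + kw] * kernel) + bias
    return np.maximum(out, 0.0)


def max_pool2x2(x):
    """Formula 2: maximum over non-overlapping 2 x 2 windows (odd edges dropped)."""
    h, w = x.shape[0] // 2, x.shape[1] // 2
    return x[: 2 * h, : 2 * w].reshape(h, 2, w, 2).max(axis=(1, 3))


def softmax(z):
    """Formula 4; subtracting max(z) avoids overflow without changing the result."""
    e = np.exp(z - np.max(z))
    return e / e.sum()


def cross_entropy(probs, label):
    """Formula 5 with a one-hot target: only the true-class term survives."""
    return -np.log(probs[label])


def tiny_forward(x, kernel, weights, bias, label):
    fmap = max_pool2x2(conv2d_relu(x, kernel))   # Formulas 1 and 2
    v = fmap.ravel()                             # Formula 3: flatten to an n-vector
    probs = softmax(weights @ v + bias)          # fully connected layer + Formula 4
    return probs, cross_entropy(probs, label)    # Formula 5
```

With all-zero fully connected weights the softmax output is uniform, so the loss equals log C; training moves the weights so that the true class receives a higher probability and the loss falls toward zero.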

The focus of this article’s system design is on a target recognition model based on convolutional neural networks. The training process of the CNN model is roughly as follows: first, initialize the network weights and biases, set hyperparameters such as learning rate, batch size, and number of training rounds, and divide the sonar image dataset into training and testing sets.

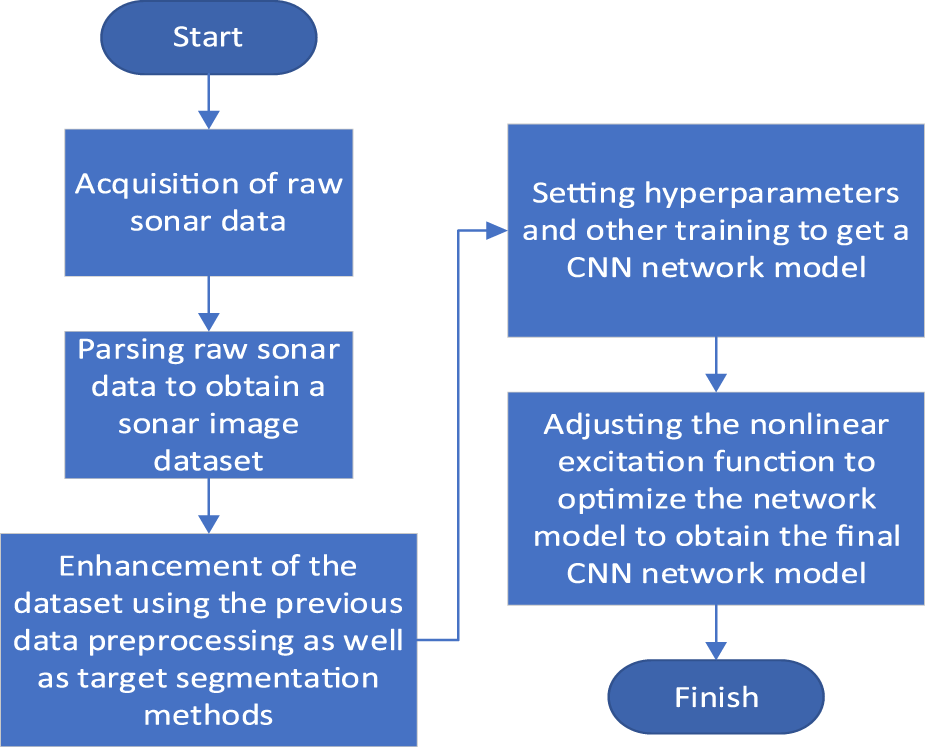

The images in the dataset are preprocessed as described above to obtain denoised images, from which grayscale histograms are calculated. The histograms are analyzed to find the most suitable threshold for maximum entropy threshold segmentation, and the data are then segmented with this threshold to obtain target-enhanced images. These enhanced images are used to train the convolutional neural network, improving the model's generalization ability and robustness and thus the precision and efficiency of object detection and recognition. In the training phase, unsupervised learning can be used to initialize the network parameters and generate initial feature representations from the training set. The weights and biases of the network are then adjusted layer by layer with the backpropagation algorithm. The loss function is usually the cross-entropy loss or the mean squared error, combined with an optimization algorithm that updates the parameters. At the end of each training round, the loss and precision on the test set are calculated to monitor training and avoid overfitting. Training stops when the training error converges to a preset value or the maximum number of training rounds is reached, yielding the trained network model. To further optimize the model, the nonlinear Sigmoid activation function is introduced once the training error has converged, enhancing the network's nonlinear expressive ability. The flowchart for building the model is shown in Figure 5.
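The stopping rule described above (stop when the training error reaches a preset value or the maximum number of rounds is reached) can be sketched with a stand-in model. To stay short and runnable, this sketch trains a softmax (multinomial logistic) classifier by full-batch gradient descent with L2 weight decay rather than a CNN; the learning rate, target loss, and epoch limit are illustrative assumptions.

```python
import numpy as np


def train_softmax_classifier(X, y, n_classes, lr=0.1, target_loss=0.05,
                             max_epochs=500, l2=1e-4, seed=0):
    """Gradient descent on cross-entropy; stops at target_loss or max_epochs."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 0.01, size=(X.shape[1], n_classes))  # small random init
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                                   # one-hot labels
    history = []
    for epoch in range(1, max_epochs + 1):
        logits = X @ W + b
        logits -= logits.max(axis=1, keepdims=True)            # numerical stability
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)
        loss = -np.mean(np.sum(Y * np.log(P + 1e-12), axis=1)) + 0.5 * l2 * np.sum(W**2)
        history.append(loss)
        if loss <= target_loss:          # training error has reached the preset value
            break
        G = (P - Y) / len(X)             # gradient of the mean cross-entropy w.r.t. logits
        W -= lr * (X.T @ G + l2 * W)     # backpropagated update with L2 weight decay
        b -= lr * G.sum(axis=0)
    return W, b, history, epoch
```

The returned `history` records one loss value per round, which is what one would plot to check convergence; in practice a held-out validation split, rather than the test set, is normally used to decide when to stop.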

FIGURE 5

Construction of CNN target recognition model flowchart.

Preprocessing, enhancing, and segmenting the sonar image data in this way is important for a CNN-based sonar image target recognition model. It makes it easier for the model to learn useful features, helps prevent overfitting, and improves generalization. This improves the robustness of the model during training as well as its precision and efficiency in practical applications, making target recognition more reliable and effective.

4 Experiment

4.1 Experimental plan design

To verify the effectiveness of the target recognition model built on the grayscale distribution model and computational intelligence for the sonar data dynamic display algorithm, simulation experiments were conducted on sonar image data. The experiments consist of the following parts:

(1) Data preparation: A set of 2,874 sonar images of various target types (pipelines, shipwrecks, airplanes, marine organisms, etc.) was collected from the Internet. After preprocessing (normalization, denoising, and labeling), 70% of the data were assigned to the training set and the remaining 30% to the test set, and the training set was expanded by flipping, mirroring, scaling, and rotation. The resulting training set sizes are shown in Table 1, and sample images are shown in Figure 6.

(2) Data augmentation: the grayscale distribution model proposed above is used to process the dataset and complete target segmentation, improving the generalization ability and robustness of the target recognition model.

(3) Model training: the enhanced training set is used to train the model.

(4) Model evaluation: the trained model is tested on the test set, and recognition precision and other indicators are calculated to evaluate it.
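The 70/30 split and the flip, mirror, rotation, and scaling augmentations of step (1) can be sketched as follows. This assumes images are NumPy arrays; the random seed, the use of 90° rotations, the scale factors, and nearest-neighbour scaling are assumptions, since the article does not give its augmentation parameters. Only the training images should be augmented, so that the test set stays untouched.

```python
import numpy as np


def split_train_test(n_samples, train_frac=0.7, seed=0):
    """Shuffle sample indices and split them into training and test subsets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(round(train_frac * n_samples))
    return idx[:n_train], idx[n_train:]


def scale_nearest(image, factor):
    """Nearest-neighbour rescaling by `factor` (a simple stand-in for image resizing)."""
    h, w = image.shape[:2]
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    rows = np.minimum((np.arange(new_h) / factor).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / factor).astype(int), w - 1)
    return image[rows][:, cols]


def augment(image, scales=(0.5, 2.0)):
    """Flipped, mirrored, rotated and rescaled copies of one training image."""
    copies = [
        image,
        np.flipud(image),       # vertical flip
        np.fliplr(image),       # horizontal mirror
        np.rot90(image, k=1),   # 90-degree rotations
        np.rot90(image, k=2),
        np.rot90(image, k=3),
    ]
    copies += [scale_nearest(image, s) for s in scales]
    return copies
```

With 2,874 samples, `split_train_test` yields 2,012 training and 862 test indices; `augment` is then applied only to images indexed by the training subset.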

TABLE 1

| Category | Sonar image data type | Total samples | Invalid samples | Actual sample size |
|---|---|---|---|---|
| Seabed topography | Ups and downs | 342 | 8 | 334 |
| Seabed topography | Sandbanks and reefs | 336 | 4 | 332 |
| Seabed topography | Canyons and rift valleys | 232 | 6 | 226 |
| Seabed topography | Mountains and plateaus | 272 | 4 | 268 |
| Specific targets | Shipwreck | 201 | 2 | 199 |
| Specific targets | Airplane | 227 | 9 | 218 |
| Specific targets | Subsea pipelines and cables | 203 | 3 | 200 |
| Specific targets | Halobios (marine organisms) | 305 | 12 | 293 |
| Total | | 2,118 | 48 | 2,070 |

Data size of model training set.

FIGURE 6

Partial data of the dataset.

4.2 Experimental environment

The experiments were run on the Ubuntu 20.04 operating system; the main hardware and software environments are shown in Table 2.

TABLE 2

| Hardware configuration item | Model | Software configuration item | Version |
|---|---|---|---|
| CPU | i9-12900K | Operating system | Ubuntu 20.04 LTS |
| GPU | RTX 4080 | Framework | TensorFlow 2.2.0 |
| Memory | 32 GB DDR4 | Deep learning library | Keras 2.3.1 |
| | | Image processing library | OpenCV 4.10.0 |

Experimental environment.

4.3 Experimental results and analysis

The test set data are input into the trained CNN model, and its performance is evaluated with recognition precision, recall, F1 score, and other indicators. Precision is the proportion of samples predicted as positive that are actually positive, $P = TP/(TP + FP)$. Recall is the proportion of actually positive samples that the model correctly predicts as positive, $R = TP/(TP + FN)$, where TP, FP, and FN are the numbers of true positives, false positives, and false negatives. The F1 score is the harmonic mean of precision and recall:

$$F1 = \frac{2 \times P \times R}{P + R}$$
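Per-class precision, recall, and F1 can be computed from a confusion matrix such as the one in Figure 7. The sketch below assumes rows are true classes and columns are predicted classes; a class that is never predicted would make its precision undefined (division by zero), which a production version would need to handle.

```python
import numpy as np


def per_class_metrics(cm):
    """Precision, recall and F1 for each class from a confusion matrix.

    cm[t, p] counts samples whose true class is t and predicted class is p.
    """
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)  # column sums = TP + FP
    recall = tp / cm.sum(axis=1)     # row sums = TP + FN
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def macro_average(values):
    """Unweighted mean over classes (every class counts equally)."""
    return float(np.mean(values))
```

For a two-class matrix `[[8, 2], [1, 9]]`, class 0 has precision 8/9 and recall 0.8, giving F1 = 16/19; macro averaging then weights both classes equally regardless of their sample counts.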

The detection results of the model for various types of targets are shown in Table 3.

TABLE 3

| Category | Sonar image data type | Precision (%) | Recall (%) | F1 score |
|---|---|---|---|---|
| Seabed topography | Ups and downs | 86.17 | 84.51 | 0.8533 |
| Seabed topography | Sandbanks and reefs | 84.31 | 82.52 | 0.8341 |
| Seabed topography | Canyons and rift valleys | 87.22 | 83.22 | 0.8517 |
| Seabed topography | Mountains and plateaus | 93.14 | 96.27 | 0.9468 |
| Specific targets | Shipwreck | 89.36 | 91.20 | 0.9027 |
| Specific targets | Airplane | 87.14 | 87.46 | 0.8730 |
| Specific targets | Subsea pipelines and cables | 85.71 | 84.75 | 0.8523 |
| Specific targets | Halobios (marine organisms) | 90.55 | 93.82 | 0.9216 |
| Average | | 87.95 | 87.97 | 0.8794 |

Experimental results of various types of targets.

As Table 3 shows, the CNN model achieves a precision above 84% for every target category, with an average precision of 87.95%, indicating that its ability to recognize different categories is relatively stable. Its recall exceeds 80% for all target types, with an average of 87.97%, which means that the model covers true positives well and correctly identifies most targets. The F1 scores of seven of the eight categories lie between 0.85 and 0.95 (the exception is sandbanks and reefs, at 0.8341), with an average of 0.8794, indicating a good balance between precision and recall across target types.
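A quick arithmetic check shows how the "Average" row of Table 3 is obtained: the averages are unweighted means over the eight classes (macro averages). The values below are copied from Table 3.

```python
import numpy as np

# Per-class values copied from Table 3: precision (%), recall (%), F1.
precision = np.array([86.17, 84.31, 87.22, 93.14, 89.36, 87.14, 85.71, 90.55])
recall = np.array([84.51, 82.52, 83.22, 96.27, 91.20, 87.46, 84.75, 93.82])
f1 = np.array([0.8533, 0.8341, 0.8517, 0.9468, 0.9027, 0.8730, 0.8523, 0.9216])

# Macro averages: every class counts equally, regardless of its sample size.
macro_p, macro_r, macro_f1 = precision.mean(), recall.mean(), f1.mean()

# For comparison: the harmonic mean of the two macro averages (converted to 0-1).
f1_of_macros = 2 * macro_p * macro_r / (macro_p + macro_r) / 100

# Per-class F1 recomputed from the reported precision and recall.
f1_recomputed = 2 * precision * recall / (precision + recall) / 100
```

This gives a macro precision of 87.95%, a macro recall of about 87.97%, and a mean per-class F1 of about 0.8794, matching the table. The harmonic mean of the two macro averages would instead be about 0.8796, so the reported 0.8794 is the mean of the per-class F1 scores; each per-class F1 also agrees with 2PR/(P + R) to within rounding.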

The confusion matrix generated by the model for the detection results of various types of targets is shown in Figure 7.

FIGURE 7

Model test confusion matrix.

Labels 1–8 denote the target types: undulations, sandbanks and reefs, canyons and rift valleys, mountains and plateaus, shipwrecks, airplanes, subsea pipelines and cables, and marine organisms. Diagonal elements give the number of samples the model predicted correctly, and off-diagonal elements give the number of misclassified samples. Figure 7 shows that the model tends to confuse the first and second target types with each other. Overall, however, recognition precision is relatively high and the number of errors for each target type is relatively small.

In order to verify the improvement of the CNN network model’s target recognition performance by using a grayscale distribution model for target segmentation of sonar image data, this article compares the recognition precision, recall, F1 score, and average recognition time required for each image between the traditional CNN network model and the proposed model. The results are shown in Table 4.

TABLE 4

| Model | Precision (%) | Recall (%) | F1 score | Average recognition time per image (ms) |
|---|---|---|---|---|
| Proposed model | 87.95 | 87.97 | 0.8794 | 37 |
| CNN-Softpuls | 63.47 | 65.28 | 0.5476 | 69 |
| Visual Geometry Group Network | 78.24 | 74.39 | 0.7391 | 46 |

Comparison of this article’s model with other models.
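The time column of Table 4 can be converted into throughput and relative savings with simple arithmetic. The Python sketch below assumes, as the text states, that the column gives the mean recognition time per image. It also assumes that images are processed one at a time, so throughput is simply 1000 divided by the per-image time in milliseconds.

```python
# Average per-image recognition times from Table 4, in milliseconds.
OURS = "This article's model"
TIMES_MS = {
    OURS: 37.0,
    "CNN-Softpuls": 69.0,
    "Visual Geometry Group Network": 46.0,
}

def throughput(ms_per_image: float) -> float:
    """Images per second at a given mean per-image time (sequential processing)."""
    return 1000.0 / ms_per_image

def relative_reduction(ours_ms: float, baseline_ms: float) -> float:
    """Fraction of the baseline's per-image time saved by the proposed model."""
    return (baseline_ms - ours_ms) / baseline_ms

for name, ms in TIMES_MS.items():
    line = f"{name}: {ms:.0f} ms/image, {throughput(ms):.1f} images/s"
    if name != OURS:
        saved = relative_reduction(TIMES_MS[OURS], ms)
        line += f", proposed model saves {saved:.1%}"
    print(line)
```

Under these assumptions, the proposed model processes about 27 images per second. It needs roughly 20% less time per image than the VGG network and about 46% less than CNN-Softpuls.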

The proposed model outperforms the comparison models in precision, recall, F1 score, and recognition time. It uses the grayscale distribution model to segment targets in the sonar images and feeds the segmented data into the CNN, which strengthens feature extraction and improves precision. Segmentation removes much of the noise and redundant background information, so the CNN processes less irrelevant content and runs more efficiently. The average recognition time per image is 37 ms, compared with 46 ms for the VGG network and 69 ms for CNN-Softpuls.
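The segmentation step chooses its threshold by maximum entropy on the grayscale histogram. The sketch below shows one common form of that criterion, Kapur's maximum-entropy threshold, in Python with NumPy. It assumes an 8-bit single-channel image and a simple `image > t` foreground rule. The function names are hypothetical, and the sketch is not the authors' implementation. In particular, it omits whatever denoising or distribution modelling the full grayscale distribution model performs before thresholding.

```python
import numpy as np

def max_entropy_threshold(image: np.ndarray, levels: int = 256) -> int:
    """Kapur-style maximum-entropy threshold for an integer grayscale image.

    Returns the gray level t that maximises H_background(t) + H_foreground(t),
    where each entropy is computed on the histogram renormalised within its
    class. Returns 0 if the image has a single gray level (no valid split).
    """
    if image.min() < 0 or image.max() >= levels:
        raise ValueError("pixel values must lie in [0, levels)")
    hist = np.bincount(image.ravel().astype(np.int64), minlength=levels)
    p = hist / hist.sum()                      # normalised grayscale histogram

    best_t, best_h = 0, -np.inf
    for t in range(levels - 1):
        p_b, p_f = p[: t + 1], p[t + 1 :]      # background / foreground bins
        w_b, w_f = p_b.sum(), p_f.sum()
        if w_b == 0.0 or w_f == 0.0:           # one class empty: not a split
            continue
        q_b = p_b[p_b > 0] / w_b               # drop empty bins (0 * log 0 = 0)
        q_f = p_f[p_f > 0] / w_f
        h = -np.sum(q_b * np.log(q_b)) - np.sum(q_f * np.log(q_f))
        if h > best_h:
            best_t, best_h = t, h
    return best_t

def segment(image: np.ndarray) -> np.ndarray:
    """Binary target mask: True where a pixel exceeds the max-entropy threshold."""
    return image > max_entropy_threshold(image)

# Synthetic 8-bit example (not sonar data): dark seabed with a bright block.
demo = np.full((64, 64), 40, dtype=np.uint8)
demo[20:40, 20:40] = 180
mask = segment(demo)
print(max_entropy_threshold(demo), int(mask.sum()))
```

The double loop over gray levels is O(L²) in the number of levels, which is negligible for 256 levels. A production version would use cumulative sums instead.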

5 Conclusion

This article explores a sonar data display algorithm based on a grayscale distribution model and computational intelligence, and it proposes a CNN model for object detection. The grayscale distribution model improves target segmentation, which in turn improves CNN training and recognition accuracy. Experiments show that the proposed model surpasses the comparison models in precision, recall, F1 score, and recognition speed. Traditional object recognition models mainly include support vector machine (SVM), random forest (RF), and K-nearest neighbor (KNN) classifiers. The accuracy of traditional methods (such as Otsu and SVM) is below 80%, and their recall or F1 score is relatively low (below 0.7). The recognition time of other traditional algorithms is 25–30 ms. The proposed model reached an average precision of 87.95%, an average recall of 87.97%, and an average F1 score of 0.8794, which is significantly higher than the other models. Its average recognition time was 37 ms per image, faster than the CNN models compared in Table 4. These results suggest the model has potential for application in practical sonar systems. It offers a more accurate technical means of improving the target recognition ability of sonar systems and of making sonar data processing more intelligent. This matters for sonar data dynamic display algorithms with high real-time requirements, which must display the position and status of underwater targets promptly and accurately.

However, the set of target categories used in this article is limited and cannot cover all types present in sonar data. Because of the constraints of the experimental environment and the complexity of sonar images, the training set is relatively small. As a result, the model's generalization ability may be insufficient, and it may be at risk of overfitting. Future work should therefore expand the range of recognizable target types in sonar images and collect larger and richer sonar image datasets covering more target types and scenes. Diversifying the training data in this way would help address these problems.

The dynamic display algorithm for sonar data, based on grayscale distribution models and computational intelligence, holds tremendous potential for future development. By incorporating more advanced models, more powerful computational intelligence algorithms, and smarter display strategies, the visualization effect and information extraction efficiency of sonar data can be significantly improved, bringing profound impacts to fields such as underwater exploration, resource management, and environmental protection.

Statements

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/supplementary material.

Ethics statement

Ethical approval was not required for the studies involving humans because this trial is based on the sonar device and the dynamic display of the data, and does not involve the study of animal and human tissues. The studies were conducted in accordance with the local legislation and institutional requirements. The human samples used in this study were acquired from primarily isolated as part of your previous study for which ethical approval was obtained. Written informed consent to participate in this study was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and the institutional requirements. The manuscript presents research on animals that do not require ethical approval for their study.

Author contributions

HL: Writing–original draft, Writing–review and editing. DL: Writing–original draft, Writing–review and editing. HJ: Writing–original draft, Writing–review and editing.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This work was supported by the Research on the application of BIM in the whole life cycle of Urban Rail Transit [Foundation of Guizhou science and technology cooperation (2019) No. 1420], by the special projects for promoting the development of big data of Guizhou Institute of Technology, and by Geological Resources and Geological Engineering, Guizhou Provincial Key Disciplines, China [ZDXK(2018)001].

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1

Abdel-Basset M. Chang V. Mohamed R. (2021). A novel equilibrium optimization algorithm for multi-thresholding image segmentation problems. Neural Comput. Appl. 33, 10685–10718. 10.1007/s00521-020-04820-y

2

Amiriebrahimabadi M. Rouhi Z. Mansouri N. (2024). A comprehensive survey of multi-level thresholding segmentation methods for image processing. Arch. Comput. Methods Eng. 31 (6). 10.1007/s11831-024-10093-8

3

Ayaz F. Alhumaily B. Ahmed Z. (2024). Radar signal processing and its impact on deep learning-driven human activity recognition. Preprints.org (not peer-reviewed).

4

Banu A. K. S. S. Shahul Hameed K. A. Vasuki P. (2024). A hybrid deep-learning-based automatic target detection and recognition of military vehicles in synthetic aperture radar images. Int. J. Industrial Eng. 31 (6). 10.23055/ijietap.2024.31.6.9991

5

Barendse J. Failler P. Okafor-Yarwood I. Mann-Lang J. (2023). Editorial: African ocean stewardship: navigating ocean conservation and sustainable marine and coastal resource management in Africa. Front. Mar. Sci. 10, 1244652. 10.3389/fmars.2023.1244652

6

Chen G. H. Ni J. Chen Z. Huang H. Sun Y. L. Ip W. H. et al. (2022). Detection of highway pavement damage based on a CNN using grayscale and HOG features. Sensors 22 (7), 2455. 10.3390/s22072455

7

Elhoseny M. Shankar K. (2019). Optimal bilateral filter and convolutional neural network based denoising method of medical image measurements. Measurement 143, 125–135. 10.1016/j.measurement.2019.04.072

8

Fu S. Feng Xu Jia L. (2023). Combined with area extraction and improvement of convolutional neural network underwater small target detection. Appl. Acoust. 42 (06), 1280–1288.

9

Gao Z. Gu C. Yang J. Gao S. Zhong Y. (2020). Random weighting-based nonlinear Gaussian filtering. IEEE Access 8, 19590–19605. 10.1109/access.2020.2968363

10

Ghavidel M. Azhdari S. M. H. Khishe M. Kazemirad M. (2022). Sonar data classification by using few-shot learning and concept extraction. Appl. Acoust. 195, 108856. 10.1016/j.apacoust.2022.108856

11

Gu M. Yuan W. (2023). Grayscale distribution curve and ice spoon head splitting detection. Microprocessor 44 (06), 19–22.

12

Guo D. Hou Z. Guo K. Peng Z. Zhang F. (2023). Quantitative model of hereditary behavior for carbon segregation in continuous casting billets based on grayscale analysis. Steel Res. Int. 94 (8), 2200782. 10.1002/srin.202200782

13

Guo T. Zhang T. Lim E. Lopez-Benitez M. Ma F. Yu L. (2022). A review of wavelet analysis and its applications: challenges and opportunities. IEEE Access 10, 58869–58903. 10.1109/access.2022.3179517

14

Haining H. Baoqi Li Jiyuan L. et al. (2024). Review and prospect of underwater target recognition of sonar images. J. Electron. Inf. 46, 1–19.

15

He M. Q. Zhang D. B. Wang Z. D. (2022). Quantum Gaussian filter for exploring ground-state properties. Phys. Rev. A 106 (3), 032420. 10.1103/physreva.106.032420

16

Huang F. Xiong H. Jiang S.-H. Yao C. Fan X. Catani F. et al. (2024). Modelling landslide susceptibility prediction: a review and construction of semi-supervised imbalanced theory. Earth-Science Rev. 250, 104700. 10.1016/j.earscirev.2024.104700

17

Jia-lin L. I. Man-hong S. Ren-feng M. A. Yang H. s. Chen Y. n. Sun C. z. et al. (2022). Marine resource economy and strategy under the background of marine ecological civilization construction. J. Nat. Resour. 37 (4), 829–849. 10.31497/zrzyxb.20220401

18

Jin Y. Ku B. Ahn J. Kim S. Ko H. (2019). Nonhomogeneous noise removal from side-scan sonar images using structural sparsity. IEEE Geoscience Remote Sens. Lett. 16 (8), 1215–1219. 10.1109/lgrs.2019.2895843

19

Kazimierski W. Zaniewicz G. (2021). Determination of process noise for underwater target tracking with forward looking sonar. Remote Sens. 13 (5), 1014. 10.3390/rs13051014

20

Ketkar N. Moolayil J. (2021). “Convolutional neural networks,” in Deep learning with Python: learn best practices of deep learning models with PyTorch, (Berkeley, CA: Apress), 197–242. 10.1007/978-1-4842-5364-9_6

21

Krichen M. (2023). Convolutional neural networks: a survey. Computers 12 (8), 151. 10.3390/computers12080151

22

Krithika R. Jayanthi A. N. (2024). High resolution radar target recognition using deep video processing technique. ICTACT J. Image Video Process. 15 (02).

23

Li S. Qin B. Xiao J. Liu Q. Wang Y. Liang D. (2019). Multi-channel and multi-model-based autoencoding prior for grayscale image restoration. IEEE Trans. Image Process. 29, 142–156. 10.1109/tip.2019.2931240

24

Li Z. Liu F. Yang W. Peng S. Zhou J. (2021). A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 33 (12), 6999–7019. 10.1109/tnnls.2021.3084827

25

Lim S. He J. Jinho Y. Kim J. Y. (2024). Hawkeye: a point cloud neural network processor with virtual pillar and quadtree-based workload management for real-time outdoor BEV detection. IEEE J. Solid-State Circuits, 1–12. 10.1109/jssc.2024.3508873

26

Liu Y. Al-Salihi M. Guo Y. Ziniuk R. Cai S. Wang L. et al. (2022). Halogen-doped phosphorescent carbon dots for grayscale patterning. Light Sci. Appl. 11 (1), 163. 10.1038/s41377-022-00856-y

27

Mo W. Pei J. (2023). Sea-sky line detection in the infrared image based on the vertical grayscale distribution feature. Vis. Comput. 39 (5), 1915–1927. 10.1007/s00371-022-02455-9

28

Pan X. Yan Ma (2023). A gas leak detection algorithm based on gas shape characteristics and grayscale distribution. Smart Comput. Appl. 13 (09), 13–16+24.

29

Pare S. Kumar A. Singh G. K. Bajaj V. (2020). Image segmentation using multilevel thresholding: a research review. Iran. J. Sci. Technol. Trans. Electr. Eng. 44 (1), 1–29. 10.1007/s40998-019-00251-1

30

Peng C. Cai X. Dongdong Z. (2020). Side-scan sonar image speckle noise reduction based on adaptive BM3D. Photoelectr. Eng. 47 (7), 190580–190581.

31

Rhif M. Ben Abbes A. Farah I. R. Martínez B. Sang Y. (2019). Wavelet transform application for/in non-stationary time-series analysis: a review. Appl. Sci. 9 (7), 1345. 10.3390/app9071345

32

Sahu S. Singh A. K. Ghrera S. P. Elhoseny M. (2019). An approach for de-noising and contrast enhancement of retinal fundus image using CLAHE. Opt. Laser Technol. 110, 87–98. 10.1016/j.optlastec.2018.06.061

33

Sandoval-Castillo J. Beheregaray L. B. Wellenreuther M. (2022). Genomic prediction of growth in a commercially, recreationally, and culturally important marine resource, the Australian snapper (Chrysophrys auratus). G3 (Bethesda) 12 (3). 10.1093/g3journal/jkac015

34

Shen W. Peng Z. Zhang J. (2024). Identification and counting of fish targets using adaptive resolution imaging sonar. J. Fish Biol. 104 (2), 422–432. 10.1111/jfb.15349

35

Sun H. Yang X. Gao H. (2019). A spatially constrained shifted asymmetric Laplace mixture model for the grayscale image segmentation. Neurocomputing 331, 50–57. 10.1016/j.neucom.2018.10.039

36

Tueller P. Kastner R. Diamant R. (2020). Target detection using features for sonar images. IET Radar, Sonar and Navigation 14 (12), 1940–1949. 10.1049/iet-rsn.2020.0224

37

Wang C. Fu P. Liu Z. Xu Z. Wen T. Zhu Y. et al. (2022). Study of the durability damage of ultrahigh toughness fiber concrete based on grayscale prediction and the Weibull model. Buildings 12 (6), 746. 10.3390/buildings12060746

38

Wang F. Li W. Liu M. Zhou J. Zhang W. (2023). Underwater object detection by fusing features from different representations of sonar data. Front. Inf. Technol. Electron. Eng. 24 (6), 828–843. 10.1631/fitee.2200429

39

Xie D.-heng Lu M. Xie Y.-fang Duan L. Xiong Li (2019). A fast threshold segmentation method for froth image base on the pixel distribution characteristic. PLoS One 14 (1), e0210411. 10.1371/journal.pone.0210411

40

Yuan F. Xiao F. Zhang K. Huang Y. Cheng En (2021). Noise reduction for sonar images by statistical analysis and fields of experts. J. Vis. Commun. Image Represent. 74, 102995. 10.1016/j.jvcir.2020.102995

41

Zhang Q. Zhang M. Chen T. Sun Z. Ma Y. Yu B. (2019). Recent advances in convolutional neural network acceleration. Neurocomputing 323, 37–51. 10.1016/j.neucom.2018.09.038

42

Zhang Y. Zhang H. Liu J. Zhang S. Liu Z. Lyu E. et al. (2022). Submarine pipeline tracking technology based on AUVs with forward looking sonar. Appl. Ocean Res. 122, 103128. 10.1016/j.apor.2022.103128

43

Zhang Y. Zhu X. (2022). Underwater target sonar detection system based on convolutional neural network. Ind. Control Comput. 35 (07), 115–117.

44

Zhao B. Zhang M. Dong L. Wang D. (2022). Design of grayscale digital light processing 3D printing block by machine learning and evolutionary algorithm. Compos. Commun. 36, 101395. 10.1016/j.coco.2022.101395

Summary

Keywords

sonar data, grayscale distribution model, convolutional neural network, maximum entropy threshold, target recognition

Citation

Lei H, Li D and Jiang H (2025) Dynamic display algorithm of sonar data based on grayscale distribution model and computational intelligence. Front. Earth Sci. 13:1526129. doi: 10.3389/feart.2025.1526129

Received

11 November 2024

Accepted

18 February 2025

Published

28 April 2025

Volume

13 - 2025

Edited by

Faming Huang, Nanchang University, China

Reviewed by

Mohammad Azarafza, University of Tabriz, Iran

Genbao Zhang, Hunan City University, China

Chenguang Zhang, Xinyang Normal University, China

Amit Shiuly, Jadavpur University, India

Copyright

© 2025 Lei, Li and Jiang.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Haidong Jiang, 20170783@git.edu.cn