- 1Department of Methodology and Statistics, Utrecht University, Utrecht, Netherlands

- 2Psychometric Research Center, Dutch National Institute for Educational Measurement (Cito), Arnhem, Netherlands

- 3Department of Psychology, University of Amsterdam, Amsterdam, Netherlands

In this paper test equating is considered as a missing data problem. The unobserved responses of the reference population to the new test must be imputed to specify a new cutscore. The proportion of students from the reference population that would have failed the new exam and those having failed the reference exam are made approximately the same. We investigate whether item response theory (IRT) makes it possible to identify the distribution of these missing responses and the distribution of test scores from the observed data without parametric assumptions for the ability distribution. We show that while the score distribution is not fully identifiable, the uncertainty about the score distribution on the new test due to non-identifiability is very small. Moreover, ignoring the non-identifiability issue and assuming a normal distribution for ability may lead to bias in test equating, which we illustrate in simulated and empirical data examples.

1. Introduction

One of the advantages of item response theory (IRT) over classical test theory is its ability to handle incomplete designs. Among the important applications in which data are missing by design is test equating, where results of different test forms must be made comparable by accounting for the two key facts. The first is that the reference and the new tests need not be of the same difficulty, and the second is that the reference and the new populations need not have the same ability distribution (Kolen and Brennan, 2004; von Davier, 2011).

Suppose, that the same students respond both to the reference and to the new test. Assume, for the sake of the argument, that both tests are scored with a number correct score. It is clear that, if both tests represent the same underlying construct, both scores are automatically equated. The need for equating scores derives from the fact that for every student we only observe the response to either the reference or the new test. That is, it derives from the fact that there is a missing data problem.

Equating procedures are methods to overcome the missing data problem. There are many different methods for score equating with some methods based on IRT and other on classical test theory. These methods are covered in detail by, for example, Kolen and Brennan (2004), von Davier (2011), von Davier et al. (2004), Holland and Dorans (2006), and Livingston (2004). Most all equating procedures are such that all students with the same score on the reference test get the same equated score on the new test. This in contrast to both the complete data case we considered above, and more modern (multiple) imputation based techniques (Rubin, 1987).

The central question we consider in this paper is whether the distribution of the missing data (marginal or conditionally on the observed data) is in principle identifiable from the observed data. If the marginal distribution is not identifiable, neither is the conditional distribution needed to impute the missing data. Regardless of the preferred equating method, if the distribution of the missing data is not identifiable, the missing data problem can not be solved.

Suppose we take the most modest form of equating: translating the scores on the new test to a pass/fail decision (i.e., selecting a cut-score below which a student fails) consistently with the pass/fail criterion on the reference test, i.e., such the passing percentage in the reference population would be the same on the new test as it is on the reference test. To specify a new cutscore, it is sufficient to estimate the distribution of the scores of the persons from the reference population to the new test, denoted by p(X+mis)1. As we will show in the paper, this is not possible using an IRT model given the observed data only. Hence, solving more complicated problems of equating (obtaining a full correspondences between the scores on the two tests) is also not possible.

When IRT is used for test equating, the joint distribution of the observed data [responses of the reference population on the reference test, denoted by p(Xobs)] and the missing data [responses of the reference population on new test, denoted by p(Xmis)] is modeled by a marginal IRT model that consists of a conditional distribution of the data given a latent variable θ and a population distribution f(θ). Two elements are required to estimate the distribution of missing responses p(Xmis). First, the parameters of the items from the new test and from the reference test must be placed on the common scale. Second, the ability distribution of the reference population given the observed data f(θ|Xobs) must be estimated. In this paper, we have assumed that the tests are well connected through a linking design2 and the IRT model is correctly specified and, therefore, the first element of equating is fully satisfied. We have focused on the second element, which is usually ignored in test equating practice. The problem is that the full distribution of ability f(θ) is not identifiable, as has been shown by Cressie and Holland (1983). Consequently, as we show in this paper, the distribution p(X+mis) is also not identified from the observed data only. This issue is usually ignored in test equating practice, and instead a parametric distribution, usually a normal distribution, is assumed for f(θ). This assumption is not guaranteed to hold in practice, therefore it is important to consider to what extent the problem of inferring the distribution of missing responses can be solved without extra distributional assumptions.

We will discuss the problem of non-identifiability of p(X+mis) using the marginal Rasch model (RM) for dichotomous data, which has only one parameter in the conditional model (Rasch, 1960), as an example. The RM is chosen here for convenience; the identifiability issues are present at the level of the marginal model and are therefore not affected by the choice of a particular parametric conditional model.

In this study we investigate the extent to which the unavoidable uncertainty about the score distribution p(X+mis) that comes from non-identifiability is problematic in practice. The main purpose of this study is not to introduce a new method for test equating, but to highlight a fundamental property of marginal IRT models. This property is that in IRT equating the score distribution p(X+mis) can not be identified without making extra assumptions about the parametric shape of the ability distribution, and the practical consequences of ignoring this property.

2. Why IRT Cannot Solve the Missing Data Problem

In this section we describe a simple model for test equating that tries (unsuccessfully) to predict missing responses from the observed data without additional distributional assumptions. The marginal RM is:

where x is a vector of dichotomous responses with xi = 1 if item i is answered correctly and 0 otherwise; δi is the difficulty parameter of item i. There is assumed to be a population distribution f(θ); however, its parametric shape is not known.

Following Cressie and Holland (1983), the marginal RM in Equation (1) can be re-written as

where x+ is the number of items answered correctly. It can be seen that

which is the posterior distribution of ability given that the responses to all items are incorrect. Therefore,

To make p(Xobs = x) a proper density, a normalizing constant should be added. A convenient parameterisation of the marginal RM (Maris et al., 2015) is:

where b = {b1, b2, …, bn} is a vector of item parameters that are transformations of difficulty parameters: bi = exp(−δi); λ = {λ0, λ1, …, λn} is a vector of population parameters, and γt(b) denotes a t-th order elementary symmetric polynomial (Verhelst et al., 1984). The denominator ensures that the distribution integrates to 1. The model in Equation (5) is a marginal Rasch model if and only if λ is a sequence of moments of a distribution. This imposes a set of inequality constraints on the parameters (Shohat and Tamarkin, 1943):

and

The extended Rasch model [ERM] (Tjur, 1982; Cressie and Holland, 1983; Maris et al., 2015) does not have these restrictions.

We now apply the ERM to test equating. Let us consider the joint density of the response vectors Xobs and Xmis:

where d = {d1, …, dm} are the parameters of the items in the new test (analogous to b) and η = {η0, η1, …, ηn+m} is a vector of (n + m + 1) population parameters corresponding to a combined test consisting of the items from both the reference and the new exams. It can be derived that the marginal distribution of the scores of the reference population on the new test is (see Appendix A, for details):

The expression for this distribution contains parameters η, whereas the density of the observed data contains parameters λ. The parameters η and λ are related to each other as follows (see Appendix A, for details):

The parameters λ are identified from the data (up to a multiplicative constant), whereas parameters η are not; this is because in this system of (n+1) Equations (4) there are (n+m+1) unknowns. Therefore, having observed only data Xobs, we cannot make direct inferences about the distribution of X+mis. Hence, IRT cannot solve the missing data problem.

3. What IRT Allows us to Infer about the Distribution of Missing Responses

The conclusion at the end of the previous section does not mean that we do not know anything about the parameters η or the score distribution. The relations between λ and η impose restrictions on the values that η can take, and therefore, on the score distribution. Before considering what is and is not known about the score distribution p(X+mis), we should discussed some additional constraints for parameters η.

Along with the restriction given by the relations with the identified parameters (10), there are other restrictions that the parameters η must satisfy in order to be parameters of the ERM. First, they must be positive to ensure that all probabilities in Equation (9) are positive. To derive a second constraint, consider the probability of answering item i correctly given the rest score on the test:

where b(i) denotes a vector of item parameters of all items in the reference test except item i, and is the sum score on these items; that is, the rest score. From the measurement perspective, this probability should increase when s increases (Junker, 1993; Junker and Sijtsma, 2000). This ensures that all item-rest correlations are positive, so that it makes sense to score the particular set of items together as one test. For this to be true, the ratios must form a monotonically increasing sequence. The inequality constraint

can be specifies as a part of in the prior distribution of the population parameters (see Appendix E, for details).

An alternative motivation for using the constraints in Equation (12) is that they follow from an important feature of the marginal RM, namely that

is the (posterior) variance of exp(θ) of a person with a score of s (Maris et al., 2015). The monotonicity constraints in Equation (12) follow from non-negativity of variance. Therefore, the constraints in Equation (12) are necessary but not sufficient for the parameters to satisfy the moment constraints of the marginal RM. Hence, the model we are using for equating is an ERM with the monotonicity constraints. As will be shown in the next subsection this restriction enables to reduce the uncertainty about the score distribution on the new test.

3.1. A Simple Case: m = 1

In this subsection we derive the uncertainty about the marginal probability of answering a new item correctly, given the observed responses to n items. Let us consider the simplest case in which the number of items in the new test is equal to one (m = 1). Because we are ignoring the effect of sampling variability on the uncertainty, we consider all identifiable parameters (b and λ) known.

Let λ = {λ0, λ1, …, λn} denote the set of identifiable population parameters; η = {η0, η1, η2, …, ηn+1} the set parameters for the combined test; and d the item parameter of the new item. The relations between λ and η form a system of linear equations:

This system of n + 1 equations does not have a unique solution because the number of unknowns (n + 2) is larger than the number of equations. The general solution is:

where k is a parameter that captures all uncertainty about η, such that the unique solution to the system of equations can be computed when k is known. This parameter is not completely free because η must satisfy the set of inequalities:

We are interested in the probability of answering the new item correctly, which can be written as a function of k:

Using the solutions of the system of equations, one can derive (for details, see Appendix B):

This expression is linear in k. Therefore, the uncertainty about the probability of answering the new item correctly depends on the difference between the maximum and the minimum of k. The upper and the lower bounds for k can be derived from the inequalities for η in Equation (16).

From the non-negativity of the parameters η (the first set of inequalities in Equation 16), we have (see Appendix B, for details):

Moreover, the second set of inequalities in Equation (16) leads to (see Appendix B):

Equations (19) and (20) together provide the lower and the upper bounds for k.

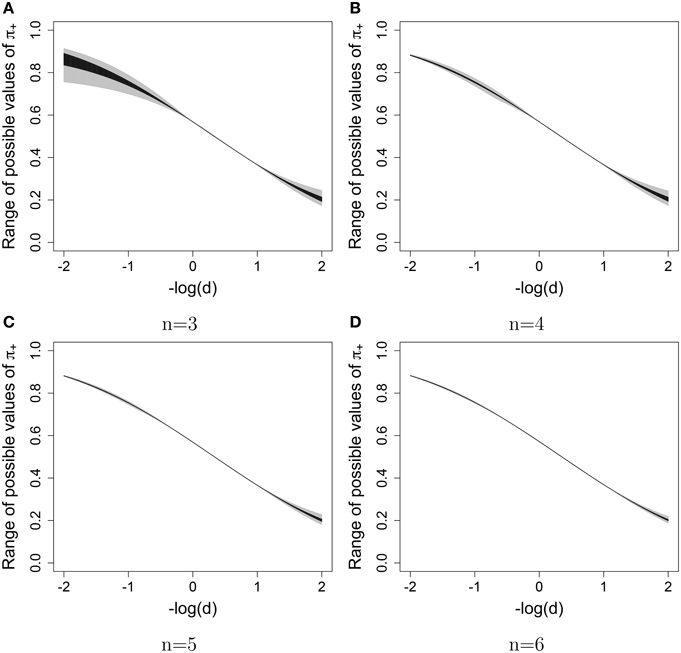

Next, we present a small example to show how the bounds on k change and what the uncertainty about the marginal probability of a correct response to the new item under the ERM is for different values of n. The item parameter d of this item varied from exp(−2) to exp(2), corresponding to the difficulty parameter varying from 2 to –2. We show how large the uncertainty is when only the non-negativity constraints are used, and when both the non-negativity and monotonicity constraints are used.

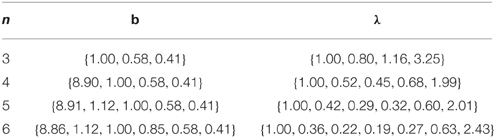

A data set with responses of persons sampled from a population with an ability distribution (0, 1) to a test of six items with difficulties sampled from ln(bi) ~ (0, 1) was simulated. First, only three items were taken into account, then four items, five items and, finally, all six items. We considered the identifiable parameters b and λ known in order to evaluate the uncertainty about π+ coming only from the non-identifiability of η. The identifiable parameters were fixed at their EAP-estimates obtained with a Gibbs sampler for the ERM (Maris et al., 2015), see Table 1.

The possible range of values for the free parameter k, and therefore for the probability of interest π+ (given the fixed values of b and λ) was evaluated for different values of the difficulty of the new item. Figure 1 shows the possible ranges of values for the probability of answering the new item correctly when only the constraints in Equation (19) were used (in gray) and when the constraints in Equations (19) and (20) were used (in black).

Figure 1. Uncertainty about the marginal probability of answering a new item correctly (gray—without monotonicity constraints, black—with monotonicity constraints) given the difficulty of the new item (on the x-axis). (A) n = 3, (B) n = 4, (C) n = 5, (D) n = 6.

The uncertainty about π+ decreases when n increases. For n = 3, the difference between the maximum and the minimum of π+ is for some d larger than 0.15 when only the constraints in Equation (19) were used and larger than 0.05 when all the constraints were used; however, when n = 6, the maximum discrepancy is 0.03 and 0.006 when only non-negativity constraints and all the constraints were used, respectively. Moreover, the uncertainty about π+ for the items with the difficulty parameter close to the items that have been answered is already very small if n = 3. In general, the uncertainty is larger for items with extreme difficulty3.

We have used this small example to explicitly show that it is not possible to compute the marginal probability of answering the new item correctly. However, there uncertainty about this probability is not large.

It is difficult to extend the analytic solution described in this section to realistic settings, with n and m being usual test lengths, because of the accumulation of error while computing the bounds for k. Therefore, below we present a simulation-based approach to the problem. Appendix C presents a proof of the fact that a simulation based approach is justifiable.

3.2. Simulated Examples

This subsection provides two simulated examples to illustrate the following:

1. the size of the uncertainty about the score distribution and which part of it is due to the non-identifiability of the parameters;

2. the practical consequences of ignoring the issue of non-identifiability of f(θ) when the true ability distribution is not normal.

In the first example, the data were simulated according to the non-equivalent group design with three linking groups. Each group consisted of 500 persons who gave responses to 15 items from the new test and 15 items from the reference test. The relevant equating designs are described in the Appendix D. The following parameters were used: n = m = 60, N = M = 5000. Responses were simulated according to the simple RM, with person parameters sampled from (0, 1) for the reference population, (0.5, 0.82) for the new population and (−0.5, 22), (−0.2, 22), (−0.1, 22) for the three linking groups4. The item difficulties (−ln bi) were sampled from a standard normal distribution.

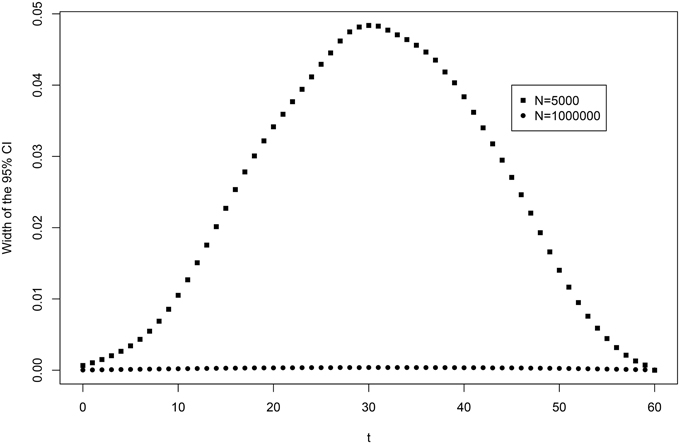

First, the data augmented Gibbs sampler for the ERM with monotonicity constraints was used to estimate the total uncertainty about the score distribution. Second, to eliminate the uncertainty coming from the sampling variability, the new data were simulated with the same parameters but larger sample sizes (N = M = 1, 000, 000) and the algorithm was used with all the item parameters fixed at their true values. The posterior variance of the score distribution that remained was almost entirely due to the non-identifiability of the population parameters. Figure 2 presents the widths of the 95% credibility intervals of Pr(X+mis ≤ T), ∀T∈[0:m] based on 50,000 draws from the posterior distribution after 10,000 iterations of burn-in. With a large N and fixed item parameters, the uncertainty about the score distribution becomes very small, not exceeding 0.002 on the probability scale.

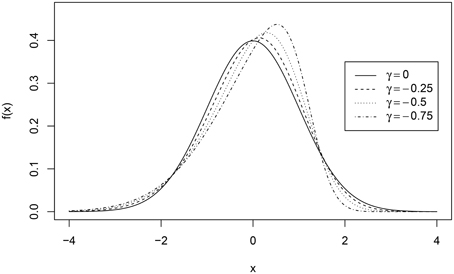

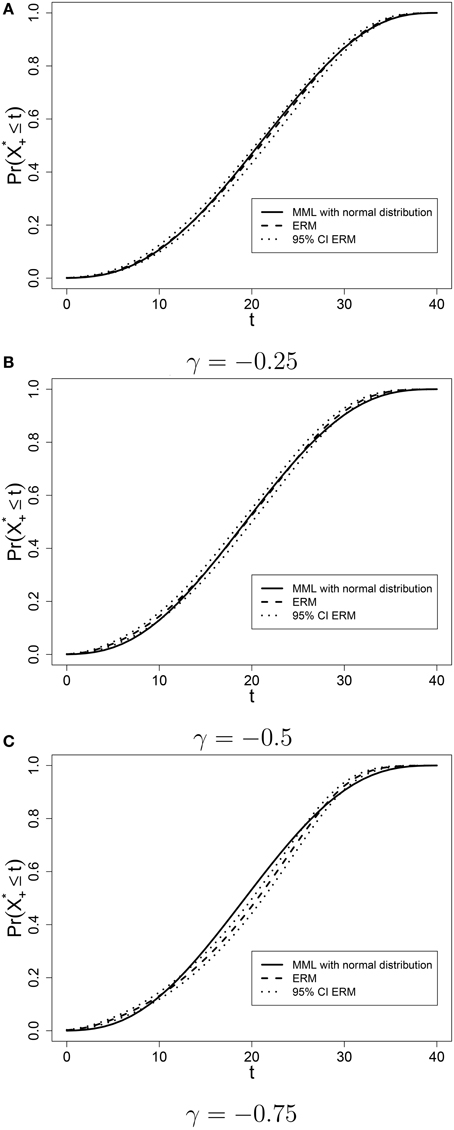

In the second example, we compared the results of test equating using a marginal RM assuming a normal distribution of ability in the population with the results of test equating using the ERM without the normality assumption. For the R-code of the analysis and the output, see Supplementary Material. The data with different distributions of ability in the reference population were simulated. To show what happens if normality is violated, we used skew-normal distribution for ability (Azzalini, 2005). The parameters of the skew-normal distribution were chosen such that the mean was equal to 0, variance was equal to 1, and skewness was varied γ = −0.25, −0.5, −0.75. These distributions can be seen in Figure 3 (dotted lines) next to the standard normal distribution (solid line).

For each of the three degrees of skewness, we simulated the data of 5000 persons from both the reference and the new populations taking the tests, which consisted of 40 items each, connected through three linking groups consisting of 500 persons responding to 20 items (10 from the reference test and 10 from the new test). For the new population and the three linking groups, person parameters were sampled from a normal distribution [(0.5, 0.92), (−0.5, 22), (−0.2, 22), (−0.1, 22), respectively]. Item difficulties were sampled from (0, 1). The data were simulated according to a RM.

The score distribution Pr(X+mis ≤ T) was estimated with marginal maximum likelihood (MML) assuming a normal distribution and with the Gibbs Sampler for the ERM. The ERM score distribution together with the 95% credibility intervals of Pr(X+mis ≤ T) based on 50,000 draws from the posterior distribution (after 10,000 iterations of burn-in) are presented in Figure 4, together with the MML-estimate of the score distribution. The more skewed the ability distribution is, the greater the difference between equating results for the MML and ERM approaches. When γ = −0.25, the MML-estimate does not fall outside of the 95% credibility interval obtained with the ERM. When γ = −0.5, the estimate based on the normality assumption is outside the credible interval for low and high scores, but within the interval for the middle range of the scores. Finally, when γ = −0.75, the MML-estimate is also outside the credible bound in the middle range of test scores. This is the range of scores within which the cutscore is usually placed, which means that different score distributions are likely to result in different cutscores. This has consequences for the pass/fail decision for hundreds of students.

Figure 4. Estimated score distributions with MML and ERM when the ability distribution in the reference population is skewed. (A) γ = −0.25; (B) γ = −0.5; (C) γ = −0.75.

4. Empirical Data Example

Using an empirical example we show the consequences of ignoring the problem of non-identifiability of f(θ) and assuming a normal distribution. We do this by comparing the estimated score distributions with and without the normality assumption.

4.1. Method and Data

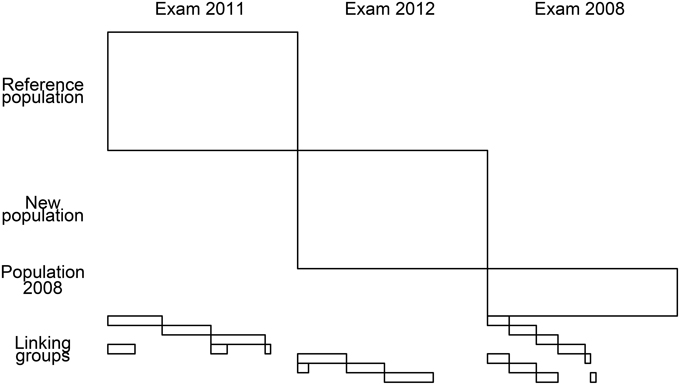

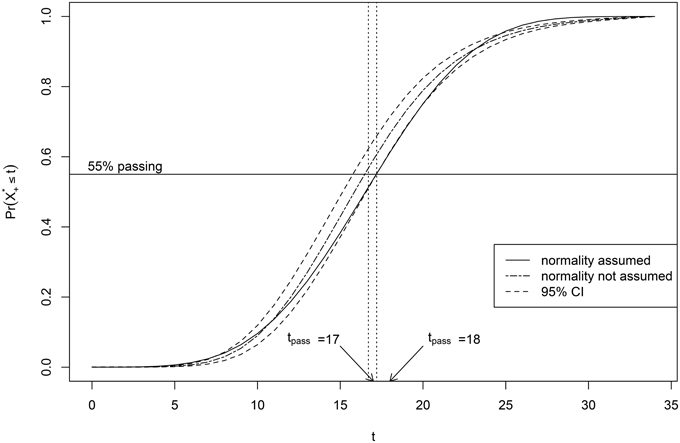

We analyzed data from the paper-and-pencil French language test for preparatory middle-level applied secondary education from examinations in 2011 and 2012. The sample sizes were 5518 for the reference exam and 5606 for the new exam. Both tests consisted of 41 items, but only dichotomous items were selected for analysis (35 and 34 in the reference and the new exams, respectively). The tests were linked through seven linking groups (with sample sizes ranging from 337 to 460) that responded to some items from either the reference test or the new test and some external anchor items (14 per group). The equating design is shown in Figure 5. There were 30 items from the reference test and 25 items from the new test answered by the linking groups. The items taken by the linking groups had been also answered by students in 2008.

First, the parameters of the ERM were estimated using the data augmented Gibbs sampler (see Appendix E). The algorithm was run for 60,000 iterations, of which the first 10,000 were discarded as a burn-in. The score distribution of the reference population on the new test was calculated at every iteration of the algorithm. Second, the marginal Rasch model with the normal distribution was fitted to the data and the MML-estimate of the score distribution was obtained. See Supplementary Material, for the data, software code of the analysis and the output.

4.2. Results

Figure 6 shows the posterior mean of the score distribution estimated with the ERM (together with the 95% credible interval) and the MML-estimate of the the score distribution. The estimated score distributions differ and the MML-estimate is outside of the credible interval at the lower and the higher scores. The posterior mean is also different from the MML-estimate in the middle range of scores, which could have consequences for establishing the new cutscore tnew. For example, if the desired proportion of persons from the reference population failing the new test was 55%, then the MML procedure would result in a cutscore of 17, whereas the ERM procedure would result in a cutscore of 18 as illustrated in Figure 6. The consequence of this would be that 476 students would have passed the test if a normal distribution were assumed, but would have failed if the ERM were used.

Figure 6. Score distribution of the reference population on the new test: posterior mean for the ERM (dashed line) and the MML-estimate based on the assumption of the normal distribution (solid line).

5. Discussion

Using a simple case, we have shown that, without the assumption of a parametric distribution, the score distribution on the new test is not identified. Knowing the difficulty parameter of the new item is not enough to predict the proportion of correct responses to this item in the population, after observing the responses to a finite set of items. When the number of items observed increases, the uncertainty about the score distribution decreases. This uncertainty tends to zero with n going to infinity, but is always there. Hence, IRT cannot, strictly speaking, solve the missing data problem, since it does not allow us to impute the unobserved responses of the reference population on the new test. We have investigated the degree of uncertainty about the score distribution in realistic applications. With realistic test lengths, the uncertainty coming from non-identifiability of population parameters is small enough to be ignored for practical purposes. Therefore, test equating can be done effectively without the not-fully-testable assumption of a particular parametric shape of the ability distribution, despite the non-identifiability issue.

The theoretical importance of this paper is that it has shown what one can and cannot do with respect to test equating using IRT based only on the observed data without the assumption of a parametric shape of the distribution. Although we have used the marginal RM for illustration, the issue of non-identifiability that is discussed holds in more general marginal IRT models, since the problem of the ability distribution not being identified will not go away if more parameters are added to the conditional model.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2015.01956

Footnotes

1. ^For simplicity, we considered a situation in which the new and the reference test do not have any common items. In the general case, the missing data are responses to the items that belong to the new test but not the reference test.

2. ^For a review of different linking designs see, for example, Angoff (1971), Wright and Stone (1979), Lord (1980), Petersen et al. (1989), and Kolen and Brennan (2004). Some of these linking designs are presented in Appendix D.

3. ^The graphs are not symmetric because the difficulties of the items in the reference test (−lnb) were also not symmetric around zero.

4. ^These values could be seen as matching empirical practice in the sense that the persons in the linking groups perform worse than in the examination conditions and are more heterogeneous.

References

Angoff, W. (1971). “Scales, norms and equivalent scores,” in Educational measurement, ed R. Thorndike (Washington, DC: American Council of Education), 508–600.

Azzalini, A. (2005). The skew-normal distribution and related multivariate fami-lies. Scand. J. Stat. 32, 159–188. doi: 10.1111/j.1467-9469.2005.00426.x

Cressie, N., and Holland, P. W. (1983). Characterizing the manifest probabilities of latent trait models. Psychometrika 48, 129–41. doi: 10.1007/BF02314681

Geman, S., and Geman, D. (1984). Stochastic relaxation, Gibbs distributions and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741. doi: 10.1109/TPAMI.1984.4767596

Holland, P. W., and Dorans, N. J. (2006). “Linking and equating,” in Educational measurement, 4th Edn., ed R. L. Brennan (Westport: Praeger), 189–220.

Holland, P. W. (1990). The Dutch identity: a new tool for the study of item response models. Psychometrika 55, 5–18. doi: 10.1007/BF02294739

Junker, B. W., and Sijtsma, K. (2000). Latent and manifest monotonicity in item response models. Appl. Psychol. Meas. 24, 65–81. doi: 10.1177/01466216000241004

Junker, B. W. (1993). Conditional association, essential independence and mono- tone unidimensional item response models. Ann. Stat. 21, 1359–1378. doi: 10.1214/aos/1176349262

Kolen, M. J., and Brennan, R. L. (2004). Test Equating, Scaling, and Linking: Methods and Practices. New York, NY: Springer.

Livingston, S. A. (2004). Equating Test Scores (without IRT). Princeton: Educational Testing Service.

Lord, F. M. (1980). Applications of Item Response Theory to Practical Testing Problems. Hillsdale, NJ: Erlbaum.

Maris, G., Bechger, T., and San Martin, E. (2015). A Gibbs sampler for the (Extended) marginal Rasch model. Psychometrika 80, 859–879. doi: 10.1007/s11336-015-9479-4

Metropolis, N., Rosenbluth, A., Rosenbluth, M., Teller, A., and Teller, E. (1953). Equations of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1092. doi: 10.1063/1.1699114

Petersen, N. S., Kolen, M. J., and Hoover, H. D. (1989). “Scaling, norming and equating,” in Educational Measurement, ed R. L. Linn (New York, NY: American Council of Education and Macmillan), 221–262.

Rasch, G. (1960). Probabilistic Models for Some Intelligence and Attainment Tests. Expanded edition, 1980, Chicago, IL: The University of Chicago Press; Copenhagen: the danish institute of educational research.

Shohat, J. H. and Tamarkin, J. D. (1943). The Problem of Moments. New York, NY: American Mathematics Society.

Tanner, M., and Wong, W. (1987). The calculation of posterior distributions by data augmentation. J. Am. Stat. Assoc. 82, 528–540. doi: 10.1080/01621459.1987.10478458

Tjur, T. (1982). A connection between Rasch's item analysis and a multiplicative poisson model. Scand. J. Stat. 9, 23–30.

Verhelst, N. D., Glass, C. A. W., and van der Sluis, A. (1984). Estimation problems in the Rasch model: the basic symmetric functions. Comput. Stat. Q. 1, 245–262.

von Davier, A. A., Holland, P. W., and Thayer, D. T. (2004). The Kernel Method of Test Equating. New York, NY: Springer.

von Davier, A. A. (2011). Statistical Models for Test Equating, Scaling, and Linking. New York, NY: Springer.

Wright, B. D., and Stone, M. H. (1979). Best Test Design. Chicago, IL: MESA Press University of Chicago.

Zeger, K., and Karim, M. (1991). Generalized linear models with random effects: a Gibbs sampling approach. J. Am. Stat. Assoc. 86, 79–86. doi: 10.1080/01621459.1991.10475006

Appendix A

From Equation (8), we can derive the joint distribution of the scores on the reference test and the new test:

The marginal probability of obtaining a particular score on the new exam is then:

To derive the relations between the parameters λ and η, let us consider the probability of observing a particular response vector Xobs. On the one hand, it is given in Equation (5). On the other hand, it can be presented as follows:

Hence,

Appendix B

The probability of answering the new item correctly is:

Using the general solution of the system of equations in Equation (6), the two sums in this expression can be re-written as:

and

Hence,

First, we will consider the constraints on k, following from the parameters η being positive:

For even indices , we have:

For odd indices , we have:

Second, we consider the monotonicity constraints (12): Using the the general solution of the system of equations in Equation (10), we have:

If we multiply both sides by d2s and denote , then we get

When multiplying the elements on the left side and taking a square on the right side, most of the element on the both sides are the same, hence they cancel out, and the remaining inequality is:

For even indices , we have:

For odd indices , we have:

Appendix C

For a simulation approach (such as a Gibbs Sampler) to be applicable, we have to show that the solutions of the system of Equation (10) and inequalities (16) constitute a convex and bounded set, which ensures that the sampler can easily cover the full subspace of possible values of the non-identified parameters. All coefficients in the system of equations are positive, so are the parameters λ, and therefore each of the parameters ηs is bounded:

For every s ∈ [1 : (n + m − 1)] the solutions of the following set of inequalities:

form a convex set. The interaction of convex sets from each s is itself a convex set. The intersection of the set formed by solutions of all inequalities and the set formed by the system of linear equations (which is always a convex set) is also a convex set. Therefore, all possible values of η constitute a convex set, and for each individual parameter there is only one range of possible values. Although the parameters are not identified, it is still possible to sample from their joint posterior distribution. The data augmented Gibbs Sampler for test equating with the ERM which is an extension of the algorithm of Maris et al. (2015) was developed for this. The details of our algorithm can be found in the Appendix E.

Appendix D

Non-Equivalent Group Equating Designs

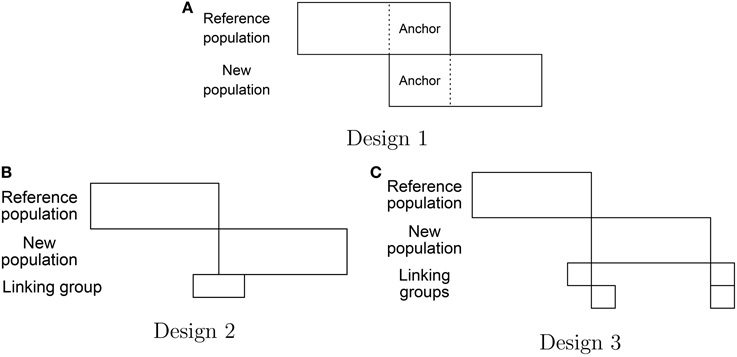

The most simple non-equivalent group design one is the anchor-item design, in which both the reference and the new tests include a common set of items. The second design is a post-equating design, in which the link between the two tests is established through the data collected in the so called linking groups, answering some items from the reference test together with some items from the new test. The third design is a variation of the post-equating design, in which persons from some of the linking groups answer the items from the reference test and some other items from the item bank, while persons from the other linking groups answer the items from the new test and the same items from the item bank. The items that do not belong to either the reference test or the new test might also be taken by students from some historic population in one of the previous years. The simplest forms of these designs are visualized in Figure A1.

Let us by Y denote the M×m data matrix with responses of a sample of persons from the new population to the new test, by κ denote the m+1 identified population parameters of the new population, by {r} the set of items in the reference test and by {c} the set of items in the new test. If the design includes linking groups, then Z is the data coming from the equating groups with K(g) and k(g) being the number of persons in the g-th linking group and the number of items answered by them; {e(g)} denotes the set of items answered by the g-th linking group and τ(g) are the population parameters of this linking group. Then the density of the observed data is:

where ui is the total number of correct responses to item i by all students which answered this item, Ns, Ms, and are the number of persons from the reference population, new population and the g-th linking group, respectively, that gave exactly s correct responses to the items in the corresponding tests.

The score distribution of the reference population on the new test depends on the population parameters η and the item parameters b:

To make inferences about this distribution we obtain samples from the posterior distribution p(η, b|… ). This is done using a data augmented Gibbs sampler.

Appendix E

We describe here how the samples from the joint posterior distribution

can be obtained using a Markov chain Monte Carlo algorithm. We describe it for the post-equating non-equivalent groups design (see Figure A1B) with G linking groups. The density of the data given this equating design is given in Equation (A19) in Appendix D. The algorithm can be easily altered for the different kinds of non-equivalent group designs.

Data Augmented Gibbs Sampler

For computational convenience, instead of parameters η, we use a different parametrization with the ratios of the consecutive parameters . To place the parameters on the scale common in IRT we consider logarithms of these ratios:

We use a prior which in addition to the monotonicity constraint (5) has a lower and an upper bound for the parameters:

where p−1 = −100 and pn+m = 100. This is a reasonable constraint, since it follows from the Dutch identity (Holland, 1990) that

A priori, item and population parameters are independent. For item parameters we choose a uniform prior for difficulty parameters −ln(bi), which is .

After the re-parametrization, the density of the observed data is:

where p, q, r(1), …, r(G) are the population parameters of the reference population, the new population and G linking groups, respectively.

Although, we are interested only in the parameters bc and p, the other parameters () are also sampled as nuisance parameters. Moreover, to make the full conditional posterior distribution of ps tractable, at every iteration we will sample augmented data x*: responses of persons from the reference group to the items of the new test (Tanner and Wong, 1987; Zeger and Karim, 1991). This amounts to sampling from the joint posterior:

A Gibbs sampler is used, i.e., all parameters are subsequently sampled from their full conditional distributions given the new values of all other parameters (Geman and Geman, 1984; Casella and George, 1992). After starting from the initial values (1 for all item parameters, and the population parameters equally distanced from –3 to 3), the algorithm goes through the following steps:

Step 1. Sample the augmented data x*.

For every person j∈[1:N], sample a vector of responses from its full conditional posterior , which is factored in the following way:

where xj+ is the sumscore of person j. First, sample from the categorical distribution with probabilities

And then for every item i∈[1:m] sample from a Bernoulli distribution with probability:

Step 2. Sample from the full conditional posterior of the distribution of the item parameters.

For every i ∈ {r∕e}, sample bi from its full conditional posterior:

where . This is an scaled beta-prime distribution, to sample from which first sample from (x+i, N−x+i), and then transform it: .

For every g∈[1:G], for every i∈{r∩eg}, sample bi from its full conditional posterior:

where and . Unlike the full conditional of the item parameters of the items taken by persons from only one population, this distribution is not easy to sample from directly. It is more convenient to sample from the distribution of βi = −ln(bi) using a Metropolis-Hasting algorithm (Metropolis et al., 1953). We use as a proposal density with bi being the current value of the parameter.

For every i∈{c∕e}, sample bi from its full conditional posterior analogously to sampling bi, i∈{r∩eg}, because these items are not only taken by the new population, but responses to these items by the reference population are imputed.

For every g∈[1:G], for every i∈{c∩eg} sample bi from its full conditional posterior:

where . Use the same Metropolis-Hastings algorithm as for the items, taken by two populations. If the equating design specifies more than 3 populations taking some of the items, then the full conditional posteriors of those items can be extended accordingly.

Step 3. Sample the population parameters.

For every s∈[0:(n+m−1)], sample ps from its full conditional posterior:

where To sample from this distribution, we first sample from the truncated beta distribution

where and , using rejection sampling with U(a1, a2) as a proposal distribution, and then transform it:

For every s∈[0:(m−1)], sample qs from its full conditional posterior analogously to sampling ps. For every g∈[1:G], for every s∈[0:k(g)−1], sample form its full conditional posterior analogously to sampling ps.

At every iteration of the Gibbs sampler, we compute the expected score distribution for the reference population on the new exam Pr(X+mis ≤ T).

Keywords: item response theory, incomplete design, marginal Rasch model, missing data, non-identifiability, test equating

Citation: Bolsinova M and Maris G (2016) Can IRT Solve the Missing Data Problem in Test Equating? Front. Psychol. 6:1956. doi: 10.3389/fpsyg.2015.01956

Received: 28 May 2015; Accepted: 06 December 2015;

Published: 05 January 2016.

Edited by:

Mike W.-L. Cheung, National University of Singapore, SingaporeCopyright © 2016 Bolsinova and Maris. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maria Bolsinova, bS5hLmJvbHNpbm92YUB1dmEubmw=

Maria Bolsinova

Maria Bolsinova Gunter Maris

Gunter Maris