- Department of Psychology, The University of Winnipeg, Winnipeg, MB, Canada

What type of language makes the most positive impression in a professional setting? Is competent/agentic language or warm/communal language more effective at eliciting social approval? We examined this basic social cognitive question in a real-world context, the recent record-low public approval of the U.S. Congress, using a “big data” approach. Using Linguistic Inquiry and Word Count (LIWC), we text-analyzed all 123+ million words spoken by members of the U.S. House of Representatives during floor debates between 1996 and 2014 and compared their usage of various classes of words to their public approval ratings over the same period. We found that neither agentic nor communal language positively predicted public approval. However, this may be because communion combines two disparate social motives (belonging and helping). A follow-up analysis found that the helping form of communion positively predicted public approval, and did so more strongly than did agentic language. Next, we conducted an exploratory analysis examining which of the 63 standard LIWC categories predict public approval. Public approval of Congress was highest when politicians used tentative language, expressed both positive emotion and anxiety, talked about humans, used prepositions and numbers, and avoided conjunctions and second-person pronouns. These results highlight the far-reaching primacy of warmth over competence, the two primary dimensions of social cognition.

Introduction

What demeanor makes the best impression in a professional setting? Is playing up agentic or communal aspects of personality more effective? Past research has found that agency and communion are the primary dimensions of social cognition (Cuddy et al., 2008): they explain most of the variance in how people judge one another (Rosenberg et al., 1968; Fiske et al., 2002; Cuddy et al., 2008). Of the two, communion tends to be primary (Wojciszke et al., 1998). That is, when trying to make a good impression, coming across as warm and kind seems to be more important than coming across as competent and hard-working. However, the primacy of communion over agency may be limited to personal contexts, such as among friends and family; in professional settings, where merit and mastery matter more, agency may be more important (Abele and Brack, 2013). The goal of this research is to examine, in a real-world professional context, the roles of agentic and communal language in making a favorable impression.

We define agency as a desire to get ahead and differentiate oneself from others (Bakan, 1966). It is a concern for competence, intelligence, skill, creativity, achievement, power, mastery, and assertiveness. To lack agency is to be weak, submissive, incompetent, and likely to fail. We define communion as a desire to get along and be a part of a larger social or spiritual entity. It is a concern for friendliness, helpfulness, sincerity, trustworthiness, togetherness, solidarity, and intimacy. To lack communion is to be hostile, manipulative, and antisocial.

The U.S. Congress has a complex relationship with its electorate. In November 2014, public approval ratings of the U.S. Congress reached an all-time low, with just 9% of Americans expressing satisfaction with their own elected government. What might be responsible for this shaky relationship between the people and their government? One possible explanation is that Congress has become incompetent, failing to pass legislation that serves the public's interests. However, the available data do not support this notion: the number of bills passed by Congress only weakly, and negatively, predicts its public approval (Ramirez, 2009). A recent article in the Washington Post summarized the mystery surrounding public approval of U.S. Congress: “Even when Congress does things, people still hate it” (Bump, 2015).

What, then, might explain public approval of Congress? Examining trends in public approval over the past few decades, one quickly notices that approval has fluctuated dramatically. As recently as 2001, approval reached 84%, before beginning a precipitous decline toward recent record lows. Societal and global factors, such as the September 11, 2001 attacks, may be responsible for the high approval in 2001. When the country is under threat, the people rally behind the government. However, 9/11 cannot explain why public approval of Congress was relatively high (over 40%) before 2001. What else may be at play?

We suggest that part of the answer lies in the demeanor of politicians: the manner in which members of government communicate and the words they choose. A recent study found that politicians' choice of words plays a significant role in explaining public approval (Frimer et al., 2015). Specifically, the prosocial language of members of U.S. Congress strongly predicted public approval. Language and approval followed the same trajectory over the period under study: both prosocial language and public approval increased from 1996 to 2001, declined precipitously over the next 7 years, and have remained low since. Americans listen to their government. A surprisingly large number of Americans (47 million, according to a recent poll; Eggerton, 2013) watch televised debates of U.S. Congress on C-SPAN at least once a week. Media tone may also play a role in transmitting the signal from Congress to the public (Frimer et al., 2015). Coming across as warm and prosocial, by using words like gentle, contribute, trust, and cooperate, may help governments make a favorable impression on their electorate.

The previous paper (Frimer et al., 2015) examined the role of just one linguistic category (prosocial words) in explaining public approval of Congress, raising questions about what other linguistic categories may be implicated. The present research expands this inquiry by examining the linguistic predictors of public approval of U.S. Congress through both theory-driven and data-driven approaches. Our theory-driven approach seeks to predict approval using the “Big 2” dimensions of social cognition: agency and communion. We follow with an exploratory data-driven approach to understanding impression formation in Congress as a tentative check on whether current social cognitive theory may miss important aspects of impression formation.

To be clear, our approach is correlational, precluding causal inference. That is, our data cannot establish whether Congressional language influences public approval. The opposite direction is also possible: rising public approval could change the manner in which politicians communicate. And third variables, such as the influence of special interest groups or global and domestic events, could affect both politicians' language and their public approval. However, Frimer et al. (2015) found a 6-month time lag between language and approval, meaning that what Congress says today best predicts its public approval 6 months into the future. Given that causality can only operate forward in time, this finding is consistent with the possibility that Congressional language influences the public. Moreover, this effect persisted when controlling for (a) societal and global factors, such as unemployment levels and events like 9/11, (b) government competence, operationalized as the number of bills passed, and (c) even bipartisan cooperation. These findings suggest that language may play a causal role. We include the same control variables in our analysis to test whether the link between language and public approval survives them. However, our present goal is merely to examine linguistic correlates of public approval, the presence of which would be consistent with, but would not establish, a causal link. At the same time, the absence of a correlation would be inconsistent with causal claims.

We examined the linguistic predictors of public approval of U.S. Congress using the standard set of linguistic categories established in previous research (Tausczik and Pennebaker, 2010) and built into Linguistic Inquiry and Word Count (LIWC; Pennebaker et al., 2007). We started with a theory-driven approach, then followed with a data-driven approach to check for possible blind spots in extant theory.

Our theory-driven approach examined links between agentic and communal language in Congress and its public approval. Coming across as agentic, communal, or both can make a good impression. On the other hand, appearing agentic but not communal can elicit envy in others, and appearing communal but not agentic can elicit pity (Cuddy et al., 2007). Highly respected people, such as individuals who received awards for their charitable work, tend to be high in both agency and communion (Frimer et al., 2011). Agency/Communion theory thus suggests that the government's use of both highly agentic and highly communal language will predict its public approval. Hypothesis 1 states that levels of both agentic and communal language by members of the U.S. Congress will positively predict public approval.

Agency and communion are meta-constructs: high-level abstractions that summarize multiple operationalizable constructs. At such a high level of abstraction, these meta-constructs may not always be fine-grained enough to explain some impression formation processes. For example, an individual may value relationships for self-centered, Machiavellian reasons. This person might appear to be high in communion even though he or she has little interest in improving the welfare of others. Accordingly, following Frimer et al. (2011), we propose that communion has at least two components: a desire to belong to a social group and a desire to benefit others (prosociality). Belongingness refers to intimacy with friends, family, and group members, whereas prosociality refers to advancing the interests of other people. The two tend to go together, but they are distinct motives, and each may be differentially implicated in social cognition.

Our bifurcation of communion aligns with Schwartz's (1992) distinction between conservation values (security, tradition, and conformity) and self-transcendent values (benevolence, universalism), with McAdams' (1993) distinction between narrative themes of love/friendship and caring/help, and with Leach et al.'s (2007) distinction between sociability and morality. When forming impressions of others, people may weight information about sociability, morality, and competence differently depending on the purpose of their evaluation. For example, when deciding whether to trust a stranger with personal information, people may rely primarily on information about morality, whereas they rely on sociability information when deciding whether to invite a stranger to a party (Brambilla et al., 2011).

Generally, people may favor a belonging motive in people they know personally, because the self is the object of the desire for intimacy and affiliation. In more socially distant others, the belonging motive may carry less favor, and may even come across as flaky. Some previous research suggests that prosociality is more important than belongingness when trying to make a good impression on distant others: people who won a national award for their charitable work (a socially distant context) are differentiated from ordinary people by their prosocial motive, but not by their belonging motive (Frimer et al., 2011). Given the remote relationship between the U.S. Congress and the public, these findings suggest that prosocial language will better predict public approval of U.S. Congress than will communal language that includes belongingness (Hypothesis 2; Congress and the public, 2015).

Does presenting an agentic or a communal demeanor make a more positive impression? Communion seems to be the primary dimension of social cognition in most settings (Cuddy et al., 2008). Politicians seen as beneficent tend to garner the most support (Cislak and Wojciszke, 2008). This leads to Hypothesis 3a: communal language will be a stronger predictor of public approval than will agentic language. However, agency may be more important than communion in professional settings (Abele and Brack, 2013). Given that U.S. Congress is a professional context, one might expect agentic language to be more important than communal language for bolstering public approval. For example, perceptions of candidates' competence (agency), inferred from images of their faces, predict election outcomes (Todorov et al., 2005). The competing Hypothesis 3b is that agentic language will be a stronger predictor of public approval than will communal language.

Theory can sometimes have blind spots, failing to recognize the importance of certain concepts. We therefore followed our theory-driven approach with an exploratory, bottom-up, data-driven approach to understanding public approval of U.S. Congress. To identify specific linguistic predictors that theory may have overlooked, we conducted an exploratory stepwise regression analysis in which we entered all 63 LIWC categories.

More generally, how might the words of Congress influence public sentiment? Two possibilities come to mind. The first is a direct mechanism: Americans may watch televised debates of Congress on C-SPAN. A surprisingly large number of Americans (47 million) watch C-SPAN at least once a week (Hart Research Associates, 2013). With roughly 80 million Americans voting in midterm (Congressional) elections, C-SPAN viewership is substantial. The second mechanism is indirect: the media may watch Congressional debates, be influenced by the language used, and consequently portray Congress in a positive or negative light in editorial columns. Once sentiments about Congress are seeded in the population, they may spread through a process of social contagion. We conclude our analyses by testing whether media portrayal mediates the link between Congressional language and public approval.

Materials and Methods

U.S. Congress Word Corpus

Since 1996, U.S. Congress has transcribed all of its in-session floor debates and made them publicly available through the U.S. Government Publishing Office (Congressional Record, 2015). Using an API, we downloaded transcripts of all the words spoken during floor debates of the U.S. House of Representatives between January 1996 and November 2014, inclusive. We excluded files marked as “extension of remarks,” which contain words that were not actually spoken aloud on the floor but rather entered into the record after the fact. In total, House members uttered 123,781,226 words. Each transcript contained all the words spoken in a single month. To avoid unreliable measurements, we excluded the 22 transcripts that contained fewer than 5000 words. The remaining 206 transcripts (months) had an average word count of 600,840 (SD = 353,784). Since public officials consent to have their words entered into the public record, we did not ask an ethics committee to review this study.

Public Approval of the U.S. Congress

During 198 of the 227 months (87%) between January 1996 and November 2014, Gallup polled the U.S. public on whether they “approve or disapprove of the way Congress is handling its job.” For each survey, Gallup interviews a minimum of 1000 U.S. adults 18 years or older, randomly selected from all 50 US states and the District of Columbia. Gallup weights its results to correct for unequal selection probability, nonresponse bias, and double coverage of landline and cellphone users, and also weights its data according to demographics from the current U.S. census1. Following Frimer et al. (2015), we averaged all the polls taken in a given month and handled missing months with linear interpolation. Public approval averaged 33% (SD = 15%). We also note a limitation of this dataset: the approval item is dichotomous. Approval of Congress may be a continuous construct, and we may miss some variability by relying on a dichotomous measure.
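The monthly aggregation can be illustrated with a short Python sketch. It assumes polls arrive as hypothetical (month index, approval percent) pairs and that the first and last months of the window each have at least one poll, so interpolation never has to extrapolate; Frimer et al. (2015) may have handled these details differently.

```python
from collections import defaultdict


def monthly_approval(polls, months):
    """Average all polls within each month, then fill unpolled months by linear interpolation.

    polls:  (month_index, approval_percent) pairs; a month may have several polls.
    months: ordered month indices covering the study window.
    Assumes the first and last months each have at least one poll (no extrapolation).
    """
    by_month = defaultdict(list)
    for month, value in polls:
        by_month[month].append(value)
    # Step 1: one value per polled month, the mean of that month's polls.
    observed = {m: sum(vals) / len(vals) for m, vals in by_month.items()}
    known = sorted(observed)

    series = []
    for m in months:
        if m in observed:
            series.append(observed[m])
            continue
        # Step 2: interpolate between the nearest polled months on either side.
        left = max(k for k in known if k < m)
        right = min(k for k in known if k > m)
        frac = (m - left) / (right - left)
        series.append(observed[left] + frac * (observed[right] - observed[left]))
    return series
```

For instance, polls of 30% and 34% in month 0 and a single poll of 41% in month 3 yield a series of 32, 35, 38, and 41 for months 0 through 3.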

Text Analysis

We content-analyzed each transcript using LIWC (Pennebaker et al., 2007), with the 2007 LIWC dictionary, which has 63 word categories. LIWC simply counts the number of words in a target transcript that match any of the words in a particular category and calculates a density score: density = (number of matching words) / (total number of words). Past research has demonstrated each category's reliability and validity (see Tausczik and Pennebaker, 2010).
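As an illustration of the density score, the sketch below counts category matches in a transcript. It is not LIWC itself: the regular-expression tokenizer and the small dictionary in the usage example are hypothetical stand-ins, and the trailing-asterisk prefix wildcard mimics the convention used in LIWC dictionary entries. Density is expressed as a percentage of total words, matching the percentage units used for word densities elsewhere in this paper.

```python
import re


def tokenize(text):
    # Simple lowercase word tokenizer (an assumption; LIWC's own tokenizer differs in details).
    return re.findall(r"[a-z']+", text.lower())


def matches(word, entries):
    # An entry ending in '*' is treated as a prefix wildcard, e.g. 'help*' matches 'helping'.
    for entry in entries:
        if entry.endswith("*"):
            if word.startswith(entry[:-1]):
                return True
        elif word == entry:
            return True
    return False


def density(text, entries):
    """Percentage of tokens in `text` that match any entry of one word category."""
    words = tokenize(text)
    if not words:
        return 0.0
    hits = sum(1 for w in words if matches(w, entries))
    return 100.0 * hits / len(words)
```

For example, `density("We must help families and support our neighbors", ["help*", "support*", "famil*"])` matches 3 of 8 tokens and returns 37.5.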

We also used the prosocial words dictionary as an additional word category. It contains 127 words conveying content about collective interests and helping others. Past research developed, introduced, and validated this dictionary (Frimer et al., 2014, 2015).

Agency and Communion Coding

We used the prosocial words dictionary to operationalize the prosocial facet of communion, and the standard LIWC categories to operationalize the broader agency and communion constructs. The standard LIWC categories do not include agency and communion per se. However, some categories imply agentic or communal motives. For example, agency is evident in categories such as achievement, insight, and money. To operationalize agency and communion, we had five research assistants, each blind to the study hypotheses, code each LIWC category as agentic and/or communal. (We examined whether our results were robust to the measurement of agency and communion and generally found that they were. See the Supplemental Materials for details.)

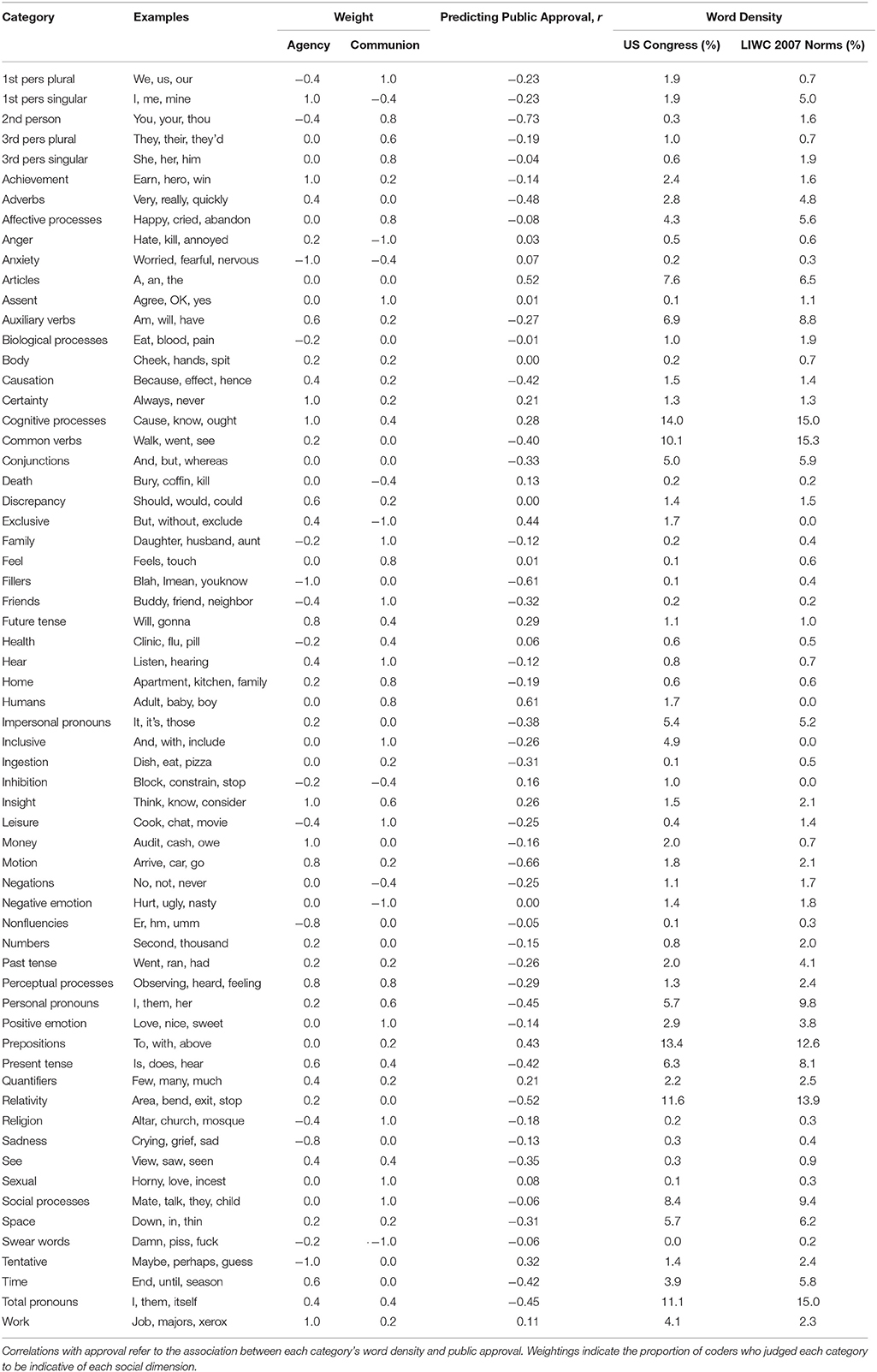

Coders first reviewed definitions of agency and communion derived from past research (Fiske et al., 2007; Cuddy et al., 2008). The definition of agency was “a desire to differentiate oneself from others. It is a concern for competence, intelligence, skill, creativity, achievement, power, mastery, and assertiveness. To lack agency is to be weak, submissive, incompetent, and likely to fail.” The definition of communion was “a desire to be a part of a larger social or spiritual entity. It is concern for friendliness, helpfulness, sincerity, trustworthiness, togetherness, solidarity, and intimacy. To lack communion is to be hostile, manipulative, and antisocial.” Coders then reviewed the names of the categories and a short list of representative keywords from each category2 (found in Table 1).

For agency, the coders assigned a score of +1 if the category was agentic, −1 if the category implied a lack of agency, and 0 if the category was agency-neutral. They did the same, independently, for communion. For example, all five judges assigned a communion score of +1 to the categories family and friend. All five judges also assigned an agency score of −1 to the categories anxiety and tentativeness, because anxious and tentative people seem to lack agency. And all five judges assigned a score of 0 on both agency and communion to the category ingestion.

Coders generally agreed in their judgments (communion ICC = 0.90, agency ICC = 0.82), so we derived weights for agency and communion for each LIWC category by averaging the five judgments (see Table 1). Agency and communion weightings were essentially uncorrelated, r = −0.03, p = 0.81. This aligns with the common theoretical understanding of these dimensions as orthogonal (Fiske et al., 2002; Cuddy et al., 2007).
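A minimal sketch of the weighting step: each category's weight is the mean of the five coders' scores, and the check for orthogonality is a Pearson correlation between the agency and communion weight vectors. The ratings below are hypothetical, and the ICC computation is not reproduced here.

```python
from statistics import mean


def average_weights(ratings):
    """Average each category's coder scores (+1, 0, or -1) into a single weight."""
    return {category: mean(scores) for category, scores in ratings.items()}


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / (sxx * syy) ** 0.5


# Hypothetical scores from five coders for three categories.
agency_ratings = {"family": [0, 0, 0, 0, 0], "anx": [-1, -1, -1, -1, -1], "achieve": [1, 1, 1, 1, 0]}
communion_ratings = {"family": [1, 1, 1, 1, 1], "anx": [0, 0, -1, 0, 0], "achieve": [0, 0, 0, 0, 0]}

agency_w = average_weights(agency_ratings)
communion_w = average_weights(communion_ratings)
categories = sorted(agency_w)
r = pearson_r([agency_w[c] for c in categories], [communion_w[c] for c in categories])
```

With all 63 categories, the same correlation over the two weight vectors yields the r reported above.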

Third Variables

To test whether the link between the language of Congress and the approval of Congress is merely a product of some third variable, we collected data on several factors exogenous to US Congress (e.g., the economy) and several factors endogenous to Congress (e.g., partisan conflict) to act as statistical controls.

Exogenous Factors

We collected data on several factors exogenous to US Congress that could explain both changes in language and changes in public approval. Among them were the effect of world events, US unemployment, and Americans' expectations about the economy. These are factors that previous research (Ramirez, 2009; Frimer et al., 2015) has examined as possible explanations for public approval of Congress.

World Events

We reasoned that if world events (e.g., 9/11) were responsible for changes in Congressional rhetoric and in public approval, they also would have had a similar effect on the language of the US President. We estimated the effect of world events by assessing the levels of prosocial, communal, and agentic language in the rhetoric of the President. Between 1996 and 2014, the President held 411 news conferences. We downloaded all conference transcripts, for a total of 2,205,168 words3. We then analyzed each transcript for prosocial word density using the prosocial words dictionary and averaged the scores of transcripts in each month (M = 1.60%, SD = 0.37%). We used the agency and communion coding described above (see Agency and Communion Coding) to measure the communal and agentic content of Presidential rhetoric (agency M = 0, SD = 4.54; communion M = 0, SD = 5.00).

Unemployment

We downloaded unemployment statistics for persons aged 16 and over from the US Bureau of Labor Statistics (series ID LNS14000000)4. Between 1996 and 2014, the US unemployment rate averaged 6.0% (SD = 1.8%).

Economic Expectations

In accordance with past research (Ramirez, 2009), we operationalized public expectations about the economy as the University of Michigan Index of Consumer Sentiment5 (ICS). The ICS is released monthly and aggregates five measures of consumer confidence: whether respondents (1) are better off financially than they were 1 year ago, (2) expect to be better off financially 1 year into the future, (3) expect business to improve in the coming year, (4) expect the country's financial situation to improve over the next 5 years, and (5) think the present is a good time to buy major household appliances. Over the period of study, scores on this measure ranged from 55.3 to 112.0 (M = 86.67, SD = 13.99).

Endogenous Factors

As additional third variables, we also collected data on several factors endogenous to US Congress, such as the composition and functioning of Congress. Among these were the amount of conflict between the parties, the efficacy of Congress, and the demographic composition of Congress.

Partisan Conflict in Congress

Following Ramirez (2009), we defined a partisan vote as one in which at least 75% of Republicans voted one way and at least 75% of Democrats voted the other. The House voted on 12,563 bills between 1996 and 2014, an average of 55 votes per month (SD = 42). We downloaded the vote data and retained months that had five or more votes6 (200 of 227 months; 88% retention). Finally, we operationalized partisan conflict as the proportion of partisan votes per month (M = 43%, SD = 18%).
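The partisan-vote rule and the monthly retention criterion can be sketched as follows. The sketch assumes each vote is summarized as hypothetical (Republican yea, Republican nay, Democratic yea, Democratic nay) counts and that shares are computed among members casting a yea or nay vote; how abstentions were treated is not stated above.

```python
def is_partisan(rep_yea, rep_nay, dem_yea, dem_nay, threshold=0.75):
    """True if at least `threshold` of Republicans voted one way and at least
    `threshold` of Democrats voted the other way (yea/nay votes only)."""
    rep_total, dem_total = rep_yea + rep_nay, dem_yea + dem_nay
    if rep_total == 0 or dem_total == 0:
        return False
    reps_yes_dems_no = rep_yea >= threshold * rep_total and dem_nay >= threshold * dem_total
    reps_no_dems_yes = rep_nay >= threshold * rep_total and dem_yea >= threshold * dem_total
    return reps_yes_dems_no or reps_no_dems_yes


def monthly_partisan_share(votes_by_month, min_votes=5):
    """Proportion of partisan votes per month, skipping months with fewer than `min_votes` votes."""
    shares = {}
    for month, votes in votes_by_month.items():
        if len(votes) < min_votes:
            continue  # too few votes for a reliable monthly estimate
        shares[month] = sum(is_partisan(*v) for v in votes) / len(votes)
    return shares
```

Comparing counts against `threshold * total` rather than computing complementary shares avoids floating-point surprises exactly at the 75% boundary.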

Congressional Efficacy

We operationalized Congressional efficacy as the number of bills passed in a given month. We downloaded summaries of every vote in US Congress5 and counted as passed any bill that received a majority of votes in the House. On average, the House passed 38 bills (SD = 27) per month.

Presidential Vetoes

We collected veto counts from the U.S. Senate website. Presidential vetoes occurred infrequently over the period of study (1996–2014 total = 39, M = 0.17, SD = 0.50)7.

Congress Composition

We collected data on the composition of Congress, in terms of both party and gender. For each term, we retrieved the proportion of members of Congress who were female (M = 15%, SD = 2%) and the proportion who were Democrats (M = 49%, SD = 4%)8.

Mechanism

To examine how Congressional language could influence public opinion, we tested one possible communication channel: media coverage of Congress. We used Frimer et al.'s (2015) measure of Congressional media coverage, defined as the quantity × the valence of news editorials covering Congress in a given month. Quantity was defined as the number of news editorials about Congress available in Dow Jones & Company's Factiva database. Frimer et al. (2015) then sorted the results by relevance and downloaded the most relevant article for tone assessment. Tone was defined as the (human-coded) valence toward Congress on a nine-point scale ranging from −4 (extremely negative) to +4 (extremely positive).

Results

Theory-Driven Approach

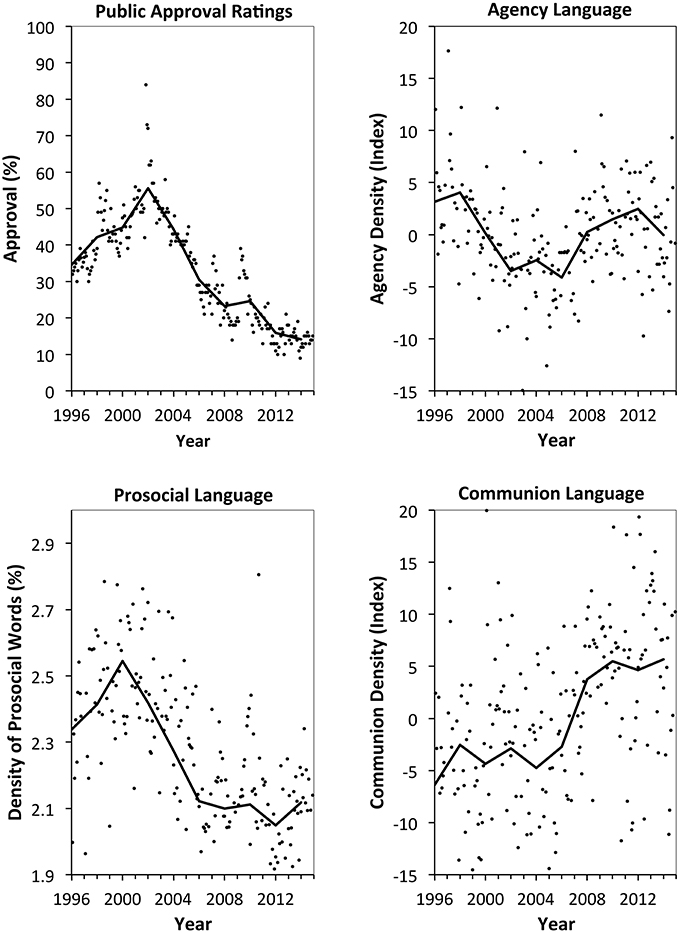

After standardizing the word densities (z-scores) of each word category, we calculated agency and communion indexes by multiplying each category's standardized density by its weight and summing across all categories. The resulting communion scores ranged from −46.1 to 22.0 (M = 0, SD = 9.2). Agency scores ranged from −19.8 to 22.0 (M = 0, SD = 5.1). We then tested whether agentic and communal language in the U.S. House of Representatives positively predicts public approval. Figure 1 shows the trajectory of these types of language and public approval over time. Public approval climbed from 1996 to 2001, declined from 2001 to 2008, then remained low. Prosocial language followed the same trajectory. Interestingly, communal language (based on the broader notion of communion as including a sense of belonging) followed, to some degree, the opposite trend: it was low until 2006, then climbed and remained steadily high after 2008. Communal and prosocial language were negatively related, r(206) = −0.14, p = 0.04. Agentic language dipped slightly from 2001 to 2006 and otherwise remained high.

Figure 1. Language in the U.S. House of Representatives and public approval of Congress over time. Dots represent scores from individual months. Lines connect 2-year session averages. Data for “public approval” and “prosocial words” are taken from Frimer et al. (2015).
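The index computation described above (standardize each category across months, multiply by its weight, sum) can be sketched in plain Python. This is a minimal sketch assuming densities arrive as a dict mapping category name to a list of monthly values; categories without a weight contribute nothing, the numbers in the test are hypothetical, and population SD is assumed because the text does not say which SD was used.

```python
from statistics import mean, pstdev


def zscore_columns(densities):
    """Standardize each category's monthly densities across months."""
    out = {}
    for cat, values in densities.items():
        m, s = mean(values), pstdev(values)
        # A constant category carries no information; give it all-zero z-scores.
        out[cat] = [0.0] * len(values) if s == 0 else [(v - m) / s for v in values]
    return out


def composite_index(densities, weights):
    """Per-month index = sum over categories of weight * z-scored density."""
    z = zscore_columns(densities)
    n_months = len(next(iter(densities.values())))
    return [sum(weights.get(cat, 0.0) * z[cat][i] for cat in z) for i in range(n_months)]
```

Because every z-scored column has mean zero, the resulting index also has mean zero, consistent with the M = 0 reported for both indexes.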

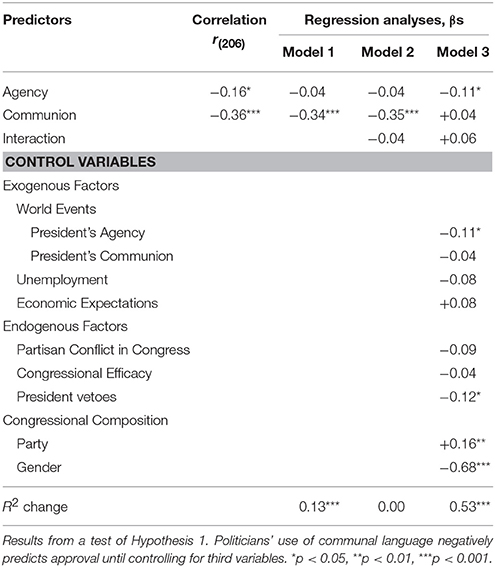

We tested the first two theory-driven hypotheses in separate regression analyses. Hypothesis 1 stated that politicians' use of agentic and communal language (broadly defined) would positively predict public approval of the U.S. Congress. First, we examined simple correlations and found that agentic and communal language were both negatively correlated with public approval (see Table 2). Entering agency and communion into a regression equation predicting public approval (model 1), we found that only communion predicted public approval, and it did so negatively. Entering an agency × communion interaction term did not change the result (model 2). Next, we controlled for third variables: in model 3, we re-ran the model including all exogenous and endogenous factors. Agency became a significant negative predictor of approval, whereas the effect of communion on public approval was no longer significant. These results did not support Hypothesis 1.
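The three regression models can be sketched with NumPy's least-squares solver. This is an illustration under assumptions: predictors are z-scored before forming the interaction term, model 3 is taken to add the controls to model 2 (the text does not say whether the interaction term was retained), and only point estimates are returned, without the standard errors and p-values a full analysis would report. The helper names are hypothetical.

```python
import numpy as np


def zscore(x):
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


def ols(y, predictors):
    """Ordinary least squares; returns coefficients with the intercept first."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y))] + list(predictors))
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def theory_models(approval, agency, communion, controls):
    a, c = zscore(agency), zscore(communion)
    return {
        "model 1": ols(approval, [a, c]),
        "model 2": ols(approval, [a, c, a * c]),  # adds the agency x communion interaction
        "model 3": ols(approval, [a, c, a * c] + [zscore(v) for v in controls]),  # adds controls
    }
```

Swapping the communion index for the prosocial density gives the corresponding models for Hypothesis 2.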

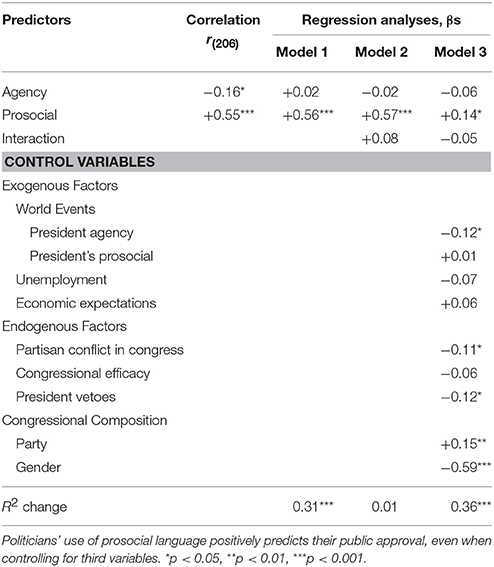

Hypothesis 2 states that the prosocial facet of communion will predict public approval, whereas communion writ large will do so less. To test this, we ran the same basic analyses, replacing the communion index with the narrower prosocial language category. Table 3 presents the results. Supporting Hypothesis 2, we found that prosocial language positively predicted public approval, whereas agency and the interaction term did not (models 1 and 2). Next, we controlled for third variables and found that prosocial word density continued to predict public approval (model 3), suggesting that Congress' use of prosocial language remains a robust, independent positive predictor of public approval, whereas communion does not. This evidence supports Hypothesis 2.

Hypothesis 3 made competing predictions about whether agentic or communal language would be the stronger positive predictor of public approval within the U.S. Congress. Comparing the association between communal language and public approval (r = −0.36) with the association between agentic language and public approval (r = −0.16), we found a significant difference, z = 2.18, p = 0.03. Because both associations are negative, this comparison is difficult to interpret with respect to Hypothesis 3. However, comparing the association between prosocial language and public approval (r = +0.55) with the association between agentic language and public approval (r = −0.16), we found a significant and meaningful difference, z = 7.89, p < 0.001, lending support to Hypothesis 3a. Even in this professional context, prosocial language was a more important predictor of social approval than was agentic language.
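The text does not name the test used to compare correlations. One common choice is the Fisher r-to-z comparison sketched below, which treats the two correlations as coming from independent samples; that is an assumption, since both correlations here share the approval variable, and a dependent-correlation test would also be defensible.

```python
import math


def compare_correlations(r1, r2, n1, n2=None):
    """Fisher r-to-z test for the difference between two correlations.

    Treats the correlations as independent (an assumption). Returns (z, two-tailed p).
    """
    n2 = n1 if n2 is None else n2
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher transformation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p from the standard normal
    return z, p
```

With n = 206 months, `compare_correlations(-0.16, -0.36, 206)` gives z ≈ 2.17 (p ≈ 0.03) and `compare_correlations(0.55, -0.16, 206)` gives z ≈ 7.86, close to the values reported above; small discrepancies may reflect rounding of the correlations or a different test.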

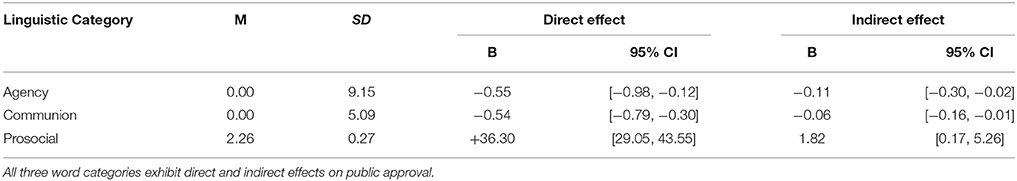

Next, we investigated whether Congressional language may influence public sentiment through the media. Using Hayes' bootstrapping procedure (Hayes, 2013), we tested whether media portrayal mediates the effects of Congressional language (prosocial, communal, and agentic) on public approval (see Table 4). Media portrayal helped explain the link between each of the three linguistic predictors and public sentiment.

Table 4. Direct effects (C-SPAN) and indirect effects (media valence) of linguistic categories on public approval from bootstrapped mediation analyses.
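A bare-bones version of a bootstrapped mediation test is sketched below. It computes the indirect effect as a × b (a: mediator regressed on predictor; b: outcome regressed on mediator, controlling for the predictor), the direct effect c_prime, and a percentile bootstrap confidence interval for a × b. This is an assumption-laden stand-in, not Hayes's PROCESS macro: it uses plain case resampling, which ignores the serial dependence between adjacent months, and the function names are hypothetical.

```python
import numpy as np


def _slopes(y, *xs):
    """OLS slopes (intercept dropped) of y on the given predictors."""
    X = np.column_stack([np.ones(len(y)), *xs])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[1:]


def bootstrap_indirect(x, m, y, n_boot=5000, seed=0):
    """Indirect effect a*b of x on y through m, with a 95% percentile bootstrap CI."""
    x, m, y = (np.asarray(v, dtype=float) for v in (x, m, y))
    rng = np.random.default_rng(seed)
    a = _slopes(m, x)[0]               # path a: x -> m
    c_prime, b = _slopes(y, x, m)      # direct effect c' and path b: m -> y given x
    n = len(x)
    boots = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample months with replacement
        a_i = _slopes(m[idx], x[idx])[0]
        b_i = _slopes(y[idx], x[idx], m[idx])[1]
        boots[i] = a_i * b_i
    lo, hi = np.percentile(boots, [2.5, 97.5])
    return {"indirect": a * b, "direct": c_prime, "ci": (lo, hi)}
```

An indirect effect whose interval excludes zero would count as evidence of mediation through media portrayal in this sketch.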

In a follow-up moderation analysis, we examined whether the effect of agentic and communal language on public approval depends on the demographic make-up of Congress (in terms of both political leaning and gender). In particular, we tested whether the public approved of stereotype-confirming behavior (e.g., communal females, agentic males) or stereotype-disconfirming behavior (e.g., agentic females, communal males). The results were mixed but, on balance, favored the stereotype-disconfirming hypothesis. Our analyses suggested that agency became a negative predictor of public approval only when Congress was male-dominated; it did not predict public approval when Congress was less dominated by males. Communal language garnered approval when Congress was dominated by males but elicited disapproval when it was less dominated by males. Prosocial language consistently predicted higher approval, and the effect was stronger when Congress had fewer males and more Republicans. See the Supplemental Materials for details.

Data-Driven Approach

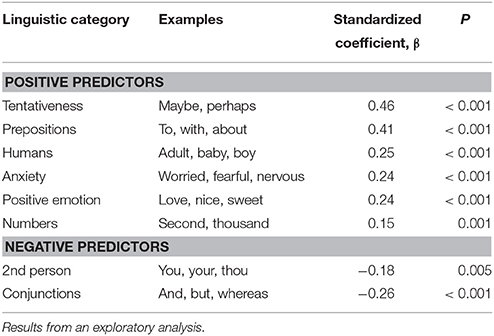

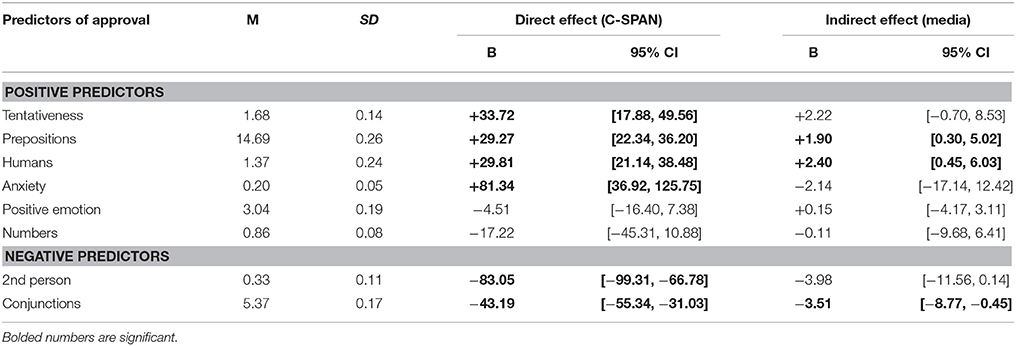

To explore whether extant theory may have missed certain features of impression formation, we conducted a stepwise regression analysis with all 63 LIWC categories as possible predictors of public approval. To limit the potential for false positive results, we set conservative limits of p < 0.005 for inclusion and p > 0.01 for exclusion in the model. Eight of 63 categories entered the model (see Table 5). Public approval of the U.S. Congress was highest when politicians used tentative language, talked about humans, expressed both positive emotion and anxiety, used prepositions and numbers, and avoided the use of conjunctions and the second person. We interpret these results in the Discussion.
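The stepwise procedure with the stated entry (p < 0.005) and removal (p > 0.01) thresholds can be sketched as forward selection with a backward removal check after each step. Two assumptions to flag: p-values use a large-sample normal approximation to the t distribution (reasonable with roughly 200 months, but not exact), and cycling is bounded by a hypothetical `max_steps` cap. Statistical packages implement stepwise regression with their own conventions, so this should not be read as the exact procedure used.

```python
import math

import numpy as np


def _pvalues(y, X):
    """OLS two-tailed p-values using a normal approximation to the t distribution."""
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - k)
    cov = sigma2 * np.linalg.inv(X.T @ X)
    t = beta / np.sqrt(np.diag(cov))
    return [math.erfc(abs(v) / math.sqrt(2)) for v in t]


def stepwise(y, candidates, p_enter=0.005, p_remove=0.01, max_steps=100):
    """Forward selection with backward removal.

    candidates: dict mapping category name -> 1-D array of monthly densities.
    """
    y = np.asarray(y, dtype=float)
    ones = np.ones(len(y))
    selected = []
    for _ in range(max_steps):
        changed = False
        # Forward step: add the unselected candidate with the smallest p-value, if below p_enter.
        best, best_p = None, p_enter
        for name in candidates:
            if name in selected:
                continue
            X = np.column_stack([ones] + [candidates[c] for c in selected + [name]])
            p = _pvalues(y, X)[-1]
            if p < best_p:
                best, best_p = name, p
        if best is not None:
            selected.append(best)
            changed = True
        # Backward step: drop the selected predictor with the largest p-value, if above p_remove.
        if selected:
            X = np.column_stack([ones] + [candidates[c] for c in selected])
            p = _pvalues(y, X)[1:]
            worst = int(np.argmax(p))
            if p[worst] > p_remove:
                selected.pop(worst)
                changed = True
        if not changed:
            break
    return selected
```

Setting the removal threshold above the entry threshold, as in the text, keeps a just-added predictor from being dropped immediately.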

To test whether each of the categories predicting approval did so directly or indirectly through media portrayal, we ran mediation analyses for each of the eight significant predictors, testing media portrayal as a mediator (see Table 6). The results suggest that media portrayal helps explain how the use of prepositions, conjunctions, and human words could influence the public's feelings toward Congress.

Table 6. Direct effects (C-SPAN) and indirect effects (media valence) of linguistic categories on public approval from bootstrapped mediation analyses.

Discussion

What demeanor makes the best impression? We examined whether the usage of different categories of words predicts social approval in a pressing real-world setting: the U.S. Congress. Over the period for which transcripts of Congress are available (since 1996), public approval has varied dramatically. Using both theory-driven and data-driven approaches, we found that several linguistic categories were predictive of public approval. For example, public approval is highest when public officials talk about helping and humans, express positive emotion, and use tentative language.

Theory-Driven Approach

The theory-driven component of this paper examined two hypotheses concerning the linguistic predictors of public approval of Congress. Hypothesis 1 arose from the theory that admired groups are highly agentic and highly communal (Fiske et al., 2002). It states that agentic and communal language should independently and positively predict approval. Our analyses did not support Hypothesis 1.

Noting that communion comes in more than one form, we derived Hypothesis 2, which states that only the prosocial, helping component of communion would positively predict approval, whereas the belonging motive would not. Our results supported Hypothesis 2. The American public approved of Congress more when its members spoke about helping, but not when they spoke about belonging. When hearing distant others speak, such as members of the U.S. Congress, observers might value helping more than belonging, because helping would more directly serve the public's interests. These findings highlight the importance of a distinction between belonging and helping as components of communion.

Hypothesis 3 examined two competing predictions about whether agentic or communal language would be the stronger, more positive predictor of public approval. Comparing the predictive power of prosocial and agentic language, we found support for Hypothesis 3b: even in this professional setting, prosocial language was more positively associated with public approval than was agentic language. This finding reveals the far-reaching primacy of the desire for warmth in others over the desire for competence, even in some professional contexts. We also tested whether language exerted a direct effect on public approval or an indirect effect via media portrayal. We found that communal, agentic, and prosocial language have both direct and indirect effects on approval. That is, Congress's words could influence the public through the media and also through other channels, such as public viewership of C-SPAN.

We acknowledge that our theory-driven approach carries a number of limitations. For one, our approach is correlational and is therefore subject to the third-variable and directionality problems. We did control for the effects of several exogenous factors, such as economic conditions, and endogenous factors, such as congressional performance, and still found that prosocial language predicted public approval. We did not, however, examine whether congressional language still predicts public approval when controlling for other theoretically relevant demographic variables, such as the political orientation, gender, or age of the members of the public. Moreover, unidentified variables may have suppressed a real and meaningful relationship between agentic word use and public approval.

Along the same lines, our approach leaves uncertainty about directionality. For example, the negative relationship between communion and public approval could result from the influence of negative approval ratings on bonding among members of Congress. Perhaps as members of Congress feel more under threat from the public, they begin to speak more about Congress itself as a community to which they belong.

Another limitation of this study is that, because we did not predict a negative relationship between communal language and public approval, we did not develop a measure of “belonging” language. Hence, we are unable to confirm our explanation that “belonging” words in the context of Congress appear sappy or flaky. In the Supplemental Materials, however, we did find some additional evidence for this explanation using the agency and communion dictionaries of Hart et al. (2011). When we regressed public approval on Hart's communion measure while controlling for prosocial words (theoretically leaving only the variability due to “belonging”), communion uniquely and negatively predicted approval, suggesting that the belonging form of communion could elicit disapproval of Congress. Future research could more directly distinguish belonging and helping as components of communion by developing a “belonging” dictionary to accompany the existing prosocial word dictionary.
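The logic of “controlling for prosocial words” can be made concrete with the Frisch–Waugh–Lovell result: in an OLS regression of approval on communion and prosocial words, the communion slope equals the slope obtained after first removing from communion everything linearly predictable from prosocial words. The sketch below checks this on simulated series. The variable names, effect sizes, and the label `belonging_part` for the residual are hypothetical, and this is not the Supplemental analysis itself.

```python
import numpy as np


def ols(y, *cols):
    """OLS coefficients [intercept, slope_1, slope_2, ...] of y on the given columns."""
    design = np.column_stack([np.ones(len(y)), *cols])
    beta, _, _, _ = np.linalg.lstsq(design, y, rcond=None)
    return beta


rng = np.random.default_rng(2)
n = 208
prosocial = rng.normal(size=n)                      # simulated prosocial word rate
communion = 0.7 * prosocial + rng.normal(size=n)    # communion overlaps with prosocial
approval = 0.5 * prosocial - 0.3 * communion + rng.normal(size=n)

# Communion slope with prosocial words held constant (multiple regression).
b_joint = ols(approval, communion, prosocial)[1]

# Same slope via residualization: strip the prosocial part out of communion first.
a0, a1 = ols(communion, prosocial)
belonging_part = communion - (a0 + a1 * prosocial)
b_resid = ols(approval, belonging_part)[1]

print(b_joint, b_resid)
```

The two printed slopes agree up to floating-point error, which is why the residual can be read as “communion minus its prosocial component.”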

Data-Driven Approach

In an exploratory analysis, we entered all 63 LIWC categories as candidate predictors of public approval and found that eight predicted approval in a stepwise regression. Each result is merely suggestive until replicated. Nonetheless, we offer speculative theoretical explanations for these predictors before concluding.

Tentativeness

The standard word category that most strongly predicted public approval was tentativeness; the more tentatively Congress spoke, the more the public approved of them (r = 0.46). The tentativeness dictionary contains words like “perhaps,” “maybe,” and “guess,” which may indicate a lack of conviction or certainty in the speaker. Consistent with this interpretation, our coding weights suggest that tentativeness may signal a lack of agency (agency weight = −1, communion weight = 0). Alternatively, expressing opinions with uncertainty may exude humility and receptiveness to others' opinions. Tentative expression may disarm an audience, and thus facilitate communication and improve impression formation. In his autobiography, Benjamin Franklin described using tentative language to positive effect when communicating with both colleagues and adversaries:

I grew very artful and expert in drawing people, even of superior knowledge, into concessions, the consequences of which they did not foresee, entangling them in difficulties out of which they could not extricate themselves, and so obtaining victories that neither myself nor my cause always deserved. I continu'd this method some few years, but gradually left it, retaining only the habit of expressing myself in terms of modest diffidence; never using, when I advanced anything that may possibly be disputed, the words certainly, undoubtedly, or any others that give the air of positiveness to an opinion; but rather say, I conceive or apprehend a thing to be so and so; it appears to me…This habit, I believe, has been of great advantage to me when I have had occasion to inculcate my opinions, and persuade men into measures that I have been from time to time engag'd in promoting (Franklin, 2006, Ch. 2).

Anxiety

Anxious language also positively predicted public approval. Our coders rated anxiety as a strong signal of lacking agency (weight = −1) and as a weaker signal of lacking communion (weight = −0.4). Thus, anxiety may predict public approval because it indicates a lack of agency and communion. Another possible explanation is that expressing anxiety signals threat. When people feel threatened, they tend to accept their position in the social hierarchy (Jost et al., 2004) and seek protection from leaders (Klapp, 1948; Atran and Norenzayan, 2004). Whether members of Congress use anxious language to deliberately incite fear, or are merely reacting to threatening circumstances, remains unclear from the present data.

Second-Person

We also found that the use of second-person words predicts lower public approval. When Congress used the word “you,” the public tended to like them less. Second-person words were rated as indicating the presence of communion (weight = 0.8) and the absence of agency (weight = −0.4). Thus, second-person language may convey the “flakiness” we suggest the public perceives when Congress talks about belonging. Alternatively, the public may perceive second-person speech as accusatory, or as an attempt to avoid taking personal responsibility.

Prepositions

The use of prepositions also robustly predicted public approval. A Congress that used more words like “to,” “with,” and “above” had higher public approval than one that used these words less. Our coding weights suggest preposition usage is mostly unrelated to agency (weight = 0) and communion (weight = 0.2). This variable may constitute a theoretical blind spot, the importance of which has gone unidentified by the agency and communion constructs. Psychological correlates of preposition use include education level and concern with precision (Hartley et al., 2003). Hence, this correlation may, like tentativeness, arise from an implicit preference among Americans for an educated, precise-sounding government.
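Why a category with near-zero coder weights is a blind spot can be seen in a small sketch. Assume, for illustration only, that agency and communion scores are weighted sums of category rates using the coder weights reported in this Discussion (the paper's exact scoring procedure is not restated here). Then a change in a category weighted 0 on both dimensions, such as conjunctions, leaves both composite scores unchanged, so any effect it has on approval cannot surface in an analysis built on those composites. The rates and the name `composite_scores` are hypothetical.

```python
# Coder weights (agency, communion) as reported in this Discussion.
WEIGHTS = {
    "tentative": (-1.0, 0.0),
    "anxiety": (-1.0, -0.4),
    "second_person": (-0.4, 0.8),
    "prepositions": (0.0, 0.2),
    "humans": (0.0, 0.8),
    "positive_emotion": (0.0, 1.0),
    "numbers": (0.2, 0.0),
    "conjunctions": (0.0, 0.0),
}


def composite_scores(rates):
    """Hypothetical composites: weighted sums of category rates (percent of words)."""
    agency = sum(WEIGHTS[cat][0] * rate for cat, rate in rates.items())
    communion = sum(WEIGHTS[cat][1] * rate for cat, rate in rates.items())
    return agency, communion


month_a = {"tentative": 2.0, "conjunctions": 5.0, "humans": 1.0}  # hypothetical rates
month_b = dict(month_a, conjunctions=9.0)  # same month, many more conjunctions
print(composite_scores(month_a))  # → (-2.0, 0.8)
print(composite_scores(month_b))  # → (-2.0, 0.8)
```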

Humans

When delegates speak about boys, girls, adults, and children, Americans show approval. Our coders thought talking about humans indicated communion (weight = 0.8) but not agency (weight = 0). Human words correlated with prosocial words, r(206) = 0.519, p < 0.001, suggesting that their usage may indicate the “helping” component of communion. The “humans” LIWC category has been largely unexamined by scholars to date (Tausczik and Pennebaker, 2010). However, one study found that mindfulness-based meditation can increase people's tendency to write about humans, perhaps because meditation increases empathy (Block-Lerner et al., 2007). Speaking about human beings may make politicians come across as empathic.
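The notation r(206) reports degrees of freedom, which for a Pearson correlation are n − 2, so this correlation rests on 208 paired observations. The usual test statistic is t = r√(df / (1 − r²)). The short Python sketch below (function name hypothetical) applies it to the reported value, giving t ≈ 8.71, well beyond the roughly 3.3 to 3.4 needed for two-tailed p < 0.001 at this sample size.

```python
import math


def t_from_r(r, df):
    """t statistic for H0: rho = 0, given Pearson r and df = n - 2."""
    return r * math.sqrt(df / (1 - r * r))


# Humans vs. prosocial words, as reported above: r(206) = 0.519.
print(round(t_from_r(0.519, 206), 2))  # → 8.71
```

The same conversion applies to the other correlations reported in this Discussion, such as r(215) = 0.24 (t ≈ 3.6).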

Positive Emotion

When delegates use words like “love,” “nice,” and “sweet,” the public approves. Positive emotion was coded as a strong indicator of communion (weight = 1) but not agency (weight = 0). Again, this suggests that specific components of communion may better predict public approval than the meta-construct as a whole. Emotional contagion may also explain this effect: when leaders use positive emotion words, followers' moods improve (Bono and Ilies, 2006). Delegates' words may therefore improve viewers' affect, which in turn may be reflected in their approval of Congress.

Numbers

Number words positively predict public approval. We are unaware of previous research on the psychological correlates of speaking about numbers, and number words received little weight on agency (weight = 0.2) or communion (weight = 0). Speculatively, number words may be a symptom of representatives describing specific issues and plans, and specifics may imply agentic action. Given the negative or null relationships between agentic language and public approval, these results raise the possibility that some sub-component or sub-form of agency may have a positive effect on impression formation. Perhaps audiences process agency implicitly: an agentic mode (e.g., being specific) may make a good impression, whereas an agentic message (e.g., talking about work) may have less of an effect.

Conjunctions

Public approval drops when delegates use more conjunctions: words like “and,” “but,” and “whereas.” Coders did not rate conjunctions as indicative of agency or communion (both weights = 0). This category may represent another blind spot, not picked up by broader theoretical constructs. This LIWC category remains largely unexplored and so has no known psychological correlates (Tausczik and Pennebaker, 2010). Conjunctions connect two separate phrases in a single, complex sentence, so perhaps people dislike conjunctions because they prefer straightforward communication. If the use of conjunctions were an indicator of complex language, we might expect a positive relationship between conjunction usage and other indicators of rhetorical complexity, such as sentence length (words per sentence) and the density of words with six or more letters. Indeed, we found that the density of conjunctions correlates positively with sentence length, r(215) = 0.24, p < 0.001, but (unexpectedly) correlates negatively with the density of six-letter words, r(215) = −0.38, p < 0.001. While the negative link between conjunction usage and public approval could mean that the public dislikes obfuscating language, the present results are inconclusive on this matter. Future research should examine how conjunction use modifies impression formation and the effect of conjunction use on the social desirability of a speaker.
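The three quantities compared above (conjunction density, words per sentence, and the density of words with six or more letters) can be approximated with a toy counter in Python. The conjunction list is a small hypothetical stand-in, since LIWC's actual dictionary is larger, and the word and sentence tokenization rules are simplifying assumptions. In a sketch like this, each period's transcripts would yield one value per measure, and those series could then be correlated.

```python
import re

# Hypothetical toy list; LIWC's real conjunction category contains more words.
CONJUNCTIONS = {"and", "but", "whereas", "or", "because", "although"}


def text_stats(text):
    """Percent conjunctions, words per sentence, percent words of 6+ letters."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n = len(words)
    n_conj = sum(w.lower() in CONJUNCTIONS for w in words)
    n_long = sum(len(w) >= 6 for w in words)
    return {
        "conjunctions_pct": 100 * n_conj / n,
        "words_per_sentence": n / len(sentences),
        "sixltr_pct": 100 * n_long / n,
    }


stats = text_stats("We passed the bill. And we funded schools, but not roads.")
print(stats)
```

In this example the sentence pair has 11 words in 2 sentences, so words per sentence is 5.5; “And” and “but” count as conjunctions, and “passed,” “funded,” and “schools” count as six-or-more-letter words.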

Conclusion

What should a person say to make a favorable impression in a professional context? This work examined the linguistic predictors of public approval of the U.S. Congress. We emphasize that our findings should be interpreted tentatively, given that we can make no causal inferences from this correlational analysis. Nonetheless, our results suggest that speaking about helping, speaking tentatively, and avoiding second-person words may help Congress improve its relationship with the population it serves.

Author Contributions

JF developed the theoretical framework of the paper and guided the statistical analysis. JF proposed using coders to create agency and communion scores. JF contributed to writing through substantial editing of each draft of the paper. AD conducted the statistical analysis and designed and carried out the coding task. AD also reviewed theoretically relevant literature and wrote several drafts of each section of the paper. AD also organized and wrote the references. Both authors agree to be accountable for all aspects of the work.

Funding

This research was supported by a grant from the Social Sciences and Humanities Research Council of Canada to JF (grant number 435-2013-0589).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Ruth Vanstone, Alanna Johnson, L'jogreti Soto, and Mackenzie Sapacz for coding linguistic categories, the Sunlight Foundation and capitolwords.org9 for helping us gain access to the Congressional Record, and the Social Sciences and Humanities Research Council of Canada for funding support [435-2013-0589].

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2016.00240

Footnotes

1. ^For more information on Gallup polling methods, see http://www.gallup.com/185477/gallup-poll-social-series-work.aspx?utm_source=METHODOLOGY&utm_medium=topic&utm_campaign=tiles.

2. ^For category names and keywords, see http://liwc.net/descriptiontable1.php.

3. ^Retrievable from www.presidency.ucsb.edu.

4. ^Retrievable from www.bls.gov/data.

5. ^Retrievable from http://www.sca.isr.umich.edu.

6. ^Retrievable from voteview.com/partycount.htm.

7. ^Retrievable from www.senate.gov/reference/Legislation/Vetoes/vetoCounts.htm.

8. ^Retrievable from www.fec.gov.

9. ^Data from: Capitolwords. Sunlight Foundation (2015). Available online at: http://capitolwords.org.

References

Abele, A. E., and Brack, S. (2013). Preference for other persons' traits is dependent on the kind of social relationship. Soc. Psychol. 44, 86–94. doi: 10.1027/1864-9335/a000138

Atran, S., and Norenzayan, A. (2004). Religion's evolutionary landscape: counterintuition, commitment, compassion, communion. Behav. Brain Sci. 27, 713–730. doi: 10.1017/S0140525X04000172

Bakan, D. (1966). The Duality of Human Existence: An Essay on Psychology and Religion. Oxford, England: Rand McNally.

Block-Lerner, J., Adair, C., Plumb, J. C., Rhatigan, D. L., and Orsillo, S. M. (2007). The case for mindfulness-based approaches in the cultivation of empathy: does nonjudgmental, present-moment awareness increase capacity for perspective-taking and empathic concern? J. Marital Fam. Ther. 33, 501–516. doi: 10.1111/j.1752-0606.2007.00034.x

Bono, J. E., and Ilies, R. (2006). Charisma, positive emotions and mood contagion. Leadersh. Q. 17, 317–334. doi: 10.1016/j.leaqua.2006.04.008

Brambilla, M., Rusconi, P., Sacchi, S., and Cherubini, P. (2011). Looking for honesty: the primary role of morality (vs. sociability and competence) in information gathering. Eur. J. Soc. Psychol. 41, 135–143. doi: 10.1002/ejsp.744

Bump, P. (2015, May 13). Even when Congress does things, people still hate it. The Washington Post. Retrieved from: http://www.washingtonpost.com

Cislak, A., and Wojciszke, B. (2008). Agency and communion are inferred from actions serving interests of self or others. Eur. J. Soc. Psychol. 38, 1103–1110. doi: 10.1002/ejsp.554

Congressional Record (2015). Data from: Congressional Record. U.S. Government Publishing Office. Available online at: http://www.gpo.gov/fdsys/browse/collection.action?collectionCode=CREC

Congress and the Public (2015). Data from: Congress and the Public. Gallup. Available online at: www.gallup.com/poll/1600/congress-public.asp.

Cuddy, A. J., Fiske, S. T., and Glick, P. (2007). The BIAS map: behaviors from intergroup affect and stereotypes. J. Pers. Soc. Psychol. 92:631. doi: 10.1037/0022-3514.92.4.631

Cuddy, A. J., Fiske, S. T., and Glick, P. (2008). Warmth and competence as universal dimensions of social perception: the stereotype content model and the BIAS map. Adv. Exp. Soc. Psychol. 40, 61–149. doi: 10.1016/S0065-2601(07)00002-0

Eggerton, J. (2013). Exclusive: C-SPAN Study Finds Almost Quarter of Cable/Satellite Subs Watch Weekly. Retrieved from: http://www.broadcastingcable.com

Fiske, S. T., Cuddy, A. J., and Glick, P. (2007). Universal dimensions of social cognition: warmth and competence. Trends Cogn. Sci. 11, 77–83. doi: 10.1016/j.tics.2006.11.005

Fiske, S. T., Cuddy, A. J., Glick, P., and Xu, J. (2002). A model of (often mixed) stereotype content: competence and warmth respectively follow from perceived status and competition. J. Pers. Soc. Psychol. 82:878. doi: 10.1037/0022-3514.82.6.878

Franklin, B. (2006). The Autobiography of Benjamin Franklin (Vol. 1). Retrieved from: http://www.gutenberg.org/files/20203/20203-h/20203-h.htm

Frimer, J. A., Aquino, K., Gebauer, J. E., Zhu, L. L., and Oakes, H. (2015). A decline in prosocial language helps explain public disapproval of the US Congress. Proc. Natl. Acad. Sci. U.S.A. 112, 6591–6594. doi: 10.1073/pnas.1500355112

Frimer, J. A., Schaefer, N. K., and Oakes, H. (2014). Moral actor, selfish agent. J. Pers. Soc. Psychol. 106, 790–802. doi: 10.1037/a0036040

Frimer, J. A., Walker, L. J., Dunlop, W. L., Lee, B. H., and Riches, A. (2011). The integration of agency and communion in moral personality: evidence of enlightened self-interest. J. Pers. Soc. Psychol. 101, 149. doi: 10.1037/a0023780

Hart, C. M., Sedikides, C., Wildschut, T., Arndt, J., Routledge, C., and Vingerhoets, A. J. (2011). Nostalgic recollections of high and low narcissists. J. Res. Pers. 45, 238–242. doi: 10.1016/j.jrp.2011.01.002

Hartley, J., Pennebaker, J. W., and Fox, C. (2003). Abstracts, introductions and discussions: how far do they differ in style? Scientometrics 57, 389–398. doi: 10.1023/A:1025008802657

Hayes, A. F. (2013). Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach. New York, NY: Guilford Press.

Jost, J. T., Banaji, M. R., and Nosek, B. A. (2004). A decade of system justification theory: Accumulated evidence of conscious and unconscious bolstering of the status quo. Polit. Psychol. 25, 881–919. doi: 10.1111/j.1467-9221.2004.00402.x

Klapp, O. E. (1948). The creation of popular heroes. Am. J. Sociol. 54, 135–141. doi: 10.1086/220292

Leach, C. W., Ellemers, N., and Barreto, M. (2007). Group virtue: the importance of morality (vs. competence and sociability) in the positive evaluation of in-groups. J. Pers. Soc. Psychol. 93, 234–249. doi: 10.1037/0022-3514.93.2.234

McAdams, D. P. (1993). The Stories We Live By: Personal Myths and the Making of the Self. New York, NY: Guilford Press.

Pennebaker, J. W., Booth, R. J., and Francis, M. E. (2007). Linguistic Inquiry and Word Count: LIWC [Computer software]. Austin, TX: LIWC.net.

Ramirez, M. D. (2009). The dynamics of partisan conflict on congressional approval. Am. J. Pol. Sci. 53, 681–694. doi: 10.1111/j.1540-5907.2009.00394.x

Hart Research Associates (2013). C-SPAN at 34: a Bi-Partisan, Politically Active Audience that Continues to Grow. Available online at: http://series.c-span.org/About/The-Company/Press-Releases/

Rosenberg, S., Nelson, C., and Vivekananthan, P. S. (1968). A multidimensional approach to the structure of personality impressions. J. Pers. Soc. Psychol. 9, 283. doi: 10.1037/h0026086

Schwartz, S. H. (1992). Universals in the content and structure of values: theoretical advances and empirical tests in 20 countries. Adv. Exp. Soc. Psychol. 25, 1–65. doi: 10.1016/S0065-2601(08)60281-6

Tausczik, Y. R., and Pennebaker, J. W. (2010). The psychological meaning of words: LIWC and computerized text analysis methods. J. Lang. Soc. Psychol. 29, 24–54. doi: 10.1177/0261927X09351676

Todorov, A., Mandisodza, A. N., Goren, A., and Hall, C. C. (2005). Inferences of competence from faces predict election outcomes. Science 308, 1623–1626. doi: 10.1126/science.1110589

Keywords: impression formation, language, LIWC, the U.S. Congress, agency, communion

Citation: Decter-Frain A and Frimer JA (2016) Impressive Words: Linguistic Predictors of Public Approval of the U.S. Congress. Front. Psychol. 7:240. doi: 10.3389/fpsyg.2016.00240

Received: 07 October 2015; Accepted: 05 February 2016;

Published: 23 February 2016.

Edited by:

Jacob B. Hirsh, University of Toronto, Canada

Reviewed by:

Eric Mayor, University of Neuchâtel, Switzerland

Mauro Bertolotti, Catholic University of The Sacred Heart, Italy

Copyright © 2016 Decter-Frain and Frimer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jeremy A. Frimer, j.frimer@uwinnipeg.ca

Ari Decter-Frain
