- ¹Department of Teacher Education and School Research, Faculty of Educational Sciences, University of Oslo, Oslo, Norway

- ²Faculty of Educational Sciences, Centre for Educational Measurement, University of Oslo, Oslo, Norway

Introduction

Scientific reasoning represents a set of skills students need to acquire in order to successfully participate in scientific practices. Hence, educational research has focused on developing and validating assessments of student learning that capture the two different components of the construct, namely formal and informal reasoning. In this opinion paper, we explain why we believe that it is time for a new era of scientific reasoning assessments that bring these components together, and how computer-based assessments (CBAs) might accomplish this.

Reasoning is a mental process that enables people to construct new representations from existing knowledge (Rips, 2004). It includes cognitive processing that is directed at finding solutions to problems by drawing conclusions based on logical rules or rational procedures (Mayer and Wittrock, 2006). When people reason, they attempt to go “beyond the information given” to create a new representation that is assumed to be true (Bruner, 1957). The process of scientific reasoning comprises formal and informal reasoning (Galotti, 1989; Kuhn, 1993). Formal reasoning is characterized by rules of logic and mathematics, with fixed and unchanging premises (Perkins et al., 1991; Sadler, 2004). It encompasses the ability to formulate a problem, design scientific investigations, evaluate experimental outcomes, and make causal inferences in order to form and modify theories related to the phenomenon under investigation (Zimmerman, 2007). Formal scientific reasoning can be applied not only within the context of science, but in almost every other domain of society (Han, 2013). It can be used to make informed decisions regarding everyday life problems (Amsterlaw, 2006); for example, individuals use proportional reasoning to decide the fastest way to travel from one place to another.

In informal reasoning, students draw inferences from uncertain premises as they ponder ill-structured, open-ended, and debatable problems without definitive solutions (Kuhn, 1991). When students reason formally, they work with the given premises in belief mode, which concerns arriving at true and warranted conclusions, whereas informal reasoning is carried out in design mode, which focuses on identifying relevant premises that can be used to establish a strong argument (Bereiter and Scardamalia, 2006). Because the premises of informal reasoning are uncertain and can be questioned, its conclusions can be withdrawn in the light of new evidence (Evans, 2005). This process involves weighing the pros and cons of a particular decision (Voss et al., 1991). Learners engage in informal reasoning when they deal with socio-scientific issues, that is, controversial issues that are influenced by social norms and conceptually related to science, such as whether or not to consume genetically modified food or to support a government's plan for a car-free city (Sadler and Zeidler, 2005).

Both types of reasoning are used to manipulate existing information and share the same goal of generating new knowledge. While formal reasoning is judged by whether or not conclusions are valid, informal reasoning is assessed based on the quality of premises and their potential for strengthening conclusions.

The manipulation of existing information in formal and informal reasoning processes can be described with dual-process theories of reasoning (Evans, 2007; Glöckner and Witteman, 2010). According to these theories, there are two distinct processing modes: Type 1 processes are autonomous and intuitive processes that do not heavily rely on individuals' working memory, whereas Type 2 processes involve using mental simulation or thought experiments to support hypothetical thinking and reflective processes that require working memory (Evans and Stanovich, 2013). An individual's first response to a problem tends to be processed automatically and refers to their past experiences and personal beliefs (i.e., Type 1 process: Evans, 2008). For example, when using formal reasoning to decide the fastest way to travel from A to B, an individual's first thought might be to take a plane since it is commonly considered the fastest means of transport. However, the individual might change his or her mind after processing all necessary information, such as the travel time to and from the airport.

Not every individual is able to progress after the first stage and produce a rational decision. Those who are confined to Type 1 processes make intuitive decisions, whereas more experienced individuals utilize Type 2 processes to construct a well-informed choice (Wu and Tsai, 2011). In the example of using informal reasoning to decide whether or not to support a government's plan for a car-free city, intuitive thought might lead individuals to support the plan based on their experiences with pollution. However, with the purpose of generating new representations, only those who can (a) elaborate on their intuitive decision with acceptable justifications; (b) address opposite arguments; and (c) think about how the plan can be further improved are utilizing Type 2 processes. In this regard, there is a strong connection between formal and informal reasoning, in which both types of reasoning share the common goal of generating new knowledge by processing available information through the dual stages.

Activity in belief mode covers a broad range of scientific practices in school science (Bereiter and Scardamalia, 2006). Outside the classroom, however, students need to make decisions regarding problems with uncertain premises by working in design mode. Teachers should have ways to assess how students improve on their existing ideas by searching beyond what they already know rather than simply making sure their ideas align with accepted theories. It is therefore important to build a scientific reasoning assessment that incorporates both formal and informal reasoning skills in order to better measure the constructs underlying scientific reasoning. In the following, we argue that these complex skills can be best assessed using computer-based testing.

Joint Assessment of Formal and Informal Reasoning: What Can Computer-Based Testing Offer?

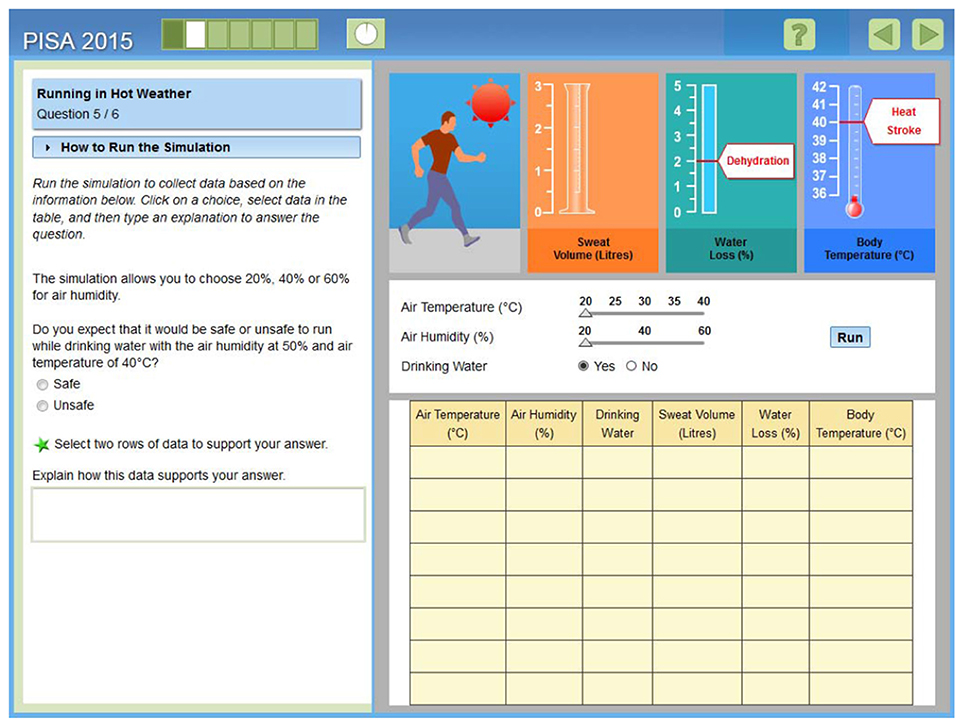

The rapid advancement of computer technology has changed the way scientific reasoning is assessed. Given that technology can offer rich reasoning activities that can be modified to serve different purposes, such as formative and summative assessment, static forms of assessment (e.g., paper-and-pencil tests) have been replaced by computer-based tests that contain dynamic and highly interactive simulations. This shift has taken place for a number of reasons: First, today's technology can deliver assessments that use multiple representations and various item formats to measure complex skills that are not easily measured in traditional paper-based testing (Quellmalz et al., 2013). Assessment of complex skills such as multivariable reasoning, in which learners disentangle the effects of independent variables on dependent variables in order to test their hypotheses, can be conducted efficiently with the use of simulations. Learners can run as many experiments as needed to observe how the results change in response to changes in the input variables (see Figure 1). Second, CBAs can provide a broad range of data beyond students' mere performance on tasks. Additional information is stored in log files, including data on response times, the sequence of actions, and the specific strategies used to deal with multiple variables (Greiff et al., 2016).

Figure 1. Screenshot of the PISA (Programme for International Student Assessment) field trial item, Running in Hot Weather. Multivariable reasoning is required to solve the item (OECD, 2013, p. 39).
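To illustrate how log-file data of this kind might be analyzed, the following minimal sketch counts how often a student changed exactly one input variable between two consecutive simulation runs, one possible indicator of a controlled, multivariable reasoning strategy. The log format, the variable names, and the function names are hypothetical assumptions made for illustration; they are not taken from the PISA item or from the cited studies.

```python
from typing import Dict, List

# One simulation run: input variable name -> value set by the student.
Trial = Dict[str, float]


def changed_variables(previous: Trial, current: Trial) -> List[str]:
    """Return the names of input variables whose values differ between two runs."""
    return sorted(name for name in current if current[name] != previous.get(name))


def controlled_comparisons(trials: List[Trial]) -> int:
    """Count consecutive run pairs in which exactly one input variable changed."""
    return sum(
        1
        for previous, current in zip(trials, trials[1:])
        if len(changed_variables(previous, current)) == 1
    )


# Hypothetical log of three runs (variable names are illustrative only).
log = [
    {"air_temperature": 20, "air_humidity": 20, "drinks_water": 1},
    {"air_temperature": 40, "air_humidity": 20, "drinks_water": 1},
    {"air_temperature": 20, "air_humidity": 60, "drinks_water": 0},
]

print(controlled_comparisons(log))
```

In this hypothetical log, only the step from the first to the second run isolates a single variable; the third run changes all three inputs at once and would therefore not count as a controlled comparison.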

Against this backdrop, we argue that CBAs have the potential to integrate approaches for assessing both formal and informal reasoning—learning outcomes that are difficult or even impossible to assess using conventional methods.

Individual Reasoning and Collaborative Performance

To date, CBAs have been used to comprehensively measure individual students' formal reasoning skills (Kuo and Wu, 2013). These assessments enable students to test their hypotheses in environments that simulate the complexity of real experiments (Greiff and Martin, 2014; Scherer, 2015). The immediate feedback such environments provide based on students' manipulation of variables can be used to develop a mental model that represents the relationships among variables. While the benefits of using CBAs for the assessment of formal reasoning skills are well recognized, collaborative classroom discussions during group work are considered to be the main sources of information on students' informal reasoning skills (Driver et al., 2000). Like actual scientists, students work together to solve an authentic task through debate and argumentation (Andriessen et al., 2013). This discussion process can offer rich information on students' communication and collaboration skills; yet, it remains difficult to measure each individual's ability and contribution. CBAs offer plenty of opportunities to capture collaborative activities by keeping track of individuals' contributions to the discussion and the sequence of arguments (De Jong et al., 2012; Nihalani and Robinson, 2012). Hence, combining the assessment of formal and informal reasoning and delivering it using computer-based testing may enable us to investigate not only students' individual reasoning skills but also their performance in group discussions.
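As a rough illustration of what keeping track of individual contributions and the sequence of arguments could look like in practice, the sketch below stores each discussion turn with its author and a coded argument move. The `Turn` structure, the move labels, and the student identifiers are assumptions introduced here for illustration, not a coding scheme from the cited work.

```python
from collections import Counter
from typing import List, NamedTuple


class Turn(NamedTuple):
    """One logged contribution to a group discussion."""
    student: str
    move: str  # hypothetical code, e.g. "claim", "evidence", "rebuttal"


def contributions_per_student(turns: List[Turn]) -> Counter:
    """Count how many turns each student contributed."""
    return Counter(turn.student for turn in turns)


def move_sequence(turns: List[Turn], student: str) -> List[str]:
    """Return one student's argument moves in the order they occurred."""
    return [turn.move for turn in turns if turn.student == student]


# Hypothetical discussion log on a socio-scientific issue.
discussion = [
    Turn("S1", "claim"),
    Turn("S2", "rebuttal"),
    Turn("S1", "evidence"),
    Turn("S3", "claim"),
    Turn("S1", "rebuttal"),
]

print(contributions_per_student(discussion))
print(move_sequence(discussion, "S1"))
```

Keeping the turns in chronological order preserves the sequence of arguments for the group as a whole, while the two helper functions recover each individual's share of the discussion.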

Interactivity

Interactivity is a distinctive quality of CBA that allows individual students to demonstrate formal reasoning skills by interacting with a computer system (Kuo and Wu, 2013). A student participates in scientific investigations while actively exploring items that represent scientific phenomena (Quellmalz et al., 2012). During the task exploration phase, the student conducts experiments and manipulates the virtual environment in order to produce desirable outcomes. He or she engages in inquiry practices such as observing the phenomena under investigation, simulating interactive experiments by controlling variables to test his or her hypotheses, generating and interpreting evidence, and developing evidence-based knowledge. By using interactive and dynamic items, CBAs can examine a student's ability to coordinate complex, primarily formal reasoning skills.

To assess informal reasoning skills, interactive components in CBAs encourage students to explore and make use of relevant information to support their arguments. When faced with a problem related to a socio-scientific issue, students can seek the necessary information from a simulated website, rather than relying on data already provided in the argumentation task, in order to address contrasting positions and to construct a well-informed decision. Hence, CBAs provide an opportunity to assess students' informal reasoning skills as well as how well they can actively select relevant information.

In addition to allowing learners to demonstrate their scientific reasoning skills, research has suggested that interactive features could improve learners' problem-solving performance (e.g., Plass et al., 2009; Scherer and Tiemann, 2012). Evans and Sabry (2003) found that students who used an interactive system outperformed those using a non-interactive system. Furthermore, Quellmalz et al. (2012) showed that English Language Learners and special needs students performed better with the use of interactive, simulation-based science assessments. Interactivity is therefore considered a highly important component of building assessments of formal reasoning. Taken together, CBAs have the potential to provide stimulating, interactive environments in which students can perform both formal and informal reasoning.

Feedback

Another feature CBAs offer in testing formal and informal reasoning skills is the ability to provide students with the necessary feedback to help them take control of their own learning. This didactic advantage can lead to better learning outcomes when feedback is given in a timely fashion and tailored to individual needs (e.g., Lopez, 2009; van der Kleij et al., 2012). Customized and instant feedback is essential for helping students understand why their responses fail to solve specific formal reasoning problems or why the information they used to support their arguments is inadequate. Students can adapt and assess their learning through gradually increasing feedback, from a brief to a more detailed scaffold (Shute, 2008). Feedback can encourage students to actively construct their own knowledge and improve their learning.
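The idea of gradually increasing feedback can be sketched as a simple escalation rule. The example below assumes, purely for illustration, that the level of detail is tied to the number of unsuccessful attempts; the messages and the function name are hypothetical and do not reproduce a procedure from the cited literature.

```python
from typing import List

# Hypothetical feedback messages, ordered from brief to detailed.
FEEDBACK_LEVELS: List[str] = [
    "Your answer is not yet correct. Please try again.",
    "Hint: check whether you changed only one variable between runs.",
    "Explanation: compare two runs that differ in a single input to see its effect.",
]


def feedback_for(failed_attempts: int) -> str:
    """Return more detailed feedback as the number of failed attempts grows."""
    # Clamp to the available levels so that later attempts keep the most
    # detailed scaffold instead of running past the end of the list.
    index = min(max(failed_attempts, 1), len(FEEDBACK_LEVELS)) - 1
    return FEEDBACK_LEVELS[index]


for attempt in (1, 2, 5):
    print(attempt, feedback_for(attempt))
```

Other escalation criteria, such as response time or the type of error, could replace the attempt counter without changing the overall structure.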

Conclusion

On the basis of the strong conceptual connection between formal and informal reasoning, we argue that it is necessary to bring both components together for the assessment of scientific reasoning. The current developments in CBAs provide an opportunity to assess scientific reasoning in a way that reflects the complexities of formal and informal reasoning while also effectively measuring learning outcomes.

Author Contributions

NT drafted the paper, initiated the argumentation, and submitted the first version. RS discussed the argumentation, contributed to drafting the paper, and assisted in the submission.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Prof. Rolf Vegar Olsen for his valuable comments on the first draft of this paper.

References

Amsterlaw, J. (2006). Children's beliefs about everyday reasoning. Child Dev. 77, 443–464. doi: 10.1111/j.1467-8624.2006.00881.x

Andriessen, J., Baker, M., and Suthers, D. (2013). Arguing to Learn: Confronting Cognitions in Computer-Supported Collaborative Learning Environments, Vol. 1. Dordrecht: Springer Science and Business Media.

Bereiter, C., and Scardamalia, M. (2006). “Education for the knowledge age: design-centered models of teaching and instruction,” in Handbook of Educational Psychology, eds P. A. Alexander and P. H. Winne (Mahwah, NJ: Lawrence Erlbaum Associates Publishers), 695–713.

Bruner, J. S. (1957). Contemporary Approaches to Cognition: A Symposium held at the University of Colorado. Cambridge: Harvard University Press.

De Jong, T., Wilhelm, P., and Anjewierden, A. (2012). “Inquiry and assessment: future developments from a technological perspective,” in Technology-Based Assessments for 21st Century Skills, eds M. C. Mayrath, J. Clarke-Midura, D. H. Robinson, and G. Schraw (Charlotte, NC: Information Age Publishing), 249–265.

Driver, R., Newton, P., and Osborne, J. (2000). Establishing the norms of scientific argumentation in classrooms. Sci. Educ. 84, 287–312. doi: 10.1002/(SICI)1098-237X(200005)84:3<287::AID-SCE1>3.0.CO;2-A

Evans, C., and Sabry, K. (2003). Evaluation of the interactivity of web-based learning systems: principles and process. Innov. Educ. Teach. Int. 40, 89–99. doi: 10.1080/1355800032000038787

Evans, J. S. (2005). “Deductive reasoning,” in The Cambridge Handbook of Thinking and Reasoning, eds K. J. Holyoak and R. G. Morrison (New York, NY: Cambridge University Press), 169–184.

Evans, J. S. (2007). Hypothetical Thinking: Dual Processes in Reasoning and Judgement, Vol. 3. New York, NY: Psychology Press.

Evans, J. S. (2008). Dual-processing accounts of reasoning, judgment, and social cognition. Annu. Rev. Psychol. 59, 255–278. doi: 10.1146/annurev.psych.59.103006.093629

Evans, J. S., and Stanovich, K. E. (2013). Dual-process theories of higher cognition: advancing the debate. Perspect. Psychol. Sci. 8, 223–241. doi: 10.1177/1745691612460685

Galotti, K. M. (1989). Approaches to studying formal and everyday reasoning. Psychol. Bull. 105, 331–351. doi: 10.1037/0033-2909.105.3.331

Glöckner, A., and Witteman, C. (2010). Beyond dual-process models: a categorisation of processes underlying intuitive judgement and decision making. Think. Reason. 16, 1–25. doi: 10.1080/13546780903395748

Greiff, S., and Martin, R. (2014). What you see is what you (don't) get: a comment on Funke's (2014) opinion paper. Front. Psychol. 5:1120. doi: 10.3389/fpsyg.2014.01120

Greiff, S., Niepel, C., Scherer, R., and Martin, R. (2016). Understanding students' performance in a computer-based assessment of complex problem solving: an analysis of behavioral data from computer-generated log files. Comput. Hum. Behav. 61, 36–46. doi: 10.1016/j.chb.2016.02.095

Han, J. (2013). Scientific Reasoning: Research, Development, and Assessment. Doctoral dissertation, The Ohio State University. Available online at: https://etd.ohiolink.edu/

Kuo, C.-Y., and Wu, H.-K. (2013). Toward an integrated model for designing assessment systems: an analysis of the current status of computer-based assessments in science. Comput. Educ. 68, 388–403. doi: 10.1016/j.compedu.2013.06.002

Lopez, L. (2009). Effects of Delayed and Immediate Feedback in the Computer-Based Testing Environment. Indiana State University.

Mayer, R. E., and Wittrock, M. C. (2006). “Problem solving,” in Handbook of Educational Psychology, eds P. A. Alexander and P. H. Winne (Mahwah, NJ: Lawrence Erlbaum), 287–303.

Nihalani, P. K., and Robinson, D. H. (2012). “Collaborative versus individual digital assessments,” in Technology-Based Assessments for 21st Century Skills, eds M. C. Mayrath, J. Clarke-Midura, D. H. Robinson, and G. Schraw (Charlotte, NC: Information Age Publishing), 325–344.

Perkins, D. N., Farady, M., and Bushey, B. (1991). “Everyday reasoning and the roots of intelligence,” in Informal Reasoning and Education, eds J. F. Voss, D. N. Perkins, and J. W. Segal (Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.), 83–105.

Plass, J. L., Homer, B. D., and Hayward, E. O. (2009). Design factors for educationally effective animations and simulations. J. Comput. High. Educ. 21, 31–61. doi: 10.1007/s12528-009-9011-x

Quellmalz, E. S., Davenport, J. L., Timms, M. J., DeBoer, G. E., Jordan, K. A., Huang, C.-W., et al. (2013). Next-generation environments for assessing and promoting complex science learning. J. Educ. Psychol. 105, 1100–1114. doi: 10.1037/a0032220

Quellmalz, E. S., Timms, M. J., Silberglitt, M. D., and Buckley, B. C. (2012). Science assessments for all: integrating science simulations into balanced state science assessment systems. J. Res. Sci. Teach. 49, 363–393. doi: 10.1002/tea.21005

Rips, L. J. (2004). “Reasoning,” in Stevens' Handbook of Experimental Psychology, Memory and Cognitive Processes, Vol. 2, eds H. Pashler and D. Medin (New York, NY: John Wiley and Sons), 363–411.

Sadler, T. D. (2004). Informal reasoning regarding socioscientific issues: a critical review of research. J. Res. Sci. Teach. 41, 513–536. doi: 10.1002/tea.20009

Sadler, T. D., and Zeidler, D. L. (2005). The significance of content knowledge for informal reasoning regarding socioscientific issues: applying genetics knowledge to genetic engineering issues. Sci. Educ. 89, 71–93. doi: 10.1002/sce.20023

Scherer, R. (2015). Is it time for a new measurement approach? A closer look at the assessment of cognitive adaptability in complex problem solving. Front. Psychol. 6:1664. doi: 10.3389/fpsyg.2015.01664

Scherer, R., and Tiemann, R. (2012). Factors of problem-solving competency in a virtual chemistry environment: the role of metacognitive knowledge about strategies. Comput. Educ. 59, 1199–1214. doi: 10.1016/j.compedu.2012.05.020

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

van der Kleij, F. M., Eggen, T. J., Timmers, C. F., and Veldkamp, B. P. (2012). Effects of feedback in a computer-based assessment for learning. Comput. Educ. 58, 263–272. doi: 10.1016/j.compedu.2011.07.020

Voss, J. F., Perkins, D. N., and Segal, J. W. (1991). Informal Reasoning and Education. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.

Wu, Y. T., and Tsai, C. C. (2011). High school students' informal reasoning regarding a socio-scientific issue, with relation to scientific epistemological beliefs and cognitive structures. Int. J. Sci. Educ. 33, 371–400. doi: 10.1080/09500690903505661

Keywords: computer-based assessment, formal reasoning, informal reasoning, interactivity, simulations

Citation: Teig N and Scherer R (2016) Bringing Formal and Informal Reasoning Together—A New Era of Assessment? Front. Psychol. 7:1097. doi: 10.3389/fpsyg.2016.01097

Received: 04 April 2016; Accepted: 06 July 2016; Published: 19 July 2016.

Edited by:

Michael S. Dempsey, Boston University, USA

Reviewed by:

Stefano Cacciamani, Università della Valle d'Aosta, Italy

Shane Connelly, University of Oklahoma, USA

Copyright © 2016 Teig and Scherer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nani Teig
