Abstract

Despite six decades of creative cognition research, measures of creative ideation have heavily relied on divergent thinking tasks, which still suffer from conceptual, design, and psychometric shortcomings. These shortcomings have greatly impeded the accurate study of creative ideation, its dynamics, development, and integration as part of a comprehensive psychological assessment. After a brief overview of the historical and current anchoring of creative ideation measurement, overlooked challenges in its most common operationalization (i.e., the divergent thinking task framework) are discussed. They include (1) the reliance on a single stimulus as a starting point of the creative ideation process (stimulus-dependency), (2) the analysis of response quality based on a varying number of observations across test-takers (fluency-dependency), and (3) the production of “static” cumulative performance indicators. Inspired by an emerging line of work from the field of cognitive neuroscience of creativity, this paper introduces a new assessment framework referred to as “Multi-Trial Creative Ideation” (MTCI). This framework shifts the current measurement paradigm by (1) offering a variety of stimuli presented in a well-defined set of ideation “trials,” (2) reinterpreting the concept of ideational fluency using a time-analysis of idea generation, and (3) capturing individual dynamics in the ideation process (e.g., modeling the effort-time required to reach a response of maximal uncommonness) while controlling for stimulus-specific sources of variation. Advantages of the MTCI framework over the classic divergent thinking paradigm are discussed in light of current directions in the field of creativity research.

Measuring Creative Ideation: History and Prospects

Creative Ideation (CI) refers to the process of generating original ideas in response to given open-ended problems (e.g., Fink and Benedek, 2014). CI has a rich tradition of empirical research tracing back to the 19th century, notably with the experimental work of Alfred Binet and the Associationist school (Barbot and Guignard, 2019). But the most influential advances in the measurement of CI emerged after Guilford’s (1950) seminal push to study creativity. Since then, CI has been predominantly operationalized by measures of Divergent Thinking (DT) (Kaufman et al., 2008). A classic DT task is the Alternate Uses Task (AUT, Guilford, 1967), in which respondents have to generate many uncommon uses for a common object (e.g., a “brick” or a “newspaper”) in a limited time. Individual differences in the number (Fluency), relative uncommonness (Originality), and diversity (Flexibility) of the responses are used to characterize (cumulative) DT performance. This task-design is applicable in various modalities of responses, but verbal and figural tasks are most common given the limited domain-content knowledge they require (Barbot et al., 2015).

Since the emergence of the field of neuroscience of creativity (Fink et al., 2007) in the past few decades, CI research has increasingly (re)focused on process-oriented and dynamic aspects (Christoff et al., 2016; Hass, 2017; Marron and Faust, 2019). Pioneering work in this line analyzed free-association switches using idiographic methods (Binet, 1900), real-time associative sequences measured by kymographic recordings (Bousfield and Sedgewick, 1944), or relations between ideas’ uncommonness and response time (Christensen et al., 1957). Presently, similar questions are tackled by coupling CI tasks with think-aloud protocols (Gilhooly et al., 2007; Pringle and Sowden, 2017), eye-tracking (Jankowska et al., 2018), systematic observation (Barbot and Lubart, 2012), or log-analysis of digital assessments (Hart et al., 2017; Loesche et al., 2018; Rominger et al., 2018). All of these methods attempt to track the chronology of CI, partition out distinct activities (generating versus producing ideas), and capture their neurocognitive underpinnings (Ellamil et al., 2012; Boot et al., 2017; Rominger et al., 2018; Benedek et al., in press).

While recent CI studies have made considerable advances in that direction by identifying the dual-process (Nijstad et al., 2010; Sowden et al., 2015) and collaborative nature of brain networks contributing to distinct cognitive resources of CI (Beaty et al., 2015; Volle, 2018), their conclusions converge with pioneering behavioral work. That is, CI is an effortful process (Binet, 1900; Christensen et al., 1957; Parnes, 1961; Jaušovec, 1997; De Dreu et al., 2008; Green et al., 2015; Kenett, 2018) that is likely more fruitful with greater processing speed in a given unit of time (Dorfman et al., 2008; Vartanian et al., 2009; Preckel et al., 2011; Forthmann et al., 2018a). For example, serial-order effect research shows that as people iterate ideas in DT-type tasks, response rates decrease while response originality increases (Christensen et al., 1957; Beaty and Silvia, 2012; Heinonen et al., 2016; Silvia Paul et al., 2017; Wang et al., 2017; Acar et al., 2018). In short, it takes more “effort-time” to come up with an uncommon idea (i.e., involves more exploration/thinking time) than a common one (Beaty and Silvia, 2012; Acar and Runco, 2014; Kenett et al., 2015; Wang et al., 2017; Kenett, 2018).

Because these dynamic aspects seem particularly robust (e.g., Beaty and Silvia, 2012), assessments designed to directly measure them at the person-level are needed (Hart et al., 2017; Hass, 2017; Jankowska et al., 2018; Loesche et al., 2018). Time-based measurement approaches hold great promise toward this end (Hass, 2015; Sowden et al., 2015) and could address questions such as: What is the “baseline” effort-time provided by a person in generating ideas across a range of situations? How much additional effort-time does this person need to engage in producing responses of greater originality? How is this person’s CI performance impeded by cognitive fatigue or stimuli characteristics? Before attempting to steer the classic DT assessment’s status quo in this direction, it is essential to examine its limitations and most promising variants.

What Diverged in Divergent Thinking Tasks?

CI assessment has been somewhat “fixated” on the classic DT paradigm (Barbot, 2016). Even “gold standard” measures (e.g., Torrance’s, 2008, TTCT) still suffer from a number of task-design and psychometric shortcomings, which notably challenge the developmental study of CI (Barbot et al., 2016c). Psychometric limitations of classic DT tasks are amply documented (Plucker, 1999; Runco, 2010; Barbot et al., 2011; Zeng et al., 2011; Said-Metwaly et al., 2017). Shortcomings of their task-design framework are far less discussed and are briefly outlined here.

Stimulus-Dependency

Test-takers usually perform very differently when completing seemingly identical DT tasks that use different stimuli (e.g., AUTs of a “brick” versus “newspaper”), almost as if they had “preferences” for one stimulus over another. Previous experience (Runco et al., 2006), task instructions (Nusbaum et al., 2014) or stimulus salience (Chrysikou et al., 2016; Forthmann et al., 2016) contribute to these inconsistencies, translating into heterogeneous performance across DT tasks, particularly across domains (Baer, 2012; Barbot and Tinio, 2015; Barbot et al., 2016a). Indeed, Fluency inter-correlations often fall in the 0.30–0.40 range, and up to 50% of fluency’s variance represents only stimulus-specific factors (Silvia et al., 2008; Barbot et al., 2016a). Such a low level of alternate-form reliability is traditionally unacceptable by common psychometric standards. Although this issue has been noted for decades (e.g., Harvey et al., 1970), researchers generally underestimate how dependent DT performance is upon the stimuli at hand (Barbot et al., 2016a). Regardless, a critical feature of reliable CI measures is to sample a variety of stimuli (rather than a single one), as done in some DT task variants (e.g., Guilford and Hoepfner, 1966; Folley and Park, 2005; Chrysikou et al., 2016).

Response Quality and Fluency-Dependency

Classic DT tasks first focus on the quantity of responses generated in a given time (e.g., 10 min). The divergent production can then be characterized qualitatively (e.g., uncommonness, flexibility). Hence, fluency is inherently confounded in summative quality scores (Forthmann et al., 2018b), with fluency-originality inter-correlations often exceeding 0.80 (Said-Metwaly et al., 2017). Solutions to overcome this lack of discriminant validity include (1) ignoring response quality (e.g., Batey et al., 2009; Lubart et al., 2011), (2) partialling out the effect of fluency on quality scores (statistically or by averaging the level of uncommonness across all responses), or (3) relying on subjective ratings of responses’ quality (Harrington, 1975; Silvia et al., 2009). Nonetheless, the DT task format leads by default to an unequal number of responses across test-takers, from which response quality scores will be derived. As such, those with lower fluency have fewer opportunities to “demonstrate” their originality (which simultaneously impacts the reliability of quality scores).

Static Cumulative Performance Scores

Summary DT scores are not able to capture (and may even obscure) the dynamic processes involved in CI (Hass, 2017). In keeping with serial-order research, it could be assumed that a focus on the sequence of DT responses could address this issue (e.g., Hass, 2017). This supposes that DT responses directly transcribe the thought process, as if responses were reported at the same time as ideas emerge. But beyond idea generation, it is established that (1) DT involves the monitoring and selection of ideas (e.g., Nijstad et al., 2010), and (2) during the task time, those selected ideas must be produced and refined. This has several consequences with respect to DT performance scoring: (1) response-level analysis may not accurately capture the time-course of CI; (2) factors independent from CI are indiscriminately incorporated into summative (fluency) scores (e.g., typing time necessary to produce the response; Forthmann et al., 2017); (3) originality of observable responses might not properly represent the originality of all ideas generated. These points also outline the challenge of the Fluency-Originality trade-off (e.g., Fulgosi and Guilford, 1968), according to which DT tasks’ time constraints lead test-takers to necessarily emphasize response quantity over quality, or vice versa. Irrespective of one’s trade-off, a varying number of qualitatively heterogeneous responses (e.g., varying originality) will ultimately be aggregated into cumulative fluency and originality scores. This, in turn, provides little insight into both “baseline” levels and dynamic processes of a person’s CI.

MTCI Framework

Most limitations outlined above can be addressed with the Multi-Trial Creative Ideation (MTCI) framework presented here. An essential feature of MTCI tasks is their use of a well-defined set of trials, each presenting a different stimulus (e.g., 20 AUT “trials”), for which a single original idea must be provided (self-paced format). Close monitoring of behavioral activity during task-resolution is used to segment response processes (e.g., isolate “think time” versus production time), and derive both cumulative and dynamic indicators of CI (e.g., baseline effort-time across trials, or incremental effort-time required to produce responses of maximal originality). Specific task-format and scoring features of the MTCI framework are now presented at greater length.

Trials Characteristics

Contrary to classic DT tasks relying on a single stimulus that initiates multiple CI iterations (e.g., generating original doodles using the same abstract design over and over as starting point; see Figure 1’s stimulus), the MTCI framework requires the use of multiple stimuli, preferably controlled for perceptual characteristics (e.g., semantic or morphological). For each trial, a single response will be generated. This format resembles recent DT tasks’ adaptation for neurophysiological studies involving extensive short time-locked CI trials (Benedek et al., in press). In MTCI, this feature is proposed with the intent to (1) limit stimulus-dependency (range of stimuli offered), and (2) control the number of responses generated (addressing fluency-originality dependency and trade-off; Zarnegar et al., 1988). Although such multi-trial single-response formats showed high reliability, predictive validity (Prabhakaran et al., 2014) and convergent validity with multi-response tasks (Perchtold et al., 2018), they have been criticized for their loss of open-endedness and of potential for tracking iterative CI processes (Mouchiroud and Lubart, 2001; Hass, 2017). Yet, while both formats engage DT, observable responses reveal only one’s reported ideas, which, as noted above, are insufficient to genuinely track the time-course of CI. Finally, because “problem-solving proficiency in the real world is probably a function of the number and qualitative excellence of initially generated approaches and ideas” (Harrington, 1975, p.434), it is thought that capturing the “baseline” ideational outputs across multiple CI trials will offer (3) more engaging tasks, and (4) more ecologically valid performance scores (Kaufman and Beghetto, 2009; Forthmann et al., 2018b).

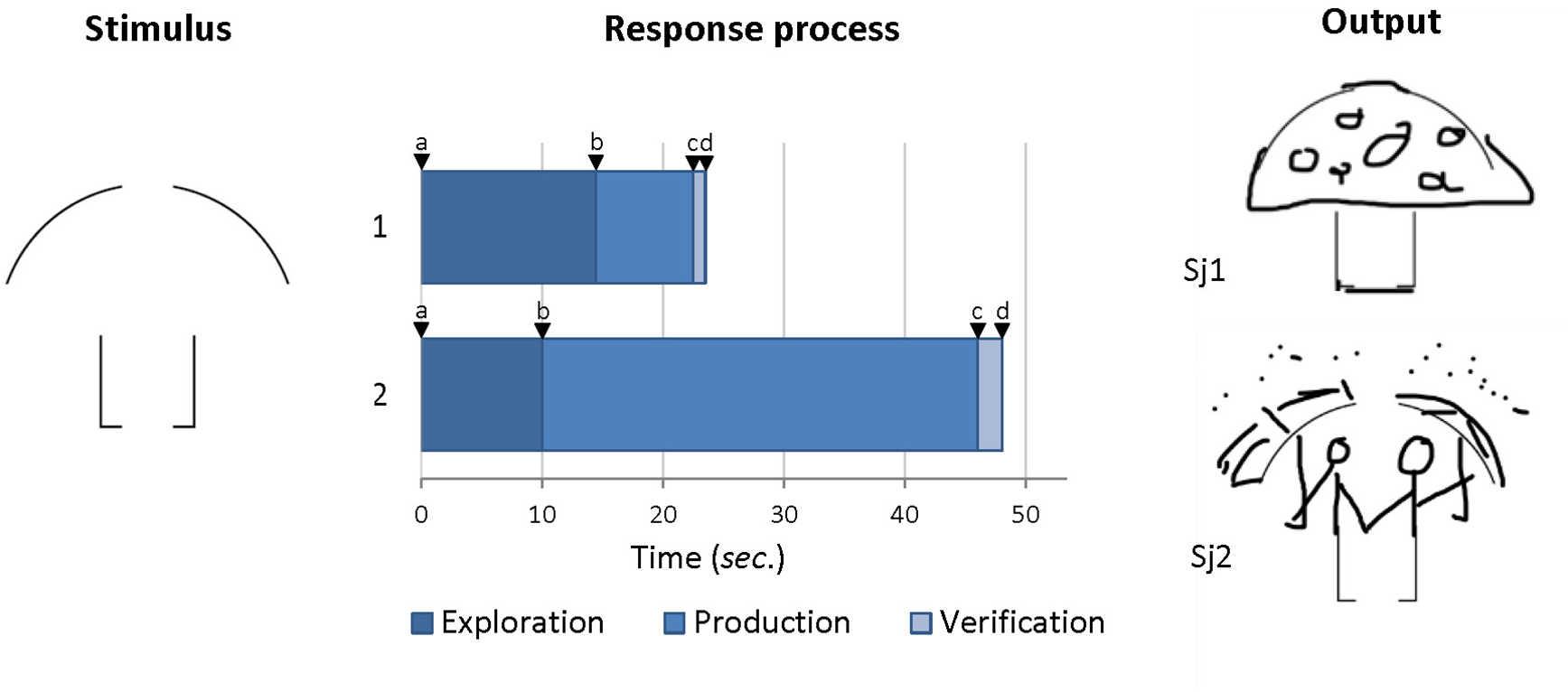

FIGURE 1

Sample item, response process times and response outputs for two subjects. In this sample trial, test-takers are required to generate an original doodle that uses the stimulus design as part of their answer (output). The response process represents the time-segmentation of the item resolution derived from log-analysis of test-takers’ interactions with the digital-platform. Phases of responses are segmented according to timestamps a to d (see description in text).

Response Time as a Measure of Fluency

Guilford (1950) rationalizes the concept of ideational fluency by stating that “the person who is capable of producing a large number of ideas per unit of time, other things being equal, has a greater chance of having significant ideas” (p.452). Conceptually, this number of ideas per unit of time can be fairly captured with the number of responses produced in a given set time (fluency in classic DT tasks). It can also be approximated by measuring the time taken to generate a response. In MTCI, this is the only way to do so given the standardization of the number of responses produced. Of course, the two approaches are reciprocal. For example, if one takes an average of 50 s to produce a response, it can be inferred that the equivalent fluency score for a 10 min DT-task would be 12 (assuming a constant response rate; Christensen et al., 1957). Conversely, a test-taker generating 15 responses in 10 min has an average response time of 40 s. Operationalizing fluency as response time has the clear advantage of relaxing constraints of time limits for task completion (although instructions should encourage the prompt resolution of the task). Because time pressure impacts response quality in CI tasks (Runco and Acar, 2012; Forthmann et al., 2018a), such a self-paced format is desirable (Kogan, 2008). It offers a naturalistic and ecologically valid setting, and provides more room for persistence (effort), which is an essential pathway to achieving creative ideas (De Dreu et al., 2008; Nijstad et al., 2010). Of course, this format doesn’t prevent one from “rushing through” the task instead of using time efficiently to develop responses of high originality. But this can be made visible by modeling the effort-time effectiveness as described below (see “Dynamic indicators of CI”).
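The reciprocity between response time and classic fluency can be illustrated with a minimal sketch. The function names and the default 600 s task duration are illustrative assumptions rather than part of the MTCI framework, and the conversion holds only under the constant response rate assumption noted above.

```python
def equivalent_fluency(mean_response_time_s: float, task_duration_s: float = 600.0) -> float:
    """Fluency score implied by a mean response time, assuming a constant response rate."""
    if mean_response_time_s <= 0:
        raise ValueError("mean response time must be positive")
    return task_duration_s / mean_response_time_s


def mean_response_time(n_responses: int, task_duration_s: float = 600.0) -> float:
    """Mean time per response implied by a classic fluency count."""
    if n_responses <= 0:
        raise ValueError("number of responses must be positive")
    return task_duration_s / n_responses


# The two examples from the text: 50 s per response, and 15 responses in 10 min.
print(equivalent_fluency(50.0))  # 12.0 responses in a 10 min task
print(mean_response_time(15))    # 40.0 s per response
```
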

Administration Modality

The MTCI framework is best suited for implementation on digital-assessment platforms (e.g., Pretz and Link, 2008) that accurately monitor response time. In addition to practical advantages, digital-assessments offer a unique opportunity to unobtrusively record process data (log-analysis) inferred from the interactions between the test-taker and the digital environment (Zoanetti, 2010). Log data can be further analyzed to capture person-level dynamic markers of the task resolution process (Barbot and Perchec, 2015). While DT tasks’ implementation on computerized platforms has shown no detrimental effects on performance compared with paper-pencil formats (Lau and Cheung, 2010; Hass, 2015), the self-paced nature of MTCI tasks is likely more suitable than DT tasks (which rely on a count-down) for unsupervised, non-lab-based online assessment.

Response Process Markers

As outlined above, much of the time devoted to producing a response in DT tasks is not solely ideation time (e.g., Forthmann et al., 2017). Neuroscience studies have often adapted DT tasks in a way that separates CI from response production time generally confounded in DT scores (Benedek et al., in press). Regrettably, this has resulted in overly constrained paradigms, imposing rigid time-structures for different phases of CI (e.g., 15 s “think time”, 10 s response time; Ellamil et al., 2012; Perchtold et al., 2018; Rominger et al., 2018) or requiring subjects to actively “signal” an idea (Heinonen et al., 2016; Boot et al., 2017). Consistent with recent computerized assessments (Hart et al., 2017; Loesche et al., 2018), log-analysis of test-takers’ interactions with MTCI tasks can inform a more realistic chronology of broad, qualitatively distinct phases of CI (Figure 1): (1) Exploration: response formulation, or “thinking” phase, measured by the time between stimulus presentation (timestamp a) and the onset of the response marked by the first interaction with the digital-platform (e.g., screen-touch, or typing; timestamp b); (2) Production: response production phase, measured by the time between the first (timestamp b) and the last (timestamp c) interaction with the platform in producing the response (e.g., finger-doodling for graphic responses, typing text for verbal responses); (3) Verification: “control” phase in which the produced response is being validated or discarded, measured by the time between the last interaction to produce the response (timestamp c), and the action (e.g., click) to validate the response/move on to next item (timestamp d).

As illustrated (Figure 1), subject Sj1 took a total of 23 s to complete the response “mushroom,” whereas Sj2 took a total of 48 s to complete the response “singing in the rain”1. According to the classic DT paradigm, Sj1 would be considered more fluent (about 26 responses in 10 min assuming constant CI rate), compared to Sj2 (about 12 responses in 10 min). However, MTCI should essentially focus on Exploration, the principal phase during which CI operations happen (e.g., combination, idea selection), as similarly operationalized in neuroscience paradigms (Ellamil et al., 2012; Rominger et al., 2018). Accordingly, the time-analysis suggests that Sj2 spent the most time producing the response, which should not be confounded with CI time (Exploration). Production time, devoted to actually converting the selected idea into a response (e.g., making a doodle, or typing a response), doesn’t inform much about the relative effort taken in generating new ideas (CI). It reflects the time engaged in elaborating the response output, as well as technological or “domain-fluency” that impacts classic DT scores (Forthmann et al., 2017). Eliminating Production time and focusing on Exploration only reveals that Sj2 was faster to come up with the response (10 s) compared to Sj1 (14 s). The MTCI framework would therefore consider Sj2 more fluent than Sj1.
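The segmentation of a trial into Exploration, Production and Verification from timestamps a to d can be sketched as follows. This is only an illustration under stated assumptions: the `TrialLog` class is hypothetical, and while the total times (23 s and 48 s) and Exploration times (14 s and 10 s) follow the example above, the values of timestamp c are invented because the text does not report them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class TrialLog:
    """Timestamps (in seconds) logged for one trial; hypothetical structure."""
    a: float  # stimulus presentation
    b: float  # first interaction with the platform (response onset)
    c: float  # last interaction while producing the response
    d: float  # action validating the response / moving to the next item

    def phases(self) -> Dict[str, float]:
        """Duration of each phase, derived from consecutive timestamps."""
        if not (self.a <= self.b <= self.c <= self.d):
            raise ValueError("timestamps must satisfy a <= b <= c <= d")
        return {
            "exploration": self.b - self.a,
            "production": self.c - self.b,
            "verification": self.d - self.c,
        }


# Timestamp c is an invented value for both subjects (not given in the text).
sj1 = TrialLog(a=0.0, b=14.0, c=21.0, d=23.0)
sj2 = TrialLog(a=0.0, b=10.0, c=44.0, d=48.0)

for name, log in (("Sj1", sj1), ("Sj2", sj2)):
    phases = log.phases()
    print(name, phases, "total:", sum(phases.values()))
```

Comparing only the `exploration` entries reproduces the conclusion of the example: Sj2 (10 s) is faster than Sj1 (14 s), even though the total response time of Sj2 is longer.
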

In MTCI, Production is cleanly partitioned-out from Exploration, and fine-grained information on responses’ elaboration and domain-fluency can further be derived. Log-analysis could extract information on pixel completeness of Sj1 and Sj2’s responses and corresponding action counts (elaboration), and relative speed of execution (domain-fluency). Finally, the Verification phase could document Sj1 and Sj2’s tendency to favor quality (e.g., closely assessing the final product) over fluency (e.g., quickly moving on to the next trial). This tendency may be at play in a fuzzier way during other phases of CI (in particular, Exploration). In fact, similar to neuroscience paradigms (Benedek et al., in press), it must be acknowledged that much of the specific operations happening within each phase cannot be fully deciphered using log-analysis. However, such analysis provides a much more accurate picture of the relative effort-time devoted distinctly to generating, producing and evaluating responses, compared to the cumulative DT fluency score that aggregates all three phases across all DT iterations.

Dynamic Indicators of CI

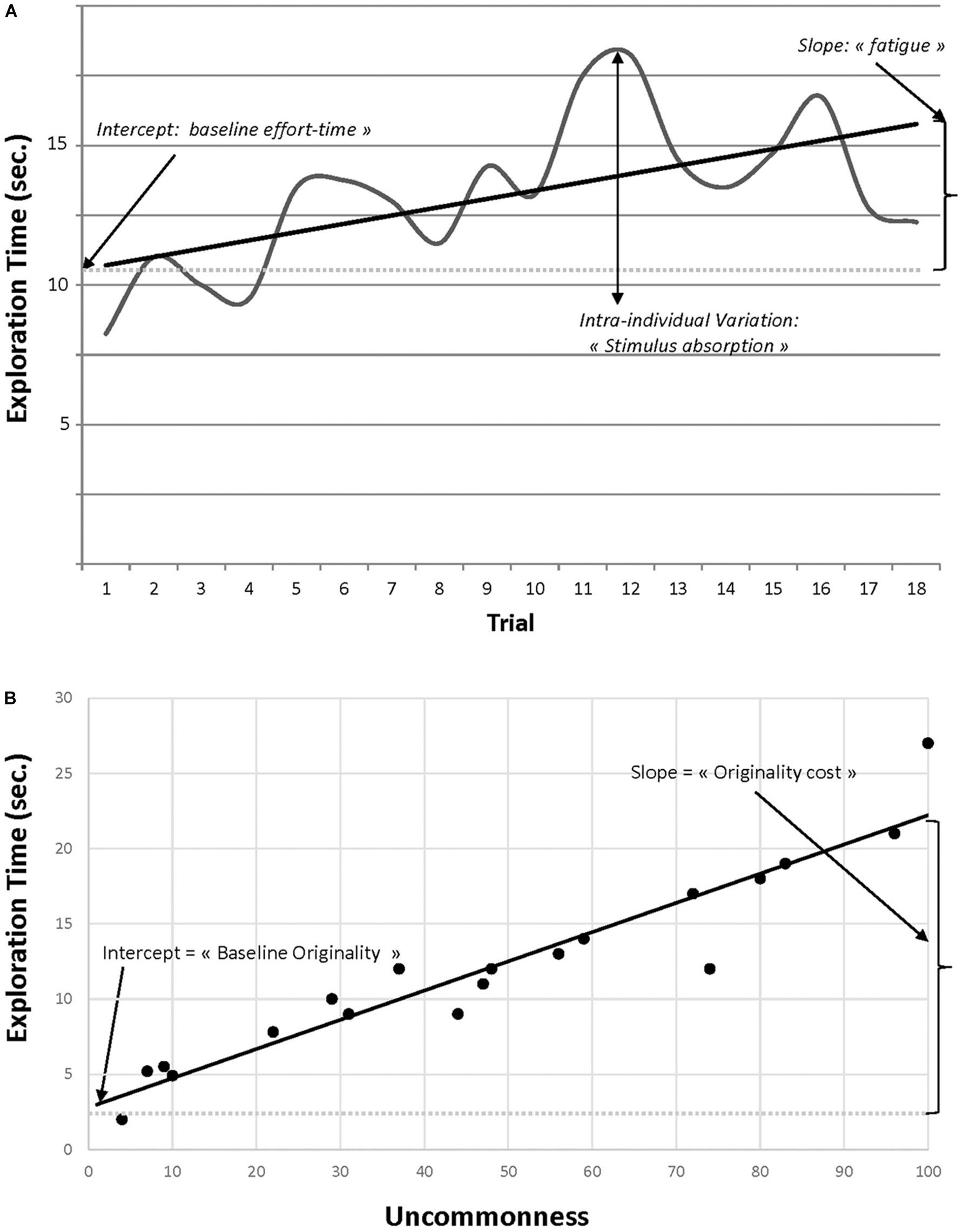

Extending the above sample item to a multi-trial context provides a number of advantages over classic DT tasks. First, MTCI tasks allow one to fairly examine the internal consistency of (cumulative) process time indicators (e.g., Prabhakaran et al., 2014) and uncommonness/originality ratings across trials (which DT tasks cannot, due to the unequal number of responses across test-takers). The MTCI framework also offers a unique opportunity to track intra-individual variations in performance across trials, providing a dynamic view of the CI process (Hass, 2017; Jankowska et al., 2018). Figure 2A represents Sj2’s microdevelopmental trajectory of Exploration time across 18 trials. Controlling for responses’ uncommonness and item difficulty, the overall performance can be characterized by a growth function with parameters meaningfully interpretable at the person-level, including an intercept (i.e., baseline effort-time in Exploration) and a slope (i.e., relative fatigue in the task resolution; see Hass, 2017; Acar et al., 2018). Deviations from the growth function can also be fairly analyzed (e.g., capturing stimulus absorption, namely the person’s “preference” for one CI stimulus over another, likely to cause the stimulus-dependency challenge in DT tasks; Barbot et al., 2016a). Extensions of trial-by-trial latent growth curve models for microdevelopmental data (Barbot et al., 2016b) could nicely accommodate such effort, while further controlling for stimulus-dependency (e.g., “method” factors by type of stimulus; Grimm et al., 2009).

FIGURE 2

Microdevelopmental trajectories of response times across trials (A) and across uncommonness (B). In panel (B), exploration times for each trial are reorganized by order of uncommonness.
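As a rough illustration of the trajectory in panel (A), the sketch below fits a straight line to Exploration times across trials and inspects the deviations from it. It is a deliberately simplified stand-in, assuming ordinary least squares rather than the latent growth curve models cited in the text, and it does not control for uncommonness or item difficulty. The function name and the 18 exploration times are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_growth(times: Sequence[float]) -> Tuple[float, float, List[float]]:
    """Least-squares line through (trial index, exploration time).

    Trial indices start at 0, so the intercept is the fitted time on the
    first trial (baseline effort-time) and the slope is the change per trial.
    """
    n = len(times)
    if n < 2:
        raise ValueError("at least two trials are required")
    mean_t = (n - 1) / 2
    mean_y = sum(times) / n
    sxx = sum((t - mean_t) ** 2 for t in range(n))
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(times))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    residuals = [y - (intercept + slope * t) for t, y in enumerate(times)]
    return intercept, slope, residuals


# Hypothetical exploration times (s) for one person across 18 trials.
exploration_s = [9.5, 10.2, 8.8, 11.0, 10.4, 12.1, 11.5, 19.0, 12.3,
                 12.9, 13.4, 12.7, 14.1, 13.8, 15.0, 14.6, 15.9, 15.2]

intercept, slope, residuals = fit_growth(exploration_s)
# The trial with the largest absolute deviation is a candidate for a
# stimulus-specific effect (e.g., "absorption" by one stimulus).
outlier = max(range(len(residuals)), key=lambda i: abs(residuals[i]))
print(f"baseline={intercept:.2f} s, slope={slope:.2f} s/trial, largest deviation at trial {outlier}")
```
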

Finally, a cornerstone of MTCI is that fluency shouldn’t be interpreted “in a vacuum”: In Figure 1, Sj1’s response (“mushroom”) is likely more obvious than Sj2’s response. By combining Exploration time with the corresponding Uncommonness of the response, and all other things being equal, MTCI would suggest a greater CI (effort-time effectiveness) for Sj2. In practice, MTCI data could help model this effort-time effectiveness by reordering item-level exploration times on a continuum of response uncommonness (Figure 2B). A person’s set of MTCI responses should naturally show variability in uncommonness across trials. Once ranked, they provide the basis for modeling both the baseline CI effort (time required to come up with the most obvious response, as captured by the growth function’s intercept) and the originality cost (growth function’s slope, representing the additional effort-time required to produce an idea of incremental uncommonness).
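The reordering step can be sketched as follows, again as a simplified assumption-laden example rather than the modeling approach itself: trials are ranked from the most common to the most uncommon response, and a least-squares line is fitted to Exploration time over rank. The function name, the uncommonness ratings and the times are hypothetical, and ties in uncommonness are simply kept in their original order.

```python
from typing import Sequence, Tuple


def effort_time_effectiveness(
    exploration_s: Sequence[float], uncommonness: Sequence[float]
) -> Tuple[float, float]:
    """Return (baseline effort, originality cost) for one person.

    Trials are reordered by increasing uncommonness; rank 0 is the most
    obvious response. The intercept estimates the time needed for the most
    obvious response and the slope the extra time per step in uncommonness rank.
    """
    n = len(exploration_s)
    if n != len(uncommonness) or n < 2:
        raise ValueError("need two sequences of equal length (>= 2)")
    # Stable sort on uncommonness only, so tied trials keep their order.
    ordered = [t for _, t in sorted(zip(uncommonness, exploration_s), key=lambda p: p[0])]
    mean_rank = (n - 1) / 2
    mean_time = sum(ordered) / n
    sxx = sum((r - mean_rank) ** 2 for r in range(n))
    sxy = sum((r - mean_rank) * (t - mean_time) for r, t in enumerate(ordered))
    slope = sxy / sxx
    intercept = mean_time - slope * mean_rank
    return intercept, slope


# Hypothetical data: exploration time (s) and rated uncommonness per trial.
times = [12.0, 9.0, 20.0, 15.0, 10.5, 17.5]
ratings = [0.40, 0.10, 0.95, 0.60, 0.25, 0.80]
baseline, cost = effort_time_effectiveness(times, ratings)
print(f"baseline effort={baseline:.2f} s, originality cost={cost:.2f} s per rank")
```
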

Conclusion

Classic DT tasks have a major benefit: they have helped creativity researchers study ideation for over half a century when few alternatives were available in their toolbox. However, a shift in assessment paradigm is overdue given critical shortcomings of these tasks, preventing the accurate study of CI, its dynamics and development. This paper introduced a new CI assessment framework coined “Multi-Trial Creative Ideation” (MTCI). MTCI capitalizes on the tools of our digital era (log-analysis of interactions with digital assessments) to shift the classic DT-framework’s focus on the number of responses produced, toward a precise measure of time engaged in the production of CI outputs. This framework is thought to minimize the influence of stimulus-dependency and fluency-dependency effects, while improving CI scores’ reliability (multi-trial), ecological and external validity. It also offers the possibility to examine CI under a more dynamic lens, which aligns well with current research efforts in the field. Ongoing work and publications to follow will provide further proofs-of-concept of the key features and advantages of the MTCI framework outlined here, to pave the way for a new era of CI research and tools.

Statements

Author contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Funding

This project was supported by the David Wechsler Early Career Grant for Innovative Work in Cognition from the American Psychological Foundation. The opinions expressed in this publication are those of the author and do not necessarily reflect the view of the American Psychological Foundation.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. All subjects and data presented in this work are fictitious and used for illustrative purposes only. In this example, titles could of course be typed in after all CI trials are completed.

References

1

AcarS.RuncoM. A. (2014). Assessing associative distance among ideas elicited by tests of divergent thinking.Creat. Res. J.26229–238. 10.1080/10400419.2014.901095

2

AcarS.RuncoM. A.OgurluU. (2018). The moderating influence of idea sequence: a re-analysis of the relationship between category switch and latency.Personal. Individ. Differ. (in press). 10.1016/j.paid.2018.06.013

3

BaerJ. (2012). Domain specificity and the limits of creativity theory.J. Creat. Behav.4616–29. 10.1002/jocb.002

4

BarbotB. (2016). Preface: perspectives on creativity development.New Dir. Child Adolesc. Dev.20167–8. 10.1002/cad.20146

5

BarbotB.BesançonM.LubartT. (2015). Creative potential in educational settings: its nature, measure, and nurture.Education43371–381.

6

BarbotB.BesançonM.LubartT. (2016a). The generality-specificity of creativity: exploring the structure of creative potential with EPoC.Learn. Individ. Differ.52178–187. 10.1016/j.lindif.2016.06.005

7

BarbotB.KrivulskayaS.HeinS.ReichJ.ThumaP. E.GrigorenkoE. L. (2016b). Identifying learning patterns of children at risk for specific reading disability.Dev. Sci.19402–418. 10.1111/desc.12313

8

BarbotB.LubartT. I.BesançonM. (2016c). “Peaks, slumps, and bumps”: individual differences in the development of creativity in children and adolescents.New Dir. Child Adolesc. Dev.201633–45. 10.1002/cad.20152

9

BarbotB.BesanconM.LubartT. I. (2011). Assessing creativity in the classroom.Open Educ. J.458–66. 10.2174/1874920801104010058

10

BarbotB.GuignardJ. H. (2019). “Measuring Ideation in the 1900s: the contribution of alfred binet,” inThe Creativity Reader, ed.GlaveanuV. P. (Oxford, NY: Oxford University Press).

11

BarbotB.LubartT. (2012). Creative thinking in music: its nature and assessment through musical exploratory behaviors.Psychol. Aesthet. Creat. Arts6231–242. 10.1037/a0027307

12

BarbotB.PerchecC. (2015). New directions for the study of within-individual variability in development: the power of “N = 1.”.New Dir. Child Adolesc. Dev.201557–67. 10.1002/cad.20085

13

BarbotB.TinioP. P. (2015). Where is the “g” in creativity? A specialization–differentiation hypothesis.Front. Hum. Neurosci.8:1041. 10.3389/fnhum.2014.01041

14

BateyM.Chamorro-PremuzicT.FurnhamA. (2009). Intelligence and personality as predictors of divergent thinking: the role of general, fluid and crystallised intelligence.Think. Ski. Creat.460–69. 10.1016/j.tsc.2009.01.002

15

BeatyR. E.BenedekM.Barry KaufmanS.SilviaP. J. (2015). Default and executive network coupling supports creative idea production.Sci. Rep.5:10964. 10.1038/srep10964

16

BeatyR. E.SilviaP. J. (2012). Why do ideas get more creative across time? An executive interpretation of the serial order effect in divergent thinking tasks.Psychol. Aesthet. Creat. Arts6:309. 10.1037/a0029171

17

BenedekM.ChristensenA. P.FinkA.BeatyR. E. (in press). Creativity assessment in neuroscience research.Psychol. Aesthet. Creat. Arts.10.1037/aca0000215

18

BinetA. (1900). L’observateur et l’imaginatif.Année Psychol.7524–536. 10.3406/psy.1900.3229

19

BootN.BaasM.MühlfeldE.de DreuC. K. W.van GaalS. (2017). Widespread neural oscillations in the delta band dissociate rule convergence from rule divergence during creative idea generation.Neuropsychologia1048–17. 10.1016/j.neuropsychologia.2017.07.033

20

BousfieldW. A.SedgewickC. H. W. (1944). An analysis of sequences of restricted associative responses.J. Gen. Psychol.30149–165. 10.1080/00221309.1944.10544467

21

ChristensenP. R.GuilfordJ. P.WilsonR. C. (1957). Relations of creative responses to working time and instructions.J. Exp. Psychol.53:82. 10.1037/h0045461

22

ChristoffK.IrvingZ. C.FoxK. C. R.SprengR. N.Andrews-HannaJ. R. (2016). Mind-wandering as spontaneous thought: a dynamic framework.Nat. Rev. Neurosci.17718–731. 10.1038/nrn.2016.113

23

ChrysikouE. G.MotykaK.NigroC.YangS.-I.Thompson-SchillS. L. (2016). Functional fixedness in creative thinking tasks depends on stimulus modality.Psychol. Aesthet. Creat. Arts10425–435. 10.1037/aca0000050

24

De DreuC. K.BaasM.NijstadB. A. (2008). Hedonic tone and activation level in the mood-creativity link: toward a dual pathway to creativity model.J. Pers. Soc. Psychol.94:739. 10.1037/0022-3514.94.5.739

25

DorfmanL.MartindaleC.GassimovaV.VartanianO. (2008). Creativity and speed of information processing: a double dissociation involving elementary versus inhibitory cognitive tasks.Personal. Individ. Differ.441382–1390. 10.1016/j.paid.2007.12.006

26

EllamilM.DobsonC.BeemanM.ChristoffK. (2012). Evaluative and generative modes of thought during the creative process.Neuroimage591783–1794. 10.1016/j.neuroimage.2011.08.008

27

FinkA.BenedekM. (2014). EEG alpha power and creative ideation.Neurosci. Biobehav. Rev.44111–123. 10.1016/j.neubiorev.2012.12.002

28

FinkA.BenedekM.GrabnerR. H.StaudtB.NeubauerA. C. (2007). Creativity meets neuroscience: experimental tasks for the neuroscientific study of creative thinking.Methods4268–76. 10.1016/j.ymeth.2006.12.001

29

FolleyB. S.ParkS. (2005). Verbal creativity and schizotypal personality in relation to prefrontal hemispheric laterality: a behavioral and near-infrared optical imaging study.Schizophr. Res.80271–282. 10.1016/j.schres.2005.06.016

30

ForthmannB.GerwigA.HollingH.ÇelikP.StormeM.LubartT. (2016). The be-creative effect in divergent thinking: the interplay of instruction and object frequency.Intelligence5725–32. 10.1016/j.intell.2016.03.005

31

ForthmannB.HollingH.ÇelikP.StormeM.LubartT. (2017). Typing speed as a confounding variable and the measurement of quality in divergent thinking.Creat. Res. J.29257–269. 10.1080/10400419.2017.1360059

32

ForthmannB.LipsC.SzardeningsC.ScharfenJ.HollingH. (2018a). Are speedy brains needed when divergent thinking is speeded—or unspeeded?J. Creat. Behav.10.1002/jocb.350 [Epub ahead of print].

33

ForthmannB.SzardeningsC.HollingH. (2018b). Understanding the confounding effect of fluency in divergent thinking scores: revisiting average scores to quantify artifactual correlation.Psychol. Aesthet. Creat. Arts.10.1037/aca0000196 [Epub ahead of print].

34

FulgosiA.GuilfordJ. P. (1968). Short-term incubation in divergent production.Am. J. Psychol.81241–246. 10.2307/1421269

35

GilhoolyK. J.FioratouE.AnthonyS. H.WynnV. (2007). Divergent thinking: strategies and executive involvement in generating novel uses for familiar objects.Br. J. Psychol.98611–625. 10.1111/j.2044-8295.2007.tb00467.x

36

Green, A. E., Cohen, M. S., Raab, H. A., Yedibalian, C. G., and Gray, J. R. (2015). Frontopolar activity and connectivity support dynamic conscious augmentation of creative state. Hum. Brain Mapp. 36, 923–934. doi: 10.1002/hbm.22676

37

Grimm, K. J., Pianta, R. C., and Konold, T. (2009). Longitudinal multitrait-multimethod models for developmental research. Multivar. Behav. Res. 44, 233–258. doi: 10.1080/00273170902794230

38

Guilford, J. P. (1950). Creativity. Am. Psychol. 5, 444–454. doi: 10.1037/h0063487

39

Guilford, J. P. (1967). The Nature of Human Intelligence, ed. R. J. Sternberg. Cambridge: Cambridge University Press.

40

Guilford, J. P., and Hoepfner, R. (1966). Sixteen divergent-production abilities at the ninth-grade level. Multivar. Behav. Res. 1, 43–66. doi: 10.1207/s15327906mbr0101_3

41

Harrington, D. M. (1975). Effects of explicit instructions to “be creative” on the psychological meaning of divergent thinking test scores. J. Pers. 43, 434–454. doi: 10.1111/j.1467-6494.1975.tb00715.x

42

Hart, Y., Mayo, A. E., Mayo, R., Rozenkrantz, L., Tendler, A., Alon, U., et al. (2017). Creative foraging: an experimental paradigm for studying exploration and discovery. PLoS One 12:e0182133. doi: 10.1371/journal.pone.0182133

43

Harvey, O., Hoffmeister, J. K., Coates, C., and White, B. J. (1970). A partial evaluation of Torrance’s tests of creativity. Am. Educ. Res. J. 7, 359–372. doi: 10.3102/00028312007003359

44

Hass, R. W. (2015). Feasibility of online divergent thinking assessment. Comput. Hum. Behav. 46, 85–93. doi: 10.1016/j.chb.2014.12.056

45

Hass, R. W. (2017). Tracking the dynamics of divergent thinking via semantic distance: analytic methods and theoretical implications. Mem. Cognit. 45, 233–244. doi: 10.3758/s13421-016-0659-y

46

Heinonen, J., Numminen, J., Hlushchuk, Y., Antell, H., Taatila, V., and Suomala, J. (2016). Default mode and executive networks areas: association with the serial order in divergent thinking. PLoS One 11:e0162234. doi: 10.1371/journal.pone.0162234

47

Jankowska, D. M., Czerwonka, M., Lebuda, I., and Karwowski, M. (2018). Exploring the creative process: integrating psychometric and eye-tracking approaches. Front. Psychol. 9:1931. doi: 10.3389/fpsyg.2018.01931

48

Jaušovec, N. (1997). Differences in EEG activity during the solution of closed and open problems. Creat. Res. J. 10, 317–324. doi: 10.1207/s15326934crj1004_3

49

Kaufman, J. C., and Beghetto, R. A. (2009). Beyond big and little: the four c model of creativity. Rev. Gen. Psychol. 13, 1–12. doi: 10.1037/a0013688

50

Kaufman, J. C., Plucker, J. A., and Baer, J. (2008). Essentials of Creativity Assessment. Hoboken, NJ: John Wiley & Sons.

51

Kenett, Y. N. (2018). “Going the extra creative mile: the role of semantic distance in creativity–Theory, research, and measurement,” in The Cambridge Handbook of the Neuroscience of Creativity, ed. R. E. Jung (Cambridge: Cambridge University Press).

52

Kenett, Y. N., Anaki, D., and Faust, M. (2015). Investigating the structure of semantic networks in low and high creative persons. Front. Hum. Neurosci. 8:407. doi: 10.3389/fnhum.2014.00407

53

Kogan, N. (2008). Commentary: divergent-thinking research and the Zeitgeist. Psychol. Aesthet. Creat. Arts 2, 100–102. doi: 10.1037/1931-3896.2.2.100

54

Lau, S., and Cheung, P. C. (2010). Creativity assessment: comparability of the electronic and paper-and-pencil versions of the Wallach–Kogan Creativity Tests. Think. Ski. Creat. 5, 101–107. doi: 10.1016/j.tsc.2010.09.004

55

Loesche, F., Goslin, J., and Bugmann, G. (2018). Paving the way to eureka—introducing “Dira” as an experimental paradigm to observe the process of creative problem solving. Front. Psychol. 9:1773. doi: 10.3389/fpsyg.2018.01773

56

Lubart, T. I., Besançon, M., and Barbot, B. (2011). EPoC: Évaluation du Potentiel Créatif des Enfants. Paris: Editions Hogrefe France.

57

Marron, T. R., and Faust, M. (2019). Measuring spontaneous processes in creativity research. Curr. Opin. Behav. Sci. 27, 64–70. doi: 10.1016/j.cobeha.2018.09.009

58

Mouchiroud, C., and Lubart, T. (2001). Children’s original thinking: an empirical examination of alternative measures derived from divergent thinking tasks. J. Genet. Psychol. 162, 382–401. doi: 10.1080/00221320109597491

59

Nijstad, B. A., De Dreu, C. K., Rietzschel, E. F., and Baas, M. (2010). The dual pathway to creativity model: creative ideation as a function of flexibility and persistence. Eur. Rev. Soc. Psychol. 21, 34–77. doi: 10.1080/10463281003765323

60

Nusbaum, E. C., Silvia, P. J., and Beaty, R. E. (2014). Ready, set, create: what instructing people to “be creative” reveals about the meaning and mechanisms of divergent thinking. Psychol. Aesthet. Creat. Arts 8:423. doi: 10.1037/a0036549

61

Parnes, S. J. (1961). Effects of extended effort in creative problem solving. J. Educ. Psychol. 52, 117–122. doi: 10.1037/h0044650

62

Perchtold, C. M., Papousek, I., Koschutnig, K., Rominger, C., Weber, H., Weiss, E. M., et al. (2018). Affective creativity meets classic creativity in the scanner. Hum. Brain Mapp. 39, 393–406. doi: 10.1002/hbm.23851

63

Plucker, J. A. (1999). Is the proof in the pudding? Reanalyses of Torrance’s (1958 to present) longitudinal data. Creat. Res. J. 12, 103–114. doi: 10.1207/s15326934crj1202_3

64

Prabhakaran, R., Green, A. E., and Gray, J. R. (2014). Thin slices of creativity: using single-word utterances to assess creative cognition. Behav. Res. Methods 46, 641–659. doi: 10.3758/s13428-013-0401-7

65

Preckel, F., Wermer, C., and Spinath, F. M. (2011). The interrelationship between speeded and unspeeded divergent thinking and reasoning, and the role of mental speed. Intelligence 39, 378–388. doi: 10.1016/j.intell.2011.06.007

66

Pretz, J. E., and Link, J. A. (2008). The creative task creator: a tool for the generation of customized, web-based creativity tasks. Behav. Res. Methods 40, 1129–1133. doi: 10.3758/BRM.40.4.1129

67

Pringle, A., and Sowden, P. T. (2017). Unearthing the creative thinking process: fresh insights from a think-aloud study of garden design. Psychol. Aesthet. Creat. Arts 11:344. doi: 10.1037/aca0000144

68

Rominger, C., Papousek, I., Perchtold, C. M., Weber, B., Weiss, E. M., and Fink, A. (2018). The creative brain in the figural domain: distinct patterns of EEG alpha power during idea generation and idea elaboration. Neuropsychologia 118, 13–19. doi: 10.1016/j.neuropsychologia.2018.02.013

69

Runco, M. A. (2010). “Divergent thinking, creativity, and ideation,” in The Cambridge Handbook of Creativity. New York, NY: Cambridge University Press, 413–446. doi: 10.1017/CBO9780511763205.026

70

Runco, M. A., and Acar, S. (2012). Divergent thinking as an indicator of creative potential. Creat. Res. J. 24, 66–75. doi: 10.1080/10400419.2012.652929

71

Runco, M. A., Dow, G., and Smith, W. R. (2006). Information, experience, and divergent thinking: an empirical test. Creat. Res. J. 18, 269–277. doi: 10.1207/s15326934crj1803_4

72

Said-Metwaly, S., Van den Noortgate, W., and Kyndt, E. (2017). Methodological issues in measuring creativity: a systematic literature review. Creat. Theor. Appl. 4, 276–301. doi: 10.1515/ctra-2017-0014

73

Silvia, P. J., Martin, C., and Nusbaum, E. C. (2009). A snapshot of creativity: evaluating a quick and simple method for assessing divergent thinking. Think. Ski. Creat. 4, 79–85. doi: 10.1016/j.tsc.2009.06.005

74

Silvia, P. J., Winterstein, B. P., Willse, J. T., Barona, C. M., Cram, J. T., Hess, K. I., et al. (2008). Assessing creativity with divergent thinking tasks: exploring the reliability and validity of new subjective scoring methods. Psychol. Aesthet. Creat. Arts 2, 68–85. doi: 10.1037/1931-3896.2.2.68

75

Silvia, P. J., Nusbaum, E. C., and Beaty, R. E. (2017). Old or new? Evaluating the old/new scoring method for divergent thinking tasks. J. Creat. Behav. 51, 216–224. doi: 10.1002/jocb.101

76

Sowden, P. T., Pringle, A., and Gabora, L. (2015). The shifting sands of creative thinking: connections to dual-process theory. Think. Reason. 21, 40–60. doi: 10.1080/13546783.2014.885464

77

Torrance, E. P. (2008). Torrance Tests of Creative Thinking: Norms-Technical Manual, Verbal Forms A and B. Bensenville, IL: Scholastic Testing Service.

78

Vartanian, O., Martindale, C., and Matthews, J. (2009). Divergent thinking ability is related to faster relatedness judgments. Psychol. Aesthet. Creat. Arts 3:99. doi: 10.1037/a0013106

79

Volle, E. (2018). “Associative and controlled cognition in divergent thinking: theoretical, experimental, neuroimaging evidence, and new directions,” in The Cambridge Handbook of the Neuroscience of Creativity, ed. R. E. Jung (New York, NY: Cambridge University Press), 333–360. doi: 10.1017/9781316556238.020

80

Wang, M., Hao, N., Ku, Y., Grabner, R. H., and Fink, A. (2017). Neural correlates of serial order effect in verbal divergent thinking. Neuropsychologia 99, 92–100. doi: 10.1016/j.neuropsychologia.2017.03.001

81

Zarnegar, Z., Hocevar, D., and Michael, W. B. (1988). Components of original thinking in gifted children. Educ. Psychol. Meas. 48, 5–16. doi: 10.1177/001316448804800103

82

Zeng, L., Proctor, R. W., and Salvendy, G. (2011). Can traditional divergent thinking tests be trusted in measuring and predicting real-world creativity? Creat. Res. J. 23, 24–37. doi: 10.1080/10400419.2011.545713

83

Zoanetti, N. (2010). Interactive computer based assessment tasks: how problem-solving process data can inform instruction. Australas. J. Educ. Technol. 26, 585–606. doi: 10.14742/ajet.1053

Summary

Keywords

ideation processes, measurement, divergent thinking, creativity, ideation ability, microdevelopment, assessment methods, digital assessment

Citation

Barbot B (2018) The Dynamics of Creative Ideation: Introducing a New Assessment Paradigm. Front. Psychol. 9:2529. doi: 10.3389/fpsyg.2018.02529

Received

20 April 2018

Accepted

27 November 2018

Published

11 December 2018

Volume

9 - 2018

Edited by

Orin Davis, New York Institute of Technology (NYIT), United States

Reviewed by

Andrea Lavazza, Centro Universitario Internazionale, Italy; Wolfgang Schoppek, University of Bayreuth, Germany

Copyright

© 2018 Barbot.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Baptiste Barbot, bbarbot@pace.edu

This article was submitted to Cognitive Science, a section of the journal Frontiers in Psychology
