- 1 Casa Paganini–InfoMus, DIBRIS, University of Genoa, Genoa, Italy

- 2 U-Vip Unit, Istituto Italiano di Tecnologia, Genoa, Italy

While technology is increasingly used in the classroom, we observe at the same time that making teachers and students accept it is more difficult than expected. In this work, we focus on multisensory technologies and we argue that the intersection between current challenges in pedagogical practices and recent scientific evidence opens novel opportunities for these technologies to bring a significant benefit to the learning process. In our view, multisensory technologies are ideal for effectively supporting an embodied and enactive pedagogical approach exploiting the best-suited sensory modality to teach a concept at school. This represents a great opportunity for designing technologies that are both grounded in robust scientific evidence and tailored to the actual needs of teachers and students. Based on our experience in technology-enhanced learning projects, we propose six golden rules we deem important for seizing this opportunity and fully exploiting it.

Introduction

Multisensory education is conceived as an instructional method using visual, auditory, kinesthetic, and tactile ways to educate students (Joshi et al., 2002). There has been a longstanding interest in how learning can be supported by representations engaging multiple modalities. For example, the Montessori education tradition makes use of artifacts such as sandpaper letters children trace with their fingers to develop the physical skill of learning to write. Papert (1980) discussed the idea of body-syntonic learning – projecting an experiential understanding of how bodies move – into learning about geometry. Moreno and Mayer (1999) explored the cognitive impact of multimodal learning material in reducing cognitive load by representing information in more than one modality.

Technology entered the classroom many years ago. It can be considered as a medium for inquiry, communication, construction, and expression (Bruce and Levin, 1997). Early technological interventions consisted of endowing classrooms with devices such as overhead projectors, cassette players, and simple calculators. These devices were intended to support the traditional learning and teaching paradigms and usually did not enable direct interaction of students with technology. More recently, a broad palette of technological tools became available, including technologies for computer-assisted instruction, i.e., the use of computers for tutorials or simulation activities offered in substitution or as a supplement to teacher-directed instruction (Hicks and Holden, 2007), and for computer-based instruction, i.e., the use of computers in the delivery of instruction (Kulik, 1983). These technologies exploit devices, such as interactive whiteboards, laptops, smartphones, and tablets, which are mainly conceived to convey visual information and are not intended for embodied interaction. Walling (2014) argued that tablet computers are toolboxes for learner engagement and suggested that the transition to using tablet computers in education is a natural process for teenagers. For example, there are multiple applications for tablets for learning mathematics (see footnote 1). Falloon (2013) reviewed 45 apps selected by an experienced teacher. Of them, 27 were considered educational apps, which focused on a broad variety of topics, including numeracy skills, reinforcing spelling, acquiring new vocabulary, and improving phonetics. Nevertheless, these solutions usually rely on the ability to see digital content rather than on physically interacting with it. At the same time, novel technological developments also enabled the use of multiple sensory channels, including the visual, auditory, and tactile ones. This technology has been defined as multisensory technology.
Technological advances and increased availability of affordable devices (e.g., Kinect, Oculus Rift, and HTC Vive) allowed a fast adoption of multisensory technology in many areas (e.g., entertainment, games and exergames, and assistive technologies). Its introduction in the classroom, however, is still somewhat limited. Early works addressed the use of virtual reality in educational software for either enabling full immersion in virtual environments or accentuating specific sensory information (Raskind et al., 2005). Nowadays, technologies such as augmented reality (see, e.g., Santos et al., 2014) and serious games (see, e.g., Connolly et al., 2012) play a relevant role in many educational contexts, both in science and in the humanities. Multisensory technologies enabling embodied interaction were used, for example, to support teaching in computer programming (e.g., Katai and Toth, 2010; Katai, 2011), music (e.g., Varni et al., 2013), and dance (e.g., Rizzo et al., 2018). Baud-Bovy and Balzarotti (2017) reviewed recent research on force-feedback devices in educational settings, with a particular focus on primary school teaching. Less traditional tools were also exploited, including Job Access With Speech (JAWS) and Submersible Audible Light Sensor (SALS). JAWS is a computer screen reader program allowing blind and visually impaired users to read the screen. SALS is a glass wand with an embedded light sensor, enabling the measuring of color intensity changes. These tools were used in a science camp for visually impaired students (Supalo et al., 2011), but they could also be modified and adapted for general inclusion in multisensory education. Despite such initiatives and the growing interest in these tools, the introduction of multisensory technologies in the learning environment has most often been exploratory, piecemeal, or ad hoc, focusing on understanding the potential of the different modalities rather than taking a combined multisensory focus.

Stakeholders consider the adoption of a technology-mediated pedagogical approach as a must, and the quest for innovation heavily drives choices. Attempts at integrating technology in the classroom, however, often do not take into account the pedagogical needs and paradigms. Teachers and students are not involved in the innovation process, and development of technologies does not follow a proper evidence-based iterative design approach. The risk is that the technology is rejected. For example, Groff and Mouza (2008) presented a literature review on the challenges associated with the effective integration of technology in the classroom. More recently, Johnson et al. (2016) discussed common challenges educators face when attempting to introduce technology at school. Philip (2017) described the difficulties that were experienced in a project relying on novel mobile technologies in the classroom.

In this article, we argue that the intersection between current challenges in pedagogical practices and recent scientific evidence opens novel opportunities for acceptance of technology as a tool for education, and that multisensory technologies specifically can bring a significant benefit to the teaching and learning process.

Scientific Evidence

The combination and the integration of multiple unimodal units are crucial to optimize our everyday interaction with the environment (Ernst and Bulthoff, 2004). Sensory combination allows us to maximize information delivered by different sensory modalities without these modalities being necessarily fused, while sensory integration reduces the variance in the sensory estimate to increase its reliability (Ernst and Bulthoff, 2004). In particular, sensory combination occurs when different environmental properties of the same object are estimated by means of different sensory modalities. Conversely, sensory integration occurs when the same environmental property is estimated by different sensory modalities (Ernst and Bulthoff, 2004). Many recent studies show that our brain is able to integrate unisensory signals in a statistically optimal fashion as predicted by a Bayesian model, weighting each sense according to its reliability (Clarke and Yuille, 1990; Ghahramani et al., 1997; Ernst and Banks, 2002; Alais and Burr, 2004; Landy et al., 2011). This model has been useful to predict the multisensory integration behavior of adults across different sensory modalities in an optimal or near-optimal fashion (Ernst and Banks, 2002; Alais and Burr, 2004; Landy et al., 2011). There is also firm neurophysiological evidence for multisensory integration. Studies in cats have demonstrated that the midbrain structure superior colliculus (SC) is involved in integrating information between modalities and in initiating and controlling localization and orientation of motor responses (Stein and Meredith, 1993). This structure is highly sensitive to input from the association cortex, and emergence of multisensory integration critically depends on cross-modal experiences that alter the underlying neural circuit (Stein et al., 2014). Moreover, cortical deactivation impairs integration of multisensory signals (Jiang et al., 2002, 2007; Rowland et al., 2014).
Studies in monkeys explored multisensory decision making and underlying neurophysiology by considering visual and vestibular integration (Gu et al., 2008). Similar effects were also observed in rodents (Raposo et al., 2012, 2014; Sheppard et al., 2013).
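The reliability-weighted rule mentioned above can be illustrated with a minimal sketch of the standard maximum-likelihood formulation, assuming independent Gaussian noise in each sense. The class name, the function name, and the numbers are hypothetical and are not taken from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """A single-sense estimate of one property, with its noise variance."""
    estimate: float
    variance: float


def integrate(cues):
    """Combine redundant cues by reliability-weighted averaging.

    Reliability is defined as 1 / variance; each weight is the cue's
    share of the total reliability (assumes independent Gaussian noise).
    """
    reliabilities = [1.0 / c.variance for c in cues]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * c.estimate for w, c in zip(weights, cues))
    combined_variance = 1.0 / total
    return combined, combined_variance, weights


# Hypothetical example: vision and touch estimate the same size.
visual = Cue(estimate=10.0, variance=1.0)   # more reliable cue
haptic = Cue(estimate=12.0, variance=4.0)   # less reliable cue
combined, combined_variance, weights = integrate([visual, haptic])
```

In this example the more reliable visual cue receives the larger weight, and the variance of the combined estimate is lower than that of either single cue, which is the sense in which integration increases reliability.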

The role of sensory modalities in child development has been the subject of relevant research in developmental psychology, psychophysics, and neuroscience. On the one side, scientific results show that young infants seem to be able to match sensory information and benefit from the presence of congruent sensory signals (Lewkowicz, 1988, 1996; Bahrick and Lickliter, 2000, 2004; Bahrick et al., 2002; Neil et al., 2006). There is also evidence for cross-modal facilitation, where stimuli in one modality increase the responsiveness to stimuli in other modalities (Lewkowicz and Lickliter, 1994; Lickliter et al., 1996; Morrongiello et al., 1998). On the other side, the ability to integrate unisensory signals in a statistically optimal fashion develops quite late, after 8–10 years of age (Gori et al., 2008, 2012; Nardini et al., 2008; Petrini et al., 2014; Dekker et al., 2015; Adams, 2016). Recent results show that during the first years of life, sensory modalities interact and communicate with each other and the absence of one sensory input impacts on the development of other modalities (Gori, 2015). According to the cross-sensory calibration theory, in children younger than 8–10 years old, the most robust sensory modality calibrates the other ones (Gori et al., 2008). This suggests that specific sensory modalities can be more suitable than others to convey specific information and hence to teach specific concepts. For example, it was observed that children use the tactile modality to perceive the size of objects, whereas the visual signal is used to perceive their orientation (Gori et al., 2008). It was also observed that when the motor information is not available, visual perception of size is impaired (Gori et al., 2012) and that when visual information is not available, tactile perception of orientation of objects is impaired (Gori et al., 2010). These results suggest that until 8–10 years of age, sensory modalities interact and shape each other. 
Multisensory technology that exploits multiple senses can therefore be crucial to communicating specific concepts in a more effective way (i.e., having multiple signals available, the child can use the one which is most suitable for the task). Scientific evidence also suggests that it is not always true that the lack of one sensory modality is associated with an enhancement of the remaining senses (Rauschecker and Harris, 1983; Rauschecker and Kniepert, 1994; Lessard et al., 1998; Röder et al., 1999, 2007; Voss et al., 2004; Lomber et al., 2010); in some cases, even the other, unimpaired senses are affected by the lack of the calibrating modality (Gori et al., 2014; Finocchietti et al., 2015; Vercillo et al., 2015). For example, visually impaired children have impaired tactile perception of orientation (Gori et al., 2010); thus, touch cannot be used to communicate the orientation concept, and other signals, such as the auditory one, could be more suitable to convey this information.

We think that this scientific evidence should be reflected in teaching and learning practices, by introducing novel multisensory pedagogical methodologies grounded on it. In particular, we think that such scientific evidence supports an embodied and enactive pedagogical approach, using different sensory-motor signals and feedback (audio, haptic, and visual) to teach concepts to primary school children. For example, the use of sound associated with body movement could be an alternative way to teach visually impaired children the concept of orientation and angles. Such an approach would be more direct, i.e., natural and intuitive, since it is based on the experience and on the perceptual responses to motor acts. Moreover, the use of movement for learning was shown to deepen and strengthen learning, retention, and engagement (Klemmer et al., 2006; Habib et al., 2016).

It should be noted that sensory combination and sensory integration correspond to different ways of providing multisensory signals through technology. At the technological level, there is a difference between teaching a concept by using more than one modality (i.e., by adopting multiple alternative strategies and promoting multisensory combination) and stimulating those modalities simultaneously by providing redundant sensory signals (thus promoting multisensory integration). In the technological area, Nigay and Coutaz (1993) classified multimodal interactive systems depending on their use of modalities and on whether modalities are combined (i.e., what in computer science is called multimodal fusion). In particular, they made a distinction between sequential, simultaneous, and composite multimodal interactive systems. The kind of multimodal interactive system selected to provide multisensory feedback both depends on and affects the pedagogical paradigm and the way the learning process develops. More research is needed to get a deeper understanding of all the implications related to this choice, e.g., with respect to the concepts to teach, the needs of teachers and students, the optimal way of providing technological support, the learning outcomes, and so on.

Challenges

Multisensory technologies can help in overcoming the consolidated hegemony of vision in current educational practice. Too strong a focus on a single sensory channel may compromise the effectiveness and personalization of the learning process. Moreover, a pedagogical approach based on a single modality may prevent the inclusion of children with impairments (e.g., with visual impairment).

More specifically, multisensory technologies can support the learning process by enhancing effectiveness, personalization, and inclusion. Effectiveness may be reduced by an inappropriate or excessive use of vision, which is not always the most suitable channel for communicating certain concepts to children.

As for personalization, a pedagogical methodology based almost exclusively on the visual modality would neglect the learning potential of the other modalities, and the routes of access to learning they offer children, when each is exploited to convey the kind of information it conveys best (e.g., the tactile modality is often better than vision for perceiving texture). Moreover, we might speculate that a more flexible multisensory approach could highlight individual predispositions of children. It could be possible, for example, to observe that different children tend to prefer different learning approaches, demonstrating that specific sensory signals can be more useful for some children to learn specific concepts. For example, recent studies showed that musical training can be used as a therapeutic tool for treating children with dyslexia (e.g., Habib et al., 2016). The temporal and rhythmic features of music could indeed exert a positive effect on the multiple dimensions of the “temporal deficit” that is characteristic of some types of dyslexia. Other specific examples are autism and Attention Deficit Hyperactivity Disorder (ADHD). There is solid evidence that multisensory stimuli improve the accuracy of decisions. Can multisensory technology or stimuli also improve attention, learning speed, or retention? In our opinion, this issue is worthy of further investigation, and evidence in this direction would be crucial for designing effective technological support for children with developmental disorders (ADHD, autism, specific learning disabilities, dyslexia, and so on).

Concerning inclusion, the lack of vision in children with visual disability impacts, e.g., the learning of geometrical concepts that are usually communicated through visual representations and metaphors. A delay in the acquisition of cognitive skills in visually impaired children directly affects their social competence, producing in turn feelings of frustration that represent a risk for the development of personality and emotional competence (Thompson, 1941). The use of multisensory technology would allow the same teaching method to be used with sighted and blind children, thus naturally breaking barriers among peers and facilitating social interactions.

An Opportunity For Multisensory Technologies

In our view, multisensory technologies are ideal for effectively supporting a pedagogical approach exploiting the best-suited sensory modality to teach a concept.

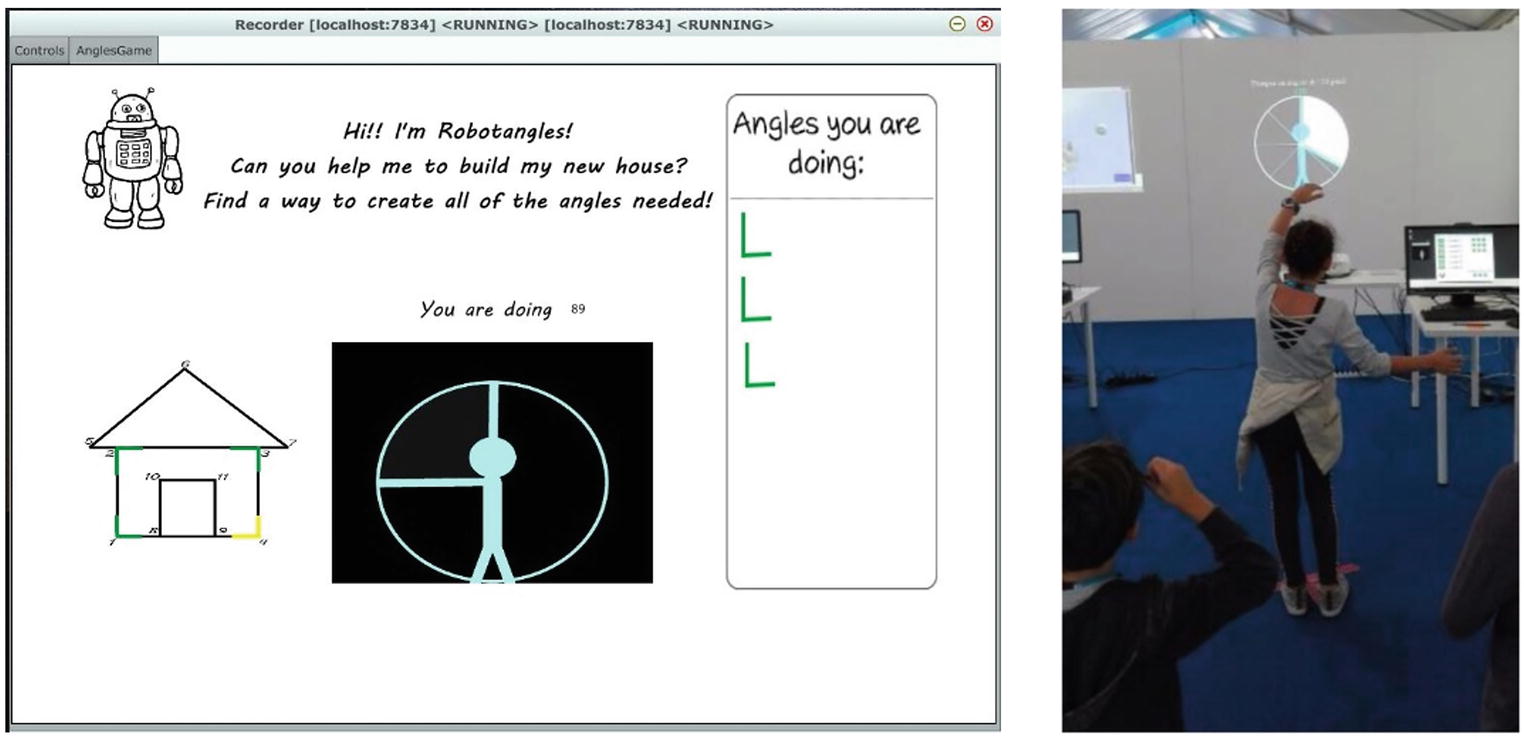

Multisensory technologies enable accurate and real-time mapping of motor behavior onto multiple facets of sound, music, tangible, and visual media, according to different strategies the teacher can select with great flexibility. Consider, for example, a recent technology-mediated learning activity we are developing for introducing geometric concepts, such as angles (Volta et al., 2018). In this activity, a child is asked to reproduce an angle by opening her arms. The child’s arms represent the two sides of the angle and her head its vertex. Arms aperture is automatically measured by means of a Microsoft Kinect v.2 device, and the motor behavior is mapped onto multisensory feedback in real time. Visual feedback, auditory feedback, or both are provided. The visual feedback consists of a representation, within a circle, of the angle the child is making (see Figure 1). Concerning the auditory feedback, while the child moves her arms, she can listen to a musical scale covering the full range of angle amplitude. If the child changes the aperture of her arms, the note in the scale – played by a string instrument – changes according to the movement. A long distance between the arms (i.e., a big angle) corresponds to a low-pitch note, whereas a short distance (i.e., a small angle) corresponds to a high-pitch note. Such a mapping is grounded on psychophysical evidence showing that a low pitch is associated with a big size and a high pitch is associated with a small size (Tonelli et al., 2017). If the child is able to keep the same angle while rotating her arms, she listens to the same note with no changes in the auditory feedback, suggesting that angles are invariant under rotations. The teacher is provided with an interface enabling her to control the application (e.g., by selecting the angles that are proposed, the kind of feedback, several levels of difficulty, and so on).
An initial and ongoing evaluation of this activity with children suggests that the proposed embodied representation of angles helps children in understanding angles and their properties (e.g., rotational invariance), even if more iterations of the development cycle are needed to address possible drawbacks (e.g., children get tired if asked to keep their arms open for too long). While the angles activity implements a quite simple mapping of motor behavior onto visual and auditory feedback, more sophisticated approaches can be conceived. Multiple features of motor behavior can indeed be mapped onto multiple dimensions of sound morphology, including pitch, intensity, granularity, rhythm, and so on. While this is what usually happens when playing a musical instrument, technology makes it more flexible. Indeed, the teacher can choose which motor features are mapped onto which sound parameters, and the child can quickly achieve fine-grained control of the sound parameters, something that would require many years of practice with a traditional musical instrument. These issues have been debated for a long time in the literature of sound and music computing; see, for instance, Hunt and Wanderley (2002) for a seminal work on this topic, and the series of conferences on New Interfaces for Musical Expression (footnote 2).
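The mapping used in the angles activity can be illustrated with a minimal sketch. It is not the implementation used in the activity: joint positions are plain 2D tuples rather than data from the Kinect SDK, and the two-octave C major scale, the function names, and the example coordinates are all assumptions made for illustration.

```python
import math


def angle_between_arms(head, left_hand, right_hand):
    """Angle in degrees at the head (vertex) between the two arm vectors."""
    ax, ay = left_hand[0] - head[0], left_hand[1] - head[1]
    bx, by = right_hand[0] - head[0], right_hand[1] - head[1]
    norm = math.hypot(ax, ay) * math.hypot(bx, by)
    if norm == 0.0:
        raise ValueError("a hand coincides with the head; angle undefined")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, (ax * bx + ay * by) / norm))
    return math.degrees(math.acos(cosine))


# Assumed scale: two octaves of C major as MIDI note numbers, low to high.
SCALE = [48, 50, 52, 53, 55, 57, 59, 60, 62, 64, 65, 67, 69, 71, 72]


def angle_to_note(angle_deg, scale=SCALE, max_angle=180.0):
    """Map a big angle to a low note and a small angle to a high note."""
    clamped = max(0.0, min(max_angle, angle_deg))
    # Invert so that 0 degrees selects the last (highest) note.
    position = 1.0 - clamped / max_angle
    return scale[round(position * (len(scale) - 1))]


def rotate(point, centre, degrees):
    """Rotate a 2D point around a centre by the given angle in degrees."""
    theta = math.radians(degrees)
    dx, dy = point[0] - centre[0], point[1] - centre[1]
    return (centre[0] + dx * math.cos(theta) - dy * math.sin(theta),
            centre[1] + dx * math.sin(theta) + dy * math.cos(theta))


# Hypothetical joint positions (metres): arms opened at a right angle.
head, left, right = (0.0, 1.5), (-0.6, 1.5), (0.0, 2.1)
angle = angle_between_arms(head, left, right)
note = angle_to_note(angle)

# Rotating both arms together leaves the angle, and hence the note, unchanged.
rotated_angle = angle_between_arms(
    head, rotate(left, head, 30.0), rotate(right, head, 30.0))
rotated_note = angle_to_note(rotated_angle)
```

Because the note depends only on the angle at the vertex, rotating both arms together produces the same note, which mirrors the rotational invariance the activity is meant to convey. Swapping `SCALE` or the inversion in `angle_to_note` would be one way for a teacher-facing interface to expose alternative mappings.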

Figure 1. The visual feedback provided by a technology-mediated learning activity featuring angles. A child makes an angle by opening her arms, the arms representing the sides of the angle, and the head its vertex. Arms aperture is measured by a Microsoft Kinect v.2 device and mapped in real time onto visual and/or auditory feedback. The activity was designed and developed by the Casa Paganini – InfoMus research centre at DIBRIS – University of Genoa and the U-Vip Unit at Istituto Italiano di Tecnologia in the framework of the EU-H2020-ICT weDRAW project.

In our view, the adoption of an embodied and enactive pedagogical approach, tightly integrated with multisensory technology, would therefore foster effectiveness (for each specific concept, the most suited modality can be exploited) and personalization (flexibility for teachers and students) in the learning process. Inclusion can also benefit greatly: teaching can exploit the most suited substitutive modality for impaired children.

Guidelines

Since big opportunities most often entail equally big risks, multisensory technologies need to be introduced in the classroom with care. From our experience in technology-enhanced learning projects, we propose six golden rules we deem important for seizing this opportunity and fully exploiting it.

1. Ground technology on pedagogical needs. Multisensory technologies should be tailored to the pedagogical needs of teachers. That is, they can help with teaching concepts that teachers specifically deem relevant in this respect. These could be concepts that are particularly difficult to understand for children or concepts that may benefit from communication through a sensory modality other than vision. We recently conducted a survey of over 200 math teachers. We were surprised to see that more than 75% of teachers agreed on the same concepts as the most difficult for children and the most appropriate for technological intervention.

2. Ground technology on scientific evidence. Multisensory technology should leverage the sensory, perceptual, and cognitive capabilities that children have according to scientific evidence. Concretely, for example, a technology able to detect specific motor behaviors in a target population of children (e.g., primary school) makes sense only if scientific evidence shows that children in the target population can actually display such behaviors. The same holds for feedback: multisensory technology can provide a specific kind of feedback (e.g., based on pitch) if (1) children can perceive it (e.g., they have developed perception of pitch) and (2) an experimentally proven association exists between the feedback and the concept to be communicated (e.g., the association between pitch and size of objects).

3. Adopt an iterative design approach and rigorously assess learning outcomes. Iterative design and refinement of technology are a critical component in the development cycle of interactive technologies. In the case of multisensory technologies for education, this is crucial for successfully integrating technology in the classroom. Each iteration in the development process needs to rigorously assess learning outcomes (e.g., the speed of learning, longer-term outcomes like knowledge retention, student-centered outcomes like learner satisfaction, classroom behavior, and so on) and use this feedback to inform the next iteration.

4. Make technology flexible and customizable. This is a typical goal for technologies, but it assumes here a particular relevance. It means assessing (1) which is the preferred sensory modality for a child to learn a specific concept and (2) whether specific impairments require exploiting particular sensory modalities. As a side effect, technology may help with screening for behavioral problems and addressing them. For example, recent studies show that musical training can be used as a therapeutic tool for treating children with dyslexia (e.g., Habib et al., 2016).

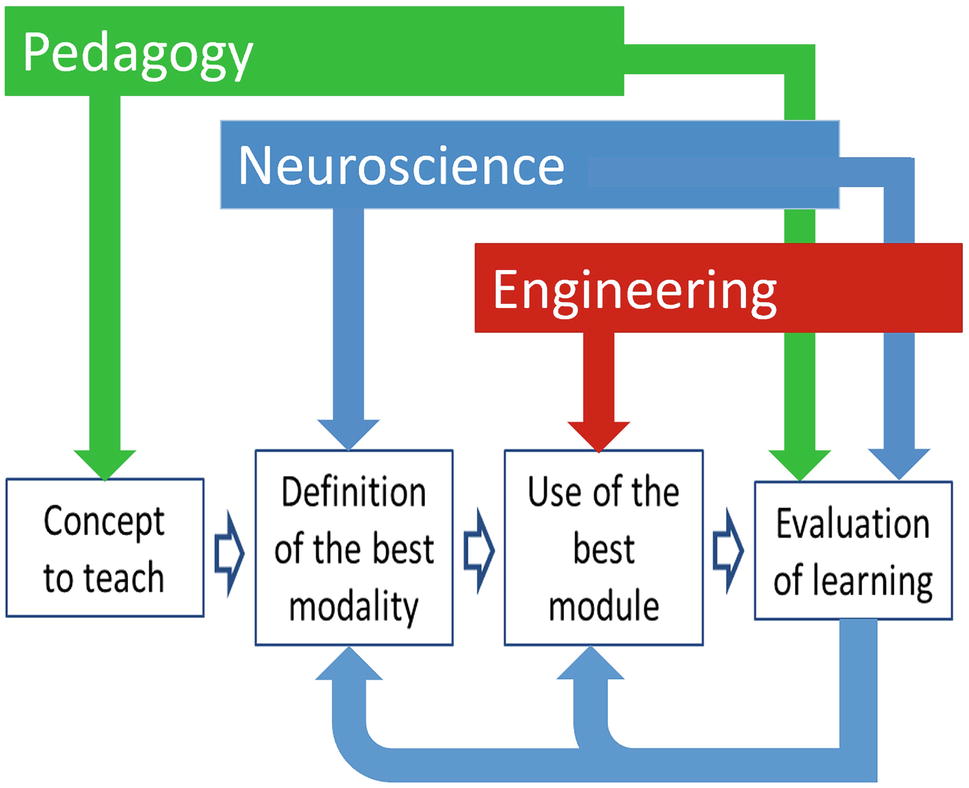

5. Emphasize the role of the teacher. In our view, technology does not replace the teacher. Rather, the teacher plays the central role of mediator. In an iterative methodology (see Figure 2), the teacher first chooses a concept to teach; then, following an initial evaluation phase, she identifies the best modality to teach it to each child and personalizes technology to exploit the selected modality; she finally evaluates the outcomes of the learning process and takes possible further actions. Moreover, design, development, and evaluation of technology should, of course, be carried out in the framework of a participatory design process involving teachers and students (see Guideline 3).

6. Promote cross-fertilization with the arts and human sciences. Taking a rigorous scientific approach should not exclude the opportunity of getting inspiration from the humanities, and in particular from the arts. Recent initiatives (e.g., the EU STARTS platform; see footnote 3) witness the increased awareness of how art and science are two strongly coupled aspects of human creativity (Camurri and Volpe, 2016), as well as the impact of art on scientific and technological research. In the case of multisensory technology for education, the extraordinary ability art has of conveying content by means of sound, music, and visual media provides, in our view, a significant added value.

Figure 2. A four-phase iterative methodology directly involving the teacher in the choice of the sensory modalities to be exploited for conveying a specific concept. Following an initial evaluation phase, the best modality to teach the concept is identified for each child in a personalized way, and the selected modality is used to teach further concepts. The methodology involves tight integration of pedagogical, neuroscientific, and technological knowledge and an effective multidisciplinary approach.
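The modality-selection step of the methodology in Figure 2 can be sketched as follows. This is only an illustration under stated assumptions: the scoring scheme (evaluation scores between 0 and 1, averaged per modality), the function names, and the example data are hypothetical, and in practice the choice remains with the teacher.

```python
from statistics import mean

MODALITIES = ("visual", "auditory", "haptic")


def best_modality(scores, available=MODALITIES):
    """Return the available modality with the highest mean evaluation score."""
    candidates = {m: mean(s) for m, s in scores.items() if m in available and s}
    if not candidates:
        raise ValueError("no evaluation scores for any available modality")
    return max(candidates, key=candidates.get)


def plan_lesson(concept, evaluations, unavailable=None):
    """Map each child to the modality suggested for teaching the concept.

    `unavailable` lists, per child, modalities that cannot be used
    (e.g., vision for a visually impaired child).
    """
    unavailable = unavailable or {}
    plan = {}
    for child, scores in evaluations.items():
        allowed = [m for m in MODALITIES if m not in unavailable.get(child, ())]
        plan[child] = best_modality(scores, allowed)
    return {"concept": concept, "modality_by_child": plan}


# Hypothetical results of the initial evaluation phase (scores in [0, 1]).
evaluations = {
    "child_a": {"visual": [0.9, 0.8], "auditory": [0.6, 0.7], "haptic": [0.5]},
    "child_b": {"auditory": [0.7, 0.8], "haptic": [0.6, 0.5]},
}
lesson = plan_lesson("angles", evaluations, unavailable={"child_b": ("visual",)})
```

The returned plan is a suggestion that the teacher can accept or override before personalizing the technology; the outcomes of the lesson would then feed the next evaluation phase.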

Conclusion

We developed and tested our approach in the framework of the weDRAW project (footnote 4). This was an EU-H2020-ICT-funded project focusing on multisensory technologies for teaching math to primary school children. The final goal was to open a new teaching/learning channel based on multisensory interactive technology. The project represented an ideal testbed to assess the support of multisensory technology to learning math. More importantly, we think that the approach we outlined in this article can enable the development of a multisensory embodied and enactive learning paradigm and of a teaching ecosystem that applies in the same way and provides the same opportunities to both typically developed and impaired children, thus breaking the barriers between them and fostering inclusion.

Ethics Statement

This is a perspective paper, presenting our viewpoint on future research that will potentially involve human subjects. The perspective paper, however, is not based on any specific experimental study but is rather grounded on the existing literature and on our vision of future research.

Author Contributions

GV and MG equally contributed to both the concept of the article and its writing.

Funding

This work was partially supported by the EU-H2020-ICT Project weDRAW. WeDRAW has received funding from the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No. 732391.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank our colleagues participating in the weDRAW project for the insightful discussions.

Footnotes

1. See e.g., http://www.pcadvisor.co.uk/feature/software/best-maths-apps-for-children-3380559/

3. https://ec.europa.eu/digital-single-market/en/ict-art-starts-platform

References

Adams, W. J. (2016). The development of audio-visual integration for temporal judgements. PLoS Comput. Biol. 12:e1004865. doi: 10.1371/journal.pcbi.1004865

Alais, D., and Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257–262. doi: 10.1016/j.cub.2004.01.029

Bahrick, L. E., Flom, R., and Lickliter, R. (2002). Intersensory redundancy facilitates discrimination of tempo in 3-month-old infants. Dev. Psychobiol. 41, 352–363. doi: 10.1002/dev.10049

Bahrick, L. E., and Lickliter, R. (2000). Intersensory redundancy guides attentional selectivity and perceptual learning in infancy. Dev. Psychol. 36, 190–201. doi: 10.1037/0012-1649.36.2.190

Bahrick, L. E., and Lickliter, R. (2004). Infants’ perception of rhythm and tempo in unimodal and multimodal stimulation: a developmental test of the intersensory redundancy hypothesis. Cogn. Affect. Behav. Neurosci. 4, 137–147. doi: 10.3758/CABN.4.2.137

Baud-Bovy, G., and Balzarotti, N. (2017). “Using force-feedback devices in educational settings: a short review” in Proceedings 1st ACM SIGCHI international workshop on multimodal interaction for education (MIE 2017), 14–21.

Bruce, B. C., and Levin, J. A. (1997). Educational technology: media for inquiry, communication, construction, and expression. J. Educ. Comput. Res. 17, 79–102. doi: 10.2190/7HPQ-4F3X-8M8Y-TVCA

Camurri, A., and Volpe, G. (2016). The intersection of art and technology. IEEE Multimedia 23, 10–17. doi: 10.1109/MMUL.2016.13

Clarke, J. J., and Yuille, A. L. (1990). Data fusion for sensory information processing. (Boston: Kluwer Academic).

Connolly, T. M., Boyle, E. A., MacArthur, E., Hainey, T., and Boyle, J. M. (2012). A systematic literature review of empirical evidence on computer games and serious games. Comput. Educ. 59, 661–686. doi: 10.1016/j.compedu.2012.03.004

Curzon, P., McOwan, P. W., Cutts, Q. I., and Bell, T. (2009). Enthusing & inspiring with reusable kinaesthetic activities. SIGCSE Bull. 41, 94–98. doi: 10.1145/1595496.1562911

Dekker, T. M., Ban, H., van der Velde, B., Sereno, M. I., Welchman, A. E., and Nardini, M. (2015). Late development of cue integration is linked to sensory fusion in cortex. Curr. Biol. 25, 2856–2861. doi: 10.1016/j.cub.2015.09.043

Ernst, M. O., and Banks, M. S. (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433. doi: 10.1038/415429a

Ernst, M. O., and Bulthoff, H. H. (2004). Merging the senses into a robust percept. Trends Cogn. Sci. 8, 162–169. doi: 10.1016/j.tics.2004.02.002

Falloon, G. (2013). Young students using iPads: app design and content influences on their learning pathways. Comput. Educ. 68, 505–521.

Finocchietti, S., Cappagli, G., and Gori, M. (2015). Encoding audio motion: spatial impairment in early blind individuals. Front. Psychol. 6:1357. doi: 10.3389/fpsyg.2015.01357

Ghahramani, Z., Wolpert, D. M., and Jordan, M. I. (1997). “Computational models of sensorimotor integration” in Self-organization, computational maps, and motor control. eds. P. G. Morasso and V. Sanguineti (Amsterdam: Elsevier), 117–147.

Gori, M. (2015). Multisensory integration and calibration in children and adults with and without sensory and motor disabilities. Multisens. Res. 28, 71–99. doi: 10.1163/22134808-00002478

Gori, M., Del Viva, M., Sandini, G., and Burr, D. C. (2008). Young children do not integrate visual and haptic form information. Curr. Biol. 18, 694–698. doi: 10.1016/j.cub.2008.04.036

Gori, M., Sandini, G., and Burr, D. (2012). Development of visuo-auditory integration in space and time. Front. Integr. Neurosci. 6:77. doi: 10.3389/fnint.2012.00077

Gori, M., Sandini, G., Martinoli, C., and Burr, D. (2010). Poor haptic orientation discrimination in nonsighted children may reflect disruption of cross-sensory calibration. Curr. Biol. 20, 223–225. doi: 10.1016/j.cub.2009.11.069

Gori, M., Sandini, G., Martinoli, C., and Burr, D. C. (2014). Impairment of auditory spatial localization in congenitally blind human subjects. Brain 137, 288–293. doi: 10.1093/brain/awt311

Gori, M., Tinelli, F., Sandini, G., Cioni, G., and Burr, D. (2012). Impaired visual size-discrimination in children with movement disorders. Neuropsychologia 50, 1838–1843. doi: 10.1016/j.neuropsychologia.2012.04.009

Groff, J., and Mouza, C. (2008). A framework for addressing challenges to classroom technology use. AACE J. 16, 21–46.

Gu, Y., Angelaki, D. E., and DeAngelis, G. C. (2008). Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 11, 1201–1210. doi: 10.1038/nn.2191

Habib, M., Lardy, C., Desiles, T., Commeiras, C., Chobert, J., and Besson, M. (2016). Music and dyslexia: a new musical training method to improve reading and related disorders. Front. Psychol. 7:26. doi: 10.3389/fpsyg.2016.00026

Hicks, D., and Holden, C. (2007). Teaching the global dimension: Key principles and effective practice. (London: Routledge).

Hunt, A., and Wanderley, M. (2002). Mapping performer parameters to synthesis engines. Organised Sound 7, 97–108. doi: 10.1017/S1355771802002030

Jiang, W., Jiang, H., Rowland, B. A., and Stein, B. E. (2007). Multisensory orientation behavior is disrupted by neonatal cortical ablation. J. Neurophysiol. 97, 557–562. doi: 10.1152/jn.00591.2006

Jiang, W., Jiang, H., and Stein, B. E. (2002). Two corticotectal areas facilitate multisensory orientation behavior. J. Cogn. Neurosci. 14, 1240–1255. doi: 10.1162/089892902760807230

Johnson, A. M., Jacovina, M. E., Russell, D. G., and Soto, C. M. (2016). “Challenges and solutions when using technologies in the classroom” in Adaptive educational technologies for literacy instruction. eds. S. A. Crossley and D. S. McNamara (New York, NY, USA: Routledge), 18.

Joshi, R. M., Dahlgren, M., and Boulware-Gooden, R. (2002). Teaching reading in an inner city school through a multisensory teaching approach. Ann. Dyslexia 52, 229–242. doi: 10.1007/s11881-002-0014-9

Katai, Z. (2011). Multi-sensory method for teaching-learning recursion. Comput. Appl. Eng. Educ. 19, 234–243. doi: 10.1002/cae.20305

Katai, Z., and Toth, L. (2010). Technologically and artistically enhanced multi-sensory computer programming education. Teach. Teach. Educ. 26, 244–251. doi: 10.1016/j.tate.2009.04.012

Klemmer, S. R., Hartmann, B., and Takayama, L. (2006). “How bodies matter: five themes for interaction design” in Proceedings of the 6th conference on designing interactive systems. (New York, NY, USA: ACM), 140–149.

Kulik, J. A. (1983). Synthesis of research on computer-based instruction. Educ. Leadersh. 41, 19–21.

Landy, M. S., Banks, M. S., and Knill, D. C. (2011). “Ideal-observer models of cue integration” in Sensory cue integration. eds. J. Trommershauser, K. Kording, and M. S. Landy (Oxford: Oxford University Press), 5–29.

Lessard, N., Paré, M., Lepore, F., and Lassonde, M. (1998). Early-blind human subjects localize sound sources better than sighted subjects. Nature 395, 278–280. doi: 10.1038/26228

Lewkowicz, D. J. (1988). Sensory dominance in infants: 1. Six-month-old infants’ response to auditory-visual compounds. Dev. Psychol. 24, 155–171. doi: 10.1037/0012-1649.24.2.155

Lewkowicz, D. J. (1996). Perception of auditory-visual temporal synchrony in human infants. J. Exp. Psychol. Hum. Percept. Perform. 22, 1094–1106. doi: 10.1037/0096-1523.22.5.1094

Lewkowicz, D. J., and Lickliter, R. (eds.) (1994). The development of intersensory perception: Comparative perspectives. (Mahwah, NJ, USA: Lawrence Erlbaum Associates Inc.).

Lickliter, R., Lewkowicz, D. J., and Columbus, R. F. (1996). Intersensory experience and early perceptual development: the role of spatial contiguity in bobwhite quail chicks’ responsiveness to multimodal maternal cues. Dev. Psychobiol. 29, 403–416. doi: 10.1002/(SICI)1098-2302(199607)29:5<403::AID-DEV1>3.0.CO;2-S

Lomber, S. G., Meredith, M. A., and Kral, A. (2010). Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat. Neurosci. 13, 1421–1427. doi: 10.1038/nn.2653

Moreno, R., and Mayer, R. E. (1999). Cognitive principles of multimedia learning: the role of modality and contiguity. J. Educ. Psychol. 91, 358–368. doi: 10.1037/0022-0663.91.2.358

Morrongiello, B. A., Fenwick, K. D., and Chance, G. (1998). Cross-modal learning in newborn infants: inferences about properties of auditory-visual events. Infant Behav. Dev. 21, 543–554. doi: 10.1016/S0163-6383(98)90028-5

Nardini, M., Jones, P., Bedford, R., and Braddick, O. (2008). Development of cue integration in human navigation. Curr. Biol. 18, 689–693. doi: 10.1016/j.cub.2008.04.021

Neil, P. A., Chee-Ruiter, C., Scheier, C., Lewkowicz, D. J., and Shimojo, S. (2006). Development of multisensory spatial integration and perception in humans. Dev. Sci. 9, 454–464. doi: 10.1111/j.1467-7687.2006.00512.x

Nigay, L., and Coutaz, J. (1993). “A design space for multimodal systems: concurrent processing and data fusion” in Proceedings of the INTERACT’93 and CHI’93 conference on human factors in computing systems (CHI’93). (New York, NY, USA: ACM), 172–178.

Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. (New York, USA: Basic Books Inc.).

Petrini, K., Remark, A., Smith, L., and Nardini, M. (2014). When vision is not an option: children’s integration of auditory and haptic information is suboptimal. Dev. Sci. 17, 376–387. doi: 10.1111/desc.12127

Philip, T. M. (2017). Learning with mobile technologies. Commun. ACM 60, 34–36. doi: 10.1145/2976735

Raposo, D., Kaufman, M. T., and Churchland, A. K. (2014). A category-free neural population supports evolving demands during decision-making. Nat. Neurosci. 17, 1784–1792. doi: 10.1038/nn.3865

Raposo, D., Sheppard, J. P., Schrater, P. R., and Churchland, A. K. (2012). Multisensory decision-making in rats and humans. J. Neurosci. 32, 3726–3735. doi: 10.1523/JNEUROSCI.4998-11.2012

Raskind, M., Smedley, T. M., and Higgins, K. (2005). Virtual technology: bringing the world into the special education classroom. Interv. Sch. Clin. 41, 114–119. doi: 10.1177/10534512050410020201

Rauschecker, J. P., and Harris, L. R. (1983). Auditory compensation of the effects of visual deprivation in the cat’s superior colliculus. Exp. Brain Res. 50, 69–83. doi: 10.1007/BF00238233

Rauschecker, J. P., and Kniepert, U. (1994). Auditory localization behaviour in visually deprived cats. Eur. J. Neurosci. 6, 149–160. doi: 10.1111/j.1460-9568.1994.tb00256.x

Rizzo, A., Raheb, K. E., Whatley, S., Velaetis, M., Zanoni, M., Camurri, A., et al. (2018). “WhoLoDancE: whole-body interaction learning for dance education” in Proceedings of the workshop on cultural informatics at the EUROMED international conference on digital heritage 2018 (EUROMED2018), 41–50.

Röder, B., Kusmierek, A., Spence, C., and Schicke, T. (2007). Developmental vision determines the reference frame for the multisensory control of action. Proc. Natl. Acad. Sci. USA 104, 4753–4758. doi: 10.1073/pnas.0607158104

Röder, B., Teder-Sälejärvi, W., Sterr, A., Rösler, F., Hillyard, S. A., and Neville, H. J. (1999). Improved auditory spatial tuning in blind humans. Nature 400, 162–166. doi: 10.1038/22106

Rowland, B. A., Jiang, W., and Stein, B. E. (2014). Brief cortical deactivation early in life has long-lasting effects on multisensory behavior. J. Neurosci. 34, 7198–7202. doi: 10.1523/JNEUROSCI.3782-13.2014

Santos, M. E. C., Chen, A., Taketomi, T., Yamamoto, G., Miyazaki, J., and Kato, H. (2014). Augmented reality learning experiences: survey of prototype design and evaluation. IEEE Trans. Learn. Technol. 7, 38–56. doi: 10.1109/TLT.2013.37

Sheppard, J. P., Raposo, D., and Churchland, A. K. (2013). Dynamic weighting of multisensory stimuli shapes decision-making in rats and humans. J. Vis. 13:4. doi: 10.1167/13.6.4

Stein, B. E., Stanford, T. R., and Rowland, B. A. (2014). Development of multisensory integration from the perspective of the individual neuron. Nat. Rev. Neurosci. 15, 520–535. doi: 10.1038/nrn3742

Supalo, C. A., Wohlers, H. D., and Humphrey, J. R. (2011). Students with blindness explore chemistry at “camp can do”. J. Sci. Educ. Stud. Disabil. 15, 1–9. doi: 10.14448/jsesd.04.0001

Thompson, J. (1941). Development of facial expression of emotion in blind and seeing children. Arch. Psychol. 37, 1–47.

Tonelli, A., Cuturi, L. F., and Gori, M. (2017). The influence of auditory information on visual size adaptation. Front. Neurosci. 11:594. doi: 10.3389/fnins.2017.00594

Varni, G., Volpe, G., Sagoleo, R., Mancini, M., and Lepri, G. (2013). “Interactive reflexive and embodied exploration of sound qualities with BeSound” in Proceedings of the 12th international conference on interaction design and children (IDC2013), 531–534.

Vercillo, T., Milne, J. L., Gori, M., and Goodale, M. A. (2015). Enhanced auditory spatial localization in blind echolocators. Neuropsychologia 67, 35–40. doi: 10.1016/j.neuropsychologia.2014.12.001

Volta, E., Alborno, P., Gori, M., and Volpe, G. (2018). “Designing a multisensory social serious-game for primary school mathematics learning” in Proceedings 2018 IEEE games, entertainment, media conference (GEM 2018), 407–410.

Voss, P., Lassonde, M., Gougoux, F., Fortin, M., Guillemot, J. P., and Lepore, F. (2004). Early- and late-onset blind individuals show supra-normal auditory abilities in far-space. Curr. Biol. 14, 1734–1738.

Keywords: multisensory technologies, education, integration of sensory modalities, enaction, inclusion

Citation: Volpe G and Gori M (2019) Multisensory Interactive Technologies for Primary Education: From Science to Technology. Front. Psychol. 10:1076. doi: 10.3389/fpsyg.2019.01076

Edited by:

Claudio Longobardi, University of Turin, Italy

Reviewed by:

Benjamin A. Rowland, Wake Forest University, United States

Sharlene D. Newman, Indiana University Bloomington, United States

Copyright © 2019 Volpe and Gori. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gualtiero Volpe, gualtiero.volpe@unige.it
