- ¹Pediatric Audiology Laboratory, Department of Communication Sciences and Disorders, University of Iowa, Iowa City, IA, United States

- ²Department of Biostatistics, University of Iowa, Iowa City, IA, United States

- ³Center for Hearing Research, Audibility, Perception, and Cognition Laboratory, Boys Town National Research Hospital, Omaha, NE, United States

Objectives: The aims of the current study were: (1) to compare growth trajectories of speech recognition in noise for children with normal hearing (CNH) and children who are hard of hearing (CHH) and (2) to determine the effects of auditory access, vocabulary size, and working memory on growth trajectories of speech recognition in noise in CHH.

Design: Participants included 290 children enrolled in a longitudinal study. Children received a comprehensive battery of measures annually, including speech recognition in noise, vocabulary, and working memory. We collected measures of unaided and aided hearing and daily hearing aid (HA) use to quantify aided auditory experience (i.e., HA dosage). We used a longitudinal regression framework to examine the trajectories of speech recognition in noise in CNH and CHH. To determine factors that were associated with growth trajectories for CHH, we used a longitudinal regression model in which the dependent variable was speech recognition in noise scores, and the independent variables were grade, maternal education level, age at confirmation of hearing loss, vocabulary scores, working memory scores, and HA dosage.

Results: We found significant effects of grade and hearing status. Older children and CNH showed better speech recognition in noise than younger children and CHH. The growth trajectories for both groups were parallel over time. For CHH, older age, stronger vocabulary skills, and greater average HA dosage supported speech recognition in noise.

Conclusion: The current study is among the first to compare developmental growth rates in speech recognition for CHH and CNH. CHH demonstrated persistent deficits in speech recognition in noise out to age 11, with no evidence of convergence or divergence between groups. These trends highlight the need to provide support for children with all degrees of hearing loss in the academic setting as they transition into secondary grades. The results also elucidate factors that influence growth trajectories for speech recognition in noise for children; stronger vocabulary skills and higher HA dosage supported speech recognition in degraded situations. This knowledge helps us to develop a more comprehensive model of spoken word recognition in children.

Introduction

Every year, approximately three in 1,000 children are born with a significant hearing loss (Mehra et al., 2009). Children who are hard of hearing (CHH) have sufficient residual hearing to benefit from amplification. With the advent of newborn hearing screening, they are now being identified and fitted with hearing aids (HAs) during infancy (Holte et al., 2012). Early access to technology and services is posited to have a positive, long-term impact on functional outcomes, which results in the vast majority of CHH being educated in regular education settings (Page et al., 2018). As most CHH rely entirely on spoken language to communicate, they face significant challenges as they enter classrooms that are likely to have poor acoustics (Knecht et al., 2002). Most academic and extracurricular settings are characterized by background noise, which negatively affects speech recognition and academic outcomes in children with normal hearing (CNH) and has even greater consequences for CHH. Even though CHH have documented weaknesses with listening in noise (Crandell, 1993; Uhler et al., 2011; Caldwell and Nittrouer, 2013; Leibold et al., 2013; McCreery et al., 2015; Klein et al., 2017; Ching et al., 2018), there is little research on how their ability to recognize speech in noise develops over time during the school-age years. Increased knowledge in this area can inform both clinical decision-making and theoretical understanding of the mechanisms that drive listening in noise. The goals of the current study are twofold: (1) to investigate growth rates in speech recognition in noise for school-age CHH and CNH, and (2) to investigate the impact of auditory access and cognitive-linguistic abilities on CHH’s ability to listen in adverse acoustic conditions over time.

Given their reduced access to spectral and temporal cues in the speech signal, as well as reduced binaural processing, it is not surprising that listening in noise is a challenge for CHH. McCreery et al. (2015) examined word and phoneme recognition in noise in 7- to 9-year-old CHH and age-matched CNH. Even with amplification, CHH rarely reached the same level of performance as CNH in noise. Caldwell and Nittrouer (2013) evaluated kindergartners with normal hearing, HAs, or cochlear implants (CIs) on measures of speech recognition in quiet and in noise and found significant group differences in favor of CNH. The question that then arises is whether children with hearing loss eventually catch up with their peers, whether the gap in speech recognition in noise widens over time, or whether they show persistent but stable deficits in recognizing speech in noise. Given that adults with hearing loss show difficulties with listening in noise (Dubno, 2015), we would predict that CHH will not show speech recognition scores that are commensurate with CNH. On the other hand, CNH might reach a performance plateau on speech recognition in noise tasks, allowing CHH to eventually close the gap. It also seems improbable that the gap in speech recognition would widen over time; however, a recent study by Walker et al. (2019) indicated an increasing gap with age between CHH and CNH in identifying words during a gating paradigm. The third option, parallel growth rates for CHH and CNH, would appear to be the most reasonable prediction given what we know from previous research. This hypothesis has not been tested empirically, however, because much of the research to date is cross-sectional or has too few participants or data points to conduct longitudinal analyses. Thus, there is minimal knowledge about the developmental aspects of speech recognition for CHH compared to CNH, or about the cognitive and peripheral factors that support growth in listening skills over time.
The question of developmental trajectories in speech recognition in noise can only be effectively addressed with longitudinal data sets, which are lacking in the research literature on CHH.

In addition to limited longitudinal data, previous large-scale studies of speech recognition in children with hearing loss have focused primarily on children with congenital, severe-profound hearing loss who use CIs (Davidson et al., 2011; Robinson et al., 2012; Ching et al., 2014; Dunn et al., 2014; Easwar et al., 2018). CHH are either excluded from these research studies or combined with children who are deaf, making it difficult to isolate the effects of mild to severe hearing loss on speech recognition. The studies that have been conducted with CHH have some limitations. First, children have typically been tested on word recognition in quiet rather than word or sentence recognition in noise (Stiles et al., 2012). Identifying monosyllabic words in quiet is not representative of the everyday listening experiences of children (Magimairaj et al., 2018) and may restrict individual differences for CHH, as many of these children will perform at or near ceiling levels (McCreery et al., 2015). Furthermore, speech recognition testing with background noise more accurately reflects listening experiences in realistic settings than monosyllabic word recognition in quiet (Kirk et al., 2012; Hillock-Dunn et al., 2014). Monosyllabic word recognition in quiet places minimal cognitive and linguistic processing demands on the listener, whereas real-world listening environments require such processing (Walker et al., 2019). A second limitation of the prior research is that the focus is often on the influence of age at confirmation of hearing loss or age at amplification on speech recognition in noise (Sininger et al., 2010; Ching et al., 2013). Although it is important to evaluate the effectiveness of early hearing detection and intervention services, it is also important to understand the combined effects of auditory access and cognitive and linguistic abilities on listening development. 
There has been a great deal of attention directed toward understanding speech recognition skills in children with hearing loss, but we still lack a clear understanding of the mechanisms that drive developmental growth.

In environments with degraded signals (either due to poor acoustics or reduced hearing levels), listeners rely on higher level cognitive and linguistic skills to interpret information about the input (Nittrouer and Boothroyd, 1990). According to the Ease of Language Understanding (ELU) model (Rönnberg et al., 2013), adults with higher cognitive skills compensate in listening situations with distorted or missing information because they can use their memory and linguistic skills to repair the distorted signal (Akeroyd, 2008; Rönnberg et al., 2008; Tun et al., 2010; Zekveld et al., 2011). Evidence for the predictions of the ELU model in children is mixed. Lalonde and Holt (2014) reported that parent report measures of working memory were positively correlated with speech recognition in quiet in 2-year-old CNH. McCreery et al. (2017) evaluated monosyllabic word and sentence recognition in noise in 96 CNH aged 5 to 12 years. Children with higher working memory skills (measured as a combination of complex visual and verbal working memory span scores) had better speech recognition in noise than children with lower working memory. On the other hand, several studies do not support the predictions of the ELU model in children. Eisenberg et al. (2000) did not find an association between working memory capacity (measured with forward digit span) and spectrally degraded speech recognition in CNH after controlling for age. Magimairaj et al. (2018) also did not find that working memory capacity (measured with forward digit span, auditory working memory, and complex working memory span tasks) was predictive of speech recognition in noise for 7- to 11-year-old CNH. The differences in findings may be due to the predictor variables and/or the outcome measures. Eisenberg et al. used a short-term memory test (i.e., storage only), as opposed to complex working memory span measures (i.e., storage and processing). 
The proponents of the ELU model have posited that simple span tests like digit span are not good predictors of speech recognition (Rönnberg et al., 2013). McCreery et al. used sentences with no semantic context (which increased the memory load), whereas Magimairaj et al. used the Bamford-Kowal-Bench Speech in Noise sentences (BKB-SIN; Bench et al., 1979) which include semantic cues. It is also important to note that the effects of complex working memory span have not been thoroughly explored in CHH. More studies are needed to disentangle the associations between working memory, language, auditory access, and speech recognition in noise for children with hearing loss, who are most impacted by degraded acoustic input.

In addition to exploring the role of working memory capacity, the ELU model also predicts that language abilities will influence the ability to recognize degraded speech (Zekveld et al., 2011). Performance on sentence repetition tasks (which are used to measure speech recognition in noise) is likely tied to oral language skills (Klem et al., 2015). Stronger language skills allow individuals to make better predictions about an incoming message, even in the presence of limited sensory input (Nittrouer et al., 2013). Vocabulary knowledge accounts for a significant proportion of variance in word and sentence recognition in quiet for children with CIs and/or HAs (Blamey et al., 2001; Caldwell and Nittrouer, 2013), and language skills are significant predictors of speech recognition in noise for school-age CHH (McCreery et al., 2015; Klein et al., 2017; Ching et al., 2018). None of these studies included longitudinal data, so it was not possible to determine how these underlying mechanisms influence developmental trajectories of speech recognition in noise. In contrast to the former studies, Magimairaj et al. (2018) did not find that language skills were related to BKB-SIN scores, which they interpreted as an indication that speech recognition in noise is dissociated from language on that clinical measure. They did not include CHH as participants, however, and their language metric was a combined measure of receptive and expressive vocabulary, language comprehension, sentence recall, and inference-making. Thus, their composite language measure may have lacked sensitivity and masked variability, resulting in their reported finding of a dissociation between language and speech recognition in noise.

A third relevant factor to consider when examining sources of variance in speech recognition in noise is auditory access, particularly because CHH show large individual differences in this variable (McCreery et al., 2013; Walker et al., 2013). Auditory access has been explored as a predictor in several ways. One method is to use degree of hearing loss (i.e., pure tone average; PTA) as a predictor. Blamey et al. (2001) found that lower PTA was associated with better speech recognition in noise in children with moderate to profound hearing loss. In contrast, Sininger et al. (2010) examined auditory outcomes in young children with mild to profound hearing loss and found that PTA did not contribute to speech recognition skills. These mixed results may be related to the fact that PTA does not capture the everyday aided listening experiences of CHH. Because PTA measures only unaided audibility for very soft sounds, it does not reflect a child’s access to supra-threshold speech while wearing HAs.

An alternative to relying on PTA is to examine audibility levels, as measured by the Speech Intelligibility Index (SII). SII is a measurement that describes the proportion of speech accessible to the listener, with or without HAs. It accounts for the configuration of hearing loss, differences in ear canal size, and amplification characteristics of HAs. Studies have shown an association between SII and speech recognition in CHH (Stelmachowicz et al., 2000; Davidson and Skinner, 2006; Scollie, 2008; Stiles et al., 2012; McCreery et al., 2017); however, Ching et al. (2018) reported that aided SII did not contribute any additional variance to speech recognition in noise for 5-year-old CHH, after controlling for unaided hearing thresholds, non-verbal intelligence, and language skills. Children with HAs in the Ching et al. study were fitted within 3 dB of HA prescriptive targets, which likely reduced variability in SII.
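To make the SII concept concrete, the sketch below computes a simplified index as an importance-weighted sum of band audibility. It is an illustration under stated assumptions, not the ANSI procedure used in this study: the octave-band importance weights are our understanding of the ANSI S3.5-1997 octave-band values and should be checked against the standard; the "disturbance" level in each band stands in for the larger of the listener's threshold and any masking noise, expressed on the same dB scale as the speech; and the level-distortion and spread-of-masking terms of the full standard are omitted. The helper names (`band_audibility`, `simplified_sii`) are hypothetical.

```python
# Assumed octave-band importance weights (Hz -> weight). We believe these match
# the ANSI S3.5-1997 octave-band procedure; verify against the standard before use.
BAND_IMPORTANCE = {
    250: 0.0617, 500: 0.1671, 1000: 0.2373,
    2000: 0.2648, 4000: 0.2142, 8000: 0.0549,
}


def band_audibility(speech_db: float, disturbance_db: float) -> float:
    """Fraction of a band's 30 dB speech dynamic range that is audible.

    Speech is treated as spanning 15 dB above to 15 dB below its long-term
    level, so audibility runs linearly from 0 to 1 over that range.
    """
    k = (speech_db - disturbance_db + 15.0) / 30.0
    return min(1.0, max(0.0, k))


def simplified_sii(speech_db: dict, disturbance_db: dict) -> float:
    """Importance-weighted sum of band audibility (no level-distortion term)."""
    return sum(
        weight * band_audibility(speech_db[f], disturbance_db[f])
        for f, weight in BAND_IMPORTANCE.items()
    )
```

When speech exceeds the disturbance by 15 dB or more in every band, each band is fully audible and the index is 1; when speech falls 15 dB or more below the disturbance everywhere, it is 0. Amplification raises the speech level in each band, which is how aided SII can exceed unaided SII for the same thresholds.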

A third way to examine auditory access is to consider individual differences in the amount of daily HA use. Only a few studies have looked at hours of HA use as a predictor variable of speech recognition in noise. McCreery et al. (2015) found that children with more hours of HA use showed higher scores on parent report measures of auditory skills and word recognition in quiet for toddlers and preschoolers with hearing loss. In contrast, Klein et al. (2017) did not find an effect of HA use on word and nonword recognition in school-age CHH. They acknowledged, however, that there was little variability in this factor among the participants, who were mostly consistent HA users.

To better understand the impact of auditory access on listening in noise, we propose to conceptualize the auditory experience of CHH as a combination of unaided hearing, aided SII, and amount of HA use (Walker et al., accepted). Our past studies showed that CHH demonstrate large individual differences in aided audibility (McCreery et al., 2013) and amount of HA use (Walker et al., 2013) over time. We have found unique effects of unaided SII, aided SII, and amount of HA use on listening and language outcomes (McCreery et al., 2015; Tomblin et al., 2015), but we have not empirically tested the combined effects of these three factors on speech recognition. In pursuit of this goal, we have developed a metric we call HA dosage. The concept of dosage has been applied to pharmacological and child language intervention research to study the effect of different treatment intensities (Warren et al., 2007), but it has not been utilized in the literature on childhood hearing loss. Combining HA dosage measures with longitudinal data on speech recognition in noise for children with hearing loss can inform us of the long-term effects of specific approaches to intervention and auditory access. For example, it is unclear whether a higher HA dosage averaged across time is sufficient to support the development of speech recognition in noise, or whether fluctuations in auditory access (either due to inconsistency with wearing HAs or changes in hearing levels or aided audibility) could have a negative impact on listening in noise. The need to demonstrate the effects of aided auditory access is particularly relevant for school-age children, some of whom receive less academic support in later grades (Page et al., 2018; Klein et al., 2019) and are at risk for inconsistent HA use in the classroom as they enter adolescence (Gustafson et al., 2015). 
Greater knowledge of the effects of HA dosage on speech recognition in noise can guide implementation of effective interventions for children with hearing loss and has the potential to motivate parents, teachers, and service providers to encourage increased HA usage.

In summary, no studies have compared developmental trajectories in speech recognition in noise between CNH and CHH. This paper describes results from a longitudinal study in which speech recognition in noise measures were collected on an annual basis in school-age CNH and CHH. The aims of this study were to: (1) characterize the growth trajectories of speech recognition in noise for CNH and CHH, (2) determine whether CHH and CNH show similar growth rates over time, and (3) identify the auditory, cognitive, and linguistic factors that are associated with individual differences in growth rates for speech recognition in noise for CHH. We expect this knowledge to provide further insight into the everyday functional listening skills of children with and without hearing loss.

Method

Participants

Participants included 290 children (CHH, n = 199; CNH, n = 92) who were enrolled in a multicenter, longitudinal study on outcomes of children with mild to severe hearing loss, Outcomes of School-Age Children who are Hard of Hearing (OSACHH). The primary recruitment sites were the University of Iowa, Boys Town National Research Hospital, and University of North Carolina-Chapel Hill. Some of the children from the Iowa and Boys Town test sites also participated in a second longitudinal project that was conducted during the same time period as OSACHH. This second project was called Complex Listening in School-Age Hard of Hearing Children.

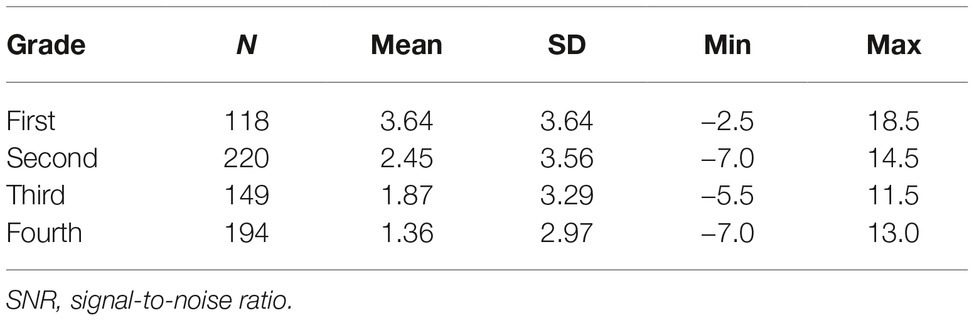

CHH had a permanent bilateral hearing loss with a better-ear four-frequency PTA in the mild to moderately severe range. One hundred seventy-nine children had a sensorineural or mixed hearing loss, 15 had a conductive hearing loss, and two had auditory neuropathy spectrum disorder. Three children did not have the type of hearing loss reported. Both CHH and CNH used spoken English as the primary communication mode and had no major vision, motor, or cognitive impairments. CNH and CHH were matched by age. There was no significant between-group difference in maternal education level [t(130) = −1.61, p = 0.11]. Demographic information, including audiologic data for the CHH, is provided in Table 1.

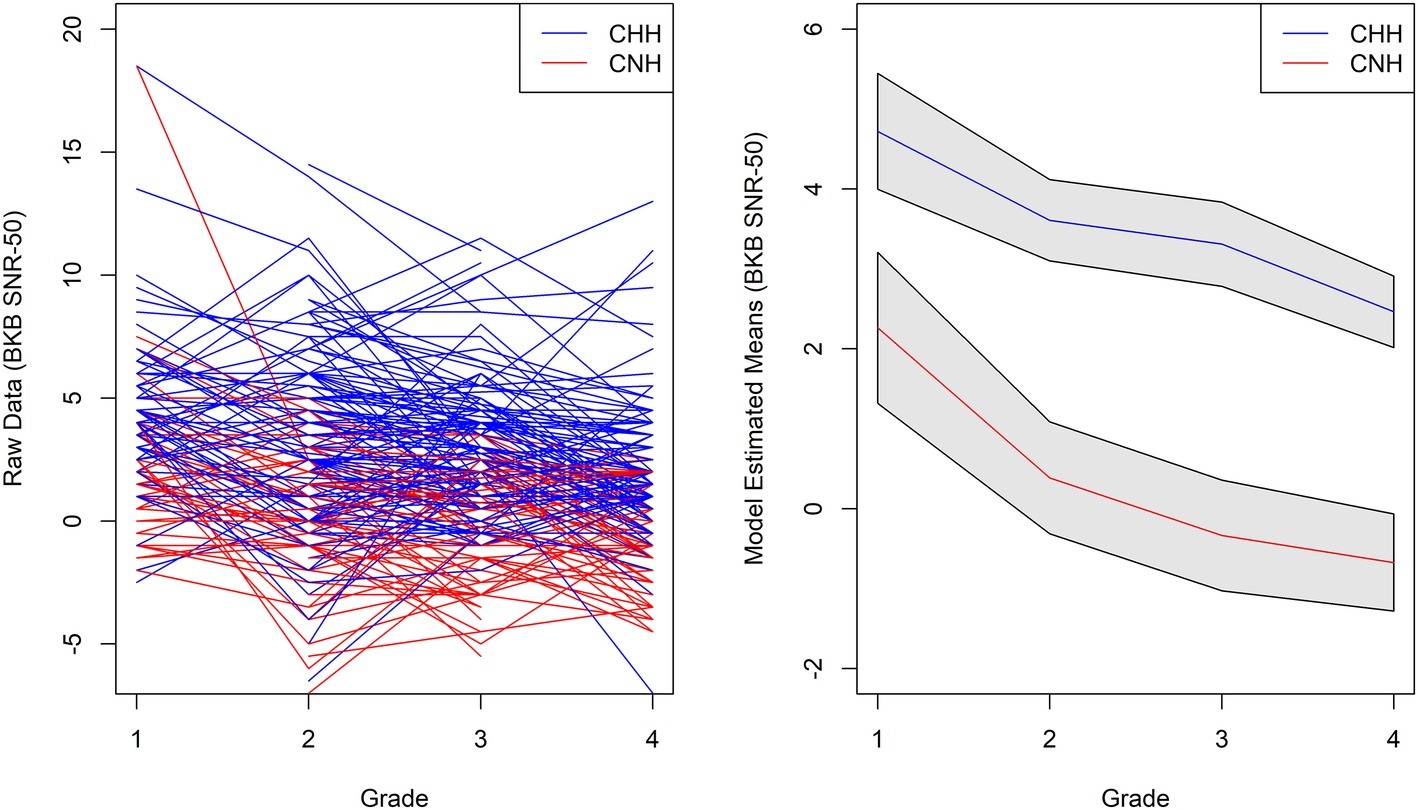

Table 1. Demographic characteristics for children who are hard of hearing (CHH) and children with normal hearing (CNH).

Data reported in the current analyses were collected when the children were approximately 7, 8, 9, or 10 years of age (first, second, third, or fourth grade, respectively). Children were seen for Complex Listening during first and third grade and for OSACHH during second and fourth grade. All participants completed the BKB-SIN (see description below) during at least one visit over the course of the studies.

Procedures

This study was carried out in accordance with the recommendations of the University of Iowa Institutional Review Board, with written informed consent from all subjects. All parents of the participants gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the University of Iowa Institutional Review Board.

For the current analysis, participants contributed data from the BKB-SIN at up to four visits: first grade (CHH, n = 74; CNH, n = 44); second grade (CHH, n = 145; CNH, n = 79); third grade (CHH, n = 93; CNH, n = 56); and fourth grade (CHH, n = 128; CNH, n = 69). Because participants entered the study at different time points, they varied in terms of their number of visits. Furthermore, some participants missed visits between years. Across both groups, 88 children completed one visit, 63 completed two visits, 82 completed three visits, and 57 completed four visits.
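The visit counts in this section can be cross-checked with simple arithmetic: summing participants across grades and summing children weighted by their number of visits should both give the total number of BKB-SIN administrations. The sketch below uses only the counts reported above; the variable names are illustrative.

```python
# Participants contributing BKB-SIN data at each grade, as reported in the text.
visits_per_grade = {
    "first": {"CHH": 74, "CNH": 44},
    "second": {"CHH": 145, "CNH": 79},
    "third": {"CHH": 93, "CNH": 56},
    "fourth": {"CHH": 128, "CNH": 69},
}

# Number of children (both groups combined) keyed by number of visits completed.
children_by_visit_count = {1: 88, 2: 63, 3: 82, 4: 57}

# Total administrations counted grade by grade...
total_visits_by_grade = sum(sum(g.values()) for g in visits_per_grade.values())
# ...and counted child by child (children x visits each).
total_visits_by_child = sum(k * n for k, n in children_by_visit_count.items())
total_children = sum(children_by_visit_count.values())

print(total_children, total_visits_by_grade, total_visits_by_child)
```

Both tallies give 688 administrations from 290 children, which agrees with the sample size reported for the study.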

Audiology Measures

Audiologic measures, HA measures, and speech recognition in noise tests were collected at every visit. For CHH, a trained clinician obtained air-conduction thresholds at 250, 500, 1,000, 2,000, 4,000, 6,000, and 8,000 Hz. Bone-conduction thresholds were obtained at 500, 1,000, 2,000, and 4,000 Hz. The four-frequency (500, 1,000, 2,000, and 4,000 Hz) better-ear pure-tone average (BEPTA) was then calculated. CNH passed a hearing screen in both ears at 20 dB HL at these four frequencies.
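A minimal sketch of the BEPTA computation, assuming thresholds are stored per ear as a mapping from frequency (Hz) to dB HL; the function names are hypothetical. The better ear is taken as the ear with the lower four-frequency average. The screening helper is a simplification that treats a pass as a threshold at or below 20 dB HL at each of the four frequencies in both ears.

```python
PTA_FREQS_HZ = (500, 1000, 2000, 4000)


def four_freq_pta(thresholds_db_hl: dict) -> float:
    """Mean air-conduction threshold (dB HL) at 500, 1000, 2000, and 4000 Hz."""
    return sum(thresholds_db_hl[f] for f in PTA_FREQS_HZ) / len(PTA_FREQS_HZ)


def better_ear_pta(left: dict, right: dict) -> float:
    """Better ear = the ear with the lower (better) four-frequency PTA."""
    return min(four_freq_pta(left), four_freq_pta(right))


def passes_screen(left: dict, right: dict, criterion_db_hl: float = 20) -> bool:
    """Simplified screen: every PTA frequency in both ears at or below criterion."""
    return all(ear[f] <= criterion_db_hl for ear in (left, right) for f in PTA_FREQS_HZ)
```

For example, a left ear with thresholds of 30, 35, 40, and 55 dB HL and a right ear with 45, 50, 55, and 70 dB HL have PTAs of 40 and 55 dB HL, so the BEPTA is 40 dB HL.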

Hearing Aid Verification

At each visit, the audiologist verified that participants’ HAs were functioning appropriately. The SII (ANSI, 1997) was calculated for both ears to estimate speech audibility based on ear canal acoustics (measured real-ear-to-coupler difference or age-average real-ear-to-coupler difference) and hearing thresholds. The SII represents the proportion of the speech spectrum that is audible for speech presented at a conversational level (65 dB SPL) from a distance of 1 m. Both better-ear aided and unaided SII were calculated; for CHH who did not use HAs, only unaided SII was included in the analysis.

Hearing Aid Use

During each test visit, the caregiver completed a questionnaire related to daily HA use (available at: https://ochlstudy.org/assessment-tools). Caregivers reported the average number of hours per day that the child wore HAs on weekdays and on weekends, and these values were combined into a weighted HA use measure [weekday use × 0.71 (5/7 days of the week) + weekend use × 0.29 (2/7 days of the week)].
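The weighting can be written as a short function. The reported weights 0.71 and 0.29 are 5/7 and 2/7 rounded to two decimals; the optional `exact` flag is a hypothetical addition for illustration that uses the unrounded fractions instead.

```python
def weighted_daily_use(weekday_hours: float, weekend_hours: float,
                       exact: bool = False) -> float:
    """Average daily HA use, weighting weekdays by 5/7 and weekend days by 2/7."""
    if exact:
        w_weekday, w_weekend = 5 / 7, 2 / 7
    else:
        # Rounded weights as reported in the text.
        w_weekday, w_weekend = 0.71, 0.29
    return weekday_hours * w_weekday + weekend_hours * w_weekend
```

For a child reported to wear HAs 10 hours on weekdays and 6 hours on weekend days, the rounded weights give 8.84 hours per day, and the exact fractions give about 8.86 hours per day.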

Hearing Aid Dosage

To measure the combined effects of HA use and audibility levels (aided and unaided), we calculated a variable termed “HA dosage.” This metric can be conceptualized as how much daily access a child receives from HAs. HA dosage combines the number of hours of daily HA use with aided and unaided hearing into one weighted measure of how much auditory access a child experiences during the day¹ from their HAs. It is calculated as

$$\text{HA dosage} = h \times \text{SII}_{\text{aided, better ear}} - (24 - h) \times \text{SII}_{\text{unaided, better ear}},$$

where $h$ is the number of hours of daily HA use. The number of hours of daily HA use is weighted by aided SII (access to speech with HAs). If SII = 1, the child has full access to the speech spectrum for that number of hours throughout the day. The amount of time the child does not wear HAs during the day, weighted by unaided SII (access to speech without HAs), is then subtracted from the hours of use weighted by aided SII. A smaller value indicates lower HA dosage and a greater value indicates higher HA dosage.
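A minimal sketch of the dosage formula as defined in this section, with hypothetical argument names; SII values are proportions from 0 to 1. It follows the text in subtracting the unaided term.

```python
def ha_dosage(daily_use_hours: float, aided_sii: float, unaided_sii: float) -> float:
    """HA dosage as defined in the text: hours of use x aided better-ear SII,
    minus hours without HAs x unaided better-ear SII."""
    if not 0 <= daily_use_hours <= 24:
        raise ValueError("daily HA use must be between 0 and 24 hours")
    hours_without_has = 24 - daily_use_hours
    return daily_use_hours * aided_sii - hours_without_has * unaided_sii
```

For example, 10 hours of daily use with an aided SII of 0.80 and an unaided SII of 0.50 gives 10 × 0.80 − 14 × 0.50 = 1.0; increasing use to 14 hours with the same SII values gives 11.2 − 5.0 = 6.2, so more consistent HA use raises dosage.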

Speech Recognition in Noise

We administered the BKB-SIN test (Bench et al., 1979) at each test visit. The BKB-SIN was developed for use with children and includes short sentences with semantic and syntactic content at a first-grade reading level (Wilson et al., 2007). Recorded sentences spoken by a male talker were presented in multi-talker background noise. The signal was calibrated at 65 dBA prior to administration. Each child received one list consisting of Part A and Part B (10 sentences per part) per visit. Lists 1–8 were administered randomly to participants; however, no participant received the same list 2 years in a row. Each sentence was presented at a different SNR, starting at 21 dB SNR and decreasing in 3 dB steps, so that the tenth sentence was presented at −6 dB SNR. The test was scored in terms of the SNR needed to accurately identify 50% of the key words (i.e., SNR-50) rather than percent correct of the total word list. Thus, a lower SNR-50 represents less difficulty understanding speech in background noise, and growth over time is seen as a downward trajectory.
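The SNR-50 follows from the descending-SNR design. The sketch below uses the general Spearman-Kärber estimator for a descending-level test, assuming an equal number of key words (three) at every SNR step. The BKB-SIN manual specifies its own scoring formula, which should be used for actual scoring, so this is illustrative only and the function name is hypothetical.

```python
def spearman_karber_snr50(words_correct: int, start_snr_db: float = 21.0,
                          step_db: float = 3.0, words_per_sentence: int = 3) -> float:
    """Spearman-Karber estimate of the SNR yielding 50% key words correct.

    Assumes the same number of key words at every SNR step. Each additional key
    word correct lowers the estimate by step_db / words_per_sentence dB.
    """
    return start_snr_db + step_db / 2 - step_db * words_correct / words_per_sentence
```

Under these assumptions, 15 key words correct gives 21 + 1.5 − 15 = 7.5 dB SNR, and every additional key word correct lowers the estimate by 1 dB, which is why better performance appears as a lower SNR-50.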

Language Measures

Test protocols were developed to be appropriate for children utilizing spoken English in first through fourth grade. Test protocols varied depending on the year of testing. First and third grade test batteries were the same, and second and fourth grade test batteries were the same.

Vocabulary

At first and third grade, we administered two measures of vocabulary knowledge. The Wechsler Abbreviated Scale of Intelligence-2 (WASI-2; Wechsler and Hsiao-pin, 2011) Vocabulary subtest is a standardized measure of expressive vocabulary. The examiner instructs the participant to define a series of words. Responses are scored as 0, 1, or 2 points based on the accuracy of the definition. Also at first and third grade, examiners administered the Peabody Picture Vocabulary Test-4 (PPVT-4; Dunn and Dunn, 2007). The PPVT-4 assesses receptive vocabulary; the examiner says a target word, and the participant selects the picture, from a set of four, that corresponds to it. The correlation between the WASI-2 Vocabulary raw scores and the PPVT-4 raw scores was 0.81. Because the raw scores for WASI-2 Vocabulary and PPVT-4 are on different scales, we transformed each participant’s scores to z-scores and averaged the z-scores together to create a single vocabulary composite score. The conversion to z-scores allowed us to standardize performance relative to our own population of participants and better measure individual growth. At second and fourth grade, we administered the Woodcock-Johnson Tests of Achievement-III Picture Vocabulary subtest (WJTA-III; Woodcock et al., 2001), which measures expressive vocabulary via picture naming. Again, we transformed the raw scores to z-scores so they would be on the same scale as the other vocabulary measures.
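The composite procedure (standardize each measure, then average each child's z-scores) can be sketched as follows. Two details are assumptions because the text does not specify them: z-scores use the sample standard deviation (n − 1 denominator), and standardization is performed over whatever set of children is passed in (for example, all children tested at a given grade). The same `composite` function also covers the three-measure working memory composite used at second and fourth grade.

```python
from statistics import mean, stdev


def z_scores(raw):
    """Standardize raw scores against the sample's own mean and SD."""
    m, s = mean(raw), stdev(raw)
    return [(x - m) / s for x in raw]


def composite(*measures):
    """Average each child's z-scores across measures.

    Each argument is a list of raw scores, with children in the same order.
    """
    standardized = [z_scores(m) for m in measures]
    return [mean(child_zs) for child_zs in zip(*standardized)]
```

For example, hypothetical WASI-2 raw scores of 10, 20, and 30 and PPVT-4 raw scores of 100, 120, and 140 both standardize to −1, 0, and +1, so the composite is −1, 0, and +1 despite the different raw-score scales.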

Working Memory

At first and third grade, we administered two standardized working memory measures from the Automated Working Memory Assessment (AWMA; Alloway, 2007). The Odd One Out subtest is a visual–spatial complex working memory span task. The participant sees three shapes in a three-square matrix on a computer screen. Two of the shapes are the same and one is different. The participant points to the shape that is the “odd one out.” The participant is then shown three empty boxes and indicates where the odd shape was located. The task is administered using a span procedure, in which the participant is asked to indicate the location of an increasing number of items. When four out of six spans within a set are identified correctly, the participant moves to the next level and the span increases by one item. The task is discontinued after three incorrect span responses within a set.
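To illustrate the advance and discontinue rules described above, the following sketch scores a hypothetical sequence of trial outcomes. The function and parameter names, the starting span, and the choice to report the longest span passed are illustrative assumptions; the AWMA's own scoring may differ.

```python
def run_span_task(responses_by_level, start_span=1, trials_per_level=6,
                  pass_criterion=4, fail_criterion=3):
    """Apply the advance/discontinue rule to recorded trial outcomes.

    responses_by_level: one list of booleans (True = correct trial) per span
    length, starting at start_span. A level is passed once pass_criterion
    trials are correct; testing stops once fail_criterion trials are wrong.
    Returns the longest span passed (start_span - 1 if none).
    """
    longest_passed = start_span - 1
    for offset, trials in enumerate(responses_by_level):
        span = start_span + offset
        correct = incorrect = 0
        for ok in trials[:trials_per_level]:
            if ok:
                correct += 1
            else:
                incorrect += 1
            if correct >= pass_criterion or incorrect >= fail_criterion:
                break
        if correct >= pass_criterion:
            longest_passed = span  # advance: span increases by one item
        else:
            break  # discontinued (or no more recorded trials) at this level
    return longest_passed
```

For instance, a child who passes span 1 on four straight trials, passes span 2 on the fifth trial after one error, and then makes three errors at span 3 is credited with a longest span of 2.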

The Listening Recall subtest is a verbal complex working memory span task. The participant hears a sentence (e.g., “You eat soup with a knife”) and must determine if it is true or false. After hearing a set of two sentences, the participant repeats back the last word of each sentence in the order that he/she heard them. This task is also administered using a span procedure; if the participant accurately identifies the last words of the sentences in the correct order, the span increases by one sentence. The correlation for Listening Recall and Odd One Out raw scores for participants in first and third grade was 0.61. Raw scores were transformed into z-scores and averaged together to form a composite score.

At second and fourth grade, we again administered the Listening Recall and Odd One Out tasks. In addition, we administered Backward Digit Span, a working memory span measure in which the participant hears a series of numbers and is instructed to repeat them back verbally in reverse order. The correlation between raw scores for Backward Digit Span and Listening Recall was 0.50, the correlation for Backward Digit Span and Odd One Out was 0.52, and the correlation for Listening Recall and Odd One Out was 0.52 for participants in second and fourth grade. The raw scores of the three variables were transformed into z-scores and averaged together to compute a composite working memory score at second and fourth grade.

Statistical Analyses

Our first two research questions evaluated the growth trajectories of the BKB-SIN SNR-50 scores for CHH and CNH, and whether the two groups showed similarities or differences in their rate of growth. To address these research questions, we constructed a longitudinal regression model. The fixed effects in the regression model were grade (first, second, third, and fourth), hearing status (CHH, CNH), and the interaction between grade and hearing status. To account for the correlation due to repeated measures, we included a correlation structure on the residuals. The Akaike Information Criterion (AIC; Akaike, 1974) was used to select the appropriate correlation structure, with lower AIC values indicating better-fitting models. A heterogeneous compound symmetric covariance matrix (AIC = 3315.2) was chosen over an unstructured covariance matrix (AIC = 3318.6). This structure assumes that the correlations between grades are equal while allowing the variances to differ across time points.
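The heterogeneous compound symmetric (CSH) structure can be written out explicitly: each time point has its own variance σᵢ², and every pair of time points shares a single correlation ρ, so Cov(i, j) = ρσᵢσⱼ. With four grades, CSH has 5 covariance parameters versus 10 for an unstructured matrix; because AIC (−2 log-likelihood + 2 × number of estimated parameters) penalizes extra parameters, it can favor CSH even when the unstructured model fits slightly better. The sketch below only constructs the matrices and counts parameters under these definitions; it is not the software used for model fitting, and the function names are hypothetical.

```python
def csh_covariance(sds, rho):
    """Heterogeneous compound symmetry: Var_i = sd_i**2, Cov_ij = rho * sd_i * sd_j."""
    n = len(sds)
    return [
        [sds[i] ** 2 if i == j else rho * sds[i] * sds[j] for j in range(n)]
        for i in range(n)
    ]


def n_cov_params(structure: str, n_times: int) -> int:
    """Number of covariance parameters for CSH or unstructured (UN) matrices."""
    if structure == "CSH":
        return n_times + 1  # one variance per time point plus one shared correlation
    if structure == "UN":
        return n_times * (n_times + 1) // 2  # every variance and covariance is free
    raise ValueError(f"unknown structure: {structure}")
```

Dividing each off-diagonal entry of a CSH matrix by σᵢσⱼ returns ρ for every pair of grades, which is the "equal correlations, unequal variances" property described above.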

The third research question examined which factors were associated with individual differences in growth rate for speech recognition in noise for CHH only. For this analysis, we used a linear regression model with a heterogeneous compound symmetric error structure to account for correlation between grades and unequal variances across grades. The dependent variable was again the BKB-SIN SNR-50 score. The fixed effects were grade, maternal education level, age at confirmation of hearing loss, vocabulary composite z-scores, and working memory composite z-scores. Maternal education level was coded as ordinal levels (1 = High School or less, 2 = Some college, 3 = Bachelor’s degree, 4 = Post graduate, with 4 as the reference level). We also included average HA dosage across visits and change in HA dosage as separate fixed effects because HA dosage is a time-varying covariate. Change in HA dosage was calculated as each participant’s HA dosage at a given visit subtracted from that participant’s average HA dosage across visits. These separate variables allowed us to determine whether the average level of HA dosage across visits or the change in HA dosage was associated with growth rate.
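The split of HA dosage into a child-level average and a visit-level change term can be sketched as below, with hypothetical names. The code follows the wording of the text, in which the visit value is subtracted from the child's average; in a linear model, reversing that subtraction would flip only the sign of the estimated coefficient, not its significance.

```python
from statistics import mean


def decompose_dosage(dosage_by_visit):
    """Split one child's time-varying HA dosage into two model covariates.

    Returns (average, change): the child's average dosage across visits, and,
    for each visit, the average minus that visit's dosage (as worded in the text).
    """
    average = mean(dosage_by_visit)
    change = [average - d for d in dosage_by_visit]
    return average, change
```

For a child with dosages of 4, 6, and 8 across three visits, the average covariate is 6 at every visit and the change covariate is 2, 0, and −2, so the two terms separate stable differences between children from fluctuations within a child.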

Results

Changes in Speech Recognition in Noise Over Time

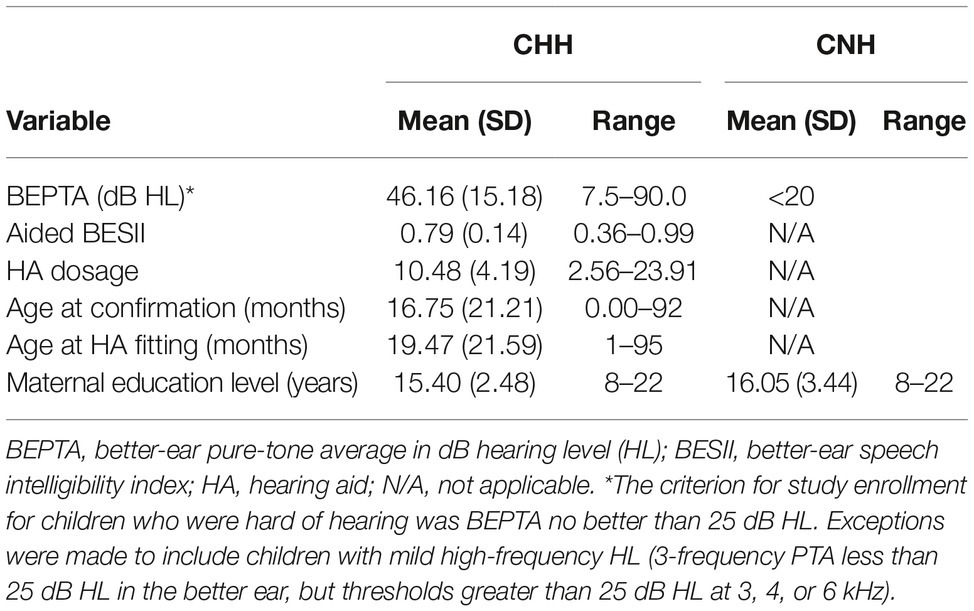

We found a significant main effect for grade, F(3, 389) = 23.78, p < 0.0001. Each older grade had a lower SNR-50 compared to younger grades (see Table 2). There was also a significant main effect for hearing status, t(284) = 8.19, p < 0.001. The interaction between grade and hearing status was not statistically significant, F(3, 389) = 1.18, p = 0.3154. The absence of an interaction is evident in Figure 1. On average, CHH demonstrated an SNR-50 that was 3.14 dB higher than that of CNH, and the growth rate was consistent between groups.

Figure 1. Average raw (left panel) and predicted (right panel) BKB-SIN SNR-50 scores based on model from first through fourth grade for children with normal hearing (red) and children who are hard of hearing (blue).

Factors Associated With Growth Rate in Speech Recognition in Noise for Children Who Are Hard of Hearing

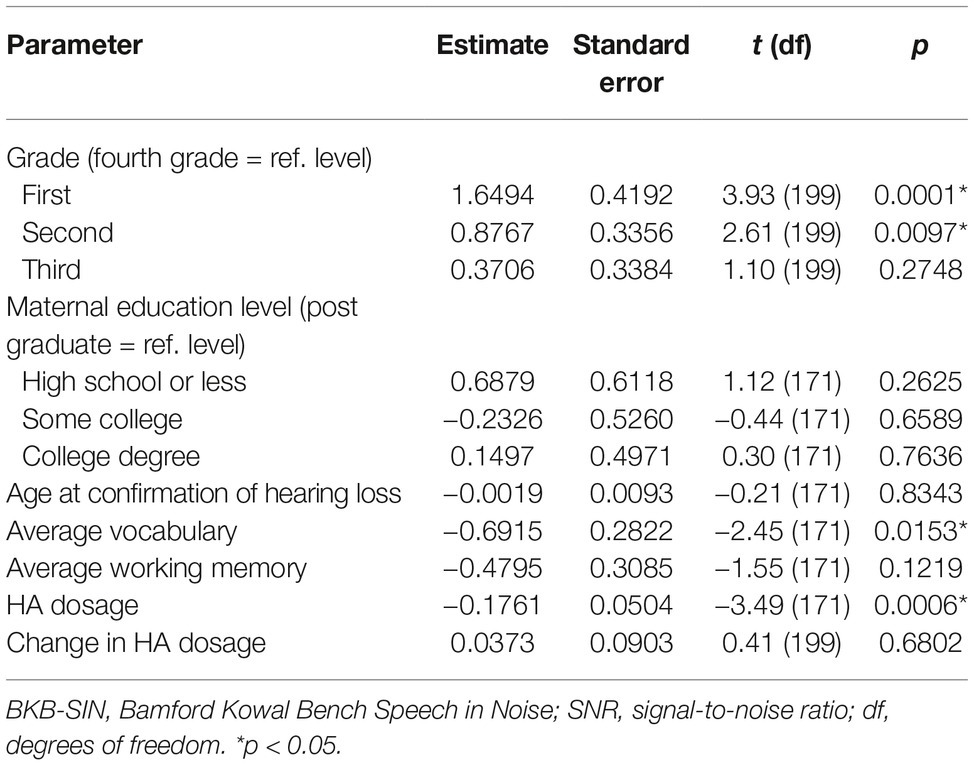

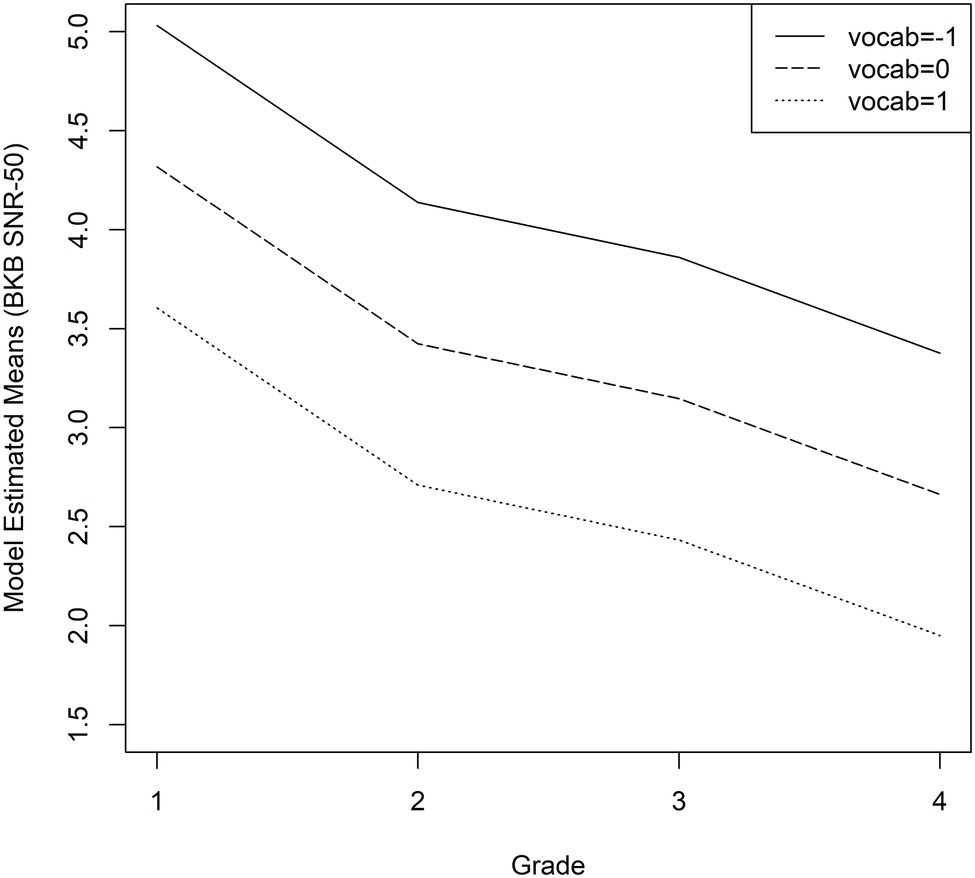

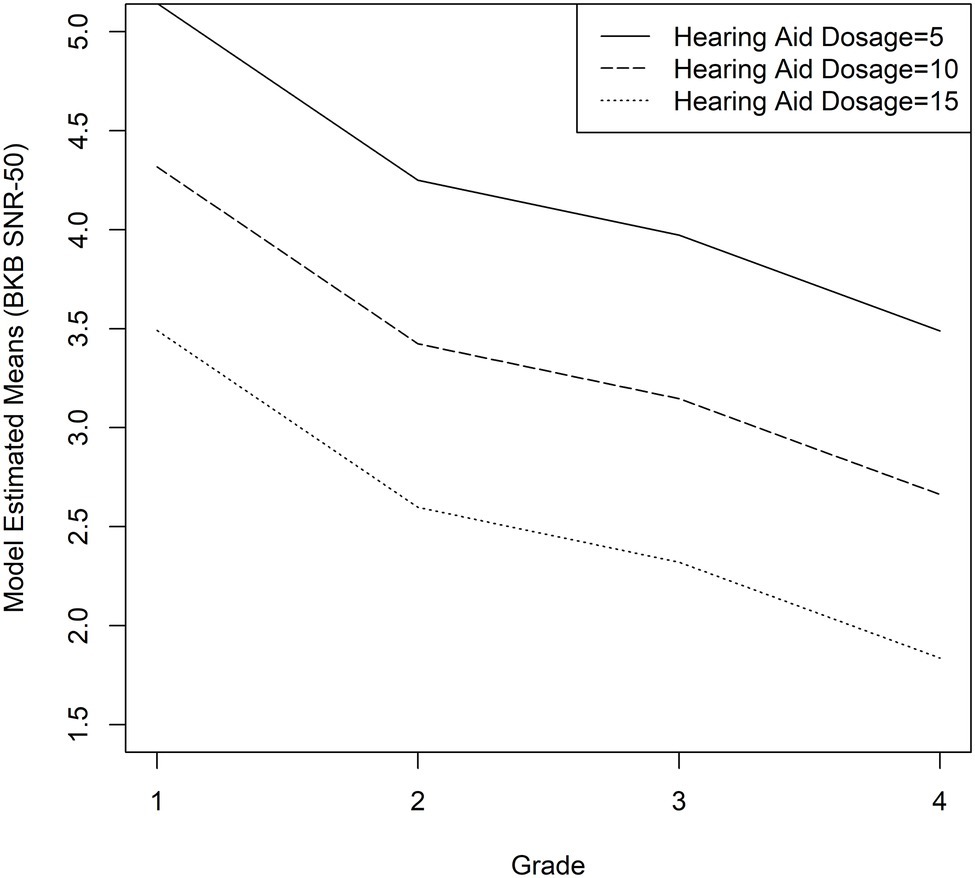

As described in the “Statistical Analyses” section, the fixed factors were grade, maternal education level, age at confirmation of hearing loss, vocabulary composite z-score, working memory composite z-score, average HA dosage, and change in HA dosage. Interactions were not significant, so they were not included in the final model. Table 3 shows the parameters of the linear regression model. Grade level [F(3, 199) = 6.04, p = 0.0006], vocabulary composite z-scores [F(1, 171) = 6.00, p = 0.0153], and average HA dosage [F(1, 171) = 12.19, p = 0.0006] were significantly associated with rates of growth in BKB-SIN SNR-50 scores. Maternal education level [F(3, 171) = 0.77, p = 0.5098], age at confirmation of hearing loss [F(1, 171) = 0.04, p = 0.8343], working memory composite z-scores [F(1, 171) = 2.42, p = 0.1219], and change in HA dosage [F(1, 199) = 0.17, p = 0.6802] were not significant predictors. Stronger vocabulary skills (Figure 2) and greater average HA dosage (Figure 3) were related to better recognition of speech in noise, and these patterns were consistent across age.
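The reported statistics can be sanity-checked with a short calculation. For one numerator degree of freedom, F(1, df) equals the square of a t statistic with df degrees of freedom, and with df near 200 the t distribution is close to the standard normal. The sketch below uses that normal approximation (an assumption, not the exact test used in the analysis) to show that p = 0.6802 for change in HA dosage corresponds to an F value well below 1, whereas an F of 17 would be highly significant. The function name is hypothetical.

```python
from math import erfc, sqrt


def approx_p_from_f1(f_value):
    """Approximate p-value for F(1, df) when df is large.

    F(1, df) = t(df)^2, and t(df) is close to standard normal for df near 200,
    so p is approximately the two-sided normal tail at z = sqrt(F).
    """
    z = sqrt(f_value)
    return erfc(z / sqrt(2))  # equals 2 * (1 - Phi(z))


print(round(approx_p_from_f1(0.17), 2))  # 0.68
print(approx_p_from_f1(17) < 0.001)      # True
```

The approximation slightly understates p for smaller denominator df: for vocabulary, F(1, 171) = 6.00 gives about 0.014 here versus the reported 0.0153 from the exact F distribution.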

Table 3. Linear regression model with grade, maternal education level, age at confirmation of hearing loss, average vocabulary, average working memory, average HA dosage, and change in HA dosage as fixed effects and BKB-SIN SNR-50 as the dependent variable.

Figure 2. Average predicted BKB-SIN SNR-50 scores as a function of vocabulary scores for children who are hard of hearing. The solid line represents z-scores of −1 (1 SD below average), the dashed line represents z-scores of 0 (average), and the dotted line represents z-scores of +1 (1 SD above the mean).

Figure 3. Average predicted BKB-SIN SNR-50 scores as a function of HA dosage for children who are hard of hearing. The solid line represents the 5th percentile (HA dosage = 5), the dashed line represents the 50th percentile (HA dosage = 10), and the dotted line represents the 95th percentile (HA dosage = 15).

Discussion

The primary aim of the current study was to compare speech recognition in noise in a large group of CHH and age-matched hearing peers who were followed annually through fourth grade. To our knowledge, this study is among the first to track the same group of children over time and compare developmental growth rates in speech recognition for CHH and CNH. We also evaluated the effects of auditory access, complex working memory span, and vocabulary size on listening in noise in CHH. Identifying the mechanisms that underlie speech recognition in degraded contexts will guide the clinical decision-making process for optimizing outcomes (Ching et al., 2018) and inform theories about how auditory access shapes development for CHH (Moeller and Tomblin, 2015).

Group Differences in Growth Trajectories

Prior work on speech recognition in CHH has used cross-sectional designs with a focus on children in the 5- to 12-year-old age range (McCreery et al., 2015; Klein et al., 2017; Ching et al., 2018). CNH appear to improve in their ability to recognize words with age, reaching adult-like levels by adolescence (Eisenberg et al., 2000; Corbin et al., 2016). Based on these previous studies, we expected that CHH would have more difficulty with listening in background noise at the initial test visits and that both groups would improve over time, but we were unsure of the between-group developmental patterns of these deficits. There were three possible outcomes: (1) CHH would eventually catch up as a group to CNH, (2) the gap in speech recognition in noise skills would widen over time, or (3) the gap would remain constant over time. Based on prior literature with adults, it seemed unlikely that CHH would catch up to their hearing peers. Results by Walker et al. (2019) provided some support for the possibility of an increasing gap in speech recognition; however, the results from the linear regression models pointed toward the third option: CHH showed a significant delay in speech recognition in noise skills at the initial visit around first grade, both groups improved in their speech recognition in noise skills over time, and the size of this gap remained approximately the same from first through fourth grade. In effect, both groups appeared to progress similarly with time, but the children with hearing loss started off delayed and stayed delayed. These data inform our knowledge about long-term trajectories in speech recognition in noise for children, as we do not see evidence of convergence or divergence between groups.
These data also have important clinical implications because they highlight the need to continue providing support for children with all degrees of hearing loss in the general education setting as they transition from elementary grades into secondary grades. This support may take the form of resource support with a speech-language pathologist or teacher of the deaf/hard of hearing, classroom audio distribution systems, personal remote microphone systems, and/or preferential seating in the classroom.

Individual Differences in Growth Trajectories for Children Who Are Hard of Hearing

Our second aim was to examine the factors that support growth for speech recognition in noise for CHH. Previous studies have examined age at service delivery (Sininger et al., 2010), aided audibility (Davidson and Skinner, 2006; Scollie, 2008; Stiles et al., 2012), and language (Blamey et al., 2001; Nittrouer et al., 2013) as predictive factors, but only a few have looked at the combination of auditory access, cognition, and language (McCreery et al., 2015; Klein et al., 2017; Ching et al., 2018). The findings from these previous studies have been mixed. Ching et al. found that non-verbal IQ and global language skills predicted speech recognition in noise skills for CHH, but auditory access (measured with aided SII, after controlling for unaided hearing levels) did not contribute significant variance. Klein et al. found an effect of vocabulary size, but not working memory (measured with a phonological short-term memory task) or auditory access (measured with aided SII and HA use as separate variables). McCreery et al. (2015) showed significant associations between all three factors (vocabulary size, aided SII, and phonological working memory) and word recognition in noise.

Taken together, the results of the current study may be viewed as partial support for the predictions of the ELU model. Children with stronger language skills were better able to recognize degraded speech, and children with poorer language skills had more difficulty with speech recognition in noise. Our longitudinal results indicate not only that better vocabulary skills support the ability to perceive a degraded message, but also that the effect of vocabulary size is stable across time. As discussed in Ching et al. (2018), these findings point toward the critical importance of language development as a focus of intervention for children with hearing loss. For some CHH who demonstrate extreme difficulty with listening in noise, this intervention may need to continue into the school-age years, a time period when the intervention needs of CHH are sometimes overlooked (Antia et al., 2009). We also acknowledge that reduced auditory access in early childhood may lead to poorer speech recognition in noise skills, which in turn makes the word learning process more difficult for children with hearing loss (Walker and McGregor, 2013; Blaiser et al., 2015). We are unable to determine the direction of the relationship between vocabulary size and speech recognition in noise with our current analysis approach, but future studies could employ cross-lagged analysis models or mediation analysis to infer directionality.

In contrast to the effect of vocabulary, we did not find an impact of working memory on speech recognition. The lack of an association is consistent with Magimairaj et al. (2018) and inconsistent with McCreery et al. (2015, 2017). Magimairaj and colleagues used the same clinical outcome measure as the current study, the BKB-SIN. McCreery et al. (2017) used sentences that were either syntactically correct but semantically anomalous or lacking both syntactic structure and semantic meaning. Thus, the stimuli in McCreery et al. may have required children to rely on memory skills to recall the words, because they could not use linguistic bootstrapping. The BKB-SIN sentences imposed less of a memory load because children could use linguistic skills to remember each sentence, reducing the need to rely on working memory to repeat target words even at high levels of noise. Another possibility is that the shared variance between the vocabulary and working memory composite measures resulted in only vocabulary accounting for unique variance in speech recognition in noise. A larger sample might have revealed unique effects of both variables.

If future studies continue to support a stronger effect of language skills compared to working memory on speech recognition in children, these findings may point toward a need to modify the predictions of the ELU model. The ELU model emphasizes working memory skills as a compensatory mechanism in complex listening situations, with less focus on language skills. Because children show more variability in vocabulary breadth and depth than adults, language ability may take on a more important role in understanding distorted or masked speech, relative to working memory. Additional research is needed to test the applicability of the ELU model to the pediatric population.

In addition to cognitive and linguistic measures, we looked at how auditory access impacts individual differences in speech recognition in noise for CHH. The effects of auditory access have been inconsistent across studies (Blamey et al., 2001; Sininger et al., 2010; McCreery et al., 2015; Klein et al., 2017; Ching et al., 2018). Part of this inconsistency is due to different approaches in quantifying how much access CHH have to speech. Our measure of auditory access represents a novel approach to quantifying the HA experience of CHH. Here we developed a metric, HA dosage, that considers specific effects of amplification by weighting the amount of time children wore amplification throughout the day with aided and unaided hearing levels. The measurement of HA dosage is an improvement on previous attempts to look at auditory access in CHH because it combines sources of variability related to amplification (aided SII and HA use). It also accounts for the differential impact of HA use time based on unaided SII. When we averaged HA dosage across visits for participants, it was a significant predictor of growth rates. Like vocabulary knowledge, as HA dosage increases, CHH show better speech recognition in noise, but the patterns of change do not vary in relation to levels of HA dosage. These results highlight the need for interventions that include well-fitted HAs and consistent HA use, even in cases of mild or moderate hearing loss. While CHH with more residual hearing may perform well in quiet with or without amplification, most listening and learning situations occur in suboptimal or adverse conditions (Shield and Dockrell, 2008; Mattys et al., 2012; Ambrose et al., 2014). Increased HA dosage appears to offer some protection against the difficulties of listening in noise for these children.
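The paragraph describes HA dosage as daily HA use weighted by aided and unaided hearing levels, and the footnote indicates that a full 24 h day was treated as the window of possible input. The exact formula is defined in the Methods rather than in this section, so the sketch below assumes one plausible form: hours wearing the HA are weighted by the aided SII, and the remaining hours of the day by the unaided SII. The function name and the example SII values are hypothetical. Under this assumed form, each extra hour of use adds (aided SII − unaided SII), which is one way a metric can capture the differential impact of HA use time based on unaided hearing.

```python
HOURS_PER_DAY = 24.0  # full day of possible input, as described in the footnote


def ha_dosage(hours_of_use, aided_sii, unaided_sii):
    """HYPOTHETICAL HA dosage: audibility-weighted hours of access per day.

    ASSUMPTION (not confirmed by this section): hours wearing the HA count at
    the aided SII, and the remaining hours of the 24 h day at the unaided SII.
    """
    if not 0.0 <= hours_of_use <= HOURS_PER_DAY:
        raise ValueError("hours_of_use must lie within a 24 h day")
    for sii in (aided_sii, unaided_sii):
        if not 0.0 <= sii <= 1.0:
            raise ValueError("SII values must lie in [0, 1]")
    return aided_sii * hours_of_use + unaided_sii * (HOURS_PER_DAY - hours_of_use)


# Two extra hours of use help more when unaided audibility is low.
gain_low_unaided = ha_dosage(12, 0.8, 0.2) - ha_dosage(10, 0.8, 0.2)   # about 1.2
gain_high_unaided = ha_dosage(12, 0.8, 0.7) - ha_dosage(10, 0.8, 0.7)  # about 0.2
print(gain_low_unaided > gain_high_unaided)  # True
```

In this sketch, a child with little residual hearing gains much more from additional hours of HA use than a child whose unaided audibility is already close to aided audibility.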

We also examined whether change in HA dosage over time influenced growth rates and did not find a significant effect. CHH show variation in the consistency of auditory access during childhood (McCreery et al., 2013; Walker et al., 2013). By the school-age years, these fluctuations in auditory access do not appear to have an impact on longitudinal growth trajectories in speech recognition in noise. In addition to the lack of a significant effect for change in HA dosage, we did not find an association between speech recognition in noise and either maternal education level or age at confirmation of hearing loss. Both variables have been shown to have a positive effect on auditory outcomes in children with hearing loss in previous studies (Sininger et al., 2010; McCreery et al., 2015), but the children in these earlier studies were younger than the children in the current study. Other studies with this same cohort of children indicate that CHH who receive audiologic services later demonstrate initial delays in language outcomes, but show a pattern of catching up to CHH who received services earlier by age 6 years (Tomblin et al., 2015). Thus, timing of service provision may initially affect language and listening outcomes, but the impact of age at confirmation (which is highly correlated with age at HA fitting) gradually weakens over time as other factors (vocabulary skills, aided audibility, HA use) support speech recognition in noise and ameliorate the negative effects of later confirmation of hearing loss and lower maternal education levels.

Limitations

A strength of this study is that it is among the first to document longitudinal growth trajectories for CNH and CHH on measures of speech recognition in noise. There are also several limitations that should be discussed. Due to the study design, children were tested at different time points rather than all children participating at the same time points. This issue of inconsistent time points is a common obstacle in longitudinal research studies, as participants often start late, drop out, or skip test visits (Krueger and Tian, 2004). The use of linear mixed models for the statistical analysis accommodates data where individuals are measured at different time points (Oleson et al., 2019; Walker et al., 2019). The linear mixed model creates individual-specific trends through weighted averages of the individual observed data and the population average data, so that all scores can be used in the analysis even if they are at differing time points.
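The "weighted average of individual and population data" idea can be illustrated in its simplest case, a random-intercept model with known variance components. This is a generic sketch of mixed-model shrinkage, not the model fitted in this study (which used a residual correlation structure); the function name, variance values, and scores are hypothetical. The weight on a child's own data grows with the number of visits, so children who missed visits still contribute, but their estimates lean more on the population average.

```python
def shrunken_mean(child_scores, population_mean, between_var, within_var):
    """Random-intercept shrinkage (BLUP-style) estimate of one child's mean score.

    The child's own mean gets weight w = tau^2 / (tau^2 + sigma^2 / n), where
    tau^2 = between_var, sigma^2 = within_var, and n = number of visits, so
    children with fewer visits are pulled further toward the population mean.
    Variance components are treated as known here for simplicity.
    """
    n = len(child_scores)
    if n == 0:
        return population_mean  # no visits: the population average is the estimate
    child_mean = sum(child_scores) / n
    w = between_var / (between_var + within_var / n)
    return w * child_mean + (1 - w) * population_mean


# Hypothetical values (dB SNR-50), for illustration only.
one_visit = shrunken_mean([8.0], population_mean=4.0, between_var=4.0, within_var=4.0)
four_visits = shrunken_mean([8.0] * 4, population_mean=4.0, between_var=4.0, within_var=4.0)
print(one_visit, round(four_visits, 2))  # 6.0 7.2
```

Both hypothetical children have the same observed mean, but the child seen four times keeps more of that mean (weight 0.8) than the child seen once (weight 0.5).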

Another limitation is that testing took place over a fairly limited time span (up to four visits). Further, we only tested participants up to 11 years of age, which is still a period of early adolescence. While the current data trends suggest that CNH and CHH show parallel rates of development in speech recognition in noise, it is possible that we may see differences in growth trajectories past 11 years (Corbin et al., 2016), particularly if CNH reach adult-like performance but CHH continue to improve. Future studies would need to include longitudinal data at older ages to determine if CHH eventually catch up to their hearing peers or if deficits persist with age.

We also note that the inclusionary and exclusionary criteria for this study resulted in a homogeneous cohort of children from English-speaking backgrounds with no additional motor or cognitive deficits. Thus, the current results may not generalize to linguistically diverse populations or children with hearing loss who have additional disabilities. We excluded children with profound hearing loss because we were interested in the impact of hearing loss in the mild to severe range. It is possible that we would have seen a stronger impact of age at confirmation of hearing loss if children who are deaf had been included in the sample. We did not control for the type of hearing loss because our goal was to recruit as many children with permanent hearing loss as possible; however, the majority of children presented with sensorineural hearing loss. We acknowledge that the consequences of sensorineural and conductive hearing loss can impact speech recognition in noise differently, but our limited number of children with conductive hearing loss prevents us from analyzing these children as a separate group.

A final limitation is that we restricted our speech in noise measure to the BKB-SIN test, which uses a four-talker babble as the competing signal. Other studies have shown that informational masking increases as the number of competing talkers decreases (Freyman et al., 2004), that CNH demonstrate different developmental trajectories for two-talker maskers compared to more energetic masking signals (Corbin et al., 2016), and that CHH have more difficulty with two-talker maskers than CNH (Leibold et al., 2013). We did not evaluate the effects of age, hearing status, and masker type in the present study, but this would be an important future direction in order to fully understand children’s susceptibility to background noise.

Conclusions

The current study established longitudinal growth trajectories of speech recognition in noise for school-age CHH and CNH. As a group, CHH demonstrated deficits in speech recognition in noise. These deficits do not appear to converge toward or diverge from CNH, as the growth rates were parallel for the CHH and CNH. These findings also helped us identify the underlying mechanisms that drive growth in speech recognition, with stronger vocabulary and higher HA dosage supporting speech recognition in degraded situations.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Author Contributions

EW and RM conceived of the presented idea. EW took the lead in writing the manuscript. CS and RM contributed to the interpretation of the results and the final version of the manuscript. JO performed the analytic and statistical calculations and data visualization. All authors provided critical feedback and helped shape the research, analysis, and manuscript.

Funding

This work was supported by National Institutes of Health Grants NIH/NIDCD 5R01DC009560 (co-principal investigators, J. Bruce Tomblin, University of Iowa and Mary Pat Moeller, Boys Town National Research Hospital), 5R01DC013591 (principal investigator, Ryan W. McCreery, Boys Town National Research Hospital), and 3R21DC015832 (principal investigator, Elizabeth A. Walker, University of Iowa). The content of this project is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Deafness and Other Communication Disorders or the National Institutes of Health.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The following people provided support, assistance, and feedback at various points in the project: Wendy Fick, Meredith Spratford, Marlea O’Brien, Mary Pat Moeller, J. Bruce Tomblin, Kelsey Klein, and Kristi Hendrickson. Special thanks go to the families and children who participated in the research and to the examiners at the University of Iowa, Boys Town National Research Hospital, and the University of North Carolina-Chapel Hill.

Footnotes

1. The 24 h would include periods of time when the child is sleeping and presumably receiving little to no input. We did not ask parents how many hours their children slept on average. In the absence of those data, it seemed appropriate to calculate a full 24 h of possible input, rather than try to estimate individual sleep patterns for children.

References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Trans. Autom. Control 19, 716–723. doi: 10.1109/TAC.1974.1100705

Akeroyd, M. A. (2008). Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int. J. Audiol. 47(Suppl. 2), S53–S71. doi: 10.1080/14992020802301142

Alloway, T. (2007). Automated working memory assessment (AWMA) [computer software]. London, UK: Harcourt Assessment.

Ambrose, S. E., VanDam, M., and Moeller, M. P. (2014). Linguistic input, electronic media, and communication outcomes of toddlers with hearing loss. Ear Hear. 35, 139–147. doi: 10.1097/AUD.0b013e3182a76768

ANSI (1997). Methods for calculation of the speech intelligibility index. Technical report S3.5-1997. New York, NY: American National Standards Institute.

Antia, S. D., Jones, P. B., Reed, S., and Kreimeyer, K. H. (2009). Academic status and progress of deaf and hard-of-hearing students in general education classrooms. J. Deaf. Stud. Deaf. Educ. 14, 293–311. doi: 10.1093/deafed/enp009

Bench, J., Kowal, Å., and Bamford, J. (1979). The BKB (Bamford-Kowal-Bench) sentence lists for partially-hearing children. Br. J. Audiol. 13, 108–112.

Blaiser, K. M., Nelson, P. B., and Kohnert, K. (2015). Effect of repeated exposures on word learning in quiet and noise. Commun. Disord. Q. 37, 25–35. doi: 10.1177/1525740114554483

Blamey, P. J., Sarant, J. Z., Paatsch, L. E., Barry, J. G., Bow, C. P., Wales, R. J., et al. (2001). Relationships among speech perception, production, language, hearing loss, and age in children with impaired hearing. J. Speech Lang. Hear. Res. 44, 264–285. doi: 10.1044/1092-4388(2001/022)

Caldwell, A., and Nittrouer, S. (2013). Speech perception in noise by children with cochlear implants. J. Speech Lang. Hear. Res. 56, 13–30. doi: 10.1044/1092-4388(2012/11-0338)

Ching, T. Y., Day, J., Van Buynder, P., Hou, S., Zhang, V., Seeto, M., et al. (2014). Language and speech perception of young children with bimodal fitting or bilateral cochlear implants. Cochlear Implants Int. 15(Suppl. 1), S43–S46. doi: 10.1179/1467010014Z.000000000168

Ching, T. Y., Dillon, H., Marnane, V., Hou, S., Day, J., Seeto, M., et al. (2013). Outcomes of early-and late-identified children at 3 years of age: findings from a prospective population-based study. Ear Hear. 34, 535–552. doi: 10.1097/AUD.0b013e3182857718

Ching, T. Y., Zhang, V. W., Flynn, C., Burns, L., Button, L., Hou, S., et al. (2018). Factors influencing speech perception in noise for 5-year-old children using hearing aids or cochlear implants. Int. J. Audiol. 57(Suppl. 2), S70–S80. doi: 10.1080/14992027.2017.1346307

Corbin, N. E., Bonino, A. Y., Buss, E., and Leibold, L. J. (2016). Development of open-set word recognition in children: speech-shaped noise and two-talker speech maskers. Ear Hear. 37, 55–63. doi: 10.1097/AUD.0000000000000201

Crandell, C. C. (1993). Speech recognition in noise by children with minimal degrees of sensorineural hearing loss. Ear Hear. 14, 210–216. doi: 10.1097/00003446-199306000-00008

Davidson, L. S., Geers, A. E., Blamey, P. J., Tobey, E., and Brenner, C. (2011). Factors contributing to speech perception scores in long-term pediatric CI users. Ear Hear. 32, 19S–26S. doi: 10.1097/AUD.0b013e3181ffdb8b

Davidson, L. S., and Skinner, M. W. (2006). Audibility and speech perception of children using wide dynamic range compression hearing aids. Am. J. Audiol. 15, 141–153. doi: 10.1044/1059-0889(2006/018)

Dubno, J. R. (2015). Speech recognition across the life span: longitudinal changes from middle-age to older adults. Am. J. Audiol. 24, 84–87. doi: 10.1044/2015_AJA-14-0052

Dunn, D., and Dunn, L. (2007). Peabody picture vocabulary test. 4th edn. Minneapolis, MN: NCS Pearson, Inc.

Dunn, C. C., Walker, E. A., Oleson, J., Kenworthy, M., Van Voorst, T., Tomblin, J. B., et al. (2014). Longitudinal speech perception and language performance in pediatric cochlear implant users: the effect of age at implantation. Ear Hear. 35, 148–160. doi: 10.1097/AUD.0b013e3182a4a8f0

Easwar, V., Sanfilippo, J., Papsin, B., and Gordon, K. J. (2018). Impact of consistency in daily device use on speech perception abilities in children with cochlear implants: datalogging evidence. J. Am. Acad. Audiol. 29, 835–846. doi: 10.3766/jaaa.17051

Eisenberg, L. S., Shannon, R. V., Schaefer Martinez, A., Wygonski, J., and Boothroyd, A. (2000). Speech recognition with reduced spectral cues as a function of age. J. Acoust. Soc. Am. 107, 2704–2710. doi: 10.1121/1.428656

Freyman, R. L., Balakrishnan, U., and Helfer, K. S. (2004). Effect of number of masking talkers and auditory priming on informational masking in speech recognition. J. Acoust. Soc. Am. 115, 2246–2256. doi: 10.1121/1.1689343

Gustafson, S. J., Davis, H., Hornsby, B. W., and Bess, F. H. (2015). Factors influencing hearing aid use in the classroom: a pilot study. Am. J. Audiol. 24, 563–568. doi: 10.1044/2015_AJA-15-0024

Hillock-Dunn, A., Taylor, C., Buss, E., and Leibold, L. J. (2014). Assessing speech perception in children with hearing loss: what conventional clinical tools may miss. Ear Hear. 36, 57–60. doi: 10.1097/AUD.0000000000000007

Holte, L., Walker, E., Oleson, J., Spratford, M., Moeller, M. P., Roush, P., et al. (2012). Factors influencing follow-up to newborn hearing screening for infants who are hard of hearing. Am. J. Audiol. 21, 163–174. doi: 10.1044/1059-0889(2012/12-0016)

Kirk, K. I., Prusick, L., French, B., Gotch, C., Eisenberg, L. S., and Young, N. (2012). Assessing spoken word recognition in children who are deaf or hard of hearing: a translational approach. J. Am. Acad. Audiol. 23, 464–475. doi: 10.3766/jaaa.23.6.8

Klein, K. E., Spratford, M., Redfern, A., and Walker, E. A. (2019). Effects of grade and school services on children’s responsibility for hearing aid care. Am. J. Audiol. 28, 673–685. doi: 10.1044/2019_AJA-19-0005

Klein, K. E., Walker, E. A., Kirby, B., and McCreery, R. W. (2017). Vocabulary facilitates speech perception in children with hearing aids. J. Speech Lang. Hear. Res. 60, 2281–2296. doi: 10.1044/2017_JSLHR-H-16-0086

Klem, M., Melby-Lervåg, M., Hagtvet, B., Lyster, S. A. H., Gustafsson, J. E., and Hulme, C. (2015). Sentence repetition is a measure of children’s language skills rather than working memory limitations. Dev. Sci. 18, 146–154. doi: 10.1111/desc.12202

Knecht, H. A., Nelson, P. B., Whitelaw, G. M., and Feth, L. L. (2002). Background noise levels and reverberation times in unoccupied classrooms. Am. J. Audiol. 11, 65–71. doi: 10.1044/1059-0889(2002/009)

Krueger, C., and Tian, L. (2004). A comparison of the general linear mixed model and repeated measures ANOVA using a dataset with multiple missing data points. Biol. Res. Nurs. 6, 151–157. doi: 10.1177/1099800404267682

Lalonde, K., and Holt, R. F. (2014). Cognitive and linguistic sources of variance in 2-year-olds’ speech-sound discrimination: a preliminary investigation. J. Speech Lang. Hear. Res. 57, 308–326. doi: 10.1044/1092-4388(2013/12-0227)

Leibold, L. J., Hillock-Dunn, A., Duncan, N., Roush, P. A., and Buss, E. (2013). Influence of hearing loss on children’s identification of spondee words in a speech-shaped noise or a two-talker masker. Ear Hear. 34, 575–584. doi: 10.1097/AUD.0b013e3182857742

Magimairaj, B. M., Nagaraj, N. K., and Benafield, N. J. (2018). Children’s speech perception in noise: evidence for dissociation from language and working memory. J. Speech Lang. Hear. Res. 61, 1294–1305. doi: 10.1044/2018_JSLHR-H-17-0312

Mattys, S. L., Davis, M. H., Bradlow, A. R., and Scott, S. K. (2012). Speech recognition in adverse conditions: a review. Lang. Cogn. Process. 27, 953–978. doi: 10.1080/01690965.2012.705006

McCreery, R. W., Bentler, R. A., and Roush, P. A. (2013). Characteristics of hearing aid fittings in infants and young children. Ear Hear. 34, 701–710. doi: 10.1097/AUD.0b013e31828f1033

McCreery, R. W., Spratford, M., Kirby, B., and Brennan, M. (2017). Individual differences in language and working memory affect children’s speech recognition in noise. Int. J. Audiol. 56, 306–315. doi: 10.1080/14992027.2016.1266703

McCreery, R. W., Walker, E. A., Spratford, M., Oleson, J., Bentler, R., Holte, L., et al. (2015). Speech recognition and parent ratings from auditory development questionnaires in children who are hard of hearing. Ear Hear. 36, 60S–75S. doi: 10.1097/AUD.0000000000000213

Mehra, S., Eavey, R. D., and Keamy, D. G. Jr. (2009). The epidemiology of hearing impairment in the United States: newborns, children, and adolescents. Otolaryngol. Head Neck Surg. 140, 461–472. doi: 10.1016/j.otohns.2008.12.022

Moeller, M. P., and Tomblin, J. B. (2015). An introduction to the outcomes of children with hearing loss study. Ear Hear. 36, 4S–13S. doi: 10.1097/AUD.0000000000000210

Nittrouer, S., and Boothroyd, A. (1990). Context effects in phoneme and word recognition by young children and older adults. J. Acoust. Soc. Am. 87, 2705–2715. doi: 10.1121/1.399061

Nittrouer, S., Caldwell-Tarr, A., Tarr, E., Lowenstein, J. H., Rice, C., and Moberly, A. C. (2013). Improving speech-in-noise recognition for children with hearing loss: potential effects of language abilities, binaural summation, and head shadow. Int. J. Audiol. 52, 513–525. doi: 10.3109/14992027.2013.792957

Oleson, J. J., Brown, G. D., and McCreery, R. (2019). The evolution of statistical methods in speech, language, and hearing sciences. J. Speech Lang. Hear. Res. 62, 498–506. doi: 10.1044/2018_JSLHR-H-ASTM-18-0378

Page, T. A., Harrison, M., Moeller, M. P., Oleson, J., Arenas, R. M., and Spratford, M. (2018). Service provision for children who are hard of hearing at preschool and elementary school ages. Lang. Speech Hear. Serv. Sch. 49, 965–981. doi: 10.1044/2018_LSHSS-17-0145

Robinson, E. J., Davidson, L. S., Uchanski, R. M., Brenner, C. M., and Geers, A. E. (2012). A longitudinal study of speech perception skills and device characteristics of adolescent cochlear implant users. J. Am. Acad. Audiol. 23, 341–349. doi: 10.3766/jaaa.23.5.5

Rönnberg, J., Lunner, T., Zekveld, A., Sörqvist, P., Danielsson, H., Lyxell, B., et al. (2013). The ease of language understanding (ELU) model: theoretical, empirical, and clinical advances. Front. Syst. Neurosci. 7, 7–24. doi: 10.3389/fnsys.2013.00031

Rönnberg, J., Rudner, M., Foo, C., and Lunner, T. (2008). Cognition counts: a working memory system for ease of language understanding (ELU). Int. J. Audiol. 47(Suppl. 2), S99–S105. doi: 10.1080/14992020802301167

Scollie, S. D. J. (2008). Children’s speech recognition scores: the speech intelligibility index and proficiency factors for age and hearing level. Ear Hear. 29, 543–556. doi: 10.1097/AUD.0b013e3181734a02

Shield, B. M., and Dockrell, J. E. (2008). The effects of environmental and classroom noise on the academic attainments of primary school children. J. Acoust. Soc. Am. 123, 133–144. doi: 10.1121/1.2812596

Sininger, Y. S., Grimes, A., and Christensen, E. (2010). Auditory development in early amplified children: factors influencing auditory-based communication outcomes in children with hearing loss. Ear Hear. 31, 166–185. doi: 10.1097/AUD.0b013e3181c8e7b6

Stelmachowicz, P. G., Hoover, B. M., Lewis, D. E., Kortekaas, R. W., and Pittman, A. L. (2000). The relation between stimulus context, speech audibility, and perception for normal-hearing and hearing-impaired children. J. Speech Lang. Hear. Res. 43, 902–914. doi: 10.1044/jslhr.4304.902

Stiles, D. J., Bentler, R. A., and McGregor, K. K. (2012). The speech intelligibility index and the pure-tone average as predictors of lexical ability in children fit with hearing aids. J. Speech Lang. Hear. Res. 55, 764–778. doi: 10.1044/1092-4388(2011/10-0264)

Tomblin, J. B., Harrison, M., Ambrose, S. E., Walker, E. A., Oleson, J. J., and Moeller, M. P. (2015). Language outcomes in young children with mild to severe hearing loss. Ear Hear. 36, 76S–91S. doi: 10.1097/AUD.0000000000000219

Tun, P. A., Benichov, J., and Wingfield, A. (2010). Response latencies in auditory sentence comprehension: effects of linguistic versus perceptual challenge. Psychol. Aging 25, 730–735. doi: 10.1037/a0019300

Uhler, K., Yoshinaga-Itano, C., Gabbard, S. A., Rothpletz, A. M., and Jenkins, H. (2011). Longitudinal infant speech perception in young cochlear implant users. J. Am. Acad. Audiol. 22, 129–142. doi: 10.3766/jaaa.22.3.2

Walker, E. A., Kessler, D., Klein, K., Spratford, M., Oleson, J. J., Welhaven, A., et al. (2019). Time-gated word recognition in children: effects of auditory access, age, and semantic context. J. Speech Lang. Hear. Res. 62, 2519–2534. doi: 10.1044/2019_JSLHR-H-18-0407

Walker, E. A., and McGregor, K. K. (2013). Word learning processes in children with cochlear implants. J. Speech Lang. Hear. Res. 56, 375–387. doi: 10.1044/1092-4388(2012/11-0343)

Walker, E. A., Redfern, A., and Oleson, J. J. (2019). Linear mixed-model analysis to examine longitudinal trajectories in vocabulary depth and breadth in children who are hard of hearing. J. Speech Lang. Hear. Res. 62, 525–542. doi: 10.1044/2018_JSLHR-L-ASTM-18-0250

Walker, E. A., Sapp, C., Dallapiazza, M., Spratford, M., McCreery, R., and Oleson, J. J. (accepted). Language and reading outcomes in fourth-grade children with mild hearing loss compared to age-matched hearing peers. Lang. Speech Hear. Serv. Sch.

Walker, E. A., Spratford, M., Moeller, M. P., Oleson, J., Ou, H., Roush, P., et al. (2013). Predictors of hearing aid use time in children with mild-to-severe hearing loss. Lang. Speech Hear. Serv. Sch. 44, 73–88. doi: 10.1044/0161-1461(2012/12-0005)

Warren, S. F., Fey, M. E., and Yoder, P. J. (2007). Differential treatment intensity research: a missing link to creating optimally effective communication interventions. Ment. Retard. Dev. Disabil. Res. Rev. 13, 70–77. doi: 10.1002/mrdd.20139

Wechsler, D., and Hsiao-pin, C. (2011). Wechsler abbreviated scale of intelligence. San Antonio, TX: Pearson.

Wilson, R. H., McArdle, R. A., and Smith, S. L. (2007). An evaluation of the BKB-SIN, HINT, QuickSIN, and WIN materials on listeners with normal hearing and listeners with hearing loss. J. Speech Lang. Hear. Res. 50, 844–856. doi: 10.1044/1092-4388(2007/059)

Woodcock, R. W., McGrew, K. S., Mather, N., and Schrank, F. (2001). Woodcock-Johnson III NU tests of achievement. Rolling Meadows, IL: Riverside Publishing.

Keywords: children, vocabulary, working memory, hearing loss, speech recognition

Citation: Walker EA, Sapp C, Oleson JJ and McCreery RW (2019) Longitudinal Speech Recognition in Noise in Children: Effects of Hearing Status and Vocabulary. Front. Psychol. 10:2421. doi: 10.3389/fpsyg.2019.02421

Edited by: Mary Rudner, Linköping University, Sweden

Reviewed by: Theo Goverts, VU University Medical Center, Netherlands; Kristina Hansson, Lund University, Sweden

Copyright © 2019 Walker, Sapp, Oleson and McCreery. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Elizabeth A. Walker, elizabeth-walker@uiowa.edu