- ¹School of Psychology, Inner Mongolia Normal University, Hohhot, China

- ²College of Psychology, Liaoning Normal University, Dalian, China

- ³Department of Elementary Education, Hebei Normal University, Shijiazhuang, China

Numerous studies have shown that facial expressions influence trait impressions in Western contexts. However, there are cultural differences in the rules for perceiving and recognizing happy expressions of different intensities, and researchers have examined the influence of happy expression intensity on only a few facial traits (warmth, trustworthiness, and competence). Therefore, we examined the effect of different intensities of Chinese happy expressions on the social perception of faces across 11 traits: trustworthiness, responsibility, attractiveness, sociability, confidence, intelligence, aggressiveness, dominance, competence, warmth, and tenacity. Participants viewed a series of photographs of faces showing high-intensity or low-intensity happy expressions and rated each face on the 11 traits using a 7-point Likert scale (1 = “not very ××,” 7 = “very ××”). Compared with low-intensity happy expressions, high-intensity happy expressions received higher ratings of sociability and warmth but lower ratings of dominance, aggressiveness, intelligence, and competence; ratings of trustworthiness, attractiveness, responsibility, confidence, and tenacity did not differ significantly between the two intensities. These results suggest that, compared with a low-intensity happy expression, a high-intensity happy expression enhances the perception of traits related to approachability, reduces the perception of traits related to capability, and has no significant effect on trustworthiness, attractiveness, responsibility, confidence, or tenacity.

Introduction

Cultural wisdom warns us not to judge a book by its cover, which implies that the natural inclination is to judge people by their appearance. Indeed, when meeting strangers for the first time, people infer many of their characteristics from facial information (e.g., facial expressions) within as little as 34 ms (Willis and Todorov, 2006; Todorov et al., 2015). This inference process is called the “social perception of faces” (Oosterhof and Todorov, 2008), and its results can affect people’s decisions, such as mate selection (Olivola et al., 2014; Valentine et al., 2014), trial outcomes (Wilson and Rule, 2015; Jaeger et al., 2020), and election outcomes (Na and Huh, 2016; Wong and Zeng, 2017).

Cultural Similarity and Difference in the Social Perception of Faces

Researchers have recently begun to model the structure underlying the social perception of faces. Using a trait assessment task, Oosterhof and Todorov (2008) identified two evaluative dimensions: (1) valence, related to approach-avoidance, and (2) dominance, related to physical strength-weakness. Based on principal component analysis, trustworthiness ratings can serve as representative of the valence dimension, which refers to the target’s behavioral intention to benefit or harm others, whereas the dominance dimension refers to the target’s ability to harm others (Oosterhof and Todorov, 2008). Wu et al. (2020) recruited local Chinese participants and, using the same task, identified an approach-avoidance dimension that held cross-culturally, as well as a broader “capability” dimension that included dominance and tenacity and related to both physical and intellectual strength. Judgments of capability were deemed crucial for individuals’ survival and for obtaining resources and high social status, a concern that might be more typical of collectivist societies such as China.

Additionally, the top-down stereotype content model holds that perceived warmth and competence are the two universal dimensions of human social cognition at both the individual and group levels. The warmth dimension includes traits related to perceived intent, which aligns with the approach-avoidance dimension and its trustworthiness component (Fiske et al., 2007). However, some researchers have proposed the “morality differentiation hypothesis,” which holds that trustworthiness and warmth are separate dimensions. These researchers define trustworthiness as a morality-related trait reflecting behavioral intention, on the basis of which others are categorized as friends or enemies. Conversely, warmth, considered unrelated to morality, is defined as an individual’s proficiency in recruiting support for their intentions (Goodwin et al., 2014; Landy et al., 2016; Oliveira et al., 2020). Other researchers do not argue as strongly for this distinction and treat trustworthiness and sociability as subcomponents of the warmth dimension; even so, they view morality-related trustworthiness as distinct from, and more fundamental than, sociability. Sociability implies being benevolent to people in ways that facilitate affectionate relations with them, whereas trustworthiness refers to being benevolent in ways that facilitate correct and principled relations with them (Brambilla et al., 2011, 2012; Brambilla and Leach, 2014). Because the stereotype content model (a two-dimensional theory) combines moral and amoral traits within a single dimension, it does not predict that the moral relevance of traits (as opposed to their warmth relevance) has any special importance for person perception, and omitting this information from two-dimensional models may therefore reduce their predictive power (Goodwin et al., 2014).

Like the warmth-competence stereotype content model, the approach-avoidance dimension in the social perception of faces combines moral and amoral traits (e.g., trustworthiness and sociability) within a single dimension (Oosterhof and Todorov, 2008; Todorov et al., 2015; Todorov and Oh, 2021). This might likewise obscure the distinct contributions of the moral relevance and warmth relevance of traits and thus fail to reflect their special importance for person perception. Therefore, in the present study, we used the trait assessment task to obtain ratings of multiple individual traits rather than dimensions and explored the effects of happy expression intensity on the social perception of Chinese faces, which should provide richer information about how Chinese faces are perceived. This approach also offers a novel way to test the “morality differentiation hypothesis” in research on first impressions of unfamiliar faces in the Chinese context.

The Effect of Happy Expressions on the Social Perception of Faces

Facial cues in the social perception of faces include immutable cues (e.g., identity, gender, and race) and variable cues (e.g., expressions) (Haxby et al., 2000). In contrast to immutable cues, variable facial expressions provide critical clues as social perceptions of faces are formed (Sutherland et al., 2017). In daily life, happy and neutral expressions are the expressions most frequently seen on people’s faces. Compared with neutral expressions, happy expressions increase a face’s value in interpersonal communication, producing a halo effect: the tendency for a person’s positive traits to “overflow” into other trait areas in others’ perceptions of that person (Thompson and Meltzer, 1964). Smiling faces have been rated as more trustworthy, attractive, and popular (Hehman et al., 2019; Li et al., 2020) and less aggressive (Oosterhof and Todorov, 2008). Previous research indicates that facial expressions influence perceptions on single specific dimensions, namely trustworthiness (Caulfield et al., 2016; Sandy et al., 2017), dominance (Kim et al., 2016; Ueda and Yoshikawa, 2018), warmth (Wang et al., 2017), and capability (Beall, 2007; Gao et al., 2016). However, few studies have directly evaluated the effects of expressions on multiple traits. Building on the research of Wu et al. (2020), Li et al. (2020) were the first to directly compare the effects of Chinese happy expressions on multiple traits. Their results indicated that happy expressions affected ratings of trustworthiness and warmth differently, which supported the “morality differentiation hypothesis.” These findings indicate that it is necessary to explore the effects of happy expressions on multiple traits rather than on single dimensions of the social perception of faces.

In Western cultural contexts, mounting evidence indicates that happy expressions of different intensities convey different social information. Researchers believe that the intensity of an expression corresponds to the intensity of the underlying behavioral tendency (Ekman et al., 1980). Studies have reported that, compared with neutral expressions, happy expressions at different intensities (25 and 50%) increase trustworthiness ratings among children older than 10 years and that this effect increases in proportion to emotional intensity (Hess et al., 2000; Caulfield et al., 2014, 2016). Furthermore, compared with a low-intensity smile, a high-intensity, teeth-showing smile signals a friendlier and more approachable behavioral tendency, enhancing the individual’s affinity. People tend to display a wider smile when they are eager to build cooperative relationships with others (Mehu et al., 2008; Bell et al., 2017) or are seeking harmonious interpersonal relationships (Hennig-Thurau et al., 2006). Rhesus monkeys also display a toothy smile in subordinate contexts as a defensive gesture showing friendly intentions (de Waal and Luttrell, 1985), whereas the bared-teeth display of chimpanzees communicates benign, non-aggressive intent in affiliative contexts (Parr and Waller, 2006). Thus, positive traits associated with sociality (e.g., trustworthiness, submissiveness, and warmth) have been positively correlated with the intensity of happy expressions. However, broad grins are also considered to signal a lack of capability. For example, professional fighters who smile intensely in pre-match photos are perceived as less aggressive, less dominant, and more likely to lose than fighters who smile less intensely (Kraus and Chen, 2013).

Although many studies have examined how the intensity of happy expressions influences the social perception of faces in Western cultures, there are several reasons to study this influence in Eastern cultures. First, cultures differ in the frequency of and display rules for happy expressions. For example, photos of Western and Eastern leaders taken before and after elections showed that, regardless of the election results, Western leaders displayed high-intensity smiles, whereas Eastern leaders displayed calm, subtle smiles (Tsai et al., 2016; Fang et al., 2019). Furthermore, when expressing happiness in text, Westerners often use a colon and a parenthesis, as in :-) or :), which minimize the eyes and emphasize the mouth, whereas Easterners often use emoticons such as (^.^) or (^_^), in which the mouth is simplified but the eyes are expressive (Liu et al., 2010). Second, cultures differ in how happy expressions are interpreted. For example, Chinese people believe that a smiling face signals emotional instability, whereas Americans do not (Walker et al., 2011). Third, although the “approachability” dimension shows cross-cultural consistency (Sutherland et al., 2018; Wu et al., 2020; Jones et al., 2021), there are contradictory views on how the meanings of trustworthiness and warmth within this dimension should be interpreted. In Western cultural contexts, some researchers believe that the meanings of these two traits are similar (Fiske et al., 2007; Wang et al., 2019), whereas others support the “morality differentiation hypothesis” (Goodwin et al., 2014; Landy et al., 2016). On the latter view, trustworthiness focuses on morality, whereas warmth focuses on social interaction (Wang and Cui, 2003), a distinction supported by comparisons of trait ratings for happy and neutral expressions (Li et al., 2020).
This suggests that happy expressions might offer a way to dissociate the two traits, but previous research has not explored this possibility in depth. Additionally, the content of the “capability” dimension in the model of the social perception of Chinese faces differs from that of the “dominance” dimension in the valence-dominance model. Fourth, previous studies used compositing software that blended images of neutral and happy expressions in different proportions to create stimuli at two physical intensities (25 and 50%; Caulfield et al., 2014, 2016). For example, a 25% happy expression was a 75/25 blend of the neutral and happy expressions. The researchers then used these materials to investigate how the intensity of happy expressions affected the social perception of faces. However, the physical intensity of a happy expression did not strictly correspond to its perceived emotional intensity (Hess et al., 2000; Caulfield et al., 2016). Moreover, composite images likely differ from the natural faces that participants encounter in daily life and therefore might not match participants’ mental representations (Hu et al., 2018). It is thus necessary to compare the influence of different intensities of happy expressions on the social perception of faces in the Chinese context using more natural photographs.
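The proportional blending used in the earlier morph-based studies can be illustrated with a simple weighted average. The sketch below is a hypothetical, simplified pixel-level cross-dissolve on grayscale values: `blend_expressions` and the toy pixel lists are illustrative assumptions, and real morphing software typically also warps facial geometry, which this sketch omits.

```python
def blend_expressions(neutral, happy, happy_weight):
    """Hypothetical pixel-wise cross-dissolve of two aligned grayscale images.

    happy_weight = 0.25 yields a 75/25 neutral/happy mixture, i.e. a "25%"
    happy expression in the sense used by morph-based studies. Shape warping
    (which real morphing tools also perform) is deliberately omitted.
    """
    if len(neutral) != len(happy):
        raise ValueError("images must have the same number of pixels")
    if not 0.0 <= happy_weight <= 1.0:
        raise ValueError("happy_weight must lie in [0, 1]")
    neutral_weight = 1.0 - happy_weight
    return [neutral_weight * n + happy_weight * h for n, h in zip(neutral, happy)]


# Toy 2-pixel "images" (grayscale intensities).
neutral_face = [0, 100]
happy_face = [100, 200]
print(blend_expressions(neutral_face, happy_face, 0.25))  # [25.0, 125.0]
print(blend_expressions(neutral_face, happy_face, 0.50))  # [50.0, 150.0]
```

Because the mixture weight is defined on the physical image, equal steps in `happy_weight` need not produce equal steps in perceived intensity, which is the limitation noted above.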

The Present Study

In the present study, we investigated the effect of different intensities of happy expressions on the social perception of Chinese faces, which has not previously been addressed. We selected a series of high- and low-intensity happy face images, and participants rated these images on trustworthiness, responsibility, attractiveness, sociability, confidence, intelligence, aggressiveness, dominance, competence, warmth, and tenacity. These traits were taken from Wu et al. (2020); we chose 11 of their 15 traits because these 11 had high internal consistency and overlapped with the traits used by Fiske et al. (2007) and Oosterhof and Todorov (2008), so they could serve as representative traits in studies of the social perception of faces. The remaining four traits (masculinity, femininity, emotional stability, and likeability) were not included. Masculinity and femininity were excluded because Wu et al. (2020) performed their principal component analysis without the femininity and masculinity ratings. Emotional stability was excluded because of its low internal consistency (Li et al., 2020), and likeability was excluded to avoid overlap with sociability (Li et al., 2020; Brambilla et al., 2021). Despite the cross-cultural consensus on the “approachability” dimension, we hypothesized, based on the “morality differentiation hypothesis,” that the intensity of happy expressions would have different effects on trustworthiness-related traits and warmth-related traits (Hypothesis 1). In addition, because Chinese leaders displayed calm, subtle smiles during political elections, we hypothesized that low-intensity happy expressions would be rated as more capable than high-intensity happy expressions (Hypothesis 2).

Materials and Methods

Participants

A total of 32 Chinese college students aged 18–25 years (16 males and 16 females; mean age = 22.06 ± 2.17 years) from Liaoning Normal University participated in the face photo trait-rating experiment. All participants reported normal or corrected-to-normal visual acuity and normal color vision, reported no current or previous neurological or psychiatric disorders, and were not currently using psychotropic medication. All participants were right-handed according to a self-report questionnaire. According to Brysbaert (2019), a sample of N = 27 is considered adequate for a 2 × 2 repeated-measures ANOVA when the focus is on the main effect of only one variable. Because the present study focused only on the main effect of happy expression intensity, a post hoc power analysis was performed using G*Power. The analysis indicated that the sample (N = 32) was sufficient to detect an effect size of f = 0.40 (a large effect by Cohen’s conventions for f) with a power of 1 − β = 0.80 (Brysbaert, 2019). All participants provided written informed consent and were paid CNY 40 for participating in the 1-h experiment. The study was approved in advance by the Academic Ethics Committee of Liaoning Normal University.
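The power analysis above can be approximated by hand for a single two-level within-subject factor. The sketch below is not the authors’ G*Power computation: it assumes a correlation of ρ = 0.5 between the repeated measures (a commonly used default; the value used here is not reported), converts f to the paired-difference effect size d_z (with two condition means, f = Δ/(2σ), so d = 2f and d_z = d/√(2(1 − ρ))), and uses a normal approximation to the paired t-test, which slightly overstates power relative to the exact noncentral t distribution.

```python
import math
from statistics import NormalDist


def f_to_dz(f, rho):
    """Convert Cohen's f for a two-level within factor to d_z.

    With two condition means, f = delta / (2 * sigma), so d = 2f; the
    standardized paired difference is d_z = d / sqrt(2 * (1 - rho)).
    """
    d = 2.0 * f
    return d / math.sqrt(2.0 * (1.0 - rho))


def approx_power(dz, n, alpha=0.05):
    """Normal approximation to the two-sided power of a paired t-test."""
    z = NormalDist()
    z_crit = z.inv_cdf(1.0 - alpha / 2.0)
    shift = dz * math.sqrt(n)
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


def approx_required_n(dz, power=0.80, alpha=0.05):
    """Smallest n giving roughly the target power (normal approximation)."""
    z = NormalDist()
    total_z = z.inv_cdf(1.0 - alpha / 2.0) + z.inv_cdf(power)
    return math.ceil((total_z / dz) ** 2)


dz = f_to_dz(f=0.40, rho=0.5)  # rho = 0.5 is an assumption, not a reported value
print(round(dz, 3))
print(round(approx_power(dz, n=32), 3))
print(approx_required_n(dz))
```

Under these assumptions, f = 0.40 corresponds to d_z = 0.8, and the approximate power for N = 32 is well above 0.80; a different assumed ρ would change these numbers.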

Stimuli

Stimuli Development

A total of 76 smiling face photos of 38 Chinese individuals (19 males and 19 females) were randomly selected from the Taiwan Facial Expression Image Database (TFEID; Chen and Yen, 2007). Each individual contributed two photographs, one depicting a high-intensity smile and the other a low-intensity smile.

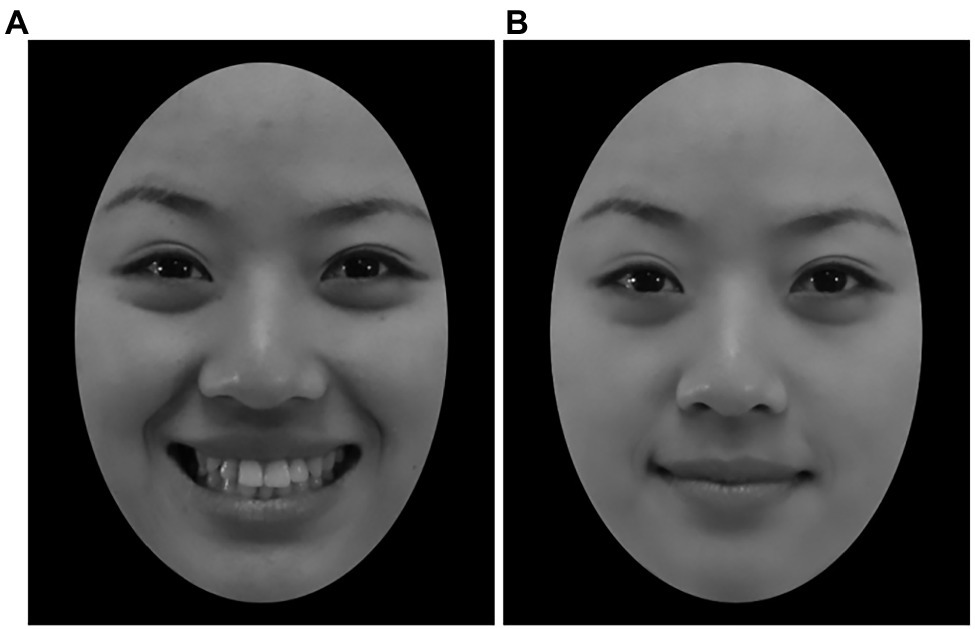

To obtain enough stimuli for the formal experiment, we collected another 56 smiling face images by photographing 28 additional Chinese college students (14 males and 14 females; mean age = 24.46 ± 1.45 years), following the TFEID procedure described by Chen and Yen (2007). Before being photographed, these participants were shown sample photos of happy expressions at different intensities (high and low), selected from the TFEID (facial recognition rate > 90%). The sample photos were chosen in accordance with the Facial Action Coding System (FACS; Ekman et al., 2002), under which the facial movements of a happy expression include (a) raised cheeks, with skin gathered under the eyes and a narrowed eye aperture, and (b) raised lip corners. Prior research has shown that, at the muscular level, smile intensity is indicated by the amplitude of zygomatic major movement (the muscle that pulls the lip corners upward; Ekman, 1993). Happy expressions of different intensities therefore differ mainly in the degree of zygomatic major contraction: a low-intensity happy expression involves a slight contraction that does not expose the teeth, whereas a high-intensity happy expression involves an intense contraction that produces a toothy smile. Participants relaxed their facial muscles and then produced the corresponding expressions by imitating the facial muscle movements shown in the sample photos. The location and lighting were identical for all participants, who all wore the same clothing (a white lab coat); the camera settings were fixed (ISO 1600, 1/100 s, f/4.5), the camera was parallel to the participant’s face, and the camera-to-participant distance was 150 cm.
After the photos were taken, they were standardized according to the TFEID criteria using Adobe Photoshop (Adobe, 2018): the hair, ears, neck, accessories, and other external features were removed, leaving only facial information. All images were set to 480 × 600 pixels, and a 4.05 cm × 5.85 cm black oval mask was applied around each face so that only its internal features, such as the eyebrows, eyes, nose, and mouth, remained visible (see the example in Figure 1).

Figure 1. Example of the experimental picture. (A) High-intensity happy expression. (B) Low-intensity happy expression.

Stimuli Validation

To confirm that the photos showed happy expressions with high- or low-intensity smiles, we conducted a screening assessment. An additional 30 college students (15 males and 15 females; mean age = 22.17 ± 2.45 years) were recruited to assess all 132 photos (those selected from the TFEID and those taken in the researchers’ laboratory). None of these participants were familiar with the faces in the photos.

The assessment program was compiled and presented in E-Prime 2.0 (PST, 2013) and was divided into two phases with an identical procedure: a practice phase (eight trials) and a formal phase (132 trials). For the practice phase, eight additional photos of Chinese individuals that were not used in the formal phase were selected from the TFEID; each depicted one of eight expression types: anger, sadness, fear, happiness, disgust, surprise, contempt, or neutral. The formal phase used the photos from the TFEID and those taken in the researchers’ laboratory. Participants judged the expression type of each face and rated its intensity. They entered the formal phase after reaching 90% accuracy in judging expression type in the practice phase; participants who did not reach this threshold repeated the practice phase. The average number of practice trials was eight.
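Because the practice phase contained only eight trials, the 90% criterion is stricter than it may first appear. The hypothetical helpers below (`min_correct` and `passes_practice` are illustrative names, not part of the E-Prime program) compute the minimum number of correct responses that meets a percentage threshold, using integer arithmetic to avoid floating-point rounding; with eight trials, 90% accuracy requires all eight responses to be correct.

```python
def min_correct(n_trials, threshold_percent):
    """Smallest number of correct responses k with k / n_trials >= threshold."""
    for k in range(n_trials + 1):
        # Integer comparison: k / n >= t / 100  <=>  100 * k >= t * n
        if 100 * k >= threshold_percent * n_trials:
            return k
    raise ValueError("threshold cannot be reached")


def passes_practice(n_correct, n_trials=8, threshold_percent=90):
    """Hypothetical check of the practice-phase accuracy criterion."""
    return n_correct >= min_correct(n_trials, threshold_percent)


print(min_correct(8, 90))   # 8
print(passes_practice(7))   # False (7/8 = 87.5%)
print(passes_practice(8))   # True
```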

In each trial, a fixation point was first presented for 1,000 ms, followed by a facial expression photo; photos were presented in random order. For each photo, participants first classified the emotion by pressing one of eight keys on a numeric keypad labeled with the emotions (1 = “angry,” 2 = “sad,” 3 = “fear,” 4 = “happy,” 5 = “disgust,” 6 = “surprise,” 7 = “contempt,” and 8 = “neutral”). They then rated the emotional intensity of the face on a 9-point Likert scale ranging from 0 (no emotion) to 8 (very strong emotion) using the alphanumeric keys. The image disappeared after the participant responded and was followed by a blank screen for 1,000 ms. Participants were given a 30-s break after completing 50 trials before continuing the experiment.

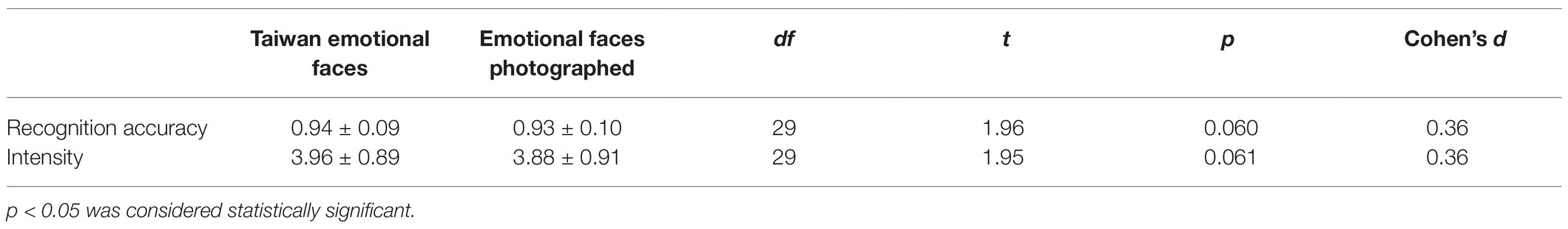

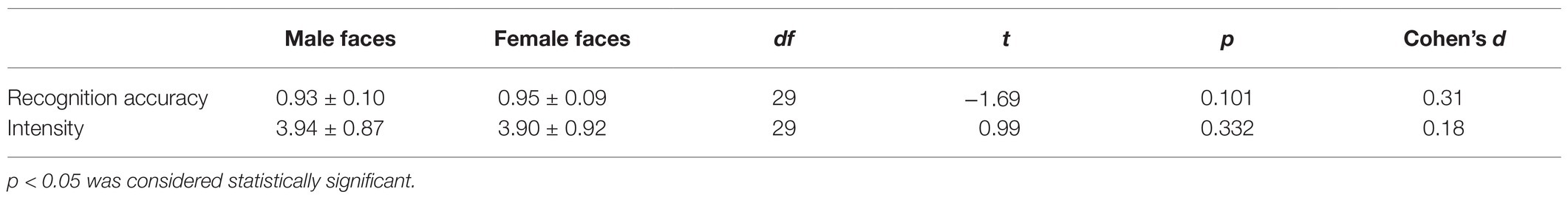

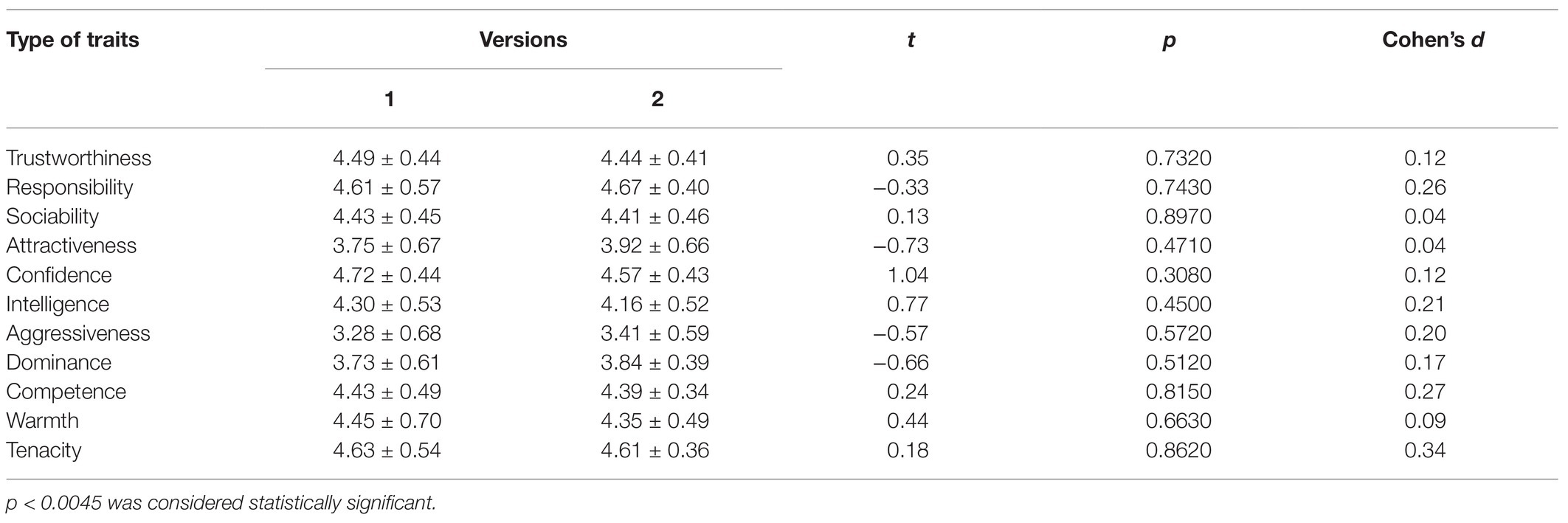

After the assessment data were collected, recognition accuracy and happy expression intensity were calculated for each participant. Recognition accuracy was the percentage of photos classified as happy out of the total number of photos, and happy expression intensity was the mean emotional intensity rating across all photos. Tests of normality and homogeneity of variance were conducted on the recognition accuracy and emotional intensity of the TFEID images and of the newly collected images. The data satisfied the assumptions of normality (Kolmogorov–Smirnov: p > 0.05) and homogeneity of variance (Levene’s statistic: p > 0.05). SPSS 24.0 (IBM, 2018) was used to perform paired t-tests on mean recognition accuracy and happy expression intensity, comparing image sources and face genders within the same participants (N = 30). There were no significant differences between image sources (i.e., TFEID facial expressions vs facial expressions photographed in the researchers’ laboratory; Table 1) or between face genders (Table 2), indicating that the images taken in the authors’ laboratory were equivalent to the TFEID images.
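The two per-participant measures defined above can be written down directly. In this sketch the response format (a list of `(label, intensity)` pairs) and the function name are hypothetical; following the definitions in the text, accuracy is the percentage of photos labeled “happy,” and intensity is averaged over all photos.

```python
from statistics import mean


def summarize_participant(responses):
    """Return (recognition accuracy in %, mean intensity) for one rater.

    responses: list of (emotion_label, intensity) tuples, one per photo,
    where intensity is the 0-8 rating. Hypothetical data format.
    """
    if not responses:
        raise ValueError("no responses")
    n_happy = sum(1 for label, _ in responses if label == "happy")
    accuracy = 100.0 * n_happy / len(responses)
    intensity = mean(score for _, score in responses)
    return accuracy, intensity


toy = [("happy", 5), ("happy", 3), ("neutral", 0), ("happy", 6)]
print(summarize_participant(toy))  # (75.0, 3.5)
```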

Additionally, the 132 face photos of 66 people (one photo with a high-intensity smile and one with a low-intensity smile per person) were divided into two equal groups. No person appeared more than once within a group, so participants never viewed two photos of the same person. Each group of photos was used to compile one version of the trait rating program, to prevent identity information from interfering with the trait ratings. Specifically, Version 1 included the high-intensity happy photos of 19 people from the TFEID and 14 people photographed in the laboratory, and the low-intensity happy photos of these 33 people were assigned to Version 2. The photos of the remaining 33 people were assigned in the complementary way (high-intensity photos to Version 2 and low-intensity photos to Version 1).
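The counterbalanced split described above can be sketched as follows. The identifiers, the random seed, and the choice of which half of each source goes to which version are illustrative assumptions; the sketch only reproduces the stated constraints: each version contains one photo per person, half of each source’s people contribute their high-intensity photo to Version 1, and the complementary photos go to Version 2.

```python
import random


def build_versions(tfeid_ids, lab_ids, seed=0):
    """Split two photos per person into two versions with one photo per person.

    Hypothetical sketch: half of each source's people contribute their
    high-intensity photo to Version 1 (and low-intensity to Version 2);
    the other half are assigned the opposite way.
    """
    rng = random.Random(seed)
    version1, version2 = [], []
    for ids in (tfeid_ids, lab_ids):
        shuffled = list(ids)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for person in shuffled[:half]:
            version1.append((person, "high"))
            version2.append((person, "low"))
        for person in shuffled[half:]:
            version1.append((person, "low"))
            version2.append((person, "high"))
    return version1, version2


tfeid = [f"T{i:02d}" for i in range(38)]  # 38 TFEID individuals
lab = [f"L{i:02d}" for i in range(28)]    # 28 lab-photographed individuals
v1, v2 = build_versions(tfeid, lab)
print(len(v1), len(v2))                              # 66 66
print(sum(1 for _, level in v1 if level == "high"))  # 33
```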

After the photos were divided into two versions, paired t-tests were conducted on mean recognition accuracy and happy expression intensity for the two versions. Intensity ratings differed significantly between the high- and low-intensity smiling faces within each version [Version 1: (5.33 ± 1.01) vs (2.47 ± 0.93), t (29) = 20.88, p < 0.001, Cohen’s d = 3.81; Version 2: (5.36 ± 1.01) vs (2.55 ± 0.94), t (29) = 19.67, p < 0.001, Cohen’s d = 3.59]. The two versions were well matched: smile intensity did not differ significantly across versions for either the high-intensity smiling faces [(5.33 ± 1.01) vs (5.36 ± 1.01), t (29) = −0.66, p = 0.516, Cohen’s d = 0.12] or the low-intensity smiling faces [(2.47 ± 0.93) vs (2.55 ± 0.94), t (29) = −1.69, p = 0.102, Cohen’s d = 0.31].
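The reported effect sizes are consistent with Cohen’s d for paired data computed as d_z = |t| / √n. Assuming that formula (the text does not state which variant of d was used), each value can be recovered from the corresponding reported t statistic with n = 30 raters:

```python
import math


def dz_from_t(t, n):
    """Cohen's d_z for a paired design, recovered from the t statistic."""
    return abs(t) / math.sqrt(n)


reported = {
    "Version 1, high vs low": (20.88, 3.81),
    "Version 2, high vs low": (19.67, 3.59),
    "High intensity, Version 1 vs 2": (-0.66, 0.12),
    "Low intensity, Version 1 vs 2": (-1.69, 0.31),
}
for label, (t, d_reported) in reported.items():
    d = dz_from_t(t, n=30)
    print(f"{label}: d_z = {d:.2f} (reported {d_reported})")
```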

Trait Assessment Task Procedure

Participants were tested in a quiet, comfortable, sound-insulated laboratory. They were introduced to the trait assessment task and informed that they would rate a series of face photos on a list of trait adjectives. As described above, the two groups of face photos were used to create two equivalent versions of the trait rating program, and one version was randomly assigned to each participant. Each version comprised 8 practice trials and 726 formal trials. The practice stimuli were eight randomly selected Asian faces (four neutral and four happy expressions) from the Compound Facial Expressions of Emotion (CFEE) database (Du et al., 2014). The formal stimuli were the face photos of the 66 people, which varied in expression intensity and face gender. The 726 trials were organized into 11 blocks of 66 trials, so that each face photo was presented once per block and 11 times in total. In each block, participants rated all faces on one of the 11 traits (trustworthiness, responsibility, attractiveness, sociability, confidence, intelligence, aggressiveness, dominance, competence, warmth, and tenacity) using a 7-point Likert scale ranging from 1 (not very ××) to 7 (very ××).
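The block structure (11 traits × 66 faces = 726 trials) can be generated programmatically. This is a hypothetical sketch, not the authors’ E-Prime script: block order is shuffled per participant as described, and face order within each block is also shuffled, which is an assumption because the text does not specify it.

```python
import random

TRAITS = [
    "trustworthiness", "responsibility", "attractiveness", "sociability",
    "confidence", "intelligence", "aggressiveness", "dominance",
    "competence", "warmth", "tenacity",
]


def build_trial_list(face_ids, participant_seed):
    """Return (block_index, trait, face_id) tuples for one participant."""
    rng = random.Random(participant_seed)
    block_order = TRAITS[:]
    rng.shuffle(block_order)  # random block (trait) order per participant
    trials = []
    for block_index, trait in enumerate(block_order, start=1):
        faces = list(face_ids)
        rng.shuffle(faces)  # assumed: random face order within a block
        trials.extend((block_index, trait, face) for face in faces)
    return trials


faces = [f"face{i:02d}" for i in range(66)]
trials = build_trial_list(faces, participant_seed=1)
print(len(trials))  # 726
```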

Block order was randomized across participants. After each block, participants rested for at least 60 s before proceeding to the next block. The entire experiment lasted approximately 1 h.

Results

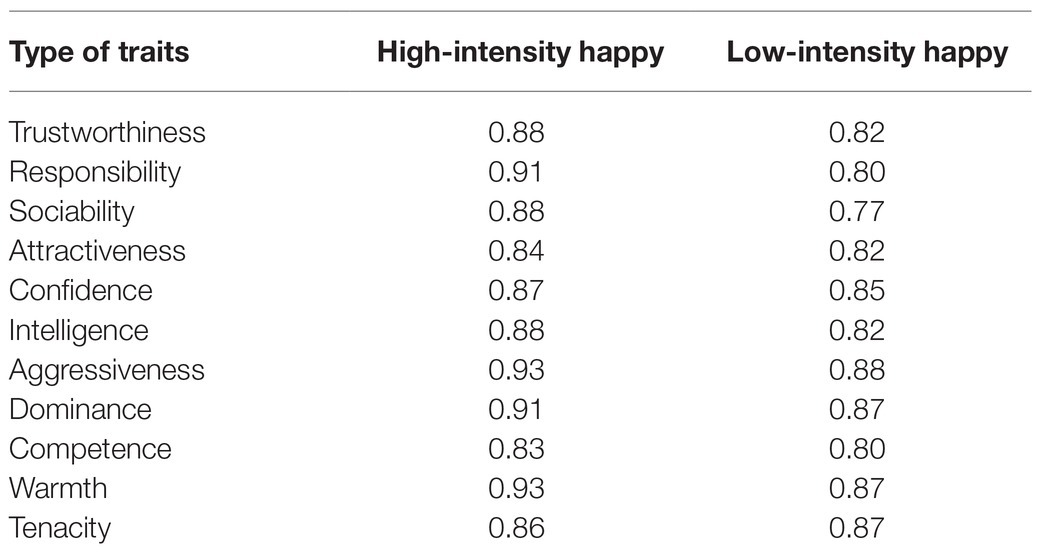

The study used a 2 (expression intensity: high vs low) × 2 (face gender: male vs female) within-subjects design, and the dependent variables were the ratings of 11 traits: trustworthiness, responsibility, sociability, attractiveness, confidence, intelligence, aggressiveness, dominance, competence, warmth, and tenacity. SPSS 24.0 (IBM, 2018) was used for data processing and analysis. First, Cronbach’s α was calculated for each trait to assess the consistency of ratings across participants; all Cronbach’s alphas exceeded 0.77 (Table 3), indicating good internal consistency for all 11 traits (Nunnally, 1978), even though participants were judging natural photographs of different intensities.
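In this use, Cronbach’s α treats participants as the “items” and faces as the cases, so it indexes agreement among raters on a given trait: α = k/(k − 1) × (1 − Σ s_i² / s_T²), where k is the number of raters, s_i² is the variance of rater i’s ratings across faces, and s_T² is the variance of the faces’ summed ratings. A minimal sketch with a hypothetical 4-face × 3-rater matrix (the data layout and function name are assumptions):

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha with raters as items.

    ratings: list of rows, one per face; each row holds one rating per rater.
    Uses sample variances (n - 1 denominator) throughout.
    """
    k = len(ratings[0])
    if k < 2:
        raise ValueError("need at least two raters")
    rater_columns = list(zip(*ratings))
    sum_item_var = sum(variance(col) for col in rater_columns)
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum_item_var / total_var)


toy_ratings = [  # rows = faces, columns = raters (hypothetical 1-7 ratings)
    [1, 2, 2],
    [3, 3, 4],
    [5, 4, 5],
    [6, 6, 7],
]
print(round(cronbach_alpha(toy_ratings), 3))
```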

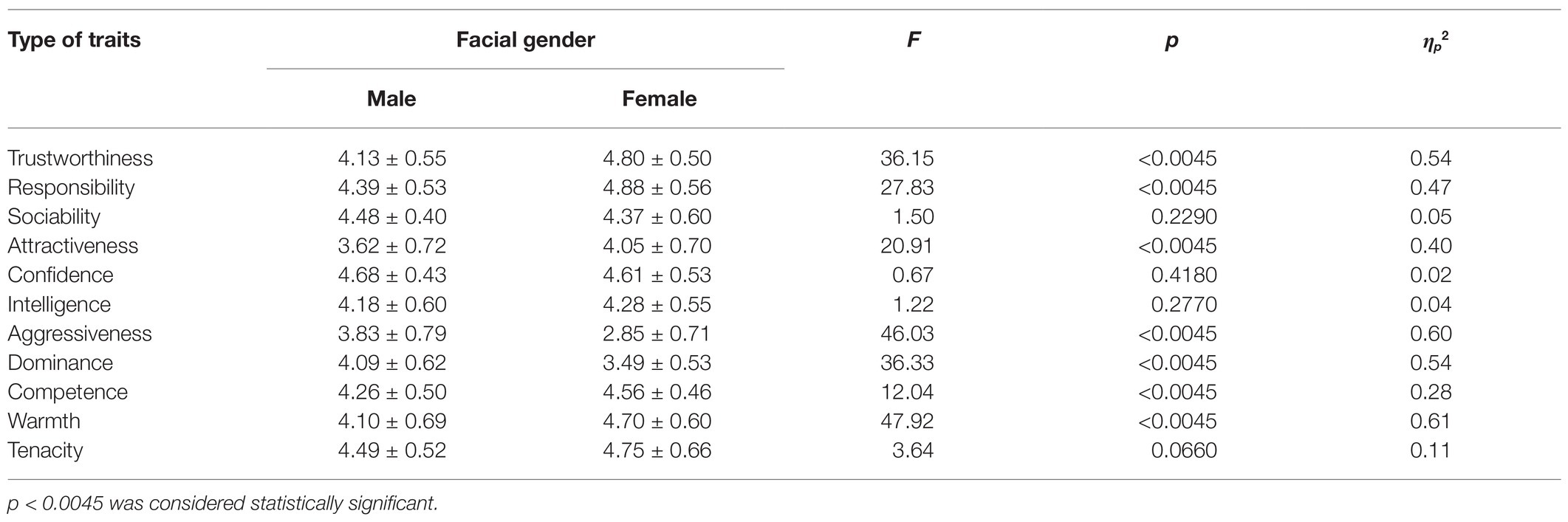

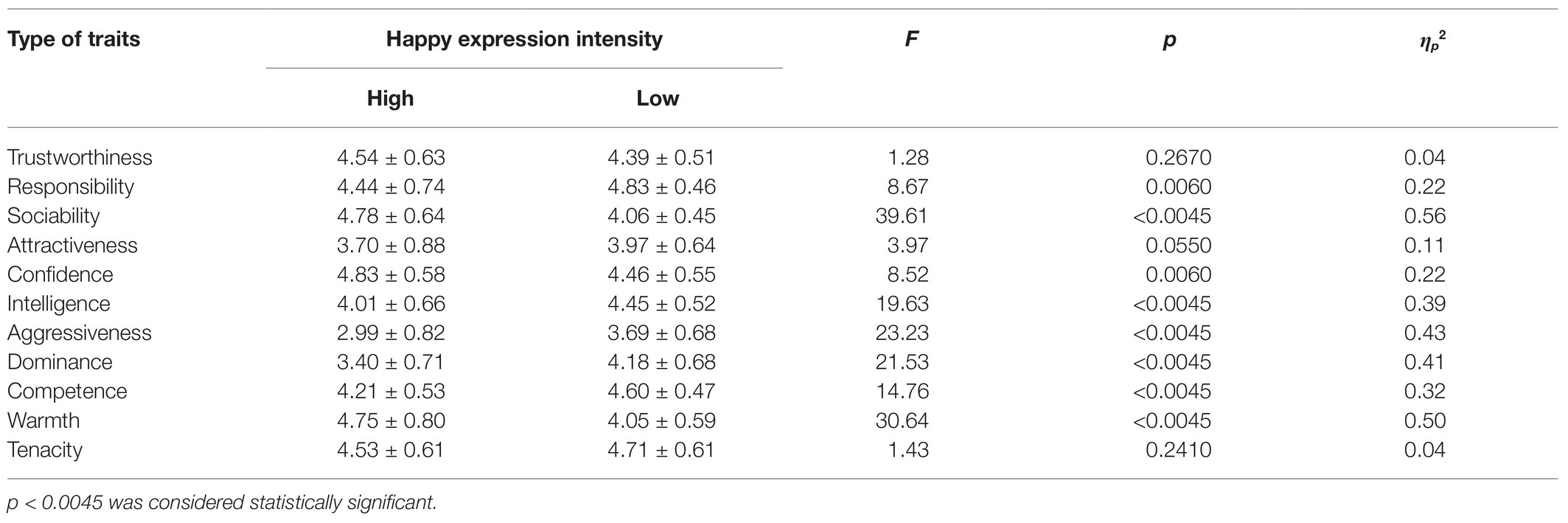

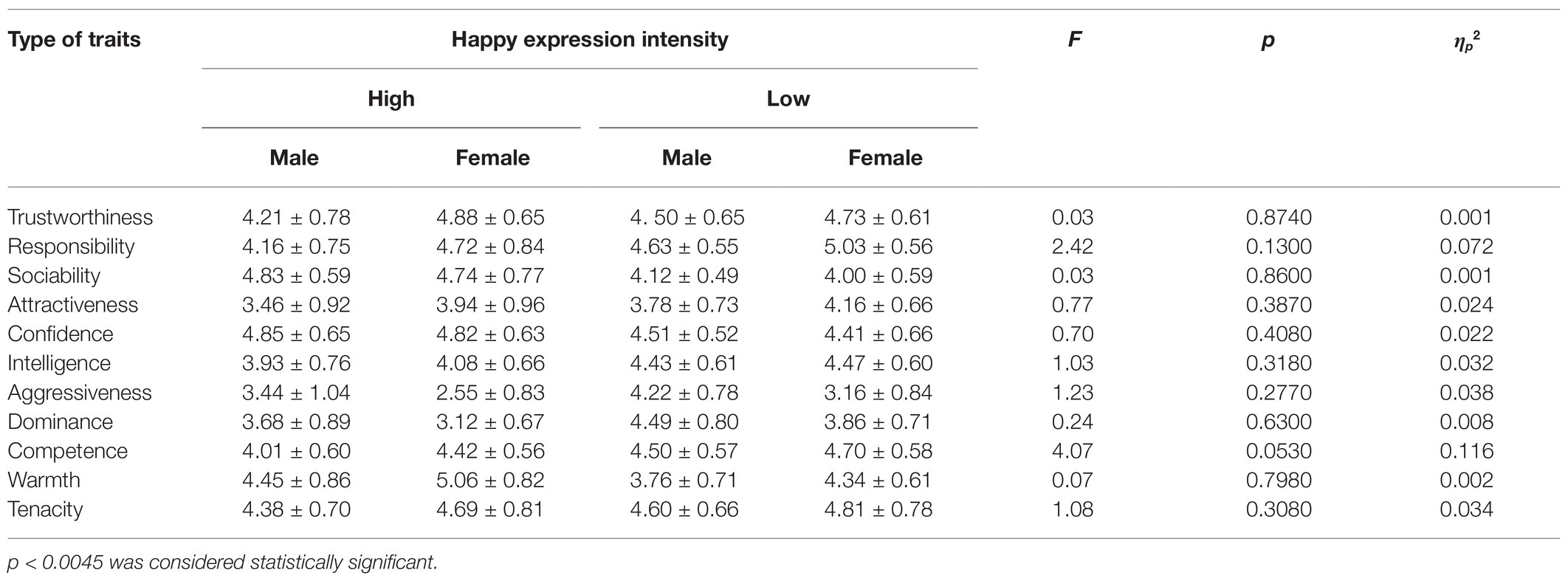

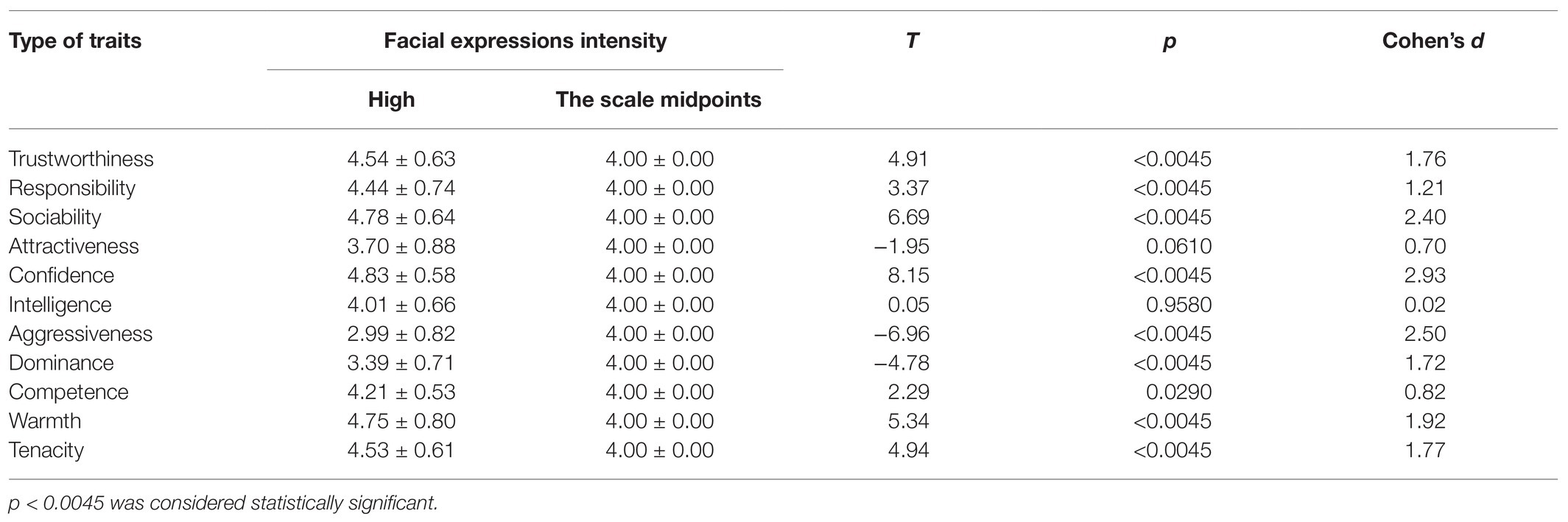

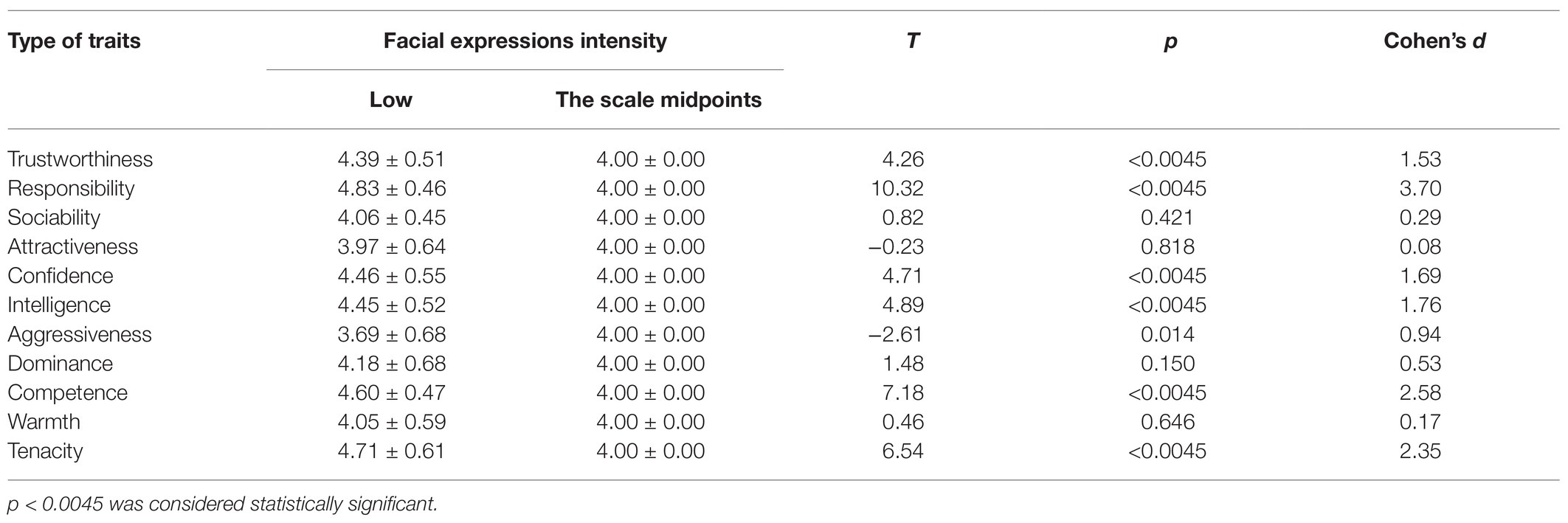

Second, to verify that the data satisfied the assumptions of ANOVA, we conducted tests of normality and homogeneity of variance on the ratings for each trait. The data satisfied normality (Kolmogorov–Smirnov: p > 0.05) and Bartlett’s test of sphericity, suggesting that the ANOVA assumptions were met; in any case, sphericity cannot be violated when, as here, each within-subject factor has only two levels. The ratings for each trait were therefore analyzed separately in a 2 (happy expression intensity: high vs low) × 2 (face gender: male vs female) repeated-measures ANOVA. Because there were many dependent variables, multiple comparisons could inflate the probability of a type I error. To control this, the significance threshold was Bonferroni-corrected as α′ = α/k, with α = 0.05 and k = 11 traits, giving α′ = 0.05/11 ≈ 0.0045; differences were considered statistically significant at p < 0.0045 (Tables 4–6; Rezlescu et al., 2015).
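For a factor with only two levels, the repeated-measures F for its main effect equals the square of a paired t statistic computed on each participant’s marginal means (averaged over the other factor), with df = (1, n − 1). The sketch below illustrates that equivalence and the Bonferroni threshold on hypothetical cell means; the dictionary keys and function names are assumptions, it is not the SPSS procedure, and p-values (which require the F or t distribution) are left to a statistics package.

```python
import math
from statistics import mean, stdev

ALPHA = 0.05
N_TRAITS = 11
BONFERRONI_ALPHA = ALPHA / N_TRAITS  # about 0.004545, reported as 0.0045


def intensity_main_effect_F(cells):
    """F(1, n - 1) for the intensity main effect in a 2 x 2 within design.

    cells: one dict per participant with mean ratings for the four cells,
    keyed 'high_male', 'high_female', 'low_male', 'low_female'.
    """
    diffs = []
    for c in cells:
        high = (c["high_male"] + c["high_female"]) / 2  # marginal over gender
        low = (c["low_male"] + c["low_female"]) / 2
        diffs.append(high - low)
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t ** 2, (1, n - 1)


def is_significant(p_value):
    """Bonferroni decision rule applied to each trait's p-value."""
    return p_value < BONFERRONI_ALPHA


toy_cells = [  # hypothetical per-participant cell means for one trait
    {"high_male": 5, "high_female": 6, "low_male": 4, "low_female": 4},
    {"high_male": 5, "high_female": 5, "low_male": 4, "low_female": 5},
    {"high_male": 6, "high_female": 6, "low_male": 4, "low_female": 4},
    {"high_male": 4, "high_female": 5, "low_male": 4, "low_female": 4},
]
F, df = intensity_main_effect_F(toy_cells)
print(F, df)
print(round(BONFERRONI_ALPHA, 4), is_significant(0.004), is_significant(0.005))
```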

Table 4. Evaluation scores for the different social traits under high- and low-intensity happy expressions (M ± SD).

Table 6. Evaluation scores for the different social traits under happy expression intensity and facial gender (M ± SD).

For trustworthiness, responsibility, and attractiveness, neither the main effect of happy expression intensity nor the interaction between happy expression intensity and face gender was significant. However, the main effect of face gender was significant: female faces were rated higher than male faces on trustworthiness, responsibility, and attractiveness.

For sociability and intelligence, the main effect of happy expression intensity was significant: high-intensity happy faces were rated higher than low-intensity happy faces on sociability but lower on intelligence. Neither the main effect of face gender nor the interaction between happy expression intensity and face gender was significant.

For warmth, aggressiveness, dominance, and competence, the main effects of both happy expression intensity and face gender were significant. High-intensity happy faces were rated higher than low-intensity happy faces on warmth but lower on aggressiveness, dominance, and competence. Female faces were rated higher than male faces on warmth and competence but lower on aggressiveness and dominance. The interaction between happy expression intensity and face gender was not significant.

For confidence and tenacity, neither the main effects of happy expression intensity and face gender nor their interaction were significant.

In addition to these relative comparisons between low- and high-intensity happy expressions, one-sample t-tests were conducted comparing the ratings for each trait against the scale midpoint (4 on the 1–7 scale; Tables 7 and 8).
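The comparison against the scale midpoint can be sketched as below. The midpoint of the 1–7 scale is 4; the ratings are hypothetical per-participant means, the function name is illustrative, and only the t statistic and its degrees of freedom (n − 1) are computed.

```python
import math
from statistics import mean, stdev

SCALE_MIN, SCALE_MAX = 1, 7
MIDPOINT = (SCALE_MIN + SCALE_MAX) / 2  # 4.0


def one_sample_t(values, mu=MIDPOINT):
    """One-sample t statistic and df for H0: population mean == mu."""
    n = len(values)
    t = (mean(values) - mu) / (stdev(values) / math.sqrt(n))
    return t, n - 1


toy_means = [5, 6, 4, 5, 5]  # hypothetical participant means for one trait
t, df = one_sample_t(toy_means)
print(round(t, 3), df)  # 3.162 4
```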

Table 7. Evaluation scores for the traits under high-intensity happy expression and scale midpoints (M ± SD).

Table 8. Evaluation scores for the traits under low-intensity happy expression and scale midpoints (M ± SD).

Finally, tests of normality and homogeneity of variance were conducted on the ratings for each trait in both versions. The data satisfied normality (Kolmogorov–Smirnov: p > 0.05) and homogeneity of variance (Levene’s statistic: p > 0.05). SPSS 24.0 (IBM, 2018) was used to perform independent-samples t-tests comparing the ratings for each trait between the two versions. No significant differences were found between Version 1 and Version 2 (Table 9), indicating that trait ratings were generally homogeneous across versions.
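The between-version check uses independent-samples t-tests because each participant saw only one version. A minimal pooled-variance (Student) sketch, which is the form that relies on the homogeneity of variance reported above; the ratings and the function name are hypothetical:

```python
import math
from statistics import mean, variance


def student_t(group1, group2):
    """Independent-samples t with pooled variance; returns (t, df)."""
    n1, n2 = len(group1), len(group2)
    pooled = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    se = math.sqrt(pooled * (1 / n1 + 1 / n2))
    return (mean(group1) - mean(group2)) / se, n1 + n2 - 2


version1_means = [4, 5, 6]  # hypothetical trait means, Version 1 raters
version2_means = [5, 6, 7]  # hypothetical trait means, Version 2 raters
t, df = student_t(version1_means, version2_means)
print(round(t, 3), df)  # -1.225 4
```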

Discussion

Using a trait assessment task, this study manipulated the intensity of the target faces’ happy expressions (high vs. low) to examine how happy-expression intensity affects the social perception of Chinese faces. Compared with low-intensity happy expressions, high-intensity happy expressions enhanced perceptions of approachability-related traits such as sociability and warmth, but not trustworthiness, and reduced perceptions of capability-related traits.

The Effect of the Intensities of Happy Expressions on Approachability

The “approachability” dimension represents a welcoming behavioral tendency of the target face. Happy expressions not only indicate positive emotional states but also convey friendly behavioral tendencies (Montepare and Dobish, 2003), and researchers have suggested that the intensity of an expression corresponds to the intensity of the underlying behavioral tendency (Ekman et al., 1980). Consistent with the “morality differentiation hypothesis” (Goodwin et al., 2014; Landy et al., 2016), the approachability dimension of Chinese faces includes two subdimensions: warmth and trustworthiness.

The results of this study showed that high-intensity happy faces were rated higher than low-intensity happy faces on sociability and warmth, consistent with previous studies (Harker and Keltner, 2001; Mehu et al., 2008). Toothy smiles convey the expresser’s tendency to build social ties and signal stronger social intentions (Mehu et al., 2008; Bell et al., 2017), and they increase the perceived friendliness, approachability, and warmth of the individual. Positive traits associated with social skills (e.g., sociability and warmth) therefore tend to increase with the intensity of happy expressions. Some researchers attribute the positive effects of happy expressions of different intensities on the social perception of faces to the baby-face overgeneralization effect: people tend to believe that adults with baby-faced features share the traits of infants, such as meekness, innocence, and enthusiasm. The intensity of a happy expression is associated with the intensity of zygomatic muscle activity (Wang et al., 2015). The typical facial features of high-intensity happy expressions (i.e., a widened nose, upturned mouth, shortened chin, and round face) resemble those of a baby’s face (e.g., a small, round face with a small jaw; Dou et al., 2014). As the intensity of a happy expression increases, the face becomes more baby-like (Walker et al., 2011; Wang et al., 2015), and the baby-face overgeneralization effect becomes stronger. This may explain why high-intensity happy expressions were rated higher than low-intensity happy expressions on sociability and warmth.

In addition to warmth, the approachability dimension of Chinese faces includes the subdimension of trustworthiness, a trait representative of valence that also encompasses responsibility, attractiveness, and confidence (Oosterhof and Todorov, 2008). The intensity of a happy expression did not affect ratings of trustworthiness, attractiveness, confidence, or responsibility. According to the perceptual fluency hypothesis (Westerman et al., 2015), happy expressions of any intensity carry positive emotional valence and are therefore congruent with the positive valence of trustworthiness, responsibility, attractiveness, and confidence; the perceptual process is simple and does not vary with the intensity of the expression. However, the result for trustworthiness is inconsistent with previous studies suggesting that children can perceive different levels of facial trustworthiness from happy expressions of different intensities (25 and 50%) and that the influence of happy expressions on trustworthiness perception increases with emotional intensity (Hess et al., 2000; Caulfield et al., 2014, 2016). There may be several reasons for this discrepancy. First, the experimental materials in previous research were created by combining neutral and happy facial images (Hess et al., 2000; Caulfield et al., 2016); this could have made the happy faces look less natural, and the less intense happy faces even less natural, thus lowering their trustworthiness ratings. Second, trustworthiness may have been interpreted differently in the present study than in previous studies. Some researchers have regarded trustworthiness, warmth, and sociability as similar in meaning, all used to evaluate the friendly behavioral intentions of the target face and all associated with communality (Hess et al., 2000; Fiske et al., 2007; Oosterhof and Todorov, 2008; Caulfield et al., 2014, 2016). In Chinese culture, however, trustworthiness refers to a moral norm associated with correctness rather than with the development of interpersonal skills (Shu et al., 2017), which supports the “morality differentiation hypothesis” (Goodwin et al., 2014; Landy et al., 2016). A highly sociable individual may therefore not be perceived as more trustworthy.

The present result for attractiveness is also inconsistent with previous studies, which reported a positive correlation between the intensity of natural smiles and ratings of physical attractiveness (Golle et al., 2014). The main reason for this inconsistency may lie in how the stimuli were created. According to the “averageness hypothesis,” the degree of facial averageness is the main factor affecting facial attractiveness: the more average the face, the more attractive it is (Li and Cheng, 2010). Previous studies used average faces created with Psychomorph software, rather than natural faces, to examine the effect of smiling intensity on attractiveness. Such a design confounded averageness with smiling intensity, making it impossible to separate their effects on facial attractiveness (Golle et al., 2014). When facial averageness was controlled in the present study, smiling intensity did not influence facial attractiveness.

The non-significant effect on responsibility may reflect the distinctive nature of this trait. Responsibility is a positive trait characterized by effort, self-discipline, carefulness, and conscientiousness (Oosterhof and Todorov, 2008; Huang et al., 2014). Its ratings therefore mainly reflect the target individual’s capacity to carry out behavior rather than his or her behavioral tendency, and this capacity may be less related to smiling.

The Effect of the Intensities of Happy Expressions on Capability

Facial evaluations of a person’s “capability” reflect judgments of the target’s ability to carry out behavioral intentions. Wu et al. (2020) found that the capability dimension comprises the traits of dominance and tenacity, which encompass physical and intellectual strength.

The results of this study showed that low-intensity smiling faces were rated as more dominant, aggressive, competent, and intelligent than high-intensity smiling faces. In general, ratings of both physical strength (dominance and aggressiveness) and intellectual strength (competence and intelligence) decreased as the intensity of the happy expression increased. This may be because the “capability” dimension is usually related to attaining military or political status, and ratings on this dimension reflect an individual’s competitiveness and control in a particular field (Cheng et al., 2013). Compared with low-intensity smiling faces, high-intensity smiling faces have more baby-face features, and such faces signal weaker control (Kraus and Chen, 2013) as well as weaker competitiveness and competence (Gao et al., 2016). Cultural differences may also play a role: compared with Western leaders, Chinese leaders tend to present a calm, weak smile (Tsai et al., 2016), and Chinese culture places more emphasis on “smiling without showing teeth” (Fang et al., 2019). In China, therefore, a smile that does not show the teeth is more likely to be a facial cue of high competence and dominance.

Although the overall trend was the same, dominance and competence differ in meaning, so the intensity of happy expressions did not affect ratings of the two traits in exactly the same way. Dominance and aggressiveness represent physical strength and imply a threatening capacity to act on an intention to hurt others; they are therefore negatively correlated with valence, and ratings of these traits for happy faces were lower than or equal to the scale midpoint. Compared with low-intensity happy faces, high-intensity happy faces signal a greater propensity for submissive behavior: people tend to smile more intensely when they want to build cooperative relationships with others (Mehu et al., 2008; Bell et al., 2017) or are seeking rapport (Hennig-Thurau et al., 2006). This submissive motivation is incompatible with the threat that characterizes dominance, so dominance and aggressiveness ratings decrease as the intensity of the happy expression increases. By contrast, people high in competence gain social status through ability or generosity, and competence is positively correlated with valence; ratings of competence and intelligence for happy faces were therefore greater than or equal to the scale midpoint. However, high-intensity smiling faces are often considered to show that people are carefree, satisfied with the status quo, and open to change and improvement (Bodenhausen et al., 1994). This is inconsistent with the intentions conveyed by competence (e.g., high creativity and high efficiency; Fiske et al., 2007), so high-intensity happy expressions may act as facial cues to a lack of competence. In addition, a stronger positivity effect in the competence domain has been reported for moderate levels of behavior (Rusconi et al., 2020). Low-intensity happy expressions, which may be attributed to moderate levels of behavior, might therefore serve as facial cues for competence more readily than high-intensity happy expressions.

However, the intensity of the happy expression did not affect ratings of tenacity, even though tenacity is usually considered part of the “capability” dimension. Because tenacity is a trait exhibited to protect the body from harm under stress (Zhang and Wang, 2011), a person with strong tenacity tends to rely on problem-focused rather than emotion-focused coping strategies (Nicholls et al., 2008). Tenacity ratings may therefore be unrelated to the intensity of happy expressions.

Taken together, the present study makes several contributions to the existing literature. First, it explored how different intensities of happy expressions influence the social perception of faces in the Chinese context. The results supported the “morality differentiation hypothesis,” showing that trustworthiness and warmth/sociability have different meanings in China. In Chinese culture, sociability centers on the development of interpersonal skills (e.g., emotional management skills and conflict resolution strategies) and is associated with communality (Zhang et al., 2012), whereas trustworthiness is considered a moral code uniquely associated with correctness (Shu et al., 2017). The intensity of happy expressions therefore had different effects on these two traits: compared with low-intensity happy expressions, high-intensity happy expressions increased sociability ratings but did not affect trustworthiness ratings. Second, previous research has shown that the morality differentiation hypothesis applies to top-down stereotype content processing and to the processing of familiar groups (Goodwin et al., 2014; Landy et al., 2016) and has highlighted the distinct role of trustworthiness in face perception from a bottom-up perspective (Krumhuber et al., 2007; Todorov et al., 2015; Todorov and Oh, 2021). The present study extends this work by distinguishing trustworthiness from sociability in trait assessments of first impressions of strangers smiling at different intensities, adding further support for the morality differentiation hypothesis. Third, the present study used natural face photographs, which have greater ecological validity than the computer-generated faces and composite images used in previous studies, and yielded the novel finding that trustworthiness and attractiveness ratings were not affected by the intensity of happy expressions. Fourth, the present study revealed differences between physical and intellectual strength. For low-intensity happy expressions, physical strength ratings did not differ significantly from the scale midpoint, whereas intellectual strength ratings were higher than the midpoint; for high-intensity happy expressions, physical strength ratings were lower than the midpoint, whereas intellectual strength ratings did not differ significantly from it. Fifth, the present study described how the intensity of happy expressions influences 11 traits: trustworthiness, responsibility, attractiveness, sociability, confidence, intelligence, aggressiveness, dominance, competence, warmth, and tenacity. This provides practical suggestions for people’s everyday communication, as well as pointers for researchers interested in further study of one or several of these traits.

Although the present study produced several interesting findings, it has several limitations. First, this study included only happy expressions, without negative or neutral expressions as comparison conditions. Further research should compare the effects of positive, negative, and neutral expressions on personality trait assessment. Second, like the studies of Walker et al. (2011) and Wang et al. (2019), this study adopted a within-subjects design, which controls for perceiver bias in the social perception of faces; however, participants’ evaluation of one trait can affect their judgment of another. To reduce such carryover, the 11 traits were assigned to 11 blocks presented in random order, with a mandatory 60-s rest between blocks in addition to self-paced rest. These measures helped control the influence of a participant’s rating of one trait on his or her judgment of another and reduced participant fatigue. Future studies should adopt a between-subjects design to verify the stability of these results. Third, because there were many dependent variables in this study, multiple statistical analyses were conducted. Although these analyses were statistically corrected, correction does not eliminate the possibility that some effects were overstated or understated because of multiple comparisons. Future studies should test these effects in a more targeted way. Fourth, this study addressed a gap in previous research by examining how happy expressions of different intensities (low vs. high) affected the attribution of 11 traits to Chinese faces. However, it lacked a direct comparison between Chinese and Western faces and participants, so further research directly comparing cultural differences in how the intensity of happy expressions affects the social perception of faces is needed. Fifth, this was an exploratory experiment, and future research should recruit more participants to replicate its results.
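The limitations note that the multiple analyses were statistically corrected but do not say which correction was used. Purely as an illustration of one common option, and not a claim about the authors' method, the Holm-Bonferroni step-down procedure sorts the p-values and compares the i-th smallest (counting from 0) with alpha / (m - i), stopping at the first failure. With 11 traits, a plain Bonferroni correction would instead test every trait at 0.05 / 11 ≈ 0.0045. The function name `holm_reject` and the p-values are hypothetical.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm-Bonferroni step-down: return a reject flag for each p-value."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    reject = [False] * m
    for rank, idx in enumerate(order):
        # Compare the (rank + 1)-th smallest p-value with alpha / (m - rank).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return reject


# Hypothetical p-values (not the study's results).
print(holm_reject([0.01, 0.04, 0.03, 0.005]))  # [True, False, False, True]
```

Holm controls the familywise error rate at the same level as Bonferroni while rejecting at least as many hypotheses, which is why it is often preferred when many traits are tested.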

Conclusion

In summary, the present study revealed that happy expressions of different intensities (high vs. low) had different effects on Chinese participants’ social perception of Chinese faces. Compared with low-intensity happy expressions, high-intensity happy expressions received higher ratings for sociability and warmth on the “approachability” dimension and lower ratings on the “capability” dimension (e.g., dominance, competence, and intelligence).

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committee of Liaoning Normal University. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

ZJ conceived this study. YL and YY participated in writing and revising the manuscript. YL and HL participated in performing the study. FP and QW participated in modifying the manuscript. All authors contributed to the article and approved the submitted version.

Funding

The authors gratefully acknowledge the financial support of the 2019 Humanities and Social Sciences Research Project of the Ministry of Education (19YJA850014) and the Key Project of the Liaoning Provincial Department of Education (LZ2020001).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank all the students who participated in the research. We would also like to thank Editage (www.editage.cn) for English language editing.

References

Beall, A. E. (2007). Can a new smile make you look more intelligent and successful? Dent. Clin. N. Am. 51, 289–297. doi: 10.1016/j.cden.2007.02.002

Bell, R., Koranyi, N., Buchner, A., and Rothermund, K. (2017). The implicit cognition of reciprocal exchange: automatic retrieval of positive and negative experiences with partners in a prisoner’s dilemma game. Cognit. Emot. 31, 657–670. doi: 10.1080/02699931.2016.1147423

Bodenhausen, G. V., Kramer, G. P., and Süsser, K. (1994). Happiness and stereotypic thinking in social judgment. J. Pers. Soc. Psychol. 66, 621–632. doi: 10.1037/0022-3514.66.4.621

Brambilla, M., and Leach, C. W. (2014). On the importance of being moral: the distinctive role of morality in social judgment. Soc. Cogn. 32, 397–408. doi: 10.1521/soco.2014.32.4.397

Brambilla, M., Rusconi, P., Sacchi, S., and Cherubini, P. (2011). Looking for honesty: the primary role of morality (vs. sociability and competence) in information gathering. Eur. J. Soc. Psychol. 41, 135–143. doi: 10.1002/ejsp.744

Brambilla, M., Sacchi, S., Rusconi, P., Cherubini, P., and Yzerbyt, V. Y. (2012). You want to give a good impression? Be honest! Moral traits dominate group impression formation. Br. J. Soc. Psychol. 51, 149–166. doi: 10.1111/j.2044-8309.2010.02011.x

Brambilla, M., Sacchi, S., Rusconi, P., and Goodwin, G. (2021). The primacy of morality in impression development: theory, research, and future directions. Adv. Exp. Soc. Psychol. Advance online publication. doi: 10.1016/bs.aesp.2021.03.001

Brysbaert, M. (2019). How many participants do we have to include in properly powered experiments? A tutorial of power analysis with reference tables. J. Cogn. 2, 16–38. doi: 10.5334/joc.72

Caulfield, F., Ewing, L., Bank, S., and Rhodes, G. (2016). Judging trustworthiness from faces: emotion cues modulate trustworthiness judgments in young children. Br. J. Psychol. 107, 503–518. doi: 10.1111/bjop.12156

Caulfield, F., Ewing, L., Burton, N., Avard, E., and Rhodes, G. (2014). Facial trustworthiness judgments in children with ASD are modulated by happy and angry emotional cues. PLoS One 9:e97644. doi: 10.1371/journal.pone.0097644

Chen, L. F., and Yen, Y. S. (2007). Taiwanese Facial Expression Image Database. Brain Mapping Laboratory, Institute of Brain Science, National Yang-Ming University, Taipei, Taiwan.

Cheng, J. T., Tracy, J. L., Foulsham, T., Kingstone, A., and Henrich, J. (2013). Two ways to the top: evidence that dominance and prestige are distinct yet viable avenues to social rank and influence. J. Pers. Soc. Psychol. 104, 103–125. doi: 10.1037/a0030398

de Waal, F. B., and Luttrell, L. M. (1985). The formal hierarchy of rhesus macaques: an investigation of the bared-teeth display. Am. J. Primatol. 9, 73–85. doi: 10.1002/ajp.1350090202

Dou, D. H., Liu, X. C., and Zhang, Y. J. (2014). Babyface effect: babyface preference and overgeneralization. Adv. Psychol. Sci. 22, 760–771. doi: 10.3724/SP.J.1042.2014.00760

Du, S., Tao, Y., and Martinez, A. M. (2014). Compound facial expressions of emotion. Proc. Natl. Acad. Sci. 111, E1454–E1462. doi: 10.1073/pnas.1322355111

Ekman, P. (1993). Facial expression and emotion. Am. Psychol. 48, 384–392. doi: 10.1037/0003-066X.48.4.384

Ekman, P., Friesen, W. V., and Ancoli, S. (1980). Facial signs of emotional experience. J. Pers. Soc. Psychol. 39, 1125–1134. doi: 10.1037/h0077722

Ekman, P., Friesen, W. V., and Hager, J. (2002). Emotional facial action coding system. Manual and Investigator’s Guide. CD-ROM.

Fang, X., Sauter, D. A., and van Kleef, G. A. (2019). Unmasking smiles: the influence of culture and intensity on interpretations of smiling expressions. J. Cult. Cogn. Sci. 4, 293–308. doi: 10.1007/s41809-019-00053-1

Fiske, S. T., Cuddy, A. J., and Glick, P. (2007). Universal dimensions of social cognition: warmth and competence. Trends Cogn. Sci. 11, 77–83. doi: 10.1016/j.tics.2006.11.005

Gao, L., Ye, M. L., Peng, J., and Chen, Y. S. (2016). How important are facial appearance to leadership? A literature review of leaders’ facial appearance. Psychol. Sci. 39, 992–997. doi: 10.16719/j.cnki.1671-6981.20160434

Golle, J., Mast, F. W., and Lobmaier, J. S. (2014). Something to smile about: the interrelationship between attractiveness and emotional expression. Cognit. Emot. 28, 298–310. doi: 10.1080/02699931.2013.817383

Goodwin, G. P., Piazza, J., and Rozin, P. (2014). Moral character predominates in person perception and evaluation. J. Pers. Soc. Psychol. 106, 148–168. doi: 10.1037/a0034726

Harker, L., and Keltner, D. (2001). Expressions of positive emotion in women’s college yearbook pictures and their relationship to personality and life outcomes across adulthood. J. Pers. Soc. Psychol. 80, 112–124. doi: 10.1037/0022-3514.80.1.112

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system of face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hehman, E., Stolier, R. M., Freeman, J. B., Flake, J. K., and Xie, S. Y. (2019). Toward a comprehensive model of face impressions: what we know, what we do not, and paths forward. Soc. Personal. Psychol. Compass 13:e12431. doi: 10.1111/spc3.12431

Hennig-Thurau, T., Groth, M., Paul, M., and Gremler, D. D. (2006). Are all smiles created equal? How emotional contagion and emotional labor affect service relationships. J. Mark. 70, 58–73. doi: 10.1509/jmkg.70.3.58

Hess, U., Blairy, S., and Kleck, R. E. (2000). The influence of facial emotion displays, gender, and ethnicity on judgments of dominance and affiliation. J. Nonverbal Behav. 24, 265–283. doi: 10.1023/A:1006623213355

Hu, Y., Zhang, Y., and Chen, H. (2018). The effect of target sex, sexual dimorphism, and facial attractiveness on perceptions of target attractiveness and trustworthiness. Front. Psychol. 9:942. doi: 10.3389/fpsyg.2018.00942

Huang, Z. H., Bai, X. W., Lin, L., and Song, Y. (2014). The mechanisms through which conscientiousness and neuroticism influence procrastination. Chin. J. Clin. Psych. 22, 140–144. doi: 10.16128/j.cnki.1005-3611.2014.01.006

Jaeger, B., Todorov, A. T., Evans, A. M., and Beest, I. V. (2020). Can we reduce facial biases? Persistent effects of facial trustworthiness on sentencing decisions. J. Exp. Soc. Psychol. 90:104004. doi: 10.1016/j.jesp.2020.104004

Jones, B. C., DeBruine, L. M., Flake, J. K., Aczel, B., Adamkovic, M., Alaei, R., et al. (2021). To which world regions does the valence–dominance model of social perception apply? Nat. Hum. Behav. 5, 159–169. doi: 10.1038/s41562-020-01007-2

Kim, S. H., Ryu, V., Ha, R. Y., Lee, S. J., and Cho, H. S. (2016). Perceptions of social dominance through facial emotion expressions in euthymic patients with bipolar I disorder. Compr. Psychiatry 66, 193–200. doi: 10.1016/j.comppsych.2016.01.012

Kraus, M. W., and Chen, T. W. D. (2013). A winning smile? Smile intensity, physical dominance, and fighter performance. Emotion 13, 270–279. doi: 10.1037/a0030745

Krumhuber, E., Manstead, A., Cosker, D., Marshall, D., Rosin, P. L., and Kappas, A. (2007). Facial dynamics as indicators of trustworthiness and cooperative behavior. Emotion 7, 730–735. doi: 10.1037/1528-3542.7.4.730

Landy, J. F., Piazza, J., and Goodwin, G. P. (2016). When it’s bad to be friendly and smart: the desirability of sociability and competence depends on morality. Personal. Soc. Psychol. Bull. 42, 1272–1290. doi: 10.1177/0146167216655984

Li, O., and Cheng, H. (2010). The retrospect and prospect of facial attractiveness. Adv. Psychol. Sci. 30, 521–528. doi: 10.3724/SP.J.1142.2010.40521

Li, Y. N., Jiang, Z. Q., Wu, Q., Leng, H. Z., and Li, D. (2020). Effect of happy and neutral expression on social perceptions of faces in young adults. Chin. Ment. Health J. 34, 613–619. doi: 10.3969/j.issn.1000-6729.2020.7.011

Liu, C., Ge, Y., Luo, W. B., and Luo, Y. J. (2010). Show your teeth or not: the role of the mouth and eyes in smiles and its cross-cultural variations. Behav. Brain Sci. 33, 450–452. doi: 10.1017/S0140525X10001263

Mehu, M., Little, A. C., and Dunbar, R. I. M. (2008). Sex differences in the effect of smiling on social judgments: An evolutionary approach. J. Soc. Evol. Cult. Psychol. 2, 103–121. doi: 10.1037/h0099351

Montepare, J. M., and Dobish, H. (2003). The contribution of emotion perceptions and their overgeneralizations to trait impressions. J. Nonverbal Behav. 27, 237–254. doi: 10.1023/A:1027332800296

Na, J., and Huh, J. (2016). Facial inferences of social relations predicted Korean elections better than did facial inferences of competence. Korean J. Soc. Person. Psychol. 30, 37–49. doi: 10.21193/kjspp.2016.30.4.003

Nicholls, A. R., Polman, R., Levy, A. R., and Backhouse, S. H. (2008). Mental toughness, optimism, pessimism, and coping among athletes. Pers. Individ. Dif. 44, 1182–1192. doi: 10.1016/j.paid.2007.11.011

Oliveira, M., Garcia-Marques, T., Garcia-Marques, L., and Dotsch, R. (2020). Good to bad or bad to bad? What is the relationship between valence and the trait content of the big two? Eur. J. Soc. Psychol. 50, 463–483. doi: 10.1002/ejsp.2618

Olivola, C. Y., Funk, F., and Todorov, A. (2014). Social attributions from faces bias human choices. Trends Cogn. Sci. 18, 566–570. doi: 10.1016/j.tics.2014.09.007

Oosterhof, N. N., and Todorov, A. (2008). The functional basis of face evaluation. Proc. Natl. Acad. Sci. U. S. A. 105, 11087–11092. doi: 10.1073/pnas.0805664105

Parr, L. A., and Waller, B. M. (2006). Understanding chimpanzee facial expression: insights into the evolution of communication. Soc. Cogn. Affect. Neurosci. 1, 221–228. doi: 10.1093/scan/nsl031

Rezlescu, C., Penton, T., Walsh, V., Tsujimura, H., Scott, S. K., and Banissy, M. J. (2015). Dominant voices and attractive faces: the contribution of visual and auditory information to integrated person impressions. J. Nonverbal Behav. 39, 355–370. doi: 10.1007/s10919-015-0214-8

Rusconi, P., Sacchi, S., Brambilla, M., Capellini, R., and Cherubini, P. (2020). Being honest and acting consistently: boundary conditions of the negativity effect in the attribution of morality. Soc. Cogn. 38, 146–178. doi: 10.1521/soco.2020.38.2.146

Sandy, C., Rusconi, P., and Li, S. (2017). “Can humans detect the authenticity of social media accounts? On the impact of verbal and non-verbal cues on credibility judgements of Twitter profiles,” in IEEE International Conference on Cybernetics; June 2017; IEEE.

Shu, D., Shen, S., and Huang, Y. (2017). Tao, virtue, benevolence, righteousness and propriety: on the core values of Shu school. Contemp. Soc. Sci. 3, 68–85. doi: 10.19873/j.cnki.2096-0212.2017.03.007

Sutherland, C. A., Liu, X., Zhang, L., Chu, Y., Oldmeadow, J. A., and Young, A. W. (2018). Facial first impressions across culture: data-driven modeling of Chinese and British perceivers’ unconstrained facial impressions. Personal. Soc. Psychol. Bull. 44, 521–537. doi: 10.1177/0146167217744194

Sutherland, C. A., Young, A. W., and Rhodes, G. (2017). Facial first impressions from another angle: how social judgements are influenced by changeable and invariant facial properties. Br. J. Psychol. 108, 397–415. doi: 10.1111/bjop.12206

Thompson, D. F., and Meltzer, L. (1964). Communication of emotional intent by facial expression. J. Abnorm. Soc. Psychol. 68, 129–135. doi: 10.1037/h0044598

Todorov, A., and Oh, D. (2021). The structure and perceptual basis of social judgments from faces. Adv. Exp. Soc. Psychol. 63, 189–245. doi: 10.1016/bs.aesp.2020.11.004

Todorov, A., Olivola, C. Y., Dotsch, R., and Mende-Siedlecki, P. (2015). Social attributions from faces: determinants, consequences, accuracy, and functional significance. Annu. Rev. Psychol. 66, 519–545. doi: 10.1146/annurev-psych-113011-143831

Tsai, J. L., Ang, J. Y. Z., Blevins, E., Goernandt, J., Fung, H. H., Jiang, D., et al. (2016). Leaders’ smiles reflect cultural differences in ideal affect. Emotion 16, 183–195. doi: 10.1037/emo0000133

Ueda, Y., and Yoshikawa, S. (2018). Beyond personality traits: which facial expressions imply dominance in two-person interaction scenes? Emotion 18, 872–885. doi: 10.1037/emo0000286

Valentine, K. A., Li, N. P., Penke, L., and Perrett, D. I. (2014). Judging a man by the width of his face: the role of facial ratios and dominance in mate choice at speed-dating events. Psychol. Sci. 25, 806–811. doi: 10.1177/0956797613511823

Walker, M., Jiang, F., Vetter, T., and Sczesny, S. (2011). Universals and cultural differences in forming personality trait judgments from faces. Soc. Psychol. Personal. Sci. 2, 609–617. doi: 10.1177/1948550611402519

Wang, D. F., and Cui, H. (2003). Processes and preliminary results in the construction of the Chinese personality scale (QZPS). Acta Psychol. Sin. 35, 127–136.

Wang, H., Han, C., Hahn, A. C., Fasolt, V., Morrison, D. K., Holzleitner, I. J., et al. (2019). A data-driven study of Chinese participants' social judgments of Chinese faces. PLoS One 14:e0210315. doi: 10.1371/journal.pone.0210315

Wang, Z., He, X., and Liu, F. (2015). Examining the effect of smile intensity on age perceptions. Psychol. Rep. 117, 188–205. doi: 10.2466/07.PR0.117c10z7

Wang, Z., Mao, H., Li, Y. J., and Liu, F. (2017). Smile big or not? Effects of smile intensity on perceptions of warmth and competence. J. Consum. Res. 43, 787–805. doi: 10.1093/jcr/ucw062

Westerman, D. L., Lanska, M., and Olds, J. M. (2015). The effect of processing fluency on impressions of familiarity and liking. J. Exp. Psychol. Learn. Mem. Cogn. 41, 426–438. doi: 10.1037/a0038356

Willis, J., and Todorov, A. (2006). First impressions: making up your mind after a 100-ms exposure to a face. Psychol. Sci. 17, 592–598. doi: 10.1111/j.1467-9280.2006.01750.x

Wilson, J. P., and Rule, N. O. (2015). Facial trustworthiness predicts extreme criminal-sentencing outcomes. Psychol. Sci. 26, 1325–1331. doi: 10.1177/0956797615590992

Wong, S. H. W., and Zeng, Y. (2017). Do inferences of competence from faces predict political selection in authoritarian regimes? Evidence from China. Soc. Sci. Res. 66, 248–263. doi: 10.1016/j.ssresearch.2016.11.002

Wu, Q., Liu, Y., Li, D., Leng, H. Z., and Jiang, Z. Q. (2020). Dimensions of personality perception from Chinese face. Psychol. Explor. 40, 177–182.

Zhang, J., Tian, L. M., and Zhang, W. X. (2012). Social competence: concepts and theoretical models. Adv. Psychol. Sci. 20, 1991–2000. doi: 10.3724/SP.J.1042.2013.01991

Keywords: happy expression, social perception, intensity, Chinese faces, trait impression

Citation: Li Y, Jiang Z, Yang Y, Leng H, Pei F and Wu Q (2021) The Effect of the Intensity of Happy Expression on Social Perception of Chinese Faces. Front. Psychol. 12:638398. doi: 10.3389/fpsyg.2021.638398

Edited by:

Fernando Barbosa, University of Porto, Portugal

Reviewed by:

Patrice Rusconi, University of Messina, Italy

Beatrice Biancardi, TELECOM ParisTech, France

Copyright © 2021 Li, Jiang, Yang, Leng, Pei and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhongqing Jiang, jzqcjj@hotmail.com; Yisheng Yang, yangys1965@163.com
