- Faculty of Special Education and Rehabilitation, University of Belgrade, Belgrade, Serbia

Paralinguistic comprehension and production of emotions in communication involve recognizing and interpreting emotional states from facial expressions, prosody, and intonation. In the scientific literature, these skills are related primarily to receptive language abilities, although some authors have also found correlations with intellectual abilities and acoustic features of the voice. The aim of this study was therefore to investigate which of these variables (receptive language ability, acoustic features of voice, intellectual ability, and social-demographic variables) is the most relevant predictor of paralinguistic comprehension and production of emotions in communication in adults with moderate intellectual disabilities (MID). The sample included 41 adults with MID, 20–49 years of age (M = 34.34, SD = 7.809), 29 of whom had MID of unknown etiology and 12 of whom had Down syndrome. All participants were native speakers of Serbian. Paralinguistic comprehension and production skills were assessed with two subscales of The Assessment Battery for Communication: Paralinguistic comprehension of emotions in communication and Paralinguistic production of emotions in communication. The hypothesized predictor variables were assessed as follows: receptive language abilities with the Peabody Picture Vocabulary Test, acoustic features of voice with the Computerized Speech Lab (“Kay Elemetrics” Corp., model 4300), and intellectual ability with Raven’s Progressive Matrices. Hierarchical regression analysis was applied to investigate the extent to which the proposed variables predict paralinguistic comprehension and production of emotions in communication, as dependent variables.
The results showed that only receptive language skills had statistically significant predictive value for paralinguistic comprehension of emotions (β = 0.468, t = 2.236, p < 0.05), while the factor related to voice frequency and interruptions, from the domain of acoustic voice characteristics, had predictive value for paralinguistic production of emotions (β = 0.280, t = 2.076, p < 0.05). Thus, in adults with MID, this study indicates that voice and language are more important than intellectual abilities for understanding and producing emotions.

Introduction

According to DSM-5 (American Psychiatric Association, 2013), intellectual disability (ID) is characterized by significant limitations in both intellectual functioning and adaptive behavior, manifested in conceptual, social, and practical adaptive skills. The social aspect of adaptive skills includes, among other things, empathy, social-emotional reasoning, and establishing interpersonal interactions (American Psychiatric Association, 2013). The ability to recognize and produce emotions contributes to successful social adaptation and facilitates coping in a social environment (Kashani-Vahid et al., 2018). Conversely, difficulties in understanding emotions may hinder the social adaptation and integration of people with ID (Trentacosta and Fine, 2010) and cause aggressive outbursts (Matheson and Jahoda, 2005). People with ID usually have difficulties in recognizing emotions, and these difficulties persist into adulthood (Scotland et al., 2015, 2016). Persistent problems in understanding emotions also affect finding and keeping a job, self-esteem, and thus the overall quality of life of these people (Banks et al., 2010). Given these adverse effects of reduced emotion recognition abilities in people with ID, research aimed at identifying the predictors of these abilities may be useful, as it may yield guidelines for targeted and effective interventions. Such research is also increasingly important for training automatic emotion recognition models, i.e., for creating assistive technologies that facilitate the treatment of children and adults with neurodevelopmental disorders (Ma et al., 2019; Martinez-Martin et al., 2020; Landowska et al., 2022).

Paralinguistic elements include various sounds, tones, crying, laughing, sobbing, speech rate and pitch, rhythm, and intonation (Rot, 2004), as well as facial expressions (e.g., in the eye and lip regions, such as widening of the eyes) (Angeleri et al., 2012; Tomić, 2014). They answer the question: “How was something said?” Paralinguistic comprehension of emotions can therefore be viewed as the ability to accurately recognize and interpret an emotional state from non-verbal cues, such as facial expressions, prosody, and/or body language (Angeleri et al., 2012, 2016). Paralinguistic production of emotions, on the other hand, involves expressing emotions through non-linguistic aspects of speech, such as facial expressions and prosody (Angeleri et al., 2012, 2016). Many studies show that people with ID perform worse than typically developing people on tasks involving paralinguistic recognition, comprehension, and production of emotions (Hippolyte et al., 2008; Scotland et al., 2015, 2016; Murray et al., 2019; McKenzie et al., 2021). Several hypotheses could explain these difficulties (e.g., Albanese et al., 2010; Beck et al., 2012; Mazzoni et al., 2020). However, it is still not clear which abilities these skills are most closely related to, in both typically developing people and people with ID.

A deficit in language skills, especially receptive skills, may be related to difficulties in understanding emotions in people with ID. Emotion recognition draws on knowledge of emotions acquired from life experience, just as receptive vocabulary draws on knowledge of concepts acquired from experience (Beck et al., 2012). Joyce et al. (2006) showed that adults with ID are better at recognizing than producing emotions, and that these abilities are related to receptive language skills. In addition, some studies report a significant positive correlation between receptive language skills and recognizing certain emotions from facial expressions in adults with Down syndrome (DS), whereas no such correlation was found in typically developing adults (Hippolyte et al., 2008).

The literature on prosodic features of voice in people with ID is less extensive than that on language skills. Still, people with ID show certain differences in acoustic voice characteristics compared to typically developing people, both in childhood and in adulthood (Lee et al., 2009; Amadó et al., 2016; Becker et al., 2017; O’Leary et al., 2019). Studies on typically developing people show that acoustic voice characteristics [e.g., pitch, fundamental frequency (F0), F0 contour, jitter, intensity, attack, pauses] are significant factors in recognizing emotions (Scherer et al., 1991; Banse and Scherer, 1996; Juslin and Laukka, 2003). Numerous studies have examined how listeners recognize emotions from acoustic voice characteristics when trained actors express emotional states while reading syllables or meaningless texts (e.g., Banse and Scherer, 1996). Basic emotions (happiness, sadness, anger, surprise, fear, and disgust) are best recognized when the actors expressing them belong to the same culture as the listeners (Juslin and Laukka, 2003; Pell et al., 2009). In addition, research shows that non-verbal vocalizations (e.g., laughter, screams, exclamations) contribute to recognizing different emotions in given speech situations (Sauter and Scott, 2007; Simon-Thomas et al., 2009; Sauter et al., 2010; Laukka et al., 2013). These findings from the typical population raise the question of whether, and to what extent, acoustic characteristics affect paralinguistic recognition and production in people with ID.

Limitations in overall intellectual functioning may also contribute to difficulties in understanding and producing emotions in people with ID, as some authors in this field have indicated (Moore, 2001). IQ is also negatively correlated with processing emotional information in people with borderline intellectual functioning (Smirni et al., 2019). One explanation for such correlations is that emotional and cognitive intelligence share a neuro-functional-anatomical network (Barbey et al., 2014, according to Smirni et al., 2019). Higher non-verbal IQ was also associated with better emotional understanding in participants with autism across different levels of cognitive functioning (Salomone et al., 2019). The relationship between non-verbal intelligence and understanding of emotions exists, but it is not simple, and it is mediated by age (Albanese et al., 2010). Matheson and Jahoda (2005) showed that emotion recognition is associated with receptive language skills but not with IQ. However, some authors (Beck et al., 2012) suggest that intellectual abilities could also account for the relation between receptive vocabulary and emotion recognition, and they propose further examination of the role of intelligence in the development of emotional competencies.

Age is another significant variable in recognizing emotions. Older typically developing adults perform worse on emotion recognition tasks than younger adults (Sullivan et al., 2015). A meta-analysis of the relationship between intelligence and emotion recognition in adults from the typical population found that the relationship exists but that age also plays a significant role in it: the stronger connection between intelligence and emotion recognition in older adults may be explained by the cognitive dedifferentiation hypothesis, which holds that the structure of an individual’s cognitive abilities becomes less differentiated in old age (Schlegel et al., 2020). Furthermore, some studies show that older adults produce emotions more slowly than younger adults (Dupuis and Pichora-Fuller, 2010). Gender differences have also been found: women are generally more successful in recognizing emotions than men (Sullivan et al., 2015; Gonçalves et al., 2018). As in the typical population, the ability to recognize emotions decreases with age in adults with ID (Matheson and Jahoda, 2005). An older study (McKenzie et al., 2000) on a sample of adults with mild and moderate ID showed that younger participants and those with milder ID were better at recognizing emotions.

So far, research on paralinguistic comprehension and production has focused more on people with autism spectrum disorder than on people with ID. Compared to language and cognitive abilities, social-emotional skills in this population have been examined less frequently (Pochon et al., 2017). Research has mainly compared emotion recognition from facial expressions and prosodic elements of speech in people with ID with that of the typically developing population, mostly at a younger age (Fernández-Alcaraz et al., 2010; Cebula et al., 2017; Pochon et al., 2017; Martínez-González and Veas, 2019), but also in older people (Roch et al., 2020; Andrés-Roqueta et al., 2021).

Some studies have examined paralinguistic recognition and production of emotions in relation to certain cognitive aspects in children and adolescents (Joyce et al., 2006; Amadó et al., 2016; Djordjevic et al., 2016a; Pochon et al., 2017; Barisnikov et al., 2020). However, we are not aware of studies examining which of these aspects predict these abilities in people with ID. Previous studies have associated the difficulties in understanding and producing emotions found in people with moderate ID (MID) with deficits in language and cognitive abilities (Djordjevic et al., 2016a), but we did not find any studies that examined the predictors of these abilities in adults with MID.

Based on this literature review, we considered it worthwhile to examine the relation between these skills and acoustic features of voice, intellectual ability, and receptive language skills in adults with ID. The topic is also timely: through social distancing and limited social contact, the pandemic has had global effects on clinical populations, which increases the practical value of this study for this vulnerable population.

The aim of this study was to determine which of the variables described above (receptive language skills, voice acoustics, intelligence, and the social-demographic variables gender, age, etiology, and level of intellectual functioning) best predict paralinguistic comprehension and production of emotions in communication in adults with MID.

Materials and Methods

Sample

The sample included 41 adults with MID, 20–49 years of age (M = 34.34, SD = 7.809): 17 (41.5%) male and 24 (58.5%) female participants. With regard to etiology, the sample included participants with MID of unknown etiology (N = 29) and participants with DS (N = 12). These two subgroups did not differ significantly in gender [t(39) = 0.016, p > 0.05] or age [t(39) = −2.018, p > 0.05]. All participants were native speakers of Serbian. After obtaining initial consent from parents, we obtained data on the participants’ level of intellectual functioning from their medical records, which confirmed that each participant included in the sample functioned at the MID level. The records also showed that different psychiatrists had used different assessment tests at different times and referred to different diagnostic classifications. Therefore, we used only the level of ID, not the IQ data, in this study.

Procedure

Prior to conducting the research, we obtained the approval of the Ethics Committee of the Faculty of Special Education and Rehabilitation, University of Belgrade (no. 109/1). A request to conduct the research was then sent to the managers of three day-care centers for adults with disabilities in Belgrade. The request included a detailed explanation of the aim, sample, instruments, and procedure of the research. After the managers consented, the request was forwarded to special educators employed in the day-care centers, who contacted the users’ parents.

The participants’ parents or guardians who were contacted voluntarily signed informed consent for the participants to take part in the research, after which the persons with MID also gave their own consent. Only participants who gave their consent were included, and they were told that they could withdraw from the research at any time. First, a triage assessment of the participants was conducted. Based on anamnestic data, the inclusion criteria were: diagnosed moderate ID; absence of autism spectrum disorder characteristics; absence of associated psychiatric disorders and/or illnesses that could affect voice characteristics; and age between 18 and 55 (to exclude the influence of age-related voice changes).

The research was conducted in the day-care centers for adults with disabilities in Belgrade whose services the participants used. The assessment was conducted in a speech therapy room, isolated from noise and distractions.

The participants were first given an explanation of the nature of the task. The Peabody test and Raven’s matrices were presented to participants as pictures.

The Paralinguistic scale was presented to participants on a computer. Participants answered the examiner’s questions about the presented videos, and the examiner recorded their answers on a special answer sheet.

Phonation of the vowel /a/ for the acoustic analysis was recorded directly on the computer through a microphone placed at a fixed distance from the participant’s mouth.

Research Instruments

Assessment of Paralinguistic Recognition of Emotions

The Paralinguistic scale from The Assessment Battery for Communication (Sacco et al., 2008), which consists of the Paralinguistic comprehension of emotions and Paralinguistic production of emotions subscales, was used to assess paralinguistic comprehension and production of emotions. The complete battery was translated from Italian into Serbian with a double-blind back-translation method: a professor translated the Italian version into Serbian, and a court interpreter then translated the Serbian version back into Italian. The two versions were then compared, and the translation was finalized. Of the translated battery, we used only these two subscales.

Paralinguistic comprehension of emotions includes videos in which actors express emotions while speaking a fictional language, and participants have to recognize those emotions (e.g., the actor makes angry hand gestures and grimaces while speaking the fictional language, and the question is: “In your opinion, which emotion is the actor expressing, how does he/she feel?”). The participant then chooses one of five given answers (e.g., “happy, surprised, angry, sad, something else”). The videos, presented on a laptop, lasted between 20 and 25 s. Each item was scored 1 if the answer was correct and 0 if it was incorrect. This subscale includes eight items, for a maximum total score of eight points. Paralinguistic production of emotions includes eight items in which participants respond to the examiner’s request (e.g., “Ask me what time it is. Ask me as if you were upset.”). The items involved different emotions (bored, upset, disturbed, happy, angry, sad, and scared). The same scoring principle applied to this subscale, whose maximum total score is also eight points (see Supplementary Material).

According to the authors of the Paralinguistic scale, its total Cronbach’s alpha is 0.70 (Bosco et al., 2012). The scale has previously been used in the Serbian-speaking area in the population of people with ID, with satisfactory Cronbach’s alpha values of 0.94 for the paralinguistic production subscale and 0.79 for the paralinguistic comprehension subscale (Djordjevic et al., 2016b). In our study, alpha was 0.617 for the paralinguistic comprehension subscale and 0.767 for the paralinguistic production subscale.
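The reliability values above are Cronbach’s alpha coefficients. As an illustration only (not the authors’ analysis code), alpha can be computed from a participants × items matrix of item scores, such as the 0/1 scores on an eight-item subscale, as α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the row-per-participant data layout are assumptions of this sketch.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants x items matrix of item scores.

    scores: list of rows, one row per participant and one column per item
    (e.g., 0/1 scores on an eight-item subscale).
    """
    k = len(scores[0])                        # number of items
    items = list(zip(*scores))                # transpose: one tuple per item
    sum_item_var = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in scores])  # variance of total scores
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Hypothetical 0/1 scores of four participants on three items.
print(cronbach_alpha([[1, 1, 1], [0, 0, 0], [1, 1, 0], [0, 0, 0]]))
```

Because the same variance denominator appears in both parts of the ratio, using population or sample variances gives the same alpha.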

Assessment of Receptive Language Skills

The Peabody Picture Vocabulary Test, fourth edition (PPVT-4; Dunn and Dunn, 2007), was used to assess receptive language skills. The test includes 228 items, divided into 19 sets of 12 words. On each item, participants are shown four pictures and instructed to point to the picture that corresponds to the word said by the examiner. Two practice trials are given first, followed by testing in sets. An electronic version of the test was used in this research, with the pictures shown on a computer screen. Testing was stopped when a participant gave eight incorrect answers in one set. The raw score was obtained by subtracting the total number of incorrect answers from the total number of items administered.
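The discontinue rule and raw-score calculation described above can be sketched as follows. This is a simplified, hypothetical illustration: it assumes testing starts at item 1 and ignores any age-based start points or basal rules in the published test manual, and the function name and parameters are invented for the example.

```python
def ppvt_raw_score(responses, set_size=12, ceiling_errors=8):
    """Hypothetical sketch of a set-based discontinue rule and raw score.

    responses: booleans in item order (True = correct). Items are given set
    by set, and testing stops after the first set containing at least
    `ceiling_errors` incorrect answers. Assumes testing starts at item 1.
    """
    administered = 0
    errors = 0
    for start in range(0, len(responses), set_size):
        current_set = responses[start:start + set_size]
        set_errors = sum(1 for correct in current_set if not correct)
        administered += len(current_set)
        errors += set_errors
        if set_errors >= ceiling_errors:
            break                             # ceiling set reached: stop testing
    # Raw score = items administered minus incorrect answers.
    return administered - errors
```

Responses recorded after the ceiling set are never counted, which mirrors stopping the test at that point.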

The Peabody test has high internal reliability, ranging from 0.92 to 0.98 (Dunn and Dunn, 2007). It has previously been used in the Serbian-speaking area in the population of people with ID, with satisfactory reliability (Djordjevic et al., 2018). In our study, its internal reliability was 0.995.

Assessment of Acoustic Voice Characteristics

The Multi-Dimensional Voice Program (MDVP) of the Computerized Speech Lab (“Kay Elemetrics” Corp., model 4300) was used to analyze acoustic voice characteristics. This program provides a detailed graphical and numerical presentation of 33 parameters. The examiner instructed the participants to phonate the vowel /a/ calmly and spontaneously for 3–4 s. In accordance with the author’s recommendations, this procedure was repeated three times in order to select the best-quality voice sample. A Sony ECM-T150 microphone was placed at a distance of 5 cm from the participant’s mouth, and the signal was recorded directly on the computer. The following acoustic parameters were analyzed: frequency variability parameters (F0, average fundamental frequency; Jitt, jitter percent; and PPQ, pitch perturbation quotient), amplitude variability parameters (Shim, shimmer percent; vAm, peak-amplitude variation; and APQ, amplitude perturbation quotient), a voice interruption parameter (DVB, degree of voice breaks), and noise and tremor estimation parameters (NHR, noise-to-harmonic ratio; VTI, voice turbulence index; and SPI, soft phonation index).
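To make the frequency, perturbation, and voice-break parameters more concrete, the sketch below computes textbook versions of mean F0, relative period-to-period perturbation (a jitter percentage when applied to pitch periods, a shimmer percentage when applied to cycle peak amplitudes), and a DVB-style percentage of voice breaks. It assumes that pitch periods, amplitudes, and break durations have already been extracted from the recording. It is not MDVP’s implementation, which performs its own pitch extraction and may differ in detail, and all function names are hypothetical.

```python
from statistics import mean


def mean_f0(periods):
    """Average fundamental frequency (Hz) from pitch periods given in seconds."""
    return mean(1.0 / t for t in periods)


def relative_perturbation(values):
    """Mean absolute difference between consecutive values divided by the mean
    value, in percent. Applied to pitch periods this gives a jitter percentage;
    applied to cycle peak amplitudes it gives a shimmer percentage."""
    diffs = [abs(a - b) for a, b in zip(values, values[1:])]
    return 100.0 * mean(diffs) / mean(values)


def degree_of_voice_breaks(break_durations, total_duration):
    """DVB-style measure (%): total duration of voice breaks relative to the
    duration of the whole voice sample (both in seconds)."""
    return 100.0 * sum(break_durations) / total_duration


# Hypothetical periods (s) of five glottal cycles from a sustained /a/.
periods = [0.0050, 0.0051, 0.0049, 0.0050, 0.0052]
print(mean_f0(periods), relative_perturbation(periods))
```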

The Multi-Dimensional Voice Program is often used for voice assessment in different populations of participants in the Serbian-speaking area (Petrovic-Lazic et al., 2009, 2014).

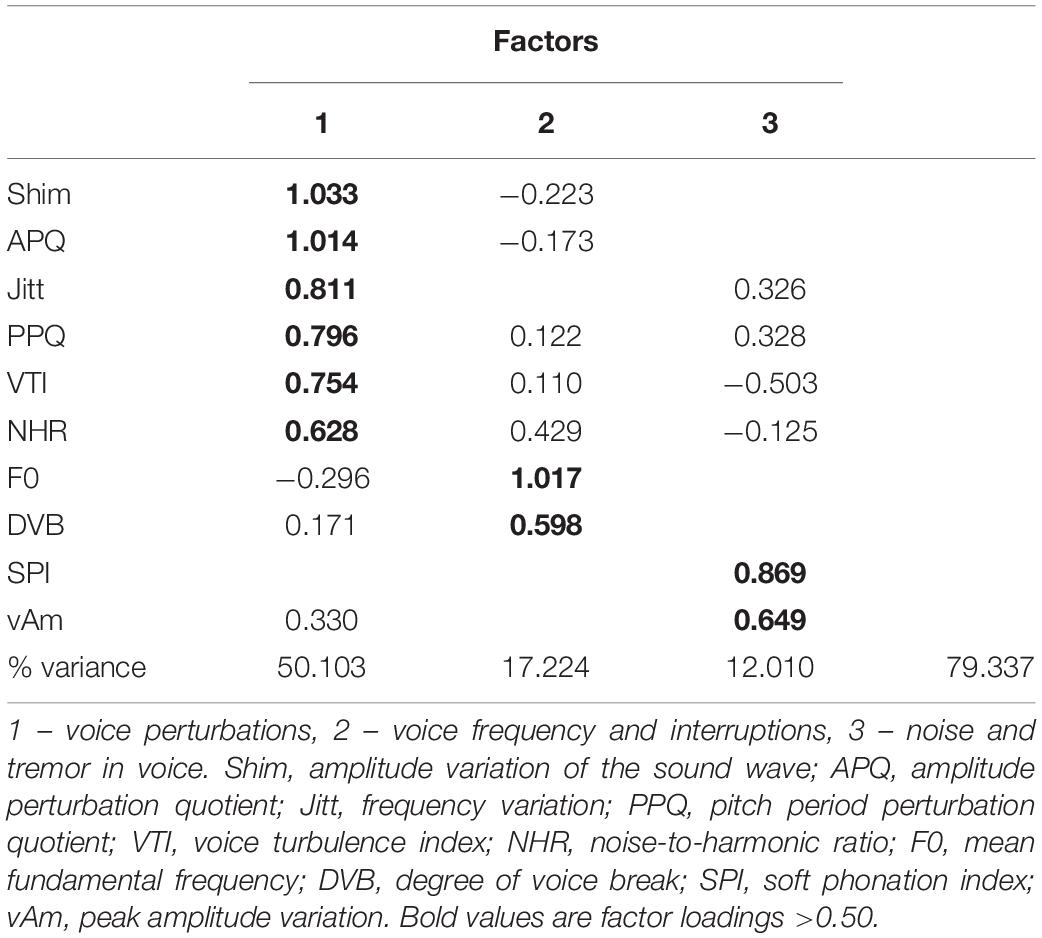

Factor analysis with Promax rotation was used to extract latent dimensions summarizing the parameters of acoustic voice characteristics (Table 1). Principal component analysis, retaining only components with eigenvalues greater than 1, extracted three factors, which together explain 80% of the variance in individual differences in voice.

Table 1. Results of the principal component analysis of acoustic voice characteristics with Promax rotation.

These results agree with the scree plot, which also suggests retaining three dimensions. The first factor includes Shim, APQ, Jitt, PPQ, VTI, and NHR (voice perturbations) and explains 50% of the variance. The second factor includes F0 and DVB (voice frequency and interruptions) and explains an additional 17% of the variance. The third factor includes SPI and vAm (noise and tremor in voice) and explains an additional 12% of the variance. These three factors (voice perturbations, voice frequency and interruptions, and noise and tremor in voice) were used in further analyses.
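The retention rule described above (keep components whose eigenvalue of the correlation matrix exceeds 1) and the share of variance per component can be sketched with NumPy. This is an illustration under stated assumptions, not the authors’ analysis: it covers only extraction and the eigenvalue criterion and omits the Promax rotation, which changes the loadings but not the number of components retained under this rule. The function name `kaiser_components` is hypothetical.

```python
import numpy as np


def kaiser_components(data):
    """Principal-component extraction with the eigenvalue-greater-than-1 rule.

    data: array of shape (participants, variables).
    Returns eigenvalues of the correlation matrix (descending), the number of
    components retained, and the proportion of total variance per component.
    """
    corr = np.corrcoef(data, rowvar=False)    # variables are columns
    eigvals = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh sorts ascending; reverse
    n_keep = int(np.sum(eigvals > 1.0))
    # The trace of a correlation matrix equals the number of variables,
    # so each eigenvalue divided by the total is that component's variance share.
    explained = eigvals / eigvals.sum()
    return eigvals, n_keep, explained
```

Summing `explained[:n_keep]` gives the cumulative proportion of variance for the retained components, the quantity reported above as 80%.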

The Cronbach’s alpha coefficient for the acoustic parameters used in our research was 0.856.

Assessment of Intellectual Functioning

Raven’s Progressive Matrices (Raven and Raven, 1998) were used to assess intellectual functioning. This instrument consists of non-verbal tasks that measure the general intelligence factor. Each task presents a pattern with one segment missing; the participant has to discover the rule by which the pattern is arranged and choose the missing segment from several given options. The test consists of 60 tasks arranged in five sets, ordered by difficulty. The sets are organized by topic (supplementing, finding analogy, changing, permutation, and division). An electronic version of the test was used in this research. It was first explained to participants that an element was missing at the top of the page and that the answers were given at the bottom of the page. Participants pointed with a finger to the answer they selected, and its number was recorded. Each participant first completed a practice trial, after which the assessment began. The number of correct answers gave the total raw score, which indicates the level of intellectual functioning.

The Cronbach’s alpha coefficient for this scale in our research was 0.815.

Results

Participants’ Results on All Applied Scales

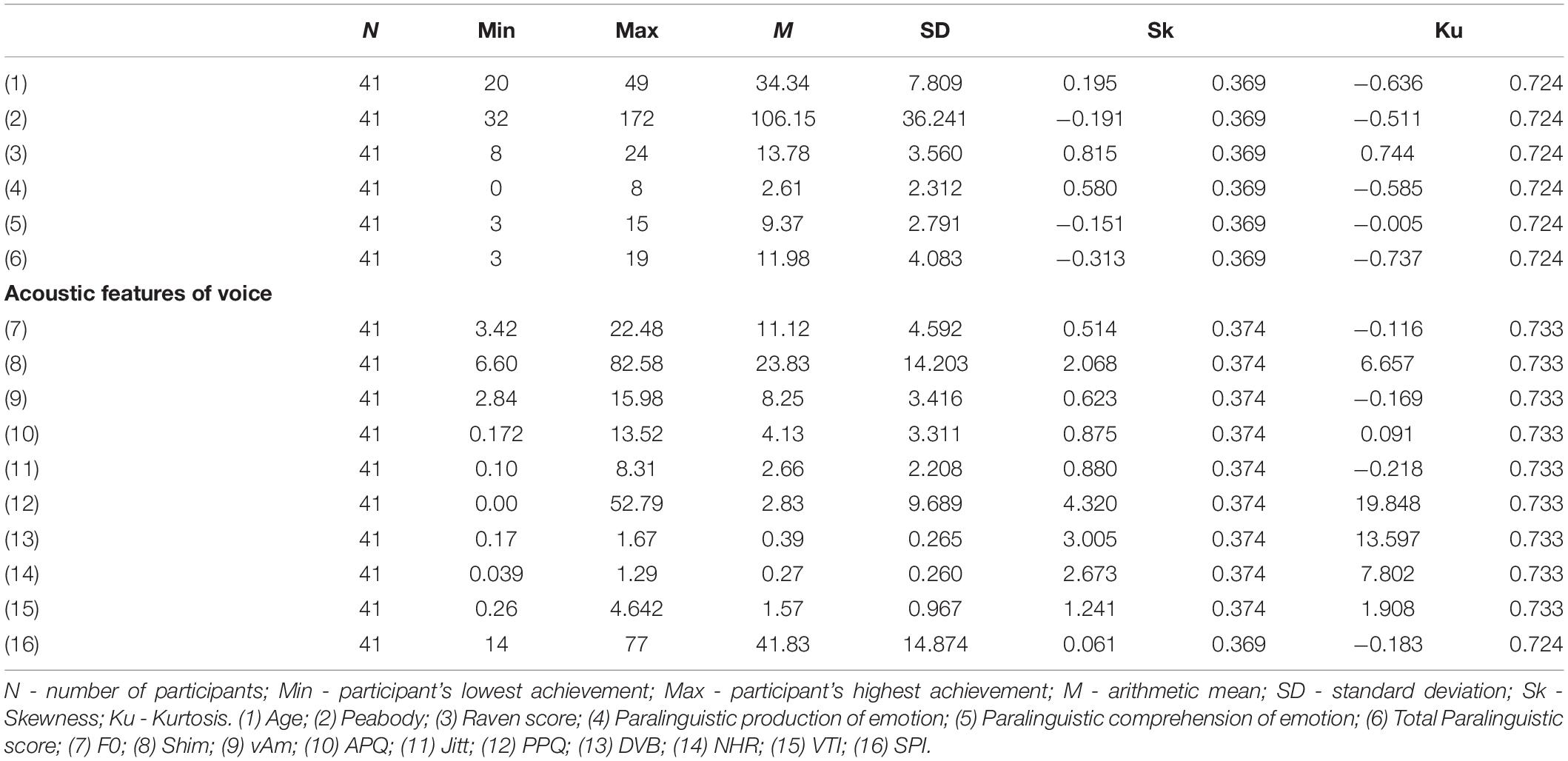

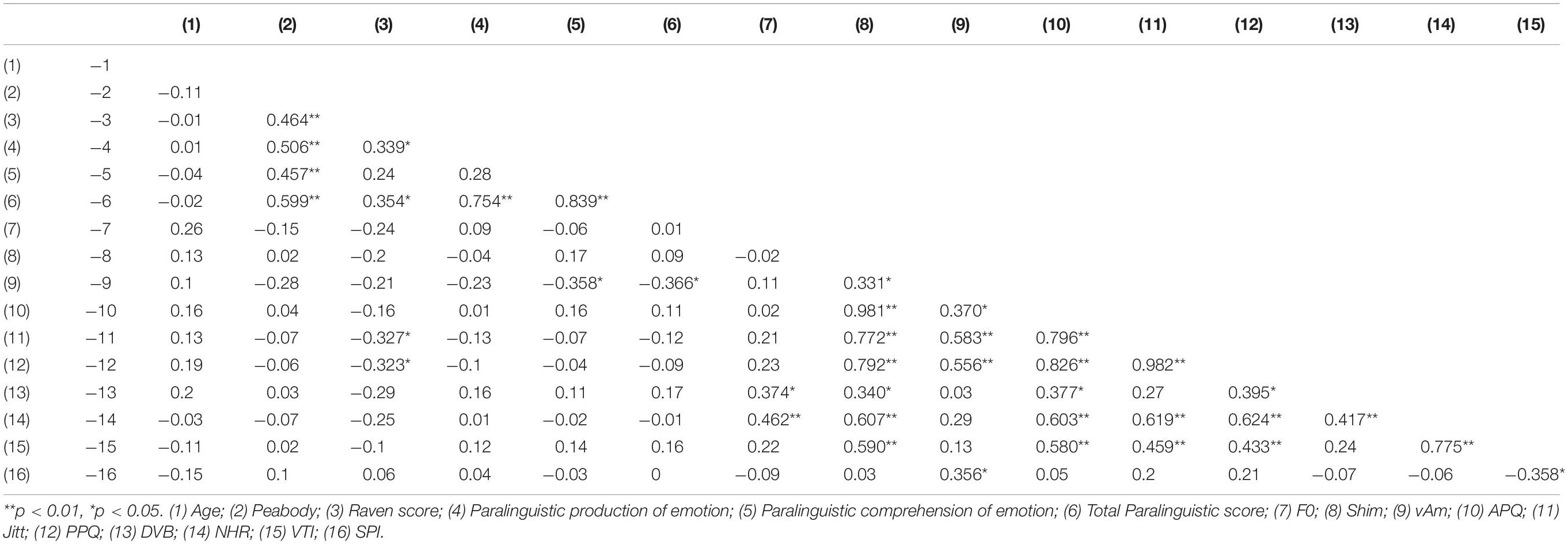

Table 2 shows descriptive statistics of participants’ achievements in all analyzed variables. The rows show the variables, and the columns show the number of participants who answered the questions, the score range, mean, standard deviation, skewness and its standard error, and kurtosis and its standard error. Raven’s progressive matrices scores were within the expected range for the examined population. Skewness and kurtosis values that were large relative to their standard errors, indicating departures from a normal distribution, were observed for certain acoustic voice variables: Shim, DVB, NHR, and PPQ (Table 2).
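Judging skewness and kurtosis against their standard errors, as in Table 2, is commonly done by dividing each statistic by its standard error. The sketch below uses the standard-error formulas commonly applied to sample skewness and excess kurtosis; the 1.96 cut-off is an assumed rule of thumb, since the text does not state which criterion was used, and the function names are hypothetical.

```python
from math import sqrt


def se_skewness(n):
    """Standard error of sample skewness for n observations."""
    return sqrt(6.0 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))


def se_kurtosis(n):
    """Standard error of sample excess kurtosis for n observations."""
    return 2.0 * se_skewness(n) * sqrt((n * n - 1.0) / ((n - 3) * (n + 5)))


def departs_from_normality(skewness, kurtosis, n, crit=1.96):
    """Flag skewness and kurtosis whose ratio to their standard error exceeds
    `crit` in absolute value (the cut-off is an assumed rule of thumb)."""
    return (abs(skewness / se_skewness(n)) > crit,
            abs(kurtosis / se_kurtosis(n)) > crit)
```

For n = 41, these formulas give standard errors of roughly 0.37 for skewness and 0.72 for kurtosis.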

Table 3 shows the correlation coefficients of all variables used. The rows show the numbered variables, whose names are given in the column headings, and the matrix cells show Spearman’s correlation coefficients. Variables from the same domain correlate highly with each other: the acoustic voice characteristics are highly intercorrelated, paralinguistic production and comprehension of emotions are highly interrelated, and Raven and Peabody scores are highly interrelated.
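Spearman’s coefficient, reported in Table 3, is Pearson’s correlation computed on ranks, with tied values receiving the average of the ranks they span. The pure-Python sketch below is illustrative only, and its function names are hypothetical; in practice a statistics library would normally be used.

```python
def average_ranks(values):
    """Ranks starting at 1; tied values receive the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1            # sorted positions i..j hold ranks i+1..j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

Because only ranks enter the calculation, any monotonic relationship yields a coefficient of 1 or −1, which makes Spearman’s coefficient less sensitive to the non-normal acoustic variables noted above.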

Predictors of Paralinguistic Comprehension of Emotions

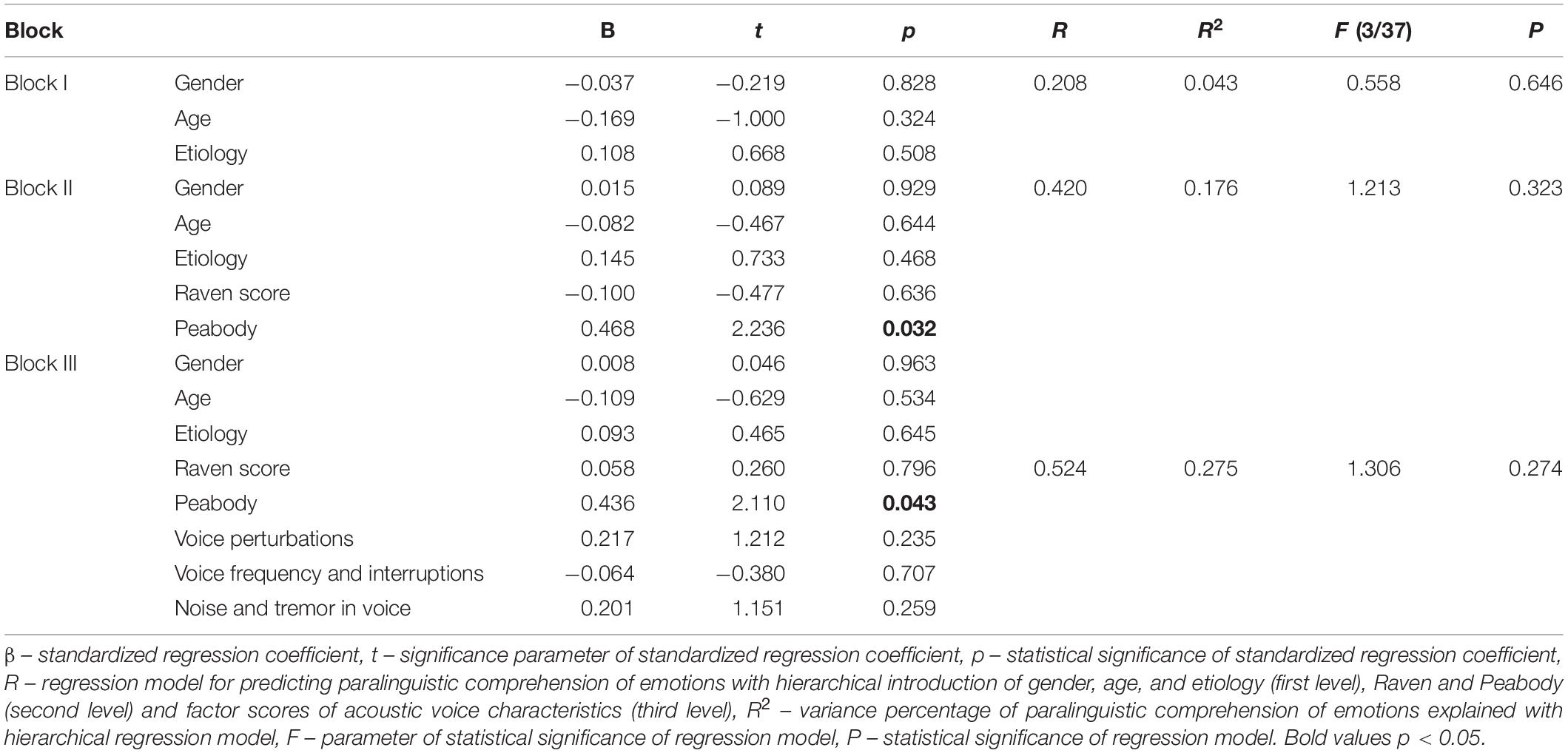

Hierarchical regression analysis was used to determine the predictors of paralinguistic comprehension of emotions (Table 4). This analysis evaluated whether paralinguistic comprehension of emotions could be predicted from the set of variables used in the research (gender, age, etiology, level of intellectual functioning, receptive language skills) and from the three factors of acoustic voice characteristics (voice perturbations, voice frequency and interruptions, and noise and tremor in voice). The predictors were entered hierarchically in blocks. The first block included gender, age, and etiology. The second block added receptive language skills and level of intellectual functioning to the variables from the first block. The third block added the acoustic voice characteristics, grouped according to the results of the factor analysis (Table 1).

Table 4. Results of hierarchical regression analysis for predicting paralinguistic comprehension of emotions.

The regression analysis yielded two models. The first explains about 4% of the criterion variance [R2 = 0.043, F(3/37) = 0.558, P > 0.05], and the second about 17% [R2 = 0.176, F(3/37) = 1.213, P > 0.05]; neither model was statistically significant overall. Nevertheless, receptive language skills proved to be a significant individual predictor of paralinguistic comprehension of emotions (β = 0.468, t = 2.236, p < 0.05).
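The block-wise logic of hierarchical regression can be sketched with NumPy: predictors are entered in successive blocks, R² is computed for each cumulative model, and the R² change for a block is tested with an F statistic whose degrees of freedom are the number of predictors added (df1) and n − k − 1, where k is the number of predictors in the model so far (df2). Standardized β coefficients are obtained by fitting the model to z-scored variables. This is a hypothetical sketch, not the authors’ analysis; it assumes categorical predictors such as gender or etiology are already dummy-coded, and p-values for the F-change (e.g., via `scipy.stats.f.sf`) are not shown.

```python
import numpy as np


def r_squared(X, y):
    """R^2 of an ordinary least-squares fit of y on X, with an intercept added."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    ss_res = resid @ resid
    ss_tot = ((y - y.mean()) ** 2).sum()
    return 1.0 - ss_res / ss_tot


def hierarchical_regression(blocks, y):
    """Enter predictor blocks in order and report, for each step:
    (R^2, R^2 change, F-change, df1, df2).

    blocks: list of 2-D arrays of shape (participants, predictors_in_block).
    """
    n = len(y)
    X = np.empty((n, 0))
    k, prev_r2, steps = 0, 0.0, []
    for block in blocks:
        X = np.column_stack([X, block])
        m = block.shape[1]                    # predictors added at this step (df1)
        k += m                                # predictors in the model so far
        r2 = r_squared(X, y)
        df2 = n - k - 1
        f_change = ((r2 - prev_r2) / m) / ((1.0 - r2) / df2)
        steps.append((r2, r2 - prev_r2, f_change, m, df2))
        prev_r2 = r2
    return steps


def standardized_betas(X, y):
    """Standardized coefficients: OLS on z-scored predictors and outcome.
    No intercept is needed because all variables are centered."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    return beta
```

With three blocks of 3, 2, and 3 predictors, `hierarchical_regression([block1, block2, block3], y)` would return one tuple per step, so the contribution of each block can be read directly from the R² change column.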

Predictors of Paralinguistic Production of Emotions

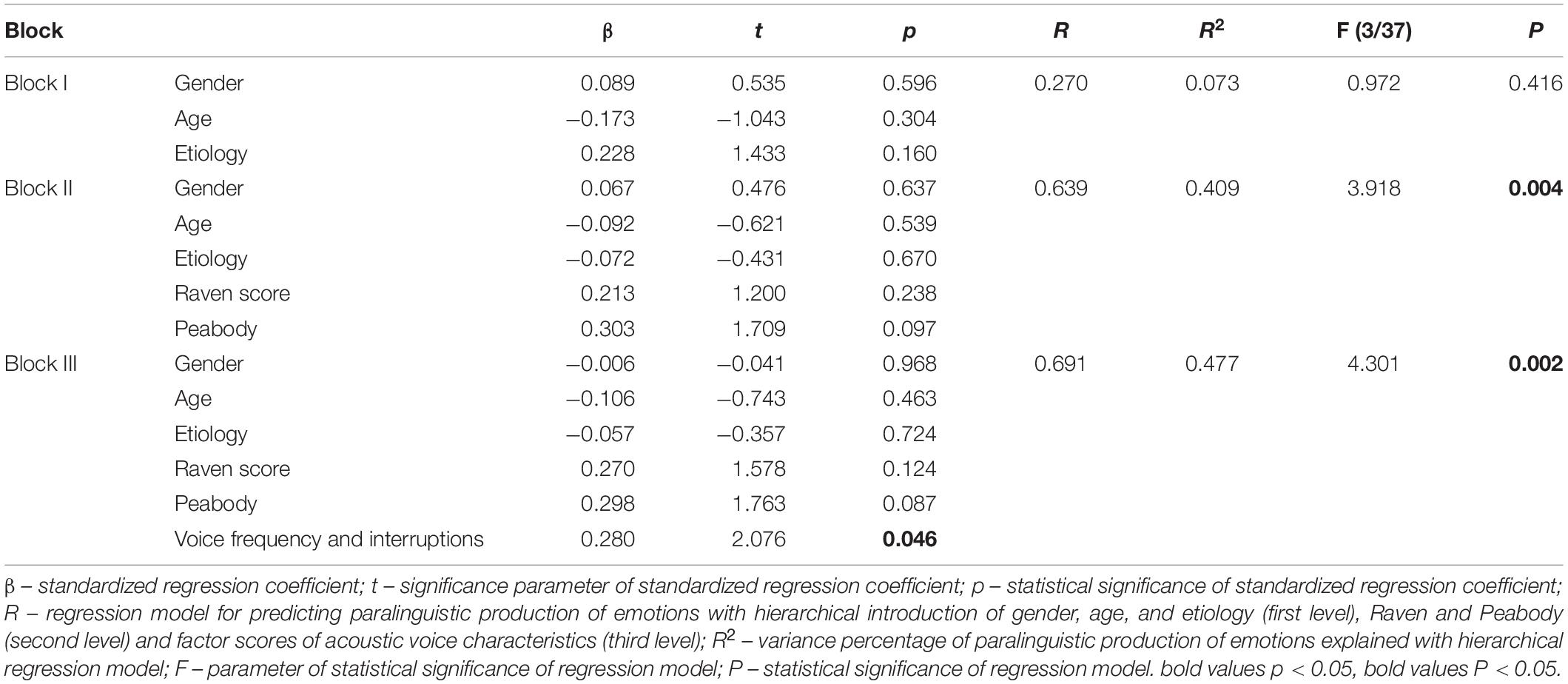

Hierarchical regression analysis was applied to determine the predictors of paralinguistic production of emotions (Table 5). This analysis evaluated whether paralinguistic production of emotions could be predicted from the same set of variables (gender, age, etiology, level of intellectual functioning, receptive language skills) and from the three factors of acoustic voice characteristics (voice perturbations, voice frequency and interruptions, and noise and tremor in voice). The predictors were entered hierarchically in blocks, in the same way as for paralinguistic comprehension of emotions.

Table 5. Results of hierarchical regression analysis for predicting paralinguistic production of emotions.

The regression analysis yielded three models. The first explains about 7% [R2 = 0.073, F(3/37) = 0.972, P > 0.05], the second about 40% [R2 = 0.409, F(3/37) = 3.918, P < 0.01], and the third 48% [R2 = 0.477, F(3/37) = 4.301, P < 0.01] of the criterion variance; the second and third models were statistically significant. In this analysis, the second factor from the domain of acoustic voice characteristics (voice frequency and interruptions) proved to be a significant predictor of paralinguistic production of emotions (β = 0.280, t = 2.076, p < 0.05).

Discussion

Earlier studies on paralinguistic comprehension and production of emotions in people with ID assumed that these abilities were related to language and cognitive deficits. However, we are not aware of studies that more precisely determine which language and cognitive domains best predict these abilities. Our study examined whether specific acoustic voice characteristics, receptive language skills, gender, age, etiology, and the level of intellectual functioning had predictive value for paralinguistic comprehension and production of emotions in adults with MID.

Regression analysis showed that, of the three hierarchical blocks of predictors, only receptive language skills, from the second block, were a significant predictor of paralinguistic comprehension of emotions. This is notable because the actors in our study spoke a non-existent language while expressing basic emotions. In addition to the required answers, the researchers recorded the participants’ comments (which were not included in this analysis): participants tried to identify the language the actor spoke (e.g., “I know, he is speaking Japanese”) or to “translate” the spoken message (e.g., “He said that he was sad and that he was crying because of that”). This suggests that the people with MID in our sample relied heavily on the meaning of the spoken messages, even when the messages were meaningless. Receptive language skills measured by the Peabody test are not constrained by expressive language abilities or working memory limitations (Loveall et al., 2016). We can thus assume that the Peabody test measures lower-level, perceptual cognitive abilities than Raven’s progressive matrices, which measure the level of intellectual functioning through higher, more complex forms of cognition. Pochon et al. (2017) showed that measures of intellectual functioning obtained with Raven’s progressive matrices did not predict the ability to understand emotions in adolescents with DS, unlike in typically developing adolescents. According to Beck et al. (2012), receptive language skills reflect coded verbal concepts gained from experience with the environment. The same authors suggest that recognizing emotions may be closely related to receptive language skills because the conceptualization of emotions may arise from lexical-semantic differentiation.
Another possible explanation is that a common conceptualization mechanism connects receptive language skills and emotion recognition (Beck et al., 2012). That these abilities depend on learning and experience may also explain why Raven’s matrices scores were not significant predictors: Raven’s matrices are non-verbal cognitive measures of general intelligence (Raven and Raven, 1998), which is considered unrelated to experience and learning (Cattell, 1971). Furthermore, Joyce et al. (2006) showed that adults with ID were better at recognizing emotions than at producing them, and that these abilities were related to receptive language skills. These findings confirmed earlier findings on the importance of receptive language skills (Dagnan et al., 2000).

With regard to paralinguistic production, the participants in our research were required to utter specific content while showing a specific emotion in the way they spoke (e.g., “Tell me to close the door. Say it angrily.”; “Ask me where the doctor is. Do it sadly.”). The regression analysis showed that the second factor in the acoustic voice characteristics domain (voice frequency and interruptions), entered in the third block, was a significant predictor of paralinguistic production of emotions. This factor reflects the mean fundamental frequency and the percentage of the sample containing voice interruptions. In one study (Mendhakar et al., 2019), these parameters differed significantly between premature and term babies. DVB is the ratio of the total duration of the parts with voice interruptions to the duration of the complete voice sample. High-risk babies had a higher degree of voice interruptions, which indicates longer pauses and a greater proportion of non-vocal components in the cry (Mendhakar et al., 2019); the authors suggest that these characteristics are due to immature laryngeal innervation in premature babies. Research into acoustic voice characteristics in adults with ID of unknown etiology and with DS shows that these people have a narrower range of frequency variability, which indicates a monotonous voice (Gautam and Singh, 2016; Corrales-Astorgano et al., 2018). As an element of prosody, F0 has a significant paralinguistic function (Ní Chasaide and Gobl, 2004). Difficulties in producing emotions may therefore be associated with the monotonous, less melodic speech of people with ID, since expressing emotions requires some intonational and melodic variability and a wider fundamental frequency range.
Structural differences at the level of the larynx are assumed to become more pronounced in adulthood (O’Leary et al., 2019) and may be related to emotion production.
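Because DVB, as defined above, is a simple ratio, it can be illustrated with a short calculation. The sketch below is hypothetical: the function name `degree_of_voice_breaks` is ours, it assumes the voice-break segments have already been detected and do not overlap, and it does not reproduce how acoustic analysis software detects voice breaks. It only expresses the total break duration as a percentage of the whole voice sample.

```python
from typing import Sequence


def degree_of_voice_breaks(break_durations: Sequence[float], sample_duration: float) -> float:
    """Return DVB as a percentage of the complete voice sample.

    Hypothetical helper (not the software used in the study):
    DVB = 100 * (total duration of voice-break segments) / (duration of the sample).
    Durations are in seconds; break segments are assumed to be pre-detected
    and non-overlapping.
    """
    if sample_duration <= 0:
        raise ValueError("sample_duration must be positive")
    if any(d < 0 for d in break_durations):
        raise ValueError("break durations cannot be negative")
    total_breaks = sum(break_durations)
    if total_breaks > sample_duration:
        raise ValueError("voice breaks cannot exceed the sample duration")
    return 100.0 * total_breaks / sample_duration


if __name__ == "__main__":
    # Two 0.25 s breaks in a 5 s voice sample: breaks cover 10% of the sample.
    print(degree_of_voice_breaks([0.25, 0.25], 5.0))  # prints 10.0
```

In the study itself, acoustic measures were obtained with the Computerized Speech Lab; the sketch only shows what the ratio expresses, so a higher value means a larger share of the sample is taken up by interruptions.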

Emotion production is a more complex ability than emotion comprehension and, in addition to perception, requires the use of non-linguistic (prosodic) elements of speech (Scherer, 2003). Receptive language skills and the level of intellectual functioning were assessed here with scales that require recognition-based responses only, without expressive production. It was therefore expected that they would not prove to be significant predictors of production. This is in line with Djordjevic et al. (2016a), who found no significant correlation between paralinguistic production of emotions and the level of intellectual functioning measured by Raven’s Progressive Matrices.

According to Beck et al. (2012), people with more developed lexical abilities are more successful in the general conceptualization of verbal concepts, and thus probably in the conceptualization of emotions. Many studies show that people with DS have deficits in the linguistic domain that are disproportionate to their cognitive capacities (Laws and Bishop, 2004; Zampini et al., 2016). Etiology might therefore be expected to influence the results, and the small sample size in this study may be the reason why etiology did not emerge as a significant predictor of the criterion variables. Etiology-specific differences have been reported elsewhere: Wishart et al. (2007) compared the ability to recognize emotions in participants with ID of different etiology and found that only children with DS performed worse than typically developing children. Hippolyte et al. (2008) also found a significant positive correlation between receptive language skills, measured by the Peabody Picture Vocabulary Test, and the recognition of certain emotions from facial expressions in adults with DS, but not in the control group of typically developing adults. The authors considered that some emotional expressions may require more complex semantic representations, but favored an explanation in terms of a specific emotional deficit characteristic of people with DS.

An older study by Leung and Singh (1998) showed that adults with ID were poorer at recognizing emotions than typically developing children. In a sample of adults with mild and moderate ID, younger participants and those with milder ID recognized emotions better (McKenzie et al., 2000). Matheson and Jahoda (2005) likewise found that the ability to recognize emotions decreased with age in adults with ID. A more balanced age structure and a larger sample might therefore have influenced the results obtained here, which future research should take into account.

Limitations

This research has several limitations. The first is sample size: future studies should include considerably more participants so that the results can be generalized. Apart from its size, a further sample-related limitation is the composition of the sample. Future studies should therefore include more participants with known etiology, classified into groups by syndrome. In addition, the tests used here require different levels of cognitive information processing, so future research could add further tests that operate at the same level of processing. Finally, when assessing people with ID, verbal tasks should be accompanied by pictographic material to support their understanding of instructions.

Only one instrument was used in this research to assess comprehension and production of emotions. Thus, conclusions should be drawn with caution and future studies should verify these results using additional instruments.

Conclusion

The results of our study showed that receptive language skills had predictive value for paralinguistic comprehension of emotions, whereas voice frequency and interruptions, from the domain of acoustic voice characteristics, predicted paralinguistic production of emotions.

As many authors have pointed out, recognizing emotions is the basis of social interaction; it is therefore important to foster the skills most closely related to the comprehension and production of emotions, so that these abilities can develop as well. Much more research in this area is needed before more precise educational and therapeutic guidelines can be established. To this end, future studies could monitor these abilities longitudinally in people with MID. More precise measures of intellectual functioning, in addition to Raven’s Progressive Matrices, should also be included in further analyses.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committee of the Faculty of Special Education and Rehabilitation, University of Belgrade (no. 109/1). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work, and approved it for publication.

Funding

This manuscript was a result of research within the projects of the Ministry of Education, Science and Technological Development of the Republic of Serbia (no. 451-03-68/2022-14).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.884242/full#supplementary-material

Footnotes

- ^ Down syndrome (DS) is the most common genetic cause of intellectual disability. Its overall prevalence ranges from 1 in 800 to 1 in 1,200. Most people with DS function within the mild to moderate ID range. Their language and communication skills are underdeveloped, primarily due to speech delay, poor articulation related to a narrow oral cavity, and difficulties in language comprehension (Agarwal Gupta and Kabra, 2013).

- ^ There are four basic ID levels: mild, moderate (MID), severe, and profound. About 10% of people with ID function at the MID level. According to the DSM-IV, persons with moderate ID have an IQ in the range of 35–49. The description of this condition indicates that these people can take care of themselves, travel to familiar places in their community, and learn basic skills related to safety and health, although their self-care requires moderate support. It can therefore be concluded that people with MID can perform most daily activities independently with occasional support (American Psychiatric Association, 2013). Although they can be successful in basic communication, they may have difficulty understanding non-verbal and subtle signals in social situations.

- ^ Day-care services in Serbia are social protection services for children and young people with physical impairment and intellectual disability. According to the current Policy, the purpose of day-care services is to improve users’ quality of life in their social environment by maintaining and developing social, psychological, and physical functions and skills, in order to enable them to live as independently as possible (the Policy was published in the “Official Gazette of RS”, No. 42/2013 of 14 May 2013, and became effective on 22 May 2013).

References

Agarwal Gupta, N., and Kabra, M. (2013). Diagnosis and management of Down syndrome. Indian J. Pediatr. 81, 560–567. doi: 10.1007/s12098-013-1249-7

Albanese, O., De Stasio, S., Chiacchio, C. D., Fiorilli, C., and Pons, F. (2010). Emotion comprehension: the impact of nonverbal intelligence. J. Genet. Psychol. 171, 101–115. doi: 10.1080/00221320903548084

Albertini, G., Bonassi, S., Dall’Armi, V., Giachetti, I., Giaquinto, S., and Mignano, M. (2010). Spectral analysis of the voice in Down syndrome. Res. Dev. Disabil. 31, 995–1001. doi: 10.1016/j.ridd.2010.04.024

Amadó, A., Serrat, E., and Vallès-Majoral, E. (2016). The role of executive functions in social cognition among children with Down syndrome: relationship patterns. Front. Psychol. 7:1363. doi: 10.3389/fpsyg.2016.01363

American Psychiatric Association (2013). Diagnostic and Statistical Manual of Mental Disorders, 5th Edn. Washington, DC: American Psychiatric Association, doi: 10.1176/appi.books.9780890425596

Angeleri, R., Bosco, F. M., Gabbatore, I., Bara, B. G., and Sacco, K. (2012). Assessment battery for communication (ABaCo): normative data. Behav. Res. Methods 44, 845–861. doi: 10.3758/s13428-011-0174

Angeleri, R., Gabbatore, I., Bosco, F. M., Sacco, K., and Colle, L. (2016). Pragmatic abilities in children and adolescents with autism spectrum disorder: a study with the ABaCo battery. Minerva Psichiatr. 57, 93–103.

Andrés-Roqueta, C., Soria-Izquierdo, E., and Górriz-Plumed, A. B. (2021). Exploring different aspects of emotion understanding in adults with Down syndrome. Res. Dev. Disabil. 114:103962. doi: 10.1016/j.ridd.2021.103962

Banks, P., Jahoda, A., Dagnan, D., Kemp, J., and Williams, V. (2010). Supported employment for people with intellectual disability: The effects of job breakdown on psychological well-being. J. Appl. Res. Intellect. Disabil. 23, 344–354. doi: 10.1111/j.1468-3148.2009.00541.x

Banse, R., and Scherer, K. R. (1996). Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636. doi: 10.1037/0022-3514.70.3.614

Barbey, A. K., Colom, R., and Grafman, J. (2014). Distributed neural system for emotional intelligence revealed by lesion mapping. Soc. Cogn. Affect. Neurosci. 9, 265–272. doi: 10.1093/scan/nss124

Barisnikov, K., Thomasson, M., Stutzmann, J., and Lejeune, F. (2020). Relation between processing facial identity and emotional expression in typically developing school-age children and those with Down syndrome. Appl. Neuropsychol. Child 9, 179–192. doi: 10.1080/21622965.2018.1552867

Beck, L., Kumschick, I. R., Eid, M., and Klann-Delius, G. (2012). Relationship between language competence and emotional competence in middle childhood. Emotion 12, 503–514. doi: 10.1037/a0026320

Becker, S., Nonn, K., Graessel, E., Becker, A. M., and Schuster, M. (2017). Voice disorders of adults with intellectual disability. JSM Commun. Disord. 1:1002.

Bosco, F. M., Angeleri, R., Zuffranieri, M., Bara, B. G., and Sacco, K. (2012). Assessment battery for communication: development of two equivalent forms. J. Commun. Disord. 45, 290–303. doi: 10.1016/j.jcomdis.2012.03.002

Cattell, R. B. (1971). Abilities: Their Structure, Growth, and Action. Boston, MA: Houghton Mifflin.

Cebula, K. R., Wishart, J. G., Willis, D. S., and Pitcairn, T. K. (2017). Emotion recognition in children with Down syndrome: influence of emotion label and expression intensity. Am. J. Intellect. Dev. Disabil. 122, 138–155. doi: 10.1352/1944-7558-122.2.138

Corrales-Astorgano, M., Escudero-Mancebo, D., and Gonzalez-Ferreras, C. (2018). Acoustic characterization and perceptual analysis of the relative importance of prosody in speech of people with Down syndrome. Speech Commun. 99, 90–100. doi: 10.1016/j.specom.2018.03.006

Dagnan, D., Chadwick, P., and Proudlove, J. (2000). Toward an assessment of suitability of people with mental retardation for cognitive therapy. Cogn. Ther. Res. 24, 627–636. doi: 10.1023/a:1005531226519

Djordjevic, M., Glumbic, N., and Brojcin, B. (2016a). Paralinguistic abilities of adults with intellectual disability. Res. Dev. Disabil. 48, 211–219. doi: 10.1016/j.ridd.2015.11.001

Djordjevic, M., Glumbic, N., and Brojcin, B. (2016b). Relation between paralinguistic skills and social skills in adults with mild and moderate intellectual disability. Spec. Edukac. Rehabil. 15, 265–285. doi: 10.5937/specedreh15-11313

Djordjevic, M., Glumbic, N., and Brojcin, B. (2018). Differences between pragmatic abilities of adults with down syndrome and persons with intellectual disability of unknown etiology - preliminary research. Belgrade Sch. Spec. Educ. Rehabil. 24, 29–40.

Djordjevic, M., Glumbic, N., and Memisevic, H. (2020). Socialization in adults with intellectual disability: the effects of gender, mental illness, setting type, and level of intellectual disability. J. Mental Health Res. Intellect. Disabil. 13, 364–383. doi: 10.1080/19315864.2020.1815914

Dunn, L. M., and Dunn, D. M. (2007). Peabody Picture Vocabulary Test (PPVT-4). New York, NY: Pearson Assessments, doi: 10.1037/t15144-000

Dupuis, K., and Pichora-Fuller, M. K. (2010). Use of affective prosody by young and older adults. Psychol. Aging 25, 16–29. doi: 10.1037/a0018777

Fernández-Alcaraz, C., Extremera, M. R., García-Andres, E., and Fernando Molina, C. (2010). Emotion recognition in Down’s syndrome adults: neuropsychology approach. Procedia Soc. Behav. Sci. 5, 2072–2076. doi: 10.1016/j.sbspro.2010.07.415

Gautam, S., and Singh, L. (2016). “A comparative study: Spectral parameter in speech of intellectually disabled and normal population,” in Proceedings of the 3rd International Conference on Computing for Sustainable Global Development, (New Delhi), 4009–4013.

Gonçalves, A. R., Fernandes, C., Pasion, R., Ferreira-Santos, F., Barbosa, F., and Marques-Teixeira, J. (2018). Effects of age on the identification of emotions in facial expressions: a meta-analysis. PeerJ. 6:e5278. doi: 10.7717/peerj.5278

Hippolyte, L., Barisnikov, K., and Van der Linden, M. (2008). Face processing and facial emotion recognition in adults with Down syndrome. Am. J. Mental Retard. 113, 292–306. doi: 10.1352/0895-80172008113

Joyce, T., Globe, A., and Moody, C. (2006). Assessment of the component skills for cognitive therapy in adults with intellectual disability. J. Appl. Res. Intellect. Disabil. 19, 17–23. doi: 10.1111/j.1468-3148.2005.00287.x

Juslin, P. N., and Laukka, P. (2003). Communication of emotions in vocal expression and music performance: different channels, same code? Psychol. Bull. 129, 770–814. doi: 10.1037/0033-2909.129.5.770

Kashani-Vahid, L., Mohajeri, M., Moradi, H., and Irani, A. (2018). “Effectiveness of computer games of emotion regulation on social skills of children with intellectual disability,” in Proceedings of the 2018 2nd National and 1st International Digital Games Research Conference: Trends, Technologies, and Applications (DGRC), (Tehran), doi: 10.1109/DGRC.2018.8712024

Landowska, A., Karpus, A., Zawadzka, T., Robins, B., Erol Barkana, D., Kose, H., et al. (2022). Automatic emotion recognition in children with autism: a systematic literature review. Sensors 22:1649. doi: 10.3390/s22041649

Laukka, P., Elfenbein, H. A., Söder, N., Nordström, H., Althoff, J., Chui, W., et al. (2013). Cross-cultural decoding of positive and negative non-linguistic emotion vocalizations. Front. Psychol. 4:353. doi: 10.3389/fpsyg.2013.00353

Laws, G., and Bishop, D. V. M. (2004). Pragmatic language impairment and social deficits in Williams syndrome: a comparison with Down’s syndrome and specific language impairment. Int. J. Lang. Commun. Disord. 39, 45–64. doi: 10.1080/13682820310001615797

Lee, M. T., Thorpe, J., and Verhoeven, J. (2009). Intonation and phonation in young adults with down syndrome. J. Voice 23, 82–87. doi: 10.1016/j.jvoice.2007.04.006

Leung, J. P., and Singh, N. N. (1998). Recognition of facial expressions of emotion by Chinese adults with mental retardation. Behav. Modif. 22, 205–216. doi: 10.1177/01454455980222008

Loveall, S. J., Channell, M. M., Phillips, B. A., Abbeduto, L., and Conners, F. A. (2016). Receptive vocabulary analysis in Down syndrome. Res. Dev. Disabil. 55, 161–172. doi: 10.1016/j.ridd.2016.03.018

Martínez-González, A. E., and Veas, A. (2019). Identification of emotions and physiological response in individuals with moderate intellectual disability. Int. J. Dev. Disabil. 67, 397–402. doi: 10.1080/20473869.2019.1651142

Martinez-Martin, E., Escalona, F., and Cazorla, M. (2020). Socially assistive robots for older adults and people with autism: an overview. Electronics 9, 367–383. doi: 10.3390/electronics9020367

Ma, K., Wang, X., Yang, X., Zhang, M., Girard, J. M., and Morency, L. P. (2019). “ElderReact: a multimodal dataset for recognizing emotional response in aging adults,” in Proceedings of the 2019 International Conference on Multimodal Interaction, (New York, NY: Association for Computing Machinery), 349–357. doi: 10.1145/3340555.3353747

Martin, G. E., Klusek, J., Estigarribia, B., and Roberts, J. E. (2009). Language characteristics of individuals with Down syndrome. Top. Lang. Disord. 29, 112–132. doi: 10.1097/TLD.0b013e3181a71fe1

Matheson, E., and Jahoda, A. (2005). Emotional understanding in aggressive and nonaggressive individuals with mild or moderate mental retardation. Am. J. Mental Retard. 110, 57–67. doi: 10.1352/0895-80172005110

Mazzoni, N., Landi, I., Ricciardelli, P., Actis-Grosso, R., and Venuti, P. (2020). Motion or emotion? Recognition of emotional bodily expressions in children with autism spectrum disorder with and without intellectual disability. Front. Psychol. 11:478. doi: 10.3389/fpsyg.2020.00478

McKenzie, K., Murray, G., Murray, A., Whelan, K., Cossar, J., Murray, K., et al. (2021). Emotion recognition and processing style in children with an intellectual disability. Learn. Disabil. Pract. 22, 20–24. doi: 10.7748/LDP.2019.E1982

McKenzie, K., Matheson, E., McKaskie, K., Hamilton, L., and Murray, G. C. (2000). Impact of group training on emotion recognition in individuals with a learning disability. Br. J. Learning Disabil. 28, 143–147. doi: 10.1046/j.1468-3156.2000.00061.x

Mendhakar, A. M., Sreedevi, N., Arunraj, K., and Shanbal, J. C. (2019). Infant screening system based on cry analysis. Int. Ann. Sci. 6, 1–7. doi: 10.21467/ias.6.1.1-7

Moore, D. G. (2001). Reassessing emotion recognition performance in people with mental retardation: a review. Am. J. Mental Retard. 106, 481–502. doi: 10.1352/0895-80172001106<0481:rerpip<2.0.co

Murray, G., McKenzie, K., Murray, A., Whelan, K., Cossar, J., Murray, K., et al. (2019). The impact of contextual information on the emotion recognition of children with an intellectual disability. J. Appl. Res. Intellect. Disabil. 32, 152–158. doi: 10.1111/jar.12517

Ní Chasaide, A., and Gobl, C. (2004). “Voice quality and f0 in prosody: towards a holistic account,” in Proceedings of the 2nd International Conference on Speech Prosody, (Nara), 189–196.

O’Leary, D., Lee, A., O’Toole, C., and Gibbon, F. (2019). Perceptual and acoustic evaluation of speech production in Down syndrome: a case series. Clin. Linguist. Phon. 34, 1–2. doi: 10.1080/02699206.2019.1611925

Pell, M. D., Monetta, L., Paulmann, S., and Kotz, S. A. (2009). Recognizing emotions in a foreign language. J. Nonverbal Behav. 33, 107–120. doi: 10.1007/s10919-008-0065-7

Petrovic-Lazic, M., Babac, S., Ivankovic, Z., and Kosanovic, R. (2009). Multidimensional acoustic analysis of pathological voice. Serb. Arch. Med. 137, 234–238. doi: 10.2298/SARH0906234P

Petrovic-Lazic, M., Jovanovic, N., Kulic, N., Babac, S., and Jurisic, V. (2014). Acoustic and perceptual characteristics of the voice in patients with vocal polyps after surgery and voice therapy. J. Voice 29, 241–246. doi: 10.1016/j.jvoice.2014.07.009

Pochon, R., Touchet, C., and Ibernon, L. (2017). Emotion recognition in adolescents with Down syndrome: a nonverbal approach. Brain Sci. 7:55. doi: 10.3390/brainsci7060055

Raven, J., and Raven, J. C. (1998). Manual for Raven’s Progressive Matrices and Vocabulary Scales Standard Progressive Matrices. Perth: Naklada Slap.

Roch, M., Pesciarelli, F., and Leo, I. (2020). How individuals with Down syndrome process faces and words conveying emotions? Evidence from a priming paradigm. Front. Psychol. 11:692. doi: 10.3389/fpsyg.2020.00692

Sacco, K., Angeleri, R., Bosco, F. M., Colle, L., Mate, D., and Bara, B. G. (2008). Assessment battery for communication - ABaCo: a new instrument for the evaluation of pragmatic abilities. J. Cogn. Sci. 9, 111–157. doi: 10.17791/jcs.2008.9.2.111

Salomone, E., Bulgarelli, D., Thommen, E., Rossini, E., and Molina, P. (2019). Role of age and IQ in emotion understanding in autism spectrum disorder: implications for educational interventions. Eur. J. Spec. Educ. 34, 383–392. doi: 10.1080/08856257.2018.1451292

Sauter, D. A., Eisner, F., Calder, A. J., and Scott, S. K. (2010). Perceptual cues in nonverbal vocal expressions of emotion. Q. J. Exp. Psychol. 63, 2251–2272. doi: 10.1080/17470211003721642

Sauter, D. A., and Scott, S. K. (2007). More than one kind of happiness: can we recognize vocal expressions of different positive states? Motiv. Emot. 31, 192–199. doi: 10.1007/s11031-007-9065-x

Scherer, K. R. (2007). “Componential emotion theory can inform models of emotional competence,” in The Science of Emotional Intelligence: Knowns and Unknowns, eds G. Matthews, M. Zeidner, and R. D. Roberts (Oxford: Oxford University Press), 101–126.

Scherer, K. R. (2003). Vocal communication of emotion: a review of research paradigms. Speech Commun. 40, 227–256. doi: 10.1016/S0167-6393(02)00084-5

Scherer, K. R., Banse, R., Wallbott, H. G., and Goldbeck, T. (1991). Vocal cues in emotion encoding and decoding. Motiv. Emot. 15, 123–148. doi: 10.1007/bf00995674

Schlegel, K., Palese, T., Mast, M., Rammsayer, T. H., Hall, J. A., and Murphy, N. (2020). A meta-analysis of the relationship between emotion recognition ability and intelligence. Cogn. Emot. 34, 329–351. doi: 10.1080/02699931.2019.1632801

Scotland, J., Cossar, J., and McKenzie, K. (2015). The ability of adults with an intellectual disability to recognise facial expressions of emotion in comparison with typically developing individuals: a systematic review. Res. Dev. Disabil. 41-42, 22–39. doi: 10.1016/j.ridd.2015.05.007

Scotland, J., McKenzie, K., Cossar, J., Murray, A. L., and Michie, A. (2016). Recognition of facial expressions of emotion by adults with intellectual disability: is there evidence for the emotion specificity hypothesis? Res. Dev. Disabil. 48, 69–78. doi: 10.1016/j.ridd.2015.10.018

Simon-Thomas, E. R., Keltner, D. J., Sauter, D., Sinicropi-Yao, L., and Abramson, A. (2009). The voice conveys specific emotions: Evidence from vocal burst displays. Emotion 9, 838–846. doi: 10.1037/a0017810

Smirni, D., Smirni, P., Di Martino, G., Operto, F., and Carotenuto, M. (2019). Emotional awareness and cognitive performance in borderline intellectual functioning young adolescents. J. Nerv. Ment. Dis. 207, 365–370. doi: 10.1097/NMD.0000000000000972

Sullivan, S., Campbell, A., Hutton, S. B., and Ruffman, T. (2015). What’s good for the goose is not good for the gander: age and gender differences in scanning emotion faces. J. Gerontol. Ser. B. Psychol. Sci. Soc. Sci. 72, 441–447. doi: 10.1093/geronb/gbv033

Trentacosta, C. J., and Fine, S. E. (2010). Emotion knowledge, social competence, and behavior problems in childhood and adolescence: a meta-analytic review. Soc. Dev. 19, 1–29. doi: 10.1111/j.1467-9507.2009.00543.x

Wishart, J. G., Cebula, K. R., Willis, D. S., and Pitcairn, T. K. (2007). Understanding of facial expressions of emotion by children with intellectual disabilities of differing aetiology. J. Intellect. Disabil. Res. 51, 551–563. doi: 10.1111/j.1365-2788.2006.00947.x

Keywords: intellectual ability, moderate intellectual disability, paralinguistic comprehension, paralinguistic production, receptive language ability, acoustic features of voice, Serbian language

Citation: Calić G, Glumbić N, Petrović-Lazić M, Đorđević M and Mentus T (2022) Searching for Best Predictors of Paralinguistic Comprehension and Production of Emotions in Communication in Adults With Moderate Intellectual Disability. Front. Psychol. 13:884242. doi: 10.3389/fpsyg.2022.884242

Received: 25 February 2022; Accepted: 06 June 2022;

Published: 08 July 2022.

Edited by:

Petar Čolović, University of Novi Sad, Serbia

Reviewed by:

Fasih Haider, University of Edinburgh, United Kingdom

Sara Filipiak, Marie Curie-Sklodowska University, Poland

Copyright © 2022 Calić, Glumbić, Petrović-Lazić, Đorđević and Mentus. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gordana Calić, calicgordana@yahoo.com

†These authors have contributed equally to this work

Gordana Calić, Nenad Glumbić†, Mirjana Đorđević, Tatjana Mentus