- School of Human Sciences, University of Greenwich, London, United Kingdom

This article provides a comprehensive critique of psychology's overreliance on statistical modelling at the expense of epistemologically grounded measurement processes. It highlights that statistics deals with structural relations in data regardless of what these data represent, whereas measurement establishes traceable empirical relations between the phenomena studied and the data representing information about them. These crucial epistemic differences are elaborated using Rosen's general model of measurement, involving the coherent modelling of the (1) objects of research, (2) data generation (encoding), (3) formal manipulation (e.g., statistical analysis) and (4) result interpretation regarding the objects studied (decoding). This system of interrelated modelling relations is shown to underlie metrologists' approaches for tackling the problem of epistemic circularity in physical measurement, illustrated in the special cases of measurement coordination and calibration. The article then explicates psychology's challenges for establishing genuine analogues of measurement, which arise from the peculiarities of its study phenomena (e.g., higher-order complexity, non-ergodicity) and language-based methods (e.g., inbuilt semantics). It demonstrates that psychometrics cannot establish coordinated and calibrated modelling relations, thus generating only pragmatic quantifications with predictive power but precluding epistemically justified inferences on the phenomena studied. This epistemic gap is often overlooked, however, because many psychologists mistake their methods' inbuilt semantics—thus, descriptions of their study phenomena (e.g., in rating scales, item variables, statistical models)—for the phenomena described. This blurs the epistemically necessary distinction between the phenomena studied and those used as means of investigation, thereby confusing ontological with epistemological concepts—psychologists' cardinal error. 
Therefore, many mistake judgements of verbal statements for measurements of the phenomena described and overlook that statistics can neither establish nor analyse a model's relations to the phenomena explored. The article elaborates epistemological and methodological fundamentals to establish coherent modelling relations between the real and the formal study systems and to distinguish the epistemic components involved, considering psychology's peculiarities. It shows that epistemically justified inferences necessitate methods for analysing individuals' unrestricted verbal responses, now advanced through artificial intelligence systems modelling natural language (e.g., NLP algorithms, LLMs). Their increasing use to generate standardised descriptions of study phenomena for rating scales and constructs, by contrast, will only perpetuate psychologists' cardinal error—and thus, psychology's crisis.

1 Statistics vs. measurement

Psychology cherishes its sophisticated ‘measurement' and modelling techniques for enabling quantitative research—the hallmark of modern science. A closer look reveals, however, that only methods of statistical data analysis are well elaborated, which together with pertinent research designs (e.g., between-subjects) fill our books and journals on psychological research methods. This emphasis reflects the prevailing view that statistics constitutes psychology's approach for ‘measuring' its non-observable study phenomena (e.g., in psychometrics). This assumption, however, rests on epistemic errors: statistics is neither measurement nor necessary for measurement.

1.1 Different scientific activities for different epistemic purposes

Measurement and measurement scales have been successfully developed in physics and metrology—the science of physical measurement and its application (JCGM100:2008, 2008, p. 2.2)—long before statistics was invented (Abran et al., 2012; Fisher, 2009; Uher, 2022b, 2023a). Measurement and statistics involve different scientific activities designed for different epistemic (knowledge-related) purposes.

Measurement requires traceable empirical interactions with the specific quantities to be measured in the phenomena and properties under study—the measurands (e.g., person A's body temperature but not A's body weight or volume; person B's duration of speaking in a specific situation). Epistemically justifiable inferences from observable indications of these empirical interactions back to the measurands require theoretical knowledge about both the object of research and the objects used as measuring instruments, as well as their conceptualisation in a defined process structure within a realist framework (Mari et al., 2021; Schrödinger, 1964; von Neumann, 1955). The empirical implementation of measurement necessitates unbroken documented connection chains that establish proportional (quantitative) relations of the results with both (1) the measurand's unknown quantity (e.g., A's body temperature; B's duration of speaking)—the principle of data generation traceability—and (2) a known reference quantity (e.g., international units). This reference is necessary to establish the results' quantitative meaning regarding the specific property studied (e.g., how warm or how long that is)—the principle of numerical traceability (Uher, 2018a, 2020b, 2021c,d, 2022a,b, 2023a). Process structures thus established allow for deriving epistemically justified information about specific quantities that are assumed to exist in an object of research and for representing this information in sign systems that are unambiguously interpretable regarding those measurands (e.g., ‘TPers_A = 36.9°C'; ‘dPers_B = 16.2 mins/h').
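As a rough illustration of why a result must carry more than a number, the following sketch bundles a value with its measurand, its unit and a documented chain of links back to a reference. The class name `MeasurementResult`, its fields and the example chain are hypothetical and chosen only for this illustration; they are not a metrological standard or an established data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasurementResult:
    """Illustrative record tying a numerical value to what it is about."""
    measurand: str                   # specific quantity in a specific object
    value: float                     # numerical value
    unit: str                        # qualitative and quantitative meaning
    traceability_chain: tuple = ()   # documented links back to a reference

    def is_documented(self) -> bool:
        # A result without any documented links cannot be traced back.
        return len(self.traceability_chain) > 0

    def __str__(self) -> str:
        return f"{self.measurand} = {self.value} {self.unit}"


temp_a = MeasurementResult(
    measurand="body temperature of person A",
    value=36.9,
    unit="°C",
    traceability_chain=(
        "reading of clinical thermometer",
        "thermometer calibrated against a laboratory reference",
        "laboratory reference traceable to the kelvin (SI)",
    ),
)
# A unit-free score with no documented chain, for contrast.
score_c = MeasurementResult(measurand="unspecified", value=3.7, unit="")

print(temp_a)                    # body temperature of person A = 36.9 °C
print(score_c.is_documented())   # False
```

The design choice is simply that the unit and the chain travel with the value, so a reader can ask what the number refers to and how it was obtained; the unit-free `score_c` answers neither question.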

Statistics, by contrast, enables probabilistic descriptions of what might happen as a consequence of complex, poorly understood and possibly random events and processes as well as of constraints that are set by stochastic boundaries (e.g., distribution curves). In data sets, statistical methods allow us to identify regularities beyond pure randomness, to group cases and compare groups by their parameters, to model and extrapolate patterns as well as to estimate error and uncertainty for justifying inferences from samples to distribution patterns in hypothetical populations (Romeijn, 2017). Statistics builds on theories that define the workings of the analytical operations performed (e.g., mathematical statistics, probability theory, item response theory). But it does not build on theories about the objects of research that scientists may aim to analyse for prevalences, differences and trends, and that may be as diverse as diseases, therapeutic treatments, behaviours, intellectual abilities, financial markets, policies and others. Statistics is mute about the specific phenomena and properties analysed (Strauch, 1976). That is, statistics concerns the analysis of data sets regardless of what these data are meant to represent. Therefore, it does not require a term denoting the specific quantity to be measured in the real study objects—the measurand. This may explain why most psychologists are unfamiliar with this basic term. Their focus on ‘true scores' in statistical modelling obscures the epistemic distinction between the real quantity to be measured and the measurement results used to estimate it (Strom and Tabatadze, 2022).
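The point that statistics is mute about the phenomena analysed can be made concrete in a few lines: identical numbers yield identical statistics, whatever they are labelled as representing. The labels below are hypothetical and serve only to show that the computation never consults them.

```python
import statistics

values = [3.1, 4.7, 2.9, 5.2, 4.0]

# Identical numbers, labelled as if they stood for very different phenomena.
labels = [
    "weekly hospital admissions (hundreds)",
    "daily share-price change (%)",
    "agreement ratings on a questionnaire item",
]

# The statistics depend only on the numbers, never on the labels.
summaries = {
    label: (statistics.mean(values), statistics.stdev(values))
    for label in labels
}

for label, (m, sd) in summaries.items():
    print(f"{label}: mean = {m:.2f}, SD = {sd:.2f}")
```

Nothing in the output distinguishes admissions from share prices or ratings; that information has to come from how the data were generated, not from the analysis.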

Statistics, however, is fundamental to so-called psychological ‘measurement'. Why?

1.2 Psychological ‘measurement': Statistical analysis enabling pragmatic quantification

Psychological ‘measurement' (e.g., psychometrics) is aimed at discriminating well and consistently between cases (e.g., individuals, groups) and in ways considered important (e.g., social relevance, relations to future outcomes). Therefore, ‘measuring instruments' (e.g., intelligence tests, rating ‘scales') are designed so as to generate data structures that are useful for these purposes (e.g., specific distribution or association patterns). To this pragmatic end, statistical analyses are indispensable (Uher, 2021c).

Many psychologists believe that measurement involves the assignment of numbers and capitalises on their mathematically defined quantitative meaning. In measurement, however, we assign numerical values whose specific quantitative meaning is conventionally agreed and traceable to defined reference quantities (e.g., of the International System, SI; BIPM, 2019). We know this from everyday life. The numerical values of ‘1 kilogram', ‘2.205 pounds', ‘35.274 ounces' and ‘0.1575 stones' differ—but they all indicate the same quantity of weight. These differences originate from once arbitrary decisions on specific quantities that were used as references. Today, their specific quantitative meaning is conventionally agreed and indicated by the measurement unit (e.g., ‘kg', ‘lb', ‘oz', ‘st'). The unit also indicates the specific kind of property measured—‘1' ‘kilogram' is not ‘1' ‘litre', ‘1' ‘metre' or ‘1' ‘volt'. That is, the measurement unit also specifies a result's qualitative meaning, such as whether it is a quantity of weight, volume, length or electric potential.
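The kilogram example can be reproduced from the exact definitions of the avoirdupois units (1 lb = 0.45359237 kg since the 1959 international agreement, 1 oz = 1/16 lb, 1 st = 14 lb). The sketch below is a minimal converter written only for this illustration; it shows that the differing numerals all refer to one and the same quantity once the unit is known.

```python
# Exact definitions: 1 lb = 0.45359237 kg (international pound),
# 1 oz = 1/16 lb, 1 st = 14 lb.
KG_PER_LB = 0.45359237
KG_PER_UNIT = {
    "kg": 1.0,
    "lb": KG_PER_LB,
    "oz": KG_PER_LB / 16,
    "st": KG_PER_LB * 14,
}


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Re-express a quantity of mass in another unit via the kilogram."""
    return value * KG_PER_UNIT[from_unit] / KG_PER_UNIT[to_unit]


for unit in ("kg", "lb", "oz", "st"):
    print(f"1 kg = {convert(1.0, 'kg', unit):.4f} {unit}")
```

Without the unit label, the numerals 1, 2.205, 35.274 and 0.1575 would appear to be four different quantities; the unit is what fixes both their qualitative and their quantitative meaning.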

In psychology, by contrast, ‘measurement' values are commonly presented without a unit, thus indicating neither specific qualities (e.g., frequency, intensity or level of agreement) nor specific quantities of them (e.g., how often or how much of that). Unit-free values—therefore called ‘scores'—are meaningless in themselves. Statistics is required to first create quantitative meaning for scores from their distribution patterns and interrelations within specific samples (e.g., differential comparisons within age groups), leading to reference group effects (Uher, 2021c,d, 2022a, 2023a). Hence, psychometric scores constitute quantifications that are created for specific uses, contexts and pragmatic purposes, such as for making decisions or projections in applied settings (Barrett, 2003; Dawes et al., 1989; Newfield et al., 2022). This highlights a first important difference from genuine measurement.
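Reference group effects can be illustrated with z-standardisation, a common norming practice used here purely as an example. In this sketch the raw scores and group names are invented; the point is only that the same raw score acquires opposite meanings depending on the sample against which it is standardised.

```python
import statistics


def z_score(raw: float, reference_group: list) -> float:
    """Standardise a raw score against the distribution of a reference group."""
    mu = statistics.mean(reference_group)
    sigma = statistics.stdev(reference_group)
    return (raw - mu) / sigma


# Hypothetical raw scores in two reference groups.
younger = [10, 12, 14, 16, 18]
older = [18, 20, 22, 24, 26]

raw = 18
print(f"z relative to younger group: {z_score(raw, younger):+.2f}")
print(f"z relative to older group:   {z_score(raw, older):+.2f}")
```

The raw score is identical in both cases; only its position within each sample's distribution differs, which is the sense in which the score's quantitative meaning is created by the statistics rather than by a reference quantity.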

Specifically, psychometric theories and empirical practices clearly build on a pragmatic utilitarian framework that is aimed at producing quantitative results with statistically desirable and practically useful structures. By contrast, traceable relations to empirical interactions with the quantities to be measured (measurands) in individuals and to known reference quantities are neither conceptualised nor empirically implemented. Nevertheless, psychometricians explicitly aim for “measuring the mind” (Borsboom, 2005)—thus, for ‘measuring' specific quantitative properties that individuals are assumed to possess. Accordingly, psychometric results (e.g., IQ scores) are interpreted as quantifications of the studied individuals' psychical¹ properties (e.g., intellectual abilities) and used for making decisions about these individuals (e.g., education). Here, psychometricians clearly invoke the realist framework underlying physical measurement, ignoring that they have theoretically and empirically established instead only a pragmatic utilitarian framework (Uher, 2021c,d, 2022b, 2023a). This confusion of two incompatible epistemological frameworks entails numerous conceptual and logical errors, as this article will show (Section 3).

But regardless of this, psychometricians' declared aims and result interpretations highlight basic ideas of measurement that are shared by metrologists, physicists and psychologists alike. These ideas can be formulated as two epistemic criteria that, as the most basic common denominators across the sciences, characterise an empirical process as one of measurement. Criterion 1 is the epistemically justified attribution of the generated quantitative results to the specific properties to be measured (measurands) in the study phenomena and to nothing else. Criterion 2 is the public interpretability of the results' quantitative meaning with regard to those measurands (Uher, 2020b, 2021a,b, 2023a). These two criteria are key to distinguishing genuine measurement from other processes of quantification (e.g., opinions, judgements, evaluations). Importantly, this is not to classify some approaches as ‘superior' or ‘inferior'. Rather, a criterion-based approach to defining measurement is essential for scrutinising the epistemic fundamentals of a field's pertinent theories and practices. Such an approach made it possible, for example, to identify the epistemological inconsistencies inherent to psychometrics (Uher, 2021c,d). A criterion-based approach is also crucial for pinpointing commonalities and differences between sciences.

Concretely, such an approach shows that proposals to ‘soften', ‘weaken' or ‘widen' the definition of measurement for psychology (Eronen, 2024; Finkelstein, 2003; Mari et al., 2015) are epistemically mistaken. Certainly, psychology does not need the high levels of accuracy and precision required in sciences such as physics, chemistry and medicine, where errors can lead to the collapse of buildings, chemical explosions or drug overdoses. But changing the definition of a scientific activity as fundamental to empirical science as measurement cannot establish its comparability across sciences. On the contrary, it undermines comparability because it fails to provide guiding principles that specify how analogues of measurement that appropriately consider the study phenomena's peculiarities can be implemented in other sciences. The methodological principles of data generation traceability and numerical traceability, for example, can guide the design of discipline-specific processes that allow for meeting the two epistemic criteria of measurement also in psychology (Uher, 2018a, 2020b, 2022a,b, 2023a). Labelling disparate procedures uniformly as ‘measurement' also obscures essential and necessary differences in the theories and practices established in different sciences as well as inevitable limitations. Ultimately, measurement is not just any activity to generate numerical data but involves defined processes that justify the high public trust placed in it (Abran et al., 2012; Porter, 1995).

In everyday life, the differences between measurement and pragmatic quantification are obvious. When we buy apples in a shop, we measure their weight. But we do not measure their price. The apples' weight is a quantitative property, which they possess as real physical objects. It is determined through their traceable empirical interaction with a measuring instrument (therefore, we must place the apples on the weighing scale). The specific quantity of weight that we denote as ‘1 kg' is (nowadays) specified through known reference quantities, which are internationally agreed and thus, universally interpretable. The apples' price, by contrast, is pragmatically quantified for various purposes within a given socio-economic system that go beyond the apples' specific physical properties (e.g., sales, profit). Thus, the price merely indicates an attributed quantitative value—an attribute—which therefore changes across contexts and times (e.g., supply, demand and tariffs). The price's specific quantitative meaning, in turn, is derived from its relations to other attributed socio-economic values (e.g., currency, inflation) and can therefore vary in itself as well.

Psychological ‘measurement' (e.g., psychometrics) is widely practised and justified for its pragmatic and utilitarian purposes. However, it does not involve genuine measurement as often claimed (therefore here put in inverted commas, as are the psychological terms ‘scales' and ‘instruments'²). Instead, psychological ‘measurement' serves other epistemic purposes for which statistics is indispensable. Its focus is on analysing structures in data sets, such as data on persons' test performances or responses to rating ‘scales', in order to derive hypothetical quantitative relations, such as levels of “person ability” or item difficulty in Rasch modelling and item response theory. But the specific ways in which the analysed data—as well as the performances and responses encoded in these data—are generated in the first place have hardly been studied (Lundmann and Villadsen, 2016; Rosenbaum and Valsiner, 2011; Toomela, 2008; Uher, 2015c, 2018a,b, 2021a, 2022a, 2023a; Uher and Visalberghi, 2016; Uher et al., 2013b; Wagoner and Valsiner, 2005).

Indeed, rating ‘scales', psychology's most widely used method of quantitative data generation, have remained largely unchanged since their invention a century ago (Likert, 1932; Thurstone, 1928). This is astounding given that rating data form the basis of much of the empirical evidence used to test scientific hypotheses and theories, to make decisions about individuals in applied settings (Uher, 2018a, 2022b, 2023a) and to evaluate the effectiveness of interventions and trainings (Truijens et al., 2019b).

Hence, there is a gap between psychologists' numerical data and statistically modelled quantitative results, on the one side, and the specific entities to be quantified in their actual study phenomena, on the other. Bridging this gap requires measurement.

1.3 Metrological frameworks of measurement: Inherent limitations for psychology

Unlike statistics, measurement concerns how the data are generated—thus, the ways in which they are empirically connected both with the unknown quantity to be measured (measurand) in the study phenomena (data generation traceability) and with known reference quantities (numerical traceability). Unbroken documented connection chains determine how the measurement results can be interpreted regarding these measurands qualitatively and quantitatively (epistemic criteria 1 and 2). These two traceability principles underlie the measurement processes established in metrology (Uher, 2020b, 2022a).

Metrology, however, is concerned solely with the measurement of physical properties in non-living nature that feature invariant relations. Such properties are always related to one another in the same ways (under specified conditions), such as the fundamental relations between electric voltage (V), current (I) and resistance (R). It is this peculiarity that enables their formalisation in immutable laws (e.g., Ohm's law) and non-contradictory mathematical equations (formulas, e.g., V = I * R). Invariant relations can also be codified in natural constants (e.g., gravity on Earth, speed of light) and internationally agreed systems of units (e.g., metric, imperial; JCGM100:2008, 2008). Therefore, physical laws and formulas, natural constants and international units of measurement are assumed to be universally applicable.
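To make the notion of an invariant relation tangible, the sketch below applies Ohm's law to an ideal ohmic conductor held at constant temperature (the "specified conditions"). The resistor value is hypothetical. Whatever current is applied, the ratio of voltage to current recovers the same resistance, which is what allows the relation to be written as a formula at all.

```python
def voltage(current_a: float, resistance_ohm: float) -> float:
    """Ohm's law for an ideal ohmic conductor: V = I * R."""
    return current_a * resistance_ohm


RESISTANCE_OHM = 220.0  # hypothetical resistor at constant temperature

for current_a in (0.001, 0.01, 0.05):
    v = voltage(current_a, RESISTANCE_OHM)
    # The ratio V / I recovers the same resistance for every current.
    print(f"I = {current_a} A -> V = {v:.3f} V, V/I = {v / current_a:.1f} ohm")
```

Real components deviate from this ideal (e.g., when heating changes their resistance), which is why such laws are stated for specified conditions.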

But precisely because of this peculiarity, metrological frameworks cannot be applied or translated to psychological research as directly as metrologists and psychometricians increasingly propose (e.g., Fisher and Pendrill, 2024; Mari et al., 2021). This is because psychology's objects of research feature peculiarities not known from the non-living ‘world'. These involve variability, change and novel properties emerging from complex relations leading to irreversible development as well as the non-physicality and abstract nature of experience, and others (Hartmann, 1964; Morin, 1992). Moreover, unlike physical sciences and metrology, psychology explores not just objects and relations of specific phenomena (e.g., behaviours) in themselves but also, and in particular, their individual (subjective) and socio-cultural (inter-subjective) perception, interpretation, apprehension and appraisal (Wundt, 1896). These complex study phenomena are described in multi-referential conceptual systems—constructs. These conceptual systems cannot be studied with physical measuring instruments but require language-based methods instead (Kelly, 1955; Uher, 2022b, 2023b). Language, however, involves complexities that present unparalleled challenges to standardised quantitative inquiry, as this article will demonstrate. To tackle the challenges posed by psychology's complex study phenomena and methods of inquiry, metrology provides neither conceptual nor methodological fundamentals (Uher, 2018a, 2020b, 2022a).

Attempts to directly apply a science's concepts and theories to study phenomena not explored by that science involve challenges that cannot be mastered using the conceptual and methodological fundamentals of just single disciplines. Such interdisciplinary³ approaches underlie the current attempts to directly apply or translate metrological concepts to psychological ‘measurement' and psychometrics (e.g., Fisher and Pendrill, 2024; Mari et al., 2021). But they overlook fundamental ontological, epistemological and methodological differences. Developing epistemically justified research frameworks that are applicable across the sciences in that they are appropriate to the peculiarities of their different objects of research requires scrutinising the basic presuppositions of all the sciences involved. Such elaborations are at the core of transdisciplinarity, which is therefore applied in this article.

1.4 Transdisciplinarity: A new way of thinking and scientific inquiry

Transdisciplinarity has gained recognition as a new way of thinking about and engaging in scientific inquiry (Montuori, 2008; Nicolescu, 2002, 2008). Unlike all other types of disciplinary collaboration (e.g., cross-, multi- and inter-⁴), transdisciplinarity is aimed at analysing complex systems and complex (“wicked”) real-world problems, at developing an understanding of the ‘world' in its complexity and at generating unitary intellectual frameworks beyond specific disciplinary perspectives. To enable such explorations, transdisciplinarity⁵ not only relies on disciplinary paradigms but also transcends and integrates them. It is aimed at exposing disciplinary boundaries to facilitate the understanding of implicit assumptions, processes of inquiry and resulting knowledge as well as to discover hidden connections between different disciplines and their respective bodies of knowledge. A key focus is on identifying non-obvious differences, particularly in the underlying ontology (philosophy and theory of being), epistemology (philosophy and theory of knowing) and methodology (philosophy and theory of methods, connecting abstract philosophy of science with empirical research). That is, transdisciplinarity explores research questions that can be comprehended only outside of the boundaries of separate disciplines and therefore challenges the entire framework of disciplinary thinking and knowledge organisation (Bernstein, 2015; Gibbs and Beavis, 2020; Piaget, 1972; Pohl, 2011; Uher, 2024).

The present analyses—spanning concepts and approaches from psychology, social sciences, life sciences, physical sciences and metrology—rely on the Transdisciplinary Philosophy-of-Science Paradigm for Research on Individuals (TPS Paradigm;⁶ for introductory overviews, see Uher, 2015b, 2018a, pp. 3–8; Uher, 2021b, pp. 219–222; Uher, 2022b, pp. 3–6). This meta-paradigm was already applied, amongst others, to explore the epistemological and methodological fundamentals of data generation methods (Uher, 2018a, 2019, 2021a) and of theories and practices of measurement and pragmatic quantification across the sciences (Uher, 2020b, 2022a) as well as to scrutinise those underlying psychometrics and quantitative psychology (Uher, 2021c,d, 2022b, 2023a). Pertinent key problems were demonstrated empirically in multi-method comparisons (e.g., Uher et al., 2013a; Uher and Visalberghi, 2016; Uher et al., 2013b). The present article builds upon and substantially extends these previous analyses.

1.5 Outline of this article

This article offers a novel and ambitious transdisciplinary approach to advance the epistemological and methodological fundamentals of quantitative psychology by integrating relevant concepts from mathematical biophysics, metrology, linguistics, complexity science, psychology and philosophy of science. It elaborates the epistemic process structure of measurement, highlighting crucial differences from statistics (e.g., psychometrics). A focus is on elaborating the ways in which the peculiarities of language, when used in psychological methods (e.g., rating ‘scales', variables and models), obscure the epistemic differences between measurement and statistics. This confusion contributes to the common yet erroneous belief that statistics could constitute psychology's approach for ‘measuring' its study phenomena. The analyses are made with regard to psychology but equally apply to pertinent practices in other sciences.

Section 2 introduces fundamentals of measurement. These involve the measurement problem—the epistemically necessary distinction between the object of research and the objects used as measuring instruments as well as the conceptualisation of how the latter can provide information on the former. Measurement also requires the formal representation of observations in sign systems (e.g., data, formal models). The section presents Rosen's system of modelling relations as an abstract general model of the entire measurement process: from (1) conceptualising the objects of research, through (2) generating the data and (3) formally manipulating these data (e.g., statistical analysis), to (4) interpreting the formal outcomes obtained with regard to the actual study phenomena. This process model is shown to underlie metrologists' approaches for tackling the problem of circularity in physical measurement, illustrated in the special cases of measurement coordination and calibration.
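The four interrelated steps can be caricatured numerically. Rosen's own formulation is category-theoretic, so the following is only a toy under strong assumptions: the "natural system" is itself simulated (a culture that doubles in one hour), and all function names are hypothetical. The modelling relation holds when decoding the formal prediction agrees with what the natural process produces; a decoding that ignores how the data were encoded breaks it, even though the formal inference step is untouched.

```python
def natural_causation(cells: float) -> float:
    """Stand-in for the natural system (simulated here): the culture doubles in one hour."""
    return cells * 2


def encode(cells: float) -> float:
    """Encoding: cell counts are recorded as data in thousands of cells."""
    return cells / 1000


def infer(data: float) -> float:
    """Formal inference: the model predicts a doubling of the recorded value."""
    return data * 2


def decode_consistent(data: float) -> float:
    """Decoding that respects the encoding rule (thousands back to cells)."""
    return data * 1000


def decode_ignoring_encoding(data: float) -> float:
    """Decoding that reads the data as if they were raw cell counts."""
    return data


def modelling_relation_holds(state: float, decode, tol: float = 1e-9) -> bool:
    """Does decode(infer(encode(state))) agree with natural_causation(state)?"""
    predicted = decode(infer(encode(state)))
    observed = natural_causation(state)
    return abs(predicted - observed) <= tol * max(1.0, abs(observed))


print(modelling_relation_holds(5000, decode_consistent))         # True
print(modelling_relation_holds(5000, decode_ignoring_encoding))  # False
```

The sketch illustrates why scrutinising the formal step alone (here, `infer`) cannot reveal whether the model's results say anything about the natural system.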

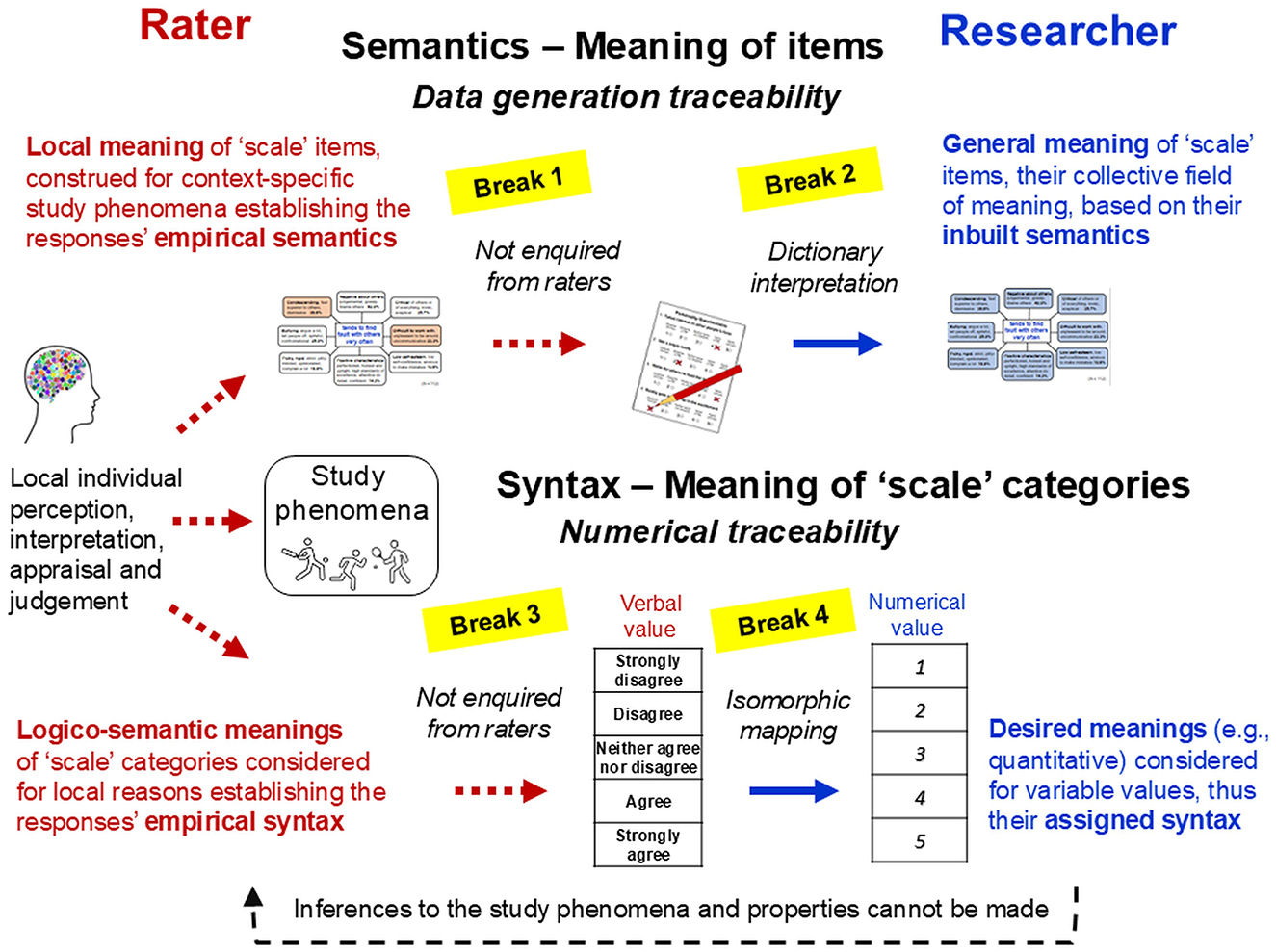

Section 3 applies these fundamentals to explore the challenges involved in establishing genuine analogues of measurement in psychology, which arise from the peculiarities of its study phenomena (e.g., higher-order complexity, non-ergodicity) and those of the language-based methods required for their exploration (e.g., inbuilt semantics). It demonstrates that psychology's focus on statistical modelling (e.g., psychometrics)—thus, on just one of the four necessary and interrelated modelling relations in Rosen's scheme—ignores the entire measurement process. But this often goes unnoticed because researchers consider only the general (dictionary) meanings of their verbal ‘scales' (their inbuilt semantics) yet ignore how raters actually interpret and use these ‘scales'. This introduces several breaks in the data's and model's relations to the actual phenomena that these are meant to represent. It also obscures psychology's measurement problem. This involves not just the crucial distinction between the phenomena studied (e.g., feelings) and those used as ‘instruments' for studying them (e.g., descriptions of feelings) but also individuals' (e.g., raters') local context-specific interactions with both. These interactions complicate the ways in which epistemically justified (valid) information about the study phenomena can be obtained through language-based methods.

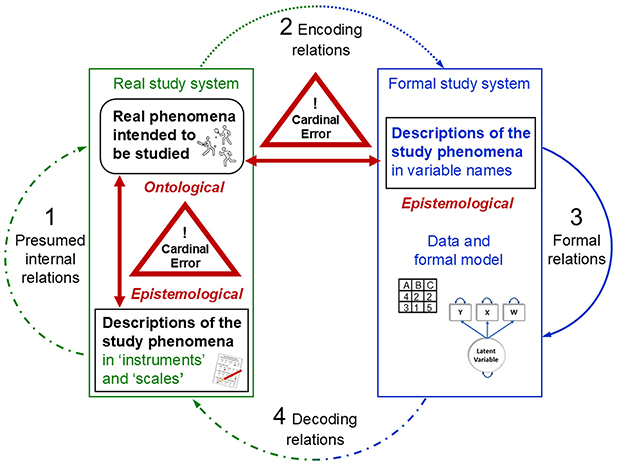

Section 4 shows that the frequent failure to distinguish the study phenomena from the means of their investigation (e.g., ‘instruments', formal models) confuses ontological with epistemological concepts—psychologists' cardinal error. This logical error is fuelled by quantitative psychologists' focus on statistics as well as by our human tendency to mistake verbal descriptions for the phenomena described. Many psychologists therefore mistake judgements of verbal statements for measurements of the phenomena described. Many also overlook that statistics can neither establish nor analyse a formal model's relations to the real phenomena studied. Establishing these relations requires genuine analogues of measurement, for which the section elaborates the necessary epistemological and methodological fundamentals. It closes by showing ways in which the powerful artificial intelligence (AI) systems now available for modelling human language can meaningfully support psychological research but also perpetuate psychologists' cardinal error.

2 Key problems of measurement

Measurement, in its most general sense, is a highly selective form of observation because ‘to measure' means that we must choose to measure something without having to measure everything. Every object of research may feature various non-equivalent properties (e.g., length, temperature and weight) as well as different quantitative entities of the same property (e.g., foot length, finger length and body height). Measurement is a process that involves the detection and recognition of selected properties in the object researched and that produces justified information about them (von Neumann, 1955; Uher, 2022b).

For simplicity, when ‘objects' are mentioned in the following, this is always meant to include their properties as well because we cannot measure objects in themselves (e.g., physical bodies) but only their specific properties (e.g., mass, voltage and temperature). Properties are also included when we understand by ‘objects of research' not just physical objects (e.g., individuals' bodies) but also non-physical phenomena (e.g., individuals' reasoning, beliefs and emotions)—thus, denoting the subject matter in general.

2.1 The measurement problem: Distinguishing the objects of research from the objects used as measuring instruments and conceptualising their interaction

We can describe all objects in their existence and being in the ‘world', thus ontologically. To describe how we can gain knowledge about a given object, thus epistemologically, we must distinguish the ontic object (the specific concrete entity) to be measured from the objects used for epistemic (knowledge-generating) purposes as measuring instruments. Measurement defines a theory-laden process structure that conceptualises the objects of research and the methods (including instruments) used to gain epistemically justified information about them (von Neumann, 1955).

Specifically, measurement requires an empirical interaction between the specific quantity to be measured (measurand) in the study object (e.g., the temperature of a cup of coffee) and the object used as instrument (e.g., mercury in a glass tube). Measuring instruments must be designed such that they produce, through their empirical interactions with the measurand, distinctive indications that are observable for humans. In iterative processes of theorising and experimentation, scientists identify which variations of an instrument—when applied in defined ways (the method)—reliably produce distinct patterns that humans can easily discern (e.g., the linear extension of a mercury column in a glass tube). These indications are used to make inferences on the study object's specific state at the moment of interaction to obtain information about it. That is, scientists use their current state of knowledge to decide how to design specific objects as instruments, how to use them (methods) and which indications of their empirical interactions with the study object to consider as informative—thus, how ‘to read' these indications (Mari et al., 2021; Pattee, 2013; Tal, 2020; Uher, 2020b, 2023a).
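How scientists decide ‘to read' an instrument's indications can be sketched with the classic two-point calibration of a liquid-in-glass thermometer, using the historical fixed points of melting ice (0 °C) and boiling water at standard atmospheric pressure (100 °C). The column lengths are hypothetical, and the linearity of the mercury's expansion within this range is an explicit assumption built into the reading rule.

```python
def two_point_calibration(length_at_0c_mm: float, length_at_100c_mm: float):
    """Build a reading function from two fixed points (ice point and steam point),
    assuming the mercury column's length changes linearly with temperature."""
    span_mm = length_at_100c_mm - length_at_0c_mm
    if span_mm <= 0:
        raise ValueError("the column must be longer at the steam point")

    def read_celsius(length_mm: float) -> float:
        # Linear interpolation between the two calibration points.
        return 100.0 * (length_mm - length_at_0c_mm) / span_mm

    return read_celsius


# Hypothetical column lengths observed at the two fixed points.
read = two_point_calibration(length_at_0c_mm=20.0, length_at_100c_mm=220.0)
print(round(read(93.8), 1))  # 36.9
```

The observed indication is a length in millimetres; only the calibration rule, which encodes theoretical knowledge about the instrument, turns it into information about the measurand's temperature.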

In sum, the measurement problem⁷ concerns the epistemic distinction of the object of research from the objects used as measuring instruments. It requires their conceptualisation as well as that of their presumed empirical interaction under defined conditions (method) producing observable indications. To document and analyse these indications and derive measurement results from them, the observed and interpreted indications must be formally represented.

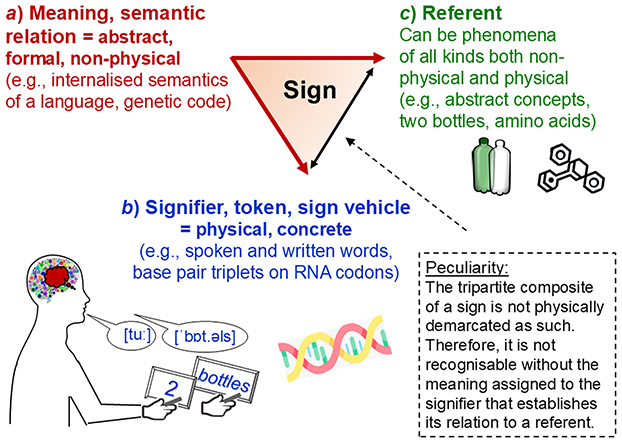

2.2 Measurement requires semiotic representation in rule-based formal models

The relations between physical properties (those studied in metrology) are empirically given, invariant and lawful. But information about them can be formalised in various ways. Formalisms are conceptual, mathematical, algorithmic, representational and other abstract operations that follow logical, deductive or arbitrarily prescribed rules. In measurement, formal representation involves sign systems. Signs are composed of tokens (sign carriers; e.g., Latin or Greek letters, Arabic numerals) that are assigned meanings, which specify the information that these tokens are meant to represent (e.g., specific indications observed or quantitative relations). These sign systems constitute the data and formal models (e.g., variables, numerical values), which can be used to analyse the information represented (e.g., mathematically). The signs' meanings, however, can vary because they are merely assigned (attributed and ascribed). Numerals can represent numbers but also just order (e.g., door ‘numbers') or just nominal categories (e.g., genders). That is, formalisation is arbitrary, non-physical and rule-based (Abel, 2012; Pattee, 2013; Uher, 2023a; von Neumann, 1955).
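Because a numeral's meaning is assigned rather than given, the same arithmetic can be interpretable for one set of tokens and meaningless for another. The sketch below borrows Stevens' familiar scale-type labels purely for illustration (the text above does not rely on that classification), and the function `summarise` and its permission table are hypothetical. For the ordinal case it uses `statistics.median_low`, which always returns an observed value rather than interpolating between two codes.

```python
import statistics

# Permitted summaries per assigned meaning (scale-type labels used only
# for illustration).
PERMITTED = {
    "nominal": {"mode": statistics.mode},
    "ordinal": {"mode": statistics.mode, "median": statistics.median_low},
    "ratio": {"mode": statistics.mode, "median": statistics.median,
              "mean": statistics.mean},
}


def summarise(values, scale: str, statistic: str):
    """Compute a summary only if it is interpretable for the assigned meaning."""
    if statistic not in PERMITTED[scale]:
        raise ValueError(f"a {statistic} of {scale} codes has no interpretable meaning")
    return PERMITTED[scale][statistic](values)


gender_codes = [1, 2, 2, 1, 2]    # numerals used as labels for categories
door_numbers = [12, 14, 16, 18]   # numerals used to indicate order along a street
masses_kg = [1.2, 0.8, 1.0]       # numerals representing quantities of mass

print(summarise(gender_codes, "nominal", "mode"))    # 2
print(summarise(door_numbers, "ordinal", "median"))  # 14
print(summarise(masses_kg, "ratio", "mean"))
try:
    summarise(gender_codes, "nominal", "mean")
except ValueError as err:
    print(err)
```

Python itself would happily compute a mean of the gender codes; the restriction has to come from the assigned meaning, which the numerals do not carry.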

In sum, semiotic (sign-based) representation is essential for all empirical sciences (Frigg and Nguyen, 2021; Pattee, 2013; van Fraassen, 2008). It requires that data and models are clearly distinguished from the objects that they semiotically represent. This separation is no philosophical doctrine but an epistemic necessity that follows from the definition of a sign as something that stands for something other than itself (Pattee, 2001; Peirce, 1958; Uher, 2020b, 2022b). The ways in which interpreted observations are encoded into data in a study are therefore crucial for understanding and analysing these data. The specific encoding is also essential for drawing justified conclusions from the analytical results about the actual objects explored. The study objects, their formal representations and the interrelations between both can be conceptualised and analysed in an overarching model.

2.3 The system of interrelated modelling relations underlying empirical science

Robert Rosen, a mathematical biophysicist and theoretical biologist, developed a general relational model to conceptualise the processes by which living beings selectively perceive specific parts of their environment and make sense of that information. Scientific knowledge generation is a special case of these fundamental processes. Rosen (1985, 1991, 1999) developed this process model mathematically, building on earlier work by Rashevsky (1960a,b) and using category theory (Lennox, 2024).

2.3.1 Category theory: Modelling the relations of relations between objects

Many psychologists associate mathematics solely with quantitative analysis (e.g., algebra, arithmetic, calculus). But mathematics also involves many non-quantitative branches, such as category theory, combinatorics, geometry, logic, set theory or topology (Linkov, 2024; Rudolph, 2013), which are also used in empirical sciences.

Category theory is a general mathematical theory to formally describe abstract structures and relations. In this theory, a category is a system of mathematical objects and their relations. The focus is on conceptualising these relations, termed morphisms or arrows, which map a source object to its target object in specific ways (e.g., through structure-preserving transformations). Category theory also permits mapping these relations themselves: functors map one category to another, and natural transformations map between functors. Hence, category theory is about modelling (mathematical) objects, relations of objects as well as relations of relations (Leinster, 2014). This makes it suitable also for modelling the process of scientific modelling itself (Rosen, 1985).

2.3.2 Scientific modelling: Modelling the relations between causality, encoding, analysis and decoding

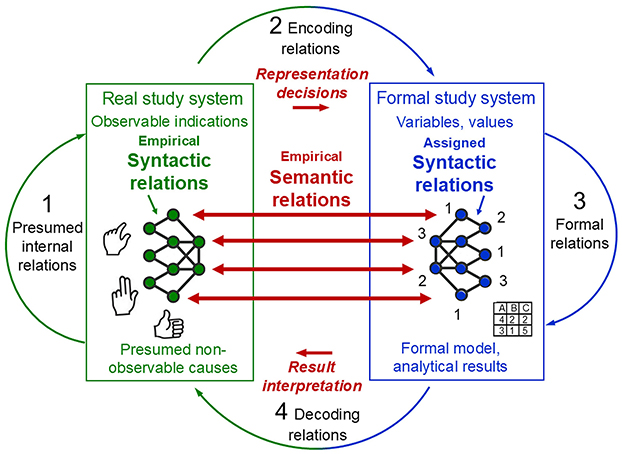

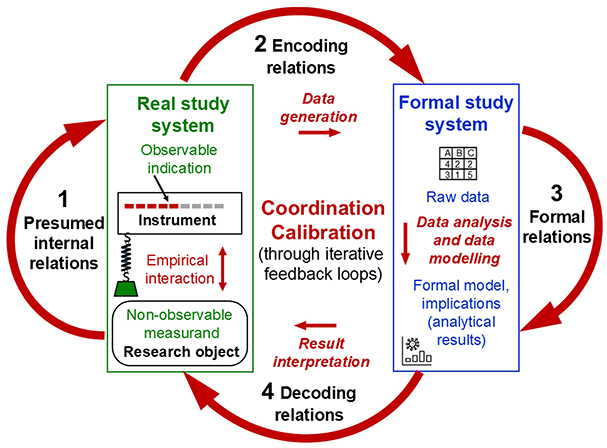

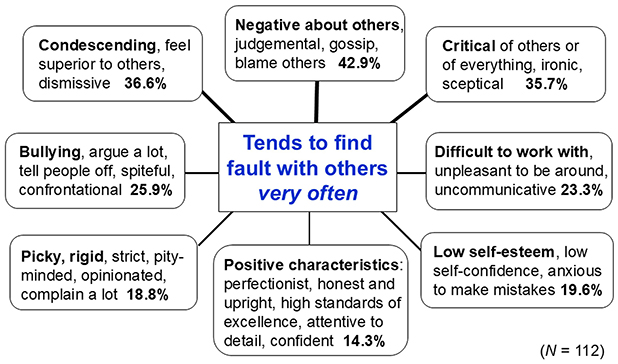

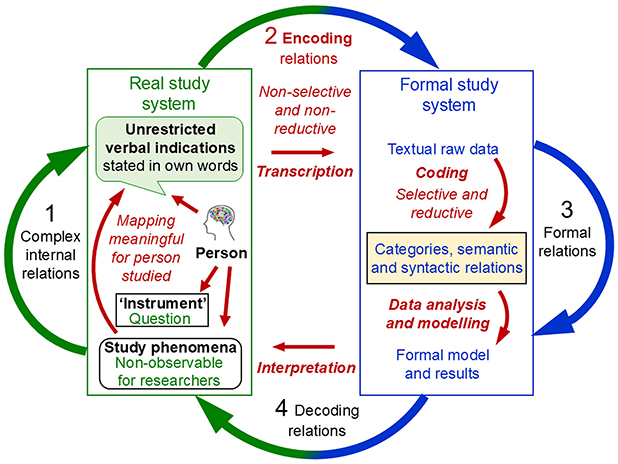

For scientific inquiry in general, Rosen's system8 of interrelated modelling relations conceptualises the basic set of processes that are used to explore a specific part of the ‘world’, conceived as the real system under study (object of research, study phenomena). These processes specify the ways in which the real system being studied is mapped to the formal system that is used for studying it. Stated in category-theoretic terms, these modelling relations relate disjoint categories of objects (Mikulecky, 2000, 2001). In everyday life, we intuitively establish such modelling relations whenever we try to make sense of the complex phenomena that we encounter, grounded in the general belief that these are not completely random but show some kind of order. Figure 1 illustrates the system of interrelated modelling relations. It comprises the real study system and the formal system used for studying it, both conceptualised as mathematical objects, as well as the processes (mappings, relations) within and between them, depicted as arrows. What do these different processes involve?

Figure 1. Rosen's general process structure of empirical science: A coherent system of four interrelated modelling relations. Shown are the real study system and the formal system used for studying it, conceptualised as mathematical objects, as well as the processes (mappings, relations) each within and back and forth between them, depicted as arrows. Adapted from Rosen (1985) and Uher (2022b, Figure 6).

In science (and everyday life), when we perceive events as changes (e.g., in behaviour), we attribute to those changes possible causes (e.g., mental abilities, intentions) that could explain the observed events (e.g., through abduction; Peirce, 1958, CP 7.218). This (presumed) causal relation in the real system (e.g., a person) is depicted as arrow 1. Its exploration requires the encoding of the real changes observed. That is, selected indications that we deem relevant for exploring the presumed causal relations are encoded into objects and relations in the formal study system. These encoding relations9—the data generation—are depicted as arrow 2. The formal system is the explicit scientific model (or, in everyday life, the intuitive mental model) that we create to deal with the information obtained from our selected observations. It serves as a surrogate system that we can explore in ways that are not possible with the real system itself, such as mathematical analysis rather than physical dissection. Hence, the model is analysed in lieu of the actual objects of research (Rosen, 1985, 1991; Uher, 2015b,c,d).

We can manipulate the information encoded in the formal system in various ways using data modelling techniques (e.g., statistical or algorithmic analysis) to try to imitate the causal events that presumably occur in the real system (e.g., simulation models). To do so, we must use our current knowledge of that real system (e.g., a person), its observable indications (e.g., behavioural responses) and (possible) non-observable internal relations (e.g., mental abilities) to decide which specific operational manipulations (e.g., statistical analysis) are appropriate to explore the information about that real system. Through manipulative changes and operations performed in the formal system—the data analysis—depicted as arrow 3, we obtain an implication, such as statistical or simulation results.

Once we believe that our formal system (e.g., structural equation model) is appropriate and may correspond to the presumed causal events in the real system, we must relate the results obtained in the formal system back to the real system studied. This decoding relation, depicted as arrow 4, requires interpreting the formal results with regard to the non-formal events occurring in the real study system. The aim is to check how well the formal model may represent the causes that we presume and that could explain the changes observed in that real system. Decoding thus involves a mapping relation between disjoint categories of objects, namely between the outcomes generated in a formal study system (e.g., mathematical) and the outcomes observable in a real study system (e.g., behavioural).

If the processes of encoding (2), implication (3) and decoding (4) appear to reproduce the presumed causal processes (1) sufficiently accurately, the system of modelling relations is said to commute. Commutation implies that the formal study system established in this process constitutes a successful model of the real system studied—expressed in category-theoretic terms by the equation: 1 = 2 + 3 + 4. Note that these numerals represent not numbers but different kinds of mapping relations, depicted as the four arrows in Figure 1. Hence, the system of modelling relations conceptualises the relations between relations between objects of different kinds (Rosen, 1985, 1991, 1999).
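The ‘+’ in this equation can be read as traversing the arrows one after another. In the more common composition notation, and using symbols introduced here purely for illustration (N for the real system, F for the formal system, and c, ε, ι, δ for arrows 1 to 4; these are not Rosen's own labels), commutation can be written as follows. The approximation sign reflects that, in empirical practice, commutation is only ever established to within some accepted accuracy for the states considered.

```latex
\begin{aligned}
&c : N \to N \ \text{(1) causal relation}, \qquad
 \varepsilon : N \to F \ \text{(2) encoding}, \\
&\iota : F \to F \ \text{(3) implication}, \qquad
 \delta : F \to N \ \text{(4) decoding}, \\
&\text{commutation:}\quad c(n) \approx \delta\big(\iota(\varepsilon(n))\big)
 \ \text{for the states } n \in N \text{ considered, i.e. } c = \delta \circ \iota \circ \varepsilon .
\end{aligned}
```

Reading the composition from right to left mirrors the order of the research process: first encode, then analyse, then decode.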


Rosen's process model is not commonly taught. Many scientists are even puzzled when they first encounter it (Mikulecky, 2011). This is astonishing and unfortunate because it conceptualises how empirical science, in general, and measurement, in particular, are done.

2.3.3 How empirical science is done: The epistemic necessity of making subjective decisions

Rosen's system of interrelated modelling relations highlights several key points that are fundamental to empirical inquiry but often not well considered. First, it specifies that the system studied and the surrogate system (model) used for studying it are of different kinds—real vs. formal. The relations (mappings) established between them—encoding (arrow 2 in Figure 1) and decoding (arrow 4)—therefore involve transformations that cannot be derived from within either system. These relations are thus independent of both systems.

Specifically, the potentially unlimited observations that can be made of a real study system must be mapped onto the limited sign system that is used as its formal model. Encoding therefore requires that scientists reduce and simplify their observations to only those elements that they interpret as relevant for their given research question and that they choose to encode as data. Thus, the essence of encoding is high selectivity and reduction. This requires representational decisions about what to represent and what not, and about how to represent it (Harvard and Winsberg, 2022). For example, observations of variable and highly dynamic phenomena, such as behaviours (e.g., hand gestures), often require their encoding in fuzzy categories. This involves the mapping of fuzzy subsets of observations (e.g., physical states of fingers) into the same formal category (e.g., hand configurations; Allevard et al., 2005). That is, scientific representation, in general, and measurement, in particular, involve the selective, reductive mapping of an open domain of a study system to a closed sign system used as its surrogate model (for general principles, see Uher, 2019).
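The selective, reductive character of encoding into fuzzy categories can be sketched in a few lines of Python. This is a hypothetical illustration, not the procedure of Allevard et al. (2005): it assumes that a hand's state is observed as five finger-flexion angles, that these are first averaged, and that three triangular fuzzy categories with invented breakpoints (`CATEGORIES`) are used. Only the category with the highest membership is kept as the datum, so physically different observations end up as the same sign.

```python
# Hypothetical fuzzy categories over mean finger flexion in degrees (0 = fully extended).
# The breakpoints are illustrative assumptions, not values from the cited study.
CATEGORIES = {
    "open hand": (-45.0, 0.0, 45.0),
    "half-closed hand": (0.0, 45.0, 90.0),
    "fist": (45.0, 90.0, 135.0),
}


def triangular(x, a, b, c):
    """Triangular membership: 0 outside (a, c), rising linearly to 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def encode_hand(finger_angles):
    """Reduce five finger angles to one category label (a many-to-one encoding)."""
    mean_flexion = sum(finger_angles) / len(finger_angles)
    memberships = {
        label: triangular(mean_flexion, *params) for label, params in CATEGORIES.items()
    }
    # Keep only the category with the highest membership; all other detail is discarded.
    return max(memberships, key=memberships.get)


if __name__ == "__main__":
    # Two physically different finger configurations ...
    hand_1 = [5, 10, 12, 8, 15]
    hand_2 = [0, 20, 10, 10, 10]
    # ... are encoded as the same datum: open hand open hand
    print(encode_hand(hand_1), encode_hand(hand_2))
```

Because the encoding discards information twice (averaging, then categorising), no analysis of the resulting labels can recover which finger configuration was actually observed.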

Decoding, the inverse relation from the formal system back to the real system (arrow 4), is likewise a delicate process that is prone to failure at many points. This is because it involves transforming results obtained through formal manipulations (e.g., mathematical, statistical) that are not possible in the real system (e.g., behavioural, psychical) itself (Mikulecky, 2000; Rosen, 1985, 1999). This epistemic necessity makes the modelling process prone to methodomorphism, whereby methods impose structures onto the results that, if erroneously attributed to the study phenomena, may (unintentionally) influence and limit the concepts and theories developed about them (Danziger, 1985; Uher, 2022b).

Second, Rosen's process model highlights that the only part of our scientific models that—taken by itself—is free from operational subjectivity is the formal study system (e.g., statistical model) that is used as a surrogate for the real system studied (arrow 3). However, the formal model is established through the scientists' choices and decisions and is therefore subjective in many ways as well (Mikulecky, 2000, 2011; Rosen, 1991; Strauch, 1976).

“This makes modelling as much an art as it is a part of science. Unfortunately, this is probably one of the least well appreciated aspects of the manner in which science is actually practised and, therefore, one which is often actively denied” (Mikulecky, 2000, p. 421).

In sum, Rosen's general model conceptualises the processes of empirical science that epistemically justify the representation of observable regularities by means of abstract (e.g., mathematical) models. These processes concern the coordination (or correspondence) between theory and observable phenomena, such as the applicability of theoretical concepts to concrete events—known as the problem of coordination (or correspondence) in science (Hempel, 1952; Margenau, 1950; Torgerson, 1958). Specifying the conditions under which abstract representations can be applied to observable phenomena, and used to investigate (and also to quantify) entities of non-observable phenomena, requires measurement.

2.4 Tackling the epistemic circularity of measurement requires a coherent system of modelling relations

Any method of data generation involves categorisation, which enables basic forms of analysis, such as grouping or classifying objects by their similarities and differences. Measurement has advantages over mere categorisation10: it enables more sophisticated analyses of categorised objects and their relations because it additionally permits descriptive differentiation between instances that are of the same kind (quality) and divisible, and that thus differ in quantity (see Hartmann, 1964; Uher, 2018a, 2020b).

Key problems of measurement arise from the fact that many objects of research are not directly observable with our senses (e.g., electric potential, others' mental processes) or not accurately enough (e.g., the weight of small objects). Rosen's process model underlies the approaches that are used to tackle these epistemic challenges, as illustrated here with the problems of measurement coordination and calibration.

2.4.1 Measurement coordination: Exploring the relations between observable indications and unobservable measurands

Measurement coordination is the specific problem of how to justify the assumption that a specific measurement procedure does indeed allow us to measure a specific property in the absence of independent methods for measuring it. This involves the problem of how to justify that specific quantity values are assigned to specific measurands under a specific methodical procedure. Measurement coordination (also “problem of nomic measurement”; Chang, 2004) thus concerns the relations between the abstract terms used to express information about quantities and the ways of measuring those quantities (Luchetti, 2020).

Challenges arise because many phenomena are not observable. We can often directly observe neither the specific quantity to be measured (measurand; e.g., a body's temperature) nor its relation to the observable quantitative indications that are produced by its interaction with the measuring instrument (e.g., length of mercury in glass tubes) and that may be useful to infer the measurand's unknown quantity. Thus, in the early stages of scientific inquiry, the mapping relation between indications and measurands is unknown (e.g., the function relating the length of the mercury column to temperature). But this relation cannot be determined empirically without already established, independent measurement methods—because it is through measurement that such relations are first established. This requires scientists to make preliminary decisions about what counts as an indication of the property studied (e.g., temperature) while knowing neither their specific relations, nor (initially) what exactly that property is, nor what other factors may influence an instrument's observable indications.

The fact that these questions cannot be addressed independently of each other entails epistemic circularity, which has been discussed in many sciences and in philosophy for more than a century (Chang, 2004; Luchetti, 2024; Mach, 1986; Reichenbach, 1920; van Fraassen, 2008). To tackle this problem, scientists must establish appropriate and independent sources of justification for a specific measurement procedure and the assignment of specific values to specific quantities of a specific property. To achieve this, they must coordinate several modelling relations and establish their interrelations coherently.

To construct thermometers, for example, scientists began with preliminary definitions that coordinated a preliminary theoretical concept of temperature with empirical indications that could be obtained from preliminary instruments and their variations. They filled glass tubes with various liquids or gases and studied variations in their extension (volume) resulting from various heat-producing operations. Presuming a linear invariant relation between volume and temperature, scientists experimented with different substances (e.g., alcohol, hydrogen, mercury and water) to identify under which standardised conditions (e.g., pressure and heat production) which substance reliably produces distinct (e.g., monotonically increasing) indications, thus showing thermometric properties. From consistent indications produced by different thermometric substances, scientists could develop different kinds of thermometers, thus enabling triangulation. The redefinition of temperature as the average kinetic energy of particles provided a theoretical foundation to substantiate the linear invariant relation between temperature and the volume of specific substances used in thermometers (under specified conditions; Chang, 2004; JCGM100:2008, 2008; Kellen et al., 2021; Uher, 2020b).
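Under the linearity presumption just described, a preliminary thermometer scale can be sketched as a two-fixed-point interpolation: the column lengths at two reproducible reference states are assigned two temperature values, and all other lengths are interpolated linearly. The fixed points used here (0 and 100 on the Celsius scale, i.e., melting ice and boiling water at standard pressure) are the familiar ones; the tube lengths, the tolerance and the names `make_linear_scale` and `agree` are hypothetical. Two thermometers calibrated this way agree at the fixed points by construction; whether they also agree in between is an empirical question, which is what comparing different thermometric substances tests.

```python
def make_linear_scale(length_at_ice_mm, length_at_steam_mm, t_ice=0.0, t_steam=100.0):
    """Return a function mapping column length (mm) to temperature (degrees Celsius).

    Assumes that column length varies linearly with temperature between
    the two fixed points; this is the presumption, not an established fact.
    """
    degrees_per_mm = (t_steam - t_ice) / (length_at_steam_mm - length_at_ice_mm)

    def to_temperature(length_mm):
        return t_ice + degrees_per_mm * (length_mm - length_at_ice_mm)

    return to_temperature


# Two hypothetical thermometers filled with different substances.
thermometer_a = make_linear_scale(length_at_ice_mm=40.0, length_at_steam_mm=240.0)
thermometer_b = make_linear_scale(length_at_ice_mm=20.0, length_at_steam_mm=120.0)


def agree(reading_a, reading_b, tolerance=0.5):
    """Triangulation check: do both scales assign (nearly) the same value?"""
    return abs(reading_a - reading_b) <= tolerance


if __name__ == "__main__":
    # Both immersed in the same bath; hypothetical column lengths 114 mm and 57 mm.
    t_a, t_b = thermometer_a(114.0), thermometer_b(57.0)
    print(t_a, t_b, agree(t_a, t_b))  # 37.0 37.0 True
```

A disagreement between such scales at intermediate points would indicate that at least one substance does not expand linearly under the given conditions, prompting re-coordination of concept, instrument and method.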

The problem of measurement coordination and its inevitable epistemic circularity can thus be tackled through iterative processes in which a coherent system of assumptions is established to justify specific knowledge claims—using a coherentist approach (Olsson, 2023). With each epistemic iteration, the theoretical concept is re-coordinated with more reliable indications, which in turn enables more precise tests of predictions, more advanced theories, more refined and more standardised methods and instruments of measurement, and so on (Luchetti, 2024; Tal, 2020; van Fraassen, 2008). Through these iterative feedback loops, scientists systematically develop epistemic justifications for having implemented coordinated connections between the (presumed) non-observable measurand (e.g., a cup of coffee's specific temperature), the observable indications produced by its lawful (invariant) interaction with the measuring instrument (e.g., length of mercury), a known reference quantity (e.g., another thermometer used for calibration), and the semiotic representations of the information thus obtained (e.g., ‘37° Celsius’, ‘98.6° Fahrenheit’). This information is then mathematically analysed in the formal system. The obtained result can be used to make justified inferences about the specific quantity of the non-observable measurand.

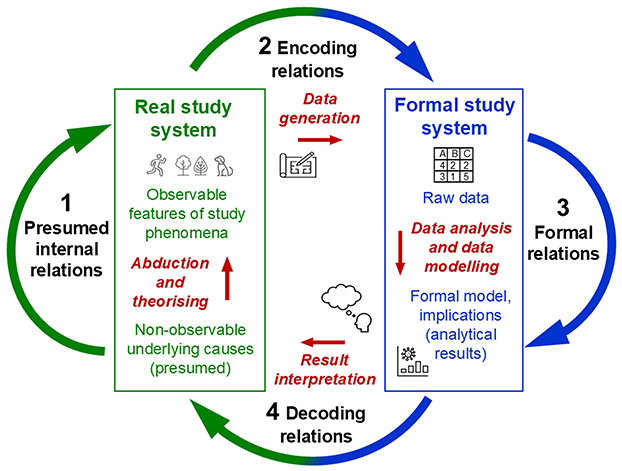

Rosen's general model allows for conceptualising the process structure underlying measurement coordination. Accordingly, this involves modelling the presumed relations within the real study system, comprising the non-observable object of research (measurand), the object used as instrument and the observable indications produced by their (non-observable) empirical interaction. Their presumed causal relations (arrow 1 in Figure 2) are then explored empirically through unbroken, documented, traceable relations to, within and back from the formal system that is used to study that real system (arrows 2, 3 and 4). In iterative feedback loops, the four modelling relations in Rosen's system (arrows 1 to 4) are passed through over and over again, thereby re-coordinating them with one another until their commutativity is established, indicating successful modelling of the real study system.

Figure 2. Physical measurement: A coherent system of four coordinated and calibrated modelling relations.

Necessarily, scientists can start to establish measurement coordination only from preliminary assumptions and theories about the study property and from preliminary instruments, methods and decisions on arbitrary encoding rules to obtain first empirical data. They must use preliminary, yet theoretically informed, analytical operations to obtain possibly informative implications. When decoding and interpreting these analytical results, scientists can also make only preliminary assumptions about the implications that these may have for the presumed relations between instrument indications and measurand. Each iteration in the overarching model of a measurement process enables new theoretical, methodical and empirical insights and refinements, which mutually stimulate each other, leading to cascades of development through which a coherent system of epistemically justified knowledge claims is established.

These iterative processes also involve testing and adjusting the specific parameters of a given measurement procedure—through calibration.

2.4.2 Calibration: Modelling precision and uncertainty in measurement

Calibration procedures establish reliable relations between the instrument indications obtained under a given method in the real study system and the measurement results obtained in the formal model, which specify information about the actual (non-observable) quantity to be measured (measurand). Calibration is theoretically constructed and empirically tested by modelling uncertainties and systematic errors under idealised theoretical and statistical assumptions (e.g., about distribution patterns and the randomness of influencing factors). The aim is to improve the accuracy of the measurement results by specifying the ranges of uncertainties and errors for all parameters involved in a given measurement procedure. This allows for incorporating corrections for systematic effects (e.g., of pressure on temperature) and for adjusting inconsistent observations of instrument indications (Chang, 2004; Luchetti, 2020; McClimans et al., 2017; Tal, 2017).
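A deliberately simplified sketch of one calibration step may help. It assumes a single working instrument compared against a reference thermometer at six hypothetical set points, a straight-line calibration curve fitted by ordinary least squares, and errors that are independent and random (the kind of idealised statistical assumption mentioned above). The fitted offset and slope capture the systematic error; the residual standard deviation is one simple estimate of the remaining random scatter; `correct` inverts the curve to turn a raw indication into a corrected value. All data and names are hypothetical, and real procedures, such as those described in JCGM 100:2008, model many more uncertainty components.

```python
from math import sqrt


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def calibrate(reference_values, indications):
    """Fit a calibration curve (indication as a function of reference value)."""
    intercept, slope = fit_line(reference_values, indications)
    residuals = [
        yi - (intercept + slope * xi) for xi, yi in zip(reference_values, indications)
    ]
    # Residual standard deviation with n - 2 degrees of freedom (two fitted parameters).
    scatter = sqrt(sum(r * r for r in residuals) / (len(residuals) - 2))

    def correct(indication):
        """Invert the curve: raw instrument indication -> corrected value."""
        return (indication - intercept) / slope

    return intercept, slope, scatter, correct


# Hypothetical comparison data (degrees Celsius): reference thermometer vs. working instrument.
REFERENCE = [0.0, 20.0, 40.0, 60.0, 80.0, 100.0]
INDICATION = [0.6, 20.4, 40.5, 60.5, 80.4, 100.6]

if __name__ == "__main__":
    offset, gain, scatter, correct = calibrate(REFERENCE, INDICATION)
    # Roughly: offset 0.5, gain 1.0, scatter 0.1; a raw 37.5 is corrected to about 37.0.
    print(round(offset, 3), round(gain, 3), round(scatter, 3), round(correct(37.5), 3))
```

Fitting the indication as a function of the reference value, and then inverting, keeps the reference on the side treated as known; this is one common convention, not the only one.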

That is, calibration involves modelling activities that are aimed at refining the coordinated structure of a measurement process. In Rosen's scheme, this means that the parameters used to establish proportional (quantitative) relations in the measurement model are adjusted within and across all four modelling relations (arrows 1 to 4 in Figure 2). These modelling relations are passed through in iterative feedback loops to obtain quantitative parameter value ranges that maximise the predictive accuracy of the overarching model. Thus, calibration refers to the coordination of abstract quantity terms in the formal model with the specific quantities to be measured in real study objects when a specific measurement method (including measuring instrument) is used (Luchetti, 2020; McClimans et al., 2017).

This model-based view of calibration illustrates the coherentist approach that is necessary to tackle the epistemic circularity of measurement. This involves establishing theoretical and empirical justifications for the assumption that a specific method (including instrument) enables the measurement of a specific property in the absence of other independent methods for measuring it. Once different methods (and instruments) for measuring the same property (e.g., temperature) are developed, uncertainties and systematic errors can also be modelled across different procedures and instruments, for example, to calibrate thermometers that use different kinds of thermometric substances (e.g., gases and liquids; Chang, 2004).

Calibration processes are necessary to implement numerical traceability11—thus, to establish for the numerical values used as measurement results a publicly interpretable meaning regarding the specific quantities measured (how much of the studied property there is; Uher, 2022a). To ensure that measurement results are reliably interpretable and represent the same quantitative information regarding the measurands across time and contexts (e.g., a mass of 1 kilogram), metrologists defined primary references, which are internationally accepted (e.g., through legislation) and assumed to be stable (e.g., prototype kilogramme12). From each primary reference, large networks of unbroken documented connection chains were established (via national references) to all working references that are used in measurement procedures in research and everyday life (e.g., laboratory weighing scales, household thermometers; JCGM200:2012, 2012). These calibration chains specify uncertainties and errors as quantitative indications of the quality of a measurement result to assess its precision and accuracy (JCGM100:2008, 2008; Uher, 2020b).
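How uncertainty accumulates along such a calibration chain can be illustrated with the GUM's rule for combining independent standard uncertainties by root-sum-of-squares, here in its simplest form with all sensitivity coefficients equal to 1 and no correlations. The three links and their uncertainty values (in degrees Celsius) are hypothetical, as are the function names. The sketch shows why every additional link in the chain can only add to, never reduce, the uncertainty attached to a working instrument's result.

```python
from math import sqrt

# Hypothetical standard uncertainties (degrees Celsius) contributed by each calibration link.
CHAIN = [
    ("primary -> national reference", 0.01),
    ("national -> laboratory reference", 0.02),
    ("laboratory -> household thermometer", 0.02),
]


def combined_standard_uncertainty(uncertainties):
    """Root-sum-of-squares for independent components with unit sensitivity."""
    return sqrt(sum(u * u for u in uncertainties))


def expanded_uncertainty(u_c, coverage_factor=2.0):
    """U = k * u_c; k = 2 gives roughly 95 % coverage if a normal distribution is assumed."""
    return coverage_factor * u_c


def cumulative_uncertainty(chain):
    """Combined standard uncertainty after each successive link."""
    values, result = [], []
    for name, u in chain:
        values.append(u)
        result.append((name, combined_standard_uncertainty(values)))
    return result


if __name__ == "__main__":
    for name, u_c in cumulative_uncertainty(CHAIN):
        print(f"{name}: u_c = {u_c:.4f}")
    total = combined_standard_uncertainty(u for _, u in CHAIN)
    print(f"expanded (k = 2): {expanded_uncertainty(total):.2f}")
```

With these hypothetical values the combined standard uncertainty at the household thermometer is 0.03 and the expanded uncertainty 0.06; the point is the monotonic growth along the chain, not the particular numbers.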

2.4.3 The theoretical and empirical process structure of measurement: A coordinated and calibrated system of four interrelated modelling relations

The essence of measurement is thus a theory-laden process structure that involves modelling relations each within a real and a formal study system as well as back and forth between them, which are coherently connected with one another in an overarching process, as conceptualised in Rosen's general model (Figure 2). This requires data generation methods that enable the non-observable quantities to be measured to interact empirically with a measuring instrument. Identifying observable indications of these interactions that are (possibly) informative about these measurands requires a general model of coherent and epistemically justified interrelations within and between the real and the formal study system. These are re-coordinated and re-calibrated with one another by empirically re-testing the presumed relations (e.g., comparing predicted and observed indications), re-adjusting their parameters (e.g., errors, uncertainties) and refining assumptions (e.g., randomness).

In sum, a coordinated and calibrated system of interrelated modelling activities is necessary to empirically implement unbroken traceable connection chains that establish proportional (quantitative) relations between the measurement results obtained in the formal model and both (1) the measurand's unknown quantity (data generation traceability) and (2) a known reference quantity (numerical traceability) in the real study system. Measurement models thus developed allow us to derive from defined observable instrument indications calibrated measurement results that can be (1) justifiably attributed to the measurands, and (2) publicly interpreted in their quantitative meaning regarding those measurands—the two epistemic criteria of measurement across sciences (Uher, 2020b, 2022a). The insights gained from iteratively developing the process structure of a measurement model may also necessitate a revision of the definitions and theoretical explanations of the objects and relations in the real system (e.g., temperature redefined as average kinetic particle energy).

Clearly, physical measurement procedures cannot be directly applied to psychology. But what specifically are the challenges for devising analogous processes in psychology?

3 Psychology's inherent challenges for quantitative research

The history of metrology testifies to the challenges involved in tackling the problems of measurement coordination and calibration in physical measurement (Chang, 2004)—thus, in the study of invariant relations in non-living nature, which can therefore be formalised in immutable laws, natural constants and mathematical formulas. Psychology, however, explores phenomena (e.g., behaviours, thoughts and beliefs) that are—in themselves—variable, context-dependent, changing and developing over time (Uher, 2021b). Such peculiarities are characteristic of living systems (e.g., psyche and society) and not studied in metrology. These peculiarities entail that the low replicability of psychological findings is not just an epistemic problem that could be remedied with more transparent and robust methods, as many currently believe. Rather, it is also a reflection of the indeterminate variability and changeability of the study phenomena themselves (arrow 1 in Figure 1). Low replicability of psychological findings thus reflects not just epistemic uncertainty of ‘measurement' but also fundamental ontic indetermination (Scholz, 2024).

3.1 Psychology's study phenomena: Peculiarities of higher-order complexity

Living systems (e.g., biotic, psychical and social) are of higher-order complexity. They feature peculiarities not known from non-living systems (Baianu and Poli, 2011; Morin, 2008).

3.1.1 Emergent properties not present in the processes from which they arise

In higher-order (super) complex systems, interactions occur between various kinds of processes on different levels of organisation from which novel properties emerge on the level of their whole that are not present in the single processes from which they arise. These novel, higher-level properties can also feed back to and change the lower-level processes from which they emerge. Such dynamic multi-level feedback loops lead to continuous change and irreversible development on all levels of organisation (Morin, 1992; Rosen, 1970, 1999).

Human languages, for example, gradually emerged from individuals' interactions with one another. The language of a community, in turn, mediates and shapes the ways in which its single individuals perceive, think and organise their experiences into abstract categories. Through dynamic multi-level feedback processes over time, individuals, their community and their language mutually influence each other, thereby developing continuously and becoming ever more complex (Boroditsky, 2018; Deutscher, 2006; Valsiner, 2007; Vygotsky, 1962). This entanglement of mind and language is what makes language-based methods possible in science in the first place. But the intricacies of language also promote conceptual confusions, which are still largely overlooked, as this section will show.

Emergence also entails complex relations between the levels of parts and wholes.

3.1.2 Complex wholes and their parts: One–to–many, many–to–one and many–to–many relations

In living systems (e.g., individuals), the same process (e.g., a specific feeling) can generate different outcomes (e.g., different behaviours) at different times, in different contexts or in different individuals—thus, involving one–to–many relations (multifinality, pluripotency). Vice versa, different processes (e.g., of abstract thinking) can generate the same outcome (e.g., solving the same task)—thus, involving many–to–one relations (equifinality, degeneracy; Cicchetti and Rogosch, 1996; Mason, 2010; Richters, 2021; Sato et al., 2009; Toomela, 2008; Uher, 2022b). To consider multiple processes and outcomes at once, we must conceptualise many–to–many relations between the parts and their whole on different levels of organisation.

This entails that no specific relations from observable indications to non-observable phenomena can be identified that apply to all individuals in all contexts and at all times. This makes the problem of measurement coordination harder to solve in psychology. Specifically, complex relations challenge the appropriateness of the sample-level statistics commonly used in psychology, which are aimed at identifying invariant13 (e.g., cause–effect) relations, such as between latent and manifest variables in factor analyses or structural equation models—that is, one–to–one relations.
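The contrast between the one–to–one relations presupposed by such models and the relations described above can be sketched by treating hypothetical process–outcome observations as a relation (a set of pairs) rather than as a function. The process and outcome labels are invented for illustration only. A mapping supports unambiguous inference from an observed outcome back to its generating process only if it is one–to–one; as soon as multifinality or equifinality is present, an outcome is compatible with several processes.

```python
# Hypothetical observations: (generating process, observable outcome) pairs.
OBSERVATIONS = {
    ("frustration", "raised voice"),  # one process ...
    ("frustration", "silence"),  # ... several outcomes (multifinality)
    ("frustration", "leaving the room"),
    ("analogical reasoning", "task solved"),  # several processes ...
    ("rule-based reasoning", "task solved"),  # ... one outcome (equifinality)
}


def outcomes_of(process, pairs):
    """All outcomes observed for a given process."""
    return {o for p, o in pairs if p == process}


def processes_behind(outcome, pairs):
    """All processes compatible with an observed outcome."""
    return {p for p, o in pairs if o == outcome}


def is_one_to_one(pairs):
    """True only if every process has one outcome and every outcome one process."""
    processes = {p for p, _ in pairs}
    outcomes = {o for _, o in pairs}
    return all(len(outcomes_of(p, pairs)) == 1 for p in processes) and all(
        len(processes_behind(o, pairs)) == 1 for o in outcomes
    )


if __name__ == "__main__":
    print(sorted(processes_behind("task solved", OBSERVATIONS)))
    print(is_one_to_one(OBSERVATIONS))  # False
```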

Complex multi-level relations also entail that the properties of parts identified in isolation (e.g., cells) cannot explain the whole (e.g., organism) because its properties emerge only from the parts' joint interactions. Changes in single parts or single relations between them can change the properties of the whole. Psychical processes cannot even be isolated from one another, although they can be qualitatively distinguished (Luria, 1966). Thus, complex wholes are more than and different from the sum of their parts (Morin, 1992, 2008; Nowotny, 2005; Ramage and Shipp, 2020; Uher, 2024). All this entails that living systems cannot be explored by reducing them to the parts of which they are composed (e.g., organisms to cells), as is possible for the non-living systems (e.g., technical ones) featuring invariant relations as studied in metrology (Rosen, 1985, 1991).

3.1.3 Humans are thinking intentional agents who make sense of their ‘world'

Psychologists also cannot ignore the fact that humans are thinking agents who have aims, goals and values that they pursue with intention and who can anticipate (mentally model) future outcomes and proactively adjust their actions accordingly. Humans hold personal (subjective) and socio-cultural views on their ‘world', including on the psychological studies in which they partake. Individuals memorise and learn. Therefore, simple repetitions of identical study conditions (e.g., experiments and items) cannot be used (Danziger, 1990; Kelly, 1955; Shweder, 1977; Smedslund, 2016a; Uher, 2015a; Valsiner, 1998).

In sum, psychology's study phenomena feature peculiarities that do not occur in the properties amenable to physical measurement. These peculiarities complicate the design of analogous research processes that meet the two epistemic criteria of measurement. In the following, we explore these complications step by step, starting at the level of data analysis.

3.2 Psychology's focus on aggregate level analysis

Psychology's primary scientific focus (unlike sociology's) is on the individual, which constitutes its theoretical unit of analysis. The empirical units of analysis in psychological ‘measurement', however, are groups. Why is this so? And what justifies the assumption that results obtained at aggregate levels are suited to quantify individual-level phenomena?

3.2.1 Indefinitely complex and uncontrollable influence factors: Randomisation and large sample analyses

Unlike metrologists and physical scientists, psychologists cannot isolate their study objects and experimentally manipulate the (presumed) quantities to be measured in them, such as individuals' processing speed, reasoning abilities or beliefs (Trendler, 2009). Moreover, in physical measurement, the influencing factors are comparatively few and operate exclusively in the here-and-now. By contrast, the factors influencing psychology's study phenomena, such as internal and external conditions causing mental distraction, are indefinitely complex and ever-changing and can even transcend the here-and-now (Barrett et al., 2010; Smedslund et al., 2022; Uher, 2016a).

To deal with these challenges, psychologists study groups of individuals that are assumed to be sampled randomly with regard to these unspecifiable and uncontrollable influence factors. To account for the impact of these factors, psychologists analyse samples large enough to reveal regularities in the study phenomena beyond pure randomness (e.g., by comparing experimental with control groups). This approach necessitates the statistical analysis of group-level distribution patterns. The statistical results, however, are commonly interpreted with regard to single individuals (e.g., their beliefs). That is, from statistical analysis to result interpretation, psychologists shift their unit of analysis from the sample back to the individual, without explanation but in line with their theoretical unit of analysis (Danziger, 1985; Richters, 2021; Uher, 2022b).
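The following minimal simulation sketches this group-comparison logic under explicitly hypothetical assumptions: normally distributed individual effects with a positive mean, a single baseline value standing in for all uncontrolled influences, and random assignment to groups. Unlike in a real study, each simulated individual's effect is known, which makes visible what the group-level result does and does not say about single individuals.

```python
import random
from statistics import mean

rng = random.Random(7)  # fixed seed so the sketch is reproducible
n = 2000

# Hypothetical individual-level effects of an intervention: positive on
# average but heterogeneous, so some individuals are affected negatively.
effects = [rng.gauss(0.5, 1.0) for _ in range(n)]
# Uncontrolled influence factors, summarised as one baseline value per person.
baseline = [rng.gauss(0.0, 1.0) for _ in range(n)]
# Random assignment to the experimental (True) or control (False) group.
assigned = [rng.random() < 0.5 for _ in range(n)]

experimental = [b + e for b, e, a in zip(baseline, effects, assigned) if a]
control = [b for b, a in zip(baseline, assigned) if not a]

group_difference = mean(experimental) - mean(control)
share_negative = sum(e < 0 for e in effects) / n

print(f"group-level mean difference: {group_difference:.2f}")
print(f"share of individuals with a negative effect: {share_negative:.2f}")
```

The group-level mean difference is clearly positive, yet roughly three in ten simulated individuals have a negative effect. In an actual experiment, each individual is observed in only one condition, so this individual-level information cannot be recovered from the group comparison.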

But in what ways can results on aggregates be informative about single individuals?

3.2.2 The ergodic fallacy: Psychology's common sample–to–individual inferences built on mathematical errors

Statistical analyses of aggregated data sets can reveal information about single cases only when the cases' synchronic and diachronic variations are equal (isomorphic), a property of some stochastic and dynamic processes in non-living systems termed ergodicity. As early as the 1930s, mathematical-statistical (ergodic) theorems¹⁴ were used to prove that ergodicity does not hold for cases that vary, change and develop (Birkhoff, 1931). Hence, psychology's study phenomena are non-ergodic, which means that between-individual (synchronic) variations are uninformative about within-individual (diachronic) variations. Thus, when using sample-level analyses (e.g., factor analysis) to study individual-level phenomena (e.g., psychical ‘mechanisms'), psychologists commit an inferential error: the ergodic fallacy (Bergman and Trost, 2006; Danziger, 1990; Lamiell, 2018, 2019; Molenaar and Campbell, 2009; Richters, 2021; Smedslund, 2016a, 2021; Speelman and McGann, 2020; Uher, 2022b, 2015d; Valsiner, 2014b; van Geert, 2011; von Eye and Bogat, 2006).
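A toy simulation can make this inferential gap concrete. It is a sketch under hypothetical assumptions (arbitrary parameters, with correlations used as a simple summary of co-variation), not a formal test of ergodicity: simulated persons differ in stable levels that raise two variables x and y together, whereas within each person, occasion-to-occasion fluctuations in x and y run in opposite directions.

```python
import random


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate(n_persons=100, n_occasions=50, seed=1):
    """Hypothetical data: persons differ in stable levels that raise x and y
    together (between-person structure), while each person's fluctuations in
    x and y over occasions run in opposite directions (within-person structure)."""
    rng = random.Random(seed)
    persons = []
    for _ in range(n_persons):
        level = rng.gauss(0, 3)
        xs, ys = [], []
        for _ in range(n_occasions):
            fluctuation = rng.gauss(0, 1)
            xs.append(level + fluctuation)
            ys.append(2 * level - fluctuation + rng.gauss(0, 0.1))
        persons.append((xs, ys))
    return persons


persons = simulate()

# Synchronic (between-person) analysis: correlate the persons' mean levels.
mean_x = [sum(xs) / len(xs) for xs, _ in persons]
mean_y = [sum(ys) / len(ys) for _, ys in persons]
r_between = pearson(mean_x, mean_y)

# Diachronic (within-person) analysis: correlate each person's own time series.
r_within = [pearson(xs, ys) for xs, ys in persons]

print(f"between-person r: {r_between:.2f}")
print(f"within-person r: {min(r_within):.2f} to {max(r_within):.2f}")
```

In this toy case, the between-person correlation is strongly positive, whereas every within-person correlation is strongly negative. Inferring the within-person relation from the sample-level result would therefore get even its direction wrong.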

In sum, the higher-order complexity of psychology's study phenomena poses considerable challenges for empirical research. The uncontrollability of influencing factors requires statistical analyses of large samples. But individuals' complexity renders sample-level results uninformative about the single individual. These and further problems complicate the development of genuine analogues of measurement.

3.3 Psychological ‘measurement' theories: Failure to conceptualise a coherent system of interrelated modelling relations

As Section 2 showed, measurement requires a coherent system of four interrelated modelling relations—each within a real and a formal study system and back and forth between them (arrows 1 to 4 in Figure 2). The ‘measurement' theories established in psychology, however, such as Representational Theory of Measurement (RTM) and psychometrics, focus on just some of these modelling relations, thereby ignoring the overall model that is necessary to relate them coherently to one another.

3.3.1 Representational Theory of Measurement: Simple observable relations represented in mathematical relations

Representational Theory of Measurement (RTM; Krantz et al., 1971; Luce et al., 1990; Suppes et al., 1989) formalises axiomatic conditions by which observable relational structures can be mapped onto symbolic relational structures. It provides mathematical theories for this mapping (representation theorem), including permissible operations for transforming the symbolic structures without breaking their mapping relations onto the observable structures (uniqueness theorem; Narens, 2002; Vessonen, 2017). That is, representational theory specifies the semiotic representation of observable indications—the encoding and decoding relations in Rosen's structural model. The theory's focus on isomorphisms—thus, on reversible one–to–one relations between observables and data (arrows 2 and 4 in Figure 2)—presupposes that the objects of research feature properties with quantitative relations that are directly observable (e.g., ‘greater than' or ‘less than'). Such relations can be mapped straightforwardly onto a symbolic system that preserves these relations (e.g., ordinal variables; Suppes and Zinnes, 1963).
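For the simplest (ordinal) case, the two theorems can be sketched in a few lines. In this illustration, which uses hypothetical objects and relations, a numerical assignment represents the observed ‘greater than' relation if it preserves that relation in both directions; a strictly increasing transformation of that assignment is again a representation, whereas a transformation that is not order-preserving breaks the mapping.

```python
# Hypothetical empirical relational structure: four objects and the pairs
# (x, y) for which "x is greater than y" was observed (here, a simple order).
objects = ["a", "b", "c", "d"]
observed_greater = {
    ("b", "a"), ("c", "a"), ("c", "b"),
    ("d", "a"), ("d", "b"), ("d", "c"),
}


def represents(phi):
    """True if the numerical assignment phi preserves the observed relation,
    i.e. x observed greater than y  <=>  phi[x] > phi[y], for all pairs."""
    return all(
        ((x, y) in observed_greater) == (phi[x] > phi[y])
        for x in objects for y in objects if x != y
    )


phi = {"a": 1, "b": 2, "c": 3, "d": 4}                      # one representation
monotone = {k: 10 * v ** 2 + 7 for k, v in phi.items()}     # strictly increasing for v > 0
non_monotone = {k: (v - 2.5) ** 2 for k, v in phi.items()}  # not order-preserving

print(represents(phi), represents(monotone), represents(non_monotone))  # True True False
```

Note that the observed relation is simply supplied as input; how it arises from the objects studied lies outside the scope of this check.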

Psychologists, however, encounter tremendous challenges when trying to identify empirical regularities in observable (presumed) indications of psychical phenomena as well as (possibly) quantitative relations in these indications (e.g., in behaviours, performances). Highly variable dynamic study phenomena necessitate fuzzy encoding relations, which can be defined and established differently. Specifying such many–to–one encoding relations is seldom straightforward. It requires theory-driven (and in part arbitrary) decisions about what to formally represent and how. These decisions may impact the information encoded in the data and, thus, the results that can be obtained from them (Uher, 2019). All this further complicates the problem of measurement coordination in psychology (Luchetti, 2024; Uher, 2022b, 2023a). In all sciences, measurement requires highly selective and reductive representation. In psychology, it requires mapping information about a highly complex study system, which cannot be fully defined in principle (e.g., behavioural, psychical and belief systems), to a simple system, which can be fully defined (e.g., structural equation model).
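The dependence of data on encoding decisions can be sketched with two hypothetical encoding rules applied to the same hypothetical behavioural records. Both rules are many–to–one (each discards some information), and both seem defensible, yet they produce opposite rank orders of the two individuals.

```python
# Hypothetical behavioural records: durations (in seconds) of the observed
# episodes of one target behaviour for two individuals.
records = {
    "P1": [2, 3, 2, 4, 3, 2, 3],  # many short episodes
    "P2": [20, 25],               # few long episodes
}


def encode_frequency(episodes):
    """Encoding rule A: count episodes (many-to-one: durations are discarded)."""
    return len(episodes)


def encode_duration(episodes):
    """Encoding rule B: total time in the behaviour (frequency is discarded)."""
    return sum(episodes)


for rule in (encode_frequency, encode_duration):
    scores = {person: rule(eps) for person, eps in records.items()}
    ranking = sorted(scores, key=scores.get, reverse=True)
    print(rule.__name__, scores, ranking)
# encode_frequency {'P1': 7, 'P2': 2} ['P1', 'P2']
# encode_duration {'P1': 19, 'P2': 45} ['P2', 'P1']
```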

Representational theory, however, provides neither concepts nor procedures for how and why some observations should be mapped to a symbolic relational system (Mari et al., 2017; Schwager, 1991). Concretely, it provides no concepts to specify the relations between observables and the (non-observable) quantity to be measured (measurand) in a study object. Nor does it provide concepts to specify the measurand's empirical interactions with the measuring instrument that first produce these observable indications (arrow 1 in Figure 2). Such specifications, however, are necessary to design suitable instruments and to operate them in defined empirical procedures (methods). They are also necessary to justify why some indications, but not others, should be observed, and thus to generate data that can be informative about the measurands (arrow 2). Unsurprisingly, then, representational theory also provides no concepts for controlling the effects of influence properties or for modelling precision and uncertainty. The theory confines empirical research to simple observables that can be mapped easily onto useful mathematical relations, and vice versa. As Rosen (1985) highlighted, however, encoding and decoding relations (arrows 2 and 4) involve transformations that cannot be derived from within either system and that are therefore independent of these systems.

In sum, representational theory ignores the entire system of traceable modelling relations that must be coordinated and calibrated with one another to enable epistemically justified and publicly interpretable inferences from defined observable indications to the (non-observable) quantity of interest; these are the key criteria of measurement (Figure 2). Instead, it stipulates a purely representationalist and operationalist procedure that simplifies observations so as to align them with mathematically useful relations, in line with Stevens' (1946, p. 667) earlier redefinition of ‘measurement' as “the assignment of numerals to objects according to a rule” (other than randomness; Stevens, 1957). These simplistic notions formed the basis for psychology's theories and practices of pragmatic quantification and separated them from those of measurement used in metrology and physics (Mari et al., 2021; McGrane, 2015; Uher, 2021c). Still today, these representationalist and operationalist notions of ‘measurement' underlie psychology's main method of quantitative data generation: rating ‘scales', in which numerical scores are straightforwardly assigned to specific answer categories.
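The arbitrariness of assigning numerals to answer categories ‘according to a rule' can be sketched as follows. The categories, codings and answers are hypothetical. Both codings preserve the order of the categories, yet they reverse which group has the higher mean score, because the comparison depends on the spacing between numerals, which the assignment rule itself does not establish empirically.

```python
categories = ["never", "rarely", "sometimes", "often", "always"]
coding_a = dict(zip(categories, [1, 2, 3, 4, 5]))   # conventional equal spacing
coding_b = dict(zip(categories, [1, 2, 3, 4, 10]))  # equally order-preserving

# Hypothetical answers of two groups to the same rating item.
group_1 = ["often", "often", "often", "often"]
group_2 = ["always", "always", "never", "sometimes"]


def mean_score(answers, coding):
    """Mean of the numerals assigned to the answers under a given coding."""
    return sum(coding[a] for a in answers) / len(answers)


for label, coding in (("A", coding_a), ("B", coding_b)):
    print(label, mean_score(group_1, coding), mean_score(group_2, coding))
# A 4.0 3.5
# B 4.0 6.0
```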

These representationalist and operationalist notions of ‘measurement' also underlie psychometrics, a term meant to denote the “science of measuring the mind” (Borsboom, 2005).

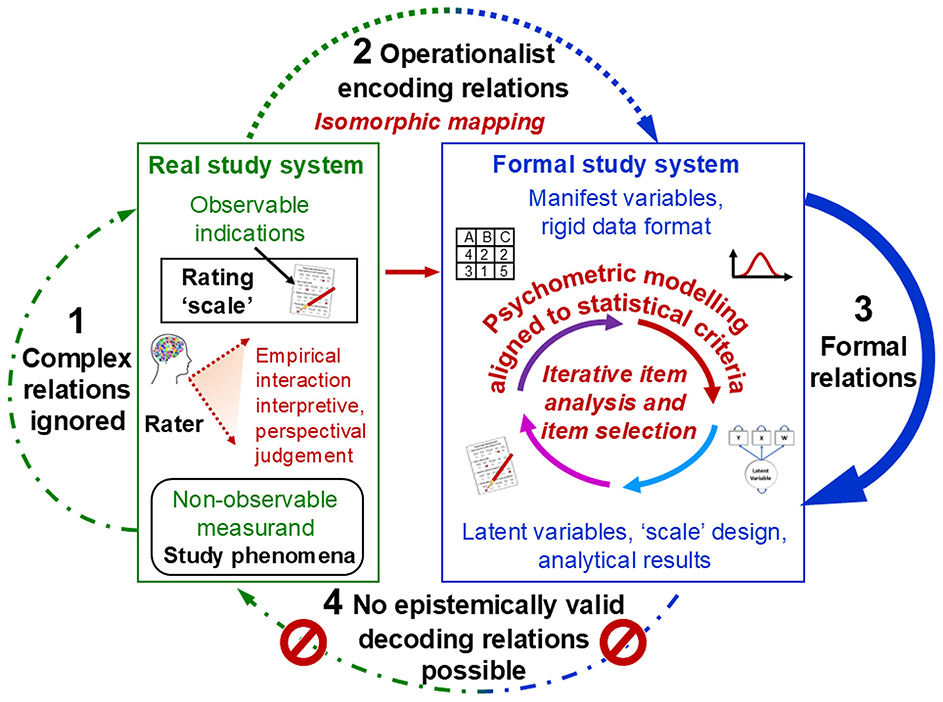

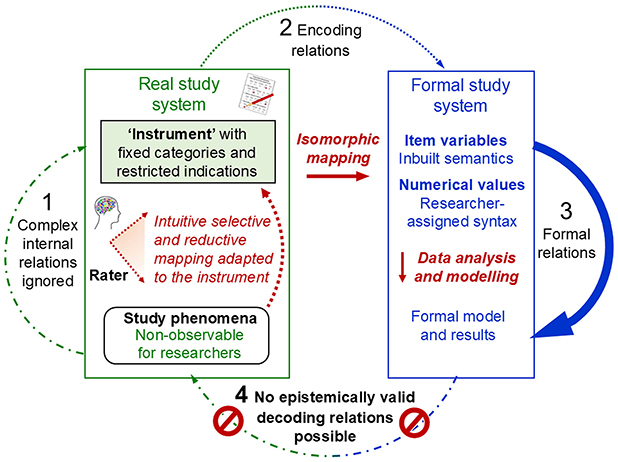

3.3.2 Psychometrics: Formal modelling aligned to statistical criteria and theories, enabling pragmatic result-dependent data generation

The triviality of the isomorphic relations in encoding and decoding (arrows 2 and 4 in Figure 3), as stipulated by representational theory and Stevens' redefinition of ‘measurement' and implemented in rating methods, shifted psychologists' focus away from the real study system (arrow 1) to the formal model (arrow 3). Statistical theories and methods, such as those of psychometrics, were advanced to develop sophisticated models and analyses that enable the reliable and purposeful discrimination between cases (e.g., individuals). This involved designing psychometric ‘instruments' that allow for generating data with useful statistical properties (e.g., normal distribution, high item discrimination). Stevens' (1946) mathematically defined ‘scales' (e.g., ordinal, interval, ratio) contributed further concepts to this end, although these scales are neither exhaustive nor universally accepted (Thomas, 2019; Uher, 2022a; Velleman and Wilkinson, 1993).

Figure 3. Psychometrics: Result-dependent methods of data generation and data analysis. Adapted from Uher (2022b, Figure 9).

Psychometrics serves its pragmatic and utilitarian purposes well. But its approaches align the formal system (arrow 2 in Figure 3) to the criteria and theories on which the formal model and its manipulations are built (e.g., item-response theory), regardless of the specific phenomena studied (e.g., behaviours and beliefs). Indeed, some even consider representation to be irrelevant for psychological ‘measurement' (e.g., Borsboom and Mellenbergh, 2004; Michell, 1999). The epistemic necessity to conceptualise and implement an empirical interaction with the (non-observable) quantity to be measured in individuals drops out of sight. Psychometricians also overlook that identifying observable indications of these empirical interactions that may be informative about the measurands requires theoretical knowledge about both the real system studied and the methodical system (including the ‘measuring instruments') used to study it (arrow 1). Instead, psychometricians choose ‘instrument' indications (e.g., answer categories on rating ‘scales') onto which pragmatically useful data structures (e.g., fixed numerical value ranges) can be mapped straightforwardly (Uher, 2018a, 2022a,b).
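As an illustration of aligning an ‘instrument' to statistical criteria, the following sketch selects items by their corrected item-total correlation (each item correlated with the sum of the remaining items), a common index of item discrimination. The response data, item labels and cut-off value are hypothetical. Note that the selection criterion refers only to the statistical structure of the responses, not to what the items are about.

```python
from statistics import mean

# Hypothetical 0/1 responses of six respondents (rows) to four items (columns).
items = ["i1", "i2", "i3", "i4"]
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def corrected_item_total(j):
    """Correlation of item j with the sum of all other items."""
    item = [row[j] for row in responses]
    rest = [sum(row) - row[j] for row in responses]
    return pearson(item, rest)


THRESHOLD = 0.3  # illustrative cut-off, chosen arbitrarily for this sketch
discrimination = {name: corrected_item_total(j) for j, name in enumerate(items)}
retained = [name for name, r in discrimination.items() if r >= THRESHOLD]

print({k: round(v, 2) for k, v in discrimination.items()})
print(retained)
```

With these data, items i3 and i4 are discarded and i1 and i2 are retained. Which items survive is thus decided by the results themselves, which is what makes this form of data generation result-dependent.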

Hence, by focusing on statistical modelling (arrow 3, Figure 3), psychometricians neglect the three other modelling relations (arrows 1, 2 and 4) without which a formal system cannot be coordinated and calibrated with the real study system. Their interrelations are neither conceptualised nor empirically established but simply decreed, as in the operationalist definition of ‘intelligence' as what an IQ-test measures (Boring, 1923; van der Maas et al., 2014). Specifically, psychometricians fail to conceptualise the real study system, which comprises the study object, the measurand, the instrument and their empirical interaction producing observable indications. Therefore, they overlook that the quantitative scores recorded in ‘intelligence tests' (e.g., number of correct answers) are properties of the outcomes of intellectual abilities, not of these abilities themselves.

Indeed, any test performance may involve several, qualitatively different intellectual abilities and modes of processing (e.g., symbolic, situational and verbal). More intelligent individuals may use qualitatively different (e.g., more efficient) abilities than less intelligent ones, different modes of processing and even multiple ones dynamically, leading to quantitatively different test performances. But none of these intricate many–to–one, one–to–many and many–to–many relations are considered in psychometrics. It only models relations of specific test outcomes to the abstract ‘intelligence' construct that they operationally define, which is then re-interpreted as a real unitary object to be ‘measured' (Khatin-Zadeh et al., 2025; Toomela, 2008; Uher, 2020b, 2021d,c, 2022b).
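The many–to–one character of a number-correct score can be sketched with two hypothetical test-takers whose response patterns, assumed here to stem from different modes of processing, differ item by item. The item labels and patterns are invented for illustration.

```python
from math import comb

items = ["sym1", "sym2", "sym3", "verb1", "verb2", "verb3"]

# Hypothetical response patterns (1 = correct) of two test-takers assumed to
# rely on qualitatively different modes of processing.
patterns = {
    "A (mainly symbolic)": {"sym1": 1, "sym2": 1, "sym3": 1, "verb1": 1, "verb2": 0, "verb3": 0},
    "B (mainly verbal)": {"sym1": 0, "sym2": 0, "sym3": 1, "verb1": 1, "verb2": 1, "verb3": 1},
}


def number_correct(pattern):
    """The recorded quantity: a property of the outcomes, not of the processes."""
    return sum(pattern[item] for item in items)


scores = {person: number_correct(p) for person, p in patterns.items()}
print(scores)  # both test-takers receive the same score of 4

# Number of distinct 0/1 response patterns that yield a score of 4 on 6 items.
print(comb(len(items), 4))  # 15
```

Both test-takers receive the same score, and on six items, 15 different response patterns map onto a score of 4. The score alone therefore cannot tell which abilities or modes of processing produced it.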