- ¹School of Social Sciences, Nanyang Technological University, Singapore, Singapore

- ²Faculty of Human Sciences, University of Tsukuba, Tsukuba, Japan

- ³Japan Society for the Promotion of Science (JSPS), Tokyo, Japan

- ⁴Department of Psychology, National University of Singapore, Singapore, Singapore

- ⁵Graduate School of Intercultural Studies, Kobe University, Kobe, Japan

Presently, there is extensive evidence of multisensory integration in tactile and visual processing. While it has been shown that multisensory interaction between touch and vision influences many cognitive processes, such as object recognition, the role of multisensory interaction in the affective domain is still poorly understood. The aim of this study was to examine the influence of tactile perception on the affective processing of visual stimuli. Two experiments were conducted with urethane rubbers of differing compliance and with visually presented words. In the first experiment, participants rated the affective valence of the visually presented words while touching hard or soft urethane rubbers. Ratings and reaction times were recorded. Results showed that touching a soft stimulus slowed the valence rating of visual words, but it did not affect the valence ratings per se. A second experiment clarified whether this effect was unique to valence (affective) ratings or whether it extended to semantic (cognitive) ratings as well. The second experiment was identical to the first one, but here participants rated the level of abstractness of the same visually presented words. Results indicated that abstractness ratings were not affected by the tactile stimuli. Overall, these results confirm that, possibly via an attentional mechanism, tactile input influences the speed of affective visual processing.

1 Introduction

In our daily lives, we tend to think of our senses as distinct modalities that individually afford the perception of the objects around us. However, we often disregard how our senses work together to enhance our perceptions and how important this integration is in our daily lives (Stein, 2012).

Of particular interest is the interaction between vision and touch, which encompasses object recognition (Klatzky and Lederman, 2011; Lacey and Sathian, 2011; Martinovic et al., 2012; Newell et al., 2001), object localization (Cattaneo and Vecchi, 2008; Pasqualotto et al., 2013; Woods et al., 2004; Zuidhoek et al., 2004), as well as body representation (Della Longa et al., 2022; Kaneno et al., 2024; Pasqualotto and Proulx, 2015). Indeed, these two modalities complement one another by providing us with distinct sets of information (e.g., color by vision and temperature by touch). Therefore, multisensory integration between vision and touch has been broadly investigated (e.g., Pasqualotto et al., 2016), yet the affective component of visuo-tactile integration has been far less studied and understood (Filippetti et al., 2019; Morrison, 2016). We focused on touch because it is well-suited for affective processing (e.g., Cavdan et al., 2023; Drewing et al., 2018; Kirsch et al., 2020; Guest et al., 2011; Pasqualotto et al., 2020). For example, our studies found evidence that soft tactile stimuli engender pleasantness (Pasqualotto et al., 2020), and softness perception activates the insula, a region of the brain involved in affective processing (Kitada et al., 2019).

Previous studies suggested that perceiving pleasurable attributes in one sensory modality (e.g., touch) affects the overall multisensory experience of a product by biasing affective perceptions of other sensory modalities (e.g., vision and audition) (see Spence, 2022; Spence and Gallace, 2011 for reviews). Suzuki and Gyoba (2008) found that repeated visual exposure to novel stimuli (mere-exposure effect, Zajonc, 1968) increased the participants’ subsequent preference for those stimuli when judged by touch. Wu et al. (2011) exposed participants to multisensory (visual and tactile) stimuli and found a similar mere-exposure effect for affective judgements within the visual domain. Are the results of these studies examples of multisensory affective priming? Although unisensory (vision), the study by Pecchinenda et al. (2014) offers an interesting methodology. In their Experiment 1, symmetric/asymmetric ‘clouds’ of dots preceded words that participants categorized as positive or negative. The authors found that symmetric clouds improved the categorization of positive words. This study belongs to the vast literature on affective visual priming, reporting congruency effects between a priming stimulus and the response of the observers (both in terms of accuracy and reaction times) (Fazio, 2001; Hermans et al., 1994, 2001). Here, symmetric clouds were perceived as more pleasant (Friedenberg and Bertamini, 2015), thus improving the processing of positive words (congruency). Would the same results stand in a multisensory setting?

We decided to investigate the effect of tactile stimuli on affective visual processing by adapting the affective priming paradigm used by Pecchinenda et al. (2014), but utilising urethane rubbers of different compliance (soft/hard) as tactile stimuli (rather than clouds of dots) and words of varied valence (positive/neutral/negative) as visual stimuli, which participants rated in terms of valence. These urethane rubbers have been used in other experiments of ours (e.g., Pasqualotto et al., 2020), and we know their physical characteristics and pleasantness. To our knowledge, no study has investigated affective priming between tactile softness and words of valence.

We expected that the characteristics of the tactile stimuli (soft/hard) would interact with the valence of the visual stimuli (positive/neutral/negative), thus influencing participants’ affective judgement of the visually presented words.

2 Experiment 1

The first experiment tested our principal hypothesis about the effect of touch on affective visual processing. Here, participants evaluated the valence of words presented on a computer screen, while they were touching hard/soft urethane rubbers. Beforehand, a pilot study helped us to choose and classify the visual words.

2.1 Materials and methods

2.1.1 Participants

Twenty-four (12 male and 12 female) right-handed individuals aged between 19 and 32 (mean 23.42 years) participated in Experiment 1 and were recruited via posters placed around Nanyang Technological University’s campus. The minimum sample size was determined by the experiments on affective priming presented by Pecchinenda et al. (2014). Participants’ handedness was obtained using the Fazio Laterality Inventory (FLI; Fazio et al., 2013). Participants did not present any tactile impairments or injuries on their hands and had normal or corrected-to-normal vision. All of them provided written informed consent before starting the experiment (or the pilot, see below). All studies were approved by the Institutional Review Board (IRB-2018-07-013) at Nanyang Technological University, and thus are in accordance with the ethical standards of the Helsinki Declaration of 1975, as revised in 2000. Participants received 10 Singaporean Dollars (SGD) for their participation (5 SGD for the pilot).

2.1.2 Apparatus

In order to select the visual stimuli for Experiment 1, a pilot study (N = 10; five male and five female, average age 23.6 years, with normal or corrected-to-normal vision) was run using 130 words from the Affective Norms for English Words (ANEW; Bradley and Lang, 1999) and the software Presentation™ (Berkeley, USA). Participants were randomly presented with these words twice; during one presentation, participants rated the Valence of the words (from “not positive at all” to “very positive”) on a scale from 1-to-9 (with 1 indicating “not positive at all”), while in the other presentation participants rated how much a word was associated with tactile sensations (from “not associated at all” to “very associated”) on a scale from 1-to-9 (with 1 indicating “not associated at all”). For example, the word “book” would have a Tactile Association score higher than the word “cloud.” Knowing the Tactile Association ratings was necessary to ensure that all the selected words had small and comparable pre-existing associations with tactile sensations, thus preventing potential confounds in Experiment 1. The tasks’ order (Valence rating and Tactile Association rating) was counterbalanced across participants.

The average Valence and Tactile Association ratings were calculated for each word and then words were ordered by their Valence. Then we selected the top eight words (i.e., those with the highest Valence, or Positive Words, e.g., “Free”), the bottom eight words (those with the lowest Valence, or Negative Words, e.g., “Death”), and the eight words that were around the average Valence rating (5.72), or Neutral Words (e.g., “Farm”). At the same time, to avoid biases, the selected words had to have Tactile Association ratings below the average Tactile Association (4.53). The selected words (see Supplementary Table S1 for details) were visually presented in Experiment 1.
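The selection rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `select_words`, the toy ratings, and the choice to apply the Tactile Association filter before ranking by Valence are ours, and both averages are computed over all rated words.

```python
from statistics import mean

def select_words(ratings, per_group=8):
    """ratings maps word -> (mean valence, mean tactile association).

    Assumption: words at or above the average Tactile Association are
    excluded first, then the remaining words are ranked by Valence.
    """
    mean_valence = mean(v for v, _ in ratings.values())
    mean_tactile = mean(t for _, t in ratings.values())
    eligible = [w for w, (_, t) in ratings.items() if t < mean_tactile]
    by_valence = sorted(eligible, key=lambda w: ratings[w][0])
    negative = by_valence[:per_group]    # lowest Valence
    positive = by_valence[-per_group:]   # highest Valence
    rest = [w for w in by_valence if w not in negative and w not in positive]
    # Neutral words are those closest to the average Valence rating.
    neutral = sorted(rest, key=lambda w: abs(ratings[w][0] - mean_valence))[:per_group]
    return positive, neutral, negative

# Hypothetical ratings (not the pilot data), one word per group for brevity.
toy = {"a": (8.0, 2.0), "b": (5.0, 3.0), "c": (1.0, 2.5),
       "d": (9.0, 8.0), "e": (5.5, 3.5), "f": (2.0, 9.0)}
positive, neutral, negative = select_words(toy, per_group=1)
```

In the toy data, words "d" and "f" are dropped because their Tactile Association exceeds the average, even though their Valence is extreme.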

Four polyurethane rubbers (Katō Tech, Kyoto, Japan) with differing compliance were used in Experiment 1: rubber A (compliance: 0.13 mm/N), rubber B (0.45 mm/N), rubber H (7.56 mm/N), and rubber I (10.53 mm/N). Compliance indicates rubbers’ indentation when the same pressure is applied [refer to Pasqualotto et al. (2020) for a detailed explanation of the compliance measurement]. Therefore, as indicated by their low compliance values, rubbers A and B were the “Hard” rubbers, while H and I were the “Soft” rubbers. Additionally, based on our previous study (Pasqualotto et al., 2020), we know that soft rubbers (H and I) were more pleasant than hard rubbers (A and B). To ensure that rubbers were presented in the same manner, we used the device called Model SHR III-5 SK (Aikoh Engineering, Osaka, Japan) to press the rubbers onto three fingers of the participants at the same speed (5 cm/s) and with the same maximum applied force (5 N). This device was used in our previous studies (e.g., Pasqualotto et al., 2020).

2.1.3 Procedure

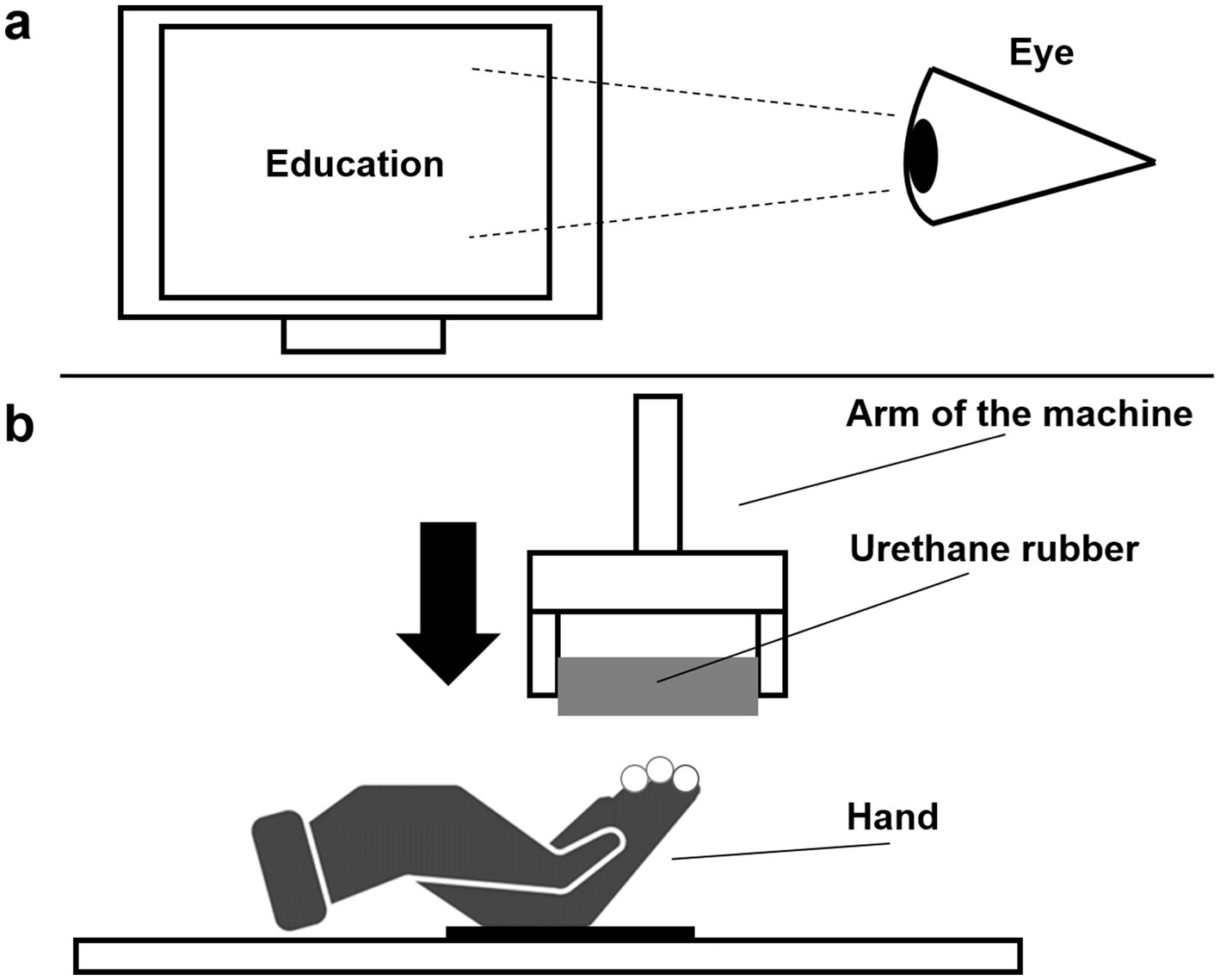

Upon giving informed written consent and completing the handedness questionnaire (FLI) and a brief demographic questionnaire, participants sat in front of the apparatus (see Figure 1 for details). Words were presented on a computer screen (36 × 28 cm, positioned about 35 cm away) and, to ensure that participants paid attention to the visually presented words, they rested their heads on an ophthalmic chin rest. Words subtended a visual angle between 3.27° and 8.99° in width (depending on the word’s length) and 2.46° in height. Instructions were: “Please, rate the words that will appear on the screen in terms of how positive they are. Use a scale from 1-to-9, with 1 = ‘not positive at all’ and 9 = ‘very positive.’” Participants used their non-dominant (left) hands to type their answers on a keypad. They were asked to pay attention only to the words appearing on the screen placed in front of them and to ignore tactile stimuli. Participants were required to answer as quickly and as accurately as possible.

Figure 1. Experimental setup: (a) the monitor showing the 24 visual stimuli (in this case, the word “Education”), while at the same time (b) the arm of the machine lowers to present the four tactile stimuli to the gloved hand fixed with Velcro to the table; the fingertips of three fingers (index, middle, and ring) are exposed to the tactile stimulus (the image of the hand was designed by Freepik [https://www.freepik.com] and modified for this article).
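The visual angles given in the procedure relate on-screen size to viewing distance through the standard relation θ = 2·atan(size / (2·distance)). The sketch below converts in both directions; the function names are ours, and the physical word sizes it yields are derived values, not measurements reported in the study.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def size_for_angle_cm(angle_deg, distance_cm):
    """Physical size (cm) needed to subtend a given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

# At the approximate 35 cm viewing distance, a height of 2.46 degrees
# corresponds to letters roughly 1.5 cm tall.
height_cm = size_for_angle_cm(2.46, 35)
```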

Words remained on the screen until an answer was produced or up to 2,500 ms. The tactile stimuli remained in touch with participants’ hands until the applied force of 5 N was reached. The initial five trials were for practice only, and employed rubbers and visual words that were not used in the actual experiment. Twenty-four experimental trials were then conducted for each participant. The visually presented words were pseudo-randomized such that words belonging to the same category (positive/neutral/negative) were never consecutively presented. Likewise, tactile stimuli of the same category (soft/hard) were never consecutively presented. Valence ratings and reaction times (RT) were recorded for each trial. The experimental session lasted about 30 min, while the pilot experiment lasted about 15 min. None of the participants who joined the pilot were allowed to take part in Experiment 1.
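One way to obtain a trial order satisfying both constraints is sketched below. It is not the procedure used in Presentation™: the rejection-sampling approach, the function name `shuffle_no_repeats`, the placeholder word labels, and the way words are paired with rubber categories are all assumptions made for illustration.

```python
import random

def shuffle_no_repeats(items, category, rng, max_tries=1_000_000):
    """Reshuffle until no two neighbouring items share a category."""
    order = list(items)
    for _ in range(max_tries):
        rng.shuffle(order)
        if all(category(a) != category(b) for a, b in zip(order, order[1:])):
            return order
    raise RuntimeError("no valid order found")

rng = random.Random(0)  # fixed seed only to make the sketch reproducible

# 24 placeholder words: 8 per valence category.
words = [(f"{cat}_{i}", cat)
         for cat in ("positive", "neutral", "negative") for i in range(8)]
word_order = shuffle_no_repeats(words, lambda w: w[1], rng)

# With two equally frequent rubber categories, "never consecutive"
# can only be met by strict alternation; the starting category is random.
first, second = rng.sample(["soft", "hard"], 2)
rubber_order = [first if i % 2 == 0 else second for i in range(len(word_order))]

trials = list(zip(word_order, rubber_order))
```

Rejection sampling is used for the words because it draws uniformly among the valid orders; it is fast enough here because a valid order of 24 words typically turns up within a few thousand shuffles.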

2.2 Results

For each participant we calculated the average Valence Ratings and RT (dependent variables) for the three types of Visual Words (Positive/Neutral/Negative, first independent variable) and two types of Tactile Rubbers (Soft/Hard, second independent variable), thus resulting in a 3 × 2 design. These data underwent statistical processing.
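The per-participant averaging into the six cells of the 3 × 2 design can be sketched as follows. The tuple layout, the function name `cell_means`, and the example values are hypothetical and do not reflect the format of the published data files.

```python
from collections import defaultdict
from statistics import mean

def cell_means(trials):
    """Average a dependent variable within each participant x word x rubber cell.

    trials: iterable of (participant, word_type, rubber_type, value).
    """
    cells = defaultdict(list)
    for participant, word_type, rubber_type, value in trials:
        cells[(participant, word_type, rubber_type)].append(value)
    return {cell: mean(values) for cell, values in cells.items()}

# Hypothetical reaction times (s) for one participant.
example = [
    ("p01", "positive", "soft", 2.0),
    ("p01", "positive", "soft", 3.0),
    ("p01", "negative", "hard", 4.0),
]
means = cell_means(example)
```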

2.2.1 Valence ratings

The Shapiro–Wilk test of normality was significant for two-out-of-six datasets [W(24) = 0.885, p = 0.011 and W(24) = 0.896, p = 0.017, while for all the others W(24) > 0.957, p > 0.387]. Since most of the tests were not significant (4-out-of-6), we conducted parametric statistical tests on our data (Field, 2009).

We ran a two-way within-subjects ANOVA on the average Valence Ratings with Visual Words and Tactile Rubbers as factors. Mauchly’s test showed that the assumption of sphericity was violated for the main effect of Visual Words [χ2(2) = 7.22, p = 0.030]. Therefore, the associated degrees of freedom for the main effect of Visual Words were corrected using Greenhouse–Geisser estimates of sphericity.
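For readers less familiar with the correction, the Greenhouse–Geisser procedure multiplies both degrees of freedom of the affected effect by the estimated sphericity parameter. The worked form below uses our own notation (k levels of Visual Words, n participants, estimate ε̂), which does not appear in the original text.

```latex
\begin{aligned}
df_{\text{effect}} &= \hat{\varepsilon}\,(k-1), &
df_{\text{error}}  &= \hat{\varepsilon}\,(k-1)(n-1), \\
k = 3,\ n = 24 \;&\Rightarrow\; k-1 = 2, &
(k-1)(n-1) &= 46 .
\end{aligned}
```

The corrected degrees of freedom reported for the main effect of Visual Words, F(1.56, 35.94), therefore correspond to ε̂ ≈ 0.78 (1.56 / 2 = 0.78 and 35.94 / 46 ≈ 0.78).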

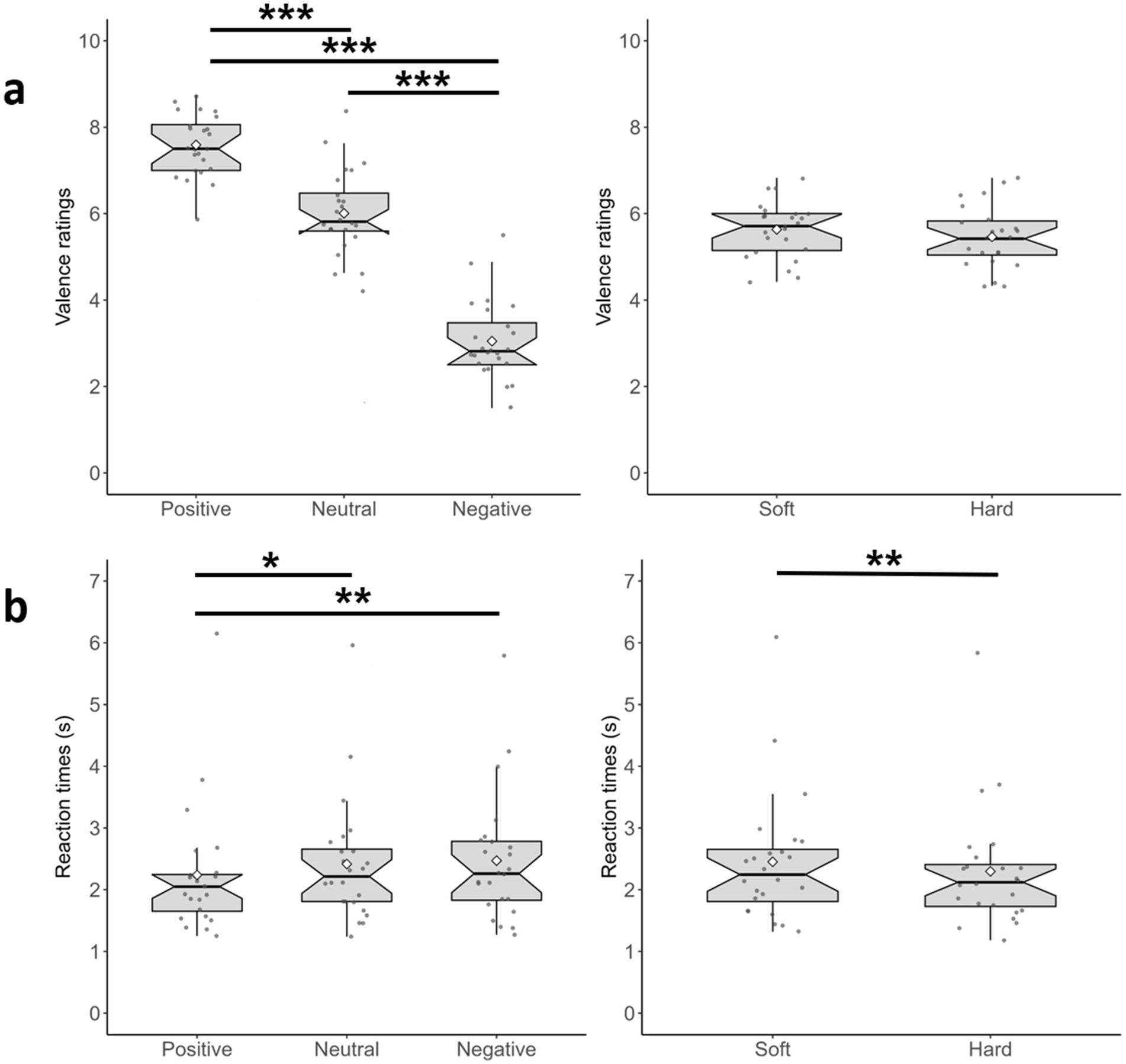

The results of the two-way ANOVA showed a statistically significant main effect of Visual Words on participants’ Valence Ratings [F(1.56, 35.94) = 171.98, p < 0.001, ηp2 = 0.88]. Post-hoc pairwise comparisons with Bonferroni adjustment indicated that the average Valence Ratings were significantly higher for Positive Visual Words (M = 7.59, SD = 0.90) than for Neutral Visual Words (M = 6.01, SD = 1.15) [p < 0.001] and Negative Visual Words (M = 3.04, SD = 1.20) [p < 0.001]. Average Valence Ratings were also significantly higher for Neutral Visual Words than for Negative Visual Words [p < 0.001] (see Figure 2a, left). This confirms the words’ categorization that emerged from the pilot experiment.

Figure 2. Results of Experiment 1 on Valence Ratings and RT; the height of each box indicates the interquartile range, the horizontal lines inside each box indicate the median, and the white diamonds indicate the average. Whiskers represent the largest and smallest data values that are within 1.5 × the interquartile range (IQR) above the third quartile (Q3) and below the first quartile (Q1), respectively. The dots above/below the whiskers are the outliers. The notches of the boxes indicate the 95% confidence interval around the median. (a) Valence Ratings for the Visual Words (left) and Tactile Rubbers (right); (b) RT for the same variables. ***p < 0.001, **p < 0.010, and *p < 0.050.

The main effect of Tactile Rubbers and the interaction between Visual Words and Tactile Rubbers were not statistically significant [F(1, 23) = 0.87, p = 0.36, ηp2 = 0.04 and F(2, 46) = 1.78, p = 0.18, ηp2 = 0.07, respectively] (see Figure 2a, right). This suggests that the valence ratings of visually presented words were not influenced by the tactile stimuli.
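The partial eta squared values reported with each F test can be recovered from the F value and its degrees of freedom through the identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The helper below is a small illustrative sketch; its name is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return f_value * df_effect / (f_value * df_effect + df_error)

# Values reported above for Tactile Rubbers and for the interaction.
eta_rubbers = partial_eta_squared(0.87, 1, 23)      # about 0.04
eta_interaction = partial_eta_squared(1.78, 2, 46)  # about 0.07
```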

2.2.2 Reaction times

The Shapiro–Wilk test of normality was significant for each dataset [all W(24) < 0.907, all p < 0.031]. Therefore, we conducted non-parametric statistical tests on our data.

Since our design would call for a two-way ANOVA, to assess the main effects of Visual Words and Tactile Rubbers non-parametrically we averaged RT across the levels of the other variable. Averaging across the two types of Tactile Rubbers produced three datasets based on the types of Visual Words, which were analyzed using a Related-Samples Friedman test. Results showed a significant effect of the Visual Words [χ2(2) = 12.33, p = 0.002], and the Bonferroni-corrected pairwise comparisons confirmed that Positive Visual Words were rated more rapidly (M = 2.24 s, SD = 1.03) than Neutral (M = 2.42 s, SD = 1.03) and Negative (M = 2.47 s, SD = 1.06) Visual Words [p = 0.012 and p = 0.004, respectively] (see Figure 2b, left). Then, we averaged RT across the three types of Visual Words to obtain two datasets based on the types of Tactile Rubbers, which were analyzed using a Related-Samples Wilcoxon Test. Results showed a significant difference [Z = 34.50, p < 0.002], thus confirming that touching Soft rubbers slowed RT (M = 2.45 s, SD = 1.02 s), compared to Hard rubbers (M = 2.30 s, SD = 1.02 s) (see Figure 2b, right). Finally, to investigate the interaction between Visual Words and Tactile Rubbers, for each visual condition (Positive/Neutral/Negative) we calculated the difference Soft minus Hard (see Supplementary Table S3 for details) and ran a Related-Samples Friedman test. The result was not significant [χ2(2) = 0.333, p = 0.846], indicating the lack of interaction.
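As an illustration of the Friedman test used here, the sketch below ranks the three conditions within each participant and computes the chi-square statistic from the rank sums. It is a simplified version (no tie correction) written for exactly three conditions, and the reaction times are invented. It is not the routine used for the reported analyses.

```python
import math

def average_ranks(values):
    """Ranks 1..k within one participant; tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def friedman_three_conditions(rows):
    """Friedman chi-square and p value for n participants x 3 conditions."""
    n, k = len(rows), 3
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    chi2 = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    # For df = k - 1 = 2 the chi-square survival function is exp(-x / 2).
    return chi2, math.exp(-chi2 / 2)

# Invented RTs (s): one row per participant, columns Positive/Neutral/Negative.
rts = [[2.1, 2.4, 2.5], [1.9, 2.2, 2.3], [2.6, 2.7, 2.9], [2.0, 2.3, 2.2]]
chi2, p = friedman_three_conditions(rts)
```

With three conditions the statistic has 2 degrees of freedom, so the p value follows directly from exp(−χ2 / 2); for example, exp(−12.33 / 2) ≈ 0.002, in line with the p value reported for Visual Words. The same function applies to the interaction analysis if each row holds a participant's three Soft minus Hard differences.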

2.3 Discussion

The main results of Experiment 1 were that (1) words with a positive valence were faster to rate and (2) touching hard rubbers speeded the rating of all the visually presented words. Although the advantage for processing words with a positive valence is still strongly debated (Kauschke et al., 2019), most of the evidence favours the notion of faster reaction times for positive words during affective processing (Kappes and Bermeitinger, 2016; Kever et al., 2017; Liu et al., 2016; Stenberg et al., 1998). Therefore, our first result (faster processing of positive words) confirms this major trend in the literature.

The other main finding was that hard tactile stimuli speeded the rating of visual words or, alternatively, that soft tactile stimuli slowed the rating of visual words. We favor the latter interpretation of the results, because our past research (Pasqualotto et al., 2020) showed that the soft stimuli used in this experiment are also more pleasant. Although somewhat debated (Luck et al., 2021), pleasant stimuli are likely to attract more attention (Gupta, 2019) and to slow the reaction times for visually presented words. If this is the case, would pleasant tactile stimuli affect any kind of visual processing? Or just the visual processing involving affective valence? Evidence for the latter would suggest task-specific interactions between touch and vision (Lacey and Sathian, 2011; Pascual-Leone and Hamilton, 2001; Pasqualotto et al., 2013). To clarify this, we ran Experiment 2.

3 Experiment 2

Experiment 2 investigated the multisensory interaction of touch and vision, but using a semantic task that, unlike Experiment 1, did not involve the affective component. Specifically, here participants rated the level of abstractness of the same visually presented words used in Experiment 1, while they were touching the same urethane rubbers. A pilot study helped us to classify the visual words into three levels of abstractness (High/Medium/Low).

3.1 Materials and methods

3.1.1 Participants

Twenty-four (12 male and 12 female) naïve right-handed volunteers aged between 18 and 57 (mean 26.04 years) participated in Experiment 2. All the other details were the same as in Experiment 1.

3.1.2 Apparatus

In order to classify the visual stimuli for Experiment 2, a pilot study (N = 10; five male and five female, average age 25 years) was conducted. Participants were randomly presented with the 24 visual words used in Experiment 1, and they rated the level of abstractness of these words (from “not abstract at all” to “very abstract”) on a scale from 1-to-9 (with 1 indicating “not abstract at all”).

Average Abstractness ratings were calculated for each word and then words were ordered by their Abstractness. The top eight words (i.e., those with the highest abstractness ratings) were classified as High, the bottom eight words (those with the lowest abstractness ratings) were classified as Low, and the eight words in the middle were classified as Medium (see Supplementary Table S2 for details). All the other details were identical to Experiment 1.

3.1.3 Procedure

The procedure of Experiment 2 was the same as that of Experiment 1, with the exception that participants received the instruction: “Please, rate the words that will appear on the screen in terms of how abstract they are. Use a scale from 1-to-9, with 1 = ‘not abstract at all’ and 9 = ‘very abstract.’”

3.2 Results

Raw data were pre-processed as in Experiment 1.

3.2.1 Abstractness ratings

The Shapiro–Wilk test of normality was significant for one-out-of-six datasets [W(24) = 0.905, p = 0.028, while for all the others W(24) > 0.941, p > 0.174]. Therefore, we conducted parametric statistical tests on our data.

As in Experiment 1, we ran a two-way within-subjects ANOVA on the average Abstractness Ratings with Visual Words (High Abstractness/Medium Abstractness/Low Abstractness) and Tactile Rubbers (Hard vs. Soft) as independent variables.

Mauchly’s test showed that the assumption of sphericity was violated for the main effect of Visual Words [χ2(2) = 11.44, p = 0.030]. Therefore, for this variable the degrees of freedom were corrected using Greenhouse–Geisser estimates of sphericity.

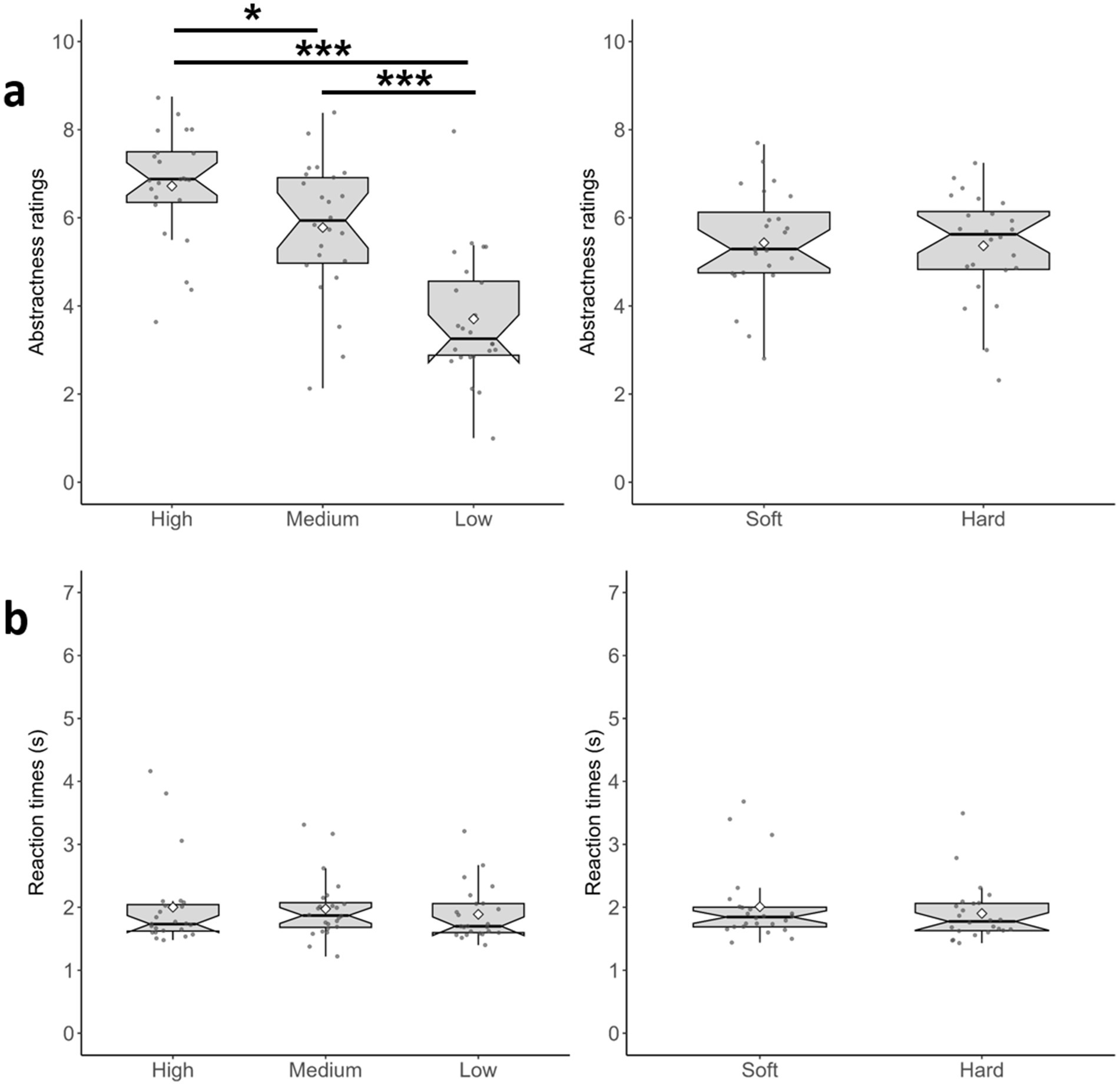

The results of the two-way ANOVA highlighted a statistically significant main effect of Visual Words on the participants’ abstractness ratings [F(1.42, 32.73) = 44.97, p < 0.001, ηp2 = 0.660]. Post-hoc pairwise comparisons with Bonferroni adjustments indicated that the average abstractness ratings were significantly higher for High Abstractness words (M = 6.72, SD = 1.43) than for Medium Abstractness words (M = 5.78, SD = 1.71) [p < 0.017] and Low Abstractness words (M = 3.70, SD = 1.65) [p < 0.001]. Average abstractness ratings were also significantly higher for Medium Abstractness words than for Low Abstractness words [p < 0.001]. In substance, these results corroborate the categorization of the words that emerged from the pilot (see Figure 3a, left).

Figure 3. Results of Experiment 2 on Abstractness Ratings and RT; the height of each box indicates the interquartile range, the horizontal lines inside each box indicate the median, and the white diamonds indicate the average. Whiskers represent the largest and smallest data values that are within 1.5 × the interquartile range (IQR) above the third quartile (Q3) and below the first quartile (Q1), respectively. The dots above/below the whiskers are the outliers. The notches of the boxes indicate the 95% confidence interval around the median. (a) Abstractness Ratings for the Visual Words (left) and Tactile Rubbers (right); (b) RT for the same variables. ***p < 0.001, **p < 0.010, and *p < 0.050.

The main effect of Tactile Rubbers and the interaction effect between Visual Words and Tactile Rubbers were not statistically significant [F(1, 23) = 0.12, p = 0.735, ηp2 = 0.010 and F(2, 46) = 0.76, p = 0.474, ηp2 = 0.030, respectively] (see Figure 3a, right). These results suggest that abstractness ratings are mostly influenced by the abstractness of the displayed words.

3.2.2 Reaction times

The Shapiro–Wilk test of normality was significant for five-out-of-six datasets [all W(24) < 0.883, all p < 0.009], while for one dataset it was not [W(24) = 0.937, p = 0.143]. Therefore, we conducted non-parametric statistical tests on our data.

As in Experiment 1, to assess the main effects of Visual Words and Tactile Rubbers we averaged RT across the levels of the other variable. A Related-Samples Friedman test showed no significant effect of the Visual Words [χ2(2) = 1.36, p = 0.506] (see Figure 3b, left). Then, a Related-Samples Wilcoxon Test indicated a non-significant effect for the Tactile Rubbers (Z = 105.50, p = 0.203, see Figure 3b, right). Finally, to investigate the interaction between Visual Words and Tactile Rubbers, for each visual condition (High/Medium/Low) we calculated the difference Soft minus Hard (see Supplementary Table S4 for details) and ran a Related-Samples Friedman test, which was not significant [χ2(2) = 0.583, p = 0.747].
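The Wilcoxon signed-rank comparison applied to the Tactile Rubbers can be sketched as below, using the large-sample normal approximation without tie or continuity corrections. The function names and the paired values are ours, and statistical packages may apply corrections that this sketch omits.

```python
import math

def normal_approximation(t, n):
    """z score and two-sided p for a signed-rank sum t over n non-zero pairs."""
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mu) / sigma
    return z, math.erfc(abs(z) / math.sqrt(2))

def wilcoxon_signed_rank(x, y):
    """Return (T, z, p), where T is the smaller of the two signed-rank sums."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences dropped
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1  # ties share the mean rank
        i = j + 1
    t_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    t_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(t_plus, t_minus)
    return (t, *normal_approximation(t, n))

# Invented mean RTs (s) for five participants: Soft vs. Hard rubbers.
soft = [2.5, 2.4, 2.9, 2.2, 2.6]
hard = [2.1, 2.2, 2.3, 2.3, 2.1]
t, z, p = wilcoxon_signed_rank(soft, hard)
z24, p24 = normal_approximation(105.5, 24)
```

If the value of 105.50 reported above is read as a signed-rank sum over 24 non-zero differences (an assumption on our part, since the text labels it Z), this approximation gives z ≈ −1.27 and a two-sided p ≈ 0.20, close to the reported p = 0.203.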

3.3 Discussion

The aim of Experiment 2 was to better characterize the results of Experiment 1. We found that touching soft stimuli did not significantly affect the speed of abstractness ratings relative to words presented through vision. This suggests that the cross-modal interaction between touch and vision is task-sensitive (e.g., Lacey and Sathian, 2011) and can be measured in tasks involving the affective component only (Experiment 1).

4 General discussion

Our study investigated the multisensory interaction of vision and touch within the affective domain. Initially we found that the presentation of tactile stimuli did not affect the valence ratings of visual words, yet soft tactile stimuli slowed the affective valence ratings for visually presented words, compared to hard tactile stimuli. Then we better characterized the above results in terms of task-specificity; touching soft stimuli slowed the rating of visually presented words only in affective ratings (and not in semantic ratings). This set of results suggests that touch affects the speed of affective visual processing.

Pecchinenda et al. (2014) reported an interaction between the valence of the words and the symmetry of the dot clouds in terms of accuracy (but not in terms of reaction times). Yet, unlike them, we did not find an interaction between the valence of the words and the softness of the rubbers, but instead a main effect of softness in terms of reaction times. Our lack of interaction can be largely explained by the fact that their study was unisensory (vision) while ours was multisensory (vision and touch). Indeed, each sensory modality represents information in slightly different manners (Behrmann and Ewell, 2003; Lacey et al., 2007; Whitaker et al., 2008); therefore, the representation of visually perceived affective stimuli is partly different from that of tactilely perceived affective stimuli, and thus in our case an interaction in the valence evaluation was not observed. Nevertheless, through different sets of results, both studies reported the effect of affective stimuli on affective processing, but not on semantic processing.

Studies on visual priming (including Pecchinenda and colleagues), where a visual stimulus facilitates the processing of the following visual stimulus, reported that the type of judgement required of participants (semantic vs. affective) determined the presence/absence of the priming itself (Klauer and Musch, 2002; Lichtenstein-Vidne et al., 2012; Spruyt et al., 2007; Storbeck and Robinson, 2004). Thus, semantic priming would occur when stimuli undergo a semantic judgement (e.g., is it a verb or an adjective?), while affective priming would occur when the same stimuli undergo an affective judgement (e.g., is it good or bad?). Indeed, in our experiments we found an effect of the tactile stimuli only when participants were asked to perform the affective judgement of the visual stimuli. Therefore, in terms of task-specificity our results are in line with previous research involving the visual modality and extend our knowledge to the visuo-tactile (multisensory) domain.

Although we found that touching soft stimuli slows the affective processing of visually presented words, and this result fits with the priming literature, we are aware that in our experiments tactile and visual stimuli were presented at the same time, thus representing a particular case of priming with no interstimulus interval. Additionally, it is not entirely clear whether soft tactile stimuli slowed the affective judgment of visual stimuli or whether hard tactile stimuli speeded the affective judgment of visual stimuli. Yet, our previous research found that tactile stimulation of softer objects felt more pleasant (Kitada et al., 2021; Pasqualotto et al., 2020), thus supporting the interpretation that, probably through an attentional mechanism, soft tactile stimuli slowed the rating of visual words. The modulation of attention by soft-and-pleasant stimuli is also supported by Kawamichi et al. (2015), who reported that touching a friend’s hand (relative to a non-embodied rubber hand, Fahey et al., 2019) reduced the unpleasantness of aversive visual stimuli and reduced visual cortex activity. This suggests that the more pleasant (“comfortable”) tactile stimulation drew attention away from the visual stimuli. Yet, although speculative, both processes might be at play, with soft objects slowing the affective judgment of visual stimuli via the above-mentioned attentional mechanism and hard objects speeding their judgement.

We found that the link between touch and vision was limited to the affective processing, thus task-sensitive. This is a rather established finding (Ghazanfar and Schroeder, 2006; Pascual-Leone and Hamilton, 2001), where “unimodal” and “associative” brain areas cooperate to accomplish the same task. Likewise, we found that an affective task carried out by vision was influenced by a tactile input eliciting pleasantness. Future studies should address how the present results could extend to other stimuli, such as textures (Roberts et al., 2024), and affect stimuli selection (Streicher and Estes, 2016).

In conclusion, although the generalization of our results should be tested using images rather than words (Bradley and Lang, 2007), our study for the first time investigated the effect of tactile input on the affective judgment of visual stimuli and found that softness slows judgment, and that this cross-modal effect is task-specific.

Data availability statement

Data are available at: https://osf.io/86der/overview.

Ethics statement

The studies involving humans were approved by the Institutional Review Board (IRB-2018-07-013) at Nanyang Technological University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AP: Writing – original draft, Writing – review & editing. UL: Writing – review & editing. RK: Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This project was supported by a Japan Society for the Promotion of Science (JSPS) KAKENHI grant (24K06616) awarded to AP; a NAP start-up grant from Nanyang Technological University and a MEXT/JSPS KAKENHI grant (25H00581) to RK; and the Undergraduate Research Experience on CAmpus (U.R.E.CA.) program at Nanyang Technological University.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2025.1644393/full#supplementary-material

References

Behrmann, M., and Ewell, C. (2003). Expertise in tactile pattern recognition. Psychol. Sci. 14, 480–492. doi: 10.1111/1467-9280.02458

Bradley, M. M., and Lang, P. J. (1999). Affective Norms for English Words (ANEW): Instruction manual and affective ratings (Vol. 30, No. 1, pp. 25–36). Technical Report C-1, University of Florida, Center for Research in Psychophysiology. Available online at: http://www.scribd.com/doc/42601042/Affective-Norms-for-English-Words

Bradley, M. M., and Lang, P. J. (2007). “The international affective picture system (IAPS) in the study of emotion and attention” in Handbook of emotion elicitation and assessment. eds. J. A. Coan and J. J. B. Allen (Oxford: Oxford University Press), 29–46.

Cattaneo, Z., and Vecchi, T. (2008). Supramodality effects in visual and haptic spatial processes. J. Exp. Psychol. Learn. Mem. Cogn. 34, 631–642. doi: 10.1037/0278-7393.34.3.631

Cavdan, M., Celebi, B., and Drewing, K. (2023). Simultaneous emotional stimuli prolong the timing of vibrotactile events. IEEE Trans. Haptics 16, 622–627. doi: 10.1109/TOH.2023.3275190

Della Longa, L., Sacchetti, S., Farroni, T., and McGlone, F. (2022). Does nice or nasty matter? The intensity of touch modulates the rubber hand illusion. Front. Psychol. 13:901413. doi: 10.3389/fpsyg.2022.901413

Drewing, K., Weyel, C., Celebi, H., and Kaya, D. (2018). Systematic relations between affective and sensory material dimensions in touch. IEEE Trans. Haptics 11, 611–622. doi: 10.1109/TOH.2018.2836427

Fahey, S., Santana, C., Kitada, R., and Zheng, Z. (2019). Affective judgement of social touch on a hand associated with hand embodiment. Q. J. Exp. Psychol. (Hove) 72, 2408–2422. doi: 10.1177/1747021819842785

Fazio, R. H. (2001). On the automatic activation of associated evaluations: an overview. Cogn. Emot. 15, 115–141. doi: 10.1080/0269993004200024

Fazio, R., Dunham, K. J., Griswold, S., and Denney, R. L. (2013). An improved measure of handedness: the Fazio laterality inventory. Appl. Neuropsychol. 20, 197–202. doi: 10.1080/09084282.2012.684115

Filippetti, M. L., Kirsch, L. P., Crucianelli, L., and Fotopoulou, A. (2019). Affective certainty and congruency of touch modulate the experience of the rubber hand illusion. Sci. Rep. 9:2635. doi: 10.1038/s41598-019-38880-5

Friedenberg, J., and Bertamini, M. (2015). Aesthetic preference for polygon shape. Empir. Stud. Arts 33, 144–160. doi: 10.1177/0276237415594708

Ghazanfar, A. A., and Schroeder, C. E. (2006). Is neocortex essentially multisensory? Trends Cogn. Sci. 10, 278–285. doi: 10.1016/j.tics.2006.04.008

Guest, S., Dessirier, J. M., Mehrabyan, A., McGlone, F., Essick, G., Gescheider, G., et al. (2011). The development and validation of sensory and emotional scales of touch perception. Atten. Percept. Psychophys. 73, 531–550. doi: 10.3758/s13414-010-0037-y

Gupta, R. (2019). Positive emotions have a unique capacity to capture attention. Prog. Brain Res. 247, 23–46. doi: 10.1016/bs.pbr.2019.02.001

Hermans, D., De Houwer, J., and Eelen, P. (2001). A time course analysis of the affective priming effect. Cogn. Emot. 15, 143–165. doi: 10.1080/0269993004200033

Hermans, D., De Houwer, J., and Eelen, P. (1994). The affective priming effect: automatic activation of evaluative information in memory. Cogn. Emot. 8, 515–533. doi: 10.1080/02699939408408957

Kaneno, Y., Pasqualotto, A., and Ashida, H. (2024). Influence of interoception and body movement on the rubber hand illusion. Front. Psychol. 15:1458726. doi: 10.3389/fpsyg.2024.1458726

Kappes, C., and Bermeitinger, C. (2016). The emotional Stroop as an emotion regulation task. Exp. Aging Res. 42, 161–194. doi: 10.1080/0361073X.2016.1132890

Kauschke, C., Bahn, D., Vesker, M., and Schwarzer, G. (2019). The role of emotional valence for the processing of facial and verbal stimuli—positivity or negativity bias? Front. Psychol. 10:1654. doi: 10.3389/fpsyg.2019.01654

Kawamichi, H., Kitada, R., Yoshihara, K., Takahashi, H. K., and Sadato, N. (2015). Interpersonal touch suppresses visual processing of aversive stimuli. Front. Hum. Neurosci. 9:164. doi: 10.3389/fnhum.2015.00164

Kever, A., Grynberg, D., and Vermeulen, N. (2017). Congruent bodily arousal promotes the constructive recognition of emotional words. Conscious. Cogn. 53, 81–88. doi: 10.1016/j.concog.2017.06.007

Kirsch, L. P., Besharati, S., Papadaki, C., Crucianelli, L., Bertagnoli, S., Ward, N., et al. (2020). Damage to the right insula disrupts the perception of affective touch. eLife 9:e47895. doi: 10.7554/eLife.47895

Kitada, R., Doizaki, R., Kwon, J., Tanigawa, T., Nakagawa, E., Kochiyama, T., et al. (2019). Brain networks underlying tactile softness perception: a functional magnetic resonance imaging study. NeuroImage 197, 156–166. doi: 10.1016/j.neuroimage.2019.04.044

Kitada, R., Ng, M., Tan, Z. Y., Lee, X. E., and Kochiyama, T. (2021). Physical correlates of human-like softness elicit high tactile pleasantness. Sci. Rep. 11:16510. doi: 10.1038/s41598-021-96044-w

Klatzky, R. L., and Lederman, S. J. (2011). Haptic object perception: spatial dimensionality and relation to vision. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 366, 3097–3105. doi: 10.1098/rstb.2011.0153

Klauer, K. C., and Musch, J. (2002). Goal-dependent and goal-independent effects of irrelevant evaluations. Personal. Soc. Psychol. Bull. 28, 802–814. doi: 10.1177/0146167202289009

Lacey, S., Campbell, C., and Sathian, K. (2007). Vision and touch: multiple or multisensory representations of objects? Perception 36, 1513–1521. doi: 10.1068/p5850

Lacey, S., and Sathian, K. (2011). Multisensory object representation: insights from studies of vision and touch. Prog. Brain Res. 191, 165–176. doi: 10.1016/B978-0-444-53752-2.00006-0

Lichtenstein-Vidne, L., Henik, A., and Safadi, Z. (2012). Task relevance modulates processing of distracting emotional stimuli. Cogn. Emot. 26, 42–52. doi: 10.1080/02699931.2011.567055

Liu, T., Liu, X., Xiao, T., and Shi, J. (2016). Human recognition memory and conflict control: an event-related potential study. Neuroscience 313, 83–91. doi: 10.1016/j.neuroscience.2015.11.047

Luck, S. J., Gaspelin, N., Folk, C. L., Remington, R. W., and Theeuwes, J. (2021). Progress toward resolving the attentional capture debate. Vis. Cogn. 29, 1–21. doi: 10.1080/13506285.2020.1848949

Martinovic, J., Lawson, R., and Craddock, M. (2012). Time course of information processing in visual and haptic object classification. Front. Hum. Neurosci. 6:49. doi: 10.3389/fnhum.2012.00049

Morrison, I. (2016). ALE meta-analysis reveals dissociable networks for affective and discriminative aspects of touch. Hum. Brain Mapp. 37, 1308–1320. doi: 10.1002/hbm.23103

Newell, F. N., Ernst, M. O., Tjan, B. S., and Bülthoff, H. H. (2001). Viewpoint dependence in visual and haptic object recognition. Psychol. Sci. 12, 37–42. doi: 10.1111/1467-9280.00307

Pascual-Leone, A., and Hamilton, R. H. (2001). The metamodal organization of the brain. Prog. Brain Res. 134, 427–445. doi: 10.1016/s0079-6123(01)34028-1

Pasqualotto, A., Dumitru, M. L., and Myachykov, A. (2016). Multisensory integration: brain, body, and world. Front. Psychol. 6:2046. doi: 10.3389/fpsyg.2015.02046

Pasqualotto, A., Finucane, C. M., and Newell, F. N. (2013). Ambient visual information confers a context-specific, long-term benefit on memory for haptic scenes. Cognition 128, 363–379. doi: 10.1016/j.cognition.2013.04.011

Pasqualotto, A., Ng, M., Tan, Z. Y., and Kitada, R. (2020). Tactile perception of pleasantness in relation to perceived softness. Sci. Rep. 10:11189. doi: 10.1038/s41598-020-68034-x

Pasqualotto, A., and Proulx, M. J. (2015). Two-dimensional rubber-hand illusion: the Dorian Gray hand illusion. Multisens. Res. 28, 101–110. doi: 10.1163/22134808-00002473

Pecchinenda, A., Bertamini, M., Makin, A. D. J., and Ruta, N. (2014). The pleasantness of visual symmetry: always, never or sometimes. PLoS One 9:e92685. doi: 10.1371/journal.pone.0092685

Roberts, R. D., Li, M., and Allen, H. A. (2024). Visual effects on tactile texture perception. Sci. Rep. 14:632. doi: 10.1038/s41598-023-50596-1

Spence, C. (2022). Multisensory contributions to affective touch. Curr. Opin. Behav. Sci. 43, 40–45. doi: 10.1016/j.cobeha.2021.08.003

Spence, C., and Gallace, A. (2011). Multisensory design: reaching out to touch the consumer. Psychol. Mark. 28, 267–308. doi: 10.1002/mar.20392

Spruyt, A., De Houwer, J., Hermans, D., and Eelen, P. (2007). Affective priming of nonaffective semantic categorization responses. Exp. Psychol. 54, 44–53. doi: 10.1027/1618-3169.54.1.44

Stenberg, G., Wiking, S., and Dahl, M. (1998). Judging words at face value: interference in a word processing task reveals automatic processing of affective facial expressions. Cogn. Emot. 12, 755–782. doi: 10.1080/026999398379420

Storbeck, J., and Robinson, M. D. (2004). Preferences and inferences in encoding visual objects: a systematic comparison of semantic and affective priming. Personal. Soc. Psychol. Bull. 30, 81–93. doi: 10.1177/0146167203258855

Streicher, M., and Estes, Z. (2016). Multisensory interaction in product choice: grasping a product affects choice of other seen products. J. Consum. Psychol. 26, 558–565. doi: 10.1016/j.jcps.2016.01.001

Suzuki, M., and Gyoba, J. (2008). Visual and tactile cross-modal mere exposure effects. Cogn. Emot. 22, 147–154. doi: 10.1080/02699930701298382

Whitaker, T. A., Simões-Franklin, C., and Newell, F. N. (2008). Vision and touch: independent or integrated systems for the perception of texture? Brain Res. 1242, 59–72. doi: 10.1016/j.brainres.2008.05.037

Woods, A. T., O’Modhrain, S., and Newell, F. N. (2004). The effect of temporal delay and spatial differences on cross-modal object recognition. Cogn. Affect. Behav. Neurosci. 4, 260–269. doi: 10.3758/CABN.4.2.260

Wu, D., Wu, T. I., Singh, H., Padilla, S., Atkinson, D., Bianchi-Berthouze, N., et al. (2011). The affective experience of handling digital fabrics: tactile and visual cross-modal effects. Lect. Notes Comput. Sci. 6974, 427–436. doi: 10.1007/978-3-642-24600-5_46

Zajonc, R. B. (1968). Attitudinal effects of mere exposure. J. Pers. Soc. Psychol. 9, 1–27. doi: 10.1037/H0025848

Keywords: multisensory integration, vision, touch, valence, affective processing

Citation: Pasqualotto A, Leong U and Kitada R (2025) Touching soft materials slows affective visual processing. Front. Psychol. 16:1644393. doi: 10.3389/fpsyg.2025.1644393

Edited by:

Vincenza Tarantino, University of Palermo, Italy

Reviewed by:

Fernando Marmolejo-Ramos, Flinders University, Australia

Müge Cavdan, University of Giessen, Germany

Copyright © 2025 Pasqualotto, Leong and Kitada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Achille Pasqualotto, pasqualotto.achil.fw@u.tsukuba.ac.jp
