- ¹Economic Research Institute, Beijing Language and Culture University, Beijing, China

- ²School of Journalism and Communication, Hubei University of Economics, Wuhan, China

- ³Business Management and Organization, Wageningen University and Research, Wageningen, Netherlands

- ⁴School of Economics and Management, Tsinghua University, Beijing, China

Project expert evaluation is the backbone of public funding allocation. A slight change in score can push a proposal below or above a funding line. Academic researchers have identified many factors that may affect the quality of evaluation decisions, yet the effect of cognitive proximity on decision quality has not been examined thoroughly. Using 923 observations from the 2017 Beijing Innofund data, the study finds that cognitive proximity has an inverted U-shaped relation to decision-making quality. Moreover, two contextual factors, evaluation experience and evaluation efforts, moderate the inverted U shape. These findings fill gaps in current research on the cognition-based perspective by specifying the mechanism of cognitive proximity in the evaluation field, and they can help improve decision-making quality through the selection of appropriate evaluators. Theoretical contributions and policy implications are discussed.

Introduction

Project expert evaluation is the backbone of public funding allocation. To select the most innovative and promising projects, grant funding agencies rely on project evaluation experts to decide which projects get funded. On such occasions, evaluators’ feedback and results are essential references for final resource allocation decisions (Olbrecht and Bornmann, 2010). Without high precision, some proposals will inevitably be incorrectly ranked and may undeservedly miss out on funding (Graves et al., 2011). Among the various factors that may affect decision quality, the effect of cognitive proximity has not been examined thoroughly, and the results remain inconsistent (Bornmann et al., 2010; Lee et al., 2013). Cognitive proximity refers to the degree of overlap between two actors’ knowledge bases (Wuyts et al., 2005; Broekel and Boschma, 2012). While some studies suppose that cognitive proximity leads to higher decision quality (Dane et al., 2012; Li, 2017), others have reported the opposite (Fisher and Keil, 2010; Mehta et al., 2011; Ottati et al., 2015). We propose that neither very high nor very low proximity is suitable for decision quality. Too much proximity can be problematic because of the risk of cognitive inertia, but too much distance is also problematic because of a lack of absorptive capacity (Nooteboom et al., 2007). Therefore, the degree to which an individual’s decision quality relies on cognitive familiarity needs to be investigated, and how proximity and distance make reviewers uncertain needs to be further explored.

In the evaluation field, evaluators’ decision quality is also highly context-dependent. Factors such as evaluation experience and effort play indispensable roles during the evaluation process. Evaluation experience suggests that individuals can understand the underlying structural features of a problem, have superior pattern recognition skills, and develop more robust solutions to problems (North et al., 2009). Cognitive effort, on the other hand, is the amount of attention devoted to creating a solution and relates to the intensity aspect of attention (Scheiter et al., 2020). It increases one’s cognitive-processing capacity to notice connections between different elements and make sense of these connections (Acar and Van den Ende, 2016). Nevertheless, there is no explicit evidence on how evaluation experience and effort relate to decision quality. Most evidence is anecdotal, and there is surprisingly little compelling empirical evidence on this issue (Schuett, 2013). We believe that this issue is crucial because it addresses how the cognitive features of evaluators affect the quality of decisions. Under the influence of these two factors, individuals’ judgments may become objectified, institutionalized, and embedded in their mental models, and may shape expectations and future interactions. Therefore, it is critical to understand what role differences in experience and evaluation effort play in evaluation quality.

This research addresses these issues using a sample of 923 observations from Beijing Innofund. We find support for these arguments. Our results show that cognitive familiarity has a curvilinear relation to decision-making quality, i.e., the quality of decisions is highest at moderate levels of cognitive proximity, beyond which it declines. Moreover, the inverted U-shaped relationship between cognitive proximity and decision quality is moderated by evaluation experience and evaluation efforts.

This paper contributes in several ways. First, we offer a more nuanced account of how the evaluator’s cognitive proximity affects decision quality. The results highlight that cognitive proximity has an inverted U-shaped relation with decision quality, i.e., those with lesser expertise simply cannot see what experts can see, and those with very close expertise may suffer from knowledge boundedness (Boudreau et al., 2016). Second, by elucidating the moderating effects of evaluation experience and evaluation efforts, our research contributes to a more comprehensive understanding of how these factors shape the curvilinear relationship between cognitive proximity and quality of decisions.

Theory and Hypotheses

Expert Project Evaluation and Decision-Making Quality

Decision-making and decision-making competence are, at least to some extent, domain-dependent (Allwood and Salo, 2014). Decision-makers need to have a sufficient absorptive capacity to identify, interpret, and exploit knowledge of the target to make their predictions more reliable (Broekel and Boschma, 2012). Decision-makers, especially those close to a particular domain field, can observe and exploit a far broader array of informational cues. They perceive and appreciate more detail, complexity, patterns, and meaning when making the same observations as those not close to a certain field (Boudreau et al., 2016). These advantages in information processing are rooted in developing a richer, more textured library of domain-specific knowledge accumulated through extended periods of training, experience, and practice (Boudreau et al., 2016). When their mental model of the task is deficient initially, and subjects possess mental models that lack features needed to understand, control, and decide in problematic dynamic settings, decision-makers perform poorly.

Research on bounded rationality and expert cognition provides further explanation of decision making and its domain-dependent mechanism. Cognition is the process of absorbing, interpreting, and categorizing knowledge (Kaplan and Tripsas, 2008). People acquire particular cognitive foundations along different life paths and in different environments, and therefore interpret, understand, and evaluate the world differently (Wuyts et al., 2005). These cognitive foundations form knowledge bases, which are the sources of expertise and action of individuals (Hautala, 2011), and lead to cognitive proximity between people (Nooteboom et al., 2007). Since evaluators have expertise in (or preferences for) one topic or approach (Li, 2017), they may not be able to identify fields of knowledge or research practices in other areas, nor value the usefulness of the potential results of those areas (Banal-Estañol et al., 2019). Therefore, cognitive proximity is a key determinant of decision-making quality.

Indeed, several studies have examined the relationship between cognitive proximity and decision-making quality. For example, Dane (2010) argues that cognitive proximity may lead to entrenchment, which increases the difficulty of adaptation within one’s domain, causing evaluators to misjudge their option selection. Fisher and Keil (2010) find that experts tend to overestimate their ability to explain their own areas more than they do unfamiliar areas. Mehta et al. (2011) identify experts’ higher sense of accountability for their judgments, coupled with their highly developed schemata, as the mechanism of misjudgment. Mueller et al. (2012) demonstrate a negative bias against creativity when evaluators experience knowledge uncertainty. On the other hand, Li (2017) shows that evaluators are better informed but more biased about the quality of projects in their own area. Criscuolo et al. (2017) further find that too large or too small a knowledge gap can cause decision-making bias. Therefore, the extent to which cognitive proximity affects decision-making quality needs to be explored. We aim to fill this gap by offering a more nuanced account of how evaluators’ decision quality relates to cognitive familiarity in an inverted U-shape.

Cognitive Proximity and Decision Quality

Intellectual distance and uncertainty might reduce decision quality in assessments (Boudreau et al., 2016). Distance from one’s knowledge domain reinforces the evaluator’s inability to assess the merits of knowledge correctly and increases difficulties in searching, internalizing, and leveraging that knowledge (Acar and Van den Ende, 2016). Thus, it makes it hard for individuals to make reliable predictions of that knowledge, and we might expect a greater uncertainty (Boudreau et al., 2016). In contrast, when evaluators have similar (but not necessarily identical) frames of knowledge, they can better understand the underlying structural features of a problem (North et al., 2009). Decisions will be easier, more predictable, and better understood when they continue from an existing body of well-established knowledge (Koehler, 1991).

However, if evaluators are too familiar with a particular field, i.e., their cognitive proximity to the rated project is very high, they may process information in a manner that reinforces their prior opinion or expectation (Ottati et al., 2015). Evaluators with high cognitive proximity tend to consider more attributes and attempt to conduct a more detailed comparative assessment in their evaluations. This effort will, in turn, cause them to align the non-alignable differences by filling in with their well-developed schemata (Mehta et al., 2011). Their complex schemata increase the likelihood of falsely recalling an associative link from memory (Baird, 2003). Evaluators’ knowledge structures further contribute to these false recalls since their richly developed schemata enable them to recall a comparable attribute more easily, albeit inaccurately. This leads evaluators to adopt a relatively dogmatic, closed-minded orientation (Ottati et al., 2015) and predisposes them to experience psychological insecurity.

The optimal level of cognitive proximity follows from the need to keep some cognitive distance (to stimulate new ideas through recombination) and to secure some cognitive proximity (to enable effective communication and knowledge transfer; Broekel and Boschma, 2012). A certain degree of dissimilarity in terms of know-how, know-what, and way of thinking can be fruitful for both sides of project evaluation. Evaluators deal with this problem by ensuring that they share a common knowledge base and allowing a certain degree of difference in the other dimensions of cognitive proximity (Huber, 2012). We can thus surmise that the evaluator’s decision quality peaks at a moderate level of cognitive proximity. Accordingly, we hypothesize:

H1: Evaluators’ cognitive proximity has a curvilinear relationship (inverted U-shape) with decision quality, such that the positive relationship between cognitive proximity and decision quality is attenuated when familiarity exceeds a certain high level.

Interaction Effect of Cognitive Proximity and Experience on Decision Quality

Experience is an essential factor influencing decision-making (Mishra et al., 2015). Evaluation experience can give evaluators a better understanding of evaluation indicators and applicants’ development and eliminate their inconsistent expectations of those applicants (Mishra et al., 2015). As a result, individuals with evaluation experience may have greater cognitive flexibility or a greater ability to recognize and integrate information (Furr et al., 2012).

High cognitive proximity, on average, means a better understanding of a problem and high efficiency of decision making. Therefore, for a high cognitive proximity case, evaluators with high evaluation experience are likely to have a higher decision quality than evaluators with average experience levels (Kotha et al., 2013). The low cognitive proximity case will likely involve very little common ground between evaluators and the target project (Cronin and Weingart, 2007). Evaluators may have difficulties in understanding the nuances of the target project with minimal overlap (Huber and Lewis, 2010). As argued above, evaluators with a high evaluation experience are likely to have developed requisite common ground among all projects, reducing uncertainties. Therefore, at low cognitive proximity, increasing evaluation experience is likely to impact the decision quality positively.

Conversely, decreasing evaluation experience will likely exacerbate cognitive dissonance and lower decision-making quality (Kotha et al., 2013). For the inverted U shape, this implies that when evaluation experience is high, evaluators are able to correctly identify the objectively maximizing option (Scheiter et al., 2020). In our context, this means that the curve flattens when evaluators’ evaluation experience is high. Schroter et al. (2004) show that a short training program to improve peer review was slightly effective. Schroter et al. (2008) conducted a randomized trial showing that the performance of reviewers improved with different types of training intervention. Therefore, we propose the following hypothesis:

H2: The inverted U-shaped relationship between cognitive proximity and decision quality is moderated by evaluation experience, such that the curvilinear relationship is less pronounced for evaluators with high evaluation experience than for those with low evaluation experience.

Interaction Effect of Cognitive Proximity and Efforts on Decision Quality

Cognitive effort depends on the complexity of the task and on the knowledge of the individual working on it (Scheiter et al., 2020). As more information is gathered, the chance of forming a valid representation of a decision strongly increases (Blaywais and Rosenboim, 2019). As a result, evaluators might make optimal decisions. On the other hand, if evaluators are given insufficient examination time to consider which pieces of information are useful, one might expect them to conduct limited reviews of applications (Frakes and Wasserman, 2017). Thus, they may overlook relevant information and grant funding to unqualified proposals (Kim and Oh, 2017).

As stated above, high cognitive proximity means a better understanding of a problem and high efficiency of decision making. In such conditions, high cognitive effort may further improve the odds of making a reliable decision. For the low cognitive proximity case, evaluation effort is vital because it increases one’s cognitive-processing capacity to notice connections between different elements and to make sense of these connections in such a way that they can be recombined to generate a novel solution to a given problem (Li et al., 2013). If evaluators perceive their current state of knowledge as insufficient, they are expected to exert cognitive effort to close the gap between actual and desired levels of decision quality. For the inverted U shape, this implies that the curve becomes less pronounced when evaluators’ cognitive effort is high. Therefore, we propose the following hypothesis:

H3: The inverted U-shaped relationship between cognitive proximity and decision quality is moderated by evaluation efforts, such that the curvilinear relationship is less pronounced for evaluators with high evaluation efforts than for those with low evaluation efforts.

Methodology

Beijing Innofund Institution

A small and medium-sized technology-based enterprise special fund, Beijing Innofund, was initiated by the Government of Beijing Municipality in 2006. It aims to support small and medium-sized enterprises’ technological innovation activities and foster their growth in Beijing. Over 4,000 innovative SMEs have successfully won the grant, and 2,200 of them have grown into national high-tech enterprises.

Each year, thousands of firms apply for Beijing Innofund. Firms submit their proposals to one of the 10 panels: electronic information, biomedicine, new materials, equipment manufacturing, modern agriculture, sustainable development, clean energy and energy-saving, electric vehicles, cultural innovation, and city management. After qualification scrutiny, the evaluation process begins. Evaluators log into the evaluation system for online review. To ensure the fairness of evaluation, proposals are randomly distributed to evaluators. Evaluators make their own judgments independently, and no discussion group is set up.

In particular, each proposal is rated by two technical evaluators and three business evaluators. Technical evaluators are generally researchers or CTOs who have deep insight into technology. Business evaluators are mainly investors, entrepreneurs, or managers who have made outstanding achievements in the business field. The evaluation indicators of technical evaluators are slightly different from those of business evaluators. Technical evaluators usually focus on the project’s technological innovation capabilities; their review indicators are human resources, technology innovation, and business model. Business evaluators focus on human resources, economic performance, product innovation, and business models. When the evaluation is completed, the system automatically summarizes all five evaluators’ scores for the proposal. Their average score is the final score, and it determines which proposals get the grant. Typically, around the top 23% of proposals in each panel are the winners.
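The scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the dictionary layout, the rounding of the winner count, and the handling of ties are hypothetical, since the text specifies only that five scores are averaged and that roughly the top 23% of each panel is funded.

```python
from statistics import mean


def final_score(technical_scores, business_scores):
    """Average the five evaluator scores for one proposal.

    The text states that two technical and three business evaluators
    rate each proposal and that their average is the final score.
    """
    if len(technical_scores) != 2 or len(business_scores) != 3:
        raise ValueError("expected 2 technical and 3 business scores")
    return mean(list(technical_scores) + list(business_scores))


def select_winners(panel_scores, top_share=0.23):
    """Return the proposal ids in the top share of one panel.

    panel_scores: dict mapping proposal id -> final score (hypothetical layout).
    top_share: illustrative cut-off; the text says "around the top 23%".
    Rounding the winner count and leaving ties in input order are
    assumptions, not rules taken from the source.
    """
    n_winners = round(len(panel_scores) * top_share)
    ranked = sorted(panel_scores, key=panel_scores.get, reverse=True)
    return ranked[:n_winners]
```

Because funding is decided by rank within a panel, a small change in a single evaluator's score can move a proposal across the cut-off, which is why the reliability of each individual rating matters.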

In 2017, nearly 2,000 companies applied for Beijing Innofund, and over 200 evaluators participated in the evaluation process. In the end, 260 projects received support.

Data and Sample

The data used in this paper are obtained from the Beijing Innofund database. The database contains rich information about proposals, evaluators, and evaluation results. Although evaluators may have different affiliations, we focus on evaluators employed in academic departments (universities and research institutions). Part of the reason is that information on these evaluators is more easily supplemented, and part of the reason is that we are particularly interested in how knowledge proximity affects decision quality in these knowledge-intensive departments. After deleting unmatched and missing data, our sample comprises 923 expert–proposal scoring pairs consisting of 35 evaluators and 772 proposals in 2017.

Measurements

Dependent Variable

Decision Quality

The higher the decision-making quality, the more reliable the decisions are. A high-quality decision should remain satisfying after the decision-maker decides, that is, s/he believes it is the right one (Allwood and Salo, 2014). Bruine de Bruin et al. (2007) developed a self-report measure of adverse decision outcomes. Milkman et al. (2009) suggested that decision quality can be evaluated based upon whether, after the fact, the decision-maker remains satisfied with his or her decisions. The approach taken in this study is based on a long tradition of research using subjective ratings for establishing predictive validity (Wood and Highhouse, 2014). Accordingly, we measured decision quality by asking decision-makers whether they have confidence in their rating. They report it on a 6-point scale (1=entirely unsure, 2=unsure, 3=slightly less unsure, 4=slightly sure, 5=sure, 6=entirely sure).

Independent Variables

Cognitive Proximity

To measure cognitive proximity between the evaluator’s expertise and proposals, we first specify evaluators’ knowledge expertise. Based on Jeppesen and Lakhani’s (2010) study, we categorized evaluators’ knowledge expertise on a scale from 1 to 3 (1=outside my field of expertise, 2=at the boundary of my field of expertise, 3=inside my field of expertise). The Beijing Innofund evaluator database contains four self-reported fields with which evaluators consider themselves most familiar, and we coded a proposal as 3 if one of those fields coincides with the project’s panel field. We coded as 2 those projects whose fields matched the reviewer’s major but did not belong to the four most familiar fields. Fields that were not related to the evaluator’s knowledge base were coded as 1. The higher the value, the higher the cognitive proximity between the evaluator and the applicant.
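The three-level coding rule can be expressed as a small function. The sketch below is illustrative only: the function and argument names are hypothetical, and how an evaluator's major is mapped to panel fields is an assumption, because the text does not describe that step.

```python
def code_cognitive_proximity(panel_field, familiar_fields, major_fields):
    """Code evaluator-proposal proximity on the 1-3 scale described above.

    panel_field: the panel the proposal was submitted to.
    familiar_fields: the evaluator's four self-reported most familiar fields.
    major_fields: panel fields judged to match the evaluator's major
        (hypothetical input; the mapping itself is not given in the text).
    """
    if panel_field in familiar_fields:
        return 3  # inside the evaluator's field of expertise
    if panel_field in major_fields:
        return 2  # at the boundary of the evaluator's field of expertise
    return 1  # outside the evaluator's field of expertise
```

The order of the checks matters: a field that is both self-reported as familiar and matched by the major is coded 3, in line with the rule that 2 applies only to fields outside the four most familiar ones.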

Evaluation Experience

Evaluators’ evaluation experience was measured by the number of times evaluators had participated in Beijing Innofund evaluations. Because Beijing Innofund underwent a complete reform in 2015, its database only keeps evaluation information since 2015. Therefore, the maximum evaluation experience is 3 and the minimum is 1. A higher number means evaluators are more experienced.

Evaluation Efforts

Evaluation effort is a commonly used indicator of the amount of cognitive resources expended on a task. Following Acar and Van den Ende (2016), we used the total number of minutes evaluators had spent generating their final solution, including thinking about the solution and reading and researching it.

Control Variables

Gender

To provide equitable assessment, systematic differences in decisions by male and female evaluators need to be addressed (Tamblyn et al., 2018). We coded male evaluators as 0 and female evaluators as 1.

Education Level

Evaluators with a higher degree level show more risk (Li, 2017), so education level needs to be controlled for in the model. We coded bachelor as 1, master as 2, and Ph.D. as 3.

Major

Discipline differences were apparent in evaluation studies (Bornmann et al., 2010); we divided the evaluator’s major into three categories, science and technology, liberal arts, and interdisciplinary backgrounds. Science and technology includes physics, chemistry, biology, engineering, astronomy, and mathematics; liberal arts includes literature, history, philosophy and art, human geography, law, education, economics, and management. If evaluators only major in science and technology, we coded it as 1; if evaluators only major in liberal arts, we coded it as 2; if a certain evaluator has both knowledge in science and technology and liberal arts, then we coded it as 3.

Evaluator’s Types

Since evaluation indicators of different types are different, evaluator types also need to be controlled (Jayasinghe et al., 2003). We coded the technical evaluator as 1; the business evaluator as 2.

Application Field

Applications and evaluators tend to be systematically different in each field (Banal-Estañol et al., 2019). We generate dummy variables for each field.

Statistical Analysis

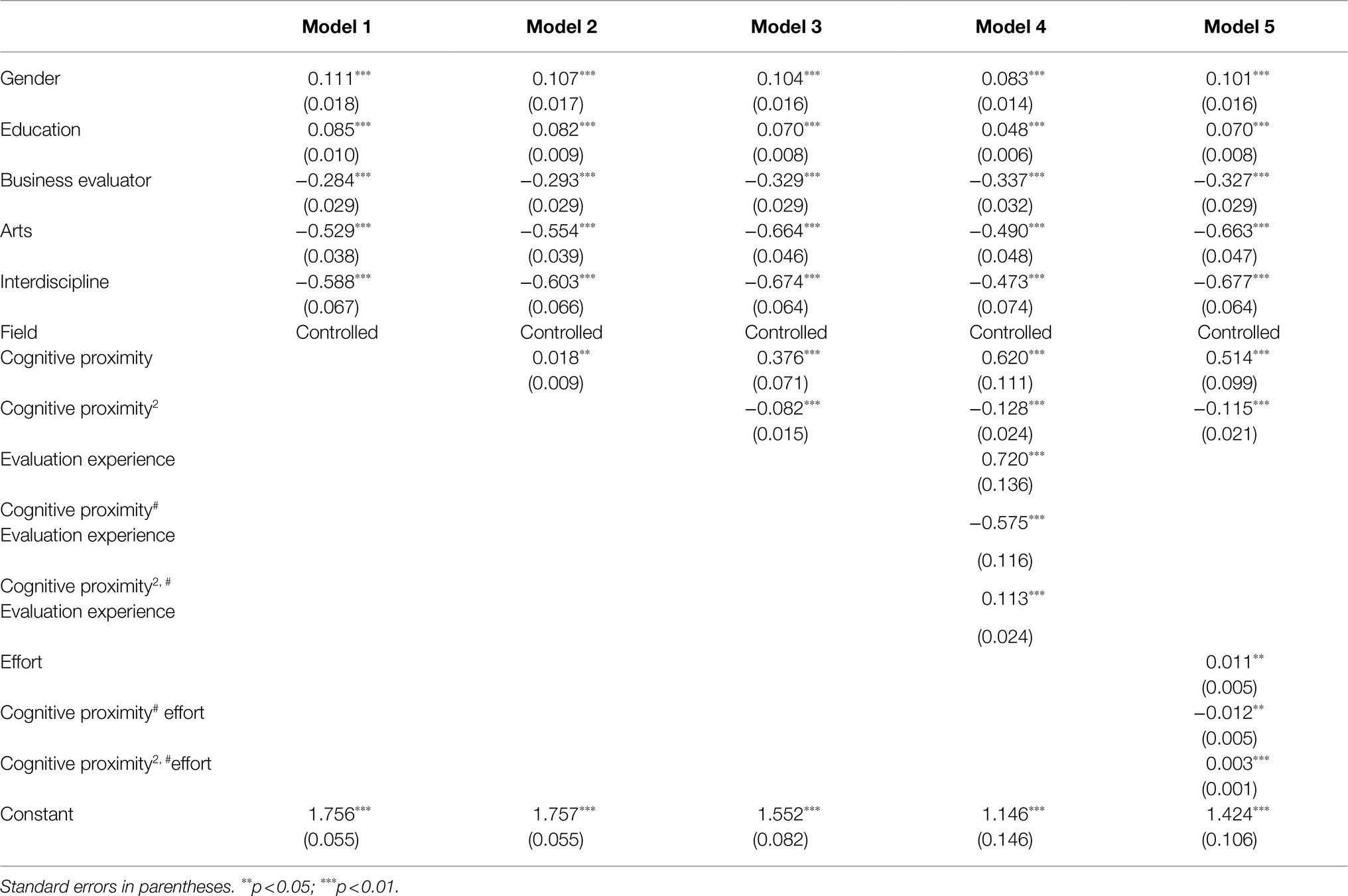

Since our dependent variable is a nonnegative count variable, the negative binomial model is appropriate for estimating it. The negative binomial model allows the variance to differ from the mean, which can correct for overdispersion. Moreover, since the evaluations made by the same evaluator may not be independent, we control for evaluator fixed effects. We use hierarchical models, with Model 1 serving as the baseline model that includes only the control variables, Models 2 and 3 introducing the independent variable and its quadratic term, and Models 4 and 5 incorporating the moderating variables. No symptoms of multicollinearity were observed, as the maximum variance inflation factor does not exceed the critical value of 10.
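For orientation, the full moderated specification can be written out as follows. This is a sketch rather than the estimated equation: the log link, the quadratic variance function, and all symbols are assumptions introduced here. $DQ_{ij}$ denotes the decision quality reported by evaluator $i$ for proposal $j$, $CP_{ij}$ the cognitive proximity, $M_i$ a moderator (evaluation experience or evaluation efforts), $X_{ij}$ the control variables, and $\alpha_i$ the evaluator fixed effect.

```latex
\begin{align}
\ln \mathbb{E}[DQ_{ij}] &= \beta_0 + \beta_1 CP_{ij} + \beta_2 CP_{ij}^2
  + \beta_3 M_i + \beta_4 \, CP_{ij} M_i + \beta_5 \, CP_{ij}^2 M_i
  + \gamma^{\top} X_{ij} + \alpha_i \\
\operatorname{Var}[DQ_{ij}] &= \mu_{ij} + \kappa \, \mu_{ij}^2,
  \qquad \mu_{ij} = \mathbb{E}[DQ_{ij}]
\end{align}
```

In this notation, an inverted U-shaped main effect corresponds to $\beta_2 < 0$, and a moderator that flattens the curve corresponds to $\beta_5 > 0$. Setting $\beta_3 = \beta_4 = \beta_5 = 0$ gives the quadratic specification without moderators, and setting $\kappa = 0$ reduces the variance function to the Poisson case.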

Results

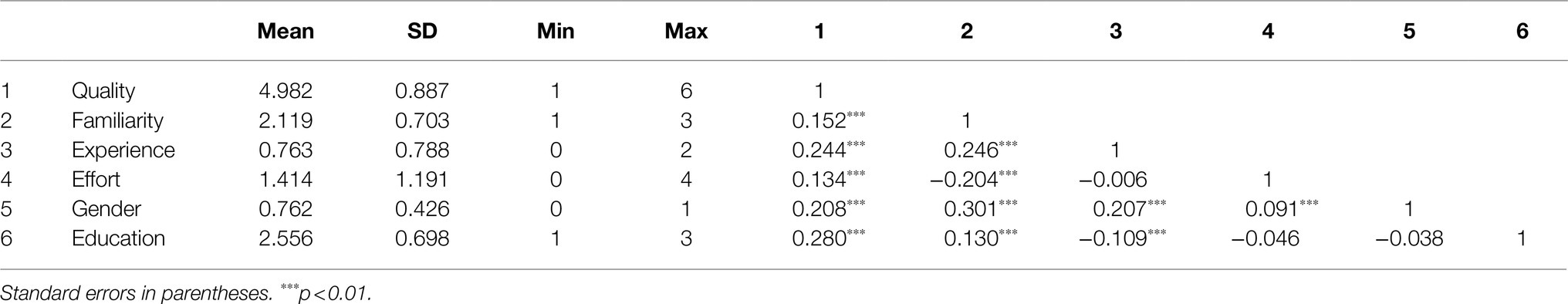

Table 1 provides the means, standard deviations, and correlation coefficients of all study variables. It shows relatively moderate correlations among variables.

Main Effect

The results are given in Table 2. In Model 1, we enter the control variables (gender, education, major, types, and field). In Model 2 and Model 3, we add cognitive proximity and its quadratic term, respectively, to test the curvilinear relationship between the evaluators’ cognitive proximity and assessing quality. The result of Model 2 shows a positive and non-significant effect of cognitive proximity on decision quality (β=0.018), and the coefficient of the quadratic term of cognitive proximity in Model 3 is significant and negative (β=−0.082, p<0.1).

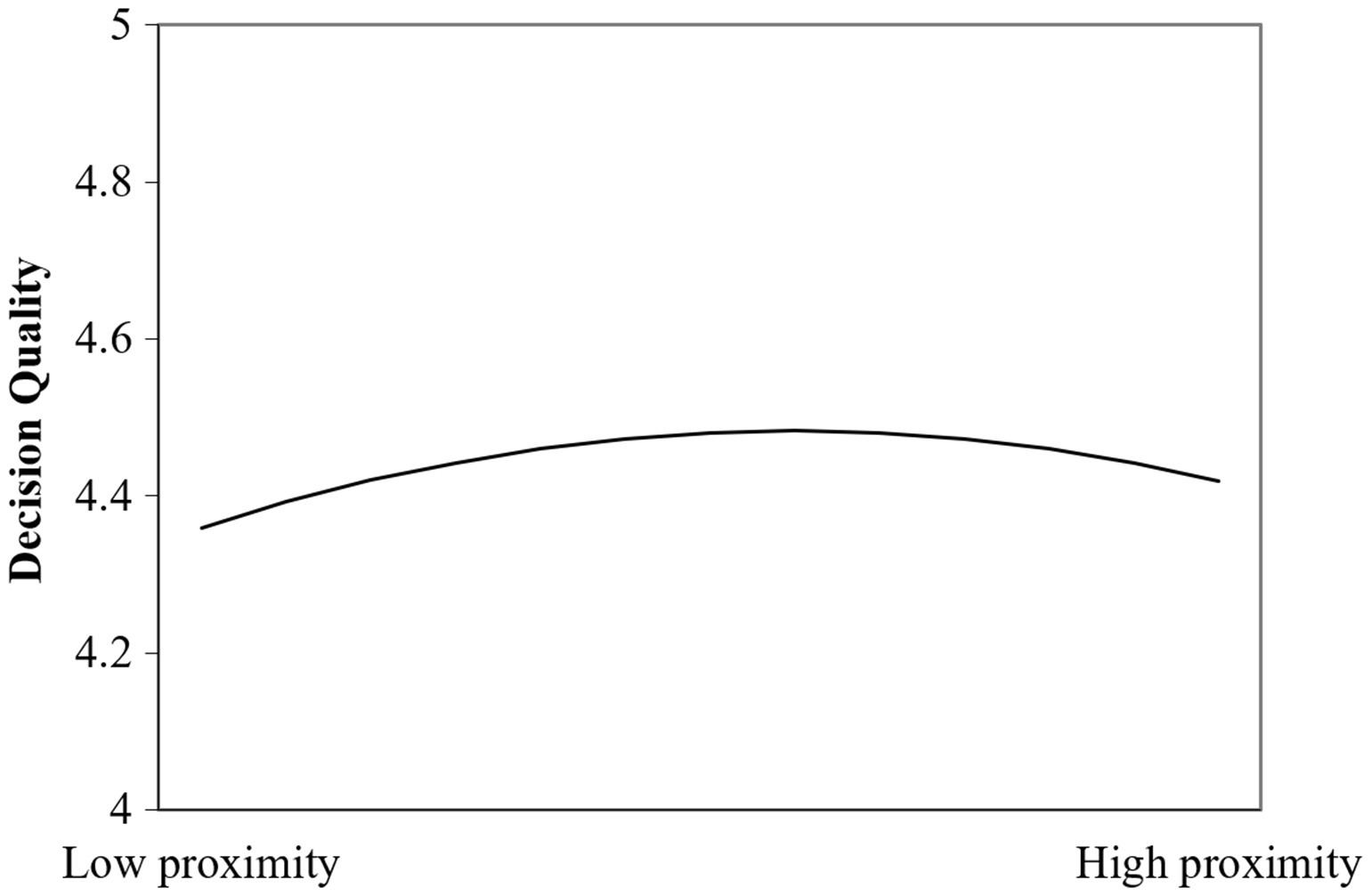

To facilitate interpretation of the results, we plot in Figure 1 the relation of cognitive proximity and decision quality. The results indicate that once the evaluators’ knowledge familiarity reaches a certain level, decision quality peaks and declines as evaluators’ knowledge increases further. The inverted U shape of this curve is consistent with H1.
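A common complement to such a plot is to check where the fitted curve peaks. For a quadratic term in the linear predictor, the peak lies at minus the linear coefficient divided by twice the quadratic coefficient. The sketch below is a generic illustration, not the authors' procedure: the function names are hypothetical and no coefficient values from Table 2 are assumed. The default range of 1 to 3 is the coding range of cognitive proximity given in the Measurements section.

```python
def turning_point(b_linear, b_quadratic):
    """Return the x value at which b_linear*x + b_quadratic*x**2 peaks.

    With a monotone link such as the log link, the peak of the linear
    predictor is also the peak of the expected outcome.
    """
    if b_quadratic >= 0:
        raise ValueError("an inverted U requires a negative quadratic coefficient")
    return -b_linear / (2 * b_quadratic)


def peak_within_range(b_linear, b_quadratic, low=1, high=3):
    """Check whether the peak lies inside the observed proximity range.

    low and high default to the 1-3 coding of cognitive proximity; an
    inverted U is easier to defend when the peak falls inside the data range.
    """
    return low <= turning_point(b_linear, b_quadratic) <= high
```

If the peak falls near or beyond the upper end of the observed range, the fitted relation looks more like a concave increase than a full inverted U within the data.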

H2 predicts that the inverted U-shaped relationship between cognitive proximity and decision quality is moderated by evaluation experience, such that the curvilinear relationship is less pronounced for evaluators with high evaluation experience than for those with low evaluation experience. We enter evaluation experience in Model 4. The results of Model 4 reveal that the interaction term between cognitive proximity and evaluation experience is negative and significant (β=−0.575, p<0.05), whereas the interaction term between the squared term of cognitive proximity and evaluation experience is positive and significant (β=0.113, p<0.05).

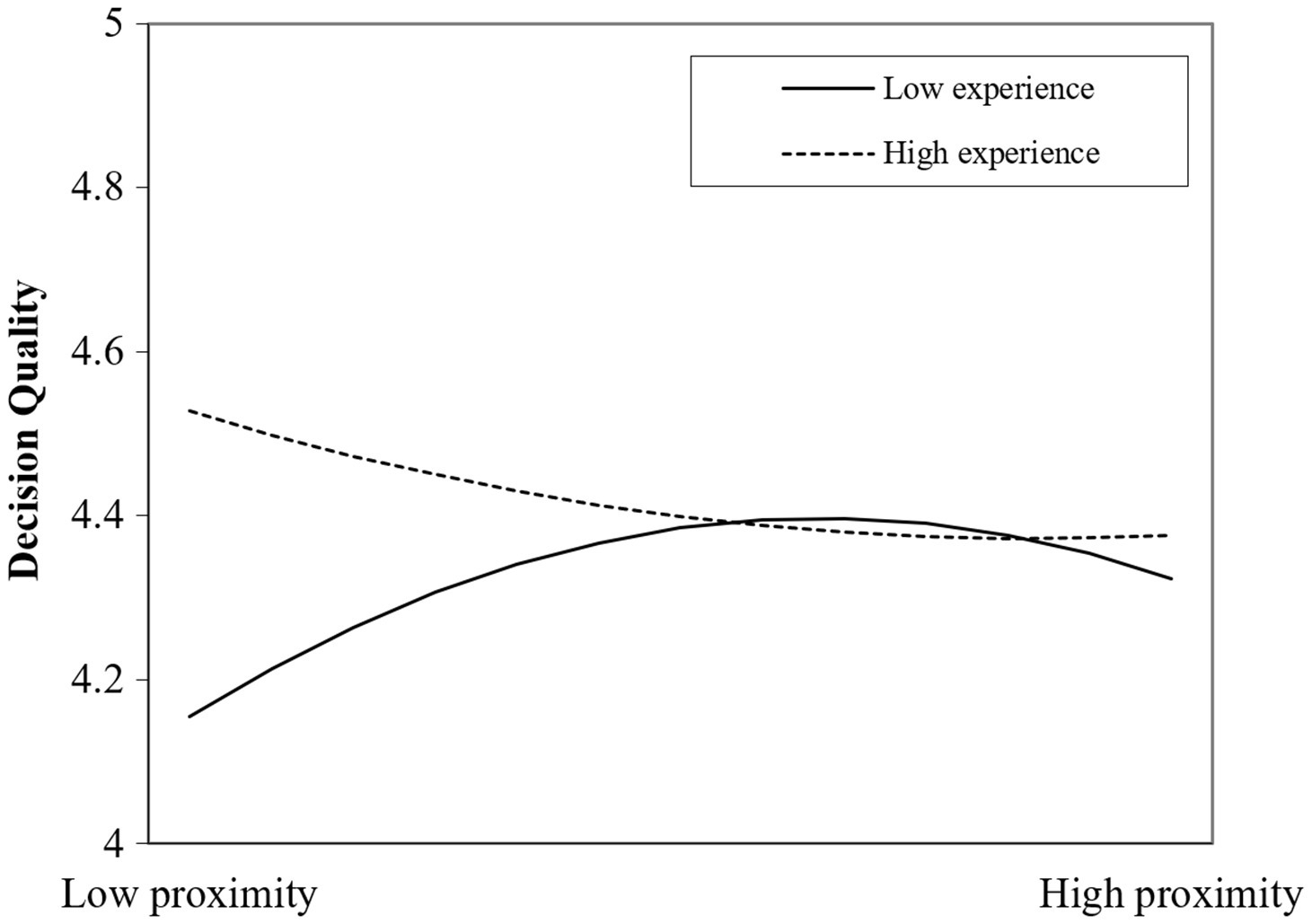

To facilitate interpretation, in Figure 2, we plot the curves between cognitive proximity and decision quality at a higher (one standard deviation above the mean) and a lower (one standard deviation below the mean) level of evaluation experience, respectively. The analysis suggests that, at both low and high cognitive proximity, the impact on assessing quality differs significantly between low and high evaluation experience. For evaluators with low evaluation experience, cognitive proximity has an increasingly positive estimated effect on assessing quality. For evaluators with high evaluation experience, the effect of cognitive proximity on assessing quality gradually drops, i.e., the increasing benefits of cognitive proximity for decision quality are lessened. However, after the turning point, the decline in assessing quality is lessened for highly experienced evaluators since the curve turns up. Thus, the results indicate that the inverted U-shaped relationship between cognitive proximity and decision quality is attenuated when the evaluator’s experience is high and accentuated when the experience is low.
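The curves in such a plot follow from evaluating the interaction model at fixed moderator values. A minimal sketch, assuming a specification with a proximity term, its square, and their interactions with one moderator, is given below. All names are hypothetical, and the function takes coefficients as inputs rather than assuming any values from Table 2.

```python
def conditional_curve(b_cp, b_cp2, b_cp_mod, b_cp2_mod, moderator_value):
    """Return the (linear, quadratic) proximity coefficients at one moderator level.

    b_cp, b_cp2: coefficients of proximity and squared proximity.
    b_cp_mod, b_cp2_mod: coefficients of their interactions with the moderator.
    """
    linear = b_cp + b_cp_mod * moderator_value
    quadratic = b_cp2 + b_cp2_mod * moderator_value
    return linear, quadratic


def curves_at_plus_minus_one_sd(b_cp, b_cp2, b_cp_mod, b_cp2_mod, mod_mean, mod_sd):
    """Evaluate the conditional curve one SD below and one SD above the mean."""
    return {
        "low": conditional_curve(b_cp, b_cp2, b_cp_mod, b_cp2_mod, mod_mean - mod_sd),
        "high": conditional_curve(b_cp, b_cp2, b_cp_mod, b_cp2_mod, mod_mean + mod_sd),
    }
```

A positive interaction with the squared term makes the conditional quadratic coefficient less negative as the moderator increases, which is what a flatter curve for highly experienced evaluators looks like in this parameterization. If the conditional quadratic coefficient becomes positive, the curve turns upward at high proximity.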

H3 predicts that the inverted U-shaped relationship between cognitive proximity and decision quality is moderated by evaluation efforts, such that the curvilinear relationship is less pronounced for evaluators with high evaluation efforts than for those with low evaluation efforts. In Model 5, we include evaluation efforts and its interactions with cognitive proximity and with the squared term of cognitive proximity. The interaction term between cognitive proximity and evaluation efforts is negative but not significant (β=−0.012), whereas the interaction term between the squared term of cognitive proximity and evaluation efforts is positive and significant (β=0.003, p<0.1).

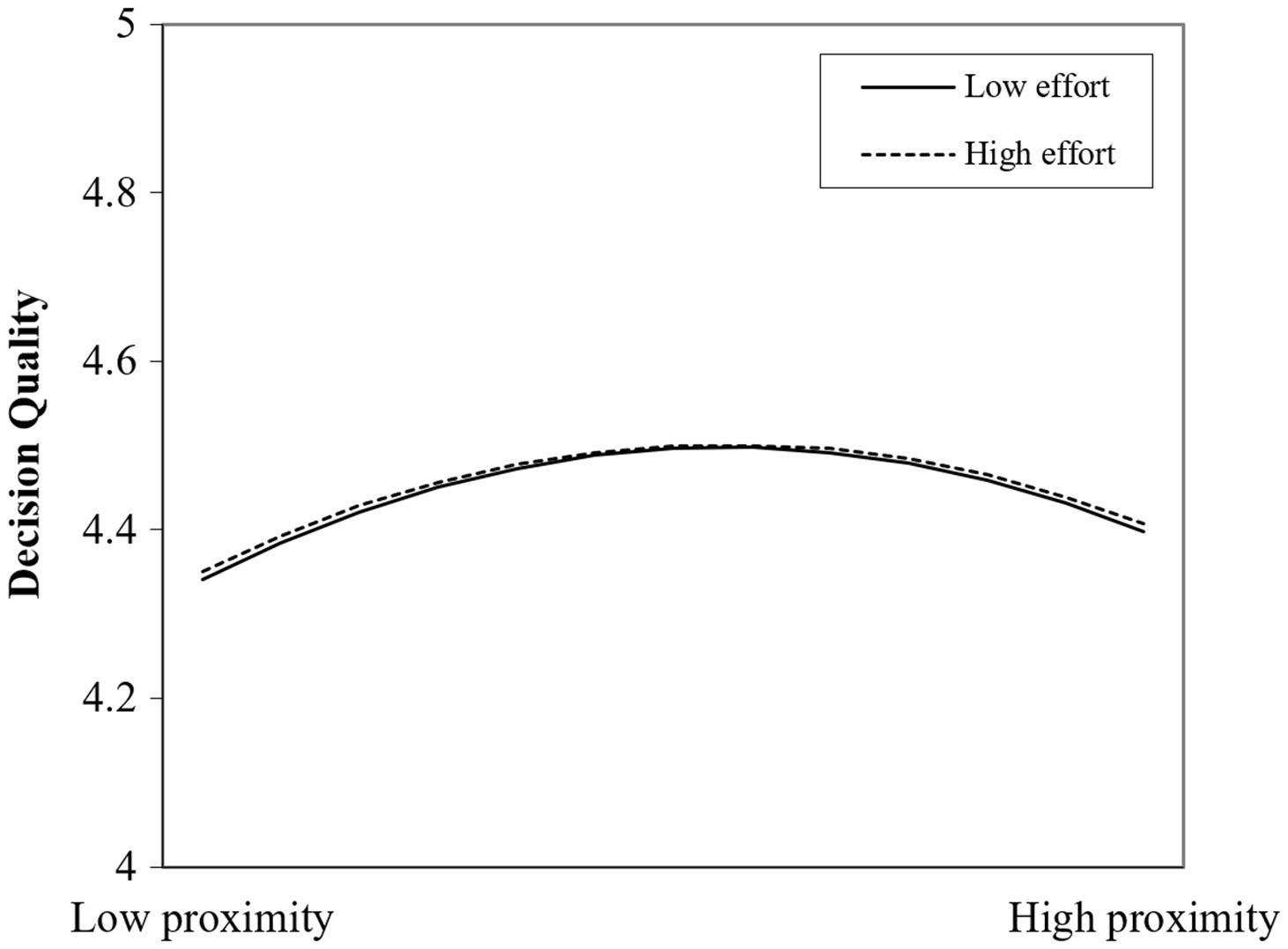

Figure 3 plots the curves between cognitive proximity and decision quality at a higher (one standard deviation above the mean) and a lower (one standard deviation below the mean) level of evaluation efforts. The figure suggests that the impact of cognitive proximity on assessing quality differs slightly between low and high evaluation efforts. The curvilinear relationship is less pronounced for evaluators with high evaluation efforts than for those with low evaluation efforts.

Robustness Check

We conduct further tests to check that our results are robust to changes in specification. (1) We conduct additional analysis by dropping the funded projects from the sample. Selecting unfunded projects can effectively reduce the error caused by quality differences between projects. Unfunded projects tend to be of similar quality, and their sample size is large enough. (2) We replicate our findings with Poisson regression. This procedure is estimated using the maximum likelihood method compared to our main specification, effectively suppressing heteroscedasticity. The results provided in Table 3 are consistent with the main results.

Since proposal characteristics may also influence evaluation decisions, we opt for a robust cluster variance estimator to account for possible proposal correlations. Table 4 (within proposal) shows that the patterns of results were all consistent.

Discussion

In this study, we investigate the effect of cognitive proximity in the decision-making field as well as the factors that may moderate it. We examined the extent to which one’s cognitive proximity affects decision quality by testing the inverted U-shaped relationship. Our hypothesis was supported. As explained by the cognition-based perspective, decision-makers are more confident of their decisions at intermediate levels of cognitive proximity.

However, according to Figure 1, the inverted U-shaped relation of cognitive proximity to decision quality is not pronounced. When knowledge proximity increases from moderate to high, evaluators’ decision quality remains relatively high. We presume this is because, as evaluators’ expertise increases, their psychological security may increase as well. In such conditions, evaluators do not need to make risky judgments, and their judgments are less easily threatened by uncertainty. This may induce them to overestimate the accuracy of their beliefs (Ottati et al., 2015). Therefore, the declining effect of cognitive proximity on decision quality may be lessened.

Moreover, the two moderators, evaluation experience and effort, both positively moderate the relationship between cognitive proximity and decision quality. However, their efficacy is quite different. Evaluators’ performance in many fields relies on extensive practice and experience. It is proposed that information that is most confidently retrieved from memory, regardless of its accuracy, will be most influential in decision making (Cowley, 2004). Experience-based memory may help integrate the disparate elements of tasks that are not easily decomposed, permitting one to form a holistic judgment (Dane et al., 2012). Therefore, the effectiveness of experience is amplified at a high level of domain expertise (Dane et al., 2012). On the other hand, high cognitive proximity means a better understanding of a problem and high efficiency of decision making. It is possible that many of the evaluators already had a clear opinion on the topic, reflected in very high decision quality. In such conditions, evaluators may process information less effortfully than they process unfamiliar information (Acar and Van den Ende, 2016), so the curve becomes flatter. Thus, the effect of evaluation efforts on the inverted U-shaped relation is less significant than that of evaluation experience.

Theoretical Implications

We challenge the traditional linear conceptualization of the effects of cognitive proximity on decision making and contribute to the literature on cognitive theory, peer evaluation, and decision theory by specifying the mechanism of cognitive proximity in the evaluation domain. Individuals with deep domain knowledge may have greater confidence in their predictions in fields where they have self-declared expertise (Heath and Tversky, 1991). Actors need to have a sufficient absorptive capacity to identify, interpret, and exploit knowledge (Cohen and Levinthal, 1990). However, too much cognitive proximity may result in cognitive lock-in, in the sense that overly similar cognitive bases between evaluators and proposals may in turn limit evaluators’ cognition. This study provides a cognitive account that may explain conflicting evidence about the link between cognitive proximity and decision quality by revealing that medium cognitive proximity is beneficial (Dane, 2010).

We also contribute to the decision-making field by exploring how evaluators’ experience and effort shape evaluation outcomes. Evaluation experience has received little attention in the peer review literature; we highlight its importance in the evaluation field by elucidating its moderating role in the curvilinear relationship between cognitive proximity and decision certainty. By integrating evaluation efforts into cognition processes, we also show that decision performance can be improved by allocating more cognitive resources to the evaluation task. Our research provides a new lens for investigating the impact of evaluation efforts on cognition and decision quality.

Practical Implications

The funding agency should consider the cognitive proximity between the evaluators and the proposals to improve decision consistency and the quality of review decisions. It is also possible to supplement evaluations with statistics providing objective measures of the degree of familiarity for a given proposal (Boudreau et al., 2016). Another option for improvement involves balancing the characteristics of evaluators. For example, if an evaluator’s knowledge significantly affects decisions, it may be best to assign evaluators with various backgrounds for evaluation.

Similarly, since previous evaluation experience is critical to decision quality, selecting experienced evaluators and offering them training can also help improve their decision quality. Furthermore, since greater evaluation effort improves evaluators’ ability to identify high-quality proposals, it is crucial to give evaluators sufficient time to improve decision quality.

Limitations and Future Research

These contributions, however, must be qualified in light of two critical limitations of this study. The first concerns the data. Although the data enabled us to observe how cognitive search behavior was related to decision quality, they do not allow us to establish causality. Therefore, we encourage future researchers to use experimental manipulations to explicitly show the causal role of search behavior in influencing decision-making.

Second, the role of different types of organizational structures in decision-making needs to be further explored. This study uses only evaluation data from universities. The characteristics and performance of evaluators from other sectors, such as government departments and enterprises, are also worth exploring. Evaluation decisions may function differently across organizational types, for example, the bureaucratic/orthodox organization, the professional organization, the postmodern organization, the representative democratic organization, and network organizations (Diefenbach and Sillince, 2011).

Conclusion

Our findings suggest that decision-makers are more confident in their decisions at intermediate levels of cognitive proximity. Optimal knowledge proximity is reached when people’s knowledge bases share both similarities and some newness (Nooteboom et al., 2007). This relationship is weakened by evaluation experience, which positively affects decision quality; that is, the curvilinear relationship is less pronounced for evaluators with high evaluation experience than for those with low evaluation experience. Moreover, the inverted U-shaped relationship between cognitive proximity and decision quality is also moderated by evaluation efforts. That is, the curvilinear relationship is less pronounced for evaluators with high evaluation efforts than for those with low evaluation efforts.

Data Availability Statement

The datasets presented in this article are not readily available because the data belong to an ongoing project. Requests to access the datasets should be directed to the first author, ZC.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This research project is supported by the Science Foundation of Beijing Language and Culture University (supported by the Fundamental Research Funds for the Central Universities) (21YJ050007) and Foshan and Tsinghua Industry-University Research Collaborative Innovation Project (2019THFS01).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Acar, O. A., and Van den Ende, J. (2016). Knowledge distance, cognitive-search processes, and creativity: The making of winning solutions in science contests. Psychol. Sci. 27, 692–699. doi: 10.1177/0956797616634665

Allwood, C. M., and Salo, I. (2014). Conceptions of decision quality and effectiveness in decision processes according to administrative officers and investigators making decisions for others in three Swedish public authorities. Hum. Serv. Organ. Manag. Leadersh. Gov. 38, 271–282. doi: 10.1080/23303131.2014.893277

Baird, R. R. (2003). Experts sometimes show more false recall than novices: A cost of knowing too much. Learn. Individ. Differ. 13, 349–355. doi: 10.1016/S1041-6080(03)00018-9

Banal-Estañol, A., Macho-Stadler, I., and Pérez-Castrillo, D. (2019). Evaluation in research funding agencies: are structurally diverse teams biased against? Res. Policy 48, 1823–1840. doi: 10.1016/j.respol.2019.04.008

Blaywais, R., and Rosenboim, M. (2019). The effect of cognitive load on economic decisions. Manag. Decis. Econ. 40, 993–999. doi: 10.1002/mde.3085

Bornmann, L., Rüdiger, M., and Daniel, H. D. (2010). A reliability-generalization study of journal peer reviews: a multilevel meta-analysis of inter-rater reliability and its determinants. PLoS One 5, 1–10. doi: 10.1371/journal.pone.0014331

Boudreau, K. J., Guinan, E. C., Lakhani, K. R., and Riedl, C. (2016). Looking across and looking beyond the knowledge frontier: intellectual distance, novelty, and resource allocation in science. Manag. Sci. 62, 2765–2783. doi: 10.1287/mnsc.2015.2285

Broekel, T., and Boschma, R. (2012). Knowledge networks in the Dutch aviation industry: the proximity paradox. J. Econ. Geogr. 12, 409–433. doi: 10.1093/jeg/lbr010

Bruine de Bruin, W., Parker, A. M., and Fischhoff, B. (2007). Individual differences in adult decision-making competence. J. Pers. Soc. Psychol. 92, 938–956. doi: 10.1037/0022-3514.92.5.938

Cohen, W. M., and Levinthal, D. A. (1990). Absorptive capacity: A new perspective on learning and innovation. Adm. Sci. Q. 35, 128–152. doi: 10.2307/2393553

Cowley, E. (2004). Recognition confidence, recognition accuracy and choice. J. Bus. Res. 57, 641–646. doi: 10.1016/S0148-2963(02)00307-7

Criscuolo, P., Dahlander, L., Grohsjean, T., and Salter, A. (2017). Evaluating novelty: The role of panels in the selection of R&D projects. Acad. Manag. J. 60, 433–460. doi: 10.5465/amj.2014.0861

Cronin, M. A., and Weingart, L. R. (2007). Representational gaps, information processing, and conflict in functionally diverse teams. Acad. Manag. Rev. 32, 761–773. doi: 10.5465/amr.2007.25275511

Dane, E. (2010). Reconsidering the trade-off between expertise and flexibility: A cognitive entrenchment perspective. Acad. Manag. Rev. 35, 579–603. doi: 10.5465/AMR.2010.53502832

Dane, E., Rockmann, K. W., and Pratt, M. G. (2012). When should I trust my gut? Linking domain expertise to intuitive decision-making effectiveness. Organ. Behav. Hum. Decis. Process. 119, 187–194. doi: 10.1016/j.obhdp.2012.07.009

Diefenbach, T., and Sillince, J. A. (2011). Formal and informal hierarchy in different types of organization. Organ. Stud. 32, 1515–1537. doi: 10.1177/0170840611421254

Fisher, M., and Keil, F. C. (2010). The curse of expertise: when more knowledge leads to miscalibrated explanatory insight. Cogn. Sci. 40, 1251–1269. doi: 10.1111/cogs.12280

Frakes, M. D., and Wasserman, M. F. (2017). Is the time allocated to review patent applications inducing examiners to grant invalid patents? Evidence from microlevel application data. Rev. Econ. Stat. 99, 550–563. doi: 10.1162/REST_a_00605

Furr, N. R., Cavarretta, F., and Garg, S. (2012). Who changes course? The role of domain knowledge and novel framing in making technology changes. Strateg. Entrep. J. 6, 236–256. doi: 10.1002/sej.1137

Graves, N., Barnett, A. G., and Clarke, P. (2011). Funding grant proposals for scientific research: retrospective analysis of scores by members of grant review panel. BMJ 343, 1–8. doi: 10.1136/bmj.d4797

Hautala, J. (2011). Cognitive proximity in international research groups. J. Knowl. Manag. 15, 601–624. doi: 10.1108/13673271111151983

Heath, C., and Tversky, A. (1991). Preference and belief: ambiguity and competence in choice under uncertainty. J. Risk Uncertain. 4, 5–28. doi: 10.1007/BF00057884

Huber, F. (2012). On the role and interrelationship of spatial, social and cognitive proximity: personal knowledge relationships of R&D workers in the Cambridge information technology cluster. Reg. Stud. 46, 1169–1182. doi: 10.1080/00343404.2011.569539

Huber, G. P., and Lewis, K. (2010). Cross-understanding: implications for group cognition and performance. Acad. Manag. Rev. 35, 6–26. doi: 10.5465/AMR.2010.45577787

Jayasinghe, U. W., Marsh, H. W., and Bond, N. (2003). A multilevel cross-classified modelling approach to peer review of grant proposals: the effects of assessor and researcher attributes on assessor ratings. J. R. Stat. Soc. Ser. A-Stat. Soc. 166, 279–300. doi: 10.1111/1467-985X.00278

Jeppesen, L. B., and Lakhani, K. R. (2010). Marginality and problem-solving effectiveness in broadcast search. Organ. Sci. 21, 1016–1033. doi: 10.1287/orsc.1090.0491

Kaplan, S., and Tripsas, M. (2008). Thinking about technology: applying a cognitive lens to technical change. Res. Policy 37, 790–805. doi: 10.1016/j.respol.2008.02.002

Kim, Y. K., and Oh, J. B. (2017). Examination workloads, grant decision bias and examination quality of patent office. Res. Policy 46, 1005–1019. doi: 10.1016/j.respol.2017.03.007

Koehler, D. J. (1991). Explanation, imagination, and confidence in judgment. Psychol. Bull. 110, 499–519. doi: 10.1037/0033-2909.110.3.499

Kotha, R., George, G., and Srikanth, K. (2013). Bridging the mutual knowledge gap: coordination and the commercialization of university science. Acad. Manag. J. 56, 498–524. doi: 10.5465/amj.2010.0948

Lee, C. J., Sugimoto, C. R., Zhang, G., and Cronin, B. (2013). Bias in peer review. J. Am. Soc. Sci. Tec. 64, 2–17. doi: 10.1002/asi.22784

Li, D. (2017). Expertise versus bias in evaluation: evidence from the NIH. Am. Econ. J.-Appl. Econ. 9, 60–92. doi: 10.1257/app.20150421

Li, Q., Maggitti, P. G., Smith, K. G., Tesluk, P. E., and Katila, R. (2013). Top management attention to innovation: the role of search selection and intensity in new product introductions. Acad. Manag. J. 56, 893–916. doi: 10.5465/amj.2010.0844

Mehta, R., Hoegg, J., and Chakravarti, A. (2011). Knowing too much: expertise-induced false recall effects in product comparison. J. Consum. Res. 38, 535–554. doi: 10.1086/659380

Milkman, K. L., Chugh, D., and Bazerman, M. H. (2009). How can decision making be improved? Perspect. Psychol. Sci. 4, 283–379. doi: 10.1111/j.1745-6924.2009.01142.x

Mishra, J., Allen, D., and Pearman, A. (2015). Information seeking, use, and decision making. J. Assoc. Inf. Sci. Tech. 66, 662–673. doi: 10.1002/asi.23204

Wood, N. L., and Highhouse, S. (2014). Do self-reported decision styles relate with others’ impressions of decision quality? Pers. Individ. Differ. 70, 224–228. doi: 10.1016/j.paid.2014.06.036

Mueller, J. S., Melwani, S., and Goncalo, J. A. (2012). The bias against creativity: why people desire but reject creative ideas. Psychol. Sci. 23, 13–17. doi: 10.1177/0956797611421018

Nooteboom, B., Haverbeke, W. V., Duysters, G., Gilsing, V., and Oord, A. V. D. (2007). Optimal cognitive distance and absorptive capacity. Res. Policy 36, 1016–1034. doi: 10.1016/j.respol.2007.04.003

North, J. S., Williams, A. M., Hodges, N., Ward, P., and Ericsson, K. A. (2009). Perceiving patterns in dynamic action sequences: investigating the processes underpinning stimulus recognition and anticipation skill. Appl. Cogn. Psychol. 23, 878–894. doi: 10.1002/acp.1581

Olbrecht, M., and Bornmann, L. (2010). Panel peer review of grant applications: what do we know from research in social psychology on judgment and decision-making in groups? Res. Evaluat. 19, 293–304. doi: 10.3152/095820210X12809191250762

Ottati, V., Price, E. D., Wilson, C., and Sumaktoyo, N. (2015). When self-perceptions of expertise increase closed-minded cognition: The earned dogmatism effect. J. Exp. Soc. Psychol. 61, 131–138. doi: 10.1016/j.jesp.2015.08.003

Scheiter, K., Ackerman, R., and Hoogerheide, V. (2020). Looking at mental effort appraisals through a metacognitive lens: are they biased? Educ. Psychol. Rev. 32, 1003–1027. doi: 10.1007/s10648-020-09555-9

Schroter, S., Black, N., Evans, S., Carpenter, J., Godlee, F., and Smith, R. (2004). Effects of training on quality of peer review: randomised controlled trial. BMJ 328, 673–677. doi: 10.1136/bmj.38023.700775.AE

Schroter, S., Black, N., Evans, S., Godlee, F., Osorio, L., and Smith, R. (2008). What errors do peer reviewers detect, and does training improve their ability to detect them? J. R. Soc. Med. 101, 507–514. doi: 10.1258/jrsm.2008.080062

Schuett, F. (2013). Patent quality and incentives at the patent office. Rand J. Econ. 44, 313–336. doi: 10.1111/1756-2171.12021

Tamblyn, R., Girard, N., Qian, C. J., and Hanley, J. (2018). Assessment of potential bias in research grant peer review in Canada. CMAJ 190, E489–E499. doi: 10.1503/cmaj.170901

Keywords: cognitive proximity, decision-making quality, evaluation experience, evaluation effort, funding allocation

Citation: Zhang C, Zhang Z, Yang D, Ashourizadeh S and Li L (2021) When Cognitive Proximity Leads to Higher Evaluation Decision Quality: A Study of Public Funding Allocation. Front. Psychol. 12:697989. doi: 10.3389/fpsyg.2021.697989

Edited by:

Chris Baber, University of Birmingham, United Kingdom

Reviewed by:

Surapati Pramanik, Nandalal Ghosh B.T. College, India

Anja Kühnel, Medical School Berlin, Germany

Copyright © 2021 Zhang, Zhang, Yang, Ashourizadeh, and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Daozhou Yang
