- 1Seoul School of Integrated Sciences and Technologies, Seoul, South Korea

- 2Business School Lausanne, Chavannes-près-Renens, Switzerland

- 3The Institute for Industrial Policy Studies, Seoul, South Korea

The quality of sales processes is crucial in the automotive industry and directly related to the firm’s competitive advantage and financial success. Sales training is the most prevalent intervention to guarantee quality and productivity. Extant literature has attempted to measure training effectiveness adequately, and the Context, Input, Process, and Product evaluation (CIPP) model has been a popular approach. This study endeavored to advance current literature and suggest a novel effectiveness framework, Content, Instructional design, Programmed learning, and Recommendation (CIP-R). The framework was applied to examine three different methodologies (traditional, pure digital, and hybrid training) using 583 instances collected from automotive sales training conducted from 2019 to 2020 in South Korea. The findings advocate the importance of human elements and the role of efficacy and self-determination in generating learning transferability, leading to performance in the digital age.

Introduction

Coronavirus disease 2019 (COVID-19) created a global mechanism that “forced” online learning to be a reasonable alternative to traditional ways of developing people (Lands and Pasha, 2021). HR managers began to rethink development initiatives, providing flexible, personalized online learning opportunities (Li and Lalani, 2020). Training online could comfort the employees, reducing their skill gaps (Zhang et al., 2018) under isolated situations, and global institutions started providing digital workforce development and upskilling programs (Lands and Pasha, 2021).

A skilled workforce is essential when it comes to the sales function. A firm’s economic success depends on the relationships between customers and the employees who act as the interface connecting the company’s value chain to the market (Davenport, 2012). Ever-rising customer expectations for better services and products, together with complex buying and selling processes, put more pressure on businesses and lead them to pay attention to the quality and competencies of their sales organizations (Zoltners et al., 2008; Williams et al., 2017; Arli et al., 2018; Hartmann et al., 2018; Hartmann and Lussier, 2020; Lim, 2020). The automotive industry is the largest sector in overall retail spending (Palandrani, 2020) and a vital customer that plays a crucial role in the industry ecosystem (Mathur and Kidambi, 2012; Dweiri et al., 2016). The quality of sales processes is crucial in auto retail since that is where the customers perceive the brand’s quality (Hoffmeister, 2018). Hence, automakers are expected to focus on human resource development (HRD) in retailing. Sales training is the most prevalent and widespread intervention to guarantee quality and increase productivity (Singh and Venugopal, 2015; Kodwani and Prashar, 2019). Today’s sales organizations proactively adopt digitalized training to enhance efficiency and effectiveness (Zoltners et al., 2021). However, the optimal way of adopting technology in sales training remains uncertain.

Maximizing the effectiveness is the primary goal for any training initiative (Sitzmann and Weinhardt, 2015) and measuring the outcomes is essential to ensure the continuation and improvement of interventions (Kirkpatrick and Kirkpatrick, 2006). The Context, Input, Process, and Product evaluation (CIPP) model is one of the most frequently used frameworks (Adedokun-Shittu and Shittu, 2013; Finney, 2019). However, its limitations, such as time, cost, complexity, and lack of the participants’ voice, hinder practitioners and researchers from adopting the framework despite its advantages (e.g., Robinson, 2002; Tan et al., 2010). Furthermore, defining the training effectiveness, which includes learning transfer and post-training changes, is vital in the existing training literature (Alvarez et al., 2004). Hence, the CIPP model requires further theoretical review to prove itself as a robust and valuable framework (Finney, 2019). In the post-COVID era, more businesses are expected to provide entirely digital or hybrid learning opportunities for their workers, replacing traditional classrooms. It is required to empirically compare and analyze the effectiveness of traditional and technology-enhanced training. The CIPP model requires further effort to reflect the changing trend and theoretical attempt to calculate the “effectiveness” over “evaluation.”

Building on the above discussion, the study seeks to propose a new framework based on the CIPP model, advancing it into a practical, trainee-centered model. Based on the new framework, the study aims to compare the outcomes of three methodologies, namely, traditional, pure digital, and hybrid training, and measure the effectiveness of each method. The study then seeks to analyze the factors that generated the differences in the training outcome. In this respect, the study presents the following research questions: (1) What is the optimal way of measuring training effectiveness in the digital age? (2) How does training effectiveness change as digital technologies are introduced? and (3) What are the factors that affect training effectiveness?

This article is structured as follows. Section 2 provides theoretical background and a literature review related to the research. Section 3 deals with the methodology used in the study, analytical procedure, and details for data collection. Section 4 addresses the study’s results and Section 5 summarizes and concludes the research findings. Section 6 proposes theoretical and practical implications. Finally, Section 7 presents limitations and suggestions for future researchers.

Theoretical background

Training of sales organization

Modern consumers expect extended service and consumer-centric behaviors from salespeople (Lassk et al., 2012). Businesses focus on keeping the quality of the sales by investing in training (Kodwani and Prashar, 2019). Firms strive to meet consumers’ escalating expectations and manage organizational resources to provide quality service (Shabani et al., 2017). Managers expect the training to result in several returns, such as salesforce motivation and sales competence (Kauffeld and Lehmann-Willenbrock, 2010; Panagopoulos et al., 2020), leading to overall performance enhancement and goal achievement (Salas-Vallina et al., 2020). Sales training is the most frequent and universal intervention to improve sales productivity or customer orientation (Singh and Venugopal, 2015; Kodwani and Prashar, 2019). Effective sales training interventions may enhance sales organizations’ knowledge, skill, and performance (Lichtenthal and Tellefsen, 2001; Singh et al., 2015).

The most common challenges that organizations undergo are (1) constant changes in salespeople’s role and definition, (2) emphasis on accountability and reliability of sales function, and (3) reskilling and upskilling of salesforce facing new technologies (Lassk et al., 2012). However, two less explored issues are (1) the understanding of differences in training methodologies and (2) the measurement of training evaluation and effectiveness (e.g., Lupton et al., 1999; Attia et al., 2021). This article discusses these two challenges in the following sections of the literature review, namely, training methodologies and the training evaluation/effectiveness measurements.

Digital technology in a corporate learning context

Using technology in training must conform to the changing environment (Hartmann and Lussier, 2020). Digital transformation of salesforce pursued by firms has been accelerating (Guenzi and Nijssen, 2021) due to the merits, such as elevated efficiency and effectiveness (Zoltners et al., 2021). Online learning is now replacing traditional, instructor-led, and offline settings, becoming a significant corporate learning and development element to upskill employees based on a lower cost (Lands and Pasha, 2021). Advanced technologies adopted in the learning management system provide a flexible learning environment (Traxler, 2018), and technologies such as gamified learning could affect users’ flow and continuous usage (Kim, 2021).

However, a single method (purely online or purely offline) might be challenging to satisfy all learners. Instead, by combining online and offline, learning satisfaction could be increased (Mantyla, 2001). Integrating technology with traditional methods has raised the interest in “Blended learning” (Hrastinski, 2019). Blended learning combines traditional methods with technology-based learning and avoids the demerits of offline and online learning (e.g., Driscoll, 2002; Graham, 2006). At present, the terms blended learning and hybrid learning are interchangeably used (Watson, 2008; Graham, 2009) and are regarded as one of the most suitable modes for training in the digital era (Liu et al., 2020). Consequently, technology-blended learning could lead to best practices for businesses (Singh and Reed, 2001; Driscoll, 2002) since the learners’ cognitive process and the training/teaching strategy might change (Liu et al., 2020).

This study takes the empirical case from a sales training program conducted by Company A in South Korea. The firm is the regional headquarters of a multinational automotive company. The firm’s overall HRD system is shown in Figure 1. The sales training program, which is the main interest of this study, is named sales consultant training and education program (STEP). The company had difficulties conducting face-to-face sales training during COVID-19. Consequently, the firm decided to implement salesforce training using digital technology. In this respect, two alternatives, namely, structured pure online training and hybrid training that mixed offline and digital, were developed and applied.

Training transfer, evaluation, and effectiveness

Measurement of training outcome contributes to the continuity of training and program improvement (Kirkpatrick and Kirkpatrick, 2006). Training evaluation is an act of evaluating the value or quality of training elements systematically and scientifically. The expectancy theory by Vroom (1964) supports the views of prior researchers that the training’s perceived value or trainees’ beliefs (valence) about the training outcome would be crucial to training success (Colquitt et al., 2000). If the trainees get motivated, their job performance may increase with the feeling of accomplishment, which may also provide the potential for their future growth (e.g., Hanaysha and Tahir, 2016; Ibrahim et al., 2017; Wolor et al., 2020).

One of the most widely accepted performance indicators is the transfer performance of learning (Leach and Liu, 2003). Changes might occur when the employees transfer what was learned to their workplace (e.g., Ployhart and Hale, 2014). Researchers have insisted that training efforts will not bring the desired outcomes if a proper transfer does not follow (e.g., Wilson et al., 2002). Extant training literature has argued that learning transfer is a concern for organizations (Friedman and Ronen, 2015). Learning transferability has long been a research topic in educational psychology (Hung, 2013). “Learning transfer” can be roughly defined as adapting acquired knowledge, in changed or modified forms, to diverse situations in order to complete a given task or solve problems based on cognitive processes (Hung, 2013). Training evaluation and training effectiveness have drawn significant recognition in the literature (e.g., Broad and Newstrom, 1992; Kraiger, 2002; Noe and Colquitt, 2002; Holton, 2003). Although the two terms, training evaluation and training effectiveness, are often used interchangeably, they are distinct concepts (Alvarez et al., 2004). Training evaluation means the appropriateness, relevance, and usefulness measured by the reaction to training content and design items. It is more of a “measurement technique” to check if the training programs meet the goals as planned (Alvarez et al., 2004). In contrast, training effectiveness investigates the variables that might affect training outcomes throughout the process (pre/during/post) and could be estimated by transfer measures and post-training attitudes (Alvarez et al., 2004). Several conceptual models (e.g., Tannenbaum et al., 1993; Holton, 1996) were suggested to measure training effectiveness. In summary, training evaluation is a methodological approach for measuring training outcomes, while training effectiveness is a theoretical effort to understand the causalities.

However, the studies dealing with the various effects of variables and timewise training outcome comparison are limited (Grossman and Salas, 2011; Massenberg et al., 2017). As previously discussed, the evaluation of extended training intervention adopting technology should be able to (1) measure transfer performance adequately and (2) explain training effectiveness that could capture the states of trainees.

Advancing the CIPP evaluation model

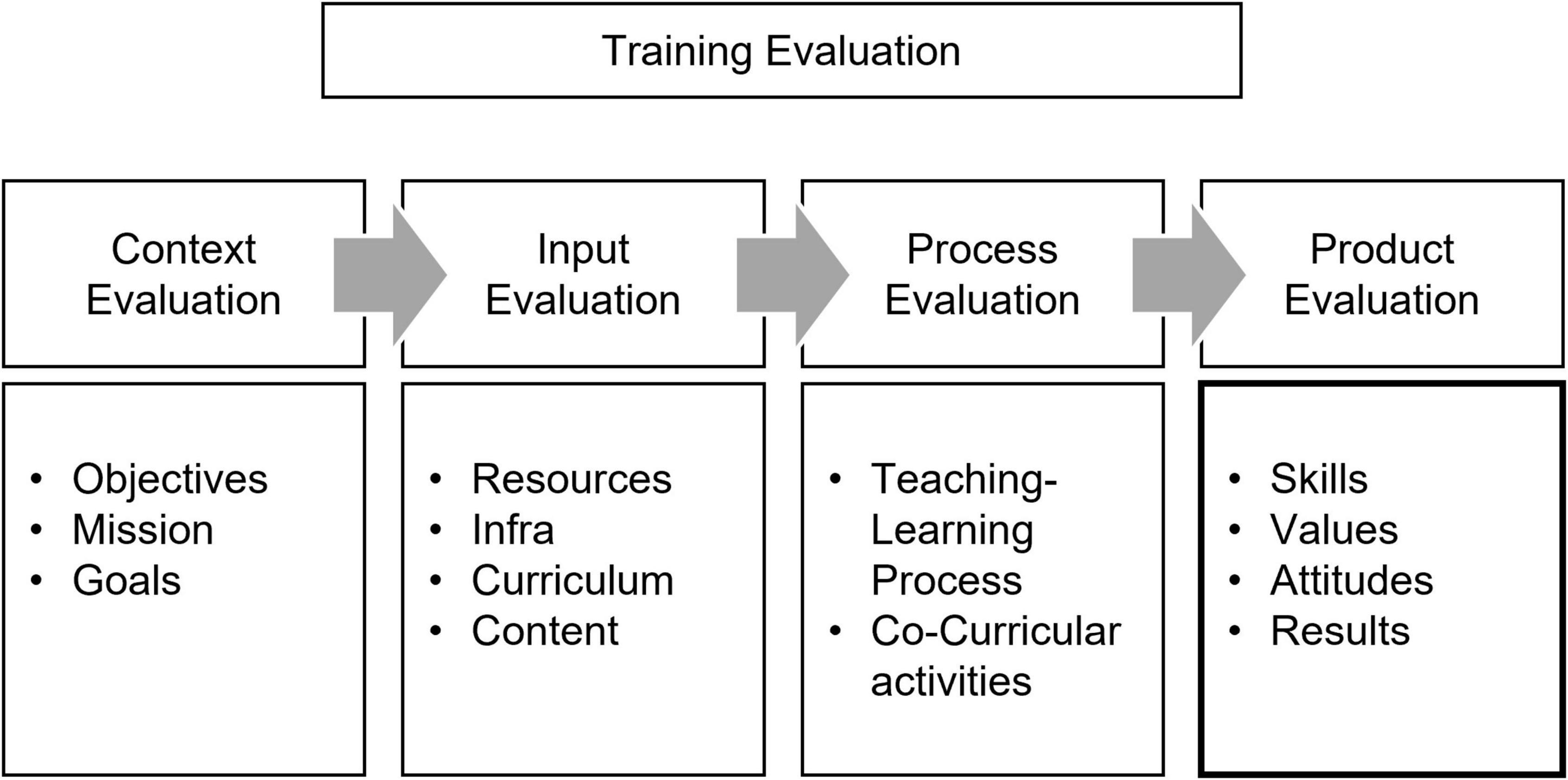

Researchers have tried to extend the existing models to measure the training outcome in the literature, and the CIPP is one of the most frequently referenced models (e.g., Adedokun-Shittu and Shittu, 2013; Finney, 2019). CIPP is a comprehensive framework for evaluation aimed at long-term, sustainable interventions (Stufflebeam, 2004), standing for context, input, process, and product evaluation. CIPP reviews four areas of training (Figure 2). Context evaluation focuses on the overall objectives. Input evaluation concentrates on resources (infra, curriculum, content, and material), while process evaluation sees the teaching–learning process or other activities. The attitudinal and behavioral changes are regarded as the outcomes during the product evaluation. Generally, the CIPP is often sequentially applied following the orders of context–input–process–product assessment to confirm the accountability of a program and review the quality of each step (Stufflebeam, 2004). Using CIPP, the evaluators could grasp how well and effectively the training outcomes are achieved.

However, there are several limitations to the CIPP model. First, although it is a robust model to measure training outcomes, several situations would not allow smooth evaluation due to the complicated dynamics of stakeholders (Robinson, 2002; Tan et al., 2010). Second, it requires tremendous effort and resources, leading to slow evaluation. Third, CIPP is a framework for the evaluator, not the trainee, and might lack actual feedback from the trainees unless adequately applied (Tan et al., 2010). Finally, as pointed out in the previous section, the dynamic aspect of training “effectiveness” should be considered (i.e., Alvarez et al., 2004), including transfer measures and post-training attitudes. The CIPP model requires further updates and theoretical concerns to prove itself as a more robust framework.

This study suggests a new conceptual framework based on the CIPP model, attempting to close the theoretical gaps unveiled above. The new framework is then used to collect and analyze empirical data. The following section presents this effectiveness model, which (1) is more concise, (2) enables practical evaluation, (3) realizes trainee-centered evaluation, and (4) can measure learning transferability.

Methods

Analytical procedure

The study includes the following analytical procedures. First, this study suggests a novel CIP-R model that overcomes the limitations of the existing CIPP model. Second, evaluation data for traditional/pure digital/hybrid training methods were collected from the sales training sessions administered in 2019 and 2020. Third, frequency analysis of the collected data and descriptive statistics for each variable are presented. Fourth, the three training methods are comparatively analyzed, and ANOVA confirms the statistically significant differences. Fifth, factors affecting the learning transfer are identified and compared by general linear model analysis. For all the statistical analysis, the jamovi ver. 1.8.2 software (jamovi, 2021) was used.

The CIP-R framework

The primary goal of this article was to analyze and compare the different modes of sales training enabled by digital technology and examine the factors affecting training effectiveness. As discussed in the “Advancing the CIPP evaluation model” section, the article adopted CIPP as the base framework and attempted to provide a new effectiveness model, further improving its limitations.

For decades, organizations have shifted from satisfaction to next-level constructs to measure training effectiveness (Mattox, 2013). Kirkpatrick (1998) argued that organizations should avoid using one simple “satisfaction measure” to measure training’s value. The rest of the stages in Kirkpatrick’s model focus on more critical aspects of learning transfer, which is training effectiveness (Mattox, 2013). Researchers such as Phillips (2012) and Brinkerhoff (2003) also advocated the necessity of “beyond satisfaction” measures in the post-training stages.

Satisfaction and loyalty (recommendation) are two concepts that have become crucial constructs in modern management (Kristensen and Eskildsen, 2011). The positive causal effect of satisfaction on loyalty has been demonstrated in the literature (e.g., Tuu and Olsen, 2010). Tracking promoters offers organizations a compelling way to measure loyalty (Reichheld, 2003), and promoters who recommend services or products to others are loyal enthusiasts (Reichheld, 2006). The basic idea of focusing on recommendations is borrowed from the net promoter score (NPS) concept by Reichheld (2003). NPS measures “recommendation” through customers’ ratings on a single question asking whether they would “recommend” the service or product they experienced to people close to them, such as friends, family, or colleagues, using a 0- to 10-point scale. The rating, which is believed to represent loyalty, divides the respondents into three groups, namely, promoters (9–10), passives (7–8), and detractors (0–6). Considering a recommendation factor in training is now commonplace across organizational units, and it is frequently used as a valuable predictor and effectiveness measure for HR practices (Mattox, 2013) since it is not a driver but an outcome of training. If trainees learn new skills and knowledge, they might want to apply the learning to their jobs and, when successful, encourage others to do the same. In this respect, recommending a training program could be a strong predictor of learning transfer.
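The NPS grouping described above can be illustrated with a minimal Python sketch. It assumes the standard NPS definition (percentage of promoters minus percentage of detractors) on a 0- to 10-point scale; the function names and the sample ratings are hypothetical and are not data from this study, which rates all items, including recommendation, on a 5-point Likert scale.

```python
from collections import Counter


def nps_group(rating: int) -> str:
    """Map a 0-10 recommendation rating to its NPS group."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    if rating >= 9:
        return "promoter"
    if rating >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(ratings) -> float:
    """Return the NPS: percentage of promoters minus percentage of detractors."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    counts = Counter(nps_group(r) for r in ratings)
    return 100.0 * (counts["promoter"] - counts["detractor"]) / len(ratings)


# Hypothetical ratings for illustration only (not data from the study).
sample_ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(sample_ratings))
```

In this illustrative sample, five promoters and three detractors out of ten respondents give a score of 20; passives count toward the denominator only.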

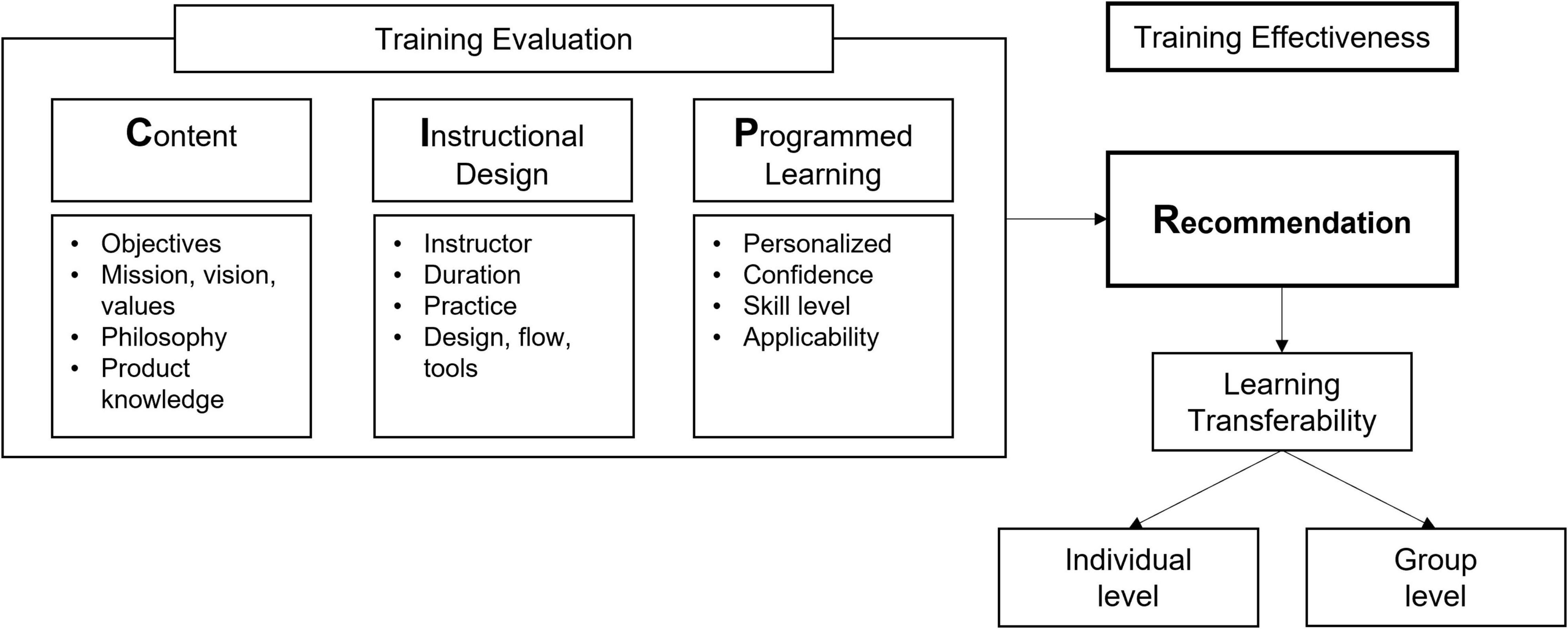

Based on the above discussions, the existing CIPP model was reviewed and redesigned regarding the following two issues. First, the new framework should endorse digital training’s particular characteristics, enabling an apple-to-apple comparison. In this respect, the new framework excluded spatial factors to reflect the digital training’s characteristics and offline-only elements from the measurements. Second, ambiguous and almost-duplicative factors should be removed or simplified for clarity. For example, excessively detailed measures for context (e.g., objectives, goals) and input (e.g., content, curriculum) evaluation in CIPP may confuse the trainees while evaluating. As a result, a new training effectiveness framework, the CIP-R model, was presented (Figure 3). CIP-R is an acronym for content, instructional design, programmed learning, and recommendation. Recommendation measures loyalty. It was adopted for learning transferability toward individual and group levels, which is the key to training effectiveness. As discussed in the “Theoretical background” section, the C-I-P could be viewed as the training evaluation, and the R (recommendation) could be understood as a training effectiveness measurement.

Data collection

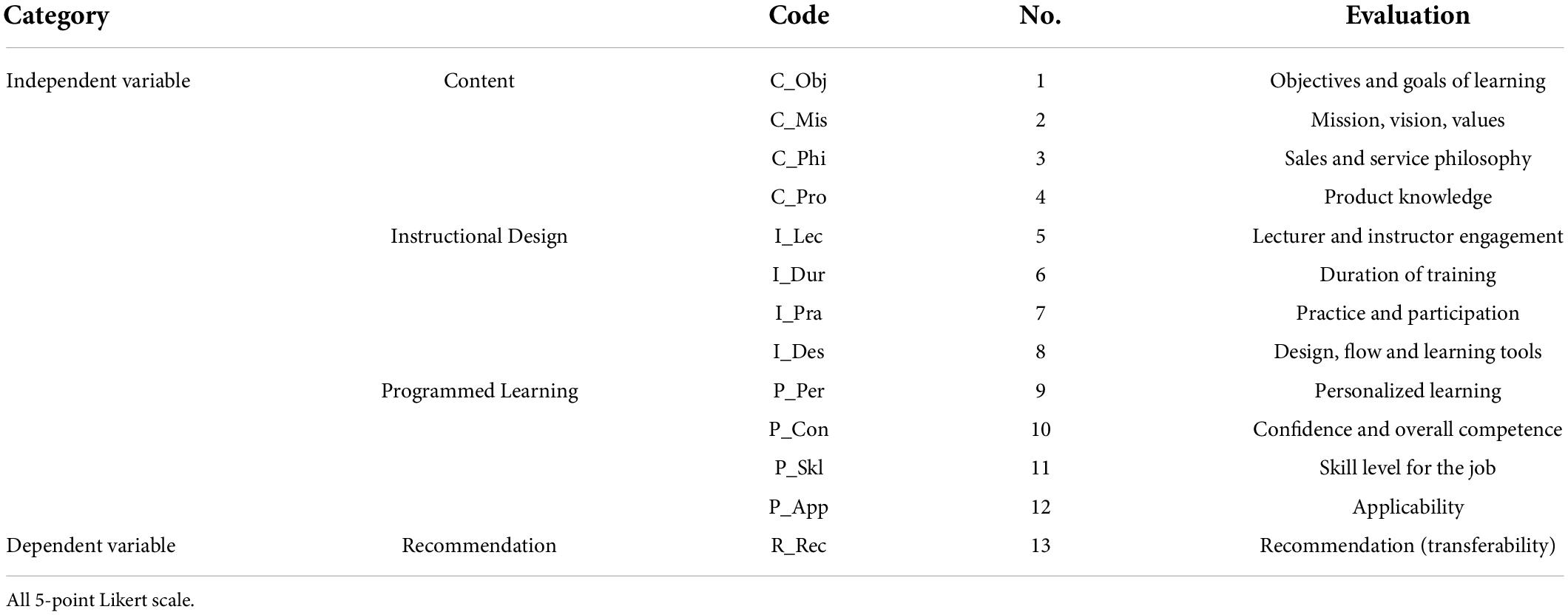

As explained in the “Digital technology in a corporate learning context” section, the study takes the empirical data from STEP (sales consultant training and education program) of Company A, based in South Korea, an importer of global premium and mass automobile brands. Three HRD experts in automotive sales training and two Ph.D. researchers participated in the analytical procedure. Sales training data from March 2019 to October 2020 were used. Trainees assessed all training sessions through an online survey. To ensure unbiased evaluation, the survey did not ask for personal information. The collected survey results were sent to the firm’s training experience management system. The measurements of the CIP-R framework are shown in Table 1, and a 5-point Likert scale was applied to all items. STEP is a structured program designed to maximize the competence of sales consultants and aims to pursue customer delight through quality sales. The training is a 3-day, off-site, traditional classroom-type program. Due to the suspension of training during COVID-19, the company shifted to pure digital training (from April to June 2020) and then to hybrid training that mixed online and offline elements (from July to October 2020).

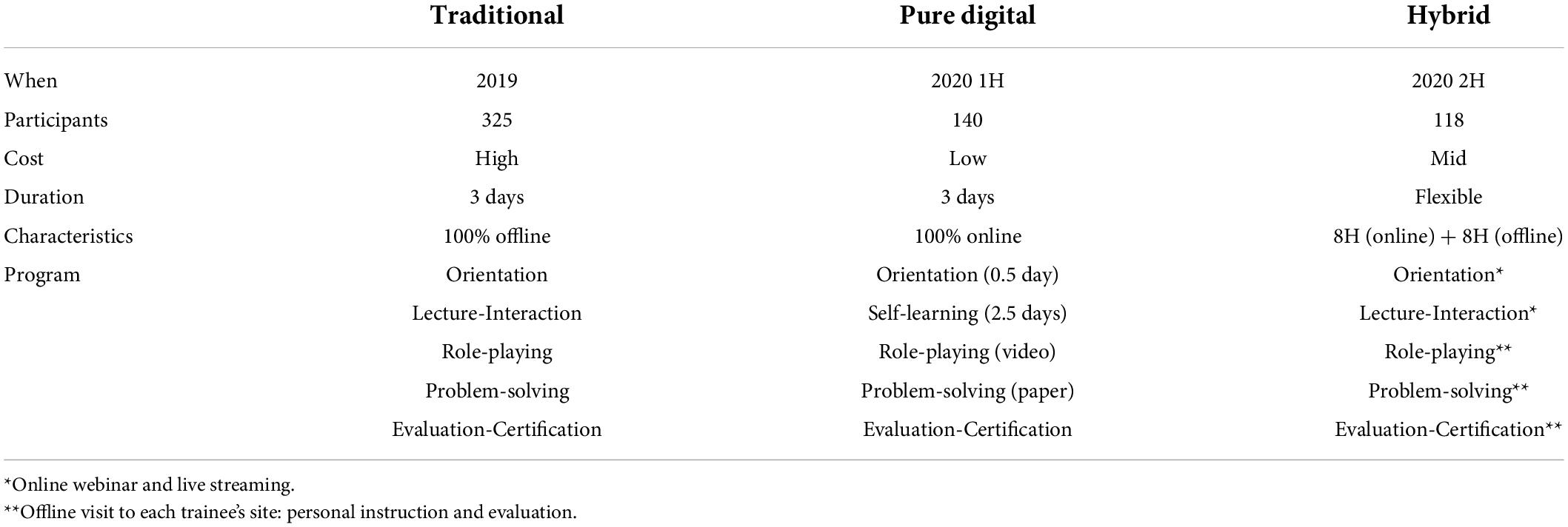

In the traditional training conducted in 2019, 325 trainees participated in the 3-day session. Offline-based lectures, discussions, role-playing, and problem-solving sessions were implemented. A total of 140 sales executives participated in the pure digital training. Lectures and interactions were not provided, and the course depended on the learner’s self-directedness. Hybrid training was a well-blended version of offline and online. A total of 118 employees participated, and real-time live streaming sessions were provided. After the online session, instructors visited the learners’ offices to check their competencies and conducted role-play and problem-solving evaluations. First, the instructors handed out various scenarios, including multiple car purchase scenarios for customers with differentiated needs. Second, they evaluated each personnel based on structured measurement scales and checklists to assess sales consultants’ capabilities expected to be enhanced throughout the prior online learning sessions.

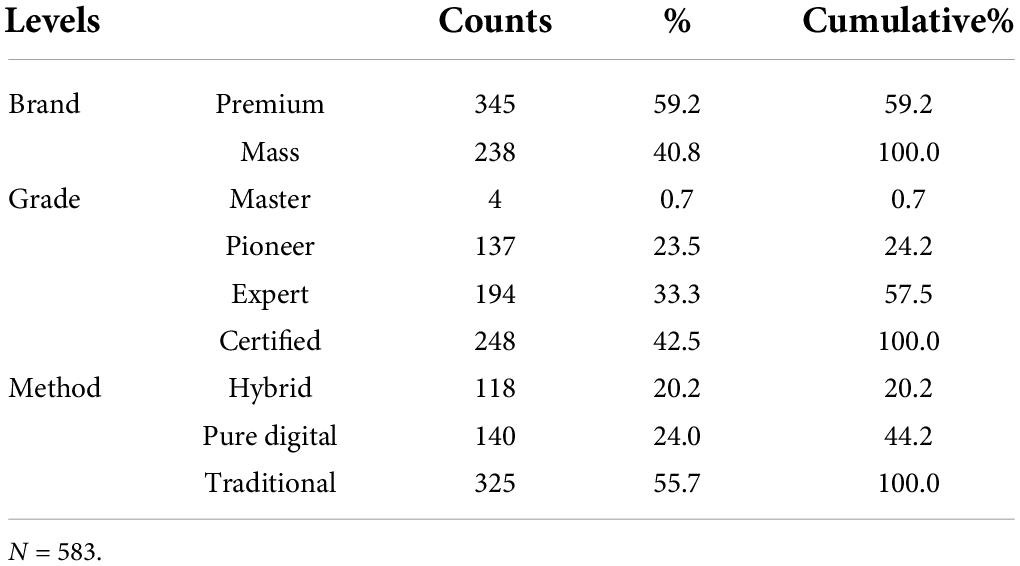

Although the three methods differed in their implementation, there was no significant difference in each course’s learning objectives and curriculum. The traditional method was the highest in terms of cost, the hybrid type was medium, and the pure digital training was the lowest. The details of the three training modes and the frequency table are presented in Tables 2 and 3. By brand, 59.2% of respondents were premium brand salespeople and 40.8% were mass brand salespeople. By grade, respondents were certified (42.5%), expert (33.3%), pioneer (23.5%), or master (0.7%). By training method, respondents attended traditional (55.7%), pure digital (24.0%), or hybrid (20.2%) training.

Results

Outcome comparison of three training methods

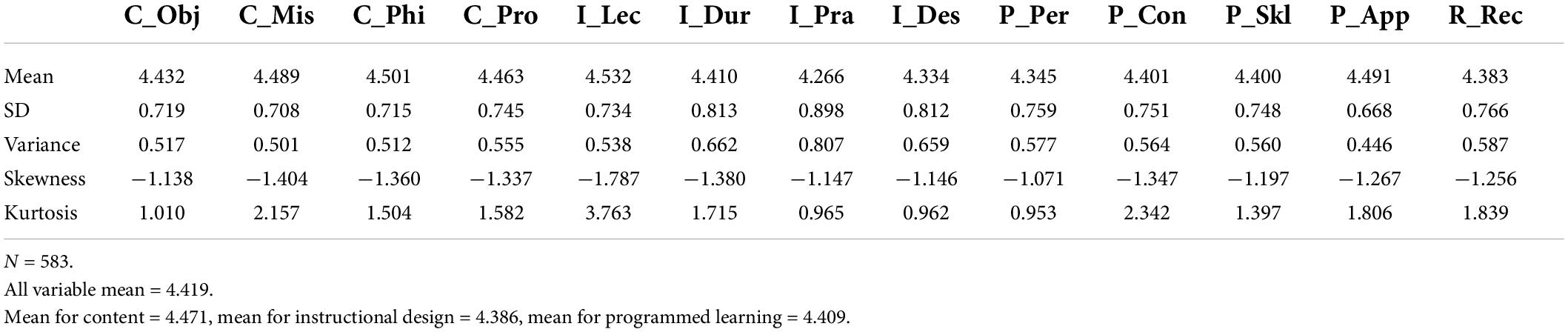

To compare the effectiveness of the three training methodologies, the evaluation results by training participants were analyzed. A total of 583 data instances were used for the analysis. The basic summary statistics results are as follows (Table 4). The mean value of the items was 4.266–4.501. The item with the highest value was the “Sales and service philosophy” item in content evaluation, and the lowest item was the “Practice and participation” item in instructional design. The results indicate that the training participants were relatively satisfied with the training content delivering the firm’s philosophy and were less content with participatory and practical learning settings. The mean of all variables was 4.419. The average values of each dimension of content, instructional design, and programmed learning were 4.471, 4.386, and 4.409, respectively, and it was found that the content dimension received relatively higher ratings than the other three dimensions.

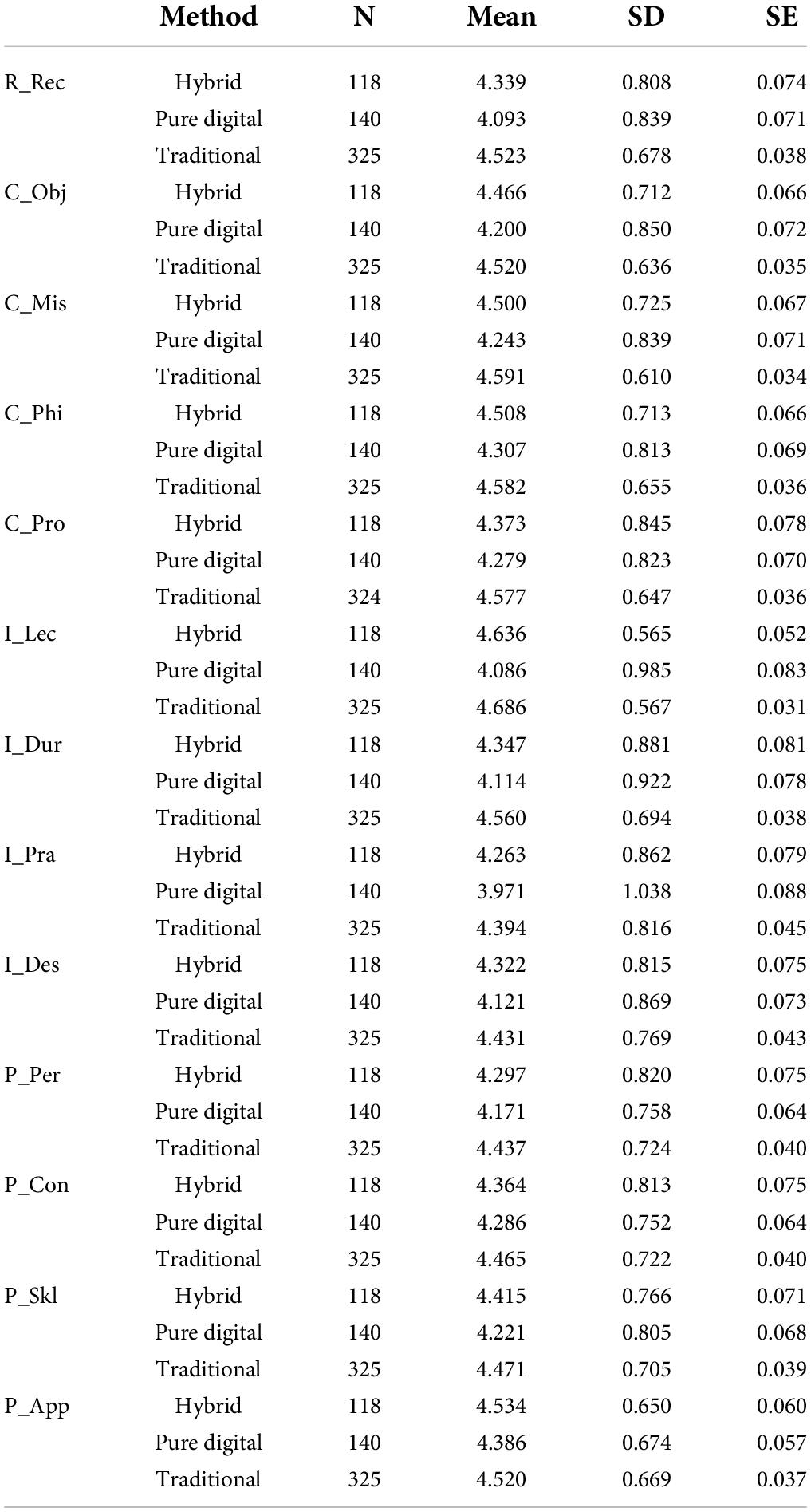

Then, all variables were divided by three training methodologies, and group statistics were derived (Table 5). By comparing the mean value of each item, it was confirmed in which area the differences in the training methodology developed significantly. As for the “recommendation” item that indicates training effectiveness, the traditional method appeared to be the highest (4.523), and the pure digital method was the lowest (4.093), while the hybrid method was in the middle (4.339). In the first half of 2020, when all the training interventions shifted from traditional to pure digital due to COVID-19, the item where the trainees’ rating decreased the most was the “Lecturer and instructor engagement” in the instructional design dimension (traditional = 4.686, pure digital = 4.086, difference = −0.600). The second highly impacted item was the “Duration of training” in the same dimension, showing a steep decrease (traditional = 4.560, pure digital = 4.114, difference = −0.446). The item that showed the slightest change was the “applicability” item in the programmed learning dimension (traditional = 4.520, pure digital = 4.386, difference = −0.134). In the second half of 2020, training shifted from pure digital to the hybrid method, and the overall ratings were improved. The “Lecturer and instructor engagement” item in the instructional design dimension indicated the most improvement (pure digital = 4.086, hybrid = 4.636, difference = + 0.550). The next most improved area was the “Practice and participation” in the instructional design dimension (pure digital = 3.971, hybrid = 4.263, difference = + 0.292). The item with the most negligible difference was “Confidence and overall competence” in the programmed learning dimension (pure digital = 4.286, hybrid = 4.364, difference = + 0.078).
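The shifts quoted above are simple differences of group means. As a minimal sketch, the following Python snippet recomputes them from the item means reported in this paragraph; `None` marks values that the text does not quote, and the dictionary layout and the helper name `shift` are assumptions made for illustration.

```python
# Item means quoted in the text (Table 5): (traditional, pure digital, hybrid).
# None marks a value that is not quoted in the paragraph above.
reported = {
    "Recommendation": (4.523, 4.093, 4.339),
    "Lecturer and instructor engagement": (4.686, 4.086, 4.636),
    "Duration of training": (4.560, 4.114, None),
    "Applicability": (4.520, 4.386, None),
    "Practice and participation": (None, 3.971, 4.263),
    "Confidence and overall competence": (None, 4.286, 4.364),
}


def shift(before, after):
    """Return after minus before, rounded to 3 decimals, or None if a value is missing."""
    if before is None or after is None:
        return None
    return round(after - before, 3)


for item, (traditional, digital, hybrid) in reported.items():
    print(item, shift(traditional, digital), shift(digital, hybrid))
```

The first number printed for each item is the change from traditional to pure digital, and the second is the change from pure digital to hybrid.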

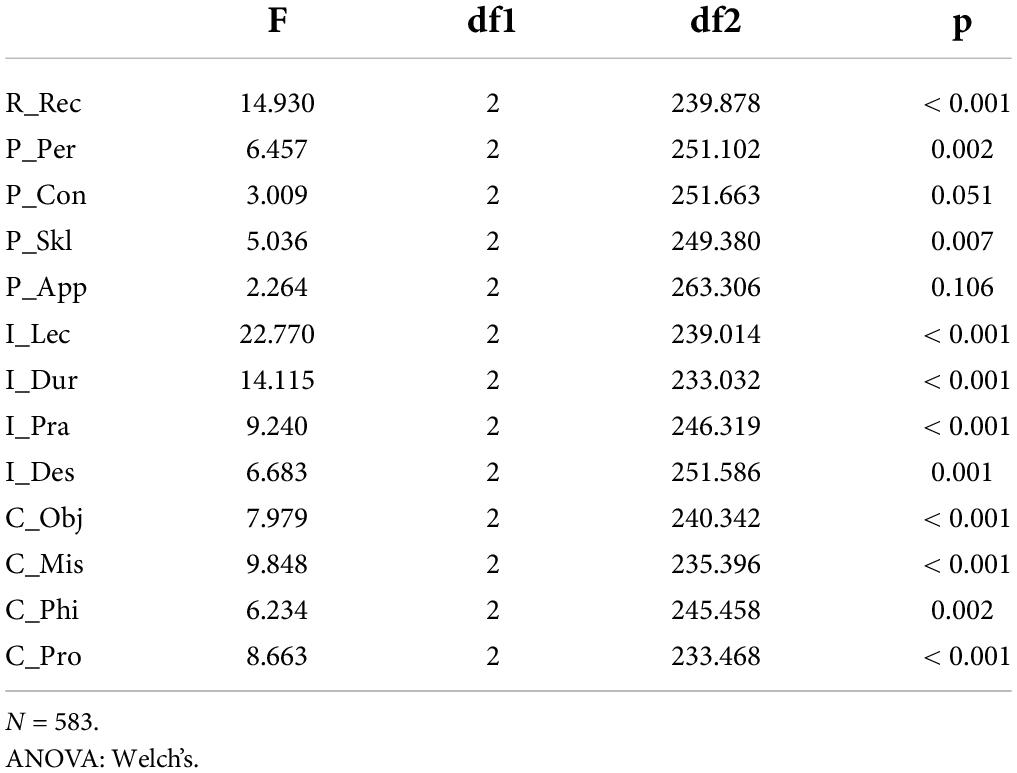

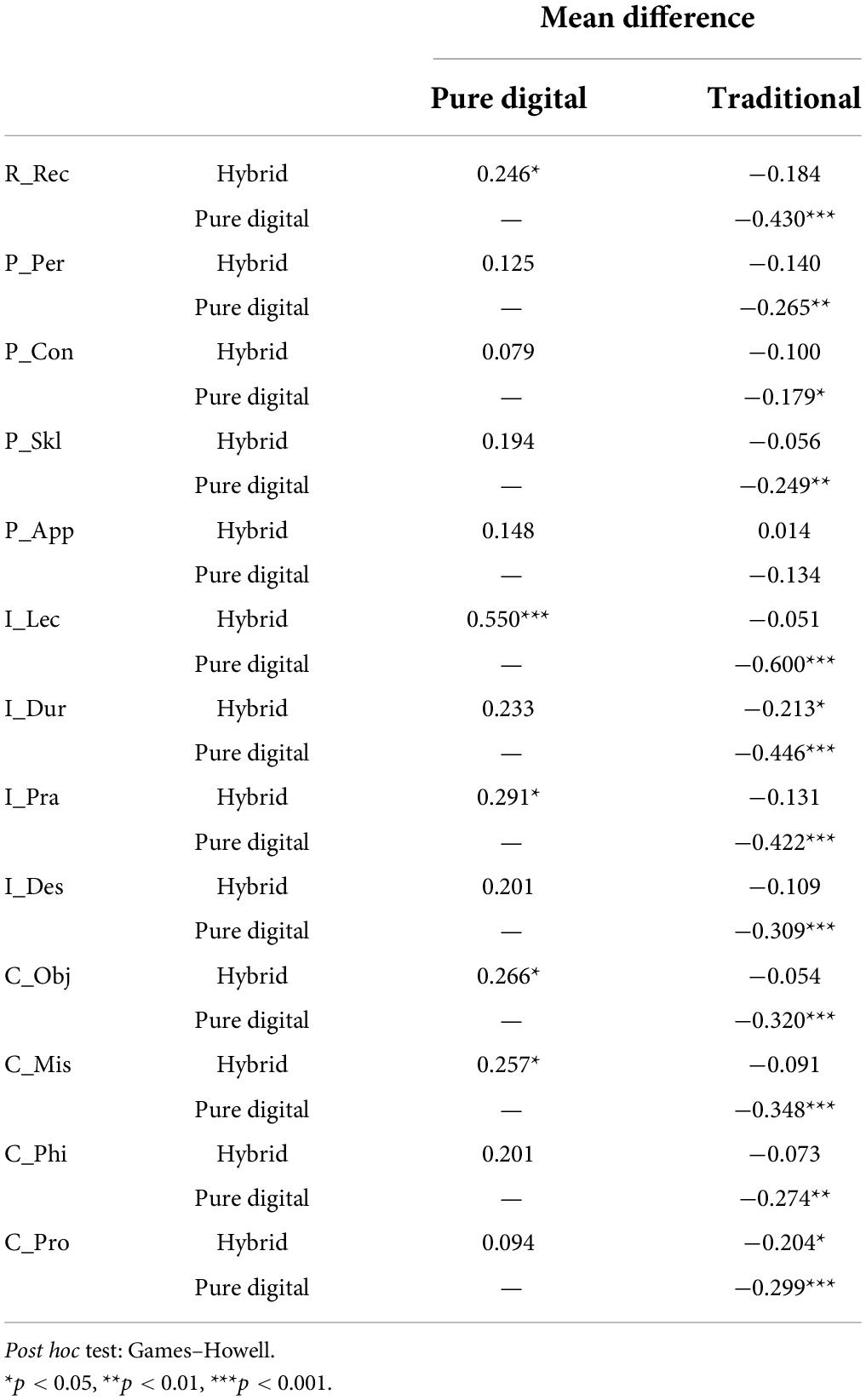

Finally, an ANOVA was conducted for each variable. The result displayed that there is a statistically significant difference stemming from training methodology in all items except for two, “Confidence and overall competence” (F = 3.009, p > 0.05) and “Applicability” (F = 2.264, p > 0.05) in the programmed learning dimension (Table 6). Additionally, post hoc test results were provided to check the significant mean differences found in the variables based on the training methodology (Table 7).
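For readers unfamiliar with the test, the F statistic of a one-way ANOVA is the ratio of the between-group mean square to the within-group mean square. The sketch below shows that computation in plain Python; the study itself used jamovi, and the ratings here are hypothetical values invented for illustration, not survey data.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over lists of ratings."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 5-point ratings for one item (illustration only).
traditional = [5, 5, 4, 5, 4]
pure_digital = [4, 3, 4, 4, 3]
hybrid = [5, 4, 4, 5, 4]
print(one_way_anova_f([traditional, pure_digital, hybrid]))
```

With three groups and 583 complete responses, the degrees of freedom would be 2 and 580; the p-value then follows from the F distribution, which a statistics package such as jamovi reports directly.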

Factors affecting training effectiveness

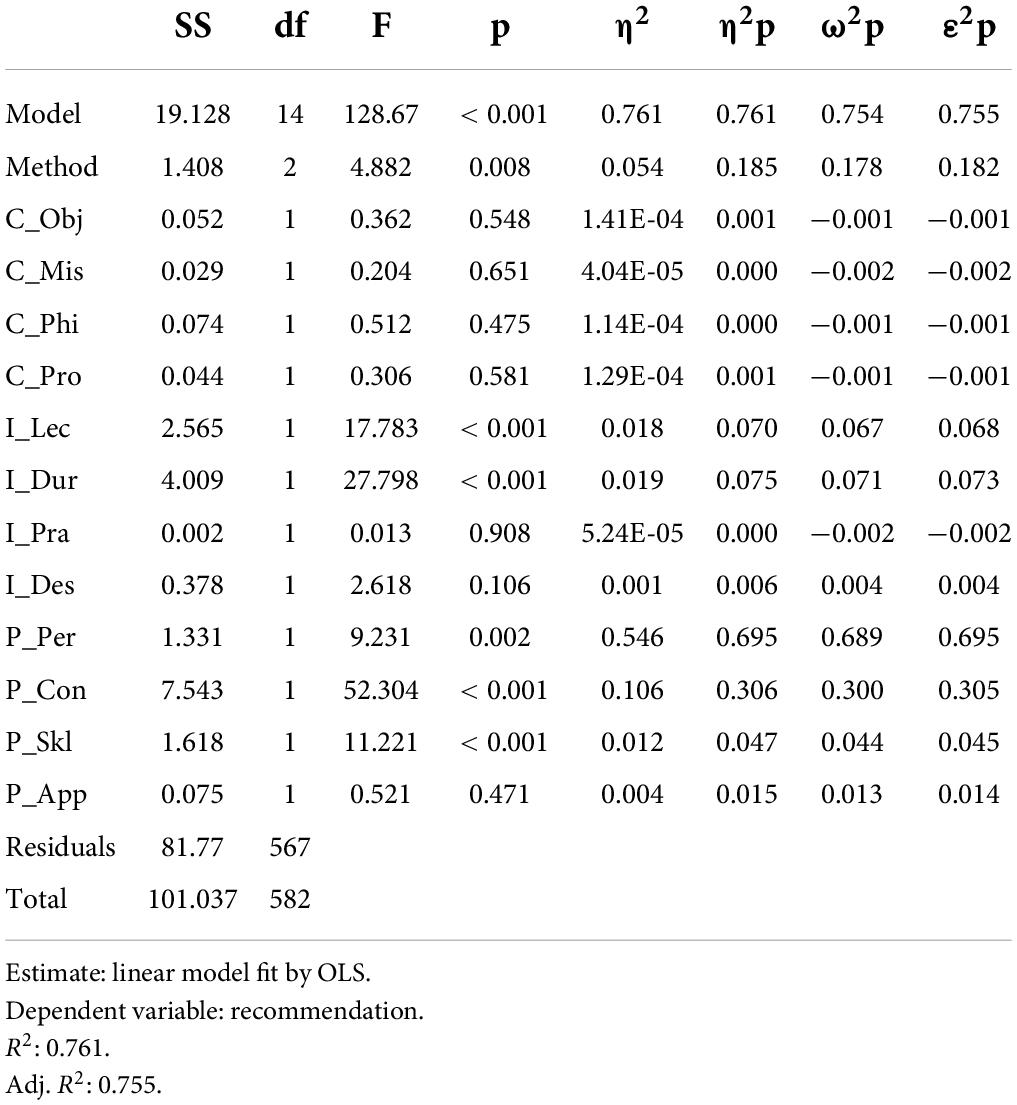

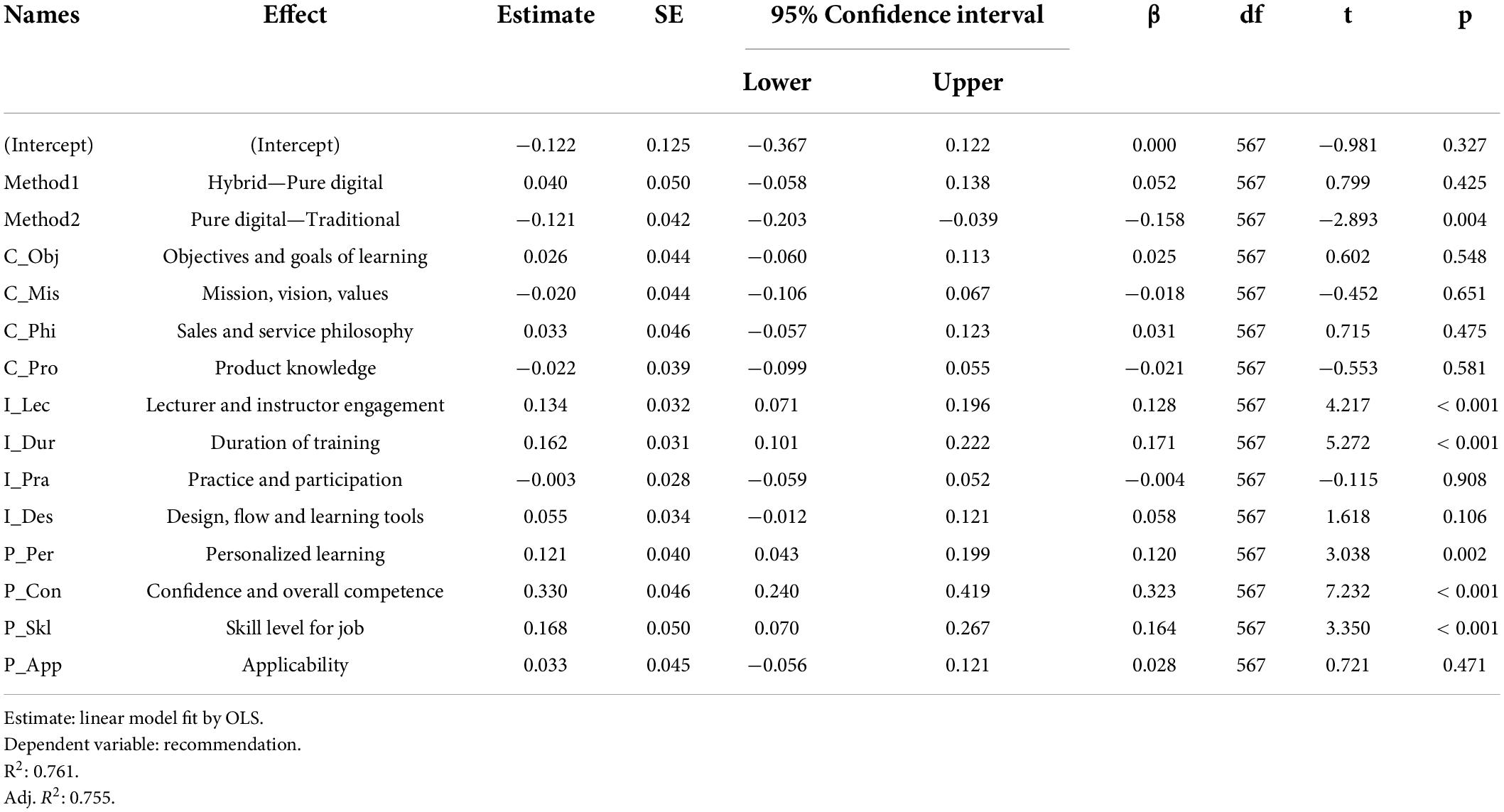

Then, the study verified the results of the ANOVA omnibus test and the fixed-effects parameter estimates result (Gallucci, 2019) to confirm the variables’ effect sizes on training effectiveness and the coefficient for each term (Tables 8, 9). The three sales training methods—traditional, pure digital, and hybrid—showed statistically significant differences (F = 4.882, p < 0.01). The result confirmed a statistically significant difference between the pure digital method and the traditional training effectiveness (B = −0.121, p < 0.01). The difference between the hybrid training and the pure digital method was not statistically significant (B = 0.040, p > 0.05). The existence of five factors affecting the training effectiveness (recommendation) was confirmed. “Lecturer and instructor engagement” (F = 17.783, B = 0.134, p < 0.001), “Duration of training” (F = 27.798, B = 0.162, p < 0.001) in instructional design dimension and “Personalized learning” (F = 9.231, B = 0.121, p < 0.01), “Confidence and overall competence” (F = 52.304, B = 0.330, p < 0.001), and “Skill level for the job” (F = 11.221, B = 0.168, p < 0.001) in programmed learning dimension confirmed the clear difference by methodology and appeared to affect the dependent variable in a statistically significant way.

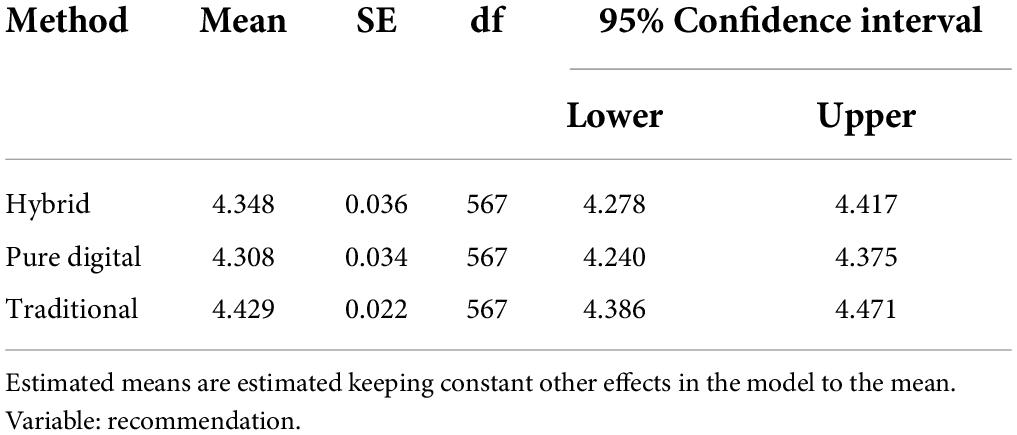

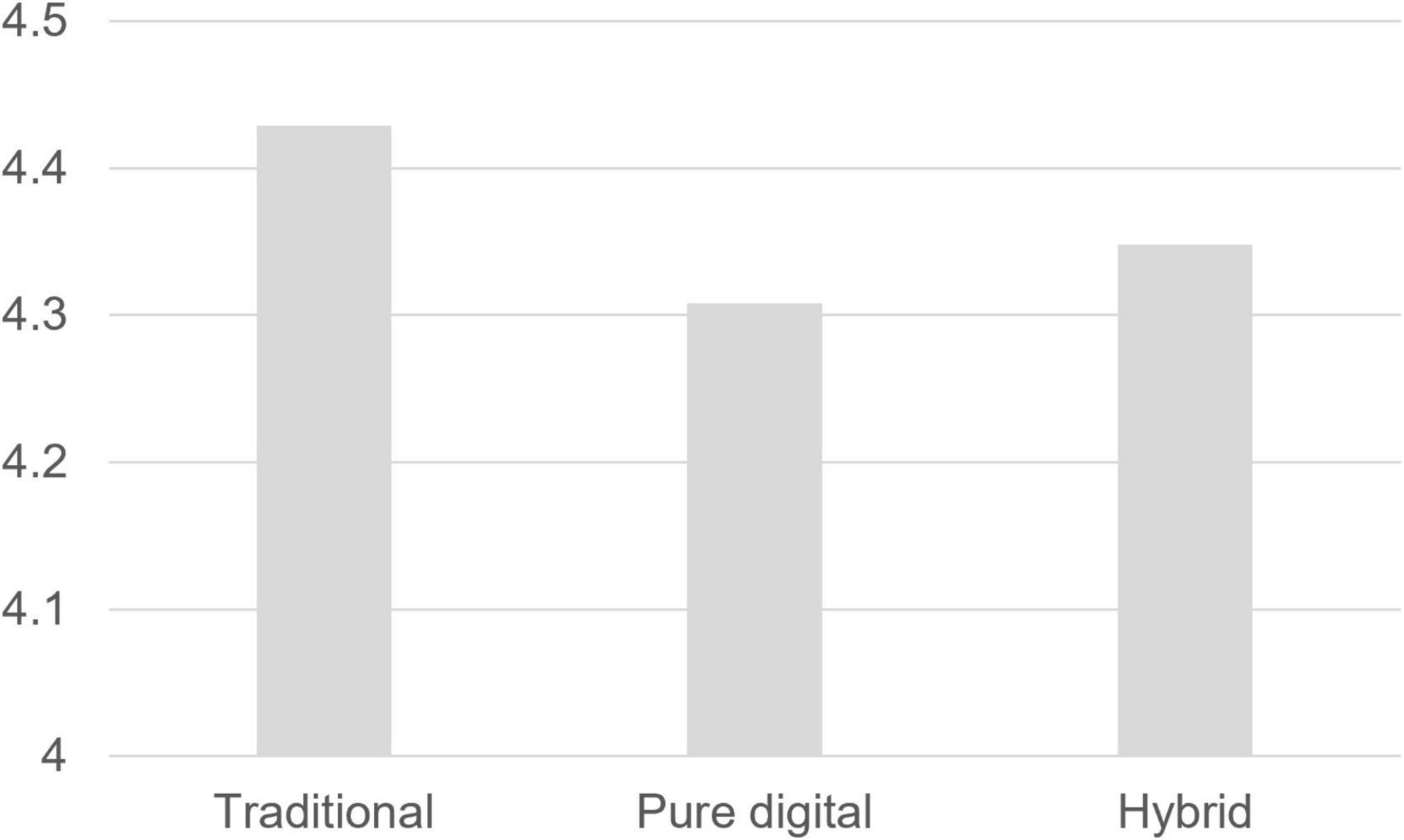

Finally, estimated marginal means of the dependent variable were calculated and compared for each training method to compare training effectiveness, reflecting the influence of each term included in the model. The result indicated that the traditional method has the highest effectiveness (mean = 4.429). The effectiveness decreased in the pure digital method (mean = 4.308) and then recovered after shifting to the hybrid training method that blended both (mean = 4.348). The result is presented in Table 10 and Figure 4.
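As a quick consistency check, the pairwise contrasts between the estimated marginal means can be recomputed from the three values quoted above. The sketch below is illustrative only; the dictionary and function names are assumptions, and it does not reproduce the underlying general linear model.

```python
# Estimated marginal means of "recommendation" quoted in the text (Table 10).
emm = {"traditional": 4.429, "pure digital": 4.308, "hybrid": 4.348}


def contrast(a: str, b: str) -> float:
    """Return the difference emm[a] - emm[b], rounded to 3 decimals."""
    return round(emm[a] - emm[b], 3)


drop = contrast("pure digital", "traditional")   # shift to pure digital
recovery = contrast("hybrid", "pure digital")    # shift to hybrid
remaining_gap = contrast("hybrid", "traditional")

print(drop, recovery, remaining_gap)
# Share of the drop that the hybrid method regained.
print(round(recovery / abs(drop), 2))
```

The first two contrasts match the coefficients reported for the training method term (B = −0.121 and B = 0.040). On these figures, the hybrid method regained roughly one third of the drop.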

Discussion

This study suggested a novel CIP-R training effectiveness framework to analyze the difference in training outcomes derived from the adoption of digital technology and verify which factors influence training effectiveness. The findings of the study are summarized as follows. First, the results of this study supported the effectiveness of blended learning, the hybrid method (Table 10). The overall sales training effectiveness fell as the firm shifted from the traditional to the pure digital method (pure digital minus traditional, mean difference = −0.121). However, the effectiveness partially recovered with the hybrid method (hybrid minus pure digital, mean difference = 0.040), although this difference on its own was not statistically significant. Pure digital training required complete self-directedness from participants and showed strength in terms of time and cost but had the lowest effectiveness. Blending the advantages of digital and offline could enhance learning effectiveness (Mantyla, 2001), and under today’s digital transformation, advancing traditional methods with technology-based learning (e.g., Driscoll, 2002; Graham, 2006) might be a suitable way of talent development (e.g., Liu et al., 2020).

Second, the findings confirmed the importance of human factors in training. The variables that caused visible differences in training effectiveness were primarily related to human involvement. The score of “Lecturer and instructor engagement” in the instructional design dimension decreased the most (pure digital minus traditional, mean difference = −0.600) and then recovered the most (hybrid minus pure digital, mean difference = 0.550). Also, the “Practice and participation” item of the same dimension showed the second-largest increase (hybrid minus pure digital, mean difference = 0.291). In the “Duration of training” item, which evaluates the appropriateness of learning time, the second-largest decrease was confirmed (pure digital minus traditional, mean difference = −0.446). This empirical evidence supports the claim that social interactions with colleagues in a digital learning setting play a crucial role in trainee engagement (e.g., Kim, 2021). Although traditional and pure digital training had the same learning duration (3 days), trainees may have perceived the time spent in online self-directed training as less tightly paced. In this respect, social relationships might have to be regarded as a substantial factor in building a proper learning environment.

In contrast, the ANOVA results showed no statistically significant differences by training method in the “Confidence and overall competence” and “Applicability” items of the programmed learning dimension (Table 6). Despite the changed training methodologies, the actual content changed little; accordingly, the training program’s perceived value (valence) may not have changed. However, statistically significant differences were found in the same dimension, in the “Personalized learning” and “Skill level for the job” items. This finding may support the assumption that human factors positively affected training effectiveness. In fact, in hybrid training, instructor–trainee interaction was strengthened, and practice and feedback sessions were provided through field visits.

Third, businesses must understand the role of efficacy and self-determination. Factors that influenced the learning transferability were mostly variables that define how the learning is delivered to the participants and variables related to trainees’ benefits (two variables in the instructional design dimension and three in the programmed learning dimension). In fact, in this study, the four variables in the content dimension were found to have little effect on learning transfer (Table 9). If personalized learning is provided, trainees could feel confident in their knowledge and skills, have higher expectations for improved competency, and develop actual work performance based on learning transfer (Colquitt et al., 2000). It can be explained by the self-efficacy of individuals and groups (i.e., Bandura, 2000).

Moreover, it can be expected that self-determined learning will occur through programs that provide a sense of self-efficacy (e.g., Ryan and Deci, 2000). As the training program shifted to hybrid, trainees appeared to have found more value. If intrinsic motivation increases, behaviors that disseminate the knowledge and skills acquired during training to peers would also increase. Based on empirical evidence, extant literature has argued that intrinsic motivators such as self-efficacy in knowledge and skills and enjoyment of supporting others might expedite the transfer of acquired competencies, enhancing upper-level or group performance (e.g., Lin, 2007; Wen and Lin, 2014; Na-Nan and Sanamthong, 2020). Consequently, it can be assumed that understanding group psychology and reflecting it in talent management might lead to higher workforce performance.

Theoretical and managerial implications

The study provides the following theoretical implications. First, this study suggested the CIP-R framework, a novel effectiveness model that overcomes the limitations of the CIPP model. In particular, the study differentiated itself from existing models by defining the trainee’s intention to recommend as a measure of future learning transferability. Studies that provide empirical evidence on the effects of training variables, with learning transferability as the training outcome, are limited in the literature (Grossman and Salas, 2011; Massenberg et al., 2017). The CIP-R framework and the empirical evidence presented in this study are expected to contribute to existing domain knowledge and theoretical expansion. Second, by demonstrating the effects of digital technology in training, the study drew academic interest to the sub-variables in each dimension. Moreover, the study calls for scholarly attention to organizational psychology theories by shedding light on individual and group efficacy (e.g., Bandura, 2000) and self-determination theories (e.g., Ryan and Deci, 2000), searching for a structural mechanism leading to learning transferability. The findings of this study present a perspective on how existing theories should change and be applied as digital technology expands.

The managerial implications of the study are as follows. First, the importance of digital technology acceptance should be reviewed from the talent development perspective. From a business standpoint, it is unimaginable to return to the traditional ways of developing people. HR professionals should reconsider development programs and provide flexible, personalized learning online (Li and Lalani, 2020). This study examined both a blended learning format and an entirely online program, and its findings could serve as a practical example for future HRD professionals. Second, the study devised a practical and efficient framework for workforce development. Constant upskilling will be critical for organizations, and businesses need a useful, ready-to-use recipe for their people development initiatives. Existing effectiveness models are resource-intensive and inadequate for measuring the dynamic aspects of learning, or they focus disproportionately on checking post-training changes (e.g., Robinson, 2002; Alvarez et al., 2004; Tan et al., 2010). Sometimes such academic interests fail to meet the actual needs of business managers (Mattox, 2013). This study proposed the CIP-R framework as an alternative that efficiently measures learning transferability. It would enable practitioners to explore new opportunities to evaluate and improve training at a lower cost.

Limitations and future research

This study has several limitations. First, the findings are difficult to generalize because the study was conducted in a specific context in terms of region and profession. It remains uncertain whether the findings apply to other cultures or workplace environments. Therefore, further studies are required to test the causal relationships between variables, and future researchers are invited to replicate the research with different subjects and contexts. Second, the conceptual framework presented in this study requires additional validation. The utility of the CIP-R model needs to be examined through additional empirical studies. Replicated use can strengthen the model’s validity and demonstrate its theoretical and managerial value. Critical views from subsequent researchers, model adjustments, and measurement changes would also be meaningful. The study used statistical regression to examine causal relationships and effect sizes. If enough data instances are secured, machine learning (ML) methods could be valuable (Kim et al., 2021), since they might uncover non-linear, hidden relationships. Third, the study did not track trainees’ post-training behavioral changes or the financial results generated. Moreover, training is not the only driver of organizational performance improvement. Hence, it is recommended to study other related variables, such as the working environment, motivation, and exchange relationships in the workplace, which might be related to sustaining post-training performance.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the patients/participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

SK was responsible for the conceptualization, data collection, methodology, analytical procedures (quantitative), writing – original draft, review and editing.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adedokun-Shittu, N. A., and Shittu, A. J. K. (2013). ICT impact assessment model: an extension of the CIPP and the Kirkpatrick models. Int. HETL Rev. 3, 1–26.

Alvarez, K., Salas, E., and Garofano, C. M. (2004). An integrated model of training evaluation and effectiveness. Hum. Resour. Dev. Rev. 3, 385–416. doi: 10.1177/1534484304270820

Arli, D., Bauer, C., and Palmatier, R. W. (2018). Relational selling: past, present and future. Ind. Mark. Manage. 69, 169–184. doi: 10.1016/j.indmarman.2017.07.018

Attia, A. M., Honeycutt, E. D., Fakhr, R., and Hodge, S. K. (2021). Evaluating sales training effectiveness at the reaction and learning levels. Serv. Mark. Q. 42, 124–139. doi: 10.1080/15332969.2021.1948489

Bandura, A. (2000). Exercise of human agency through collective efficacy. Curr. Dir. Psychol. Sci. 9, 75–78.

Brinkerhoff, R. O. (2003). The Success Case Method: Find Out Quickly What’s Working and What’s Not. Oakland, CA: Berrett-Koehler Publishers.

Broad, M. L., and Newstrom, J. W. (1992). Transfer of Training: Action-Packed Strategies To Ensure High Payoff from Training Investments. Boston, MA: Addison-Wesley.

Colquitt, J. A., LePine, J. A., and Noe, R. A. (2000). Toward an integrative theory of training motivation: a meta-analytic path analysis of 20 years of research. J. Appl. Psychol. 85, 678–707. doi: 10.1037/0021-9010.85.5.678

Davenport, T. H. (2012). Retail’s Winners Rely on the Service-Profit Chain. Brighton: Harvard Business Review.

Driscoll, M. (2002). Blended Learning: Let’s get Beyond the Hype. Available online at: http://www-07.ibm.com/services/pdf/blended_learning.pdf (accessed May 1, 2021).

Dweiri, F., Kumar, S., Khan, S. A., and Jain, V. (2016). Designing an integrated AHP based decision support system for supplier selection in automotive industry. Expert Syst. Appl. 62, 273–283.

Finney, T. L. (2019). Confirmative evaluation: new CIPP evaluation model. J. Mod. Appl. Stat. Methods 18:e3568. doi: 10.22237/jmasm/1598889893

Friedman, S., and Ronen, S. (2015). The effect of implementation intentions on transfer of training. Eur. J. Soc. Psychol. 45, 409–416. doi: 10.1002/ejsp.2114

Gallucci, M. (2019). GAMLj: General Analyses for Linear Models. Available online at: https://gamlj.github.io/ (accessed June 1, 2021).

Graham, C. R. (2006). “Blended learning systems: Definition, current trends and future directions,” in The Handbook of Blended Learning: Global Perspectives, Local Designs, eds C. J. Bonk and C. R. Graham (Aßlar: Pfeiffer), 3–21.

Graham, C. R. (2009). “Blended Learning Models,” in Encyclopedia of Information Science and Technology, Second Edition, ed. M. Khosrow-Pour (Philadelphia, PA: IGI Global), 375–382. doi: 10.4018/978-1-60566-026-4.ch063

Grossman, R., and Salas, E. (2011). The transfer of training: What really matters. Int. J. Train. Dev. 15, 103–120. doi: 10.1111/j.1468-2419.2011.00373.x

Guenzi, P., and Nijssen, E. J. (2021). The impact of digital transformation on salespeople: an empirical investigation using the JD-R model. J. Pers. Sell. Sales Manage. 41, 130–149. doi: 10.1080/08853134.2021.1918005

Hanaysha, J., and Tahir, P. R. (2016). Examining the Effects of Employee Empowerment, Teamwork, and Employee Training on Job Satisfaction. Procedia Soc. Behav. Sci. 219, 272–282. doi: 10.1016/j.sbspro.2016.05.016

Hartmann, N. N., and Lussier, B. (2020). Managing the sales force through the unexpected exogenous COVID-19 crisis. Ind. Mark. Manage. 88, 101–111. doi: 10.1016/j.indmarman.2020.05.005

Hartmann, N. N., Wieland, H., and Vargo, S. L. (2018). Converging on a new theoretical foundation for selling. J. Mark. 82, 1–18. doi: 10.1509/jm.16.0268

Hoffmeister, M. (2018). Sustainable Employee Engagement of Salespeople at Automotive Dealerships in Germany. Curepipe: International Business Conference Mauritius.

Holton, E. F. III (1996). The flawed four-level evaluation model. Hum. Resour. Dev. Q. 7, 5–21. doi: 10.1002/hrdq.3920070103

Holton, E. F. III (2003). “What’s really wrong: Diagnosis for learning transfer system change,” in Improving Learning Transfer in Organizations, ed. E. Salas (San Francisco, CA: Jossey-Bass), 59–79.

Hrastinski, S. (2019). What Do We Mean by Blended Learning? TechTrends 63, 564–569. doi: 10.1007/s11528-019-00375-5

Hung, W. (2013). Problem-based learning: a learning environment for enhancing learning transfer. New Dir. Adult Cont. Educ. 2013, 27–38. doi: 10.1002/ace.20042

Ibrahim, R., Boerhannoeddin, A., and Bakare, K. K. (2017). The effect of soft skills and training methodology on employee performance. Eur. J. Train. Dev. 41, 388–406. doi: 10.1108/EJTD-08-2016-0066

jamovi (2021). The jamovi project. Available online at: https://www.jamovi.org (accessed June 1, 2021).

Kauffeld, S., and Lehmann-Willenbrock, N. (2010). Sales training: effects of spaced practice on training transfer. J. Eur. Ind. Train. 34, 23–37. doi: 10.1108/03090591011010299

Kim, S. (2021). How a company’s gamification strategy influences corporate learning: a study based on gamified MSLP (Mobile social learning platform). Telematics Inform. 57:101505. doi: 10.1016/j.tele.2020.101505

Kim, S., Connerton, T. P., and Park, C. (2021). Exploring the impact of technological disruptions in the automotive retail: a futures studies and systems thinking approach based on causal layered analysis and causal loop diagram. Technol. Forecast. Soc. Change 172:121024. doi: 10.1016/j.techfore.2021.121024

Kirkpatrick, D. (1998). Evaluating Training Programs: The Four Levels, 2nd Edn. Oakland, CA: Berrett-Koehler Publishers.

Kirkpatrick, D., and Kirkpatrick, J. (2006). Evaluating training programs: The four levels. Oakland, CA: Berrett-Koehler Publishers.

Kodwani, A. D., and Prashar, S. (2019). Assessing the influencers of sales training effectiveness before and after training. Benchmarking 26, 1233–1254. doi: 10.1108/BIJ-05-2018-0126

Kraiger, K. (2002). “Decision-based evaluation,” in Creating, implementing, and managing effective training and development, ed. K. Kraiger (San Francisco, CA: Jossey-Bass), 331–375.

Kristensen, K., and Eskildsen, J. (2011). “Is the Net Promoter Score a reliable performance measure?,” in Proceedings of the 2011 IEEE International Conference on Quality and Reliability, Manhattan, NY.

Lands, A., and Pasha, C. (2021). “Reskill to Rebuild: Coursera’s Global Partnership with Government to Support Workforce Recovery at Scale,” in Powering a Learning Society During an Age of Disruption, eds S. Ra, S. Jagannathan, and R. Maclean (Berlin: Springer Singapore), 281–292. doi: 10.1007/978-981-16-0983-1_19

Lassk, F. G., Ingram, T. N., Kraus, F., and Mascio, R. D. (2012). The future of sales training: challenges and related research questions. J. Pers. Sell. Sales Manage. 32, 141–154. doi: 10.2753/PSS0885-3134320112

Leach, M. P., and Liu, A. H. (2003). Investigating interrelationships among sales training evaluation methods. J. Pers. Sell. Sales Manage. 23, 327–339. doi: 10.1080/08853134.2003.10749007

Li, C., and Lalani, F. (2020). The COVID-19 pandemic has changed education forever. This is how. Available online at: https://www.weforum.org/agenda/2020/04/coronavirus-education-global-covid19-online-digital-learning/ (accessed June 1, 2021).

Lichtenthal, J. D., and Tellefsen, T. (2001). Toward a theory of business buyer-seller similarity. J. Pers. Sell. Sales Manage. 21, 1–14. doi: 10.1080/08853134.2001.10754251

Lim, W. M. (2020). Challenger marketing. Ind. Mark. Manage. 84, 342–345. doi: 10.1016/j.indmarman.2019.08.009

Lin, H.-F. (2007). Effects of extrinsic and intrinsic motivation on employee knowledge sharing intentions. J. Inf. Sci. 33, 135–149. doi: 10.1177/0165551506068174

Liu, S., Zhang, H., Ye, Z., and Wu, G. (2020). Online blending learning model of school-enterprise cooperation and course certificate integration during the COVID-19 epidemic. Sci. J. Educ. 8, 66–70. doi: 10.11648/j.sjedu.20200802.16

Lupton, R. A., Weiss, J. E., and Peterson, R. T. (1999). Sales Training Evaluation Model (STEM): a conceptual framework. Ind. Mark. Manage. 28, 73–86. doi: 10.1016/S0019-8501(98)00024-8

Massenberg, A.-C., Schulte, E.-M., and Kauffeld, S. (2017). Never too early: learning transfer system factors affecting motivation to transfer before and after training programs. Hum. Resour. Dev. Q. 28, 55–85. doi: 10.1002/hrdq.21256

Mathur, M., and Kidambi, R. (2012). The contribution of the automobile industry to technology and value creation. Chicago, IL: Kearney.

Mattox, J. R. II (2013). Why L&D Needs Net Promoter Score. Inside Learning Technologies & Skills. Buford, GA: Net promoter score.

Na-Nan, K., and Sanamthong, E. (2020). Self-efficacy and employee job performance. Int. J. Qual. Reliabil. Manage. 37, 1–17. doi: 10.1108/IJQRM-01-2019-0013

Noe, R. A., and Colquitt, J. A. (2002). “Planning for training impact: Principles of training effectiveness,” in Creating, implementing, and managing effective training and development, ed. K. Kraiger (San Francisco, CA: Jossey-Bass), 53–79.

Panagopoulos, N. G., Rapp, A., and Pimentel, M. A. (2020). Firm actions to develop an ambidextrous sales force. J. Serv. Res. 23, 87–104. doi: 10.1177/1094670519883348

Phillips, P. P. (2012). The Bottom Line on ROI: Benefits and Barriers to Measuring Learning, Performance Improvement, and Human Resources Programs, 2nd Edn. Hoboken, NJ: Wiley.

Ployhart, R. E., and Hale, D. (2014). The fascinating psychological microfoundations of strategy and competitive advantage. Annu. Rev. Organ. Psychol. Organ. Behav. 1, 145–172. doi: 10.1146/annurev-orgpsych-031413-091312

Reichheld, F. F. (2006). The microeconomics of customer relationships. MIT Sloan Manage. Rev. 47, 73–78.

Ryan, R. M., and Deci, E. L. (2000). Intrinsic and extrinsic motivations: classic definitions and new directions. Contemp. Educ. Psychol. 25, 54–67. doi: 10.1006/ceps.1999.1020

Salas-Vallina, A., Pozo, M., and Fernandez-Guerrero, R. (2020). New times for HRM? Well-being oriented management (WOM), harmonious work passion and innovative work behavior. Employee Relat. 42, 561–581. doi: 10.1108/ER-04-2019-0185

Shabani, A., Faramarzi, G. R., Farzipoor Saen, R., and Khodakarami, M. (2017). Simultaneous evaluation of efficiency, input effectiveness, and output effectiveness. Benchmarking 24, 1854–1870. doi: 10.1108/BIJ-10-2015-0096

Singh, H., and Reed, C. (2001). Achieving Success with Blended Learning. Centra Software. ASTD State of the Industry Report. Jacksonville, FL: American Society for Training and Development.

Singh, R., and Venugopal, P. (2015). The impact of salesperson customer orientation on sales performance via mediating mechanism. J. Bus. Ind. Mark. 30, 594–607. doi: 10.1108/JBIM-08-2012-0141

Singh, V. L., Manrai, A. K., and Manrai, L. A. (2015). Sales training: a state of the art and contemporary review. J. Econ. Finan. Adm. Sci. 20, 54–71. doi: 10.1016/j.jefas.2015.01.001

Sitzmann, T., and Weinhardt, J. M. (2015). Training engagement theory: a multilevel perspective on the effectiveness of work-related training. J. Manage. 44, 732–756. doi: 10.1177/0149206315574596

Stufflebeam, D. L. (2004). “The 21st-century CIPP model: Origins, development, and use,” in Evaluation roots, ed. M. C. Alkin (Thousand Oaks, CA: Sage), 245–266.

Tan, S., Lee, N., and Hall, D. (2010). CIPP as a model for evaluating learning spaces. Available online at: https://researchbank.swinburne.edu.au/file/b9de5b45-1a28-4c23-ae20-4916498741b8/1/PDF%20(Published%20version).pdf (accessed April 25, 2021).

Tannenbaum, S. I., Cannon-Bowers, J. A., Salas, E., and Mathieu, J. E. (1993). Factors that influence training effectiveness: A conceptual model and longitudinal analysis (Technical report No. 93-011). Orlando, FL: Naval training systems center.

Tuu, H. H., and Olsen, S. O. (2010). Nonlinear effects between satisfaction and loyalty: an empirical study of different conceptual relationships. J. Target. Meas. Anal. Mark. 18, 239–251. doi: 10.1057/jt.2010.19

Watson, J. (2008). Blended learning: The convergence of online and face-to-face education. Promising Practices in Online Learning. Available online at: https://eric.ed.gov/?id=ED509636 (accessed May 20, 2021).

Wen, M. L. Y., and Lin, D. Y. C. (2014). Trainees’ characteristics in training transfer: the relationship among self-efficacy, motivation to learn, motivation to transfer and training transfer. Int. J. Hum. Resour. Stud. 4, 114–129. doi: 10.5296/ijhrs.v4i1.5128

Williams, T. A., Gruber, D. A., Sutcliffe, K. M., Shepherd, D. A., and Zhao, E. Y. (2017). Organizational response to adversity: fusing crisis management and resilience research streams. Acad. Manage. Ann. 11, 733–769. doi: 10.5465/annals.2015.0134

Wilson, P. H., Strutton, D., and Farris, M. T. (2002). Investigating the perceptual aspect of sales training. J. Pers. Sell. Sales Manage. 22, 77–86. doi: 10.1080/08853134.2002.10754296

Wolor, C. W., Solikhah, S., Fidhyallah, N. F., and Lestari, D. P. (2020). Effectiveness of E-Training, E-Leadership, and work life balance on employee performance during COVID-19. J. Asian Finan. Econ. Bus. 7, 443–450. doi: 10.13106/jafeb.2020.vol7.no10.443

Zhang, C., Myers, C. G., and Mayer, D. M. (2018). To cope with stress, try learning something new. Brighton: Harvard Business Review.

Zoltners, A. A., Sinha, P., and Lorimer, S. E. (2008). Sales force effectiveness: a framework for researchers and practitioners. J. Pers. Sell. Sales Manage. 28, 115–131. doi: 10.2753/PSS0885-3134280201

Keywords: COVID-19 training, training effectiveness, workforce upskilling, technology-enhanced training, CIP-R framework, CIPP model, digital training, hybrid learning

Citation: Kim S (2022) Innovating workplace learning: Training methodology analysis based on content, instructional design, programmed learning, and recommendation framework. Front. Psychol. 13:870574. doi: 10.3389/fpsyg.2022.870574

Received: 07 February 2022; Accepted: 29 June 2022; Published: 22 July 2022.

Edited by:

Abdul Hameed Pitafi, Sir Syed University of Engineering and Technology, Pakistan

Reviewed by:

Ana Moreira, University Institute of Psychological, Social and Life Sciences (ISPA), Portugal

Elif Baykal, Istanbul Medipol University, Turkey

Andrés Salas-Vallina, University of Valencia, Spain

Copyright © 2022 Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sehoon Kim
