- Department of Brain and Cognitive Sciences, University of Rochester, Rochester, NY, United States

Communication has been studied extensively in the context of speech and language. While speech is tremendously effective at transferring ideas between people, music is another communicative mode that has a unique power to bring people together and transmit a rich tapestry of emotions, through joint music-making and listening in a variety of everyday contexts. Research has begun to examine the behavioral and neural correlates of the joint action required for successful musical interactions, but it has yet to fully account for the rich, dynamic, multimodal nature of musical communication. We review the current literature in this area and propose that naturalistic musical paradigms will open up new ways to study communication more broadly.

Introduction

When two people communicate, they exchange information, ideas, or feelings, typically through dialogue. While the vast majority of communication research has focused on speech and language, another universal and emotionally powerful medium of human communication is music. Music allows parents to bond with babies, friends to form lifelong memories while singing together on a car trip, and performers to feel a rush of connection with other players and audience members.

Music’s evolutionary origins may be rooted in communicative functions (Savage et al., 2021). African talking drums, for example, have been used to communicate messages across villages, including emotional information (Carrington, 1971; Ong, 1977). Music and verbal communication share many features. Like speech, music relies primarily on acoustic signaling but is highly multisensory, involving social cues such as gesture and facial expression. Music and language rely on overlapping neural substrates (see Peretz et al., 2015 for a review), both recruiting a hierarchy that progresses from primary auditory cortex to higher-order brain regions that process successively longer syntactic units (Lerner et al., 2011; Farbood et al., 2015). This hierarchical structure has also been observed in auditory samples taken from conversations, musical interactions, and even communication between animals such as killer whales (Kello et al., 2017). Many studies have focused on the ontological and neural overlap and mutual influence between music and language (see Peretz et al., 2015 for a review). However, music connects people in ways that transcend language, providing a unique lens into aspects of communication that language research has largely overlooked or treated as less relevant. Perhaps more than almost any other medium, music communicates a rich tapestry of emotional content, another type of communicable information (Parkinson, 2011), both within a musical group and to the audience. As a collective art form, music plays an important role in establishing shared cultural identity through ritual (e.g., at weddings, funerals, festivals), in which its power to communicate emotion plays a large role. In film and television, music adds emotional depth to critical plot points, and it can unlock autobiographical memories in patients with dementia (El Haj et al., 2012a,b). In essence, music provides the social–emotional fabric of many people’s lives, both in the moment and through the ongoing construction of our personal and collective social narratives. While music’s activation of specific emotional processing regions in individual listeners has been fairly extensively studied (Koelsch, 2014), there is little work examining how multiple performers’ brains jointly represent the moment-to-moment dynamics of musical emotion and how these representations relate to listeners’ ongoing experiences.

Another key component of communication between performers takes the form of joint action. Although conversational speech does involve turn-taking and mirroring of body movements (e.g., gestures, head nods), the interpersonal bodily coordination involved in a musical interaction is typically much more precisely aligned in time, can have a large impact on the interpretation of a piece, and is often required for the interaction to run smoothly. For example, musicians must breathe together to align note onsets, and coordinate head, arm, and even leg movements to maintain a steady tempo. If this bodily system of the ensemble becomes misaligned, it can have disastrous effects on the musical outcome, potentially requiring the players to start over. Beyond large-scale, visible bodily coordination, players must also precisely coordinate intonation by making micro-adjustments to embouchure and strings, which involves listening and matching at multiple levels (see Supplementary Video 1). More broadly, coordination requires communication, and this requirement is especially pronounced in joint music-making. Although incidental synchrony could theoretically occur in the absence of communication (e.g., if two people played at the same time by individually following the beat of a conductor without seeing or hearing each other), this would not represent musical coordination or communication between players. Lacking the in-the-moment, interpersonal adaptation that makes every performance unique, such a performance would likely be less natural, less cohesive, and less beautiful.

Traditionally, most studies of human communication have focused on language and have investigated processing and learning of highly controlled linguistic units. However, modern approaches have begun to feature more naturalistic stimuli, such as stories (Huth et al., 2016), movies (Chen et al., 2017), and lectures (Meshulam et al., 2021), and real-world tasks, such as live interactions between multiple interlocutors using hyperscanning paradigms (Dikker et al., 2017; Dai et al., 2018). In this review, we propose applying novel and naturalistic approaches to characterizing the many complex cognitive, social, and emotional processes that enable people to play music with others. We highlight new ways of measuring crucial but largely overlooked aspects of everyday musical communication: the dynamic exchange of acoustic and gestural signals, the interpersonal coordination that supports rhythmic and harmonic alignment, and the uniquely rich emotional exchange between performers (and between performers and listeners).

Another unique feature of music that enriches its contribution to communication research is the diversity of musical interactions that exist in the world. Virtually all humans are experts at using language to communicate, but the depth and type of musical engagement varies drastically across individuals and cultures, enabling the investigation of musical communication across a range of abilities, modes, genres, and contexts (e.g., amateur vs. professional, instrumental vs. vocal, classical vs. rock, theater vs. church vs. karaoke bar). Finally, music’s potential as an educational and therapeutic tool has only begun to be explored, and we highlight the ways in which research on naturalistic musical communication can lead to more powerful interventions in classroom and clinical settings.

How has real-world musical communication been studied and which questions remain unanswered?

Joint music-making is unquestionably a highly social activity (see Mehr et al., 2021; Savage et al., 2021 for a debate on its evolutionary origins). Over the course of a musical interaction, musicians must jointly attend to ensure that they are aligning to one another (rhythmically, harmonically, affectively) and flexibly reacting to moment-to-moment changes enacted by their musical partner(s), resulting in mutual entrainment on several levels. Most previous studies examining the behavioral and neural underpinnings of communication have largely ignored these dynamic features, which are critical components of everyday interactions (see Redcay and Schilbach, 2019).

Some researchers have begun to investigate behavioral coordination between multiple musicians (see Volpe et al., 2016 for a review). The string quartet is an excellent model for the study of small group dynamics and communication: because there is no conductor, the musicians must jointly attend to one another in order to play together. For example, each player must direct attention simultaneously to the written music and, peripherally, to the other players to maintain synchrony. Quartets have been used as a proxy for intense work groups, and a quartet’s success is associated with how it manages conflict and with its overall group effectiveness (Murnighan and Conlon, 1991). Members of string quartets become empathetically attuned to one another during a performance (Seddon and Biasutti, 2009), and their body sway reflects joint emotional expression (Chang et al., 2019). Players identify and correct for timing asynchronies, and these adaptive strategies often vary with each quartet’s unique dynamics (Wing et al., 2014). Joint action also requires separately tracking and differentiating one’s own actions from a partner’s over time, which facilitates interpersonal coordination (Loehr et al., 2013; Liebermann-Jordanidis et al., 2021). See Supplementary Video 1 for a demonstration of the dynamic joint actions required to support successful musical communication in a similar small group, a woodwind quintet.

To answer questions about musical communication at the neural level, researchers have used a variety of neuroimaging paradigms. In single-brain approaches, researchers collect data from only one participant’s brain while that person communicates with an in-person or virtual partner, or is led to believe they are doing so (Donnay et al., 2014). In dual-brain (hyperscanning) approaches, researchers collect data simultaneously from two participants’ brains during a social interaction (Montague et al., 2002; see Czeszumski et al., 2020 for a review). Both types of paradigms can make use of electroencephalography (EEG), functional magnetic resonance imaging (fMRI), electrocorticography (ECoG), and/or functional near-infrared spectroscopy (fNIRS) to answer different questions. EEG has millisecond-level temporal resolution but poor spatial resolution and is best suited to questions about the brain’s response to a particular event in time (e.g., a listener’s response to a musician’s change in tempo). By contrast, fMRI has good spatial resolution but poor temporal resolution and is best suited to questions about patterns of activation in specific brain regions during a particular task or listening condition. ECoG offers high spatial and temporal resolution and good coverage of the temporal lobe, but the practical constraints of working with surgical patients limit the feasibility of naturalistic, interactive musical tasks to some degree. Lastly, fNIRS has higher spatial resolution than EEG and temporal resolution comparable to fMRI, and it is relatively inexpensive and robust to motion artifacts, making it well-suited for naturalistic hyperscanning paradigms (Czeszumski et al., 2020).

Studying live interactions between multiple musicians at once enables the characterization of interpersonal dynamics that underlie everyday music-making, such as mutual entrainment and joint improvisation. Entrainment can involve alignment (synchronization) to an external beat maintained by a metronome or conductor, whereas mutual entrainment additionally involves moment-to-moment adaptive adjustments between multiple players to maintain a steady meter amid local changes in tempo. Behavioral studies have shown that two people can jointly entrain to a beat, and that being paired with a musician improves a non-musician’s ability to maintain a steady beat when tapping (Schultz and Palmer, 2019). During joint music-making, humans entrain to one another both temporally and affectively (see Phillips-Silver and Keller, 2012 for a review), and this entrainment is accompanied by synchronous brain activity (Zamm et al., 2021). In EEG studies, between-brain oscillatory coupling both before and during dyadic guitar playing has been linked to interpersonally coordinated actions (Lindenberger et al., 2009; Sänger et al., 2012). Additionally, different patterns of directionality in brain-to-brain synchronization are associated with leader and follower roles during guitar playing (Sänger et al., 2013). Similarly, during joint piano playing, alpha oscillations index participants’ knowledge about their own actions as well as their musical partner’s (Novembre et al., 2016). In an fNIRS study, distinct blood oxygenation patterns in temporo-parietal and somatosensory areas were associated with different violin parts in a duo (Vanzella et al., 2019).
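To make the contrast between entrainment to an external beat and mutual entrainment concrete, the Python sketch below simulates two players with slightly different preferred tempi using a linear phase-correction rule. This is a simple toy model from sensorimotor synchronization research, swapped in here purely for illustration; it is not the method of any study cited above. The function name `simulate_duet`, the correction gains `alpha_a` and `alpha_b`, and all parameter values are hypothetical. With both gains at zero the players ignore each other and their asynchrony drifts steadily; with moderate gains each player nudges the next onset toward the partner’s, and the asynchrony settles near a small, stable value even though no external metronome is present.

```python
import numpy as np


def simulate_duet(alpha_a, alpha_b, period_a=0.50, period_b=0.52,
                  n_beats=200, noise_sd=0.005, seed=0):
    """Toy two-player linear phase-correction model (illustrative assumption).

    Each player's next onset = own onset + own preferred period
        + alpha * (partner's onset - own onset) + Gaussian timing noise.
    alpha = 0 means that player does not adapt to the partner at all.
    Returns the asynchrony (B's onset minus A's onset, in seconds) at each beat.
    """
    rng = np.random.default_rng(seed)
    t_a, t_b = 0.0, 0.0
    asyncs = []
    for _ in range(n_beats):
        asyncs.append(t_b - t_a)
        # Both players update simultaneously from the current onsets.
        next_a = t_a + period_a + alpha_a * (t_b - t_a) + rng.normal(0.0, noise_sd)
        next_b = t_b + period_b + alpha_b * (t_a - t_b) + rng.normal(0.0, noise_sd)
        t_a, t_b = next_a, next_b
    return np.array(asyncs)


drift = simulate_duet(0.0, 0.0)        # no mutual adaptation
coupled = simulate_duet(0.25, 0.25)    # both players adapt
print(f"final asynchrony without adaptation: {drift[-1]:.2f} s")
print(f"mean late asynchrony with adaptation: {coupled[-100:].mean():.3f} s")
```

With these defaults the uncoupled pair drifts apart by roughly 20 ms per beat, whereas the coupled pair settles near (period_b − period_a) / (alpha_a + alpha_b), about 40 ms. Setting only one gain above zero mimics a follower adapting to an unyielding leader. The sketch omits period correction, separate motor and timekeeper noise, and any real performance data.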

The inferior frontal cortex (IFC) has been implicated in several studies of communicative interaction, both because of its role in language processing and its involvement in the mirror neuron system for joint action (Rizzolatti and Craighero, 2004; Stephens et al., 2010; Jiang et al., 2012). Studies using fNIRS have revealed neural synchronization in the left IFC while two people sang or hummed together (Osaka et al., 2015), and the strength of neural synchronization in bilateral IFC between learners and instructors during interactive song learning predicts behavioral performance (Pan et al., 2018).

While most everyday verbal communication (e.g., dialogue) is improvised, musical communication regularly takes both scripted and improvised forms. The difference between these forms of joint action lies in performers’ reliance on planned versus emergent coordination mechanisms (Goupil and Aucouturier, 2021). During improvisation between musical partners, not only do shared intentions emerge and drive coordination between musicians, but third-party listeners can also identify the musicians’ goals (e.g., finding a good ending for oneself or for the group as a whole). Hyper-networks based on an interplay of different EEG frequencies are associated with leader/follower roles during guitar improvisation (Müller et al., 2013), and musical improvisation enhances interpersonal coordination, promoting alignment of body movements in a subsequent conversation (Robledo et al., 2021). fMRI research has begun to examine the neural underpinnings of jazz improvisation, finding deactivation of the dorsolateral prefrontal cortex (DLPFC), a pattern that has been associated with creative thinking or a state of “flow” (Limb and Braun, 2008). During the highly communicative exchange known as “trading fours,” in which players alternate improvised four-bar phrases, activation was observed in areas related to syntactic processing, suggesting that these areas play a domain-general role in communication beyond language (Donnay et al., 2014). However, very few neuroimaging studies have asked pairs or groups of musicians to improvise freely and exchange musical ideas back and forth. Finally, and perhaps counterintuitively, even very large groups improvising together can attain a high level of coordination and joint action during complex tasks with little external structure (Goupil et al., 2020).

The existing hyperscanning literature largely focuses on mirrored synchrony and phase-locking to stimuli or to a communicative partner measured across an entire interaction, but future dual-brain approaches must embrace the moment-to-moment, back-and-forth nature of music-making, including the way musicians adapt to one another on a number of features and how that process is modulated by expertise. This will require measuring different kinds of interpersonal coupling in fine-grained time bins organized according to constantly changing musical content. For instance, EEG could be used to examine the precise timing of the brain’s response in musician A to a change in tempo, pitch, or rhythm by musician B, and the resulting behavioral change. fMRI could be used to measure how a musician’s brain adapts to unexpected musical content (e.g., a non-diatonic note or syncopated rhythm) initiated by a partner outside the scanner. fNIRS could be used to examine moment-to-moment adaptation to a communicative partner in pairs of musicians, across multiple brain regions at once. Simultaneous EEG recordings from many musicians could be used to examine the leader/follower temporal dynamics of orchestral music. Motion capture could be used in conjunction with hyperscanning to assess how the brain represents the dynamics of gesture over time. Finally, while previous studies have used hyperscanning to examine scripted music-making, future studies should directly compare scripted versus improvised musical interactions, as well as joint versus solo improvisation.
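As a minimal sketch of what measuring coupling “in fine-grained time bins organized according to constantly changing musical content” could look like, the hypothetical Python function `segment_coupling` below (using NumPy) splits two musicians’ signals at experimenter-supplied musical boundaries and, within each segment, computes the zero-lag correlation and the lag that maximizes cross-correlation. The sign of that lag serves as a crude leader/follower index. It assumes equal-length, equally sampled, already preprocessed signals (e.g., EEG band envelopes or motion-capture velocity) and annotated boundaries such as tempo or harmonic changes; Pearson correlation is a simple stand-in for the phase-based coupling measures used in hyperscanning work, not the method of any study cited here.

```python
import numpy as np


def segment_coupling(x, y, fs, boundaries, max_lag_s=0.5):
    """Per-segment coupling between two musicians' signals (illustrative sketch).

    x, y       : equal-length 1-D signals for musician A and musician B
                 (hypothetical inputs, e.g., EEG envelopes or motion velocity)
    fs         : sampling rate in Hz
    boundaries : segment edges in seconds, e.g., annotated tempo or key changes
    max_lag_s  : largest lag (in seconds) searched in each direction

    Returns one tuple per segment: (zero-lag Pearson r, best lag in s, r at best lag).
    A positive best lag means B's signal trails A's, i.e., A tends to lead.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    max_lag = int(round(max_lag_s * fs))
    results = []
    for start_s, end_s in zip(boundaries[:-1], boundaries[1:]):
        i0, i1 = int(round(start_s * fs)), int(round(end_s * fs))
        a, b = x[i0:i1], y[i0:i1]
        n = len(a)
        r0 = np.corrcoef(a, b)[0, 1]
        best_lag, best_r = 0, r0
        for lag in range(-max_lag, max_lag + 1):
            if n - abs(lag) < 3:
                continue  # too few overlapping samples at this lag
            if lag >= 0:
                a_l, b_l = a[: n - lag], b[lag:]  # A at t vs. B at t + lag
            else:
                a_l, b_l = a[-lag:], b[: n + lag]  # A at t + |lag| vs. B at t
            r = np.corrcoef(a_l, b_l)[0, 1]
            if r > best_r:
                best_lag, best_r = lag, r
        results.append((r0, best_lag / fs, best_r))
    return results


# Synthetic check: B copies A with a 100 ms delay, so A should appear to lead.
rng = np.random.default_rng(0)
fs = 100
sig_a = rng.standard_normal(1000)
sig_b = np.roll(sig_a, 10)
for r0, lag_s, r_best in segment_coupling(sig_a, sig_b, fs, [0, 5, 10]):
    print(f"zero-lag r = {r0:+.2f}, best lag = {lag_s:.2f} s, r = {r_best:.2f}")
```

A real analysis would likely replace Pearson correlation with phase-locking or directed (e.g., Granger-style) measures and correct for the many lags tested; segments in which either signal is constant yield NaN.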

How is emotion communicated during joint music-making?

Music is inherently emotional (Juslin, 2000), which enables it to support important social functions when played or even merely listened to. Performing in an ensemble has a uniquely powerful bonding effect between players (and between players and audiences), and simply listening to music often occurs in social contexts with strong emotional significance (e.g., hearing a sweeping movie score and feeling connected to the other people in the theater, or listening to a nostalgic song with a friend during a road trip). However, emotion has been largely ignored in the more ecologically valid hyperscanning paradigms that examine musical communication (Acquadro et al., 2016). This gap is notable because music and speech overlap to some degree in their ability to communicate emotion, with a shared mechanism responsible for affective processing of musical and vocal stimuli in the auditory cortex (Paquette et al., 2018) and shared acoustic codes (Curtis and Bharucha, 2010; Coutinho and Dibben, 2013; Nordström and Laukka, 2019). Across speech and music, participants’ emotional experiences can be predicted by the same seven features: loudness, tempo/speech rate, melody/prosody contour, spectral centroid, spectral flux, sharpness, and roughness (Coutinho and Dibben, 2013), and the minor third is associated with sadness both in music and in speech prosody (Curtis and Bharucha, 2010).

Music often triggers physiological responses like chills, changes in skin conductance, and changes in heart rate. When participants listened to music that induced chills, areas of the brain associated with reward/motivation, emotion, and arousal were found to be more active (Blood and Zatorre, 2001). Additionally, those who experience chills show higher white matter connectivity between auditory, social, and reward-processing areas (Sachs et al., 2016). Further, feeling moved or touched by music shares common physiological changes with feeling moved or touched by videos of emotional social interactions, like an elephant reuniting with its mother (Schubert et al., 2018; Vuoskoski et al., 2022).

While there is an intuitive notion that music has the ability to communicate emotion even more powerfully than speech, there is minimal direct empirical evidence for this. Developmental research has shown that song is reliably more effective than speech at modulating emotion in infants (Corbeil et al., 2013; Trehub et al., 2016; Cirelli and Trehub, 2020). And in a dual-brain fNIRS study with adults, the right temporo-parietal junction (rTPJ), which has been previously implicated in social and emotional communication, was found to be more strongly activated during joint drumming than conversation (Rojiani et al., 2018). However, the relative efficacy of music versus speech to communicate emotional information is still largely unknown.

Future studies must delineate the multiple ways that musical emotion is communicated during everyday experiences: the emotions a listener experiences as they hear live or recorded music, the emotions a performer experiences as they play with others or receive feedback from listeners, and the way the communicated emotion helps to create a cohesive social unit between people. Such studies could use behavioral methods and questionnaires to assess emotional states before and after music making, ask performers or listeners to continuously indicate (e.g., via button press or slider) when they enter a new emotional state throughout a performance, and/or use multivariate pattern extraction techniques in neuroimaging paradigms to decode the fine-grained patterns of brain responses in emotion-associated areas as these states change over the course of music-making. Further, while research suggests that song has a unique emotional power over speech in infants (Corbeil et al., 2013; Trehub et al., 2016; Cirelli and Trehub, 2020), it remains to be seen if this effect persists into adulthood. Thus, future studies would benefit from directly comparing emotional communication using musical versus matched linguistic stimuli in adults.

Musical engagement exists on a spectrum: how do individual differences in musical engagement affect musical communication–and everyday communication more broadly?

One major way in which communication via music differs from communication via language is that in many cultures, musical expertise—as well as degree and type of musical engagement—varies widely in a population. Such variability also exists across cultures: in some cultures, joint music-making is a crucial part of everyday life and rituals, and in others, music is less a part of everyday life and is something that is reserved for certain occasions and highly trained experts. How do differences in musical engagement affect musical communication and translate into everyday social communication?

Most people engage with music in some way on a daily basis: overhearing it at the grocery store, actively listening to recordings at home, attending live concerts among other audience members, singing karaoke with friends, or performing in an ensemble. In many of these examples, music acts as a social reinforcer, but it is unknown how this spectrum of musical engagement affects development, social bonding, and other processes. Much of the research on the impacts of musical expertise has investigated how training affects relatively low-level communicative processes, such as early neural responses to simple speech sounds. For example, in two longitudinal studies, preschoolers showed enhanced auditory brainstem responses to speech in noise after 1 year of musical training (Strait et al., 2013), and adolescents in a school band showed less decline in the consistency of their subcortical responses to syllables over the course of adolescence than peers in a non-musical control group (Tierney et al., 2015). Some studies have found a modest benefit of musical training on speech segmentation (François et al., 2013) and phonological awareness (Degé and Schwarzer, 2011; Patscheke et al., 2016). Although musical engagement early in life (e.g., singing or playing in a school choir or band) is often a highly rewarding and socially enriching experience, surprisingly little is known about the impact of early musical training on social processes, though formal music training has been associated with lower relationship conflict in undergraduate students (MacDonald and Wilbiks, 2021).

Around the world, music plays a crucial role in important moments in life, from celebrations (festivals, weddings, graduations, inaugurations) to funerals. The extent to which collective music-making exists as an important part of social bonding is surprisingly variable across small-scale societies (Patel and von Rueden, 2021). In addition, there is significant variability across kinds of collective music-making in terms of whether most people (versus merely professionals) feel welcome to participate. For example, at weekly religious services, many congregants sing along regardless of vocal training (this is also true at karaoke bars and many sporting events around the world), but at other kinds of social occasions (dinner parties, talent shows) non-musicians often feel shy and ill-equipped to “perform” at a certain level. This variability in people’s engagement with various musical interactions also likely differs across cultures in ways that would be productive to study within a multi-brain framework.

While previous work has established musician/non-musician differences in processing communicative information (typically, simple music and speech sounds), future work must take into account the spectrum of everyday musical engagement and how it shapes music’s communicative functions throughout development and in society at large. For example, it would be informative to use dual-brain hyperscanning paradigms to compare patterns of neural synchrony within pairs of highly trained musicians versus less trained musicians, or within pairs of people from a culture where music is more versus less widely practiced. In addition, studying differences in both musical and verbal communication between pairs of professional musicians who are accustomed to playing with each other versus strangers could help elucidate the neural overlap between these two domains of communication in more naturalistic, interactive contexts. Highly skilled jazz musicians who regularly “trade fours,” for instance, are uniquely adept at matching certain aspects of other performers’ acoustic patterns to create coherent and complementary melodic lines. Does this powerful “conversational” ability extend to verbal communication among such players (beyond the alignment of body movement reported by Robledo et al., 2021), and do similar neural substrates support both of these processes?

Music therapy can improve interpersonal connection and communication in clinical settings

One of the most powerful clinical applications of music is to improve communication among populations that struggle with verbal communication, self-expression, and/or social engagement. In children with autism spectrum disorder (ASD), music therapy has been shown to improve emotional and interpersonal responsiveness during the course of a session (Kim et al., 2009) and to improve communication over an 8–12 week intervention (Sharda et al., 2018). Music has also been used in group settings specifically to improve communication and interpersonal relationships: group music therapy has been shown to improve the social skills of children with ASD (Blythe, 2014), and family-centered music therapy has been shown to improve interpersonal relationships at home (Thompson, 2012; see Mayer-Benarous et al., 2021 for a review).

Music therapy is also used to help improve communication for people with dementia. Music therapy strengthens interpersonal relationships between the patient and their music therapist, peers, and family (McDermott et al., 2014). People with dementia show more communicative behavior during a music therapy session (Schall et al., 2014) and an increase in language use and fluency after a session compared to a conversational therapy session (Brotons and Koger, 2000). Since listening to familiar music enhances self-awareness (Arroyo-Anlló et al., 2013) and autobiographical memory (El Haj et al., 2012a,b), for people with dementia, music may provide a means of connecting with the people around them and their past selves, thus helping them maintain a sense of personal identity as the disease progresses.

These studies document some of the cognitive and behavioral benefits of music therapy for communication, but more work is needed to fully understand these benefits and the neural mechanisms that give rise to them. A traditional approach would be to use neuroimaging before and after an intervention to measure neural correlates of the observed cognitive, behavioral, or social effects. Beyond that, hyperscanning approaches (e.g., with EEG or fNIRS) could be transformative in capturing the dynamic interactions between patients and music therapists or parents as they unfold in real time. For example, dyads of parents and non-verbal children become neurally synchronized during a music therapy session even when the parents are not active participants (Samadani et al., 2021). This synchrony could reflect cognitive-emotional coupling mediated by therapy and might help strengthen the parent/child relationship. Isolating a neural signature or biomarker of communication success could provide useful feedback to improve the effectiveness of not only music therapy but also music education.

Concluding remarks: future work would benefit from using music as a model for communication and taking into account complex brain-behavior dynamics

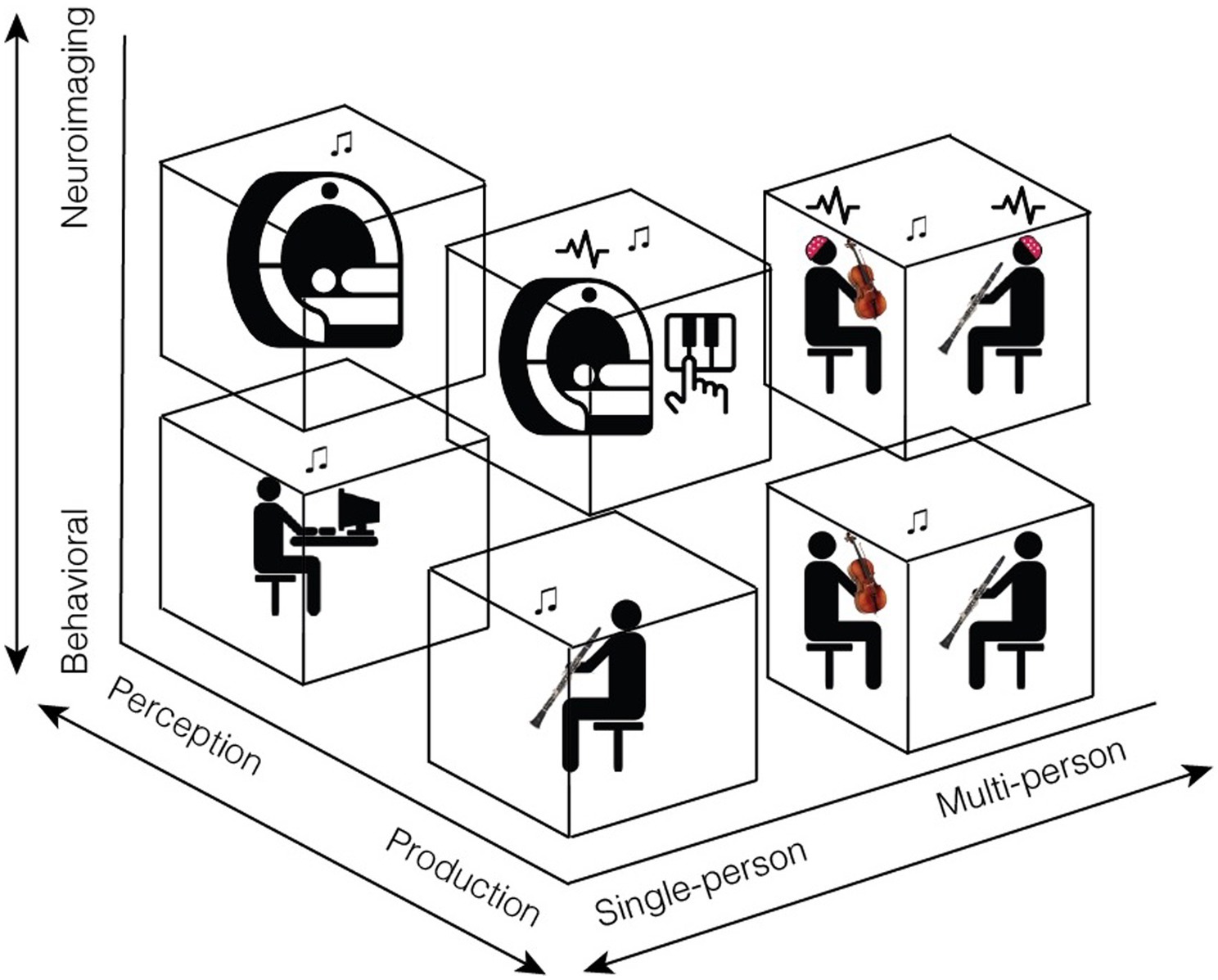

Because music is universal, has features that overlap with and complement those found in speech, and plays a unique role in human emotional, social, and cultural experiences, it offers an ideal model for future studies on human communication. In this review, we have proposed that naturalistic, interactive music paradigms provide a rich opportunity to study the interpersonal dynamics of communication, and that communication research in general would benefit from a closer examination of joint music-making (see Figure 1).

Figure 1. Our proposed framework for future studies of communication includes three methodological axes: behavioral to neuroimaging, perception to production, and single- to multi-person. Single-person behavioral studies of perception, which are already quite common, include psychophysical experiments or survey methods. Single-person neuroimaging studies of perception typically include EEG or fMRI experiments in which participants listen to music. Studying music production in a single person behaviorally involves examining the way a single musician communicates information as they play, whether by adjustments in tempo, dynamics, body sway, or other features. Finally, multi-person studies are essential for capturing the real-world dynamics of everyday musical communication. For example, we propose neuroimaging experiments in which a person’s brain responses are measured while they interact with a live partner or are led to believe they are. Such studies could examine the way musicians jointly attend to one another and adjust to musical partners, how they communicate emotion both to each other and to an audience, and how representations in brain areas involved in these joint actions dynamically change across the interaction. This figure contains icons from thenounproject.com: Activity by mikicon, Mri by Flowicon, Music Note by Nico Ilk, and Piano by Adrien Coquet.

While previous studies have examined some aspects of musical communication, often focusing on the overlap between the processing of music and language at the acoustic or syntactic level, most paradigms have used fairly simple stimuli and individual participants (rather than dyads or groups), or have focused on inter-brain mirrored synchrony rather than modeling the complex dynamics inherent in real-life musical interaction. Further, the existing literature has largely ignored the influence of musical experience on communication as well as the power of music to transfer emotions between people. Future studies must take into account the dynamic nature of communication and the parallel features that must be coordinated across performers (e.g., timing, tuning, leader/follower dynamics), going beyond the single- or even dual-brain models that have previously been used. Naturalistic paradigms featuring real-life musical interactions and stimuli are best suited to address these complex questions and relationships, and their findings have the potential to inform future therapeutic interventions.

Author contributions

SI and EP contributed to the conception of the topic. All authors wrote the sections, contributed to revision, and read and approved the final manuscript.

Acknowledgments

This work was supported by a GRAMMY Museum Grant awarded to EP. Figure 1 contains icons from thenounproject.com: Activity by mikicon, Mri by Flowicon, Music Note by Nico Ilk, and Piano by Adrien Coquet. Thanks to the members of the quintet featured in Supplementary Video 1: Vince Cassano, clarinet; Mark Questad, horn; Kristy Larkin, bassoon; Meaghan Stambaugh, flute (left to right in the video), who played Pastorale Op. 14, No. 1 by Gabriel Pierné. Written informed consent was obtained from the individual(s) and/or minor(s)’ legal guardian/next of kin for the publication of any potentially identifiable images or data included in this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2023.1012839/full#supplementary-material

Supplementary Video 1 | Demonstration of the dynamic joint actions required to support successful musical communication in a similar small group, a woodwind quintet.

References

Acquadro, M. A. S., Congedo, M., and De Ridder, D. (2016). Music performance as an experimental approach to hyperscanning studies. Front. Hum. Neurosci. 10:242. doi: 10.3389/fnhum.2016.00242

Arroyo-Anlló, E. M., Díaz, J. P., and Gil, R. (2013). Familiar music as an enhancer of self-consciousness in patients with Alzheimer’s disease. Biomed. Res. Int. 2013, 1–10. doi: 10.1155/2013/752965

Blood, A. J., and Zatorre, R. J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl. Acad. Sci. U. S. A. 98, 11818–11823. doi: 10.1073/pnas.191355898

Blythe, A. (2014). Effects of a music therapy group intervention on enhancing social skills in children with autism. J. Music. Ther. 51, 250–275. doi: 10.1093/jmt/thu012

Brotons, M., and Koger, S. M. (2000). The impact of music therapy on language functioning in dementia. J. Music. Ther. 37, 183–195. doi: 10.1093/jmt/37.3.183

Carrington, J. F. (1971). The talking drums of Africa. Sci. Am. 225, 90–94. doi: 10.1038/scientificamerican1271-90

Chang, A., Kragness, H. E., Livingstone, S. R., Bosnyak, D. J., and Trainor, L. J. (2019). Body sway reflects joint emotional expression in music ensemble performance. Sci. Rep. 9:205. doi: 10.1038/s41598-018-36358-4

Chen, J., Leong, Y. C., Honey, C. J., Yong, C. H., Norman, K. A., and Hasson, U. (2017). Shared memories reveal shared structure in neural activity across individuals. Nat. Neurosci. 20, 115–125. doi: 10.1038/nn.4450

Cirelli, L. K., and Trehub, S. E. (2020). Familiar songs reduce infant distress. Dev. Psychol. 56, 861–868. doi: 10.1037/dev0000917

Corbeil, M., Trehub, S., and Peretz, I. (2013). Speech vs. singing: infants choose happier sounds. Front. Psychol. 4:372. doi: 10.3389/fpsyg.2013.00372

Coutinho, E., and Dibben, N. (2013). Psychoacoustic cues to emotion in speech prosody and music. Cognit. Emot. 27, 658–684. doi: 10.1080/02699931.2012.732559

Curtis, M. E., and Bharucha, J. J. (2010). The minor third communicates sadness in speech, mirroring its use in music. Emotion 10, 335–348. doi: 10.1037/a0017928

Czeszumski, A., Eustergerling, S., Lang, A., Menrath, D., Gerstenberger, M., Schuberth, S., et al. (2020). Hyperscanning: a valid method to study neural inter-brain underpinnings of social interaction. Front. Hum. Neurosci. 14:39. doi: 10.3389/fnhum.2020.00039

Dai, B., Chen, C., Long, Y., Zheng, L., Zhao, H., Bai, X., et al. (2018). Neural mechanisms for selectively tuning in to the target speaker in a naturalistic noisy situation. Nat. Commun. 9:2405. doi: 10.1038/s41467-018-04819-z

Degé, F., and Schwarzer, G. (2011). The effect of a music program on phonological awareness in preschoolers. Front. Psychol. 2:124. doi: 10.3389/fpsyg.2011.00124

Dikker, S., Wan, L., Davidesco, I., Kaggen, L., Oostrik, M., McClintock, J., et al. (2017). Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Curr. Biol. 27, 1375–1380. doi: 10.1016/j.cub.2017.04.002

Donnay, G. F., Rankin, S. K., Lopez-Gonzalez, M., Jiradejvong, P., and Limb, C. J. (2014). Neural substrates of interactive musical improvisation: an fMRI study of ‘trading fours’ in jazz. PLoS One 9:e88665. doi: 10.1371/journal.pone.0088665

El Haj, M., Fasotti, L., and Allain, P. (2012b). The involuntary nature of music-evoked autobiographical memories in Alzheimer’s disease. Conscious. Cogn. 21, 238–246. doi: 10.1016/j.concog.2011.12.005

El Haj, M., Postal, V., and Allain, P. (2012a). Music enhances autobiographical memory in mild Alzheimer’s disease. Educ. Gerontol. 38, 30–41. doi: 10.1080/03601277.2010.515897

Farbood, M. M., Heeger, D. J., Marcus, G., Hasson, U., and Lerner, Y. (2015). The neural processing of hierarchical structure in music and speech at different timescales. Front. Neurosci. 9:157. doi: 10.3389/fnins.2015.00157

François, C., Chobert, J., Besson, M., and Schön, D. (2013). Music training for the development of speech segmentation. Cereb. Cortex 23, 2038–2043. doi: 10.1093/cercor/bhs180

Goupil, L., and Aucouturier, J.-J. (2021). Distinct signatures of subjective confidence and objective accuracy in speech prosody. Cognition 212:104661. doi: 10.1016/j.cognition.2021.104661

Goupil, L., Saint-Germier, P., Rouvier, G., Schwarz, D., and Canonne, C. (2020). Musical coordination in a large group without plans nor leaders. Sci. Rep. 10:20377. doi: 10.1038/s41598-020-77263-z

Huth, A. G., De Heer, W. A., Griffiths, T. L., Theunissen, F. E., and Gallant, J. L. (2016). Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532, 453–458. doi: 10.1038/nature17637

Jiang, J., Dai, B., Peng, D., Zhu, C., Liu, L., and Lu, C. (2012). Neural synchronization during face-to-face communication. J. Neurosci. 32, 16064–16069. doi: 10.1523/JNEUROSCI.2926-12.2012

Juslin, P. N. (2000). Cue utilization in communication of emotion in music performance: relating performance to perception. J. Exp. Psychol. Hum. Percept. Perform. 26, 1797–1812. doi: 10.1037/0096-1523.26.6.1797

Kello, C. T., Dalla Bella, S., Médé, B., and Balasubramaniam, R. (2017). Hierarchical temporal structure in music, speech and animal vocalizations: jazz is like a conversation, humpbacks sing like hermit thrushes. J. R. Soc. Interface 14:20170231. doi: 10.1098/rsif.2017.0231

Kim, J., Wigram, T., and Gold, C. (2009). Emotional, motivational and interpersonal responsiveness of children with autism in improvisational music therapy. Autism 13, 389–409. doi: 10.1177/1362361309105660

Koelsch, S. (2014). Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 15, 170–180. doi: 10.1038/nrn3666

Lerner, Y., Honey, C. J., Silbert, L. J., and Hasson, U. (2011). Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J. Neurosci. 31, 2906–2915. doi: 10.1523/JNEUROSCI.3684-10.2011

Liebermann-Jordanidis, H., Novembre, G., Koch, I., and Keller, P. E. (2021). Simultaneous self-other integration and segregation support real-time interpersonal coordination in a musical joint action task. Acta Psychol. 218:103348. doi: 10.1016/j.actpsy.2021.103348

Limb, C. J., and Braun, A. R. (2008). Neural substrates of spontaneous musical performance: an fMRI study of jazz improvisation. PLoS One 3:e1679. doi: 10.1371/journal.pone.0001679

Lindenberger, U., Li, S.-C., Gruber, W., and Müller, V. (2009). Brains swinging in concert: cortical phase synchronization while playing guitar. BMC Neurosci. 10:22. doi: 10.1186/1471-2202-10-22

Loehr, J. D., Kourtis, D., Vesper, C., Sebanz, N., and Knoblich, G. (2013). Monitoring individual and joint action outcomes in duet music performance. J. Cogn. Neurosci. 25, 1049–1061. doi: 10.1162/jocn_a_00388

MacDonald, J., and Wilbiks, J. M. P. (2021). Undergraduate students with musical training report less conflict in interpersonal relationships. Psychol. Music 50, 1091–1106. doi: 10.1177/03057356211030985

Mayer-Benarous, H., Benarous, X., Vonthron, F., and Cohen, D. (2021). Music therapy for children with autistic spectrum disorder and/or other neurodevelopmental disorders: a systematic review. Front. Psych. 12:643234. doi: 10.3389/fpsyt.2021.643234

McDermott, O., Orrell, M., and Ridder, H. M. (2014). The importance of music for people with dementia: the perspectives of people with dementia, family carers, staff and music therapists. Aging Ment. Health 18, 706–716. doi: 10.1080/13607863.2013.875124

Mehr, S. A., Krasnow, M. M., Bryant, G. A., and Hagen, E. H. (2021). Origins of music in credible signaling. Behav. Brain Sci. 44:e60. doi: 10.1017/S0140525X20000345

Meshulam, M., Hasenfratz, L., Hillman, H., Liu, Y. F., Nguyen, M., Norman, K. A., et al. (2021). Neural alignment predicts learning outcomes in students taking an introduction to computer science course. Nat. Commun. 12:1922. doi: 10.1038/s41467-021-22202-3

Montague, P. R., Berns, G. S., Cohen, J. D., McClure, S. M., Pagnoni, G., Dhamala, M., et al. (2002). Hyperscanning: simultaneous fMRI during linked social interactions. NeuroImage 16, 1159–1164. doi: 10.1006/nimg.2002.1150

Müller, V., Sänger, J., and Lindenberger, U. (2013). Intra- and inter-brain synchronization during musical improvisation on the guitar. PLoS One 8:e73852. doi: 10.1371/journal.pone.0073852

Murnighan, J. K., and Conlon, D. E. (1991). The dynamics of intense work groups: a study of British string quartets. Adm. Sci. Q. 36, 165–186. doi: 10.2307/2393352

Nordström, H., and Laukka, P. (2019). The time course of emotion recognition in speech and music. J. Acoust. Soc. Am. 145, 3058–3074. doi: 10.1121/1.5108601

Novembre, G., Sammler, D., and Keller, P. E. (2016). Neural alpha oscillations index the balance between self-other integration and segregation in real-time joint action. Neuropsychologia 89, 414–425. doi: 10.1016/j.neuropsychologia.2016.07.027

Ong, W. J. (1977). African talking drums and oral noetics. New Lit. Hist. 8, 411–429. doi: 10.2307/468293

Osaka, N., Minamoto, T., Yaoi, K., Azuma, M., Shimada, Y. M., and Osaka, M. (2015). How two brains make one synchronized mind in the inferior frontal cortex: fNIRS-based hyperscanning during cooperative singing. Front. Psychol. 6:1811. doi: 10.3389/fpsyg.2015.01811

Pan, Y., Novembre, G., Song, B., Li, X., and Hu, Y. (2018). Interpersonal synchronization of inferior frontal cortices tracks social interactive learning of a song. NeuroImage 183, 280–290. doi: 10.1016/j.neuroimage.2018.08.005

Paquette, S., Takerkart, S., Saget, S., Peretz, I., and Belin, P. (2018). Cross-classification of musical and vocal emotions in the auditory cortex. Ann. N. Y. Acad. Sci. 1423, 329–337. doi: 10.1111/nyas.13666

Parkinson, B. (2011). Interpersonal emotion transfer: contagion and social appraisal. Soc. Personal. Psychol. Compass 5, 428–439. doi: 10.1111/j.1751-9004.2011.00365.x

Patel, A. D., and von Rueden, C. (2021). Where they sing solo: accounting for cross-cultural variation in collective music-making in theories of music evolution. Behav. Brain Sci. 44:e85. doi: 10.1017/S0140525X20001089

Patscheke, H., Degé, F., and Schwarzer, G. (2016). The effects of training in music and phonological skills on phonological awareness in 4- to 6-year-old children of immigrant families. Front. Psychol. 7:1647. doi: 10.3389/fpsyg.2016.01647

Peretz, I., Vuvan, D., Lagrois, M.-É., and Armony, J. L. (2015). Neural overlap in processing music and speech. Philos. Trans. R. Soc. B 370:20140090. doi: 10.1098/rstb.2014.0090

Phillips-Silver, J., and Keller, P. (2012). Searching for roots of entrainment and joint action in early musical interactions. Front. Hum. Neurosci. 6:26. doi: 10.3389/fnhum.2012.00026

Redcay, E., and Schilbach, L. (2019). Using second-person neuroscience to elucidate the mechanisms of social interaction. Nat. Rev. Neurosci. 20, 495–505. doi: 10.1038/s41583-019-0179-4

Rizzolatti, G., and Craighero, L. (2004). The mirror-neuron system. Annu. Rev. Neurosci. 27, 169–192. doi: 10.1146/annurev.neuro.27.070203.144230

Robledo, J. P., Hawkins, S., Cornejo, C., Cross, I., Party, D., and Hurtado, E. (2021). Musical improvisation enhances interpersonal coordination in subsequent conversation: motor and speech evidence. PLoS One 16:e0250166. doi: 10.1371/journal.pone.0250166

Rojiani, R., Zhang, X., Noah, A., and Hirsch, J. (2018). Communication of emotion via drumming: dual-brain imaging with functional near-infrared spectroscopy. Soc. Cogn. Affect. Neurosci. 13, 1047–1057. doi: 10.1093/scan/nsy076

Sachs, M. E., Ellis, R. J., Schlaug, G., and Loui, P. (2016). Brain connectivity reflects human aesthetic responses to music. Soc. Cogn. Affect. Neurosci. 11, 884–891. doi: 10.1093/scan/nsw009

Samadani, A., Kim, S., Moon, J., Kang, K., and Chau, T. (2021). Neurophysiological synchrony between children with severe physical disabilities and their parents during music therapy. Front. Neurosci. 15:531915. doi: 10.3389/fnins.2021.531915

Sänger, J., Müller, V., and Lindenberger, U. (2012). Intra- and interbrain synchronization and network properties when playing guitar in duets. Front. Hum. Neurosci. 6:312. doi: 10.3389/fnhum.2012.00312

Sänger, J., Müller, V., and Lindenberger, U. (2013). Directionality in hyperbrain networks discriminates between leaders and followers in guitar duets. Front. Hum. Neurosci. 7:234. doi: 10.3389/fnhum.2013.00234

Savage, P. E., Loui, P., Tarr, B., Schachner, A., Glowacki, L., Mithen, S., et al. (2021). Music as a coevolved system for social bonding. Behav. Brain Sci. 44:e59. doi: 10.1017/S0140525X20000333

Schall, A., Haberstroh, J., and Pantel, J. (2014). Time series analysis of individual music therapy in dementia. GeroPsych. Available at: https://econtent.hogrefe.com/doi/10.1024/1662-9647/a000123

Schubert, T. W., Zickfeld, J. H., Seibt, B., and Fiske, A. P. (2018). Moment-to-moment changes in feeling moved match changes in closeness, tears, goosebumps, and warmth: time series analyses. Cognit. Emot. 32, 174–184. doi: 10.1080/02699931.2016.1268998

Schultz, B. G., and Palmer, C. (2019). The roles of musical expertise and sensory feedback in beat keeping and joint action. Psychol. Res. 83, 419–431. doi: 10.1007/s00426-019-01156-8

Seddon, F. A., and Biasutti, M. (2009). Modes of communication between members of a string quartet. Small Group Res. 40, 115–137. doi: 10.1177/1046496408329277

Sharda, M., Tuerk, C., Chowdhury, R., Jamey, K., Foster, N., Custo-Blanch, M., et al. (2018). Music improves social communication and auditory–motor connectivity in children with autism. Transl. Psychiatry 8:231. doi: 10.1038/s41398-018-0287-3

Stephens, G. J., Silbert, L. J., and Hasson, U. (2010). Speaker-listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U. S. A. 107, 14425–14430. doi: 10.1073/pnas.1008662107

Strait, D. L., Parbery-Clark, A., O’Connell, S., and Kraus, N. (2013). Biological impact of preschool music classes on processing speech in noise. Dev. Cogn. Neurosci. 6, 51–60. doi: 10.1016/j.dcn.2013.06.003

Thompson, G. (2012). Family-centered music therapy in the home environment: promoting interpersonal engagement between children with autism spectrum disorder and their parents. Music. Ther. Perspect. 30, 109–116. doi: 10.1093/mtp/30.2.109

Tierney, A. T., Krizman, J., and Kraus, N. (2015). Music training alters the course of adolescent auditory development. Proc. Natl. Acad. Sci. U. S. A. 112, 10062–10067. doi: 10.1073/pnas.1505114112

Trehub, S. E., Plantinga, J., and Russo, F. A. (2016). Maternal vocal interactions with infants: reciprocal visual influences. Soc. Dev. 25, 665–683. doi: 10.1111/sode.12164

Vanzella, P., Balardin, J. B., Furucho, R. A., Zimeo Morais, G. A., Braun Janzen, T., Sammler, D., et al. (2019). fNIRS responses in professional violinists while playing duets: evidence for distinct leader and follower roles at the brain level. Front. Psychol. 10:164. doi: 10.3389/fpsyg.2019.00164

Volpe, G., D’Ausilio, A., Badino, L., Camurri, A., and Fadiga, L. (2016). Measuring social interaction in music ensembles. Philos. Trans. R. Soc. B Biol. Sci. 371:20150377. doi: 10.1098/rstb.2015.0377

Vuoskoski, J. K., Zickfeld, J. H., Alluri, V., Moorthigari, V., and Seibt, B. (2022). Feeling moved by music: investigating continuous ratings and acoustic correlates. PLoS One 17:e0261151. doi: 10.1371/journal.pone.0261151

Wing, A. M., Endo, S., Bradbury, A., and Vorberg, D. (2014). Optimal feedback correction in string quartet synchronization. J. R. Soc. Interface 11:20131125. doi: 10.1098/rsif.2013.1125

Keywords: music, communication, naturalistic, neuroimaging, joint action, social

Citation: Izen SC, Cassano-Coleman RY and Piazza EA (2023) Music as a window into real-world communication. Front. Psychol. 14:1012839. doi: 10.3389/fpsyg.2023.1012839

Edited by:

Yafeng Pan, Zhejiang University, China

Reviewed by:

Chris Kello, University of California, Merced, United States
Isabella Poggi, Roma Tre University, Italy

Copyright © 2023 Izen, Cassano-Coleman and Piazza. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sarah C. Izen, sarahizen@gmail.com
