- Department of Psychology, University of Notre Dame, Notre Dame, IN, United States

Introduction: Artificial Intelligence (AI) has the capability to create visual images with minimal human input, a technology that is being applied to many areas of daily life. However, the products of AI are consistently judged to be worse than human-created art, even when comparable in quality. The purpose of this study is to determine whether explicit cognitive bias against AI is related to implicit perceptual mechanisms active while viewing art.

Methods: Participants’ eye movements were recorded while they viewed religious art, a notably human domain meant to maximize potential bias against AI. Participants (n = 92) viewed 24 pieces of biblically inspired religious art created by the AI tool DALL-E 2. Participants in the control group were told prior to viewing that the pieces were created by art students, while participants in the experimental group were told the pieces were created by AI. Participants were surveyed after viewing to ascertain their opinions on the quality and artistic merit of the pieces.

Results: Participants’ gaze patterns (fixation counts, fixation durations, fixation dispersion, saccade amplitude, blink rate, saccade peak velocity, and pupil size) did not differ based on who they believed created the pieces, but their subjective opinions of the pieces were significantly more positive when they believed pieces were created by humans as opposed to AI.

Discussion: This study did not obtain any evidence that a person’s explicit “valuation” of artworks modulates the pace or spatial extent of visual exploration, or the cognitive effort expended to develop an understanding of them.

Introduction

How does knowing how something was created influence cognition and behavior? Specifically, to what degree do people treat things differently if they think they were created by a person or a machine? The main aim of the current project was to assess how the attribution of an artwork to a person or a computer-based artificial intelligence influences not only people’s opinions about it, but also how they actively perceive it. We additionally asked if any such perceptual changes vary as a function of observer attitudes toward art and artificial intelligence.

Artificial intelligence (AI) is a rapidly growing set of technologies that enable computers to emulate many aspects of human perception, learning, comprehension, problem-solving, and decision making. Hence, these technologies hold enormous potential to transform many aspects of daily life. However, public opinion of the growing incorporation of AI technologies in business (e.g., customer service, inventory management, supply chain operations), finance (e.g., market forecasting, fraud detection), education (e.g., adaptive learning platforms, intelligent tutoring systems), and healthcare (e.g., preventative care, medical diagnosis) is mixed. For example, a 2023 Pew Research Center survey found that 52% of Americans feel more concern than excitement about developments in AI, an increase from 37% in 2021 (Pew Research Center, 2023a). Moreover, Pew found that people want to see more regulation and oversight of AI technologies. Specifically, 67% do not think the government will go far enough to regulate the use of chatbots, 75% think health care providers are moving too fast incorporating AI into their practices, and 87% want driverless vehicles to be held to a higher standard of safety testing (Pew Research Center, 2023b). Indeed, legal scholars have argued that the “race to AI” must be accompanied by a “race to AI regulation” (e.g., Smuha, 2021).

Public perception of AI also tends to be negative in areas of creative expression (e.g., Castelo et al., 2019; Köbis and Mossink, 2021; Pew Research Center, 2023c; Schepman and Rodway, 2020). The recent development of generative AI technologies has given computers the ability to create audio, text, and image content. Generative AI therefore stands to redefine creative processes that have, until now, been uniquely human. Human-created literature, film, music, dance, and art are often thought to convey the creator’s ideas, emotions, and deliberative intention to impact the viewer in some manner (e.g., Bullot and Reber, 2013; Jucker et al., 2014; Pignocchi, 2014). The arts are a way to express thoughts and communicate with others, often without words. They are valued not just for aesthetic pleasure but also the creator’s talent and originality in thought or approach. In contrast, we do not perceive generative AI as being capable of reflecting on the human condition, feeling or expressing emotions, honing talent over years of work, or developing new ways of thinking. Thus, recent cases where award-winning creative works were revealed to be AI-generated (or generated with AI assistance) have sparked extensive criticism and debate on social media platforms (see, e.g., Boris Eldagsen’s image “The Electrician” taking first place at the 2023 Sony World Photography Awards and Rie Kudan’s novel “Tokyo-to Dojo-to” winning the 2023 Akutagawa Prize).

In the visual arts, artworks attributed to an AI (or created with significant assistance from digital technologies) are generally rated lower than art attributed to a human creator in terms of aesthetics, liking, quality, novelty, meaning, and collection/purchase intention (Chamberlain et al., 2018; Chiarella et al., 2022; Gangadharbatla, 2021; Gu and Li, 2022; Hong and Curran, 2019; Kirk et al., 2009; Ragot et al., 2020; Xu and Hsu, 2020), although the magnitude of the effect can vary. For example, the bias is stronger among experts (Gu and Li, 2022), reduced when considering abstract art (Gangadharbatla, 2021), and eliminated in some viewing contexts (Chiarella et al., 2022). Regardless, within the general population, people often hesitate to call AI an “artist” or its creations “art” (Hong, 2018; Lyu et al., 2022; Mikalonytė and Kneer, 2022) and the Pew Research Center has found that while over half of Americans view AI as a major advance in fields such as medicine (59%), agriculture (54%), and meteorology (50%), fewer than a third (31%) consider it to be a major advance in art (Pew Research Center, 2023c).

While negative explicit attitudes toward AI-generated art are well documented, much less research has considered the degree to which implicit measures of bias (i.e., those that could detect a person’s unconscious reactions to visual input) are affected by artist attribution during encounters with artworks, and existing work provides mixed results. For example, brain activity in the entorhinal cortex, temporal pole, and primary visual cortex is greater when observers view artworks attributed to humans compared to AI, irrespective of their explicit ratings of aesthetic value (Kirk et al., 2009). However, implicit measures of psychophysiological activation, such as electrodermal activity and heart rate, have been shown to be independent of artistic attribution (Chiarella et al., 2022). Here, we ask if people’s explicit, conscious, negative biases toward AI-generated art are mirrored by implicit, unconscious shifts in how they look at that art.

When viewing artworks (or any other visual stimulus), people continuously reorient their gaze from place to place. The resulting sequences of fixations (periods of time where the eyes are relatively stationary and high-fidelity foveal vision is used to accumulate visual detail from a relatively small area of a display) and saccades (ballistic eye movements that shift the point of gaze from one place to another) are not random, but arise from the real-time information processing priorities of the visual system. These priorities, and hence the eye movement behaviors exhibited while viewing art, can change as a function of image content (e.g., DiPaola et al., 2013; Fuchs et al., 2011; Massaro et al., 2012; Quiroga and Pedreira, 2011) and style (e.g., Pihko et al., 2011; Uusitalo et al., 2009), as well as the observer’s goals (Sharvashidze and Schütz, 2020), artistic preferences (e.g., Alvarez et al., 2015; Mitschke et al., 2017; Plumhoff and Schirillo, 2009), knowledge (Bubic et al., 2017), artistic expertise (e.g., Francuz et al., 2018; Lin and Yao, 2018; Koide et al., 2015; Pihko et al., 2011; Vogt and Magnussen, 2007), and perceived challenge in understanding an artwork (e.g., Ganczarek et al., 2020).

Recently, Zhou and Kawabata (2023) provided the first evidence that viewers’ beliefs about the source of an artwork (human or AI) may be associated with their viewing behavior. In their study, participants looked at 40 artworks, half made by humans and half made by generative AI. Participants were not told how each artwork was created; instead, they were asked to categorize the works themselves based on their own intuitions about their origin. The authors found that participants’ ability to discriminate between human- and AI-generated artworks was poor, but when they believed an artwork was made by a human artist they looked at it longer. This result suggested to them that explicit beliefs can also manifest as implicit shifts in viewing behavior, although they acknowledged that multiple mechanisms could be responsible for those shifts and cautioned against generalization of their findings to other image sets and viewing contexts. Our study is therefore an attempt to conceptually replicate and extensively extend Zhou and Kawabata’s investigation to test the generalizability of their conclusions and to more fully characterize the potential associations between explicit attitudes towards particular artworks and how they are implicitly viewed. As discussed in more detail below, this included the inter-relationships between explicit attitudes, temporal and spatial measures of gaze control, changes in gaze behavior over time, implicit measures of engagement and mental effort, and a variety of individual differences.

Numerous eye movement variables can be measured and related to cognitive processing of visual information. Here, we focused on gaze measures that are considered content-independent because they can be obtained without reference to the visual input itself. We concentrated on such measures for two reasons. First, they reflect general processing speed and overall implicit information gathering strategies rather than local or idiosyncratic shifts in viewing based on specific stimulus content. Second, they are more likely to generalize across stimuli, tasks, and viewing contexts than content-dependent measures (e.g., visual salience and semantic content at fixation). As such, they could potentially constitute robust indicators of implicit bias with respect to artist attribution.

The “pace” with which visual information is acquired is revealed by temporal indices of gaze control such as the number of fixations and the durations of those fixations. Longer, and thus fewer, fixations tend to be associated with more difficult or more complex visual processing as more time is needed to understand the fixated information (see Henderson, 2007 for a review). The spatial extent of visual sampling can be measured by saccade amplitude (the distance between consecutive fixations) and fixation dispersion (a measure of the spread of fixation points). Shifts in spatial aspects of gaze may reflect changes in viewing strategies. For example, observers may adopt a more “exploitative” strategy in which visual processing is concentrated on a few areas or a more “exploratory” strategy where processing is more diffusely spread across many locations (e.g., Gameiro et al., 2017; Hills et al., 2015). Additionally, gaze can be used as an implicit measure of viewer engagement with, and effort applied to, visually-based tasks. Blink rates decrease when observers are looking at content that they think is important or relevant (e.g., Ranti et al., 2020); the peak velocity of saccades is inversely related to task complexity (e.g., Di Stasi et al., 2013); and, pupil size is positively correlated with the cognitive resources needed to complete a task (e.g., Kahneman and Beatty, 1966).

In the current study, we had people view a set of 24 artworks that depicted people and events described in the Bible. To control for any actual differences that might exist between AI- and human-created art, all these artworks were created using the generative AI program DALL-E 2. Thus, none of the participants had prior experience with the pieces. In a between-subjects manipulation, half of participants were led to believe that the artworks were created by humans and the other half were told they were created by AI. Our choice to use artworks that portray religious and sacred stories stemmed from a desire to maximize the dichotomy between humans and AI. While humans are capable of experiencing spirituality, faith, and a belief in the divine, machines are not. Hence, divergence in viewers’ perceptions of the intentionality of human and AI creators (a key aspect of art appreciation, see, e.g., Bullot and Reber, 2013) should be highlighted in the context of sacred art. Additionally, religious contexts may also evoke notions of morality, which people are less likely to entrust to AI (Gogoll and Uhl, 2018; Zhang et al., 2022).

Based on prior work discussed above, we expected participants in the AI group to have more explicit negative attitudes towards, opinions of, and reactions to the artworks. Our goal was to determine if these differences in attitudes are accompanied by shifts in the pace of visual processing (fixation count and duration), the extent of spatial exploration (saccade amplitude and fixation dispersion), and the exertion of effort (blink count, peak saccade velocity, and pupil size) during viewing. If people “discount” or “devalue” AI-generated artworks, and this affects viewing behavior, we may observe shifts in gaze-based hallmarks of greater visual and cognitive engagement. For example, art thought to be created by humans may elicit fewer fixations, longer fixation durations, greater fixation dispersion, fewer blinks, higher peak velocities, and/or larger pupil size. It is, however, important to recognize that such shifts may develop over time as people view artworks. As people view art, they transition from an initial global survey of the piece aimed at understanding its compositional elements and overall gist to a more focal analysis aimed at building a complete conceptual representation of its content (Nodine and Krupinski, 2003). By considering potential changes in eye movements over time, our goal was to determine if artistic attribution has more influence on viewing behavior early (i.e., when aesthetic appreciation is prioritized) and/or late in viewing (i.e., when understanding is prioritized).

In addition to examining the potential impact of artist attribution on viewing behavior, we took the opportunity to also consider whether and how individual differences in participants’ own religiosity, desire for aesthetics, and general attitudes toward AI affect gaze behavior regardless of author attribution. It is possible that people who are more religious (i.e., interested in the content of the artworks used in this study) or are more driven by aesthetics could view the artworks differently than people who are less so. We additionally considered individual differences in people’s general attitudes towards artificial intelligence. Those who are more positive about AI may engage with AI-created artworks differently than those who are more concerned. As with artistic attribution, such individual differences may be observed by changes in spatial, temporal, and/or cognitive aspects of gaze.

Method

Participants

Our minimum sample size was guided by Zhou and Kawabata (2023), who reported negative explicit biases toward art that participants believed to be created by artificial intelligence, as well as correlated shifts in the distribution of fixation durations. Their study included a single sample of 34 participants. Given our 2-group between-subjects design, we set a minimum sample size of 68 participants (34 per group), with a goal to collect data until the conclusion of the Spring 2024 academic term. A final sample of 92 participants was obtained, all of whom were undergraduate students at the University of Notre Dame. Demographic information is reported in the Sample Characteristics subsection of the Results. While we did not measure participants’ level of art experience or expertise, none of them were art, art history, or design majors. Hence, the variability in our sample was likely consistent with that within the non-expert population. All participants were compensated with course credit.

Stimuli

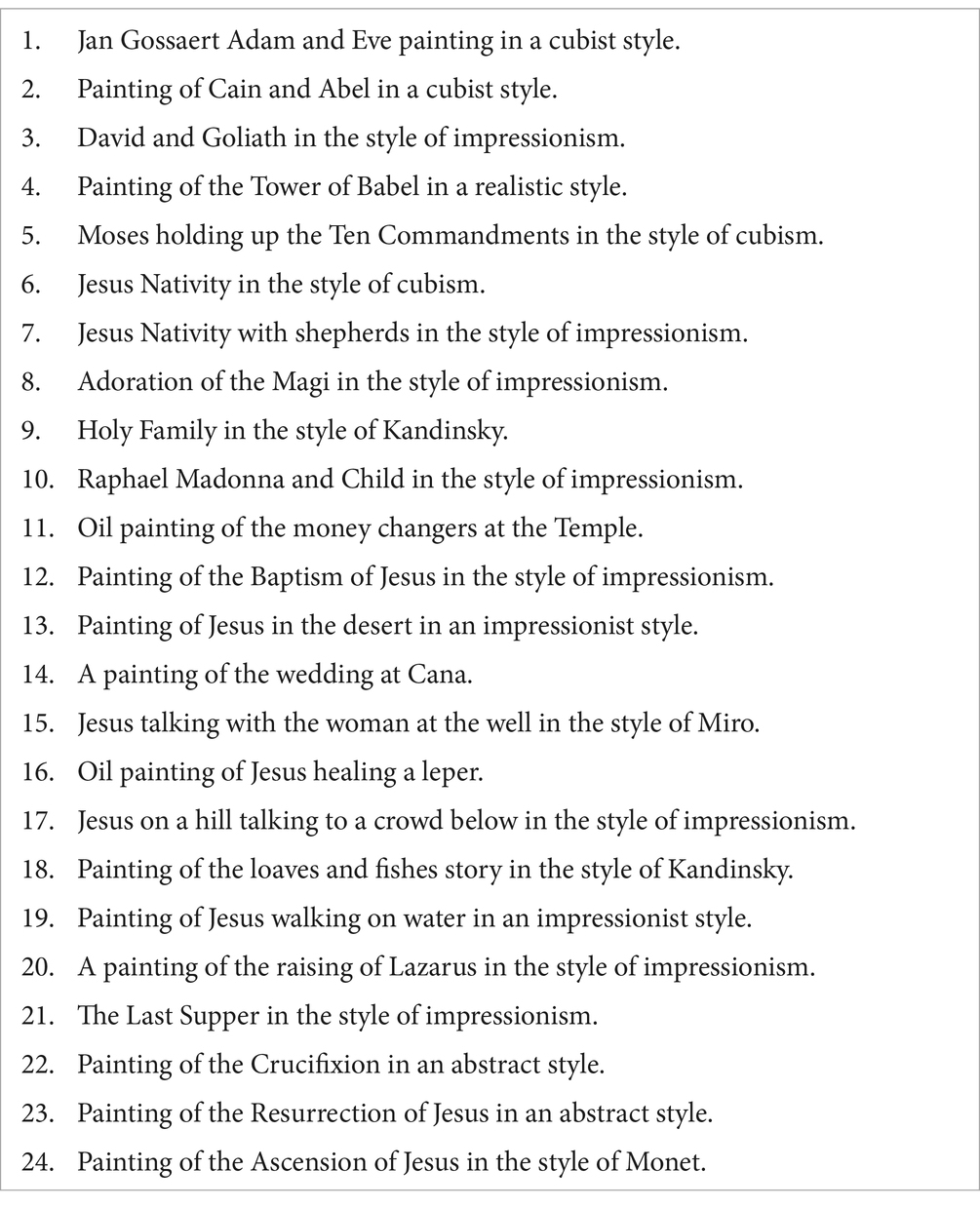

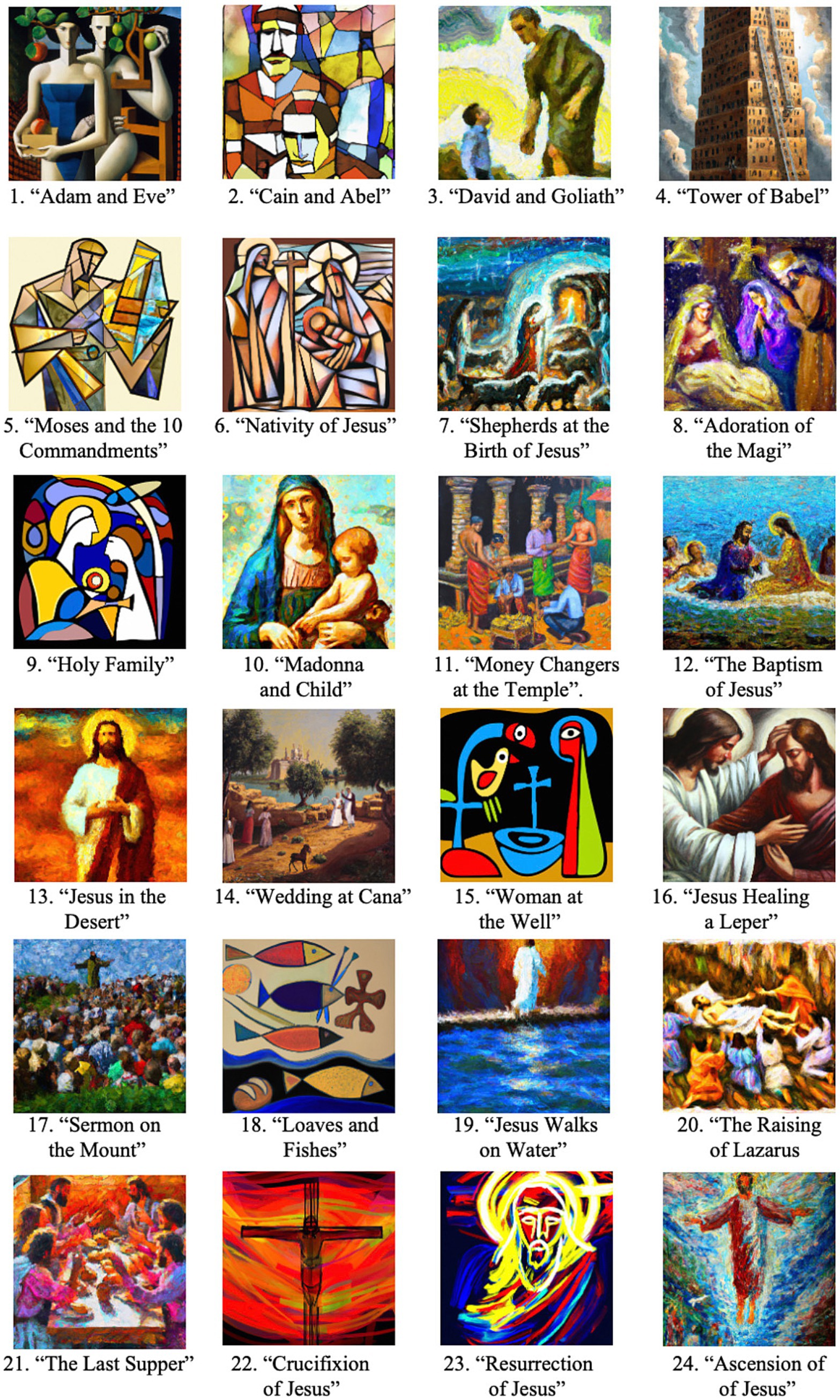

Stimuli consisted of 24 artworks created by DALL-E 2, OpenAI’s natural language visual art generator. Artworks were generated by providing prompts that included a topic and artistic style or artist to emulate. Examples of prompts included “painting of the Tower of Babel in a realistic style” or “painting of the loaves and fishes story in the style of Kandinsky.” Religious topics were Christian in nature, drawn from both the Old and New Testaments of the Bible. Various artistic styles were used, including Impressionism, Cubism, and realism. A full list of prompts is provided in Table 1 and the stimuli are illustrated in Figure 1.

Figure 1. Artworks generated by DALL-E 2 and used in this study. Image numbers correspond to prompts provided in Table 1.

Measures

Eye gaze

Eye position was recorded while participants viewed the artworks (see Apparatus). From these records, several temporal, spatial, and cognitive aspects of eye gaze were computed. Temporal parameters of gaze control included the number of fixations observers made while viewing each artwork and the duration of those fixations.¹ Spatial aspects of gaze included saccade amplitude and fixation dispersion. Saccade amplitude is the distance between consecutive fixations. Fixation dispersion is the root mean square of the Euclidean distance between each fixation point and the average position of all fixations (reported on a 0–1 scale). Gaze measures correlated with viewer engagement and cognitive effort included blink rates, the peak velocity of the eyes reached during a saccade, and pupil size.
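The two spatial measures defined above can be illustrated with a minimal Python sketch. It is not the authors' analysis code. The function names are hypothetical, and the sketch assumes fixation positions are supplied as (x, y) pairs already normalized to the 0–1 range, which is only one way the reported 0–1 scale could arise. Amplitudes are returned in those same units rather than in degrees of visual angle.

```python
import math


def fixation_dispersion(points):
    """Root mean square of the Euclidean distance from each fixation
    to the mean fixation position.

    `points` is a sequence of (x, y) pairs. Normalized 0-1 coordinates
    are an assumption of this sketch, not something the text specifies.
    """
    if not points:
        raise ValueError("at least one fixation is required")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    squared_distances = [(x - mean_x) ** 2 + (y - mean_y) ** 2 for x, y in points]
    return math.sqrt(sum(squared_distances) / n)


def saccade_amplitudes(points):
    """Distance between each pair of consecutive fixations, in input units."""
    return [math.dist(a, b) for a, b in zip(points, points[1:])]


# Hypothetical fixations at the four corners of a unit square.
corners = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
dispersion = fixation_dispersion(corners)
amplitudes = saccade_amplitudes(corners)
```

Tightly clustered fixations yield a dispersion near zero, whereas fixations spread across the image yield larger values.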

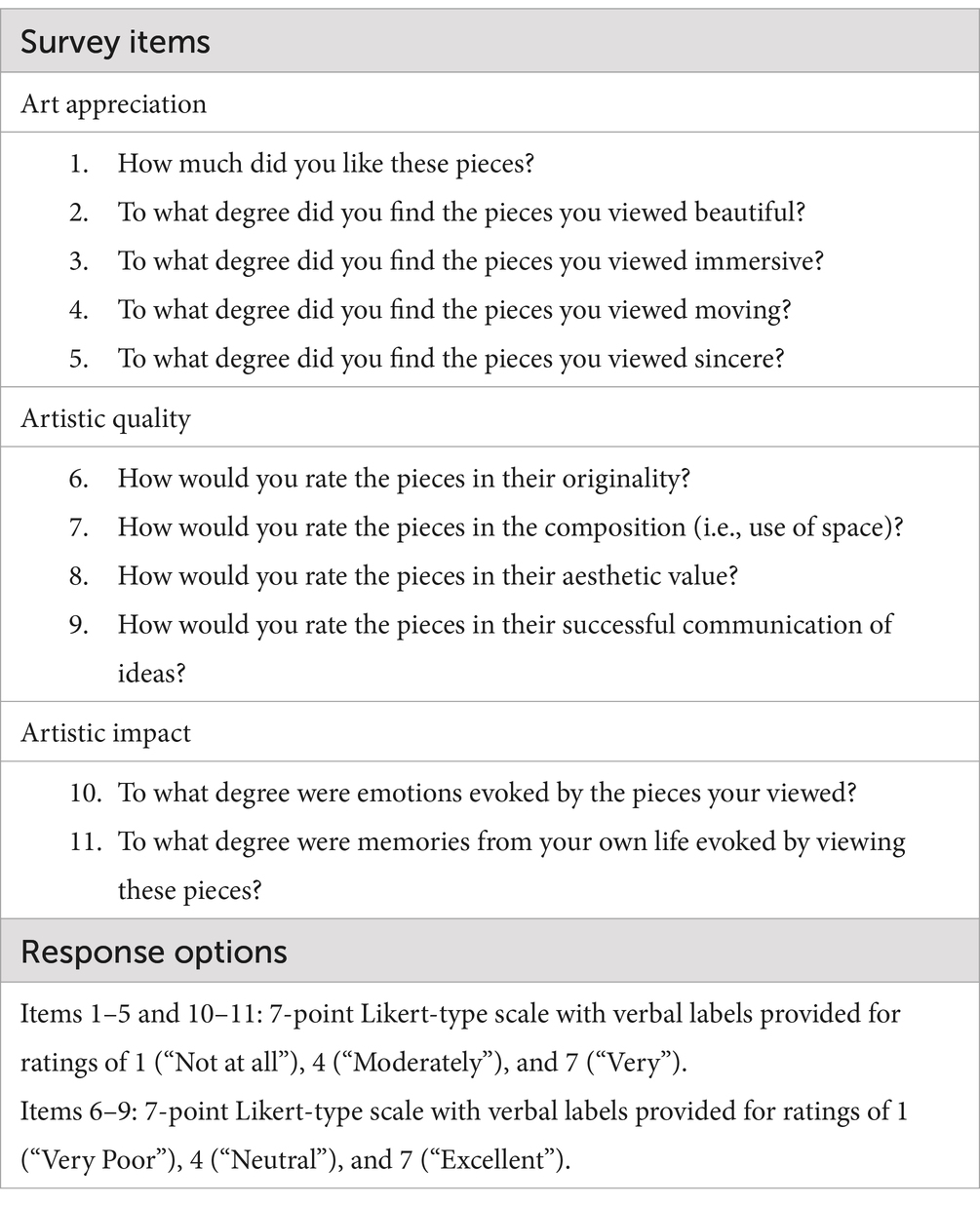

Questionnaires

To assess participants’ attitudes toward the artworks, as well as the cognitive and emotional impacts of the artworks, we created a questionnaire, which, for ease of discussion, we call an artistic impressions survey. This survey is not presented here as a formally constructed scale, but rather as a selective amalgamation of questions drawn from (or closely inspired by) prior works that were themselves devised to understand different aspects of people’s attitudes toward artworks. Some questions were aimed at participants’ overall appreciation of the artworks. They were asked to indicate how much they liked the artworks (see Chamberlain et al., 2018) and how beautiful, immersive, sincere, and moving they found them to be as a whole (see Miller et al., 2024). Other questions pertained to their perception of artistic quality by rating originality, composition, aesthetic value, and communication of ideas (see Hong and Curran, 2019). Finally, some questions assessed the impact the artworks had on participants by asking them to indicate the degree to which emotions and memories were evoked (see Dageforde et al., 2024). All responses were made on a 7-point Likert-type scale. The full text of these questions and the response options are provided in Table 2. For analysis, we derived several scores based on (1) the aggregate of all responses, (2) the responses to items pertaining to art appreciation, (3) the responses to items pertaining to art quality, and (4) the responses to items pertaining to artistic impact (see Results for further details).

Individual differences in attitudes toward aesthetics, religion, and artificial intelligence were measured with three additional questionnaires. The Desire for Aesthetics Scale (Lundy et al., 2010) is a 36-item questionnaire that measures individuals’ motivation to seek out and care about a wide range of aesthetic stimuli (e.g., indicating degree of agreement with statements such as “I often find myself staring in awe at beautiful things.”). The Centrality of Religiosity Scale (Huber and Huber, 2012) measures the importance of religion and religious engagement in a person’s life (e.g., “How often do you experience situations in which you have the feeling that God or something divine intervenes in your life?”). Multiple versions of the questionnaire are available and we elected to use the 20-item interreligious version. The General Attitudes Towards Artificial Intelligence Scale (Schepman and Rodway, 2020) is a 20-item questionnaire that measures the degree to which a person supports, or has concerns regarding, the use of artificial intelligence in daily life. It includes both positive (e.g., indicating degree of agreement with statements such as “Artificially intelligent systems can help people feel happier.”) and negative scales (e.g., indicating degree of agreement with statements such as “Organizations use Artificial Intelligence unethically”).

Apparatus

Artworks were presented on a 22-inch LCD monitor, at a resolution of 725 × 725 pixels on a dark gray 1,024 × 768 pixel background. Questionnaires were administered with Qualtrics on a Microsoft Surface Pro 8 tablet. While viewing the artworks, participants’ eye movements were sampled monocularly at a rate of 1,000 Hz using an EyeLink Portable Duo eyetracker (SR Research Inc.). Viewing distance was constrained with a chin rest positioned 95 cm from the display.

Design and procedure

Participants were randomly assigned to one of two groups based on artistic attribution. The human attribution group (n = 46) was told that they would be viewing images of artworks created by students enrolled in a college course titled “Picturing the Bible” in which students study the ways Christians represented their sacred stories in visual art from the early Christian period to the present day. This cover story was fictitious but believable given our extensive catalog of theology and religion-focused courses coupled with a university requirement that all undergraduate students take courses in theology and/or Catholicism. The AI attribution group (n = 46) was (truthfully) told that they would be viewing religiously themed artworks created by DALL-E 2, an artificial intelligence created by a research and development company called OpenAI that responds to natural language prompts with images.

Participants then viewed the artworks serially while their eye movements were recorded. Each trial began with a “title” for the artwork (i.e., part of the prompt provided to DALL-E 2) shown in the center of the screen for 2 s. Then, the artwork was shown for 10 s. Between trials, participants completed a 1-point calibration to correct for drift in the eye tracker signal. The 24 items were presented in a different random order for each participant, and participants moved from trial to trial at their own pace. Gaze was tracked for the entire viewing phase. After viewing all artworks, participants completed the questionnaires and surveys described above, during which gaze was not tracked.

Results

Sample characteristics

Among participants in our sample, 62 identified as female and 30 as male. The mean age was 19.3 years (SD = 1.08). Sixty-eight identified as Roman Catholic, 12 as a non-Catholic Christian denomination, 2 as another Abrahamic religion (Jewish or Muslim), and 10 as having no religion.

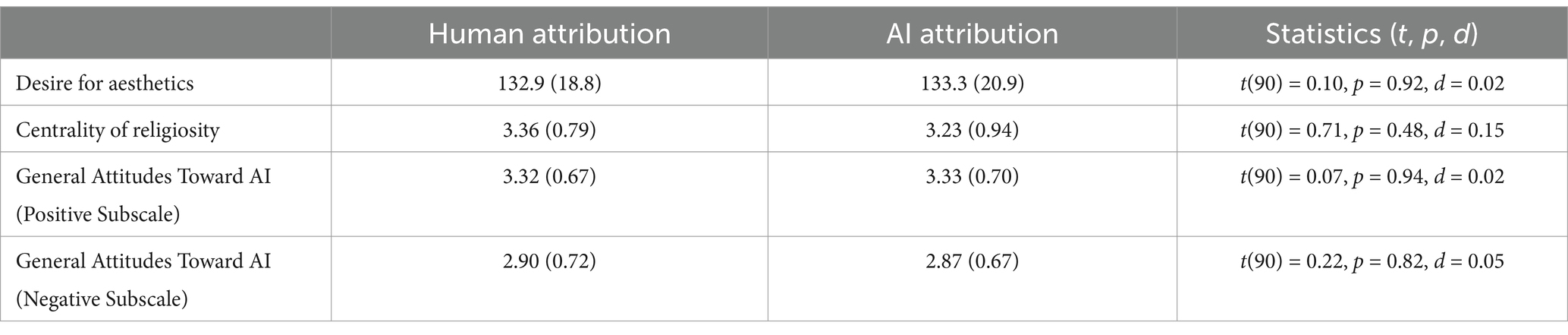

Table 3 reports mean scores and inferential statistics obtained on the Desire for Aesthetics Scale, the Centrality of Religiosity Scale, and the General Attitudes Towards Artificial Intelligence Scale, broken down by attribution group. On the Desire for Aesthetics Scale (Lundy et al., 2010) the minimum possible score is 0 and the maximum is 216. A score of 108 indicates a neutral attitude toward aesthetics; lower scores indicate lesser, and higher scores greater, motivation to appreciate and incorporate aesthetics in daily life. On the Centrality of Religiosity Scale (Huber and Huber, 2012) the minimum possible score is 1 and the maximum is 5. Higher scores indicate religiosity is of greater, or more central, importance to a person. On the General Attitudes Towards Artificial Intelligence Scale (Schepman and Rodway, 2020) the minimum possible score is 1 and the maximum is 5. A score of 3 indicates a neutral attitude toward AI. Higher scores, regardless of subscale, indicate a more positive attitude toward AI. We observed no reliable differences between attribution conditions on any of the scales (all ps > 0.48). Because these scales could not be used to differentiate participant groups, they cannot account for differences in gaze behavior based on creator attribution, should they be observed.

Table 3. Mean scores (with standard deviations) obtained on the desire for aesthetics, centrality of religiosity, and general attitudes toward artificial intelligence scales broken down by attribution.

Artistic attribution and explicit attitudes toward artworks

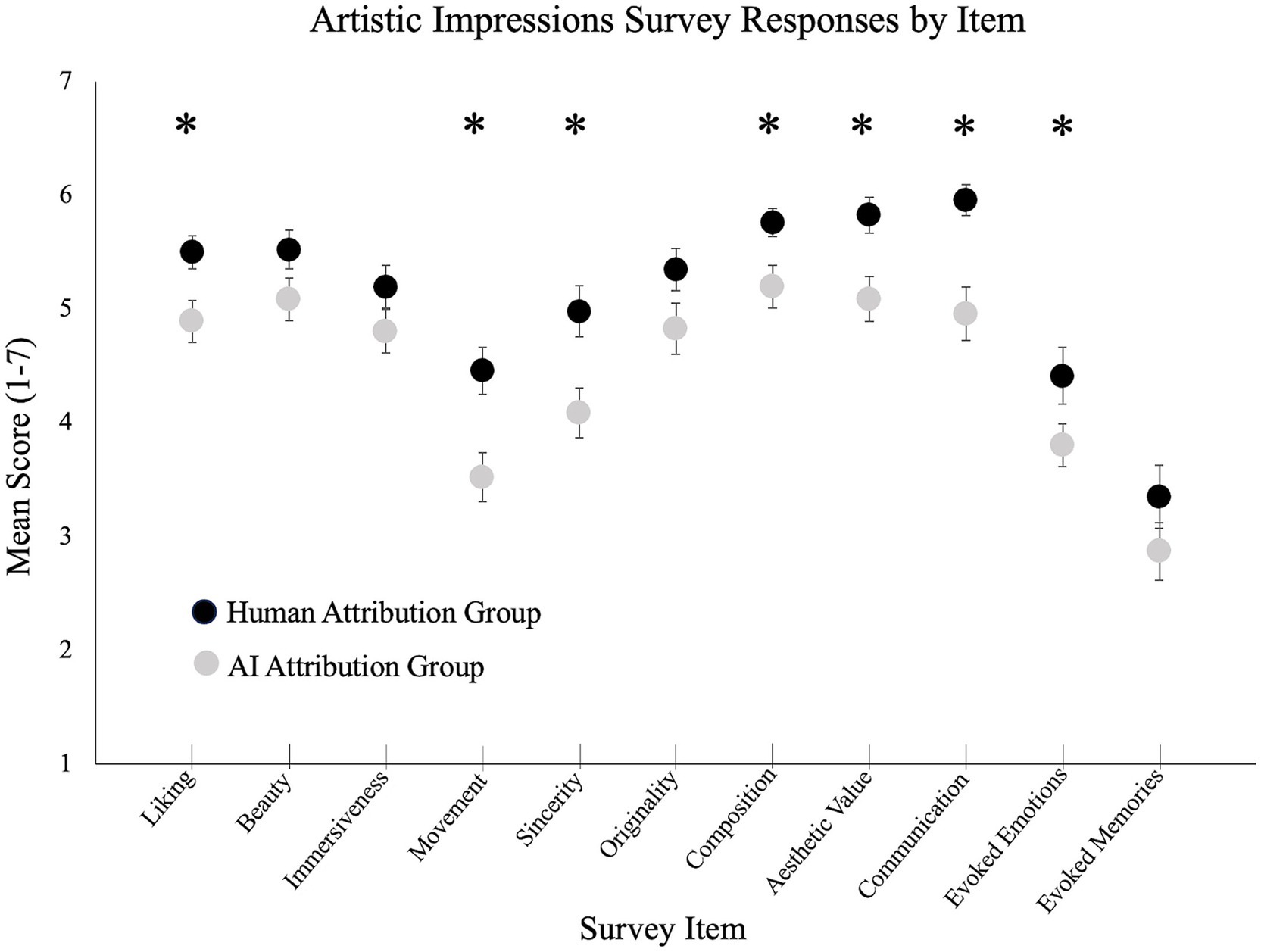

Responses to the individual questions included in our artistic impressions survey are summarized in Figure 2. We analyzed these data in multiple ways. First, we computed an overall impressions score by averaging responses across all questions. This was higher (more favorable) for the human attribution group (M = 5.12, SD = 0.80) than the AI attribution group (M = 4.47, SD = 0.97), t(90) = 3.52, p < 0.001, d = 0.22.
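For readers who wish to see how a between-groups test statistic of this kind relates to the reported group summaries, the following is a minimal sketch of an independent-samples t statistic with a pooled variance estimate. The equal-variance (Student's) form is an assumption of the sketch, chosen because it yields the reported 90 degrees of freedom with 46 participants per group. The function name is hypothetical, and small discrepancies from the published value are expected because the inputs are rounded means and standard deviations.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic using a pooled variance estimate.

    Returns (t, degrees_of_freedom). Assumes equal population variances.
    """
    df = n1 + n2 - 2
    pooled_variance = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_variance * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Overall impressions score: human attribution vs. AI attribution group.
t_value, df = pooled_t(5.12, 0.80, 46, 4.47, 0.97, 46)
print(f"t({df}) = {t_value:.2f}")
```

With the rounded summaries above, the statistic comes out at roughly 3.5 on 90 degrees of freedom, in line with the reported value.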

Figure 2. Mean responses to items included in the artistic impressions survey. Full item descriptions are provided in Table 2. Error bars depict +/− 1 standard error of the mean. Asterisks denote reliable differences between the human- and AI attribution groups (p < 0.05).

Second, we calculated an appreciation subscore by averaging responses to the 5 questions pertaining to participant liking, and to how beautiful, immersive, sincere, and moving they found the set of artworks to be. This subscore was higher for the human attribution group (M = 5.13, SD = 0.94) than the AI attribution group (M = 4.48, SD = 1.13), t(90) = 3.01, p = 0.003, d = 0.22. Within this set of questions, average scores were numerically higher on all items within the human attribution condition, with reliable differences observed for liking (p = 0.012), sincerity (p = 0.006), and how moving the artworks were (p = 0.002). Differences between groups were marginally reliable for ratings of beauty (p = 0.09), and not reliable for judgments of immersiveness (p = 0.15).

Third, we calculated a quality subscore by averaging responses to the 4 questions pertaining to originality, composition, aesthetic value, and communication of ideas. This subscore was higher for the human attribution group (M = 5.72, SD = 0.67) than the AI attribution group (M = 5.02, SD = 1.09), t(90) = 3.75, p < 0.001, d = 0.23. Within this set of questions, average scores were numerically higher in the human attribution group for all items, with reliable differences observed for composition (p = 0.01), aesthetic value (p = 0.004), and communication of ideas (p < 0.001). A marginally reliable difference between groups was observed for ratings of originality (p = 0.08).

Fourth, we calculated an impact subscore by averaging responses to the 2 questions regarding evoked emotions and memories. This subscore was higher in the human attribution group (M = 3.88, SD = 1.54) than the AI attribution group (M = 3.34, SD = 1.24), but this difference was only marginally reliable, t(90) = 1.87, p = 0.07, d = 0.21. Within this set of questions, scores were numerically higher in the human attribution group for both items, with reliable differences observed for emotion (p = 0.05), but not memory (p = 0.21).

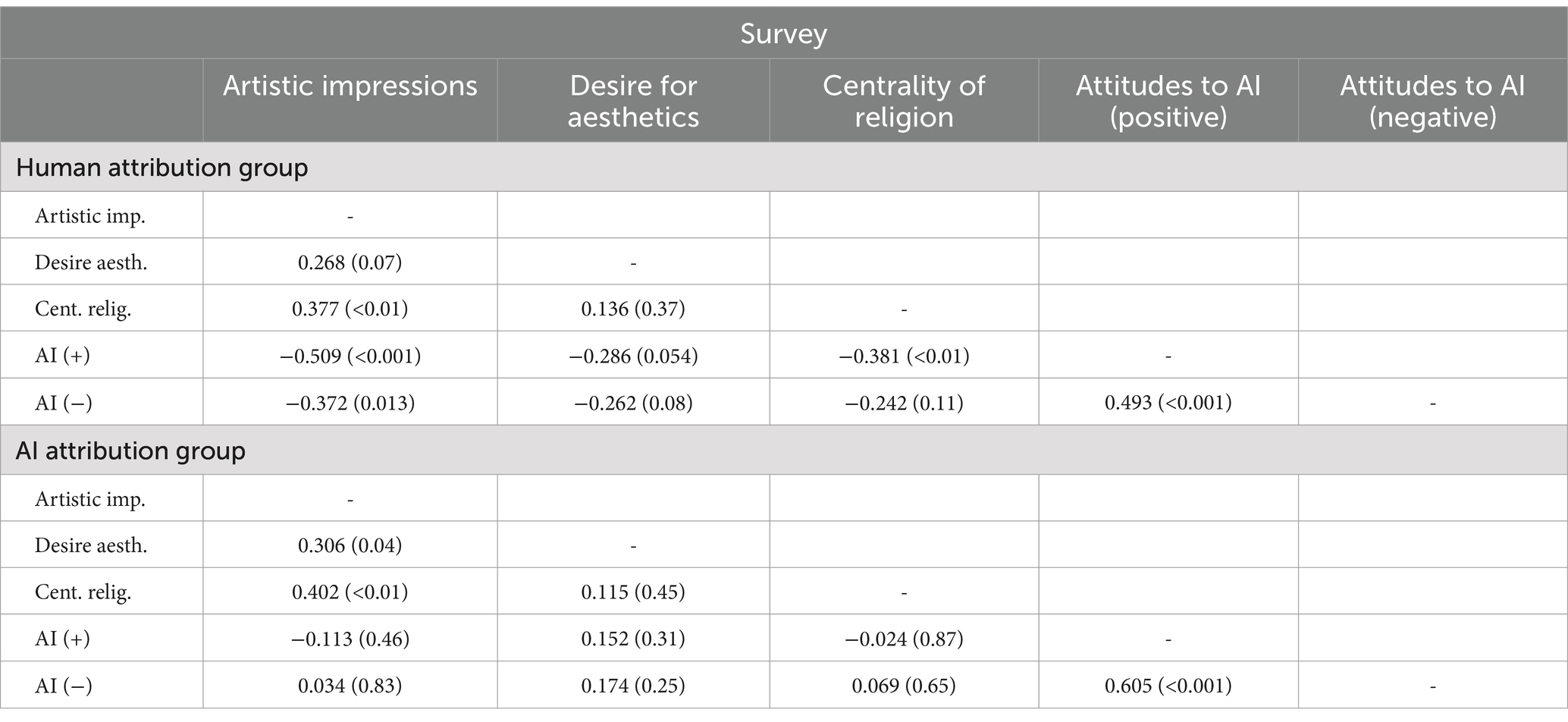

Finally, although we had no a priori hypotheses regarding the relationship between participants’ attitudes towards the artworks, their religiosity, desire for aesthetics, and attitudes towards artificial intelligence, for archival purposes Table 4 reports the correlations among these measures within each attribution group. Across groups, participants who viewed the artworks more favorably tended to more strongly incorporate aesthetics into their lives and report greater religiosity. Interestingly, in the human attribution group, participants who viewed the artworks more positively tended to have a more negative attitude toward artificial intelligence, an effect that was absent in the AI attribution group. Based on this group-level difference, we speculate that the experience of knowingly viewing AI-generated art enabled participants in the AI-attribution group to separate their evaluation of AI-generated art per se from their more general (negative) posture toward artificial intelligence as a technology.

Artistic attribution and gaze behavior

Prior to data analysis, we established several a priori criteria for including gaze-based data in our analyses. Fixations that occurred outside the image borders (1.0%), fixation durations under 50 milliseconds or over 2,000 milliseconds (2.0% of fixations), saccade durations over 200 milliseconds (5.4% of saccades), and saccade amplitudes over 20 degrees (<1% of saccades) were excluded. Following these trims, 62,289 fixation samples (97.1%) and 58,828 saccade samples (94.2%) were included in the analyses.
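The exclusion criteria above amount to a simple filter over parsed fixation and saccade events. The sketch below is illustrative only. The record types, field names, and image bounds are hypothetical, and the bounds assume the 725 × 725 pixel artwork was centered on the 1,024 × 768 pixel background, which the text does not state.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # horizontal gaze position in screen pixels
    y: float            # vertical gaze position in screen pixels
    duration_ms: float


@dataclass
class Saccade:
    duration_ms: float
    amplitude_deg: float


# Assumed image borders (centered artwork); not given in the text.
LEFT, TOP = (1024 - 725) / 2, (768 - 725) / 2
RIGHT, BOTTOM = LEFT + 725, TOP + 725


def keep_fixation(f):
    """Keep fixations inside the image that last between 50 and 2,000 ms."""
    inside = LEFT <= f.x <= RIGHT and TOP <= f.y <= BOTTOM
    return inside and 50 <= f.duration_ms <= 2000


def keep_saccade(s):
    """Keep saccades lasting at most 200 ms and spanning at most 20 degrees."""
    return s.duration_ms <= 200 and s.amplitude_deg <= 20


# Hypothetical events for illustration.
fixations = [Fixation(500, 400, 250), Fixation(500, 400, 40), Fixation(100, 400, 250)]
saccades = [Saccade(40, 5.0), Saccade(250, 5.0), Saccade(40, 25.0)]
kept_fixations = [f for f in fixations if keep_fixation(f)]
kept_saccades = [s for s in saccades if keep_saccade(s)]
```

In this illustration only the first fixation and the first saccade survive the trims.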

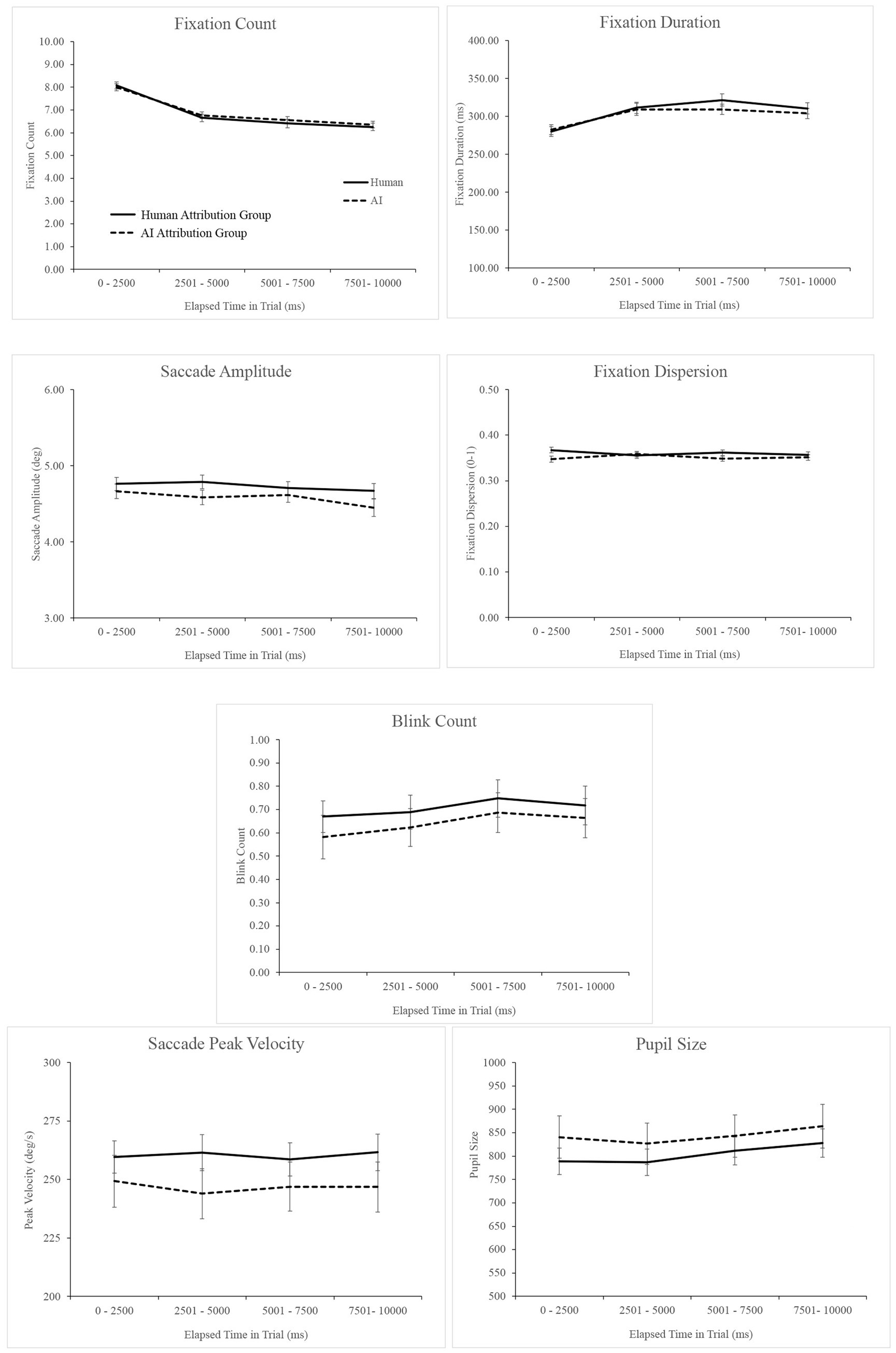

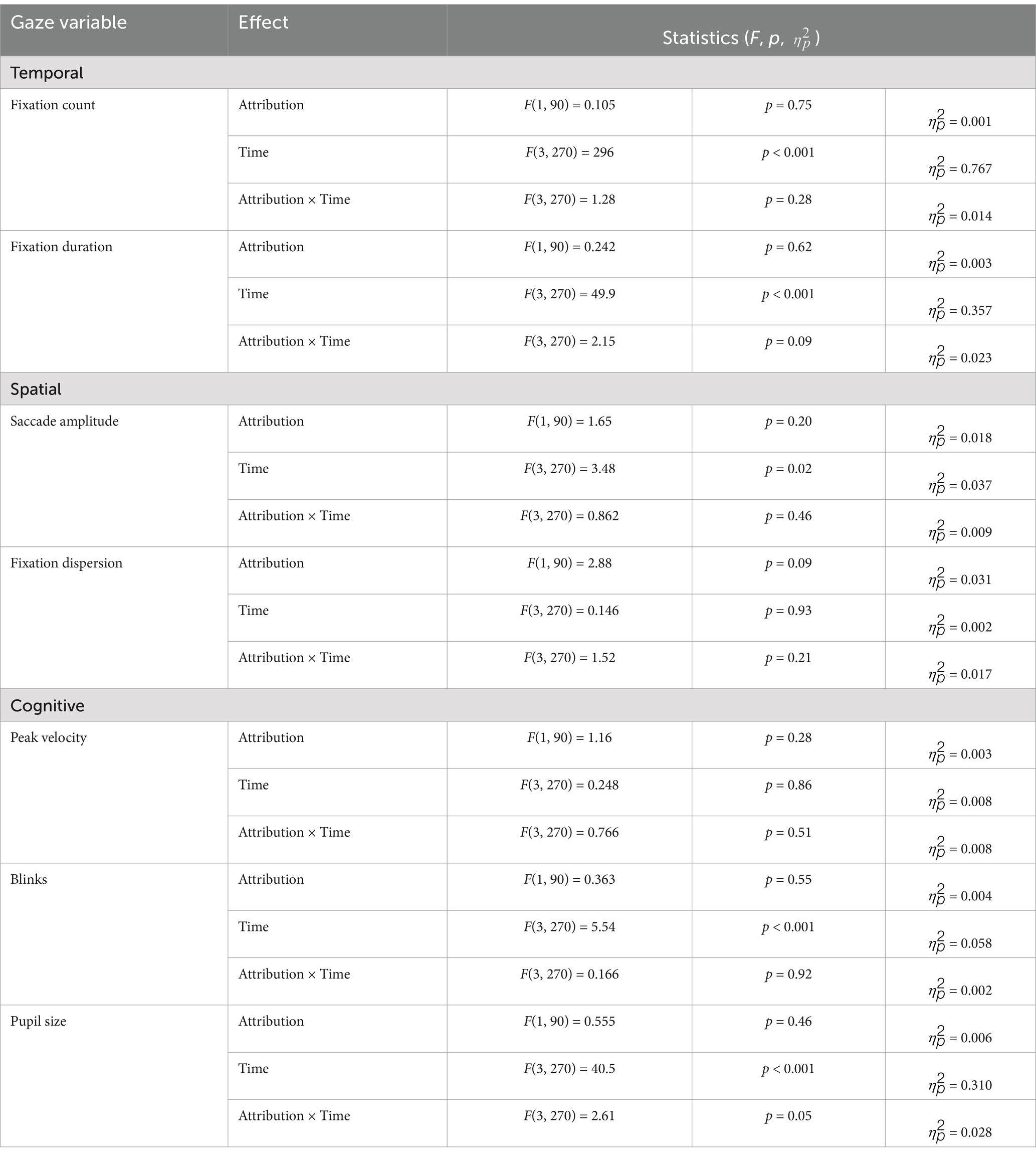

Potential differences in gaze variables based on artistic attribution were assessed over time to determine if such differences emerge or diminish as viewing progresses. Each trial was divided into four 2,500 ms time windows and, within each window, average values for each gaze variable were calculated. For this within-trial analysis, gaze variables were therefore submitted to separate 2 (attribution group) × 4 (time window) mixed model analyses of variance. Data are illustrated in Figure 3 and the inferential statistics obtained from the ANOVA analyses are provided in Table 5. As viewing progressed within a trial, participants made fewer fixations, maintained fixation for longer, executed shorter saccades, and blinked more frequently. These changes over time are typical as viewing strategies shift from more global to more local visual analysis and fatigue increases. Importantly, no main effects or interactions involving art attribution were reliable.²
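The within-trial binning described above can be sketched as follows. The sketch assumes each gaze event is assigned to a window by its onset time relative to artwork onset. The text does not say how events spanning a window boundary were handled, so this rule and the function names are hypothetical.

```python
def window_index(onset_ms, window_ms=2500, n_windows=4):
    """Return the 0-based time window containing an event onset."""
    if not 0 <= onset_ms < window_ms * n_windows:
        raise ValueError("onset falls outside the 10 s viewing period")
    return int(onset_ms // window_ms)


def mean_by_window(events, window_ms=2500, n_windows=4):
    """Average a gaze variable within each time window.

    `events` is a sequence of (onset_ms, value) pairs; windows with no
    events yield None.
    """
    sums = [0.0] * n_windows
    counts = [0] * n_windows
    for onset_ms, value in events:
        i = window_index(onset_ms, window_ms, n_windows)
        sums[i] += value
        counts[i] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]


# Hypothetical fixation durations (ms) paired with their onset times (ms).
durations = [(100, 200.0), (1200, 300.0), (2600, 250.0), (9000, 400.0)]
window_means = mean_by_window(durations)
```

Per-participant window means of this kind would then serve as the cell values for the 2 × 4 mixed-design analysis.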

Figure 3. Gaze variables plotted over elapsed viewing time within trials. Error bars depict +/− 1 standard error of the mean.

Table 5. Summary of main effects and interactions for ANOVA analyses of gaze measures, with art attribution as a between-subjects factor and time bin within trials as a within-subjects factor.

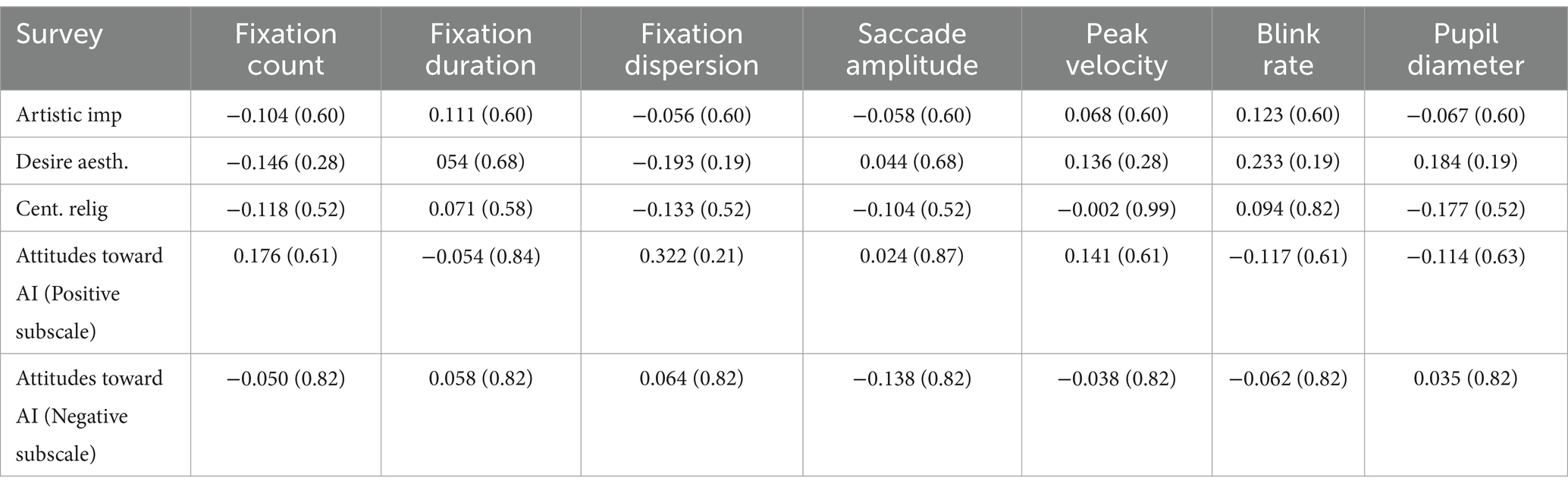

Individual differences and gaze behavior

Scores on the Artistic Impressions, Desire for Aesthetics, Centrality of Religiosity, and General Attitudes Towards Artificial Intelligence Scales were correlated with each gaze variable. For this analysis we collapsed across artistic attribution, time windows within trials, and blocks of trials across the experiment. Our analysis of participants’ attitudes towards AI was restricted to the AI attribution group because this was the only group that was told the artworks were produced by a generative AI. Correlation coefficients and associated p-values are reported in Table 6. Within each scale, p-values were adjusted using the false discovery rate (Benjamini and Hochberg, 1995) to correct for multiple comparisons. None of the calculated correlation coefficients was statistically reliable. Hence, we obtained no evidence that individual differences in the importance of religion and aesthetics in people’s lives altered the way in which they viewed religiously themed artworks. Similarly, we obtained no evidence that people’s attitudes towards AI affect their viewing of AI-generated art.
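The false discovery rate adjustment cited above (Benjamini and Hochberg, 1995) can be sketched in a few lines of Python. This is a generic illustration of the step-up procedure, not the authors' code. The function name and the example p-values are hypothetical and do not come from Table 6.

```python
def bh_adjust(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order.

    Each p-value is scaled by m / rank (rank 1 = smallest), then a running
    minimum from the largest rank downward enforces monotonicity.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical unadjusted p-values for one scale's correlations.
raw = [0.01, 0.04, 0.03, 0.20]
adjusted = bh_adjust(raw)
```

Applying the adjustment separately within each scale, as described above, means that the number of tests `m` equals the number of gaze variables correlated with that scale.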

Table 6. Correlation coefficients (with FDR-adjusted p-values) among gaze measures and survey scores.
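For readers unfamiliar with the false discovery rate correction cited above (Benjamini and Hochberg, 1995), the standard step-up adjustment can be sketched as below. This is an illustrative implementation under our own naming (`bh_adjust` is hypothetical), applied to toy p-values rather than to values from Table 6.

```python
def bh_adjust(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

# Toy p-values for one hypothetical scale (not from the study).
toy_p = [0.01, 0.04, 0.03, 0.20]
adj = bh_adjust(toy_p)
```

In the study, the adjustment was applied within each scale, so a function like this would be called once per scale on that scale's set of p-values.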

Discussion

Generative AI has the ability to produce content that, until recently, depended exclusively on the creative efforts of human beings. Today, algorithms can generate, in seconds (or less), original textual, audio, and visual outputs. Despite the potential utility and efficiency of such programs, people are hesitant to refer to their outputs as “art” (Hong, 2018; Lyu et al., 2022; Mikalonytė and Kneer, 2022) and consider them to have less intrinsic and extrinsic value than those created by a person (Chamberlain et al., 2018; Chiarella et al., 2022; Gangadharbatla, 2021; Gu and Li, 2022; Hong and Curran, 2019; Kirk et al., 2009; Ragot et al., 2020; Xu and Hsu, 2020). The primary goal of the study reported here was to determine if the attribution of visual artworks to AI leads people to look at them differently than when they are attributed to a human artist. Our focus was on potential shifts in the pace and extent of visual exploration, as well as the exertion of mental effort during encounters with artworks.

Participants in this study looked at a set of artworks created using DALL-E 2, a generative AI program that can produce realistic images from a natural language prompt. These artworks all depicted events described in the Old and New Testaments of the Bible. In a between-subjects manipulation, participants were either told the images were created by students in a college course called “Picturing the Bible” or truthfully told they were generated by an AI. While viewing the artworks, participants’ eye movements were recorded, and afterward, they completed surveys to ascertain their opinions of the artworks and to measure their attitudes towards religion, aesthetics, and AI.

Even though all of our participants saw exactly the same artworks, those in the human-attribution group had more positive opinions of them. Specifically, participants in this group judged the artworks to be more likable, sincere, and moving; they found them to be better composed, to have higher aesthetic value, and to more effectively communicate ideas to the viewer; and they felt that the artworks evoked stronger emotional responses. These findings are consistent with prior work (Chamberlain et al., 2018; Chiarella et al., 2022; Gangadharbatla, 2021; Gu and Li, 2022; Hong and Curran, 2019; Kirk et al., 2009; Xu and Hsu, 2020), and indicate that people’s appreciation and evaluation of visual artworks, as well as their affective response to them, are affected by the knowledge they have about the human- or machine-made origin of those works. Such results are also consonant with studies that have shown that viewers’ impressions of artworks can be modulated by other contextualizing information provided alongside artworks such as titles (Millis, 2001), explanatory labels (Temme, 1992), and artist statements (Specht, 2010), as well as prior knowledge of the artist including his or her prestige (Mastandrea and Crano, 2019), personality (Van Tilburg and Igou, 2014), nationality (Mastandrea et al., 2021), disability (Szubielska et al., 2020), and other biographical details (e.g., Kaube and Rahman, 2024).

The differences we observed in participants’ attitudes toward artworks attributed to humans and AI were not accompanied by any changes in how they viewed the artworks. Fixation counts, fixation durations, fixation dispersion, saccade amplitude, blink rate, saccade peak velocity, and pupil size were unaffected by art attribution. Hence, we did not obtain any evidence to suggest that a person’s explicit “valuation” of artworks modulates the pace or spatial extent of visual exploration nor the cognitive effort needed or expended to develop an understanding of them. We were also unable to detect group differences in viewing behavior over time which further suggests that early exploratory viewing associated with aesthetic appreciation and later analytical viewing associated with understanding (cf. Nodine and Krupinski, 2003) are both insulated from conscious biases based on artistic attribution.

Our results provide some replication of, and contrast to, prior research seeking to connect artistic attribution to viewing behavior. Recently, Zhou and Kawabata (2023) considered fixation counts, individual fixation durations, and the cumulative time spent looking at images of artworks, some of which were human-created and others of which were AI-generated. When participants judged a work to be human-generated, their cumulative looking (dwell) times were, on average, 330 ms longer per image (the available viewing window was 20 s). That said, as in our study, fixation counts and individual fixation durations were not affected. For dwell times to increase without a corresponding increase in fixation counts and/or individual fixation durations is curious. One possibility is that the effect of artistic attribution may be too small to be reliably detected at the level of the individual fixations, and, instead, may only be observed when fixations are aggregated. To test this hypothesis in our own data, we calculated the total duration of all fixations made by our participants. Summing across all stimuli and fixations, participants in the human attribution group looked at the artworks for an average of 195,654 ms, or about 3 min and 15 s (SD = 14,672 ms), and those in the AI attribution group looked at them for 195,620 ms (SD = 14,805 ms) (Mdiff = 34 ms, p > 0.99). Hence, when aggregating fixations in our study we failed to replicate Zhou and Kawabata’s (2023) dwell time finding and found no empirical support for their conclusion that gaze behavior can be affected by artistic attribution to humans or computers.
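The aggregation step described above, summing every fixation duration per participant and then comparing group means, can be sketched as follows. The record layout, function names, and group labels are assumptions made for illustration, and the numbers are toy values rather than study data.

```python
from collections import defaultdict
from statistics import mean

def total_fixation_time(fixations):
    """Sum fixation durations (ms) per participant across all stimuli."""
    totals = defaultdict(int)
    for pid, duration_ms in fixations:
        totals[pid] += duration_ms
    return dict(totals)

def group_mean_difference(totals, group_of):
    """Mean total fixation time per group and the human-minus-AI difference."""
    by_group = defaultdict(list)
    for pid, total in totals.items():
        by_group[group_of[pid]].append(total)
    means = {group: mean(values) for group, values in by_group.items()}
    return means, means["human"] - means["ai"]

# Toy fixation records (participant_id, duration_ms); hypothetical values.
toy_fix = [
    ("p01", 300), ("p01", 200), ("p02", 450),
    ("p03", 250), ("p03", 250), ("p04", 400),
]
toy_groups = {"p01": "human", "p02": "human", "p03": "ai", "p04": "ai"}

totals = total_fixation_time(toy_fix)
means, diff = group_mean_difference(totals, toy_groups)
```

The resulting difference in group means is the quantity reported as Mdiff; an inferential test on the per-participant totals would follow as a separate step.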

In addition to the effects of artistic attribution on how people look at art, we also considered, as a secondary question, the degree to which some individual differences might also affect viewing behavior. To this end, we measured participants’ own religiosity, desire for aesthetics, and general attitudes toward AI and correlated these with gaze behaviors. None of these individual differences correlated with any measure of viewing behavior. Hence, we obtained no evidence to suggest that individual differences in participant attitudes toward the subject matter of artworks, aesthetics, or artificial intelligence altered the way in which they viewed artworks.

While we observed no effects of artistic attribution and various individual differences on gaze, it is possible that other empirical approaches could demonstrate such links. First, our choice to focus on content-independent gaze parameters was motivated by our goal to consider implicit, generalizable viewing strategies divorced from idiosyncratic differences in stimulus content. Future work could consider content-dependent measures related to the visual and semantic content of artworks. If one’s beliefs about the origins of art modify the balance between visual and semantic analysis, it may be that the degree to which gaze is driven by factors such as visual salience, edge density, and semantic informativeness could be altered. Indeed, an unplanned exploratory analysis of the current data suggests that such differences in content-dependent measures may exist (see Footnote 3). Figure 4 depicts those areas within each image that were disproportionately fixated in the AI attribution condition relative to the human attribution condition and vice versa. Areas marked in yellow and red tones were looked at longer in the AI attribution condition whereas areas marked in blue tones were looked at longer in the human attribution condition (see Footnote 4). One striking commonality across the artworks was a propensity for participants to spend more time looking at faces when they thought the artworks were created by humans. Second, we chose to constrain viewing at a constant distance, for a set period of time, over a predetermined group of images. In many cases, however, when viewing art these parameters are not so limited. Future work could investigate the possibility that artist attribution and/or viewers’ interest in the content of art, their appreciation of aesthetics, and/or their attitudes towards AI could lead them to make different overt choices regarding how closely they might approach an artwork, how long they might look at it, or even which artworks to look at or pass by altogether. Third, while our participant sample was representative of the general public, we were unable to determine how the relationship between one’s attitudes toward art and gaze behavior might vary as a function of artistic expertise, a factor known to influence viewing (e.g., Francuz et al., 2018; Lin and Yao, 2018; Koide et al., 2015; Pihko et al., 2011; Vogt and Magnussen, 2007). Finally, aside from our exploratory artwork-level analysis described above, our measures of both explicit attitudes and implicit viewing behaviors collapsed across individual artworks, restricting our conclusions to those that can be drawn about the collection of works as a whole. In future work, a set of images could be constructed to specifically explore the possibility that more fine-grained differences will emerge between individual items that vary in artistic style, subject matter, image content, or other factors that differentiate one work from another in either its physical composition or its influence on individual participants (e.g., observer goals, artistic preferences, knowledge, etc.).

Figure 4. Yellow and red tones denote areas looked at longer in the AI attribution condition whereas blue tones mark areas that were looked at longer in the human attribution condition (see Footnote 4 for additional detail).

In summary, while recent studies (including this one) have consistently found explicit negative biases against AI-generated art, we found no evidence that such biases are mirrored by implicit shifts in how non-expert viewers look at such art. Moreover, we found no evidence that individual differences in attitudes toward content, aesthetics, and AI alter looking behavior. Together, these results suggest that factors known to influence the specific content that we choose to look at (e.g., knowledge, personal attitudes and opinions) do not affect content-independent aspects of gaze related to the pace, spatial extent, and effort of visual exploration.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by University of Notre Dame Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

CC: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Writing – original draft, Writing – review & editing. GR: Conceptualization, Funding acquisition, Methodology, Writing – original draft, Writing – review & editing. JB: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by a Templeton Religion Trust Grant to JB and GR (TRT0455).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. Measures of frequency and duration are not orthogonal. Within a given amount of time, an increase in fixation durations should correlate with a decrease in the number of fixations observed. The measures do, however, characterize gaze in different ways and consistency across non-orthogonal measures enables stronger conclusions to be drawn from the data.

2. We additionally conducted an items-level analysis and no difference in any measure of gaze was observed between the human- and AI-attribution conditions for any item, indicating consistency in our findings across our materials.

3. We thank an anonymous reviewer for suggesting this exploratory analysis.

4. Each image in Figure 4 was generated using the “difference fixation map” function in EyeLink Data Viewer Version 4.1.63. These fixation maps collapse across participants and are based on the cumulative duration of all fixations. In order to highlight areas of greatest difference across the attribution conditions, the low activity cut-off percentage was set to 40%. All other parameters were set to default values. Note that like-colors indicate equivalent differences within, but not across, images.

References

Alvarez, S. A., Winner, E., Hawley-Dolan, A., and Snapper, L. (2015). What gaze fixation and pupil dilation can tell us about perceived differences between abstract art by artists versus by children and animals. Perception 44, 1310–1331. doi: 10.1177/0301006615596899

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. Royal Stat. Soc. 57, 289–300. doi: 10.1111/j.2517-6161.1995.tb02031.x

Bubic, A., Susac, A., and Palmovic, M. (2017). Observing individuals viewing art: the effects of titles on viewers’ eye-movement profiles. Empir. Stud. Arts 35, 194–213. doi: 10.1177/0276237416683499

Bullot, N. J., and Reber, R. (2013). The artful mind meets art history: toward a psycho-historical framework for the science of art appreciation. Behav. Brain Sci. 36, 123–137. doi: 10.1017/S0140525X12000489

Castelo, N., Bos, M. W., and Lehmann, D. R. (2019). Task-dependent algorithm aversion. J. Mark. Res. 56, 809–825. doi: 10.1177/0022243719851788

Chamberlain, R., Mullin, C., Scheerlinck, B., and Wagemans, J. (2018). Putting the art in artificial: aesthetic responses to computer-generated art. Psychol. Aesthet. Creat. Arts 12, 177–192. doi: 10.1037/aca0000136

Chiarella, S. G., Torromino, G., Gagliardi, D. M., Rossi, D., Babiloni, F., and Cartocci, G. (2022). Investigating the negative bias towards artificial intelligence: effects of prior assignment of ai-authorship on the aesthetic appreciation of abstract paintings. Comput. Hum. Behav. 137:107406. doi: 10.1016/j.chb.2022.107406

Dageforde, S. M., Parra, D., Malik, K. M., Christensen, L. L., Jensen, R. M., Brockmole, J. R., et al. (2024). The effect of temporal context on memory for art. Acta Psychol. 248:104349. doi: 10.1016/j.actpsy.2024.104349

Di Stasi, L. L., Marchitto, M., Antoli, A., and Canas, J. J. (2013). Saccade peak velocity as an alternative index of operator attention: short review. Eur. Rev. Appl. Psychol. 63, 335–343. doi: 10.1016/j.erap.2013.09.001

DiPaola, S., Riebe, C., and Enns, J. T. (2013). Following the masters: portrait viewing and appreciation is guided by selective detail. Perception 42, 608–630. doi: 10.1068/p7463

Francuz, P., Zaniewski, I., Augustynowicz, P., Kopis, N., and Jankowski, T. (2018). Eye movement correlates of expertise in visual arts. Front. Hum. Neurosci. 12:87. doi: 10.3389/fnhum.2018.00087

Fuchs, I., Ansorge, U., Redies, C., and Leder, H. (2011). Salience in paintings: bottom-up influences on eye fixations. Cogn. Comput. 3, 25–36. doi: 10.1007/s12559-010-9062-3

Gameiro, R. R., Kaspar, K., Konig, S., Nordholt, S., and Konig, P. (2017). Exploration and exploitation in natural viewing behavior. Sci. Rep. 7:2311. doi: 10.1038/s41598-017-02526-1

Ganczarek, J., Pietras, K., and Rosiek, R. (2020). Perceived cognitive challenge predicts eye movements while viewing contemporary paintings. PsyCh J. 9, 490–506. doi: 10.1002/pchj.365

Gangadharbatla, H. (2021). The role of AI attribution knowledge in the evaluation of artwork. Empir. Stud. Arts 40, 125–142. doi: 10.1177/0276237421994697

Gogoll, J., and Uhl, M. (2018). Rage against the machine: automation in the moral domain. J. Behav. Exp. Econ. 74, 97–103. doi: 10.1016/j.socec.2018.04.003

Gu, L., and Li, Y. (2022). Who made the paintings: artists or artificial intelligence? The effects of identity on liking and purchase intention. Front. Psychol. 13:941163. doi: 10.3389/fpsyg.2022.941163

Henderson, J. M. (2007). Regarding scenes. Curr. Dir. Psychol. Sci. 16, 219–222. doi: 10.1111/j.1467-8721.2007.00507.x

Hills, T. T., Todd, P. M., Lazer, D., Redish, A. D., Couzin, I. D., and Cognitive Search Research Group (2015). Exploration versus exploitation in space, mind, and society. Trends Cogn. Sci. 19, 46–54. doi: 10.1016/j.tics.2014.10.004

Hong, J.-W. (2018). “Bias in perception of art produced by artificial intelligence” in Human-computer interaction. Interaction in context (Cham, Switzerland: Springer International Publishing), 290–303.

Hong, J.-W., and Curran, N. M. (2019). Artificial intelligence, artists, and art. ACM Trans. Multimed. Comput. Commun. Appl. 15, 1–16. doi: 10.1145/3326337

Huber, S., and Huber, O. W. (2012). The centrality of religiosity scale (CRS). Religions 3, 710–724. doi: 10.3390/rel3030710

Jucker, J., Barrett, J. L., and Wlodarski, R. (2014). “I just don’t get it”: perceived artists’ intentions affect art evaluations. Empir. Stud. Arts 32, 149–182. doi: 10.2190/EM.32.2.c

Kahneman, D., and Beatty, J. (1966). Pupil diameter and load on memory. Science 154, 1583–1585. doi: 10.1126/science.154.3756.1583

Kaube, H., and Rahman, R. A. (2024). Art perception is affected by negative knowledge about famous and unknown artists. Sci. Rep. 14. doi: 10.1038/s41598-024-58697-1

Kirk, U., Skov, M., Hulme, O., Christensen, M. S., and Zeki, S. (2009). Modulation of aesthetic value by semantic context: an fmri study. NeuroImage 44, 1125–1132. doi: 10.1016/j.neuroimage.2008.10.009

Köbis, N., and Mossink, L. D. (2021). Artificial intelligence versus Maya Angelou: experimental evidence that people cannot differentiate AI-generated from human-written poetry. Comput. Hum. Behav. 114:106553. doi: 10.1016/j.chb.2020.106553

Koide, N., Kubo, T., Nishida, S., Shibata, T., and Ikeda, K. (2015). Art expertise reduces influence of visual salience on fixation in viewing abstract-paintings. PLoS One 10:e0117696. doi: 10.1371/journal.pone.0117696

Lin, F., and Yao, M. (2018). The impact of accompanying text on visual processing and hedonic evaluation of art. Empir. Stud. Arts 36, 180–198. doi: 10.1177/0276237417719637

Lundy, D. E., Schenkel, M. B., Akrie, T. N., and Walker, A. M. (2010). How important is beauty to you? The development of the desire for aesthetics scale. Empir. Stud. Arts 28, 73–92. doi: 10.2190/EM.28.1.e

Lyu, Y., Wang, X., Lin, R., and Wu, J. (2022). Communication in human–AI co-creation: perceptual analysis of paintings generated by text-to-image system. Appl. Sci. 12:11312. doi: 10.3390/app122211312

Massaro, D., Savazzi, F., Di Dio, C., Freedberg, D., Gallese, V., Gilli, G., et al. (2012). When art moves the eyes: a behavioral and eye-tracking study. PLoS One 7:e37285. doi: 10.1371/journal.pone.0037285

Mastandrea, S., and Crano, W. D. (2019). Peripheral factors affecting the evaluation of artworks. Empir. Stud. Arts 37, 82–91. doi: 10.1177/0276237418790916

Mastandrea, S., Wagoner, J. A., and Hogg, M. A. (2021). Liking for abstract and representational art: national identity as an art appreciation heuristic. Psychol. Aesthet. Creat. Arts 15, 241–249. doi: 10.1037/aca0000272

Mikalonytė, E. S., and Kneer, M. (2022). Can artificial intelligence make art?: folk intuitions as to whether AI-driven robots can be viewed as artists and produce art. ACM Trans. Hum. Robot Int. 11, 1–19. doi: 10.1145/3530875

Miller, S., Cotter, K. N., Fingerhut, J., Leder, H., and Pelowski, M. (2024). What can happen when we look at art?: an exploratory network model and latent profile analysis of affective/cognitive aspects underlying shared, Supraordinate responses to museum visual art. Empir. Stud. Arts 0. doi: 10.1177/02762374241292576

Millis, K. (2001). Making meaning brings pleasure: the influence of titles on aesthetic experiences. Emotion 1, 320–329. doi: 10.1037/1528-3542.1.3.320

Mitschke, V., Goller, J., and Leder, H. (2017). Exploring everyday encounters with street art using a multimethod design. Psychol. Aesthet. Creat. Arts 11, 276–283. doi: 10.1037/aca0000131

Nodine, C., and Krupinski, E. (2003). How do viewers look at artworks? Bull. Psychol. Arts 4, 65–68. doi: 10.1037/e514602010-005

Pew Research Center (2023a). Growing public concern about the role of artificial intelligence in daily life. Available online at: https://pewrsr.ch/3QZ6H6D

Pew Research Center (2023b). What the data says about Americans’ views of artificial intelligence. Available online at: https://pewrsr.ch/3SOjvNZ

Pew Research Center (2023c). How Americans view emerging uses of artificial intelligence, including programs to generate text or art. Available online at: https://pewrsr.ch/3xP4NdO

Pignocchi, A. (2014). The intuitive concept of art. Philos. Psychol. 27, 425–444. doi: 10.1080/09515089.2012.729484

Pihko, E., Virtanen, A., Saarinen, V. M., Pannasch, S., Hirvenkari, L., Tossavainen, T., et al. (2011). Experiencing art: the influence of expertise and painting abstraction level. Front. Hum. Neurosci. 5:94. doi: 10.3389/fnhum.2011.00094

Plumhoff, J. E., and Schirillo, J. A. (2009). Mondrian, eye movements, and the oblique effect. Perception 38, 719–731. doi: 10.1068/p6160

Quiroga, R. Q., and Pedreira, C. (2011). How do we see art: an eye-tracker study. Front. Hum. Neurosci. 5:98. doi: 10.3389/fnhum.2011.00098

Ragot, M., Martin, N., and Cojean, S. (2020). AI-generated vs. human artworks. A perception bias towards artificial intelligence? Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems.

Ranti, C., Jones, W., Klin, A., and Shultz, S. (2020). Blink rate patterns provide a reliable measure of individual engagement with scene content. Sci. Rep. 10:8267. doi: 10.1038/s41598-020-64999-x

Schepman, A., and Rodway, P. (2020). Initial validation of the general attitudes towards artificial intelligence scale. Comput. Hum. Behav. Rep. 1:100014. doi: 10.1016/j.chbr.2020.100014

Sharvashidze, N., and Schütz, A. C. (2020). Task-dependent eye-movement patterns in viewing art. J. Eye Mov. Res. 13. doi: 10.16910/jemr.13.2.12

Smuha, N. A. (2021). From ‘a race to AI’ to a ‘race to AI regulation’: regulatory competition for artificial intelligence. Law Innov. Technol. 13, 57–84. doi: 10.1080/17579961.2021.1898300

Specht, S. M. (2010). Artists’ statements can influence perceptions of artwork. Empir. Stud. Arts 28, 193–206. doi: 10.2190/EM.28.2.e

Szubielska, M., Imbir, K., Fudali-Czyż, A., and Augustynowicz, P. (2020). How does knowledge about an Artist’s disability change the aesthetic experience? Adv. Cogn. Psychol. 16, 150–159. doi: 10.5709/acp-0292-z

Temme, J. E. V. (1992). Amount and kind of information in museums: its effects on visitors satisfaction and appreciation of art. Visual Arts Res. 18, 28–36.

Uusitalo, L., Simola, J., and Kuisma, J. (2009). Perception of abstract and representative visual art. In Proceedings of AIMAC, 10th Conference of the International Association of Arts and Cultural Management (pp. 1–12).

Van Tilburg, W. A. P., and Igou, E. R. (2014). From van Gogh to Lady Gaga: artist eccentricity increases perceived artistic skill and art appreciation. Eur. J. Soc. Psychol. 44, 93–103. doi: 10.1002/ejsp.1999

Vogt, S., and Magnussen, S. (2007). Expertise in pictorial perception: eye-movement patterns and visual memory in artists and laymen. Perception 36, 91–100. doi: 10.1068/p5262

Xu, R., and Hsu, Y. (2020). Discussion on the aesthetic experience of artificial intelligence creation and human art creation. In: Shoji, H. Proceedings of the 8th International Conference on Kansei Engineering and Emotion Research. KEER 2020. Advances in intelligent systems and computing, vol 1256. Springer, Singapore.

Zhang, Z., Chen, Z., and Xu, L. (2022). Artificial intelligence and moral dilemmas: perception of ethical decision-making in AI. J. Exp. Soc. Psychol. 101:104327. doi: 10.1016/j.jesp.2022.104327

Keywords: art, eye movements, artificial intelligence, individual differences, attitudes, vision

Citation: Cunningham CV, Radvansky GA and Brockmole JR (2025) Human creativity versus artificial intelligence: source attribution, observer attitudes, and eye movements while viewing visual art. Front. Psychol. 16:1509974. doi: 10.3389/fpsyg.2025.1509974

Edited by: Antonino Vallesi, University of Padua, Italy

Reviewed by: Marco Sperduti, Université Paris Cité, France; Branka Spehar, University of New South Wales, Australia

Copyright © 2025 Cunningham, Radvansky and Brockmole. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: James R. Brockmole, james.brockmole@nd.edu

†ORCID: Gabriel A. Radvansky, http://orcid.org/0000-0001-7846-839X

James R. Brockmole, http://orcid.org/0000-0003-3227-1078
