Abstract

This study explores how audiovisual immersive virtual environments (IVEs) can assess cognitive performance in classroom-like settings, addressing limitations in simpler acoustic and visual representations. This study examines the potential of a test paradigm using speaker-story mapping, called “audiovisual scene analysis (AV-SA),” originally developed for virtual reality (VR) hearing research, as a method to evaluate audiovisual scene analysis in a virtual classroom scenario. Factors affecting acoustic and visual scene representation were varied to investigate their impact on audiovisual scene analysis. Two acoustic representations were used: a simple “diotic” presentation where the same signal is presented to both ears, as well as a dynamically live-rendered binaural synthesis (“binaural”). Two visual representations were used: 360°/omnidirectional video with intrinsic lip-sync and computer-generated imagery (CGI) without lip-sync. Three subjective experiments were conducted with different combinations of the two acoustic and visual conditions: The first experiment, involving 36 participants, used 360° video with “binaural” audio. The second experiment, with 24 participants, combined 360° video with “diotic” audio. The third experiment, with 34 participants, used the CGI environment with “binaural” audio. Each environment presented 20 different speakers in a classroom-like circle of 20 chairs, with the number of simultaneously active speakers ranging from 2 to 10, while the remaining speakers kept silent and were always shown. During the experiments, the subjects' task was to correctly map the stories' topics to the corresponding speakers. The primary dependent variable was the number of correct assignments during a fixed period of 2 min, followed by two questionnaires on mental load after each trial. In addition, before and/or after the experiments, subjects needed to complete questionnaires about simulator sickness, noise sensitivity, and presence. Results indicate that the experimental condition significantly influenced task performance, mental load, and user behavior but did not affect perceived simulator sickness and presence. Performance decreased when comparing the 360° video and “binaural” audio experiment with either the experiment using “diotic” audio and 360°, or using “binaural” audio with CGI-based, showing the usefulness of the test method in investigating influences on cognitive audiovisual scene analysis performance.

1 Introduction

Classroom communication and comprehension are often challenging due to unfavorable listening conditions such as background noise and reverberation. Existing studies primarily focus on simple auditory tasks (see, e.g., Doyle, 1973; Rueda et al., 2004; Holmes et al., 2016; Röer et al., 2018), but actual classroom listening requires students to process complex, continuous speech in challenging listening environments. Understanding how varying acoustic and visual conditions affect comprehension in these settings is essential to support effective learning. Until now, existing paradigms have focused on relatively simple acoustic and visual representations (see, e.g., Spence and Driver, 1997; Koch et al., 2011; Lawo et al., 2014; Oberem et al., 2014, 2017, 2018; Barutchu and Spence, 2021; Fichna et al., 2021). In this study, the effectiveness of audiovisual virtual environments in assessing cognitive performance in classroom-like Immersive Virtual Environment (IVE) settings is investigated by creating complex visual and acoustic scenes in a controlled environment. To improve the validity of cognitive performance research in such environments, the realism of these paradigms needs to be progressively increased, especially in terms of the visual scene complexity. It is hypothesized that a more sophisticated audiovisual representation, and hence a more medially rich representation of the virtual environment, has a positive impact on task performance.

To do so, in this article the “audiovisual scene analysis test paradigm” originally developed by Ahrens et al. (2019) and Ahrens and Lund (2022) is adapted and expanded to assess its suitability for analyzing audiovisual scene analysis in a virtual classroom scenario. To maintain consistency with existing research, it was intentionally decided to keep the original naming convention of the paradigm. In the paradigm originally developed by Ahrens et al. (2019), multi-speaker scene perception has been investigated regarding auditory and visual information. Twenty-one speakers were depicted as schematic avatar silhouettes positioned semi-circularly around the listener, ranging from –90° to 90° in 30° steps. In each trial, 2–10 speakers simultaneously read stories from different locations, without visual feedback of which of the speakers were active, while visually all 20 possible speakers were always shown. During the actual perception test, six normal-hearing subjects, wearing an HTC Vive Pro HMD, were asked to match the stories to the corresponding visual locations of the speakers, with the speakers and their positions being randomly assigned in each trial. Authors concluded that participants were able to accurately analyze scenes with up to six speakers. However, when more speakers were added to the scene, azimuth localization accuracy declined, while distance perception remained consistent regardless of the number of speakers.

While classical frontal-teaching classroom settings typically involve only a few simultaneously active speakers, interactive classroom settings and realistic classroom activities, such as resulting group work, discussions, collaborative exercises, and other interactive learning formats, often create situations where several conversations happen simultaneously, resulting in multiple simultaneous speakers in one room. In these situations, it is essential to focus on the content spoken by a single speaker, known in literature as the cocktail party effect (Cherry, 1953; Bronkhorst, 2000; Shinn-Cunningham, 2008; Bronkhorst, 2015). Such scenarios may occur in real classroom environments, for example, when multiple smaller groups are talking in close proximity. Understanding cognitive performance in these contexts, even in extreme conditions such as having 10 simultaneously active speakers, is crucial, even though such scenarios might not occur every day in real classrooms. Hence, this study proposes a psychometric method to investigate audiovisual interaction effects by measuring cognitive performance in scenarios with good speech intelligibility, aiming to systematically evaluate cocktail party settings. To achieve this, the number of simultaneous speakers was intentionally and systematically increased, an approach previously demonstrated to be an appropriate independent variable by Ahrens et al. (2019) and Ahrens and Lund (2022). Further, the paradigm proposed in this study systematically extends the visual aspects of the paradigm initially suggested by Ahrens et al. (2019), which were previously underrepresented due to the use of a rather simple visual scene featuring semi-transparent avatar silhouettes. The proposed paradigm enables a deeper understanding of psychometric aspects of audiovisual paradigms, more specifically the audiovisual interplay through a realistic and controlled IVE setting, which is crucial for moving toward an even more realistic and child-appropriate scenario in the future, such as collaborative group work and discussion settings.

In Fremerey et al. (2024), a modified version and implementation of the original paradigm by Ahrens et al. (2019) was published, referred to as AVT-ECoClass-VR.1 It features two different visual instances for different audiovisual scenarios, namely 360° video and computer-generated imagery (CGI), both including 20 different speakers and two implementations for dataset playback. The 360° video scenario consists of individually recorded 360° videos of speakers, embedded together within a 360° image scene. The CGI scenario represents a computer-generated classroom environment created using SketchUp Make 2017, including 3D-scanned avatars of the same individuals recorded in the 360° video scenario. Thus, each speaker is represented both as a 360° video recording and as a 3D-scanned avatar. Hence, for auditory cognition research, the visual representations of persons in both virtual classroom settings can be combined with different acoustic presentations of the speech signals initially recorded together with the 360° video representations. In the research presented in this study, to investigate which factors are having an influence on dependent variables like the perceived task performance, a simplified but easily accessible diotic presentation via headphones is compared to a dynamic binaural rendering.

In this way, the goal of the study is to develop a psychometric method to investigate audiovisual interaction effects by varying the complexity of the audiovisual scene, with an initial focus on measuring cognitive performance by adjusting the number of simultaneously active speakers, ranging from 2 to 10, in an IVE setting with good speech intelligibility. The audiovisual scene dataset and tools made available by the authors in Fremerey et al. (2024), building on Ahrens et al. (2019), increase the number of available speakers and add both a 360° and a CGI visual representation.

This study systematically addresses the question of the extent to which manipulating the auditory and visual presentation of an IVE influences factors such as task performance, mental load, and user behavior in the context of the speaker identification task. More specifically, in this article, differences between the two classroom scenario-like IVEs, 360° video and CGI, in conjunction with the two audio settings “diotic” and “binaural,” are investigated regarding cognitive performance, user behavior (cf. Fremerey et al., 2018), task load (cf. Georgsson, 2019), presence (cf. Schubert et al., 2001), and simulator sickness (cf. Kennedy et al., 1993). To this end, a series of three subjective tests is conducted, where subjects are instructed to map simultaneously told stories to the respective visual representations. Hence, this study contributes to the broader research topic on multisensory perception by examining how audiovisual coherence and the characteristics of different experimental conditions influence cognitive performance in IVE settings.

Three subjective experiments were conducted with different combinations of the two acoustic and visual conditions: The first experiment used 360° video but with binaural audio. The second experiment combined 360° video with diotic audio. The third experiment used the CGI environment with binaural audio. During the experiments, it became clear that the difficulty level of the 360° diotic experimental condition was already high enough, despite good lip-sync quality. Therefore, we decided not to explicitly include an additional CGI diotic experimental condition, especially since technical limitations of the IVE would not have allowed lip-sync of comparable visual quality to the 360° video. A CGI diotic experimental condition without lip-sync would have theoretically also been possible but was not included, as it would have provided no localization information through either the auditory or visual channel. In such a scenario, participants would have relied more on guessing than on actual knowledge for speaker-story assignments. In summary, this led us to decide against integrating any CGI diotic experimental condition into our experiments.

The goal is to answer the following research questions, while in brackets the respective dependent variables used to investigate the specific research question are mentioned:

-

RQ1: How does the task performance correlate with the results of Ahrens et al. (2019) for different levels of the total number of stories presented simultaneously, across the two visual IVE representations (360° video vs. CGI) and audio conditions (binaural vs. diotic)? (Dependent variables: correctly assigned stories, total time needed)

-

RQ2: How do the total number of stories presented simultaneously, the audio condition (binaural vs. diotic), and the visual IVE representation (360° video vs. CGI) impact task performance and mental load? (Dependent variables: correctly assigned stories, total time needed, NASA RTLX results, listening effort)

-

RQ3: Is the user behavior, in terms of head movement, different across audio conditions (binaural vs. diotic) and visual IVE representations (360° video vs. CGI)? (dependent variables: proportion of time spent watching active speakers, total yaw degrees explored, total number of yaw direction changes)

-

RQ4: What differences in constructs related to Quality of Experience (QoE) for IVEs, such as simulator sickness and presence, exist across different audio conditions (binaural vs. diotic) and visual IVE representations (360° video vs. CGI)? (dependent variables: simulator sickness, presence questionnaire results)

Based on the research questions, the hypotheses of this study are presented as follows:

-

H1: It is hypothesized that task performance in both visual IVE representations (360° video and CGI) shows a strong correspondence with the performance observed in the study by Ahrens et al. (2019), regardless of whether the audio condition is binaural or diotic.

-

H2: While a general decline in task performance and an increase in mental load with a higher number of simultaneously presented stories is expected, it is hypothesized that these effects will be more visible in the diotic audio condition compared to the binaural condition and in the CGI representation compared to the 360° video.

-

H3: It is hypothesized that head movement patterns (e.g., proportion of time spent watching active speakers, total yaw degrees explored, total number of yaw direction changes) will differ between binaural and diotic audio conditions, as well as between 360° video and CGI representations.

-

H4: It is hypothesized that simulator sickness levels (before/after the experiment) will not differ between audio conditions (binaural vs. diotic) or visual IVE representations (360° video vs. CGI). However, differences are expected in the sense of presence, with higher levels of presence reported for binaural audio conditions and 360° video representations.

2 Background

The following provides an overview of the existing state-of-the-art research on the design of audiovisual virtual environments to investigate cross-modal perception and task performance in established cognitive tasks.

Stecker (2019) emphasize the potential benefits of Immersive Virtual Environments (IVEs) for assessing auditory performance, as they improve multisensory consistency, can bring the real world into the lab, enable natural multidimensional tasks, and enhance the engagement of subjects. Furthermore, as stated in Owens and Efros (2018), the visual and auditory components of a video should be jointly modeled through a combined multisensory representation. Based on these requirements to improve the validity of state-of-the-art research, the present study aimed to increase the complexity of virtual audiovisual scenes and to investigate their impact on perceived mental load as well as presence.

Virtual reality (VR) has gained significant popularity in cognitive psychology research over the past two decades (see Foreman, 2010; Schnall et al., 2012), providing innovative methods to examine how the features of these virtual audiovisual environments influence auditory perception. Other studies that specifically evaluated speaker identification as a task are, for example, the study by Stecker et al. (2018). In this study, an experiment with six participants was conducted to measure co-immersion in virtual auditory scenes using a spatial localization and speaker identification task. The authors conclude that listeners can distinguish the reverberant characteristics of multiple simultaneous speakers in a complex auditory scene when visual, auditory, and dynamic information about the talkers' locations is provided. Another study is the one by Josupeit and Hohmann (2017), where speaker identification, speech localization, and word recognition were modeled for a multi-speaker setting. The model is able to extract salient audio features from a multi-speaker audio signal and use a classification method that matches these features with templates from clean target signals to determine the best target. In the study by Rungta et al. (2018), the influence of reverberation and spatialization on the cocktail-party effect was evaluated for a multi-speaker IVE. The cocktail party effect was introduced by Cherry (1953) and further investigated in, e.g., Bronkhorst (2000), Bronkhorst (2015), and Shinn-Cunningham (2008). It describes the need for spatial attention to distinguish relevant acoustic information from multiple competing acoustic sources, such as several simultaneous conversations at a party. In adverse cases, classroom communication and comprehension could be comparable to the cocktail party effect.

With the aim to investigate differences between binaural and diotic listening, Rungta et al. (2018) used diotic audio and two different methods of spatialization: stereo using vector-based amplitude panning and binaural convolving a generic KEMAR head-related impulse response (HRIR) (cf. Gardner and Martin, 1995) with the room impulse response giving the binaural room impulse response (BRIR) for the listener. In all cases, the audio was played back through the integrated headphones of the Oculus Rift CV1 HMD. The authors found that spatialization had the highest impact on target-word identification performance, as binaural listening outperforms diotic listening and has been shown to be robust with respect to the arrangement of distractor stimuli. Increased reverberation negatively affects speech intelligibility by decreasing the robustness of diotic and binaural cues, with the effect becoming more visible when reverberation is higher.

Another study by Gonzalez-Franco et al. (2017) aimed to investigate how audiovisual cues affect selective listening using virtual reality and spatial audio. To do so, participants were exposed to an information masking task with concurrent speakers. To this aim, wide-field-of-view stereoscopic video and audio recordings were made. The resulting recordings were rendered in VR as 185° stereoscopic videos. The results show that asynchronous visual and auditory speech cues, particularly mismatched lips and audio, significantly impact comprehension and auditory selective attention, emphasizing the importance of visual cues in multisensory integration. In relation to this, Vollmer et al. (2023) conducted an audiovisual serial recall experiment with 13 participants. Authors found that for audiovisual speech recordings, the recall performance is significantly better for presentations with auditory stimuli than for visual-only presentations. However, the audiovisual representation did not significantly improve the recall performance.

A complementary dataset with 360° video is described in Kishline et al. (2020). It presents a database from five speakers recorded as anechoic audio speech samples with stereoscopic and omnidirectional video. This study provides tools and resources that shall enable the simulation of scenarios with up to three speakers positioned at various azimuthal and depth locations in an IVE. It is noted that the initially publicly available dataset can no longer be found under the link given in Kishline et al. (2020).

In relation to the previously described study, Fichna et al. (2021) aimed to investigate the effects of acoustic scene complexity and visual scene representation on auditory perception in IVEs. To do so, authors used a combination of an HMD and a 3-dimensional 86-channel loudspeaker array, assessing five psychoacoustic measures: speech intelligibility, sound source location, distance perception, loudness, and listening effort. The subjective test involved twelve listeners, who performed a speech perception and assessment task in both echoic and anechoic virtual environments. During the task, the same fixed order was followed: after presenting the target and maskers, participants repeated the sentence of the target speaker, named the position of the target, rated the loudness, and rated the listening effort. Target distances, number of maskers, and reverberation conditions were varied. Results showed that reverberation and the number of interfering sound sources significantly affected the five psychoacoustic measures, contributing to scene complexity. Wearing an HMD did not substantially alter performance, indicating that the setup allows for realistic testing of auditory perception. The study emphasizes the ecological validity of IVEs and their potential for comparing virtual and real-world acoustic environments.

Another study by Slomianka et al. (2024) investigated how eye and head movements are influenced by the complexity of audiovisual scenes, by factors such as reverberation and the number of concurrent speakers. To analyze these, the authors conducted a test with thirteen normal-hearing participants engaged in a speech comprehension and localization task, using the original version of the paradigm by Ahrens et al. (2019). The results show that increased scene complexity delays initial head movements, extends the search period, and leads to more gaze shifts and less accurate final head positioning when identifying the target speaker.

3 Materials and methods

The following section describes the test setup for the three tests, including test stimuli and methods, details about the participants and pre-screening, instructions, tasks, independent and dependent variables, and information about the dataset and technical setup.

3.1 Test stimuli and test method

Within this research, three different perceptual tests have been carried out, using the two different Unity IVEs 360° videos and CGI published in Fremerey et al. (2024). Two different audio settings and two visual representations for classroom-like IVE settings have been tested: 360° video and CGI, where the 360° reference visual condition with intrinsic lip-sync of the comprised audio recordings was conducted once with binaural and once with diotic audio. As detailed in Fremerey et al. (2024), the IVEs were developed to enhance the complexity of the audiovisual scenes to more accurately simulate a typical classroom environment. The virtual classroom was equipped with 20 chairs and speakers arranged in a circle with a diameter of 2.6 m. The 360° video and CGI visuals in the AVT-ECoClass-VR dataset are accompanied by 10 – reflecting the number of different stories – single-channel speech recordings per speaker, which can be positioned at various spatial locations within the virtual scene, here located on the speakers. This is done using VAUnity,2 a Unity package of the Virtual Acoustics v2022a auralisation software by IHTA, RWTH Aachen University (2024).

The first two experiments, “360° binaural” and “360° diotic,” have been carried out using the 360° video version, while the third experiment, “CGI binaural,” used the CGI version of the AVT-ECoClass-VR IVE. The first and third experiments used binaural audio, and the second experiment used diotic audio. To ensure consistency with the other two tests, the diotic audio setup for the second experiment was also performed using the VAUnity package, with the MyAmbientMixer renderer implemented to make the sound audible without spatialization. The live-tracked dynamic binaural audio setup for the first and third experiments was performed using VAUnity, using the MyBinauralFreeField renderer with a 1x1° HRTF of the ITA artificial head.

3.2 Participants and pre-screening

The subjective experiments have been approved by the ethics committee of the Technische Universität Ilmenau, cf. the positive ethics vote from 14 March 2023. In the “360° diotic” test, 25 subjects participated (nine female, 16 male, mean age 29.5); in the “360° binaural” test, 36 subjects (10 female, 26 male, mean age 29.8); and in the “CGI binaural” test, 34 subjects (18 female, 16 male, mean age 33.6). To avoid potential learning effects, each participant was limited to participating in only one of the three experiments. Subjects were recruited from the university student and staff body via email lists. Due to the fact that the stories were only available in German, an inclusion criterion for all experiments was that the participants had to have German language proficiency at the native speaker level. A further criterion was normal hearing ability within 20 dB. This was tested before each experiment using an Oscilla Audiometer USB100 with frequencies between 125 and 8,000 Hz, using the “automatic 20 dB test” functionality of the software AudioConsole v2.4.8 connected to the audiometer. In addition, subjects of all experiment groups were tested for visual acuity and color vision using Snellen (20/25) (cf. Pro Visu Foundation, 2023) and Ishihara (cf. Ishihara, 2009) charts.

3.3 Instructions

After the subjects gave their informed consent, they were introduced to the test procedure using an adapted version of the publicly available AVrateVoyager tool published by Göring et al. (2021), which was used during all experiments to answer the questionnaires later explained in Section 3.4.3. Afterwards, the operation of the IVE with the HTC Vive controller was explained. Then, the interpupillary distance of the HMD was adjusted to enable comfortable viewing of the IVEs. Afterwards, both the correct fit of the headphones and the HTC Vive Pro 2 HMD were checked. During the experiments, subjects had the task of correctly assigning the stories to the corresponding active speakers. They were allowed to revise and reassign their choices as needed. During the speaker identification task, participants were instructed to remain seated but were free to rotate their head and controller in any direction. Once participants completed the task or 2 min had passed, they were asked to remove the HMD and headphones and complete the questionnaires.

3.4 Task

In a subsequent training session with four trials, subjects were familiarized with the test procedure, the questionnaires, as explained in Section 3.4.3, and the controller interaction. The task of the subjects in all three experiments was to correctly map the stories to their corresponding speakers as fast as possible, using an HTC Vive 2.0 controller. To do that, subjects had 2 min of time. They were instructed to press the controller's menu button when they completed the speaker-story assignments, resulting in the termination of the IVE. The IVE session ended either when 2 min expired or when participants pressed the controller's menu button. In both cases, after subjects exited the IVE, the speaker-story mappings were saved, the IVE was terminated, and the subjects were asked to remove headphones, controller, and HMD.

3.4.1 General aspects

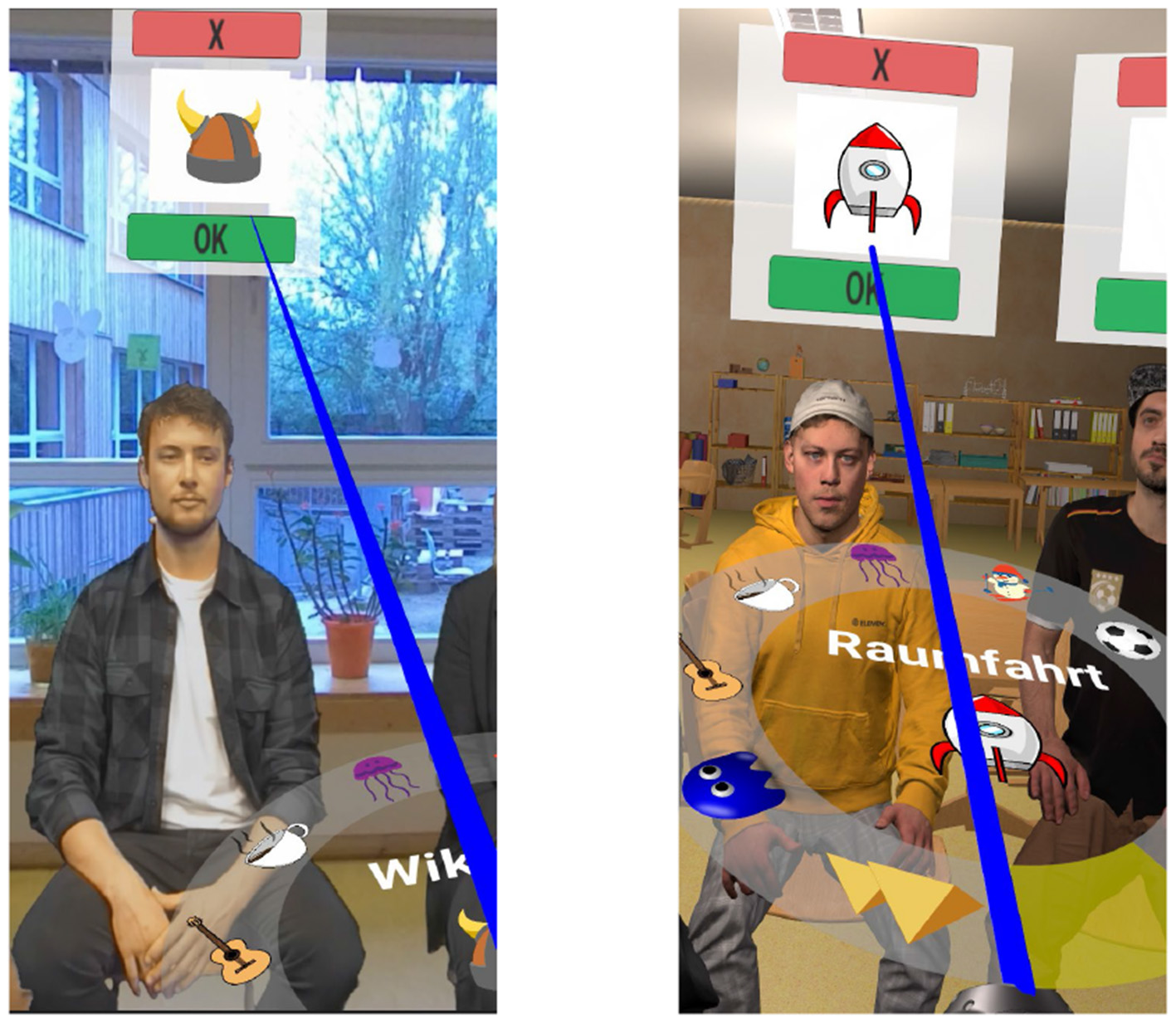

A general visualization of the task in all three experiments is shown in Figure 1. During the experiments, participants were placed on a rotating chair in the middle of the circle of 20 chairs, with the headset and headphone cables hanging from a hook above, as previously done by Agtzidis et al. (2019). All 20 possible speakers, 10 female and 10 male speakers recruited from the university student body, were always shown. This setup enabled them to rotate the chair freely without being hindered by cables, which might otherwise have caused them to avoid head and chair rotations and thus to orientate toward rearward speakers. In one hand, they hold the controller with the interaction wheel sticking above it. Subjects were asked to correctly assign the stories to the corresponding speakers by first selecting one of a total of 10 unique symbols representing the topics of the 10 possible stories, using the interaction wheel shown in Figure 1. The assignment of a story to a speaker was done using a blue ray appearing at the end of the controller and pointing to the green “OK” button while simultaneously clicking the controller's trigger button. The latter is more specifically shown in Figure 2, on the left for the 360° video IVE and on the right for the CGI IVE. Subjects were also allowed to remove a given assignment using the same principle explained above, now with the red “X” button, with the removed story appearing again on the interaction wheel.

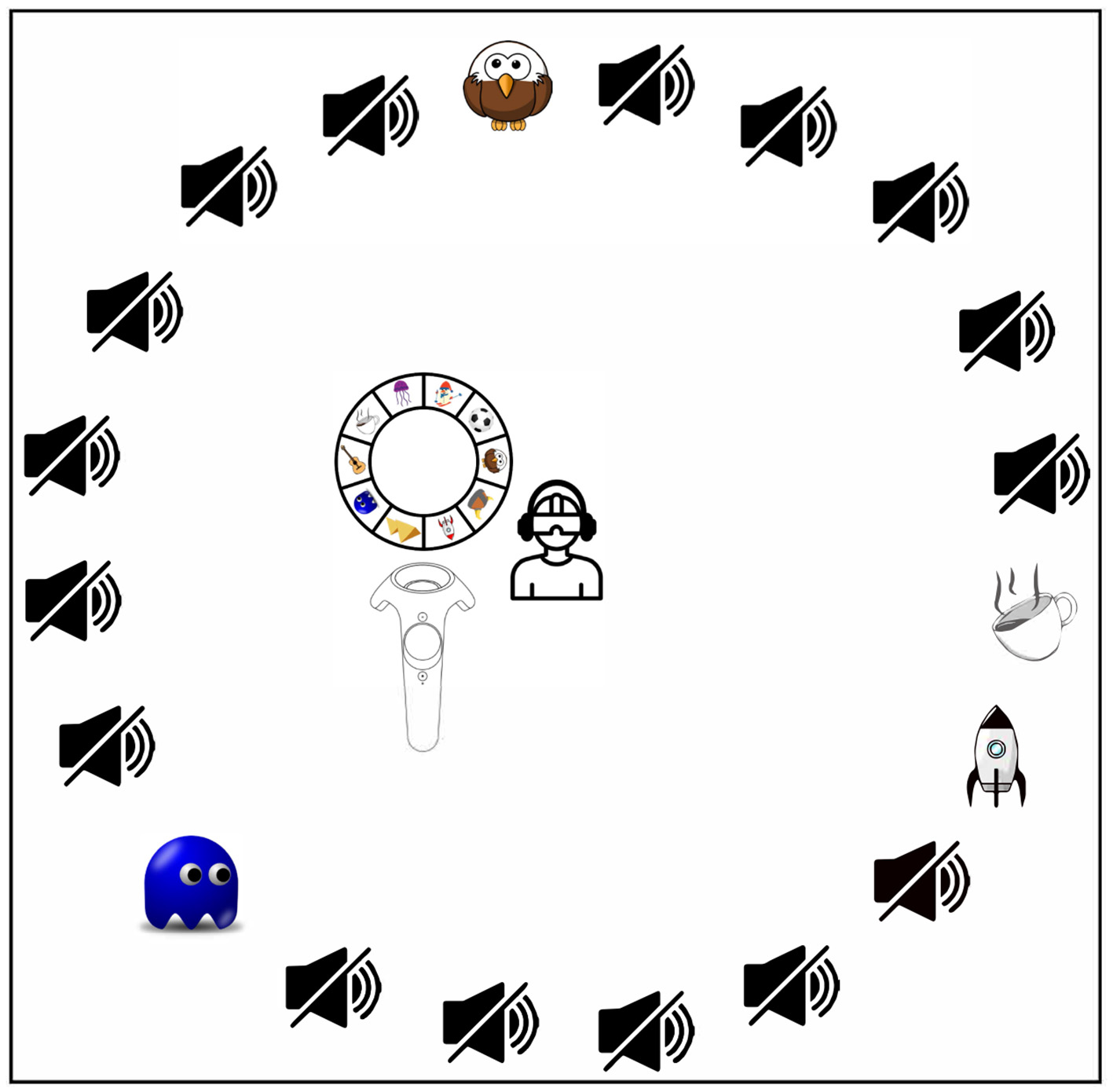

Figure 1

Visualization of the task used in all three experiments. The listener is located in the middle of a circle of 20 chairs with 20 speakers, of which 10 of them can potentially simultaneously each read out one story out of 10 possible stories. On the left of the listener, the controller with the interaction wheel is shown; for more details, see the paper text and Figure 2. The silent loudspeaker symbol represents a silent speaker, while the symbol refers to the story narrated by one of the 20 possible speakers. Here, an exemplary trial with four simultaneously active speakers, each narrating different stories, and 16 silent speakers is presented.

Figure 2

Speaker-story mapping system in the 360° IVE (left) and the CGI IVE (right), using an HTC Vive 2.0 controller and the interaction wheel.

The 10 possible stories were taken from Ahrens et al. (2019) and modified (cf. Fremerey et al., 2024) to extend them to 120 s, which was the maximum allowed time to complete the task. The story topics included jellyfish, skiing, soccer, birds, Vikings, outer space, pyramids, Pac-Man, guitar, and coffee, each providing general facts about its subject. The complete German versions of the stories were previously published in Fremerey et al. (2024).

3.4.2 Training

In the training session, subjects experienced the respective IVE with one, two, five and 10 stories presented simultaneously, while the remaining speakers kept silent. The order of the number of simultaneously active speakers has been kept constant across all training sessions for all experiments to not directly overwhelm subjects with, e.g., 10 active speakers in the first training trial. To familiarize subjects with not only the handling of the IVE, subjects also needed to fill in the questionnaires after each trial. This has been done to provide participants with an impression of how the subjective test procedure would look and also to indicate the kind of difficulty they can expect in terms of speaker-story mapping.

3.4.3 Questionnaires

In all three subjective tests, once before the training session, subjects were asked to answer the Simulator Sickness Questionnaire (SSQ) (Kennedy et al., 1993), consisting of 16 questions regarding different symptoms of simulator sickness on a discrete scale from 0 to 3. The SSQ is widely used in literature for assessing simulator sickness in IVE settings; see, e.g., Lin et al. (2002), Singla et al. (2017), and Sevinc and Berkman (2020). The SSQ was administered to obtain a baseline from the subjects regarding their general feelings before the experiment. Moreover, the three different IVE instances can only be utilized to evaluate audiovisual scene analysis in a virtual classroom scenario if they do not induce a significant level of simulator sickness. Furthermore, at the beginning of the test session, subjects were asked to fill in the German version of Weinstein's noise sensitivity scale (Zimmer and Ellermeier, 1997) once per test, consisting of 21 questions on a discrete scale from 0 to 4.

In all three experiments, after each of the nine trials, subjects left the IVE and were asked to provide ratings on five scales regarding effort, frustration, mental and temporal demand, and performance from the NASA RTLX questionnaire by Georgsson (2019) and one German version of the listening effort scaling question by Van Esch et al. (2013) on a continuous scale of 0–100. The questions from the NASA RTLX questionnaire were translated into German as already done by Schmutz et al. (2009) and Flägel et al. (2019), with ratings provided on a continuous scale from 0 to 100. The physical demand question was removed from the NASA RTLX questionnaire, as no specific physical activity was included in the experiment. This is also suggested by Helton et al. (2022) as physical demand may not be able to combine in a meaningful way with cognitive demands. For the performance rating of the NASA RTLX, the same continuous 0–100 scale (very low to very high) as for the other four questions from the NASA RTLX questionnaire was used to make subjects understand performance more intuitively (cf. Parkin et al., 2016; Said et al., 2020; Chen and Eickhoff, 2023). After answering a total of six questions after each trial, hence five from the NASA RTLX and one listening effort scaling question, the test supervisor again equipped the subjects with the HMD, headphones, and controller and continued the test. It was decided not to let the subjects answer the questionnaires in VR to give them a break from the virtual environment, as HTC also recommends it, the device manufacturer of the Vive Pro 2 HMD used in the experiments (cf. HTC Corporation, 2021).

After subjects completed the experiment with all nine trials, they were required to complete again the SSQ described above to get an insight into how the IVE usage impacted the participants' perceived amount of simulator sickness. Furthermore, participants were asked to complete the Igroup Presence Questionnaire (IPQ) by Schubert et al. (2001). It consists of 14 questions about general presence, spatial presence, participation, and experienced realism, each on a discrete scale from 0 to 6.

3.4.4 Main part of the experiment

After the four-trial training session, subjects began the main part of the experiment. The task in each of the nine trials was the same as in the training session: to assign the stories to the correct speakers as quickly as possible, within a 2-min time limit. Unlike in Ahrens et al. (2019), participants in our study were not allowed to continue assigning stories once the 120 s playback ended, to avoid extending the experiment and to prevent a visual stagnation of the 360° video scene. The time limit was set to 120 s to prevent participants from overthinking or spending excessive time on speaker-story mappings, particularly during trials with a higher number of simultaneously active speakers. Additionally, the time limit was intended to simulate real-world time constraints. Since the recorded stories varied in length among the speakers, stories were repeated if they were under 120 s long. No algorithms were applied to alter the speech tempo in order to avoid possible side effects from slowing down the video in the 360° video experimental conditions. During the task, subjects were free to explore the virtual environment with three degrees of freedom, limited to head rotations only. It was decided not to allow six degrees of freedom, including body movements, as it would have added unnecessary complexity to the scenario and precluded direct comparisons with existing research in this domain, such as the study by Ahrens et al. (2019).

A mixed design was used for the conducted experiments, where the total number of simultaneously presented stories within each experimental condition was tested as a within-subject factor, and the three experimental conditions with their specific visual and acoustic instantiations, described in Section 3.5.1, were tested as between-subject factors. For each experiment, the nine trials were randomized in terms of the number of active speakers, while each participant experienced a unique set of nine trials. The trials differed in the total number of stories presented simultaneously, ranging from 2 to 10, each involving a different speaker. Because the levels of “total number of stories presented simultaneously” were deliberately varied, it is treated as a fixed factor in the analysis. A random drawing system ensured equally frequent usage of speakers and stories and avoided repetitions of individual speaker-story pairs. To prevent learning effects, the active speakers' locations were randomly selected on each trial. In Supplementary Figure S6, on the x-axis, the total number of stories presented simultaneously, and on the y-axis, the shortest circular distance in degrees between active speakers, with 95% confidence intervals (CI), is shown. It is visible that even though the locations of the active speakers were not controlled and randomly chosen, the distance between active speakers was constant between the three different experimental conditions. These measures ensured that each subject received a different combination of speakers and stories per trial, minimizing the influence of, e.g., learning effects on other dependent variables, e.g., task performance. Since 10 stories were recorded from each speaker in Fremerey et al. (2024), participants will hear the same story more than once during the nine trials, although it will be spoken by different speakers. This was necessary, as the effort required to record the dataset (cf. Fremerey et al., 2024) would have made it impractical to record 54 unique 2 m long stories from each speaker. To ensure an equal distribution of male and female speakers, differences in audio pitch were taken into account. This was carefully maintained for even numbers of speakers, while for odd numbers, the gender of the last speaker was chosen randomly. Additionally, for the first two experiments, the audiovisual recordings always matched the location of the talker, while in the third experiment, the audio was consistently paired with the CGI avatar modeled for the specific speaker.

For the first two tests, “360° binaural” and “360° diotic,” synchronous lip movements were given due to the video recording for active speakers, while non-active speakers remained silent but were still slightly moving. In the third test, “CGI binaural,” static CGI avatars without lip synchronization were presented. Hence, except for the talkers' gender, the listener had no visual indicator of who was speaking in the virtual room and had to rely solely on her or his auditory perception. Due to the high number of questions, the total test duration was about 70 min. To keep the overall testing time manageable and within acceptable limits, every participant experienced each of the nine trials once. During the experiments, other subject-related data, including head rotation data, Euler angles from the HMD, and timestamps, were recorded at the same frequency as the fixed frame rate of 90 fps in the Unity environment. Recording of this data began at the start of the trial and continued until its conclusion. Data were recorded at 90 Hz for the experimental conditions 360° diotic and 360° binaural and 45 Hz for the CGI binaural test. For the latter test, a lower recording frequency has been chosen to enable a smooth playout of the IVE.

3.5 Independent and dependent variables

As follows, the independent and dependent variables of the three different experiments, which will be evaluated in Section 4, are described.

3.5.1 Independent variables

The independent variables of the three experiments were the visual and acoustic instantiations of the IVE used: 360° video with binaural audio for the first experiment, 360° video with diotic audio for the second experiment, and a CGI environment with binaural audio for the third experiment. Here, 360° with “binaural” can be considered as the reference with the best audiovisual match and intrinsic lip-sync. Another independent test variable for all three experiments was the number of stories presented simultaneously, which ranged from 2 to 10. One more independent variable is the distance between active speakers [°] and refers to the shortest circular distance in degrees between active speakers.

3.5.2 Dependent variables

There were various dependent variables of the three conducted experiments with regard to measuring task performance. Based on the story-to-speaker mappings, we can calculate the percentage of correctly assigned stories. Furthermore, the total time required is another dependent variable related to task performance.

As already mentioned in Section 3.4.4, head rotation data in the form of Euler angles were recorded from each participant, from which a few dependent variables were derived. This includes the amount of time spent watching active speakers, which serves as one dependent variable. The total number of yaw degrees explored is also derived as a dependent variable from the head rotation data. This is computed as follows, with i representing the iterated yaw degree value and n as the last recorded yaw degree value of each recorded data sample:

Another dependent variable derived from the head rotation data is the total number of yaw direction changes, which was computed as follows. At first, the differences were computed between each pair of two consecutive yaw values:

Then, the signs were computed with a threshold τ. It was defined as the so-called micromovements of the head (cf. Rossi et al., 2024) that would otherwise lead to an artificially high amount of yaw direction changes. Therefore, to compensate for the recording frequency of the head rotation data, τ was set to 0.1° for the experiments with the 360° experimental condition and to 0.2° for the experiment with the CGI experimental condition.

As a last step, initialize count = 0 and prev_sign = 0, while count is the total number of yaw direction changes per recorded data sample. For each signi: Another dependent variable, “Number of yaw direction changes per second,” refers to the total number of yaw direction changes divided by the total time needed per trial. The last dependent variable, “Deviation [°],” refers to the amount of degrees that subjects have deviated if they have recognized the right story per se but have assigned it to the wrong speaker.

Further dependent variables were derived from the questionnaires administered to the subjects. That includes the five single dimensions of the NASA RTLX questionnaire mentioned in Section 3.4.3, hence NASA RTLX effort, frustration, mental demand, performance and temporal demand. Further, from the recorded NASA RTLX values, one unweighted score, called the total NASA RTLX mental workload score, was calculated by averaging the raw data from the five factors. Another dependent variable, listening effort, was derived from the listening effort scaling question mentioned in Section 3.4.3. Further dependent variables were derived from the SSQ mentioned in Section 3.4.3, including nausea (N), oculomotor (O), disorientation (D) and the total score (TS), which is computed from the three single scores. Other dependent variables are derived from the IPQ mentioned in Section 3.4.3, hence general presence (G1), spatial presence (SP), involvement (INV), and experienced realism (REAL).

3.6 Dataset

All recorded data from the three experiments are made publicly available.3 This includes speaker-story mappings, head rotation data, NASA RTLX and effort scaling questionnaires, and SSQ, IPQ, and Weinstein questionnaire results.

3.7 Technical setup and equipment

The PC used for the subjective tests was running Windows 11 and equipped with an Intel Core i7-13700K with 64 GB RAM, a Samsung SSD 980 Pro 2 TB, and an NVIDIA RTX 4090 graphics card. Unity version 2019.4.17f1 was used to run all IVEs. An HTC Vive Pro 2 was connected to the PC, together with an RME Fireface UCX II sound card and Sennheiser HD 650 headphones. As described in Fremerey et al. (2024), the 360° videos were encoded using the High Efficiency Video Coding (HEVC) implementation of FFmpeg 6.0 (libx265) with a Constant Rate Factor (CRF) of 1 and chroma subsampling of 4:2:0, resulting in a visually lossless encoding while supporting hardware-accelerated decoding for smooth playback.

The audio post-processing is described in more detail in Fremerey et al. (2024). An FFT filter from Adobe Audition 1.5 with an FFT size of 1,892 was used to filter frequencies above 13–14 kHz, mitigating ambient noises from, e.g., lamps and cameras. The denoising noise reduction plugin from Adobe Audition was only necessary for 2–3 speakers; further audio peaks have been smoothed out. Normalization was applied according to the EBU R128 standard (EBU Recommendation, 2023) to all recorded audio signals.

To play back one speaker with an average sound pressure level of 60 dB(A), several measurements have been performed. Measurements were carried out with the GRAS KEMAR dummy head with torso, including pinna models GRAS KB0060 and KB0061, 1/2″ microphone capsules type 40AO, microphone preamplifier type 26CS, and IEPE conditioning modules M32. The dummy head was placed 1.3 m from a Genelec 8341A loudspeaker, representing the distance from the listener, placed in the middle of the circle of chairs, to one speaker in all three experiments. The loudspeaker was connected to a PC that played pink noise using VAUnity, the auralization engine used for both IVEs, 360° video, and CGI. To aim for a later sound pressure level of 60 dB(A) in the headphones, as a first step, the volume of the loudspeaker was adjusted in the sound card so that a sound pressure level of 60 dB(A) was measured at the same height of the artificial head using the NTI XL2 audio analyzer. At the same time, the input levels of the artificial head were measured with the microphone capsules integrated in the dummy head. Afterwards, headphones were placed on the dummy head. As a second step, pink noise was again played back via VAUnity, whereby the volume on the sound card was set so that the input level measured by the microphones of the dummy head was reached. These measurements and the respective settings of the sound card were performed for both diotic and binaural audio. For verification purposes, the measurement was done again with one speaker as the audio signal instead of pink noise to ensure that an average sound pressure level of 60 dB(A) was achieved for a speaker at a recording time of 10 s.

4 Results

For each trial, the speaker-story mappings, head-rotation values in Euler angles (pitch, yaw, and roll, respectively), and results for the NASA RTLX and effort scaling questionnaire have been recorded. Furthermore, the total time passed until the trial was finished by the subject or, if the subject had exceeded the maximum allowed time of 120 s, this respective maximum was recorded. In addition, the Weinstein noise sensitivity score, the SSQ scores before and after the test, and the IPQ scores per subject were obtained and calculated. As indicated in Supplementary Section 1.1 and Supplementary Figure S4, noise sensitivity scores are consistent across the three participant groups of the conducted experiments, which is why we decided not to investigate this further.

To investigate whether the independent variables had an influence on the dependent variables for the different auditory and visual conditions of the tests, an Aligned Rank Transform (ART) (Wobbrock et al., 2011) has been performed using R v4.4.2 with the ARTool package v0.11.1 (Kay et al., 2021), followed by a nonparametric analysis of variance (ANOVA) applied to the transformed data according to Wobbrock et al. (2011) and subsequent Bonferroni-corrected contrast tests. As Shapiro-Wilk tests revealed that the data were not normally distributed and Levene's test indicated a violation of homoscedasticity, it was decided not to perform a mixed analysis of variance. Since the recorded data contains very few outliers and not for every total story detected by boxplot outlier detection, as shown in Supplementary Figure S1, it was decided to analyze all subjects as they are and not to exclude any.

4.1 Influence of total number of stories and experimental condition on percentage of correctly assigned stories

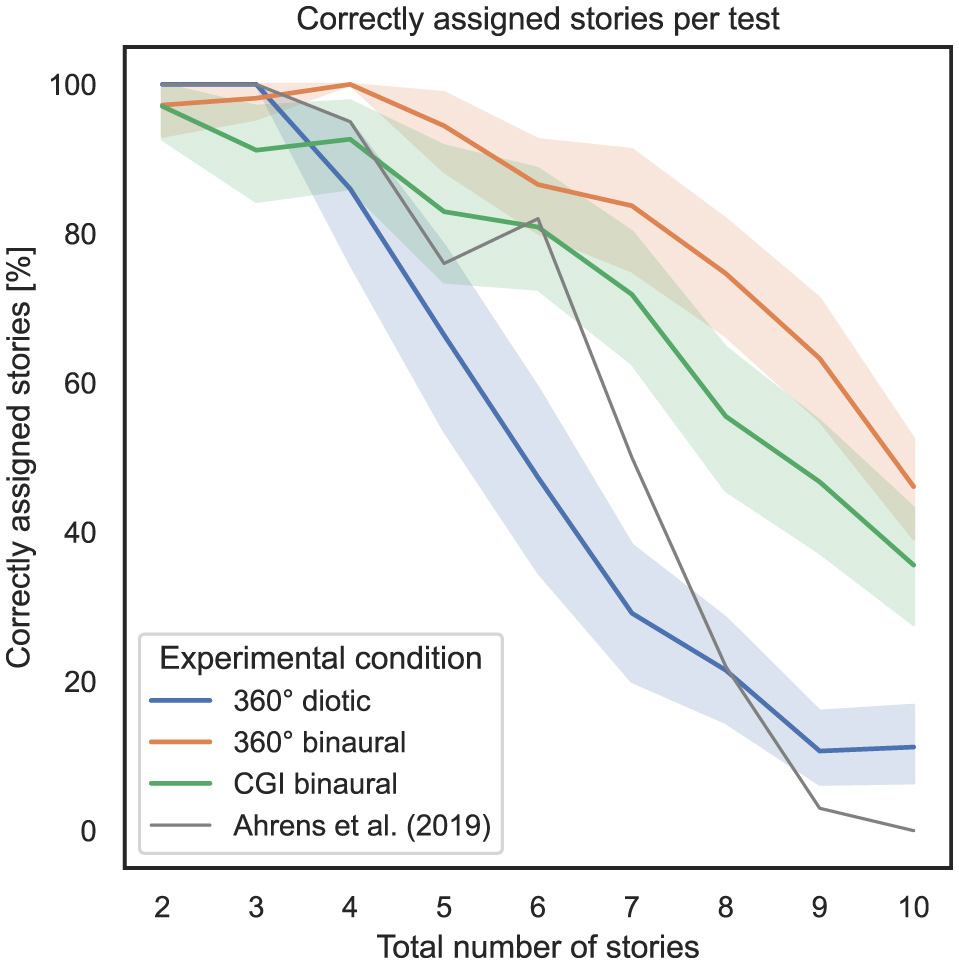

To answer RQ1 and RQ2 stated in Section 1, the influence of the total number of stories presented simultaneously and the experimental condition (i.e., specific experiment) on the percentage of correctly assigned stories as a measure of task performance is analyzed. Figure 3 shows, on the x-axis, the total number of stories presented simultaneously, and on the y-axis, the percentage of correctly assigned stories, with 95% confidence intervals (CI). The gray line represents the mean percentage of correctly assigned stories per total number of stories simultaneously presented for the study conducted by Ahrens et al. (2019).

Figure 3

Total number of stories (independent variable) vs. percentage of correctly assigned stories per test (dependent variable) across the three different experimental conditions (cf. RQ1, RQ2 in Section 1).

To compute the ART model, the number of correctly assigned stories per trial was defined as the dependent variable, the total number of simultaneously presented stories as the within-subject variable, and the experimental condition as the between-subject variable. Subsequently, a nonparametric mixed ANOVA has been applied to the transformed data, while the detailed results are stated in Supplementary Section 1.2. Subsequent Bonferroni-corrected contrast tests revealed that the experimental condition used in general had a significant impact on the percentage of correctly assigned stories; therefore, between 360° diotic and 360° binaural (p = 1.03*10−13), between 360° diotic and CGI binaural (p = 8.16*10−7) and between 360° binaural and CGI binaural (p = 3.9*10−4). Table 1 presents the results of Bonferroni-corrected contrast tests, with the percentage of correctly assigned stories as the dependent variable.

Table 1

| Contrast | #S | A | B | p-corr |

|---|---|---|---|---|

| #S * T | 5 | 360° diotic | 360° binaural | 1.08·10−6 |

| #S * T | 6 | 360° diotic | 360° binaural | 5.98·10−11 |

| #S * T | 6 | 360° diotic | CGI binaural | 1.43·10−7 |

| #S * T | 7 | 360° diotic | 360° binaural | 1.21·10−17 |

| #S * T | 7 | 360° diotic | CGI binaural | 9.66·10−9 |

| #S * T | 7 | 360° binaural | CGI binaural | 0.045 |

| #S * T | 8 | 360° diotic | 360° binaural | 2.59·10−13 |

| #S * T | 8 | 360° diotic | CGI binaural | 0.0001 |

| #S * T | 8 | 360° binaural | CGI binaural | 0.009 |

| #S * T | 9 | 360° diotic | 360° binaural | 9.02·10−10 |

| #S * T | 9 | 360° diotic | CGI binaural | 0.0003 |

| #S * T | 10 | 360° diotic | 360° binaural | 0.0004 |

Bonferroni-corrected contrast tests from ART model, dependent variable: percentage of correctly assigned stories.

Only significant effects reported.

#S * T refers to the total number of stories presented simultaneously * the experimental condition specified in “A” and “B” (cf. RQ1, RQ2 in Section 1).

To examine the correspondence with regard to task performance, measured by the percentage of correctly assigned stories, between the experiments in this study and the study by Ahrens et al. (2019), Pearson correlation coefficients (PCC) were calculated for each experimental condition, which is a key aspect of RQ1. Between the 360° diotic and the test from Ahrens et al. (2019), a PCC of 0.81, p = 1.79*10−52 was calculated. Between the 360° binaural and the test from Ahrens et al. (2019), a PCC of 0.63, p = 1.2*10−37 was computed. Between the CGI binaural and the test from Ahrens et al. (2019), a PCC of 0.65, p = 3.47*10−38 was calculated.

In summary, regarding RQ1, it can be stated that hypothesis H1 is partially falsified concerning the correspondence of the percentage of correctly assigned stories. A strong correspondence to the results of the study by Ahrens et al. (2019) is evident only for the 360° diotic test. In contrast, the 360° binaural and CGI binaural experimental conditions only show a moderate correspondence with the findings of Ahrens et al. (2019). Regarding RQ2, hypothesis H2 can be confirmed, particularly in relation to the assertion that an increasing total number of stories presented simultaneously results in decreasing task performance. This effect is more visible in diotic compared to binaural audio conditions and in the CGI representation compared to 360° video.

4.2 Influence of total number of stories and experimental condition on task completion time

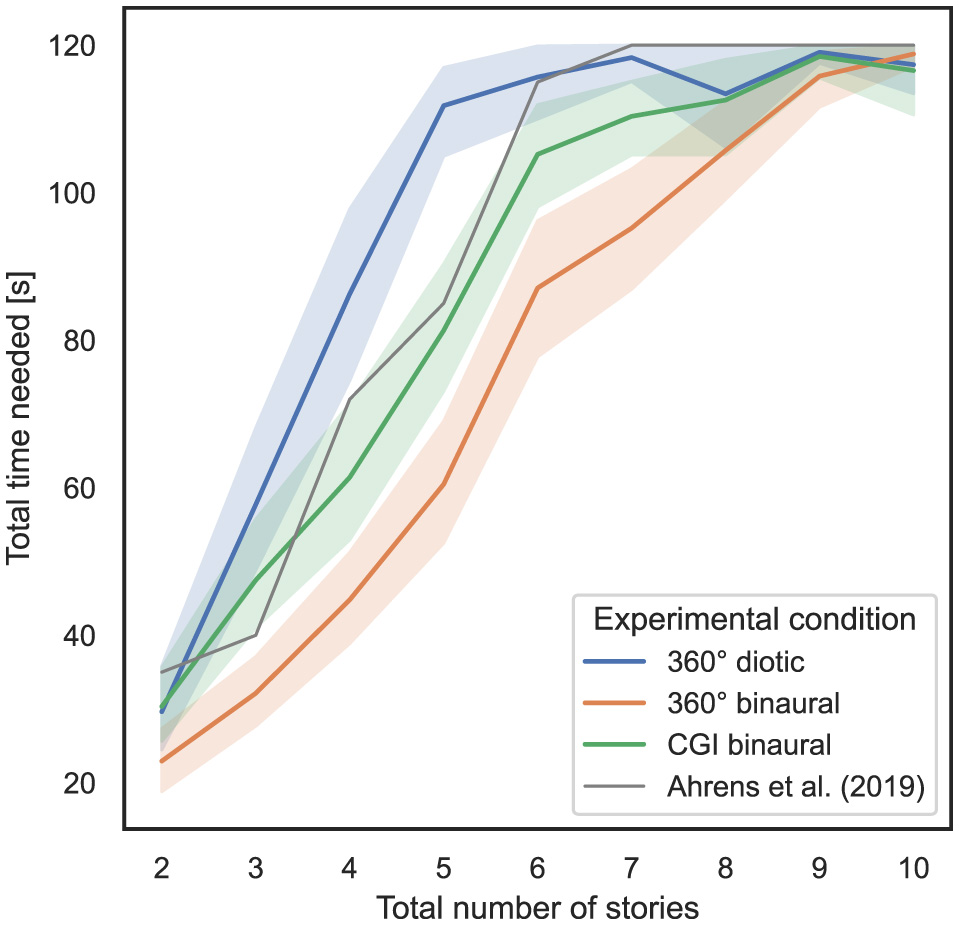

In order to answer RQ1 and RQ2 stated in Section 1, the influence of the total number of stories presented simultaneously and the experimental condition used on task completion time as a measure of task performance is analyzed. In Figure 4, the x-axis represents the total number of stories presented simultaneously, while the y-axis shows the total time required for all responses, including incorrect ones, with 95% CIs. The gray line represents the mean time required per total number of stories narrated simultaneously for the listeners of the experiment conducted by Ahrens et al. (2019). It should be noted that in that experiment, the subjects were still able to give their rating after the stories were played for 120 s, which was not possible in the experiments conducted in the present study. The last four values, therefore, for a total number of stories greater than seven, the values in the original study were greater than 120 s and clipped to 120 s for this presentation.

Figure 4

Total number of stories (independent variable) vs. total time needed per test (dependent variable) across the three different experimental conditions (cf. RQ1, RQ2 in Section 1).

To compute the ART model, the total time needed per trial was defined as a dependent variable, the total number of stories simultaneously presented was defined as a within-subject variable, and the experimental condition was defined as a between-subject variable. Subsequently, a nonparametric mixed ANOVA has been applied to the transformed data, while the detailed results are stated in Supplementary Section 1.3. Bonferroni-corrected contrast tests quantitatively prove that the experimental condition used in general had a significant impact on the total time needed; therefore, between 360° diotic and 360° binaural (p = 3.4*10−13), between 360° diotic and CGI binaural (p = 2.58*10−5) and between 360° binaural and CGI binaural (p = 3.37*10−5). Table 2 presents the results of Bonferroni-corrected contrast tests, with the total time needed as the dependent variable.

Table 2

| Contrast | #S | A | B | p-corr |

|---|---|---|---|---|

| #S * T | 3 | 360° diotic | 360° binaural | 0.0006 |

| #S * T | 4 | 360° diotic | 360° binaural | 4.97*10−11 |

| #S * T | 4 | 360° diotic | CGI binaural | 0.0001 |

| #S * T | 5 | 360° diotic | 360° binaural | 5.85*10−18 |

| #S * T | 5 | 360° diotic | CGI binaural | 2.79*10−7 |

| #S * T | 5 | 360° binaural | CGI binaural | 0.004 |

| #S * T | 6 | 360° diotic | 360° binaural | 1.3*10−7 |

| #S * T | 6 | 360° binaural | CGI binaural | 0.009 |

| #S * T | 7 | 360° diotic | 360° binaural | 3.98*10−7 |

| #S * T | 7 | 360° binaural | CGI binaural | 0.006 |

Results of Bonferroni-corrected contrast tests applied to computed ART model, dependent variable: total time needed.

Only significant effects reported.

#S * T refers to the total number of stories presented simultaneously * the experimental condition specified in “A” and “B” (cf. RQ1, RQ2 in Section 1).

To examine the correspondence with regard to task performance, measured by the total time needed, between the experiments in this study and the study by Ahrens et al. (2019), Pearson correlation coefficients (PCC) were calculated for each experimental condition, which is a key aspect of RQ1. Between the 360° diotic and the test from Ahrens et al. (2019), a PCC of 0.83, p = 3.77*10−58 was calculated. Between the 360° binaural and the test from Ahrens et al. (2019), a PCC of 0.84, p = 1.81*10−86 was computed. Between the CGI binaural and the test from Ahrens et al. (2019), a PCC of 0.84, p = 6.54*10−82 was calculated.

In summary, regarding RQ1, hypothesis H1 is confirmed with respect to the correspondence with regard to total time needed. A strong correspondence with the findings of Ahrens et al. (2019) is evident across all experimental conditions. For RQ2, hypothesis H2 is also confirmed, particularly supporting the claim that an increasing total number of stories presented simultaneously leads to an increase in total time needed, which subsequently reduces task performance. This effect is more visible in diotic compared to binaural audio conditions and in the CGI representation compared to 360° video.

4.3 Influence of total number of stories and experimental condition on mental load

In order to answer RQ2 stated in Section 1, which aims to investigate how the total number of simultaneously presented stories, the audio condition (binaural vs. diotic), and the visual IVE representation (360° video vs. CGI) impact the task performance of subjects, the influence of the total number of stories presented simultaneously and the experimental condition on the perceived mental load of subjects is analyzed. After each trial, subjects were required to complete the NASA RTLX questionnaire, which consists of five questions on perceived effort, frustration, mental demand, performance, and temporal demand. Additionally, a scaling effort question was asked after each trial. The NASA RTLX performance rating was inverted before evaluation as the scale was inverted, as stated in Section 3.

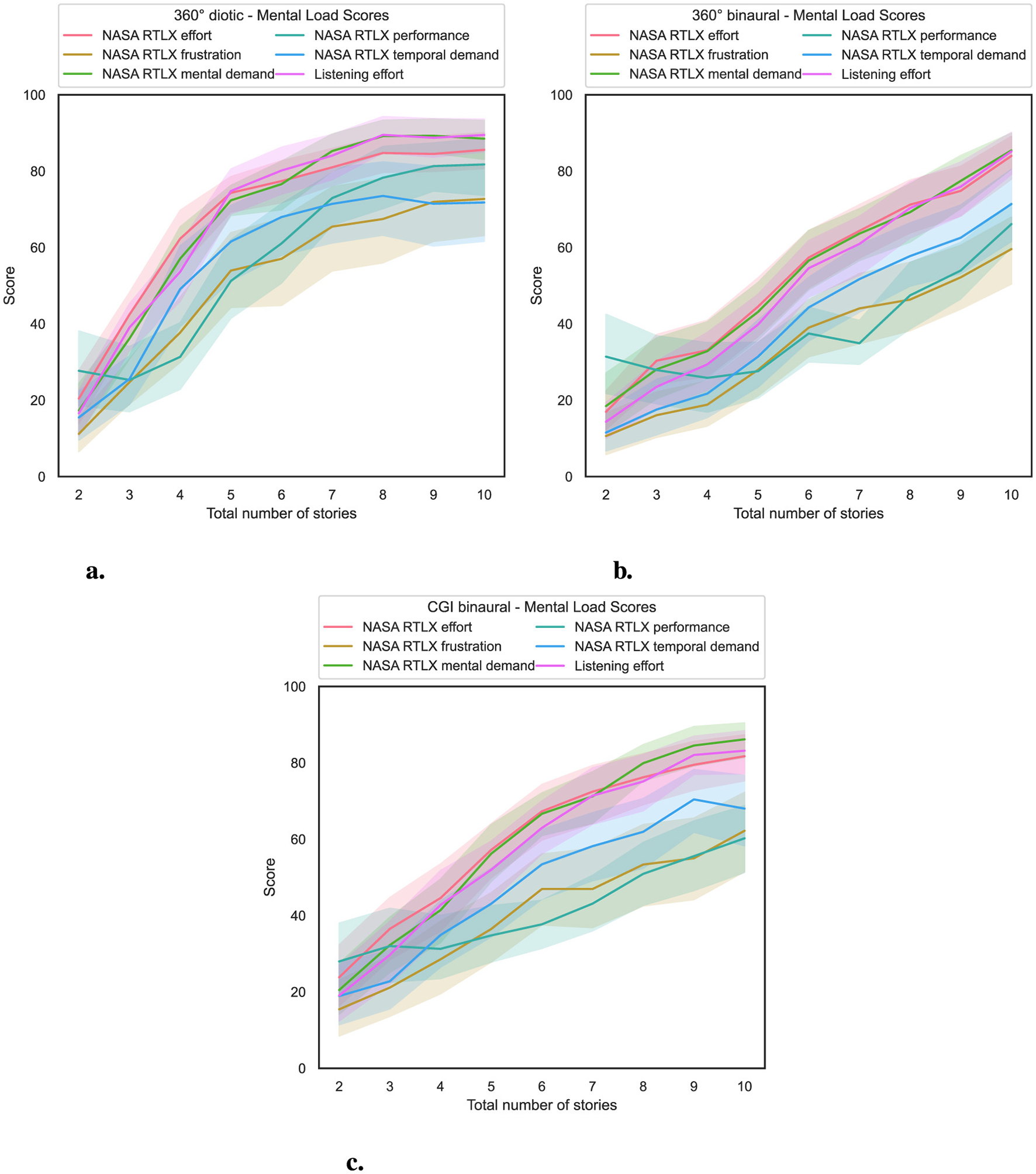

To investigate RQ2, in Figure 5, on the x-axis, the total number of stories presented simultaneously is shown, and on the y-axis, the mental load score of the specific dimension from the NASA RTLX or listening effort questionnaire.

Figure 5

Total number of stories (independent variable) vs. mental load score (dependent variable) for the three experimental conditions (cf. RQ2 in Section 1). (a) 360° diotic. (b) 360° binaural. (c) CGI binaural.

An increasing total number of simultaneously presented stories results in a corresponding increase in mental load.

For statistical analysis, an ART model was computed, with the respective score defined as the dependent variable, the total number of stories presented simultaneously as the within-subject variable, and the experimental condition as the between-subject variable. That was followed by a non-parametric mixed ANOVA, which was applied to the transformed data. The results of the nonparametric mixed ANOVAs are shown in Supplementary Table S1 and show that the interaction effect between the specific test and the score of the respective question used is statistically significant. This indicates that the effect of the experimental condition on the specific score varies. Hence, the difference between experimental conditions is not uniform at all story levels across all dimensions of NASA RTLX and listening effort. In addition, there was a notable effect of the total number of stories presented simultaneously on the scores for the respective questions used, again across all dimensions of the NASA RTLX and listening effort. Furthermore, across all dimensions of NASA RTLX and listening effort, the experimental condition had a significant effect on the scores of the respective questions.

The results of the Bonferroni-corrected contrast tests are shown in Supplementary Table S2. The results reveal that the experimental condition used had a significant impact on NASA RTLX and listening effort scores, depending on the number of stories presented simultaneously.

In summary, regarding RQ2, hypothesis H2 is confirmed, as the total number of stories presented simultaneously increases, resulting in a corresponding increase in mental load for participants, as shown in Figure 5. Significant effects were observed between the 360° diotic and binaural experimental conditions, as well as between the 360° diotic and CGI binaural experimental conditions, particularly when the total number of stories presented simultaneously exceeded four. However, no significant differences in mental load were found between the 360° binaural and the CGI binaural experimental conditions.

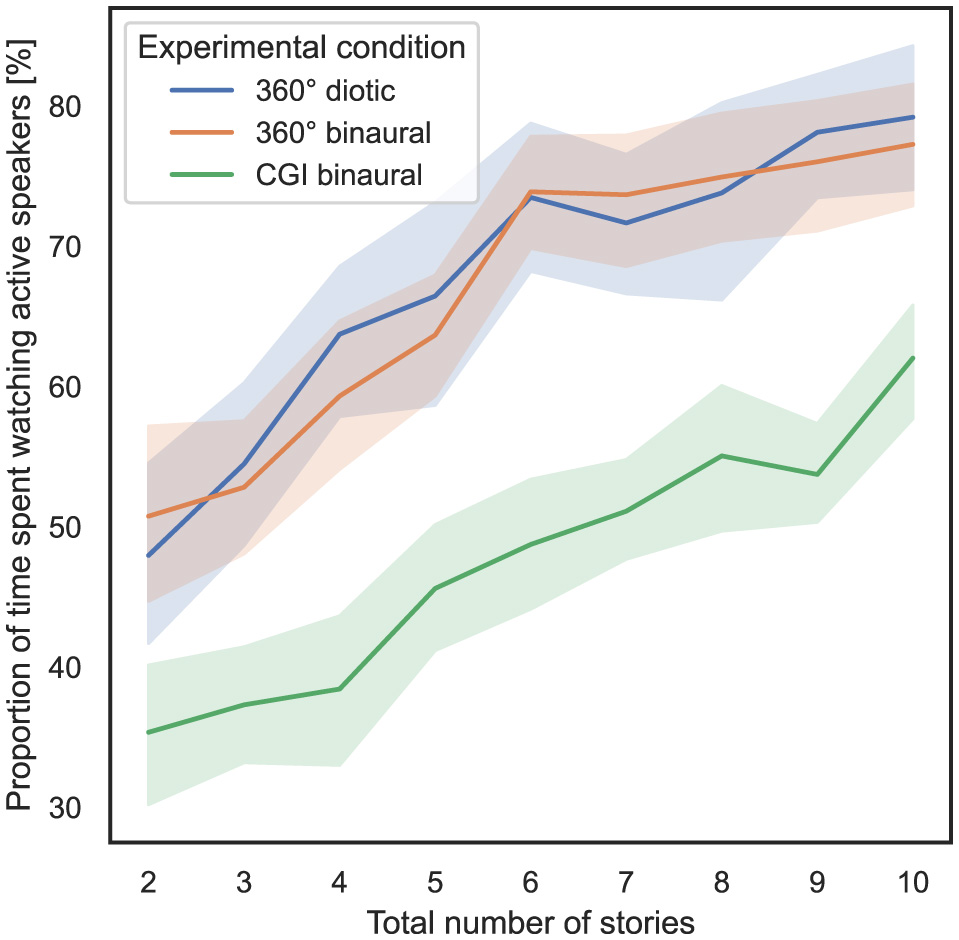

4.4 Influence of total number of stories and experimental condition on proportion of time spent watching active speakers

In order to answer RQ3 stated in Section 1, the influence of the total number of stories presented simultaneously and the experimental condition on the proportion of time spent watching active speakers, as defined in Section 3.5.2, is analyzed. In Figure 6, the total number of stories presented simultaneously is shown on the x-axis, and the proportion of time spent watching active speakers, with 95% CIs, is shown on the y-axis. For the three tests conducted, always 20 speakers were included in the IVE, and hence the scene was divided into 18° wide yaw angle ranges. The head rotation yaw values of the subjects were mapped to the specific ranges of active speakers, hence speakers who narrate a story in the specific trial.

Figure 6

Total number of stories (independent variable) vs. proportion of time spent watching active speakers per test (dependent variable) across the three different experimental conditions (cf. RQ3 in Section 1).

To compute the ART model, the proportion of time spent watching active speakers is defined as the dependent variable, the total number of stories presented simultaneously is defined as the within-subject variable, and the experimental condition is defined as the between-subject variable. Subsequently, a non-parametric mixed ANOVA has been applied to the transformed data, while the detailed results are stated in Supplementary Section 1.4. Bonferroni-corrected contrast tests show that the experimental condition used had a significant impact on the proportion of time spent watching active speakers between the 360° diotic and CGI binaural (p = 5.88*10−15) and between the 360° binaural and CGI binaural experimental conditions (p = 5.1*10−16). It did not have a significant impact between the 360° diotic and the 360° binaural experimental conditions (p = 0.685). Table 3 presents the results of Bonferroni-corrected contrast tests, with the total time needed as the dependent variable.

Table 3

| Contrast | #S | A | B | p-corr |

|---|---|---|---|---|

| #S * T | 2 | 360° binaural | CGI binaural | 0.004 |

| #S * T | 3 | 360° diotic | CGI binaural | 0.0006 |

| #S * T | 3 | 360° binaural | CGI binaural | 0.001 |

| #S * T | 4 | 360° diotic | CGI binaural | 2.14*10−8 |

| #S * T | 4 | 360° binaural | CGI binaural | 3.38*10−7 |

| #S * T | 5 | 360° diotic | CGI binaural | 8.55*10−8 |

| #S * T | 5 | 360° binaural | CGI binaural | 4.03*10−6 |

| #S * T | 6 | 360° diotic | CGI binaural | 5.15*10−10 |

| #S * T | 6 | 360° binaural | CGI binaural | 1.45*10−12 |

| #S * T | 7 | 360° diotic | CGI binaural | 1.49*10−7 |

| #S * T | 7 | 360° binaural | CGI binaural | 6.48*10−11 |

| #S * T | 8 | 360° diotic | CGI binaural | 1.3*10−5 |

| #S * T | 8 | 360° binaural | CGI binaural | 1.89*10−7 |

| #S * T | 9 | 360° diotic | CGI binaural | 2.73*10−10 |

| #S * T | 9 | 360° binaural | CGI binaural | 2.96*10−10 |

| #S * T | 10 | 360° diotic | CGI binaural | 0.0002 |

| #S * T | 10 | 360° binaural | CGI binaural | 0.0002 |

Results of Bonferroni-corrected contrast tests applied to computed ART model, dependent variable: proportion of time spent watching active speakers.

Only significant effects were reported.

#S * T refers to the total number of stories presented simultaneously * the experimental condition specified in “A” and “B” (cf. RQ3 in Section 1).

In summary, regarding RQ3, hypothesis H3 can be partially confirmed, as the proportion of time spent watching active speakers significantly differs between the 360° diotic and CGI binaural experimental conditions and between the 360° binaural and CGI binaural experimental conditions. However, no significant difference is observed between the 360° diotic and binaural experimental conditions.

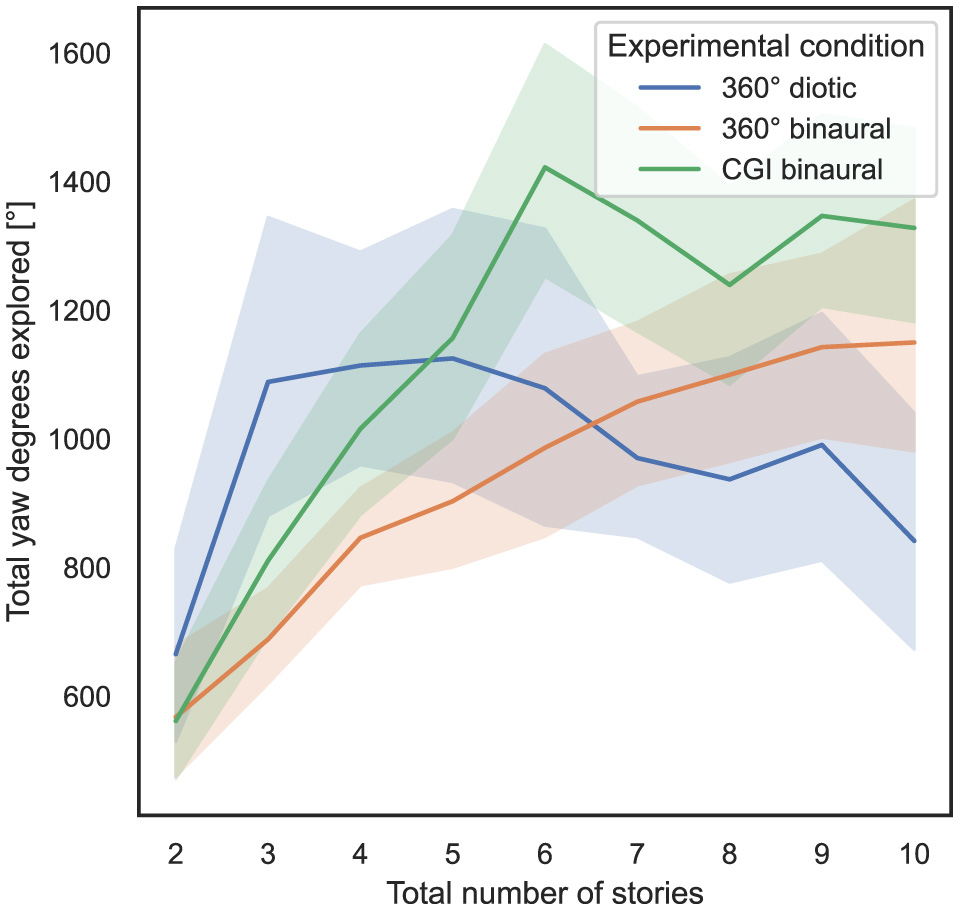

4.5 Influence of total number of stories and experimental condition on total yaw degrees explored

In order to answer RQ3 stated in Section 1, the influence of the total number of stories presented simultaneously and the experimental condition on the total amount of yaw degrees explored, as defined in Section 3.5.2, is analyzed. In Figure 7, the total number of stories presented simultaneously is shown on the x-axis, and on the y-axis, the total yaw degrees explored, with 95% CIs.

Figure 7

Total number of stories (independent variable) vs. total yaw degrees explored (dependent variable) across the three different experimental conditions (cf. RQ3 in Section 1).

As a result of the experiments, subjects primarily turned their heads and did not move them up or down more than necessary to look at the controller. Hence, in the following behavioral analysis, the focus is placed on yaw values.

To compute the ART model, the total yaw degrees explored were defined as the dependent variable, the total number of stories presented simultaneously was defined as the within-subject variable, and the experimental condition was defined as the between-subject variable. Subsequently, a nonparametric mixed ANOVA has been applied to the transformed data, while the detailed results are stated in Supplementary Section 1.5. Bonferroni-corrected contrast tests revealed that the experimental condition used had a significant impact on the total yaw degrees explored between the 360° diotic and the CGI binaural test (p = 0.012) and between the 360° binaural and CGI binaural experimental conditions (p = 0.004), but not between the 360° diotic and the 360° binaural experimental conditions (p = 0.867). Table 4 presents the results of Bonferroni-corrected contrast tests, with the total yaw degrees explored as the dependent variable.

Table 4

| Contrast | #S | A | B | p-corr |

|---|---|---|---|---|

| #S * T | 3 | 360° diotic | 360° binaural | 0.007 |

| #S * T | 6 | 360° diotic | CGI binaural | 0.027 |

| #S * T | 6 | 360° binaural | CGI binaural | 0.001 |

| #S * T | 9 | 360° diotic | CGI binaural | 0.016 |

| #S * T | 10 | 360° diotic | CGI binaural | 4.45*10−5 |

Results of Bonferroni-corrected contrast tests applied to computed ART model, dependent variable: total yaw degrees explored.

Only significant effects were reported.

#S * T refers to the total number of stories presented simultaneously * the experimental condition specified in “A” and “B” (cf. RQ3 in Section 1).

In summary, regarding RQ3, hypothesis H3 can be partially falsified in relation to the total yaw degrees explored, as no significant differences were observed between most of the experimental conditions.

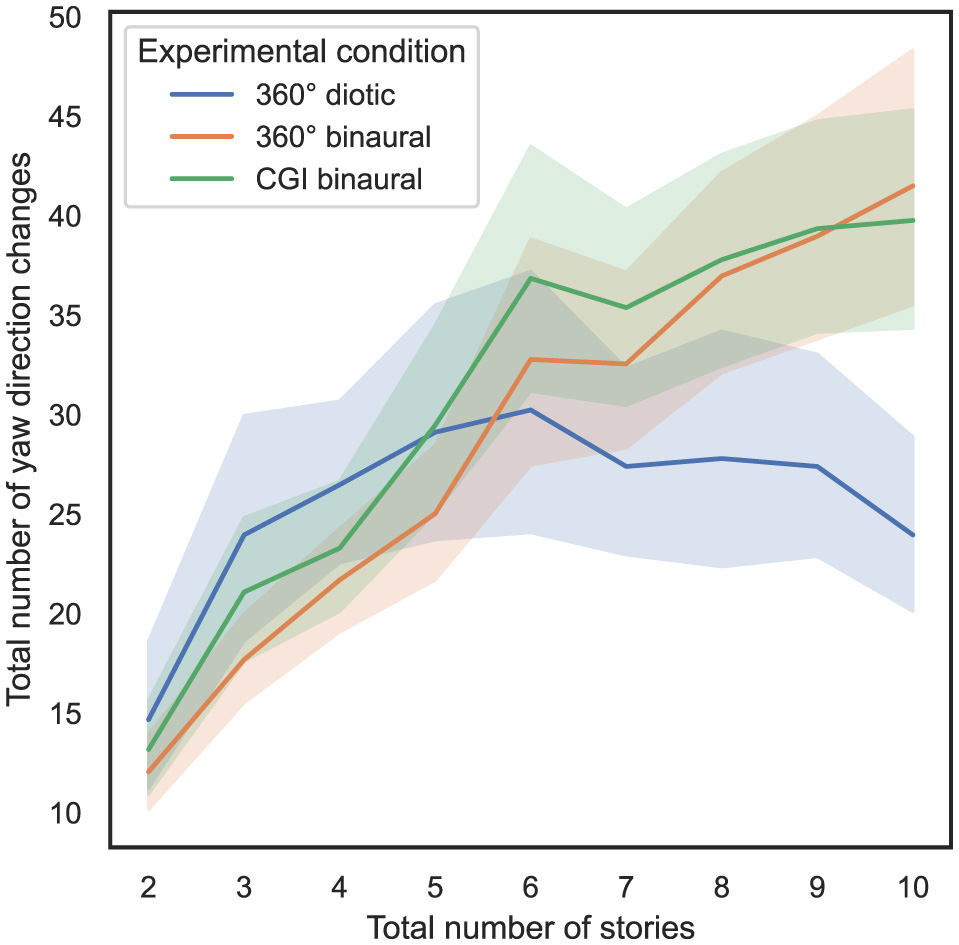

4.6 Influence of total number of stories and experimental condition on yaw direction changes

In order to answer RQ3 stated in Section 1, the influence of the total number of stories presented simultaneously and the experimental condition on the total amount of yaw direction changes, as defined in Section 3.5.2, is analyzed. In Figure 8, on the x-axis, the total number of stories presented simultaneously is plotted vs. the total number of yaw direction changes on the y-axis, with 95% CIs.

Figure 8

Total number of stories (independent variable) vs. total number of yaw direction changes (dependent variable) across the three different experimental conditions (cf. RQ3 in Section 1).

To compute the ART model, the total number of yaw direction changes was defined as dependent variable, the total number of stories presented simultaneously was defined as within-subject variable, and the experimental condition was defined as between-subject variable. Subsequently, a nonparametric mixed ANOVA has been applied to the transformed data, while the detailed results are stated in Supplementary Section 1.6. Bonferroni-corrected contrast tests reveal that the experimental condition used only had a significant impact on the total number of yaw direction changes between the 360° diotic and the CGI binaural test (p < 0.05). Table 5 presents the results of Bonferroni-corrected contrast tests, with the total number of yaw direction changes as the dependent variable.

Table 5

| Contrast | #S | A | B | p-corr |

|---|---|---|---|---|

| #S * T | 9 | 360° diotic | 360° binaural | 0.037 |

| #S * T | 9 | 360° diotic | CGI binaural | 0.023 |

| #S * T | 10 | 360° diotic | 360° binaural | 0.0004 |

| #S * T | 10 | 360° diotic | CGI binaural | 0.0004 |

Results of Bonferroni-corrected contrast tests applied to computed ART model, dependent variable: total number of yaw direction changes.

Only significant effects reported.

#S * T refers to the total number of stories presented simultaneously * the experimental condition specified in “A” and “B” (cf. RQ3 in Section 1).

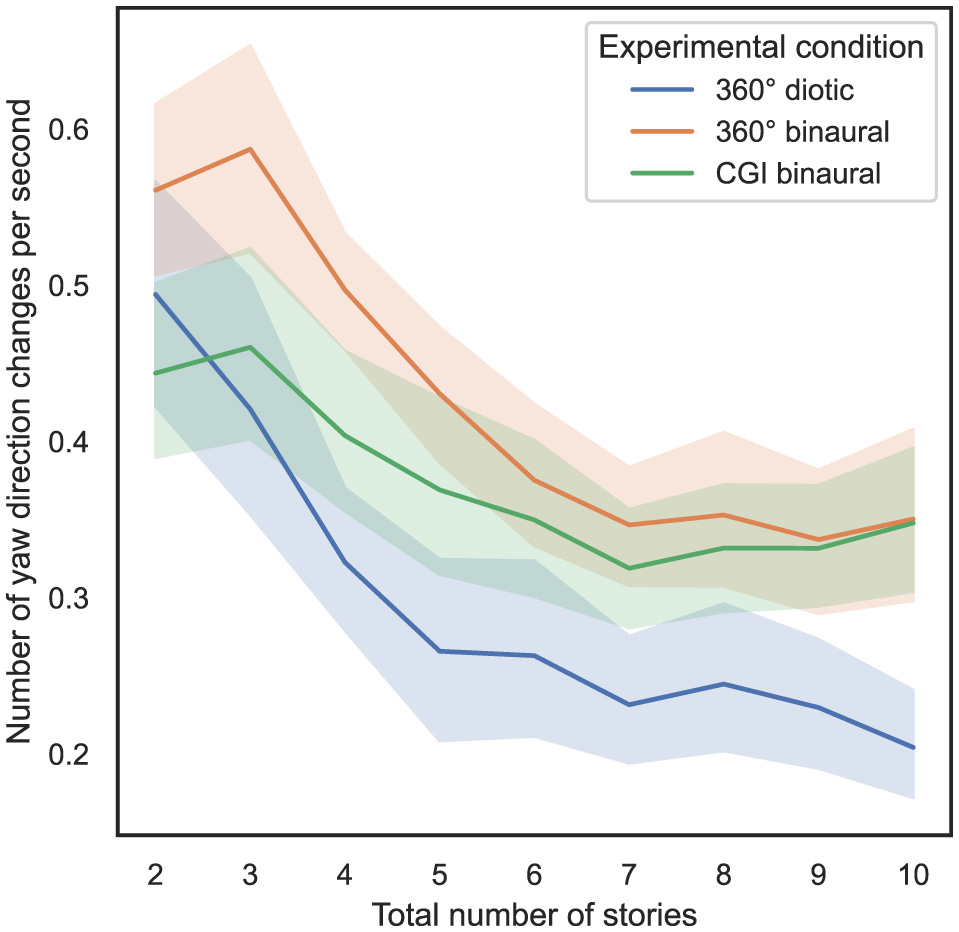

Additionally, in Figure 9 another variant of this analysis is shown, while on the y-axis the number of yaw direction changes per second is shown, with 95% CIs.

Figure 9

Total number of stories (independent variable) vs. number of yaw direction changes per second (dependent variable) across the three different experimental conditions (cf. RQ3 in Section 1).

From Figure 9, it can be observed that as the number of stories presented simultaneously increases, the number of yaw direction changes per second decreases. To compute the ART model, the number of yaw direction changes per second was defined as a dependent variable, the total number of stories presented simultaneously was defined as a within-subject variable, and the experimental condition was defined as a between-subject variable. Subsequently, a nonparametric mixed ANOVA has been applied to the transformed data, while the detailed results are stated in Supplementary Section 1.6. Bonferroni-corrected contrast tests reveal that the experimental condition used had a significant impact on the number of yaw direction changes per second between all different tests (p < 0.05). Table 6 presents the results of Bonferroni-corrected contrast tests, with the number of yaw direction changes per second as the dependent variable.

Table 6

| Contrast | #S | A | B | p-corr |

|---|---|---|---|---|

| #S * T | 3 | 360° diotic | 360° binaural | 0.029 |

| #S * T | 4 | 360° diotic | 360° binaural | 0.00006 |

| #S * T | 5 | 360° diotic | 360° binaural | 0.00005 |

| #S * T | 6 | 360° diotic | 360° binaural | 0.006 |

| #S * T | 7 | 360° diotic | 360° binaural | 0.008 |

| #S * T | 8 | 360° diotic | 360° binaural | 0.028 |

| #S * T | 9 | 360° diotic | 360° binaural | 0.02 |

| #S * T | 9 | 360° diotic | CGI binaural | 0.03 |

| #S * T | 10 | 360° diotic | 360° binaural | 0.0008 |

| #S * T | 10 | 360° diotic | CGI binaural | 0.0008 |

Results of Bonferroni-corrected contrast tests applied to computed ART model, dependent variable: number of yaw direction changes per second.

Only significant effects were reported.

#S * T refers to the total number of stories presented simultaneously * the experimental condition specified in “A” and “B” (cf. RQ3 in Section 1).

In summary, regarding RQ3, hypothesis H3 can be partially falsified in relation to the total number of yaw direction changes, as no significant differences were observed between most of the experimental conditions. Regarding the number of yaw direction changes per second, significant differences can mainly be observed between the 360° diotic and binaural experimental conditions across almost all levels of total stories presented simultaneously.

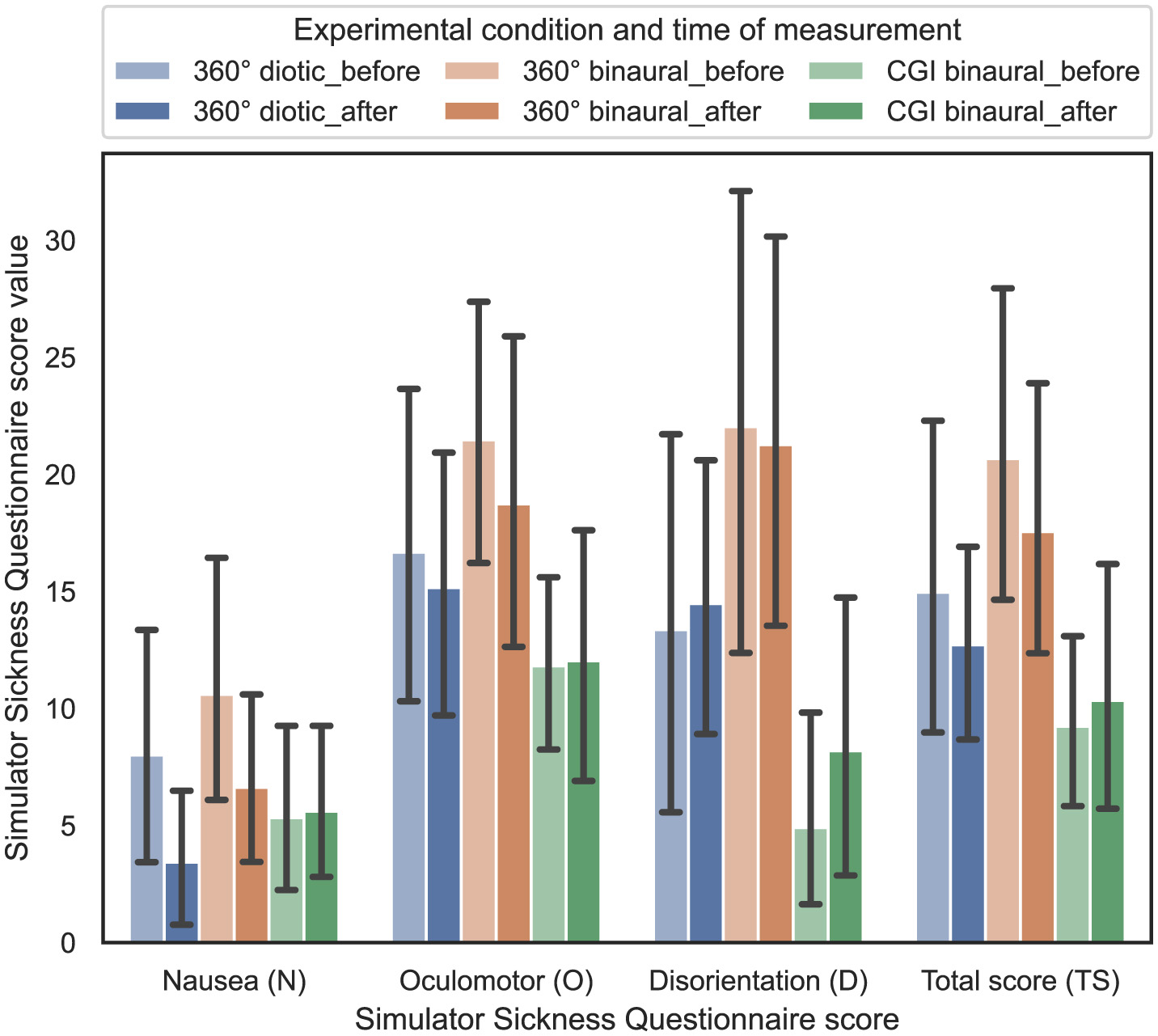

4.7 Influence of experimental condition and time of measurement on simulator sickness

In order to answer RQ4 stated in Section 1, the influence of the experimental condition and time of measurement on the perceived amount of simulator sickness is analyzed. Based on Kennedy et al. (1993), three different scores of the SSQ were calculated from the questions, resulting in nausea (N), oculomotor (O), and disorientation (D). From these three single scores, the total score (TS) was computed. In Figure 10, the resulting SSQ scores before and after each test are shown with 95% CIs.

Figure 10

Simulator Sickness Questionnaire score values (dependent variable) vs. experimental condition and time of measurement (independent variable), that is, before or after the test across the three different experimental conditions (cf. RQ4 in Section 1).

For statistical analysis, an ART model has been computed for all four different SSQ scores. In contrast, the respective SSQ score was defined as the dependent variable, while the time of measurement—before or after the test—was defined as the within-subject variable. Afterwards, nonparametric one-way ANOVAs were calculated for the four different SSQ scores. The results revealed that the effect of the time of measurement is statistically significant for the nausea score [F(1, 165) = 4.27, p = 0.04]. Further, the results revealed that the effect of the used experimental condition is statistically significant for the oculomotor score [F(2, 146) = 4.49, p = 0.013], the disorientation score [F(2, 146) = 8.41, p = 0.0003], and the total score [F(2, 146) = 6.88, p = 0.001]. However, subsequent Bonferroni-corrected contrast tests indicate that the effect of the combination of experimental condition and time of measurement on each of the SSQ scores was not significant (p>0.05).

In summary, regarding RQ4, hypothesis H4 is confirmed, as simulator sickness levels (before/after the experiment) do not differ significantly between the visual IVE representations or audio conditions.

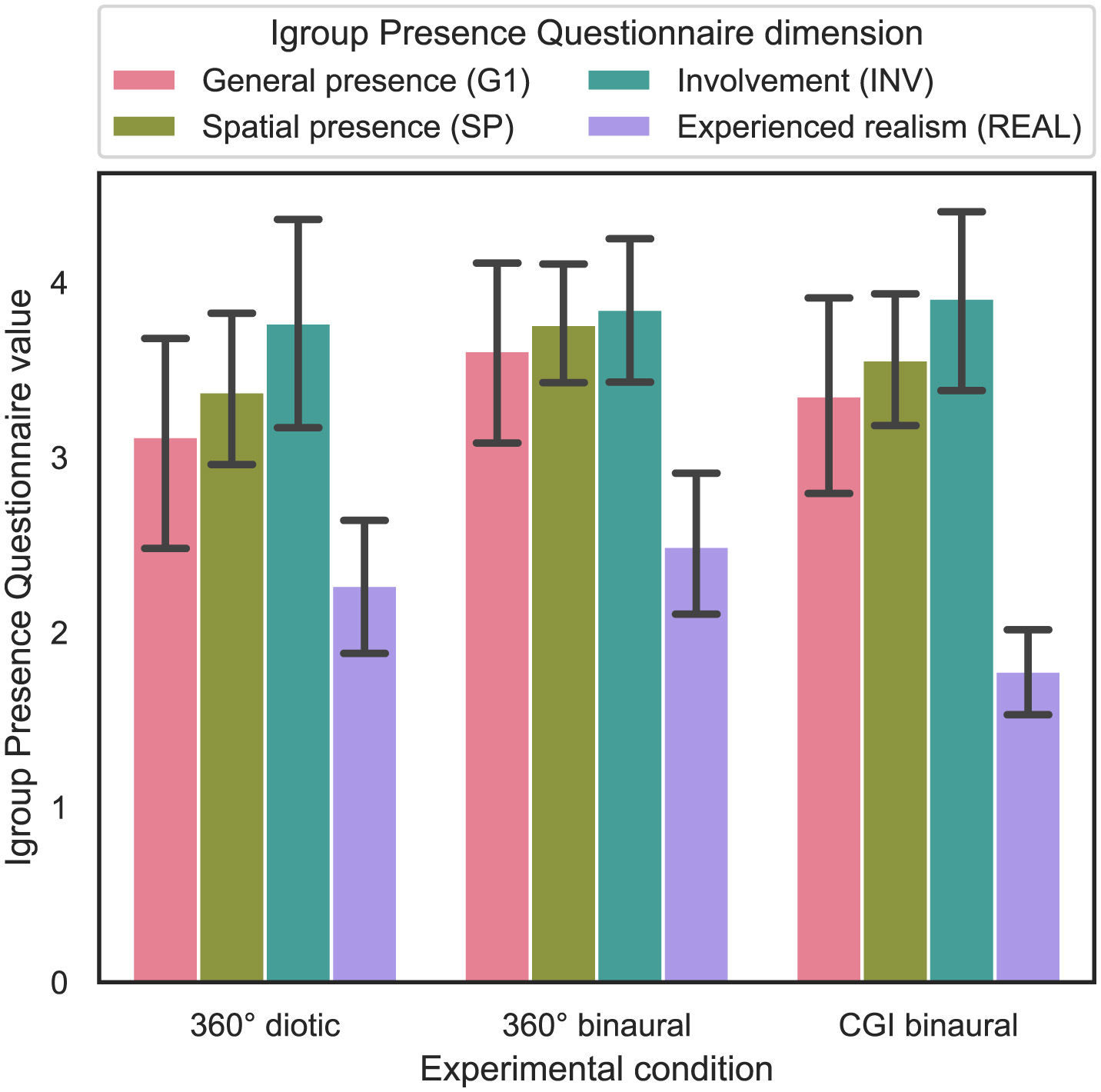

4.8 Influence of experimental condition on presence

In order to answer RQ4 stated in Section 1, the influence of the experimental condition on the perceived amount of presence is analyzed. In all three subjective tests, participants were asked to complete the IPQ once after the experiment. Based on Schubert et al. (2001), four different dimensions of the IPQ were calculated from the questions, namely general presence (G1), spatial presence (SP), participation (INV) and experienced realism (REAL). In Figure 11, the resulting IPQ scores are shown with 95% CIs.

Figure 11

Experimental condition (independent variable) vs. Igroup Presence Questionnaire dimension values (dependent variable) across the three different experimental conditions (cf. RQ4 in Section 1).

For statistical analysis, an ART model was computed for all four different IPQ dimensions, with the IPQ score as the dependent variable and the experimental condition as a between-subject variable. Subsequently, a nonparametric one-way ANOVA was calculated for the four different IPQ dimensions. The results show that only the effect of the experimental condition on the REAL dimension is statistically significant [F(2, 92) = 4.43, p = 0.015]. Subsequent Bonferroni-corrected contrast tests indicate that the effect of the experimental condition on the REAL dimension is only statistically significant between the 360° binaural and the CGI binaural experimental conditions, also reflected by the means (p = 0.015, 2.5 vs. 1.78). For the other dimensions of the IPQ, no statistically significant effects could be found.

In summary, regarding RQ4, hypothesis H4 is falsified, as presence levels do not differ significantly between the visual IVE representations or audio conditions, except for the REAL dimension, where a significant difference was observed between the 360° binaural and CGI binaural experimental conditions.

5 Discussion

This study proposes a psychometric method to investigate audiovisual interaction effects by measuring cognitive performance in three different audiovisual experimental conditions with good speech intelligibility. To do so, either 360° video or CGI was used for the visual representation of speakers and two different acoustic conditions, diotic and binaural audio. To this end, three different experimental conditions were investigated between subjects in three experiments: 360° diotic, 360° binaural, and CGI binaural. In all three experiments, subjects had the same task of correctly assigning the story to the respective speaker, while up to 10 stories were played simultaneously, and visually, all 20 possible speakers were always shown. Understanding cognitive performance in these contexts, even under extreme conditions with 10 concurrent speakers, mainly facilitates a deeper understanding of audiovisual interplay and the limitations of attentional mechanisms using a realistic and controlled IVE setting. The observed differences between CGI and 360° video conditions, particularly regarding the absence of lip-sync in the CGI scenario, underline the role of audiovisual interactions in evaluating audiovisual scene analysis performance. Although the hypotheses did not explicitly focus on audiovisual interaction, the results clearly demonstrate that subtle audiovisual cues, such as synchronized speech movements, significantly impact cognitive load and task performance. This study quantifies the scalability of audiovisual performance and cognitive load, leading to an understanding of audiovisual aspects that extends beyond simplified experimental scenarios often used in previous research. It also provides a valuable approach for improving real-world classroom acoustics in the future, for example, by using the integrated Virtual Acoustics naturalization software to simulate specific classroom acoustic settings. The answers to the four research questions raised at the beginning of this study in Section 1 and whether the four hypotheses also stated could be verified or falsified will be discussed as follows.

5.1 Correspondence of task performance with a previous study by Ahrens et al.

With RQ1, cf. Section 1, it was aimed to investigate how the task performance correlates with the results of Ahrens et al. (2019) for different levels of the total number of stories presented simultaneously across the two visual IVE representations (360° video vs. CGI) and audio conditions (binaural vs. diotic). In H1, cf. Section 1, it was hypothesized that task performance in both visual IVE representations (360° video and CGI) shows a strong correspondence with the performance observed in the study by Ahrens et al. (2019), regardless of whether the audio condition is binaural or diotic.