- 1School of Journalism and New Media, Xi'an Jiaotong University, Xi'an, Shaanxi, China

- 2Research Center of New Media and Rural Revitalization, Xi'an, Shaanxi, China

Objective: With the rapid development and widespread adoption of generative artificial intelligence (GenAI) technologies, their unique characteristics—such as conversational capabilities, creative intelligence, and continuous evolution—have posed challenges for traditional technology acceptance models (TAMs) in adequately explaining user adoption intentions. To better understand the key factors influencing users' acceptance of GenAI, this study extends the AIDUA model by incorporating system compatibility, technology transparency, and human-computer interaction perception. These variables are introduced to systematically explore the determinants of users' intention to adopt GenAI. Furthermore, the study examines the varying mechanisms of influence across different user groups and application scenarios, providing theoretical insights and practical guidance for optimizing and promoting GenAI technologies.

Methods: During the data collection phase, this study employed a survey method to measure behavioral intentions and other key variables within the proposed framework. The survey design included demographic information about the respondents as well as detailed information related to their use of GenAI. In the data processing and analysis phase, structural equation modeling (SEM) was used to systematically examine the path relationships among the variables. Additionally, to compare variable relationships across subgroups, a multi-group structural equation modeling (MGSEM) analysis was conducted.

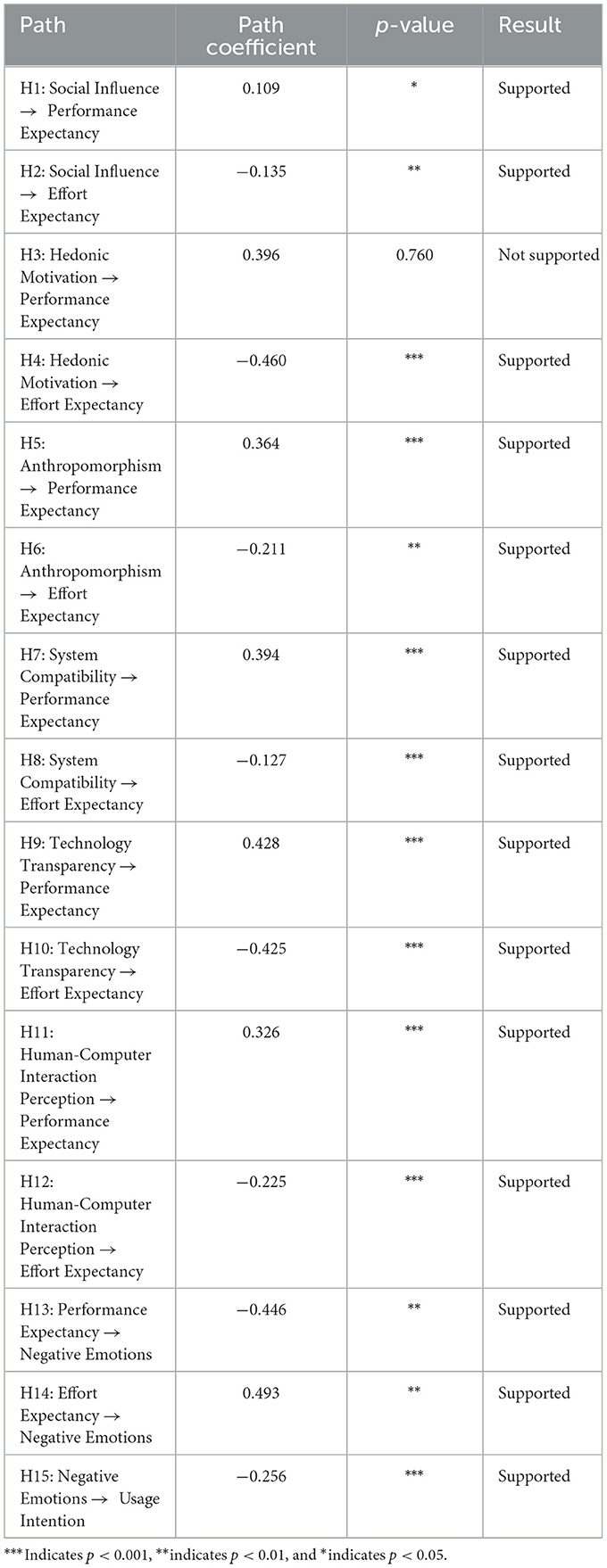

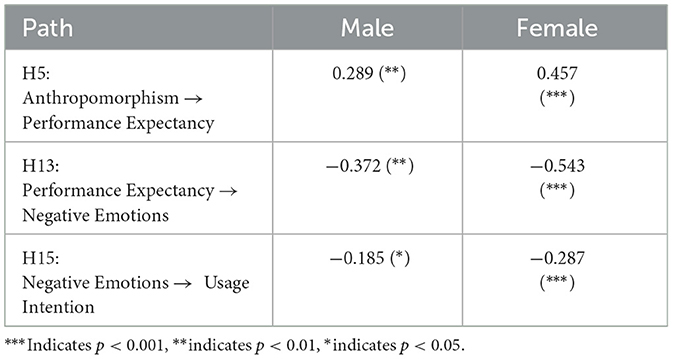

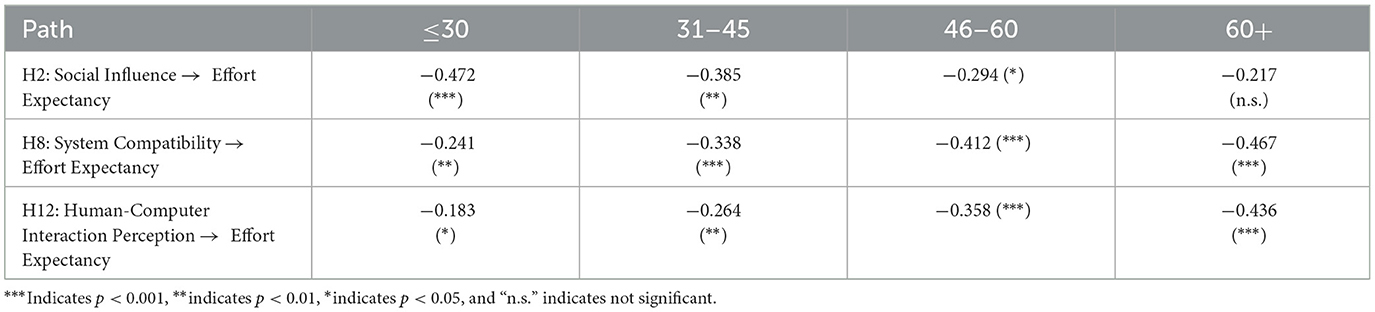

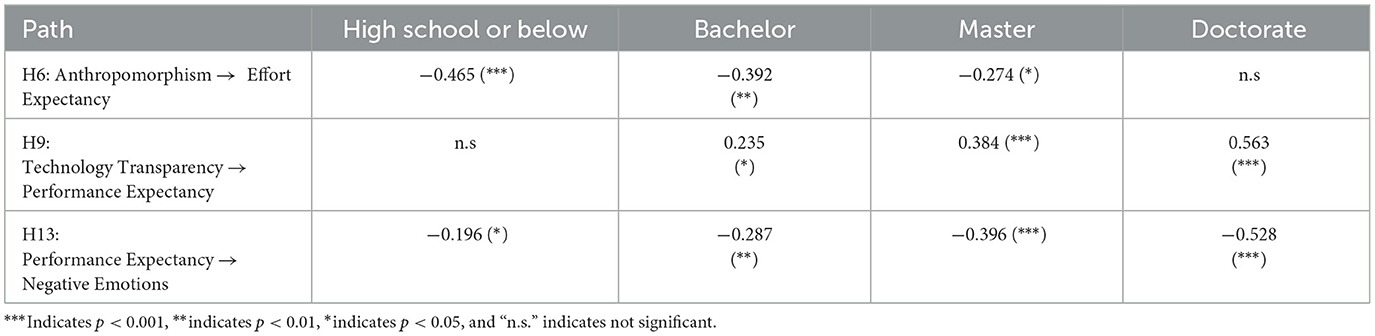

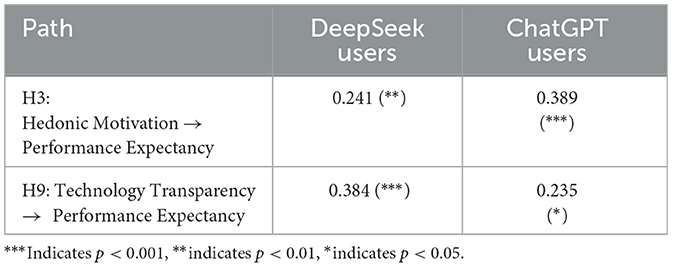

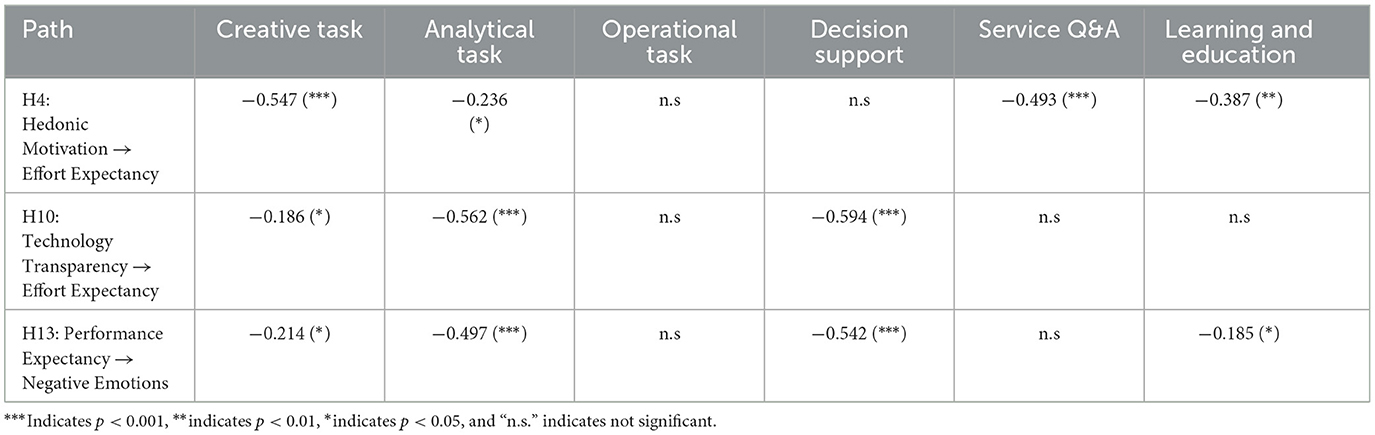

Results: (1) Effects on Key Expectations: Social influence significantly enhances performance expectancy (β = 0.109, p < 0.05) but negatively impacts effort expectancy (β = −0.135, p < 0.01). Hedonic motivation notably mitigates effort expectancy (β = −0.460, p < 0.001), yet shows no significant effect on performance expectancy (β = 0.396, p = 0.76). The newly extended variables—technological transparency (β = 0.428, p < 0.001), system compatibility (β = 0.394, p < 0.001), and human-computer interaction perception (β = 0.326, p < 0.001)—demonstrate positive influences on performance expectancy while generally mitigating effort expectancy. (2) Emotional Mechanisms: Performance expectancy significantly mitigates negative emotions (β = −0.446, p < 0.01), while effort expectancy significantly increases negative emotions (β = 0.493, p < 0.001). Negative emotions exert a significant negative influence on usage intention (β = −0.256, p < 0.001). (3) The MGSEM analysis revealed significant heterogeneity in the extended AIDUA model paths across different user segments. Specifically, systematic variations were observed across demographic characteristics (gender, age, and educational level), occupational backgrounds, and usage patterns (task types and AI tool preferences). These findings underscore the heterogeneous nature of generative AI acceptance mechanisms across diverse user populations and usage contexts.

Discussion: This study reveals several key findings within the extended AIDUA model. Our results indicate that technological transparency emerges as the strongest predictor of performance expectancy, alongside system compatibility and human-computer interaction perception, significantly enhancing users' perceived system performance. Regarding effort expectancy, hedonic motivation and technological transparency demonstrate the most prominent effects, implying that system design should emphasize an enjoyable user experience and transparency. Notably, hedonic motivation shows no significant influence on performance expectancy, contradicting our initial hypothesis. Furthermore, the MGSEM analysis reveals significant heterogeneity in acceptance mechanisms across user groups, providing crucial implications for the differentiated design of GenAI systems tailored to diverse user needs.

1 Introduction

With the rapid advancement of Artificial Intelligence Generated Content (AIGC), artificial intelligence technology is profoundly transforming various facets of societal production and individual daily life (Wu et al., 2024). AIGC represents a burgeoning technology that leverages AI models to automatically generate multimodal content—including images, text, and videos—tailored to user requirements. The widespread application of this technology is not only reshaping creative production and problem-solving processes but also highlighting the extensive potential of AI technologies across a diverse range of user demographics (Setiawan et al., 2023). The launch of ChatGPT, in particular, has propelled AIGC into the limelight. Developed by OpenAI, ChatGPT is a sophisticated language generation model capable of comprehending complex human language and producing human-like conversational content. Its responses are distinguished by natural interactivity, personalization, and high accuracy. Since its release, ChatGPT has captivated societal interest and demonstrated transformative potential in numerous fields, including education, healthcare, research assistance, and content creation (Wang Y. et al., 2023).

The continuous evolution and differentiated development of AIGC tools have resulted in a diverse array of intelligent applications. ChatGPT, as an advanced natural language generation model, excels at providing engaging conversational interfaces, thereby enhancing user engagement and interactivity (Naveed et al., 2023). Conversely, DeepSeek serves as an AI platform dedicated to data analysis and reasoning, prioritizing scientific reasoning and transparent data processing to offer robust logical support and decision-making assistance (Lu et al., 2024). This emphasis on transparent data logic fosters user trust and cognitive involvement. The distinct functional goals and technical characteristics of these tools may lead to divergent pathways in user acceptance (Xu et al., 2022). However, research examining the mechanisms of user acceptance and the differentiated impacts of ChatGPT and DeepSeek remains limited, creating a theoretical gap that restricts a comprehensive understanding of how users perceive and select among various AI tools and the behavioral mechanisms underlying such choices.

Traditional technology acceptance models, such as the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT), serve as important theoretical frameworks for studying user technology adoption behavior. Proposed by Davis, TAM predicts users' behavioral intentions to adopt a technology primarily through two core variables: perceived usefulness and perceived ease of use (Ammenwerth, 2019). In contrast, UTAUT integrates multiple extended variables to enhance its generalizability, including social influence, performance expectancy, and effort expectancy, among others (Kayali and Alaaraj, 2020). These models have demonstrated high applicability in explaining user adoption behaviors for various technologies with linear functionalities, such as office software, social media tools, and mobile payment platforms (Salimon et al., 2023).

However, these traditional models exhibit several significant limitations when applied to studying artificial intelligence technologies characterized by higher complexity and dynamic features, such as AIGC tools: (1) Overlooking complex interaction experiences and anthropomorphism. TAM and UTAUT are more suited to stable technologies with straightforward functionalities, focusing on aspects like improving work efficiency or ease of use (Kim, 2014). In contrast, AIGC tools, such as ChatGPT and DeepSeek, emphasize rich human-computer interaction and anthropomorphic features as their core appeal (Chou et al., 2022). By leveraging natural language processing, these tools engage users in deep, meaningful dialogues, offering a highly interactive and anthropomorphic experience, which represents a new user experience paradigm. (2) Absence of a transparency dimension. AIGC tools often exhibit a substantial “black-box” attribute, where users are unable to clearly understand the tools' operational logic or the rationale behind their generated content (Carabantes, 2020). Transparency, as a critical dimension, plays a pivotal role in enhancing user trust and driving adoption behavior. However, neither TAM nor UTAUT incorporates transparency into their frameworks, overlooking its unique impact in the context of artificial intelligence technologies. (3) Limited focus on system compatibility. Traditional TAMs largely overlook system compatibility, despite its significance in shaping user adoption decisions. AIGC tools, characterized by diverse application scenarios, rely heavily on seamless integration across platforms, environments, and workflows, which directly influences cross-system operability and user collaboration (Liu et al., 2024). However, traditional models fail to capture the dynamic interplay between technological features and application contexts, limiting their explanatory power in the adoption of AIGC tools.

To address the aforementioned limitations and better capture how users evaluate complex AI tools such as ChatGPT and DeepSeek, this study extends the Artificially Intelligent Device Use Acceptance (AIDUA) framework by introducing three key variables: system compatibility, technology transparency, and human-computer interaction perception. These variables not only strengthen the framework's multidimensional explanatory power for understanding the acceptance pathways of AIGC tools but also provide empirical support for optimizing the design and user experience of generative AI technologies.

The introduction of system compatibility reflects a critical evaluation of technology acceptance theories in AIGC contexts (Ma and Huo, 2023). Drawing from the diffusion of innovations theory, system compatibility traditionally emphasizes the alignment of technology with user values. However, this approach does not fully capture the integration challenges specific to AIGC tools in complex application environments. To address this limitation, this study refines system compatibility into two dimensions: functional compatibility, which examines the alignment with workflows and user practices (Schuengel and van Heerden, 2023), and technical integration, which emphasizes interoperability and cross-platform adaptation (Vorm and Combs, 2022). These refinements offer a multidimensional framework that enhances traditional models and provides deeper insights into the adoption mechanisms of AIGC tools.

Technology transparency directly tackles the “black-box effect” inherent in AI systems, a significant challenge that undermines user trust (Harris and Blair, 2006). While existing TAMs partially address explainability, they often overlook transparency demands unique to AIGC tools. Based on research into AI trust formation (Kuhlmann et al., 2019), this study reconceptualizes transparency across two dimensions: transparency cognition, which focuses on users' understanding of the decision-making processes, and operational clarity, which highlights the predictability of system functionalities. By offering a structured framework for understanding AI transparency, this study provides critical insights into mitigating trust issues caused by system opacity.

Human-computer interaction perception bridges the gap in evaluating anthropomorphic and interactive experiences in AI systems (Wang and Qiu, 2024). Conventional models such as UTAUT fail to account for the intelligent and dynamic characteristics unique to AIGC systems. To address these shortcomings, this study refines human-computer interaction perception into two dimensions: interaction naturalness, which reflects semantic understanding and conversational fluency, and response sensitivity, which evaluates the precision and efficiency of task execution. These constructs advance the boundaries of technology acceptance research and contribute to a user-centered framework for analyzing AIGC adoption mechanisms.

In the context of AIGC, technology acceptance behavior is shaped by diverse factors, including individual characteristics (such as age and education level), usage scenarios (occupation), and task requirements (AI tool type, task type). These factors not only influence technology preferences and adoption willingness but also alter the strength of the key factors driving acceptance pathways, thereby introducing heterogeneity in behavioral mechanisms across different user groups. To address this complexity, this study employs MGSEM to segment the sample and systematically compare the effects of key variables on adoption pathways across identified user groups. By uncovering these group-level differences, this approach provides both theoretical insights and practical guidance for designing personalized strategies to enhance the adoption of AIGC tools.

Building on these analyses, this study extends the AIDUA framework to better capture the unique adoption mechanisms of AIGC tools. The primary contributions are as follows: (1) Theoretical Framework Expansion: By incorporating three critical dimensions (system compatibility, technological transparency, and human-computer interaction perception) into the AIDUA model, this study provides a refined framework to explain technology acceptance behaviors in AI-driven scenarios. These dimensions address the limitations of existing models and offer novel insights into the adoption mechanisms of AI technologies in complex application environments. (2) Differentiated Application Research: This study compares two functionally distinct AIGC tools, ChatGPT and DeepSeek, to investigate how differences in technological features shape user adoption pathways. This comparative analysis enriches the practical applications of technology acceptance frameworks within the AIGC domain and provides empirical evidence for understanding the adoption mechanisms specific to different AI tools. (3) Exploration of Individualized User Behavior: Leveraging MGSEM, this study examines the moderating effects of user characteristics, such as age, education, profession, and task type, on AIGC adoption pathways. By uncovering heterogeneous mechanisms in user acceptance behavior, this research provides theoretical insights and practical strategies for personalized tool design, targeted promotion, and user experience optimization.

To achieve the research objectives, this study employed a questionnaire survey designed around core variables, targeting user groups with diverse backgrounds in age, education, and occupation to ensure representativeness. SEM was used to assess the fit of the theoretical framework and examine relationships between variables. Additionally, MGSEM explored differences in technology acceptance pathways between ChatGPT and DeepSeek, as well as group-level variations based on user characteristics.
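One simple way to picture a group comparison of this kind is a pairwise test on a single path coefficient estimated separately in two groups. The following sketch is a simplified stand-in and not necessarily the MGSEM procedure used in this study, which may instead rely on comparing constrained and unconstrained models. The function name and all numeric inputs are hypothetical and chosen only for illustration.

```python
from math import erfc, sqrt


def path_difference_z(b1, se1, b2, se2):
    """Compare one path coefficient across two independent groups.

    b1, b2   : unstandardized path estimates in group 1 and group 2
    se1, se2 : their standard errors
    Returns (z, p), where p is the two-sided p-value under a standard
    normal reference distribution.
    """
    z = (b1 - b2) / sqrt(se1 ** 2 + se2 ** 2)
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for the standard normal CDF Phi.
    p = erfc(abs(z) / sqrt(2))
    return z, p


if __name__ == "__main__":
    # Hypothetical estimates for the same path in two user groups
    # (these numbers do NOT come from the study).
    z, p = path_difference_z(b1=0.50, se1=0.08, b2=0.20, se2=0.10)
    print(f"z = {z:.3f}, two-sided p = {p:.4f}")
```

A large absolute z (small p) would suggest that the path differs between the two groups. With more than two groups or many paths, such pairwise tests would need a correction for multiple comparisons.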

The structure of this paper is as follows: Section 1: Introduction. This section provides a systematic overview of the research background and theoretical gap, clarifying the research questions as well as their theoretical and practical significance. Section 2: Literature Review. This section reviews existing studies from three dimensions: user adoption intentions for generative AI, TAMs, and cognitive evaluation theories, identifying theoretical gaps. Section 3: Theoretical Framework. Based on the literature review, this section constructs an integrated research model and proposes corresponding research hypotheses. Section 4: Research Design. This section details the operationalization of variables, data collection methods, and analytical strategies. Section 5: Empirical Analysis. This section presents descriptive statistics, reliability and validity tests, hypothesis testing results, and an in-depth discussion of the findings. Section 6: Research Conclusions. This section summarizes the main findings, discusses theoretical contributions and practical implications, and highlights research limitations and directions for future studies.

2 Literature review

2.1 Integration of generative AI users' acceptance willingness: a systematic literature review from the two-dimensional perspective of technical and social attributes

GenAI has developed at an unprecedented pace. Applications based on large language models (LLMs), such as ChatGPT, have significantly expanded the boundaries of human-computer interaction, driving transformative changes across various domains, including education, healthcare, and creative industries. Unlike traditional information technologies, GenAI exhibits a high degree of autonomy, complexity, and intelligence, with technical characteristics and user interaction contexts that are notably more dynamic and flexible. These unique features have introduced unparalleled complexity to the mechanisms underlying user acceptance intentions, posing significant challenges to existing theoretical frameworks.

A systematic review of the literature reveals three key limitations in current research: First, most studies adopt a single perspective—either technological or social—to analyze user behavior, resulting in the phenomenon of “theoretical silos.” For instance, studies based on TAM primarily focus on technological functionality variables while neglecting users' socio-emotional needs. Conversely, research rooted in social cognition emphasizes interactive experiences but overlooks the foundational influence of technological usability on user decision-making (Silva, 2015). Second, there is insufficient coverage of critical variables, particularly in the emerging Generative AI context. Variables such as technology transparency, system compatibility, and human-computer interaction perception have yet to be systematically integrated into user acceptance models. Third, while traditional technology acceptance frameworks (such as TAM and UTAUT) are effective in explaining rational decision-making, they lack the necessary explanatory power to capture the complex mechanisms involving emotional, interactive, and social experiences within Generative AI environments.

To address these research gaps, this study conducts a systematic literature review, organizing existing findings along two dimensions: technological attributes and social attributes. Based on this review, an integrated analytical framework is proposed to provide a more comprehensive understanding of user acceptance mechanisms in the context of Generative AI.

In the study of user adoption intentions for GenAI, the rational perspective based on technological attributes remains one of the dominant approaches. Grounded in the TAM, the constructs of perceived usefulness and perceived ease of use are considered core factors in predicting users' acceptance behaviors (Granić and Marangunić, 2019). Research has shown that when users believe that GenAI can effectively improve productivity or learning outcomes, their adoption intentions are significantly enhanced. The UTAUT further extends these core constructs by introducing additional dimensions such as performance expectancy, effort expectancy, and facilitating conditions, all of which have been empirically verified to have strong predictive power (Legris et al., 2003).

However, the inherent complexity of GenAI presents new challenges to these traditional TAMs. Models such as the TAM and the UTAUT often fail to account for certain characteristics that distinguish GenAI from conventional technologies. For example, recent research has started to explore the impact of novel variables, including transparency and system compatibility, which are particularly relevant to the unique features of GenAI. Transparency in the context of technology refers to the degree to which information is communicated to users in an open and clear manner (Gilpin et al., 2018). This variable is particularly significant in building users' trust in the technology and in alleviating their anxieties about its use. Studies suggest that when GenAI provides users with more transparent information—such as the basis for content generation or clear explanations of algorithmic intent—adoption intentions are markedly improved (Zerilli et al., 2022). System compatibility, by contrast, emphasizes the degree to which new technologies align with users' existing technological ecosystems, workflows, and cognitive habits. The multi-scenario applications of GenAI require that it be seamlessly integrated into users' existing systems, such as office productivity tools or enterprise management platforms, to reduce usage barriers and migration costs (Bansal et al., 2019). Nonetheless, current research remains insufficient in its examination of these critical variables, particularly in the context of individual users' experiences with GenAI. This highlights the need for more in-depth studies to better understand how these emerging factors influence user adoption intentions.

The application of GenAI is influenced not only by its technological attributes but also significantly by its social attributes, which have emerged as a critical dimension in the study of user adoption intentions. Specifically, users' acceptance behaviors toward GenAI are not solely driven by rational cognition but are also closely intertwined with psychological emotions and social perceptions.

Anthropomorphism is one of the central topics in the exploration of social attributes associated with GenAI (Złotowski et al., 2015; Salles et al., 2020). Drawing from the Computers Are Social Actors theory and social response theory, it has been found that when GenAI exhibits human-like characteristics—such as emotional expression, natural tones, and responsive interactions—users perceive it as a social entity. This perception fosters emotional bonds, thereby enhancing acceptance. Research has shown that when tools such as ChatGPT incorporate warm qualities—such as humor or personalized tones—while responding to user queries, user satisfaction and trust are significantly increased (Schneider et al., 2019).

Human-Computer Interaction Perception constitutes a key social cognitive dimension shaping user adoption intentions (Diederich et al., 2022). Grounded in social presence theory and media richness theory, users' interactive experiences with GenAI directly influence their attitudes and intentions for continued use. Studies demonstrate that when users perceive the interaction to exhibit high levels of conversational coherence, context comprehension, and social reciprocity, their acceptance significantly improves. Unlike anthropomorphism, which emphasizes the human-like attributes of Artificial Intelligence, Human-Computer Interaction Perception focuses more on the quality and experience of the interaction process. It serves as a crucial bridge connecting the technological capabilities of GenAI with the psychological responses of users.

Emotional factors also play a pivotal role in the study of social attributes. Drawing from affective computing theory, users' emotional reactions during technological interactions directly regulate their adoption intentions. Positive emotions—such as satisfaction and trust—significantly enhance technology acceptance, whereas negative emotions—such as anxiety or concern about the technology—can hinder decision-making (Fernández-Batanero et al., 2021; Gelbrich and Sattler, 2014).

Additionally, Social Influence and Hedonic Motivation have garnered increasing attention for their roles in shaping user adoption intentions for GenAI (Lee et al., 2006; Inan et al., 2022). Within the frameworks of the UTAUT and the Uses and Gratifications Theory, Social Influence is recognized as a key external factor affecting users' decision-making. For example, recommendations from peers, experts, or online communities can strengthen users' positive attitudes toward GenAI (Sudirjo et al., 2023). Furthermore, GenAI can attract users by providing pleasure, enjoyment, and entertainment value. Personalized creativity and diverse expressive formats during interactions with tools like ChatGPT have been found to significantly stimulate users' intentions to adopt the technology.

Unlike existing research that adopts isolated perspectives, this study integrates both technological and social attributes, encompassing characteristics related to GenAI, and constructs a dual-faceted research framework. This study makes several key contributions to theory and methodology.

First, it develops an integrated dual-perspective framework through theoretical triangulation, unveiling the pathways through which technological and social attributes influence user adoption intentions. By moving beyond the limitations of a single-theory perspective, this integrated framework offers a more robust analytical tool for studying GenAI. Second, it introduces and validates critical variables (specifically, system compatibility, technological transparency, and human-computer interaction perception) and examines their varying impacts across different user groups and application scenarios. This approach enriches the contextual adaptability of acceptance theories related to GenAI. Third, it extends the boundaries of applicability of traditional TAMs to the unique environment of GenAI, thereby addressing the limitations of traditional models in adequately explaining user acceptance in this context.

2.2 The systematic evolution of the technology acceptance model: from simplistic static frameworks to complex dynamic theoretical advancements

TAMs have undergone an evolutionary journey, moving from simplicity to complexity and from static frameworks to dynamic theoretical advancements. From the original TAM to the UTAUT, and more recently to frameworks such as the AIDUA model, these theoretical structures have been progressively enriched and refined to better address the complexity of user behavior in new technological environments.

The TAM is a widely applied theoretical tool in the field of information behavior, designed to explore individuals' acceptance of new technologies. Grounded in the Theory of Reasoned Action, the TAM hypothesizes that two key constructs—perceived usefulness and perceived ease of use—are the primary determinants of users' intention to adopt a given technology (Bawack et al., 2023). These constructs directly influence individuals' acceptance decisions regarding new technologies and, subsequently, drive actual usage behaviors. While the original TAM is admired for its simplicity and elegance, its overly reductive assumptions have faced considerable criticism. Many scholars point out that the model overlooks the social dimensions of technology adoption and argue that relying solely on perceived usefulness and perceived ease of use is insufficient to explain the diversity of user behaviors, particularly in complex technological environments (Sok et al., 2021). This limitation becomes even more pronounced in the context of artificial intelligence devices, as these technologies often feature capabilities such as self-learning and autonomous decision-making, which add layers of complexity to user interaction. Under such circumstances, the explanatory power of the original TAM is significantly constrained.

To overcome the limitations of the TAM, the UTAUT was introduced. By retaining the core ideas of the TAM while integrating several major theoretical frameworks on technology acceptance, the UTAUT offers a more comprehensive and inclusive framework (Dwivedi et al., 2019). Among its key constructs, performance expectancy builds upon and extends the concept of perceived usefulness from the TAM, while effort expectancy originates from perceived ease of use. Additionally, the UTAUT incorporates new dimensions, such as social influence and facilitating conditions, thereby significantly broadening its explanatory scope (Choe et al., 2021). This theoretical integration enables the UTAUT to more accurately predict individuals' acceptance behaviors across diverse technological contexts.

Subsequently, the UTAUT 2 further enhanced the model's explanatory power in the domain of consumer technologies by introducing constructs such as hedonic motivation, price value, and habit (Afifa et al., 2022). These additions allowed the UTAUT 2 to place greater emphasis on consumers' intrinsic motivations, particularly the role of enjoyment and entertainment in influencing users' behavioral intentions to adopt technology. As a result, the model has been widely applied in studies on commercial and consumer-oriented technologies (Edo et al., 2023). However, despite these advancements, such models still primarily focus on traditional technology adoption contexts, limiting their applicability to explaining the adoption mechanisms of more complex technologies like artificial intelligence devices. In particular, when it comes to applications of GenAI, traditional models often fall short in capturing the impacts of key factors such as user emotions, social interactions, and the intelligent characteristics of the technology.

Addressing this issue, the AIDUA model represents a significant theoretical breakthrough in the field of technology acceptance research (Rudhumbu, 2022). The model's theoretical innovation lies in its departure from the static analytical frameworks of traditional TAMs. By integrating Social Response Theory and Human-Computer Interaction Theory, the AIDUA model provides a more comprehensive theoretical explanation for understanding user adoption behaviors toward artificial intelligence technologies (Schmitz et al., 2022). This model adopts a staged approach, examining user experiences across three key phases: the initial evaluation phase, the intermediate evaluation outcome phase, and the final decision-making phase. Through this framework, the model delves deeply into the process of user acceptance of GenAI technologies. Recent empirical studies have validated the predictive power of the AIDUA model in the context of GenAI, with particular effectiveness in explaining user adoption behaviors concerning large language model tools. These findings highlight the model's notable advantages in addressing complex adoption mechanisms unique to artificial intelligence applications.
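The staged structure can be made concrete with a small worked calculation. The sketch below chains the standardized path coefficients reported in this paper's abstract through the stages using the common product-of-coefficients rule. The resulting indirect effects are illustrative arithmetic only: they are not reported by the study, their significance is not tested here, and the variable names are hypothetical labels chosen for this example.

```python
from math import prod

# Standardized path coefficients as reported in the abstract (Results).
# The dictionary keys are hypothetical labels, not the study's variable codes.
PATHS = {
    ("technological_transparency", "performance_expectancy"): 0.428,
    ("system_compatibility", "performance_expectancy"): 0.394,
    ("hci_perception", "performance_expectancy"): 0.326,
    ("social_influence", "performance_expectancy"): 0.109,
    ("performance_expectancy", "negative_emotions"): -0.446,
    ("effort_expectancy", "negative_emotions"): 0.493,
    ("negative_emotions", "usage_intention"): -0.256,
}


def indirect_effect(chain):
    """Multiply the coefficients along each consecutive link of a path."""
    return prod(PATHS[(src, dst)] for src, dst in zip(chain, chain[1:]))


if __name__ == "__main__":
    chains = [
        ["performance_expectancy", "negative_emotions", "usage_intention"],
        ["effort_expectancy", "negative_emotions", "usage_intention"],
        ["technological_transparency", "performance_expectancy",
         "negative_emotions", "usage_intention"],
    ]
    for chain in chains:
        print(" -> ".join(chain), f"{indirect_effect(chain):+.3f}")
```

Under these assumptions, the chain through performance expectancy yields a positive indirect effect on usage intention (two negative links multiply to a positive value), whereas the chain through effort expectancy yields a negative one. A formal mediation analysis would additionally require bootstrapped confidence intervals on the raw data.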

The AIDUA model offers three distinct theoretical advantages: (1) Integration of AI-Relevant Variables: It enriches the traditional TAM framework by incorporating variables aligned with AI technologies, such as anthropomorphism, while retaining the traditional model's strengths. (2) Multi-Perspective Approach: The model adopts a multi-perspective framework that synthesizes both technological and social viewpoints to comprehensively explore user acceptance mechanisms. (3) Multi-Phase Analysis: The model adopts a multi-phase analytical framework, allowing for a more dynamic exploration of the mechanisms underlying the formation of user acceptance intentions. Building on these advantages, this study selects the AIDUA model as its theoretical foundation, further expanding its scope to achieve a more precise analysis of the user acceptance mechanisms specific to GenAI. This approach aims to offer theoretical guidance for the design and application of related products and technologies.

2.3 The theoretical expansion and innovation of the AIDUA model: construct integration and a multidimensional analysis framework for artificial intelligence technology acceptance

With the rapid evolution of GenAI technology, the AIDUA model has emerged as a novel tool for validating technology acceptance mechanisms, distinguished by its multidimensional explanatory capacity that integrates social, psychological, and technological factors. It has increasingly become a focal point in the field of artificial intelligence technology acceptance. This theoretical framework has been successfully extended to diverse application scenarios, including banking services, intelligent healthcare, hotel services, and financial services. In these various contexts, researchers have thoroughly examined the critical roles of existing AIDUA variables, such as social influence, hedonic motivation, and anthropomorphism, in technology acceptance. They have also conducted targeted variable expansions to more accurately analyze the technology acceptance mechanisms within each specific scenario.

In the banking services sector, scholars have expanded the explanatory boundaries of the AIDUA model by integrating technology anxiety and risk aversion, revealing that these two emotional variables significantly and negatively influence customers' willingness to accept technology (Cintamür, 2024). Moreover, in another study on sustainable banking services, an extended factor such as technology literacy was found to have a significant positive effect on customers' emotions and performance expectations (Mei et al., 2024). Research in the field of chatbots has confirmed the influence mechanisms of extended factors such as perceived novelty on technology acceptance (Ma and Huo, 2023). Further studies have demonstrated that extended cognitive factors such as personalization, competence, enthusiasm, and empathy significantly enhance consumers' willingness to accept chatbots in contactless shopping scenarios (Kim and Hur, 2024). Research in healthcare services has delved into the significance of empathy and perceived interaction quality as extended variables within the AIDUA model, highlighting their crucial facilitative roles in patients' acceptance of medical service robots (Li et al., 2024). In the hotel service sector, studies further indicate that Generation Z's willingness to accept AI devices is closely associated with the frequency of smartphone usage (Vitezić and Perić, 2021). In specific cultural contexts, such as Indian restaurant environments, extended factors such as technology familiarity have a more pronounced impact on the acceptance of service robots (Pande and Gupta, 2023). Research in educational technology has found that the characteristics of technological tasks and their alignment with task suitability are critical extended factors for students' acceptance of multimodal large language models (Al-Dokhny et al., 2024). In the realm of fintech, a study on facial recognition payment has emphasized the facilitative role of technological extension factors, such as system compatibility, on the intention to continue using such technology (Lee and Pan, 2023).

Despite the significant theoretical insights provided by the aforementioned AIDUA extension studies into the mechanisms of artificial intelligence technology acceptance, there remain critical theoretical limitations: First, existing AIDUA extension research often treats emotional responses or cognitive factors as important antecedent variables, while overlooking the fact that emotions and cognition are inherently derivative responses to artificial intelligence technology. This oversight fails to adequately illuminate the fundamental generative logic behind these emotional or cognitive variables. Second, the existing AIDUA extension studies primarily focus on model validation within specific contexts, lacking generalizability across various scenarios. Furthermore, there is insufficient elaboration on individual background characteristics, which has hindered a comprehensive understanding of the heterogeneous impacts of individual differences on the acceptance of artificial intelligence technologies.

Building upon the identified theoretical gaps, this study intends to incorporate system compatibility, technological transparency, and human-computer interaction perception into the AIDUA model as core antecedent variables. The objective is to systematically address the limitations of existing AIDUA extension research and provide a more comprehensive analytical framework for understanding the complex mechanisms of artificial intelligence technology acceptance. The specific reasons are given as follows:

Firstly, the view that the fundamental attributes of technology, rather than users' cognitive and emotional factors, are the essential driving mechanism of technology acceptance is supported by the Evolutionary Theory of Technology Systems and Complex Adaptive Systems Theory. According to the Evolutionary Theory of Technology Systems, the inherent properties of technology not only influence user behaviors but also determine how technology continuously evolves through feedback interactions with users and their environments. The technological effectiveness and the match between technological evolution and needs constitute critical conditions for technological acceptance (Onik et al., 2017). The Complex Adaptive Systems Theory further deepens this understanding. This theory explores how the complexity and diversity of technological systems directly affect users' decision-making, adaptation strategies, and ultimately their degree of acceptance. The design, functionality, and adaptive capabilities of technology can shape users' learning approaches and feedback mechanisms during the usage process, thereby determining the extent of their acceptance and integration of new technologies. This perspective highlights the core role of technology in user adaptation and acceptance (Nan, 2011). In summary, viewing the fundamental attributes of technology as the primary driving factor and antecedent variable provides more robust theoretical support for understanding the dynamic mechanisms of technological acceptance.

Secondly, within the theoretical lineage of technological innovation, system compatibility, technological transparency, and perceived human-computer interaction constitute a multidimensional deconstruction of fundamental technological attributes. Among these, system compatibility, as a foundational attribute, provides a fundamental structural guarantee for technological acceptance. The theoretical connotation of system compatibility is rooted in the Technology-Task Matching Theory. This theory posits that within the ecosystem of technological innovation, compatibility primarily signifies the structural alignment between technological systems and specific task requirements. Such alignment transcends mere technical functional adaptation, representing a profound task-technology synergy aimed at establishing structural preconditions for technological innovation and mitigating systemic resistance to technological acceptance (Al-Rahmi et al., 2023). Technological transparency can be conceptualized as a connective attribute, with its theoretical foundation derived from Information Asymmetry Theory. Specifically, transparency plays a critical mediating role in technological systems by reducing information uncertainty and enhancing users' cognitive trust. Unlike the foundational assurance of compatibility, transparency manifests more as a connective mechanism, establishing an informational symmetry bridge between technology providers and users (Marwala and Hurwitz, 2015). Perceived human-computer interaction represents a high-order technological attribute. The human-machine interaction paradigm suggests that this attribute not only focuses on the external presentation of technological functionality but also emphasizes deep cognitive negotiation between users and technological systems. Compared to the foundational guarantee of compatibility and the connective mechanism of transparency, perceived human-computer interaction more distinctly embodies a high-order dimension of value realization, optimizing interaction pathways to enhance user experiential value (Hollender et al., 2010). In summation, these three attributes collectively constitute the fundamental properties of technology, providing a systematic theoretical perspective for understanding technological acceptance.

Furthermore, compared to the highly context-specific extended variables in existing AIDUA extension research, the three core constructs introduced in this study possess greater theoretical abstraction and generalizability. These constructs can transcend different technological ecosystems, revealing the deeper generative mechanisms of user technology acceptance behaviors, thereby providing a more macro and fundamental analytical framework for understanding the adoption of artificial intelligence technologies.

Finally, in contrast to the single-group structural equation models prevalent in existing research, this study employs an MGSEM approach to systematically examine the effects of multidimensional personal background factors, such as gender, age, education level, and occupation type, on the acceptance of artificial intelligence technologies. This methodological choice not only addresses the limited consideration of personal background variables in existing research but also offers a more nuanced and comprehensive analytical perspective for revealing the heterogeneity of user acceptance behaviors toward artificial intelligence technologies.
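To illustrate what a between-group comparison of a single path can look like, the following minimal sketch applies a Wald-type z-test to one path coefficient estimated separately in two independent groups. This is one common way of probing group differences and is shown only as an assumption; it is not necessarily the procedure used in this study (a chi-square difference test between constrained and unconstrained models is another common option). The function name `compare_paths` and all numeric values are hypothetical.

```python
import math


def compare_paths(b1, se1, b2, se2):
    """Wald-type z-test for the difference between the same path
    coefficient estimated in two independent groups.

    b1, b2   -- unstandardized path estimates in group 1 and group 2
    se1, se2 -- their standard errors
    Returns (z, two-tailed p-value under the standard normal).
    """
    z = (b1 - b2) / math.sqrt(se1 ** 2 + se2 ** 2)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Hypothetical estimates for illustration only (not results from this study).
z, p = compare_paths(b1=0.40, se1=0.08, b2=0.15, se2=0.09)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value would suggest that the path differs between the two groups; with more than two groups, such pairwise comparisons would normally be accompanied by a correction for multiple testing.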

In summary, this study expands the existing AIDUA theoretical model by introducing three core constructs: system compatibility, technological transparency, and perceived human-computer interaction. In contrast to the relatively singular and context-specific variable extensions observed in prior research, these constructs provide a more comprehensive and generalizable framework for AIDUA model expansion. This multidimensional approach systematically elucidates the complex mechanisms underlying user acceptance of artificial intelligence technologies.

2.4 The evolution and integration of cognitive appraisal theory: a multi-stage cognitive-affective perspective on generative AI user acceptance

The rapid rise of GenAI is reshaping the paradigms of human-computer interaction. Leveraging features such as autonomous language interaction, real-time feedback mechanisms, and adaptive learning abilities, these technologies have created interaction experiences that transcend traditional human-computer interface designs. However, to fully understand user acceptance and the intention for sustained use of GenAI, purely technology-oriented analytical frameworks are becoming inadequate. There is an urgent need to delve into the cognitive appraisal processes, emotional response mechanisms, and their respective influence pathways on user behavior and decision-making within the context of human-computer interaction. The Cognitive Appraisal Theory, as a theoretical paradigm for explaining the mechanisms of emotional formation, offers a systematic analytical framework to address these needs (Yam et al., 2021). Nevertheless, existing research based on the Cognitive Appraisal Theory faces two significant limitations. First, it struggles to effectively capture the dynamic characteristics and iterative feedback mechanisms inherent to interactions with GenAI. Second, it lacks a systematic analysis of the phased evolution of user evaluations and the interplay between cognitive and affective mechanisms that occur throughout this process. These theoretical gaps hinder a deeper understanding of the mechanisms driving user acceptance of GenAI and underscore the urgent need to construct novel evaluative frameworks tailored to these technologies.

2.4.1 The theoretical origins and evolution of cognitive appraisal theory

Cognitive Appraisal Theory originated from the pioneering work of Lazarus and his colleagues in the 1960s and has since evolved from a single-dimensional appraisal model to a multidimensional appraisal framework. This theory seeks to explain how individuals evaluate environmental stimuli through subjective cognitive processes, leading to differentiated emotional responses. Unlike direct stimulus-response models, Cognitive Appraisal Theory emphasizes the mediating processes of emotional experiences, arguing that emotions are not directly triggered by external events but are instead derived from individuals' subjective evaluations of the nature and potential implications of those events.

The core appraisal structure of Cognitive Appraisal Theory comprises two interrelated yet conceptually distinct processes: (1) Primary Appraisal: This process involves an individual's evaluation of the relevance of an event to their goals (goal relevance), the congruence of the event with those goals (goal congruence), and the degree of personal involvement (ego-involvement). This stage addresses the fundamental question: “What does this event mean to me?” In the context of GenAI, primary appraisal manifests as users' evaluations of whether the capabilities of the AI are relevant to their task demands, whether they are likely to produce positive outcomes or pose potential threats, and whether they align with the users' values and sense of identity. (2) Secondary Appraisal: This process involves an individual's evaluation of their coping resources, perceived control, and expectations for future outcomes. It addresses the question: “How can I respond to this?” For users of GenAI, secondary appraisal includes assessments of their own technological competence, their ability to control AI outputs, and their expectations regarding the potential outcomes of continued interaction (Munanura et al., 2024). One of the defining characteristics of the evolution of Cognitive Appraisal Theory is its emphasis on the dynamic and context-dependent nature of the appraisal process. The theory posits that individuals' appraisal patterns adjust continuously in response to changing circumstances, interaction depth, and accumulated experiences, forming a cyclical feedback mechanism. This dynamic characteristic aligns closely with the progressive and iterative nature of interactions with GenAI, providing a robust theoretical foundation for understanding the dynamic evolution of user acceptance behaviors in this context.

2.4.2 The evolution of cognitive appraisal theory in information systems research

The application of Cognitive Appraisal Theory in the field of Information Systems has undergone a notable transformation, shifting from a peripheral supplementary perspective to becoming a core analytical framework. Traditional technology acceptance models, such as TAM and UTAUT, predominantly focus on cognitive factors, emphasizing rational decision-making drivers such as perceived usefulness and perceived ease of use. In contrast, the unique perspective of Cognitive Appraisal Theory, which highlights the interplay between cognition and emotion, has gradually positioned the theory as a critical complement for explaining user adoption and the sustained use of technology (Yu et al., 2023).

Compared to other cognitive-affective theories, the distinctive value of Cognitive Appraisal Theory lies in three aspects: (1) Dynamic and phased explanation of emotion formation: Unlike theories that statically address emotional outcomes, Cognitive Appraisal Theory provides a dynamic framework that captures the progression of emotions over time. (2) Emphasis on individualized subjective evaluation: The theory considers that individuals may form highly differentiated subjective evaluations of the same technological features, thereby highlighting the behavioral impact of personalized appraisals. (3) Focus on the multi-layered structure of cognitive processes: Cognitive Appraisal Theory uncovers how emotions and appraisals evolve dynamically in response to varying contexts and increased interaction depth.

While Cognitive Appraisal Theory has been widely adopted in information systems research to explain critical emotional responses such as anxiety toward technology, trust formation, and satisfaction development, its applicability to the unique domain of GenAI remains underexplored. Differing from traditional information systems, GenAI introduces an entirely novel user experience characterized by features such as real-time language interaction, autonomous learning, and creative output.

The core of this interaction experience lies in three aspects: (1) Anthropomorphic interaction design: GenAI blurs the conventional boundaries between technological tools and social partners. (2) Increased cognitive load and dynamic uncertainty: Users face higher levels of cognitive demands and unpredictability during their interactions with GenAI. (3) Deeper emotional connections: While such connections may enhance user satisfaction, they can simultaneously increase user dependency. These distinctive characteristics present significant challenges to traditional Cognitive Appraisal Theory frameworks, emphasizing the need for contextual extensions to better accommodate the complexities of GenAI user experiences.

2.4.3 A multi-stage cognitive appraisal framework: an integrated model for generative AI user acceptance

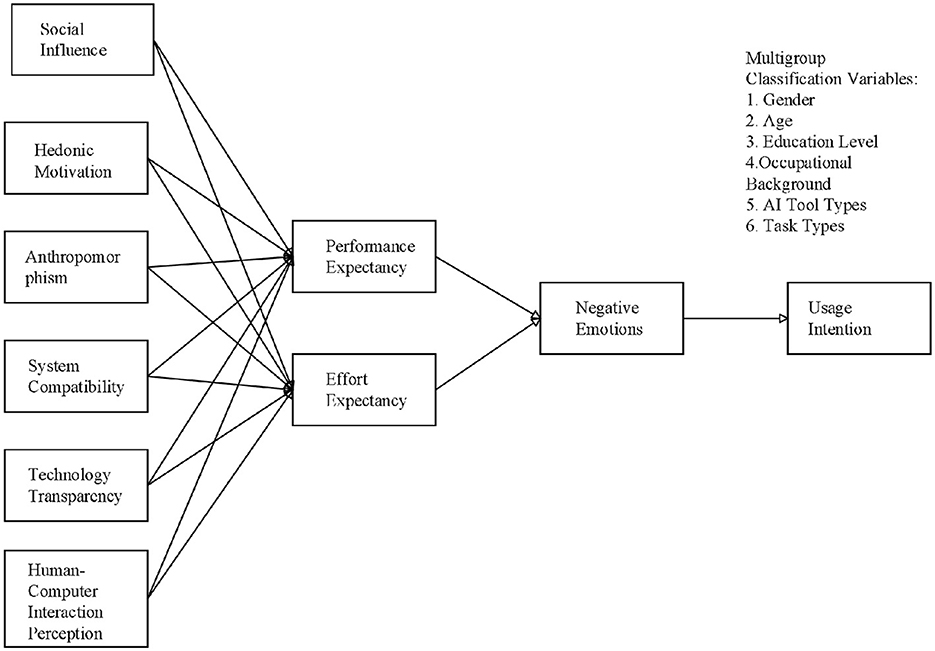

Building on the core concepts of Cognitive Appraisal Theory and incorporating the unique characteristics of GenAI, this study proposes a multi-stage cognitive appraisal framework that comprehensively examines the mechanisms underlying users' cognitive, emotional, and behavioral responses in GenAI use contexts (Figure 1). The framework is structured into three interrelated phases, reflecting the dynamic evolution of user experiences.

2.4.3.1 Initial appraisal phase

The initial appraisal phase represents the cognitive appraisal process users undergo when first encountering GenAI. During this phase, users primarily evaluate the technology based on a rapid perception of its functional attributes and their alignment with individual needs (Wong I. K. A. et al., 2023; Milaković and Ahmad, 2023; Wang et al., 2025). Users' initial cognitive and emotional reactions are shaped by two distinct categories of factors: technological attributes and social attributes. Technological Attributes: This dimension encompasses factors such as technological transparency (the understandability of the system's functionality and underlying mechanisms) and system compatibility (the extent to which the system integrates with the user's existing technological environment and workflow). Social Attributes: This dimension includes factors such as social influence (recommendations and feedback from others), hedonic motivation (the degree of enjoyment derived from the initial interaction), human-computer interaction perception (subjective evaluations of the interaction experience), and degree of anthropomorphism (the sense of social presence and interactive affinity displayed by the system).

It is important to note that while certain appraisal factors may require some hands-on experience for accurate judgment, users often form preliminary expectations based on prior knowledge and perceptions. These early expectations serve as the foundation for a user's mental model during the initial interaction, subsequently shaping their initial appraisal outcomes and early usage intentions.

This dual-dimensional initial appraisal framework, grounded in the application of Cognitive Appraisal Theory within the GenAI context, not only highlights the theory's adaptability to this specific domain but also uncovers the complexity of early-stage cognitive formation mechanisms among users.

2.4.3.2 Deep appraisal phase

The deep appraisal phase marks the point at which users engage in a more nuanced and in-depth cognitive evaluation of their interactions with GenAI (Suh, 2024; Yoon and Lee, 2021; Pei et al., 2024). From the perspective of Expectation-Confirmation Theory, the deep appraisal process is primarily driven by two core factors: performance expectations and effort expectations. Specifically, performance expectations reflect the user's evaluation of the alignment between the system's output and their intended goals, while effort expectations pertain to the user's perceived balance between operational effort and task completion efficiency.

This dynamic interplay between the dual expectations—performance and effort—directly triggers emotional responses, shaping users' experiences of cognitive load and emotional fluctuations. Throughout the deep usage phase, users compare actual system performance with their initial expectations, leading to either positive or negative emotional states. These emotional experiences, in turn, significantly influence users' long-term attitudes toward the continued use of GenAI. This framework sheds light on the cognitive-affective interaction mechanisms during deep engagement with GenAI and underscores the critical moderating role of expectations in the emotion formation process.

2.4.3.3 Integrative decision phase

The integrative decision phase represents the stage where users arrive at a comprehensive evaluation of their interactions with GenAI, forming a final judgment (Chang et al., 2024). At this stage, users synthesize the cognitive assessments from the initial appraisal phase with the emotional experiences from the deep appraisal phase to develop an overarching attitude toward the use of GenAI.

When users achieve a convergence between cognition and emotion, their final usage decision is primarily driven by the cumulative impact of these prior cognitive evaluations and emotional experiences. The knowledge and emotional states accumulated through earlier phases of interaction are ultimately consolidated, translating into a user's intention to continue using GenAI.

The multi-stage cognitive appraisal framework proposed in this study makes two key contributions to the development of existing theories: (1) Addressing the limitations of traditional cognitive appraisal theory: This study constructs a multi-level model, comprising initial appraisal, deep appraisal, and integrative decision phases, by incorporating the unique characteristics of interactions with generative artificial intelligence. This effectively addresses the dimensional limitations of traditional cognitive appraisal theory in technology acceptance research. (2) Providing a novel theoretical perspective for future research: The proposed framework offers a robust foundation for future studies to explore variations in user acceptance behaviors across different GenAI application scenarios. It also provides a basis for examining the moderating effects of individual characteristics, usage contexts, and other factors on user behavior, paving the way for further exploration of this evolving field.

3 Research hypotheses

3.1 Initial appraisal phase

In the initial appraisal phase of GenAI interactions, users' evaluations of the technology and their emotional responses are influenced by multiple factors. These factors shape users' performance expectations, effort expectations, and negative emotions through various mechanisms.

3.1.1 Social influence

Both Social Cognitive Theory and the TAM emphasize that social influence is a significant external factor driving technology adoption. Social influence refers to the cognitive and behavioral tendencies individuals develop in the process of adopting technology due to the behaviors, attitudes, and normative expectations of others within their social environment (Fedorko et al., 2021; Figueroa-Armijos et al., 2023). This influence is particularly pronounced in the context of GenAI adoption, manifesting as direct social evaluation (e.g., opinions of family members, friends, or colleagues regarding the technology), indirect information dissemination (e.g., public discussions within communities or on online platforms), and norm-driven professional expectations (e.g., industry trends necessitating the use of GenAI tools). These social factors shape users' cognitive frameworks regarding GenAI, thereby directly influencing their adoption decisions (Achiriani and Hasbi, 2021; Cheng et al., 2022).

Specifically, positive social influence (e.g., high praise for GenAI within peer groups) can bolster users' confidence in the technology's functionality and value, enhancing their performance expectancy, which refers to the belief that the technology will improve task efficiency and effectiveness. On the other hand, social norms and public discourse that emphasize the complexity of the technology may increase users' perceptions of the learning burden, amplifying their effort expectancy, or the anticipated amount of effort required to use the technology effectively.

Based on the above theoretical analysis, the following hypotheses are proposed:

H1: Social influence positively affects users' performance expectancy for GenAI.

H2: Social influence negatively affects users' effort expectancy for GenAI.

3.1.2 Hedonic motivation

Hedonic motivation occupies a central role in technology acceptance research, particularly in contexts where technologies exhibit a high degree of emotional interaction and entertainment attributes. Existing research has found that individuals' decisions to adopt technologies are not only driven by functional considerations but are also significantly influenced by their expectations of the pleasurable experiences the technology can provide (Siyal et al., 2021). Compared to traditional utilitarian-oriented technologies, GenAI, with its unique capacity for creative outputs, personalized interaction features, and real-time feedback mechanisms, presents tremendous potential for hedonic value, making it an ideal context for examining the influence mechanisms of hedonic motivation.

In the evaluation of GenAI applications, hedonic motivation influences users' attitude formation and behavioral intentions through two interrelated yet conceptually distinct pathways. First, when users perceive high levels of enjoyment, interactivity, or entertainment attributes in the technology, their intrinsic engagement significantly increases, thereby enhancing their confidence in and expectations of the technology's functional performance. Specifically, performance expectancy is strengthened as users perceive greater hedonic value in the technology (Mamun et al., 2024). This phenomenon aligns with the positive engagement effects highlighted in Flow Theory, which indicates that hedonic experiences can optimize users' perceptions of the practical value of the technology. Second, hedonic motivation, by increasing users' intrinsic satisfaction and emotional involvement, effectively reduces their perceived threshold for technology complexity or concerns related to cognitive load during usage. This mechanism results in a significant negative association with effort expectancy (Palos-Sanchez et al., 2024; Seng and Hee, 2021).

Based on the above theoretical analysis, this study proposes the following hypotheses:

H3: Hedonic motivation positively affects users' performance expectancy for GenAI.

H4: Hedonic motivation negatively affects users' effort expectancy for GenAI.

3.1.3 Anthropomorphism

Anthropomorphism, as a core feature of technology design, plays a critical role in shaping user experiences and influencing technology acceptance. When a technological system exhibits human-like characteristics, users are more likely to perceive it as a social entity rather than a mere functional tool. In the context of GenAI applications, anthropomorphic design—by simulating human cognitive patterns and social behaviors—significantly impacts users' attitudes and emotional responses toward the technology.

Anthropomorphism influences users' acceptance of GenAI through two interrelated yet conceptually distinct pathways. First, highly anthropomorphic system designs (e.g., natural language conversations, emotionally resonant feedback, and personalized recommendations) can stimulate users' positive perceptions of the technology's intelligence. When users perceive that the technology possesses human-like capabilities, such as understanding intent, contextual reasoning, and creative thinking, they are more inclined to form optimistic expectations regarding its performance. This “anthropomorphism-trust-expectation” progression illustrates how socialized features in technology design can strengthen user confidence and significantly enhance performance expectancy (Balakrishnan et al., 2022; Polyportis and Pahos, 2025). Second, anthropomorphic features provide intuitive and natural interaction modes (e.g., contextual understanding, guided conversations, and error-tolerant mechanisms), effectively reducing the cognitive load associated with technology usage. Compared to traditional command-based or procedural interactions, anthropomorphic interactions align more closely with users' everyday social experiences, making the process of using the technology smoother and more natural. This socially oriented interaction paradigm significantly reduces users' effort expectancy, as it lowers the learning curve and simplifies the perceived complexity of using the technology (Pawlik, 2021; Yang et al., 2022). Specifically, the anthropomorphic features of GenAI—such as personalized conversational styles and intelligent feedback mechanisms—significantly enhance user engagement and trust. A high degree of anthropomorphism transforms the technology from a cold, impersonal tool into an intelligent interactive system capable of understanding, responding to, and supporting users' needs.

Based on the above analysis, this study proposes the following hypotheses:

H5: The degree of anthropomorphism positively affects users' performance expectancy for GenAI.

H6: The degree of anthropomorphism negatively affects users' effort expectancy for GenAI.

3.1.4 System compatibility

According to the TAM, system compatibility is a critical determinant of users' technology adoption decisions. System compatibility refers to the degree to which a new technology aligns with users' existing values, needs, experiences, and technological environments. High compatibility reduces users' perceived risks and uncertainties, thereby facilitating the rapid diffusion of the technology. In the context of GenAI, compatibility influences user attitudes and behaviors through multiple mechanisms.

First, high compatibility enhances performance expectancy. When GenAI can seamlessly integrate with users' existing workflows—such as through open APIs for integration with office software or support for common data formats—users perceive the technology as directly improving efficiency and reducing task complexity. This “plug-and-play” characteristic minimizes cognitive load and increases users' confidence in the technology's capabilities. Moreover, alignment with users' values and preferences—such as GenAI producing outputs in styles that match user expectations—further reinforces performance expectancy (Zhang et al., 2024). Second, compatibility reduces effort expectancy. Low compatibility often results in cognitive dissonance, where the new technology conflicts with users' existing cognitive schemas, increasing learning costs and psychological stress. Conversely, high compatibility—such as when GenAI provides interfaces similar to existing tools or supports familiar interaction modalities—helps to alleviate cognitive conflicts and lower users' effort expectancy. Differences in the compatibility of various types of GenAI (e.g., text generation vs. image generation) with users' existing skills and workflows further influence effort expectancy (Shatta and Shayo, 2021).

Based on the above analysis, the following hypotheses are proposed:

H7: System compatibility positively affects users' performance expectancy for GenAI.

H8: System compatibility negatively affects users' effort expectancy for GenAI.

3.1.5 Technological transparency

Technological transparency refers to the extent to which users can comprehend a system's internal mechanisms, operational principles, and decision-making processes. It is one of the critical features influencing user trust and technology adoption.

According to Technology Trust Theory, technological transparency strengthens users' trust in a technology by reducing the system's uncertainty and unpredictability, thereby shaping their attitudes and behavioral intentions. Systems with a high level of transparency allow users to clearly understand how GenAI functions and makes decisions, thereby boosting their confidence in the system's functional reliability and effectiveness, which in turn significantly enhances performance expectancy (Bodó, 2021; Wang et al., 2021). For instance, when users clearly comprehend the specific decision-making logic and data processing workflows of GenAI, they are more likely to form positive expectations regarding the system's performance. Furthermore, technological transparency can effectively reduce users' cognitive load during the process of learning and operating the system, thereby lowering their perceptions of its complexity. This cognitive simplification effect decreases the effort users perceive to be necessary for mastering the functionality of the system, thereby directly influencing their effort expectancy (Taylor et al., 2023; Durán and Jongsma, 2021). In highly transparent systems, users can more intuitively understand the system's operational principles and modes of interaction, reducing trial-and-error costs and improving overall efficiency during use. As a result, users are more likely to perceive the technology as easier to use. Conversely, systems with low transparency may lead to unclear operational logic that increases users' learning and operational efforts. Additionally, low transparency can give rise to a “black-box effect,” wherein users feel the operation of the system is opaque or unintuitive, thereby raising the perceived barriers to adoption and use.

Based on the above analysis, this study proposes the following hypotheses:

H9: Technological transparency positively affects users' performance expectancy for GenAI.

H10: Technological transparency negatively affects users' effort expectancy for GenAI.

3.1.6 Perceived human-AI interaction

Perceived human-AI interaction refers to users' subjective evaluation of the quality of interaction with artificial intelligence systems. According to human-computer interaction theory, high-quality interaction strengthens users' trust in and sense of control over the system, while also increasing their engagement. This, in turn, influences users' attitudes and behaviors toward the technology. In the context of GenAI, flexible and intelligent interaction designs enable efficient and seamless communication between users and the system. Such an experience enhances users' performance expectancy (Shulner-Tal et al., 2023). For instance, when users perceive the system as understanding and responsive, and as able to flexibly manage complex tasks, they tend to develop a more favorable evaluation of the system's reliability and value. Conversely, poor interaction quality, such as sluggish system responses, an inability to adapt flexibly to user needs, or low levels of intelligence in conversations, may raise users' effort expectancy, which reflects the perceived complexity and cost of using the system (Chou et al., 2022). Poor interaction experiences increase the cognitive and operational effort required and may lead users to feel that the system is cumbersome or inefficient to use.

Based on this analysis, this study proposes the following hypotheses:

H11: Perceived human-AI interaction positively affects users' performance expectancy for GenAI.

H12: Perceived human-AI interaction negatively affects users' effort expectancy for GenAI.

3.2 Intermediate evaluation stage

3.2.1 Performance expectancy

Performance expectancy refers to users' expectations regarding how a technology can enhance work efficiency or task performance. In the context of technology use, users' emotional responses depend on the extent to which a technology's performance aligns with their expectations (Zhu et al., 2024). Specifically, when the generated content meets or even exceeds user needs, this positive experience not only enhances users' trust in the AI system but also alleviates negative emotions during use. For instance, an AI-powered writing assistant that produces accurate and coherent articles can significantly reduce the user's workload while fostering positive emotional responses. However, if the system generates content that is illogical or error-prone, unmet expectations may trigger dissatisfaction or anxiety, or even lead users to abandon the technology.

Cognitive Dissonance Theory further suggests that a significant discrepancy between performance expectancy and actual experience can result in strong cognitive conflict, thereby causing emotional imbalance. When users hold high performance expectations for GenAI but find that its actual performance falls short of substantially improving task outcomes, this inconsistency can magnify feelings of frustration and unease (Yin et al., 2023).

Based on this analysis, the following hypothesis is proposed:

H13: Performance expectancy negatively affects users' negative emotions.

3.2.2 Effort expectancy

Effort expectancy refers to the cognitive resources, learning costs, and operational complexity that users perceive as necessary to use a technology. It is one of the key psychological factors influencing technology adoption.

According to Cognitive Load Theory, higher effort expectancy often increases users' cognitive burden, which can affect their emotional experiences (Zhang et al., 2023). In the context of GenAI, the negative effects of effort expectancy operate through three main pathways. First, when users need to repeatedly fine-tune input commands, correct errors in AI-generated content, or understand the operational logic of the AI system, the associated learning costs and cognitive load increase significantly, leading to what is referred to as “technology fatigue” (St Omer and Chen, 2023). Second, according to the Appraisal Theory of Emotion, users' subjective assessment of the trade-off between operational cost and actual benefit during technology use directly influences their emotional responses. When users perceive the process of engaging with GenAI as overly complex and its output benefits as insufficient, they are more likely to experience frustration and aversion. Third, high effort expectancy can create a trust deficit. When it reduces users' sense of control over and comprehension of AI operations, users may begin to question the AI's capabilities and reliability and feel incapable of confidently navigating the technology. This not only exacerbates technology anxiety but also triggers resistance toward the system, potentially diminishing their intention to continue using it. For instance, when users perceive that effective outcomes from GenAI rely heavily on their ability to provide highly precise and detailed input, a lack of trust in the system's responsiveness and adaptability can result in elevated psychological burdens, further destabilizing emotional equilibrium.

Based on the above analysis, the following hypothesis is proposed:

H14: Effort expectancy positively affects users' negative emotions.

3.3 Results evaluation stage

3.3.1 Negative emotions

Following the cognitive evaluations in the preceding stages, users ultimately form an overall emotional experience regarding their interaction with GenAI. According to Cognitive Appraisal Theory, emotions play a pivotal role in individual decision-making processes, particularly in the acceptance and use of new technologies (Nguyen et al., 2024). Within the context of technology use, users often rely on emotional responses as key determinants of their behavioral intentions after subjectively evaluating their interaction experiences, such as task performance or system feedback.

When users encounter unmet performance expectations, interaction barriers, or cognitive uncertainty, they may experience a range of negative emotions, including frustration, anxiety, unease, and fear. These negative emotional responses not only reduce user satisfaction but can also erode trust in the technology, undermining their willingness to adopt the system and even leading to abandonment (Peng and Hwang, 2021). In particular, Technology Threat Avoidance Theory suggests that when the perceived complexity, learning costs, or potential risks associated with a technology lead to heightened stress, users may adopt avoidance strategies to mitigate their psychological burden (Chen and Liang, 2019). This avoidance behavior directly suppresses their adoption intentions. Additionally, emotional responses play a critical mediating role by influencing users' perceptions of risk and benefit during the technology experience. Negative emotions, in particular, tend to amplify perceived risks associated with the technology while simultaneously diminishing perceived benefits, making them one of the core psychological variables shaping adoption intentions (Al-Adwan et al., 2022).

Based on the above analysis, the following hypothesis is proposed:

H15: Negative emotions negatively affect users' acceptance intention for GenAI.
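
For reference, hypotheses H9 to H15 can be summarized as a set of structural equations. The notation is assumed here for illustration and is not taken from the original model specification: TT denotes technological transparency, HAI perceived human-AI interaction, PE performance expectancy, EE effort expectancy, NE negative emotions, and INT acceptance intention. The placeholder terms X_k stand for the remaining antecedents hypothesized earlier in Section 3.1, and the zeta terms are structural disturbances. The inequalities give the hypothesized signs only, not estimated values.

```latex
\begin{align}
\mathrm{PE}  &= \gamma_{9}\,\mathrm{TT} + \gamma_{11}\,\mathrm{HAI}
               + \sum_{k} \gamma^{\mathrm{PE}}_{k} X_{k} + \zeta_{\mathrm{PE}},
             && \gamma_{9} > 0,\ \gamma_{11} > 0 \quad \text{(H9, H11)} \\
\mathrm{EE}  &= \gamma_{10}\,\mathrm{TT} + \gamma_{12}\,\mathrm{HAI}
               + \sum_{k} \gamma^{\mathrm{EE}}_{k} X_{k} + \zeta_{\mathrm{EE}},
             && \gamma_{10} < 0,\ \gamma_{12} < 0 \quad \text{(H10, H12)} \\
\mathrm{NE}  &= \beta_{13}\,\mathrm{PE} + \beta_{14}\,\mathrm{EE} + \zeta_{\mathrm{NE}},
             && \beta_{13} < 0,\ \beta_{14} > 0 \quad \text{(H13, H14)} \\
\mathrm{INT} &= \beta_{15}\,\mathrm{NE} + \zeta_{\mathrm{INT}},
             && \beta_{15} < 0 \quad \text{(H15)}
\end{align}
```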

4 Research methodology

4.1 Research design and questionnaire development

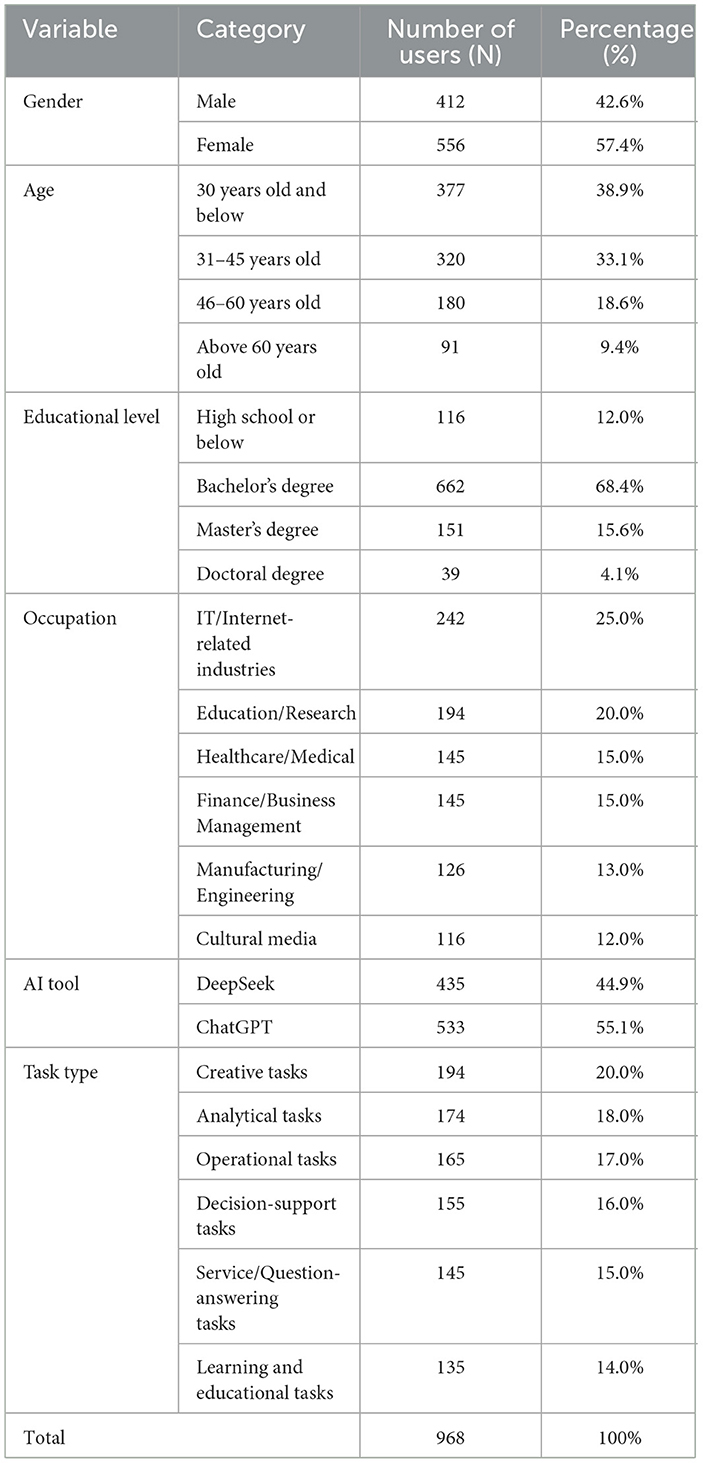

Sample size was determined following the SEM guidance in Kline's Principles and Practice of Structural Equation Modeling, which suggests that complex models, such as those involving a large number of observed variables, typically require a sample of 10 to 20 times the number of observed variables (Kline, 2023). Because this study includes 63 observed variables, a multiplier of 15 was chosen, resulting in a minimum required sample size of 945 valid responses. To ensure data reliability and account for potential invalid responses, the study set out to collect 1,000 questionnaires.
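
The sample size arithmetic can be checked with a short sketch. The function name `required_sample` and the variable names are hypothetical; the inputs (63 observed variables, multipliers of 10, 15, and 20, and a target of 1,000 questionnaires) come from the paragraph above.

```python
def required_sample(n_observed: int, multiplier: int) -> int:
    """Rule-of-thumb sample size: cases per observed variable times variable count."""
    return n_observed * multiplier


N_OBSERVED = 63   # observed variables reported in this study
TARGET = 1000     # questionnaires the study set out to collect

lower = required_sample(N_OBSERVED, 10)    # bottom of the recommended range
upper = required_sample(N_OBSERVED, 20)    # top of the recommended range
minimum = required_sample(N_OBSERVED, 15)  # multiplier chosen in this study

# Number of invalid questionnaires the target can absorb
# while still meeting the minimum.
buffer = TARGET - minimum

print(f"Recommended range: {lower} to {upper}")
print(f"Minimum valid responses at 15x: {minimum}")
print(f"Invalid responses tolerated at a target of {TARGET}: {buffer}")
```

Under these inputs, the target leaves room for 55 invalid questionnaires before the sample drops below the 945 minimum.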

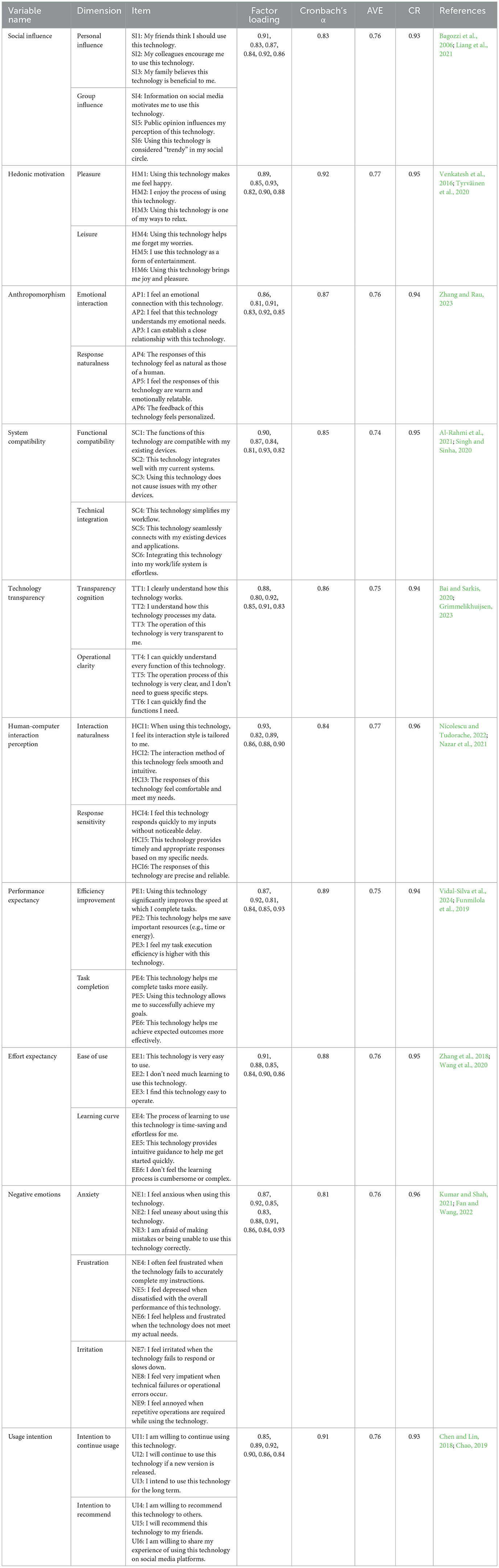

As shown in Table 1, the questionnaire is divided into two main sections.

4.1.1 Demographic variables

This section captures respondent information, including gender, age, education level, occupation type, AI tool usage experience, and the types of tasks for which AI tools are applied.

4.1.2 Core construct measurement scales

This section measures the key variables of the model, including technological transparency, system compatibility, and hedonic motivation. All measurement items are rated on a 7-point Likert scale (1 = Strongly Disagree, 7 = Strongly Agree). Compared to a 5-point Likert scale, the 7-point scale offers higher measurement precision and greater differentiation, allowing for the capture of subtle variations in respondents' attitudes while maintaining an appropriate level of cognitive load. The 7-point scale has been widely applied and validated in psychology, behavioral sciences, and user experience research.

The measurement scales for all core variables in this study are adapted from well-established scales in classic, peer-reviewed literature (Bagozzi et al., 2006; Liang et al., 2021; Venkatesh et al., 2016; Tyrväinen et al., 2020; Zhang and Rau, 2023; Al-Rahmi et al., 2021; Singh and Sinha, 2020; Bai and Sarkis, 2020; Grimmelikhuijsen, 2023; Nicolescu and Tudorache, 2022; Nazar et al., 2021; Vidal-Silva et al., 2024; Funmilola et al., 2019; Zhang et al., 2018; Wang et al., 2020; Kumar and Shah, 2021; Fan and Wang, 2022; Chen and Lin, 2018; Chao, 2019). These scales were modified to fit the context of GenAI; detailed information is provided in Table 1.

4.2 Data collection and quality control

Prior to the formal survey, the research team conducted a pilot study with 30 participants using cognitive interview techniques to identify potential issues in the questionnaire design, such as ambiguous wording, logical flaws, or misunderstandings. Based on the feedback, necessary adjustments and optimizations were made to enhance the content validity and contextual suitability of the measurement instrument.

4.2.1 Data collection phases

Phase One (March 2023): Participants with prior experience using ChatGPT or DeepSeek were recruited through social media platforms and university networks. This phase employed convenience sampling, a non-probability method that focuses on recruiting participants through accessible and convenient channels. To minimize selection bias, strict eligibility criteria and quality control measures were applied, ensuring that only participants meeting the research standards and having actual usage experience were included.

Phase Two (April 2023): To expand sample coverage and enhance representativeness, a professional survey organization was commissioned to recruit participants using a combination of stratified sampling and quota sampling. First, in line with stratified sampling (a probability sampling method), the population was divided into distinct strata based on demographic variables such as gender, age, and occupation. Quotas were then established within each stratum to ensure proportional representation of each group in the final sample. Finally, within each quota group, participants were selected at random from all eligible candidates who met the quota criteria, ensuring fairness in participant selection.
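
The Phase Two procedure can be illustrated with a minimal sketch: proportional quotas are allocated per stratum, and participants are then drawn at random within each quota. This is not the survey organization's actual procedure. The function names, the largest-remainder rounding, the strata, the population shares, and the candidate pool are all hypothetical and serve only to show the logic.

```python
import random
from collections import Counter, defaultdict


def proportional_quotas(shares, total):
    """Allocate `total` slots across strata in proportion to `shares`.

    Uses largest-remainder rounding (an assumption of this sketch) so that
    the quotas sum exactly to `total` when the shares sum to 1.
    """
    raw = {stratum: share * total for stratum, share in shares.items()}
    quotas = {stratum: int(value) for stratum, value in raw.items()}
    leftover = total - sum(quotas.values())
    by_remainder = sorted(raw, key=lambda s: raw[s] - quotas[s], reverse=True)
    for stratum in by_remainder[:leftover]:
        quotas[stratum] += 1
    return quotas


def draw_sample(candidates, stratum_of, quotas, seed=0):
    """Randomly select up to the quota of eligible candidates in each stratum."""
    rng = random.Random(seed)
    pools = defaultdict(list)
    for candidate in candidates:
        pools[stratum_of(candidate)].append(candidate)
    sample = []
    for stratum, quota in quotas.items():
        pool = pools.get(stratum, [])
        sample.extend(rng.sample(pool, min(quota, len(pool))))
    return sample


# Hypothetical strata (gender x age group) and population shares.
shares = {
    ("female", "18-29"): 0.3,
    ("female", "30+"): 0.2,
    ("male", "18-29"): 0.3,
    ("male", "30+"): 0.2,
}
quotas = proportional_quotas(shares, total=500)

# Hypothetical pool of eligible candidates, 500 per stratum.
strata = list(shares)
candidates = [
    {"id": i, "gender": strata[i % 4][0], "age_group": strata[i % 4][1]}
    for i in range(2000)
]

sample = draw_sample(candidates, lambda c: (c["gender"], c["age_group"]), quotas)
counts = Counter((c["gender"], c["age_group"]) for c in sample)
print(quotas)
print(dict(counts))
```

Fixing the random seed makes the draw reproducible, which is useful when the selection needs to be audited.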

4.2.2 Inclusion criteria

To ensure the relevance and validity of the study sample, this research specifically targeted participants with experience using either ChatGPT or DeepSeek. Eligible participants were identified through two filtering questions in the questionnaire. The first question asked, “Have you ever used ChatGPT or DeepSeek?” Those who answered “No” were automatically excluded, as they did not meet the criteria for actual users. The second question required participants to describe a specific scenario in which they used these tools, allowing for further verification of their usage. Only participants providing valid responses to both questions proceeded to the survey's main sections.

4.2.3 Quality control

Several quality control measures were implemented to maintain data integrity. The questionnaire included three attention-check items (e.g., “Please select ‘Strongly Disagree’ for this item”) to detect inattentive responding. Responses that failed these checks were excluded. Submissions with abnormal completion times (less than one third of the median completion time or more than three times the median) and systematic response patterns (e.g., straight-lining) were also removed. Of the 1,000 questionnaires initially collected, 968 passed all screening and quality control steps (a validity rate of 96.8%), providing a solid dataset for subsequent analyses.
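
The screening rules above can be expressed as a small filter. This is a sketch under stated assumptions rather than the authors' actual pipeline: the record layout (`checks`, `seconds`, `ratings`), the function names, and the toy data are hypothetical; the median is assumed to be computed over all collected responses; and straight-lining is assumed to mean identical ratings on every item.

```python
from statistics import median


def passes_attention_checks(answers, expected):
    """True if every attention-check item has the instructed answer."""
    return all(answers.get(item) == value for item, value in expected.items())


def has_normal_duration(seconds, median_seconds):
    """True if completion time lies between 1/3 and 3 times the median."""
    return median_seconds / 3 <= seconds <= 3 * median_seconds


def is_straight_lining(ratings):
    """True if the respondent gave the same rating to every item."""
    return len(set(ratings)) == 1


def screen(responses, expected_checks):
    """Keep only responses that pass all three quality-control rules."""
    median_seconds = median(r["seconds"] for r in responses)
    return [
        r for r in responses
        if passes_attention_checks(r["checks"], expected_checks)
        and has_normal_duration(r["seconds"], median_seconds)
        and not is_straight_lining(r["ratings"])
    ]


# Hypothetical attention check: item "ac1" must be answered 1 (Strongly Disagree).
expected_checks = {"ac1": 1}

# Toy responses; the median completion time here is 305 seconds.
responses = [
    {"id": "A", "checks": {"ac1": 1}, "seconds": 300, "ratings": [5, 6, 4, 7]},
    {"id": "B", "checks": {"ac1": 1}, "seconds": 320, "ratings": [4, 4, 4, 4]},  # straight-lining
    {"id": "C", "checks": {"ac1": 5}, "seconds": 280, "ratings": [3, 5, 2, 6]},  # failed check
    {"id": "D", "checks": {"ac1": 1}, "seconds": 60, "ratings": [6, 5, 7, 4]},   # too fast
    {"id": "E", "checks": {"ac1": 1}, "seconds": 1000, "ratings": [2, 3, 4, 5]}, # too slow
    {"id": "F", "checks": {"ac1": 1}, "seconds": 310, "ratings": [2, 3, 5, 6]},
]

valid = screen(responses, expected_checks)
valid_ids = [r["id"] for r in valid]
print(valid_ids)

# Validity rate reported in the study: 968 valid out of 1,000 collected.
validity_rate = 968 / 1000
print(f"{validity_rate:.1%}")
```

A stricter straight-lining rule, such as flagging long runs of identical answers within a scale, could replace `is_straight_lining` without changing the rest of the filter.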

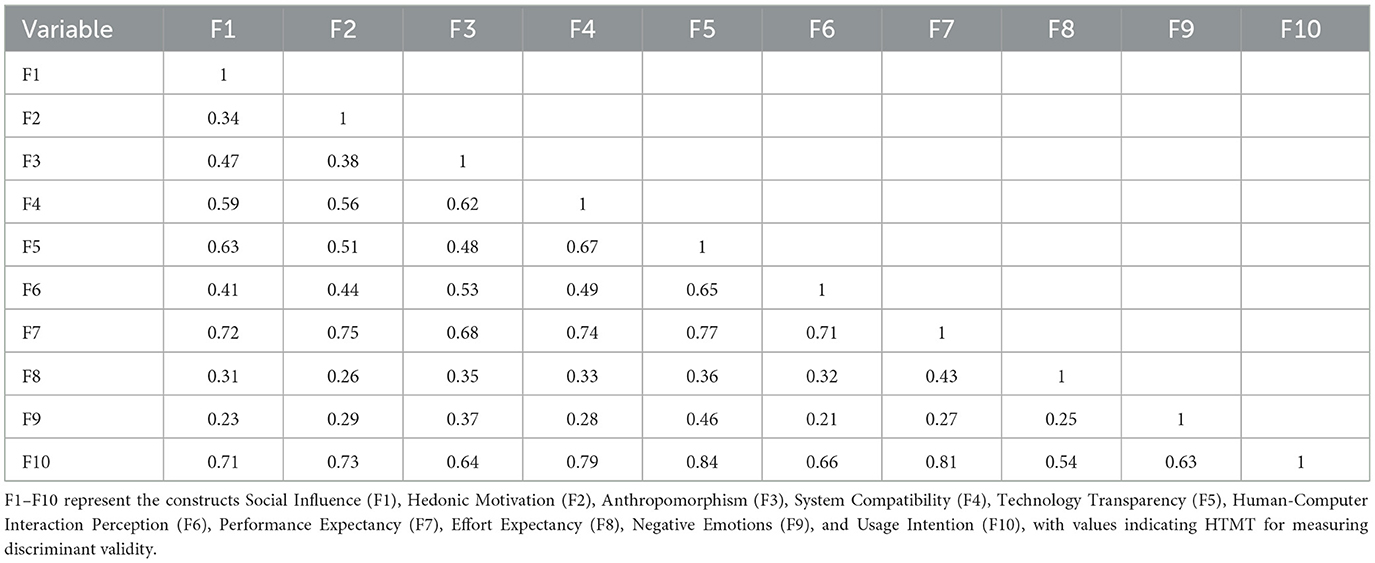

4.2.4 Common method bias