- ¹School of Management, Guangzhou College of Technology and Business, Guangzhou, China

- ²Institute for New Quality Productive Forces and GBA, Guangzhou College of Technology and Business, Guangzhou, China

- ³Business School, Shanghai Jian Qiao University, Shanghai, China

This study investigates how gendered anthropomorphism in robots influences human motivation to undertake challenging tasks within human–robot collaborative settings. In two experiments, a survey-based experiment (Study 1, N = 169) and a behavioral experiment (Study 2, N = 130), we examined how a collocated female- versus male-gendered robot assistant affects participants’ willingness to accept a challenging task. Results revealed that interactions with female-gendered robots elicited significantly greater willingness to undertake a challenging task than interactions with male-gendered counterparts. This finding advances our understanding of human motivation in modern workplace environments that integrate robotic technologies, and underscores the critical role of gender cues in robot design, particularly in collaborative settings where task engagement and performance are prioritized.

Introduction

The rapid advancement of artificial intelligence (AI) has fundamentally influenced people’s daily life. The field has witnessed an unprecedented surge in the development of AI technologies, manifesting in application domains such as intelligent cognitive architectures (e.g., DeepSeek-V3, GPT-4o) and domestic service robots (e.g., RoboRock S8 Pro Ultra). Notably, many AI-embedded products demonstrate humanlike characteristics in terms of appearance, motivation, and emotional sensing capabilities (Broadbent, 2017; Huang and Rust, 2022; Wan and Chen, 2021).

In academia, human-robot interaction (HRI) research systematically examines how anthropomorphism influences user experience and emotional responses (Fink, 2012; Huang and Rust, 2022; van Straten et al., 2020; Wan and Chen, 2021; Złotowski et al., 2015), and how social skills, mood, and wellbeing may benefit from social-robot-based interventions (Duradoni et al., 2021). Central to these inquiries is the observation that people tend to imbue robots with humanlike characteristics (e.g., motivation, emotion) and to interact with robots in ways that resemble human-to-human relationships. For instance, people tend to extend real-life psychological dynamics toward artificial entities (Russo et al., 2021), and robots designed with culturally coded feminine or masculine features (e.g., vocal pitch, body shape, or names) elicit stereotypical expectations that influence compliance, trust, and role allocation (Eyssel and Hegel, 2012; Nass and Brave, 2005). This phenomenon is particularly salient in HRI, where anthropomorphic cues can shape trust, engagement, and compliance, thereby mediating collaborative task performance (Kim and McGill, 2011).

The present investigation addresses a critical gap in the HRI literature by examining the following research question: How does a robot’s gender influence human motivation to undertake challenging tasks? This inquiry holds particular relevance in achievement contexts where task complexity often exceeds individual cognitive capacity. We propose that anthropomorphic design elements, especially gender cues, may shape human motivation to engage with challenging objectives, because such design elements may increase the likelihood of successfully completing such tasks. Focusing on appearance-based anthropomorphism, the present research examines how the gendered appearance of robots influences people’s motivation to undertake challenging tasks.

Robot appearance anthropomorphism

People often anthropomorphize intelligent machines, forming partnerships with them that closely resemble human-human team interactions (Burgoon et al., 2016; Nass et al., 1995; Nass et al., 1994). In recent years, HRI research has emphasized anthropomorphic design, aiming to enable robots to operate in human social environments such as healthcare, education, and domestic assistance (Bartneck et al., 2009; Broadbent et al., 2013).

Anthropomorphism is defined as the attribution of human characteristics, intentions, or emotional states to non-human entities (Epley et al., 2007; Fink, 2012; Wan and Chen, 2021). It facilitates people’s engagement in human-technology interactions during complex tasks (Sheehan et al., 2020), similar to their interactions with human counterparts. Humanoid robots, such as Unitree’s H1, SoftBank’s Pepper, and Boston Dynamics’ Atlas, exemplify anthropomorphic designs that enable a diverse range of functions by mirroring human characteristics and capabilities (Walliser et al., 2017).

However, the relationship between robots’ anthropomorphic appearance and user acceptance follows a curvilinear pattern. While moderate human-likeness enhances user acceptance, excessive morphological realism triggers the uncanny valley effect, a well-documented phenomenon in which near-human replicas provoke visceral aversion (MacDorman and Chattopadhyay, 2016; Mathur and Reichling, 2016). For instance, MacDorman and Ishiguro (2006) revealed that photorealistic robot faces elicit stronger discomfort than more machine-like counterparts. Thus, it is important to optimize anthropomorphic design so as to mitigate uncanny valley risks while preserving the social affordances of human-like design (Kim et al., 2019; Mathur et al., 2020).

Robot gendered anthropomorphism

Gender stereotypes traditionally characterize women as warmer and men as more competent, a pattern well-documented in social psychology research (Eagly and Karau, 2002; Eagly and Wood, 1999; Fiske et al., 2002). These biases extend to HRI, where people ascribe gender stereotypes to robots through cues such as appearance, voice, and naming (Borau, 2024; Perugia and Lisy, 2023). For instance, Eyssel and Hegel (2012) demonstrated that robots with female traits are perceived as warmer but less competent, while male-appearing robots are viewed as more authoritative, a direct reflection of human gender norms.

Such gender stereotypes may shape perceptions of robots’ suitability for specific tasks. Studies show that male-gendered robots are often favored in technical, leadership, or authoritative contexts, whereas female-gendered robots are preferred in caregiving or service contexts (Carpenter et al., 2009; Sandygulova and O’Hare, 2018). While aligning robots with familiar social scripts may enhance user engagement, this practice risks entrenching harmful stereotypes and reducing acceptance when robots deviate from gendered expectations (Nomura et al., 2006). Gendered anthropomorphism is not limited to robots. For instance, the anthropomorphic design of car fronts (such as headlights resembling “eyes” or grilles resembling “mouths”) can trigger gendered anthropomorphism, with users attributing traits like a “friendly female” or “aggressive male” character to inanimate objects. These perceptions, in turn, influence human behavior, including driving habits or brand loyalty (Waytz et al., 2014). Such findings underscore the double-edged role of gendered anthropomorphism in HRI, necessitating ethical considerations in design to balance relatability with equity.

Gendered anthropomorphism and human motivation to undertake challenging tasks

Specific and challenging tasks/goals can direct attention, mobilize effort, and foster persistence, leading to higher performance (Locke and Latham, 1990; Locke and Latham, 2006). Thus, mobilizing employees to accept and accomplish challenging tasks is critical for personal development and organizational success. In modern workplaces incorporating humanoid robots, understanding how robotic anthropomorphism influences employees’ motivation to accept challenging tasks becomes critically important.

Research suggests that motivation to undertake challenging tasks is influenced by the degree of anthropomorphic appearance in robots. In a recent study, Wang et al. (2025) demonstrated that robots with moderate anthropomorphism elicited greater willingness to accept a challenging task compared to hyper-realistic counterparts, a finding consistent with the “uncanny valley” effect documented in the literature (Kim et al., 2019; Mathur et al., 2020; Mathur and Reichling, 2016). While excessive human-likeness often triggers negative affective responses (Ferrari et al., 2016; Ho and MacDorman, 2010; Müller et al., 2021; Pelau and Ene, 2018; Yogeeswaran et al., 2016; Yu, 2020), moderate anthropomorphism enhances task motivation during cognitively demanding tasks (Kim et al., 2022).

Furthermore, gendered anthropomorphism may also influence people’s motivation to undertake challenging tasks. Although gender cues of robots may give rise to the gender-competence stereotypes described above (Duan et al., 2024; Loideain and Adams, 2020; Otterbacher and Talias, 2017; Perugia and Lisy, 2023), such stereotypes may not necessarily translate to HRI in task-oriented contexts. AI-powered virtual personal assistants like Alexa, Cortana, and Siri are overwhelmingly designed with female-gendered names, voices, and personas (Loideain and Adams, 2020). Similarly, physical service robots already deployed in fields like hospitality or caregiving are often anthropomorphized as female in appearance (Alesich and Rigby, 2017). This feminization of assistive technologies may reinforce perceptions of robots as inherently suited to domestic or service roles. Such design choices could paradoxically subvert gender-competence stereotypes in task-oriented contexts, where users might prioritize functional performance over gender-competence stereotypes when evaluating robotic capabilities. For instance, Carpinella et al. (2017) found that female robots were ascribed higher competence ratings and higher warmth ratings than male robots. Likewise, Kapteijns and Graaf (2024) observed that female-voiced robots were ascribed higher competence than male-voiced robots.

The present study

The present study combined a survey-based experiment (Study 1) and a behavioral experiment (Study 2) to investigate how robot gendering influences challenging task acceptance in human-robot collaboration. The survey-based experiment is a methodology widely validated in social and behavioral research (Aguinis and Bradley, 2014; Atzmüller and Steiner, 2010; Evans et al., 2015). This approach is particularly suited to HRI investigations because it allows independent variables to be manipulated systematically while retaining rigorous control over experimental conditions. Study 1 was part of a larger project (Wang et al., 2025) in which participants were presented with a scenario in which a human-robot team is faced with the choice of whether to accept a challenging task in exchange for an attractive reward. After reading the scenario, participants were asked to imagine themselves collaborating with a humanoid robot partner, shown as either male or female. In the behavioral experiment, participants decided whether to undertake a cognitively demanding computer-based task with the assistance of a robot partner, again depicted as male or female. In both experiments, the robot assumed an assistive role.

Existing literature suggests that female voices and personas are preferred in assistive roles (Nass and Brave, 2005) and that female robots are rated higher in warmth and task collaboration (Carpenter et al., 2009; Siegel et al., 2009). Given these findings, it is reasonable to argue that female robots are perceived as more trustworthy than male robots when performing assistive functions. Furthermore, research indicates that interpersonal trust enhances employee task motivation (Costa et al., 2018; Edmondson, 1999). Building on these foundations, we hypothesized that participants in the female robot partner condition would demonstrate greater willingness to accept a challenging task compared to those in the male robot condition.

Study 1

Methods

Participants

A total of 169 native Chinese students from a public university in southern China were recruited and randomly assigned to either the female robot condition (N = 94, 46 male; mean age = 18.20, SD = 1.19) or the male robot condition (N = 75, 41 male; mean age = 18.24, SD = 0.66). These students were recruited from two classrooms, each representing a different experimental condition. In the absence of a precise effect size estimate, we determined our sample size based on previous research (Kim et al., 2019), which indicated that 53 participants per condition would be sufficient. However, the final sample size was ultimately constrained by the number of available participants attending the classes. To ensure the validity of the experiment, all participants were undergraduate students whose majors were unrelated to psychology or robotics. Notably, a sample consisting solely of university students limits representativeness. All participants volunteered to participate in the experiment and received 5 RMB (1 USD = 7.2 RMB) for participation.

Materials

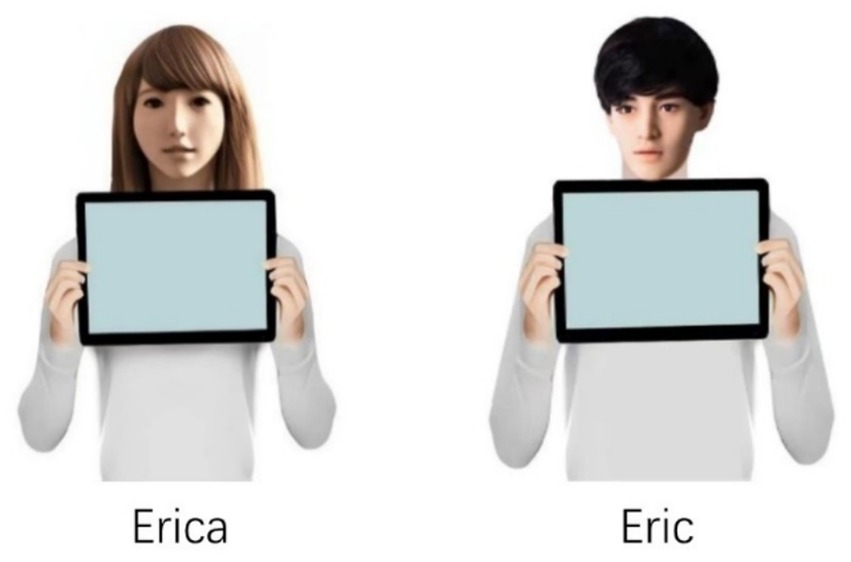

Participants were presented with a scenario in which they imagined themselves as a technician at KC, an IT company, faced with a decision to accept a challenging task in exchange for an attractive reward. Participants were informed that they would collaborate with a robot partner (named Erica or Eric, depicted in Figure 1) assigned to assist in the task. The robot’s capabilities depicted in the scenario were modeled after a real-world AI tool used by an IT company to automate the review of loan application data. Participants were instructed to envision working with this robot to complete the task, leveraging its analytical skills while managing their own responsibilities. The scenario was introduced as follows:

KC is an IT company whose core business is to help banks conduct on-site inspection of information provided by companies applying for loans. KC follows the requirements of banks to collect and review information provided by those companies (information that can prove the real existence of the enterprise, such as the building name plate of those companies). Recently, KC temporarily received a business from a bank to help check information provided by their clients. A task that normally takes months must now be completed in just one—making it urgent and extremely difficult. Technician will complete the task with the help of a robot partner (see the picture below). Technician can decide whether to accept the task, and they can opt out of the task, and it will not affect their performance. Accomplishment of the task will bring additional bonuses.

The female robot, Erica, was sourced from the ABOT database, a resource developed by researchers at Brown University. This database catalogs over 250 real-world anthropomorphic robots designed for research or commercial use, each rated on a 0–100 anthropomorphism scale across three dimensions: body-manipulators, face, and surface. Erica scored 89.6, indicating high anthropomorphism. To ensure parity in anthropomorphism, the male robot, Eric, was designed based on the same three dimensions, closely matching Erica’s human-likeness. In a manipulation check (Wang et al., 2025), adapted from Kim and McGill (2011), participants rated Erica (M = 5.20) and Eric (M = 5.13) similarly on items assessing anthropomorphism (e.g., “The robot looks like a person” and “The robot looks alive”). Both scored significantly higher than Pepper, a robot with moderate anthropomorphic features, which confirms the suitability of the images used in the present study.

Finally, motivation to undertake challenging tasks was operationalized as participants’ willingness to accept a challenging task, assessed using a two-item measure: “I would love to accept this task” and “I’m very interested in taking this task,” r = 0.77, p < 0.001. Responses were given on a 9-point scale ranging from 1 (completely disagree) to 9 (completely agree).

Results

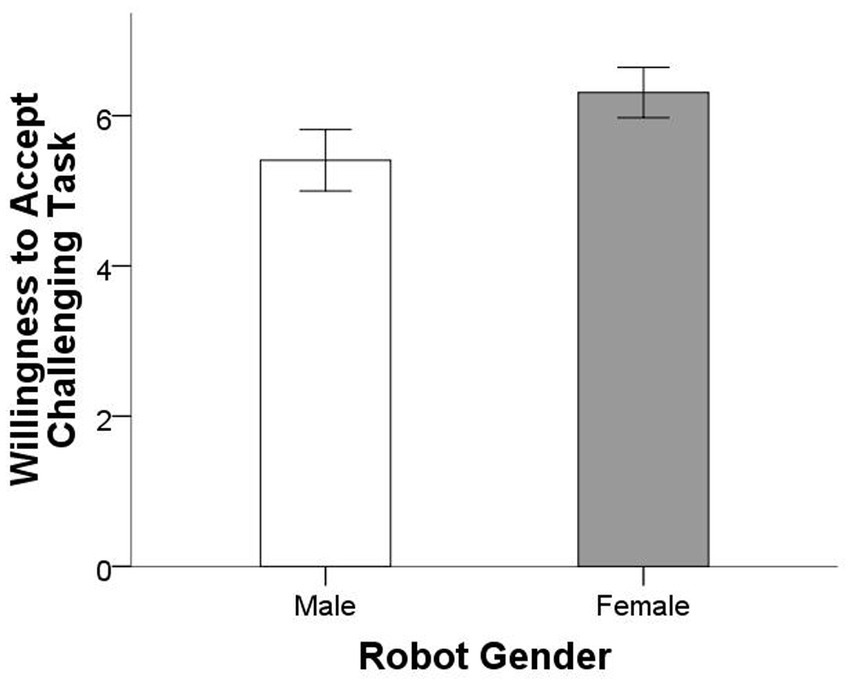

Figure 2 displays the mean willingness to accept the challenging task for the two conditions. The results showed that participants in the female robot condition (M = 6.309, SD = 1.635) reported significantly higher willingness to accept the challenging task than those in the male robot condition (M = 5.407, SD = 1.774), F = 11.770, p = 0.001 (two-tailed), ηp² = 0.066. This confirms our hypothesis that participants in the female robot partner condition would be more willing to accept the challenging task than those in the male robot condition.

Figure 2. Mean willingness to accept challenging task for the two conditions. Error bars show 95% confidence intervals.
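As an illustrative check rather than a reproduction of the original analysis, the Study 1 test statistic can be recomputed from the reported means, standard deviations, and group sizes. The sketch below assumes a one-way between-subjects ANOVA with two groups and a pooled variance estimate; the function name `f_from_summary` is hypothetical.

```python
def f_from_summary(m1, sd1, n1, m2, sd2, n2):
    """F statistic for two independent groups, computed from summary statistics.

    Assumes a one-way between-subjects design with pooled error variance,
    so F equals the squared independent-samples t statistic.
    """
    df_error = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df_error
    se_diff_sq = pooled_var * (1 / n1 + 1 / n2)  # squared SE of the mean difference
    f_value = (m1 - m2) ** 2 / se_diff_sq
    return f_value, df_error

# Values reported for Study 1 (female vs. male robot condition)
f_value, df_error = f_from_summary(6.309, 1.635, 94, 5.407, 1.774, 75)
print(f"F(1, {df_error}) = {f_value:.2f}")
```

Under these assumptions the recomputed value is approximately 11.77 with 167 error degrees of freedom, which agrees with the reported F up to rounding of the summary statistics.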

Study 2

In Study 1, we demonstrated that participants interacting with a female robot exhibited greater willingness to accept a challenging task compared to those interacting with a male robot. Building on this result, Study 2 sought to replicate this finding using a behavioral measure within an experimental setting. This behavioral experiment required active engagement with cognitively demanding tasks. This paradigm offers enhanced ecological validity by capturing participants’ actual behavioral responses rather than responses to a hypothetical scenario.

Methods

Participants

A total of 130 students from a public university in southern China were recruited and randomly assigned to either the female (N = 75, 41 male; mean age = 21.76, SD = 0.87) or the male (N = 85, 35 male; mean age = 21.82, SD = 0.77) robot condition. These students were recruited from two classrooms, each representing a different experimental condition. We determined the sample size for this study using the same method as in Study 1. All participants were undergraduate students whose majors were unrelated to psychology or robotics. All participants volunteered to participate in the experiment and received 20 RMB (1 USD = 7.2 RMB) for their participation.

Experimental procedure

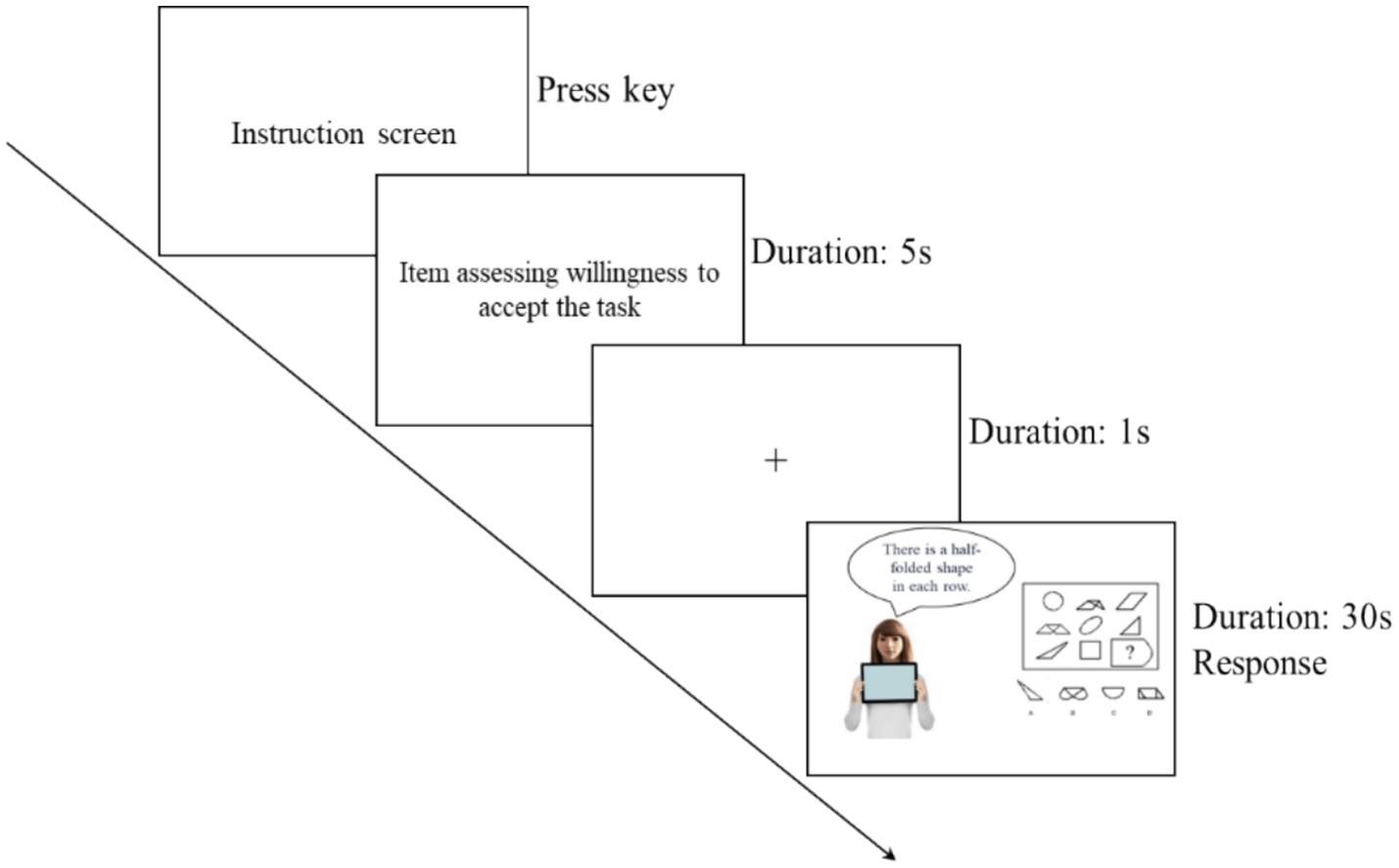

This study was conducted in a controlled computer lab. Upon arrival, participants were assigned predetermined computer stations. The experimenter provided an overview of the task: a cognitive task involving 20 graphical reasoning questions, each with a single correct answer. Participants were instructed to work with a robot assistant that would offer answer prompts throughout the task. Emphasis was placed on responding as quickly and accurately as possible. The session included a practice phase (5 questions) followed by a formal phase (20 questions).

Participants were also shown, via their computer screen, the details of the task, including the practice phase, the formal phase, the robot assistant they would collaborate with, and the task itself (Figure 3). After carefully reading the instruction screen, they were instructed to press the spacebar to proceed to the next screen, on which they completed an item assessing their willingness to accept the task on a 9-point scale anchored at 1 (“very unwilling to perform the task”) and 9 (“very willing to perform the task”). Participants then proceeded to the task. Each trial began with a 1-s fixation cross (“+”) followed by the robot partner and the target question. Participants had 30 s per question to submit answers (via the A, B, C, and D keys); unanswered items were skipped and excluded from accuracy calculations.

Before beginning the formal task, participants completed a practice session to familiarize themselves with the interface and interaction with the robot. The robot was positioned on the left of the screen, while the question appeared on the right. Participants could repeat the practice phase as needed; they advanced to the formal phase once they confirmed understanding of the experimental procedure. Participants were informed that the top 10% of participants with the best task performance would receive additional rewards.

After the cognitive task, participants completed a set of questionnaires measuring robot trust, robot capability, and robot attractiveness.

Materials

The experiment utilized the same robot images (i.e., Erica, Eric) as Study 1 (Figure 1). We administered a challenging task comprising 20 Graphic Reasoning Questions, a task format commonly featured in standardized exams such as China’s Civil Service Exams. These questions evaluate candidates’ logical reasoning, analytical thinking, and spatial–visual processing skills. For the selection process, twenty participants evaluated the perceived difficulty of 40 candidate questions. The formal experimental task incorporated the 20 most challenging items that met dual criteria: ranking in the top 50% for difficulty ratings and requiring completion times exceeding 60 s. The task was supplemented with 5 practice questions for task familiarization and was implemented in E-Prime 3.0.
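The dual selection criteria described above can be expressed as a simple filter. The following is a minimal sketch, not the authors' procedure: the `CandidateItem` structure, its field names, and the assumption that each candidate question has a mean pilot difficulty rating and a mean completion time are all hypothetical, and the data are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class CandidateItem:
    item_id: int
    mean_difficulty: float  # hypothetical: mean pilot rating, higher = harder
    mean_time_s: float      # hypothetical: mean completion time in seconds

def select_items(candidates, n_select, min_time_s=60.0):
    """Keep items in the top half by difficulty whose completion time exceeds min_time_s."""
    ranked = sorted(candidates, key=lambda c: c.mean_difficulty, reverse=True)
    top_half = ranked[: len(ranked) // 2]            # top 50% for difficulty
    eligible = [c for c in top_half if c.mean_time_s > min_time_s]
    return eligible[:n_select]                        # hardest eligible items first

# Invented pilot data for six candidate questions
candidates = [
    CandidateItem(1, 8.5, 75), CandidateItem(2, 7.9, 55), CandidateItem(3, 7.2, 90),
    CandidateItem(4, 5.0, 100), CandidateItem(5, 4.1, 40), CandidateItem(6, 3.3, 62),
]
selected_ids = [c.item_id for c in select_items(candidates, n_select=2)]
print(selected_ids)  # items 1 and 3 pass both criteria
```

In this toy data, item 2 is in the top half for difficulty but is excluded by the time criterion, while item 4 exceeds 60 s but falls outside the top half.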

Inspired by previous research (Lee and Liang, 2016), the ostensibly intelligent robot helped participants by providing text-based suggestions for solving a series of cognitive tasks (Figure 3). This design was intended to simulate real-world human-robot collaboration scenarios, allowing us to examine how gendered anthropomorphism influences willingness to accept a challenging task.

Measures

After the cognitive task, participants completed questionnaires measuring robot trust, robot capability, and robot attractiveness. Robot trust was assessed with two items (“The robot assistant is dependable” and “The robot assistant is trustworthy”), r = 0.84, p < 0.001. Robot capability was assessed with three items (“The robot assistant is smart,” “The robot assistant is knowledgeable,” “The robot assistant is capable”), α = 0.948. Robot appearance attractiveness was assessed with two items (“The appearance of robot assistant is attractive,” “The robot assistant is likeable”), r = 0.610, p < 0.001. Responses to these scales were given on a 9-point scale ranging from 1 (completely disagree) to 9 (completely agree).
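For readers less familiar with the reliability coefficient reported for the three-item capability scale, the sketch below shows how Cronbach's α is computed from item-level responses. The ratings are invented for illustration and are not the study's data; the function name `cronbach_alpha` is likewise a hypothetical helper.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    `items` is a list of k lists; each inner list holds one item's scores
    for the same respondents in the same order.
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent scale totals
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))

# Invented 9-point ratings from five respondents (not the study's data)
smart = [7, 5, 8, 6, 9]
knowledgeable = [6, 5, 8, 7, 9]
capable = [7, 4, 8, 6, 9]
alpha = cronbach_alpha([smart, knowledgeable, capable])
print(f"alpha = {alpha:.3f}")
```

Values close to 1 indicate that the items vary together across respondents, which is the sense in which the reported α = 0.948 reflects high internal consistency.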

Results

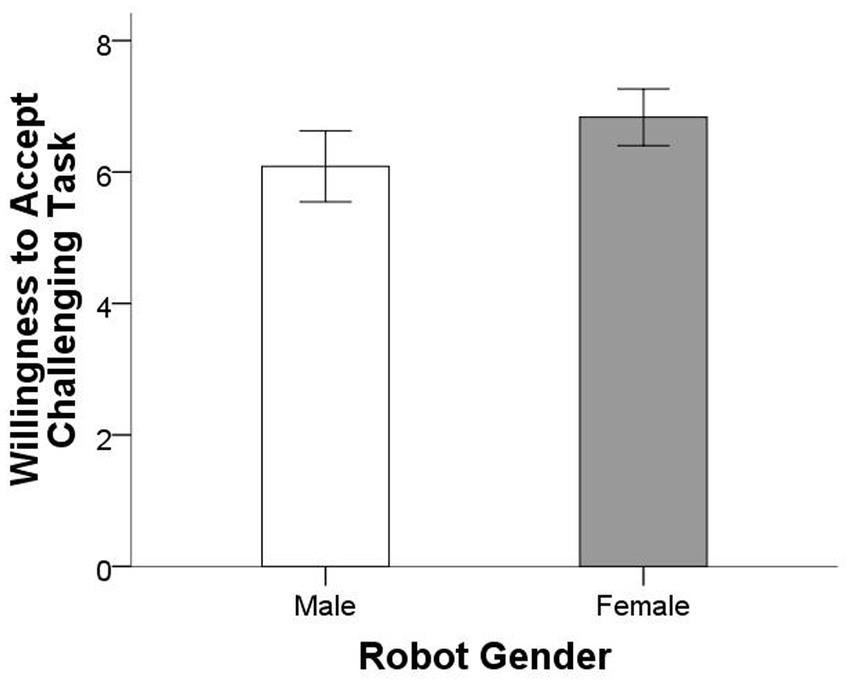

Figure 4 displays the mean willingness to accept the challenging task for the two conditions. The results again revealed a statistically significant difference in willingness to accept the challenging task between the female (M = 6.83, SD = 1.669) and male (M = 6.09, SD = 2.263) robot conditions, F = 4.464, p = 0.037 (two-tailed), ηp² = 0.034. Although the effect was smaller than in Study 1, the finding further supported our hypothesis. Robot trust also differed significantly between the female (M = 6.767, SD = 1.531) and male (M = 5.971, SD = 1.539) robot conditions, F = 8.669, p = 0.004 (two-tailed), ηp² = 0.063, suggesting that the higher willingness to accept the challenging task in the female robot condition could be driven by the perceived trustworthiness of the robot. By contrast, neither robot capability (female: M = 6.200; male: M = 6.024; F = 0.296, p = 0.588 (two-tailed), ηp² = 0.002) nor robot appearance attractiveness (female: M = 5.600; male: M = 5.557; F = 0.022, p = 0.883 (two-tailed), ηp² = 0.000) differed significantly between the two conditions.

Figure 4. Mean willingness to accept challenging task for the two conditions. Error bars show 95% confidence intervals.
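The reported effect sizes can be cross-checked against the reported F statistics, since for a single-degree-of-freedom effect partial eta squared equals F / (F + error df). The sketch below assumes error degrees of freedom of N − 2 for each study (167 for Study 1 and 128 for Study 2); these values are inferred from the stated total sample sizes and are not reported explicitly in the text.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    effect_term = f_value * df_effect
    return effect_term / (effect_term + df_error)

# label: (reported F, reported partial eta squared, assumed error df = N - 2)
reported_tests = {
    "Study 1 willingness": (11.770, 0.066, 167),
    "Study 2 willingness": (4.464, 0.034, 128),
    "Study 2 trust": (8.669, 0.063, 128),
    "Study 2 capability": (0.296, 0.002, 128),
    "Study 2 attractiveness": (0.022, 0.000, 128),
}

recomputed = {}
for label, (f_value, reported, df_error) in reported_tests.items():
    recomputed[label] = partial_eta_squared(f_value, 1, df_error)
    print(f"{label}: reported {reported:.3f}, recomputed {recomputed[label]:.3f}")
```

Under the stated assumption about degrees of freedom, each recomputed value rounds to the reported one.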

We then examined whether robot trust mediated the effect of robot gender on willingness to accept a challenging task, following Baron and Kenny (1986). The effect of robot gender was reduced to non-significance (from b = 0.748, p = 0.037, to b = 0.434, p = 0.217) when robot trust was included in the equation, and robot trust was a significant predictor of willingness to accept a challenging task (b = 0.429, p < 0.001). The Sobel test confirmed the mediation (z = 2.365, p = 0.018), indicating that robot trust mediated the relationship between robot gender and willingness to accept a challenging task.
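To make the mediation test concrete, the sketch below implements the standard Sobel statistic, z = ab / sqrt(b²·SE_a² + a²·SE_b²), where a is the effect of the predictor on the mediator and b is the effect of the mediator on the outcome controlling for the predictor. The standard errors of the two paths are not reported in the text, so the path values passed to `sobel_z` here are purely illustrative placeholders and not the study's estimates. The p-value helper assumes a standard normal reference distribution.

```python
import math

def sobel_z(a, se_a, b, se_b):
    """Sobel test statistic for the indirect effect a * b."""
    return (a * b) / math.sqrt(b**2 * se_a**2 + a**2 * se_b**2)

def two_tailed_p(z):
    """Two-tailed p-value for a standard normal z statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

# Illustrative placeholder paths (NOT the study's estimates)
z_example = sobel_z(a=0.8, se_a=0.3, b=0.4, se_b=0.1)
print(f"example z = {z_example:.3f}, p = {two_tailed_p(z_example):.3f}")

# The p-value reported for the Sobel z can be checked directly from z
print(f"p for z = 2.365: {two_tailed_p(2.365):.3f}")
```

The second call uses only the reported z value and returns a two-tailed p of about 0.018, in line with the value given above.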

Discussion

This study investigates the impact of gender-specific anthropomorphism in robots on human motivation to undertake challenging tasks within human-robot collaborative settings. Through two controlled experimental studies, we demonstrated that participants interacting with female-gendered robot counterparts exhibited a significantly higher willingness to accept challenging tasks compared to those interacting with male-gendered robot counterparts. Our findings reveal a measurable gender-based disparity in challenge acceptance behavior, suggesting that anthropomorphic gender cues in robotic interfaces may substantially influence human decision-making patterns during collaborative task scenarios.

Research consistently demonstrates that gender stereotypes automatically manifest in HRI, with feminine-gendered robots perceived as warmer yet less competent compared to their masculine counterparts, which are typically viewed as more authoritative (Borau, 2024; Eyssel and Hegel, 2012; Perugia and Lisy, 2023). These gender-competence stereotypes have been observed across multiple studies (Duan et al., 2024; Loideain and Adams, 2020; Otterbacher and Talias, 2017; Perugia and Lisy, 2023). However, our experimental findings showed that participants collaborating with a feminine-gendered robot demonstrated significantly greater willingness to undertake challenging tasks than those interacting with a masculine-gendered robot, despite showing no statistically significant difference in their evaluations of robotic capabilities. This suggests that gender stereotypes, while cognitively accessible, may not directly predict functional outcomes in collaborative HRI contexts.

In our experiments, robots were assigned assistive roles, and female-gendered robots received significantly higher trustworthiness ratings compared to their male-gendered counterparts. This finding aligns with prior research demonstrating that robots endowed with female attributes (e.g., voices, personas) are consistently preferred for assistive roles (Nass and Brave, 2005) and perceived as warmer and more effective collaborators in task-oriented contexts (Carpenter et al., 2009; Sandygulova and O’Hare, 2018; Siegel et al., 2009). Our results reinforce the established correlation between feminized robot designs and perceptions of warmth and cooperative utility in caregiving or service-oriented interactions.

Over the past decades, foundational theories of human motivation (Alderfer, 1969; Deci and Ryan, 1985; Hackman and Oldham, 1976; Herzberg et al., 1959; Locke and Latham, 1990; McClelland, 1961; McGregor, 1960; Vroom, 1964) have established critical frameworks for understanding workplace behavior. As contemporary organizational environments increasingly integrate robotic partners, understanding motivational dynamics in HRI becomes crucial for informing effective workplace technology integration. Our research extends this theoretical foundation by revealing significant gender anthropomorphism effects in task engagement. Consistent with prior work demonstrating that interpersonal trust enhances employee task motivation (Costa et al., 2018; Edmondson, 1999), our findings suggest that robot gender promotes task motivation through the mediating role of trust toward robots in HRI. Our finding underscores the importance of intentional gender design in robotic interfaces, particularly for complex task allocation systems where employee engagement directly impacts operational outcomes.

The present study has several limitations. First, its reliance on a convenience sample of university students may restrict the generalizability of findings to broader populations. Given potential disparities in technological familiarity and cognitive schemas about robotics between student groups and the general public, this sampling approach risks overestimating technology acceptance rates. Future investigations should consider encompassing diverse age groups, professional backgrounds, and cross-cultural comparisons to establish the external validity of the results. Second, the experimental paradigm’s dependence on static visual stimuli (i.e., images) inadequately captures the multimodal interaction dynamics inherent to physical human-robot collaboration. Future research should employ real robots in naturalistic settings to simulate authentic collaborative contexts. Third, the study exclusively compared female-gendered and male-gendered robots, both characterized by high anthropomorphism. To fully elucidate the interaction between robot gender and anthropomorphism on individuals’ motivation to undertake challenging tasks, future work should include robots with moderate anthropomorphism and explore gender-neutral designs. Finally, Study 2 did not collect actual performance data. Future research using a similar methodology should include such measures to provide deeper insight into participants’ task motivation.

In summary, this study investigated the impact of gender-specific anthropomorphism in robots on human motivation to undertake challenging tasks. As hypothesized, our two studies indicate that participants interacting with a female-gendered robot demonstrated a higher propensity to accept a challenging task compared to those engaging with male-gendered counterparts. Despite these contributions, the limitations noted above may restrict the generalizability and applicability of our results. This research provides insights into the design of collaborative robots by highlighting the importance of gender cues in optimizing human engagement and performance in task-oriented settings.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Ethics committee of Guangzhou College of Technology and Business. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

YZ: Writing – original draft, Conceptualization, Investigation, Writing – review & editing. LS: Funding acquisition, Formal analysis, Writing – review & editing, Methodology, Conceptualization. LZ: Resources, Conceptualization, Methodology, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Aguinis, H., and Bradley, K. J. (2014). Best practice recommendations for designing and implementing experimental vignette methodology studies. Organ. Res. Methods 17, 351–371. doi: 10.1177/1094428114547952

Alderfer, C. P. (1969). An empirical test of a new theory of human needs. Organ. Behav. Hum. Perform. 4, 142–175. doi: 10.1016/0030-5073(69)90004-X

Alesich, S., and Rigby, M. (2017). Gendered robots: implications for our humanoid future. IEEE Technol. Soc. Mag. 36, 50–59. doi: 10.1109/MTS.2017.2696598

Atzmüller, C., and Steiner, P. M. (2010). Experimental vignette studies in survey research. Methodology 6, 128–138. doi: 10.1027/1614-2241/a000014

Baron, R. M., and Kenny, D. A. (1986). The moderator–mediator variable distinction in social psychological research: conceptual, strategic, and statistical considerations. J. Pers. Soc. Psychol. 51, 1173–1182. doi: 10.1037/0022-3514.51.6.1173

Bartneck, C., Kulić, D., Croft, E., and Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Social Robot. 1, 71–81. doi: 10.1007/s12369-008-0001-3

Borau, S. (2024). Deception, discrimination, and objectification: ethical issues of female AI agents. J. Bus. Ethics 198, 1–19. doi: 10.1007/s10551-024-05754-4

Broadbent, E. (2017). Interactions with robots: the truths we reveal about ourselves. Annu. Rev. Psychol. 68, 627–652. doi: 10.1146/annurev-psych-010416-043958

Broadbent, E., Kumar, V., Li, X., Sollers, J. 3rd, Stafford, R. Q., MacDonald, B. A., et al. (2013). Robots with display screens: a robot with a more humanlike face display is perceived to have more mind and a better personality. PLoS One 8:e72589. doi: 10.1371/journal.pone.0072589

Burgoon, J. K., Bonito, J. A., Lowry, P. B., Humpherys, S. L., Moody, G. D., Gaskin, J. E., et al. (2016). Application of expectancy violations theory to communication with and judgments about embodied agents during a decision-making task. Int. J. Hum.-Comput. Stud. 91, 24–36. doi: 10.1016/j.ijhcs.2016.02.002

Carpenter, J., Davis, J. M., Erwin-Stewart, N., Lee, T. R., Bransford, J. D., and Vye, N. (2009). Gender representation and humanoid robots designed for domestic use. Int. J. Soc. Robot. 1, 261–265. doi: 10.1007/s12369-009-0016-4

Carpinella, C. M., Wyman, A. B., Perez, M. A., and Stroessner, S. J. (2017). The robotic social attributes scale (RoSAS): development and validation. Paper presented at the 2017 12th ACM/IEEE international conference on human-robot interaction (HRI).

Costa, A. C., Fulmer, C. A., and Anderson, N. R. (2018). Trust in work teams: an integrative review, multilevel model, and future directions. J. Organ. Behav. 39, 169–184. doi: 10.1002/job.2213

Deci, E. L., and Ryan, R. M. (1985). The general causality orientations scale: self-determination in personality. J. Res. Pers. 19, 109–134. doi: 10.1016/0092-6566(85)90023-6

Duan, W., McNeese, N., Freeman, G., and Li, L. (2024). Mitigating gender stereotypes toward AI agents through an eXplainable AI (XAI) approach. Proc. ACM Hum.-Comput. Interact. 8. doi: 10.1145/3686969

Duradoni, M., Colombini, G., Russo, P. A., and Guazzini, A. (2021). Robotic psychology: a PRISMA systematic review on social-robot-based interventions in psychological domains. J 4, 664–697. doi: 10.3390/j4040048

Eagly, A. H., and Karau, S. J. (2002). Role congruity theory of prejudice toward female leaders. Psychol. Rev. 109, 573–598. doi: 10.1037/0033-295X.109.3.573

Eagly, A. H., and Wood, W. (1999). The origins of sex differences in human behavior: evolved dispositions versus social roles. Am. Psychol. 54, 408–423. doi: 10.1037/0003-066X.54.6.408

Edmondson, A. (1999). Psychological safety and learning behavior in work teams. Admin. Sci. Q. 44, 350–383. doi: 10.2307/2666999

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi: 10.1037/0033-295X.114.4.864

Evans, S. C., Roberts, M. C., Keeley, J. W., Blossom, J. B., Amaro, C. M., Garcia, A. M., et al. (2015). Vignette methodologies for studying clinicians’ decision-making: validity, utility, and application in ICD-11 field studies. Int. J. Clin. Health Psychol. 15, 160–170. doi: 10.1016/j.ijchp.2014.12.001

Eyssel, F., and Hegel, F. (2012). (S)he’s got the look: gender stereotyping of robots. J. Appl. Soc. Psychol. 42, 2213–2230. doi: 10.1111/j.1559-1816.2012.00937.x

Ferrari, F., Paladino, M. P., and Jetten, J. (2016). Blurring human–machine distinctions: anthropomorphic appearance in social robots as a threat to human distinctiveness. Int. J. Social Robot. 8, 287–302. doi: 10.1007/s12369-016-0338-y

Fink, J. (2012). Anthropomorphism and human likeness in the design of robots and human-robot interaction. Paper presented at the Social Robotics, Berlin, Heidelberg: Springer.

Fiske, S. T., Cuddy, A. J., Glick, P., and Xu, J. (2002). A model of (often mixed) stereotype content: competence and warmth respectively follow from perceived status and competition. J. Pers. Soc. Psychol. 82, 878–902. doi: 10.1037/0022-3514.82.6.878

Hackman, J. R., and Oldham, G. R. (1976). Motivation through the design of work: test of a theory. Organ. Behav. Hum. Perform. 16, 250–279. doi: 10.1016/0030-5073(76)90016-7

Herzberg, F., Mausner, B., and Snyderman, B. (1959). The motivation to work. 2nd Edn. Oxford, England: John Wiley.

Ho, C. C., and MacDorman, K. F. (2010). Revisiting the uncanny valley theory: developing and validating an alternative to the Godspeed indices. Comput. Human Behav. 26, 1508–1518. doi: 10.1016/j.chb.2010.05.015

Huang, M.-H., and Rust, R. T. (2022). A framework for collaborative artificial intelligence in marketing. J. Retail. 98, 209–223. doi: 10.1016/j.jretai.2021.03.001

Kapteijns, A. I., and Graaf, M. D. (2024). Gender-emotion stereotypes in HRI: the effects of robot gender and speech act on evaluations of a robot. Paper presented at the 2024 33rd IEEE international conference on robot and human interactive communication (ROMAN).

Kim, L. H., Domova, V., Yao, Y., and Paredes, P. E. (2022). Effects of a co-located robot and anthropomorphism on human motivation and emotion across personality and gender. Paper presented at the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN) Napoli, Italy.

Kim, S., and McGill, A. L. (2011). Gaming with Mr. slot or gaming the slot machine? Power, anthropomorphism, and risk perception. J. Consum. Res. 38, 94–107. doi: 10.1086/658148

Kim, S. Y., Schmitt, B. H., and Thalmann, N. M. (2019). Eliza in the uncanny valley: anthropomorphizing consumer robots increases their perceived warmth but decreases liking. Mark. Lett. 30, 1–12. doi: 10.1007/s11002-019-09485-9

Lee, S. A., and Liang, Y. (2016). The role of reciprocity in verbally persuasive robots. Cyberpsychol. Behav. Soc. Netw. 19, 524–527. doi: 10.1089/cyber.2016.0124

Locke, E. A., and Latham, G. P. (1990). A theory of goal setting and task performance. Englewood Cliffs, NJ: Prentice Hall.

Locke, E. A., and Latham, G. P. (2006). New directions in goal-setting theory. Curr. Dir. Psychol. Sci. 15, 265–268. doi: 10.1111/j.1467-8721.2006.00449.x

Loideain, N. N., and Adams, R. (2020). From Alexa to Siri and the GDPR: the gendering of virtual personal assistants and the role of data protection impact assessments. Comput. Law Secur. Rev. 36:105366. doi: 10.1016/j.clsr.2019.105366

MacDorman, K. F., and Chattopadhyay, D. (2016). Reducing consistency in human realism increases the uncanny valley effect; increasing category uncertainty does not. Cognition 146, 190–205. doi: 10.1016/j.cognition.2015.09.019

MacDorman, K. F., and Ishiguro, H. (2006). The uncanny advantage of using androids in cognitive and social science research. Interact. Stud. 7, 297–337. doi: 10.1075/is.7.3.03mac

Mathur, M. B., and Reichling, D. B. (2016). Navigating a social world with robot partners: a quantitative cartography of the Uncanny Valley. Cognition 146, 22–32. doi: 10.1016/j.cognition.2015.09.008

Mathur, M. B., Reichling, D. B., Lunardini, F., Geminiani, A., Antonietti, A., Ruijten, P. A., et al. (2020). Uncanny but not confusing: multisite study of perceptual category confusion in the Uncanny Valley. Comput. Human Behav. 103, 21–30. doi: 10.1016/j.chb.2019.08.029

Müller, B. C. N., Gao, X., Nijssen, S. R. R., and Damen, T. G. E. (2021). I, robot: how human appearance and mind attribution relate to the perceived danger of robots. Int. J. Soc. Robot. 13, 691–701. doi: 10.1007/s12369-020-00663-8

Nass, C., and Brave, S. (2005). Wired for speech: How voice activates and advances the human-computer relationship. Cambridge, MA, US: Boston Review.

Nass, C., Moon, Y., Fogg, B. J., Reeves, B., and Dryer, D. C. (1995). Can computer personalities be human personalities? Int. J. Hum.-Comput. Stud. 43, 223–239. doi: 10.1006/ijhc.1995.1042

Nass, C., Steuer, J., and Tauber, E. R. (1994). Computers are social actors. Paper presented at the proceedings of the SIGCHI conference on human factors in computing systems. Boston, Massachusetts, USA. doi: 10.1145/191666.191703

Nomura, T., Kanda, T., and Suzuki, T. (2006). Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI Soc. 20, 138–150. doi: 10.1007/s00146-005-0012-7

Otterbacher, J., and Talias, M. (2017). S/he’s too warm/agentic! The influence of gender on uncanny reactions to robots. Paper presented at the 2017 12th ACM/IEEE international conference on human-robot interaction (HRI).

Pelau, C., and Ene, I. (2018). Consumers’ perception on human-like artificial intelligence devices. Basiq international conference: new trends in sustainable business and consumption 2018, 197–203.

Perugia, G., and Lisy, D. (2023). Robot’s gendering trouble: a scoping review of gendering humanoid robots and its effects on HRI. Int. J. Soc. Robot. 15, 1725–1753. doi: 10.1007/s12369-023-01061-6

Russo, P. A., Duradoni, M., and Guazzini, A. (2021). How self-perceived reputation affects fairness towards humans and artificial intelligence. Comput. Human Behav. 124:106920. doi: 10.1016/j.chb.2021.106920

Sandygulova, A., and O’Hare, G. M. P. (2018). Age- and gender-based differences in children’s interactions with a gender-matching robot. Int. J. Social Robot. 10, 687–700. doi: 10.1007/s12369-018-0472-9

Sheehan, B., Jin, H. S., and Gottlieb, U. (2020). Customer service chatbots: anthropomorphism and adoption. J. Bus. Res. 115, 14–24. doi: 10.1016/j.jbusres.2020.04.030

Siegel, M., Breazeal, C., and Norton, M. I. (2009). Persuasive robotics: the influence of robot gender on human behavior. Paper presented at the 2009 IEEE/RSJ international conference on intelligent robots and systems.

van Straten, C. L., Peter, J., and Kühne, R. (2020). Child–robot relationship formation: a narrative review of empirical research. Int. J. Soc. Robot. 12, 325–344. doi: 10.1007/s12369-019-00569-0

Walliser, J. C., Mead, P. R., and Shaw, T. H. (2017). The perception of teamwork with an autonomous agent enhances affect and performance outcomes. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 61, 231–235. doi: 10.1177/1541931213601541

Wan, E. W., and Chen, R. P. (2021). Anthropomorphism and object attachment. Curr. Opin. Psychol. 39, 88–93. doi: 10.1016/j.copsyc.2020.08.009

Wang, F. L., Chen, R. X., Zhu, Y., and Hu, Y. (2025). The motivational influence of robot appearance: The role of robot anthropomorphism in human willingness to accept challenging task.

Waytz, A., Heafner, J., and Epley, N. (2014). The mind in the machine: anthropomorphism increases trust in an autonomous vehicle. J. Exp. Soc. Psychol. 52, 113–117. doi: 10.1016/j.jesp.2014.01.005

Yogeeswaran, K., Złotowski, J., Livingstone, M., Bartneck, C., Sumioka, H., and Ishiguro, H. (2016). The interactive effects of robot anthropomorphism and robot ability on perceived threat and support for robotics research. J. Hum.-Robot Interact. 5:29. doi: 10.5898/JHRI.5.2.Yogeeswaran

Yu, C. E. (2020). Humanlike robots as employees in the hotel industry: thematic content analysis of online reviews. J. Hosp. Mark. Manag. 29, 22–38. doi: 10.1080/19368623.2019.1592733

Keywords: HRI, anthropomorphism, group process, AI, human–AI interaction (HAII)

Citation: Zhu Y, Su L and Zheng L (2025) Gendered anthropomorphism in human–robot interaction: the role of robot gender in human motivation in task contexts. Front. Psychol. 16:1593536. doi: 10.3389/fpsyg.2025.1593536

Edited by:

Maurizio Mauri, Catholic University of the Sacred Heart, Italy

Reviewed by:

Mirko Duradoni, Unimercatorum University, Italy

Manizheh Zand, Santa Clara University, United States

Copyright © 2025 Zhu, Su and Zheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ling Su, lingpsy@163.com; Lijing Zheng, zhenglijing@gench.edu.cn
