Abstract

Background:

With the rapid proliferation of misinformation on social media, increasing attention has been paid to its psychological and behavioral mechanisms. Emotional valence—particularly the positive or negative tone of information—is often used in constructing misinformation, facilitating its wide dissemination. However, existing findings on how emotional valence influences misinformation sharing remain mixed, especially among adolescent populations. This study explores the impact of information valence on high school students’ willingness to share misinformation and evaluates the effectiveness of a targeted accuracy prompt.

Methods:

Two experiments were conducted. In Experiment 1, 53 high school students completed a news-sharing task involving both true and false headlines with varying emotional valence. Their willingness to share was measured. In Experiment 2, 40 students received a valence-targeted accuracy prompt designed to highlight common characteristics of misinformation. The effectiveness of the intervention in reducing misinformation sharing was then assessed.

Results:

Experiment 1 showed that participants were significantly more willing to share positive headlines than negative headlines, regardless of authenticity, and that information valence had a significant effect on response bias. In Experiment 2, students demonstrated significantly lower willingness to share misinformation after receiving the accuracy prompt than in the no-prompt (control) condition, indicating the effectiveness of this brief and targeted approach.

Conclusion:

Information valence plays a critical role in shaping adolescents’ willingness to share misinformation, with positive content being more readily shared. A brief accuracy prompt intervention tailored to information characteristics and emotional valence can effectively reduce misinformation sharing among high school students. These findings provide theoretical and practical insights into combating misinformation in adolescent populations.

1 Introduction

In the digital era, the rapid spread of misinformation has emerged as a serious concern within online networks. Compared with factual information, misinformation tends to propagate more rapidly and widely, resembling the viral nature of a contagion (Vosoughi et al., 2018). Individuals are easily influenced by misinformation and often contribute to its diffusion by sharing it with others (Keselman et al., 2021). Lim and Perrault (2020) argue that the core issue lies more in the act of sharing than in the creation of misinformation, noting that its potential harm remains limited unless it garners public attention. The widespread dissemination of misinformation presents significant challenges to informed decision-making, particularly in critical domains such as public health (Apuke and Omar, 2021; Lewandowsky et al., 2012; Pennycook et al., 2020b; Vogel, 2017) and emergency management (Gupta et al., 2013; Keim and Noji, 2011; Spiro et al., 2012), thereby underscoring the urgency and necessity of research into the mechanisms underlying misinformation sharing.

A common feature of misinformation is the use of emotional language and appeals designed to provoke emotional reactions, which significantly contribute to its virality (Vosoughi et al., 2018; Chen et al., 2021; Igwebuike and Chimuanya, 2021; Kumar et al., 2021; Song et al., 2019). Brady et al. (2020) explored the psychological mechanisms behind the rapid spread of moral and emotional content, finding that such material tends to capture attention and correlates strongly with sharing behavior on social media. Emotional characteristics, especially valence and arousal, play a pivotal role in individuals’ willingness to share. Through a large-scale analysis of nearly 7,000 New York Times articles and subsequent experimental studies, Berger and Milkman (2012) concluded that content with positive emotional valence increases the likelihood of sharing. People are more inclined to disseminate content that is entertaining, helpful, or emotionally uplifting, driven by a preference to be perceived as spreaders of optimistic messages rather than sources of upsetting or anger-inducing content; positive sharing can elevate others’ moods and yield social rewards.

However, this perspective is not universally accepted. Other studies suggest that audiences show greater interest in negative content, including crises, conflicts, and disasters (Galil and Soffer, 2011; Soroka and McAdams, 2015). Salgado and Bobba (2019) found that negatively toned content on Facebook generates higher user engagement. Supporting this notion, Soroka et al. (2019) conducted an experimental study across 17 countries and found a consistent negativity bias: participants showed greater heart rate variability (HRV) in response to bad news than to good news. This finding implies that negative content is more attention-grabbing and that the preference for such content is cross-culturally robust. These conflicting findings indicate that the emotional valence of content, both positive and negative, merits deeper exploration in relation to misinformation sharing.

Among various interventions to combat misinformation targeted at adolescents, psychological inoculation (Schubatzky and Haagen-Schützenhöfer, 2023) and indicator-based approaches (Hartwig et al., 2024) are commonly employed, yet both face significant limitations. While effective, psychological inoculation interventions are often time-intensive and may fail to engage those with lower cognitive reflection, the very group most vulnerable to misinformation (Pennycook and Rand, 2021). Indicator-based interventions, on the other hand, require large-scale content monitoring and labeling, which is often impractical, and may trigger an implied truth effect, whereby false content that escapes flagging is perceived as more accurate (Pennycook et al., 2020a). These challenges are particularly acute for adolescents, who frequently exhibit underdeveloped critical thinking skills, overconfidence, and high exposure to online misinformation due to extensive social media use (Papapicco et al., 2022). Their heightened vulnerability calls for more feasible and scalable interventions. In this context, targeted accuracy prompts, which deliver concise cues focused on specific features of misinformation, represent a promising alternative. Prompting accuracy before sharing can reduce the spread of misinformation, a conclusion supported by causal evidence from survey experiments and field studies on Twitter (Pennycook et al., 2020b, 2021). Although accuracy prompts have been recognized as an effective intervention, studies such as Gawronski et al. (2023) indicate that their effect sizes tend to be small, potentially due to a lack of specificity in targeting misinformation. Therefore, this study adopts a targeted accuracy prompt approach, focusing on precise cues related to characteristics that may influence adolescents’ sharing of misinformation.
Such prompts are not only easy to implement and scale on social media platforms but may also prove more effective for adolescents than generic reminders, as they reduce cognitive load and directly guide attention toward unreliable content.

This study aims to address these gaps by investigating the effect of emotional valence on adolescents’ willingness to share misinformation and evaluating the effectiveness of accuracy prompt interventions. While considerable research has focused on adult behavior, adolescent sharing of misinformation remains underexplored. This is a critical oversight, especially given that, as of June 2024, China had 188 million underage internet users, accounting for 17.1% of its total online population (according to the 54th China Statistical Report on Internet Development) (China Internet Network Information Center, 2024). Adolescents are at a unique stage of cognitive and emotional development. Their cognitive control abilities are still maturing (Casey et al., 2008), while their social–emotional neural systems, including the amygdala, ventral striatum, orbitofrontal cortex, and medial prefrontal cortex, are highly active (Steinberg, 2010), often leading to emotionally driven and impaired decision-making (van Duijvenvoorde et al., 2010). Furthermore, adolescents frequently lack the digital literacy and social experience necessary to navigate the complex online information landscape. Given the continued prevalence of misinformation on social media (as noted by the China Internet Joint Rumor-Refutation Platform) (Cyberspace Administration of China, 2024), many adolescents remain ill-equipped to verify information authenticity or use fact-checking tools effectively (China Youth Internet Association, 2024).

Therefore, the present study investigates how the emotional valence of information influences high school students’ willingness to share misinformation. Utilizing the true-false news-sharing task paradigm developed by Pennycook et al. (2020b, 2021), we examine adolescent responses within a social media context to identify patterns in misinformation sharing. Building upon these insights, we develop and assess targeted accuracy prompt interventions based on information valence, aiming to reduce the willingness of adolescents to spread misinformation and to contribute practical solutions to the ongoing challenge of digital misinformation.

2 Experiment 1

This experiment aimed to investigate the impact of emotional valence on high school students’ willingness to share misinformation by comparing their willingness to share true versus false news under different valence conditions. A 2 (authenticity: true vs. false) × 2 (emotional valence: positive vs. negative) within-subjects design was employed. All participants were exposed to both positive and negative news items. After viewing each item, they were asked to rate their willingness to share it.

2.1 Methods

2.1.1 Participants and design

The required sample size was estimated using G*Power 3.1.9.2 software. Based on a small-to-medium effect size (f = 0.20, with α = 0.05 and 1 − β = 0.80), the minimum required sample size was determined to be 36. To meet this requirement, 56 high school students (28 males and 28 females; age range: 16–18 years; M = 16.08, SD = 0.51) with normal or corrected-to-normal vision were recruited. All participants were self-reported active social media users who regularly share content online; those who were inactive on social media or shared content less than once per week were excluded. All participants provided informed consent, and the study was approved by the Ethics Committee of Shandong University of Aeronautics.

The experiment used a 2 × 2 within-subjects design. In addition to examining news-sharing intentions, we applied signal detection theory (SDT) to assess participants’ sensitivity to information authenticity. Following Batailler et al. (2022), we measured discrimination sensitivity (d′) and response bias (c) to provide nuanced insight into participants’ sharing decisions, with particular focus on the response bias metric c. Although both d′ and c are derived from hit rates and false-alarm rates, the two metrics are conceptually independent (Macmillan and Creelman, 2004; Stanislaw and Todorov, 1999).

The dependent variables were as follows: (1) Sharing intention: participants rated their willingness to share each news item on a 6-point Likert scale (1 = extremely unlikely to share, 6 = extremely likely to share). (2) Discrimination sensitivity (d′): calculated as d′ = z(H) − z(FA), where H is the proportion of true headlines a participant chose to share (hit rate), FA is the proportion of false headlines shared (false-alarm rate), and z(·) is the inverse of the standard normal cumulative distribution function. (3) Response bias (c): calculated as c = −½[z(H) + z(FA)]. For the SDT analysis, sharing intentions were binarized (ratings 1–3 = “not share”; ratings 4–6 = “share”).
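As an illustration of how the indices above can be computed for one participant in one condition, the following minimal Python sketch binarizes the 6-point ratings at the 3.5 cutoff and derives d′ and c. It assumes a log-linear correction (adding 0.5 to each count and 1 to each total) so that hit and false-alarm rates never reach 0 or 1, where z is undefined; the article does not report which correction, if any, was used, and the function names are hypothetical.

```python
from statistics import NormalDist


def share(rating: int, cutoff: float = 3.5) -> bool:
    """Binarize a 6-point rating: 1-3 -> not share, 4-6 -> share."""
    return rating > cutoff


def sdt_indices(true_ratings, false_ratings):
    """Return (d_prime, c) for one participant in one condition.

    Hits = true headlines shared; false alarms = false headlines shared.
    Assumption: log-linear correction so rates stay strictly inside (0, 1).
    """
    z = NormalDist().inv_cdf  # inverse standard normal CDF
    hits = sum(share(r) for r in true_ratings)
    false_alarms = sum(share(r) for r in false_ratings)
    h = (hits + 0.5) / (len(true_ratings) + 1)
    fa = (false_alarms + 0.5) / (len(false_ratings) + 1)
    d_prime = z(h) - z(fa)
    c = -0.5 * (z(h) + z(fa))  # negative c = liberal (share-prone) bias
    return d_prime, c
```

When a participant shares true and false headlines at the same rate, d′ is 0 and c is negative if most items are shared (a liberal bias); sharing every true headline and no false headline gives a large positive d′ with c close to 0.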

2.1.2 Materials

True and false headlines were selected based on established methods (Pennycook et al., 2020b, 2021). True headlines were sourced from reputable media outlets such as Xinhua News and People’s Daily Online, while false headlines were verified as misinformation by platforms including the China Internet Joint Rumor-Refutation Platform, Xinhua News Agency App, and Tencent Jiaozhen.

An initial pool of 30 true and 30 false headlines was evaluated by 40 high school students on valence (1 = very negative; 7 = very positive), arousal (1 = very calm; 7 = very excited), and familiarity (1 = very unfamiliar; 7 = very familiar). Based on these ratings, 40 headlines were selected for the formal experiment (20 true, 20 false), equally split between positive and negative valence. Each was presented in a “picture + headline” format mimicking real social media content (see Figure 1 for sample headlines). The visual stimuli presented in the figures of this manuscript are AI-generated illustrations created for the purpose of publication. They serve as scientifically accurate representations of the original stimulus set used during data collection, which could not be published due to copyright restrictions. All data analyses, results, and conclusions are based solely on the participants’ responses to the original stimuli.

Figure 1

![Figure 1 was created using jimeng AI, a professional AI image generation tool, [https://jimeng.jianying.com/], the left panel using the prompt “[The mother has suffered from ALS for many years. Her 20-year-old son is feeding her. The family of three is warm and touching. News report illustrations, in cartoon style].” And the right using the prompt “[A female kindergarten teacher was giving medicine to a boy. News report illustrations, in cartoon style].” These images were created to accurately depict the key features of the original stimuli used in the actual experiment.](https://www.frontiersin.org/files/Articles/1664890/xml-images/fpsyg-16-1664890-g001.webp)

Sample headlines (the left panel presents a real positive news headline: Chinese Gen Z Son’s 12-Year ALS Care for Mother Touches Millions. And the right displays a false negative news headline: Shanxi Kindergarten Teacher Allegedly Force-Feeds Birth Control Pills to Male Pupil).

Independent-samples t-tests confirmed significant differences in valence between positive and negative headlines for both true and false headlines. For true headlines, t(18) = 33.19, p < 0.001 (positive: M = 6.09, SD = 0.34; negative: M = 2.29, SD = 0.13); for false headlines, t(18) = 15.86, p < 0.001 (positive: M = 5.93, SD = 0.50; negative: M = 2.47, SD = 0.47). Positive and negative headlines did not differ significantly in arousal or familiarity for either authenticity type. For arousal, true headlines: t(18) = 0.54, p > 0.05 (positive: M = 4.86, SD = 0.59; negative: M = 5.01, SD = 0.70); false headlines: t(18) = 0.07, p > 0.05 (positive: M = 4.81, SD = 0.93; negative: M = 4.84, SD = 0.45). For familiarity, true headlines: t(18) = 0.47, p > 0.05 (positive: M = 4.12, SD = 0.70; negative: M = 4.27, SD = 0.72); false headlines: t(18) = 0.47, p > 0.05 (positive: M = 4.20, SD = 0.78; negative: M = 4.04, SD = 0.79).
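The valence manipulation checks can be re-derived from the reported summary statistics. This Python sketch computes Student's independent-samples t with pooled variance; it assumes the headline is the unit of analysis with 10 items per valence cell, which is consistent with the reported df of 18. The recomputed values land close to, but not exactly on, the reported t(18) = 33.19 and 15.86, presumably because the published means and SDs are rounded.

```python
from math import sqrt


def t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t from group means and SDs (pooled variance)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    return (m1 - m2) / se, df


# Valence ratings, 10 positive vs. 10 negative true headlines (values from the text)
t_true, df = t_from_summary(6.09, 0.34, 10, 2.29, 0.13, 10)
# Valence ratings, 10 positive vs. 10 negative false headlines
t_false, _ = t_from_summary(5.93, 0.50, 10, 2.47, 0.47, 10)
print(df, round(t_true, 2), round(t_false, 2))
```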

2.1.3 Procedure

Participants were informed that they would be evaluating real social media news screenshots. The experiment was administered using E-Prime 2.0. Each trial began with a fixation cross “+” displayed for 1,000 ms, followed by a news screenshot. Participants then rated their willingness to share it using the 6-point scale. The next item was presented automatically upon response. The session lasted approximately 10–15 min. Responses were automatically recorded.

Three participants were excluded due to inattentive responses (mean reaction time <500 ms), resulting in a final sample of 53 participants (25 males, 28 females).

2.2 Results

A 2 (authenticity: true vs. false) × 2 (valence: positive vs. negative) repeated-measures ANOVA was conducted. The main effect of authenticity was not significant, F(1, 52) = 0.35, p > 0.05, ηp² = 0.01. However, the main effect of valence was significant, F(1, 52) = 17.44, p < 0.001, ηp² = 0.25, indicating that participants were more willing to share positive headlines (M = 3.66, SD = 1.04) than negative ones (M = 3.00, SD = 0.98).

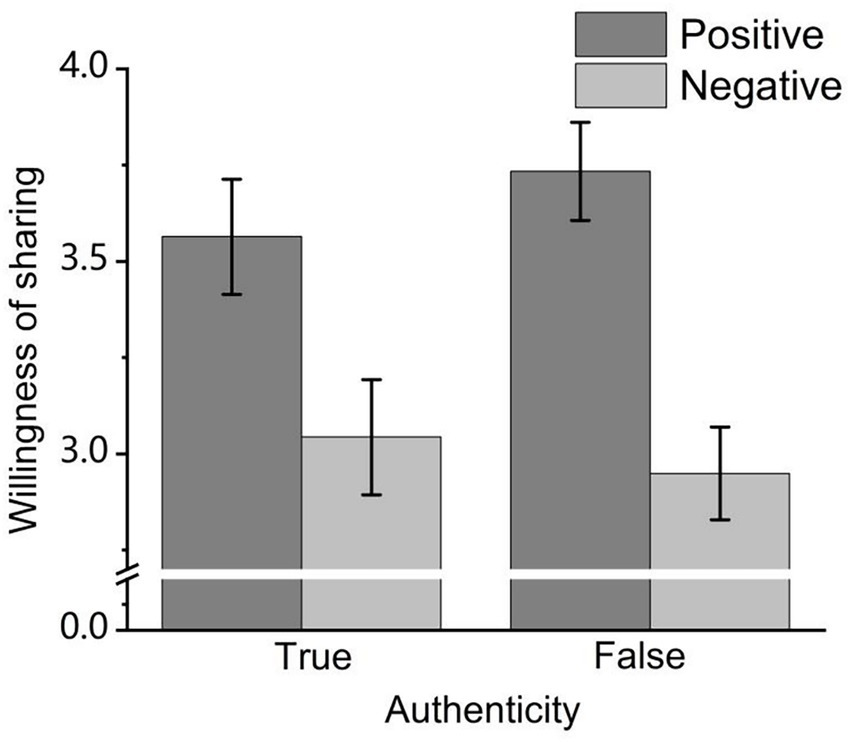

The interaction between authenticity and valence was marginally significant, F(1, 52) = 3.89, p = 0.054, ηp² = 0.07. Simple effect analyses revealed significant differences in sharing intention between positive and negative headlines for both true [F(1, 52) = 7.03, p < 0.05, ηp² = 0.12] and false [F(1, 52) = 31.91, p < 0.001, ηp² = 0.38] headlines, with a stronger effect for false headlines (see Figure 2).
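The partial eta squared values reported for Experiment 1 can be recovered from each F statistic and its degrees of freedom via ηp² = F·df_effect / (F·df_effect + df_error). A short Python check, using only the F values and dfs reported above:

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# F values reported for Experiment 1 (df_effect = 1, df_error = 52)
reported = [("valence", 17.44), ("interaction", 3.89),
            ("valence | true", 7.03), ("valence | false", 31.91)]
for label, f in reported:
    print(label, round(partial_eta_squared(f, 1, 52), 2))
```

The same function reproduces the Experiment 2 intervention effect, F(1, 39) = 12.56, as ηp² ≈ 0.24.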

Figure 2

Mean willingness to share headlines across different valences in Experiment 1.

Subsequently, discrimination sensitivity (d′) and response bias (c) were calculated, followed by regression analyses examining the effect of emotional valence on these two indicators. Emotional valence did not significantly predict discrimination sensitivity, β = −0.09, F(1, 104) = 0.76, p > 0.05, but it had a significant effect on response bias, β = −0.33, F(1, 104) = 12.26, p < 0.01: response bias values were lower for positive than for negative headlines. A positive value of c reflects a conservative bias (i.e., a tendency not to share, regardless of authenticity), while a negative value of c reflects a liberal bias (i.e., a tendency to share, regardless of authenticity). This suggests that participants were more inclined to share information when it was positively valenced, irrespective of whether it was true or false.
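With a single binary predictor, the standardized regression coefficient β equals the Pearson correlation r between predictor and outcome, and the model F with (1, N − 2) df is r²(N − 2)/(1 − r²), which is also the square of the two-group t statistic. The sketch below illustrates this with hypothetical data (valence coded 0 = negative, 1 = positive; outcome = response bias c). It assumes, as the reported F(1, 104) suggests, one c value per participant per valence condition (53 × 2 = 106 observations) treated as independent observations; the numbers are invented for illustration and are not the study's data.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def simple_regression_f(x, y):
    """Standardized slope, F statistic, and (df1, df2) for one-predictor OLS."""
    n = len(x)
    beta = pearson_r(x, y)  # one predictor: standardized beta equals r
    f = beta ** 2 * (n - 2) / (1 - beta ** 2)
    return beta, f, (1, n - 2)


# Hypothetical data: 0 = negative, 1 = positive valence; outcome = response bias c
valence = [0, 0, 0, 0, 1, 1, 1, 1]
bias = [0.6, 0.3, 0.5, 0.2, -0.1, -0.4, 0.1, -0.5]
beta, f, dfs = simple_regression_f(valence, bias)
print(round(beta, 2), round(f, 2), dfs)
```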

To validate the robustness of the binarized classification approach, we performed ROC curve analysis. The AUC was 0.627 (95% CI: 0.521–0.733) for the positive condition and 0.663 (95% CI: 0.560–0.766) for the negative condition; the difference between the two (0.036) was not significant (p = 0.632). These findings support the hypothesis that valence primarily affects response bias rather than discrimination sensitivity. Specifically, the positive condition exhibited a liberal response bias (c = −0.357), whereas the negative condition showed a conservative response bias (c = 0.419), a significant difference of 0.776. Threshold sensitivity analysis further supported the choice of the 3.5 cutoff, indicating that the binarized classification method is robust.
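One common way to compute an AUC that does not depend on any single cutoff is the Mann–Whitney formulation: the probability that a randomly chosen true headline receives a higher sharing rating than a randomly chosen false headline, counting ties as one half. The sketch below assumes this is what the AUCs above index (true headlines as the positive class, ratings on the full 6-point scale); the article does not state how its AUCs or confidence intervals were computed, and no CI is computed here.

```python
def auc(pos_scores, neg_scores):
    """AUC as P(pos > neg) + 0.5 * P(tie), computed over all pos/neg pairs."""
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical sharing ratings (1-6) for true vs. false headlines
print(auc([5, 4, 6, 3], [2, 4, 3, 1]))
```

Because every rating pair is compared, an AUC of 0.5 means the ratings carry no information about authenticity, regardless of where a share/not-share cutoff is placed.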

2.3 Discussion of experiment 1

The findings of Experiment 1 revealed that information authenticity did not significantly affect sharing intentions, which is consistent with previous research indicating that individuals often struggle to differentiate between true and false information when deciding what to share online. More importantly, emotional valence significantly influenced high school students’ willingness to share misinformation, with participants showing a significantly higher willingness to share positive headlines compared to negative ones. This aligns with earlier studies suggesting that emotionally positive content is more likely to be shared (Berger and Milkman, 2012), and the current results extend this pattern to the adolescent population.

Although the interaction between authenticity and valence was only marginally significant, simple effect analyses revealed that the difference in willingness to share positive vs. negative headlines was significant for both true and false information. Notably, this effect was stronger for false headlines, suggesting that positively valenced misinformation may be especially compelling and likely to be shared by high school students. This pattern may be explained by the entertaining or altruistic qualities of some of the positively framed misinformation used in the study (for example, stories about miraculous rescues, community donations, or heartwarming technological advancements), which may make such items more emotionally engaging or socially rewarding to share.

Ceylan et al. (2023) posit that misinformation sharing is fundamentally habitual, a perspective that helps explain the current findings. Adolescents’ preference for sharing positive content may reflect not merely valence-based evaluation but, more fundamentally, affectively cued habitual automaticity. This aligns with the habit-goal interface theory (Wood and Rünger, 2016): positive valence serves as a contextual trigger that activates preexisting sharing scripts, while the “single-tap sharing” affordance of social media platforms reinforces habit loops. Receiving positive feedback (e.g., like notifications) on social media activates reward-processing regions, including the striatum and ventral tegmental area (Sherman et al., 2018). Crucially, adolescents’ heightened sensitivity to peer evaluation motivates impression management through sharing positive content. Over time, this may form a situation-response association that causes sharing behavior to bypass careful evaluation.

Additionally, the results from the signal detection theory (SDT) analysis provide further insight: while emotional valence did not significantly impact participants’ ability to distinguish true from false information (i.e., discrimination sensitivity, d′), it did have a significant effect on their response bias. Specifically, participants demonstrated a stronger bias toward sharing positively valenced information, regardless of its truthfulness. This suggests that even when individuals are capable of identifying false information, they may still choose to share it if it carries a positive emotional tone—potentially prioritizing emotional resonance or social value over accuracy. This tendency contributes to the amplification and spread of misinformation on social media platforms.

3 Experiment 2

Experiment 1 demonstrated that emotional valence significantly influences high school students’ willingness to share misinformation, with a preference for sharing positively valenced headlines. These results suggest that adolescents may overlook the authenticity of information when deciding to share it. Experiment 2 aimed to test the effectiveness of an accuracy prompt intervention, in which participants were encouraged to consider the accuracy of emotionally positive headlines before making sharing decisions.

3.1 Methods

3.1.1 Participants and design

The required sample size was estimated using G*Power 3.1.9.2 software. Assuming a small-to-medium effect size (f = 0.20), with α = 0.05 and (1 − β) = 0.80, the analysis yielded a minimum required sample size of 36 participants. To meet this requirement and allow for possible exclusions, 40 high school students (18 males, 22 females; M = 15.53 years, SD = 0.68, age range: 15–17) were recruited, none of whom had participated in Experiment 1. All participants had normal or corrected-to-normal vision. All participants were self-reported active social media users who regularly share content online; those who were inactive on social media or shared content less than once per week were excluded. Informed consent was obtained prior to the experiment, and ethical approval was granted by the Ethics Committee of Shandong University of Aeronautics.

The experiment used a 2 (Authenticity: true vs. false) × 2 (Intervention: pre- vs. post-intervention) within-subjects design. Each participant read 20 headlines divided into two phases: 10 headlines were presented without intervention, and the remaining 10 with the accuracy prompt intervention. The dependent variable was willingness to share each headline on social media, rated on a 6-point Likert scale (1 = extremely unlikely, 6 = extremely likely).

3.1.2 Materials

The headlines used in this study were selected from Experiment 1. Given that participants exhibited a significantly higher willingness to share positive headlines, the intervention focused solely on positive content to avoid floor effects associated with negative headlines. A total of 20 positive headlines (10 true, 10 false) were included, each formatted as a screenshot combining an image and a headline, mimicking typical social media posts (see Figure 3 for sample headlines).

Figure 3

![Figure 3 was created using jimeng AI, a professional AI image generation tool, [https://jimeng.jianying.com/], the left panel using the prompt “[When a fire broke out, police officers rushed into the scene to put out the fire and save people. News report illustrations, in cartoon style].” And the right using the prompt “[A commemorative photo of a cat with an honorary certificate. News report illustrations, in cartoon style].” These images were created to accurately depict the key features of the original stimuli used in the actual experiment.](https://www.frontiersin.org/files/Articles/1664890/xml-images/fpsyg-16-1664890-g003.webp)

Sample headlines (the left panel presents a real positive news headline: Police Dash into Burning Building to Rescue Residents, Fight Blaze in Shanghai. And the right displays a false positive news headline: “Claw-enforcement”: Adopted Stray Cat in Foshan Helps Crack Case, Gets Award).

3.1.3 Procedure

Participants were informed that they would be viewing screenshots of information from social media. The experiment was administered using E-Prime 2.0 software and conducted in two phases.

In Phase 1 (pre-intervention), a fixation cross (“+”) appeared for 1,000 ms, followed by presentation of the first 10 headlines. After each headline, participants rated their willingness to share using the 6-point scale. Once a response was made, the next headline appeared automatically.

In Phase 2 (post-intervention), participants first read the following instruction: “Some information on social media is true, while some is false. False information is sometimes especially positive and appealing. Please carefully scrutinize such content before deciding to share.” Following this, the remaining 10 headlines were presented, and participants again rated their willingness to share each item.

To control for order effects, each headline appeared with equal frequency across the two phases, and their presentation order was fully counterbalanced across all participants. Specifically, each headline was presented in the pre-intervention phase for half of the participants and in the post-intervention phase for the other half. The entire task took approximately 5–10 min, and responses were recorded automatically.
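The counterbalancing scheme described above can be sketched as follows. This is a hypothetical Python illustration, not the study's E-Prime script: it assumes the 20 positive headlines are split into two fixed sets of 10 (5 true and 5 false each), that the sets swap phases on alternate participants, and that order within each phase is shuffled with a per-participant seed. The headline IDs are placeholders.

```python
import random

# Hypothetical headline IDs: T = true positive, F = false positive
TRUE_ITEMS = [f"T{i}" for i in range(1, 11)]
FALSE_ITEMS = [f"F{i}" for i in range(1, 11)]
# Assumption: each set holds 5 true and 5 false headlines
SET_A = TRUE_ITEMS[:5] + FALSE_ITEMS[:5]
SET_B = TRUE_ITEMS[5:] + FALSE_ITEMS[5:]


def phase_lists(participant_index: int, seed: int = 0):
    """Return (pre_intervention, post_intervention) headline lists for one participant."""
    # Even-numbered participants see SET_A first; odd-numbered see SET_B first
    if participant_index % 2 == 0:
        pre, post = SET_A[:], SET_B[:]
    else:
        pre, post = SET_B[:], SET_A[:]
    rng = random.Random(seed * 1000 + participant_index)  # reproducible per participant
    rng.shuffle(pre)
    rng.shuffle(post)
    return pre, post
```

Across 40 participants, each headline then appears in the pre-intervention phase for exactly 20 participants and in the post-intervention phase for the other 20.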

3.2 Results

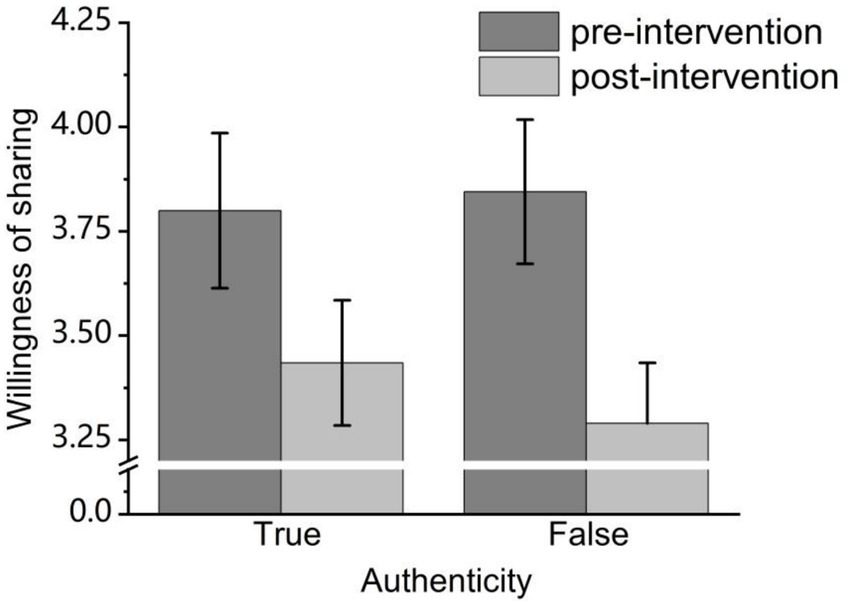

A 2 (Authenticity: true vs. false) × 2 (Intervention: none vs. accuracy prompt) repeated-measures ANOVA was conducted. The main effect of authenticity was not significant, F(1, 39) = 0.23, p > 0.05, ηp² = 0.006. The main effect of the intervention was significant, F(1, 39) = 12.56, p < 0.05, ηp² = 0.24, 95% CI [0.20, 0.72], which constitutes a large effect size according to Cohen’s (1988) standards: willingness to share was significantly lower in the accuracy prompt condition than in the control (pre-intervention) condition. Specifically, mean willingness to share false headlines decreased from 3.85 in the control condition to 3.29, and mean willingness to share true headlines decreased from 3.80 to 3.44. The interaction between authenticity and intervention was not significant (see Figure 4).

Figure 4

Mean willingness to share headlines across different conditions in Experiment 2.

Subsequently, discrimination sensitivity (d′) and response bias (c) were calculated, followed by regression analyses examining the effect of the accuracy prompt intervention on these two indicators. The intervention did not significantly predict discrimination sensitivity, β = −0.01, F(1, 78) = 0.02, p > 0.05, but it had a significant effect on response bias, β = −0.34, F(1, 78) = 10.49, p < 0.01. This indicates that the accuracy prompt intervention did not improve adolescents’ ability to distinguish between true and false information; rather, it generally reduced their intention to share positive content, regardless of whether it was true or false.

3.3 Discussion of experiment 2

The findings from Experiment 2 revealed that the accuracy prompt intervention significantly reduced participants’ willingness to share information, with a large effect size (ηp² = 0.24). This suggests that interventions prompting individuals to reflect on information accuracy, especially for emotionally positive content, can effectively curb the spread of misinformation.

While the main effect of authenticity was not significant, this result may indicate a spillover effect of the intervention: participants became more cautious overall, reducing their willingness to share both false and true headlines. Although not statistically significant, the interaction trend suggests that the reduction in sharing was greater for false headlines, implying the intervention may be more effective in targeting misinformation. The results from SDT indicate that the primary mechanism of this intervention may not involve enhancing cognitive discriminability, but rather inducing a more cautious response bias in sharing behavior. This shift in response bias may hold particular value for adolescent populations, whose cognitive reflection abilities are still developing. Compared to interventions requiring complex cognitive processing and factual knowledge—such as inoculation or fact-checking—providing simple, specific sharing behavior prompts (e.g., targeting features like “positiveness”) better aligns with adolescents’ cognitive characteristics and imposes a lower cognitive load. Consequently, this approach may offer greater feasibility and scalability in real-world applications.

In addition, given that misinformation often poses a greater threat than the suppression of truthful content, this minor decrease in sharing true information might be a reasonable trade-off. In real-world social media contexts, such accuracy-prompt-based interventions could offer substantial societal benefits by effectively reducing the dissemination of misinformation among adolescents and potentially broader populations.

4 General discussion

This study conducted two experiments to examine the impact of emotional valence on high school students’ willingness to share misinformation, as well as the effectiveness of accuracy prompt interventions targeting emotional valence in reducing such willingness. In both experiments, participants completed only the information-sharing task and were never asked to judge the truthfulness of the headlines. This choice was deliberate: empirical research has shown that judging truthfulness is itself an intervention that can alter subsequent performance in information-sharing tasks (Pennycook et al., 2020b, 2021), and a truthfulness judgment task may not capture the motivations that drive sharing decisions in real social contexts. Omitting it was intended to approximate the decision-making patterns of everyday information interactions as closely as possible, to reduce biases arising from a mismatch between experimental tasks and real-world behavior, and thereby to enhance the ecological validity of the conclusions and their generalizability to information dissemination in natural settings.

Results from Experiment 1 demonstrated that emotional valence significantly influenced students’ sharing intentions, with a notably higher tendency to share positive than negative information. This finding is consistent with previous research on emotional valence and sharing behavior (Berger and Milkman, 2012) and suggests that the effect extends to adolescents. Experiment 2 revealed that accuracy prompt interventions targeting emotional valence significantly reduced students’ willingness to share misinformation: sharing intentions in the accuracy prompt condition were markedly lower than in the control condition, with a large effect size. Collectively, these findings contribute to the experimental literature on emotional valence and misinformation sharing and enrich research on misinformation interventions among adolescents.

Specifically, emotional valence influenced response tendencies: students were more likely to share positive content regardless of its authenticity. Signal detection theory (SDT) analysis revealed that emotional valence had no significant effect on discrimination sensitivity (d’) but significantly affected response bias (c), indicating a general tendency to share emotionally positive content more readily. Two factors may account for this:

First, previous research has shown that positive emotions increase credulity, whereas negative emotions can enhance skepticism (Forgas, 2019). This has been attributed to the way emotions shape cognitive processing: positive emotions promote heuristic, fluency-based thinking, whereas negative emotions elicit more deliberate, data-driven processing strategies (Forgas and Eich, 2012; Fredrickson, 2001). Positive headlines may thus seem more credible and encourage intuitive processing, leading adolescents to share them more readily.

Second, sharing on social media involves value-based decision-making. Research indicates that people weigh both content-related factors (Vosoughi et al., 2018; Cappella et al., 2015) and social influences, such as perceived norms and peer approval (Ihm and Kim, 2018; Scholz et al., 2020), when deciding whether to share information. According to social exchange theory (SET), users seek intangible social rewards, such as reputation, through online sharing (Radecki and Spiegel, 2020; Wu et al., 2006). Adolescents are particularly sensitive to peer evaluation (Oh and Syn, 2015; Watson and Friend, 1969; Jackson et al., 2002) and often fear negative social judgment.

Sharing positive information can therefore earn more favorable social evaluations, encouraging adolescents to share positive content while avoiding negative information. Disseminating positive content may also lift others’ moods or yield social rewards (Berger and Milkman, 2012). This value-driven motivation may outweigh the desire for accuracy, prompting adolescents to share positively biased misinformation.
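The following Python sketch makes the distinction between d’ and c concrete. It computes both indices for one participant from counts of shared headlines, using the standard definitions d’ = z(H) − z(F) and c = −[z(H) + z(F)]/2. Here H and F are the hit and false-alarm rates and z is the inverse standard normal CDF (Stanislav and Todorov, 1999). The sketch assumes binary share/not-share responses. It treats sharing a true headline as a hit and sharing a false headline as a false alarm, and it applies a log-linear correction for extreme rates. These choices, the function name `sdt_measures`, and the counts in the example are hypothetical illustrations. They are not the study’s documented scoring procedure or data.

```python
from statistics import NormalDist


def sdt_measures(shared_true, n_true, shared_false, n_false):
    """Return (d_prime, c) for one participant in one valence condition.

    Hypothetical scoring scheme (not the study's documented procedure):
      hit         = sharing a true headline
      false alarm = sharing a false headline
    A log-linear correction (add 0.5 to each count and 1 to each total)
    keeps rates strictly between 0 and 1 so the z-transform stays finite.
    """
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal distribution
    hit_rate = (shared_true + 0.5) / (n_true + 1)
    fa_rate = (shared_false + 0.5) / (n_false + 1)
    d_prime = z(hit_rate) - z(fa_rate)   # sensitivity: true vs false discrimination
    c = -(z(hit_rate) + z(fa_rate)) / 2  # bias: negative = more liberal sharing
    return d_prime, c


# Made-up counts for one hypothetical participant: 16 true and 16 false
# headlines per valence condition (illustrative numbers, not study data).
d_pos, c_pos = sdt_measures(shared_true=12, n_true=16, shared_false=9, n_false=16)
d_neg, c_neg = sdt_measures(shared_true=6, n_true=16, shared_false=3, n_false=16)

print(f"positive: d'={d_pos:.2f}, c={c_pos:.2f}")
print(f"negative: d'={d_neg:.2f}, c={c_neg:.2f}")
```

With these made-up counts, d’ is similar in the two conditions. By contrast, c is negative (liberal) for positive headlines and positive (conservative) for negative headlines. This is the kind of pattern in which valence shifts the response criterion rather than discriminability.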

The interaction between authenticity and information valence was marginally significant. Simple effects analysis showed that willingness to share positive versus negative headlines differed significantly for both true and false headlines, with a more pronounced difference for false headlines. This suggests that authenticity may modulate the effect of valence on high school students’ sharing of misinformation. One possible reason for the significantly higher sharing of positive false information compared with negative false information is that some of the positive content used in the study was entertaining or altruistic in nature. Prior research indicates that when information is entertaining (Altay et al., 2022) or perceived as helpful to others, individuals are more likely to share it without verifying its accuracy, believing that doing so will not harm their reputation. This increases the likelihood of such content being shared on social media (Acerbi, 2019; Sampat and Raj, 2022). Thus, even if individuals can discern true from false headlines, they may prioritize emotional or social value over factual accuracy when sharing positive information, contributing to the spread of misinformation.

Experiment 2 employed a targeted accuracy prompt. Unlike the accuracy prompts used by Pennycook et al. (2020b, 2021), the prompt in this study explicitly highlighted that positive content is more likely to be misinformation, making the intervention more targeted than previous approaches. This targeted prompt significantly reduced adolescents’ intention to share misinformation, and the relatively large effect size suggests that targeted accuracy reminders can be particularly effective. Although the intervention showed some spillover, slightly reducing willingness to share true information, this reduction was numerically smaller than that for misinformation. The intervention can therefore be regarded as a ‘harm minimization’ strategy: by dampening the overall impulse to share, it indirectly reduces opportunities for misinformation to spread, particularly for the content with the greatest potential to go viral. Given the greater harm posed by misinformation, this trade-off appears acceptable. If applied in real-world social media environments, an accuracy prompt with an effect of this size could yield substantial societal benefits by curbing the dissemination of false information.

The key strengths of this characteristic-based accuracy prompt intervention lie in its simplicity, clarity, and scalability. It requires only a brief reminder about accuracy that prompts users to recognize specific features indicative of misinformation. This makes it easy to adapt to real-world social media contexts and to implement broadly across platforms. Furthermore, while the intervention informs users about which content features may signal misinformation, it preserves individual autonomy by leaving decisions about engagement and sharing to users. Characteristic-based accuracy prompts therefore represent a promising strategy for mitigating the spread of misinformation online.

Although promising interventions warrant investigation of their long-term effects, this study focused on immediate outcomes and did not include a follow-up, a common approach in existing intervention studies (Miri et al., 2024). This choice was driven primarily by the need to enhance ecological validity and control response bias. The participants were adolescents, a group particularly susceptible to evaluation apprehension and social desirability concerns (Jackson et al., 2002; Ollendick and Hirshfeld-Becker, 2002). We therefore implemented a strictly anonymous protocol to ensure authentic responses, which made subsequent tracking infeasible. Conducting longitudinal follow-ups with high school students outside the classroom setting also posed practical challenges. The study follows pioneering work in the field (Pennycook et al., 2020b) in holding that demonstrating a significant immediate effect is a necessary foundation for exploring long-term utility. The present study thus provides a rigorous initial evaluation of the intervention’s immediate effectiveness. We acknowledge, however, that the absence of follow-up data limits inferences about the persistence of the effects. Future research could overcome these obstacles by developing longitudinal designs in collaboration with educational institutions to examine the long-term sustainability of the intervention effects.

In summary, this study used experimental methods to investigate how emotional valence influences adolescents’ willingness to share misinformation, and tested the effectiveness of a characteristic-based accuracy prompt intervention. These findings extend current research on misinformation sharing, enrich our understanding of influencing factors, and provide a foundation for developing more effective intervention strategies.

However, several limitations remain. First, this study used social media headlines and measured sharing intentions rather than actual behavior. Previous research suggests three things: self-reported sharing intentions are associated with actual sharing behavior, responses differ little between people who click on headlines and those who do not, and headlines suffice for accuracy judgments. However, these findings are based on adults (Mosleh et al., 2020; Molina et al., 2021), and their applicability to adolescents remains unclear. Although methodologically similar studies have shown generalizability to real-world behavior (Pennycook et al., 2021; Sampat and Raj, 2022), adolescent-specific factors such as social desirability bias may limit such extrapolation. Future research should examine whether these results extend to other formats (e.g., full posts) in ecologically valid social media settings.

Second, although the characteristic-based accuracy prompt reduced adolescents’ willingness to share misinformation, its spillover effect on true information calls for mitigation strategies. Adolescents’ ability to discern information quality is still developing. Combining the prompt with complementary approaches may therefore reduce misinformation sharing more effectively while limiting the cost to true information. Such approaches include fact-checking, rumor debunking, social norm interventions that leverage adolescents’ sensitivity to peer evaluation, and media literacy education.

Third, the limited sample size constrains generalizability. The sample size was determined with G*Power, and the homogeneous selection of participants (active social media users who share content regularly) strengthened internal validity. Nevertheless, the modest sample may lack the statistical power to detect subtle effects, and its restricted representativeness means that external validity requires further verification.

Finally, the repeated-measures ANOVA used in this study provided a valid basis for testing the main effects, but this approach may not fully capture the hierarchical structure of the data. In designs with both participant- and item-related variability, mixed-effects models could offer additional insight for statistical inference by better accommodating multilevel data structures. Examples include linear mixed models or cumulative link mixed models with random effects for participants and items. Future studies may consider adopting these approaches.
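As an illustration, a linear mixed model for Experiment 1 with crossed random intercepts might be written as follows. The notation is hypothetical. Let y_{ij} denote participant i’s willingness-to-share rating for headline j, with valence (Val) and authenticity (Auth) coded as headline-level contrasts. The study itself did not fit this model.

```latex
\begin{aligned}
y_{ij} &= \beta_0 + \beta_1\,\mathrm{Val}_j + \beta_2\,\mathrm{Auth}_j
        + \beta_3\,(\mathrm{Val}_j \times \mathrm{Auth}_j)
        + u_{0i} + w_{0j} + \varepsilon_{ij},\\
u_{0i} &\sim \mathcal{N}(0, \sigma_u^2), \qquad
w_{0j} \sim \mathcal{N}(0, \sigma_w^2), \qquad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2).
\end{aligned}
```

Here u_{0i} and w_{0j} are random intercepts for participants and headlines. By-participant random slopes for valence and authenticity could be added where the data support them. For an ordinal rating scale, a cumulative link mixed model would instead model the log-odds of responding at or below each scale threshold, using the same fixed and random effects.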

5 Conclusion

This study provides preliminary evidence for the influence of emotional valence on adolescents’ willingness to share misinformation. High school students were significantly more inclined to share positive than negative content. Positive valence was associated with a more liberal response bias, leading participants to share positively framed information regardless of its authenticity. An accuracy prompt that highlights emotional valence as a potential signal of misinformation markedly reduced students’ sharing intentions. The large effect size suggests that the method is effective, and its brevity makes it potentially scalable. Accuracy prompt strategies targeting content characteristics therefore offer a promising avenue for mitigating the spread of misinformation among adolescents in social media contexts.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethics Committee of Shandong University of Aeronautics. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin.

Author contributions

ZW: Writing – original draft, Writing – review & editing. HJ: Investigation, Methodology, Writing – review & editing. SL: Writing – original draft.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Shandong Provincial Social Science Foundation (23DJYJ01). Doctoral Research Start-up Fund of Shandong University of Aeronautics (2025Y26). Binzhou Social Science Planning Project (25-SKGH-225).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. The authors used Jimeng AI, a professional AI image generation tool, to create Figures 1 and 3. The authors are solely responsible for the content of these AI-generated figures.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Acerbi A. (2019). Cognitive attraction and online misinformation. Palgrave Commun. 5:15. doi: 10.1057/s41599-019-0224-y

Altay S. Hacquin A. S. Mercier H. (2022). Why do so few people share fake news? It hurts their reputation. New Media Soc. 24, 1303–1324. doi: 10.1177/1461444820984843

Apuke O. D. Omar B. (2021). Fake news and COVID-19: modelling the predictors of fake news sharing among social media users. Telemat. Inform. 56:101475. doi: 10.1016/j.tele.2020.101475

Batailler C. Brannon S. M. Teas P. E. Gawronski B. (2022). A signal detection approach to understanding the identification of fake news. Perspect. Psychol. Sci. 17, 78–98. doi: 10.1177/174569162199424

Berger J. Milkman K. L. (2012). What makes online content viral? J. Mark. Res. 49, 192–205. doi: 10.1509/jmr.10.0353

Brady W. J. Gantman A. P. Van Bavel J. J. (2020). Attentional capture helps explain why moral and emotional content go viral. J. Exp. Psychol. Gen. 149, 746–756. doi: 10.1037/xge0000673

Cappella J. N. Kim H. S. Albarracín D. (2015). Selection and transmission processes for information in the emerging media environment: psychological motives and message characteristics. Media Psychol. 18, 396–424. doi: 10.1080/15213269.2014.941112

Casey B. J. Getz S. Galvan A. (2008). The adolescent brain. Dev. Rev. 28, 62–77. doi: 10.1016/j.dr.2007.08.003

Ceylan G. Anderson I. A. Wood W. (2023). Sharing of misinformation is habitual, not just lazy or biased. Proc. Natl. Acad. Sci. 120:e2216614120. doi: 10.1073/pnas.2216614120

Chen S. Xiao L. Mao J. (2021). Persuasion strategies of misinformation-containing posts in the social media. Inf. Process. Manag. 58:102665. doi: 10.1016/j.ipm.2021.102665

China Internet Network Information Center (2024). The 54th statistical report on China’s internet development. Available online at: https://www3.cnnic.cn/n4/2024/0829/c88-11065.html

China Youth Internet Association (2024). Survey report on internet literacy of Chinese teenagers.

Cohen J. (1988). Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates, Publishers.

Cyberspace Administration of China (2024). September 2024 national network report acceptance. Available online at: https://www.12377.cn/tzgg/2024/faa5ab85_web.html (Accessed October 12, 2024).

Forgas J. P. (2019). Happy believers and sad skeptics? Affective influences on gullibility. Curr. Dir. Psychol. Sci. 28, 306–313. doi: 10.1177/0963721419830319

Forgas J. P. Eich E. (2012). “Affective influences on cognition: mood congruence, mood dependence, and mood effects on processing strategies” in Handbook of psychology, ed. Weiner I. B., vol. 4, 2nd ed. (Wiley), 61–82.

Fredrickson B. L. (2001). The role of positive emotions in positive psychology: the broaden-and-build theory of positive emotions. Am. Psychol. 56, 218–226. doi: 10.1037/0003-066X.56.3.218

Galil K. Soffer G. (2011). Good news, bad news and rating announcements: an empirical investigation. J. Bank. Financ. 35, 3101–3119. doi: 10.1016/j.jbankfin.2011.04.013

Gawronski B. Ng N. L. Luke D. M. (2023). Truth sensitivity and partisan bias in responses to misinformation. J. Exp. Psychol. Gen. 152, 2205–2221. doi: 10.1037/xge0001399

Gupta A. Lamba H. Kumaraguru P. Joshi A. (2013). Faking Sandy: characterizing and identifying fake images on Twitter during Hurricane Sandy. In Proceedings of the 22nd International Conference on World Wide Web, 729–736.

Hartwig K. Biselli T. Schneider F. Reuter C. (2024). From adolescents’ eyes: assessing an indicator-based intervention to combat misinformation on TikTok. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, 1–20.

Igwebuike E. E. Chimuanya L. (2021). Legitimating falsehood in social media: a discourse analysis of political fake news. Discourse Commun. 15, 42–58. doi: 10.1177/1750481320974987

Ihm J. Kim E. (2018). The hidden side of news diffusion: understanding online news sharing as an interpersonal behavior. New Media Soc. 20, 4346–4365. doi: 10.1177/1461444818772064

Jackson T. Fritch A. Nagasaka T. Pope L. (2002). Toward explaining the association between shyness and loneliness: a path analysis with American college students. Soc. Behav. Pers. 30, 263–270. doi: 10.2224/sbp.2002.30.3.263

Keim M. E. Noji E. (2011). Emergent use of social media: a new age of opportunity for disaster resilience. Am. J. Disaster Med. 6, 47–54.

Keselman A. Arnott Smith C. Leroy G. Zeng-Treitler Q. (2021). Factors influencing willingness to share health misinformation videos on the internet: web-based survey. J. Med. Internet Res. 23:e30323. doi: 10.2196/30323

Kumar S. Huang B. Cox R. A. V. Srivastava J. (2021). An anatomical comparison of fake-news and trusted-news sharing patterns on Twitter. Comput. Math. Organ. Theory 27, 109–133. doi: 10.1007/s10588-020-09312-3

Lewandowsky S. Ecker U. K. H. Seifert C. M. Schwarz N. Cook J. (2012). Misinformation and its correction: continued influence and successful debiasing. Psychol. Sci. Public Interest 13, 106–131. doi: 10.1177/1529100612451018

Lim G. Perrault S. T. (2020). Perceptions of news sharing and fake news in Singapore. arXiv [Preprint]. Available online at: https://arxiv.org/abs/2010.07607v2 (Accessed April 8, 2024).

Macmillan N. A. Creelman C. D. (2004). Detection theory: a user’s guide. Mahwah, NJ; London: Taylor & Francis.

Miri A. Karimi-Shahanjarini A. Afshari M. Bashirian S. Tapak L. (2024). Understanding the features and effectiveness of randomized controlled trials in reducing COVID-19 misinformation: a systematic review. Health Educ. Res. 39, 495–506. doi: 10.1093/her/cyae036

Molina M. D. Sundar S. S. Rony M. M. U. Kim Y. (2021). Does clickbait actually attract more clicks? Three clickbait studies you must read. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, 1–19. ACM.

Mosleh M. Pennycook G. Rand D. G. (2020). Self-reported willingness to share political news articles in online surveys correlates with actual sharing on Twitter. PLoS One 15:e0228882. doi: 10.1371/journal.pone.0228882

Oh S. Syn S. Y. (2015). Motivations for sharing information and social support in social media: a comparative analysis of Facebook, Twitter, Delicious, YouTube, and Flickr. J. Assoc. Inf. Sci. Technol. 66, 2045–2060. doi: 10.1002/asi.23320

Ollendick T. H. Hirshfeld-Becker D. R. (2002). The developmental psychopathology of social anxiety disorder. Biol. Psychiatry 51, 44–58. doi: 10.1016/s0006-3223(01)01305-1

Papapicco C. Lamanna I. D’Errico F. (2022). Adolescents’ vulnerability to fake news and to racial hoaxes: a qualitative analysis on Italian sample. Multimodal Technol. Interact. 6:20. doi: 10.3390/mti6030020

Pennycook G. Bear A. Collins E. T. Rand D. G. (2020a). The implied truth effect: attaching warnings to a subset of fake news headlines increases perceived accuracy of headlines without warnings. Manag. Sci. 66, 4944–4957. doi: 10.1287/mnsc.2019.3478

Pennycook G. Epstein Z. Mosleh M. Arechar A. A. Eckles D. Rand D. G. (2021). Shifting attention to accuracy can reduce misinformation online. Nature 592, 590–595. doi: 10.1038/s41586-021-03344-2

Pennycook G. McPhetres J. Zhang Y. Lu J. G. Rand D. G. (2020b). Fighting COVID-19 misinformation on social media: experimental evidence for a scalable accuracy-nudge intervention. Psychol. Sci. 31, 770–780. doi: 10.1177/0956797620939054

Pennycook G. Rand D. G. (2021). The psychology of fake news. Trends Cogn. Sci. 25, 388–402. doi: 10.1016/j.tics.2021.02.007

Radecki R. P. Spiegel R. S. (2020). Avoiding disinformation traps in COVID-19: July 2020 annals of emergency medicine journal club. Ann. Emerg. Med. 76, 111–112. doi: 10.1016/j.annemergmed.2020.05.002

Salgado S. Bobba G. (2019). News on events and social media: a comparative analysis of Facebook users’ reactions. Journal. Stud. 20, 2258–2276. doi: 10.1080/1461670X.2018.1546584

Sampat B. Raj S. (2022). Fake or real news? Understanding the gratifications and personality traits of individuals sharing fake news on social media platforms. Aslib J. Inf. Manag. 74, 840–876. doi: 10.1108/AJIM-09-2021-0251

Scholz C. Jovanova M. Baek E. C. Falk E. B. Levy I. (2020). Media content sharing as a value-based decision. Curr. Opin. Psychol. 31, 83–88. doi: 10.1016/j.copsyc.2019.09.004

Schubatzky T. Haagen-Schützenhöfer C. (2023). Inoculating adolescents against climate change misinformation. In Fostering scientific citizenship in an uncertain world: selected papers from the ESERA 2021 conference, 275–292. Cham: Springer International Publishing.

Sherman L. E. Hernandez L. M. Greenfield P. M. Dapretto M. (2018). What the brain ‘likes’: neural correlates of providing feedback on social media. Soc. Cogn. Affect. Neurosci. 13, 699–707. doi: 10.1093/scan/nsy051

Song X. Zhao Y. Song S. Dai W. (2019). The role of information cues on users’ perceived credibility of online health rumors. Proc. Assoc. Inf. Sci. Technol. 56, 760–761. doi: 10.1002/pra2.102

Soroka S. Fournier P. Nir L. (2019). Cross-national evidence of a negativity bias in psychophysiological reactions to news. Proc. Natl. Acad. Sci. 116, 18888–18892. doi: 10.1073/pnas.1908369116

Soroka S. McAdams S. (2015). News, politics, and negativity. Polit. Commun. 32, 1–22. doi: 10.1080/10584609.2014.881942

Spiro E. S. Fitzhugh S. Sutton J. Pierski N. Greczek M. Butts C. T. (2012). Rumoring during extreme events: a case study of Deepwater Horizon 2010. In Proceedings of the 4th Annual ACM Web Science Conference, 275–283.

Stanislav H. Todorov N. (1999). Calculation of signal detection theory measures. Behav. Res. Methods Instrum. Comput. 31, 137–149. doi: 10.3758/bf03207704

Steinberg L. (2010). A dual systems model of adolescent risk-taking. Dev. Psychobiol. 52, 216–224. doi: 10.1002/dev.20445

van Duijvenvoorde A. C. K. Jansen B. R. J. Visser I. Huizenga H. M. (2010). Affective and cognitive decision-making in adolescents. Dev. Neuropsychol. 35, 539–554. doi: 10.1080/87565641.2010.494749

Vogel L. (2017). Viral misinformation threatens public health. Can. Med. Assoc. J. 189, E1432–E1433. doi: 10.1503/cmaj.109-5526

Vosoughi S. Roy D. Aral S. (2018). The spread of true and false news online. Science 359, 1146–1151. doi: 10.1126/science.aap9559

Watson D. Friend R. (1969). Measurement of social-evaluative anxiety. J. Consult. Clin. Psychol. 33, 448–457. doi: 10.1037/h0027806

Wood W. Rünger D. (2016). Psychology of habit. Annu. Rev. Psychol. 67, 289–314. doi: 10.1146/annurev-psych-122414-033417

Wu S. Lin C. S. Lin T. C. (2006). Exploring knowledge sharing in virtual teams: a social exchange theory perspective. In Proceedings of the 39th Annual Hawaii International Conference on System Sciences (HICSS’06), vol. 1, 26b. IEEE.


Keywords

emotional valence, misinformation, adolescents, social media, information sharing, accuracy prompt

Citation

Wang Z, Jin H and Li S (2025) Good news or bad news? The impact of information valence on high school students’ willingness to share misinformation and the effectiveness of a targeted accuracy prompt. Front. Psychol. 16:1664890. doi: 10.3389/fpsyg.2025.1664890

Received

13 July 2025

Accepted

03 September 2025

Published

28 October 2025

Volume

16 - 2025

Edited by

Rosanna E. Guadagno, University of Oulu, Finland

Reviewed by

Lidia Scifo, Libera Università Maria SS. Assunta, Italy

Arman Miri, Hamadan University of Medical Sciences, Iran


Copyright

© 2025 Wang, Jin and Li.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhichao Wang, 772144279@qq.com
