- 1Department of Health Policy and Management, Columbia University, New York City, NY, United States

- 2Centre for Business Research, Judge Business School, University of Cambridge, Cambridge, United Kingdom

- 3Centre for Mental Health and Safety, University of Manchester, Manchester, United Kingdom

- 4Faculty of Psychology and Neuroscience, Maastricht University, Maastricht, Netherlands

- 5RAND Europe, Cambridge, United Kingdom

- 6MRC Cognition and Brain Sciences Unit, University of Cambridge, Cambridge, United Kingdom

- 7Department of Psychological Sciences, Birkbeck College, University of London, London, United Kingdom

- 8Center for Open Science, Charlottesville, VA, United States

- 9Psychology Department, Institute of Psychiatry, King's College London, London, United Kingdom

Editorial on the Research Topic

Advancing Methods for Psychological Assessment Across Borders

A New Generation of Psychological and Behavioral Scientists

The nascent push for greater transparency and reproducibility in psychological and behavioral sciences has created a clear call for better standards for research methods across borders and languages. Exponential growth in computing power, access to secondary data, and widespread interest in new statistical methods have ensured a new generation of behavioral researchers will have opportunities for discovery and practice on a level without precedent. As of publication, more than 200 institutions in over 100 countries globally have launched behavioral policy units, and there is immeasurable interest in applications from across specialty areas in psychology. With these trends, there is an undeniable and growing demand for improved methods for assessment in scientific study, industry, and policy.

To maintain progress, it is critical that the next generation of researchers have the awareness, training, and practice for conducting high-quality research in psychological assessment, particularly when studying across populations, borders, and languages. This Editorial summarizes key insights for the Research Topic Advancing methods for psychological assessment across borders, followed by general guidance for early career researchers working in multiple languages and countries, or when adapting existing methods for new settings and populations.

Edition Insights

This Research Topic was launched to support professional development of students and early career behavioral scientists in the Junior Researcher Programme, an initiative that supports six multi-country psychological research projects annually. Senior academics were also invited to contribute manuscripts of their own multinational studies. Mirroring the field generally, there is considerable diversity in the 19 published manuscripts, with protocols and early-stage findings in education, health, development, technology, personality, data privacy, social media, organizational leadership, and financial decision-making.

The first wave of papers covered a variety of techniques for testing existing paradigms with greater cross-cultural appreciation. This included the development of a multilingual app for assessing quality of life, an expanded measurement for standardizing cognitive ability scores across Europe, new approaches to personality measures to link with cognitive ability, measurement of how teachers support students emotionally, psychological constructs and accuracy of subjective scoring in gymnastics judges, and a cross-validation of an empathy scale. Subsequent papers covered new approaches to assessment across mental health and decision-making. These included learning methods, the dark triad of personality, moral behavior, and psychopathy from a neuroscience communication lens, the impact of music on the well-being of elderly people, adolescent well-being, validation of the PHQ-9 in Norwegian, and assessing videos as an intervention tool on social media. In the final wave, the focus shifted toward narrower assessment of behaviors, such as the influence of social norms on eating behavior during pregnancy, parental decisions to have children vaccinated, Facebook use and preference for privacy options, how identity leadership builds organizational commitment, and the decision to donate to charity. Such diversity in papers highlights the need for early career researchers to have robust training and experience in responsible, replicable scientific methods.

This Research Topic is primarily geared toward Protocols, meaning there is less in the way of new evidence to summarize. Instead, we reflect on the approaches, challenges, reviewer feedback, and general direction of these manuscripts as a means of guiding the next wave of junior researchers.

Guidance for Early Career Researchers

Across these Protocols, a number of themes emerge in attempts to conduct psychological research across borders, for instance:

1. Translation into a new language is not the only adaptation existing measurements need to function in new settings—there is no one clear answer on how best to conduct comparisons in all cases (i.e., using the items that work well enough in all settings vs. using the items that best fit each location);

2. When designing studies to be carried out in multiple countries, particularly with limited resources, it may be more useful to focus on common, narrowly defined groups in each country than to attempt to be representative of all populations in all countries tested;

3. Hungry for data: while positive in terms of how it reflects the enthusiasm of the next generation of behavioral scientists, the omnipresent wish to add more items, recruit more participants, and do more rounds of collection may serve as a barrier to producing high-powered studies on clear questions;

4. Highly cited papers that appear in textbooks over several generations of students may have a sample size (power) that would not even pass as a pilot study under present research standards;

5. WEIRD (Western, educated, industrialized, rich, and democratic) populations are easier to access; studying non-WEIRD populations may offer substantially more insight than simply improving existing instruments in tested populations;

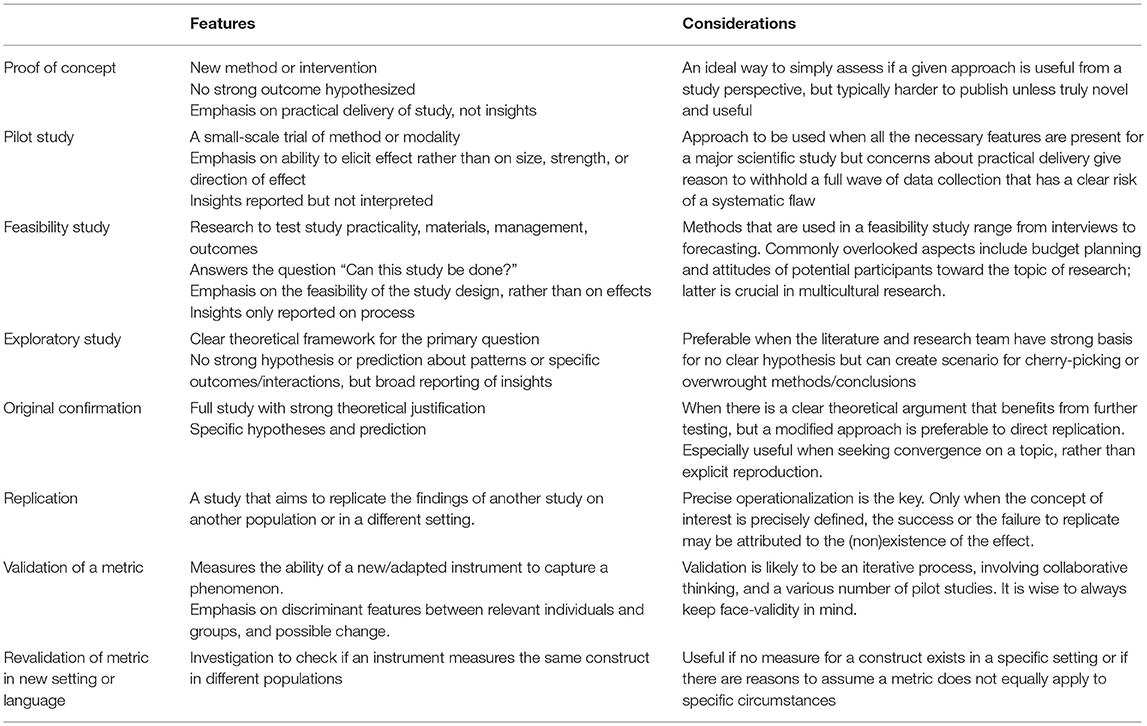

6. Knowing how to position a study for eventual publication is a major challenge, especially when it uses extensive assessments, and can slow the development of new insights when a key feature is the adaptation into multiple languages. This is particularly relevant for junior researchers (see Table 1).

Recommendations

While difficult to provide uniform guidance for all international, multilingual, and other multi-site studies in psychological sciences, there are some common steps that can be taken to ensure a smoother process with greater possibility of meaningful insights, while avoiding common pitfalls. We highlight some of these here:

Focus the Content on What Matters

Empirical papers usually revolve around a primary research question. All text written in the build-up to the research question should help the reader understand why this question is important. Although researchers often wish to be as thorough and detailed as possible, too much information creates confusion and detracts from the primary message. Be concise and stay on point. Unless absolutely necessary and directly relevant, do not go back to Freud and Jung, and remove tangents or overstated contingencies. Focus on the critical assumptions and make sure no reader has to guess what the question or hypothesis is.

Utilize the Open Science Framework

At the time of writing, the mandate for researchers to ensure transparency and reproducibility in research is young but building. To meet these standards, there is a broad range of resources, tools, guidelines, and examples available through the Open Science Framework (osf.io). Early career researchers are encouraged to manage project materials, such as questionnaires, instructions, analysis scripts, and datasets, in an OSF project (see Supplement 1).

Pre-register Studies

Pre-registration has been suggested as an important tool to combat publication bias and questionable research practices and to improve the transparency of the research process (Munafò et al., 2017). Pre-registrations are time-stamped documents specifying all plans for methods, data collection, and analysis, produced prior to conducting the study. Such documents are expected to settle crucial decisions of the research process a priori and to make initial hypotheses transparent (Nosek et al., 2018). There may be instances where simulated or pilot data are needed to aid certain aspects of methodological decision-making, but this should be justified if done. Another alternative is to randomly split a dataset in order to conduct exploratory analyses on one part before confirming them with the held-out, unanalyzed part (Anderson and Magruder, 2017; Supplement 2).
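The split-sample approach can be illustrated with a minimal Python sketch. It is not taken from Anderson and Magruder (2017) or from the Supplement; the function name `split_sample`, the 50/50 split, the seed, and the participant IDs are all assumptions made for illustration. The key point is that the split is random, reproducible, and fixed before any analysis is run.

```python
import random


def split_sample(records, explore_fraction=0.5, seed=2019):
    """Randomly partition records into an exploratory and a held-out set.

    Hypothetical helper: the fraction and seed are illustrative defaults
    and would normally be stated in the pre-registration itself.
    """
    if not 0.0 < explore_fraction < 1.0:
        raise ValueError("explore_fraction must be strictly between 0 and 1")
    rng = random.Random(seed)   # fixed seed so the split can be reproduced
    shuffled = list(records)    # copy, so the caller's data stay untouched
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * explore_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical example: 200 participant IDs.
participant_ids = list(range(1, 201))
explore_ids, confirm_ids = split_sample(participant_ids)

# Exploratory analyses use only explore_ids; confirm_ids stay unanalyzed
# until the confirmatory hypotheses have been registered.
print(len(explore_ids), len(confirm_ids))
```

In practice the held-out half would be stored separately (or kept by a colleague) so that it cannot be inspected during the exploratory phase.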

Pre-registrations must eventually be made public in order to address underreporting biases, but they may be kept private under embargo for various lengths of time (e.g., up to 4 years on the OSF). They may also be submitted for peer review as Registered Reports prior to data collection, so that valuable feedback can be obtained ahead of project execution, when early career researchers are most likely to benefit from it. Researchers should also adhere to institutional review board (IRB) guidelines and include relevant approvals and ethical guidance when they pre-register.

Replicate Before You Explore

Registered reports reviewed and accepted prior to data collection provide incentives for researchers to conduct valuable replication studies. Ambitious early career researchers may initially be more drawn to the thought of testing a completely new idea of their own. However, in attempting to build new hypotheses based on theories that may not have substantial validation, the researcher may find themselves with a lot of unpublishable material. Instead, consider first replicating a critical finding that produces the assumptions for your own work, and see if it holds. If it does, then finding a new avenue to explore can be possible. If it does not, then you have ample opportunity to discover unexpected moderating variables. Either way, you have now made an important contribution to the field while concurrently allowing yourself both confirmatory and exploratory hypotheses to test. Using the Registered Report model for this first step can be crucial, as “successful” replications are often deemed “too boring” to publish, whereas “unsuccessful” replications may be subject to more intense scrutiny than warranted and face obstacles to publication.

Publish Null Findings

We optimize the possibility of finding an effect through power calculations to inform sample sizes, but sometimes our results turn out to be null. Although often less desirable, such findings are equally relevant and should not be overlooked by researchers or publishers. Data syntheses and systematic reviews rely on publications of both statistically significant effects and null findings to yield accurate and generalizable conclusions. Not publishing null findings therefore results in a skewed representation of reality. Null findings can also inform future studies by providing context for considering potential confounds and moderators. So whether the outcome is to reject or fail to reject the null hypothesis, start with publication in mind.
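To make the skew concrete, the following is a small simulation sketch in Python. It uses simulated data only, and every number in it (a true standardized effect of 0.2, 30 participants per group, 2,000 studies, a known standard deviation of 1, and a simple z-test) is an assumption chosen for illustration rather than anything drawn from the manuscripts in this Research Topic. It compares the average effect estimate across all simulated studies with the average across only those that reached significance.

```python
import random
from statistics import NormalDist, mean


def simulate_studies(true_d=0.2, n_per_group=30, n_studies=2000,
                     alpha=0.05, seed=1):
    """Return (mean estimate over all studies, mean over significant ones).

    Illustrative simulation: two groups with unit SD, compared with a
    two-sided z-test on the difference in means.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = (2 / n_per_group) ** 0.5  # SE of the mean difference when SD = 1
    all_estimates, significant_only = [], []
    for _ in range(n_studies):
        treatment = [rng.gauss(true_d, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        d_hat = mean(treatment) - mean(control)
        all_estimates.append(d_hat)
        if abs(d_hat / se) > z_crit:  # the only studies that get "published"
            significant_only.append(d_hat)
    return mean(all_estimates), mean(significant_only)


all_mean, published_mean = simulate_studies()
print(f"all studies: {all_mean:.2f}, significant only: {published_mean:.2f}")
```

Under these assumptions the average over all studies stays close to the true effect, whereas the average over significant studies alone is substantially larger, which is the distortion a synthesis inherits when null findings go unpublished.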

Apply for Ethical Approval Early

All researchers should seek guidelines for obtaining the necessary ethical or IRB clearance as early as possible. At a minimum, review should be obtained at the institution of the principal investigator. When testing in multiple settings, approval may need to be sought from additional boards, such as schools (for collecting student data) or organizations (for collecting data on a centralized platform). Ethical reviews will usually include comments on methods and legal documents such as privacy notices, so earlier review is better for finalizing research plans, particularly the pre-registration. No two IRBs are guaranteed to function in the same way, but most will focus more on protecting participants in the study and the institution, and less on getting you a high-impact publication. We advise that you seek methodological input from trusted experts before and after ethical review to ensure the highest quality study. Finally, consider aspects of data sharing (Meyer, 2018) and overall project transparency in your IRB applications in order to avoid hurdles to transparency later on (Supplement 3).

Use the Oxford Comma

Please.

Challenges

Put bluntly: the goalposts have changed in research. While concerns about replication and sufficiently powered studies have raised standards across the behavioral sciences, they have also created unprecedented challenges for early career researchers. For example, classic rules of thumb for testing new surveys are no longer acceptable and should be replaced with systematic power calculations preceding data collection. While this will unambiguously improve scientific quality, it also requires both the statistical knowledge to produce those estimates and the resources to meet those participation thresholds. Likewise, while we are fully in support of pre-registering studies, journals note the difficulty of finding reviewers willing to engage with them, which can slow the completion of time-limited research projects conducted by students.
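As a rough illustration of what such a power calculation involves, the sketch below estimates the sample size per group for a two-sided comparison of two independent group means. It uses the standard normal approximation, n = 2((z_{1-α/2} + z_{power}) / d)², where d is the expected standardized effect size. The function name `n_per_group` and the default values are assumptions for this example, and dedicated power-analysis software based on the t distribution will typically return slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants needed per group (normal approximation).

    Hypothetical helper for a two-sided test comparing two independent
    group means with equal group sizes and equal variances.
    """
    if effect_size_d <= 0:
        raise ValueError("effect_size_d must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie strictly between 0 and 1")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


print(n_per_group(0.5))  # medium effect: 63 per group
print(n_per_group(0.2))  # small effect: 393 per group
```

The jump from 63 to 393 participants per group when the expected effect shrinks from 0.5 to 0.2 shows why the resource demands noted above weigh so heavily on student projects.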

Current standards developed over time and were not used by previous generations of researchers, meaning new behavioral scientists are being trained by academics who were not subject to them at the same stage in their careers. Even when these new approaches become standard in university lectures, alternative learning resources, hands-on experience with research, and peer-learning are crucial for the development of junior researchers.

Conclusions

Take every word here as a positive. With new challenges come new opportunities, and the next wave of behavioral researchers will have a tremendous impact on society. The earlier the new standards in the field can be applied across all studies, the better this is likely to be for the advancement of the field and for public perception of the work. As psychological and behavioral scientists cover all domains of life for individuals and societies, this will surely promote the greatest impact for the well-being of the science and of populations. Your professional ancestors are cheering for you.

Author Contributions

KR directed, drafted, framed, edited, proofed, and finalized the entire paper. All other authors contributed equally by proofing, editing, and making direct contributions to various sections of the text.

Conflict of Interest Statement

DM is an employee of the Center for Open Science, which builds and maintains the free and open source platform, OSF.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The handling editor is currently co-organizing a Research Topic with the author KR, and confirms the absence of any other collaboration.

Acknowledgments

We are grateful to many contributors to the Junior Researcher Programme between 2015 and 2018: Augustin Mutak, Maja Vovko, Thomas Lind Andersen, Ondrej Kacha, Daphnee Chabal, Irina Camps Ortueta, Eduardo Garcia Garzon, Lea Jakob, Guillermo Varela, Felicia Sundstrom, Irina Gioaba, Charles Jacob, Richard Griffith, Brian Nosek, Gabriela Roman, Agnieszka Walczak, and Kristina Egumenovska. Finally, we sincerely thank Dr. Pietro Cipresso for diligence, selflessness, enthusiasm, and relentlessness at supporting our initiative.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2019.00503/full#supplementary-material

References

Anderson, M., and Magruder, J. (2017). Split-Sample Strategies for Avoiding False Discoveries (No. w23544). Cambridge, MA: National Bureau of Economic Research.

Meyer, M. N. (2018). Practical tips for ethical data sharing. Adv. Methods Pract. Psychol. Sci. 1, 131–144. doi: 10.1177/2515245917747656

Munafò, M. R., Nosek, B. A., Bishop, D. V., Button, K. S., Chambers, C. D., Du Sert, N. P., et al. (2017). A manifesto for reproducible science. Nat. Hum. Behav. 1:0021. doi: 10.1038/s41562-016-0021

Keywords: psychological methods, reproducibility, multinational research, replication, early career researchers

Citation: Ruggeri K, Bojanić L, van Bokhorst L, Jarke H, Mareva S, Ojinaga-Alfageme O, Mellor DT and Norton S (2019) Editorial: Advancing Methods for Psychological Assessment Across Borders. Front. Psychol. 10:503. doi: 10.3389/fpsyg.2019.00503

Received: 18 January 2019; Accepted: 20 February 2019; Published: 19 March 2019.

Edited and reviewed by: Pietro Cipresso, Istituto Auxologico Italiano (IRCCS), Italy

Copyright © 2019 Ruggeri, Bojanić, van Bokhorst, Jarke, Mareva, Ojinaga-Alfageme, Mellor and Norton. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kai Ruggeri, kai.ruggeri@columbia.edu
