- MRC Cognition and Brain Sciences Unit, University of Cambridge, Cambridge, United Kingdom

Cognitive neuroscience increasingly relies on complex data analysis methods. Researchers in this field come from highly diverse scientific backgrounds, such as psychology, engineering, and medicine. This poses challenges with respect to the acquisition of appropriate scientific computing and data analysis skills, as well as to communication among researchers with different knowledge and skill sets. Are researchers in cognitive neuroscience adequately equipped to address these challenges? Here, we present evidence from an online survey of methods skills. Respondents (n = 307) mainly comprised students and post-doctoral researchers working in the cognitive neurosciences. Multiple-choice questions addressed a variety of fundamental aspects of neuroimaging data analysis, such as signal analysis, linear algebra, and statistics. We analyzed performance with respect to the following factors: undergraduate degree (grouped into Psychology, Methods, and Biology), current researcher status (undergraduate student, PhD student, and post-doctoral researcher), gender, and self-rated expertise level. Overall accuracy was 72%. Not surprisingly, the Methods group performed best (87%), followed by Biology (73%) and Psychology (66%). Accuracy increased from undergraduate (59%) to PhD (74%) level, but not from PhD to post-doctoral (74%) level. The difference in performance between the Methods and non-methods (Psychology/Biology) groups was especially striking for questions related to signal analysis and linear algebra, two areas particularly relevant to neuroimaging research. Self-rated methods expertise was not strongly predictive of performance. The majority of respondents (93%) indicated that they would like to receive at least some additional training on the topics covered in this survey.
In conclusion, methods skills among junior researchers in cognitive neuroscience can be improved, researchers are aware of this, and there is strong demand for more skills-oriented training opportunities. We hope that this survey will provide an empirical basis for the development of bespoke skills-oriented training programs in cognitive neuroscience institutions, and we also provide practical suggestions on how to achieve this.

Introduction

Cognitive neuroscientists use physical measurements of neural activity and behavior to study information processing in mind and brain. The development of novel experimental methodology has been a strong driving force behind psychological research in general (Greenwald, 2012). In recent decades, neuroimaging techniques such as functional magnetic resonance imaging (fMRI) and electro-/magnetoencephalography (EEG/MEG) have become standard tools for cognitive neuroscientists. These techniques produce large amounts of data reflecting changes in metabolism or electrical activity in the brain (Huettel et al., 2009; Supek and Aine, 2014). Neuroimaging data can be analyzed in a myriad of ways, involving basic pre-processing (e.g., filtering and artifact correction), model fitting (e.g., general linear models, structural equation modeling), and statistical analysis (e.g., t-tests, permutation tests, Bayes factors) (see footnote 1). Researchers involved in this work come from diverse backgrounds in psychology, cognitive science, medicine, biology, physiology, engineering, computer science, physics, mathematics, and other fields. This poses challenges with respect to the acquisition of appropriate scientific computing and data analysis skills, as well as to communication among researchers from very different scientific backgrounds (footnote 2).

A number of neuroimaging software packages offer standardized analysis pipelines for certain types of analysis problems (e.g., footnotes 3 and 4), such as standard event-related fMRI or resting-state data, or conventional event-related potentials. Does this mean that empirical scientists no longer have to understand how their analysis pipelines work? There are several reasons why this is not the case. First, few experiments are ever purely “standard” or “conventional.” A new research question, or a small change in a conventional experimental paradigm, may require a significant change to the analysis pipeline or parameter choices. The application of a novel, non-established, high-level analysis method is often the highlight of a study, e.g., in the case of multivariate pattern information analysis or graph-theoretical methods. It may not be possible to apply these methods in the same way as in similar previous studies, because there aren’t any. Second, even for relatively standard analysis problems, there is often more than one solution, depending on how many people you ask. It is not uncommon to ask the nearest methods expert for advice, but it can be frustrating for a beginner to hear exactly the opposite advice from the next expert, especially if that expert is the reviewer of their paper or grant. One contributing factor to the recently reported “replication crisis” in cognitive science may be the large uncertainty in analysis procedures (Ioannidis, 2005; Open Science Collaboration, 2015; Millman et al., 2018). Such uncertainty may lead to unintended or deliberate explorations of the parameter space (data mining or “fishing”). Even apparently simple issues relating to data analysis, such as “double dipping” or how to infer statistical interactions, are still discussed in high-ranking publications (Kriegeskorte et al., 2009; Nichols and Poline, 2009; Vul et al., 2009; Nieuwenhuis et al., 2011).
Good research practice should include discussing any uncertainties in parameter choices and the implications these may have for the interpretation of the findings or for future research. Third, even researchers who do not employ high-level analysis methods or who are not directly involved in neuroimaging projects need a certain level of understanding of data analysis methods in order to interpret the literature. The inferential chain from measurement through analysis to models of information processing in mind and brain can be complex (Henson, 2005; Page, 2006; Poldrack, 2006; Carandini, 2012; Coltheart, 2013; Mather et al., 2013). Even a ubiquitous term such as “brain activation” can mean very different things in different contexts (Singh, 2012). The same holds for popular concepts such as “connectivity,” “oscillations,” “pattern information,” and so forth (Friston, 2011; Haxby, 2012; Bastos and Schoffelen, 2015; Diedrichsen and Kriegeskorte, 2017).

Methods skills are relevant not only to data analysis, but also to theory development. Interest in computational modeling of cognition in combination with neuroimaging has grown over the last decade or so (Friston and Dolan, 2010; Kriegeskorte, 2015; Turner et al., 2015). It is often said that the brain is the most complex information processing system we know. It would be puzzling if studying this complex information processing system required less methods skill than investigating “simple” radios or microprocessors (Lazebnik, 2002; Jonas and Kording, 2017). If cognitive neuroscience is to become a quantitative natural science, then cognitive neuroscientists need quantitative skills.

It will not be difficult to convince most cognitive neuroscientists that good methods skills are advantageous and will make their research more efficient. The greater their skill set, the greater their options and opportunities. At the same time, methods skills are of course not the only skills required in cognitive neuroscience, raising the question of how much time a researcher should spend honing them relative to time spent on cognitive science, computational modeling, physiology, anatomy, etc. There cannot be a one-size-fits-all answer, as it depends on the goals and research environment of the individual researcher. However, in order to address this question, one can try to break the bigger problem down into several smaller ones.

In a first step, we can find out whether there is a problem at all, i.e., whether cognitive neuroscientists already have the basic skills required to understand the most common neuroimaging analysis methods. If there is room for improvement, then in a second step we can determine specifically where improvement is most needed, e.g., for which type of researcher and which type of methods skills. And finally, once we know where improvement is needed, we can find out whether researchers are aware of this and what opportunities there are to do something about it.

Surprisingly, there is currently no empirical basis for answering these questions. Because researchers enter the field from many different backgrounds, there is no common basic training for all researchers. Students from an engineering or physics background will have advanced skills in physical measurement methods, mathematics, signal processing, and scientific computing, but may lack a background in statistics (as well as cognitive science and behavioral experimental techniques, which are not within the scope of this survey). Students of medicine and biology may have had basic training in physics and biostatistics, but not in scientific computing and signal processing. Students in psychology and social sciences usually have training in statistical methods, but not in signal processing and physical measurement methods. It is therefore likely that many of these researchers, whatever their backgrounds, start their careers in cognitive neuroscience with an incomplete methods skill set. Neuroimaging training programs vary considerably across institutions. It is therefore unclear to what degree junior researchers acquire appropriate methods skills for cognitive neuroscience at different stages of their academic development.

Here, we evaluated the level of methods skills for researchers at different stages of their careers, mostly at post-graduate and post-doctoral level. We present results from an online survey of methods skills from 307 participants, mostly students and post-doctoral researchers working in the cognitive neurosciences. Questions in the survey covered basic aspects of data acquisition and analysis in the cognitive neurosciences, with a focus on neuroimaging research. We report results broken down by undergraduate degree (grouped into Psychology, Methods, and Biology), current researcher status (undergraduate student, PhD student, and post-doctoral researcher), and gender. The purpose of this survey was to estimate the current level of methods skills in the field as a starting point for an evidence-based discussion of methods skills in cognitive neuroscience. We hope that this will usefully inform the development of future skills-oriented training opportunities, and we provide practical suggestions on how to achieve this.

Materials and Methods

Participants

The survey was set up on the SurveyMonkey website (footnote 5; San Mateo, United States) and was advertised via neuroimaging software mailing lists and posts on the MRC Cognition and Brain Sciences Unit’s Wiki pages. It was first advertised in January 2015, and most responses (about 90%) were collected in 2015. Participation was voluntary, and no monetary or other material reward was offered. Participants were informed about the purpose and nature of the survey, e.g., that it should take around 10–15 min of their time. They were asked not to take part more than once, and not to use the internet or books to answer the questions. The study was approved by the Cambridge Psychology Research Ethics Committee.

The final analysis included 307 respondents, selected from all respondents as follows. Of all respondents, 578 gave consent for their data to be used for analysis (by ticking a box). Among those, we only analyzed data from participants who provided a response to every methods-related question (i.e., a correct, error, or “no idea” response). This resulted in 322 respondents. Another 214 respondents skipped all methods-related questions. Although it would have been interesting to analyze the demographics of these “skippers,” many of them also skipped most of the demographic questions. For example, only 66 of them disclosed their undergraduate degree (37 Psychology, 14 Methods, and 8 Biology).

Given the wide range of undergraduate degrees among our respondents, and the small number of respondents for some of these degrees, we grouped them into three broader categories. “Psychology” contained those who responded that their undergraduate degrees were in “psychology,” “cognitive science,” or “cognitive neuroscience.” “Methods” included those who responded “physics,” “math,” “computer science,” or “biomedical engineering.” “Biology” comprised those who responded “biology” or “medicine.” For 39 respondents who indicated “Other” (e.g., “artificial intelligence,” “physiology,” and “linguistics”), an appropriate group assignment was made manually by the author. For another 17 respondents, no such assignment could be made (e.g., musicology), and they were removed from the analysis, reducing the number of respondents in the final analysis to 307.
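The grouping rule described above can be sketched as a lookup table. This is a minimal Python illustration, not the author's script (the actual R scripts are referenced in footnote 8). The function name `assign_group` is hypothetical, and because the manual assignments of “Other” responses are not reported, the entries in `MANUAL_ASSIGNMENTS` are invented placeholders.

```python
from typing import Optional

# Keyword-to-group mapping taken from the grouping rules in the text.
DEGREE_GROUPS = {
    "psychology": "Psychology",
    "cognitive science": "Psychology",
    "cognitive neuroscience": "Psychology",
    "physics": "Methods",
    "math": "Methods",
    "computer science": "Methods",
    "biomedical engineering": "Methods",
    "biology": "Biology",
    "medicine": "Biology",
}

# HYPOTHETICAL stand-ins for the author's manual assignment of "Other"
# responses; the real assignments are not reported in the text.
MANUAL_ASSIGNMENTS = {
    "artificial intelligence": "Methods",
    "physiology": "Biology",
    "linguistics": "Psychology",
}


def assign_group(degree: str) -> Optional[str]:
    """Return 'Psychology', 'Methods' or 'Biology', or None if unassignable."""
    key = degree.strip().lower()
    if key in DEGREE_GROUPS:
        return DEGREE_GROUPS[key]
    # Degrees in neither table (e.g. musicology) return None and are excluded.
    return MANUAL_ASSIGNMENTS.get(key)


# Invented example responses, for illustration only.
degrees = ["Psychology", "Physics", "Medicine", "Musicology", "Linguistics"]
groups = [assign_group(d) for d in degrees]
kept = [g for g in groups if g is not None]
print(groups)  # ['Psychology', 'Methods', 'Biology', None, 'Psychology']
```

Keeping the keyword rules and the manual decisions in separate tables makes it easy to report how many respondents were assigned by each route.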

Forty-eight percent of the remaining respondents were located in the United Kingdom at the time of the survey (n = 145, 83 of them in Cambridge), followed by other European countries (n = 68, 22%), the United States of America (n = 33), Canada (n = 5), Asia (n = 12), Australia (n = 4), and South America (n = 3). Another 35 respondents did not provide clear information about their location.

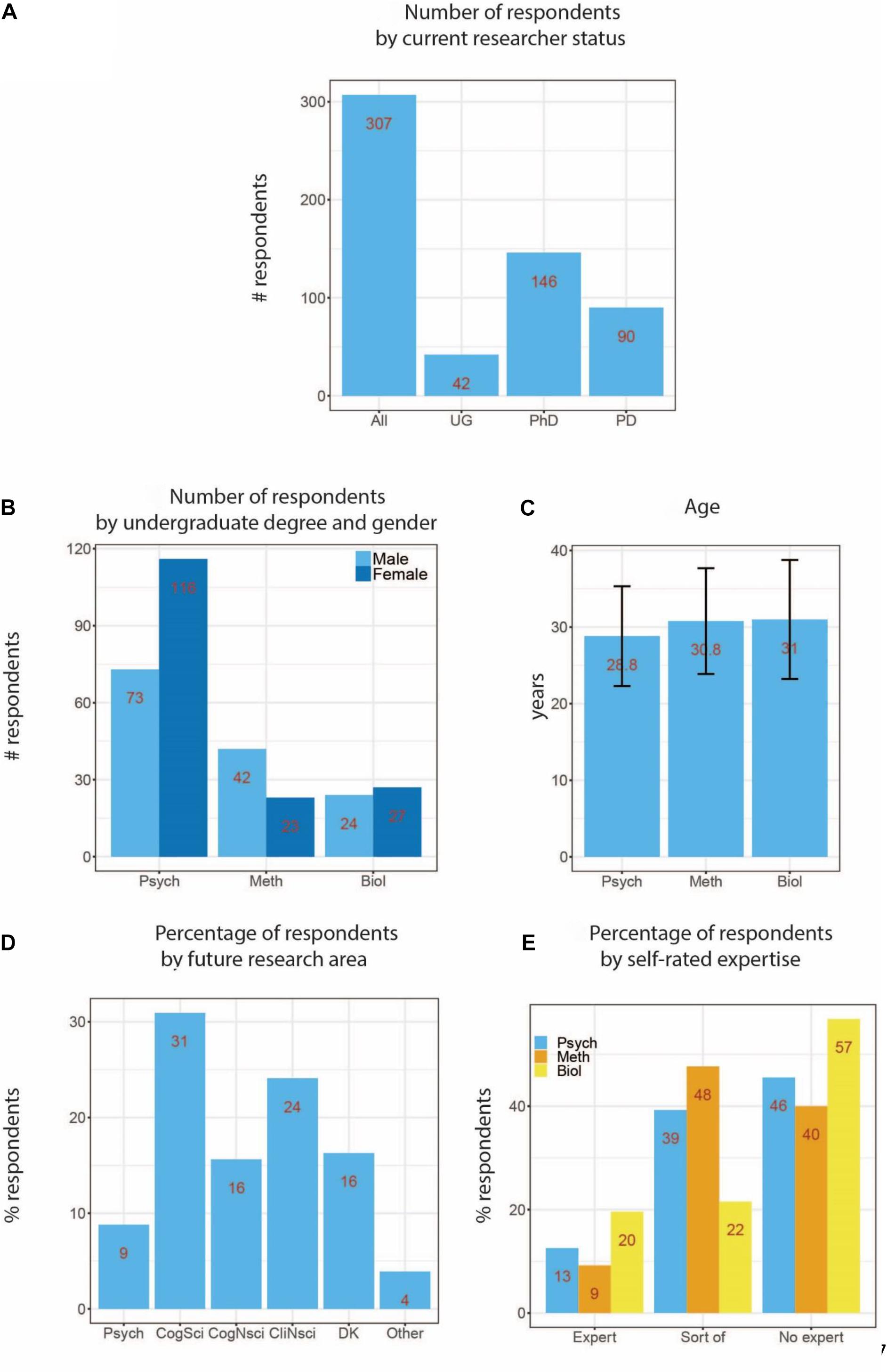

Figure 1 shows the number of participants broken down by current researcher status (undergraduate student, PhD student, or post-doc) (A), type of undergraduate degree and gender (B), mean age of respondents according to type of undergraduate degree (C), self-reported future areas of research (in percent, D), and self-rated methods expertise level according to undergraduate degree (in percent, E). We had more female (n = 166) than male (n = 139) respondents. Most respondents were PhD students (n = 146), followed by post-doctoral researchers (n = 90), undergraduate students (n = 42), and research assistants (n = 7). The mean age of all respondents was 29.6 (SD 6.9) years and varied only slightly with respect to undergraduate degree. Most respondents expected to continue working in cognitive science (31%), clinical neuroscience (23%), or cognitive neuroscience (16%). The majority rated themselves as “no expert” or “sort of expert,” though this varied with undergraduate degree. The Biology group rated themselves mostly as “no expert” (57%, compared to 22% “sort of expert” and 20% “expert”). The pattern was similar for the Psychology group, although with a smaller difference between “no expert” (46%) and “sort of expert” (39%), and 13% “expert.” The Methods group consisted mostly of “sort of experts” (48%) and “no experts” (40%), compared to 9% “experts.”

Figure 1. Demographics of survey participants. (A) Number of participants grouped by current researcher status (UG, undergraduate student; PhD, PhD student; PD, post-doctoral researcher). (B) Number of participants grouped by undergraduate degree and gender (Psych, undergraduate degree in psychology-related subjects; Meth, methods-related subjects; Biol, biology-related subjects). (C) Average age by undergraduate degree (error bars represent ± SD). (D) Percentage of participants depending on planned future research area (Psych, future research planned in psychology; CogSci, cognitive science; CogNsci, cognitive neuroscience; CliNsci, clinical neuroscience; DK, don’t know). (E) Percentage of participants who rated themselves as methods “expert,” “sort of expert,” and “no expert,” according to undergraduate degree. As some respondents did not provide clearly identifiable responses to some demographic questions (e.g., undergraduate degree or future research area), some counts across sub-categories do not add up to the total. Furthermore, scores inside bar graphs were rounded up to the nearest integer for easier visualization, so some percentages may sum to 101%.

The Survey

The survey started with questions about demographics, such as gender, age, and undergraduate degree (see footnote 6 for the original survey). This was followed by 18 methods-related questions (see Supplementary Appendix A). Questions in the survey were chosen to cover basic aspects of data acquisition and analysis in the cognitive neurosciences, especially neuroimaging (see also Supplementary Appendix B). Questions were subjectively grouped by the author into the domains of signal analysis (n = 5), scientific computing (n = 3), linear algebra (n = 2), calculus (n = 3), statistics (n = 3), and physics (n = 2). In brief, statistics questions probed knowledge of correlation, power analysis, and the multiple comparisons problem; signal analysis questions probed the signal-to-noise ratio, Fourier analysis, frequency spectra, complex numbers, and trigonometry; calculus questions probed derivatives, integrals, and algebraic equations; linear algebra questions probed vector orthogonality and vector multiplication; scientific computing questions probed knowledge about Linux, “for loops,” and integer numbers; and physics questions probed Ohm’s law and electric fields. Questions were presented in multiple-choice form, with four possible answers plus a “no idea” option. This option was included in order to test whether participants had at least an inkling about how to approach the problems, and also to signal that it was acceptable not to know the answer.
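As a concrete illustration of what the signal-analysis topics involve (Fourier analysis, frequency spectra, complex numbers, trigonometry), the Python sketch below computes a single-sided amplitude spectrum with a naive discrete Fourier transform. It is not one of the survey questions (those are in Supplementary Appendix A). The test signal, the sampling rate, and the function name `amplitude_spectrum` are assumptions chosen for clarity, and real analyses would use an FFT routine rather than this O(N²) loop.

```python
import cmath
import math


def amplitude_spectrum(x):
    """Single-sided amplitude spectrum of a real signal via a naive DFT.

    Returns one amplitude per frequency bin k = 0 .. N // 2.
    """
    n = len(x)
    amps = []
    for k in range(n // 2 + 1):
        # Project the signal onto a complex exponential at bin k
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        # Bins other than DC (and Nyquist for even N) appear twice in the
        # two-sided spectrum, so their amplitude is doubled here.
        scale = 1.0 if k == 0 or (n % 2 == 0 and k == n // 2) else 2.0
        amps.append(scale * abs(coeff) / n)
    return amps


fs = 100  # assumed sampling rate in Hz
n = 100   # 1 s of data, so bin k corresponds to k Hz
signal = [
    2.0 * math.sin(2 * math.pi * 10 * t / fs) + 0.5 * math.sin(2 * math.pi * 25 * t / fs)
    for t in range(n)
]
amps = amplitude_spectrum(signal)
peak_bin = max(range(len(amps)), key=lambda k: amps[k])
print(peak_bin * fs / n)  # frequency of the largest peak in Hz: 10.0
```

The spectrum shows two peaks, at 10 and 25 Hz with amplitudes of about 2.0 and 0.5, which recover the two sine components of the test signal.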

The choice of questions was constrained by:

• the limited amount of time the voluntary participants were expected to invest in this survey (10–15 min),

• the difficulty level of the questions, which should neither bore experts nor scare away non-experts,

• the relevance to cognitive neuroscience.

The inclusion of particular questions based on their relevance to cognitive neuroscience research was further informed by local discussions with researchers involved in methods training, as well as by consulting textbooks.

Data Analysis

Data were exported from the SurveyMonkey website and converted to an Excel spreadsheet using MATLAB. They were then further processed in the software package R (version 3.1.3; footnote 7). Only data from respondents who responded to every methods question were included in the analysis. For demographic questions, the total number of respondents within each category is reported. For methods skills questions, analyses focus on correct responses unless indicated otherwise, because we considered the numbers of error and “no idea” responses too low to allow meaningful interpretation of their differences. Considering the number of respondents per group (Figure 1), we think that breaking down our results by up to two factors (e.g., undergraduate degree and gender) is appropriate. The R scripts used for data analysis are available online (footnote 8). The data from this survey are available on request from the author.
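The inclusion rule and the pooling of responses described above could look like the following Python sketch. The data layout (one list of response labels per respondent) and the function names are assumptions for illustration; the author's R scripts remain the reference. Because every included respondent answered the same number of questions, pooling all responses gives the same percentages as averaging per-respondent percentages.

```python
from collections import Counter

RESPONSE_TYPES = ("correct", "error", "no idea")


def is_complete(answers, n_questions):
    """True if every methods question received one of the three valid responses."""
    return len(answers) == n_questions and all(a in RESPONSE_TYPES for a in answers)


def response_percentages(respondents, n_questions):
    """Percentage of correct, error and 'no idea' responses among complete respondents."""
    counts = Counter()
    for answers in respondents:
        if is_complete(answers, n_questions):
            counts.update(answers)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no complete respondents")
    return {r: 100.0 * counts[r] / total for r in RESPONSE_TYPES}


# Invented toy data with four questions per respondent.
toy = [
    ["correct", "correct", "error", "no idea"],
    ["correct", "correct", "correct", "correct"],
    ["correct", None, "correct", "error"],  # skipped a question: excluded
]
print(response_percentages(toy, 4))
# {'correct': 75.0, 'error': 12.5, 'no idea': 12.5}
```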

Our conclusions with respect to methods skills are based on the percentage of correct responses in the respective respondent groups, rather than on measures of significance, because a significant but small (e.g., 1%) effect would not have strong practical implications. Nevertheless, we ran an ordered logistic regression analysis with undergraduate degree, current researcher status, and gender entered simultaneously as factors. We used the function polr() from the package MASS in R (i386 3.1.3), and assessed significance using the function pnorm(). This analysis was run for overall performance as well as for sub-groups of methods questions as described below. Where appropriate, we also present 95% confidence intervals for binomial probabilities of correct responses as error bars in our figures (using the “exact” method of R’s binom() function).
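MASS::polr() reports coefficients, standard errors, and t values but no p-values, so p-values are commonly obtained by referring each t value to a standard normal distribution, e.g., `2 * pnorm(-abs(t))` in R. We assume this is what “assessed significance using pnorm()” refers to, but the exact call is in the author's scripts. The Python sketch below reproduces that normal approximation with the standard library; the function name is hypothetical.

```python
import math


def two_sided_p(t_value: float) -> float:
    """Two-sided p-value for a Wald-type statistic under a standard normal.

    Mirrors R's 2 * pnorm(-abs(t)): the upper-tail probability of a standard
    normal at z is 0.5 * erfc(z / sqrt(2)), so doubling it gives erfc(|t| / sqrt(2)).
    """
    return math.erfc(abs(t_value) / math.sqrt(2.0))


for t in (0.5, 1.96, 3.0):
    print(f"t = {t}: p = {two_sided_p(t):.4f}")
```

For t = 1.96 this gives p of approximately 0.05, the familiar two-sided threshold.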

Results

As described in the Methods section, our conclusions are mostly based on the mean percentages of correct responses (rather than “error” and “no idea” responses), and not on statistical measures. Not surprisingly, given the large number of participants, all factors in our ordered logistic regression analysis of overall performance reached significance (p < 0.05). For the six sub-groups of questions, the factor Undergraduate Degree was significant for all sub-groups except Statistics (p > 0.2), the factor Gender for all except Physics and Statistics (both p > 0.8), and the factor Researcher Status for all except Linear Algebra and Calculus (both p > 0.3). In the following, we interpret group differences only if their confidence intervals do not overlap. The practical relevance of these effects is considered in the Discussion.
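The “exact” binomial confidence interval used for the error bars is the Clopper-Pearson interval. The Python sketch below computes it by bisection on the binomial distribution function and then applies the non-overlap criterion described above. It is an illustration under assumptions: the helper names are hypothetical, and the counts in the example are invented, not survey data.

```python
import math


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), 0 < p < 1, summed in log space."""
    total = 0.0
    for i in range(k + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * math.log(p) + (n - i) * math.log1p(-p))
        total += math.exp(log_pmf)
    return total


def _solve_increasing(f, target, iters=100):
    """Bisection for p in (0, 1) with f(p) == target, f increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def clopper_pearson(k, n, alpha=0.05):
    """Exact (Clopper-Pearson) 1 - alpha confidence interval for k successes out of n."""
    # Lower bound: P(X >= k | p) = alpha / 2; this tail grows with p.
    lower = 0.0 if k == 0 else _solve_increasing(
        lambda p: 1.0 - binom_cdf(k - 1, n, p), alpha / 2)
    # Upper bound: P(X <= k | p) = alpha / 2; negate so the function grows with p.
    upper = 1.0 if k == n else _solve_increasing(
        lambda p: -binom_cdf(k, n, p), -alpha / 2)
    return lower, upper


def intervals_overlap(a, b):
    """True if two (lower, upper) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Invented counts: correct responses out of all responses in two groups.
ci_a = clopper_pearson(660, 1000)
ci_b = clopper_pearson(870, 1000)
print(ci_a, ci_b, intervals_overlap(ci_a, ci_b))
```

Requiring non-overlapping 95% intervals is a conservative criterion: two proportions can differ significantly even when their intervals overlap slightly.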

Overall Performance

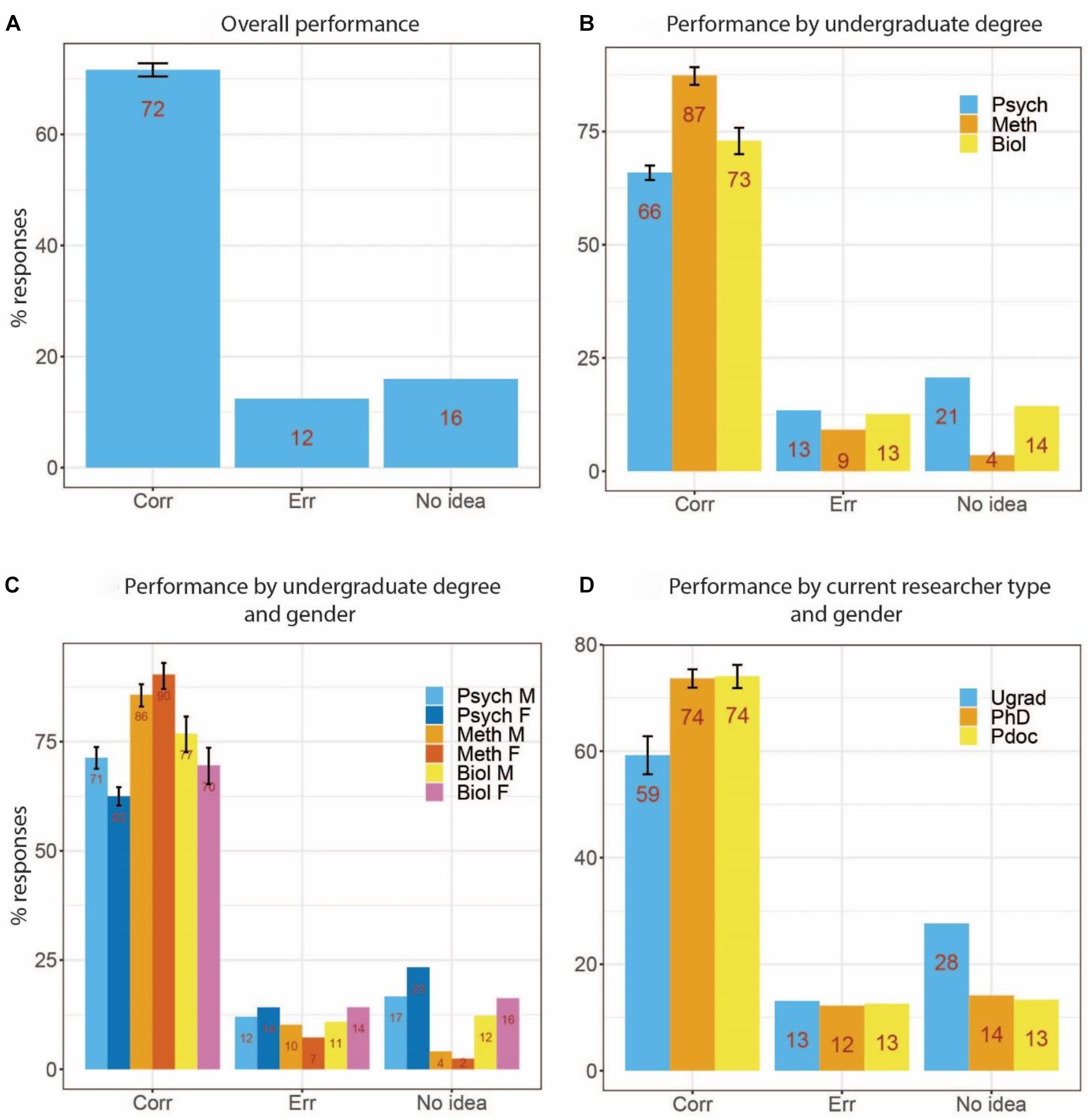

Performance across all methods questions is summarized in Figure 2A. The figure shows percentages of correct, error and “no idea” responses, respectively. Averaged across all methods questions, 72% of responses were correct, 12% error and 16% “no idea.” These results show that overall performance was not at floor or ceiling, and thus our survey should be sufficiently sensitive to be informative about differences among respondent groups.

Figure 2. Performance across all methods questions. (A) Performance across all respondents (Corr, Correct responses; Err, Incorrect responses). (B) Performance broken down by undergraduate degree (Psych, Psychology subjects; Meth, Methods subjects; Biol, Biology subjects). (C) Performance broken down by undergraduate degree and gender (M, Male; F, Female). (D) Performance broken down by current researcher status (Ugrad, undergraduate students; Pdoc, Post-doctoral researchers). Error bars for correct responses reflect 95% confidence intervals for binomial probabilities.

Undergraduate Degree

It is to be expected that the subject of undergraduate study strongly affects the skills being assessed in the methods questions in this survey. As described above, participants were divided into three broad undergraduate degree groupings: Psychology, Methods, and Biology. The results for these groups are presented in Figure 2B. Not surprisingly, respondents with Methods undergraduate degrees provided the highest percentage of correct responses (87%), followed by those with Biology (73%) and Psychology (66%) undergraduate degrees.

Because the proportion of males to females differed across these groups, we also show these results broken down by gender in Figure 2C. For Psychology and Biology undergraduates, males performed slightly better than females (71 vs. 62% and 77 vs. 70% correct, respectively, with non-overlapping confidence intervals only for Psychology), and vice versa for Methods undergraduates (86 vs. 90%, non-overlapping confidence intervals). Thus, gender differences were small and depended on undergraduate degree.

Current Degree

Figure 2D presents results depending on current researcher status (undergraduate student, PhD student, and post-doctoral researcher). Interestingly, performance improved from the undergraduate (59%) to the PhD (74%) and post-doctoral (74%) levels, but not from the PhD to the post-doctoral level.

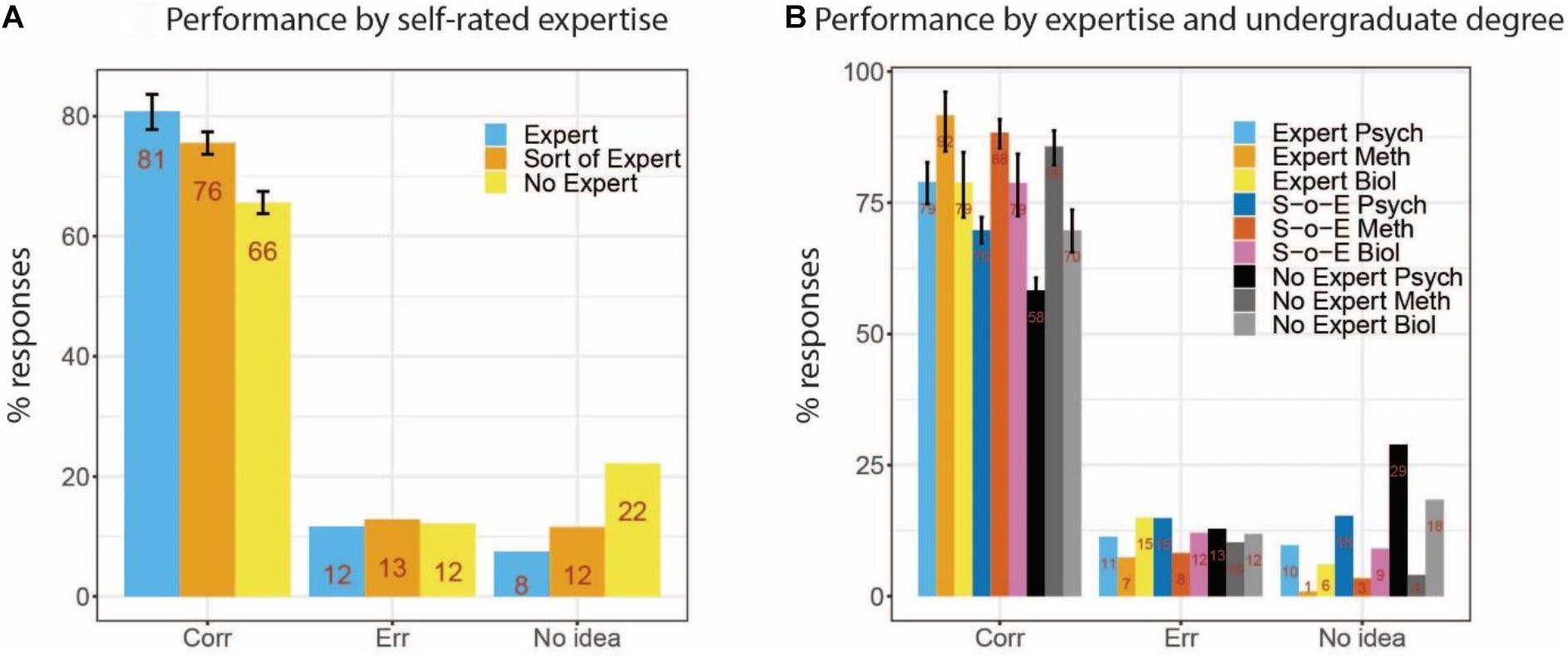

Self-Rated Expertise

Though not all cognitive neuroscientists need methods skills at the same level, it is nonetheless important that students and post-doctoral researchers have a realistic view of their own methods skills. We therefore asked participants to rate themselves as “Expert,” “Sort of expert,” or “No expert” (see also Figure 1E). We present our results broken down into these categories in Figure 3A. Performance differs surprisingly little between experts and sort-of experts (81 vs. 75%), but there is a bigger gap to the non-experts (66%). The results are further broken down by undergraduate degree in Figure 3B. In the Methods group, performance was generally high with small differences among expertise levels (“expert”: 92%, “sort of expert”: 88%, “no expert”: 86%). This gradient was somewhat steeper in the Biology group (83, 79, and 71%), and largest for Psychology (79, 70, and 58%). Respondents from the Methods group who rated themselves as “no experts” still performed better than experts from the other two groups. Thus, performance differences among expertise groups are not dramatic, but show that a participant’s background affects their definition of “expert.”

Figure 3. Performance by self-rated methods expertise level (A) and expertise by undergraduate degree (B) (S-o-E, Sort of Expert).

Sub-Groups of Methods Questions

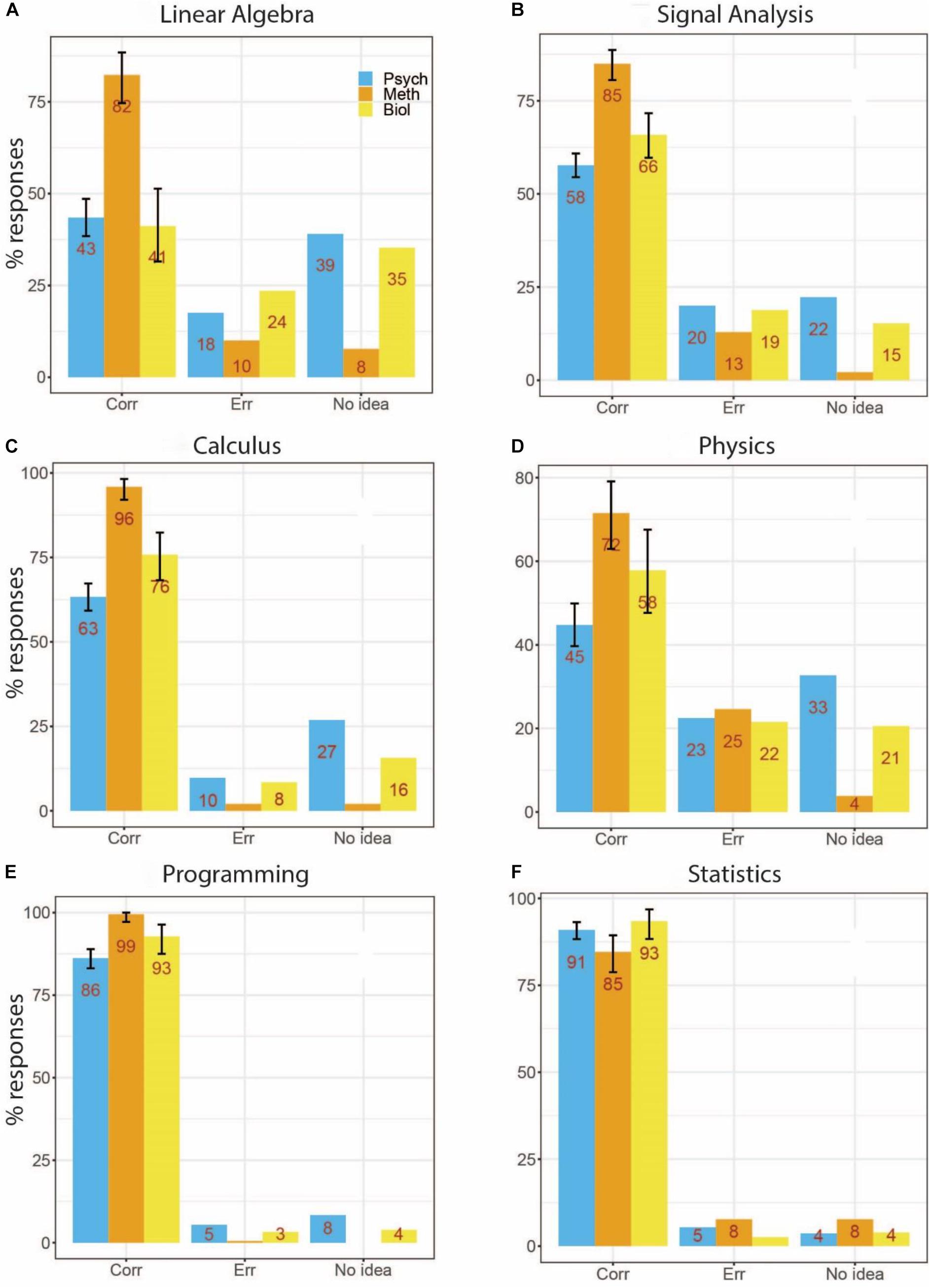

Our questions covered different aspects of neuroimaging data acquisition and analysis. We grouped our questions into six categories that reflect important aspects of neuroimaging data analysis, i.e., Linear Algebra, Signal Analysis, Calculus, Programming, Physics, and Statistics. Results for these groups are presented in Figure 4. Because in our previous analyses the largest differences occurred with respect to undergraduate degree, results were split accordingly.

Figure 4. Performance for specific question groups by undergraduate degree. (A) Linear Algebra (questions about vector orthogonality and vector multiplication). (B) Signal Analysis (signal-to-noise ratio, Fourier analysis, frequency spectra, complex numbers, and trigonometry). (C) Calculus (derivatives, integrals, and algebraic equations). (D) Programming (Linux, “for loops,” integer numbers). (E) Physics (Ohm’s law, electric field and potential). (F) Statistics (correlation, power analysis, and multiple comparisons problem). Error bars for correct responses reflect 95% confidence intervals for binomial probabilities.

Differences among groups were most striking for Linear Algebra (Figure 4A), where performance for Psychology and Biology undergraduate degree holders was only 43 and 41% correct, respectively, compared to 82% for Methods undergraduates. Similar results were obtained for Signal Analysis (Figure 4B; 58 and 66% compared to 85%) and Calculus (Figure 4C; 63 and 76% compared to 96%). A similar pattern, although at generally lower performance, was observed for Physics (Figure 4E; 44 and 58% compared to 72%). For Programming (Figure 4D), performance was more similar across groups (86 and 93% compared to 99%), and for Statistics (Figure 4F) the pattern was reversed (91 and 93% compared to 85%).

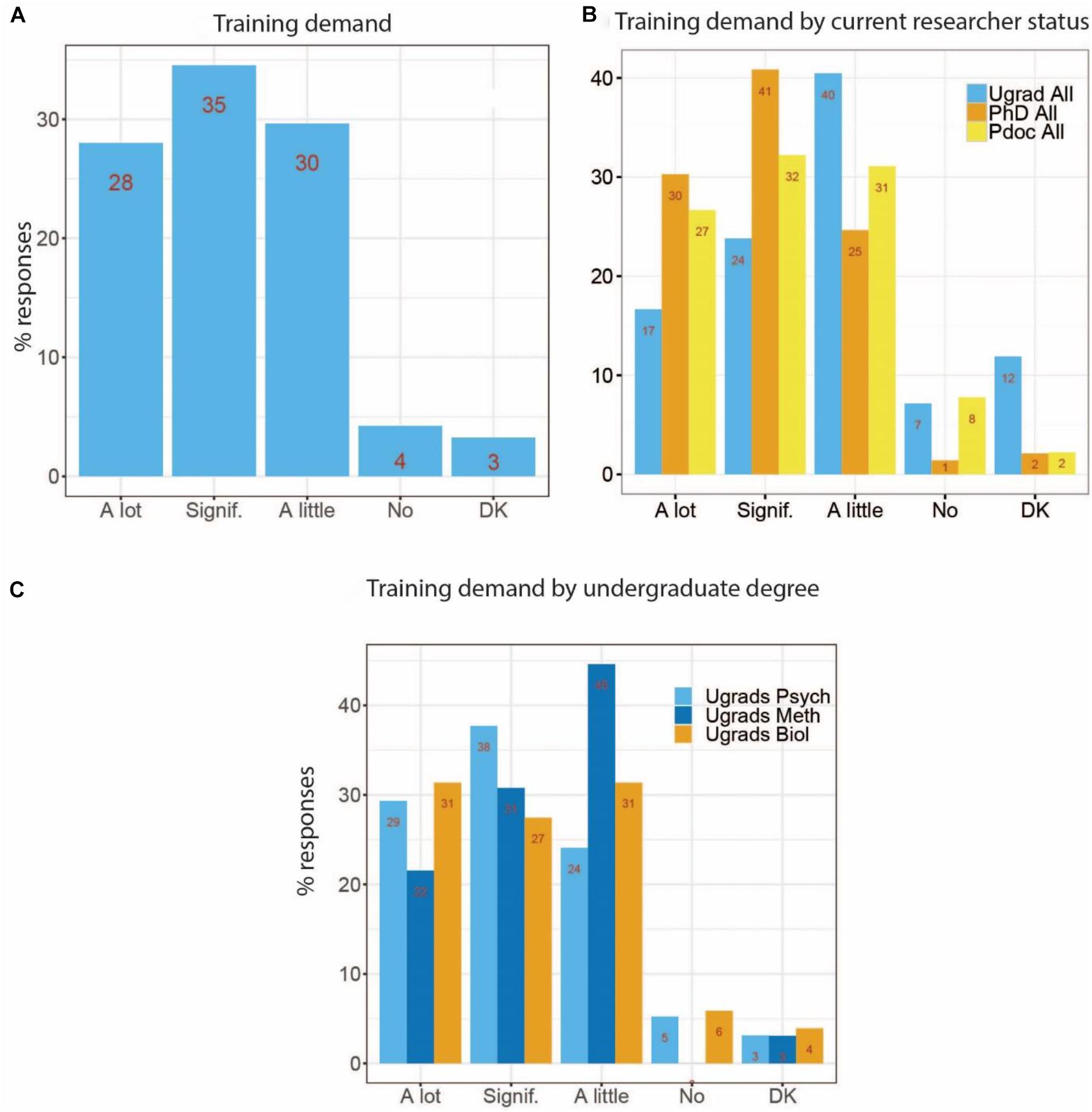

Demand for More Skills-Oriented Training

Finally, we asked participants whether they would like to receive more training on the methods topics covered by this survey. Figure 5A shows that the majority (63%) of participants would like to receive “a lot” or “significantly” more training on these topics, with an additional 30% asking for “a little” more training. Only 4% responded that they did not want any more training, and another 3% did not know. Demand for “a lot” more training was higher among female (31%) than among male (24%) respondents. Figures 5B,C break these results down further and show that the general pattern is the same for different researcher types (Figure 5B) and undergraduate groups (Figure 5C). Methods undergraduate degree holders have lower demand for methods training than their Psychology and Biology counterparts, but the most common response among them is still “a little” more training, and about 50% still ask for “a lot” or “significantly” more.

Figure 5. Demand for more training on topics covered in this survey for all participants (A), by current researcher status (B), and by undergraduate degree (C). Signif., Significantly. DK, Don’t know.

Discussion

We performed an online survey among 307 mostly junior scientists in the cognitive neurosciences, and evaluated their basic methods skills in the areas of signal processing, linear algebra, calculus, statistics, programming, and physics. The topics of this survey covered basic textbook knowledge relevant to the analysis of neuroimaging data and computational modeling, as well as to the interpretation of results in the cognitive neuroscience literature. Our results suggest that there is room for improvement with respect to the methods skills among junior researchers in cognitive neuroscience, that researchers are aware of this, and that there is strong demand for more skills-oriented training opportunities.

Performance varied with respect to undergraduate degree and current researcher status. Overall performance was 72% correct, i.e., neither at floor nor at ceiling. It is difficult to interpret this overall performance level without knowing more about the motivation and response style of participants. For example, it is impossible to determine whether some participants did not complete this survey because it was too challenging, too boring, or irrelevant to them, which could have biased our results. It is also a challenge to find questions that reflect similar levels of difficulty across different topics. This is a problem with many surveys, not just online ones. The questions in the present survey relate to early chapters in the corresponding textbooks as well as to everyday problems in cognitive neuroscience (Supplementary Appendix A and B). The survey was short and could be completed within about 15 min. Thus, the results for different survey topics were based on only a few questions. However, the pattern of results is highly plausible. For example, performance was high where expected, e.g., for basic questions about programming (“What is Linux?” and “What is a for-loop?”), and respondents with methods undergraduate degrees generally performed better than those with psychology or biology degrees. Most participants were recruited through e-mail announcements on software mailing lists, and it is likely that they noticed these e-mails because they are actively engaged in neuroimaging projects. Most participants indicated that they would like to receive significantly or a lot more training on the topics covered by this survey. This suggests that the participants of this survey took an interest in the subject and were motivated to perform well. Thus, we conclude that the group differences discussed below are informative about junior researchers in the cognitive neurosciences.

Not surprisingly, we found the most striking differences in performance between respondents with a Methods undergraduate degree and those with a Psychology or Biology degree (note that we grouped different undergraduate degrees together). Overall performance of the Psychology group was about 20% lower than that of the Methods group, with Biology in between. This difference was of similar magnitude for questions on signal processing, and was much larger (almost 50%) for questions on linear algebra. These two topics are fundamental to the analysis and interpretation of neuroimaging data. The particular questions addressed common problems in neuroimaging analysis, such as the orthogonality of vectors and the interpretation of a frequency spectrum. The multiplication of vectors and matrices is central to the general linear model (GLM), which is ubiquitous in fMRI and EEG/MEG data analysis (footnote 9). On a practical level, understanding why vectors and matrices of certain dimensions cannot be multiplied with each other can help with the debugging of analysis scripts (e.g., errors such as “matrix dimensions do not agree” in MATLAB, Python, or R). Similarly, a lack of awareness that matrix multiplication is not commutative in general (i.e., that A∗B is not necessarily the same as B∗A, unlike for scalar numbers) can lead to incorrect results. In the domain of signal analysis, familiarity with the basic concepts of frequency spectra and Fourier analysis is a prerequisite for time-frequency (e.g., using wavelets) and spectral connectivity (e.g., using coherence) analyses (e.g., Bastos and Schoffelen, 2015). Calculus (e.g., differentiation and integration) is required to deal with differential equations, which are the basis for dynamic causal modeling and Bayesian inference (Friston, 2010, 2011).
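The linear-algebra points above (shape compatibility, non-commutativity, and orthogonality) can be made concrete with a few lines of plain Python. This is a teaching sketch, not code from any neuroimaging package: the helpers `matmul` and `dot` and the toy design matrix are hypothetical, and in practice one would use a numerical library such as NumPy, whose error messages differ from the one raised here.

```python
def matmul(a, b):
    """Multiply matrices given as lists of rows; reject incompatible shapes."""
    rows_a, cols_a = len(a), len(a[0])
    rows_b, cols_b = len(b), len(b[0])
    if cols_a != rows_b:
        # An (m x n) matrix can only multiply an (n x p) matrix.
        raise ValueError(
            f"matrix dimensions do not agree: {rows_a}x{cols_a} times {rows_b}x{cols_b}")
    return [[sum(a[i][k] * b[k][j] for k in range(cols_a)) for j in range(cols_b)]
            for i in range(rows_a)]


def dot(u, v):
    """Inner product of two equal-length vectors; zero means orthogonal."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(u, v))


# Non-commutativity: A*B and B*A differ even for square matrices.
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
print(matmul(A, B))  # [[2, 1], [4, 3]]
print(matmul(B, A))  # [[3, 4], [1, 2]]

# GLM-style prediction: a (4 x 2) design matrix times a (2 x 1) parameter vector.
X = [[1, 0], [1, 1], [1, 2], [1, 3]]  # hypothetical intercept + linear regressor
beta = [[0.5], [2.0]]
print(matmul(X, beta))  # [[0.5], [2.5], [4.5], [6.5]]
try:
    matmul(beta, X)  # (2 x 1) times (4 x 2): inner dimensions 1 and 4 differ
except ValueError as err:
    print(err)

# Orthogonality: these two contrast-like vectors have a zero inner product.
print(dot([1, 1, -1, -1], [1, -1, 1, -1]))  # 0
```

Checking the inner dimensions before multiplying is exactly the reasoning needed to decode a dimension-mismatch error in an analysis script.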

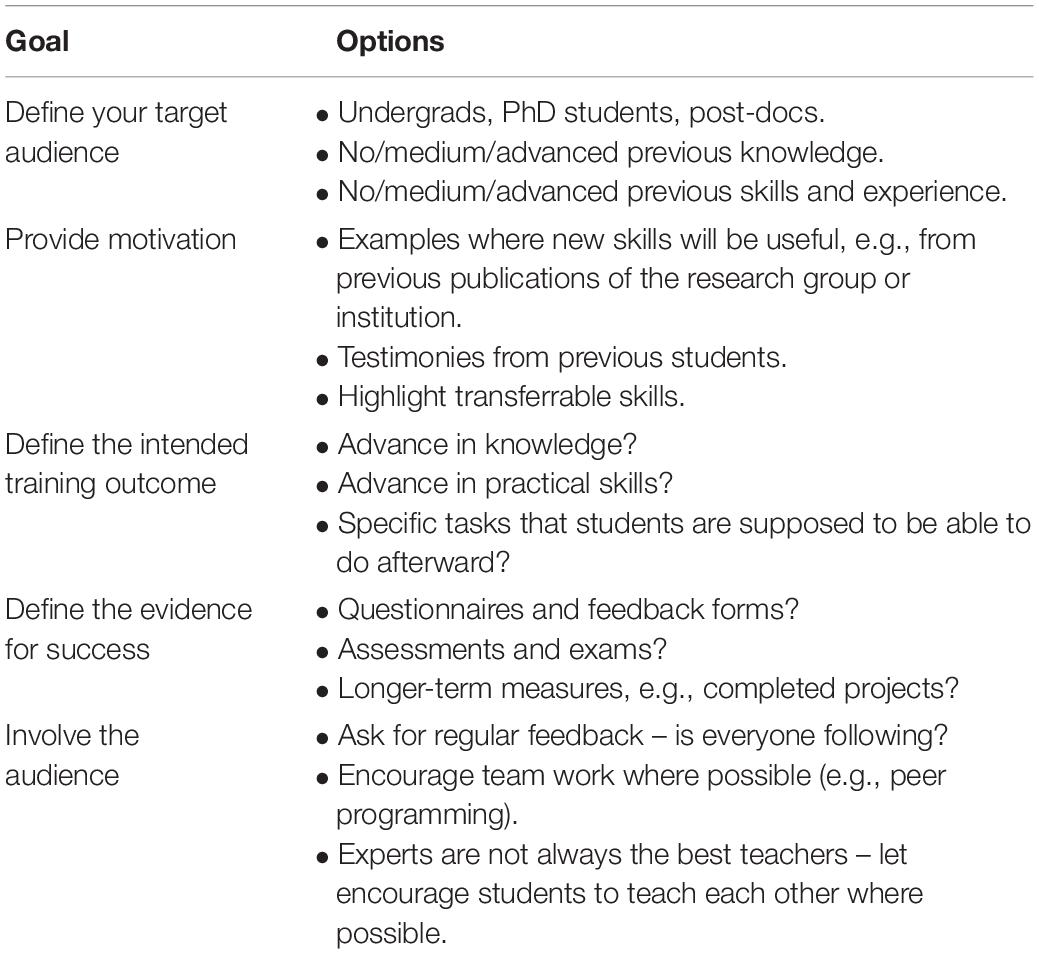

Our results should be taken into account by methods developers and tutors. Methods should be described using concepts and terminology that the target audience understands. If a paper starts with the most general theoretical description of a method in order to then derive special cases that are relevant for practical purposes, many respondents of this survey will be lost straightaway. For example, starting with Bayesian model estimation in order to derive ordinary linear least-squares regression may not produce the desired learning outcome for researchers from a psychology or biology background. It may be better to proceed the other way round: start with a problem the target audience is familiar with, and then address its limitations and how to look at the problem at increasingly general levels. Physics offers an analogy: while quantum physics is undeniably more accurate and more general than Newtonian physics, the latter is still the obvious framework for explaining macroscopic phenomena. It is not only “good enough” for lazy people, but also much more efficient to use, therefore less error-prone in practice, and easier to comprehend and teach. To our knowledge, most if not all physics textbooks start with classical physics before they move on to quantum physics. This from-simple-to-complex approach also seems appropriate for teaching data analysis in cognitive neuroscience, even if the right level of complexity for any given problem (and researcher) may not always be straightforward to determine. We provide some suggestions for general guidelines to set up a bespoke training program in Table 1.
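As an example of starting from the familiar end, the Python sketch below fits an ordinary least-squares regression line with a single predictor using the closed-form solution (slope equals the covariance of x and y divided by the variance of x). With a full design matrix X, this special case generalizes to the GLM estimate (XᵀX)⁻¹Xᵀy. The function name and data are invented for illustration, and no Bayesian extension is shown.

```python
def ols_fit(x, y):
    """Ordinary least-squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx               # slope
    b0 = mean_y - b1 * mean_x    # intercept: the line passes through the means
    return b0, b1


# Invented toy data: roughly y = 1 + 2x plus small deviations.
x = [0, 1, 2, 3, 4]
y = [1.0, 3.1, 4.9, 7.2, 8.8]
b0, b1 = ols_fit(x, y)
print(round(b0, 2), round(b1, 2))  # 1.06 1.97
```

Once this case is understood, the same estimate can be rewritten in matrix form, and from there the step to more general estimation frameworks is smaller.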

Table 1. General guidelines to set up a bespoke training program for a specific research group or institution.

Interestingly, we found an increase in performance from the undergraduate to the PhD level, but no difference between the PhD and post-doctoral levels. This suggests that researchers largely retain, but do not substantially extend, the skill level they acquired during their undergraduate and PhD degrees. This can impede progress in a fast-developing interdisciplinary field where methodological innovations are a strong driving force, and where many researchers may end up in research areas that they did not even know existed at the beginning of their career.

In order to choose the right type of training, or the right research topic to work on, it is important to know your own strengths and weaknesses when it comes to methods skills. We asked our respondents to rate themselves as “experts,” “sort of experts,” or “no experts.” Interestingly, only 9% in the Methods undergraduate group rated themselves as experts, fewer than in the Psychology and Biology groups (the latter led the table with 20%, Figure 1E). However, Methods respondents who rated themselves as “no experts” still outperformed all non-methods groups. This suggests that researchers estimate their own skill level relative to members of their own group. Biologists and psychologists who work in neuroimaging may have more experience with data analysis than their peers who work in other domains, but still less than researchers from an engineering or physics background. The latter, in turn, may think that because they are no longer working in engineering or physics, their skill level is lower, even though it may still be higher than that of many other researchers in their field. This discrepancy may lead to a lack of awareness of one’s own limitations and of the need for advice on the one hand, and to an undervaluation of one’s own capacity to provide training and advice to peers on the other. It is important to know what you know and what you do not know. Not everyone needs or wants to be a methods expert. But those who do will need to spend a significant amount of time and effort on appropriate training. Those who do not should know where to get advice when needed.

We also found overall performance differences with respect to gender, although these effects were small and depended on undergraduate group. Performance was numerically higher for males than for females in the Psychology and Biology groups, but this effect was reversed for Methods undergraduates. We can only speculate about the reasons. Our finding that women outperformed men in the Methods undergraduate group demonstrates the obvious, namely that both genders can achieve similar skill levels when given the same opportunities. Women are less likely to choose methods-related subjects at school or at the undergraduate level (Stoet and Geary, 2018). In addition, women may feel less encouraged to develop methods skills because methods development and scientific computing are currently dominated by men. While girls generally outperform boys in science subjects (and others) at school, they are underrepresented in STEM degrees, which may be related to their attitude toward science and their self-estimation of academic skills (Stoet and Geary, 2018). Skills-oriented training programs may therefore contribute to equal opportunities in cognitive neuroscience. It is also particularly important for methods-related research and training staff to achieve a high level of diversity, as this can affect the perception and feelings of inclusion of more junior members of the research community.

We found the largest differences between groups of respondents with different undergraduate degrees. This suggests that researchers from psychological and biological backgrounds do not necessarily enter the field of cognitive neuroscience with the skills required to analyze data and to meaningfully interpret cognitive neuroscience findings reported in the literature. In addition, it is not clear which factors determine who chooses which type of undergraduate degree (e.g., methods, biology or psychology), and our results may therefore reflect other selection biases that should be studied in the future. Whatever these differences are, small differences at early career stages may, if not corrected, amplify over time. For example, researchers who become frustrated with methodological challenges early on may decide to change fields. This may also be relevant for the gender differences discussed above. It is therefore important to provide opportunities for researchers at different stages, especially at PhD and junior post-doctoral level, to develop and maintain their methods skills.

Furthermore, it is important to communicate the relevance of these topics to students and post-doctoral researchers as well as to supervisors. The skills necessary for a research project should be identified jointly by supervisors and students or post-doctoral researchers. Our survey was dominated by respondents from the United Kingdom (48%) and the rest of Europe (22%). Skill levels may vary depending on country and institution, and should ideally be evaluated locally before students enter a project.

While a range of software-related workshops exist that focus on the “doing” part of data analysis, our results indicate that more needs to be done about the “understanding” part. In the future, the cognitive neuroscience community could agree on a core skill set for cognitive neuroscientists, taking into account different sub-disciplines such as neuroimaging, computational modeling and clinical neuroscience. Published examples of possible formats for such a training program already exist (e.g., Millman et al., 2018). These can be adapted to the specific needs and goals of individual research groups and institutions. We provide a list of possible components for a skills-oriented training program aimed at neuroimagers in Supplementary Appendix B.

Good teaching, whether on methods or other subjects, should be valued and appreciated (the lack of such appreciation has been lamented previously, e.g., Anonymous Academic, 2014). This needs to be budgeted for, both in terms of money and time. As a bonus, methods skills are highly transferable and can be useful in careers outside academia. We hope that our survey, despite its limitations, provides a starting point for further evidence-based discussion about the way cognitive neuroscientists are trained.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the author, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Cambridge Psychology Research Ethics Committee, University of Cambridge, United Kingdom. The participants provided their written informed consent to participate in this study.

Author Contributions

OH conceptualized the study, collected and analyzed the data, and wrote the manuscript.

Funding

I am grateful for the funding I received from the Medical Research Council United Kingdom (SUAG/058 G101400 to OH).

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

I would like to thank Fionnuala Murphy, Alessio Basti, and Federica Magnabosco for very helpful comments and corrections on a previous version of this manuscript, Rogier Kievit for glancing over my R code (but he should remain unblamed for any incorrectly placed brackets or hyphens), and members of the Cognition and Brain Sciences Unit who have engaged in numerous discussions about training-related issues over the years. A previous version of this manuscript has been released as a pre-print at https://www.biorxiv.org/content/10.1101/329458v2 (Hauk, 2018).

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2020.587922/full#supplementary-material

Footnotes

- ^ https://en.wikibooks.org/wiki/SPM

- ^ The title of this paper is a reference to Mark Twain’s essay “English as she is taught,” a humorous teacher’s account of how his pupils responded to questions for which they were ill prepared. A previous version of this manuscript has been released as a pre-print (Hauk, 2018).

- ^ http://nipype.readthedocs.io

- ^ http://automaticanalysis.org

- ^ https://www.surveymonkey.com/

- ^ https://www.surveymonkey.com/r/3JL2CZX

- ^ https://cran.r-project.org/

- ^ https://github.com/olafhauk/MethodsSkillsSurvey

- ^ https://en.wikibooks.org/wiki/SPM

References

Anonymous Academic (2014). Available online at: https://www.theguardian.com/higher-education-network/blog/2014/jun/20/why-no-value-place-on-teaching-experience-uk-universities (accessed November 11, 2020).

Bastos, A. M., and Schoffelen, J. M. (2015). A tutorial review of functional connectivity analysis methods and their interpretational pitfalls. Front. Syst. Neurosci. 9:175. doi: 10.3389/fnsys.2015.00175

Coltheart, M. (2013). How can functional neuroimaging inform cognitive theories? Perspect. Psychol. Sci. 8, 98–103. doi: 10.1177/1745691612469208

Diedrichsen, J., and Kriegeskorte, N. (2017). Representational models: a common framework for understanding encoding, pattern-component, and representational-similarity analysis. PLoS Comput. Biol. 13:e1005508. doi: 10.1371/journal.pcbi.1005508

Friston, K. (2010). The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127–138. doi: 10.1038/nrn2787

Friston, K. J. (2011). Functional and effective connectivity: a review. Brain Connect. 1, 13–36. doi: 10.1089/brain.2011.0008

Friston, K. J., and Dolan, R. J. (2010). Computational and dynamic models in neuroimaging. Neuroimage 52, 752–765. doi: 10.1016/j.neuroimage.2009.12.068

Greenwald, A. G. (2012). There is nothing so theoretical as a good method. Perspect. Psychol. Sci. 7, 99–108.

Hauk, O. (2018). Is there a problem with methods skills in cognitive neuroscience? Evidence from an online survey. bioRxiv [Preprint]. doi: 10.1101/329458

Haxby, J. V. (2012). Multivariate pattern analysis of fMRI: the early beginnings. Neuroimage 62, 852–855. doi: 10.1016/j.neuroimage.2012.03.016

Henson, R. N. (2005). What can functional neuroimaging tell the experimental psychologist? Q. J. Exp. Psychol. Sect. Hum. Exp. Psychol. 58, 193–233.

Huettel, S., Song, A. W., and McCarthy, G. (2009). Functional Magnetic Resonance Imaging. Sunderland, MA: Sinauer Associates.

Ioannidis, J. P. (2005). Why most published research findings are false. PLoS Med. 2:e124. doi: 10.1371/journal.pmed.0020124

Jonas, E., and Kording, K. P. (2017). Could a neuroscientist understand a microprocessor? PLoS Comput. Biol. 13:e1005268. doi: 10.1371/journal.pcbi.1005268

Kriegeskorte, N. (2015). Deep neural networks: a new framework for modeling biological vision and brain information processing. Annu. Rev. Vis. Sci. 1, 417–446. doi: 10.1146/annurev-vision-082114-035447

Kriegeskorte, N., Simmons, W. K., Bellgowan, P. S., and Baker, C. I. (2009). Circular analysis in systems neuroscience: the dangers of double dipping. Nat. Neurosci. 12, 535–540. doi: 10.1038/nn.2303

Lazebnik, Y. (2002). Can a biologist fix a radio?–Or, what I learned while studying apoptosis. Cancer Cell 2, 179–182.

Mather, M., Cacioppo, J. T., and Kanwisher, N. (2013). Introduction to the special section: 20 years of fMRI-What has it done for understanding cognition? Perspect. Psychol. Sci. 8, 41–43. doi: 10.1177/1745691612469036

Millman, K. J., Brett, M., Barnowski, R., and Poline, J. B. (2018). Teaching computational reproducibility for neuroimaging. Front. Neurosci. 12:727. doi: 10.3389/fnins.2018.00727

Nichols, T. E., and Poline, J. B. (2009). Commentary on Vul et al.’s (2009) “puzzlingly high correlations in fMRI Studies of emotion, personality, and social cognition”. Perspect. Psychol. Sci. 4, 291–293. doi: 10.1111/j.1745-6924.2009.01126.x

Nieuwenhuis, S., Forstmann, B. U., and Wagenmakers, E. J. (2011). Erroneous analyses of interactions in neuroscience: a problem of significance. Nat. Neurosci. 14, 1105–1107. doi: 10.1038/nn.2886

Open-Science-Collaboration (2015). Psychology. Estimating the reproducibility of psychological science. Science 349:aac4716. doi: 10.1126/science.aac4716

Page, M. P. (2006). What can’t functional neuroimaging tell the cognitive psychologist? Cortex 42, 428–443.

Poldrack, R. A. (2006). Can cognitive processes be inferred from neuroimaging data? Trends Cogn. Sci. 10, 59–63. doi: 10.1016/j.tics.2005.12.004

Singh, K. D. (2012). Which “neural activity” do you mean? fMRI, MEG, oscillations and neurotransmitters. Neuroimage 62, 1121–1130. doi: 10.1016/j.neuroimage.2012.01.028

Stoet, G., and Geary, D. C. (2018). The gender-equality paradox in science, technology, engineering, and mathematics education. Psychol. Sci. 29, 581–593. doi: 10.1177/0956797617741719

Supek, S., and Aine, C. J. (2014). Magnetoencephalography – From Signals to Dynamic Cortical Networks. Berlin: Springer.

Turner, B. M., van Maanen, L., and Forstmann, B. U. (2015). Informing cognitive abstractions through neuroimaging: the neural drift diffusion model. Psychol. Rev. 122, 312–336. doi: 10.1037/a0038894

Keywords: neuroimaging, training, skills, survey, fMRI, EEG, MEG, computational modeling

Citation: Hauk O (2020) Human Cognitive Neuroscience as It Is Taught. Front. Psychol. 11:587922. doi: 10.3389/fpsyg.2020.587922

Received: 14 August 2020; Accepted: 28 October 2020;

Published: 24 November 2020.

Edited by:

Pei Sun, Tsinghua University, China

Reviewed by:

Sashank Varma, Georgia Institute of Technology, United States

William Grisham, University of California, Los Angeles, United States

Copyright © 2020 Hauk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Olaf Hauk, olaf.hauk@mrc-cbu.cam.ac.uk
