- 1Department of Physics, Bar-Ilan University, Ramat Gan, Israel

- 2Department of Philosophy, The University of Memphis, Memphis, TN, United States

- 3Institute for Intelligent Systems, The University of Memphis, Memphis, TN, United States

In recent decades, the scientific study of consciousness has significantly increased our understanding of this elusive phenomenon. Yet, despite critical developments in our understanding of the functional side of consciousness, we still lack a fundamental theory regarding its phenomenal aspect. There is an “explanatory gap” between our scientific knowledge of functional consciousness and its “subjective,” phenomenal aspects, referred to as the “hard problem” of consciousness. The phenomenal aspect of consciousness is the first-person answer to the “what it’s like” question, and it has thus far proved recalcitrant to direct scientific investigation. Naturalistic dualists argue that it is composed of a primitive, private, non-reductive element of reality that is independent from the functional and physical aspects of consciousness. Illusionists, on the other hand, argue that it is merely a cognitive illusion, and that all that exists are ultimately physical, non-phenomenal properties. We contend that both the dualist and illusionist positions are flawed because they tacitly assume consciousness to be an absolute property that doesn’t depend on the observer. We develop a conceptual and a mathematical argument for a relativistic theory of consciousness in which a system either has or doesn’t have phenomenal consciousness with respect to some observer. Phenomenal consciousness is neither private nor delusional, just relativistic. In the frame of reference of the cognitive system, it will be observable (first-person perspective) and in other frames of reference it will not (third-person perspective). These two cognitive frames of reference are both correct, just as in the case of an observer who claims to be at rest while another claims that the same observer has constant velocity. Given that consciousness is a relativistic phenomenon, neither observer position can be privileged, as they both describe the same underlying reality.
Based on relativistic phenomena in physics we developed a mathematical formalization for consciousness which bridges the explanatory gap and dissolves the hard problem. Given that the first-person cognitive frame of reference also offers legitimate observations on consciousness, we conclude by arguing that philosophers can usefully contribute to the science of consciousness by collaborating with neuroscientists to explore the neural basis of phenomenal structures.

Introduction

The Hard Problem of Consciousness

As one of the most complex structures we know of in nature, the brain poses a great challenge to us in understanding how higher functions like perception, cognition, and the self arise from it. One of its most baffling abilities is its capacity for conscious experience (van Gulick, 2014). Thomas Nagel (1974) suggests a now widely accepted definition of consciousness: a being is conscious just if there is “something that it is like” to be that creature, i.e., some subjective way the world seems or appears from the creature’s point of view. For example, if bats are conscious, that means there is something it is like for a bat to experience its world through its echolocational senses. On the other hand, in deep sleep (with no dreams) humans are unconscious because there is nothing it is like for humans to experience their world in that state.

In the last several decades, consciousness has transformed from an elusive metaphysical problem into an empirical research topic. Nevertheless, it remains a puzzling and thorny issue for science. At the heart of the problem lies the question of the brute phenomena that we experience from a first-person perspective—e.g., what it is like to feel redness, happiness, or a thought. These qualitative states, or qualia, compose much of the phenomenal side of consciousness. These qualia are arranged into spatial and temporal patterns and formal structures in phenomenal experience, called eidetic or transcendental structures1. For example, while qualia pick out how a specific note sounds, eidetic structures refer to the temporal form of the whole melody. Hence, our inventory of the elusive properties of phenomenal consciousness includes both qualia and eidetic structures.

One of the central aspects of phenomenal features is privacy. It seems that my first-person feeling of happiness, for example, cannot be measured from any other third-person perspective. One can take indirect measurements of my heart rate or even measure the activity in the brain networks that create the representation of happiness in my mind, but these are just markers of the feeling that I have, and not the feeling itself (Block, 1995). From my first-person perspective, I don’t feel the representation of happiness. I just feel happiness. Philosophers refer to this feature of phenomenal consciousness as “transparency”: we seem to directly perceive things, rather than mental representations, even though mental representations mediate experience. But this feeling cannot be directly measured from the third-person perspective, and so is excluded from scientific inquiry. Yet, let us say that we identify a certain mental representation in a subject’s brain as “happiness.” What justifies us in calling it “happiness” as opposed to “sadness?” Perhaps it is correlated with certain physiological and behavioral measures. But, ultimately, the buck stops somewhere: at a subject’s phenomenological report. Otherwise, we cannot know what this representation represents (Gallagher and Zahavi, 2020). If we categorize the representation as “happiness” but the subject insists they are sad, we are probably mistaken, not the subject. Phenomenal properties are seemingly private and, by some accounts, even beyond any physical explanation, for being physical means being public and measurable, which is epistemically inconsistent with privacy. Take an electron, for example. We know it is physical because we can measure it with, e.g., a cathode ray tube. If electrons were something that only I could perceive (i.e., if they were private), then we would not include them as physical parts of the scientific worldview.
Affective scientists, for example, measure aspects of feeling all the time, like valence and arousal, but measuring these is not the same as measuring the feeling of happiness itself. What affective scientists measure are outcomes of the feeling, while the feeling itself is private and not measurable by the scientists. To date, it is not clear how to bridge the “explanatory gap” (Levine, 1983) between “private” phenomenal features and public, measurable features (e.g., neurocomputational structures), leaving us stuck in the “hard problem” of consciousness (Chalmers, 1996). This gap casts a shadow on the possibility for neuroscience to solve the hard problem because all explanations will always remain within a third-person perspective (e.g., neuronal firing patterns and representations), leaving the first-person perspective out of the reach of neuroscience. This situation divides consciousness into two separate aspects, the functional aspect and the phenomenal one (Block, 1995). The functional aspect (‘functional consciousness’) is the objectively observable aspect of consciousness (Franklin et al., 2016; Kanai et al., 2019). [In that sense, it’s similar to Ned Block’s definition of access consciousness, but with fewer constraints. All phenomenal consciousness has a functional aspect and vice versa, whereas for Block this strict equivalence doesn’t hold (Block, 2011).] But the subjective aspect (phenomenal consciousness) is not directly observable except on the part of the person experiencing that conscious state. As we saw above, because they are private, phenomenal properties are distinct from any cognitive and functional property (which can be publicly observable). Any theory of consciousness should explain how to bridge this gap: how can functional, public aspects give rise to phenomenal, private aspects?

Nevertheless, in recent decades, consciousness has become increasingly amenable to empirical investigation by focusing on its functional aspect, finally enabling us to begin to understand this enigmatic phenomenon. For example, we now have good evidence that consciousness doesn’t occur in a single brain area. Rather, it seems to be a global phenomenon in widespread areas of the brain (Baars, 1988; Varela et al., 2001; Mashour et al., 2020).

In studying functional consciousness, we take consciousness to be a form of information processing and manipulation of representations, and we trace its functional or causal role within the cognitive system. Widely successful theories, such as global workspace theory (Baars, 1988; Dehaene, 2014; Franklin et al., 2016), attention schema theory (Graziano, 2019), recurrent processing theory (Lamme, 2006; Fahrenfort et al., 2007), and integrated information theory (Tononi, 2008; Oizumi et al., 2014) are virtually premised on this information processing account. Despite our advances in the study of functional consciousness, we still lack a convincing way to bridge the explanatory gap to phenomenal consciousness. The questions, “Why does it feel like anything at all to process information?”, and “How can this feeling be private?” still remain controversial. The hard problem primarily remains a philosophical rather than scientific question. Phenomenal consciousness must be the ultimate reference point for any scientific theory of consciousness. Ultimately, theories of consciousness as information processing, i.e., theories of functional consciousness, only approximate full-blown consciousness by abstracting away its phenomenal features. But they must ultimately refer to phenomenal features in order to give a full explanation of consciousness. Otherwise, there are no grounds for labeling them theories of consciousness, rather than theories of cognition or global informational access.

Let’s take Integrated Information Theory (IIT), for example. IIT claims to bridge between the phenomenal and functional aspects of consciousness and to answer the question of what qualia are (Tononi et al., 2016). According to IIT, consciousness is the result of highly integrated information in the brain [which is “the amount of information generated by a complex of elements, above and beyond the information generated by its parts” (Tononi, 2008)]. A mathematical formula can determine how much integrated information, and thus how much consciousness, is present in any specific system. This measure is referred to as ϕ, and if ϕ is higher than zero, the system is conscious. In this situation, the system has a “maximally irreducible conceptual structure” which is identical to its phenomenal experience (Oizumi et al., 2014). These maximally irreducible conceptual structures are composed of integrated information states, and thus a quale is identical to a specific relation between integrated information states. The problem is that these maximally irreducible conceptual structures are physical states and, even though they can be very complex, they are still public and observable from a third-person perspective, while qualia are private and only perceptible from the first-person perspective. How can there be an identity between opposite properties such as being public and being private? Yet this is what IIT suggests, without any explanation of how to resolve this contradiction. Suppose we choose a conceptual structure that, according to IIT, is a quale of happiness. It cannot truly be the quale of happiness because, in principle, when we measure it, we observe a physical process and not the qualitative happiness itself (that is why measuring the physical process is not enough, and eventually we need to ask the participants what they feel). Again, we remain stuck within the explanatory gap and the hard problem.
In other words, IIT hasn’t solved the hard problem and hasn’t bridged the explanatory gap between private and public properties (Mindt, 2017). In order to avoid this problem, IIT needs to assume phenomenal consciousness as a primitive element separated from other physical elements like space and time. In that case, there is no gap to bridge because now these maximally irreducible conceptual structures are not qualia anymore. They are just physical structures that have properties that correspond to the properties of phenomenal consciousness, but that are not identical to them. In that sense, IIT just shows correspondence between two separate kinds of elements, phenomenal and physical. In the next paragraph we will see that this kind of solution is exactly what Chalmers refers to as ‘naturalistic dualism.’ The problem with that is that instead of understanding what phenomenal consciousness is and what the relations are between the physical world and the phenomenal world, it just assumes that phenomenal consciousness exists as a separate basic element in the world, and it does not solve how there can be any interaction between physical and phenomenal elements (Carroll, 2021).

This is not an issue for IIT alone, but a problem for all physicalist theories. As David Chalmers (1995) has put it, “the structure and dynamics of physical processes yield only more structure and dynamics, so structures and functions are all we can expect these processes to explain.” The structure and dynamics of information tell us nothing of the story of how one gets from these public structures and dynamics to our private phenomenal experience (and how there can be an equality between these opposite properties). This is known as the ‘structure and dynamics argument’ (Alter, 2016; Chalmers, 2003; Mindt, 2017), which states that structure and dynamics alone are not enough to account for consciousness. This raises a concern about whether any physicalist theory can solve the hard problem of consciousness. In the next sections, however, we will show that physicalism is broader than describing only structures and dynamics, and that we can use this fact in order to solve the hard problem.

Views about the relation between phenomenal and functional consciousness exist across a spectrum. On one end, illusionists seek to erase the hard problem by referring only to functional consciousness, taking phenomenal properties to be cognitive illusions. On the other end, naturalistic dualists or panpsychists seek to promote phenomenal consciousness as a fundamental, non-material, and undecomposable constituent of nature (Chalmers, 2017). The controversy over phenomenal consciousness can be traced to one central problem: naturalization. The project of naturalization involves taking folk psychological concepts and subjecting them to physical laws and empirical scrutiny (Hutto, 2007). Illusionists take the current scientific approach to consciousness and argue that this eliminates the messy problem with supposedly private, immaterial qualia. According to them, functional consciousness generates an illusion of special phenomenal properties, which create the persistent “user illusion” (Dennett, 1991) of a first-person perspective. Dualists start from the same problem of naturalization, but take it that phenomenal consciousness is simply not amenable to third-person scientific inquiry due to its sui generis properties. What is needed, according to the naturalistic dualist, is an expanded understanding of what counts as “natural.” “Given that reductive explanation fails, nonreductive explanation is the natural choice” (Chalmers, 2017, p. 359). Chalmers proposes that consciousness is a fundamental property, something like the strong nuclear force that is irreducible to other forces. A complete ontology of the natural world simply must include phenomenal consciousness as a basic, undecomposable constituent.

The Zombie Argument and the Paradox of Phenomenal Judgment

Chalmers (1996) discusses the logical possibility of a zombie, a being physically, cognitively, and behaviorally identical to a human, but lacking phenomenal consciousness altogether. One would think that such a creature would be dull, like a robot with basic automated responses, but this is not the case. Because a zombie is physically identical to a human, it has the same cognitive system as us: a system that gets inputs from the environment, processes them, and creates behavioral responses. In fact, there are no differences between the human’s and the zombie’s cognitive dynamics, representations, and responses. But, for the zombie, there is nothing that it is like to carry out all these processes. According to Chalmers (1996), the zombie has phenomenal judgments. This concept is very important for our argument, so let’s examine it a bit. Phenomenal judgments are higher-order cognitive functions that humans and zombies have in common. Humans are aware of their experience and its contents and can form judgments about it (e.g., when we think ‘There is something red’); usually, they are then led to make claims about it. These various judgments in the vicinity of consciousness are phenomenal judgments. They are not phenomenal states themselves, but they are about phenomenology. Phenomenal judgments are often reflected in claims and reports about consciousness, but they start as a mental process. Phenomenal judgments are themselves cognitive acts that can be explained by functional aspects like the manipulation of mental representations. That’s why zombies also have phenomenal judgments. We can think of a judgment as what is left of a belief after any associated phenomenal property is subtracted. As a result, phenomenal judgments are part of the functional aspect of consciousness. As Chalmers puts it (1996, p. 174):

“Judgments can be understood as what I and my zombie twin have in common. A zombie does not have any conscious experience, but he claims that he does. My zombie twin judges that he has conscious experience, and his judgments in this vicinity correspond one-to-one to mine. He will have the same form, and he will function in the same way in directing behavior as mine… Alongside every conscious experience there is a content-bearing cognitive state. It is roughly information that is accessible to the cognitive system, available for verbal report, and so on.”

In other words, phenomenal judgments can be described as the representations of a cognitive system that bear content about phenomenology. These need not be linguistic representations; for example, such a representation can be a representation of the color of an apple. In this paper, we will identify functional consciousness with the creation of phenomenal judgments.

As a result of the zombie’s capacity to create phenomenal judgments, we reach a peculiar situation: the zombie has functional consciousness, i.e., all the physical and functional conscious processes studied by scientists, such as global informational access. But there would be nothing it is like to have that global informational access and to be that zombie. All that the zombie cognitive system requires is the capacity to produce phenomenal judgments that it can later report. For example, if you asked it whether it sees a red rose in front of it, using information processing, it might respond, “Yes, I’m definitely conscious of seeing a red rose,” although it is ultimately mistaken and there is truly nothing that it is like for the zombie to see that rose. In order to produce this phenomenal judgment, despite having no phenomenal consciousness, the zombie cognitive system needs representations and a central system with direct access to important information enabling it to generate behavioral responses. It needs direct access to perceptual information, a concept of self to differentiate itself from the world, an ability to access its own cognitive contents, and the capacity to reflect. Such a cognitive system could presumably reason about its own perceptions. It would report that it sees the red rose, and that it has some property over and above its structural and functional properties, namely phenomenal consciousness. Of course, this report would be mistaken. It is a paradoxical situation in which functional consciousness creates phenomenal judgments without the intervention of phenomenal consciousness, even though phenomenal judgments are purportedly about phenomenal consciousness. This paradox of phenomenal judgment (Chalmers, 1996) arises because of the independence of phenomenal consciousness from physical processes. The hidden assumption here is that consciousness is private. Consequently, it is not possible to measure it.
It seems that one aspect of consciousness (physical, functional consciousness) can come without the other (phenomenal consciousness). For example, in IIT there is a variant of the phenomenal judgment paradox. According to IIT, there can be a cognitive system that manipulates information and infers that it has phenomenal experience, that there is something that it is like to be that cognitive system, yet it doesn’t have any consciousness because its neural network creates all these judgments in a feed-forward way, meaning that ϕ = 0 in the system (Doerig et al., 2019). Again, we see that even in IIT the functional part of consciousness (phenomenal judgments) can come without the phenomenal aspect of consciousness. This paradox arises because in IIT consciousness and cognitive content are not conditioned to correspond to each other. As a result, although the cognitive system has phenomenal judgments about phenomenal consciousness, it is still just a zombie with no phenomenal consciousness because ϕ = 0 in the system (this kind of zombie is termed a functional zombie; see Oizumi et al., 2014; Tononi et al., 2016).

In order to solve this paradox, we need to explain two aspects of consciousness: How there could be natural phenomena that are private and thus independent of physical processes (or how come they seem private), and what the exact relationship between cognitive content and phenomenal consciousness is.

The illusionist position is that phenomenal properties are cognitive illusions generated by the brain. If a zombie with developed cognitive abilities can mistakenly think it has phenomenal consciousness, how do we know that this is not the case with ourselves, as well? For the illusionist, this is exactly the predicament we are in, albeit we are zombies with a rich inner life (Frankish, 2017), whatever that means. The illusionist takes the purported scientific intractability of phenomenal consciousness to be evidence against phenomenal consciousness. “Illusionists deny that experiences have phenomenal properties and focus on explaining why they seem to have them” (Frankish, 2017, p. 18). While this position might seem to be counterintuitive, it saves a conservative understanding of physics and obviates any call for exotic properties of the universe, such as those Chalmers (2017) argues for. As Graziano et al. (2020) state:

“‘I know I have an experience because, Dude, I’m experiencing it right now.’ Every argument in favour of the literal reality of subjective experience … boils down sooner or later to that logic. But the logic is circular. It is literally, ‘X is true because X is true.’ If that is not a machine stuck in a logic loop, we don’t know what is” (Graziano et al., 2020, p. 8).

The problem, however, is that it is not clear what it means to claim that phenomenal consciousness is an illusion. What exactly does “illusion” mean in this context? To be clear, the illusionist is not denying that you see, e.g., a red rose in front of you. You do “see” it, but it only seems like it has phenomenal properties. You have non-phenomenal access to the perceptual representation, sufficient to enable a phenomenal judgment about that representation (e.g., “I see a red rose”). This contention flies in the face of all our intuitions, but the illusionist claims those intuitions are illusory. Frankish (2017) states that these seeming-properties are quasi-phenomenal properties, which are physical properties that give the illusion of phenomenal properties. But are quasi-phenomenal properties any less mysterious than phenomenal properties? How is it that seeming to be phenomenally conscious is not just being phenomenally conscious?

One way to address these problems and understand the illusionist position is to understand them as agreeing with Chalmers about phenomenal consciousness’s privacy, and that zombies are logically possible. Then, the paradox of phenomenal judgment already notes that just such a functionally conscious cognitive system could produce phenomenal judgments without having phenomenal consciousness. However, illusionists take this to be evidence against phenomenal consciousness. A purely physical system creates phenomenal judgments, therefore there are nothing but purely physical processes involved (and hence, no qualia). However, this position is problematic. Only if phenomenal properties are private could there be such a paradoxical situation of having phenomenal judgments about phenomenal consciousness, yet without any phenomenal consciousness. If we can argue against the privacy of phenomenal properties, then we can escape the trap into which both the dualist and illusionist fall.

We interpret the dualist and illusionist extremes as unfortunate consequences of a mistaken view of naturalism. The illusionist’s commitment to naturalism leads them to exclude supposedly non-natural properties like qualia. The scientific dualist is also committed to naturalism, but takes it that the current inventory of nature is simply incomplete and that there must be a new, exotic fundamental property in the universe, that of phenomenality.

The Relativistic Approach

A common thread connecting both extremes of dualism and illusionism is that both assume that phenomenal consciousness is an absolute phenomenon, wherein an object O evinces either property P or ¬P. We will show that we need to abandon this assumption. The relativistic principle in modern physics posits a universe in which for many properties an object O evinces either property P or ¬P with respect to some observer X. In such a situation, there is no one answer to the question of whether object O has property P or not. We propose a novel relativistic theory of consciousness in which consciousness is not an absolute property but a relative one. This approach eschews both extremes of illusionism and dualism. The relativistic theory of consciousness will show that phenomenal consciousness is neither an illusion created by a “machine stuck in a logic loop” nor a unique fundamental property of the universe. It will give a coherent answer to the question of the (supposed) privacy of phenomenal consciousness, will bridge the explanatory gap, and will provide a solution to the hard problem based in relativistic physics. General notions of this approach can be found in some dual aspect monisms such as Max Velmans’ reflexive monism. According to Velmans (2009, p. 298), “[i]ndividual conscious representations are perspectival.” Here, however, we develop a physical theory of consciousness as a relativistic phenomenon and formalize the perspectival relations in light of the relativistic principle. To do that, in the following section, we will develop more formally the relativistic principle and introduce the equivalence principle of consciousness.

In physics, relativity means that different observers from different frames of reference will nevertheless measure the same laws of nature. If, for example, one observer is in a closed room in a building and the other observer is in a closed room in a ship (one moving smoothly enough on calm water), then the observer in the ship would not be able to tell whether the ship is moving or stationary. Each will obtain the same results for any experiment that tries to determine whether they are moving or not. For both of them, the laws of nature will be the same, and each will conclude that they are stationary. For example, if they throw a ball toward the room’s ceiling, each will find that the ball returns directly into their hands. Because the ship moves with constant velocity, Newton’s First Law implies that the ball keeps the horizontal velocity of the ship while in the air and moves forward at the same pace as the ship, so it falls directly into the observer’s hands. There will be no difference in the results of each observer’s measurements when trying to establish whether they are stationary or not. They will conclude that the same laws of nature are in force, causing the same results. These results will be the results of a stationary observer, and thus both of them will conclude that they are at rest. Because each of them will conclude that they are the stationary one, they will not agree about one another’s status. Each of them will conclude that the other is the one that moves. Common sense will tell the observer in the ship that they are the one moving, but imagine an observer locked on the ship in a room with no windows. Such an observer cannot observe the outside world, and observers of this kind will conclude that they are stationary because velocity is relativistic.
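
The ball example can be written out as a short worked derivation. The sketch below is illustrative only and uses notation that is not in the text: a shore frame $S$, a ship frame $S'$ moving at constant velocity $v$ along $x$, an initial upward throw speed $u$, gravitational acceleration $g$, and no air resistance.

```latex
% Galilean transformation between the shore frame S and the ship frame S'
% (assumed notation: v = ship velocity, u = throw speed, g = gravitational acceleration)
\begin{align}
x' &= x - vt, & y' &= y, & t' &= t .
\end{align}
% Ship frame: the ball is thrown straight up from the hand at the origin
\begin{align}
x'(t) &= 0, & y'(t) &= ut - \tfrac{1}{2} g t^{2} .
\end{align}
% Shore frame: the same ball shares the ship's horizontal velocity, as does the hand
\begin{align}
x(t) &= vt, & y(t) &= ut - \tfrac{1}{2} g t^{2}, & x_{\text{hand}}(t) &= vt .
\end{align}
% The ball is back at hand height at t = 2u/g, with no horizontal offset from the hand,
% and the accelerations agree in both frames
\begin{align}
x(t) - x_{\text{hand}}(t) &= 0 = x'(t), &
\frac{d^{2}x'}{dt^{2}} &= \frac{d^{2}x}{dt^{2}} = 0, &
\frac{d^{2}y'}{dt^{2}} &= \frac{d^{2}y}{dt^{2}} = -g .
\end{align}
```

Because the accelerations agree, Newton's second law takes the same form in both frames, so no mechanical experiment inside the closed room distinguishes rest from uniform motion. Only the value assigned to the horizontal velocity ($0$ versus $v$) differs between the two observers.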

To state that consciousness is a relativistic phenomenon is to state that there are observers in different cognitive frames of reference, yet they will nevertheless measure the same laws of nature currently in force and the same phenomenon of consciousness in their different frames. We will start with an equivalence principle between a conscious agent, like a human being, and a zombie agent, like an advanced artificial cognitive system. As a result of this equivalence, we will show that if the relativistic principle is true, then zombies are not possible. Instead, every purported zombie will actually have phenomenal consciousness and any system with adequate functional consciousness will exhibit phenomenal consciousness from the first-person cognitive frame of reference. Others have similarly claimed that zombies are physically impossible (Brown, 2010; Dennett, 1995; Frankish, 2007; Nagel, 2012), but our aim here is to show why that is according to the relativistic principle. As a result of this equivalence, observations of consciousness fundamentally depend on the observer’s cognitive frame of reference. The first-person cognitive frame of reference is the perspective of the cognitive system itself (Solms, 2021). The third-person cognitive frame of reference is the perspective of any external observer of that cognitive system. Phenomenal consciousness is only seemingly private because in order to measure it one needs to be in the appropriate cognitive frame of reference. It is not a simple transformation to change from a third-person cognitive frame of reference to the first-person frame, but in principle it can be done, and hence phenomenal consciousness isn’t private anymore. We avoid the term “first-person perspective” because of its occasional association with immaterial views of consciousness; cognitive frames of reference refer to physical systems capable of representing and manipulating inputs. 
These systems have physical positions in space and time and instantiate distinct dynamics.

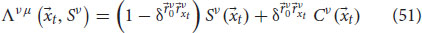

In section “The Equivalence Principle of Consciousness: Mathematical Description,” we will show that from its own first-person cognitive frame of reference, the observer will observe phenomenal consciousness, but any other observer in a third-person cognitive frame of reference will observe only the physical substrates that underlie qualia and eidetic structures. The illusionist mistake is to argue that the third-person cognitive frame of reference is the proper perspective. To be clear, the first-person cognitive frame of reference is still a physical location in space and time (it is not immaterial); it is just the position and the dynamics of the cognitive system itself. As we will see, this is the position from which phenomenal consciousness can be observed. The principle of relativity tells us that there is no privileged perspective in the universe. Rather, we will get different measurements depending on the observer’s position. Since consciousness is relativistic, we get different measurements depending on whether the observer occupies or is external to the cognitive system in question. Both the first-person and third-person cognitive frames of reference describe the same reality from two different points of view, and we cannot prefer one point of view over the other (Solms, 2021). The dualist mistake is to argue that phenomenal consciousness is private. For any relativistic phenomenon there is a formal transformation between the observers of different frames of reference, meaning that both frames can be accessible to every observer with the right transformations. Consciousness as a relativistic phenomenon also has such transformation rules. We will describe the transformations between first-person (i.e., phenomenological) and third-person (i.e., laboratory point of view) cognitive frames of reference. There are several consequences of these transformations. First, qualia and eidetic structures are not private. 
Rather, they only appear private, because in order to measure them one needs to be in the appropriate cognitive frame of reference, i.e., within the perspective of the cognitive system in question. We can use these transformations to answer questions like, “What is it like to be someone else?” Because of the transformations, results that we obtain from third-person methodology should be isomorphic to first-person structures. Isomorphism between two elements means that they have the same mathematical form and there is a transformation between them that preserves this form. Equality is when two objects are exactly the same, and everything that is true about one object is also true about the other. However, an isomorphism implies that everything that is true about some properties of one object’s structure is also true about the other. In section “The Equivalence Principle of Consciousness: Mathematical Description,” we will show that this is the case with measurements obtained from first-person and third-person frames of reference. We will show that this isomorphism is a direct result of the relativistic principle and the notion that phenomenal judgements and phenomenal structures are two sides of the same underlying reality. All that separates them are different kinds of measurements (causing different kinds of properties). An unintuitive consequence of the relativistic theory is that the opposite is also true, and first-person structures also bear formal equivalence to third-person structures. We advocate for interdisciplinary work between philosophers and cognitive neuroscientists in exploring this consequence.
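The distinction between equality and isomorphism drawn above can be illustrated with a minimal sketch. The two structures and the map `phi` below are hypothetical stand-ins chosen only because they are easy to check; they are not the first-person and third-person structures of the theory. The sketch shows two sets that are not equal, yet share the same form under a transformation that preserves their operations.

```python
from itertools import product

# Two hypothetical structures (illustrative assumptions, not from the theory):
A = [0, 1, 2, 3]   # structure A: these numbers under addition modulo 4
B = [1, 2, 3, 4]   # structure B: these numbers under multiplication modulo 5


def op_a(x, y):
    """Operation of structure A: addition modulo 4."""
    return (x + y) % 4


def op_b(x, y):
    """Operation of structure B: multiplication modulo 5."""
    return (x * y) % 5


def phi(x):
    """Hypothetical transformation from A to B: x -> 2**x modulo 5."""
    return pow(2, x, 5)


def is_isomorphism(f, dom, op_dom, cod, op_cod):
    """Check that f is one-to-one onto cod and preserves the operation."""
    bijective = sorted(f(x) for x in dom) == sorted(cod)
    preserves = all(
        f(op_dom(x, y)) == op_cod(f(x), f(y))
        for x, y in product(dom, repeat=2)
    )
    return bijective and preserves


# The structures are not equal as sets of elements...
sets_are_equal = set(A) == set(B)
# ...but everything true of A's operation carries over to B through phi.
same_form = is_isomorphism(phi, A, op_a, B, op_b)
print(sets_are_equal, same_form)
```

The design choice here is to test form preservation exhaustively over all pairs, which is feasible only because the toy structures are tiny.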

The Equivalence Principle of Consciousness

The Principle of Relativity and the Equivalence Principle

Our task is to establish the equivalence principle of consciousness, namely, that qualitative and quantitative aspects of consciousness are formally equivalent. We start by establishing the equivalence between conscious humans and zombies, and then we expand that equivalence to all structures of functional consciousness. We begin with a philosophical defense of the equivalence principle and then develop a mathematical formalization. We must first present the principle of relativity and the equivalence principle in physics. Later, we will use these examples to develop a new equivalence principle and a new transformation for consciousness. To be more formal than earlier, the principle of relativity is the requirement that the equations describing the laws of physics have the same form in all admissible frames of reference (Møller, 1952). In physics, several relativistic phenomena are well known, such as velocity and time, as is the equivalence principle between a uniformly accelerated system and a system under a uniform gravitational field. Let’s examine two examples with the help of two observers, Alice and Bob.

In the first example of a simple Galilean transformation, Alice is standing on a train platform and measures the velocity of Bob, who is standing inside a moving train. Meanwhile, Bob simultaneously measures his own velocity. As the train moves with constant velocity, we know that the laws of nature are the same for both Alice’s and Bob’s frames of reference (and thus that the equations describing the laws of physics have the same form in both frames of reference; Einstein et al., 1923/1952, p. 111). According to his measurements, Bob will conclude that he’s stationary, and that Alice and her platform are moving. However, Alice will respond that Bob is mistaken, and that she is stationary while Bob and the train are moving. At some point, Alice might say to Bob that he has an illusion that he is stationary and that she is moving. After all, it is not commonsensical that she, along with the platform and the whole world, is moving. Still, although it doesn’t seem to make common sense, in terms of physics all of Bob’s measurements will be consistent with him being stationary and Alice being the one who moves. In a relativistic universe, we cannot determine who is moving and who is stationary because all experimental results are the same whether the system is moving with constant velocity or at rest. Befuddled, Bob might create an elaborate argument to the effect that his measurement of being stationary is a private measurement that Alice just can’t observe. But, of course, both of their measurements are correct. The answer depends on the frame of reference of the observer. Yet, both of them draw mistaken conclusions from their correct measurements. Bob has no illusions, and his measurements are not private. Velocity is simply a relativistic phenomenon and there is no answer to the question of what the velocity of any given body is without reference to some observer. Their mistakes are derived from the incorrect assumption that velocity is an absolute phenomenon. 
As counterintuitive as it may seem, the relativity principle tells us that Bob is not the one who is “really” moving. Alice’s perspective is not some absolute, “correct” perspective that sets the standard for measurement, although we may think that way in our commonsense folk physics (Forshaw and Smith, 2014). Alice is moving relative to Bob’s perspective, and Bob is moving relative to Alice’s perspective. Furthermore, the fact that Alice and Bob agree about all the results from their own measurements means that these two frames of reference are physically equivalent (i.e., they have the same laws of nature in force and cannot be distinguished by any experiment). Because of this physical equivalence, they will not agree about who is at rest and who is moving.
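The Alice and Bob example can be sketched numerically. The following is a minimal, one-dimensional illustration under stated assumptions: the train speed of 30 m/s and the function name `galilean_velocity` are hypothetical, and velocities are first written in the platform's coordinates purely as a bookkeeping convenience, not because that frame is privileged.

```python
def galilean_velocity(v_object, v_frame):
    """Velocity of an object as measured from a frame moving at v_frame.

    Both arguments are one-dimensional velocities expressed in the same
    (arbitrary) bookkeeping coordinates; this is the Galilean rule v' = v - u.
    """
    return v_object - v_frame


# Hypothetical values in m/s, written in the platform's coordinates.
V_ALICE = 0.0   # Alice stands on the platform
V_BOB = 30.0    # Bob rides the train

# What each observer measures from their own frame of reference.
alice_view = {
    "Alice": galilean_velocity(V_ALICE, V_ALICE),
    "Bob": galilean_velocity(V_BOB, V_ALICE),
}
bob_view = {
    "Alice": galilean_velocity(V_ALICE, V_BOB),
    "Bob": galilean_velocity(V_BOB, V_BOB),
}

print("Alice measures:", alice_view)
print("Bob measures:", bob_view)
```

Each observer assigns zero velocity to themselves and a nonzero velocity to the other, and the two reports differ only by the sign of the relative velocity. Neither report is an illusion or a private fact; each is the correct value in its own frame.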

Later, Einstein extended the relativity principle by creating special relativity theory. The Galilean transformation showed that there is a transformation between all frames of reference that have constant velocity relative to each other (inertial frames). Einstein extended this transformation and created the Lorentz transformation, which describes the relativistic principle more accurately, enabling us to move from measurements in one inertial frame of reference to measurements in another inertial frame of reference, even if their velocity is near the speed of light (Einstein, 1905; Forshaw and Smith, 2014). According to the transformation, each observer can change frames of reference to any other inertial frame by changing the velocity of the system. The transformation equation ensures that in each frame we’ll get the correct values that the system will measure. For example, the observer will always measure that its own system is at rest and that it is the origin of the axes. Indeed, this is the outcome of the transformation equation for the observer’s own system. Mathematically, a frame of reference consists of an abstract coordinate system, and (in tensor formulation) we denote each frame of reference by a different Greek letter (and by adding a prime symbol), usually by μ and ν, which are indexes for the elements of the vectors in each coordinate system. The Lorentz transformation is denoted by $\Lambda^{\mu'}{}_{\nu}$. It is a matrix that gets elements of a vector in one frame of reference ($x^{\nu}$) and gives back the elements of a vector in another frame of reference ($x^{\mu'}$):

$$x^{\mu'} = \Lambda^{\mu'}{}_{\nu}\, x^{\nu}$$

This equation coheres with the relativistic principle. That is, it describes the laws of physics with the same form in all admissible frames of reference. This form will stay the same regardless of which frame we choose. It allows us to switch frames of reference and get the measured result in one frame, depending only on the other frame and the relative velocity between the two.
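As a rough numerical sketch of such a transformation, the code below applies a Lorentz boost to an event in one time dimension and one space dimension, in units where the speed of light is 1. The function names, the relative speed of 0.6, and the sample event are illustrative assumptions. The sketch checks two things stated above: an observer comes out at rest at the origin of its own axes, and the same form of equation (with the sign of the velocity reversed) takes us back to the original frame.

```python
import math


def lorentz_matrix(beta):
    """Boost matrix acting on (t, x), in units with c = 1; beta = v / c."""
    if not -1.0 < beta < 1.0:
        raise ValueError("beta must satisfy -1 < beta < 1")
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    return [[gamma, -gamma * beta],
            [-gamma * beta, gamma]]


def transform(matrix, vector):
    """Apply the matrix to the vector, summing over the repeated index."""
    return [
        sum(matrix[mu][nu] * vector[nu] for nu in range(len(vector)))
        for mu in range(len(matrix))
    ]


def interval(event):
    """Invariant interval t^2 - x^2 of an event measured from the origin."""
    t, x = event
    return t * t - x * x


BETA = 0.6                # hypothetical speed of Bob relative to Alice
event_alice = [5.0, 3.0]  # an event on Bob's worldline: x = BETA * t

# Alice's coordinates -> Bob's coordinates.
event_bob = transform(lorentz_matrix(BETA), event_alice)
# Bob's coordinates -> Alice's coordinates: same form, opposite velocity.
event_back = transform(lorentz_matrix(-BETA), event_bob)

print("Bob's frame:", event_bob)
print("Back in Alice's frame:", event_back)
print("Intervals:", interval(event_alice), interval(event_bob))
```

In Bob's frame the spatial coordinate of his own worldline comes out as zero up to floating-point error, and the interval agrees in both frames, which is the sense in which the two descriptions refer to the same underlying events.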

The second example comes from Albert Einstein’s (1907) observation of the equivalence principle between a uniformly accelerated system (like a rocket) and a system under a uniform gravitational field (like the Earth). Here, Einstein extended the relativity principle even more, not only to constant velocity but also to acceleration and gravity (for local measurements that measure the laws of nature near the observer). He started with two different frames of reference that have the exact same results from all measurements made in their frames of reference. Then he used the relativity principle, concluding that because they cannot be distinguished by any local experiment they are equivalent and have the same laws of nature. Lastly, he concluded that because of the equivalence, we can infer that phenomena happening in one frame of reference will also happen in the other. Now, assume that Alice is skydiving and is in freefall in the Earth’s atmosphere, while Bob is floating in outer space inside his spaceship. Although Alice is falling and Bob is stationary in space, both will still obtain the same results in every local experiment they might do. For example, if they were to release a ball from their hand, both of them will measure the ball floating beside them (in freefall, all bodies fall with the same acceleration and appear to be stationary relative to one another; this is why movies sometimes use airplanes in freefall to simulate outer space). Although they are in different physical scenarios, both will infer that they are floating at rest. Einstein concluded that because they would measure the same results, there is an equivalence between the two systems. In an equivalence state, we cannot distinguish between the systems by any measurement, and thus a system under either gravity or acceleration will have the same laws of nature in force, described by the same equations regardless of which frame it is in.

Because of this equivalence, we can infer physical laws from one system to the other. For example, from knowing about the redshift effect of light in accelerated systems with no gravitational force, Einstein predicted that there should be also a redshift effect of light in the presence of a gravitational field as if it were an accelerated system. This phenomenon was later confirmed (Pound and Rebka, 1960). According to Einstein (1911),

“By assuming this [the equivalence principle] to be so, we arrive at a principle which…has great heuristic importance. For by theoretical consideration of processes which take place relative to a system of reference with uniform acceleration, we obtain information as to the behavior of processes in a homogeneous gravitational field” (p. 899).
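To make this inference concrete, consider a rough back-of-the-envelope sketch. In a system accelerating at rate g, light needs a time of about h/c to cross a height h, during which the receiver gains a speed of about gh/c; the resulting Doppler shift is a fractional frequency change of about gh/c². By the equivalence principle, the same fractional shift is expected over a height h in a uniform gravitational field. The numbers below are assumptions for illustration only: a height of roughly 22.5 m (about the scale of the Pound and Rebka tower experiment) and g = 9.81 m/s².

```python
C = 299_792_458.0   # speed of light in m/s (exact by definition of the metre)
G = 9.81            # assumed gravitational acceleration near Earth's surface, m/s^2


def fractional_frequency_shift(height_m, g=G):
    """Weak-field estimate of the fractional frequency shift, g * h / c^2.

    Derived for a uniformly accelerated system and carried over to a uniform
    gravitational field via the equivalence principle.
    """
    return g * height_m / C ** 2


TOWER_HEIGHT_M = 22.5   # assumed height, for illustration only
shift = fractional_frequency_shift(TOWER_HEIGHT_M)
print(f"fractional shift over {TOWER_HEIGHT_M} m: {shift:.3e}")
```

The estimate is a few parts in 10^15, which indicates why confirming the effect required a very sensitive measurement.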

Next, we argue that phenomenal consciousness is a relativistic physical phenomenon just like velocity. This allows us to dissolve the hard problem by letting consciousness be relativistic instead of absolute. Moreover, we develop a similar transformation for phenomenal properties between first-person and third-person perspectives. The transformation describes phenomenal consciousness with the same form in all admissible cognitive frames of reference and thus satisfies the relativistic principle.

The Equivalence Principle of Consciousness: Conceptual Argument

Before we begin the argument, it is essential to elaborate on what ‘observer’ and ‘measurement’ mean when applying relativistic physics to cognitive science. In relativity theory, an observer is a frame of reference from which a set of physical objects or events are being measured locally. In our case, let’s define a ‘cognitive frame of reference’. This is the perspective of a specific cognitive system from which a set of physical objects and events are being measured. A cognitive frame of reference is determined by the dynamics of the cognitive system (for more details, see section “The Equivalence Principle of Consciousness: Mathematical Description”).

We use the term ‘measurement’ in as general a way as possible, from a physical point of view, such that a measurement can occur between two particles like an electron and a proton. Measurement is an interaction that causes a result in the world. The result is the measured property, and this measured property is new information in the system. For example, when a cognitive system measures an apple, it means that there is a physical interaction between the cognitive system and the apple. As a result, the cognitive system will recognize that this is an apple (e.g., the interaction may be via light and the result of it will be activation of retinal cells which eventually, after sufficient interactions, will lead to the recognition of the apple). In the case of cognitive systems, there are measurements of mental states. This kind of measurement means that the cognitive system interacts with a content-bearing cognitive state, like a representation, using interactions between different parts of the system. It is a strictly physical and public process (accessible to everybody with the right tools). As a result of this definition, the starting point of the argument is with measurements that are non-controversial, i.e., measurements that are public. These measurements include two types: measurements of behavioral reports and measurements of neural representations (like the phenomenal judgement-representations). For example, physical interaction of light between an apple and a cognitive system causes, at the end of a long process of interactions, the activation of a phenomenal judgement-representation of an apple and possibly even a behavioral report (“I see an apple”).

Let us start from the naturalistic assumption that phenomenal consciousness should have some kind of physical explanation. The physicalism we assume includes matter, energy, forces, fields, space, time, and so forth, and might include new elements still undiscovered by physics. Panpsychism, naturalistic dualism, and illusionism all fall under such a broad physicalism. (It might be, for example, that physics hasn’t yet discovered that there is a basic private phenomenal element in addition to the observed known elements. If this element exists, it needs to be part of the broad physicalism.) In addition, let’s assume that the principle of relativity holds, i.e., all physical laws in force should be the same in different frames of reference, provided these frames of reference agree about all the results of their measurements. Since we have accepted that consciousness is physical (in the broad sense), we can obtain a new equivalence principle for consciousness. Let’s assume two agents: Alice, a conscious human being, and a zombie in the form of a complex, artificial cognitive system that delusionally claims to have phenomenal consciousness. Let’s call this artificially intelligent zombie “Artificial Learning Intelligent Conscious Entity,” or ALICE. ALICE is a very sophisticated AI. It has the capacity to receive inputs from the environment, learn, represent, store and retrieve representations, focus on relevant information, and integrate information in such a manner that it can use representations to achieve human-like cognitive capabilities.

ALICE has direct access to perceptual information and to some of its own cognitive contents, a developed concept of self, the capacity to reflect by creating representations of its internal processes and higher-order representations, and can create outputs and behavioral responses (it has a language system and the ability to communicate). In fact, it was created to emulate Alice. It has the same representations, memories, and dynamics as Alice’s cognitive system and as a result, it has the same behavioral responses as Alice. But ALICE is a zombie (we assume) and doesn’t have phenomenal consciousness. On the other hand, conscious Alice will agree with all of zombie ALICE’s phenomenal judgments. After sufficient time to practice, ALICE will be able to produce phenomenal judgments and reports nearly identical to those of Alice. After enough representational manipulation, ALICE can say, e.g., “I see a fresh little madeleine, it looks good and now I want to eat it because it makes me happy.” It can also reflect about the experience it just had and might say something like, “I just had a tasty madeleine cake. It reminded me of my childhood, like Proust. There’s nothing more I can add to describe the taste, it’s ineffable.”

Alice and ALICE will agree about all measurements and observations they can perform, whether it’s a measurement of their behavior and verbalizations, or even an “inner” observation about their own judgments of their experience, thoughts and feelings. They will not find any measurement that differs between them, although Alice is conscious, and ALICE is not. For example, they can use a Boolean operation (with yes/no output) to compare their phenomenal judgments (e.g., do both agree that they see a madeleine and that it’s tasty?). Now, let’s follow in Einstein’s footsteps concerning the equivalence principle between a uniformly accelerated system and a system under a uniform gravitational field. Alice and ALICE are two different observers, and the fact that they obtain the same measurements agrees with the conditions of the relativity principle. Accordingly, because both of them completely agree upon all measurements, they are governed by the same physical laws. More precisely, these two observers are cognitive systems, each with its own cognitive frame of reference. For the same input, both frames of reference agree about the outcome of all their measurements, and thus both currently have the same physical laws in force. As a result, these two systems are equivalent to each other in all physical aspects and we can infer physical laws from one system to the other. According to the naturalistic assumption, phenomenal consciousness is part of physics, so the equivalence between the systems applies also to phenomenal structures. Consequently, we can infer that if Alice has phenomenal properties, then ALICE also must have them. Both Alice and ALICE must have phenomenal consciousness! ALICE cannot be a zombie, like we initially assumed, because their systems are physically equivalent. 
This equivalence makes it impossible for us to speak of the existence of absolute phenomenal properties in the human frame of reference, just as the theory of relativity forbids us to talk of the absolute velocity in a system. For, by knowing that there is phenomenal consciousness in the human frame of reference and by using the broad physicalist premise, we conclude that there are physical laws that enable phenomenal consciousness in the human frame of reference. Because of the equivalence principle, we can infer that the same physical laws will be present also in the supposed “zombie” cognitive system’s frame of reference. The conclusion is that if there is phenomenal consciousness in the human frame of reference, then the “zombie” cognitive system’s frame of reference must also harbor phenomenal consciousness.
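The Boolean comparison of phenomenal judgments mentioned above can be sketched as follows. This is only a toy illustration under heavy assumptions: the two functions are hypothetical stand-ins for the public, measurable judgments of Alice and ALICE, and the stipulation that ALICE emulates Alice is modeled simply by giving both the same input-to-judgment rule. Nothing in the code represents phenomenal consciousness itself; it captures only the premise that no measurement distinguishes the two systems.

```python
def alice_judgment(stimulus):
    """Hypothetical phenomenal judgment reported by the human Alice."""
    return {
        "sees": stimulus,
        "tasty": stimulus == "madeleine",
        "ineffable": True,
    }


def zombie_alice_judgment(stimulus):
    """Hypothetical judgment produced by ALICE, built to emulate Alice."""
    tasty = stimulus == "madeleine"
    return {"sees": stimulus, "tasty": tasty, "ineffable": True}


def all_judgments_agree(agent_a, agent_b, stimuli):
    """Boolean (yes/no) test: do the agents agree on every stimulus?"""
    return all(agent_a(s) == agent_b(s) for s in stimuli)


STIMULI = ["madeleine", "apple", "rain"]   # illustrative inputs
agreement = all_judgments_agree(alice_judgment, zombie_alice_judgment, STIMULI)
print("All public measurements agree:", agreement)
```

If the comparison returns a yes for every available measurement, the premise of the equivalence argument is met: the two cognitive frames of reference cannot be told apart by any measurement they can perform.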

We started from the premise that ALICE is a zombie and concluded that it must have phenomenal consciousness. One of our premises is wrong: either the broad physicalism, the relativistic principle, or the existence of zombies. Most likely the latter is the odd man out, because we can explain these supposed “zombies” using a relativistic, physicalist framework. As a result, although we started from a very broad notion of physicalism and an assumption that the human has phenomenal consciousness and the “zombie” cognitive system does not, the relativity principle forces us to treat phenomenal structures as relativistic. According to the relativity principle, there is no absolute frame of reference; there are only different observers that obtain different measurements. If the observers obtain the same measurements, there cannot be anything else that influences them. There is nothing over and above the observers (no God’s-eye-view), and if they observe that they have phenomenal properties, then they have phenomenal consciousness. Because there is nothing over and above the observer, we can generalize this result even further for every cognitive system that has phenomenal judgments: Any two arbitrary cognitive observers that create phenomenal judgment-representations also have phenomenal consciousness. Zombies cannot exist (assuming their cognitive systems create phenomenal judgments). In other words, we obtain an equivalence between functional consciousness (which creates phenomenal judgments) and phenomenal consciousness. Notice that even if we start from the naturalistic dualism of Chalmers and assume a broader physics including phenomenal elements alongside other aspects in the universe, the relativistic principle still forces this kind of broad physics to have the same consequences, viz., that zombies cannot exist and that there is an equivalence between phenomenal judgement-representations and phenomenal properties. 
(Formally, phenomenal judgement-representations and phenomenal properties are isomorphic. They have the same mathematical form and there is a transformation between them that preserves this form. See section “The Equivalence Principle of Consciousness: Mathematical Description”). As a result, the relativistic principle undermines the dualist approach altogether.

In the next section, we develop the mathematical proof of the argument. The mathematical description elaborates the fine details of the theory and reveals new insights. Notice that a mathematical background is not necessary to understand this section, as every step includes a comprehensive explanation.

The Equivalence Principle of Consciousness: Mathematical Description

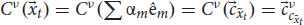

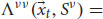

In this section we develop a mathematical description of consciousness as a relativistic phenomenon. In the beginning of the argument, we apply the relativistic principle only to publicly-observable measurements. Then, we show that we can also apply the relativistic principle to phenomenal properties and structures. To that end, we use two different arguments. Then, using the relativistic principle, we prove the equivalence principle between a conscious human agent and a purported “zombie.” Then, we expand that equivalence to all cognitive frames of reference having functional consciousness (i.e., the cause of phenomenal judgments). As a result, we prove that phenomenal judgements are equal to phenomenal properties in the cognitive frame of reference that generates them. Then, we describe the difference between the first-person and third-person perspectives and develop a transformation between them and between measurements of any cognitive frames of reference that have phenomenal consciousness. We show that this transformation preserves the form of the equation regardless of which frame we choose, and thus satisfies the relativity principle. That is, it describes the laws of physics with the same form in all admissible frames of reference.

The Three-Tier Information Processing Model for Cognitive Systems

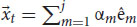

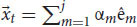

Let’s once more assume two agents, Alice, a conscious human agent, and ALICE, a “zombie” artificial cognitive system (at least, supposedly) with phenomenal judgments. These systems parallel Chalmers’ thought experiment: ALICE is the cognitive duplicate of Alice, but lacks (we suppose) phenomenal consciousness. In order to develop a fully mathematical description, we need to know the exact equations of the cognitive systems in question. Unfortunately, to date there is no complete mathematical description of a human cognitive system, let alone an artificial one that can mimic human cognition. Instead, we use a general, simplified version, a mathematical toy model, to describe a cognitive system that creates phenomenal judgments. We use a three-tier information processing model that divides a cognitive system (S) into three parts: sensation (T), perception (P), and cognition (Dretske, 1978, 2003; Pageler, 2011). Sensation picks up information from the world and transforms it, via transduction, into neural signals for the brain. Later, perceptual states are constructed via coding and representations until a percept is created. The point at which cognitive processing is thought to occur is when a percept is made available to certain operations, such as recognition, recall, learning, or rational inference (Kanizsa, 1985; Pylyshyn, 2003). Cognition has many different operations, but our main interest is the module that specializes in functional consciousness (and that creates phenomenal judgments), which we label C. After recognition, this functional consciousness module needs to integrate the input and emotional information, the state of the system, the reaction of the system to the input, and self-related information in order to create representations of phenomenal judgments. We can summarize this model with the following equation:

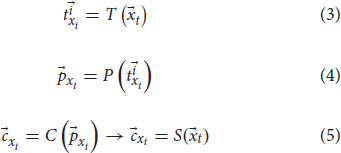

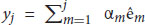

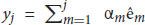

where T is the sensation subsystem, P is the perception subsystem, and C is the functional consciousness subsystem of cognition, which is ultimately responsible for phenomenal judgments (we’re not considering other cognitive subsystems here). Finally, S is the entire cognitive system as a whole. These subsystems form a 3-tuple that makes up the cognitive system in a sequential manner, from T to P to C. The three-tier model yields:

Here, the input comes from the physical space outside the cognitive system and interacts with the cognitive system at time t. The input has physical properties yj (e.g., temperature, position, velocity, etc.; j = 1, 2, 3, … is an index of the properties). These physical properties can be detected by the sensors of the sensation subsystem. For example, the input can be an apple, and the cognitive system interacts with it via a beam of light that reaches its retina at time t. Using photoreceptors in the retina, the sensation subsystem can detect physical properties of the apple, like its color.

T is the sensation subsystem. Its outputs are indexed by i = 1, 2, 3, …, n, one for each sensory module (i.e., one output vector for visual information, another for auditory information, another for interoceptive information, etc.). Using the sensation subsystem, the cognitive system picks up information from the world and transforms it into neural patterns. Each pattern is a different possible state in the state space of the cognitive system. In this state space, every degree of freedom (variable) of the dynamics of the system is represented as an axis of a multidimensional space, and each point is a different state (e.g., the axes can represent firing rate, membrane potential, position, etc.). After a learning period, a subset of these states is formed and is used by the sensation subsystem to encode inputs. This is the state space of the sensation subsystem, and it is part of the state space of the whole cognitive system. The outputs of T are vectors that point to such states in the state space of the sensation subsystem. There is a correspondence between these states and the captured physical properties yj (e.g., a correspondence between the color of an apple and the neural pattern that it causes in the system: light from a red apple activates photoreceptors, which create a neural pattern in which cones sensitive to red frequencies fire more).

P is the perception subsystem. It gets the outputs of the sensation subsystem as inputs and returns the percept of the input. The perception subsystem creates representations. These are gists of important information about the input that the cognitive system can use even when the input is absent. The percept is a representation unifying information about the current input from all sensory modules. To that end, the perception subsystem uses yet another subset of states from the state space. This is the state space of the perception subsystem, and it is part of the state space of the whole cognitive system. The percept is a vector that points to such a state in the state space of the perception subsystem. There is a correspondence between the states, together with the operations that can act on them, and the captured physical properties yj (e.g., one operation on states can be the addition of two states to get a third state in the state space of the perception subsystem. In our toy model, this operation can correspond to the integration of different physical properties. A red, round object, for example, can be a state that is the sum of the red and round states in the state space of the perception subsystem).

C is the functional consciousness subsystem that creates phenomenal judgments (representations that are not phenomenal states themselves but are about phenomenology; see the introduction for details). It gets percepts as inputs from the perception subsystem and returns a phenomenal judgment representation as output. As before, the functional consciousness subsystem uses yet another subset of states from the state space.

as output. As before, the functional consciousness subsystem using yet another subset of states from the state space.  is a vector that points to such a state in the state space of the functional consciousness subsystem. There is a correspondence between the operations that can act on these states in the functional consciousness subsystem state space and between the captured physical properties yj. Combining these equations with eq. 2, the conclusion is that the cognitive system S, which uses the sensation, perception and functional consciousness subsystem sequentially, has a state space that is a combination of the three subsystems state spaces. It gets as input,

is a vector that points to such a state in the state space of the functional consciousness subsystem. There is a correspondence between the operations that can act on these states in the functional consciousness subsystem state space and between the captured physical properties yj. Combining these equations with eq. 2, the conclusion is that the cognitive system S, which uses the sensation, perception and functional consciousness subsystem sequentially, has a state space that is a combination of the three subsystems state spaces. It gets as input,  from the physical space, and returns as output,

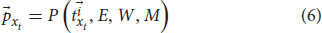

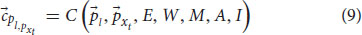

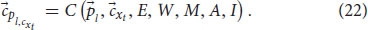

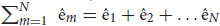

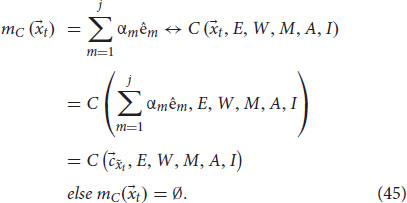

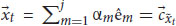

from the physical space, and returns as output,  , the phenomenal judgment representation, from the state space of the cognitive system. To sum, when Alice sees a red apple, for example, her perception subsystem creates a representation of sensory information, a unification of representations for “red,” “round,” and possibly a smell and texture. This integrated representation is her current percept. Later, the functional consciousness subsystem will recognize the object as an “apple” and will create an integrated representation of all relevant information about this red apple. This phenomenal judgment can be, for example, “good-looking, red, and roundish apple,” which can later be reported. Such a cognitive system S needs to integrate information from previous layers and from different modules. It needs an attention module (W) that can change the weights of representations and filter information to focus on relevant information. It needs a long-term memory module (M) to store and retrieve representations. It also needs an emotion evaluation module (E), which assesses information and creates preferences, rewards, and avoidances (e.g., does the input have a positive or negative affective valence?). Other modules could include an affordance module (A) that detects possibilities for action in the environment according to the input, and a self-module (I) that collects self-related information to create a unified representation of the self. Lastly, the cognitive system needs modules to create outputs and behavioral responses. In particular, it has a language module (L) that creates lexical, syntactic, and semantic representations and responses that the functional consciousness subsystem (C) can use. Adding them to Equations 4, 5, we obtain:

, the phenomenal judgment representation, from the state space of the cognitive system. To sum, when Alice sees a red apple, for example, her perception subsystem creates a representation of sensory information, a unification of representations for “red,” “round,” and possibly a smell and texture. This integrated representation is her current percept. Later, the functional consciousness subsystem will recognize the object as an “apple” and will create an integrated representation of all relevant information about this red apple. This phenomenal judgment can be, for example, “good-looking, red, and roundish apple,” which can later be reported. Such a cognitive system S needs to integrate information from previous layers and from different modules. It needs an attention module (W) that can change the weights of representations and filter information to focus on relevant information. It needs a long-term memory module (M) to store and retrieve representations. It also needs an emotion evaluation module (E), which assesses information and creates preferences, rewards, and avoidances (e.g., does the input have a positive or negative affective valence?). Other modules could include an affordance module (A) that detects possibilities for action in the environment according to the input, and a self-module (I) that collects self-related information to create a unified representation of the self. Lastly, the cognitive system needs modules to create outputs and behavioral responses. In particular, it has a language module (L) that creates lexical, syntactic, and semantic representations and responses that the functional consciousness subsystem (C) can use. Adding them to Equations 4, 5, we obtain:

The perception subsystem P receives as variables the outputs of the sensation subsystem and uses the long-term memory module M, the attention module W, and the evaluation module E to create a percept of the input, a unified representation of perception from all sensory modules. This yields:

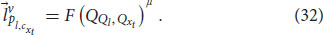

The functional consciousness subsystem C receives as a variable the output of the perception subsystem and uses the long-term memory module M, the attention module W, the evaluation module E, the affordance module A, and the self-module I to create a phenomenal judgment (a complex representation that carries content about phenomenology) concerning the input. We can also add the language module for the cognitive system, so that S can understand and answer questions:

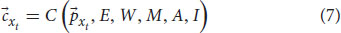

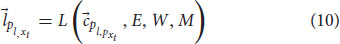

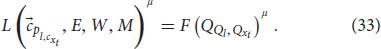

where L is the language module and its input is a low-level processed representation from the perception subsystem (without loss of generality, let us assume it to be auditory information; it can also be a low-level representation from other sensory modules). The language module L gets this low-level representation from the perception subsystem and uses the long-term memory module M, the attention module W, and the evaluation module E to create several representations: semantic representations for words, syntactic representations, and eventually a sentence comprehension representation. As before, the language module uses yet another subset of states from the state space. The sentence comprehension representation is a vector that points to such a state in the state space of the language module. The functional consciousness subsystem C uses the output of the language module to perform its tasks and create phenomenal judgments (answering a question about a red apple, for example):

Now we add the sentence comprehension representation (a question, for example) to Equation 7 as another variable of C. The answer is sent to the language module, this time creating a linguistic response:

where L is the language module and its output is a vector of the linguistic response. As before, the language module uses yet another subset of states from the state space. The linguistic response vector points to such a state in the state space of the language module. This state is a complex neural pattern caused by motor neurons that innervate muscle fibers. The pattern causes contraction patterns in the muscles to create a linguistic response. The response is produced according to the phenomenal judgment representation that captures both the question and the answer of C. For example, suppose that Alice asks ALICE what she sees, and ALICE sees a red apple. The visual information will be transduced and processed in the perception subsystem until a percept is created (equation 6). The language module creates a representation of the question, “what do you see?” (eq. 8), and the functional consciousness subsystem uses the representation of the question and the percept of the apple as variables to build a phenomenal judgment that answers the question (eq. 9). Finally, the language module uses this representation to produce a proper linguistic response, “I see an apple” (eq. 10).
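The flow in the ALICE example can be sketched as a chain of functions, one per subsystem. This is only an illustrative toy under stated assumptions: the function names, the string and set representations, and the `KNOWN_OBJECTS` lookup (a stand-in for the long-term memory module M) are hypothetical, and the attention, evaluation, affordance, and self modules are omitted.

```python
# Hypothetical stand-in for long-term memory M: maps a percept to a label.
KNOWN_OBJECTS = {frozenset({"red", "round"}): "apple"}


def sense(physical_properties):
    """Sensation: map captured physical properties to internal states."""
    return [f"s:{prop}" for prop in physical_properties]


def perceive(sensations):
    """Perception P (eq. 6): unify sensation states into a single percept."""
    return frozenset(s.split(":", 1)[1] for s in sensations)


def comprehend(utterance):
    """Language module L (eq. 8): build a sentence comprehension representation."""
    return utterance.strip().lower().rstrip("?")


def judge(percept, question):
    """Functional consciousness C (eq. 9): judgment holding question and answer."""
    if question == "what do you see":
        return {"question": question, "answer": KNOWN_OBJECTS.get(percept, "object")}
    return {"question": question, "answer": None}


def respond(judgment):
    """Language module L (eq. 10): turn the judgment into a linguistic response."""
    answer = judgment["answer"]
    if answer is None:
        return "I do not know"
    article = "an" if answer[0] in "aeiou" else "a"
    return f"I see {article} {answer}"


def cognitive_system(physical_properties, utterance):
    """Run the subsystems sequentially, from physical input to response."""
    percept = perceive(sense(physical_properties))
    question = comprehend(utterance)
    return respond(judge(percept, question))


print(cognitive_system(["red", "round"], "What do you see?"))  # I see an apple
```

Each function consumes only the output of the previous stage, which mirrors the sequential use of the subsystems described above.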

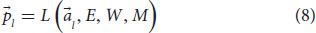

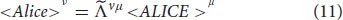

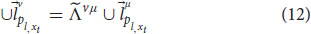

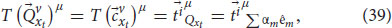

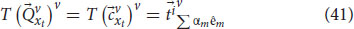

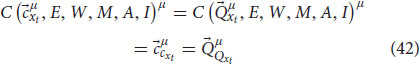

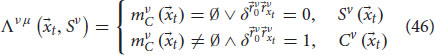

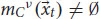

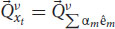

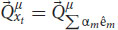

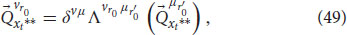

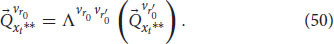

The Equivalence Principle of Consciousness

In parallel with Chalmers’ (1996) assumptions about zombies, Alice and ALICE have equivalent cognitive systems. But Alice, being human, also has phenomenal consciousness (without loss of generality, let’s assume Alice has a quale  about input

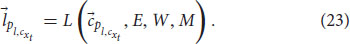

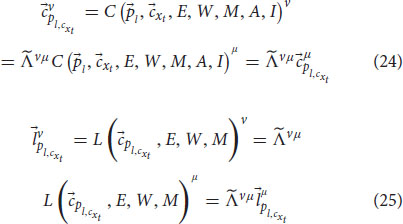

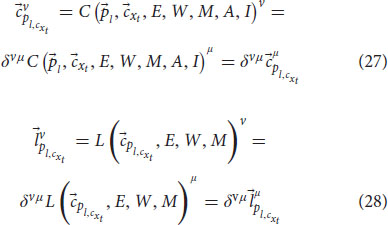

about input  . We will not assume any inner structure for the quale). After we establish the equations for the cognitive system, we can use them to prove the equivalence principle for consciousness. Let’s start with general considerations about cognitive frames of reference. A cognitive frame of reference is determined by the dynamics of a cognitive system (represented by Equations 1–10). Because the equations create and use representations, the representations are an inseparable part of the dynamics of a cognitive system. If two cognitive systems have different dynamics and representations, they are in different cognitive frames of reference. But if they have the same dynamics and representations, then they are in the same cognitive frame of reference. We will denote cognitive frames of reference by μ, and ν.

. We will not assume any inner structure for the quale). After we establish the equations for the cognitive system, we can use them to prove the equivalence principle for consciousness. Let’s start with general considerations about cognitive frames of reference. A cognitive frame of reference is determined by the dynamics of a cognitive system (represented by Equations 1–10). Because the equations create and use representations, the representations are an inseparable part of the dynamics of a cognitive system. If two cognitive systems have different dynamics and representations, they are in different cognitive frames of reference. But if they have the same dynamics and representations, then they are in the same cognitive frame of reference. We will denote cognitive frames of reference by μ, and ν.
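One way to picture the criterion for sharing a cognitive frame of reference is to compare the representations that two systems produce for the same inputs. The sketch below is an operational simplification and an assumption on our part: it models a system's dynamics as a plain function and checks agreement only on a finite set of probe inputs, which is weaker than the identity of dynamics that the text requires. All names are hypothetical.

```python
def same_frame(system_mu, system_nu, probes):
    """Return True if both systems yield the same representation for every probe.

    Agreement on a finite probe set is only a proxy for having the same
    dynamics and representations.
    """
    return all(system_mu(x) == system_nu(x) for x in probes)


def system_a(inputs):
    """Toy dynamics: represent the input as an alphabetically ordered tuple."""
    return tuple(sorted(inputs))


def system_b(inputs):
    """Same dynamics as system_a, implemented separately."""
    return tuple(sorted(inputs))


def system_c(inputs):
    """Different dynamics: the same features, ordered the other way."""
    return tuple(sorted(inputs, reverse=True))


probes = [("red", "round"), ("green", "round")]
print(same_frame(system_a, system_b, probes))  # True
print(same_frame(system_a, system_c, probes))  # False
```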

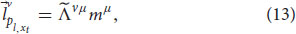

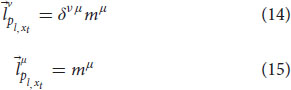

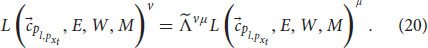

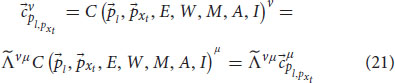

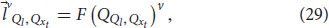

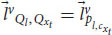

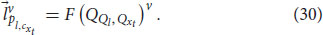

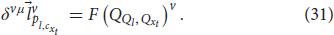

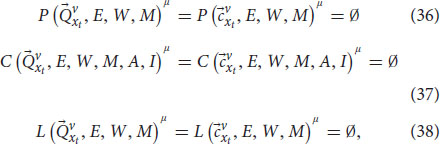

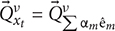

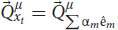

We started with the assumption that ALICE is a zombie. Hence, ALICE will obtain the same behavioral reports (i.e.,  ) and the same phenomenal judgments (