- ¹College of Tourism and Landscape Architecture, Guilin University of Technology, Guilin, China

- ²Endicott College of International Studies, Woosong University, Daejeon, Republic of Korea

- ³Weifang University of Science and Technology, School of Civil Engineering, Weifang, China

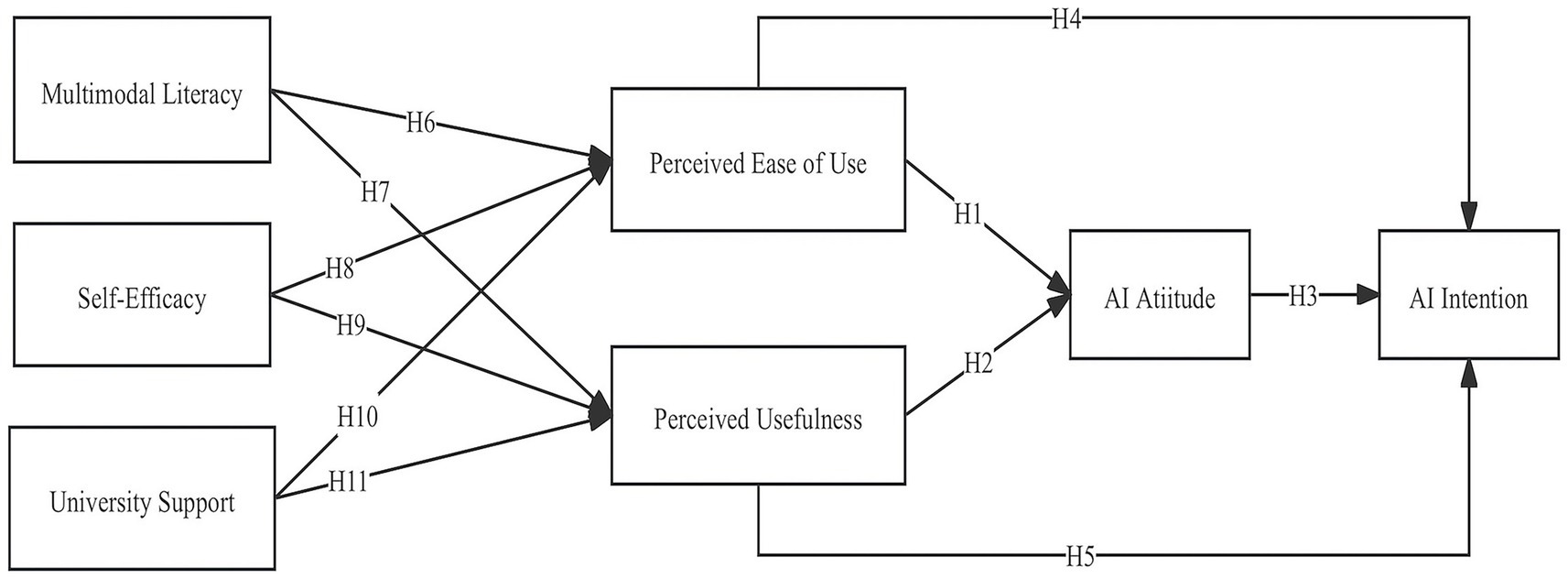

Introduction: Framed within the technology acceptance model, this study examines how multimodal literacy, self-efficacy, and university support affect students’ attitudes toward artificial intelligence tools and the students’ intentions to adopt them.

Methods: Survey data from 498 students were analyzed using PLS-SEM in SmartPLS 4 and SPSS 29.

Results: The findings showed that the perceived usefulness of the AI tools was the strongest predictor of both attitude toward the tools and intention to use them. All three antecedent variables (multimodal literacy, self-efficacy, and university support) significantly impacted perceived usefulness and perceived ease of use.

Discussion: By integrating individual and institutional dimensions into the technology acceptance model, this study offers fresh insight into how AI tools might take root more effectively in higher education.

1 Introduction

Artificial intelligence (AI) is driving a rapid transformation of global higher education (Zouhaier, 2023), changing how students learn and how educators teach and evaluate them (Holmes and Porayska-Pomsta, 2023). Several AI technologies have been shown to support knowledge acquisition and, among other features, to enable personalized learning through tailored educational experiences (Adiguzel et al., 2023). These tools also improve accessibility (Caldarini et al., 2022) and support the decision-making of academic professionals (Farhi et al., 2023). Indeed, with the acceleration of digital transformation throughout the education sector, the use of AI technologies in education has increased significantly (Venkatesh et al., 2003). These smart tools not only increase learner engagement and comprehension but also help students develop the basic skills needed to prepare for a career (Adiguzel et al., 2023). However, Holmes and Porayska-Pomsta (2023) showed that students demonstrate markedly different levels of acceptance of and engagement with AI technologies. Students tend to embrace AI tools for their studies when they find them practical and easy to use for their specific tasks (Ma et al., 2024).

To account for the variability in adoption behaviors, this study employed the technology acceptance model (TAM), which holds that perceived usefulness (PU), the degree to which people consider a technology useful, and perceived ease of use (PEOU), the degree to which they consider it easy to use, are the main determinants of how people evaluate and act toward new technologies (Chen et al., 2024). Multimodal literacy (ML) (Slimi, 2023), self-efficacy (SE) (Fan and Cui, 2024), and university support (US) (Sova et al., 2024) are presumed to affect students’ adoption of AI tools (Yao and Wang, 2024), but the processes by which these relationships occur remain underexplored. That may be because prior TAM-based studies have typically emphasized broad psychological determinants and have paid less attention to the specific learner competencies and institutional contexts relevant to educational technologies. Given the rapidly evolving educational environment and increasing complexity of digital tool adoption, there is a clear need to revisit and extend existing acceptance models to better reflect contemporary higher-education contexts. This research therefore addresses that critical gap by explicitly examining how ML, SE, and US affect students’ acceptance of AI technologies in academic contexts.

This study had two main goals. First, it looked at how these three factors affect how students think about AI tools’ usefulness and ease of use. Then, the study checked how the main TAM factors work together to shape students’ integration of AI tools into their learning activities. To guide the analysis, this research sought to answer the following research questions: (1) To what extent do multimodal literacy, self-efficacy, and university support influence students’ perceived ease of use and perceived usefulness of AI tools in academic contexts? (2) How do perceived ease of use and perceived usefulness shape students’ attitudes toward using AI tools? (3) In what ways do students’ attitudes, perceived ease of use, and perceived usefulness contribute to their behavioral intention to use AI tools in higher-education settings? Because university students are not only primary users but also are potential advocates of AI in education (Fošner, 2024), an in-depth understanding of the drivers of students’ AI adoption behavior can help institutions develop more effective strategies for supporting AI-enhanced learning and promoting meaningful, enduring incorporation of AI technologies within tertiary educational contexts.

2 Literature review

2.1 Key relationships in the technology acceptance model

The technology acceptance model (Davis, 1989) centers around several critical relationships among core constructs—the perceived usefulness and perceived ease of use of AI, and users’ attitudes and behavioral intentions—that have been extensively tested and validated across diverse technological contexts (Venkatesh and Davis, 2000). Despite its robust predictive capabilities, however, the TAM has faced significant theoretical critiques regarding the simplicity and assumed linearity of these foundational relationships (Bagozzi, 2007; Marangunić and Granić, 2015). For example, Tarhini et al. (2017) noted that the TAM typically presupposes stable, rational user behaviors and neglects emotional, situational, and dynamic psychological factors that might mediate or moderate its core relationships. Furthermore, the assumption of universality in these key relationships overlooks potential variability due to cultural influences, disciplinary contexts, and individual differences, thus raising questions about the generalizability of TAM findings in complex educational settings (Teo and Milutinovic, 2015). Given these limitations, a deeper, contextually sensitive exploration of the TAM’s core relationships is necessary to build a full understanding of technology acceptance behaviors, especially in higher education, where a diverse range of contextual and institutional elements shapes learners’ perceptions. Addressing these contextual influences explicitly could significantly extend the TAM’s explanatory power and practical utility in academic environments.

2.1.1 Perceived ease of use (PEOU) and AI attitude

When users perceive that a technology is easy to understand and work with, this factor is called perceived ease of use (Davis, 1989). According to Venkatesh and Bala (2008), users are more likely to accept technologies that are easy to understand and that work well. In this study, perceived ease of AI use reflects how easy students perceive using AI tools to be (Li et al., 2024) and whether the experience is smooth and not stressful (Fošner, 2024). Previous research has found that systems that are easy to use usually lead to users having more positive attitudes toward them (Fagan et al., 2008) because such systems lower the users’ cognitive load (Karahanna and Straub, 1999) and increase people’s satisfaction with using them (Shroff et al., 2011). Hence, this study proposed the following hypothesis:

Hypothesis 1 (H1): Perceived ease of use positively affects students’ attitudes toward AI tools.

2.1.2 Perceived usefulness (PU) and AI attitude

Perceived usefulness means how useful or valuable users think a system is for accomplishing their tasks (Davis, 1989). In this study, students’ perceived usefulness of AI tools was largely characterized by the tools’ potential to boost academic performance (Fagan et al., 2008), simplify learning processes, and enhance task completion (Falebita and Kok, 2024). Several studies have indicated that users’ attitudes toward technology adoption are strongly influenced by perceived usefulness (Aljarrah et al., 2016), and offering additional perceived benefits usually leads to a higher level of acceptance (Toros et al., 2024). When students believe that AI gives clear academic benefits, they are likelier to have a more positive attitude (Farhi et al., 2023) and a stronger intention to use it (Slimi, 2023). Thus, the following hypothesis was proposed:

Hypothesis 2 (H2): Perceived usefulness positively affects students’ attitudes toward AI tools.

2.1.3 AI attitude and AI intention

Artificial intelligence attitudes are the positive and negative opinions that users form about AI tools on the basis of their interactions and experiences with them (Ajzen and Fishbein, 2000). These attitudes encompass the users’ thoughts, emotional responses, and behavioral inclinations during interactions with AI (Slimi, 2023). Attitude is regarded as a basic psychological factor that affects people’s intention to adopt and keep using new technologies (Kim et al., 2024). A positive attitude is seen as an important factor that influences people to accept new technologies (Ayanwale et al., 2024) and prepares users to use these tools in their daily learning and work tasks (Toros et al., 2024). Prior studies also have suggested that individuals with more favorable attitudes toward AI are generally more willing to adopt such tools (Chen et al., 2024; Teo and Zhou, 2014). Therefore, this study proposed the following hypothesis:

Hypothesis 3 (H3): Students’ AI attitudes positively affect their intention to use AI tools.

2.1.4 Perceived ease of use (PEOU) and AI intention

Perceived ease of use (PEOU) affects students’ adoption decisions, particularly concerning emerging AI-based tools and platforms (Venkatesh and Davis, 2000). Users are more inclined to adopt and continue using technologies that have simple interfaces, because the reduced complexity lowers the mental effort required for learning (Yi and Hwang, 2003). Recent research has further underscored PEOU as a critical determinant of user acceptance across educational settings that involve AI-driven tools.

In particular, Rafique et al. (2020) provided empirical support that PEOU significantly predicted students’ behavioral intentions toward mobile library applications, indicating that ease of use was a critical factor influencing user acceptance in digital learning environments. Salar and Hamutoglu (2022) underscored the notion that perceived ease of use plays a predictive role in users’ willingness to engage with cloud computing systems. Similarly, Al-Maroof et al. (2020) demonstrated through an extended technology acceptance model that PEOU directly influenced users’ behavioral intentions to use machine translation tools such as Google Translate, further reinforcing the role of user-perceived ease of use in driving AI adoption. Falebita and Kok (2024) found that PEOU significantly shaped undergraduate students’ intentions in adopting AI technologies, highlighting that user-friendly and intuitive interfaces positively affected acceptance and sustained use. In addition, Al-Adwan et al. (2023) reported a notable link between PEOU and students’ intention to adopt metaverse-based learning platforms, noting variations depending on technological complexity and individual self-efficacy levels.

In that light, given the robust evidence highlighting the impact of PEOU in shaping technology adoption intentions across various studies, particularly in educational settings involving AI tools, this study proposed the following hypothesis:

Hypothesis 4 (H4): Perceived ease of use positively affects students’ intention to use AI tools.

2.1.5 Perceived usefulness (PU) and AI intention

Perceived usefulness is a factor that influences user attitudes and directly affects technology adoption behaviors (Venkatesh and Davis, 2000). When users find a technology to be helpful in achieving their goals, they tend to use it more frequently (Adelana et al., 2024; Das and Madhusudan, 2024). Recognized for its impact on user adoption decisions, perceived usefulness has become a core factor in recent research (Yao and Wang, 2024), especially in environments such as online learning platforms (Al-Maroof et al., 2020), Massive Open Online Courses (MOOCs) (Alraimi et al., 2015), educational technologies, and AI-driven applications (Nikolopoulou et al., 2020). Specifically, Alraimi et al. (2015) found that PU was a strong driver of learners’ continuance intention in MOOCs, and that drive was particularly shaped by perceived openness and institutional reputation. Nikolopoulou et al. (2020) provided empirical evidence supporting PU as a key factor in mobile learning acceptance among university students. Both Yao and Wang (2024) and Al-Maroof et al. (2020) demonstrated that perceived usefulness had a significant and direct influence on pre-service teachers’ intention to adopt AI tools, across contexts such as special education and intelligent tutoring systems in biology. Saravanos et al. (2022) explored the adoption of digital educational technologies within an extended TAM3 framework and confirmed that perceived usefulness directly predicted university students’ behavioral intentions toward adopting emerging technologies, including AI-enhanced learning environments. Their findings highlighted that perceived usefulness was consistently influential across multiple contexts, such as interactive AI-driven learning tools and virtual learning platforms, thereby aligning closely with this study’s emphasis on AI adoption in higher education. Accordingly, this study proposed the following hypothesis:

Hypothesis 5 (H5): Perceived usefulness positively affects students’ intention to use AI tools.

2.2 Multimodal literacy (ML)

In the digital age, relying on reading and writing skills is no longer enough. Multimodal literacy has now become crucial for interpreting, analyzing, and conveying information with the help of AI tools. Emerging from social semiotics (Jewitt, 2008), ML involves the ability to understand and express ideas (Kress, 2010) through various forms of communication (Walsh, 2010). Multimodal literacy combines forms such as text and images along with sounds and gestures to help people understand and interact with content more effectively. As Kress (2010) observed, multimodal literacy enhances meaning by combining the analysis of sounds, images and text, which reflects the diversity of current digital communication (Bulut et al., 2015). The increasing use of visuals and interactive tools (Bezemer and Kress, 2008) in education highlights their influence on customizing the educational experience and transforming how information is learned (De Oliveira, 2009). In addition, multimodal literacy promotes flexibility (Kress and Selander, 2012) by allowing students to adjust their communication methods on a situational basis and encourages creativity in environments (Rowsell and Walsh, 2011). However, assessing multimodal literacy across disciplines presents challenges: Tan et al. (2020) noted that educators often rely on the traditional rubrics aligned with writing, thus undervaluing multimodal performances and lacking consistent, discipline-sensitive criteria.

A growing trend shows that people with greater levels of multimodal literacy are more engaged cognitively (Gee, 2014) and more adept at using digital technologies effectively (Rapp et al., 2007) for understanding intricate information (Jewitt, 2008) and tackling problem-solving tasks (Ng, 2012). Lu et al. (2024) indicated that people who possess stronger multimodal literacy are more open to using platforms with complex visual content. As Mayer (2009) emphasized, those with stronger multimodal skills are usually better at processing and remembering multimedia content. This process often leads students to perceive digital tools as more intuitive and accessible (Kress, 2010), thereby enhancing their perceived ease of use. Moreover, students with high levels of multimodal literacy often report experiencing less cognitive strain when interacting with complex interfaces, and that in turn lowers their psychological barriers to adoption (Paas and Sweller, 2012).

Indeed, multimodal literacy not only helps users navigate systems more easily, it also enhances perceived usefulness by enabling users to derive valuable information from complex multimodal sources (Lu et al., 2024). Because many AI systems present content through visuals, sound, and interactive elements (Seufert, 2018), students with stronger multimodal literacy skills are better able to interpret and apply the content they encounter. In AI-supported learning environments, this ability allows them to apply multimedia resources more effectively (Moreno and Mayer, 2007), thereby enhancing their perceived value of AI tools. Consequently, this study proposed the following hypotheses:

Hypothesis 6 (H6): Multimodal literacy positively affects perceived ease of use of AI tools.

Hypothesis 7 (H7): Multimodal literacy positively affects perceived usefulness of AI tools.

2.3 Self-efficacy (SE)

Bandura (1986) defined self-efficacy as individuals’ confidence in their ability to take the actions necessary to achieve specific goals. Self-efficacy also involves a sense of assurance in handling tasks successfully (Sáinz and Eccles, 2012) and overcoming obstacles (Wang and Wang, 2024). Zimmerman (2000) argued that highly self-efficacious individuals typically demonstrate persistence and show stronger resilience when facing challenges, and they are more committed to problem-solving (Kurbanoglu et al., 2006), traits closely linked to better academic performance (Wang and Li, 2024). Moreover, self-efficacy is not limited to prior experiences; it also influences how individuals approach future obstacles (Huang et al., 2024). Scholars have increasingly emphasized the important function of self-efficacy in academic contexts (Fathi et al., 2021). For instance, Beile and Boote (2004) reported a clear positive link between self-efficacy and how well students do academically (Berweger et al., 2022). Similarly, Wang et al. (2024) showed that students possessing high self-efficacy displayed increased resilience when confronted with difficulties. Other studies have suggested that self-efficacy also promotes students’ cognitive engagement (Girasoli and Hannafin, 2008) and helps them solve problems more efficiently (Hoffman and Spatariu, 2008).

Prior studies have explored the association of self-efficacy with perceived ease of use and perceived usefulness (Marakas et al., 1998). Pan (2020) demonstrated that college students who were more confident in using technology were more open to trying AI tools, Liwanag and Galicia (2023) emphasized AI’s role in promoting self-directed learning, and Masry-Herzallah and Watted (2024) highlighted the positive effect of AI on engaging with online platforms. Confident users are typically less anxious than their counterparts (Igbaria, 1995) because they tend to stay curious and are good at solving problems independently (Holden and Rada, 2011), and they often find AI technologies approachable and helpful, which enables them to unlock the technologies’ full potential (Agarwal and Karahanna, 2000). Conversely, a lack of self-efficacy frequently creates fear and anxiety about failure, which then prevents the individual from adopting a technology (Moos and Azevedo, 2009). The following hypotheses were consequently proposed:

Hypothesis 8 (H8): Self-efficacy positively affects perceived ease of use of AI tools.

Hypothesis 9 (H9): Self-efficacy positively affects perceived usefulness of AI tools.

2.4 University support (US)

When universities offer reliable infrastructure and clear guidelines, students tend to engage more actively and meaningfully with new technologies such as AI (Claro et al., 2018). This support often takes the form of AI training programs (Brown et al., 2010), curricula that reflect technological advances, and a supportive environment that encourages an exploration of these tools (Hammond et al., 2011). Research increasingly has emphasized the positive outcomes brought about by such initiatives (Cho and Yu, 2015). For instance, AI applications have been linked to more personalized learning experiences (D’Mello et al., 2012), greater learner motivation (Deng and Yu, 2023), and improved academic performance (Winkler and Soellner, 2018). In addition to providing infrastructure, universities also shape students’ ethical and effective use of AI through guidance and structured training programs (Denecke et al., 2023; Sova et al., 2024). Jeilani and Abubakar (2025) demonstrated that perceived institutional support significantly enhanced students’ technology self-efficacy and positively influenced their perceptions toward AI-assisted learning. University support was especially beneficial for students who initially exhibited lower self-efficacy, thus highlighting its crucial role in facilitating their adoption of technology.

Embedding AI into courses (Slimi, 2023) can help students understand its relevance to academic achievements (Chiu, 2024) and future careers (Stutz et al., 2023). In addition to offering courses related to AI, universities can also contribute by providing technical assistance, which not only lowers the barriers to using AI tools (Mori, 2000) but also helps students grow into confident and capable users throughout the process (Zhao et al., 2022). Moreover, evidence from De Oliveira (2024) showed that embedding multimodal literacy training within institutionally supported courses significantly enhanced students’ proficiency with multimodal resources, emphasizing that institutional backing directly amplifies the personal competencies that are essential for technology adoption.

In essence, strong university support has been shown to enhance perceived usefulness by emphasizing academic and career benefits while also contributing to improved perceived ease of use (Williams, 2025). Consequently, this study proposed the following hypotheses:

Hypothesis 10 (H10): University support positively affects perceived ease of use of AI tools.

Hypothesis 11 (H11): University support positively affects perceived usefulness of AI tools.

Building on insights from prior TAM research, this study began by developing a conceptual model (see Figure 1).

3 Research methodology

3.1 Research design

Grounded in the TAM, this study investigated students’ adoption of AI tools through a quantitative cross-sectional approach (Bryman, 2016). As Creswell (2009) pointed out, this method effectively supports the analysis of hypothesized relationships. To capture relevant data, a questionnaire was developed to assess the core TAM constructs (PEOU, PU, attitude, intention) and the influencing factors (ML, SE, US). After completion of the data-collection stage, SPSS 29 and SmartPLS 4 were employed to analyze the data. We relied on exploratory factor analysis (EFA) to assess the risk of common method bias (Iacobucci, 2010), and we verified the variance inflation factor (VIF) values. Subsequently, we assessed internal consistency and construct validity through Cronbach’s alpha, composite reliability, and average variance extracted (AVE). To examine discriminant validity, the study followed Hair et al. (2017) and applied both the Fornell–Larcker criterion and the heterotrait-monotrait (HTMT) ratio. In addition, we employed partial least squares structural equation modeling (PLS-SEM) to examine the relationships among the constructs and evaluate the proposed hypotheses, because the technique is well suited to exploratory research and handles latent variables effectively (Sova et al., 2024). The study further evaluated the structural model through key indicators such as path coefficients and R² values (Al-Abdullatif and Alsubaie, 2024). To determine the significance of the hypothesized paths, we performed bootstrapping with 5,000 resamples, following the procedure in Hair (2019).
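The reliability and convergent-validity indices named above can be illustrated with a minimal sketch. It assumes standardized indicator loadings and the conventional formulas (composite reliability as the squared sum of loadings over that quantity plus the summed error variances; AVE as the mean squared loading). The function names and the example values in the usage note are hypothetical and are not taken from the study's data or from SmartPLS output.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per item,
    with respondents in the same order in every list."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sum score
    item_variance = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def composite_reliability(loadings):
    """Composite reliability from standardized loadings (assumed formula)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)
```

For three hypothetical loadings of 0.80 each, `average_variance_extracted` returns 0.64 and `composite_reliability` returns about 0.84, both above the 0.50 and 0.80 cut-offs applied later in the paper.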

3.2 Research instruments

Drawing on established instruments from previous studies to ensure reliability and practical relevance, we developed a questionnaire consisting of nine sections. The first section collected demographic information to provide a clear profile of the research sample (Li et al., 2024). The subsequent seven sections assessed the core constructs of our theoretical model. Each construct was measured using multiple items, rated by participants on a five-point Likert scale ranging from “strongly disagree” to “strongly agree.” Specifically, multimodal literacy was evaluated through 11 items sourced from Bulut et al. (2015), self-efficacy with five items drawn from Falebita and Kok (2024), and university support with three items based on Sykes et al. (2009). For the TAM constructs, we drew four items from Falebita and Kok (2024) to assess perceived ease of use and three items to measure AI attitude. Perceived usefulness and AI intention were assessed with four and three items, respectively, derived from Ayanwale and Molefi (2024). These items have demonstrated high degrees of reliability and validity in prior research, lending support to the measurement instrument adopted for the current study. We also included an open-ended question to gather qualitative insights.

After translating the original English questionnaire into Chinese, two bilingual professionals validated the translation’s clarity. Following Behling and Law (2000), we conducted a back-translation to ensure accuracy and then carefully addressed any discrepancies. Prior to formal distribution of the main questionnaire, a pilot study was carried out with 238 university students to evaluate item clarity. On the basis of participant feedback, minor wording revisions were made.

3.3 Ethical considerations

This study abided by ethical standards, with informed consent obtained after delivery of a clear explanation of the study’s aims and its voluntary nature. Participants’ anonymity was respected, and all data were kept confidential. The findings were used solely for academic purposes and presented truthfully, ensuring both transparency and research integrity.

3.4 Population and sampling

The population was university students across various disciplines and degree levels who had prior experience with AI tools in academic settings. This group was chosen because students are often early adopters of educational technology and are increasingly exposed to AI-driven tools that shape their learning experiences (Selwyn, 2022; Teo, 2011). Participants were recruited from universities nationwide in China, including undergraduates and postgraduates, to capture diversity in educational levels and fields. Data were collected using a non-probability convenience sampling method (Etikan, 2016). Although convenience sampling can limit generalizability, it is widely accepted in exploratory research on technology adoption that requires rapid large-scale data collection (Evans and Mathur, 2005). In total, 498 valid responses were included in the analysis, thereby ensuring sufficient power for statistical testing (Sax et al., 2003).

3.5 Data collection methods

The questionnaire was distributed online through Sojump.com from February to March 2025, and the link was distributed through multiple outlets (e.g., WeChat, QQ, in-class QR codes, and the Xiaohongshu social platform) to reach a broad range of students. To encourage more people to complete the survey, the study included a small monetary incentive in the form of a WeChat “red envelope.” This multi-channel distribution strategy and incentive helped improve the response rate and the diversity of respondents (Dillman et al., 2014). To ensure data quality and prevent duplicate submissions, a set of technical safeguards was implemented before launch. A verification step was enabled to block automated or bot-based responses, and IP-level restrictions were applied to ensure that each participant could only submit the questionnaire and receive the reward once. Distribution of the survey was limited to group-based social media platforms, and Sojump’s backend system did not collect any identifying information about participants (e.g., names, phone numbers, or user IDs), thereby ensuring complete anonymity. Altogether, 669 responses were collected. After data cleaning (removing incomplete submissions and those with all identical answers, extremely short completion times, or failed attention-check questions), 498 responses remained for analysis.
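The screening rules listed above can be sketched as a simple filter. The paper does not report the completion-time cut-off or the data layout, so the `Response` structure and the `MIN_SECONDS` value below are hypothetical placeholders chosen only for illustration.

```python
from dataclasses import dataclass

MIN_SECONDS = 120  # hypothetical cut-off; the study does not state its threshold


@dataclass
class Response:
    answers: list        # Likert answers; None marks an unanswered item
    seconds: float       # total completion time
    attention_ok: bool   # whether the attention-check item was passed


def is_valid(response, min_seconds=MIN_SECONDS):
    """Apply the four exclusion rules described in the text."""
    if any(a is None for a in response.answers):
        return False  # incomplete submission
    if len(set(response.answers)) == 1:
        return False  # all answers identical (straightlining)
    if response.seconds < min_seconds:
        return False  # extremely short completion time
    return response.attention_ok  # failed attention check is excluded


def screen(responses):
    """Keep only the responses that pass every rule."""
    return [r for r in responses if is_valid(r)]


def retention_rate(collected, kept):
    """Percentage of collected responses retained, to one decimal place."""
    return round(100 * kept / collected, 1)
```

With the counts reported in this section, `retention_rate(669, 498)` gives 74.4, meaning that 171 of the 669 submissions were excluded.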

3.6 Pilot study

A pilot study was first conducted with 238 university students to evaluate and refine the questionnaire before the main study. Thomander and Krosnick (2024) emphasized that pilot studies are essential for refining instruments and ensuring their clarity, validity, and reliability. The initial questionnaire contained 51 items (including demographic questions, an attention check item, an open-ended question, and 44 items covering the seven theoretical constructs).

Because some measurement items were adapted to the AI-in-education context, an exploratory factor analysis (EFA) was first carried out to identify the underlying dimensions, verify construct unidimensionality, and refine the scale. This EFA-to-PLS-SEM sequence followed the methodological recommendations outlined by Hair et al. (2022) for scale development and validation.

On the basis of the pilot feedback and analysis, several modifications ultimately were made. Notably, a “Preferring Multimodal Structures” subdimension of the ML scale (five items) was removed due to its subjective nature and misalignment with the TAM’s focus on user behavior. This change improved the conceptual clarity of the ML construct. In addition, any survey items with low factor loadings (below 0.70), high multicollinearity (VIF > 3.3), or poor discriminant validity in the pilot phase were removed to enhance construct quality (Comrey and Lee, 1992; Hair, 2019; Henseler et al., 2015; Kock, 2015; Tabachnick and Fidell, 2013). These revisions resulted in a final instrument of 39 items for the subsequent studies.

3.7 Main study

The finalized questionnaire was deployed as described above, and 498 valid responses were retained for analysis. The comprehensive analysis outlined in section 3.1 was applied to this dataset to assess the conceptual framework. Hoyle (2012) noted that PLS-SEM enables researchers to examine multiple relationships simultaneously while controlling for measurement error. It should be noted that, as with any self-reported survey, there was a risk of common method bias (CMB) or social desirability effects, although statistical procedures indicated these were not significant issues. In addition, the sample had a higher concentration of students from eastern and central China, which is acknowledged in the Limitations section as a consideration with regard to generalizability.
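The logic of the bootstrapping step mentioned in section 3.1 can be sketched with a percentile bootstrap. As a simplifying assumption, the statistic here is a Pearson correlation between two score vectors, standing in for a standardized path coefficient; it is not the SmartPLS procedure, which re-estimates the full PLS path model on every resample. All function names are hypothetical.

```python
import random
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_ci(xs, ys, n_resamples=5000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the correlation.

    Respondents are resampled with replacement, so each pair (x, y)
    stays together. A resample with zero variance would raise
    ZeroDivisionError; that case is not handled in this sketch.
    """
    rng = random.Random(seed)
    n = len(xs)
    estimates = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(pearson_r([xs[i] for i in idx], [ys[i] for i in idx]))
    estimates.sort()
    lower = estimates[round(alpha / 2 * n_resamples)]
    upper = estimates[round((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper
```

Under this approach, a relationship is treated as significant at the chosen level when the interval excludes zero.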

4 Results

4.1 Descriptive statistics

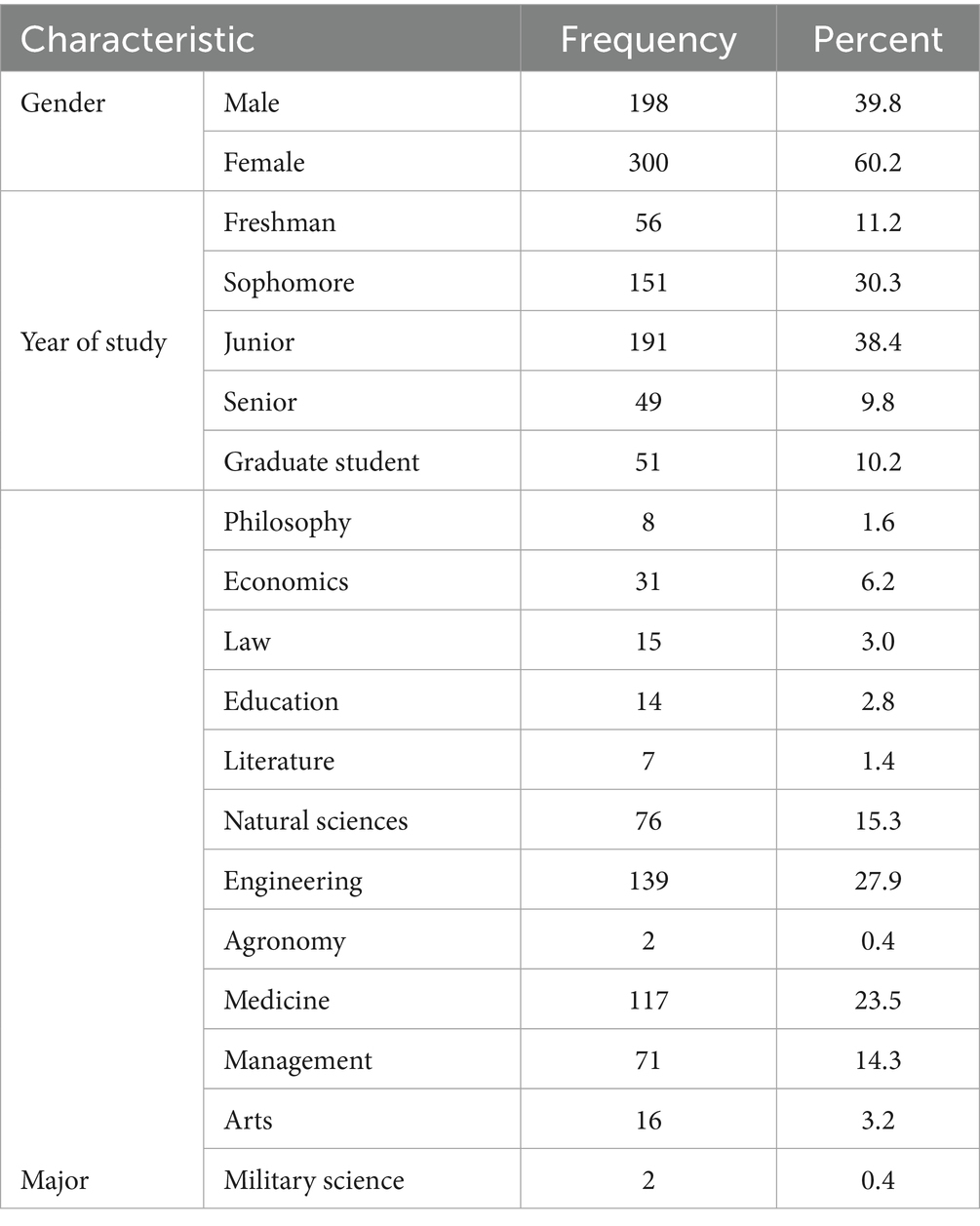

Among the 498 valid participants, 60.2% were female and 39.8% were male. The vast majority (89.8%) were undergraduate students (most in their second or third year), and 10.2% were graduate students. The sample was predominantly drawn from science-technology-engineering-math (STEM)-related majors: Engineering (27.9%) and Medicine (23.5%) together accounted for about half of the respondents, followed by students from the Natural Sciences (15.3%). Non-STEM fields had smaller representations (e.g., Management 14.3%, Economics 6.2%, Arts 3.2%, Law 3.0%, and others below 3%). The demographic characteristics of the sample are shown in detail in Table 1.

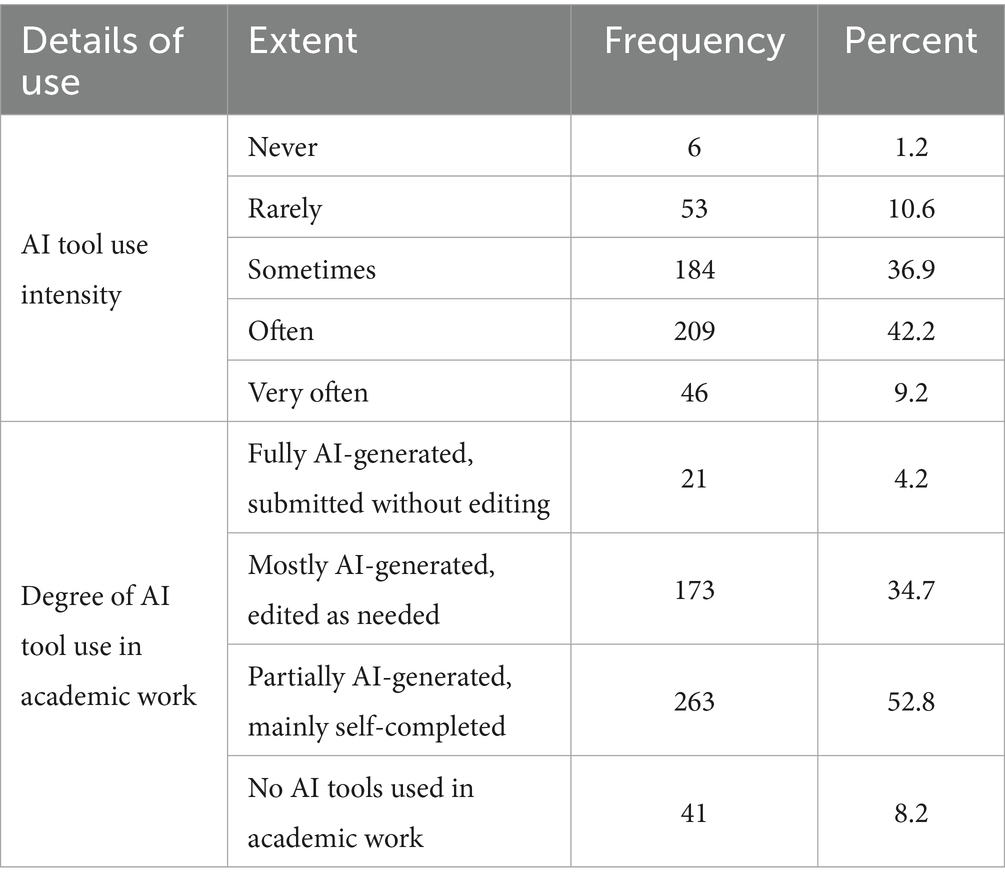

As shown in Table 2, 79.1% of the students stated that they used AI tools at least occasionally, with 42.2% using them often and 36.9% sometimes, while only 1.2% had never used such tools. These data indicated that AI tools are now embedded in students’ digital routines. As Crompton and Burke (2023) noted, this reflects a global trend of widespread AI integration across contexts.

Regarding how extensively the students relied on AI-generated content in their academic work, 52.8% indicated that they used AI for partial content generation while completing the majority of their work independently, 34.7% used AI to generate most content but then edited it themselves, and only 4.2% submitted fully AI-generated content without modification. Meanwhile, 8.2% reported not using AI in their academic work at all. These results suggest that AI use is widespread among students but that the tools serve primarily as aids rather than as substitutes for the students’ own work. The variation in how heavily students rely on AI-generated content underlines the need for institutions to strengthen students’ AI-related competencies through targeted training (Zhai et al., 2021).

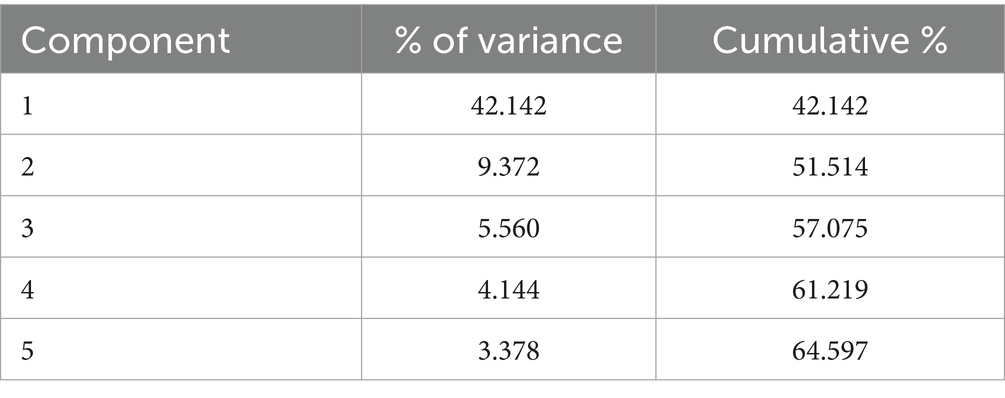

4.2 Common method bias

We followed the guidelines of Podsakoff and Organ (1986) and conducted Harman’s single-factor test to assess the presence of common method bias (CMB). Exploratory factor analysis revealed a multidimensional structure, with the first factor alone accounting for 42.142% of the total explained variance (see Table 3). Because this value falls short of the widely recognized 50% threshold (Hew et al., 2018), we do not consider CMB to be a major concern in the present study.
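The logic of the single-factor check can be sketched in a few lines. The example below is a minimal illustration rather than the SPSS procedure used in the study: it assumes principal-component extraction on the item correlation matrix, finds the largest eigenvalue by power iteration, and runs on synthetic responses. The function names `pearson` and `first_factor_share` and the simulated data are hypothetical.

```python
import math
import random

def pearson(u, v):
    """Pearson correlation between two equally long lists of responses."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    var_u = sum((a - mu) ** 2 for a in u)
    var_v = sum((b - mv) ** 2 for b in v)
    return cov / math.sqrt(var_u * var_v)

def first_factor_share(items, iterations=200):
    """Share of total variance carried by the first unrotated component.

    `items` holds one list of responses per survey item.
    """
    p = len(items)
    corr = [[1.0 if i == j else pearson(items[i], items[j]) for j in range(p)]
            for i in range(p)]
    # Power iteration: repeated multiplication converges to the largest eigenvalue.
    # Assumes the uniform start vector is not orthogonal to the leading eigenvector.
    vec = [1.0 / math.sqrt(p)] * p
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(corr[i][j] * vec[j] for j in range(p)) for i in range(p)]
        eigenvalue = math.sqrt(sum(x * x for x in product))
        vec = [x / eigenvalue for x in product]
    # The eigenvalues of a correlation matrix sum to the number of items.
    return eigenvalue / p

# Synthetic stand-in data (not the study's responses): 8 items sharing one common factor.
rng = random.Random(0)
common = [rng.gauss(0, 1) for _ in range(200)]
items = [[0.6 * c + rng.gauss(0, 1) for c in common] for _ in range(8)]
share = first_factor_share(items)
print(f"first factor share = {share:.3f}; below 0.50 threshold: {share < 0.5}")
```

A share below 0.50 is read, under the threshold cited above, as a sign that no single factor dominates the covariance among the items.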

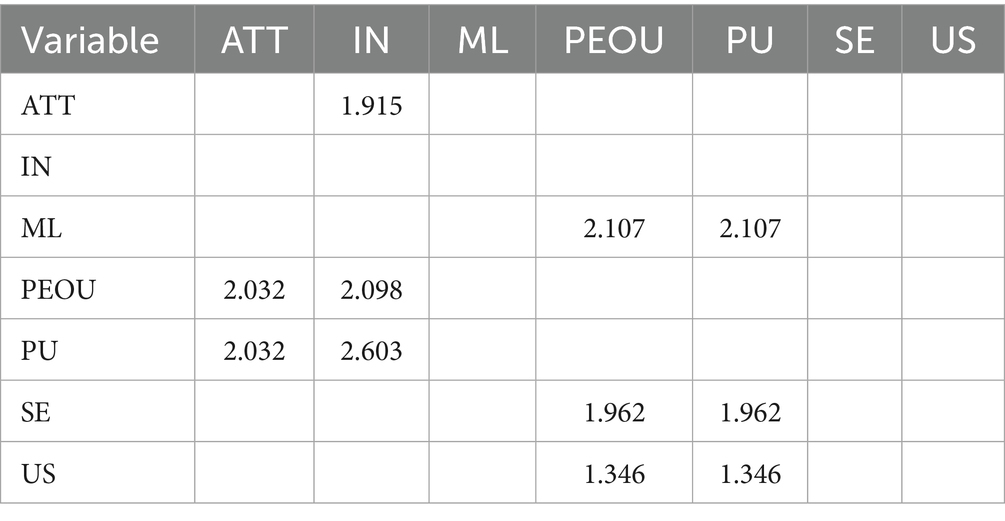

To further validate the absence of CMB, a VIF analysis was performed (Kock, 2015). Table 4 shows that all VIF values remained well within the acceptable range, spanning from 1.346 to 2.107 and staying under the recommended maximum of 3.3. Taken together, the results of Harman’s single-factor test and the VIF analysis indicated that CMB did not materially influence the study’s results.
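To make the VIF threshold easier to interpret, the sketch below uses the standard identity VIF = 1 / (1 − R²), where R² is the variance a variable shares with all of the other variables in the model. Under this identity the cutoff of 3.3 corresponds to roughly 70% shared variance. The helper names are hypothetical, and the snippet only converts the values reported in the text; it does not reproduce the full collinearity procedure.

```python
def vif_from_r2(r_squared):
    """VIF of a variable whose regression on all other variables yields r_squared."""
    return 1.0 / (1.0 - r_squared)

def r2_from_vif(vif):
    """Inverse mapping: the shared variance implied by a given VIF."""
    return 1.0 - 1.0 / vif

THRESHOLD = 3.3  # cutoff cited in the text (Kock, 2015)

# Reported minimum, reported maximum, and the cutoff itself.
for vif in (1.346, 2.107, THRESHOLD):
    print(f"VIF {vif:.3f} -> shared variance of about {r2_from_vif(vif):.3f}")
```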

4.3 Measurement model

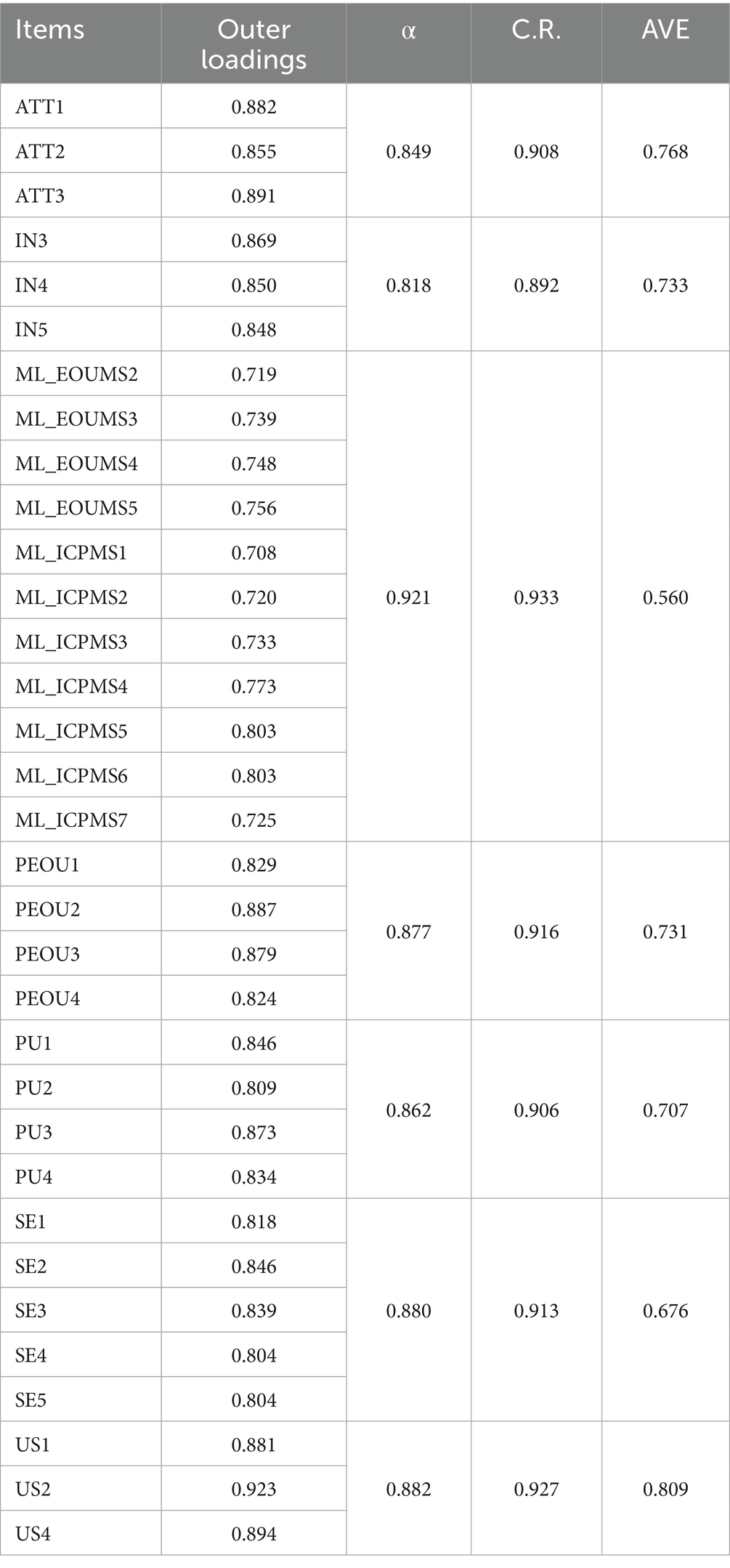

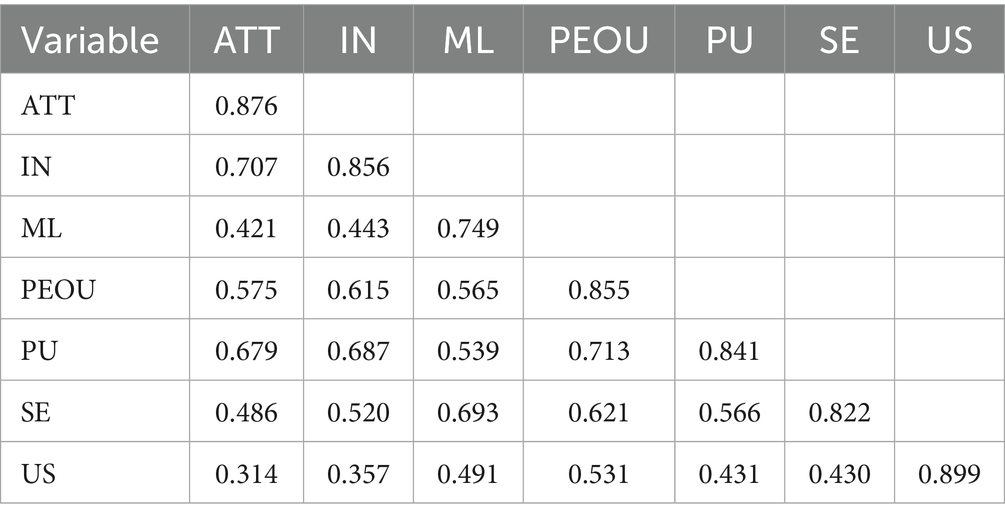

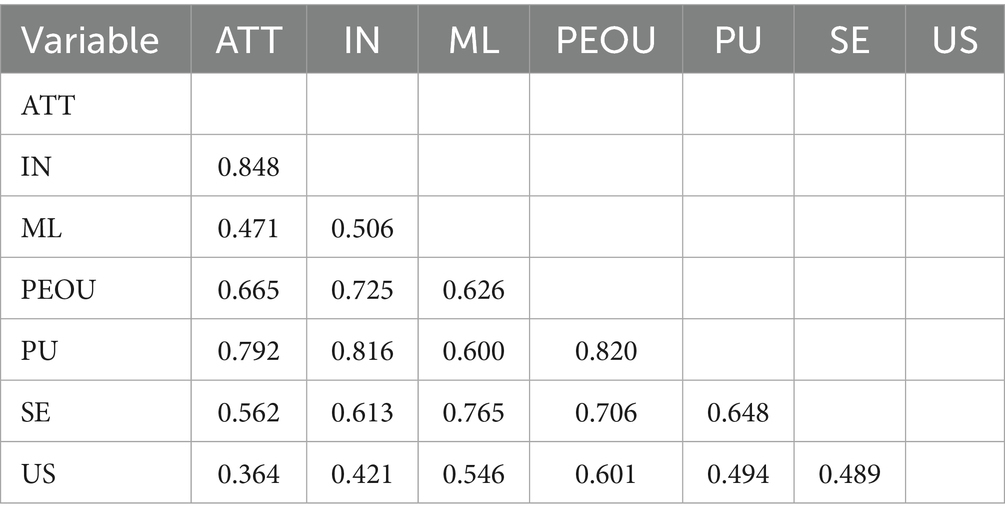

The results of the tests just described confirmed that the measurement model met the criteria for both reliability and validity. Table 5 presents evidence supporting both internal consistency and convergent validity. Cronbach’s alphas and composite reliability values consistently exceeded 0.80 (Fornell and Larcker, 1981), while each construct’s AVE surpassed the 0.50 threshold (Hair et al., 2012) and all indicator loadings exceeded 0.70 with statistical significance (Hair, 2019). Discriminant validity was supported under both the Fornell-Larcker criterion and the HTMT criterion: Table 6 shows that the square root of AVE for each construct exceeded its inter-construct correlations (Sarstedt et al., 2014), while Table 7 reports HTMT ratios that were consistently below 0.85 (Henseler et al., 2015). Together, these findings provide robust support for the model’s convergent and discriminant validity.
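As an illustration of how these criteria are computed, the following sketch derives AVE and composite reliability from standardized indicator loadings and applies the square-root-of-AVE comparison. It is a minimal example using the conventional formulas; the loadings and correlations are hypothetical values chosen for demonstration and are not taken from Tables 5 to 7.

```python
import math

def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)

def composite_reliability(loadings):
    """Composite reliability from standardized loadings."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # indicator error variances
    return total ** 2 / (total ** 2 + error)

def fornell_larcker_ok(ave_value, correlations):
    """True if sqrt(AVE) exceeds every correlation with the other constructs."""
    root = math.sqrt(ave_value)
    return all(root > abs(r) for r in correlations)

# Hypothetical values for one construct (not the study's data).
loadings = [0.78, 0.82, 0.85, 0.80]
correlations = [0.55, 0.62, 0.48]

construct_ave = ave(loadings)
construct_cr = composite_reliability(loadings)
print(f"AVE = {construct_ave:.3f} (threshold 0.50)")
print(f"CR  = {construct_cr:.3f} (threshold 0.80)")
print("Fornell-Larcker satisfied:", fornell_larcker_ok(construct_ave, correlations))
```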

4.4 Structural equation modeling (SEM)

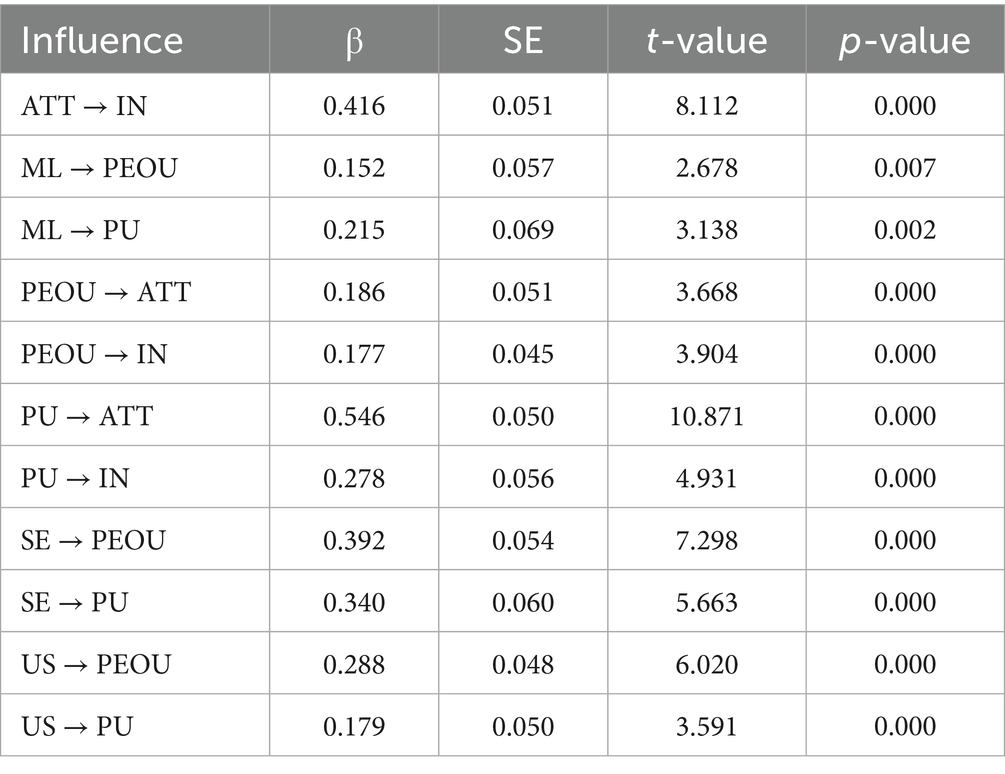

As evidenced by the data in Table 8, the structural model outcomes provided support for all of the hypothesized relationships (H1 through H11), with all paths reaching statistical significance. The students’ attitudes toward AI tools (ATT) markedly influenced their AI usage intention (IN) (β = 0.416, p < 0.001), thus confirming H3. Moreover, PEOU exerted a significant positive effect on the students’ intention to use AI tools (IN) (β = 0.177, p < 0.001), providing empirical support for H4. Likewise, PU directly predicted intention to use AI tools (IN) (β = 0.278, p < 0.001), providing solid empirical support for H5.

The findings further confirmed that PU had a strong positive influence on AI attitude (ATT) (β = 0.546, p < 0.001), thus lending support to H2. Similarly, H1 was supported because the path from PEOU to AI attitude (ATT) also was both significant and positive (β = 0.186, p < 0.001). The data demonstrate that both perceived ease of use and perceived usefulness contributed to students’ attitudes toward AI tools, although the influence of usefulness was the more pronounced of the two.

Multimodal literacy positively influenced both PEOU (β = 0.152, p = 0.007) and PU (β = 0.215, p = 0.002). While the effect sizes were relatively small, the findings nonetheless supported H6 and H7. Similarly, SE substantially affected both PEOU (β = 0.392, p < 0.001) and PU (β = 0.340, p < 0.001), lending support to H8 and H9. This suggests that students who exhibit greater self-efficacy and multimodal literacy are predisposed to regard AI tools as simple to use and helpful, thus reinforcing the role of digital competencies in AI adoption.

Moreover, university support (US) enhanced both PEOU (β = 0.288, p < 0.001) and PU (β = 0.179, p < 0.001), thereby supporting H10 and H11. These results highlight that institutional support significantly influences students’ perceptions of AI tools, particularly regarding the tools’ user-friendliness and perceived value.

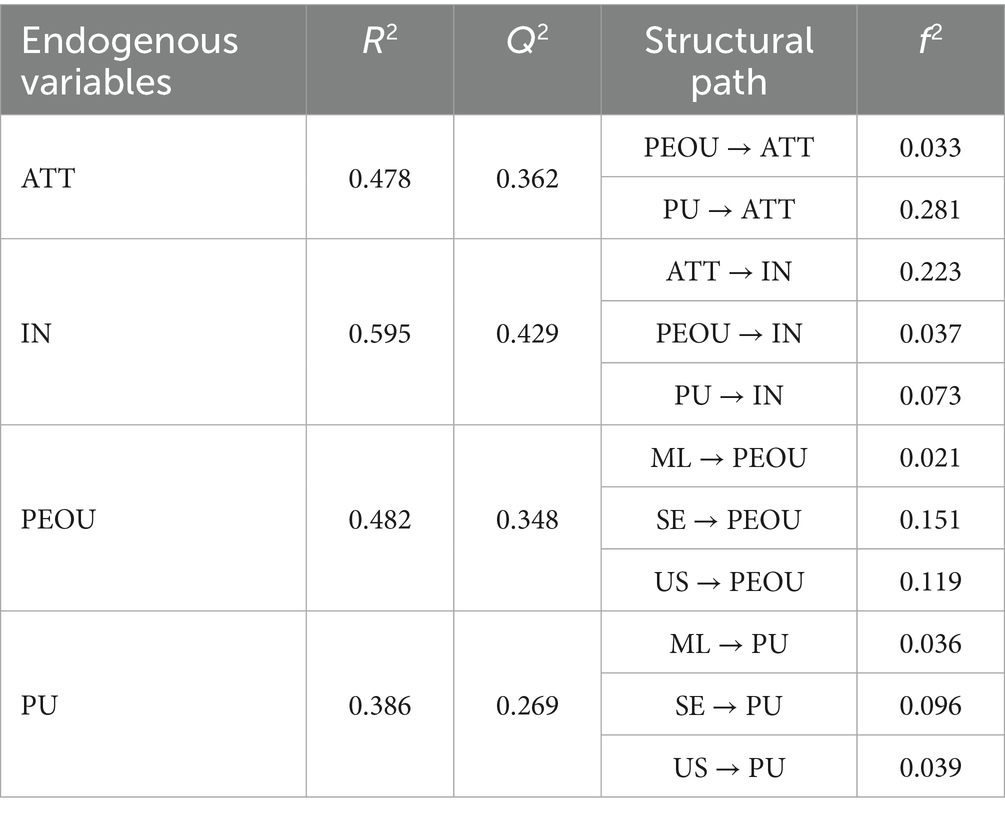

The structural model’s explanatory and predictive power was assessed through the coefficient of determination (R²), effect size (f²), and predictive relevance (Q²), as presented in Table 9. The model explained 47.8% of the variance in Attitude (R² = 0.478), 59.5% in Intention (R² = 0.595), 48.2% in PEOU (R² = 0.482), and 38.6% in PU (R² = 0.386), thus demonstrating moderate to substantial explanatory power (Chin, 2010; Hair et al., 2012). Effect sizes (f²) were assessed in accordance with Cohen’s (1988) guidelines, wherein values of 0.02, 0.15, and 0.35 are construed as small, medium, and large, respectively. The results indicated that all of the exogenous variables had an adequate influence on their respective endogenous constructs. Furthermore, all endogenous constructs showed Q² values greater than zero (ATT = 0.362, IN = 0.429, PEOU = 0.348, PU = 0.269), thus confirming the model’s good predictive relevance (Fornell and Larcker, 1981).
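For readers unfamiliar with the effect-size statistic, the conventional definition is given below. It compares the R² of an endogenous construct when a given predictor is included in the model with the R² obtained when that predictor is omitted. The subscripts "included" and "excluded" are labels introduced here for illustration; the excluded-model R² values are not reported in this section, so no worked numbers are given.

```latex
f^{2} = \frac{R^{2}_{\text{included}} - R^{2}_{\text{excluded}}}{1 - R^{2}_{\text{included}}}
```

Under Cohen’s guidelines cited above, a predictor whose removal lowers R² only slightly yields a value near 0.02 (small), whereas one whose removal lowers R² sharply approaches or exceeds 0.35 (large).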

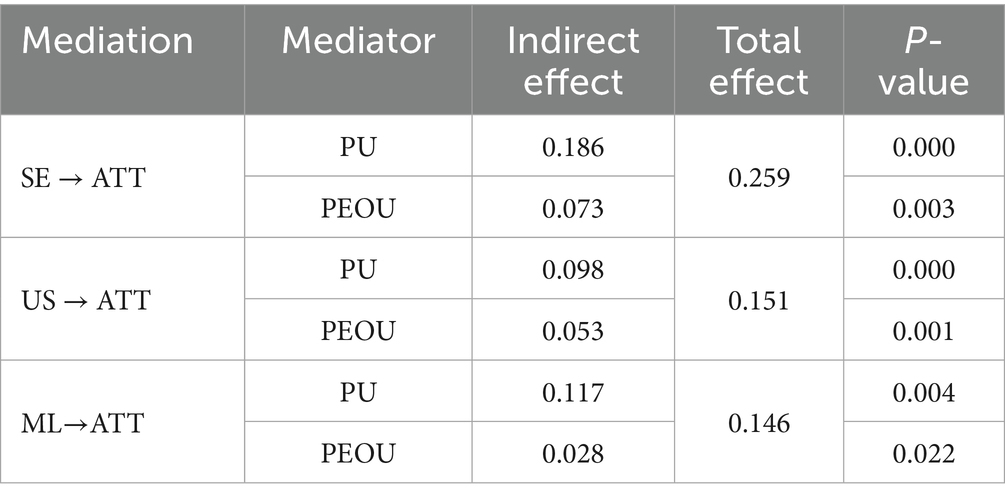

To examine potential mediation effects, bootstrapping was performed following the model specifications provided by Hair et al. (2017), and the findings are summarized in Table 10. SE, US, and ML each exerted significant indirect effects on attitude (ATT), mediated by both PU and PEOU. All of the indirect effects were significant (p < 0.05), thus affirming PU and PEOU as mediators in the formation of users’ attitude toward AI tools. Because the link from attitude (ATT) toward AI to intention (IN) to use it has been extensively validated in prior theory, only the mediation effects leading to ATT are reported here.
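The idea behind a bootstrapped indirect effect can be sketched as follows. This is a simplified illustration under stated assumptions, not the PLS-SEM routine used in the study: it treats the antecedent, mediator, and outcome as observed scores, estimates the two paths by ordinary least squares, and builds a percentile confidence interval from resampled respondents. All function names, the generating coefficients, and the data are hypothetical.

```python
import random

def _cov(u, v):
    """Sample covariance of two equally long lists."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)

def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a*b for the path x -> m -> y."""
    s_xx, s_mm, s_xm = _cov(x, x), _cov(m, m), _cov(x, m)
    s_my, s_xy = _cov(m, y), _cov(x, y)
    a = s_xm / s_xx                                  # slope of m on x
    # Slope of y on m while controlling for x (two-predictor OLS solution).
    b = (s_my * s_xx - s_xm * s_xy) / (s_mm * s_xx - s_xm ** 2)
    return a * b

def bootstrap_ci(x, m, y, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]   # resample respondents with replacement
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper

# Synthetic stand-in data (not the study's responses), with a built-in mediated path.
data_rng = random.Random(42)
x = [data_rng.gauss(0, 1) for _ in range(498)]
m = [0.4 * xi + data_rng.gauss(0, 1) for xi in x]
y = [0.5 * mi + 0.1 * xi + data_rng.gauss(0, 1) for mi, xi in zip(m, x)]

point = indirect_effect(x, m, y)
low, high = bootstrap_ci(x, m, y)
print(f"indirect effect = {point:.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
```

An interval that excludes zero is the usual basis for calling an indirect effect significant, which mirrors the decision rule applied to the effects summarized in Table 10.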

4.5 Open-ended question insights

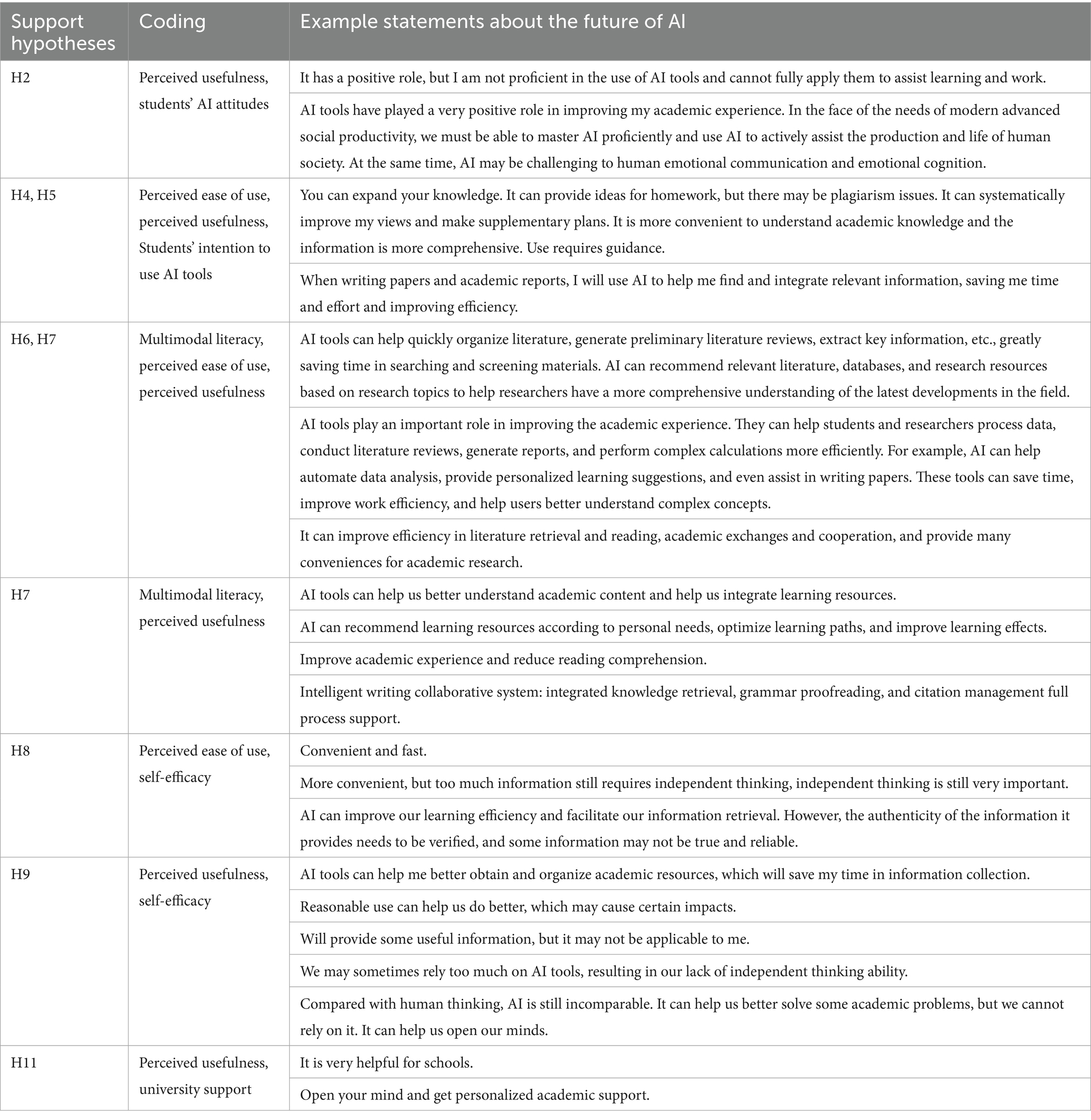

The questionnaire concluded with two open-ended questions: “What is your view on the role of AI tools in enhancing your academic experience? What challenges or limitations do you think may arise from using these tools?”

Many participants provided detailed responses, some of which are presented in Table 11. After the statements had been filtered and coded, the majority were found to be consistent with most of the proposed hypotheses. These qualitative data thus offer additional support for the hypotheses from a different perspective.

5 Discussion and conclusions

5.1 Theoretical implications

This study integrated multimodal literacy, self-efficacy, and university support as determinants of AI tool acceptance for university students, drawing upon the technology acceptance model as the theoretical basis. The findings demonstrate that both multimodal literacy and self-efficacy play vital roles in improving students’ perceived ease of use and perceived usefulness of AI-based technologies. This finding aligns with those from earlier studies concerning digital competencies and technology use (Bulut et al., 2015): students who are adept at processing multimodal information and who possess confidence in their abilities are inclined to find AI tools intuitive and beneficial (Compeau and Higgins, 1995; Falebita and Kok, 2024). In addition, the significant role of university support in shaping both the students’ perceived ease of use and their perceived usefulness of AI highlights the critical impact of institutional and environmental factors. Institutional backing—through resources, training, and encouragement—can bridge the gap between personal ability and adoption of technology, underscoring that even capable students benefit from a supportive infrastructure (Sova et al., 2024). The study also confirmed the main TAM relationships regarding the acceptance of AI tools and emphasized that usefulness appears to be a greater determinant of attitude than ease of use is (Almasri, 2024).

5.2 Managerial implications

This study suggests practical ways to support AI technologies in academic environments. The first essential step is to develop students’ multimodal literacy. Embedding related skills into coursework can help students better interpret digital content (Davis, 1989; Liang et al., 2023), making AI tools seem more accessible and useful (Ng, 2012). The development of self-efficacy stands as another essential factor. Universities can offer training, peer support, and low-stress environments (Bandura, 1997) in which students feel safe exploring AI tools (Ifenthaler and Schweinbenz, 2013). Finally, universities should establish a robust support system that includes technical assistance, ethical AI workshops, and a modern digital infrastructure to ensure the seamless availability of AI tools for learners (Zawacki-Richter et al., 2019). As Chan and Lo (2025) emphasized, such efforts should also address risks associated with data privacy and algorithmic transparency, because insufficient safeguards in AI deployment may lead to unintended human rights consequences.

6 Conclusion

This study examined the key factors that influence students’ adoption of AI tools in higher education and yielded several important insights. First, multimodal literacy, self-efficacy, and university support were all found to positively shape students’ perceptions of AI tools’ ease of use and usefulness. Of the three, self-efficacy and institutional support had relatively stronger effects than multimodal literacy did, highlighting the roles of student confidence and organizational infrastructure in promoting favorable perceptions. Second, perceived ease of use and perceived usefulness were confirmed as important determinants of students’ attitudes toward AI tools, suggesting that their perceptions of functionality and value work in tandem to shape their receptiveness. Finally, students’ behavioral intentions were significantly predicted by the perceived usefulness and ease of use of the AI tools, along with the students’ attitude, with perceived usefulness standing out as the most significant contributor. These findings underscore the fact that although usability and a positive attitude matter, students’ decisions about AI adoption are ultimately anchored in whether they believe AI to be genuinely beneficial to their academic success.

These results also carry important implications for institutions that seek to integrate AI meaningfully into higher education. The findings highlight the value of providing consistent institutional support and of embedding into university curricula digital competencies that will enhance students’ confidence in using technology. At the same time, effective adoption must address the broader ethical and social challenges that accompany increased reliance on AI—such as authorship ambiguity, academic integrity, and the evolving conceptions of student agency (Lo et al., 2025). By proactively responding to these complexities, universities can foster more thoughtful and sustainable engagement with AI while still upholding the core values of higher education in an increasingly digital landscape.

7 Limitations and future research

7.1 Limitations

The study had several limitations that need to be acknowledged. First, respondents came predominantly from central and eastern China, with limited representation from the western regions, and convenience sampling was employed, thus potentially restricting the representativeness and broader applicability of the findings. Second, the study relied exclusively on self-reported measures, so some participants may have given socially desirable responses rather than fully accurate reflections of their true standpoints or behaviors. Last, the cross-sectional design limited the study’s capacity to track changes in students’ views or behaviors over time, thereby constraining the ability to infer causal relationships and capture dynamic shifts in attitudes and technology usage.

7.2 Future research

Future studies should incorporate more geographically diverse samples, particularly from the underrepresented western regions of China, using probability sampling techniques to enhance generalizability of their findings. Combining self-reported data with objective measures or behavioral tracking could also mitigate potential response and social desirability biases. Future studies may wish to adopt longitudinal or experimental designs to track evolving student perceptions, employ mixed-methods approaches for richer insights, and continuously refine measurement tools to align with the rapid evolution of AI technologies. Finally, future research might specifically investigate the educational impact and effective pedagogical integration of generative AI tools, in order to provide concrete recommendations for educational institutions, policymakers, and curriculum designers.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

ZZ: Formal analysis, Methodology, Writing – review & editing, Validation, Writing – original draft, Investigation, Data curation, Conceptualization. QA: Supervision, Writing – review & editing, Formal analysis, Project administration. JL: Data curation, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors would like to express their sincere gratitude to QA for his invaluable guidance and support throughout the research process. Special thanks also go to JL for their generous assistance in data collection, which greatly contributed to the completion of this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. Since the authors’ native language is not English, we used ChatGPT for language polishing.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adelana, O. P., Ayanwale, M. A., and Sanusi, I. T. (2024). Exploring pre-service biology teachers’ intention to teach genetics using an AI intelligent tutoring-based system. Cogent. Educ. 11, 1–26. doi: 10.1080/2331186X.2024.2310976

Adiguzel, T., Kaya, M. H., and Cansu, F. K. (2023). Revolutionizing education with AI: exploring the transformative potential of ChatGPT. Cont. Ed. Technol. 15:ep429. doi: 10.30935/cedtech/13152

Agarwal, R., and Karahanna, E. (2000). Time flies when you’re having fun: cognitive absorption and beliefs about information technology usage. MIS Q. 24, 665–694. doi: 10.2307/3250951

Ajzen, I., and Fishbein, M. (2000). Attitudes and the attitude-behavior relation: reasoned and automatic processes. Eur. Rev. Soc. Psychol. 11, 1–33. doi: 10.1080/14792779943000116

Al-Abdullatif, A. M., and Alsubaie, M. A. (2024). ChatGPT in learning: assessing students’ use intentions through the lens of perceived value and the influence of AI literacy. Behav. Sci. 14:845. doi: 10.3390/bs14090845

Al-Adwan, A. S., Li, N., Al-Adwan, A., Abbasi, G. A., Albelbisi, N. A., and Habibi, A. (2023). Extending the technology acceptance model (TAM) to predict university students’ intentions to use metaverse-based learning platforms. Educ. Inf. Technol. 28, 15381–15413. doi: 10.1007/s10639-023-11816-3

Aljarrah, E., Elrehail, H., and Aababneh, B. (2016). E-voting in Jordan: assessing readiness and developing a system. Comput. Human Behav. 63, 860–867. doi: 10.1016/j.chb.2016.05.076

Al-Maroof, R. S., Salloum, S. A., Alhamadand, A. Q., and Shaalan, K. A. (2020). Understanding an extension technology acceptance model of Google translation: a multi-cultural study in United Arab Emirates. Int. J. Interact. Mob. Technol. 14, 157–178. doi: 10.3991/ijim.v14i03.11110

Almasri, F. (2024). Exploring the impact of artificial intelligence in teaching and learning of science: a systematic review of empirical research. Res. Sci. Educ. 54, 977–997. doi: 10.1007/s11165-024-10176-3

Alraimi, K. M., Zo, H., and Ciganek, A. P. (2015). Understanding the MOOCs continuance: the role of openness and reputation. Comput. Educ. 80, 28–38. doi: 10.1016/j.compedu.2014.08.006

Ayanwale, M. A., Frimpong, E. K., Opesemowo, O. A. G., and Sanusi, I. T. (2024). Exploring factors that support pre-service teachers’ engagement in learning artificial intelligence. J. STEM Educ. Res. 8, 199–229. doi: 10.1007/s41979-024-00121-4

Ayanwale, M. A., and Molefi, R. R. (2024). Exploring intention of undergraduate students to embrace chatbots: from the vantage point of Lesotho. Int. J. Educ. Technol. High. Educ. 21, 1–20. doi: 10.1186/s41239-024-00451-8

Bagozzi, R. P. (2007). The legacy of the technology acceptance model and a proposal for a paradigm shift. J. Assoc. Inf. Syst. 8, 244–254. doi: 10.17705/1jais.00122

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Englewood Cliffs: Prentice-Hall.

Behling, O., and Law, K. (2000). Translating questionnaires and other research instruments. Thousand Oaks: SAGE Publications.

Beile, P. M., and Boote, D. N. (2004). Does the medium matter?: a comparison of a web-based tutorial with face-to-face library instruction on education students’ self-efficacy levels and learning outcomes. Res. Strateg. 20, 57–68. doi: 10.1016/j.resstr.2005.07.002

Berweger, B., Born, S., and Dietrich, J. (2022). Expectancy-value appraisals and achievement emotions in an online learning environment: within- and between-person relationships. Learn. Instr. 77:101546. doi: 10.1016/j.learninstruc.2021.101546

Bezemer, J., and Kress, G. (2008). Writing in multimodal texts: a social semiotic account of designs for learning. Written Commun. 25, 166–195. doi: 10.1177/0741088307313177

Brown, S. A., Dennis, A. R., and Venkatesh, V. (2010). Predicting collaboration technology use: integrating technology adoption and collaboration research. J. Manag. Inf. Syst. 27, 9–54. doi: 10.2753/MIS0742-1222270201

Bulut, B., Ulu, H., and Kan, A. (2015). Multimodal literacy scale: a study of validity and reliability. EJER 15, 45–60. doi: 10.14689/ejer.2015.61.3

Caldarini, G., Jaf, S., and McGarry, K. (2022). A literature survey of recent advances in chatbots. Information 13:41. doi: 10.3390/info13010041

Chan, H. W. H., and Lo, N. P. K. (2025). A study on human rights impact with the advancement of artificial intelligence. J. Posthumanism 52:490. doi: 10.63332/joph.v5i2.490

Chen, D., Liu, W., and Liu, X. (2024). What drives college students to use AI for L2 learning? Modeling the roles of self-efficacy, anxiety, and attitude based on an extended technology acceptance model. Acta Psychol. 249:104442. doi: 10.1016/j.actpsy.2024.104442

Chin, W. W. (2010). “How to write up and report PLS analyses” in Handbook of partial least squares. eds. V. E. Vinzi, W. W. Chin, J. Henseler, and H. Wang (Springer Berlin Heidelberg), 655–690.

Chiu, T. K. F. (2024). Future research recommendations for transforming higher education with generative AI. Comput. Educ. 6:100197. doi: 10.1016/j.caeai.2023.100197

Cho, J., and Yu, H. (2015). Roles of university support for international students in the United States: analysis of a systematic model of university identification, university support, and psychological well-being. J. Stud. Int. Educ. 19, 11–27. doi: 10.1177/1028315314533606

Claro, M., Salinas, A., Cabello-Hutt, T., San Martín, E., Preiss, D. D., Valenzuela, S., et al. (2018). Teaching in a digital environment (TIDE): defining and measuring teachers’ capacity to develop students’ digital information and communication skills. Comput. Educ. 121, 162–174. doi: 10.1016/j.compedu.2018.03.001

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. 2nd Edn. Hillsdale: L. Erlbaum Associates.

Compeau, D. R., and Higgins, C. A. (1995). Computer self-efficacy: development of a measure and initial test. MIS Q. 19, 189–211. doi: 10.2307/249688

Comrey, A. L., and Lee, H. B. (1992). A first course in factor analysis. 2nd Edn. New York: L. Erlbaum Associates.

Creswell, J. W. (2009). Research design: Qualitative, quantitative, and mixed methods approaches. Los Angeles: Canadian Journal of University Continuing Education.

Crompton, H., and Burke, D. (2023). Artificial intelligence in higher education: the state of the field. Int. J. Educ. Technol. High. Educ. 20:22. doi: 10.1186/s41239-023-00392-8

D’Mello, S., Olney, A., Williams, C., and Hays, P. (2012). Gaze tutor: a gaze-reactive intelligent tutoring system. Int. J. Hum.-Comput. Stud. 70, 377–398. doi: 10.1016/j.ijhcs.2012.01.004

Das, S. R., and Madhusudan, J. V. (2024). Perceptions of higher education students towards ChatGPT usage. IJTE 7, 86–106. doi: 10.46328/ijte.5832024

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–339. doi: 10.2307/249008

De Oliveira, J. M. (2009). Technology, literacy and learning: a multimodal approach. J. High. Educ. 80, 356–358. doi: 10.1080/00221546.2009.11779019

De Oliveira, J. M. (2024). Enhancing multimodal literacy through community service learning in higher education. Front. Educ. 9:1448805. doi: 10.3389/feduc.2024.1448805

Denecke, K., Glauser, R., and Reichenpfader, D. (2023). Assessing the potential and risks of AI-based tools in higher education: results from an esurvey and SWOT analysis. Trends High. Educ. 2, 667–688. doi: 10.3390/higheredu2040039

Deng, X., and Yu, Z. (2023). A meta-analysis and systematic review of the effect of chatbot technology use in sustainable education. Sustainability 15:2940. doi: 10.3390/su15042940

Dillman, D. A., Smyth, J. D., and Christian, L. M. (2014). Internet, phone, mail, and mixed mode surveys: The tailored design method. 4th Edn. Hoboken, NJ: John Wiley & Sons.

Etikan, I. (2016). Comparison of convenience sampling and purposive sampling. Am. J. Theor. Appl. Stat. 5:11. doi: 10.11648/j.ajtas.20160501.11

Evans, J. R., and Mathur, A. (2005). The value of online surveys. Internet Res. 15, 195–219. doi: 10.1108/10662240510590360

Fagan, M. H., Neill, S., and Wooldridge, B. R. (2008). Exploring the intention to use computers: an empirical investigation of the role of intrinsic motivation, extrinsic motivation, and perceived ease of use. J. Comput. Inf. Syst. 48, 31–37. doi: 10.1080/08874417.2008.11646019

Falebita, O. S., and Kok, P. J. (2024). Artificial intelligence tools usage: a structural equation modeling of undergraduates’ technological readiness, self-efficacy and attitudes. J. STEM Educ. Res. 8, 257–282. doi: 10.1007/s41979-024-00132-1

Fan, L., and Cui, F. (2024). Mindfulness, self-efficacy, and self-regulation as predictors of psychological well-being in EFL learners. Front. Psychol. 15:1332002. doi: 10.3389/fpsyg.2024.1332002

Farhi, F., Jeljeli, R., Aburezeq, I., Dweikat, F. F., Al-shami, S. A., and Slamene, R. (2023). Analyzing the students’ views, concerns, and perceived ethics about chat GPT usage. Comput. Educ. 5:100180. doi: 10.1016/j.caeai.2023.100180

Fathi, J., Greenier, V., and Derakhshan, A. (2021). Self-efficacy reflection and burnout among Iranian EFL teachers: the mediating role of emotion regulation. Iran. J. Lang. Teach. Res. 9:43. doi: 10.30466/ijltr.2021.121043

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. doi: 10.2307/3151312

Fošner, A. (2024). University students’ attitudes and perceptions towards AI tools: implications for sustainable educational practices. Sustainability 16:8668. doi: 10.3390/su16198668

Girasoli, A. J., and Hannafin, R. D. (2008). Using asynchronous AV communication tools to increase academic self-efficacy. Comput. Educ. 51, 1676–1682. doi: 10.1016/j.compedu.2008.04.005

Hair, J. F., Hult, G. T. M., Ringle, C. M., and Sarstedt, M. (2017). A primer on partial least squares structural equation modeling (PLS-SEM). 2nd Edn. Los Angeles: SAGE.

Hair, J. F., Hult, G. T. M., Ringle, C. M., and Sarstedt, M. (2022). A primer on partial least squares structural equation modeling (PLS-SEM). Int. J. Res. Method Educ. 38, 220–221. doi: 10.1080/1743727X.2015.1005806

Hair, J. F., Sarstedt, M., Ringle, C. M., and Mena, J. A. (2012). An assessment of the use of partial least squares structural equation modeling in marketing research. J. Acad. Mark. Sci. 40, 414–433. doi: 10.1007/s11747-011-0261-6

Hammond, M., Reynolds, L., and Ingram, J. (2011). How and why do student teachers use ICT? Comput. Assist. Learn. 27, 191–203. doi: 10.1111/j.1365-2729.2010.00389.x

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Hew, J.-J., Leong, L.-Y., Tan, G. W.-H., Lee, V.-H., and Ooi, K.-B. (2018). Mobile social tourism shopping: a dual-stage analysis of a multi-mediation model. Tour. Manag. 66, 121–139. doi: 10.1016/j.tourman.2017.10.005

Hoffman, B., and Spatariu, A. (2008). The influence of self-efficacy and metacognitive prompting on math problem-solving efficiency. Contemp. Educ. Psychol. 33, 875–893. doi: 10.1016/j.cedpsych.2007.07.002

Holden, H., and Rada, R. (2011). Understanding the influence of perceived usability and technology self-efficacy on teachers’ technology acceptance. J. Res. Technol. Educ. 43, 343–367. doi: 10.1080/15391523.2011.10782576

Holmes, W., and Porayska-Pomsta, K. (2023). The ethics of artificial intelligence in education: Practices, challenges, and debates. Boston, MA: Taylor & Francis Group.

Huang, F., Wang, Y., and Zhang, H. (2024). Modelling generative AI acceptance, perceived teachers’ enthusiasm and self-efficacy to English as a foreign language learners’ well-being in the digital era. Eur. J. Educ. 59:e12770. doi: 10.1111/ejed.12770

Iacobucci, D. (2010). Structural equations modeling: fit indices, sample size, and advanced topics. J. Consum. Psychol. 20, 90–98. doi: 10.1016/j.jcps.2009.09.003

Ifenthaler, D., and Schweinbenz, V. (2013). The acceptance of tablet-PCs in classroom instruction: the teachers’ perspectives. Comput. Human Behav. 29, 525–534. doi: 10.1016/j.chb.2012.11.004

Igbaria, M. (1995). The effects of self-efficacy on computer usage. Omega 23, 587–605. doi: 10.1016/0305-0483(95)00035-6

Jeilani, A., and Abubakar, S. (2025). Perceived institutional support and its effects on student perceptions of AI learning in higher education: the role of mediating perceived learning outcomes and moderating technology self-efficacy. Front. Educ. 10:1548900. doi: 10.3389/feduc.2025.1548900

Jewitt, C. (2008). Multimodality and literacy in school classrooms. Rev. Res. Educ. 32, 241–267. doi: 10.3102/0091732X07310586

Karahanna, E., and Straub, D. W. (1999). The psychological origins of perceived usefulness and ease-of-use. Inf. Manag. 35, 237–250. doi: 10.1016/S0378-7206(98)00096-2

Kim, B.-J., Kim, M.-J., and Lee, J. (2024). Examining the impact of work overload on cybersecurity behavior: highlighting self-efficacy in the realm of artificial intelligence. Curr. Psychol. 43, 17146–17162. doi: 10.1007/s12144-024-05692-4

Kock, N. (2015). Common method bias in PLS-SEM: a full collinearity assessment approach. Int. J. E Collab. 11, 1–10. doi: 10.4018/ijec.2015100101

Kress, G. (2010). Multimodality: A social semiotic approach to contemporary communication. Abingdon: Routledge.

Kress, G., and Selander, S. (2012). Multimodal design, learning and cultures of recognition. Internet High. Educ. 15, 265–268. doi: 10.1016/j.iheduc.2011.12.003

Li, X., Gao, Z., and Liao, H. (2024). An empirical investigation of college students’ acceptance of translation technologies. PLoS One 19:e0297297. doi: 10.1371/journal.pone.0297297

Liang, J.-C., Hwang, G.-J., Chen, M.-R. A., and Darmawansah, D. (2023). Roles and research foci of artificial intelligence in language education: an integrated bibliographic analysis and systematic review approach. Interact. Learn. Environ. 31, 4270–4296. doi: 10.1080/10494820.2021.1958348

Liwanag, M. F., and Galicia, L. S. (2023). Technological self-efficacy, learning motivation, and self-directed learning of selected senior high school students in a blended learning environment. Tech. Soc. Sci. J. 44, 534–559. doi: 10.47577/tssj.v44i1.8980

Lo, N., Wong, A., and Chan, S. (2025). The impact of generative AI on essay revisions and student engagement. Comput. Educ. Open 9:100249. doi: 10.1016/j.caeo.2025.100249

Lu, Y., Yang, Y., Zhao, Q., Zhang, C., and Li, T. J.-J. (2024). AI assistance for UX: a literature review through human-centered AI. Arxiv [Preprint]. doi: 10.48550/arXiv.2402.06089

Ma, D., Akram, H., and Chen, H. (2024). Artificial intelligence in higher education: a cross-cultural examination of students’ behavioral intentions and attitudes. Int. Rev. Res. Open Distrib. Learn. 25, 134–157. doi: 10.19173/irrodl.v25i3.7703

Marakas, G. M., Yi, M. Y., and Johnson, R. D. (1998). The multilevel and multifaceted character of computer self-efficacy: toward clarification of the construct and an integrative framework for research. Inf. Syst. Res. 9, 126–163. doi: 10.1287/isre.9.2.126

Marangunić, N., and Granić, A. (2015). Technology acceptance model: a literature review from 1986 to 2013. Univ. Access Inf. Soc. 14, 81–95. doi: 10.1007/s10209-014-0348-1

Masry-Herzallah, A., and Watted, A. (2024). Technological self-efficacy and mindfulness ability: key drivers for effective online learning in higher education beyond the COVID-19 era. Cont. Ed. Technol. 16:ep505. doi: 10.30935/cedtech/14336

Mayer, R. E. (2009). “Table I: definitions of key terms” in Multimedia learning (Cambridge: Cambridge University Press).

Moos, D. C., and Azevedo, R. (2009). Learning with computer-based learning environments: a literature review of computer self-efficacy. Rev. Educ. Res. 79, 576–600. doi: 10.3102/0034654308326083

Moreno, R., and Mayer, R. (2007). Interactive multimodal learning environments: special issue on interactive learning environments: contemporary issues and trends. Educ. Psychol. Rev. 19, 309–326. doi: 10.1007/s10648-007-9047-2

Mori, S. C. (2000). Addressing the mental health concerns of international students. J. Counsel. Dev. 78, 137–144. doi: 10.1002/j.1556-6676.2000.tb02571.x

Ng, W. (2012). Can we teach digital natives digital literacy? Comput. Educ. 59, 1065–1078. doi: 10.1016/j.compedu.2012.04.016

Nikolopoulou, K., Gialamas, V., and Lavidas, K. (2020). Acceptance of mobile phone by university students for their studies: an investigation applying UTAUT2 model. Educ. Inf. Technol. 25, 4139–4155. doi: 10.1007/s10639-020-10157-9

Paas, F., and Sweller, J. (2012). An evolutionary upgrade of cognitive load theory: using the human motor system and collaboration to support the learning of complex cognitive tasks. Educ. Psychol. Rev. 24, 27–45. doi: 10.1007/s10648-011-9179-2

Pan, X. (2020). Technology acceptance, technological self-efficacy, and attitude toward technology-based self-directed learning: learning motivation as a mediator. Front. Psychol. 11:564294. doi: 10.3389/fpsyg.2020.564294

Podsakoff, P. M., and Organ, D. W. (1986). Self-reports in organizational research: problems and prospects. J. Manage. 12, 531–544. doi: 10.1177/014920638601200408

Rafique, H., Almagrabi, A. O., Shamim, A., Anwar, F., and Bashir, A. K. (2020). Investigating the acceptance of mobile library applications with an extended technology acceptance model (TAM). Comput. Educ. 145:103732. doi: 10.1016/j.compedu.2019.103732

Rapp, D. N., Broek, P. V. D., McMaster, K. L., Kendeou, P., and Espin, C. A. (2007). Higher-order comprehension processes in struggling readers: a perspective for research and intervention. Sci. Stud. Read. 11, 289–312. doi: 10.1080/10888430701530417

Rowsell, J., and Walsh, M. (2011). Rethinking literacy education in new times: multimodality, multiliteracies, & new literacies. Brock Educ. J. 21:236. doi: 10.26522/brocked.v21i1.236

Sáinz, M., and Eccles, J. (2012). Self-concept of computer and math ability: gender implications across time and within ICT studies. J. Vocat. Behav. 80, 486–499. doi: 10.1016/j.jvb.2011.08.005

Salar, H. C., and Hamutoglu, N. B. (2022). The role of perceived ease of use and perceived usefulness on personality traits among adults. Open Praxis 14, 133–147. doi: 10.55982/openpraxis.14.2.142

Saravanos, A., Zervoudakis, S., and Zheng, D. (2022). Extending the technology acceptance model 3 to incorporate the phenomenon of warm-glow. Information 13:429. doi: 10.3390/info13090429

Sarstedt, M., Ringle, C. M., Smith, D., Reams, R., and Hair, J. F. (2014). Partial least squares structural equation modeling (PLS-SEM): a useful tool for family business researchers. J. Fam. Bus. Strategy 5, 105–115. doi: 10.1016/j.jfbs.2014.01.002

Sax, L. J., Gilmartin, S. K., and Bryant, A. N. (2003). Assessing response rates and nonresponse bias in web and paper surveys. Res. High. Educ. 44, 409–432. doi: 10.1023/A:1024232915870

Selwyn, N. (2022). The future of AI and education: some cautionary notes. Eur. J. Educ. 57, 620–631. doi: 10.1111/ejed.12532

Serap Kurbanoglu, S., Akkoyunlu, B., and Umay, A. (2006). Developing the information literacy self-efficacy scale. J. Doc. 62, 730–743. doi: 10.1108/00220410610714949

Seufert, T. (2018). The interplay between self-regulation in learning and cognitive load. Educ. Res. Rev. 24, 116–129. doi: 10.1016/j.edurev.2018.03.004

Shroff, R. H., Deneen, C. C., and Ng, E. M. W. (2011). Analysis of the technology acceptance model in examining students’ behavioural intention to use an e-portfolio system. Australas. J. Educ. Technol. 27, 600–618. doi: 10.14742/ajet.940

Sova, R., Tudor, C., Tartavulea, C. V., and Dieaconescu, R. I. (2024). Artificial intelligence tool adoption in higher education: a structural equation modeling approach to understanding impact factors among economics students. Electronics 13:3632. doi: 10.3390/electronics13183632

Stutz, P., Elixhauser, M., Grubinger-Preiner, J., Linner, V., Reibersdorfer-Adelsberger, E., Traun, C., et al. (2023). Ch(e)atGPT? An anecdotal approach addressing the impact of ChatGPT on teaching and learning GIScience. GI Forum 1, 140–147. doi: 10.1553/giscience2023_01_s140

Sykes, T., Venkatesh, V., and Gosain, S. (2009). Model of acceptance with peer support: a social network perspective to understand employees’ system use. MIS Q. 33:371. doi: 10.2307/20650296

Tan, L., Zammit, K., D’warte, J., and Gearside, A. (2020). Assessing multimodal literacies in practice: a critical review of its implementations in educational settings. Lang. Educ. 34, 97–114. doi: 10.1080/09500782.2019.1708926

Tarhini, A., Hone, K., Liu, X., and Tarhini, T. (2017). Examining the moderating effect of individual-level cultural values on users’ acceptance of E-learning in developing countries: a structural equation modeling of an extended technology acceptance model. Interact. Learn. Environ. 25, 306–328. doi: 10.1080/10494820.2015.1122635

Teo, T. (2011). Factors influencing teachers’ intention to use technology: model development and test. Comput. Educ. 57, 2432–2440. doi: 10.1016/j.compedu.2011.06.008

Teo, T., and Milutinovic, V. (2015). Modelling the intention to use technology for teaching mathematics among pre-service teachers in Serbia. Australas. J. Educ. Technol. 31, 363–380. doi: 10.14742/ajet.1668

Teo, T., and Zhou, M. (2014). Explaining the intention to use technology among university students: a structural equation modeling approach. J. Comput. High. Educ. 26, 124–142. doi: 10.1007/s12528-014-9080-3

Thomander, S. D., and Krosnick, J. A. (2024). “Question and questionnaire design” in The Cambridge handbook of research methods and statistics for the social and behavioral sciences. eds. J. E. Edlund and A. L. Nichols (Cambridge: Cambridge University Press), 52–370.

Toros, E., Asiksoy, G., and Sürücü, L. (2024). Refreshment students’ perceived usefulness and attitudes towards using technology: a moderated mediation model. Humanit. Soc. Sci. Commun. 11:333. doi: 10.1057/s41599-024-02839-3

Venkatesh, V., and Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 39, 273–315. doi: 10.1111/j.1540-5915.2008.00192.x

Venkatesh, V., and Davis, F. D. (2000). A theoretical extension of the technology acceptance model: four longitudinal field studies. Manag. Sci. 46, 186–204. doi: 10.1287/mnsc.46.2.186.11926

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Q. 27:425. doi: 10.2307/30036540

Walsh, M. (2010). Multimodal literacy: what does it mean for classroom practice? Aust. J. Lang. Lit. 33, 211–239. doi: 10.1007/BF03651836

Wang, X., and Li, P. (2024). Assessment of the relationship between music students’ self-efficacy, academic performance and their artificial intelligence readiness. Eur. J. Educ. 59:e12761. doi: 10.1111/ejed.12761

Wang, X., and Wang, S. (2024). Exploring Chinese EFL learners’ engagement with large language models: a self-determination theory perspective. Learn. Motiv. 87:102014. doi: 10.1016/j.lmot.2024.102014

Wang, S., Wang, F., Zhu, Z., Wang, J., Tran, T., and Du, Z. (2024). Artificial intelligence in education: a systematic literature review. Expert Syst. Appl. 252:124167. doi: 10.1016/j.eswa.2024.124167

Williams, A. (2025). Integrating artificial intelligence into higher education assessment. Intersection 6, 128–154. doi: 10.61669/001c.131915

Winkler, R., and Soellner, M. (2018). Unleashing the potential of chatbots in education: a state-of-the-art analysis. Acad. Manage. Proc. 2018:15903. doi: 10.5465/AMBPP.2018.15903abstract

Yao, N., and Wang, Q. (2024). Factors influencing pre-service special education teachers’ intention toward AI in education: digital literacy, teacher self-efficacy, perceived ease of use, and perceived usefulness. Heliyon 10:e34894. doi: 10.1016/j.heliyon.2024.e34894

Yi, M. Y., and Hwang, Y. (2003). Predicting the use of web-based information systems: self-efficacy, enjoyment, learning goal orientation, and the technology acceptance model. Int. J. Hum.-Comput. Stud. 59, 431–449. doi: 10.1016/S1071-5819(03)00114-9

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? Int. J. Educ. Technol. High. Educ. 16:39. doi: 10.1186/s41239-019-0171-0

Zhai, X., Chu, X., Chai, C. S., Jong, M. S. Y., Istenic, A., Spector, M., et al. (2021). A review of artificial intelligence (AI) in education from 2010 to 2020. Hoboken: Wiley.

Zhao, G., Wang, Q., Wu, L., and Dong, Y. (2022). Exploring the structural relationship between university support, students’ technostress, and burnout in technology-enhanced learning. Asia Pac. Educ. Res. 31, 463–473. doi: 10.1007/s40299-021-00588-4

Zimmerman, B. J. (2000). Self-efficacy: an essential motive to learn. Contemp. Educ. Psychol. 25, 82–91. doi: 10.1006/ceps.1999.1016

Keywords: technology acceptance model, multimodal literacy, self-efficacy, university support, structural equation modeling

Citation: Zhao Z, An Q and Liu J (2025) Exploring AI tool adoption in higher education: evidence from a PLS-SEM model integrating multimodal literacy, self-efficacy, and university support. Front. Psychol. 16:1619391. doi: 10.3389/fpsyg.2025.1619391

Edited by: Angelo Rega, Pegaso University, Italy

Reviewed by: Noble Lo, Lancaster University, United Kingdom; Isaac Ogunsakin, Obafemi Awolowo University, Nigeria

Copyright © 2025 Zhao, An and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qi An
