- 1School of Materials Science and Engineering, Nanyang Technological University, Singapore, Singapore

- 2Singapore-HUJ Alliance for Research and Enterprise (SHARE), Singapore, Singapore

- 3The Robert H. Smith Faculty of Agriculture, Food and Environment, The Hebrew University of Jerusalem, Rehovot, Israel

- 4National Institute of Education, Nanyang Technological University, Singapore, Singapore

- 5Nanyang Environment and Water Research Institute (NEWRI), Singapore, Singapore

- 6School of Computer Science and Engineering, The Hebrew University, Jerusalem, Israel

Accurate estimation of leaf nitrogen concentration and shoot dry-weight biomass in leafy vegetables is crucial for crop yield management, stress assessment, and nutrient optimization in precision agriculture. However, obtaining this information often requires access to reliable plant physiological and biophysical data, which typically involves sophisticated equipment, such as high-resolution in-situ sensors and cameras. In contrast, smartphone-based sensing provides a cost-effective, manual alternative for gathering accurate plant data. In this study, we propose an innovative approach to estimate leaf nitrogen concentration and shoot dry-weight biomass by integrating smartphone-based RGB imagery with Light Detection and Ranging (LiDAR) data, using Amaranthus dubius (Chinese spinach) as a case study. Specifically, we derive spectral features from the RGB images and structural features from the LiDAR data to predict these key plant parameters. Furthermore, we investigate how plant traits, modeled using smartphone-data-based indices, respond to varying nitrogen dosing, enabling the identification of the optimal nitrogen dosage to maximize yield in terms of shoot dry-weight biomass and vigor. The performance of crop parameter estimation was evaluated using three regression approaches: support vector regression, random forest regression, and lasso regression. The results demonstrate that combining smartphone RGB imagery with LiDAR data enables accurate estimation of leaf total reduced nitrogen concentration, leaf nitrate concentration, and shoot dry-weight biomass, achieving best-case relative root mean square errors as low as 0.06, 0.15, and 0.05, respectively. This study lays the groundwork for smartphone-based estimation of leaf nitrogen concentration and shoot biomass, supporting accessible precision agriculture practices.

1 Introduction

Nitrogen is a cornerstone mineral nutrient essential for regulating the yield and overall health of crop plants, as it is a key component of plant amino acids, proteins, and nucleic acids. Subtropical leafy vegetable crops, such as spinach, are experiencing a growing global market demand due to their exceptional nutritional profile and relatively affordable price (Morelock and Correll, 2008). These crops respond notably well to nitrogen fertilization, attributed to their efficient nitrogen uptake mechanisms paired with less efficient nitrogen reductive systems (Cantliffe, 1972; Qiu et al., 2021). However, the nitrogen fertilizer use efficiency for leafy vegetable crops typically remains below 50% of the applied amount (Govindasamy et al., 2023; Valenzuela, 2024), reducing profitability and contributing to substantial nitrogen loss to the environment. Such loss leads to increased nitrogen runoff into water bodies and elevated greenhouse gas emissions (Zhao et al., 2012). Balancing crop nitrogen needs with fertilizer application is thus critical for optimizing crop performance, enhancing nutritional quality, protecting the environment, and maximizing returns for farmers. Given nitrogen’s vital role in improving both the nutritional value and the market appeal of leafy vegetables, accurate estimation of leaf nitrogen concentration and shoot dry-weight biomass is essential for determining the specific nitrogen requirements of crop species and, consequently, for optimizing fertilizer application to maximize yield and profitability.

Traditional methods for assessing leaf nitrogen concentration, such as laboratory-based micro Kjeldahl analysis (Bradstreet, 1954), and for determining shoot dry-weight biomass through drying, rely on destructive sampling, making them impractical and costly in terms of time, financial resources, and labor. This necessitates the development of innovative approaches that allow timely, non-destructive estimation of these plant parameters. Smartphone sensing offers a non-invasive method for analyzing crop dynamics, presenting a promising pathway for phenological monitoring to improve crop management and optimize yields (Hufkens et al., 2019). Extensive research has demonstrated a robust relationship between Red-Green-Blue (RGB) imagery and leaf nutrient composition (Gausman, 1985; Lee and Lee, 2013). For example, studies indicate that leaf chlorophyll concentration, which correlates positively with leaf nitrogen levels, provides a reliable indicator of nitrogen concentration (Evans, 1983). The emergence of optical smartphone RGB imaging has thus provided a cost-effective approach for capturing crop traits compared to the use of expensive cameras. The widespread availability and affordability of smartphones have driven the development of methods that use smartphone-based RGB imagery to estimate leaf nitrogen concentration in staple crops, such as rice (Tao et al., 2020) and wheat (Chen et al., 2016). Although RGB imagery can provide estimates of leaf nitrogen by capturing variations in visible reflectance (Evans, 1983; Fan et al., 2022), its limited ability to capture three-dimensional structural information reduces its effectiveness for crop physiological trait estimation. While photogrammetry can offer some structural insights from RGB images, its accuracy depends on image quality, lighting, and scene complexity (Burdziakowski and Bobkowska, 2021). 
In contrast, Light Detection and Ranging (LiDAR) provides direct and highly accurate 3D measurements, making it better suited for estimating structural traits such as vegetation height, canopy span, and biomass (Lefsky et al., 2002). The recent integration of LiDAR sensors into smartphones marks a groundbreaking advancement, enabling the collection of structural plant data, including biomass estimation. Combining smartphone RGB data with LiDAR data offers potential for estimating both biophysical and physiological traits in leafy vegetable crops. For instance, Bar-Sella et al. (2024) successfully estimated leaf transpiration in maize using smartphone RGB imagery and LiDAR data collected with an iPhone 13 Pro Max smartphone.

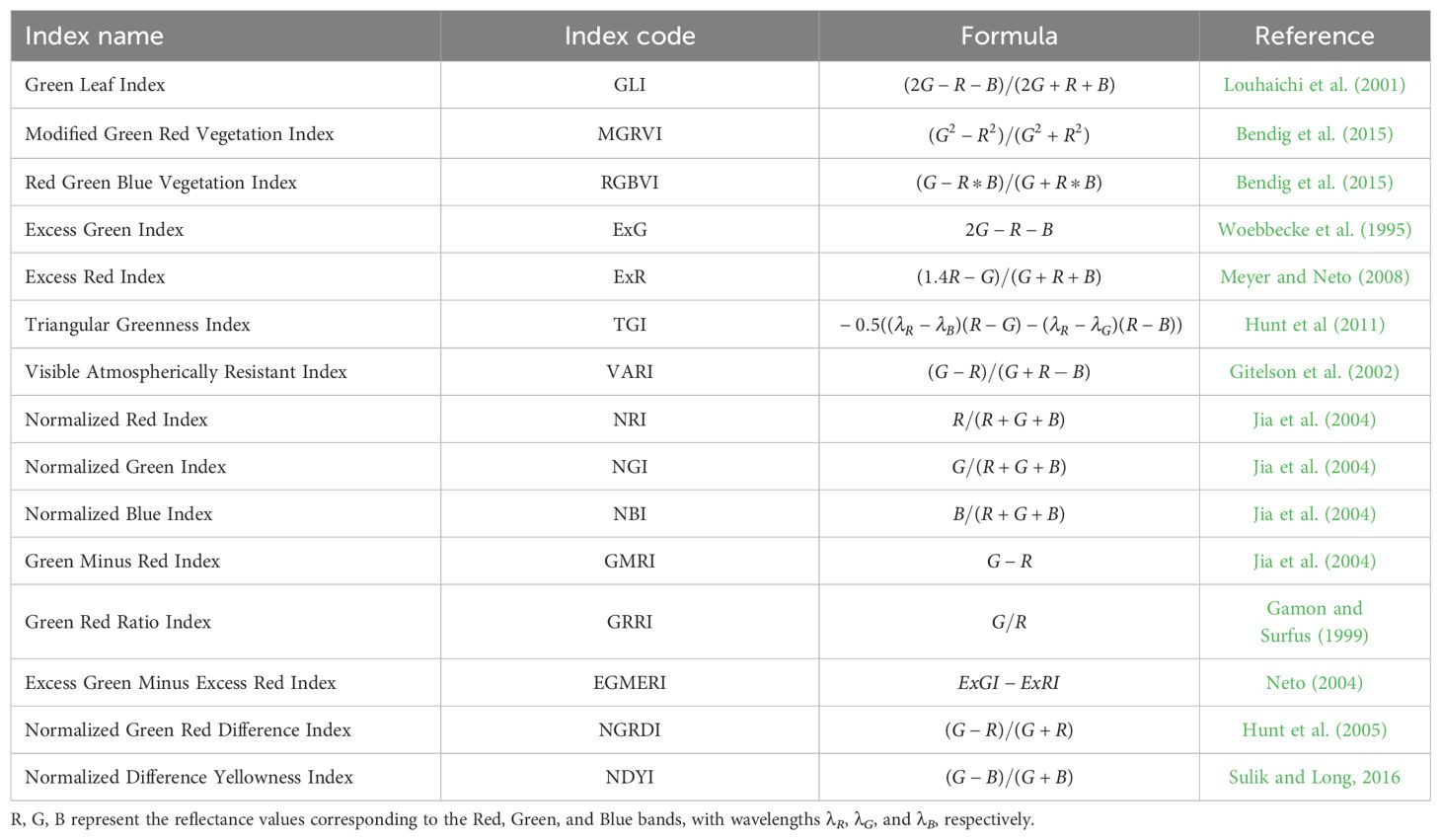

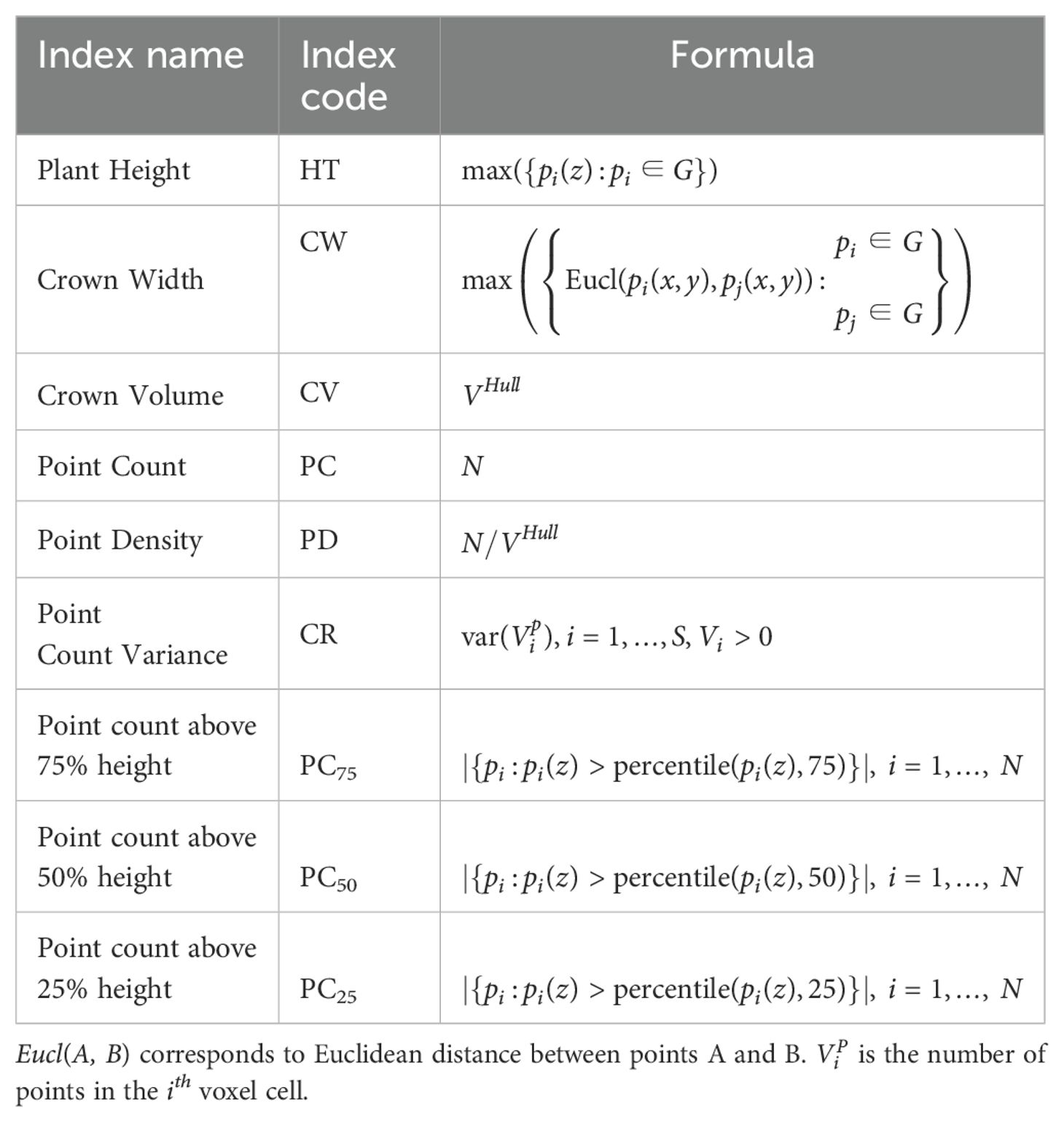

A comprehensive literature review reveals a significant research gap in the integration of relatively low-cost smartphone RGB and LiDAR data for estimating plant traits, including leaf nitrogen concentration and shoot biomass. Moreover, few studies have focused on nitrogen concentration and biomass estimation specifically for high-demand tropical leafy vegetables, such as Chinese spinach. Additionally, many existing studies have been conducted in open-field conditions, which are subject to considerable variation caused by biotic and abiotic factors (Valenzuela, 2024; Chevalier et al., 2025; Hassona et al., 2024), whereas the current study focuses on semi-controlled tropical greenhouse conditions. Specifically, this study aims to achieve three main objectives: (a) to evaluate the effectiveness of combined smartphone-based RGB imagery and LiDAR data for accurately estimating leaf nitrogen concentration and shoot biomass in leafy vegetable crops within a semi-controlled greenhouse setting, (b) to quantify the relevance of the RGB and LiDAR-based vegetation indices in Tables 1 and 2 for estimating leaf nitrogen concentration and shoot dry-weight biomass in Chinese spinach, and (c) to identify the optimal nitrogen dosage for maximizing crop dry-weight biomass yield using integrated smartphone RGB imagery and LiDAR data.

2 Materials

2.1 Study site, experimental setup and crop management

The experiment was conducted in a semi-controlled tropical greenhouse (Oasis Living Lab, Singapore), specifically designed for leafy vegetable production, from September to November 2023. The average temperature inside the greenhouse was 30.6°C, with a minimum of 24.5°C and a maximum of 45.1°C. The average humidity was 76.2%, ranging from a minimum of 43.9% to a maximum of 94.6%.

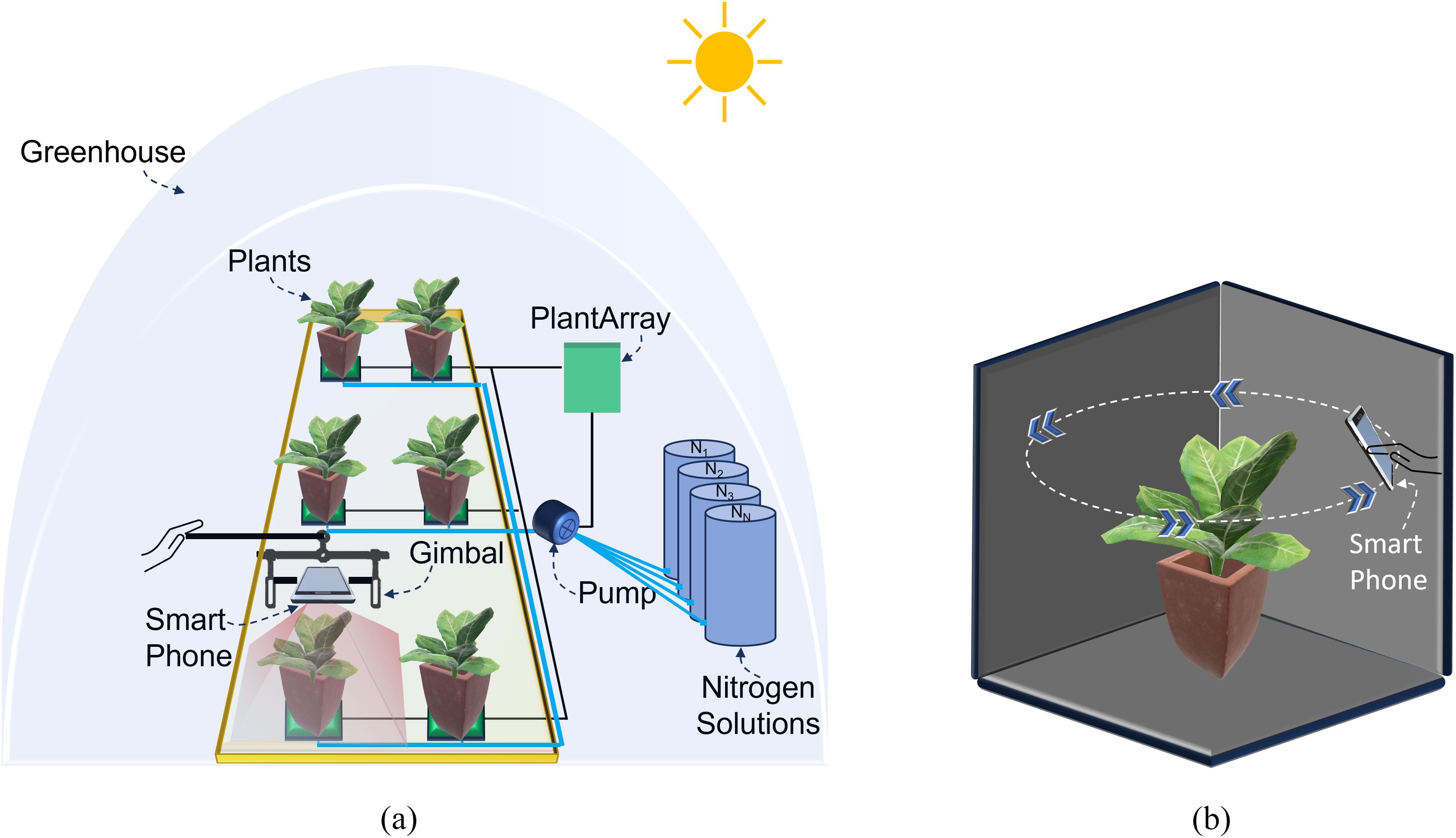

A total of 84 Chinese spinach plants, a species known for its high productivity and nutritional value, were selected for this study (Figure 1). Seeds were initially sown in seedling trays and nurtured for 4 weeks to ensure optimal growth prior to transplantation (Shenhar et al., 2024). At the appropriate growth stage, the plants were transplanted into 4-L pots (Tefen Ltd., Nahsholim, Israel) filled with coco-peat substrate (Riococo Ltd., Sri Lanka) at the experiment’s commencement.

Figure 1. Experimental setup of 84 Chinese spinach plants in the semi-controlled greenhouse at the Oasis Living Lab, Singapore. Nitrogen dosing for each plant is precisely regulated using the PlantArray system, allowing controlled assessment of varying nitrogen treatments (in a randomized block design) on plant growth and performance.

To evaluate the effects of different nitrogen dosages on plant growth and performance, plants were assigned to eight treatment groups, each consisting of 10 or 11 replicates. Each group received a specific nitrogen dosage of 20, 40, 80, 120, 160, 200, 400, or 800 parts per million (ppm), delivered in an aqueous solution. The recipe of the nutrient formulation is provided in Table A1 (Section A.1, Appendix A). Nitrogen fertigation was carefully regulated using precision pumps (Shurflo 5050-1311-H011, Pentair, MN, USA) controlled by the PlantArray system (Plant-Ditech, Yavne, Israel), as outlined in Halperin et al. (2017). Irrigation was applied proportionally based on real-time transpiration measurements from the PlantArray system, with four brief irrigation events per night to maintain soil at field capacity and minimize variations in soil moisture content across treatments (Halperin et al., 2017).

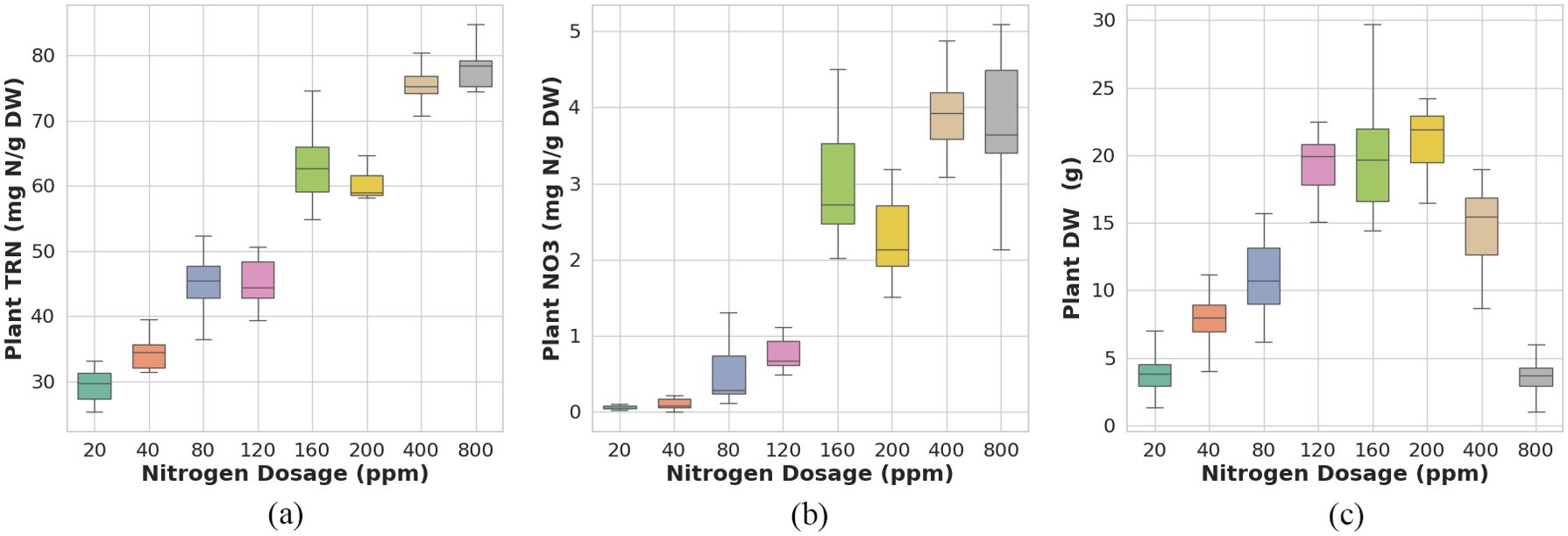

The plants were cultivated for five weeks, from September 11 to October 17, 2023, prior to harvesting. Post-harvest, the shoots were manually separated into leaves and stems. The separated shoots were oven-dried at 60°C for one week to ensure complete desiccation before laboratory analysis. The dried samples were then analyzed for leaf total reduced nitrogen (TRN) concentration, leaf nitrate (NO3) concentration, and shoot dry weight (DW) biomass. Here, TRN refers to the nitrogen content in its reduced forms, including nitrogen incorporated into amino acids, proteins, and other organic compounds; NO3 represents the inorganic nitrogen absorbed from the soil; and DW is an indicator of yield. Leaf NO3 concentration was measured following He et al. (2020). Dried leaf samples (0.01 g) were ground in 10 mL Milli-Q water, shaken at 37°C (200 rpm) for 2 hours, and filtered through a 0.45 µm pore membrane using a vacuum filter. Quantification of NO3 concentration was carried out using a Quikchem 8000 Flow Injection Analyzer (Lachat Instruments Inc., Milwaukee, WI, USA), with absorbance of the resulting magenta complex measured at 520 nm to derive the concentration. TRN concentration was determined by digesting 0.05 g of dried sample with a Kjeldahl tablet in 5 mL of concentrated sulfuric acid at 350°C for 60 minutes, following Allen (1989). After digestion, TRN concentration was quantified using a Kjeltec 8400 analyzer (Foss Tecator AB, Höganäs, Sweden). The TRN concentrations, NO3 concentrations, and DW of individual plants, measured in the lab by destructive analysis of the harvested shoot tissue, are provided in Table A2 in Section A.2. Boxplots of the measured TRN, NO3, and DW values for the different nitrogen dosage plant sets are presented in Figure 2.

Figure 2. Reference data distribution for (a) plant total reduced nitrogen (TRN), (b) nitrate (NO3), and (c) dry weight (DW) in Chinese spinach across different nitrogen dosages.

2.2 Smartphone data collection

In this study, an iPhone 14 Pro Max smartphone (Apple, Cupertino, CA, USA) with a field of view (FoV) of 69°, pixel size (PL) of 1.9 µm, and focal length (F) of 26 mm was mounted on a manually held ZHIYUN Smooth 5 gimbal platform (Guilin Zhishen Information Technology Co., Ltd., Shenzhen, China) to acquire RGB imagery from a nadir perspective (Figure 3a). RGB images for all 84 Chinese spinach plants were captured within a 10-minute timeframe under clear skies, between 11:30 am and 12:00 pm, on the day preceding harvest (Figure 4). The RGB data acquisition height was set to ensure the entire plant crown fell within the camera’s field of view, specifically at 50 ± 5 cm above the highest point of the plant crown. Horizontal alignment and smartphone orientation during data capture were verified using the Pocket Bubble Level iPhone application (ExaMobile S.A., Bielsko-Biała, Poland) to maintain a level device position throughout the imaging process. At the end of data collection, an RGB image of a circular white reference panel (99.9% reflectance) was taken to facilitate the conversion of raw RGB digital numbers to reflectance values.

Figure 3. Schematic representation of the smartphone-based data acquisition setup in the indoor greenhouse. (a) RGB imagery acquisition setup showing the smartphone mounted on a gimbal platform and the PlantArray system managing nitrogen dosing for individual plants. (b) 3D LiDAR point cloud data acquisition setup, where the smartphone is maneuvered around the plant to capture detailed structural data of the plant crown.

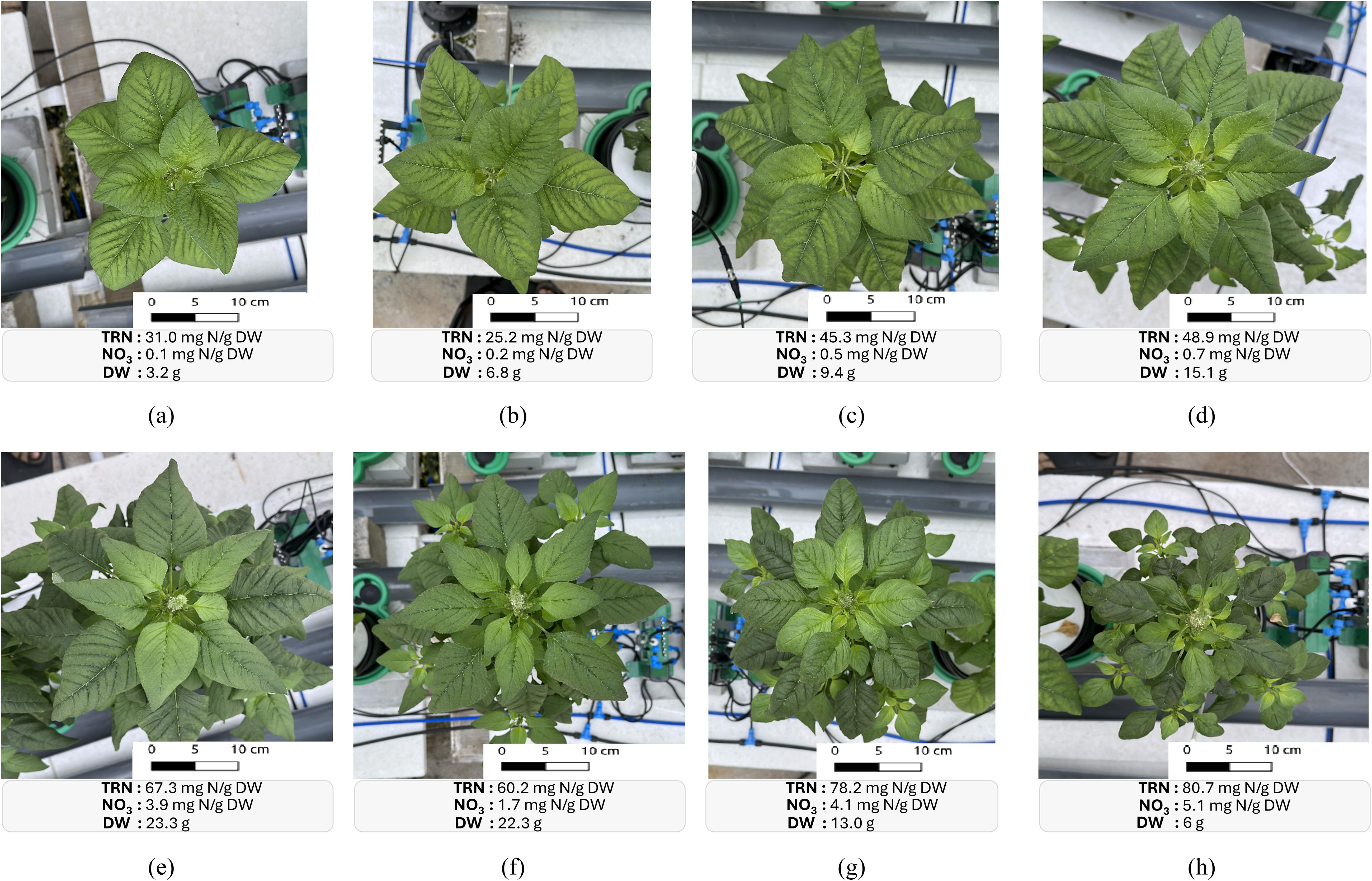

Figure 4. RGB images of Chinese spinach plants treated with varying nitrogen dosages: (a) 20 ppm, (b) 40 ppm, (c) 80 ppm, (d) 120 ppm, (e) 160 ppm, (f) 200 ppm, (g) 400 ppm, and (h) 800 ppm. The lab-based measurements of the leaf total reduced nitrogen (TRN) concentrations, the leaf nitrate (NO3) concentrations, and the shoot dry weight (DW) which is an indicator of yield for individual plants are reported at the bottom of the respective subfigure.

Additionally, 3D LiDAR point cloud data of each of the 84 plants were captured in under 10 seconds using the iPhone 14 Pro Max’s built-in LiDAR sensor, operated via the Polycam 3D scanning application (Polycam, Inc., San Francisco, CA, USA). The LiDAR sensor specifications are consistent across iPhone 13 and iPhone 14 Pro models, allowing them to be used interchangeably for 3D data acquisition. Plants were positioned in direct sunlight against a dark background, 10–20 cm from the pot, to reduce background noise and enhance point cloud quality. The Polycam application’s LiDAR capture mode collected high-density point cloud data (i.e., 5–20 points per cm³). During LiDAR data acquisition, the smartphone was maneuvered in a circular path around and above each plant (Figure 3b) to obtain a comprehensive, detailed representation of the plant’s crown structure. The Polycam application generated the final raw 3D point cloud, with each point containing R, G, and B value attributes, within 20–30 seconds of scan completion and preprocessing initiation. Here, the decision to use LiDAR over photogrammetry was driven by its ability to directly capture accurate 3D structural information, even under challenging conditions, such as variable lighting and plant motion.

3 Methods

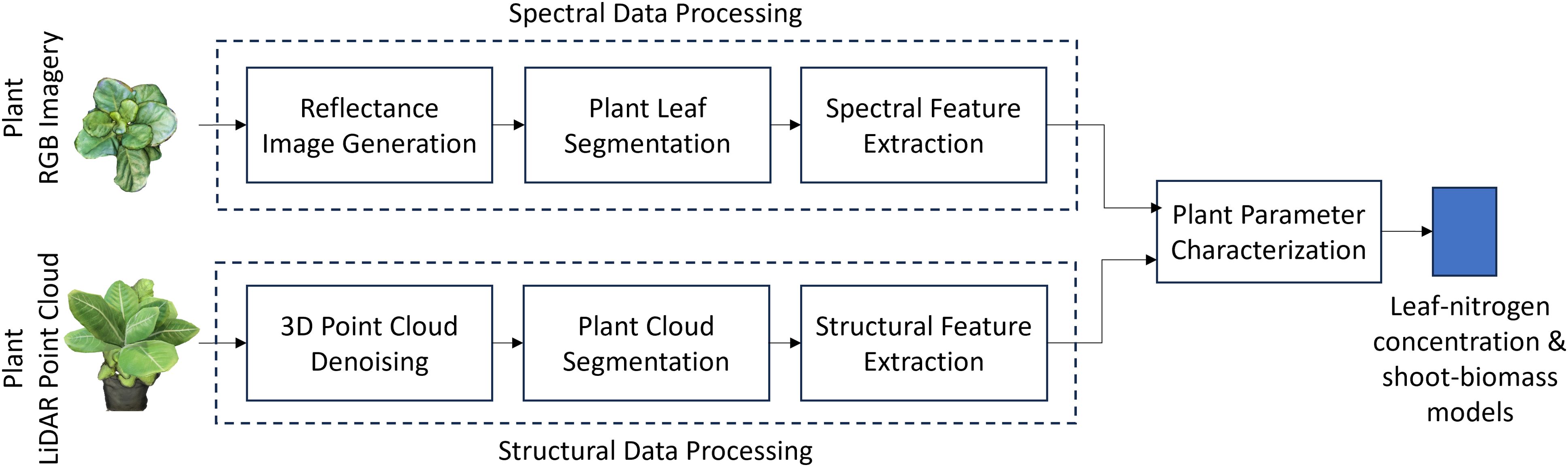

The proposed approach characterizes physiological and biophysical crop traits by leveraging spectral and structural data obtained from smartphone RGB imagery and LiDAR point cloud data (Figure 5). In our case, the physiological and biophysical traits of interest are TRN, NO3, and DW (as defined in Section 2.1). RGB imagery processing includes generating reflectance values to account for environmental variations in solar illumination, followed by leaf segmentation and extraction of spectral-reflectance features. Structural feature extraction from LiDAR data involves denoising and segmenting the 3D point cloud to isolate plant data from background objects and noise.

Figure 5. Block diagram of the smartphone-based leaf nitrogen concentration and shoot dry-weight biomass estimation setup for Chinese spinach. Spectral features derived from smartphone RGB imagery and structural features from LiDAR point cloud data are combined to estimate key leaf nitrogen concentration and shoot biomass.

3.1 Spectral data processing

The inherent sensor-electronic noise present in the measured values was mitigated by performing dark image subtraction on the raw optical smartphone RGB images. A dark image was generated by covering the smartphone’s rear camera with a dark cloth. The resulting RGB images were then converted to reflectance values by dividing each digital number (DN) value by the average DN value from a 99.9% white reference image (Näsi et al., 2015).
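
The two-step conversion described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function name `to_reflectance` and its argument names are hypothetical, and subtracting the dark image from the white reference before averaging is an assumption that the text does not state explicitly.

```python
import numpy as np

def to_reflectance(raw_dn, dark_dn, white_dn):
    """Convert raw RGB digital numbers (H x W x 3) to approximate reflectance.

    raw_dn   : plant image
    dark_dn  : dark image (camera covered), same shape as raw_dn
    white_dn : image of the white reference panel, same shape as raw_dn
    """
    raw = raw_dn.astype(np.float64)
    dark = dark_dn.astype(np.float64)
    white = white_dn.astype(np.float64)

    # Dark-image subtraction removes the sensor-electronic offset.
    signal = np.clip(raw - dark, 0.0, None)

    # Average DN of the white reference, computed per band.
    # Assumption: the white reference is dark-corrected in the same way.
    white_mean = np.clip(white - dark, 0.0, None).reshape(-1, 3).mean(axis=0)

    # Each DN is divided by the band-wise white-reference average.
    return signal / white_mean
```

In practice the white-panel average would be taken only over the pixels that actually show the panel; the sketch assumes the panel fills the reference image.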

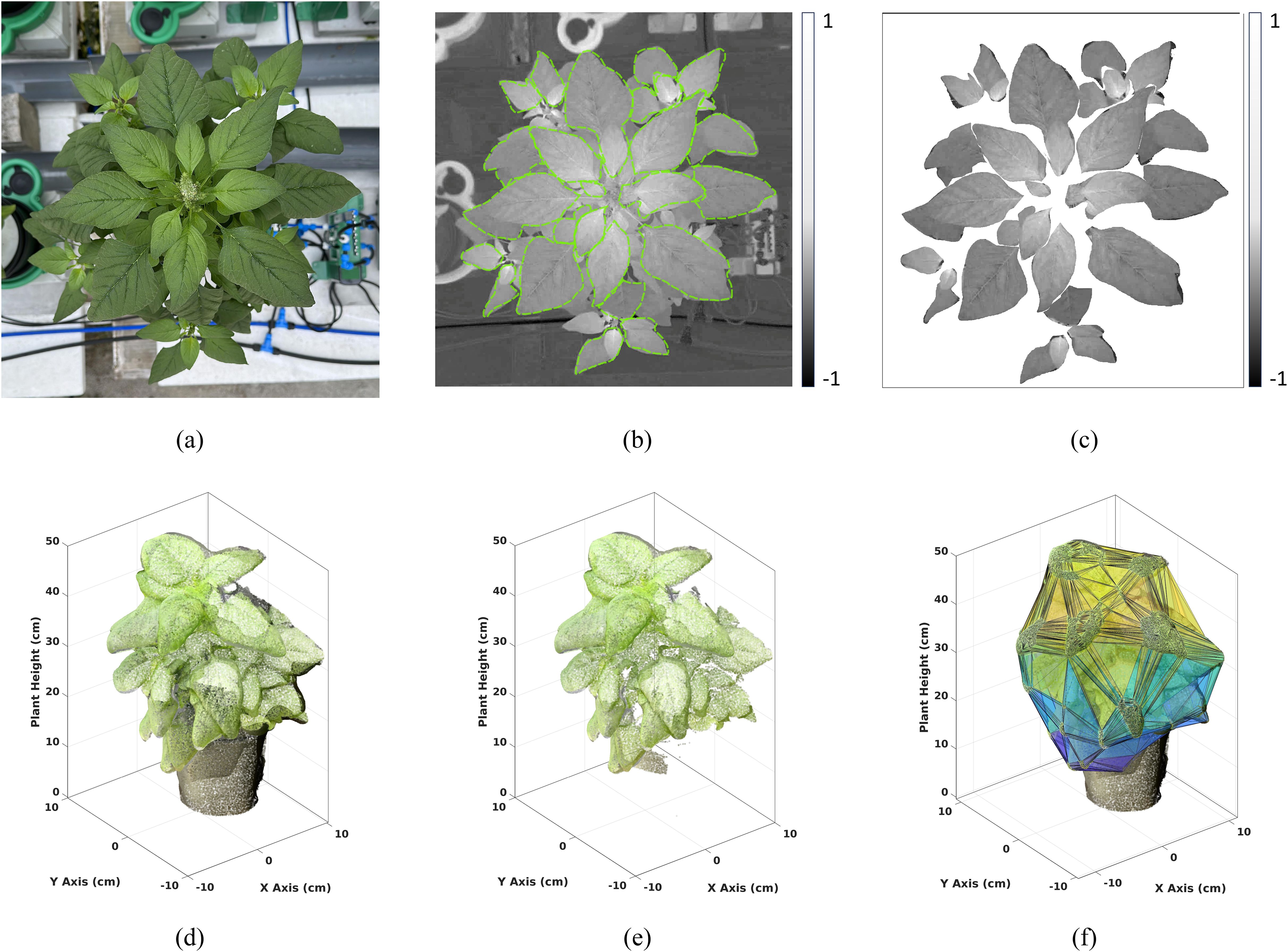

The individual RGB reflectance images captured during our experiment contained extraneous details, including background objects such as pots and other experimental hardware (Figure 6a). To minimize estimation errors, accurate delineation of plant leaves from the background was essential. We employed the Mask Convolutional Neural Networks (MaskCNN) algorithm (Zhang et al., 2021) for automatic leaf segmentation, chosen for its precision in identifying leaf structures (i.e., the objects of interest) within images. The CNN was trained on 245 individual leaf images, each carefully annotated by an expert from 150 randomly selected plant images. To enhance dataset size, diversity, and robustness, data augmentation techniques, such as rotations (45°, 90°, and 135°) and scalings (0.10, 0.25, 0.50, 0.75, and 1.25), were applied to each original image, resulting in a total of 2,250 leaf samples for model training. The MaskCNN algorithm used distinct leaf traits such as shape, texture, and color to automatically extract leaf boundaries from the background (Figure 6b). The output of the MaskCNN segmentation process was a mask layer overlaying the original RGB reflectance image, where pixels corresponding to plant leaves were assigned a value of 1 and background pixels a value of 0. Multiplying this binary mask with the vegetation spectral index image isolated the leaf regions from the background, providing a clean extraction of the plant leaves in the vegetation spectral index imagery (Figure 6c).

Figure 6. Visualization of data processing. (Top row): (a) Top-view RGB image of a Chinese spinach plant captured using a smartphone. (b) Spectral index image, with boundaries delineated using MaskCNN for leaf segmentation. (c) Resulting leaf-segmented image. (Bottom row): (d) 3D LiDAR point cloud of the plant, (e) filtered 3D point cloud after K-means clustering to isolate plant data, and (f) convex hull fitted to the filtered plant point cloud to extract structural features.

To assess the accuracy of the MaskCNN-based leaf delineation, we utilized the Intersection over Union (IoU) metric (see Equation 1). This metric compares the estimated leaf mask against a manually delineated leaf mask. Let $A_e$ denote the estimated leaf-mask area and $A_m$ denote the manually delineated leaf-mask area; then IoU is estimated as

$$\mathrm{IoU} = \frac{|A_e \cap A_m|}{|A_e \cup A_m|} \qquad (1)$$

An IoU value of 1 indicates perfect overlap, while a value of 0 indicates no overlap (Thoma, 2016). MaskCNN segmentation performance was assessed using a set of 245 manually extracted leaves from 25 randomly selected plants across all nitrogen fertigation treatments.
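
For binary masks, the IoU comparison reduces to counting pixels in the intersection and the union. The sketch below is illustrative only. The function name is hypothetical, and returning 1.0 when both masks are empty is a convention chosen here, not something stated in the text.

```python
import numpy as np

def intersection_over_union(pred_mask, ref_mask):
    """IoU between an estimated and a manually delineated binary leaf mask."""
    pred = np.asarray(pred_mask, dtype=bool)
    ref = np.asarray(ref_mask, dtype=bool)

    union = np.logical_or(pred, ref).sum()
    if union == 0:
        # Both masks are empty; treated as perfect agreement (a convention).
        return 1.0

    intersection = np.logical_and(pred, ref).sum()
    return float(intersection / union)
```

A per-leaf score computed this way can then be averaged over the evaluation leaves to obtain a single segmentation accuracy figure.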

The leaf regions identified by the MaskCNN segmentation algorithm were then extracted from the RGB reflectance images to estimate vegetation indices. These segmented leaf regions were used to calculate various vegetation indices that serve as proxies for different plant traits. The RGB spectral indices were quantitative measures derived from plant leaf reflectance values, reflecting their sensitivity to specific plant characteristics, such as chlorophyll content and overall vegetation vigor.

Chlorophyll concentration in leaves is a critical parameter for assessing plant greenness, as it reflects photosynthetic capacity, vigor, and shoot biomass (Pavlovica et al., 2014; Wagner, 2022). The Excess Green Index (ExG), Green Leaf Index (GLI), and Normalized Green-Red Difference Index (NGRDI) are commonly used to evaluate chlorophyll content in leaves (Rasmussen et al., 2016; Bassine et al., 2019). The ExG index, calculated by subtracting the red and blue reflectance from twice the green reflectance, is highly responsive to changes in chlorophyll concentration. The GLI measures vegetation “greenness” by assessing green reflectance relative to other wavelengths (Louhaichi et al., 2001). Similarly, the NGRDI, which normalizes the difference between green and red reflectance, also serves as an indicator of chlorophyll concentration.

In addition to chlorophyll content, certain indices are designed to evaluate other leaf characteristics, such as pigmentation, stress, and structure. For example, the Triangular Greenness Index (TGI) (Hunt et al., 2011) and Normalized Difference Yellowness Index (NDYI) (Sulik and Long, 2016) are valuable in this regard. Healthier leaves often have certain thicknesses and internal structures that influence light absorption and reflection; therefore, variations in TGI values can indicate differences in leaf morphology, such as thickness or density, affecting light interaction (Hunt et al., 2011). The NDYI, which measures the difference between green and blue reflectance, provides insights into yellow pigments, suggesting potential stress or senescence (Sulik and Long, 2016). Both indices are instrumental for monitoring vegetation health and detecting stress conditions (Barton, 2012; Zhang and Kovacs, 2012).

In this study, we used a total of 15 widely recognized RGB spectral indices from the literature. Table 1 provides a detailed summary of these RGB spectral indices, including the parameters and calculations used to derive them from the smartphone-based RGB imagery. The average value over all leaf pixels was computed to obtain a plant-level RGB feature estimate for each index.
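
As an illustration of how plant-level features follow from the segmented reflectance image, the sketch below computes four of the indices named above and averages them over the leaf pixels. It uses the commonly cited forms of ExG, GLI, NGRDI, and NDYI; the exact formulations used in the study are those in Table 1, so these expressions should be read as assumptions. The function name and the small `eps` guard against division by zero are likewise illustrative.

```python
import numpy as np

def rgb_indices(refl, leaf_mask, eps=1e-12):
    """Plant-level RGB indices: mean index value over leaf pixels.

    refl      : H x W x 3 reflectance image (R, G, B order)
    leaf_mask : H x W binary mask, 1 for leaf pixels and 0 for background
    """
    r, g, b = (refl[..., k].astype(np.float64) for k in range(3))

    # Commonly cited definitions (assumed here; see Table 1 for the study's forms).
    index_images = {
        "ExG": 2 * g - r - b,
        "GLI": (2 * g - r - b) / (2 * g + r + b + eps),
        "NGRDI": (g - r) / (g + r + eps),
        "NDYI": (g - b) / (g + b + eps),
    }

    mask = np.asarray(leaf_mask, dtype=bool)
    # Average each index image over the leaf pixels only.
    return {name: float(img[mask].mean()) for name, img in index_images.items()}
```

Selecting pixels with the boolean mask and averaging is equivalent to multiplying the index image by the 0/1 mask and dividing the sum by the number of leaf pixels.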

3.2 Structural data processing

Let $P = \{\rho_i \mid i = 1, 2, \ldots, N\}$, with $\rho_i = (x_i, y_i, z_i)$, be the 3D LiDAR point cloud obtained using the smartphone (Figure 6d). Each point in the raw point cloud is characterized by its x, y, and z Euclidean coordinates, derived from the iPhone LiDAR sensor, together with R, G, and B attribute values derived internally using the iPhone camera. However, the raw 3D point cloud often contains noisy points, which arise from various sources such as suspended particles in the air, glitches in electronics, or errors in data acquisition and processing. To ensure accurate downstream analysis, it is essential to remove these noisy points from the point cloud. This denoising process involved three steps: (a) voxelization: the volume covered by the 3D point cloud was divided into $S$ regular cubic voxels $\{V_i \mid i = 1, 2, \ldots, S\}$, each with sides of equal length (i.e., 1 cm); (b) point density (PD) calculation: the number of points contained within each voxel was calculated; and (c) filtering: points with a voxel PD below a certain threshold were identified as noisy points and subsequently removed from the point cloud. The threshold value was chosen carefully to minimize both omission (failure to detect noisy points) and commission (incorrectly identifying valid points as noisy) errors. In our case, this threshold has been identified to be equal to 4 data points, i.e., we analyze all voxels whose cardinality is greater than 4. By implementing this denoising procedure, the resulting point cloud was cleansed of isolated points, facilitating accurate structural parameter estimation (Figure 6e).
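
The voxel-density filter can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: coordinates are taken to be in metres (so a 1 cm voxel is `voxel_size=0.01`), the function name is hypothetical, and points are kept when their voxel holds at least `min_points` points. The text leaves open whether a count exactly equal to the threshold is kept, so that boundary choice is an assumption.

```python
import numpy as np

def voxel_density_filter(points, voxel_size=0.01, min_points=4):
    """Remove points that lie in sparsely populated voxels.

    points : N x 3 (or N x 6 with RGB) array; the first three columns are x, y, z
    Returns the filtered points and the boolean keep-mask.
    """
    pts = np.asarray(points)
    xyz = pts[:, :3].astype(np.float64)

    # (a) Voxelization: integer voxel index of every point along x, y, z.
    voxel_idx = np.floor((xyz - xyz.min(axis=0)) / voxel_size).astype(np.int64)

    # (b) Point density: number of points in each occupied voxel,
    #     mapped back to every point through the inverse index.
    _, inverse, counts = np.unique(
        voxel_idx, axis=0, return_inverse=True, return_counts=True
    )
    density = counts[inverse.ravel()]

    # (c) Filtering: drop points whose voxel density is below the threshold.
    keep = density >= min_points
    return pts[keep], keep
```

Because only occupied voxels are enumerated, the cost depends on the number of points, not on the size of the bounding volume.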

The subset of points G that belongs to the plant object was identified using a two-class (i.e., K = 2) K-means clustering approach. This clustering method separates the background data points from the plant data by jointly using the R, G, and B attributes. By clustering the points into two distinct classes, one representing the black background and the other representing the green plant, we can effectively separate the vegetation from other objects in the scene (Figure 6e). This segmentation process played a crucial role in isolating the plant canopy from the surrounding environment, enabling more accurate analysis and interpretation of the structural parameters derived from the LiDAR point cloud.
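
A two-class K-means on the colour attributes can be sketched with a plain Lloyd iteration, shown here in NumPy so that the example is self-contained. It is not the authors' implementation. The deterministic initialisation (darkest and brightest points) and the rule that the brighter cluster is the plant are assumptions that suit a dark background, and the function name is hypothetical.

```python
import numpy as np

def two_class_kmeans_rgb(colors, n_iter=50):
    """Split points into background and plant using K-means (K = 2) on R, G, B.

    colors : N x 3 array of per-point R, G, B values
    Returns a boolean mask that is True for points assigned to the plant cluster.
    """
    rgb = np.asarray(colors, dtype=np.float64)
    brightness = rgb.sum(axis=1)

    # Deterministic initialisation (an assumption): darkest and brightest points.
    centroids = np.stack([rgb[brightness.argmin()], rgb[brightness.argmax()]])

    for _ in range(n_iter):
        # Assignment step: nearest centroid in RGB space.
        dist = np.linalg.norm(rgb[:, None, :] - centroids[None, :, :], axis=2)
        labels = dist.argmin(axis=1)

        # Update step: centroid becomes the mean colour of its members.
        new_centroids = np.stack([
            rgb[labels == k].mean(axis=0) if np.any(labels == k) else centroids[k]
            for k in range(2)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids

    # Heuristic (an assumption): against a black background,
    # the plant cluster is the one with the brighter centroid.
    plant_label = int(centroids.sum(axis=1).argmax())
    return labels == plant_label
```

Applying the returned mask to the denoised point cloud yields the plant subset from which the structural features are derived.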

Structural attributes encapsulating the physiological characteristics of the plant were derived from G, including plant height, maximum crown span, point density, and crown volume, providing valuable insights into the plant’s structural composition. The plant height (HT) was determined as the elevation of the highest point within the plant point cloud G. Meanwhile, the maximum crown span (CW) was calculated as the greatest distance between any two points within the point cloud G when projected onto the ground plane (X-Y plane), effectively capturing the lateral extent of the plant canopy. To assess the crown density (CD), we calculated the average point count within each 1 cm voxel containing at least one point. This voxel-based approach provides a robust measure of crown density, offering insights into the spatial distribution of foliage within the plant canopy. By encapsulating the plant canopy within a convex envelope, an estimate of the plant’s crown volume (CV) is obtained, facilitating a comprehensive understanding of the vegetation structure (Harikumar et al., 2017). The CV was estimated as the volume enclosed by the convex hull VHull formed around G (Figure 6f). Here, the boundary fraction parameter α, which controls the compactness of the convex hull, was set to 0.5 to produce a 3D hull that is neither too tight nor too loose. We acknowledge that convex hulls can overestimate canopy volume, particularly for sparse or irregular plant structures, as they enclose all outermost points. In this study, however, we focus on relative rather than absolute volume differences. Other structural traits included point count, point density, and point count variance. The numbers of points above 75%, 50%, and 25% of the plant height were represented as PC75, PC50, and PC25, respectively. The nine plant-level LiDAR structural features used in the study are defined in Table 2.
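
Several of these structural features follow directly from the plant point cloud, as the NumPy sketch below illustrates. It is a simplified illustration: the function name is hypothetical, coordinates are assumed to be in metres, and height is measured relative to the lowest plant point, which is an assumption about the reference level. Crown volume is omitted; a convex hull volume would need a computational-geometry routine that is outside this sketch.

```python
import numpy as np

def structural_features(plant_xyz, voxel_size=0.01):
    """Height, crown span, crown density, and height-percentile point counts."""
    xyz = np.asarray(plant_xyz, dtype=np.float64)

    # Heights relative to the lowest plant point (assumed reference level).
    z = xyz[:, 2] - xyz[:, 2].min()
    height = float(z.max())

    # Maximum crown span: largest pairwise distance in the X-Y projection.
    # Note: this builds an N x N matrix, so subsample very large clouds first.
    xy = xyz[:, :2]
    diff = xy[:, None, :] - xy[None, :, :]
    crown_span = float(np.sqrt((diff ** 2).sum(axis=2)).max())

    # Crown density: mean point count over voxels holding at least one point.
    voxel_idx = np.floor((xyz - xyz.min(axis=0)) / voxel_size).astype(np.int64)
    _, counts = np.unique(voxel_idx, axis=0, return_counts=True)
    crown_density = float(counts.mean())

    features = {"HT": height, "CW": crown_span, "CD": crown_density}

    # Number of points above 75%, 50%, and 25% of the plant height.
    for pct in (75, 50, 25):
        features[f"PC{pct}"] = int((z > pct / 100 * height).sum())
    return features
```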

3.3 Plant traits characterization using regression modeling

In this study, plant traits were estimated using machine learning regression models based on spectral (Table 1) and structural (Table 2) features derived from RGB imagery and LiDAR point clouds. The spectral features primarily captured reflectance signals associated with leaf nitrogen content, while the structural features provided volumetric and height-related information essential for accurate biomass estimation. We employed three regression techniques, namely Support Vector Regression (SVR) (Vapnik, 2013), Random Forest (RF) (Ho, 1995), and Lasso Regression (Tibshirani, 1996), to model leaf nitrogen and dry-weight biomass using the structural and spectral features. Each model was optimized via grid search and validated using cross-validation. A brief overview of each modeling approach is provided below, with their mathematical formulations included in Section A.3 in Appendix A.

4 Results and discussion

Fifteen RGB spectral features were generated for individual Chinese spinach plants from RGB leaf data, which were first segmented using the MaskCNN algorithm as a preprocessing step. The MaskCNN algorithm achieved a high Intersection over Union (IoU) accuracy of 0.92 for the leaf segmentation. Additionally, nine LiDAR structural features were estimated from the plant point cloud segment identified through a 2-class K-means clustering algorithm.

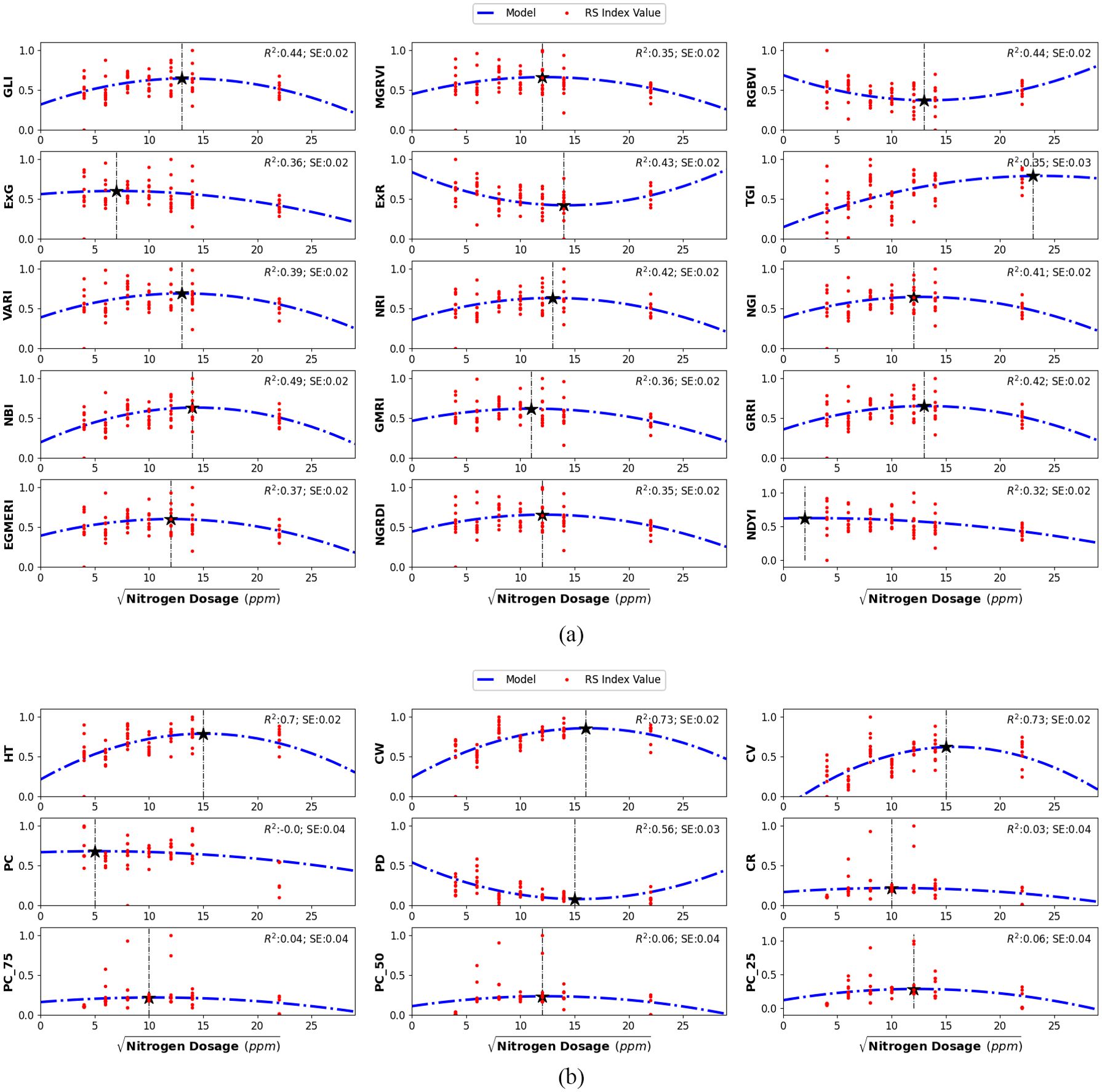

Variations in nitrogen dosing affected all 24 features, each serving as a proxy for specific plant traits or overall stress indicators. For example, RGB spectral features such as the Visible Atmospherically Resistant Index (VARI) and the Modified Green-Red Vegetation Index (MGRVI), which are proxies for chlorophyll concentration and greenness, initially increased with nitrogen dosage but declined beyond a certain level. In contrast, indices like the Excess Red (ExR) and the Red-Green-Blue Vegetation Index (RGBVI), which are associated with plant stress, displayed an inverse relationship with nitrogen dosage. Figure 7 illustrates the effect of nitrogen dosage on the RGB spectral and the LiDAR structural feature values. The visual assessments in Figure 4 provide further insight into the effect of nitrogen treatments. Specifically, plants treated with both the lowest and highest nitrogen dosages exhibited reduced yields in terms of plant height and biomass, suggesting that extreme nitrogen levels may inhibit growth and development in Chinese spinach.

Figure 7. Second-order polynomial regression models illustrating the non-linear relationship between (a) RGB spectral features and (b) LiDAR structural features with nitrogen dosage in leaves. A square-root transformation was applied to the independent variable to normalize the initially skewed distribution. The minimum and maximum index values in each plot are indicated by the vertical dashed line. The Coefficient of Determination (R²) and Standard Error (SE) are provided for each model.

The relationship between nitrogen dosing and individual RGB spectral and LiDAR structural features was modeled using three types of regression models: linear, second-order polynomial, and third-order polynomial. In our case, the second-order polynomial regression model provided the best fit for all the features, effectively capturing the non-linear relationships between features and nitrogen dosage. Additionally, to address the initially skewed distribution of nitrogen dosages, a square-root transformation was applied, which normalized the nitrogen dosage distribution and improved the linearity of the modeled relationships. This transformation facilitated accurate estimation of plant parameters. Figure 7 displays second-order polynomial models (dotted blue lines) that illustrate the relationships between various smartphone-derived indices and nitrogen dosages.
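
The fitting procedure described above can be illustrated with a second-order polynomial fitted to the square root of the dosage. The sketch below is a minimal NumPy example. The function name is hypothetical, and reading the dosage at the vertex of a concave parabola as the feature-maximizing dosage is an assumption about how such a fit might be interpreted, not a description of the authors' procedure.

```python
import numpy as np

def fit_quadratic_response(dosage_ppm, feature_values):
    """Fit feature = a*x^2 + b*x + c with x = sqrt(dosage).

    Returns the coefficients (a, b, c), the R^2 of the fit, and the dosage at
    the vertex of the parabola (None unless the fit is concave with a
    positive vertex location).
    """
    x = np.sqrt(np.asarray(dosage_ppm, dtype=np.float64))
    y = np.asarray(feature_values, dtype=np.float64)

    # np.polyfit returns coefficients from the highest degree downwards.
    a, b, c = np.polyfit(x, y, deg=2)

    y_hat = np.polyval([a, b, c], x)
    r2 = 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

    # Vertex of the parabola in sqrt-dosage units is -b / (2a);
    # squaring undoes the square-root transformation.
    peak_dosage = None
    if a < 0:
        x_star = -b / (2 * a)
        if x_star > 0:
            peak_dosage = float(x_star ** 2)

    return (float(a), float(b), float(c)), float(r2), peak_dosage
```

For example, with the eight dosages used in the experiment and a synthetic response that peaks at a square-root dosage of 12, the returned peak dosage would be close to 144 ppm; that figure comes from made-up data and is not a result of the study.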

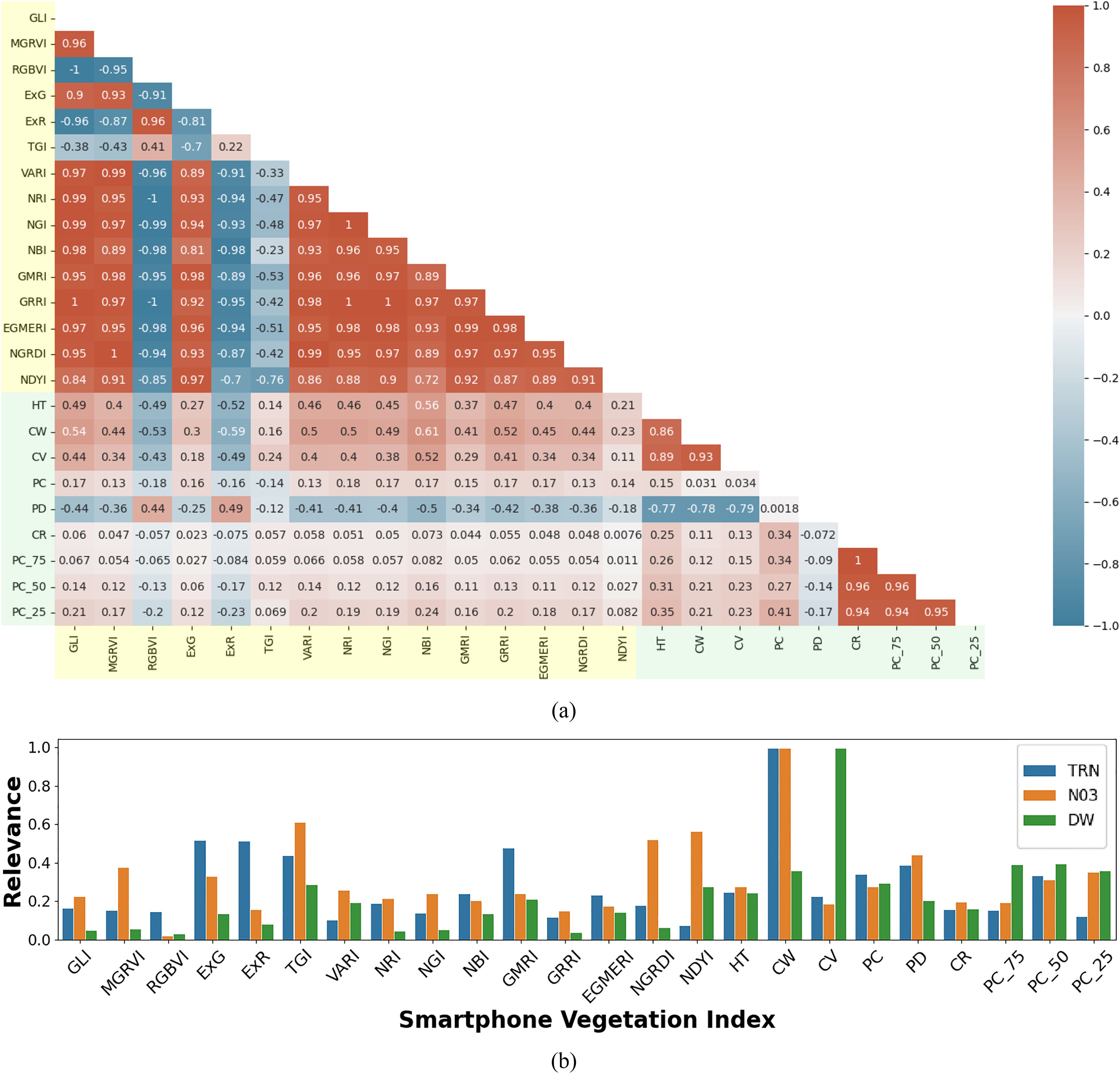

In the case of spectral features, the high covariance observed was primarily due to the limited number of spectral bands captured by the RGB imagery (i.e., 3 bands). A similar pattern was evident in the structural features derived from LiDAR data, which also exhibit high inter-feature correlation. However, as shown in the correlation matrix (Figure 8a), spectral and structural features are generally uncorrelated with each other, indicating their complementary nature. This allows them to capture distinct physiological signals relevant to modeling leaf nitrogen content and shoot dry-weight biomass. Hence, the joint use of RGB spectral and LiDAR structural features has greater potential to produce accurate crop trait estimates than either feature set alone.

Figure 8. Multimodal feature correlation and relevance analysis for smartphone data: (a) Correlation matrix of RGB spectral indices (highlighted in light yellow) and LiDAR structural indices (highlighted in light green) derived from smartphone RGB imagery and LiDAR data. The matrix illustrates the relationships between different indices, with color intensity indicating the strength and direction of the correlation (red for positive, blue for negative correlations); (b) Normalized weights from the SVR model, indicating the relative importance of each RGB spectral and LiDAR structural feature in estimating leaf total reduced nitrogen (TRN), leaf nitrate (NO3), and shoot dry weight (DW).

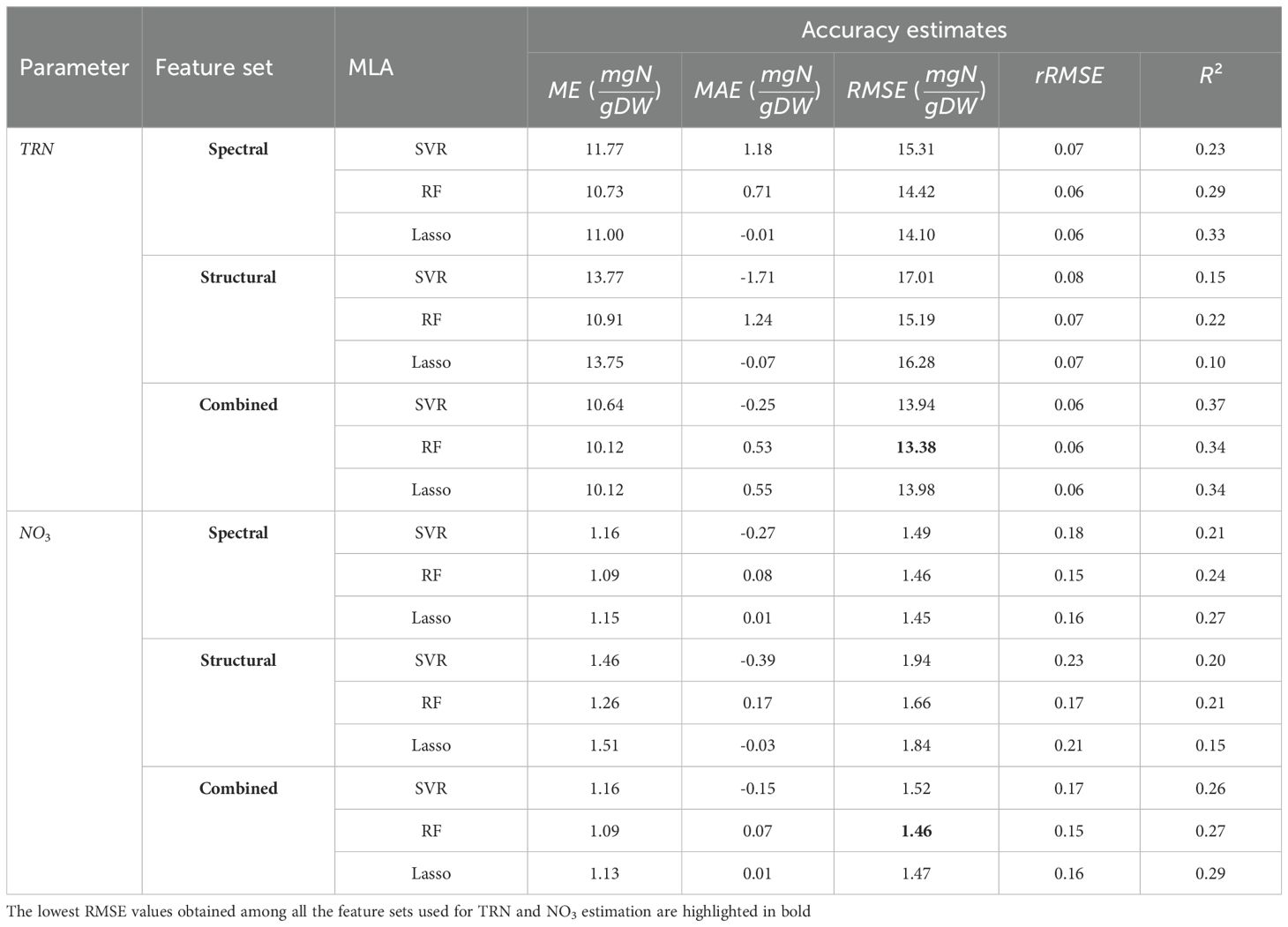

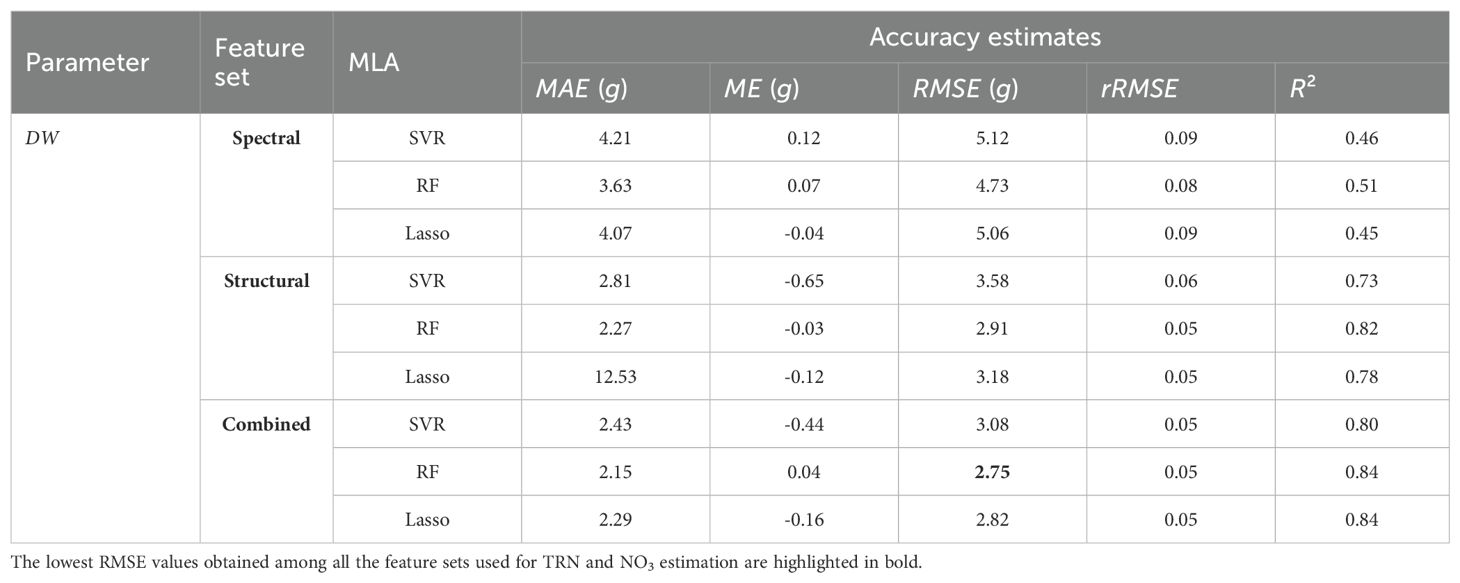

We modeled three plant parameters (leaf TRN concentration, leaf NO3 concentration, and shoot DW biomass) using three machine learning algorithms: Support Vector Regression (SVR), Random Forest (RF), and Lasso Regression. Each model was trained on one of three feature sets: RGB spectral features only, LiDAR structural features only, or a combined set of both RGB and LiDAR features. This multi-configuration approach allowed us to evaluate the unique contributions of each feature set and of their combination in predicting key plant parameters. For all the regression frameworks, the total available labeled data were divided into training (70%) and testing (30%) sets. The accuracy of the regression models was quantified using the Mean Absolute Error (MAE), Mean Error (ME), Root Mean Squared Error (RMSE), Relative Root Mean Squared Error (rRMSE), and Coefficient of Determination (R2) metrics (see equations A4-A8 in Section A.3 in Appendix A). Across all feature-set configurations, the RF model consistently performed well, yielding lower RMSE and higher R2 values than SVR and Lasso, particularly when using the combined feature set. Lasso Regression, however, showed high ME and MAE for the structural feature set (e.g., ME of 12.53 g), indicating possible overfitting or instability in Lasso’s performance for DW estimation. For the best-performing configuration (i.e., the combined feature set), the optimal hyperparameters were estimated as follows: for SVR, C = 10 and ε = 0.1; for RF, the number of decision trees T = 100 and maximum tree depth D = 5; and for Lasso regression, the regularization parameter λ = 0.01.
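The five accuracy metrics named above can be computed as in the sketch below. It uses the usual textbook definitions and makes two assumptions that the text does not state: ME is taken as the mean of (estimate minus reference), and rRMSE is the RMSE divided by the mean of the reference values. The function name and the sample values are hypothetical.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return MAE, ME, RMSE, rRMSE and R2 for paired reference/estimate lists."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - t for t, p in zip(y_true, y_pred)]  # estimate minus reference
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                   # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)    # total sum of squares
    rmse = math.sqrt(ss_res / n)
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "ME": sum(errors) / n,           # signed bias (assumed sign convention)
        "RMSE": rmse,
        "rRMSE": rmse / mean_true,       # assumed normalisation by the sample mean
        "R2": 1.0 - ss_res / ss_tot,
    }

# Hypothetical reference and estimated values (e.g., mg N/g DW).
y_true = [40.0, 50.0, 60.0]
y_pred = [42.0, 49.0, 57.0]
metrics = regression_metrics(y_true, y_pred)
```

For these illustrative values the MAE is 2.0 and R2 is 0.93; a negative ME indicates that the estimates are, on average, below the reference values.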

Further, a clear and consistent trend was observed across the feature sets. Among the single-modality models, those trained only on the RGB spectral features gave the lowest prediction errors for TRN and NO3 estimation, while those trained only on the LiDAR-based structural features gave the lowest errors in predicting shoot DW. Models trained on the combined set of RGB and LiDAR features, however, provided the lowest estimation errors overall (Tables 3, 4). Overall, the results demonstrate the effectiveness of combining RGB imagery and LiDAR data in crop parameter modeling and highlight the robustness of the RF algorithm in managing complex multivariate data. The model estimation performance for TRN concentration, NO3 concentration, and shoot DW, quantified using MAE, ME, RMSE, rRMSE, and R2 values, is provided in Tables 3, 4.

Table 3. The MAE, ME, RMSE, rRMSE, and R2 for leaf nitrogen concentration estimations done using the SVR, the RF, and the Lasso regression.

Table 4. The MAE, ME, RMSE, rRMSE, and R2 for shoot DW estimation done using the SVR, the RF, and the Lasso regression.

Beyond plant parameter estimation, we also focused on determining the optimal nitrogen dosage that maximizes plant chlorophyll and biomass using smartphone-derived RGB and LiDAR data. We consider the optimal nitrogen dosage to be the one that maximizes key traits such as leaf nitrogen and shoot biomass. These traits were assessed using RGB spectral indices and LiDAR-derived structural metrics, which provide reliable proxies for photosynthetic efficiency and overall plant growth. For example, as shown in Figure 5a, chlorophyll-sensitive spectral indices including ExR, VARI, and GMRI peak at approximately 149.3 ppm, indicating that this nitrogen level is generally associated with maximum chlorophyll concentration and photosynthetic efficiency (Qiang et al., 2019). This supports the idea that the plant is able to utilize nitrogen most efficiently at this dosage. Additionally, LiDAR-derived metrics, including plant height, canopy volume, and estimates of dry weight, peak at 150.47 ppm. This dosage maximizes shoot dry-weight biomass, a key indicator of overall plant growth and vigor. By averaging these two peak values, we derive an optimal nitrogen dosage of 149.9 ppm, which represents the most balanced nitrogen level for maximizing chlorophyll concentration, vigor, and shoot dry-weight biomass. This dosage of 149.9 ppm is reasonably close to the 120 ppm derived from the PlantArray system (Shenhar et al., 2024), providing further support for the validity of our smartphone-based approach. Although Shenhar et al. did not examine intermediate dosages between 120 ppm and 160 ppm, the alignment between the two studies strengthens confidence in our findings and suggests that 149.9 ppm of nitrogen dosing provides the best overall plant vigor and shoot dry-weight biomass.
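The arithmetic behind the optimal dosage can be checked with a short sketch. The two peak values are those reported above; the helper `quadratic_peak` is a hypothetical illustration of how the maximum of a fitted second-order polynomial is located, and it returns the peak in whatever units the polynomial was fitted in.

```python
def quadratic_peak(a, b):
    """Return the x that maximises a*x**2 + b*x + c (requires a < 0)."""
    if a >= 0:
        raise ValueError("a must be negative for the parabola to have a maximum")
    return -b / (2 * a)

# Peak dosages reported in the text (ppm).
spectral_peak_ppm = 149.3     # chlorophyll-sensitive RGB indices
structural_peak_ppm = 150.47  # LiDAR-derived structural metrics

# Simple unweighted mean of the two peaks.
optimal_ppm = (spectral_peak_ppm + structural_peak_ppm) / 2
rounded_ppm = round(optimal_ppm, 1)
```

The unweighted mean is 149.885 ppm, which rounds to the reported 149.9 ppm.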

A detailed feature relevance analysis was conducted to assess the importance of various RGB and LiDAR-derived features in predicting key target variables: leaf TRN concentration, NO3 concentration, and DW biomass. Feature relevance was inferred based on the normalized weights assigned to each feature during SVR model training. These normalized weights were averaged across 100 modeling iterations, with each iteration using randomly generated training and test datasets to ensure robustness. The results indicate that feature importance varied significantly across models, reflecting the specific demands of each prediction task. For example, models developed to predict TRN and NO3 concentration assigned higher importance to the RGB features, likely because these spectral indices are closely related to nitrogen content, which affects reflectance in the visible spectrum. In contrast, models estimating shoot DW generally assigned greater weight to structural features obtained from LiDAR data, reflecting that biomass estimation can be more reliably modeled using plant morphology rather than spectral characteristics. Specifically, DW estimation showed high reliance on LiDAR-derived structural indices such as crown volume (CV) and point count at 75% height (PC75), which describe the plant’s physical structure and distribution. Conversely, for TRN and NO3 estimation, the models placed more emphasis on spectral features such as the Triangular Greenness Index (TGI) and Excess Green (ExG), both closely related to chlorophyll content and leaf greenness. These findings suggest a clear pattern: LiDAR-derived structural features are more effective for estimating morphological traits like biomass, while RGB features are better suited for predicting physiological traits like nitrogen and chlorophyll concentration. This distinction underscores the complementary nature of smartphone RGB imagery and LiDAR data in crop parameter modeling, with each sensor type contributing uniquely to different aspects of plant structure and function (Figure 8b).
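The averaging of normalized weights across modeling iterations can be sketched as follows. The normalization rule is an assumption, since the text does not specify it: here each run's absolute weights are scaled to sum to one. The weight values are hypothetical, and only two runs are shown instead of the 100 used in the study.

```python
def normalize_weights(weights):
    """Scale absolute weights so that they sum to 1 (assumed normalisation)."""
    magnitudes = [abs(w) for w in weights]
    total = sum(magnitudes)
    if total == 0:
        raise ValueError("all weights are zero")
    return [m / total for m in magnitudes]

def mean_relevance(weight_runs):
    """Average the normalised weights feature-wise across modeling runs."""
    normalised = [normalize_weights(run) for run in weight_runs]
    n_runs = len(normalised)
    # zip(*normalised) groups the values of each feature across all runs.
    return [sum(column) / n_runs for column in zip(*normalised)]

# Hypothetical linear-SVR weights from two runs with different random splits.
feature_names = ["TGI", "ExG", "CV", "PC75"]
runs = [
    [0.6, -0.2, 0.1, 0.1],
    [0.4, -0.4, 0.1, 0.1],
]
relevance = dict(zip(feature_names, mean_relevance(runs)))
```

Taking absolute values treats a strongly negative weight as equally relevant as a strongly positive one, which is a common convention for linear models but only one of several possible choices.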

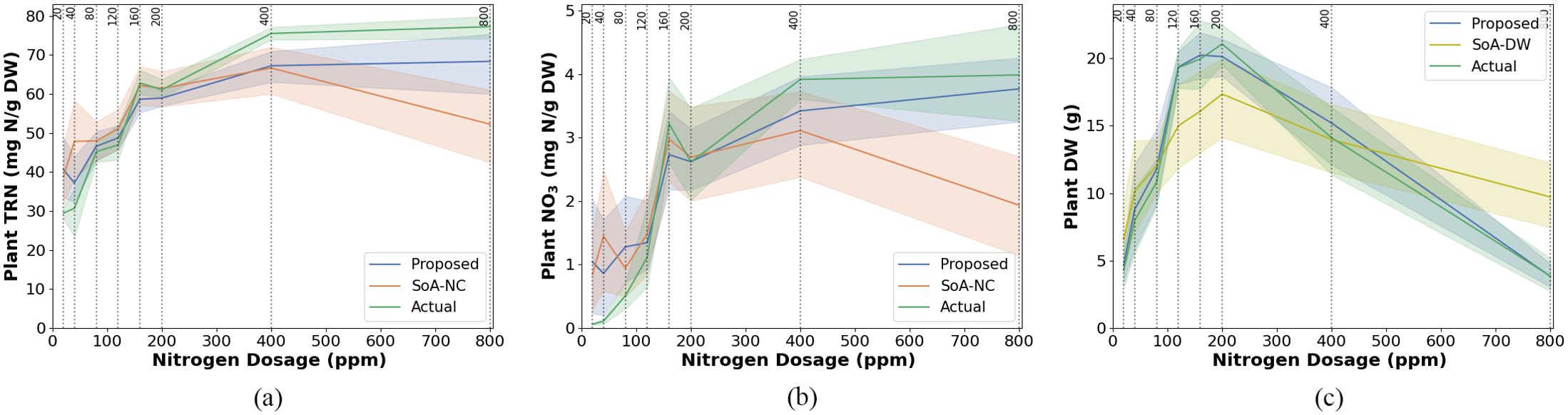

To evaluate our methodology, we compared its performance with a leading state-of-the-art leaf nitrogen concentration estimation approach (SoA-NC) that relies solely on smartphone RGB imagery. This benchmark method uses the Dark Green Color Index (DGCI), derived from intensity, hue, and saturation metrics of the plant leaves (Paleari et al., 2022). However, using only a single RGB image feature index limits its accuracy in estimating leaf nitrogen concentration. Our results illustrate the superior performance of our models, which consistently produced more accurate and precise estimates of leaf TRN and NO3 concentrations compared to the SoA-NC method. This improvement highlights the advantage of integrating advanced machine learning algorithms with both spectral and structural features derived from smartphone RGB and LiDAR sensors. Unlike the SoA-NC method, which focuses solely on spectral data, our approach leverages the complementary strengths of RGB features (e.g., color indices) and LiDAR structural features (e.g., plant height and canopy shape). Nevertheless, high variance in individual feature index values, even within a nitrogen treatment group, due to non-uniform lighting or shading on plant leaves, is a known source of error, which manifests as significant variance in TRN, NO3, and DW estimates. Additionally, certain LiDAR-derived features based on point count and point density exhibited low values, likely due to the limited variation in these features across the sampled plants.

We also compared shoot DW estimation performance with a state-of-the-art leaf-area-based shoot DW estimation (SoA-DW) method (Li et al., 2020). The SoA-DW method estimates shoot DW by: (a) segmenting plant leaves using Otsu’s thresholding on the green channel, (b) calculating leaf area (LAp) as the product of the total number of plant pixels (PPN) in the image, the individual pixel length (PL) of the phone camera, and the magnification factor (MF) of the phone camera, and (c) estimating shoot DW using a linear model based on LAp and actual DW values obtained from destructive sampling. Our proposed shoot DW estimation method more accurately tracked actual shoot DW compared to the SoA-DW method. The reduced performance of the SoA-DW method likely results from its inability to capture plant height from images and from errors in leaf delineation due to non-uniform lighting and shading.
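Steps (a) and (b) of the SoA-DW baseline can be sketched as follows. This is a simplified illustration, not the implementation of Li et al. (2020): it assumes 8-bit green-channel values, assumes plant pixels are brighter than the background in the green channel, and computes leaf area as the product PPN × PL × MF exactly as described above. The image and camera constants are hypothetical.

```python
def otsu_threshold(values, levels=256):
    """Return the threshold t that maximises the between-class variance
    of the two classes {v <= t} and {v > t}."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_between = 0, -1.0
    w0 = 0      # number of pixels in the lower class
    sum0 = 0    # sum of intensities in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_between:
            best_t, best_between = t, between
    return best_t

def leaf_area(green_channel, pixel_length, magnification):
    """Leaf area as PPN * PL * MF, following the description in the text."""
    flat = [g for row in green_channel for g in row]
    t = otsu_threshold(flat)
    ppn = sum(1 for g in flat if g > t)  # plant pixels assumed brighter in green
    return ppn * pixel_length * magnification

# Hypothetical 3x3 green channel: four bright (plant) and five dark pixels.
image = [
    [10, 12, 200],
    [11, 210, 205],
    [9, 10, 198],
]
area = leaf_area(image, pixel_length=0.5, magnification=2.0)
```

Step (c) would then regress the destructively measured DW on the resulting leaf area with an ordinary linear model.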

A direct comparison of the TRN, NO3, and DW estimates obtained from our non-destructive model with those derived from the destructive sampling method is presented in Figure 9, providing validation of the model’s accuracy and reliability. The results demonstrate that the proposed handheld smartphone-based system can accurately estimate leaf TRN, leaf NO3, and shoot DW without the need for manual harvesting, outperforming state-of-the-art methods that rely solely on RGB data.

Figure 9. Model estimation performance for (a) total reduced nitrogen (TRN), (b) nitrate (NO3), and (c) dry weight (DW) in Chinese spinach under varying nitrogen dosages. Each subfigure compares the performance of the proposed estimation method against state-of-the-art (SoA) estimation approaches, with reference data obtained from destructive leaf analysis. The SoA methods for nitrogen concentration and dry weight are denoted as SoA-NC and SoA-DW, respectively. Standard errors (SE) are shown as shaded regions around the model lines.

5 Summary and conclusion

Smartphone-based RGB imagery and LiDAR data show strong potential for modeling the leaf nitrogen and shoot dry-weight biomass of Amaranthus dubius (Chinese spinach), serving as a case study for subtropical leafy vegetable crops. The combined use of the spectral and structural features derived from smartphone-acquired RGB and LiDAR data, respectively, enables accurate estimation of leaf TRN, NO3, and DW in Chinese spinach, with RMSE values as low as 3.9 mg N/g DW, 1.46 mg N/g DW, and 2.7 g, respectively. Spectral indices were found to be more effective for estimating leaf nitrogen concentration, whereas structural indices provided better performance for shoot biomass estimation. The optimal nitrogen dosage for maximizing yield and vigor in Chinese spinach was determined to be 149.9 ppm. The encouraging results from this study demonstrate the strong potential of using smartphones for yield management in leafy vegetable crops. Planned future work includes (a) investigating the effectiveness of the proposed methodology on other leafy vegetable crops such as Brassica oleracea (Chinese broccoli), (b) using different generalized linear and/or additive machine learning modeling techniques, and (c) expanding the application of this methodology to assess plant requirements for other essential mineral nutrients.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

AH: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. IS: Data curation, Formal Analysis, Resources, Validation, Writing – review & editing. MP: Formal Analysis, Validation, Writing – review & editing, Data curation. LQ: Data curation, Resources, Writing – review & editing. MM: Formal Analysis, Funding acquisition, Methodology, Supervision, Validation, Writing – review & editing. JH: Formal Analysis, Funding acquisition, Resources, Writing – review & editing. KN: Formal Analysis, Funding acquisition, Resources, Writing – review & editing. MG: Formal Analysis, Funding acquisition, Project administration, Resources, Supervision, Writing – review & editing. IH: Conceptualization, Formal Analysis, Methodology, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The Israel-Singapore Urban Farm (iSURF) is supported by the National Research Foundation, Prime Minister’s Office, Singapore, under its Campus for Research Excellence And Technological Enterprise (CREATE) programme [NRF2020-THE003-0008]. This work was supported in part by the Hebrew University of Jerusalem Center for Interdisciplinary Data Science Research (CIDR).

Acknowledgments

We gratefully acknowledge assistance from iSURF program collaborators Netatech Pte Ltd, Singapore, Mr. David Tan, and the Singapore Food Agency.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allen, S. (1989). “Analysis of vegetation and other organic materials.” in Chemical Analysis of Ecological Materials, ed. Allen, D. (Oxford, UK: Blackwell), 46–61.

Bar-Sella, G., Gavish, M., and Moshelion, M. (2024). From selfies to science–precise 3d leaf measurement with iphone 13 and its implications for plant development and transpiration. doi: 10.1101/2023.12.30.573617

Bassine, F. Z., Errami, A., and Khaldoun, M. (2019). “Vegetation recognition based on uav image color index,” in 2019 IEEE international conference on environment and electrical engineering and 2019 IEEE industrial and commercial power systems Europe (EEEIC/I&CPS Europe) (Piscataway, New Jersey, USA: IEEE), 1–4.

Bendig, J., Yu, K., Aasen, H., Bolten, A., Bennertz, S., Broscheit, J., et al. (2015). Combining uav-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Observation Geoinformation 39, 79–87.

Burdziakowski, P. and Bobkowska, K. (2021). Uav photogrammetry under poor lighting conditions—accuracy considerations. Sensors (Basel Switzerland) 21, 3531. PMID: 34069500

Cantliffe, D. J. (1972). Nitrate accumulation in vegetable crops as affected by photoperiod and light duration. J. Am. Soc. Hortic. Sci. 97, 414–418.

Chen, J., Yao, X., Huang, F., Liu, Y., Yu, Q., Wang, N., et al. (2016). N status monitoring model in winter wheat based on image processing. Trans. Chin. Soc. Agric. Eng. 32, 163–170.

Chevalier, L., Christina, M., Ramos, M., Heuclin, B., Fevrier, A., Jourdan, C., et al. (2025). Root biomass plasticity in response to nitrogen fertilization and soil fertility in sugarcane cropping systems. Eur. J. Agron. 167, 127549.

Evans, J. R. (1983). Nitrogen and photosynthesis in the flag leaf of wheat (triticum aestivum l.). Plant Physiol. 72, 297–302.

Fan, Y., Feng, H., Jin, X., Yue, J., Liu, Y., Li, Z., et al. (2022). Estimation of the nitrogen content of potato plants based on morphological parameters and visible light vegetation indices. Front. Plant Sci. 13, 1012070. PMID: 36330259

Gamon, J. and Surfus, J. (1999). Assessing leaf pigment content and activity with a reflectometer. New Phytol. 143, 105–117.

Gausman, H. W. (1985). Plant leaf optical properties in visible and near-infrared light (Lubbock, TX, USA.: Texas Tech Press).

Gitelson, A. A., Kaufman, Y. J., Stark, R., and Rundquist, D. (2002). Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 80, 76–87.

Govindasamy, P., Muthusamy, S. K., Bagavathiannan, M., Mowrer, J., Jagannadham, P. T. K., Maity, A., et al. (2023). Nitrogen use efficiency—a key to enhance crop productivity under a changing climate. Front. Plant Sci. 14, 1121073. PMID: 37143873

Halperin, O., Gebremedhin, A., Wallach, R., and Moshelion, M. (2017). High-throughput physiological phenotyping and screening system for the characterization of plant–environment interactions. Plant J. 89, 839–850. PMID: 27868265

Harikumar, A., Bovolo, F., and Bruzzone, L. (2017). An internal crown geometric model for conifer species classification with high-density lidar data. IEEE Trans. Geosci. Remote Sens. 55, 2924–2940.

Hassona, M. M., Abd El-Aal, H. A., Morsy, N. M., and Hussein, A. M. (2024). Abiotic and biotic factors affecting crop growth and productivity: Unique buckwheat production in Egypt. Agriculture 14, 1280.

He, J., Chua, E. L., and Qin, L. (2020). Drought does not induce crassulacean acid metabolism (cam) but regulates photosynthesis and enhances nutritional quality of mesembryanthemum crystallinum. PloS One 15, e0229897.

Ho, T. K. (1995). “Random decision forests,” in Proceedings of 3rd international conference on document analysis and recognition, vol. 1. (Piscataway, New Jersey, USA: IEEE), 278–282.

Hufkens, K., Melaas, E. K., Mann, M. L., Foster, T., Ceballos, F., Robles, M., et al. (2019). Monitoring crop phenology using a smartphone based near-surface remote sensing approach. Agric. For. meteorology 265, 327–337.

Hunt, E. R., Cavigelli, M., Daughtry, C. S., Mcmurtrey, J. E., and Walthall, C. L. (2005). Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 6, 359–378.

Hunt, E. R., Jr., Daughtry, C., Eitel, J. U., and Long, D. S. (2011). Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 103, 1090–1099.

Jia, L., Bürkert, A., Chen, X., Roemheld, V., and Zhang, F. (2004). Low-altitude aerial photography for optimum n fertilization of winter wheat on the north China plain. Field Crops Res. 89, 389–395.

Lee, K.-J. and Lee, B.-W. (2013). Estimation of rice growth and nitrogen nutrition status using color digital camera image analysis. Eur. J. Agron. 48, 57–65.

Lefsky, M. A., Cohen, W. B., Parker, G. G., and Harding, D. J. (2002). Lidar remote sensing for ecosystem studies: Lidar, an emerging remote sensing technology that directly measures the three-dimensional distribution of plant canopies, can accurately estimate vegetation structural attributes and should be of particular interest to forest, landscape, and global ecologists. BioScience 52, 19–30.

Li, C., Adhikari, R., Yao, Y., Miller, A. G., Kalbaugh, K., Li, D., et al. (2020). Measuring plant growth characteristics using smartphone based image analysis technique in controlled environment agriculture. Comput. Electron. Agric. 168, 105123.

Louhaichi, M., Borman, M. M., and Johnson, D. E. (2001). Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 16, 65–70.

Meyer, G. E. and Neto, J. C. (2008). Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 63, 282–293.

Morelock, T. E. and Correll, J. C. (2008). “Spinach,” in Vegetables I: Asteraceae, brassicaceae, chenopodicaceae, and cucurbitaceae (Berlin, Germany: Springer), 189–218.

Näsi, R., Honkavaara, E., Lyytikäinen-Saarenmaa, P., Blomqvist, M., Litkey, P., Hakala, T., et al. (2015). Using uav-based photogrammetry and hyperspectral imaging for mapping bark beetle damage at tree-level. Remote Sens. 7, 15467–15493.

Neto, J. C. (2004). A combined statistical-soft computing approach for classification and mapping weed species in minimum-tillage systems (Lincoln, Nebraska, USA: The University of Nebraska-Lincoln).

Paleari, L., Movedi, E., Vesely, F. M., Invernizzi, M., Piva, D., Zibordi, G., et al. (2022). Estimating plant nitrogen content in tomato using a smartphone. Field Crops Res. 284, 108564.

Pavlović, D., Nikolić, B., Đurović, S., Waisi, H., Anđelković, A., and Marisavljević, D. (2014). Chlorophyll as a measure of plant health: Agroecological aspects. Pesticidi i fitomedicina 29, 21–34.

Qiang, L., Rong, J., Wei, C., Xiao-lin, L., Fan-lei, K., Yong-pei, K., et al. (2019). Effect of low-nitrogen stress on photosynthesis and chlorophyll fluorescence characteristics of maize cultivars with different low-nitrogen tolerances. J. Integr. Agric. 18, 1246–1256.

Qiu, Z., Ma, F., Li, Z., Xu, X., Ge, H., and Du, C. (2021). Estimation of nitrogen nutrition index in rice from uav rgb images coupled with machine learning algorithms. Comput. Electron. Agric. 189, 106421.

Rasmussen, J., Ntakos, G., Nielsen, J., Svensgaard, J., Poulsen, R. N., and Christensen, S. (2016). Are vegetation indices derived from consumer-grade cameras mounted on uavs sufficiently reliable for assessing experimental plots? Eur. J. Agron. 74, 75–92.

Shenhar, I., Harikumar, A., Pebes Trujillo, M. R., Zhao, Z., Lin, Q., Setyawati, M. I., et al. (2024). Nitrogen ionome dynamics on leafy vegetables in tropical climate. bioRxiv: Cold Spring Harbor Laboratory, New York, USA. doi: 10.1101/2024.10.17.618770

Sulik, J. J. and Long, D. S. (2016). Spectral considerations for modeling yield of canola. Remote Sens. Environ. 184, 161–174.

Tao, M., Ma, X., Huang, X., Liu, C., Deng, R., Liang, K., et al. (2020). Smartphone-based detection of leaf color levels in rice plants. Comput. Electron. Agric. 173, 105431.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. J. R. Stat. Society: Ser. B (Methodological) 58, 267–288.

Valenzuela, H. (2024). Optimizing the nitrogen use efficiency in vegetable crops. Nitrogen 5, 106–143.

Vapnik, V. (2013). The nature of statistical learning theory (New York, USA: Springer Science & Business Media).

Wagner, J. (2022). Comparing chlorophyll concentrations and plant vigor characteristics between strawberry (Fragaria X ananassa) accessions. Ph.D. thesis (Wolfville, Nova Scotia, Canada: Acadia University).

Woebbecke, D. M., Meyer, G. E., Von Bargen, K., and Mortensen, D. A. (1995). Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 38, 259–269.

Zhang, C. and Kovacs, J. M. (2012). The application of small unmanned aerial systems for precision agriculture: a review. Precis. Agric. 13, 693–712.

Zhang, L., Zou, L., Wu, C., Jia, J., and Chen, J. (2021). Method of famous tea sprout identification and segmentation based on improved watershed algorithm. Comput. Electron. Agric. 184, 106108.

Zhao, X., Zhou, Y., Min, J., Wang, S., Shi, W., and Xing, G. (2012). Nitrogen runoff dominates water nitrogen pollution from rice-wheat rotation in the taihu lake region of China. Agriculture Ecosyst. Environ. 156, 1–11.

Appendix A

A.1 Nutrient Recipe

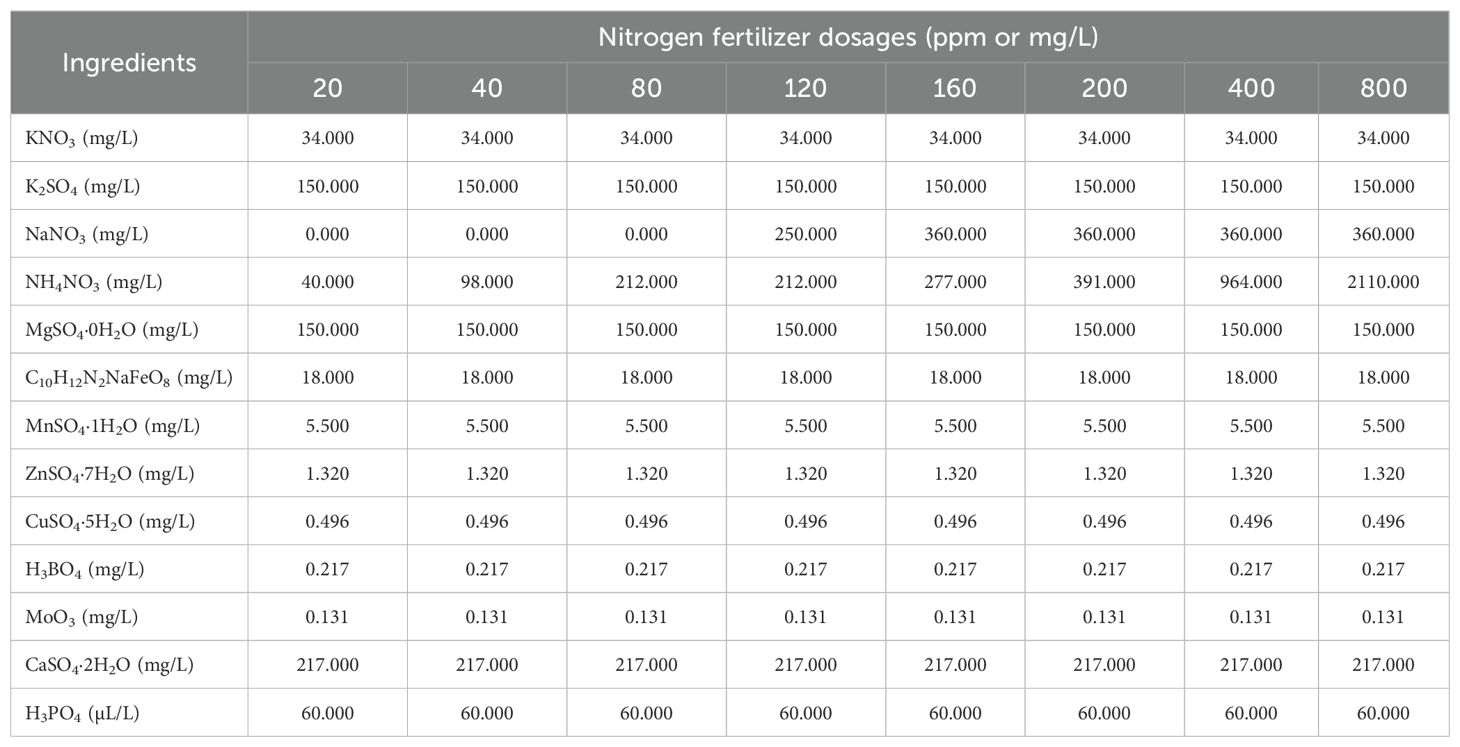

The composition of the different nutrient solutions used for nitrogen fertigation treatments for the 84 Chinese spinach plants is shown in Table A1.

Table A1. Composition of the different nutrient solutions used for the nitrogen fertigation treatments.

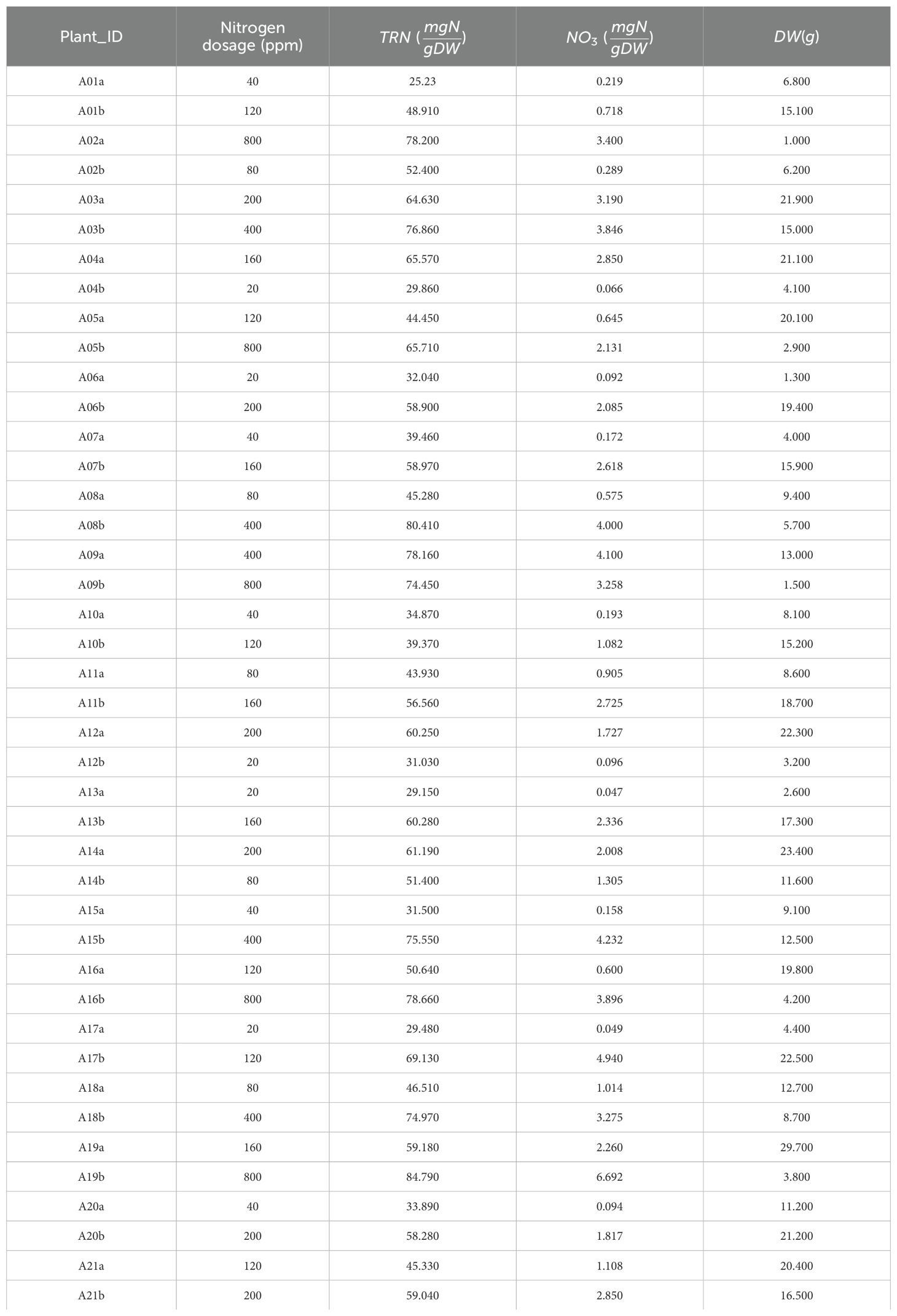

A.2 Destructive Sampling Results: Leaf Nitrogen and Shoot Dry-weight Biomass Traits

The leaf TRN concentrations, the leaf NO3 concentrations, and the shoot DW, measured in the laboratory through destructive sampling of the post-harvest shoot tissue of individual plants, are reported in Table A2.

Table A2. The leaf total reduced nitrogen (TRN) concentrations, the leaf nitrate (NO3) concentrations, and the shoot dry weight (DW) measured in lab by destructive analysis of the post-harvest shoot tissue, for the individual plants.

A.3 Regression Models

The SVR model with the linear kernel (Vapnik, 2013) is used to model the plant parameters because of its exceptional ability to capture the underlying relationships and to quantify feature relevance. Equation A1 shows the objective function and constraints of SVR with the linear kernel.

Let $\mathbf{x}_k \in \mathbb{R}^F$ be an $F$-dimensional feature vector of the $k$th training sample, $y_k$ be the target value corresponding to the $k$th training sample, $\mathbf{w} = [w_1, w_2, \ldots, w_F]$ be the vector containing the scalar weights associated with individual features in the feature set, $b$ be the bias term, $\epsilon$ be the margin of tolerance, $\xi_k$ be the slack variables associated with each data point, and $C$ be the regularization factor. The SVR is mathematically formulated as
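In the standard textbook form of linear ε-insensitive SVR, written with the symbols defined above, the primal problem reads as follows. The number of training samples $N$ and the second slack variable $\xi_k^{*}$ (for errors below the tolerance band) are notational assumptions not stated in the text, so this should be read as a sketch of the usual formulation rather than a verbatim copy of Equation A1.

```latex
\begin{aligned}
\min_{\mathbf{w},\,b,\,\xi,\,\xi^{*}} \quad
  & \frac{1}{2}\lVert \mathbf{w} \rVert^{2}
    + C \sum_{k=1}^{N} \left( \xi_k + \xi_k^{*} \right) \\
\text{subject to} \quad
  & y_k - \left( \mathbf{w}^{\top}\mathbf{x}_k + b \right) \le \epsilon + \xi_k, \\
  & \left( \mathbf{w}^{\top}\mathbf{x}_k + b \right) - y_k \le \epsilon + \xi_k^{*}, \\
  & \xi_k,\ \xi_k^{*} \ge 0, \qquad k = 1, \dots, N.
\end{aligned}
```

With a linear kernel, the magnitude of each learned weight $w_i$ reflects how strongly the corresponding feature influences the estimate, which is what makes the weights usable for feature relevance analysis.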

Here, the parameters C and ε were estimated using grid search. The value of C was varied over the values [0.01, 0.1, 1, 10, 100], while ε was varied over the values [0.01, 0.1, 0.5, 1], employing 5-fold cross-validation to assess model performance. The model fitting was repeated 100 times using different random sets of training and testing data, and the individual parameter estimates were averaged to obtain a reliable target parameter estimate.

The RF regression (Ho, 1995) follows an ensemble learning approach, where a collection of decision tree regression models operates independently to generate parameter estimates. The predictions from these individual trees are then averaged to produce a more reliable estimate. If the RF model consists of $T$ individual decision tree regression functions $f_t(\mathbf{x})$, where $t = 1, 2, \ldots, T$, the target parameter estimate is calculated as the average of the estimates obtained from the individual trees using Equation A2.
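Written out from the description above, this average takes the following form, where the symbol $\hat{y}(\mathbf{x})$ for the ensemble estimate is introduced here for illustration:

```latex
\hat{y}(\mathbf{x}) = \frac{1}{T} \sum_{t=1}^{T} f_t(\mathbf{x})
```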

Here, the number of trees T and the maximum depth D of each tree were estimated using grid search, with T varied over the range [1, 200] with a step size of 10 and D varied over the values [3, 5, 7, 10, 15], utilizing 5-fold cross-validation to assess model performance.

We also model the plant parameters using Lasso Regression (Tibshirani, 1996), a widely used linear regression technique that incorporates feature regularization. Let $y_k$ be the true target value for the $k$th observation, $X_k$ be the vector of predictors for the $k$th observation, $\mathbf{w}$ be the vector of coefficients to be estimated, and $\lambda > 0$ be the regularization parameter that controls the strength of the penalty. Then, Lasso Regression is mathematically represented as
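A common way of writing the Lasso objective with these symbols is given below. The number of observations $N$ and the $1/(2N)$ scaling of the squared-error term are assumptions (the scaling follows a widely used software convention), and the intercept is omitted for brevity, so this is an illustrative form rather than a verbatim copy of Equation A3.

```latex
\hat{\mathbf{w}} = \arg\min_{\mathbf{w}}
  \; \frac{1}{2N} \sum_{k=1}^{N} \left( y_k - X_k^{\top}\mathbf{w} \right)^{2}
  + \lambda \lVert \mathbf{w} \rVert_{1}
```

The $\ell_1$ penalty drives the coefficients of weakly informative features to exactly zero, which is why Lasso doubles as a feature selection method.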

The λ was set by performing grid search over the values [0.01, 0.1, 1, 10, 100]. Once (A3) is optimized, the target variable estimate for a test data point $t$ is obtained as $\hat{y}_t = X_t^{\top}\mathbf{w}$.

In the accuracy metrics (Equations A4–A8), $y$ is the true response, $\hat{y}$ is the estimated response, and $\bar{y}$ is the sample mean.

Keywords: biomass estimation, smartphone, lidar, crop parameter modelling, smart farming

Citation: Harikumar A, Shenhar I, Pebes-Trujillo MR, Qin L, Moshelion M, He J, Ng KW, Gavish M and Herrmann I (2025) Harnessing smartphone RGB imagery and LiDAR point cloud for enhanced leaf nitrogen and shoot biomass assessment - Chinese spinach as a case study. Front. Plant Sci. 16:1592329. doi: 10.3389/fpls.2025.1592329

Received: 12 March 2025; Accepted: 30 June 2025;

Published: 13 August 2025.

Edited by:

Julio Nogales-Bueno, Universidad de Sevilla, Spain

Reviewed by:

Vinay Pagay, University of Adelaide, Australia

Fan Yiguang, Beijing Academy of Agriculture and Forestry Sciences, China

Copyright © 2025 Harikumar, Shenhar, Pebes-Trujillo, Qin, Moshelion, He, Ng, Gavish and Herrmann. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ittai Herrmann, ittai.herrmann@mail.huji.ac.il

Aravind Harikumar, Itamar Shenhar, Miguel R. Pebes-Trujillo, Lin Qin, Menachem Moshelion, Jie He, Kee Woei Ng, Ittai Herrmann