- 1 College of Mechanical and Electrical Engineering, Shihezi University, Shihezi, China

- 2 Key Laboratory of Northwest Agricultural Equipment, Ministry of Agriculture and Rural Affairs, Shihezi University, Shihezi, China

- 3 Xinjiang Production and Construction Corps Mechanization Engineering Laboratory for Special Crop Production, Shihezi University, Shihezi, China

- 4 College of Engineering, Northeast Agricultural University, Harbin, China

- 5 College of Medicine, Shihezi University, Shihezi, China

- 6 State Key Laboratory for Crop Stress Resistance and High-Efficiency Production, Shaanxi Key Laboratory of Agricultural and Environmental Microbiology, College of Life Sciences, Northwest A&F University, Yangling, Shaanxi, China

- 7 Yantai Agricultural Technology Popularization Center, Yantai, China

Introduction: Weeds represent a critical component of agricultural biodiversity and contribute to a range of ecosystem services, yet they remain a major constraint on global crop production. Remote sensing technology, particularly hyperspectral imaging, has advanced from the study of spectral response patterns to species identification and vegetation monitoring. Consequently, the ability to accurately map weed species and assess their physiological activity in agricultural settings is of growing importance.

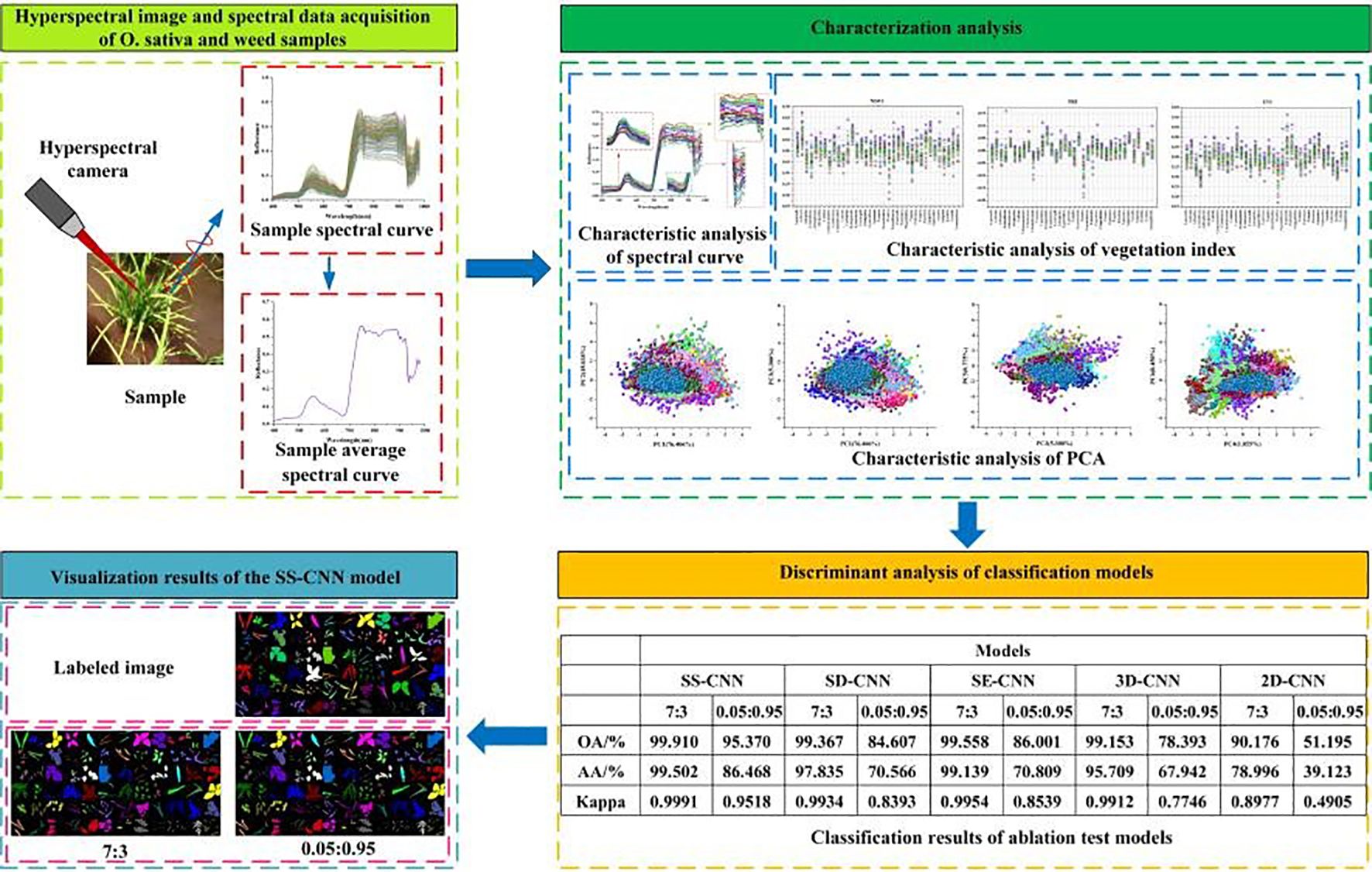

Methods: In this study, we established a hyperspectral library of rice and weed species in cold regions of northern China, comprising a total of 36 species. Using a ground-based hyperspectral camera (SPECIM-IQ), we collected 1080 hyperspectral images and extracted representative spectral reflectance curves for rice and 35 weed species. We employed canopy spectral profile characteristics, vegetation indices, and principal component analysis (PCA) to characterize and explain the differences among various weeds.

Results: A novel deep learning network, SS-CNN, was developed to identify rice and weed species from hyperspectral imagery, and ablation experiments were conducted to evaluate its performance. With the training sample size (Tr) set at 70%, the SS-CNN model outperformed the comparative models and achieved the best identification results (overall accuracy (OA): 99.910%; average accuracy (AA): 99.502%; Kappa: 0.9991). Even with the training sample size reduced to 5%, the SS-CNN algorithm maintained the best classification performance (OA: 95.370%; AA: 86.468%; Kappa: 0.9518).

Discussion: This study demonstrates the application of proximal hyperspectral remote sensing and deep learning networks for rice and weed identification and characterization in harsh field scenarios. It provides a valuable baseline for understanding the hyperspectral characteristics of paddy field weed stress and monitoring their growth status.

1 Introduction

Weeds play a crucial role in enriching biodiversity and ecosystem services, such as promoting biological evolution, improving ecological environments, and maintaining climate stability (Korres et al., 2016; Guo et al., 2018; Gage and Schwartz-Lazaro, 2019). Maintaining a certain level of weed diversity in farmland also supports nutrient cycling, protects natural enemies of pests, and limits soil erosion (Librán-Embid et al., 2020; Quan et al., 2023). At the same time, weed competition for natural resources is an important constraint on rice yield and quality: it can disrupt crop growth; alter the external morphology, biochemical composition, photosynthetic efficiency, and chlorophyll and other pigment contents of rice plants; and, in extreme cases, cause crop failure (Zhang et al., 2019; Sulaiman et al., 2022). The extent of these adverse effects often varies with weed species, growth habit, and environmental conditions (Malik and Singh, 1995; Dass et al., 2017). Therefore, to promptly assess field heterogeneity and weed stress and to mitigate adverse effects on crop productivity and the environment, the ability to map weed species in agricultural fields and analyze weed physiological activity has become of paramount importance. Tracking weed status, however, requires collecting weed information and regularly updating biophysical data, which is difficult to achieve when physiological activity is monitored and species are identified by traditional visual weed assessment (Chen et al., 2013; Liu et al., 2022). Traditional methods are also increasingly costly, as they rely on time-consuming, labor-intensive, and expensive fieldwork.
Consequently, there is an urgent demand for a reliable, efficient approach capable of detecting subtle, species-specific variations in plant growth, physiological status, and productivity parameters. Such an approach should also identify the key drivers of these variations, thereby facilitating the optimization of subsequent crop management strategies and resource allocation.

With the advancement of remote sensing technology, it has become possible to conduct large-scale, regular observations, create weed distribution maps, and monitor changes in agricultural environments using state-of-the-art satellite and aerial sensors, which provide RGB, multispectral, and hyperspectral imagery. However, despite the recent launch of satellites providing sub-meter ground resolution (Congalton et al., 2014), satellite altitude, weather conditions, and highly mixed farmland communities limit what these images can resolve; in addition, the geostationary orbit (GEO) and inclined geosynchronous orbit (IGSO) satellites of the Chinese BeiDou Navigation Satellite System (BDS) exhibit systematic, orbit-angle-dependent errors related to solar radiation pressure (SRP) (Guo et al., 2016). These factors greatly affect the retrieval accuracy of airborne and spaceborne images, which means that in many cases weed infestations can only be detected once they are already widespread. Drones, on the other hand, can offer ground sample distances (GSD) of less than 1 cm. However, this technology also has drawbacks: operating drones requires specific training, flight regulations may be stringent, crashes are common, and weather conditions can reduce image quality or even prevent flights (Barbedo, 2019). Additionally, owing to the heterogeneity of agricultural systems, complex crop cycles, and the similarity in color and spectral characteristics between weeds and crops, detecting weeds and crops in hyperspectral images is a challenging task (Wang et al., 2022b; Coleman et al., 2023). Classification becomes even harder in the presence of crop occlusion and complex backgrounds such as soil. Therefore, in-situ or ground-level spectroscopy for close-range image measurements is often a more feasible way to achieve high spatial resolution.

Several hyperspectral databases of plant-specific information have been established, providing important support for environmental protection, sustainable agriculture, and other fields. Laporte-Fauret et al. (2020) conducted a comprehensive multi-scale survey of coastal dune systems located approximately 20 km southwest of France and generated a spectral library of vegetation community ground-cover types in the dune areas to characterize the spatial distribution of stability patterns in coastal dunes. Prasad et al. (2015) collected canopy-level field spectra and leaf-level laboratory spectra of 34 species from two mangrove ecosystems on the eastern coast of India, removed the water vapor absorption bands, and smoothed the spectra to develop an exclusive spectral library of mangrove species; using this library for species-level mangrove mapping of other regions was left to future studies. Chi et al. (2021) developed a spectral library of 16 common vegetation species and mosses in the Antarctic ice-free region using field spectrometers and analyzed it with spectral discrimination measures to examine the spectral characteristics and potential separability of Antarctic plants in different wavelength ranges. Su et al. (2022) spectrally analyzed and mapped the distribution of triticale weeds in wheat fields by integrating unmanned aerial vehicle (UAV) multispectral imagery with machine learning techniques. Mkhize et al. (2024) recorded 165 GPS points of the weed, maize, and mixed categories in the early growth stages on six maize farms; these points were superimposed on Sentinel-2 images acquired within two days of the field survey to guide the collection of spectral features for the maize, mixed, and weed categories. Dai et al. (2023) collected 700 sets of wheat and weed spectral data and applied a classical support vector machine (SVM) to identify weeds and study the classification performance for wheat and weeds in different bands. While existing spectral libraries encompass a broad range of spectral information, they offer limited coverage of the spectral characteristics of weed species in agricultural ecosystems, especially in paddy fields. Species characteristic of paddy fields, such as E. crus-galli and S. trifolia, are unlikely to be found in these libraries. Cold paddy fields in northern China are known for their high weed species diversity and distinctive agricultural landscape, with most weeds growing in an intensive field environment characterized by a network of canals and vast fertile expanses. This study therefore takes the rich weed flora of paddy fields in northern China as its research object, contributing to the development of hyperspectral images and databases and filling a gap in the study of weed stress species in northern Chinese paddy fields.

Against this background, the work conducted in this study is as follows: (1) A hyperspectral library for rice fields in northern China was established. From May to September 2023, a total of 1080 hyperspectral images were collected in various rice cultivation areas, covering 36 species (belonging to 18 families and 36 genera) of rice and weeds. (2) The physiological activity of rice and weeds was indirectly characterized using canopy spectral profile characteristics and vegetation indices. (3) A deep neural network was developed to identify rice and weed species under real field conditions, and the results of model ablation experiments under different modeling strategies were analyzed to clarify the roles of the network's modules.

2 Materials and methods

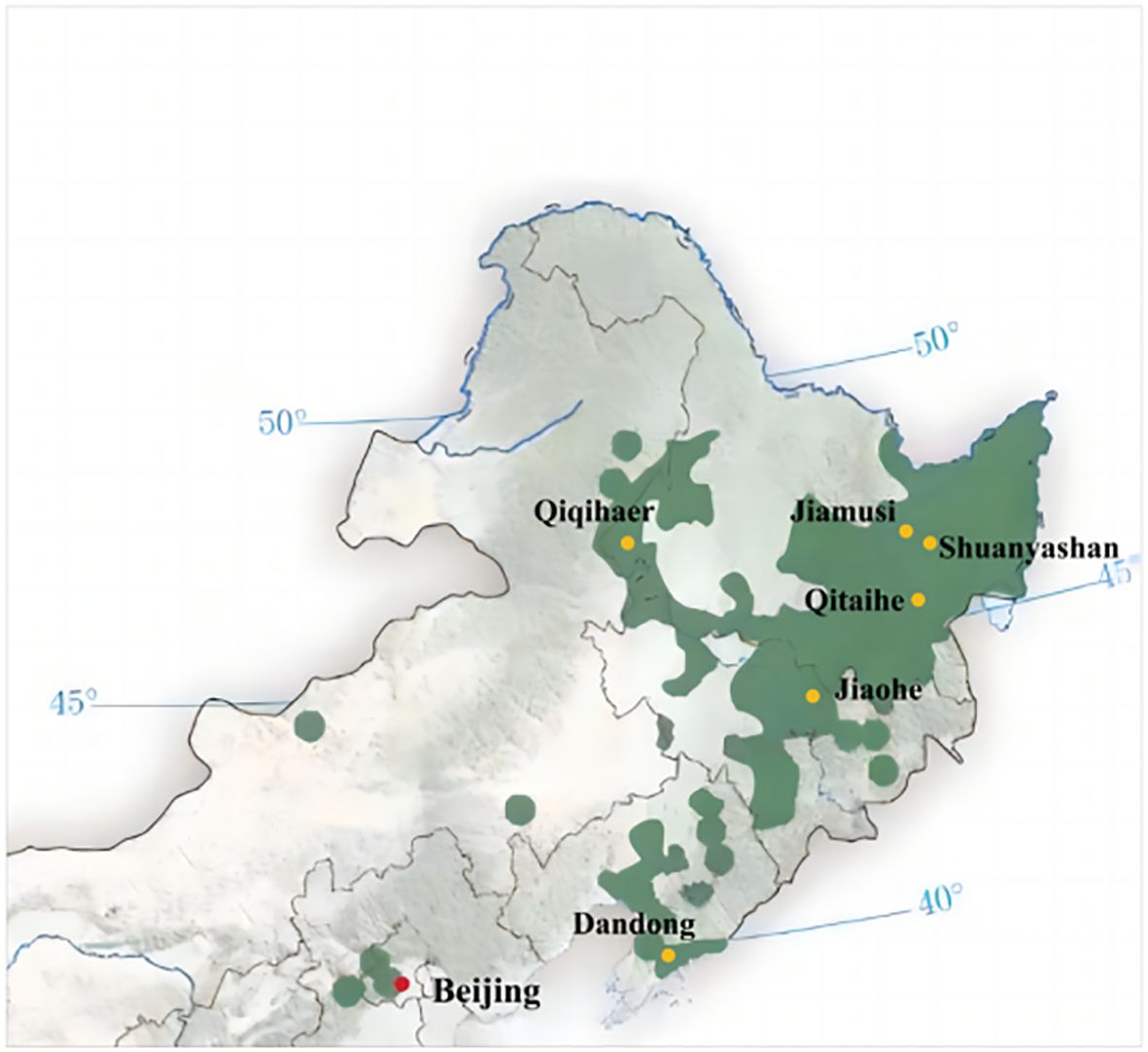

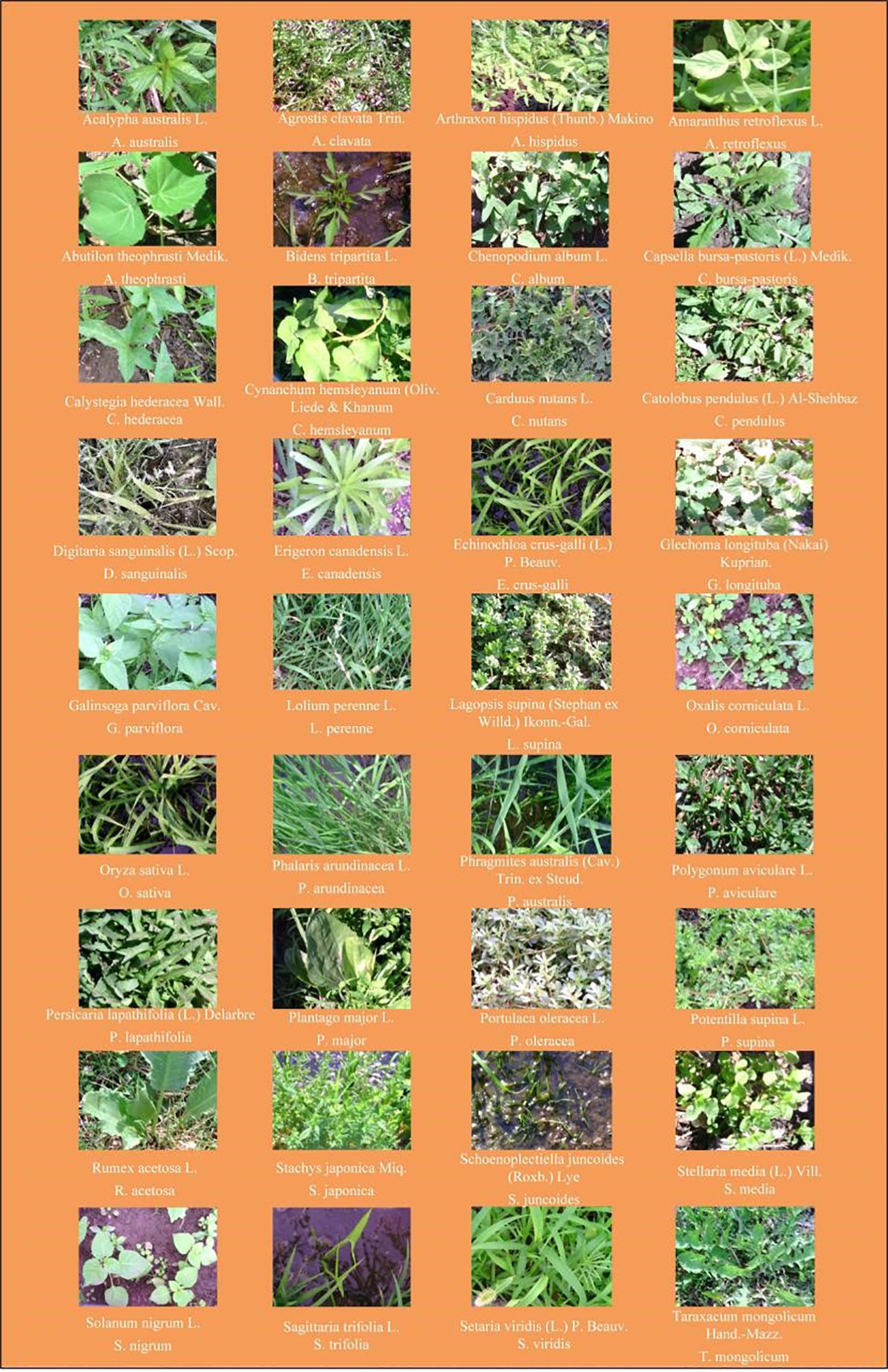

2.1 Test sample acquisition

Aromatic rice type 2 (O. sativa), with 13 leaves and a 135-day growth period, is widely planted in the rice-growing areas of northern China and was selected for this study. The criteria for selecting weed species in paddy fields included, but were not limited to, weed species, size, spatial distribution within the field, proximity to field dikes, traffic on adjacent agricultural roads, and the spatial distribution of samples. The weeds were collected from six locations in the major rice-producing regions of northern China, as shown in Figure 1: (1) Dandong City (123°37′16.59″E ~ 125°10′24.77″E, 40°44′50.65″N ~ 41°5′12.16″N), (2) Jiaohe City (127°3′30.82″E ~ 128°1′33.04″E, 43°28′44.08″N ~ 44°9′58.18″N), (3) Qitaihe City (130°13′22.97″E ~ 131°42′11.23″E, 45°42′41.36″N ~ 46°9′51.20″N), (4) Qiqihar City (123°22′59.83″E ~ 126°14′44.84″E, 46°19′56.73″N ~ 48°10′13.77″N), (5) Shuangyashan City (131°49′28.03″E ~ 134°7′27″E, 45°50′3.93″N ~ 47°28′29.04″N), and (6) Jiamusi City (129°42′51.22″E ~ 134°24′46.84″E, 46°33′5.85″N ~ 48°21′41.30″N). Detailed information on the weed species, which span 18 families, 35 genera, and 35 species, is provided in Figure 2.

2.2 Hyperspectral image acquisition and correction

In this study, a proximal hyperspectral camera (SPECIM IQ; SN: 190-1100381; Specim, Spectral Imaging Ltd., Oulu, Finland) was used to image the rice and weed samples. The camera (207 × 91 × 74 mm, 12.5 mm lens) records 204 spectral bands over 397–1003 nm with a spectral resolution of 7 nm and an image size of 512 × 512 pixels. The camera was connected to a laptop via USB, and hyperspectral images were acquired with Specim IQ Studio software. The image acquisition process is shown in Figure 3.

Before image acquisition, the hyperspectral camera was warmed up for 30 minutes. To ensure data accuracy, a Labsphere Spectralon panel was used for calibration: with a nominal reflectance of 99% and near-perfect reflectance over 250–2500 nm, it serves as the reference in field measurements. The positions of the camera and stand were adjusted so that the samples fell within the camera's field of view. Because of atmospheric effects, solar radiation is altered on its path from the ground object to the camera, so the spectral information recorded in hyperspectral images differs from the actual surface signal (Zhao, 2004). To ensure consistency between the acquired images and the actual field conditions, the Quick Atmospheric Correction (QUAC) algorithm was applied. This correction removes the effects of illumination and of atmospheric constituents such as water vapor, oxygen, carbon dioxide, methane, and ozone on the reflectance of ground objects, enabling retrieval of their actual surface reflectance (Bernstein et al., 2012). QUAC estimates atmospheric transmittance and atmospheric reflectance from the uncorrected image itself using a reflectance model, and then applies these estimates to correct the image and remove atmospheric influences.

To obtain representative spectral information for each sample, this study followed Wang et al. (2022a). First, a single-band image is extracted from the atmospherically corrected hyperspectral image to serve as a grayscale image. The grayscale image is then masked to produce a binary image, and individual rice or weed plants in the binary image are selected as regions of interest (ROIs). The average spectrum extracted from each ROI is taken as the hyperspectral data for that sample. In addition, each pixel is converted to reflectance using the Labsphere Spectralon panel as the reference region, following Equation 1.

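$$R = \frac{I_{\mathrm{raw}} - I_{\mathrm{dark}}}{I_{\mathrm{white}} - I_{\mathrm{dark}}} \tag{1}$$

where $R$ is the reflectance of a pixel; $I_{\mathrm{raw}}$ is the raw value of that pixel; $I_{\mathrm{dark}}$ is the dark-current value taken from the dark frame; and $I_{\mathrm{white}}$ is the average value of the selected white reference region.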
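The two preprocessing steps above (reflectance calibration with Equation 1, then a single-band mask and ROI averaging) can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' code: it assumes the cube is a (height, width, bands) array, that the dark frame and white reference have already been averaged to one value per band, and that plant pixels can be separated from the background by a fixed threshold on one band. The function names, the band index, and the threshold are hypothetical; the text does not state which band or threshold was used.

```python
import numpy as np


def calibrate_reflectance(raw, dark, white):
    """Apply Equation 1 band by band.

    raw:   (H, W, B) raw digital numbers
    dark:  (B,) mean dark-frame value per band
    white: (B,) mean raw value of the Spectralon reference region per band
    """
    # Guard against division by zero in dead or saturated bands.
    denom = np.clip(white - dark, 1e-6, None)
    return (raw - dark) / denom


def roi_mean_spectrum(cube, band_index, threshold):
    """Threshold one band into a binary plant mask and average the masked pixels."""
    gray = cube[:, :, band_index]          # single-band grayscale image
    mask = gray > threshold                # binary image: True = plant pixel
    if not mask.any():
        raise ValueError("mask is empty; adjust band_index or threshold")
    return cube[mask].mean(axis=0), mask   # (B,) mean spectrum, (H, W) mask
```

In practice the mask band would plausibly be a near-infrared band, where vegetation is much brighter than soil or water; that choice is an assumption, not a detail given in the text.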

2.3 Construction of deep neural network classification model for rice and weed species identification

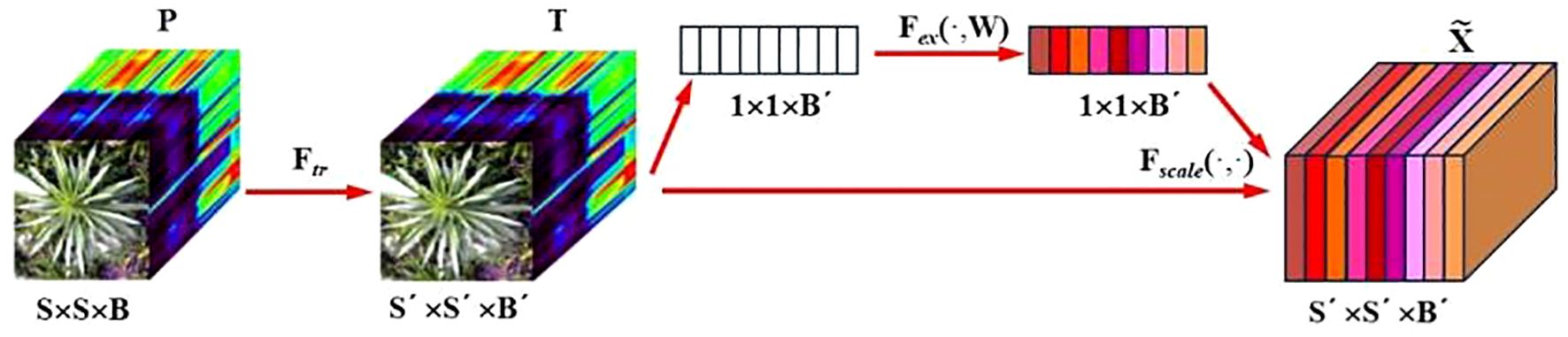

2.3.1 SS-CNN

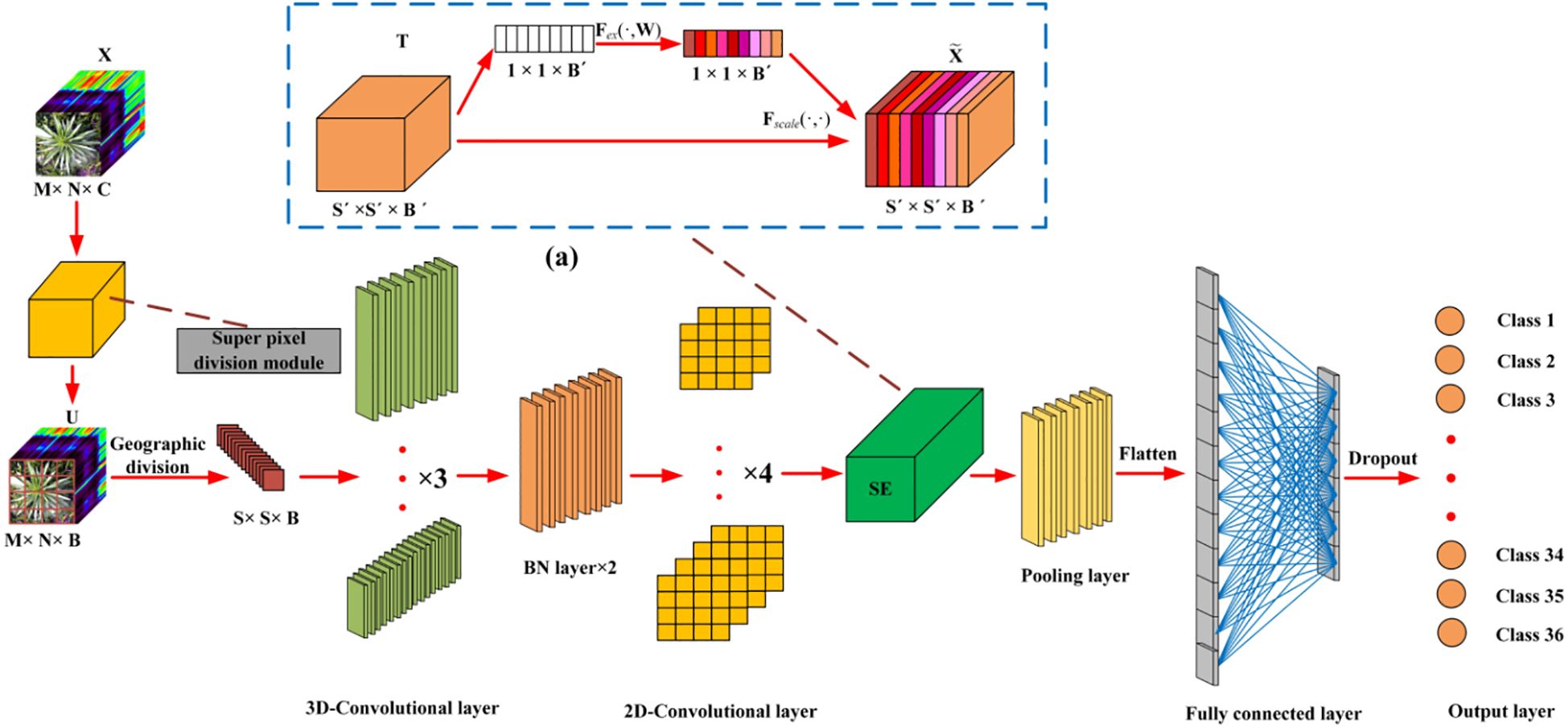

A deep neural network framework was developed to train and validate species-level classification of rice and weeds from the spatial-spectral features of hyperspectral images. Neural networks, inspired by the human brain, are a family of algorithms designed for pattern recognition; with rapid advances in computer hardware and the growing availability of data, they have proven to be among the most effective tools for data analysis (He et al., 2015). We built a deep neural network named SS-CNN (Superpixelwise Division-Squeeze-and-Excitation Convolutional Neural Network) to identify 35 weed species and rice in paddy fields in northern China. Its architecture is illustrated in Figure 4. The network takes hyperspectral images as input and outputs the probabilities of rice and weed species. It comprises an input layer, a superpixel segmentation module, three 3D convolutional layers (with 8, 16, and 32 kernels, respectively), two Batch Normalization (BN) layers, four 2D convolutional layers (with 32, 64, 128, and 256 kernels, respectively), an SE attention module, a pooling layer, a flatten layer, two fully connected layers, and an output layer with 36 neurons. The SoftMax function is applied at the output layer to generate class probabilities.

After the hyperspectral image enters the input layer, the superpixelwise division module segments it with the entropy rate superpixel (ERS) algorithm, in order to make full use of the spatial information contained in the HSI cube. The original hyperspectral image $I \in \mathbb{R}^{M \times N \times C}$ (where $M$ is the image height, $N$ the image width, and $C$ the number of spectral bands) is reduced by this module to a data cube $U \in \mathbb{R}^{M \times N \times B}$: the number of spectral bands falls from $C$ to $B$, while the spatial dimensions (height $M$ and width $N$) are unchanged. This operation retains the spatial information crucial for object recognition while eliminating spectral redundancy. The processed cube is then divided into small, overlapping 3D patches that serve as the model input, each labeled with the class of its center pixel. A 3D neighboring patch $P_{\alpha,\beta} \in \mathbb{R}^{S \times S \times B}$ is generated from $U$ centered at spatial location $(\alpha, \beta)$, covering an $S \times S$ spatial window and all $B$ spectral bands.
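The patch-generation step can be sketched in NumPy as below: every labeled pixel of the reduced cube U yields an S × S × B neighborhood labeled with its center pixel. Zero padding at the image borders and the convention that label 0 marks unlabeled background are assumptions borrowed from common HSI practice, not details stated in the text, and the ERS band-reduction step is not reproduced (U is taken as given). The function name is hypothetical.

```python
import numpy as np


def extract_patches(cube, labels, window=25):
    """Cut an S x S x B neighbourhood around every labelled pixel of U.

    cube:   (M, N, B) data cube after band reduction
    labels: (M, N) integer map; 0 = unlabelled background (assumption)
    Returns patches of shape (n, S, S, B) and the centre-pixel labels.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd so that a centre pixel exists")
    m = window // 2
    # Zero-pad the borders so that edge pixels also get a full window.
    padded = np.pad(cube, ((m, m), (m, m), (0, 0)), mode="constant")
    rows, cols = np.nonzero(labels)
    patches = np.empty((len(rows), window, window, cube.shape[2]), dtype=cube.dtype)
    for i, (r, c) in enumerate(zip(rows, cols)):
        # Pixel (r, c) sits at (r + m, c + m) in the padded cube,
        # so this slice is centred on it.
        patches[i] = padded[r:r + window, c:c + window, :]
    return patches, labels[rows, cols]
```

Because neighboring pixels share most of their window, the patches overlap, which is what the text means by "small overlapping 3D blocks".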

To increase the number of spectral-spatial feature maps while preserving the spectral characteristics of the input HSI data in the output volume, three successive 3D convolutions are applied (He et al., 2023). The 3D convolution kernels are 3×3×7, 3×3×5, and 3×3×3 (two spatial dimensions and one spectral dimension), with 8, 16, and 32 kernels, respectively. BN layers follow the 3D convolutional layers to normalize the extracted features, which speeds up network training and convergence while helping to mitigate overfitting. 2D convolutional layers are then used to extract spatial features, allowing spatial information within different spectral bands to be distinguished robustly without significant loss of spectral information. All 2D convolution kernels are 3×3 (two spatial dimensions), and the numbers of kernels are 32, 64, 128, and 256.
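Because the layer summary in Table 1 is not reproduced here, the sketch below only traces feature-map shapes through the convolution stack described above, under two stated assumptions: every convolution uses stride 1 with no padding ("valid"), a common choice in 3D-2D hybrid HSI networks, and the reduced cube has a hypothetical B = 30 bands. Both the padding mode and B are assumptions; the actual SS-CNN values may differ.

```python
def valid_out(size, kernel, stride=1):
    """Output length of an unpadded ('valid') convolution along one axis."""
    return (size - kernel) // stride + 1


def trace_shapes(window=25, bands=30):
    """Trace (height, width, depth, channels) through the assumed conv stack."""
    h = w = window
    d = bands
    shapes = [("input", (h, w, d, 1))]
    # Three 3D convolutions: 3x3 spatial kernels, spectral depths 7, 5, 3.
    for depth, filters in [(7, 8), (5, 16), (3, 32)]:
        h, w, d = valid_out(h, 3), valid_out(w, 3), valid_out(d, depth)
        shapes.append((f"conv3d_{filters}", (h, w, d, filters)))
    # Fold the remaining spectral depth of the last 3D layer (32 maps) into channels.
    shapes.append(("reshape", (h, w, d * 32)))
    # Four 2D convolutions with 3x3 kernels.
    for filters in (32, 64, 128, 256):
        h, w = valid_out(h, 3), valid_out(w, 3)
        shapes.append((f"conv2d_{filters}", (h, w, filters)))
    return shapes


for name, shape in trace_shapes():
    print(f"{name:12s} {shape}")
```

Under these assumptions the 25×25 window shrinks to 11×11 before the SE module; with "same" padding the spatial size would instead stay at 25×25.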

To mitigate vanishing or exploding gradients, this study uses the Softsign activation function. Softsign has a gentle curve and its derivative decreases slowly, which alleviates the vanishing gradient problem and facilitates more efficient learning (Wang et al., 2019). Its mathematical expression is given in Equation 2:

$$f(x) = \frac{x}{1 + |x|} \tag{2}$$
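For intuition, the NumPy snippet below evaluates Softsign and its derivative, 1/(1 + |x|)², next to the derivative of tanh. The comparison is illustrative only: Softsign's derivative decays polynomially with |x|, whereas tanh's decays exponentially, which is the sense in which Softsign's gradient "decreases slowly".

```python
import numpy as np


def softsign(x):
    """Equation 2: x / (1 + |x|), bounded in (-1, 1)."""
    return x / (1.0 + np.abs(x))


def softsign_grad(x):
    """Derivative of Softsign: 1 / (1 + |x|)^2, polynomial decay in |x|."""
    return 1.0 / (1.0 + np.abs(x)) ** 2


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, exponential decay in |x|."""
    return 1.0 - np.tanh(x) ** 2


x = np.array([0.0, 1.0, 3.0, 6.0])
print(softsign(x))       # values 0, 1/2, 3/4, 6/7
print(softsign_grad(x))  # gradient stays non-negligible for large |x|
print(tanh_grad(x))      # gradient collapses much faster
```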

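Activation functions introduce nonlinearity into the convolutional features (Roy et al., 2019). In the 3D convolutional layers, the activation value $v_{i,j}^{x,y,z}$ at spatial position $(x, y, z)$ of the $j$th feature map in the $i$th layer is computed using Equation 3:

$$v_{i,j}^{x,y,z} = \phi\left(b_{i,j} + \sum_{\tau=1}^{d_{i-1}} \sum_{\lambda=-\eta}^{\eta} \sum_{\rho=-\delta}^{\delta} \sum_{\sigma=-\gamma}^{\gamma} w_{i,j,\tau}^{\sigma,\rho,\lambda} \, v_{i-1,\tau}^{x+\sigma,\, y+\rho,\, z+\lambda}\right) \tag{3}$$

where $\phi$ is the activation function; $b_{i,j}$ is the bias parameter for the $j$th feature map of the $i$th layer; $d_{i-1}$ is the number of feature maps in the $(i-1)$th layer and the depth of kernel $w_{i,j}$ for the $j$th feature map of the $i$th layer; $2\eta+1$ is the depth of the kernel along the spectral dimension; $2\gamma+1$ is the width of the kernel; $2\delta+1$ is the height of the kernel; and $w_{i,j}$ is the weight parameter for the $j$th feature map of the $i$th layer.

In the 2D convolutional layers, the activation value $v_{i,j}^{x,y}$ at spatial position $(x, y)$ in the $j$th feature map of the $i$th layer is given by Equation 4:

$$v_{i,j}^{x,y} = \phi\left(b_{i,j} + \sum_{\tau=1}^{d_{i-1}} \sum_{\rho=-\delta}^{\delta} \sum_{\sigma=-\gamma}^{\gamma} w_{i,j,\tau}^{\sigma,\rho} \, v_{i-1,\tau}^{x+\sigma,\, y+\rho}\right) \tag{4}$$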
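To make the index bookkeeping of the 3D convolution (Equation 3) concrete, the sketch below evaluates it literally with explicit loops for one output feature map. It assumes an unpadded ("valid") convolution and odd kernel sizes, written here as width 2γ+1, height 2δ+1, and spectral depth 2η+1 along the x, y, and z axes; the function name is hypothetical. It is a readability aid, not an efficient implementation; deep learning frameworks compute the same cross-correlation with optimized kernels.

```python
import numpy as np


def softsign(x):
    return x / (1.0 + np.abs(x))


def conv3d_feature_map(v_prev, w, b, phi=softsign):
    """Equation 3 for the j-th feature map of layer i.

    v_prev: (d_prev, X, Y, Z) feature maps of layer i-1
    w:      (d_prev, 2*gamma+1, 2*delta+1, 2*eta+1) kernel weights w_{i,j}
    b:      scalar bias b_{i,j}
    """
    _, X, Y, Z = v_prev.shape
    _, kx, ky, kz = w.shape
    g, dl, e = kx // 2, ky // 2, kz // 2       # gamma, delta, eta
    out = np.empty((X - 2 * g, Y - 2 * dl, Z - 2 * e))
    for x in range(g, X - g):
        for y in range(dl, Y - dl):
            for z in range(e, Z - e):
                # The sums over tau, sigma, rho and lambda collapse into one
                # element-wise product of the kernel with the local window.
                patch = v_prev[:, x - g:x + g + 1, y - dl:y + dl + 1, z - e:z + e + 1]
                out[x - g, y - dl, z - e] = np.sum(w * patch)
    return phi(b + out)
```

Dropping the z loop and the spectral kernel axis gives the corresponding 2D case.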

The SE (Squeeze-and-Excitation) attention module proposed by Hu et al. (2020) is introduced after the 3D-2D convolutional layers (Figure 5). The features $P$ extracted by the convolutional layers first undergo a squeeze operation, which aggregates each feature map across its $a \times a$ spatial dimensions to produce channel descriptors. These descriptors embed the global distribution of channel-wise feature responses, allowing information from the network's global receptive field to be used by its lower layers. An excitation operation follows, in which a self-gating mechanism based on channel dependencies learns a specific activation for each channel, controlling how strongly each channel is excited. The feature map $P$ is then reweighted by these activations to produce the output of the SE block, which is fed directly into the subsequent pooling layer.
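The squeeze, excitation, and reweighting steps can be sketched in NumPy as follows. The ReLU and sigmoid gates and the channel-reduction ratio r follow Hu et al.'s original SE design; the ratio actually used in SS-CNN is not stated, and the weights here are plain arrays standing in for trained fully connected layers. The function name is hypothetical.

```python
import numpy as np


def se_block(P, W1, b1, W2, b2):
    """Squeeze-and-Excitation reweighting of a feature map P of shape (a, a, C).

    W1: (C, C // r) and W2: (C // r, C) are the two fully connected layers of
    the excitation step; r is the channel-reduction ratio.
    """
    z = P.mean(axis=(0, 1))                        # squeeze: global average pool -> (C,)
    hidden = np.maximum(0.0, z @ W1 + b1)          # FC + ReLU
    s = 1.0 / (1.0 + np.exp(-(hidden @ W2 + b2)))  # FC + sigmoid -> weights in (0, 1)
    return P * s                                   # scale each channel of P


rng = np.random.default_rng(0)
C, r = 8, 4
P = rng.normal(size=(5, 5, C))
out = se_block(P, rng.normal(size=(C, C // r)), np.zeros(C // r),
               rng.normal(size=(C // r, C)), np.zeros(C))
print(out.shape)  # the SE block keeps the input shape
```

Since every gate lies in (0, 1), the block can only attenuate or preserve a channel, never amplify it, so informative channels are emphasized relative to the others.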

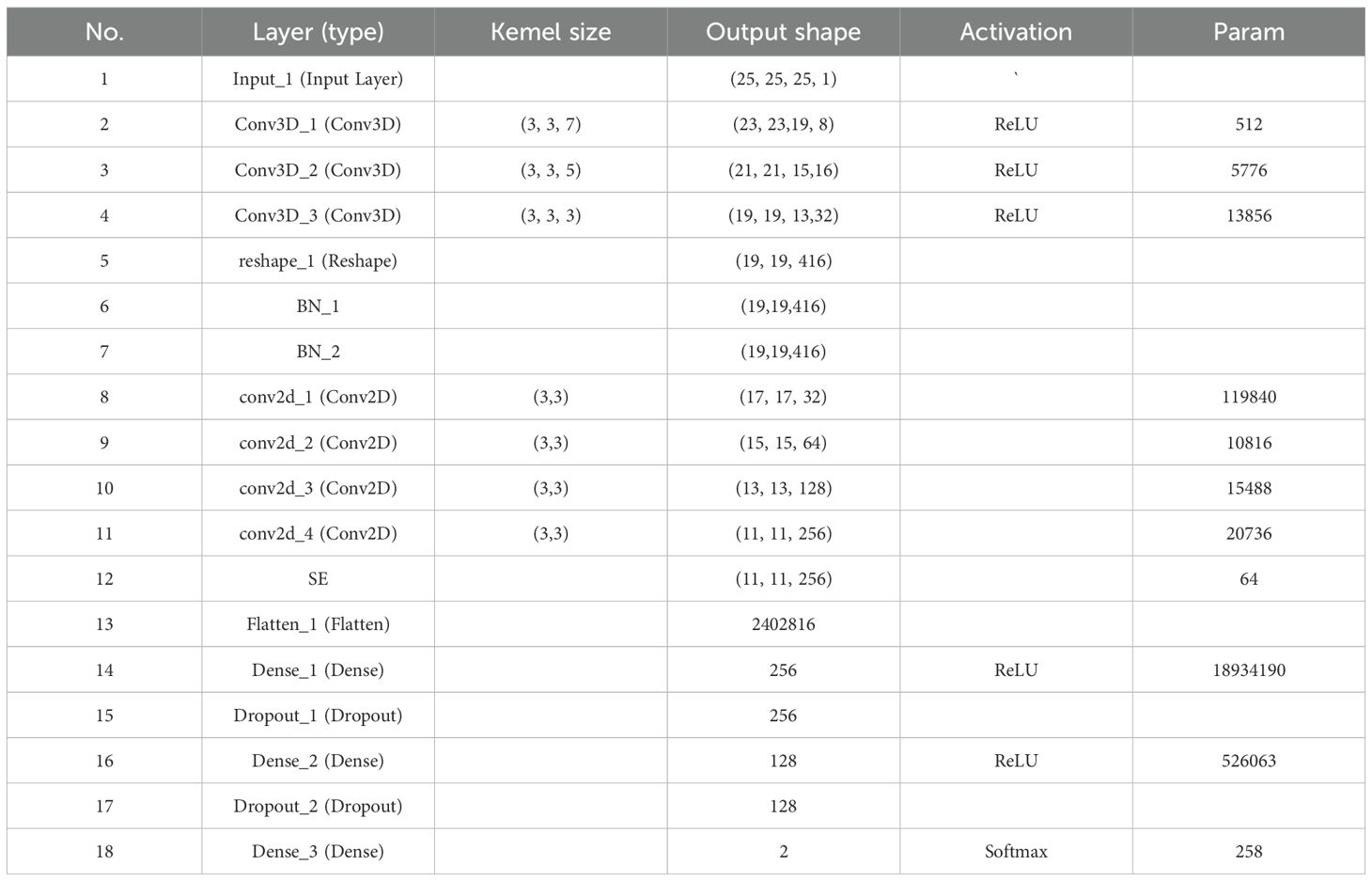

Finally, the processed feature maps are flattened into one-dimensional vectors by a flatten layer and fed into fully connected layers for further abstract feature restructuring. Two fully connected layers with 256 and 128 neurons are used, and dropout with a rate of 0.4 is applied to prevent overfitting. The SS-CNN weights are randomly initialized and trained by backpropagation, using mini-batches of size 32 for 50 epochs without data augmentation. The optimal learning rate was found to be 0.0001, the optimizer was Adam, and the loss function was categorical cross-entropy. Table 1 gives the layer-wise summary of the proposed SS-CNN architecture with a window size of 25×25.
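The SoftMax output and categorical cross-entropy loss named above can be sketched in NumPy for a mini-batch of 32 samples and 36 classes. This is a generic illustration of the two formulas, not the training code used in the study. It also shows a useful sanity check: an untrained network that assigns equal scores to all classes should start with a loss near ln 36 ≈ 3.58.

```python
import numpy as np


def softmax(logits):
    """Row-wise SoftMax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_cross_entropy(probs, onehot, eps=1e-12):
    """Mean over the mini-batch of -sum_k y_k * log(p_k)."""
    return float(-np.mean(np.sum(onehot * np.log(probs + eps), axis=1)))


batch, n_classes = 32, 36                        # mini-batch size and class count from the text
logits = np.zeros((batch, n_classes))            # all-equal scores, as from an untrained network
onehot = np.eye(n_classes)[np.arange(batch) % n_classes]
loss = categorical_cross_entropy(softmax(logits), onehot)
print(loss)  # close to ln(36) for uniform predictions
```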

2.3.2 Ablation experiments

In this study, we propose a Spectral-Spatial 3D-2D Hybrid Deep Neural Network (SS-CNN) for hyperspectral images. It uses a 3D-2D CNN as the feature extraction network, with the superpixelwise division module and the SE attention module as enhanced spatial-spectral feature extraction modules. To demonstrate the advantages of SS-CNN and evaluate the interactions between its modules, ablation experiments were conducted to compare the performance of the network before and after these improvements. Ablation experiments, widely used with complex neural networks, help explain network behavior by removing parts of the network and studying the resulting performance. The models examined here are SS-CNN, SD-CNN, SE-CNN, 3DCNN, and 2DCNN: SD-CNN is SS-CNN without the SE attention module; SE-CNN is SS-CNN without the superpixelwise division module; 3DCNN is SS-CNN without the superpixelwise division module, the SE attention module, and the 2D convolutional layers; and 2DCNN is SS-CNN without the superpixelwise division module, the SE attention module, and the 3D convolutional layers. In addition, 10-fold cross-validation was performed under different modeling strategies (Tr = 0.7 and Tr = 0.05) to evaluate model performance.
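The ablation design and the training-ratio strategies can be summarized in code. The sketch below is hypothetical: the `ABLATION_VARIANTS` table simply encodes which modules each variant keeps, as described in the text, and `stratified_split` shows one way to draw a per-class training fraction Tr. Whether the study split per class, and the rule of keeping at least one training sample per class so that rare classes still appear at Tr = 0.05, are assumptions. Repeating the split with different seeds gives repeated runs; the exact cross-validation protocol is not reproduced here.

```python
import numpy as np

# Which modules each ablation variant keeps, following the text.
ABLATION_VARIANTS = {
    "SS-CNN": {"superpixel": True,  "se": True,  "conv3d": True,  "conv2d": True},
    "SD-CNN": {"superpixel": True,  "se": False, "conv3d": True,  "conv2d": True},
    "SE-CNN": {"superpixel": False, "se": True,  "conv3d": True,  "conv2d": True},
    "3DCNN":  {"superpixel": False, "se": False, "conv3d": True,  "conv2d": False},
    "2DCNN":  {"superpixel": False, "se": False, "conv3d": False, "conv2d": True},
}


def stratified_split(labels, train_ratio, seed=0):
    """Return train/test indices with `train_ratio` of each class in training.

    At least one training sample per class is kept (assumption), so that
    rare classes still appear when train_ratio is small.
    """
    rng = np.random.default_rng(seed)
    train, test = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train = max(1, int(round(train_ratio * len(idx))))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:])
    return np.array(train), np.array(test)
```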

2.4 PCA

PCA (principal component analysis) is an unsupervised learning technique that supports data visualization through dimensionality reduction and cluster analysis. It offers insight into complex multivariate data and is widely used to process hyperspectral imaging (HSI) data (Amigo et al., 2013; Bro and Smilde, 2014). PCA transforms the original spectral variables into a set of new, mutually orthogonal (uncorrelated) variables known as principal components (PCs), each capturing distinct information. By retaining only the top PCs with the largest contribution to variance, it effectively represents the primary information in the original data. The number of PCs to retain depends on the percentage of the total variance they cumulatively account for (Guo et al., 2021). The method yields a simplified set of factors that can be used for exploration and provides an efficient graphical representation and compact description of the entire large spectral dataset.
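A minimal NumPy sketch of PCA as described above: the spectra are mean-centered, decomposed with a singular value decomposition, and the smallest number of PCs whose cumulative explained variance reaches a chosen threshold is kept. The function name and the default 0.99 threshold are illustrative assumptions, not settings reported in the text.

```python
import numpy as np


def pca(X, cum_threshold=0.99):
    """PCA via SVD of the mean-centred spectra.

    X: (n_samples, n_bands) reflectance spectra.
    Keeps the fewest PCs whose cumulative explained variance reaches
    `cum_threshold`; returns their scores and per-PC variance ratios.
    """
    Xc = X - X.mean(axis=0)
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    ratio = S**2 / np.sum(S**2)                   # explained-variance ratio per PC
    k = int(np.searchsorted(np.cumsum(ratio), cum_threshold)) + 1
    k = min(k, len(ratio))                        # guard against rounding at the top end
    scores = Xc @ Vt[:k].T                        # projections onto the first k PCs
    return scores, ratio[:k]
```

With the variance shares reported in Section 3.2 (76.406%, 15.038%, 5.300%, 1.055%, 0.775%, 0.450%), the cumulative total first exceeds 99% at the sixth PC (99.024%), so a 0.99 threshold would retain exactly those six components.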

2.5 Vegetation index

In remote sensing, vegetation indices derived from remotely sensed data provide an effective and direct means of qualitative and quantitative mapping for species identification, vegetation coverage, health status, physiological activity, leaf nitrogen content, leaf area, canopy coverage, and structure. Notable examples include the normalized difference vegetation index (NDVI), photochemical reflectance index (PRI), enhanced vegetation index (EVI), and plant senescence reflectance index (PSRI), among other empirical and semi-empirical spectral metrics (Maes and Steppe, 2019). NDVI is the most widely used indicator for monitoring crop growth, health status, and vegetative greenness (Li et al., 2019), and it correlates strongly with vegetation health, green biomass, and vegetation productivity. It is calculated as the difference between near-infrared and red reflectance divided by their sum, as shown in Equation 5:

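$$\mathrm{NDVI} = \frac{R_{\mathrm{NIR}} - R_{\mathrm{Red}}}{R_{\mathrm{NIR}} + R_{\mathrm{Red}}} \tag{5}$$

where $R_{\mathrm{NIR}}$ is the reflectance in the near-infrared spectral range and $R_{\mathrm{Red}}$ is the reflectance in the red spectral range.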

PRI is a photosynthetic index based on narrow-band reflectance, defined as the difference between the reflectance at 531 nm and at 570 nm divided by their sum, as shown in Equation 6. It is considered an effective indicator of plant net photosynthetic rate, CO2 uptake, and nutrient deficiency. Net photosynthetic rate is a key metric of photosynthetic capacity, vegetation productivity, and overall plant growth; a higher net photosynthetic rate indicates better leaf structure and function. Notably, PRI is closely associated with the interconversion of carotenoids in the xanthophyll cycle, which protects plants against excessive light exposure (Gamon et al., 1992).

$$\mathrm{PRI} = \frac{R_{531} - R_{570}}{R_{531} + R_{570}} \tag{6}$$

where $R_{531}$ and $R_{570}$ are the reflectance values at the 531 nm and 570 nm bands, respectively.

The enhanced vegetation index (EVI) was analyzed as an indicator of vegetation activity. It is strongly correlated with chlorophyll content and photosynthetic activity (Huete et al., 2002) and combines the red, near-infrared, and blue reflectance bands in a normalized ratio. EVI values generally range from −1 to +1. EVI is calculated as shown in Equation 7:

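$$\mathrm{EVI} = 2.5 \times \frac{R_{\mathrm{NIR}} - R_{\mathrm{Red}}}{R_{\mathrm{NIR}} + 6R_{\mathrm{Red}} - 7.5R_{\mathrm{Blue}} + 1} \tag{7}$$

where $R_{\mathrm{NIR}}$, $R_{\mathrm{Red}}$, and $R_{\mathrm{Blue}}$ are the reflectance values in the near-infrared, red, and blue spectral bands, respectively.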
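A NumPy sketch of the three indices for a single reflectance spectrum, picking the band nearest to each target wavelength. The 531 nm and 570 nm bands for PRI come from the text; the NIR (800 nm), red (670 nm), and blue (470 nm) band centers are assumptions, since the exact bands used in this study are not stated, and the EVI coefficients are the standard ones of Huete et al. (2002). Function names are hypothetical.

```python
import numpy as np


def nearest_band(wavelengths, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(wavelengths - target_nm)))


def vegetation_indices(wavelengths, refl, nir_nm=800.0, red_nm=670.0, blue_nm=470.0):
    """NDVI, PRI and EVI for one reflectance spectrum.

    nir_nm, red_nm and blue_nm are assumed band centres, not values from the study.
    """
    def band(nm):
        return refl[nearest_band(wavelengths, nm)]

    nir, red, blue = band(nir_nm), band(red_nm), band(blue_nm)
    r531, r570 = band(531.0), band(570.0)
    ndvi = (nir - red) / (nir + red)
    pri = (r531 - r570) / (r531 + r570)
    # Standard EVI coefficients: G = 2.5, C1 = 6, C2 = 7.5, L = 1.
    evi = 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)
    return {"NDVI": float(ndvi), "PRI": float(pri), "EVI": float(evi)}
```

An evenly spaced grid such as `np.linspace(397, 1003, 204)` is a convenient stand-in for the camera's 204 band centers, though the real centers come from the instrument's calibration.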

2.6 Classification model evaluation (OA, AA, Kappa)

In this study, overall accuracy (OA), average accuracy (AA), the Kappa coefficient, producer's accuracy (PA), and user's accuracy (UA) were used to evaluate how well the deep neural network classifiers discriminate rice and weed samples. OA is the proportion of correctly classified samples among all test samples and is usually used to evaluate the overall performance of a model (Zhang et al., 2019). AA is the mean of the per-class accuracies, and Kappa measures the agreement between the classification results and the reference labels beyond what would be expected by chance. PA is the proportion of reference samples of a class that are correctly classified, while UA is the proportion of samples assigned to a class that truly belong to it (Cao et al., 2018). The closer OA, AA, and Kappa are to 1, the better the classification performance. The formulas for OA, AA, Kappa, PA, and UA are given in Equations 8–12:

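$$\mathrm{OA} = \frac{\sum_{i=1}^{n} x_{ii}}{N} \tag{8}$$

$$\mathrm{AA} = \frac{1}{n}\sum_{i=1}^{n} \frac{x_{ii}}{x_{i+}} \tag{9}$$

$$\mathrm{Kappa} = \frac{N\sum_{i=1}^{n} x_{ii} - \sum_{i=1}^{n} x_{i+}\,x_{+i}}{N^{2} - \sum_{i=1}^{n} x_{i+}\,x_{+i}} \tag{10}$$

$$\mathrm{PA}_i = \frac{x_{ii}}{x_{i+}} \tag{11}$$

$$\mathrm{UA}_i = \frac{x_{ii}}{x_{+i}} \tag{12}$$

where $x_{i+} = \sum_{j} x_{ij}$ is the number of samples whose true class is $i$; $x_{+i} = \sum_{j} x_{ji}$ is the number of samples predicted as class $i$; $n$ is the total number of categories; $N$ is the total number of samples; $x_{ij}$ is the number of samples whose true class is $i$ but whose predicted class is $j$; and $x_{ii}$ is the number of samples whose true and predicted classes are both $i$.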
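All five metrics can be computed from a single confusion matrix whose entry [i, j] counts samples of true class i predicted as class j. The NumPy sketch below is a generic implementation of the standard formulas, not the evaluation code used in the study; the function name is hypothetical. Note that UA is undefined (division by zero) for a class that is never predicted.

```python
import numpy as np


def accuracy_metrics(cm):
    """OA, AA, Kappa, PA and UA from a confusion matrix.

    cm[i, j] = number of samples whose true class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    N = cm.sum()
    diag = np.diag(cm)
    true_tot = cm.sum(axis=1)      # x_{i+}: reference samples per class
    pred_tot = cm.sum(axis=0)      # x_{+i}: predicted samples per class
    oa = diag.sum() / N
    pa = diag / true_tot           # producer's accuracy per class
    ua = diag / pred_tot           # user's accuracy per class
    aa = pa.mean()                 # average of per-class accuracies
    chance = np.sum(true_tot * pred_tot)
    kappa = (N * diag.sum() - chance) / (N**2 - chance)
    return {"OA": oa, "AA": aa, "Kappa": kappa, "PA": pa, "UA": ua}


print(accuracy_metrics([[8, 2], [1, 9]]))
```

For this two-class example, OA = 17/20 = 0.85, while Kappa is only 0.70 because part of the agreement is expected by chance.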

3 Results and discussion

3.1 Analysis of spectral characteristics of samples

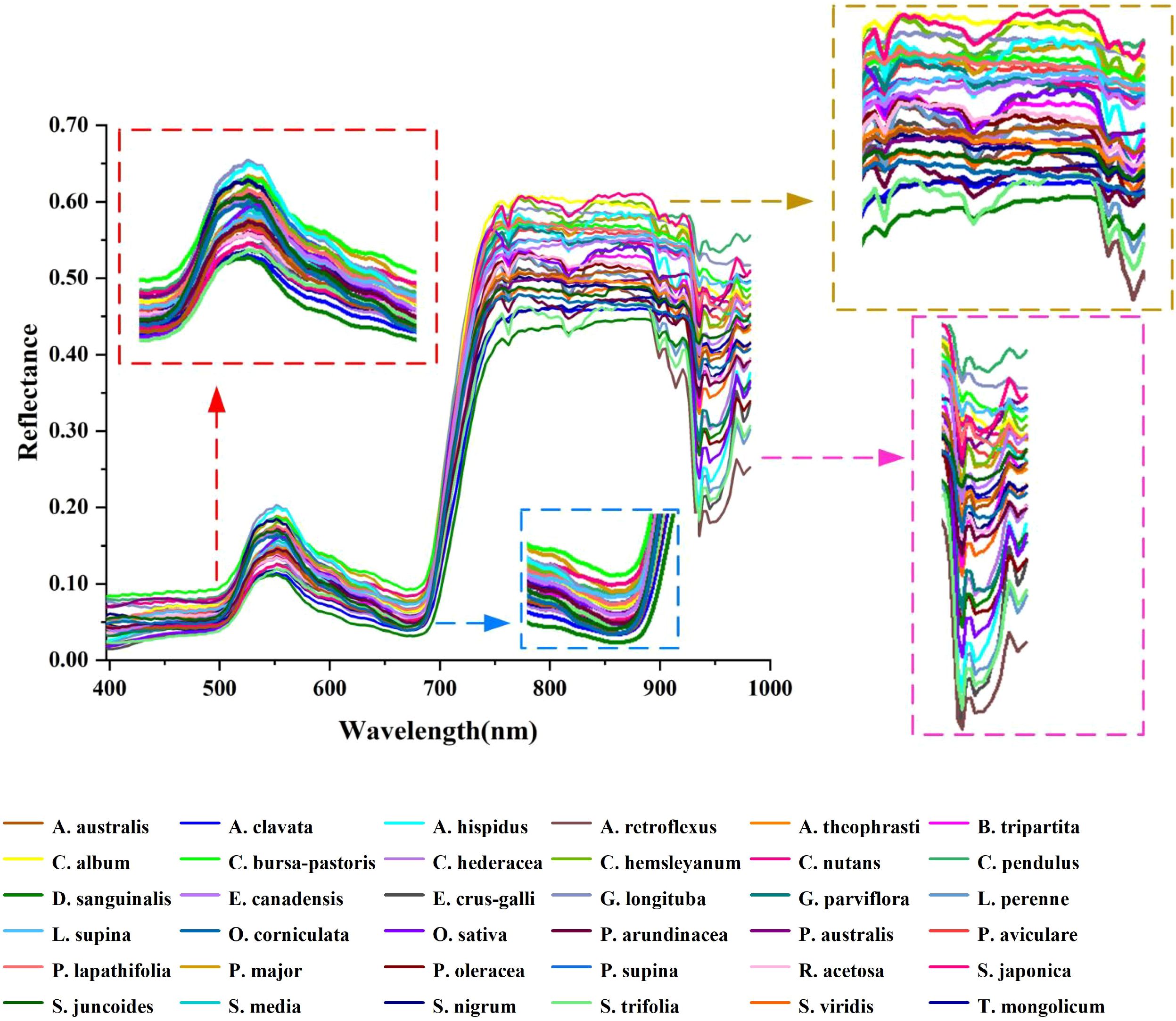

The 982–1003 nm region was highly noisy during image acquisition of the rice and weed samples, so after excluding it, the spectral range extracted from the hyperspectral images was 397–982 nm (197 wavebands). The reflectance spectra of the rice and weed samples are shown in Figure 6. In general, plant spectra are mainly affected by leaf pigment content in the visible region (400–700 nm) (Yoder and Pettigrew-Crosby, 1995), whereas the near-infrared (NIR) region (700–1100 nm) is strongly influenced by leaf and canopy structure, which can vary with phenology as well as species (Gausman, 1985). Rice (Oryza sativa, O. sativa) and weed samples exhibit a 'green peak' at 550 nm in the visible green region (520–600 nm). This occurs because pigment absorption, and hence the photosynthetic use of light, is relatively weak in this range, so less light is absorbed and reflectance is higher (Feret et al., 2008). In the visible red region (630–690 nm), a 'red valley' appears at 680 nm; this band is where chlorophyll absorption and photosynthetic activity are highest, so light absorption increases and reflectance decreases (Herrmann et al., 2011). In the near-infrared region (700–930 nm), reflectance rises rapidly and reaches its maximum at about 760 nm, forming a reflectance plateau (Shapira et al., 2013). NIR reflectance is governed by internal light scattering in leaves, which depends on anatomical characteristics such as leaf thickness, density, and stomatal structure (Cavender-Bares et al., 2020). A dicotyledonous leaf has more air spaces in its spongy mesophyll than a monocotyledonous leaf (Raven et al., 2005) of the same thickness and age, resulting in higher NIR reflectance (Gausman, 1985).
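A NumPy sketch of the band cropping and of locating the two visible-region features described above, assuming the spectral dimension is the last axis of the array. The search windows are the green (520–600 nm) and red (630–690 nm) ranges given in the text; how many bands survive the 982 nm cutoff depends on the camera's actual band centers. Function names are hypothetical.

```python
import numpy as np


def crop_noisy_bands(wavelengths, cube, max_nm=982.0):
    """Drop bands above max_nm (the noisy tail); bands are on the last axis."""
    keep = wavelengths <= max_nm
    return wavelengths[keep], cube[..., keep]


def green_peak_red_valley(wavelengths, spectrum):
    """Locate the green reflectance peak (520-600 nm) and red valley (630-690 nm)."""
    green = (wavelengths >= 520) & (wavelengths <= 600)
    red = (wavelengths >= 630) & (wavelengths <= 690)
    peak_nm = wavelengths[green][np.argmax(spectrum[green])]
    valley_nm = wavelengths[red][np.argmin(spectrum[red])]
    return float(peak_nm), float(valley_nm)
```

Comparing the located peak and valley positions across species is one simple way to quantify the shared visible-region shape visible in the mean spectra.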

In more detail, several reflectance valleys can be observed, reflecting the pronounced spectral characteristics shown in Figure 6. The feature at 760 nm may be linked to the third overtone of O-H stretching in leaf water. A reduction in fluorescence around 760–810 nm could also indicate a decline in photosynthesis: solar energy absorbed by chlorophyll is used for carbon fixation and dissipated as heat, and part of it is re-emitted at longer wavelengths as chlorophyll fluorescence (Krause and Weis, 1991). In addition, an absorption trough appears at 935 nm in the original spectral curves. The sharp decrease in reflectance beyond 935 nm suggests that reflectance in this region is no longer governed by the leaf's internal structure. It is well established that higher plant water content lowers spectral reflectance in the NIR range (780–1300 nm) (Zhang et al., 2022b). The decrease in reflectance around 935 nm is inferred to result from cellular fluids, absorbed water, and carbon dioxide emissions within the leaf, as well as from the structural properties of cell membranes (Qu et al., 2017).

3.2 PCA preliminary analysis

PCA was applied to explore spectral differences and examine the natural grouping of the samples in more detail. As shown in Figure 7, the first six principal components accounted for 99.024% of the total variance of the spectral data and revealed a complex clustering pattern. Specifically, PC1, PC2, PC3, PC4, PC5, and PC6 explained 76.406%, 15.038%, 5.300%, 1.055%, 0.775%, and 0.450% of the variance, respectively. In the PC1-PC2 plot (Figure 7a), rice (O. sativa) and weeds such as O. corniculata and C. pendulus formed somewhat distinct clusters, but the clusters of the other weeds lay very close together and were poorly separated. The PC1-PC3 plot (Figure 7b) showed clearer separability for A. clavata, S. japonica, and O. corniculata. In the PC3-PC5 plot (Figure 7c), L. supina, A. clavata, and R. acetosa lay mainly on the negative side of PC3, whereas O. sativa, S. nigrum, and A. hispidus lay mainly on the positive side. In the PC4-PC6 plot (Figure 7d), A. hispidus, P. arundinacea, and S. media showed distinct clustering and separation: A. hispidus lay mainly on the positive side of PC6, P. arundinacea near the origin of PC6, and S. media mainly on the negative side of PC6. These results show that the clusters of most weed samples overlap heavily, weakening their separability, possibly because rice and the weed species are all green plants with similar spectral fingerprints. In summary, although PCA does not precisely differentiate the sample types, it does reveal clustering and separation patterns among rice and weed samples, and further differentiation can be achieved with end-to-end deep learning models.

Figure 7. PCA analysis diagram of rice and weed samples. (a) PC1-PC2; (b) PC1-PC3; (c) PC3-PC5; (d) PC4-PC6.

3.3 Vegetation index analysis

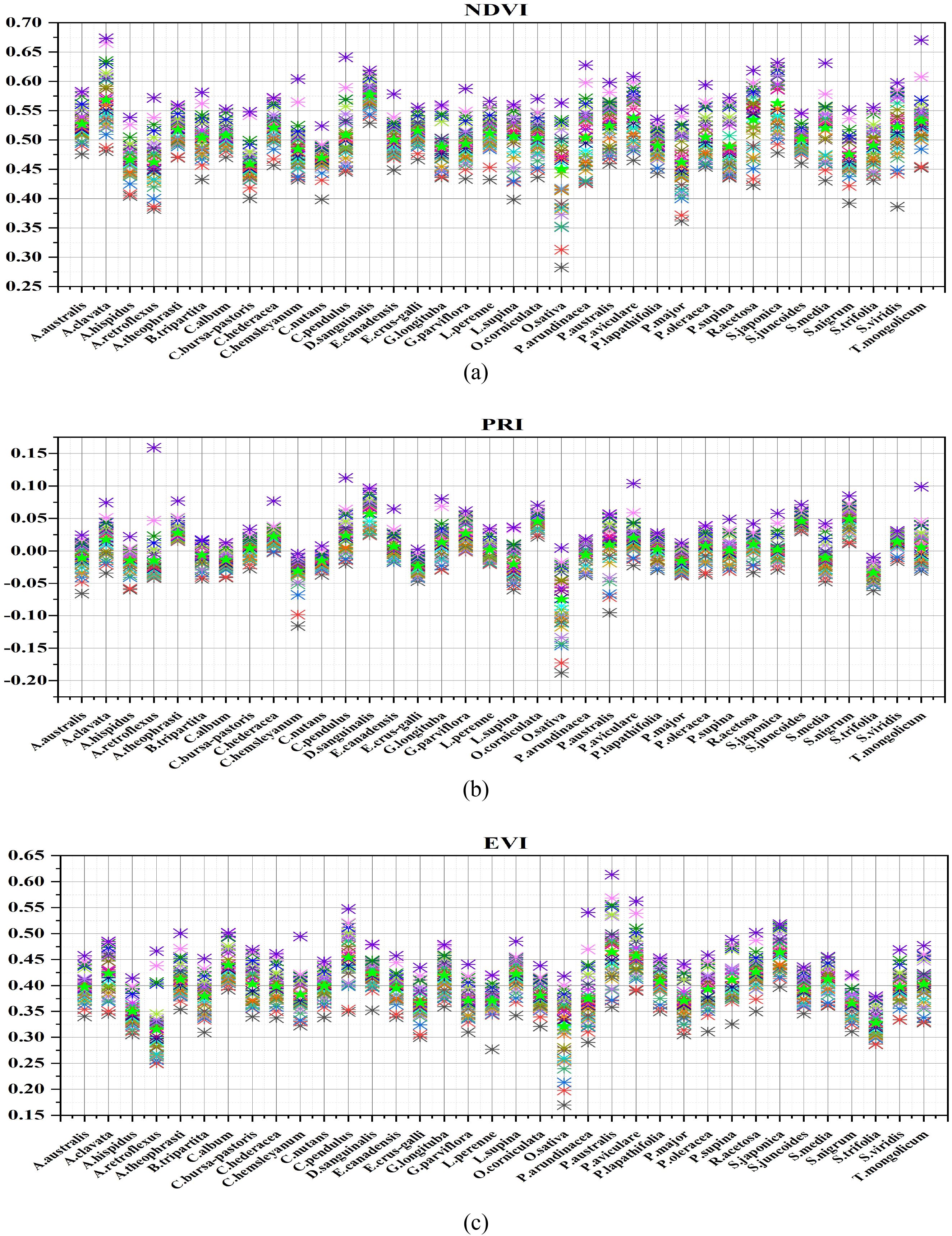

The high-spectral-resolution (narrowband) images obtained with the SPECIM IQ provide abundant spectral information, particularly in the visible and near-infrared regions. These narrow bands can be used to study weed species growing under different growth and physiological conditions in northern Chinese paddy fields. Figure 8 illustrates how the NDVI, PRI, and EVI indices vary among species. In Figure 8a, NDVI reflects plant growth and physiological condition, and its values indicate that the various weeds are not growing equally well (Bai et al., 2019). D. sanguinalis, A. clavata, and S. japonica samples exhibit higher NDVI values overall, O. sativa samples display lower values, and P. oleracea, B. tripartita, and P. arundinacea fall in between. These differences likely stem from differences in the growth environments of rice and the weed species, as well as from the inherent physiological structure and activity of each species. Furthermore, the NDVI values of A. australis, P. australis, R. acetosa, S. viridis, and T. mongolicum are similar, indicating that these weed species share a similar growth status.

Figure 8. Vegetation index distributions of rice and weed samples: (a) NDVI, (b) PRI, (c) EVI. ★ represents the mean vegetation index value of each sample type; ✱ represents the mean vegetation index value of the sample in each hyperspectral image of a species (each hyperspectral image is shown in a different color).
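NDVI is the normalized difference between near-infrared and red reflectance. The sketch below computes a per-pixel NDVI map from a hyperspectral cube and then the two kinds of averages marked in Figure 8: a mean over the plant pixels of each hyperspectral image (✱) and a species-level mean (★). The band centres (670 nm red, 800 nm NIR), the plant mask, the 204-band grid, and taking the species value as the mean of the image means are all assumptions for illustration, since the exact bands and averaging are not restated in this section.

```python
import numpy as np


def nearest_band(wavelengths, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths, dtype=float) - target_nm)))


def ndvi_map(cube, wavelengths, red_nm=670.0, nir_nm=800.0):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red) for a cube of shape (H, W, bands)."""
    red = cube[..., nearest_band(wavelengths, red_nm)]
    nir = cube[..., nearest_band(wavelengths, nir_nm)]
    return (nir - red) / (nir + red)


def image_and_species_means(index_maps, plant_masks):
    """Mean index over plant pixels of each image, and the mean of those image means."""
    per_image = [float(m[mask].mean()) for m, mask in zip(index_maps, plant_masks)]
    return per_image, float(np.mean(per_image))


if __name__ == "__main__":
    wl = np.linspace(400, 1000, 204)                 # assumed 204-band grid
    cube = np.full((4, 4, 204), 0.1)
    cube[..., nearest_band(wl, 670.0)] = 0.05        # low red reflectance
    cube[..., nearest_band(wl, 800.0)] = 0.45        # high NIR reflectance
    ndvi = ndvi_map(cube, wl)                        # 0.40 / 0.50 = 0.8 everywhere
    mask = np.ones((4, 4), dtype=bool)
    print(image_and_species_means([ndvi, ndvi], [mask, mask]))
```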

Another crucial vegetation index is the Photochemical Reflectance Index (PRI), which is associated with the plant’s xanthophyll cycle and photosynthetic light use efficiency (Fréchette et al., 2016). Figure 8b depicts the distribution of PRI across samples, which differs from that of NDVI. D. sanguinalis, S. nigrum, and S. juncoides display the highest PRI values; T. mongolicum, C. bursa-pastoris, and P. lapathifolia fall within the intermediate range; and C. hemsleyanum, E. crus-galli, O. sativa, and S. trifolia have the lowest values. This suggests that xanthophyll content and photosynthetic light use efficiency vary among rice and weed species. The underlying reason may be linked to the synthesis of xanthophylls within chloroplasts, which is related to the photosynthetic process. In addition, inherent physiological traits and differences in photosynthetic light use efficiency contribute to different levels of photosynthesis, resulting in distinct PRI distributions among species. Note that, compared with NDVI, the PRI values of the samples are somewhat less distinct from one another. Notably, O. sativa exhibits the lowest PRI value and differs substantially from the other weed species, indicating a lower xanthophyll content.
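For reference, the standard narrow-band form of PRI (Gamon et al., 1992) contrasts reflectance at 531 nm, which responds to xanthophyll-cycle pigment changes, with a reference band at 570 nm. We assume this is the form behind Figure 8b, since the band choice is not restated in this section:

$$
\mathrm{PRI} = \frac{R_{531} - R_{570}}{R_{531} + R_{570}}
$$

where $R_{\lambda}$ is the reflectance at wavelength $\lambda$ (nm). For example, $R_{531} = 0.10$ and $R_{570} = 0.12$ give $\mathrm{PRI} = -0.02/0.22 \approx -0.091$, so lower (more negative) PRI values correspond to relatively lower reflectance at 531 nm.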

Figure 8c illustrates the distribution of EVI values. P. australis, S. japonica, and P. aviculare samples exhibit higher EVI values, O. sativa and A. retroflexus samples generally show lower values, and C. nutans, C. hederacea, and S. viridis fall in the middle range. This pattern can be attributed to the relationship between EVI and the chlorophyll content and photosynthetic activity of the plants. Chlorophyll, the key photosynthetic pigment, strongly influences light absorption and scattering, so higher chlorophyll content and photosynthetic activity are associated with higher EVI. Notably, P. australis exhibits the highest EVI values, likely because its physiological structure supports the highest chlorophyll content and photosynthetic activity. Taken together, the NDVI, PRI, and EVI results reveal variations in vegetation indices among individual samples due to differences in growth conditions, physiological activity, and environmental factors. However, the vegetation index distributions of most samples are similar and lack significant differentiation, so deep learning models are needed to further distinguish the species.
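EVI is commonly computed with the MODIS coefficients shown below, in which the blue band corrects for aerosol effects and the constant 1 adjusts for canopy background. We assume this standard form here, since the coefficients and band centres used for Figure 8c are not restated in this section:

$$
\mathrm{EVI} = 2.5 \times \frac{R_{\mathrm{NIR}} - R_{\mathrm{Red}}}{R_{\mathrm{NIR}} + 6R_{\mathrm{Red}} - 7.5R_{\mathrm{Blue}} + 1}
$$

For example, $R_{\mathrm{NIR}} = 0.45$, $R_{\mathrm{Red}} = 0.05$, and $R_{\mathrm{Blue}} = 0.04$ give $\mathrm{EVI} = 2.5 \times 0.40 / 1.45 \approx 0.69$, whereas NDVI for the same reflectances is 0.80; the additional terms make EVI less prone to saturation over dense canopies.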

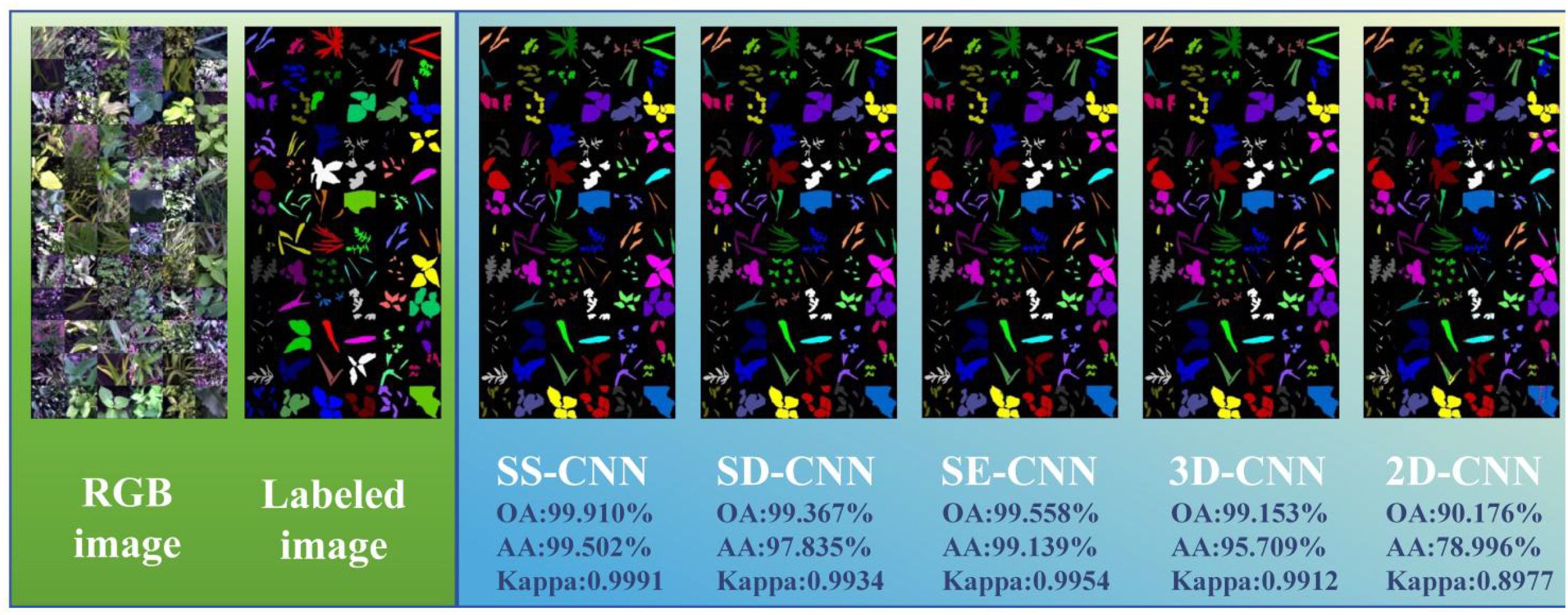

3.4 Classification and visualization results under normal training samples (Tr = 0.7)

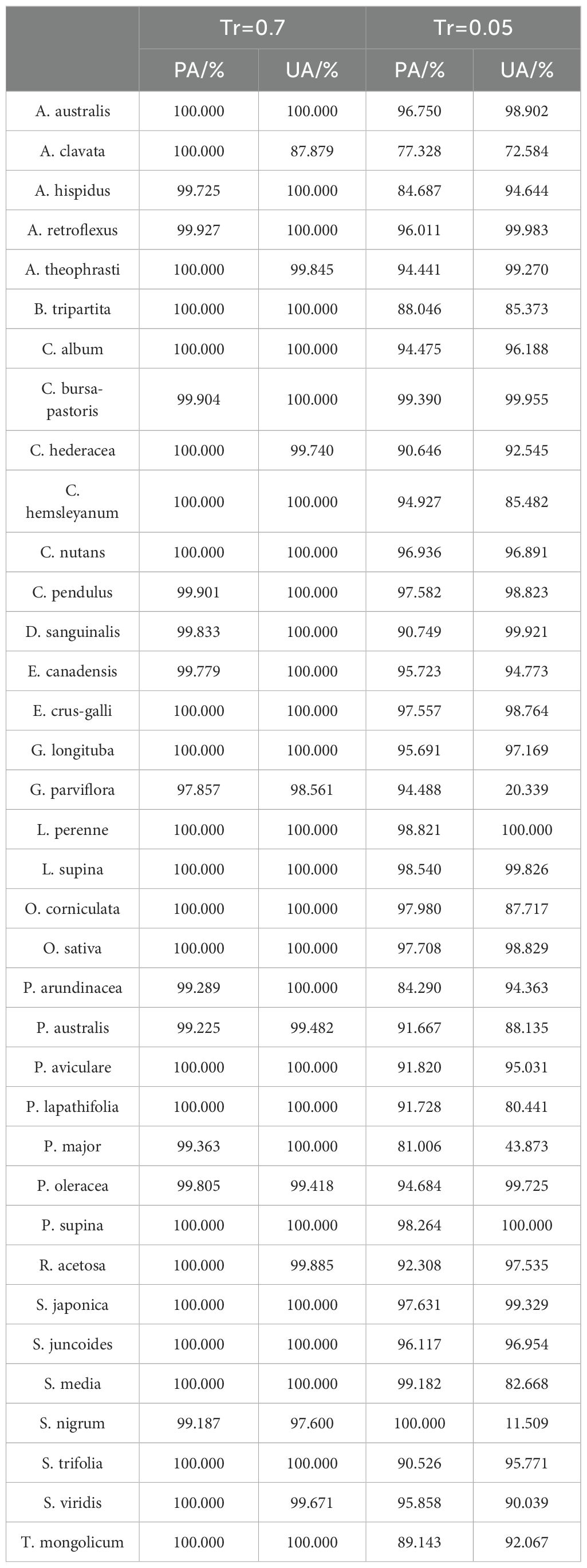

In this study, rice and weed samples were discriminated using several classification algorithms, namely SS-CNN, SD-CNN, SE-CNN, 3D-CNN, and 2D-CNN, and the best-performing model was selected for classifying rice and weed samples. Figure 9 illustrates the classification results and visualizations of these algorithms on the test dataset. SS-CNN outperforms the other algorithms in OA, AA, and Kappa, indicating a significant improvement in modeling accuracy, and the improvement is largest relative to 2D-CNN. Specifically, the OA of SS-CNN is 0.543% higher than that of SD-CNN, 0.352% higher than SE-CNN, 0.757% higher than 3D-CNN, and 9.734% higher than 2D-CNN (Table 1). In the same order, AA increases by 1.667%, 0.363%, 3.793%, and 20.506%, and Kappa by 0.0057, 0.0037, 0.0079, and 0.1014. Furthermore, the PA and UA results of the optimal SS-CNN algorithm (Table 1) show that A. australis, B. tripartita, C. album, C. hemsleyanum, C. nutans, E. crus-galli, G. longituba, L. perenne, L. supina, O. corniculata, O. sativa, P. aviculare, P. lapathifolia, P. supina, S. japonica, S. juncoides, S. media, S. trifolia, and T. mongolicum all reach PA and UA values of 100%. In contrast, A. clavata, G. parviflora, and S. nigrum exhibit relatively lower accuracy, with UA values of 87.879%, 98.561%, and 97.600%, respectively. This may be because A. clavata, G. parviflora, and S. nigrum lack obvious distinguishing morphological features and closely resemble samples of other species, so pixels of other species are more often assigned to these classes, lowering their UA.
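The OA, AA, Kappa, PA, and UA values above are all derived from a confusion matrix. The sketch below uses the standard remote-sensing definitions, assuming rows hold the reference (ground-truth) class and columns the predicted class, with AA taken as the mean per-class PA; the 2 × 2 matrix in the usage lines is hypothetical. It also makes the distinction used above explicit: UA falls when pixels of other classes are assigned to a class (commission errors), whereas PA falls when a class's own pixels are assigned elsewhere (omission errors).

```python
import numpy as np


def accuracy_metrics(cm):
    """Classification metrics from a confusion matrix.

    cm[i, j] = number of pixels whose reference class is i and predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    ref_totals = cm.sum(axis=1)      # pixels per reference class
    pred_totals = cm.sum(axis=0)     # pixels per predicted class

    oa = diag.sum() / total
    # Producer's accuracy (per-class recall) and user's accuracy (per-class precision);
    # classes with no reference or no predicted pixels get 0 instead of dividing by zero.
    pa = np.divide(diag, ref_totals, out=np.zeros_like(diag), where=ref_totals > 0)
    ua = np.divide(diag, pred_totals, out=np.zeros_like(diag), where=pred_totals > 0)
    aa = pa.mean()
    # Cohen's kappa: agreement beyond the chance agreement expected from the marginals.
    pe = (ref_totals * pred_totals).sum() / total ** 2
    kappa = (oa - pe) / (1.0 - pe)
    return {"OA": oa, "AA": aa, "Kappa": kappa, "PA": pa, "UA": ua}


if __name__ == "__main__":
    cm = [[45, 5],
          [10, 40]]
    m = accuracy_metrics(cm)
    print({k: np.round(v, 4) for k, v in m.items()})
```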

Figure 9. The classification and visualization results of each classification model under normal training samples (Tr = 0.7) (Label image: A. australis, A. clavata, A. hispidus, A. retroflexus, A. theophrasti, B. tripartita, C. album, C. bursa-pastoris, C. hederacea, C. hemsleyanum, C. nutans, C. pendulus, D. sanguinalis, E. canadensis, E. crus-galli, G. longituba, G. parviflora, L. perenne, L. supina, O. corniculata, O. sativa, P. arundinacea, P. australis, P. aviculare, P. lapathifolia, P. major, P. oleracea, P. supina, R. acetosa, S. japonica, S. juncoides, S. media, S. nigrum, S. trifolia, S. viridis, T. mongolicum).

Figure 9 visualizes the discrimination results of the different deep neural network classification models for rice and weed species, with a distinct color label assigned to each species. The original RGB images show that rice and weed samples generally appear green; apart from a few samples with distinct visual characteristics, most cannot be easily distinguished by the naked eye. Among the models, SS-CNN achieved excellent detection performance, with an OA of 99.910% on the test set, labeling almost all samples correctly. In contrast, 2D-CNN performed relatively poorly, with an OA of 90.176% on the test set and mislabeling among different sample types. This may be because 2D-CNN focuses primarily on local features when extracting image information and captures limited global information, which reduces classification accuracy. Overall, under Tr = 0.7, the algorithms incorporating the superpixel segmentation module, the SE attention module, or both (SD-CNN, SE-CNN, and SS-CNN) outperformed 3D-CNN and 2D-CNN. Importantly, the proposed SS-CNN achieved a classification accuracy of 99.910%, showing high consistency with the ground-truth images. Consequently, classifying rice and weeds with the SS-CNN algorithm is deemed feasible.
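The SE attention module mentioned above is based on the squeeze-and-excitation design originally proposed for image classification networks: channel descriptors obtained by global average pooling, which summarize the whole spatial extent of a feature map, pass through two small fully connected layers that produce per-channel weights for rescaling the features. The NumPy forward pass below is a generic sketch; the reduction ratio, the random weights, and the feature-map sizes are assumptions, not the configuration used in SS-CNN.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation recalibration of a feature map x of shape (C, H, W).

    w1: (C // r, C) and b1: (C // r,) for the reduction layer;
    w2: (C, C // r) and b2: (C,) for the expansion layer (r = reduction ratio).
    Returns the rescaled feature map and the per-channel weights.
    """
    z = x.mean(axis=(1, 2))                          # squeeze: global average pooling -> (C,)
    h = np.maximum(w1 @ z + b1, 0.0)                 # excitation step 1: FC + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))         # excitation step 2: FC + sigmoid -> (C,)
    return x * a[:, None, None], a                   # scale each channel by its weight


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W, r = 16, 9, 9, 4                         # hypothetical sizes
    x = rng.normal(size=(C, H, W))
    w1, b1 = rng.normal(size=(C // r, C)) * 0.1, np.zeros(C // r)
    w2, b2 = rng.normal(size=(C, C // r)) * 0.1, np.zeros(C)
    y, weights = se_block(x, w1, b1, w2, b2)
    print(y.shape, weights.round(3))
```

Because the squeeze step pools over the entire spatial extent, each channel weight depends on global context, which is the kind of information the text notes a purely local 2D-CNN captures only to a limited extent.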

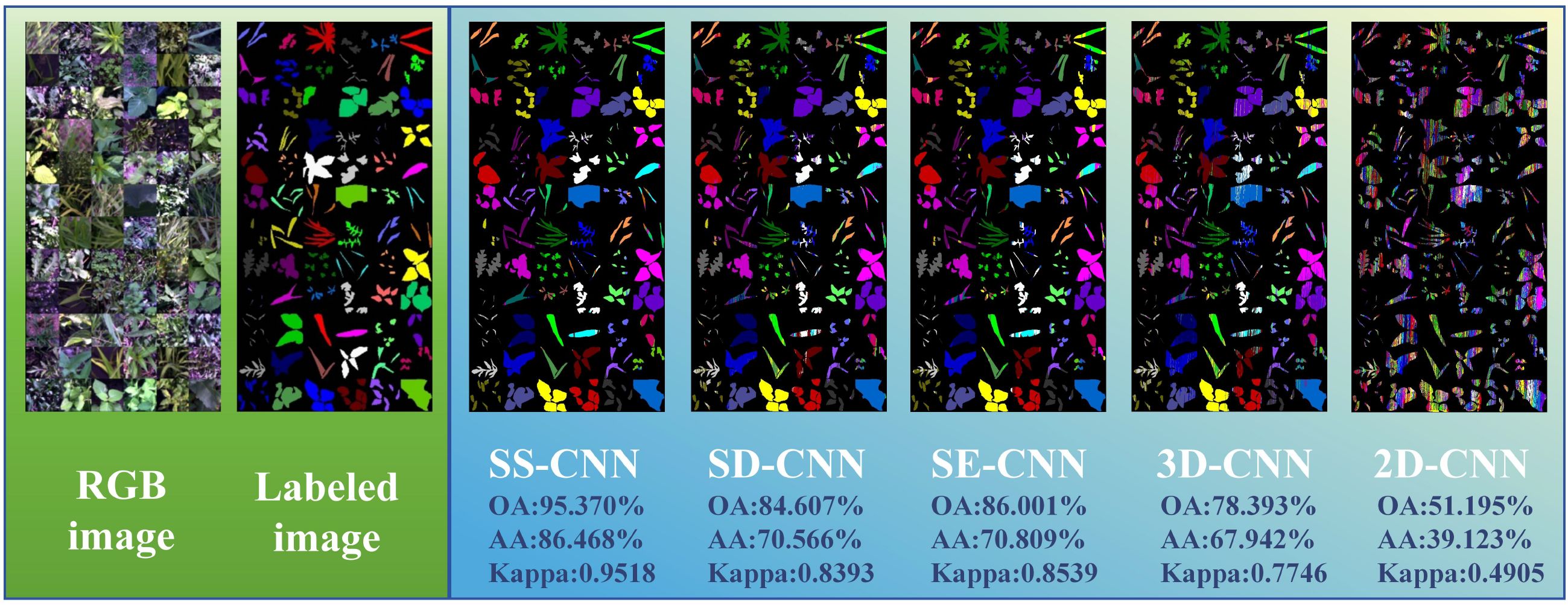

3.5 The classification and visualization results under few-shot learning (Tr = 0.05)

Deep learning algorithms have been widely used in hyperspectral image classification and have achieved favorable results, provided that sufficient data are available to represent the variation encountered in practice. In agriculture, however, the data available for building robust models are severely limited by challenging field conditions, long experimental periods, and budget constraints. To address this, Figure 10 presents the classification results of the deep learning algorithms under few-shot learning, where the training set accounts for only 5% of the total samples. SS-CNN achieves the highest classification accuracy for rice and weeds, with OA, AA, and Kappa values of 95.370%, 86.468%, and 0.9518, respectively. Compared with SD-CNN, SE-CNN, 3D-CNN, and 2D-CNN, SS-CNN improves OA by 10.763%, 9.369%, 16.977%, and 44.175%, AA by 15.902%, 15.659%, 18.526%, and 47.345%, and Kappa by 0.1125, 0.0979, 0.1772, and 0.4613, respectively. The classification accuracy of every algorithm is lower at Tr = 0.05 than at Tr = 0.7. For SS-CNN, OA declines by 4.540% at Tr = 0.05, but the result remains satisfactory, which underscores the robustness of SS-CNN when training samples are limited.
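Training ratios such as Tr = 0.7 and Tr = 0.05 are commonly implemented by sampling the same fraction of labelled pixels from every class. The sketch below assumes such a per-class (stratified) split that keeps at least one training pixel per class; whether the authors sampled per class or from the pooled data is not stated in this section, and the class sizes in the usage lines are hypothetical. With Tr = 0.05, a class with only a few dozen labelled pixels contributes just one or two training pixels, which is consistent with the lower accuracy this section reports for species that occupy few pixels.

```python
import numpy as np


def stratified_split(labels, train_ratio, seed=0, min_train=1):
    """Split pixel indices into training and test sets class by class.

    Each class contributes round(train_ratio * n_class) training pixels, but at
    least `min_train`; when a class has more than one pixel, one is always kept for testing.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_parts, test_parts = [], []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        rng.shuffle(idx)
        n_train = max(min_train, int(round(train_ratio * idx.size)))
        if idx.size > 1:
            n_train = min(n_train, idx.size - 1)     # keep at least one test pixel
        train_parts.append(idx[:n_train])
        test_parts.append(idx[n_train:])
    return np.concatenate(train_parts), np.concatenate(test_parts)


if __name__ == "__main__":
    labels = np.repeat([0, 1, 2], [1000, 40, 10])   # hypothetical pixel counts per class
    for tr in (0.7, 0.05):
        train, test = stratified_split(labels, tr)
        print(tr, np.bincount(labels[train]), len(test))
```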

Figure 10. The classification and visualization results of each classification model in the case of few-shot learning (Tr = 0.05) (Label image: A. australis, A. clavata, A. hispidus, A. retroflexus, A. theophrasti, B. tripartita, C. album, C. bursa-pastoris, C. hederacea, C. hemsleyanum, C. nutans, C. pendulus, D. sanguinalis, E. canadensis, E. crus-galli, G. longituba, G. parviflora, L. perenne, L. supina, O. corniculata, O. sativa, P. arundinacea, P. australis, P. aviculare, P. lapathifolia, P. major, P. oleracea, P. supina, R. acetosa, S. japonica, S. juncoides, S. media, S. nigrum, S. trifolia, S. viridis, T. mongolicum).

Specifically, the PA and UA results for SS-CNN presented in Table 2 show high accuracy for A. australis, A. retroflexus, C. bursa-pastoris, C. nutans, C. pendulus, E. crus-galli, G. longituba, L. perenne, L. supina, O. sativa, P. supina, S. japonica, and S. juncoides, with both PA and UA exceeding 95%. In contrast, accuracy is relatively low for A. clavata (PA 77.328%, UA 62.584%), B. tripartita (PA 88.046%, UA 85.373%), and P. major (PA 81.006%, UA 43.873%). Compared with the results under normal training samples, the accuracy of species such as A. australis, A. clavata, B. tripartita, C. nutans, E. crus-galli, G. longituba, L. perenne, L. supina, O. sativa, P. supina, S. japonica, and S. juncoides has decreased. A. clavata showed the most significant reduction, with PA and UA decreasing from 100% and 87.879% to 77.328% and 62.584%, respectively. This may be because A. clavata occupies a relatively small proportion of the pixels in the original hyperspectral images; with a limited training sample, the algorithm may see too few of these pixels to learn the class, resulting in lower PA and UA.

Table 2. The recognition results of rice and weeds by the optimal algorithm SS-CNN under different training ratio conditions.

The visualization results at Tr = 0.05 show how the classification results of the deep neural networks change under different modeling strategies. Both 3D-CNN and 2D-CNN misclassify several weed species. This can be attributed not only to the limited global understanding of the entire image by 3D-CNN and 2D-CNN, but also to the difficulty these models have in learning the underlying non-linear relationships in the rice and weed data as the number of labeled samples decreases. As with Tr = 0.7, at Tr = 0.05 the algorithms incorporating the superpixelwise division module, the SE attention module, or both (SD-CNN, SE-CNN, and SS-CNN) detect better than 3D-CNN and 2D-CNN. Notably, the proposed SS-CNN achieved a classification accuracy of 95.370%, with recognition results highly consistent with the labeled images.
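The internals of the superpixelwise division module are not specified in this section. One common way superpixels are used in hyperspectral classification, sketched below under that assumption, is to replace each pixel's spectrum with the mean spectrum of its superpixel, which suppresses pixel-level noise while respecting object boundaries. The segmentation map is assumed to be precomputed (for example by a SLIC-style algorithm), and the tiny cube in the usage lines is hypothetical.

```python
import numpy as np


def superpixel_mean_spectra(cube, segments):
    """Average the spectra inside each superpixel.

    cube: (H, W, B) hyperspectral image; segments: (H, W) integer superpixel labels.
    Returns (means, smoothed): means has shape (n_superpixels, B), ordered by sorted
    label; smoothed has shape (H, W, B), with each pixel replaced by its superpixel mean.
    """
    H, W, B = cube.shape
    spectra = cube.reshape(-1, B).astype(float)
    _, inverse = np.unique(np.asarray(segments).reshape(-1), return_inverse=True)
    inverse = inverse.reshape(-1)                       # superpixel index of each pixel
    counts = np.bincount(inverse)
    sums = np.zeros((counts.size, B))
    np.add.at(sums, inverse, spectra)                   # accumulate spectra per superpixel
    means = sums / counts[:, None]
    return means, means[inverse].reshape(H, W, B)


if __name__ == "__main__":
    cube = np.arange(12, dtype=float).reshape(2, 2, 3)  # 2 x 2 pixels, 3 bands
    segments = np.array([[0, 0], [1, 1]])               # two superpixels (one per row)
    means, smoothed = superpixel_mean_spectra(cube, segments)
    print(means)  # means -> [[1.5, 2.5, 3.5], [7.5, 8.5, 9.5]]
```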

3.6 Discussion

In recent years, rising summer temperatures and precipitation in northern China have created favorable conditions for frequent outbreaks of plant diseases, pests, and species invasions. Consequently, establishing a spectral library of weeds in northern Chinese paddy fields and monitoring weed stress through species identification have become increasingly important. Previous studies have focused primarily on analyzing the spatial distribution of weeds in farmland and identifying weed species using large-scale remote sensing data and multispectral data from drones, and some studies have also assessed the effect of competitive pressure on ecological weed management (Osorio et al., 2020; Reedha et al., 2022). However, identifying and monitoring weed stress at the field scale remains challenging, because applications such as precision agriculture and targeted spraying require more detailed information on weed species and physiological activity than ever before (Li et al., 2023). Field and laboratory hyperspectral analysis, especially in challenging field environments, therefore holds great potential for characterizing and discriminating weed stress, owing to its high spectral resolution. On this basis, our research uses hyperspectral imaging to establish a hyperspectral library of rice and weed species in the cold regions of northern China. Compared with Dmitriev et al. (2022), who examined five weed species, our research covers a greater diversity of weed species, a broader geographical range, and a larger volume of spectral data. Canopy spectral profile characteristics, vegetation indices, and principal component analysis (PCA) explain the differences in physiological activity among weed species and the inherent distribution patterns of the spectral data.
The wavelengths around 550 nm, 680 nm, 760 nm, and 935 nm, together with their neighboring bands, are considered the most prominent spectral features of weeds. They reflect the spectral diversity of weed species, indirectly indicate their physiological activity, and may play a crucial role in weed identification. Bai et al. (2013) showed that the spectral curves of all weeds had obvious reflection peaks at 508–576 nm, that reflectance increased rapidly at 682–739 nm, and that the reflectance curves of all weeds differed significantly at 780–1000 nm, which is similar to the results of this study. Beyond species and physiological activity, the spectral characteristics of weeds may also vary with environmental conditions. Within agricultural ecosystems, different soil and climate conditions can lead to variation in weed species and their growth patterns (Carlesi et al., 2013; Steponavičienė et al., 2021). For example, Henry et al. (2004) showed that the discrete wavelet transform (DWT) characteristics of the spectral reflectance curves of two weeds (Xanthium strumarium L. and Cassia obtusifolia L.) are strongly affected by soil moisture, and that the trends of their spectral curves in the visible (400–780 nm) and near-infrared (NIR, 780–2400 nm) regions differ significantly from the original curve.

Moreover, Zhang and Slaughter (2011) explored the effect of changes in ambient temperature on the visible and near-infrared hyperspectral imaging characteristics of weeds. Increased temperature leads to higher reflectance of weed canopies in the visible range (480–670 nm) and, under the same conditions, lower reflectance in the 720–810 nm range. Vegetation indices may also vary with the growth stage of weeds: a vegetation index that differs statistically among weed phenological stages provides a valuable reference for distinguishing weeds at different growth periods (Peña-Barragán et al., 2006). However, owing to practical constraints, the current research did not consider the physiological activity of weeds in different growth environments and growth periods, nor the biochemical and physiological responses of rice under different growth conditions and their spectral characteristics. Future studies should aim to bridge the gap between sensitive spectral features and the underlying biochemical and physiological processes that rice undergoes throughout its growth cycle in response to different modes of weed stress.

For the discrimination of rice and weed stress, this study developed a deep learning network, SS-CNN, and conducted ablation experiments. At Tr = 0.7, SS-CNN outperformed the comparative models in recognition (OA: 99.910%, AA: 99.502%, Kappa: 0.9991). Similarly, at Tr = 0.05, SS-CNN still achieved the best classification results (OA: 95.370%, AA: 86.468%, Kappa: 0.9518). The ablation experiments investigated the effect of the superpixelwise division (SD) module and the SE attention module on the detection performance of SS-CNN. At Tr = 0.7, SS-CNN, which contains both the SD and SE modules, performed better than SD-CNN (OA: 99.367%) and SE-CNN (OA: 99.558%), and outperformed 3D-CNN (OA: 99.153%) and 2D-CNN (OA: 90.176%) by a larger margin. The detection accuracy of SS-CNN was 0.543% higher than that of SD-CNN, demonstrating that the SE attention module improves the discrimination of rice and weed samples. Likewise, the detection accuracy of SS-CNN was 0.352% higher than that of SE-CNN, confirming the contribution of the superpixelwise division module. At Tr = 0.05 in particular, SS-CNN clearly exceeded SD-CNN and SE-CNN, underscoring the positive role of the SE attention and superpixelwise division modules. These findings further highlight the superior performance of SS-CNN.
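The ablation differences quoted above are absolute differences in OA, i.e. percentage points. A few lines of Python using only the OA values reported in this section reproduce them: the SS-CNN minus SD-CNN gap isolates the contribution of the SE module (SD-CNN lacks it), and the SS-CNN minus SE-CNN gap isolates the contribution of the superpixelwise division module.

```python
# OA values (%) at Tr = 0.7 as reported in the text.
oa_tr07 = {"SS-CNN": 99.910, "SD-CNN": 99.367, "SE-CNN": 99.558,
           "3D-CNN": 99.153, "2D-CNN": 90.176}


def gains_over(reference, scores, ndigits=3):
    """Percentage-point gain of `reference` over every other model."""
    return {name: round(scores[reference] - value, ndigits)
            for name, value in scores.items() if name != reference}


gains = gains_over("SS-CNN", oa_tr07)
# SE contribution: SS-CNN vs SD-CNN; SD contribution: SS-CNN vs SE-CNN.
print(gains)
# Drop in SS-CNN OA when the training ratio falls from 0.7 to 0.05.
print(round(99.910 - 95.370, 3))
```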

Among recent state-of-the-art studies, Xu et al. (2020) proposed a natural weed identification method that integrates RGB image features and depth features. The method extracts color, position, texture, and depth features from RGB and depth images captured at the tillering and jointing stages of crops, and then uses the AdaBoost algorithm to identify weeds, achieving accuracies of 88% at the tillering stage and 81.08% at the jointing stage. Zhang et al. (2022a) proposed EM-YOLOv4-Tiny, a machine-vision weed recognition model that combines multi-scale detection with an attention mechanism. It was used to identify six weed species in peanut fields (Portulaca oleracea L., Eleusine indica (L.), Chenopodium album L., Amaranthus blitum L., Abutilon theophrasti Medicus, and Calystegia hederacea Wall. ex Roxb) and achieved a mean average precision (mAP) of 94.54% on the test dataset. SS-CNN achieved better results still, while targeting the largest number of weed species among these studies. This suggests that SS-CNN remains effective in distinguishing a wide range of weeds from rice even under challenging field conditions. Furthermore, such predictions may prove useful for global food security, especially for detecting weed stress in rice.

Whereas SS-CNN achieved satisfactory accuracy with hyperspectral data, similar studies have used multispectral aerial images to detect rice weeds (Yu et al., 2022). Hyperspectral technology is expected to provide better accuracy for rice weed identification, because many studies have shown that plant mapping accuracy improves with higher spectral resolution (Ferreira et al., 2016; Awad, 2018). However, a major obstacle to the widespread use of hyperspectral technology for weed species identification is the difficulty of establishing reliable calibration procedures and spectral algorithms across varying conditions (such as soil type, growth stage, variety, and weather). In practice, remote sensing alone cannot quantify the relationship between plant spectral characteristics and soil, growth period, weather, variety, or management at a specific time and place. Understanding how these factors differ and developing a universal algorithm is therefore crucial, which may involve techniques such as physiological analysis, mechanistic models, crowdsourcing, and transfer learning. We aim to develop objective solutions to improve resource productivity in the future. In addition, the results will contribute to variable-rate spraying, targeted weed management, and growth monitoring based on weed species specificity.

4 Conclusion

This study employed hyperspectral imaging to characterize and discriminate rice and weeds in northern China. Canopy spectral profiles, PCA, and vegetation indices of rice and weed samples were used for spectral feature analysis and physiological activity characterization, revealing characteristic weed wavelengths at 550 nm, 680 nm, 760 nm, and 935 nm that are indicative of species traits. A deep learning algorithm, SS-CNN, incorporating a superpixel division module and an SE attention module, was developed and evaluated through ablation experiments under different modeling strategies. SS-CNN performed best at Tr = 0.7 (OA: 99.910%, AA: 99.502%, Kappa: 0.9991). At Tr = 0.05, although its performance declined relative to Tr = 0.7, it still achieved the best classification results (OA: 95.370%, AA: 86.468%, Kappa: 0.9518). The proposed methodology can play a significant role in characterizing and discriminating rice and weeds in harsh field environments. Furthermore, this study enriches the spectral library data for field weed stress and holds potential value for precise monitoring and management decision-making for field crops, advancing plant phenotyping.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

ZW: Visualization, Data curation, Writing – review & editing. TL: Software, Visualization, Data curation, Writing – review & editing. HL: Writing – review & editing, Data curation, Software, Visualization. YY: Validation, Resources, Conceptualization, Supervision, Formal Analysis, Writing – review & editing. YS: Methodology, Investigation, Software, Writing – review & editing, Formal Analysis, Writing – original draft. HY: Validation, Resources, Conceptualization, Supervision, Formal Analysis, Writing – review & editing. YL: Funding acquisition, Resources, Validation, Supervision, Writing – review & editing, Conceptualization. FC: Writing – review & editing, Conceptualization, Investigation, Validation, Data curation, Formal Analysis. LX: Resources, Validation, Supervision, Writing – review & editing, Conceptualization.

Funding

The author(s) declare financial support was received for the research and/or publication of this article. This work was conducted with the support of the High-level Talents Research Initiation Project of Shihezi University (RCZK202559), Project of Tianchi Talented Young Doctor (CZ002559), and China’s National Key R & D Plan (2021YFD200060502).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Amigo, J. M., Martí, I., and Gowen, A. (2013). Hyperspectral imaging and chemometrics: a perfect combination for the analysis of food structure, composition and quality. Data Handl. Sci. Technol. 28, 343–370. doi: 10.1016/B978-0-444-59528-7.00009-0

Awad, M. M. (2018). Forest mapping: a comparison between hyperspectral and multispectral images and technologies. J. For. Res. 29, 1395–1405. doi: 10.1007/s11676-017-0528-y

Bai, Y. Y., Gao, J. L., and Zhang, B. L. (2019). Monitoring of crops growth based on NDVI and EVI. Trans. Chin. Soc Agric. Mach. 50, 153–161. doi: 10.6041/j.issn.1000-1298.2019.09.017

Bai, J., Xu, Y., Wei, X. H., Zhang, J. M., and Shen, B. G. (2013). Weed identification from winter rape at seedling stage based on spectrum characteristics analysis. Trans. Chin. Soc Agric. Eng. 29, 128–134. doi: 10.3969/j.issn.1002-6819.2013.20.018

Barbedo, J. G. A. (2019). A review on the use of unmanned aerial vehicles and imaging sensors for monitoring and assessing plant stresses. Drones. 3, 40. doi: 10.3390/drones3020040

Bernstein, L. S., Jin, X. M., Gregor, B., and Adler-Golden, S. M. (2012). Quick atmospheric correction code: algorithm description and recent upgrades. Opt. Eng. 51, 111719. doi: 10.1117/1.OE.51.11.111719

Bro, R. and Smilde, A. K. (2014). Principal component analysis. Anal. Methods 6, 2812–2831. doi: 10.1039/c3ay41907j

Cao, J. J., Liu, K., Liu, L., Zhu, Y. H., Li, J., and He, Z. (2018). Identifying mangrove species using field close-range snapshot hyperspectral imaging and machine-learning techniques. Remote Sens. 10, 2047. doi: 10.3390/rs10122047

Carlesi, S., Bocci, G., Moonen, A. C., Frumento, P., and Bàrberi, P. (2013). Urban sprawl and land abandonment affect the functional response traits of maize weed communities in a heterogeneous landscape. Agric. Ecosyst. Environ. 166, 76–85. doi: 10.1016/j.agee.2012.12.013

Cavender-Bares, J., Gamon, J. A., and Townsend, P. A. (2020). “How the optical properties of leaves modify the absorption and scattering of energy and enhance leaf functionality,” in Remote sensing of plant biodiversity (Springer, Cham).

Chen, S. R., Zou, H. D., Wu, R. M., Yan, R., and Mao, H. P. (2013). Identification for weedy rice at seeding stage based on hyper-spectral imaging technique. Trans. Chin. Soc Agric. Mach. 44, 253–257. doi: 10.6041/j.issn.1000-1298.2013.05.044

Chi, J., Lee, H., Hong, S. G., and Kim, H. C. (2021). Spectral characteristics of the Antarctic vegetation: A case study of Barton Peninsula. Remote Sens. 13, 2470. doi: 10.3390/rs13132470

Coleman, G. R. Y., Bender, A., Walsh, M. J., and Neve, P. (2023). Image-based weed recognition and control: Can it select for crop mimicry? Weed Res. 63, 77–82. doi: 10.1111/wre.12566

Congalton, R. G., Gu, J. Y., Yadav, K., Thenkabail, P., and Ozdogan, M. (2014). Global land cover mapping: A review and uncertainty analysis. Remote Sens. 6, 12070–12093. doi: 10.3390/rs61212070

Dai, X. X., Lai, W. H., Yin, N. N., Tao, Q., and Huang, Y. (2023). Research on intelligent clearing of weeds in wheat fields using spectral imaging and machine learning. J. Clean Prod. 428, 139409. doi: 10.1016/j.jclepro.2023.139409

Dass, A., Shekhawat, K., Choudhary, A. K., Sepat, S., Rathore, S. S., Mahajan, G., et al. (2017). Weed management in rice using crop competition-a review. Crop Prot. 95, 45–52. doi: 10.1016/j.cropro.2016.08.005

Dmitriev, P. A., Kozlovsky, B. L., Kupriushkin, D. P., Dmitrieva, A. A., Rajput, V. D., Chokheli, V. A., et al. (2022). Assessment of invasive and weed species by hyperspectral imagery in agrocenoses ecosystem. Remote Sens. 14, 2442. doi: 10.3390/rs14102442

Feret, J. B., Francois, C., Asner, G. P., Gitelson, A. A., Martin, R. E., Bidel, L. P. R., et al. (2008). PROSPECT-4 and 5: Advances in the leaf optical properties model separating photosynthetic pigments. Remote Sens Environ. 112, 3030–3043. doi: 10.1016/j.rse.2008.02.012

Ferreira, M. P., Zortea, M., Zanotta, D. C., Shimabukuro, Y. E., and de Souza Filho, C. R. (2016). Mapping tree species in tropical seasonal semi-deciduous forests with hyperspectral and multispectral data. Remote Sens Environ. 179, 66–78. doi: 10.1016/j.rse.2016.03.021

Fréchette, E., Chang, C. Y. Y., and Ensminger, I. (2016). Photoperiod and temperature constraints on the relationship between the photochemical reflectance index and the light use efficiency of photosynthesis in Pinus strobus. Tree Physiol. 36, 311–324. doi: 10.1093/treephys/tpv143

Gage, K. L. and Schwartz-Lazaro, L. M. (2019). Shifting the paradigm: an ecological systems approach to weed management. Agriculture-Basel. 9, 179. doi: 10.3390/agriculture9080179

Gamon, J. A., Peñuelas, J., and Field, C. B. (1992). A narrow-waveband spectral index that tracks diurnal changes in photosynthetic efficiency. Remote Sens. Environ. 41, 35–44. doi: 10.1016/0034-4257(92)90059-s

Gausman, H. W. (1985). Plant leaf optical properties in visible and near infrared light (Lubbock: Texas Tech University), 78.

Guo, L. B., Qiu, J., Li, L. F., Lu, B. R., Olsen, K., and Fan, L. J. (2018). Genomic clues for crop-weed interactions and evolution. Trends Plant Sci. 23, 1102–1115. doi: 10.1016/j.tplants.2018.09.009

Guo, F., Xu, Z., Ma, H. H., Liu, X. J., Tang, S. Q., Yang, Z., et al. (2021). Estimating chromium concentration in arable soil based on the optimal principal components by hyperspectral data. Ecol. Indic. 133, 108400. doi: 10.1016/j.ecolind.2021.108400

Guo, J., Xu, X. L., Zhao, Q. L., and Liu, J. N. (2016). Precise orbit determination for quad-constellation satellites at Wuhan University: strategy, result validation, and comparison. J. Geod. 90, 143–159. doi: 10.1007/s00190-015-0862-9

He, H. M., He, J., and Liu, G. (2023). Improved hybrid 2D-3D convolutional neural network for hyperspectral image classification. J. Spatio-Temporal Inf. 30, 184–192. doi: 10.20117/j.jsti.202302004

He, K. M., Zhang, X. Y., Ren, S. Q., and Sun, J. (2015). Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37, 1904–1916. doi: 10.1109/TPAMI.2015.2389824

Henry, W. B., Shaw, D. R., Reddy, K. R., Bruce, L. M., and Tamhankar, H. D. (2004). Spectral reflectance curves to distinguish soybean from common cocklebur (Xanthium strumarium) and sicklepod (Cassia obtusifolia) grown with varying soil moisture. Weed Sci. 52, 788–796. doi: 10.1614/WS-03-051R

Herrmann, I., Pimstein, A., Karnieli, A., Cohen, Y., Alchanatis, V., and Bonfil, D. J. (2011). LAI assessment of wheat and potato crops by VENμS and Sentinel-2 bands. Remote Sens. Environ. 115, 2141–2151. doi: 10.1016/j.rse.2011.04.018

Hu, J., Shen, L., Albanie, S., Sun, G., and Wu, E. H. (2020). Squeeze-and-excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 42, 2011–2023. doi: 10.1109/TPAMI.2019.2913372

Huete, A., Didan, K., Miura, T., Rodriguez, E. P., Gao, X., and Ferreira, L. G. (2002). Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 83, 195–213. doi: 10.1016/s0034-4257(02)00096-2

Korres, N. E., Norsworthy, J. K., Tehranchian, P., Gitsopoulos, T. K., Loka, D. A., Oosterhuis, D. M., et al. (2016). Cultivars to face climate change effects on crops and weeds: a review. Agron. Sustain. Dev. 36, 12. doi: 10.1007/s13593-016-0350-5

Krause, G. H. and Weis, E. (1991). Chlorophyll fluorescence and photosynthesis: the basics. Annu. Rev. Plant Physiol. Plant Mol. Biol. 42, 313–349. doi: 10.1146/annurev.pp.42.060191.001525

Laporte-Fauret, Q., Lubac, B., Castelle, B., Michalet, R., Marieu, V., Bombrun, L., et al. (2020). Classification of Atlantic coastal sand dune vegetation using in situ, UAV, and airborne hyperspectral data. Remote Sens. 12, 2222. doi: 10.3390/rs12142222

Li, C. C., Li, H. J., Li, J. Z., Lei, Y. P., Li, C. Q., Manevski, K., et al. (2019). Using NDVI percentiles to monitor real-time crop growth. Comput. Electron. Agric. 162, 357–363. doi: 10.1016/j.compag.2019.04.026

Li, H. L., Quan, L. Z., Guo, Y. H., Pi, P. F., Shi, Y. H., Lou, Z. X., et al. (2023). Improving agricultural robot patch-spraying accuracy and precision through combined error adjustment. Comput. Electron. Agric. 207, 107755. doi: 10.1016/j.compag.2023.107755

Librán-Embid, F., Klaus, F., Tscharntke, T., and Grass, I. (2020). Unmanned aerial vehicles for biodiversity-friendly agricultural landscapes - A systematic review. Sci. Total Environ. 732, 139204. doi: 10.1016/j.scitotenv.2020.139204

Liu, M. C., Gao, T. T., Ma, Z. X., Song, Z. H., Li, F. D., and Yan, Y. F. (2022). Target detection model of corn weeds in field environment based on MSRCR algorithm and YOLOv4-tiny. Trans. Chin. Soc. Agric. Mach. 53, 246–255. doi: 10.6041/j.issn.1000-1298.2022.02.026

Maes, W. H. and Steppe, K. (2019). Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture. Trends Plant Sci. 24, 152–164. doi: 10.1016/j.tplants.2018.11.007

Malik, R. K. and Singh, S. (1995). Littleseed Canarygrass (Phalaris minor) resistance to Isoproturon in India. Weed Technol. 9, 419–425. doi: 10.1017/s0890037x00023629

Mkhize, Y., Madonsela, S., Cho, M., Nondlazi, B., Main, R., and Ramoelo, A. (2024). Mapping weed infestation in maize fields using Sentinel-2 data. Phys. Chem. Earth. 134, 103571. doi: 10.1016/j.pce.2024.103571

Osorio, K., Puerto, A., Pedraza, C., Jamaica, D., and Rodríguez, L. (2020). A deep learning approach for weed detection in lettuce crops using multispectral images. AgriEngineering. 2, 471–488. doi: 10.3390/agriengineering2030032

Peña-Barragán, J. M., López-Granados, F., Jurado-Expósito, M., and García-Torres, L. (2006). Spectral discrimination of Ridolfia segetum and sunflower as affected by phenological stage. Weed Res. 46, 10–21. doi: 10.1111/j.1365-3180.2006.00488.x

Prasad, K. A., Gnanappazham, L., Selvam, V., Ramasubramanian, R., and Kar, C. S. (2015). Developing a spectral library of mangrove species of Indian East Coast using field spectroscopy. Geocarto Int. 30, 580–599. doi: 10.1080/10106049.2014.985743

Qu, J. H., Sun, D. W., Cheng, J. H., and Pu, H. B. (2017). Mapping moisture contents in grass carp (Ctenopharyngodon idella) slices under different freeze drying periods by Vis-NIR hyperspectral imaging. LWT-Food Sci. Technol. 75, 529–536. doi: 10.1016/j.lwt.2016.09.024

Quan, L. Z., Lou, Z. X., Lv, X. L., Sun, D., Xia, F. L., Li, H. L., et al. (2023). Multimodal remote sensing application for weed competition time series analysis in maize farmland ecosystems. J. Environ. Manage. 344, 118376. doi: 10.1016/j.jenvman.2023.118376

Raven, P. H., Evert, R. F., Eichhorn, S. E., Raven, H. P., and Franklin, E. R. (2005). Biology of plants (New York: W. H. Freeman and Company).

Reedha, R., Dericquebourg, E., Canals, R., and Hafiane, A. (2022). Transformer neural network for weed and crop classification of high resolution UAV images. Remote Sens. 14, 592. doi: 10.3390/rs14030592

Roy, S. K., Krishna, G., Dubey, S. R., and Chaudhuri, B. B. (2019). HybridSN: exploring 3-D-2-D CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 17, 1–5. doi: 10.1109/lgrs.2019.2918719

Shapira, U., Herrmann, I., Karnieli, A., and Bonfil, D. J. (2013). Field spectroscopy for weed detection in wheat and chickpea fields. Int. J. Remote Sens. 34, 6094–6108. doi: 10.1080/01431161.2013.793860

Steponavičienė, V., Marcinkevičienė, A., Butkevičienė, L. M., Skinulienė, L., and Bogužas, V. (2021). The effect of different soil tillage systems and crop residues on the composition of weed communities. Agronomy-Basel. 11, 1276. doi: 10.3390/agronomy11071276

Su, J. Y., Yi, D. W., Coombes, M., Liu, C. J., Zhai, X. J., McDonald-Maier, K., et al. (2022). Spectral analysis and mapping of blackgrass weed by leveraging machine learning and UAV multispectral imagery. Comput. Electron. Agric. 192, 106621. doi: 10.1016/j.compag.2021.106621

Sulaiman, N., Che’Ya, N. N., Mohd Roslim, M. H., Juraimi, A. S., Mohd Noor, N., and Fazlil Ilahi, W. F. (2022). The application of hyperspectral remote sensing imagery (HRSI) for weed detection analysis in rice fields: A review. Appl. Sci. 12, 2570. doi: 10.3390/app12052570

Wang, Z. T., Fu, Z. D., Weng, W. X., Yang, D. Z., and Wang, J. F. (2022a). An efficient method for the rapid detection of industrial paraffin contamination levels in rice based on hyperspectral imaging. LWT-Food Sci. Technol. 171, 114125. doi: 10.1016/j.lwt.2022.114125

Wang, C., Wu, X. H., Zhang, Y. Q., and Wang, W. J. (2022b). Recognizing weeds in maize fields using shifted window Transformer network. Trans. Chin. Soc. Agric. Eng. 38, 133–142. doi: 10.11975/j.issn.1002-6819.2022.15.014

Wang, H. X., Zhou, J. Q., Gu, C. H., and Lin, H. (2019). Design of activation function in CNN for image classification. J. Zhejiang Univ. 53, 1363–1373. doi: 10.3785/j.issn.1008-973X.2019.07.016

Xu, K., Li, H. M., Cao, W. X., Zhu, Y., Chen, R. J., and Ni, J. (2020). Recognition of weeds in wheat fields based on the fusion of RGB images and depth images. IEEE Access. 8, 110362–110370. doi: 10.1109/ACCESS.2020.3001999

Yoder, B. J. and Pettigrew-Crosby, R. E. (1995). Predicting nitrogen and chlorophyll content and concentrations from reflectance spectra (400–2500 nm) at leaf and canopy scales. Remote Sens. Environ. 53, 199–211. doi: 10.1016/0034-4257(95)00135-n

Yu, F. H., Jin, Z. Y., Guo, S. E., Guo, Z. H., Zhang, H. G., Xu, T. Y., et al. (2022). Research on weed identification method in rice fields based on UAV remote sensing. Front. Plant Sci. 13, 1037760. doi: 10.3389/fpls.2022.1037760

Zhang, Y. C., Gao, J. F., Cen, H. Y., Lu, Y. L., Yu, X. Y., He, Y., et al. (2019). Automated spectral feature extraction from hyperspectral images to differentiate weedy rice and barnyard grass from a rice crop. Comput. Electron. Agric. 159, 42–49. doi: 10.1016/j.compag.2019.02.018

Zhang, Y. and Slaughter, D. C. (2011). Hyperspectral species mapping for automatic weed control in tomato under thermal environmental stress. Comput. Electron. Agric. 77, 95–104. doi: 10.1016/j.compag.2011.04.001

Zhang, H., Wang, Z., Guo, Y. F., Ma, Y., Gao, W. K., Chen, D. X., et al. (2022a). Weed detection in peanut fields based on machine vision. Agriculture-Basel. 12, 1541. doi: 10.3390/agriculture12101541

Zhang, Y., Wu, J. B., and Wang, A. Z. (2022b). Comparison of various approaches for estimating leaf water content and stomatal conductance in different plant species using hyperspectral data. Ecol. Indic. 142, 109278. doi: 10.1016/j.ecolind.2022.109278

Keywords: rice, weed, hyperspectral imaging technology, deep learning, identification

Citation: Wang Z, Lin T, Li H, Yin Y, Suo YT, Yang H, Li Y, Cai F and Xiao L (2025) Proximal hyperspectral detection of rice and weed: characterization and discriminant analysis. Front. Plant Sci. 16:1685985. doi: 10.3389/fpls.2025.1685985

Received: 14 August 2025; Revised: 05 November 2025; Accepted: 13 November 2025; Published: 11 December 2025.

Edited by:

Deepak Kumar, Chitkara University, India

Reviewed by:

Fei Dai, Gansu Agricultural University, China

Xiaozhen Liu, Chinese Academy of Agricultural Sciences, China

Copyright © 2025 Wang, Lin, Li, Yin, Suo, Yang, Li, Cai and Xiao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fengjie Cai, caifjcc@163.com; Li Xiao, xiaoli2025shz@163.com

†These authors have contributed equally to this work

Zhentao Wang1,2,3,4†