- 1 College of Tobacco Science, National Tobacco Cultivation and Physiology and Biochemistry Research Center, Key Laboratory for Tobacco Cultivation of Tobacco Industry, Henan Agricultural University, Zhengzhou, China

- 2 Anhui Fermented Food Engineering Research Center, School of Food and Biological Engineering, Hefei University of Technology, Hefei, Anhui, China

- 3 Tobacco Leaf Production Department, Zhangjiajie City Branch of Hunan Tobacco Company, Zhangjiajie, Hunan, China

Introduction: Cigar leaf moisture content (CLMC) is a critical parameter for controlling curing barn conditions. With the continuous advancement of deep learning (DL), convolutional neural networks (CNNs) have offered a route to the non-destructive estimation of CLMC during air-curing. Nevertheless, single-perspective imaging alone can hardly capture the complementary morphological features of the front and back sides of cigar leaves during air-curing.

Methods: This study constructs a dual-view image dataset covering the air-curing process and proposes CADFFNet (channel attention weight-based dual-branch feature fusion network), a regression framework for the non-destructive estimation of CLMC during curing from dual-view RGB images. First, the model uses two independent, parallel ResNet backbones to capture the heterogeneous features of the dual-view images. Second, a Dual Efficient Channel Attention (DECA) module dynamically adjusts the channel attention weights of the features, facilitating interaction between the two branches. Finally, a multi-scale convolutional feature fusion (MSCFF) module deeply fuses the features of the front and back images, aggregating multi-scale features for robust regression.

Results: In five-fold cross-validation, CADFFNet attains an R2 of 0.974 ± 0.007 and a mean absolute error (MAE) of 3.80 ± 0.37%. On an independent cross-region, cross-variety test set, it maintains strong generalization (R2 = 0.899, MAE = 5.82%). Compared with the classic CNN models ResNet18, GoogLeNet, VGG19Net, DenseNet121, and MobileNetV2, its R2 is higher by 0.047, 0.041, 0.055, 0.098, and 0.090, respectively.

Discussion: Overall, the proposed CADFFNet offers an efficient and convenient method for the non-destructive detection of CLMC, providing a theoretical basis for automating the air-curing process. It also offers a new perspective on moisture content prediction during the drying of other crops, such as tea, asparagus, and mushrooms.

1 Introduction

As a significant economic crop, tobacco plays a vital role in increasing farmers' income (Soneji et al., 2022). Notably, the consumption of hand-rolled cigars, a key tobacco product, has risen significantly in recent years (Yang et al., 2024a), driving increasing demand for high-quality cigar leaf raw materials. The air-curing process is critical to the morphological changes and quality formation of cigar leaves (Zhao et al., 2024b), and CLMC is a key parameter for controlling temperature and humidity in curing barns. Typically, operators estimate CLMC through visual inspection and touch, which relies heavily on experience and is prone to inaccuracy. Conventional methods for quantifying moisture content, such as hot-air drying (Rahman et al., 2025) and Karl Fischer titration (Kosfeld et al., 2022), are complex and destructive, and cannot provide real-time, non-invasive measurements. Recently, several indirect moisture content detection technologies have been proposed for agricultural products, including spectral analysis (Guo et al., 2023; Liu et al., 2023), microwave technology (Jin et al., 2023), and nuclear magnetic resonance (NMR) (Qu et al., 2021). For instance, Wei et al. (2021) used multispectral images of tea leaves to develop a moisture prediction model. Similarly, Yang et al. (2024b) collected spectral and morphological data from alfalfa seeds at varying moisture levels and discriminated among them with machine learning models. Moreover, Wu et al. (2022) used multifrequency microwave signals to detect the moisture content of tea leaves during withering. Although these advances enable the non-invasive detection of agricultural products, such moisture detection methods depend heavily on expensive equipment, which limits their scalability in practical production.
Therefore, the accurate, convenient, and non-destructive estimation of CLMC is crucial for improving the standardization level of the cigar leaf air-curing process.

With advances in technology, computer vision has demonstrated significant potential in various aspects of tobacco production, including disease identification (Lin et al., 2022) and harvest maturity determination (Dai et al., 2024). A model trained on extensive image datasets can learn the correlation between moisture content and subtle changes in leaf color, texture, and shape (Xing et al., 2025), enhancing the automation of tobacco leaf curing. For instance, Condorí et al. (2020) developed a high-precision model that learns the relationship between color changes in tobacco leaf images and weight loss, enabling automated control of curing barns during curing. Wu et al. (2014) used color features from tobacco leaf images to build an artificial neural network model that predicts the temperature rise time during bulk curing. Furthermore, Pei et al. (2024) integrated data from stem weight sensors, temperature and humidity sensors, and digital cameras into a comprehensive predictive curing model (CPBM) that recognizes the stages of the tobacco curing process. Traditional machine learning (ML) algorithms, however, often require manually designed features that rely on domain expertise and task-specific requirements. Such an approach often yields incomplete feature extraction, compromising model performance, particularly on complex and diverse image datasets (Shafik et al., 2024). In contrast, deep learning (DL) uses more complex, multi-layer neural networks to progressively transform input data into more abstract representations, allowing the model to extract features automatically without human intervention. Among DL models, convolutional neural networks (CNNs) have shown exceptional performance on large-scale image data owing to their ability to learn features autonomously and to generalize (Chen et al., 2023).
In recent years, CNN-based solutions have been widely studied in crop production (Xu et al., 2020; Wang et al., 2023). For example, Dai et al. (2025) designed a feature extraction network for determining lightness levels at various stages of tobacco curing; it facilitates the exchange of information between features at different image levels, thereby enhancing classification accuracy. By integrating multi-scale features, the network effectively combines the global and local features of the image, leading to improved performance. Zhao et al. (2024a) proposed TCSRNet, a lightweight recognition model for tobacco leaf curing stages. By integrating Inception branches and a Multi-scale Adaptive Attention Mechanism (MAAM), this model achieves a classification accuracy of 90.35% with 158.136 MFLOPs and 1.749M parameters. Feng et al. (2025) compared recognition accuracy under different image acquisition conditions during tobacco curing and found that combining images from multiple angles significantly improved accuracy in determining the curing stage.

The above studies indicate that CNNs are applicable to the intensive curing of flue-cured tobacco. However, intensive curing of flue-cured tobacco has a relatively short cycle, and each curing stage has its own prescribed process parameters. In contrast, the air-curing of cigar leaves is relatively slow, and the environmental temperature and humidity cannot be strictly controlled (Zhao et al., 2022). Accurate estimation of moisture content during the air-curing of cigar leaves has therefore become an urgent problem, and some studies have begun to address it. Yang et al. (2023) demonstrated that, compared with traditional machine learning algorithms, CNNs offer superior performance in predicting CLMC during air-curing. Yin et al. (2024) combined visible and near-infrared hyperspectral imaging (VNIR-HSI) with a Diversified Region-based Convolutional Neural Network (DR-CNN) to predict CLMC during curing, and DR-CNN outperformed PLSR and traditional CNN models in prediction accuracy. Notably, current studies have focused primarily on single-view image features of tobacco leaves (Gao et al., 2021; Wu et al., 2024). However, during air-curing, as water is lost, the leaves gradually curl inward so that the front surface becomes obscured by the back, while the leaf veins become fully exposed on the back surface. Consequently, modeling from single-view images alone loses crucial information.

To address the above issues, we propose a channel attention weight-based dual-branch feature fusion network (CADFFNet). Specifically, the contributions of this study are as follows:

● Construction of a cigar leaf air-curing image dataset: To replicate the authentic curing process, cigar leaves were imaged in their naturally suspended state, and these images were used to predict moisture content.

● Design of the CADFFNet: The model employs two parallel ResNet-18 backbones to extract features from front- and back-view leaf images. A DECA module performs cross-view channel alignment, and an MSCFF module carries out multi-scale feature fusion. A subsequent regression head outputs the CLMC. By leveraging dual-view imagery, this design improves the predictive accuracy of CLMC estimation.

● Experimental Validation: The CADFFNet model successfully predicts the CLMC during the air-curing process, demonstrating robust performance across different planting regions and varieties.

The remainder of the paper is organized as follows. Section 2 describes the constructed datasets and the proposed method. Section 3 reports the experimental results, Section 4 discusses the advantages and limitations of the proposed methodology, and Section 5 concludes this work.

2 Materials and methods

2.1 Datasets

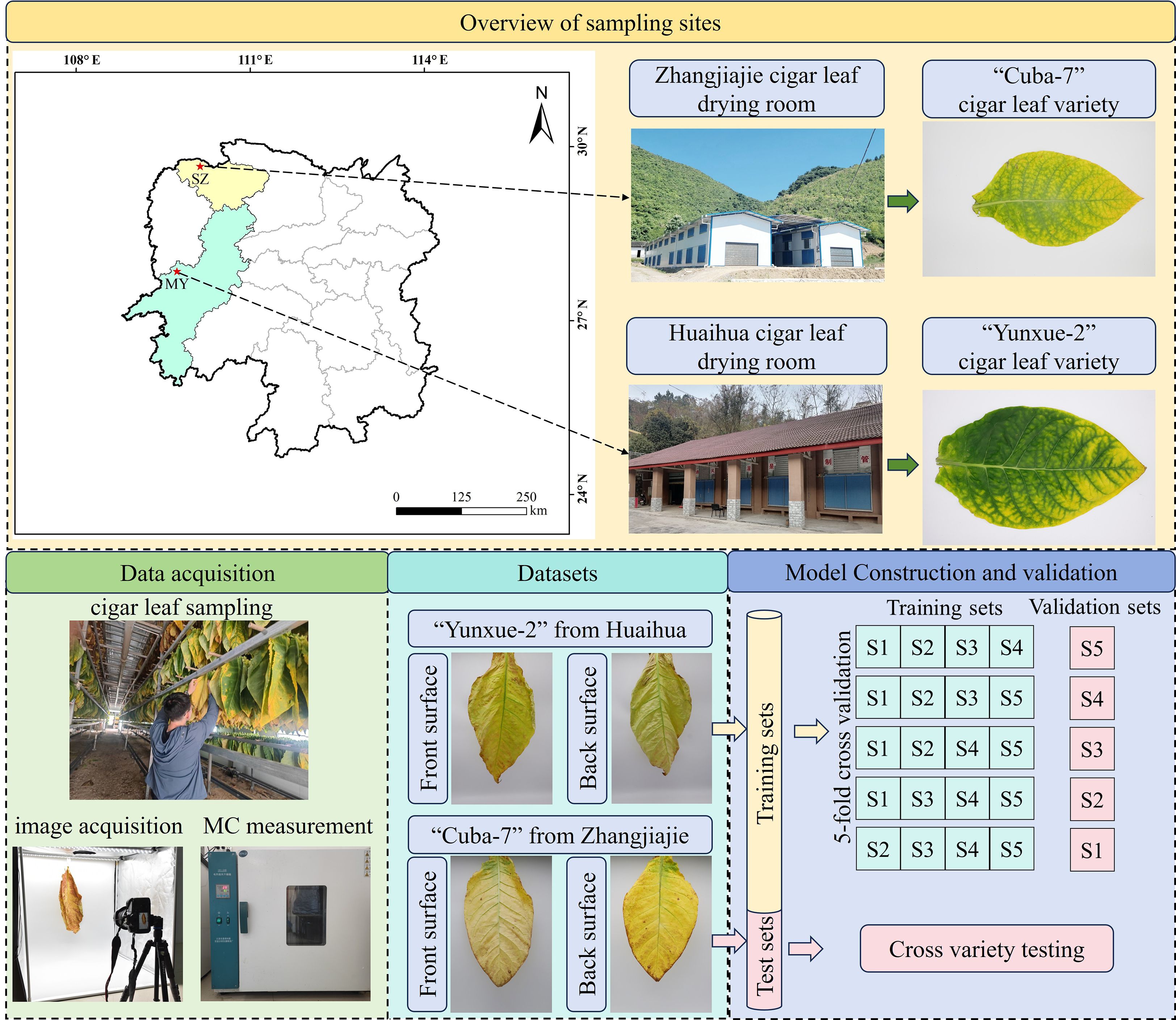

Figure 1 illustrates the data collection process of this study. After harvest, cigar leaves were fixed onto wooden rods with cotton threads for curing. To closely replicate the authentic curing process, image collection followed the method described in Ma et al. (2024). Specifically, sampled leaves were suspended in a dark chamber equipped for image capture. A digital camera (Canon Mark II, 24–70 mm f/4 lens, focal length 35 mm, resolution 2,080×3,120 pixels) was positioned 90 cm from the leaves to capture images of both the front and back surfaces. Inside the dark chamber, a white background board was placed, and two 40 W lamps at the top provided lighting. Immediately after image capture, the fresh weight of each leaf was measured. The CLMC was then determined by the hot-air drying method (Chen et al., 2021) and calculated as shown in Equation 1:

where MC is the moisture content of cigar leaves, and Mfresh and Mdry are the fresh weight and dry weight of cigar leaves, respectively.

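A minimal sketch of this calculation, assuming the common wet-basis form MC = (Mfresh − Mdry) / Mfresh × 100%; if Equation 1 uses a dry basis, the denominator would be Mdry instead. The function name and the sample weights are hypothetical.

```python
def moisture_content_wet_basis(m_fresh: float, m_dry: float) -> float:
    """Moisture content (%) on a wet basis: water mass / fresh mass * 100.

    Assumption: Equation 1 is the wet-basis definition; use m_dry as the
    denominator instead if a dry-basis definition is intended.
    """
    if m_fresh <= 0:
        raise ValueError("fresh weight must be positive")
    if not 0 <= m_dry <= m_fresh:
        raise ValueError("dry weight must lie between 0 and the fresh weight")
    return (m_fresh - m_dry) / m_fresh * 100.0


# Hypothetical leaf: 20.0 g fresh, 3.0 g after hot-air drying.
print(round(moisture_content_wet_basis(20.0, 3.0), 2))  # 85.0
```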

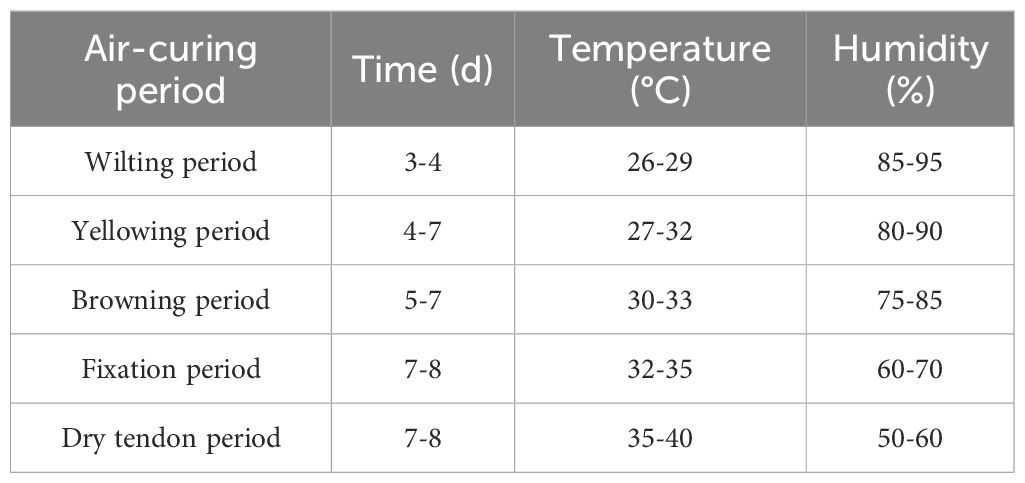

The dataset used in this study was obtained from Mayang County, Huaihua City, Hunan Province (109°39′E, 27°54′N; altitude 300 m). Data were collected from the day of leaf harvest (0 d) until the leaves were removed from the curing barn. The barn temperature and humidity parameters during curing are shown in Table 1. In total, 1,005 cigar leaves were collected, yielding 2,010 images (1,005 front–back pairs) for model training and validation. To make full use of this small dataset and improve the reliability of model evaluation, we applied 5-fold cross-validation. Specifically, the dataset was randomly divided into five non-overlapping subsets of roughly equal size; in each fold, one subset was used for validation and the remaining four for training. This rotation ensures that each sample serves in the validation set exactly once and enables a quantitative assessment of the model's robustness and stability across data partitions (Niu et al., 2025).
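The text does not say whether the two views of a leaf are always kept in the same fold. A minimal NumPy sketch, assuming the split is made at the leaf level so that each front–back pair and its single CLMC label stay together; the function name and seed are illustrative, and scikit-learn's `KFold` applied to leaf indices would serve the same purpose.

```python
import numpy as np

def kfold_leaf_indices(n_leaves: int, k: int = 5, seed: int = 0):
    """Yield (train_idx, val_idx) leaf indices for k-fold cross-validation.

    Splitting is done per leaf, so a leaf's front and back images (and its
    single CLMC label) always fall into the same fold.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_leaves)      # random shuffle of leaf ids
    folds = np.array_split(order, k)       # k non-overlapping, near-equal parts
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


splits = list(kfold_leaf_indices(1005, k=5))
print([len(v) for _, v in splits])  # [201, 201, 201, 201, 201]
```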

To further assess the model's ability to generalize across production regions and cigar varieties, an independent test set was also constructed. It comprises 175 front-side and 175 back-side images of "Cuba-7" cigar leaves collected from Sangzhi County, Zhangjiajie City, Hunan Province (110°16′E, 29°39′54″N; altitude 308 m). All images were resized to 224×224 pixels to match the model's input requirements and reduce computational complexity, and each image pair was matched with its corresponding CLMC label.

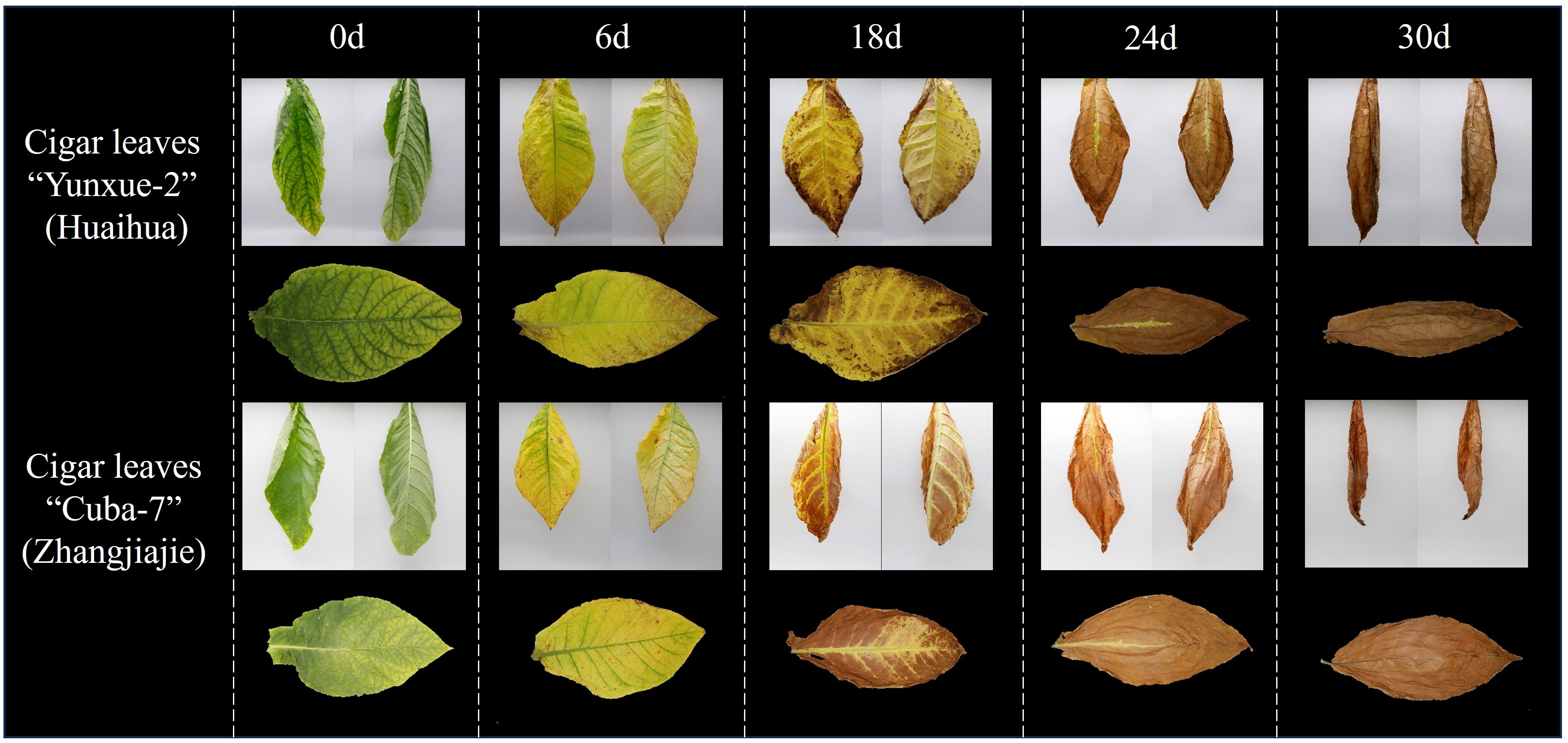

After harvest, cigar leaves are initially green; as curing progresses, their color shifts to yellow and then deepens. As shown in Figure 2, the leaves undergo noticeable phenotypic changes during air-curing: the surface color shifts, the lamina curls inward, and the venation becomes increasingly exposed on the abaxial (back) surface. Consequently, dual-view (front–back) imaging captures more comprehensive information than single-view acquisition. In addition, leaves from different cultivation areas show significant visual differences during curing, which are primarily attributed to cigar variety and local climatic conditions (Jiang et al., 2024). Based on these differences, this study used images of cigar leaves from the Huaihua cultivation area for model training and validation, and images from the Zhangjiajie cultivation area to evaluate the model's generalization ability.

2.2 Proposed CADFFNet

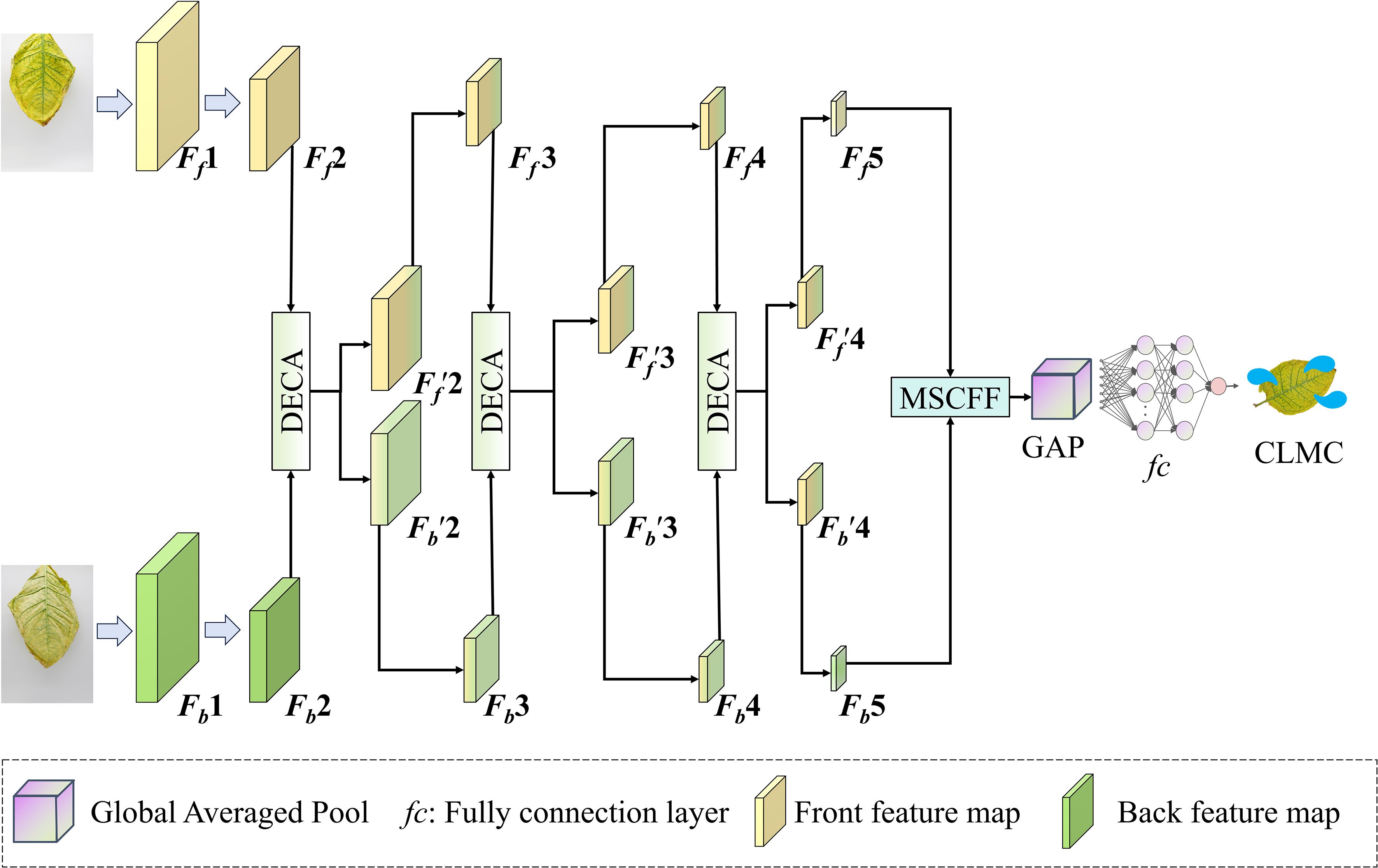

During CLMC prediction in the air-curing process, the front- and back-view images of a leaf often exhibit similar or complementary patterns. To obtain a comprehensive depiction of leaf morphology, exploit the complementary information from both surfaces, and strengthen feature representation through interaction between the two sides, this study introduces CADFFNet (Figure 3), which comprises four modules: the front-side feature extraction branch, the back-side feature extraction branch, the DECA module, and the MSCFF module. The model uses ResNet (He et al., 2016) as its backbone to extract image features. Specifically, the preprocessed front and back images are first fed into the two feature extraction branches, each of which uses a ResNet to derive hierarchical features independently. Then, the feature maps from three intermediate stages of the two branches interact dynamically through DECA modules, which highlight key channel information and improve the network's ability to capture patterns common to both sides. Subsequently, the Stage 5 feature maps of both branches are concatenated along the channel dimension and fed into the MSCFF module to enhance multi-scale perception. Finally, the fused features are globally pooled and passed to a fully connected (fc) layer that outputs the predicted CLMC. Because CLMC prediction is a regression problem, the model is trained with the mean squared error (MSE) loss, shown in Equation 2:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \tag{2}$$

where $y_i$ and $\hat{y}_i$ are the measured and predicted CLMC of the $i$-th sample and $n$ is the number of samples.
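To make the data flow concrete, the sketch below tracks feature-map shapes through one CADFFNet18 forward pass for a 224×224 input. It assumes the standard ResNet-18 stage layout (stages 2–5 output 56×56×64, 28×28×128, 14×14×256, and 7×7×512) and uses the (H, W, C) order of the text. Which three intermediate stages carry DECA is not stated, so `DECA_STAGES` is a hypothetical choice.

```python
# Shape walk for one CADFFNet18 forward pass, (H, W, C) layout.
# Stage sizes follow the standard ResNet-18 layout for a 224x224 input.
RESNET18_STAGES = {
    "stage2": (56, 56, 64),
    "stage3": (28, 28, 128),
    "stage4": (14, 14, 256),
    "stage5": (7, 7, 512),
}
DECA_STAGES = ("stage2", "stage3", "stage4")  # hypothetical: not stated in the text


def cadffnet18_shapes():
    shapes = {}
    for stage, (h, w, c) in RESNET18_STAGES.items():
        # Each branch (front, back) yields a map of this shape.
        shapes[f"front/{stage}"] = shapes[f"back/{stage}"] = (h, w, c)
        if stage in DECA_STAGES:
            # DECA only re-scales channels, so shapes are unchanged.
            shapes[f"deca/{stage}"] = (h, w, c)
    h, w, c = RESNET18_STAGES["stage5"]
    shapes["concat"] = (h, w, 2 * c)      # Y: stage-5 maps joined on channels
    shapes["mscff_out"] = (h, w, 2 * c)   # O keeps Y's shape (residual add)
    shapes["pooled"] = (2 * c,)           # global average pooling
    shapes["regression"] = (1,)           # fc layer -> one CLMC value
    return shapes


for name, shape in cadffnet18_shapes().items():
    print(name, shape)
```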

2.3 DECA module

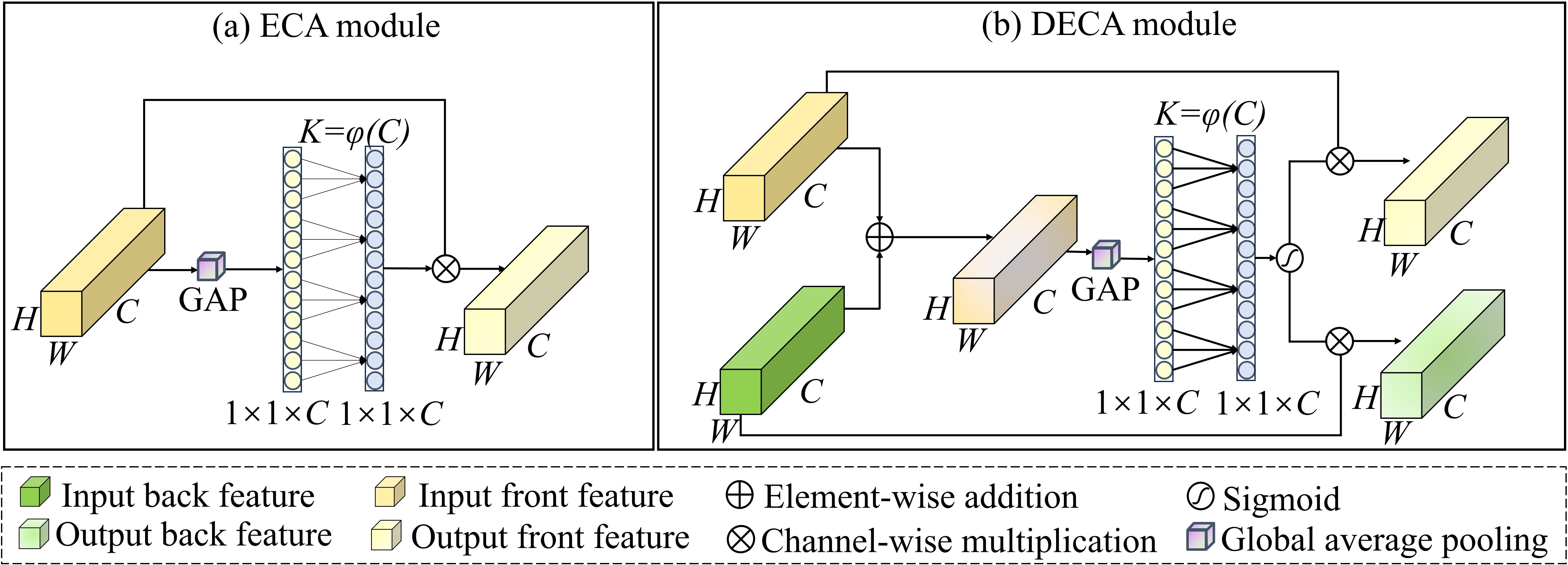

Conventional convolution operations are inherently limited by their receptive field and therefore cannot attend sufficiently to key information in an image. To address this, channel attention mechanisms such as the ECA module have been widely adopted; ECA applies a 1D convolution to model inter-channel dependencies with minimal computational overhead (Lee et al., 2024). By dynamically assigning a weight to each channel, such mechanisms enhance the model's expressive capability and predictive performance.

Following the architecture of the ECA module depicted in Figure 4a, we devise a dual-branch variant, termed DECA, tailored to our two-stream network. DECA generates channel weight descriptors from the fused features and applies them to both branches, enabling interaction between the two branches and enhancing the model's response to similar pattern changes in both images (Wang et al., 2020). The corresponding architecture is depicted in Figure 4b. Given the front-surface feature map $F_f \in \mathbb{R}^{H\times W\times C}$ and the back-surface feature map $F_b \in \mathbb{R}^{H\times W\times C}$, where $H$, $W$, and $C$ denote the height, width, and number of channels, respectively, the two maps are first fused by element-wise addition to obtain $P \in \mathbb{R}^{H\times W\times C}$, as shown in Equation 3:

$$P = F_f \oplus F_b \tag{3}$$

where $\oplus$ denotes element-wise addition.

Subsequently, global average pooling (GAP) is applied to $P$ to obtain a channel descriptor, which is processed by a 1D convolution with kernel size $K$ to model local inter-channel interactions. The resulting vector is passed through a sigmoid activation to generate the channel attention weights $\mathbf{W} \in \mathbb{R}^{1\times 1\times C}$, as shown in Equation 4:

$$\mathbf{W} = \mathrm{Sigmoid}\left(\mathrm{Conv1D}_K\left(\mathrm{GAP}(P)\right)\right) \tag{4}$$

where the kernel size $K$ is an adaptive parameter determined by the number of channels in the feature map, calculated as shown in Equation 5:

$$K = \left|\frac{\log_2 C}{\gamma} + \frac{b}{\gamma}\right|_{\mathrm{odd}} \tag{5}$$

where $\gamma = 2$ and $b = 1$, and $|\cdot|_{\mathrm{odd}}$ rounds the absolute value to the nearest odd integer so that the kernel size is odd. Finally, the channel attention weights are applied to the two input feature maps by channel-wise multiplication, yielding the re-weighted feature maps $\tilde{F}_f$ and $\tilde{F}_b$, as expressed in Equation 6:

$$\tilde{F}_f = \mathbf{W} \otimes F_f, \qquad \tilde{F}_b = \mathbf{W} \otimes F_b \tag{6}$$

where $\otimes$ denotes channel-wise multiplication, with $\mathbf{W}$ broadcast over the spatial dimensions.
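Equation 5 can be checked numerically for the channel widths of the ResNet-18 stages. The sketch implements |·|_odd with the truncate-then-make-odd rule used in common ECA implementations; this is an assumption, and it agrees with nearest-odd rounding except when the argument is exactly an even integer (a tie), where it rounds up.

```python
import math


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1D-conv kernel size K for (D)ECA, following Equation 5.

    gamma=2 and b=1 as stated in the text; |t|_odd is implemented as
    "truncate t, then add 1 if the result is even" (an assumption).
    """
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


# Kernel sizes for the channel widths of the four ResNet-18 stages.
print({c: eca_kernel_size(c) for c in (64, 128, 256, 512)})
# {64: 3, 128: 5, 256: 5, 512: 5}
```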

This module optimizes the channel weights of the fused front and back features, strengthening the model's response to key patterns while suppressing interference from redundant background information. It also refines the feature representation of each branch and facilitates effective interaction between the front and back features, further improving the model's overall performance.
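A minimal NumPy sketch of the DECA forward pass (Equations 3, 4, and 6) for one sample in (H, W, C) layout. Assumptions not stated in the text: the 1D convolution has no bias and uses zero padding of K // 2 on each side so that the channel count is preserved, and the kernel weights here are random rather than learned.

```python
import numpy as np


def deca(f_front: np.ndarray, f_back: np.ndarray, conv_weight: np.ndarray):
    """Dual ECA (DECA) forward pass on (H, W, C) feature maps.

    conv_weight is the length-K kernel (K odd) of the shared, bias-free 1D
    convolution over channels; zero padding keeps C channels.
    """
    assert f_front.shape == f_back.shape
    k = conv_weight.shape[0]
    p = f_front + f_back                      # Eq. 3: element-wise fusion
    s = p.mean(axis=(0, 1))                   # GAP -> channel descriptor (C,)
    s_pad = np.pad(s, (k // 2, k // 2))       # zero-pad along the channel axis
    # 1D cross-correlation over neighbouring channels (local interaction).
    z = np.array([s_pad[i:i + k] @ conv_weight for i in range(s.shape[0])])
    w = 1.0 / (1.0 + np.exp(-z))              # Eq. 4: sigmoid weights (C,)
    # Eq. 6: the same weights re-scale both branches (broadcast over H, W).
    return f_front * w, f_back * w, w


rng = np.random.default_rng(0)
ff, fb = rng.normal(size=(2, 14, 14, 256))    # stage-4-like maps
kernel = rng.normal(size=5)                   # K = 5 for C = 256 (Eq. 5)
ff_out, fb_out, weights = deca(ff, fb, kernel)
print(ff_out.shape, weights.shape)
```

Sharing one weight vector between both branches is what couples them: a channel that responds strongly in either view is emphasized in both.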

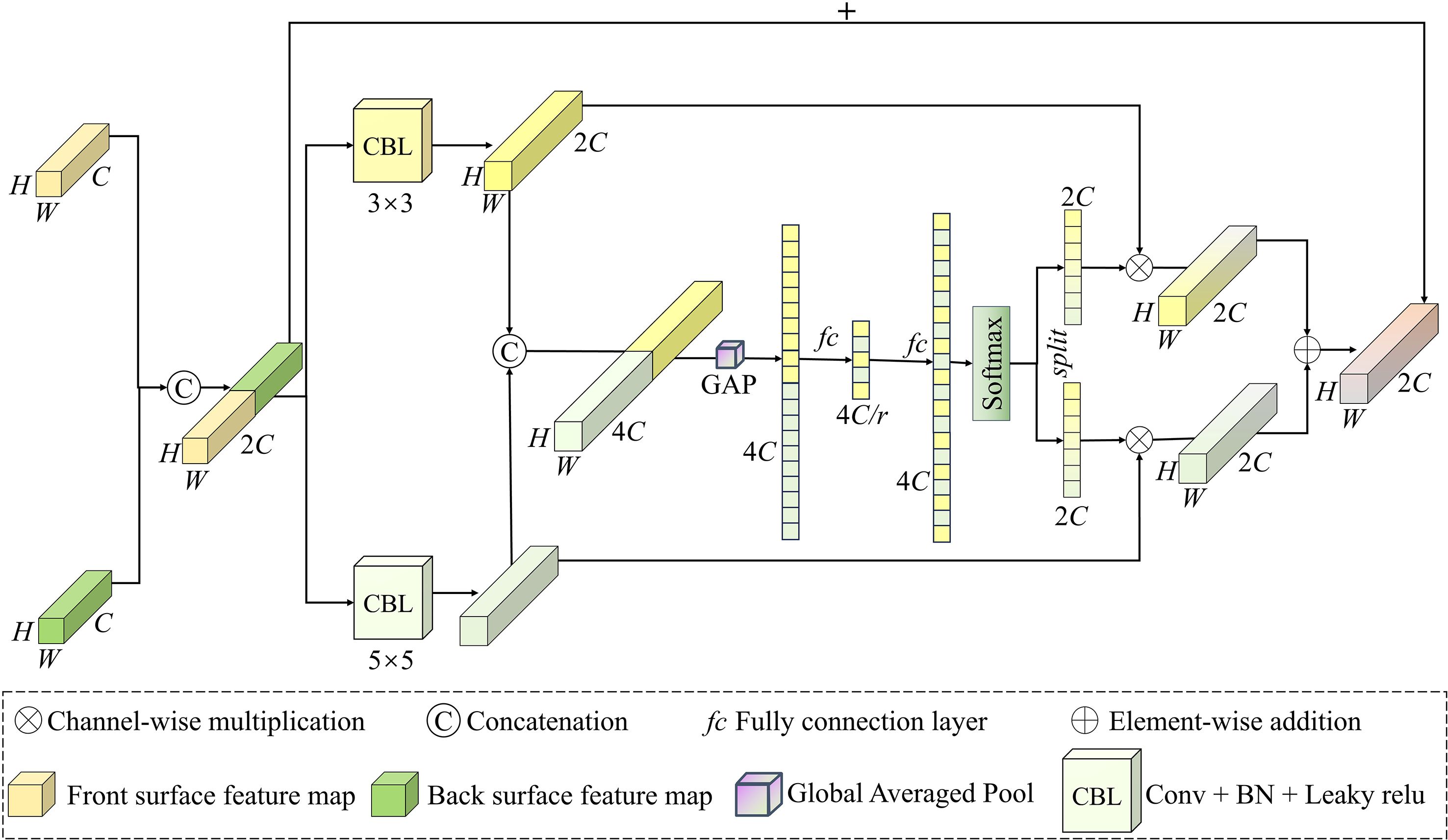

2.4 MSCFF module

To extract information at different scales from the two feature maps, this study designs a multi-scale convolutional feature fusion (MSCFF) module inspired by channel attention and multi-scale feature fusion (Li et al., 2019). As illustrated in Figure 5, the MSCFF module comprises two convolution branches with different kernel sizes, a global pooling layer, fully connected layers, and a Softmax function. Let the front- and back-surface feature maps be $F_f \in \mathbb{R}^{H\times W\times C}$ and $F_b \in \mathbb{R}^{H\times W\times C}$, where $H$, $W$, and $C$ denote the height, width, and number of channels. First, the two feature maps are concatenated along the channel axis to obtain $Y \in \mathbb{R}^{H\times W\times 2C}$, as shown in Equation 7:

$$Y = \mathrm{Concat}\left(F_f, F_b\right) \tag{7}$$

Then, two convolution kernels of different sizes are applied to $Y$ to extract features under different receptive fields. The outputs of the two branches, $Y_1, Y_2 \in \mathbb{R}^{H\times W\times 2C}$, are concatenated to obtain $\hat{Y} \in \mathbb{R}^{H\times W\times 4C}$, as shown in Equations 8–10:

$$Y_1 = \sigma\left(\mathrm{BN}\left(\mathrm{Conv}_{3\times 3}(Y)\right)\right) \tag{8}$$

$$Y_2 = \sigma\left(\mathrm{BN}\left(\mathrm{Conv}_{5\times 5}(Y)\right)\right) \tag{9}$$

$$\hat{Y} = \mathrm{Concat}\left(Y_1, Y_2\right) \tag{10}$$

where $\mathrm{Conv}_{3\times 3}$ and $\mathrm{Conv}_{5\times 5}$ denote convolutions with kernel sizes 3 and 5, respectively, BN denotes batch normalization, and $\sigma$ denotes the Leaky ReLU activation function. To balance the contributions of the two scale branches at the channel level, global average pooling over the spatial dimensions of $\hat{Y}$ yields the channel descriptor $S \in \mathbb{R}^{1\times 1\times 4C}$. The descriptor is then passed through two fully connected layers in series, with a ReLU between them and a channel reduction ratio $r$ (fixed to $r = 16$ throughout this paper), to obtain $S' \in \mathbb{R}^{1\times 1\times 4C}$, as shown in Equation 11:

$$S' = \mathrm{FC}_2\left(\mathrm{ReLU}\left(\mathrm{FC}_1(S)\right)\right) \tag{11}$$

where $\mathrm{FC}_1$ reduces the $4C$ channels to $4C/r$ and $\mathrm{FC}_2$ restores them to $4C$. A Softmax operation is applied to $S'$ along the channel dimension to produce a normalized weight vector, which is split equally into $S_1, S_2 \in \mathbb{R}^{1\times 1\times 2C}$. After $S_1$ and $S_2$ are broadcast over the spatial dimensions, they are multiplied element-wise with the corresponding scale features to obtain $\tilde{Y}_1, \tilde{Y}_2 \in \mathbb{R}^{H\times W\times 2C}$. Finally, the two modulated feature maps are added element-wise and fused with the original $Y$ through a residual connection to obtain the feature map $O \in \mathbb{R}^{H\times W\times 2C}$, as shown in Equation 12:

$$O = S_1 \otimes Y_1 + S_2 \otimes Y_2 + Y \tag{12}$$
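A NumPy sketch of one MSCFF forward pass (Equations 7–12) for a single (H, W, C) sample. Assumptions not taken from the paper: BN is simplified to per-channel normalization of the single sample with unit scale and zero shift, the Leaky ReLU slope is 0.01 (PyTorch's default), the convolutions and FC layers have no bias, the weights are random, and C = 32 keeps the demo fast (CADFFNet18's stage-5 maps have C = 512). The softmax is taken over all 4C entries of S′ before splitting, as the text describes; a selective-kernel-style design would instead normalize each channel across the two branches.

```python
import numpy as np


def conv2d_same(x, w):
    """'Same'-padded 2D convolution (cross-correlation) of an (H, W, Cin) map
    with weights of shape (k, k, Cin, Cout), k odd, no bias."""
    k = w.shape[0]
    h, wd, _ = x.shape
    xp = np.pad(x, ((k // 2, k // 2), (k // 2, k // 2), (0, 0)))
    out = np.zeros((h, wd, w.shape[3]))
    for i in range(k):
        for j in range(k):
            out += xp[i:i + h, j:j + wd, :] @ w[i, j]   # (H, W, Cin) @ (Cin, Cout)
    return out


def batch_norm(x, eps=1e-5):
    # Simplified BN: normalise each channel over H and W of this one sample.
    return (x - x.mean(axis=(0, 1))) / np.sqrt(x.var(axis=(0, 1)) + eps)


def leaky_relu(x, slope=0.01):
    return np.where(x > 0, x, slope * x)


def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()


def mscff(f_front, f_back, params):
    y = np.concatenate([f_front, f_back], axis=-1)              # Eq. 7: (H, W, 2C)
    y1 = leaky_relu(batch_norm(conv2d_same(y, params["w3"])))   # Eq. 8: 3x3 branch
    y2 = leaky_relu(batch_norm(conv2d_same(y, params["w5"])))   # Eq. 9: 5x5 branch
    y_hat = np.concatenate([y1, y2], axis=-1)                   # Eq. 10: (H, W, 4C)
    s = y_hat.mean(axis=(0, 1))                                 # GAP -> (4C,)
    s_prime = params["fc2"] @ np.maximum(params["fc1"] @ s, 0)  # Eq. 11
    s1, s2 = np.split(softmax(s_prime), 2)                      # softmax over 4C, split
    return s1 * y1 + s2 * y2 + y                                # Eq. 12: residual sum


rng = np.random.default_rng(0)
C, r = 32, 16                                   # small C so the demo runs fast
ff, fb = rng.normal(size=(2, 7, 7, C))          # stage-5-like maps (H = W = 7)
params = {
    "w3": rng.normal(scale=0.05, size=(3, 3, 2 * C, 2 * C)),
    "w5": rng.normal(scale=0.05, size=(5, 5, 2 * C, 2 * C)),
    "fc1": rng.normal(scale=0.1, size=(4 * C // r, 4 * C)),
    "fc2": rng.normal(scale=0.1, size=(4 * C, 4 * C // r)),
}
out = mscff(ff, fb, params)
print(out.shape)  # (7, 7, 64)
```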

3 Results

3.1 Experimental conditions and evaluation metrics

The experiments were run on an Intel Core i7-12700 CPU and an NVIDIA GeForce RTX 3060 Ti with 16 GB of memory, using CUDA 12.6 for GPU-based training under Windows 10. The software environment is Python 3.9 with the PyTorch 4.2.1 framework. Training uses the Adam optimizer with a batch size of 16 and an initial learning rate of 0.0001. The performance and generalization ability of CADFFNet for estimating CLMC during air-curing are quantified with the coefficient of determination (R2), root mean squared error (RMSE), and mean absolute error (MAE), calculated as in Equations 13–15. R2 reflects the overall goodness of fit, RMSE reflects the dispersion of prediction errors (weighting large errors more heavily), and MAE represents the average absolute prediction error. Higher R2 and lower RMSE and MAE indicate better predictive performance.

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{13}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{14}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{15}$$

where $y_i$ and $\hat{y}_i$ are the measured and model-predicted CLMC of the $i$-th sample, $\bar{y}$ is the mean of the measured values, and $n$ is the total number of samples.
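A minimal NumPy sketch of Equations 13–15, assuming the usual 1 − SS_res/SS_tot definition of R2; the input values are hypothetical. scikit-learn's `r2_score`, `mean_squared_error`, and `mean_absolute_error` compute the same quantities.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """R2, RMSE and MAE for measured vs predicted CLMC (%)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y_true - y_pred
    ss_res = np.sum(resid ** 2)                       # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)    # total sum of squares
    return {
        "R2": float(1.0 - ss_res / ss_tot),
        "RMSE": float(np.sqrt(np.mean(resid ** 2))),
        "MAE": float(np.mean(np.abs(resid))),
    }


# Hypothetical measured and predicted CLMC values (%), for illustration only.
print(regression_metrics([80.0, 60.0, 40.0, 20.0], [78.0, 63.0, 41.0, 18.0]))
```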

3.2 Prediction results of different model variants

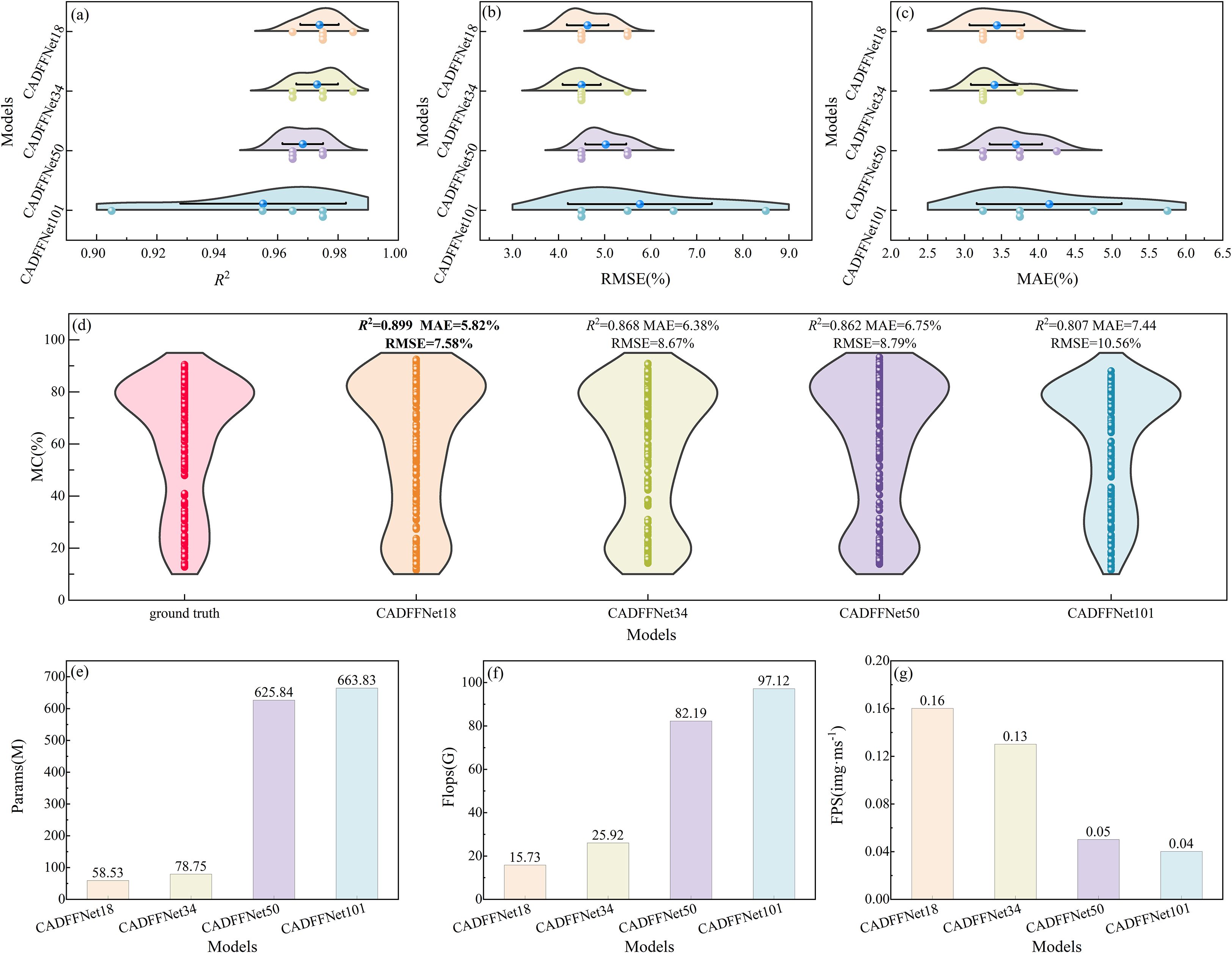

Using ResNet backbones of different depths, four CADFFNet variants are constructed: CADFFNet18, CADFFNet34, CADFFNet50, and CADFFNet101. Each model is evaluated by 5-fold cross-validation and tested independently on the external dataset.

Figure 6 provides a comprehensive comparison of these four models, covering cross-validation performance, prediction accuracy on the independent test set, and overall model complexity. Figures 6a–c show that CADFFNet18 outperforms the other variants in both accuracy and consistency: in five-fold cross-validation, its mean R2, MAE, and RMSE reached 0.974, 3.80%, and 4.63%, respectively, with smaller variation across folds.

Figure 6. Performance comparison and complexity analysis of CADFFNet models of different depths under 5-fold cross-validation and independent testing. Subfigures (a–c) show the distributions of R2, RMSE, and MAE from five-fold cross-validation. The colored dots represent each fold's metric value for the four model variants. Each distribution shape is a kernel density estimate of the metric, and its width reflects the probability density of the results; narrower shapes indicate less fluctuation between folds and greater stability. The blue dots and error bars represent the five-fold means and their 95% confidence intervals, respectively. Subfigure (d) shows the ground truth and the predictions of the models of different depths on the test set. Subfigures (e–g) show the parameter counts (Params), floating-point operations (FLOPs), and inference speed (FPS) of the four model variants, respectively.
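The caption does not state how the 95% confidence intervals were computed. One common choice for five folds is a Student-t interval around the mean of the five fold scores, sketched below with the standard library; the per-fold values are made up for illustration and are not the paper's data.

```python
import statistics

T_CRIT_95_DF4 = 2.776  # two-sided 95% Student-t critical value, n - 1 = 4


def fold_summary(values):
    """Mean, sample SD and t-based 95% CI over exactly 5 CV folds."""
    if len(values) != 5:
        raise ValueError("this sketch hard-codes the t value for 5 folds")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)                    # sample SD (n - 1)
    half_width = T_CRIT_95_DF4 * sd / len(values) ** 0.5
    return mean, sd, (mean - half_width, mean + half_width)


# Hypothetical per-fold R2 values.
mean, sd, ci = fold_summary([0.90, 0.92, 0.94, 0.96, 0.98])
print(f"R2 = {mean:.3f} ± {sd:.3f}, 95% CI [{ci[0]:.3f}, {ci[1]:.3f}]")
```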

As network depth increases, CADFFNet34 and CADFFNet50 gain representational capacity, but their performance declines slightly, likely because of overfitting on the limited dataset. CADFFNet101 shows poorer prediction performance and generalization ability, with mean R2, MAE, and RMSE of 0.955, 4.15%, and 5.77% and standard deviations of 0.027, 0.98%, and 1.56%, respectively. Figure 6d compares the predicted moisture content with the ground truth on the independent test set. Among all models, CADFFNet18 demonstrates the best generalization ability, with R2, MAE, and RMSE of 0.899, 5.82%, and 7.58%, respectively. Figures 6e–g further show that CADFFNet18 achieves the best prediction performance and generalization ability while having fewer parameters, lower computational complexity, and faster inference than the other variants.

In summary, CADFFNet18 offers the best balance between accuracy and computational efficiency, outperforming the deeper variants in both robustness and practicality. Therefore, only CADFFNet18 is discussed in the following sections.

4 Discussion

4.1 Ablation experiment

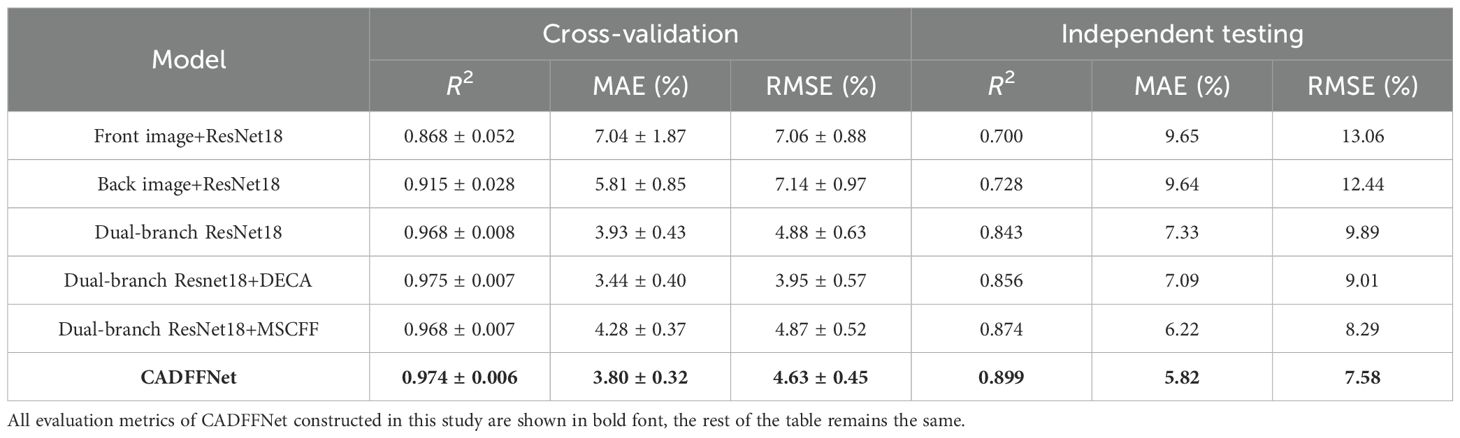

An ablation study was conducted with the best-performing variant, CADFFNet18, on the constructed dataset to validate the effectiveness of the CADFFNet architecture. Single-branch ResNet18 models using front-view or back-view images served as baselines. A dual-branch ResNet18 was then used as the backbone, and the DECA and MSCFF modules were incorporated incrementally. Each configuration was evaluated by five-fold cross-validation and on the independent test set to assess predictive performance and generalization capability. Table 2 summarizes the results: the dual-branch variant clearly improves accuracy and robustness compared with the single-branch ResNet18 models. This demonstrates that jointly using front- and back-view images enhances the model's ability to capture the structural characteristics of cigar leaves throughout air-curing.

Adding the DECA module further improves predictive performance, suggesting that it enhances the network's ability to model symmetric and complementary patterns between the dual-view features. Similarly, the MSCFF module contributes to better integration of the features extracted by the two branches. Notably, relative to the dual-branch ResNet18 baseline, adding DECA alone outperforms adding MSCFF alone in five-fold cross-validation, whereas the independent test set shows the opposite trend. A plausible explanation is that DECA emphasizes channel-level alignment of complementary front and back features, which reduces bias within same-source folds, whereas MSCFF focuses on multi-scale salient information and robustness, which makes it more sensitive to the appearance and scale variations of cigar leaves during air-curing and thus better suited to the independent test set.

Ultimately, integrating both DECA and MSCFF into the full CADFFNet architecture achieves the best performance in all evaluation settings, with R2, MAE, and RMSE of 0.974 ± 0.007, 3.80 ± 0.37%, and 4.63 ± 0.45% in cross-validation and 0.899, 5.82%, and 7.58% on the independent test set. These results confirm the complementary strengths of the two modules and the overall effectiveness of the proposed network design.

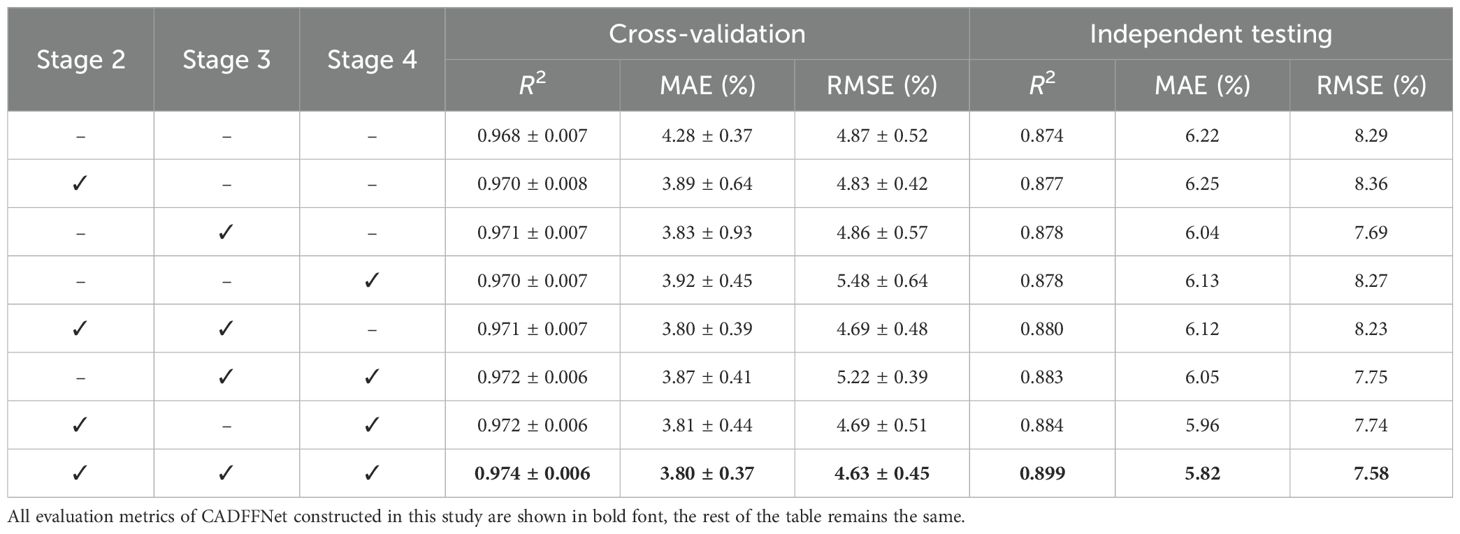

4.2 Impact of the number of DECA modules on the model

DECA modules are placed at three key stages of the dual-branch network. To further explore how the number and position of DECA modules affect model performance, eight configurations were constructed by varying the number and insertion positions of DECA modules. Their performance in five-fold cross-validation and on the independent test set is presented in Table 3.
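Assuming the eight configurations are all subsets of the three insertion positions, including the no-DECA dual-branch baseline (1 + 3 + 3 + 1 = 8, consistent with the single-, two-, and three-module settings discussed in this section), they can be enumerated as follows; the position labels are hypothetical.

```python
from itertools import combinations

# Hypothetical labels for the three DECA insertion stages.
DECA_POSITIONS = ("P1", "P2", "P3")


def deca_configurations(positions=DECA_POSITIONS):
    """All subsets of insertion positions: 2**3 = 8 configurations, from no
    DECA (plain dual-branch baseline) up to all three modules."""
    configs = []
    for n in range(len(positions) + 1):
        configs.extend(combinations(positions, n))
    return configs


for cfg in deca_configurations():
    print(len(cfg), cfg)
```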

During cross-validation, all configurations exhibited similar predictive performance, with R2 values ranging from 0.968 to 0.974 and MAE values ranging from 3.80% to 4.28%, indicating stable model behavior under limited data conditions. However, noticeable differences emerged in the independent testing results.

Specifically, when a single DECA module was inserted, the model's prediction accuracy on the test set improved compared to the baseline, and the module's insertion position had a minimal effect on performance. Further improvements were observed when two DECA modules were incorporated, with test set R2 values reaching 0.880, 0.883, and 0.884, depending on the placement strategy. The best performance was achieved when all three DECA modules were inserted into the network.

These results suggest that inserting DECA modules at multiple levels of the dual-branch architecture facilitates the interaction and fusion of feature maps at different scales. A plausible reason is that this design helps the model capture correlations between the shallow features (such as edges and textures) of the front and back images while also improving its understanding of deeper, more abstract representations, which in turn enhances its predictive performance and generalization capability.

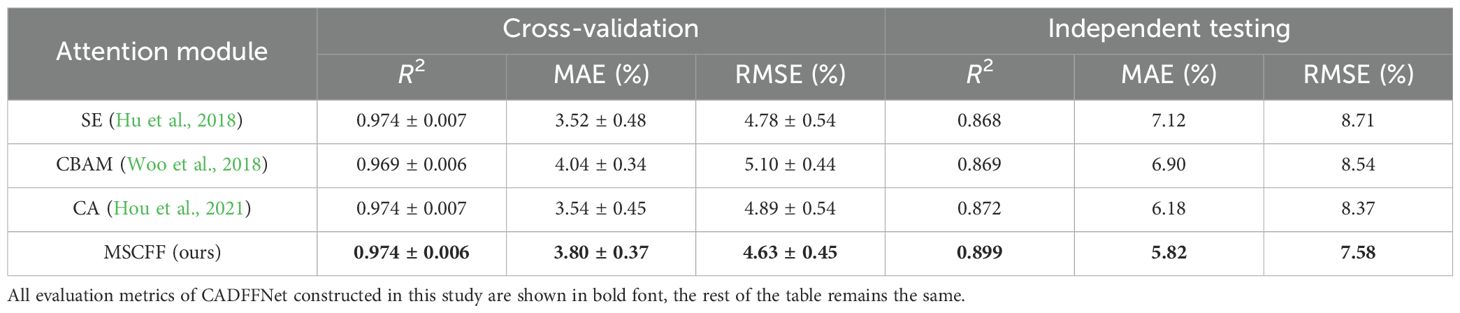

4.3 Impact of different feature fusion strategies on the model

To verify the advantages of the MSCFF module in the feature fusion stage, we replaced it with three popular attention modules for comparison: Squeeze-and-Excitation (SE), Convolutional Block Attention Module (CBAM), and Coordinate Attention (CA). Table 4 highlights that the proposed MSCFF module demonstrates the highest predictive performance and the lowest standard deviation during the five-fold cross-validation, and also achieves the best accuracy on the independent test set. The CA module attains the second-best performance, while the SE module yields the poorest results.

These findings suggest that combining multi-scale feature construction with adaptive channel weight assignment improves the network's capacity to integrate local and global information from feature maps, and thereby its predictive performance and generalization ability.

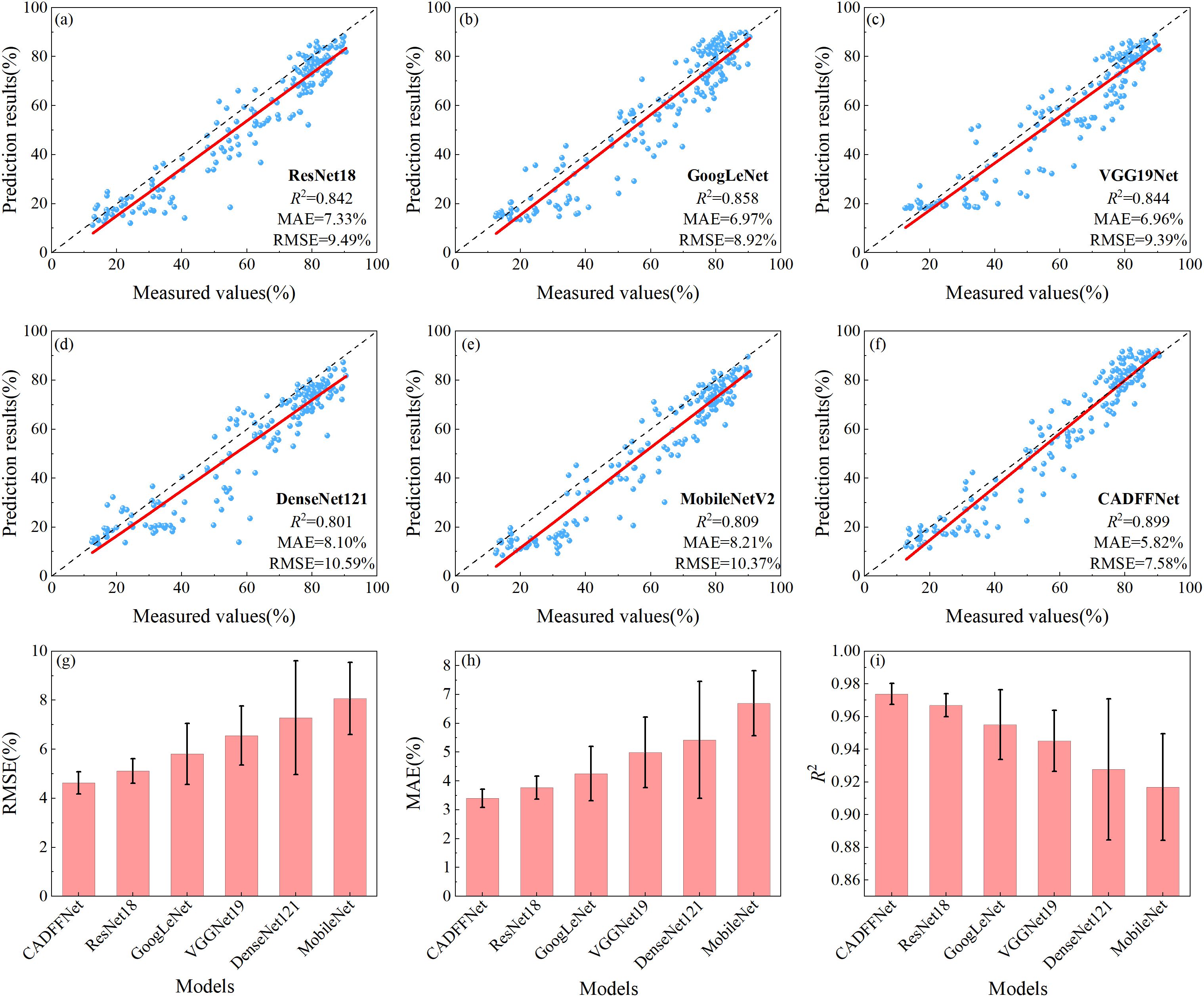

4.4 Comparison with other CNN models

We compared CADFFNet against dual-branch network models with different neural network architectures as backbones for predicting cigar leaf moisture content during the curing process. The models employed were ResNet18 (He et al., 2016), GoogLeNet (Szegedy et al., 2016), VGG19Net (Simonyan and Zisserman, 2015), DenseNet121 (Huang et al., 2017), and MobileNetV2 (Sandler et al., 2018).

Figures 7a–f present the prediction results of the different models on the independent test set. CADFFNet achieves the closest agreement between the predicted and measured moisture content of cigar leaves, with the highest R2 and the lowest MAE and RMSE, clearly outperforming the other CNN-based architectures. Among the remaining five models, GoogLeNet performs best, followed by ResNet18, which indirectly supports the effectiveness of multi-scale feature fusion and residual connections. In contrast, DenseNet121 gives the weakest results, with R2, MAE, and RMSE of 0.801, 8.10%, and 10.59%.
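The R2 gains quoted in the abstract imply each baseline's test-set R2 (CADFFNet's 0.899 minus the gain). This quick check, which is arithmetic on the reported numbers only, reproduces DenseNet121's 0.801 and gives the full ranking of the dual-branch baselines.

```python
# CADFFNet's independent-test R2 and the R2 gains reported in the abstract.
CADFFNET_R2 = 0.899
R2_GAIN = {
    "ResNet18": 0.047,
    "GoogLeNet": 0.041,
    "VGG19Net": 0.055,
    "DenseNet121": 0.098,
    "MobileNetV2": 0.090,
}

# Implied test-set R2 of each baseline, printed best first.
implied = {name: round(CADFFNET_R2 - gain, 3) for name, gain in R2_GAIN.items()}
for name, r2 in sorted(implied.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:12s} R2 = {r2:.3f}")
```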

Figure 7. Performance comparison of different CNN models. (a–f) Prediction results of each model on the independent test set; (g–i) R², MAE, and RMSE values obtained from five-fold cross-validation for each model.

Figures 7g–i show the R², MAE, and RMSE values of each model under five-fold cross-validation. The results indicate that CADFFNet has the highest robustness and generalization capability among the compared models, further confirming the advantages of the proposed architecture.
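The "mean ± standard deviation" figures reported for five-fold cross-validation can be reproduced in outline with the sketch below. It assumes that each sample index identifies one leaf's front/back image pair, so both views of a leaf always fall in the same fold and cannot leak between training and validation, and that the spread is the sample standard deviation; the paper does not state either choice here. The helper names `kfold_indices` and `summarize_folds` and the example scores are hypothetical.

```python
import random
import statistics


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation (hypothetical helper).

    Each index stands for one leaf, i.e. one front/back image pair, so the two
    views of a leaf are never split across training and validation.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def summarize_folds(fold_scores):
    """Mean and sample standard deviation of one metric across folds."""
    return statistics.mean(fold_scores), statistics.stdev(fold_scores)


folds = list(kfold_indices(23, k=5))
# Hypothetical per-fold R2 values, not results from this study.
mean_r2, sd_r2 = summarize_folds([0.97, 0.98, 0.96, 0.97, 0.98])
```

Reporting the standard deviation alongside the mean is what allows the fold-to-fold stability of CADFFNet to be compared with that of the other backbones.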

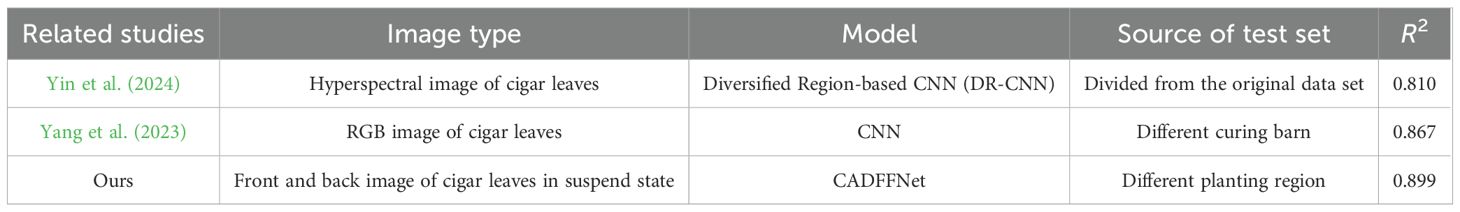

4.5 Comparison with other studies

In this study, we developed a dual-branch CNN model that dynamically perceives and fuses features from dual-view images of cigar leaves to enhance prediction performance. To place CADFFNet in context, we compared its prediction performance with that reported by Yin et al. (2024) and Yang et al. (2023), although those studies used different raw data and methods (Table 5). CADFFNet achieves higher CLMC prediction accuracy than both approaches. This suggests that the dual-branch network can better capture the changes in the apparent morphology of cigar leaves caused by water loss during the air-curing stage. Overall, these results indicate that CADFFNet predicts CLMC efficiently and accurately, with good generalization ability.

4.6 Limitations and research prospects

During the air-curing process, cigar leaves change in color and morphology as they lose water, and operators assess leaf appearance to estimate moisture content (Fu et al., 2024). Accurate, non-destructive estimation of CLMC during the air-curing stage allows technicians to monitor leaf condition more closely and to adjust the air-curing technique in a timely and precise manner. Despite the strong predictive performance of the proposed model, several limitations remain. First, as illustrated in Figure 6, the model still has difficulty predicting CLMC accurately for cigar leaf samples from other production regions and varieties, indicating that its generalization under heterogeneous data conditions needs further improvement. Second, because the dual-branch architecture processes two input images in parallel, its gains in accuracy and robustness come at the cost of increased computational overhead. Finally, this study is based on static imaging of individual leaves, whereas in actual production, cigar leaves are hung on whole stalks and air-cured at room scale. In-situ image acquisition in such settings must cope with occlusion and illumination variation, so a gap remains before practical deployment.
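The computational overhead of the dual-branch design can be estimated with simple arithmetic. The sketch below assumes, as "two independent and parallel" backbones suggests, that the branches do not share weights, so backbone parameters and multiply-accumulate operations (MACs) both scale with the number of branches before the DECA and MSCFF modules are counted. The helper names and the example layers are hypothetical: two bias-free 3 × 3, 64-channel convolutions on a 56 × 56 map, the shape of one basic block in ResNet18's first stage for a 224 × 224 input.

```python
def conv2d_params(k, c_in, c_out, bias=False):
    """Learnable weights in a k x k 2D convolution layer (hypothetical helper)."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def conv2d_macs(k, c_in, c_out, h_out, w_out):
    """Multiply-accumulate operations for one forward pass of that layer."""
    return k * k * c_in * c_out * h_out * w_out


def dual_branch_cost(layers, n_branches=2):
    """Total (params, MACs) when identical, unshared backbones run in parallel.

    layers: list of (kernel, c_in, c_out, h_out, w_out) tuples for one branch.
    """
    params = sum(conv2d_params(k, ci, co) for k, ci, co, _, _ in layers)
    macs = sum(conv2d_macs(k, ci, co, h, w) for k, ci, co, h, w in layers)
    return n_branches * params, n_branches * macs


# Two 3 x 3, 64 -> 64 convolutions on a 56 x 56 map (one hypothetical block).
stage = [(3, 64, 64, 56, 56), (3, 64, 64, 56, 56)]
params, macs = dual_branch_cost(stage)
```

Sharing weights between the branches would halve the parameter count but not the MACs, since each view still has to pass through the backbone; reducing inference cost would therefore require lighter backbones or earlier fusion rather than weight sharing alone.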

Future research will proceed in several directions. First, we will collect cigar leaf images from multiple regions and varieties to expand the dataset, and use curing data collected each year to iteratively update the model and further enhance its robustness. Second, we will incorporate prior knowledge, such as fresh cigar leaf quality, barn temperature and humidity, and curing duration, into model training, and conduct continuous, high-frequency, multi-view in-situ imaging of whole-stalk leaves throughout curing. Based on these data, we aim to develop a non-destructive model that predicts the moisture-content–time trajectory over the curing process. Finally, we plan to explore the relationships between cigar leaf images and chemical indicators that directly characterize leaf quality, such as total sugar and nicotine content, to further optimize the cigar leaf air-curing process.

5 Conclusion

This study introduces CADFFNet, a novel CNN model for non-destructive CLMC detection during the air-curing process that integrates dual-view images of cigar leaves. To capture the complementary pattern changes in the front and back images, we employ a dual-branch network structure that processes the two images in parallel. We further design the DECA and MSCFF modules, which allow feature maps at different levels to interact and fuse at various stages of the network. The channel attention mechanism enables the model to enhance key leaf features while suppressing irrelevant information that may interfere with prediction. Experimental results show that CADFFNet achieves excellent prediction performance on cigar leaves from the same region and variety, with an R² of 0.974 ± 0.007 and an MAE of 3.80 ± 0.37% in five-fold cross-validation. It also performs well in cross-region and cross-variety prediction, with R² and MAE values of 0.899 and 5.82%, respectively, on the independent test set.

In summary, this study provides a convenient, non-destructive method for detecting CLMC and offers a theoretical basis for automating the cigar leaf air-curing process. Furthermore, the proposed approach of interacting and fusing front and back leaf images may offer a new solution for other plant-leaf pattern recognition tasks, such as leaf disease identification, crop classification, and plant growth stage assessment.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

ZX: Data curation, Software, Writing – original draft, Investigation, Formal analysis. YS: Validation, Resources, Formal analysis, Visualization, Data curation, Investigation, Writing – review & editing. YP: Formal analysis, Conceptualization, Writing – review & editing. KZ: Project administration, Writing – review & editing, Methodology, Investigation, Resources, Conceptualization. ZW: Writing – review & editing. BL: Writing – review & editing, Validation, Funding acquisition, Visualization. XS: Data curation, Investigation, Writing – review & editing, Validation, Visualization. SD: Formal analysis, Validation, Project administration, Data curation, Methodology, Conceptualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was supported by the National Natural Science Foundation of China (32101851), the Key R&D and Promotion Projects in Henan Province (222102110163), and the Science and Technology Project of the Hunan Branch of China National Tobacco Corporation (HN2022KJ03). We are grateful to the editor and anonymous reviewers.

Conflict of interest

Author ZW was employed by Zhangjiajie City Branch of Hunan Tobacco Company.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abbreviations

CLMC, cigar leaf moisture content; NMR, nuclear magnetic resonance; CADFFNet, channel attention weight-based dual-branch feature fusion network; DL, deep learning; ML, machine learning; CNN, convolutional neural network; DECA, dual-branch efficient channel attention; MSCFF, multi-scale convolutional feature fusion; R², coefficient of determination; MAE, mean absolute error; RMSE, root mean square error; SE, Squeeze-and-Excitation; CBAM, Convolutional Block Attention Module; CA, Coordinate Attention.

References

Chen, Y., Huang, Y., Zhang, Z., Wang, Z., Liu, B., Liu, C., et al. (2023). Plant image recognition with deep learning: A review. Comput. Electron. Agric. 212, 108072. doi: 10.1016/j.compag.2023.108072

Chen, J., Li, Y., He, X., Jiao, F., Xu, M., Hu, B., et al. (2021). Influences of different curing methods on chemical compositions in different types of tobaccos. Ind. Crops Prod. 167, 113534. doi: 10.1016/j.indcrop.2021.113534

Condorí, M., Albesa, F., Altobelli, F., Duran, G., and Sorrentino, C. (2020). Image processing for monitoring of the cured tobacco process in a bulk-curing stove. Comput. Electron. Agric. 168, 105113. doi: 10.1016/j.compag.2019.105113

Dai, Y., Meng, L., Sun, F., and Wang, S. (2025). Lightweight multi-scale feature dense cascade neural network for scene understanding of intelligent autonomous platform. Expert Syst. Appl. 259, 125354. doi: 10.1016/j.eswa.2024.125354

Dai, Y., Zhao, P., and Wang, Y. (2024). Maturity discrimination of tobacco leaves for tobacco harvesting robots based on a Multi-Scale branch attention neural network. Comput. Electron. Agric. 224, 109133. doi: 10.1016/j.compag.2024.109133

Feng, C., Zhu, S., Tang, M., Zhao, H., Yuan, Q., and Wang, B. (2025). Study on image acquisition and camera positioning of depth recognition model in the tobacco curing stage. Eng. Appl. Artif. Intell. 143, 109992. doi: 10.1016/j.engappai.2024.109992

Fu, K., Song, X., Cui, Y., Zhou, Q., Yin, Y., Zhang, J., et al. (2024). Analyzing the quality differences between healthy and moldy cigar tobacco leaves during the air-curing process through fungal communities and physicochemical components. Front. Microbiol. 15. doi: 10.3389/fmicb.2024.1399777

Gao, R., Wang, R., Feng, L., Li, Q., and Wu, H. (2021). Dual-branch, efficient, channel attention-based crop disease identification. Comput. Electron. Agric. 190, 106410. doi: 10.1016/j.compag.2021.106410

Guo, J., Huang, H., He, X., Cai, J., Zeng, Z., Ma, C., et al. (2023). Improving the detection accuracy of the nitrogen content of fresh tea leaves by combining FT-NIR with moisture removal method. Food Chem. 405, 134905. doi: 10.1016/j.foodchem.2022.134905

He, K., Zhang, X., Ren, S., and Sun, J. (2016). "Deep residual learning for image recognition," in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA. 770–778. doi: 10.1109/CVPR.2016.90

Hou, Q., Zhou, D., and Feng, J. (2021). “Coordinate attention for efficient mobile network design,” in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 13708–13717. doi: 10.1109/CVPR46437.2021.01350

Hu, J., Shen, L., and Sun, G. (2018). "Squeeze-and-excitation networks," in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA. 7132–7141. doi: 10.1109/CVPR.2018.00745

Huang, G., Liu, Z., van der Maaten, L., and Weinberger, K. Q. (2017). "Densely connected convolutional networks," in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA. 2261–2269. doi: 10.1109/CVPR.2017.243

Jiang, X., Wang, J., Qin, Y., Li, Y., Ji, Y., Yang, A., et al. (2024). Whole-genome resequencing reveals genetic differentiation in cigar tobacco population. Ind. Crops Prod. 210, 118153. doi: 10.1016/j.indcrop.2024.118153

Jin, G., Zhu, Z., Wu, Z., Wang, F., Li, J., Raghavan, V., et al. (2023). Characterization of volatile components of microwave dried perilla leaves using GC–MS and E-nose. Food Biosci. 56, 103083. doi: 10.1016/j.fbio.2023.103083

Kosfeld, M., Westphal, B., and Kwade, A. (2022). Correct water content measuring of lithium-ion battery components and the impact of calendering via Karl-Fischer titration. J. Energy Storage 51, 104398. doi: 10.1016/j.est.2022.104398

Lee, S. J., Yun, C., Im, S. J., and Park, K. R. (2024). CNCAN: Contrast and normal channel attention network for super-resolution image reconstruction of crops and weeds. Eng. Appl. Artif. Intell. 138, 109487. doi: 10.1016/j.engappai.2024.109487

Li, X., Wang, W., Hu, X., and Yang, J. (2019). “Selective kernel networks,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA. 510–519. doi: 10.1109/CVPR.2019.00060

Lin, J., Chen, Y., Pan, R., Cao, T., Cai, J., Yu, D., et al. (2022). CAMFFNet: A novel convolutional neural network model for tobacco disease image recognition. Comput. Electron. Agric. 202, 107390. doi: 10.1016/j.compag.2022.107390

Liu, H., Meng, L., Wang, S., Wang, A., Du, H., Zhao, P., et al. (2023). “Study on moisture content prediction of tobacco leaf based on near infrared spectroscopy,” in 2023 7th Asian Conference on Artificial Intelligence Technology (ACAIT). 1413–1418. doi: 10.1109/ACAIT60137.2023.10528519

Ma, C., Zhang, T., Zheng, H., Yang, J., Chen, R., and Fang, C. (2024). Measurement method for live chicken shank length based on improved ResNet and fused multi-source information. Comput. Electron. Agric. 221, 108965. doi: 10.1016/j.compag.2024.108965

Niu, Q., Gui, R., Liu, H., Li, L., Shi, L., Jia, K., et al. (2025). Automated sleep staging model for older adults based on CWT and deep learning. Sci. Rep. 15, 22398. doi: 10.1038/s41598-025-07630-1

Pei, W., Zhou, P., Huang, J., Sun, G., and Liu, J. (2024). State recognition and temperature rise time prediction of tobacco curing using multi-sensor data-fusion method based on feature impact factor. Expert Syst. Appl. 237, 121591. doi: 10.1016/j.eswa.2023.121591

Qu, Y., Liu, Z., Zhang, Y., Yang, J., and Li, H. (2021). Improving the sorting efficiency of maize haploid kernels using an NMR-based method with oil content double thresholds. Plant Methods 17, 2. doi: 10.1186/s13007-020-00703-4

Rahman, A., Street, J., Wooten, J., Marufuzzaman, M., Gude, V. G., Buchanan, R., et al. (2025). MoistNet: Machine vision-based deep learning models for wood chip moisture content measurement. Expert Syst. Appl. 259, 125363. doi: 10.1016/j.eswa.2024.125363

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., and Chen, L.-C. (2018). “MobileNetV2: inverted residuals and linear bottlenecks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT. 4510–4520. doi: 10.1109/CVPR.2018.00474

Shafik, W., Tufail, A., De Silva Liyanage, C., and Apong, R. A. A. H. M. (2024). Using transfer learning-based plant disease classification and detection for sustainable agriculture. BMC Plant Biol. 24, 136. doi: 10.1186/s12870-024-04825-y

Simonyan, K. and Zisserman, A. (2015). Very deep convolutional networks for large-scale image recognition. doi: 10.48550/arXiv.1409.1556

Soneji, S., Mann, C., and Fong, S. (2022). Growth in imported large premium cigar sales, USA 2008–2019. Tob. Control 31, 775–776. doi: 10.1136/tobaccocontrol-2020-056230

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wojna, Z. (2016). “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA. 2818–2826. doi: 10.1109/CVPR.2016.308

Wang, Q., Wu, B., Zhu, P., Li, P., Zuo, W., and Hu, Q. (2020). “ECA-net: efficient channel attention for deep convolutional neural networks,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA. 11531–11539. doi: 10.1109/CVPR42600.2020.01155

Wang, B., Zhang, C., Li, Y., Cao, C., Huang, D., and Gong, Y. (2023). An ultra-lightweight efficient network for image-based plant disease and pest infection detection. Precis. Agric. 24, 1836–1861. doi: 10.1007/s11119-023-10020-0

Wei, Y., He, Y., and Li, X. (2021). Tea moisture content detection with multispectral and depth images. Comput. Electron. Agric. 183, 106082. doi: 10.1016/j.compag.2021.106082

Woo, S., Park, J., Lee, J. Y., and Kweon, I. S. (2018). "CBAM: convolutional block attention module," in Computer vision – ECCV 2018. ECCV 2018. Lecture notes in computer science, vol. 11211. Eds. Ferrari, V., Hebert, M., Sminchisescu, C., and Weiss, Y. (Springer, Cham). doi: 10.1007/978-3-030-01234-2_1

Wu, Y., Huang, J., Yang, C., Yang, J., Sun, G., and Liu, J. (2024). TobaccoNet: A deep learning approach for tobacco leaves maturity identification. Expert Syst. Appl. 255, 124675. doi: 10.1016/j.eswa.2024.124675

Wu, C., Qian, J., Zhang, J., Wang, J., Li, B., and Wei, Z. (2022). Moisture measurement of tea leaves during withering using multifrequency microwave signals optimized by ant colony optimization. J. Food Eng. 335, 111174. doi: 10.1016/j.jfoodeng.2022.111174

Wu, J., Yang, S. X., and Tian, F. (2014). A novel intelligent control system for flue-curing barns based on real-time image features. Biosyst. Eng. 123, 77–90. doi: 10.1016/j.biosystemseng.2014.05.008

Xing, Z., Shi, Y., Zhang, K., Ding, S., and Shi, X. (2025). Moisture content prediction of cigar leaves air-curing process based on stacking ensemble learning model. Front. Plant Sci. 16. doi: 10.3389/fpls.2025.1553110

Xu, X., Li, H., Yin, F., Xi, L., Qiao, H., Ma, Z., et al. (2020). Wheat ear counting using K-means clustering segmentation and convolutional neural network. Plant Methods 16, 106. doi: 10.1186/s13007-020-00648-8

Yang, L., Liu, L., Ji, L., Jiang, C., Jiang, Z., Li, D., et al. (2024a). Analysis of differences in aroma and sensory characteristics of the mainstream smoke of six cigars. Heliyon 10, e26630. doi: 10.1016/j.heliyon.2024.e26630

Yang, S., Jia, Z., Yi, K., Zhang, S., Zeng, H., Qiao, Y., et al. (2024b). Rapid prediction and visualization of safe moisture content in alfalfa seeds based on multispectral imaging technology. Ind. Crops Prod. 222, 119448. doi: 10.1016/j.indcrop.2024.119448

Yang, H., Zhang, T., Yang, W., Xiang, H., Liu, X., Zhang, H., et al. (2023). Moisture content monitoring of cigar leaves during drying based on a Convolutional Neural Network. Int. Agrophysics 37, 225–234. doi: 10.31545/intagr/165775

Yin, J., Wang, J., Jiang, J., Xu, J., Zhao, L., Hu, A., et al. (2024). Quality prediction of air-cured cigar tobacco leaf using region-based neural networks combined with visible and near-infrared hyperspectral imaging. Sci. Rep. 14, 31206. doi: 10.1038/s41598-024-82586-2

Zhao, P., Wang, S., Duan, S., Wang, A., Meng, L., and Hu, Y. (2024a). TCSRNet: a lightweight tobacco leaf curing stage recognition network model. Front. Plant Sci. 15. doi: 10.3389/fpls.2024.1474731

Zhao, S., Li, Y., Liu, F., Song, Z., Yang, W., Lei, Y., et al. (2024b). Dynamic changes in fungal communities and functions in different air-curing stages of cigar tobacco leaves. Front. Microbiol. 15. doi: 10.3389/fmicb.2024.1361649

Keywords: convolutional neural networks, cigar leaves, dual-view images, feature fusion, moisture content prediction

Citation: Xing Z, Shi Y, Pan Y, Zhang K, Wang Z, Liu B, Shi X and Ding S (2025) CADFFNet: a dual-branch neural network for non-destructive detection of cigar leaf moisture content during air-curing stage. Front. Plant Sci. 16:1698427. doi: 10.3389/fpls.2025.1698427

Received: 04 September 2025; Accepted: 15 October 2025;

Published: 05 November 2025.

Edited by:

Zhenghong Yu, Guangdong Polytechnic of Science and Technology, China

Reviewed by:

Muhammad Waseem, Auburn University, United States

Zhiyu Li, Henan Polytechnic University, China

Xuemei Guan, Northeast Forestry University, China

Copyright © 2025 Xing, Shi, Pan, Zhang, Wang, Liu, Shi and Ding. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Songshuang Ding, shuangsd@henau.edu.cn; Xiangdong Shi, sxd@henau.edu.cn

†These authors have contributed equally to this work
