- 1College of Electrical Engineering and Information, Northeast Agricultural University, Harbin, China

- 2National Key Laboratory of Smart Farm Technologies and Systems, Harbin, China

- 3College of Agriculture, Northeast Agricultural University, Harbin, China

Introduction: The Leaf Area Index (LAI) is a critical biophysical parameter for assessing crop canopy structure and health. Unmanned Aerial Vehicles (UAVs) equipped with multispectral sensors offer a high-throughput solution for LAI estimation, but flight altitude compromises between efficiency and image resolution, ultimately impacting accuracy. This study investigates the integration of super-resolution (SR) image reconstruction with multi-sensor data to enhance LAI estimation for soybeans across varying UAV flight altitudes.

Methods: RGB and multispectral images were captured at four flight altitudes: 15 m, 30 m, 45 m, and 60 m. The acquired images were processed using several SR algorithms (SwinIR, Real-ESRGAN, SRCNN, and EDSR). Texture features were extracted from the RGB images, and LAI estimation models were developed using the XGBoost algorithm, testing data fusion strategies that included RGB-only, multispectral-only, and a combined RGB-multispectral approach.

Results: (1) SR performance declined with increasing altitude, with SwinIR achieving superior image reconstruction quality (PSNR and SSIM) over other methods. (2) Texture features from RGB images showed strong sensitivity to LAI. The XGBoost model leveraging fused RGB and multispectral data achieved the highest accuracy (relative error: 4.16%), outperforming models using only RGB (5.25%) or only multispectral data (9.17%). (3) The application of SR techniques significantly improved model accuracy at 30 m and 45 m altitudes. At 30 m, models incorporating Real-ESRGAN and SwinIR achieved an average R² of 0.86, while at 45 m, these methods yielded models with an average R² of 0.77.

Discussion: The results demonstrate that the fusion of SR-reconstructed imagery with multi-sensor data can effectively mitigate the negative impact of higher flight altitudes on LAI estimation accuracy. This approach provides a robust and efficient framework for UAV-based crop monitoring, enhancing data-driven decision-making in precision agriculture.

1 Introduction

Soybean is a globally important food and oil crop that contributes significantly to food security, serving as a major source of high-quality vegetable protein and essential fatty acids for both human and animal nutrition (Hartman et al., 2011). As both a source of protein and oil, soybeans play a crucial role in enhancing global food security and sustainable development (Chen et al., 2022). The Leaf Area Index (LAI), defined as the area of leaves per unit surface area of a field crop with flat leaves (Watson, 1947), measures the density and extent of vegetation leaf cover (Su et al., 2019). LAI is a critical structural variable in vegetation, indicative of crop growth and health, and is thus employed as an input variable in models that predict crop growth and yield (Fang et al., 2019).

The measurement of crop LAI involves a range of techniques, categorized into direct and indirect methods (Strachan et al., 2005). The direct method typically requires personnel to collect leaf samples from various locations and measure the length, maximum width, and number of leaves. These data are then used to calculate LAI (Shi et al., 2022). Indirect methods, on the other hand, include close-range detection techniques such as the use of handheld measuring devices and fisheye lens photography (Confalonieri et al., 2013), among others. Both methods, however, have limitations regarding sampling range and efficiency.

The adoption of remote sensing technology presents a more effective approach to deriving crop LAI from spectral data (Sadeh et al., 2021; Dhakar et al., 2021). Recent advances have demonstrated that UAV-based multispectral imaging can substantially improve the accuracy of crop LAI and chlorophyll estimation, benefiting from its high spatial resolution and spectral sensitivity (Parida et al., 2024). This technology significantly mitigates the constraints of traditional measurement methods, allowing researchers to economically and swiftly gather extensive crop canopy data (Furbank and Tester, 2011). Nonetheless, the temporal and spatial resolutions of most satellite data do not meet the rigorous demands of precision agriculture. In this context, Unmanned Aerial Vehicle (UAV) technology plays a pivotal role by facilitating high-throughput phenotypic analysis (Chapman et al., 2018). The integration of UAV-based LiDAR and multispectral imaging has proven effective for high-throughput phenotyping of dry bean, enabling accurate, non-destructive estimation of plant height, lodging, and seed yield, highlighting the potential of UAV-based phenotyping in precision agriculture and crop breeding programs (Panigrahi et al., 2025). Operating at lower altitudes and capturing data with higher spatial resolution, UAVs provide more detailed information than satellite data (Araus and Cairns, 2014). Combining UAV RGB and multispectral indices enables accurate field-scale monitoring of nitrogen status and yield prediction (Chatraei Azizabadi et al., 2025). In addition, multi-source UAV data fusion frameworks have been developed for real-time LAI estimation, highlighting the importance of integrating structural and spectral information (Du et al., 2024).

The flight efficiency of UAVs varies with flying altitude, and the time required for large-scale data collection presents significant challenges to precision agriculture. To reduce collection costs, an increase in flying altitude is necessary; however, this compromises image quality and decreases image resolution. Previous studies have typically adjusted flight altitudes to balance flight duration against data accuracy (Jay et al., 2019). However, high-resolution sensors are often costly and limited in coverage, while low-altitude flights are time-consuming and less practical for large-scale monitoring. Therefore, a cost-effective solution is needed to enhance image resolution without additional flight or hardware costs. To address the issue of low resolution in high-altitude imagery, employing Image Super-Resolution (SR) algorithms is considered an appropriate method (Jonak et al., 2024). Image SR involves reconstructing low-resolution (LR) images into high-resolution (HR) images using computer vision algorithms. In remote sensing, SR technology enhances image detail, resolution, and quality through AI models, proving to be of extensive and practical value in fields such as land cover classification, crop monitoring, and object detection. In particular, SR not only improves visual quality but also enhances the extraction of fine textural and structural features from UAV imagery, thereby potentially improving model robustness and predictive accuracy for biophysical variables such as LAI. Nevertheless, few studies have combined UAV-based SR imagery with multispectral data for quantitative crop trait estimation such as LAI, especially for soybean canopies.

This study introduces a novel method that utilizes UAVs and SR techniques to estimate the LAI. Enhancing the resolution of the collected RGB images by a factor of four improves their clarity, thereby minimizing the impact of image resolution on feature detection and enhancing the precision of the model. Additionally, this research develops an ensemble learning regression model that combines visible-light (RGB) and multispectral (MS) information, increasing both the model’s precision and computational efficiency. This approach aims to address the current gap in linking SR-enhanced UAV data with multispectral information for high-accuracy soybean LAI estimation. The goals of this study are to: (1) enhance the resolution of UAV imagery using SR techniques and use these enhanced images to estimate the LAI of soybeans, selecting an appropriate estimation model; (2) determine the combination that yields the highest precision in a multivariate remote sensing imagery dataset (RGB+MS, MS, RGB); (3) assess the key features associated with the LAI.

2 Material and methods

2.1 Study site

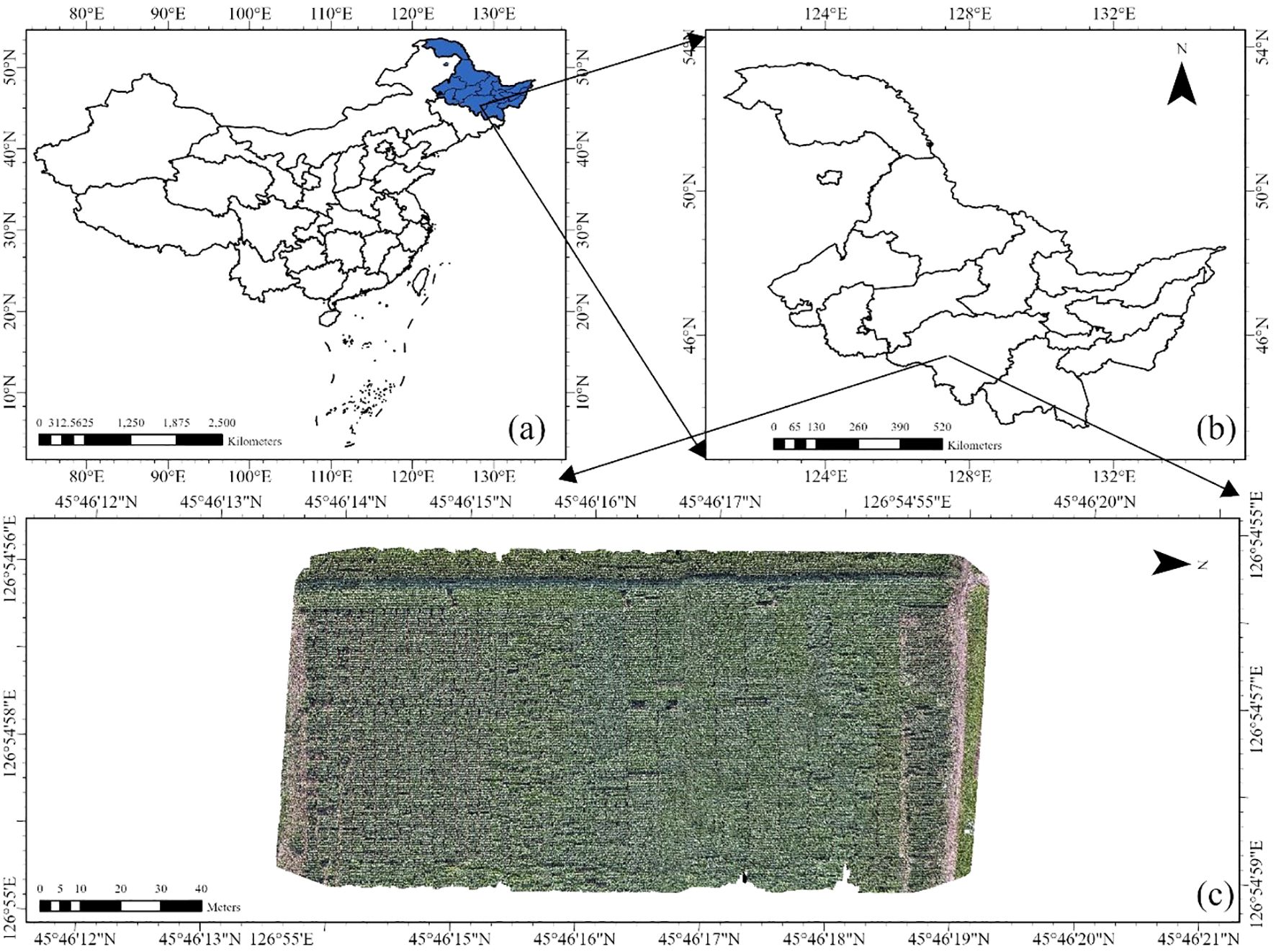

As shown in Figure 1, the study was conducted in 2024 at the Xiangyang Farm, located in Harbin City, Heilongjiang Province, China, within the Northeast Plain, one of the world’s three largest black soil regions. This area is renowned for its fertile soil, which is particularly well-suited for soybean cultivation. Heilongjiang Province, where the site is located, is responsible for producing over 50% of China’s soybean output (National Bureau of Statistics of China, 2022). The geographic coordinates of the site are 45°45’N latitude and 126°54’E longitude, with an average elevation of approximately 150 m. The region experiences a temperate monsoon climate, characterized by an average annual precipitation of 552.9 mm and an average annual temperature of 3.8°C. The majority of the rainfall, over 70%, occurs between May and August (Wikipedia contributors, 2025). In May 2024, 100 soybean varieties, selected for their suitability in Northeast China and varying in yield and growth periods, were planted. These varieties were also used for model validation to assess the robustness of the estimation results across different genotypes. The names of all varieties are listed in Appendix Table A2. The management of pesticides and fertilizers adhered to local practices.

Figure 1. Location of the study area and experimental site design at the Xiangyang site of Northeast Agricultural University in Harbin, Heilongjiang Province, China. (a) Geographic location of Heilongjiang Province (highlighted in blue) within China, (b) Map of Heilongjiang Province showing the specific location of the study area, (c) Layout of the experimental areas and treatments at the Xiangyang site.

2.2 LAI estimation framework

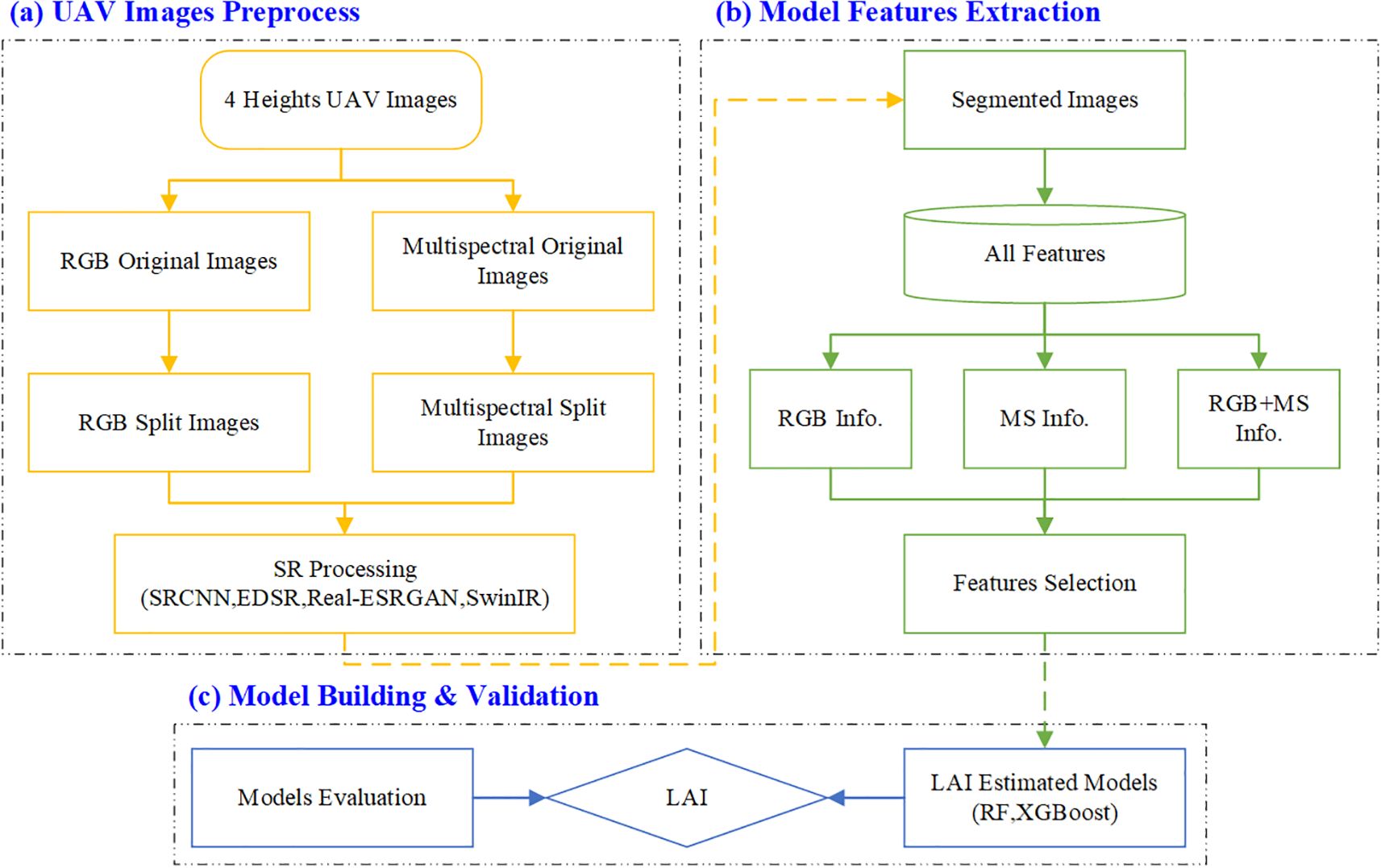

This study developed a framework for estimating the LAI using UAV-based imagery. As depicted in Figure 2, the framework involves a comprehensive workflow that includes image preprocessing using SR techniques, integrating three distinct types of input features, and employing a regression model for LAI estimation. Initially, RGB images at four different altitudes were captured using a UAV. These images underwent preprocessing, which included stitching and segmentation. The segmented images were then enhanced in resolution through four SR methods. The next phase involved extracting features from these enhanced images and determining three different combinations of input features for LAI estimation.

Figure 2. Workflow diagram of the proposed methodology. (a) UAV Images Preprocess, (b) Model Features Extraction, (c) Model Building & Validation.

2.3 Field data collection

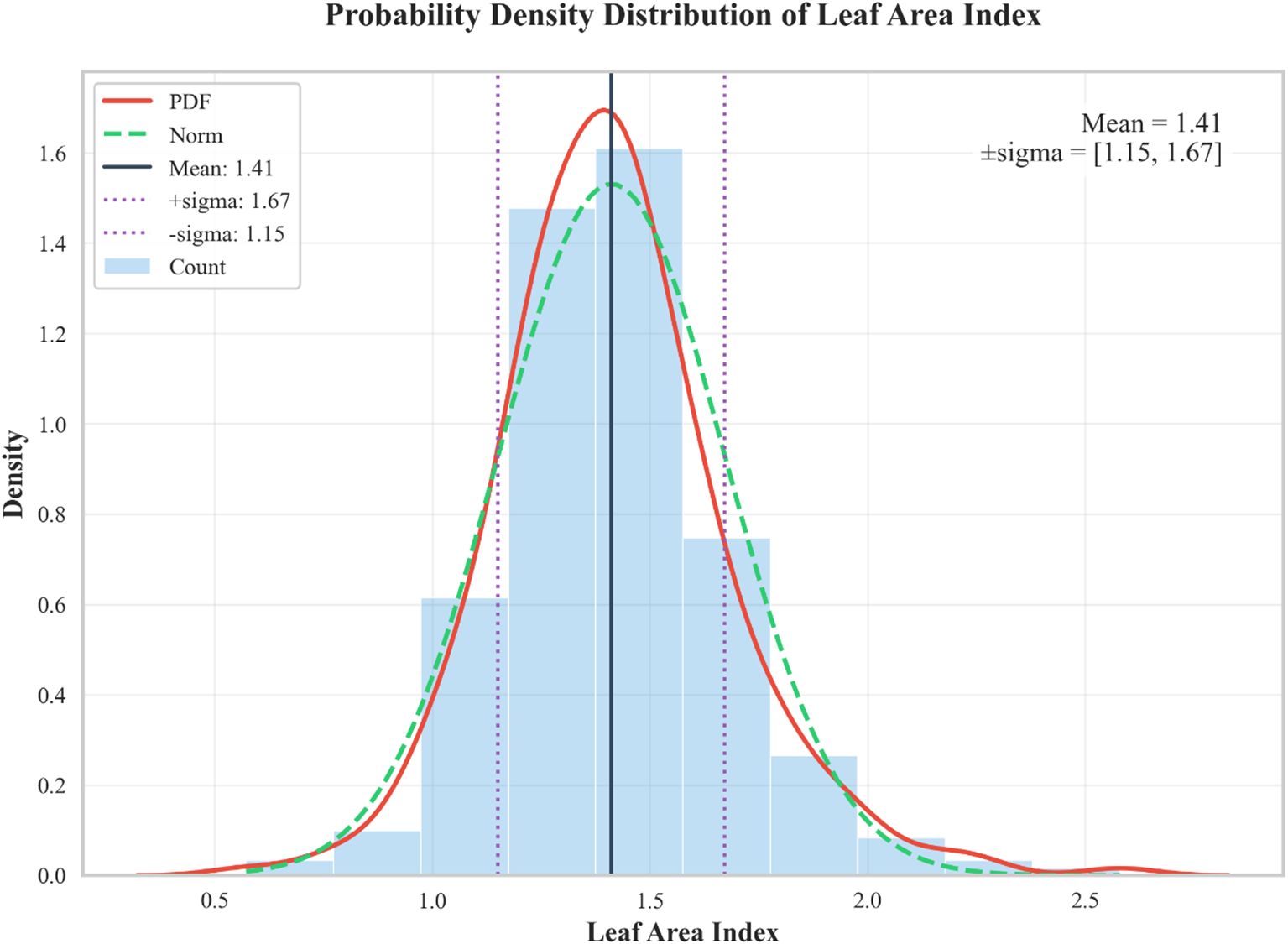

From August to September 2024, during three distinct growth stages—V6 (sixth trifoliate leaf), R1 (beginning bloom), and R3 (beginning pod)—the LAI of each soybean variety was measured using an AccuPAR LP-80 Plant Canopy Analyzer (Decagon Devices Inc., Pullman, WA, USA). Measurements were conducted between 10:00 a.m. and 1:00 p.m. local time on clear, calm days under stable sunlight conditions to ensure consistent illumination and measurement accuracy. For each of the 100 soybean varieties, the experimental plot was divided into four sampling zones, and LAI was measured once per zone to calculate the average LAI, resulting in 400 measurements per growth stage. Measurements were conducted at three different growth stages, yielding a total of 1,200 LAI measurements across all varieties, zones, and growth stages. Figure 3 shows the distribution of LAI measurements across the three periods. These measurements were performed concurrently with the UAV flights.

Figure 3. Histogram of LAI. The green dashed line represents a normal distribution fit, the red curve indicates the probability density function (PDF) fit, the purple dashed line shows data within one standard deviation, and the black solid line represents the mean value.

2.4 UAV data collection

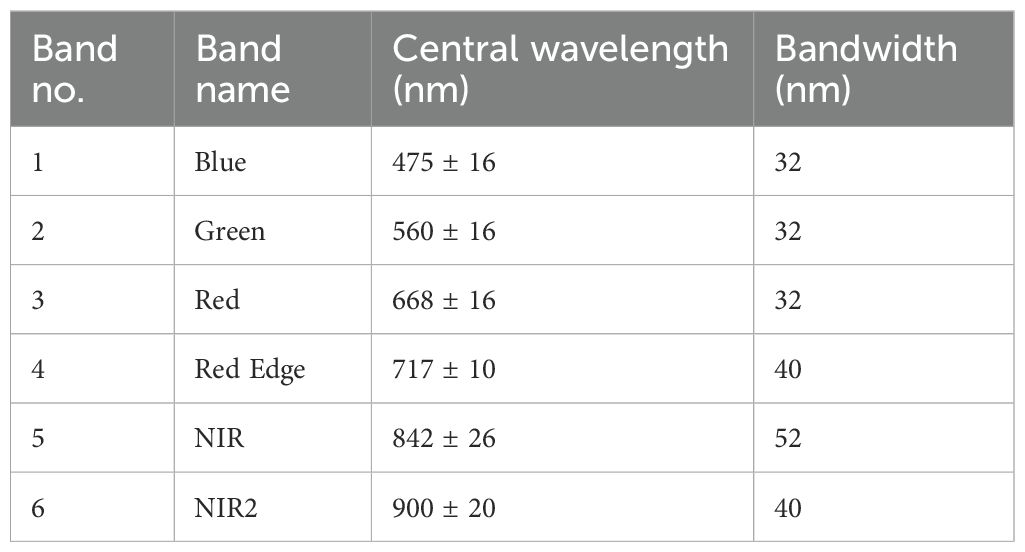

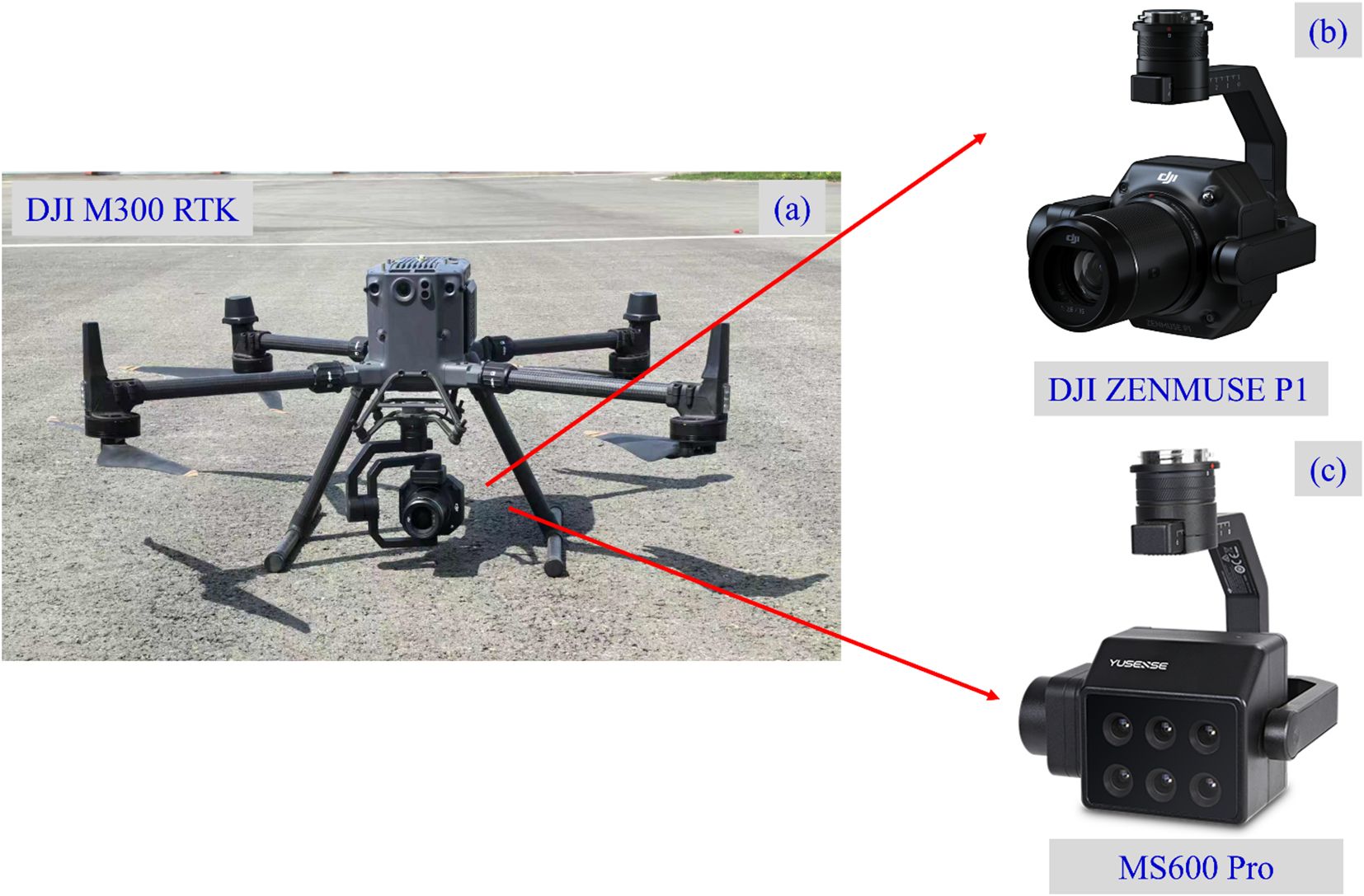

In this research, UAV data were collected using a DJI M300 drone (Shenzhen, China) equipped with a DJI ZENMUSE P1 camera and an MS600 Pro MS camera (Qingdao UVS Technology Co., Ltd), as shown in Figure 4. The MS camera features six monochromatic channels: near-infrared (NIR, 840 ± 15 nm), red edge at 750 nm (RE750, 750 ± 5 nm), red edge at 720 nm (RE720, 720 ± 5 nm), red (R, 660 ± 11 nm), green (G, 550 ± 14 nm), and blue (B, 450 ± 15 nm). The DJI M300 includes an integrated Global Navigation Satellite System (GNSS) module for recording the geolocation data of the imagery. The main specifications of the multispectral camera, including each band and its central wavelength range, are summarized in Table 1, providing a clear reference for subsequent vegetation index calculations and image analysis. Data collection flights were conducted on August 7, August 23, and September 11, 2024, between 10:00 a.m. and 1:00 p.m. local time, under low wind and cloud-free conditions. The flight altitude was set at 15 m, 30 m, 45 m, and 60 m.

Figure 4. UAV-based crop phenotyping observation platform. (a) DJI M300 RTK UAV; (b) Zenmuse P1 RGB camera; (c) MS600 Pro MS camera.

2.5 Image preprocessing

For MS images, standard reflectance calibration and radiometric correction were performed using Yusense Map software (Qingdao, China) to ensure comparability of images collected at different growth stages. The raw MS images were subsequently stitched together. To eliminate soil pixels, the Normalized Difference Vegetation Index (NDVI) for each pixel was calculated, and pixels with an NDVI value below 0.2 were identified as soil and excluded from the orthoimages.
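The soil-masking step can be sketched in a few lines of plain Python. This is a minimal illustration rather than the processing chain used in the study: the function names `ndvi` and `mask_soil` and the 2x2 reflectance tiles are hypothetical, and the sketch assumes the conventional definition NDVI = (NIR - R) / (NIR + R) applied to calibrated reflectance values. Only the 0.2 threshold comes from the text.

```python
SOIL_NDVI_THRESHOLD = 0.2  # threshold stated in the text


def ndvi(nir, red):
    """Conventional NDVI = (NIR - R) / (NIR + R); 0.0 when both bands are zero."""
    total = nir + red
    return (nir - red) / total if total != 0 else 0.0


def mask_soil(nir_band, red_band, threshold=SOIL_NDVI_THRESHOLD):
    """Return an NDVI grid in which soil pixels (NDVI < threshold) are None."""
    masked = []
    for nir_row, red_row in zip(nir_band, red_band):
        row = []
        for nir, red in zip(nir_row, red_row):
            value = ndvi(nir, red)
            row.append(value if value >= threshold else None)
        masked.append(row)
    return masked


# Hypothetical 2x2 reflectance tiles (illustrative values, not measured data).
nir_tile = [[0.50, 0.30], [0.45, 0.20]]
red_tile = [[0.05, 0.25], [0.08, 0.18]]
vegetation_ndvi = mask_soil(nir_tile, red_tile)
```

Pixels set to `None` would then be skipped when plot-level statistics are computed, so that soil background does not dilute the canopy signal.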

Following the experimental layout’s dimensions, images were batched by varietal category to produce corresponding RGB and MS images. As previously described, images were categorized based on collection altitude and time, resulting in a total of 2400 images (1200 RGB images and 1200 MS images) for model construction.

2.6 Image SR and feature extraction

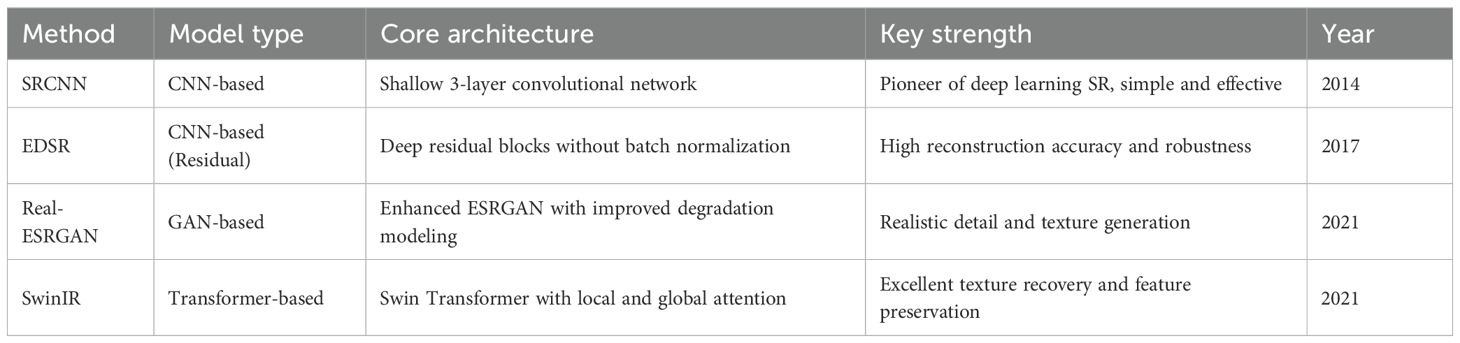

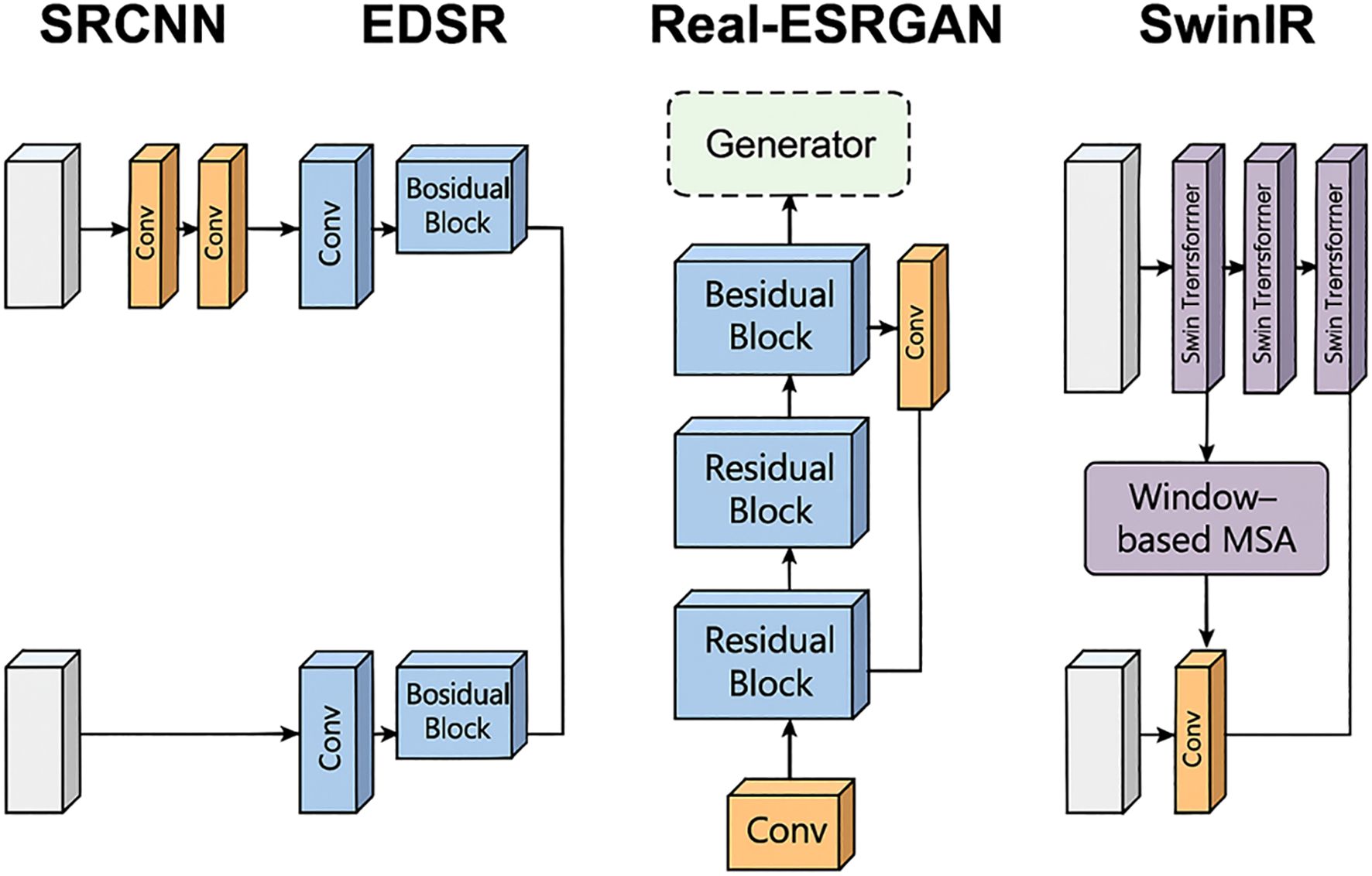

Building on the regionally segmented RGB images, this study explored four representative image SR techniques to enhance the resolution and quality of soybean leaf images: Super-Resolution Convolutional Neural Network (SRCNN), Enhanced Deep Super-Resolution Network (EDSR), Real-Enhanced Super-Resolution Generative Adversarial Network (Real-ESRGAN), and Swin Transformer for Image Restoration (SwinIR). These methods span from early convolutional neural networks (CNNs) to recent generative adversarial networks (GANs) and Transformer architectures, providing a broad representation and complementarity suited to the diversity and complexity of soybean leaf images. SRCNN serves as a baseline model with a simple structure and high computational efficiency. EDSR is noted for its superior performance in various SR tasks, particularly excelling in the restoration of image details (Bashir et al., 2021). Real-ESRGAN effectively addresses real-world image degradation issues, enhancing texture details and improving visual quality. SwinIR integrates local and global attention mechanisms, effectively capturing both local and global features of images, especially those with complex textures (Ali et al., 2023). This study introduces these four distinct architectural SR algorithms to identify the most suitable SR methods for this research context.

These four SR methods were chosen over other alternatives because they collectively represent the main categories and technical milestones in SR development—namely, the foundational CNN-based models (SRCNN, EDSR), the GAN-based generative model (Real-ESRGAN), and the Transformer-based attention model (SwinIR). This selection ensures a comprehensive coverage of methodological diversity, allowing the study to evaluate performance differences across architectures with varying capacities for detail enhancement, texture restoration, and noise suppression. Such representativeness makes them particularly suitable for assessing SR performance under the complex texture and illumination conditions of soybean leaf imagery.

This study therefore employs these four distinct SR architectures to identify the most suitable approach for high-quality soybean leaf image reconstruction and analysis. To provide a clear overview, the main characteristics of these four SR methods are summarized in Table 2.

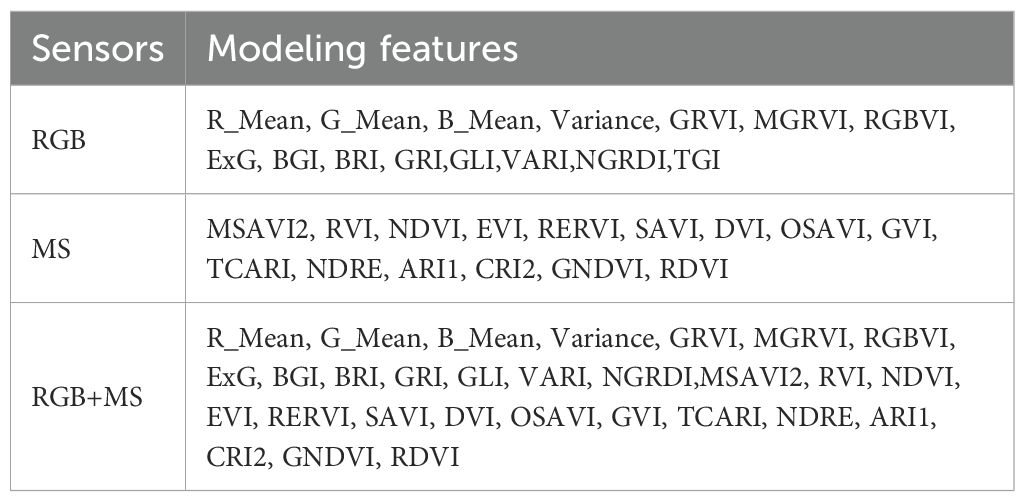

RGB images provide high-resolution spatial information, capturing the morphological features of soybean leaves such as edges, textures, and color variations. MS images, spanning multiple bands from visible light to near-infrared, offer richer spectral information. This is especially true in the near-infrared band, where higher vegetation reflectance can effectively indicate the physiological characteristics and health status of the leaves. Utilizing these sources, this study extracted 15 features from RGB image datasets and 15 features from MS images.

To accurately estimate the soybean LAI, we adopted a multi-source remote sensing data fusion method. This approach combines the morphological features from RGB images with the spectral features from MS images to create an integrated RGB+MS dataset. By leveraging the sensitivity of different bands to vegetation characteristics, this method enhances the accuracy and stability of LAI estimation. Consequently, the combined dataset features 15 morphological indicators from RGB and 15 spectral indicators from MS, totaling 30 indicators. Table 3 summarizes the features used for modeling, which were extracted from the RGB, MS, and combined RGB+MS datasets. The full names and calculation formulas of these features are provided in Appendix Table A1.

2.7 LAI regression models

In this research, two machine learning approaches—Random Forest (RF) and Extreme Gradient Boosting (XGBoost)—were applied to construct models for soybean leaf area index estimation. RF (Natekin and Knoll, 2013), a well-known ensemble algorithm, enhances the predictive power of individual decision trees by aggregating them into a strong learner. Compared with traditional single-tree models, RF reduces the risk of overfitting and provides stable regression outcomes through the collective contribution of multiple weak learners.

XGBoost, on the other hand, has gained wide attention in recent years, particularly within agricultural studies. Unlike RF, it introduces a regularization term (Ω) to penalize model complexity, which effectively controls overfitting and improves robustness when handling high-dimensional datasets. Beyond accuracy, XGBoost is also capable of efficiently managing sparse data and supports distributed parallel training, making it highly suitable for large-scale regression problems (Karthikeyan and Mishra, 2021).
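For reference, the regularization term mentioned above is commonly written in the XGBoost literature as part of an objective that adds a complexity penalty for every tree to the training loss. The symbols below ($l$, $f_k$, $K$, $T$, $w$, $\gamma$, $\lambda$) follow that common formulation and are not notation defined in this article.

```latex
\mathcal{L} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega\left(f_k\right),
\qquad
\Omega(f) = \gamma T + \frac{1}{2}\,\lambda \lVert w \rVert^{2}
```

Here $l$ is the training loss, $f_k$ is the $k$-th tree, $T$ is its number of leaves, and $w$ is its vector of leaf weights, so larger values of $\gamma$ or $\lambda$ penalize more complex trees more strongly.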

To maximize predictive performance, a systematic hyperparameter tuning procedure was adopted. Among the parameters, the learning rate was given particular importance since it determines the step size of error correction during training, directly influencing both convergence stability and final model quality. A two-stage grid search combined with 5-fold cross-validation was carried out: the initial stage identified suitable parameter ranges, followed by a refined search for optimal values. Model performance under each setting was assessed using the loss function, where lower values indicated improved prediction accuracy.
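The two-stage search with 5-fold cross-validation can be illustrated with a small, self-contained sketch. Several parts are assumptions made only for illustration: a one-variable linear model trained by gradient descent stands in for RF and XGBoost, the coarse grid values are hypothetical, and the refined range (half to double the best coarse value) is one possible choice, not the rule used in the study.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and deal them into k folds of near-equal size."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def fit_line(xs, ys, learning_rate, epochs=200):
    """Toy stand-in model: y = w * x + b fitted by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def cv_loss(xs, ys, learning_rate, k=5):
    """Mean validation MSE across k folds for one learning rate."""
    fold_losses = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        w, b = fit_line([xs[i] for i in train], [ys[i] for i in train], learning_rate)
        fold_losses.append(mean([(w * xs[i] + b - ys[i]) ** 2 for i in held_out]))
    return mean(fold_losses)


def two_stage_search(xs, ys, coarse_grid, n_fine=5):
    """Stage 1 scans a coarse grid; stage 2 refines around the coarse optimum."""
    best_coarse = min(coarse_grid, key=lambda lr: cv_loss(xs, ys, lr))
    low, high = best_coarse / 2, best_coarse * 2  # assumed refinement range
    step = (high - low) / (n_fine - 1)
    fine_grid = [low + j * step for j in range(n_fine)] + [best_coarse]
    best_fine = min(fine_grid, key=lambda lr: cv_loss(xs, ys, lr))
    return best_coarse, best_fine


# Hypothetical data: a noisy line on x in [0, 1], so all tested rates stay stable.
xs = [i / 19 for i in range(20)]
ys = [2 * x + 1 + 0.05 * (-1) ** i for i, x in enumerate(xs)]
best_coarse, best_fine = two_stage_search(xs, ys, coarse_grid=[0.001, 0.01, 0.1])
```

Because the best coarse value is kept as a candidate in the second stage, the refined result can never have a higher cross-validated loss than the coarse one.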

Given the stochastic nature of RF and XGBoost, the training and validation process was repeated 10 times for each hyperparameter configuration to account for variability due to random initialization and data sampling. The performance metrics, including loss and prediction accuracy, were averaged across these repetitions to provide robust estimates, and the standard deviations were calculated to quantify variability. This procedure ensures that the reported model performance is statistically reliable and not driven by random fluctuations in individual runs.

This rigorous optimization process notably enhanced both computational efficiency and generalization ability of the models. The joint use of grid search and cross-validation is a widely accepted practice for reducing overfitting and improving reliability in complex regression or classification tasks. For example, Adnan et al. (2022) reported significant improvements in model accuracy when employing this strategy in combination with adaptive boosting techniques.

2.8 Feature selection method

In machine learning, feature selection is the process of identifying a subset of the most valuable features from an original set for prediction tasks, aiming to eliminate redundant and irrelevant features, simplify the model structure, improve training efficiency, and enhance generalizability. Redundant features are highly correlated with other features, providing information that may already be contained elsewhere, while unrelated features have little or no significant impact on the target variable. Retaining such features not only increases model complexity but can also introduce noise, leading to overfitting and reduced performance on new data.

In this study, a model-based feature selection method was adopted to improve the predictive performance of the model and reduce computational burden. Specifically, the SelectFromModel (SFM) class provided by the scikit-learn library in Python version 3.6.13 (https://scikit-learn.org) was utilized for feature selection.

This method trained a basic estimator (such as a linear model or tree model), which evaluated the importance of each feature using its provided feature importance index (Raschka, 2018). Subsequently, based on the set threshold, features with importance higher than the threshold were selected to form a new feature subset. This approach integrates the feature selection process into model training, avoiding the need to train multiple models repeatedly, thus enhancing processing efficiency.

In this study, ‘mean’ was selected as the threshold, which means retaining features with importance scores higher than the average importance of all features. This strategy can be beneficial in removing redundant or irrelevant features. Moreover, the strategy can also reduce the risk of overfitting and improve the model’s generalizability.
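The effect of the ‘mean’ threshold can be shown with a short sketch of the thresholding rule alone. It does not call scikit-learn and is not the library's implementation: the helper `select_above_mean` is hypothetical, and the importance scores are invented for illustration (only the feature names appear in this article). The sketch keeps features at or above the mean, which is how the scikit-learn documentation describes the comparison.

```python
from statistics import mean


def select_above_mean(importances):
    """Keep features whose importance is at least the mean importance."""
    threshold = mean(importances.values())
    return [name for name, score in importances.items() if score >= threshold]


# Hypothetical importance scores, as a fitted tree model might report them.
example_importances = {
    "NDVI": 0.30,
    "ExG": 0.25,
    "VARI": 0.20,
    "G_Mean": 0.12,
    "NIR": 0.08,
    "Variance": 0.05,
}
selected = select_above_mean(example_importances)
```

With six features whose scores sum to 1, the threshold is about 0.167, so the three highest-scoring features are retained and the rest are dropped.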

By applying the SelectFromModel method, the features that contributed most to the inversion (estimation) of soybean LAI were selected from the original feature set. These features offer a more streamlined and efficient data foundation for subsequent modeling and analysis.

2.9 Evaluation index

To verify the estimation accuracy of the LAI model, 70% of the samples, together with their observed LAI values, were randomly selected for training, and the remaining 30% were used to validate the performance and accuracy of the estimation model. The coefficient of determination (R², Equation 1), root mean square error (RMSE, Equation 2), relative RMSE (rRMSE, Equation 3), and mean absolute error (MAE, Equation 4) were employed to assess the model from different aspects. They are calculated as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{1}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{2}$$

$$\mathrm{rRMSE} = \frac{\mathrm{RMSE}}{\bar{y}} \times 100\% \tag{3}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{4}$$

Here, $n$ denotes the total number of samples, $y_i$ and $\hat{y}_i$ represent the measured and estimated LAI of sample $i$, respectively, and $\bar{y}$ is the average value of the measured LAI. R² indicates the correlation between measured and estimated results. RMSE and rRMSE represent the absolute and relative error between measured and estimated results, and MAE represents the mean absolute difference between them. The lower the RMSE or rRMSE, the better the model estimation accuracy.
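The four regression metrics named above can be sketched in plain Python. The sketch assumes their conventional definitions, with rRMSE taken as RMSE divided by the mean of the measured values and expressed as a percentage. The function name `regression_metrics` and the sample LAI values are hypothetical.

```python
from math import sqrt


def regression_metrics(measured, estimated):
    """Return R2, RMSE, rRMSE (%) and MAE for paired measured/estimated values."""
    n = len(measured)
    mean_measured = sum(measured) / n
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(measured, estimated))
    ss_tot = sum((y - mean_measured) ** 2 for y in measured)
    rmse = sqrt(ss_res / n)
    return {
        "r2": 1 - ss_res / ss_tot,
        "rmse": rmse,
        "rrmse_percent": rmse / mean_measured * 100,
        "mae": sum(abs(y - y_hat) for y, y_hat in zip(measured, estimated)) / n,
    }


# Hypothetical measured and estimated LAI values for four plots.
measured_lai = [2.0, 3.0, 4.0, 5.0]
estimated_lai = [2.5, 3.0, 3.5, 5.0]
metrics = regression_metrics(measured_lai, estimated_lai)
```

Because rRMSE is normalized by the mean measured LAI, it allows errors to be compared across dates or altitudes whose LAI ranges differ, whereas RMSE and MAE stay in LAI units.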

To comprehensively assess the quality of image SR reconstruction, two widely used objective evaluation metrics were adopted: Peak Signal-to-Noise Ratio (PSNR, Equation 5) and Structural Similarity Index (SSIM, Equation 6).

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}_I^{2}}{\mathrm{MSE}}\right) \tag{5}$$

$$\mathrm{SSIM}(x, y) = \frac{\left(2\mu_x\mu_y + C_1\right)\left(2\sigma_{xy} + C_2\right)}{\left(\mu_x^{2} + \mu_y^{2} + C_1\right)\left(\sigma_x^{2} + \sigma_y^{2} + C_2\right)} \tag{6}$$

PSNR is a commonly utilized metric for assessing the quality of image reconstruction. It evaluates the differences between reconstructed images and original images based on the mean squared error (MSE). Here, $\mathrm{MAX}_I$ represents the maximum possible pixel value in an image, which for an 8-bit image is $\mathrm{MAX}_I = 255$. PSNR is measured in decibels (dB), with higher values indicating that the reconstructed image is closer to the original and therefore of better quality. SSIM is an image quality metric more aligned with human visual perception, assessing similarity between two images across three dimensions: luminance, contrast, and structure. Here, $\mu_x$ and $\mu_y$ are the mean luminance values of images $x$ and $y$, respectively, $\sigma_x^{2}$ and $\sigma_y^{2}$ are the variances, $\sigma_{xy}$ is the covariance, and $C_1$ and $C_2$ are small constants used to stabilize the denominator. The SSIM value ranges from -1 to 1, with values closer to 1 indicating greater similarity between the two images.
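Both image-quality metrics can be sketched on flattened pixel lists using only the standard library. This is a simplified illustration under stated assumptions: SSIM is computed once over the whole image (practical implementations usually average it over local windows), variances are population variances, and the constants $C_1 = (0.01 \cdot \mathrm{MAX}_I)^2$ and $C_2 = (0.03 \cdot \mathrm{MAX}_I)^2$ are the commonly used defaults rather than values reported in this article. The function names and pixel values are hypothetical.

```python
from math import log10
from statistics import fmean


def psnr(reference, reconstructed, max_value=255.0):
    """PSNR in dB between two equally sized, flattened images."""
    mse = fmean([(a - b) ** 2 for a, b in zip(reference, reconstructed)])
    if mse == 0:
        return float("inf")  # identical images
    return 10 * log10(max_value ** 2 / mse)


def global_ssim(x, y, max_value=255.0):
    """Single-window SSIM from global means, variances and covariance."""
    c1 = (0.01 * max_value) ** 2  # assumed default constant
    c2 = (0.03 * max_value) ** 2  # assumed default constant
    mu_x, mu_y = fmean(x), fmean(y)
    var_x = fmean([(a - mu_x) ** 2 for a in x])
    var_y = fmean([(b - mu_y) ** 2 for b in y])
    cov_xy = fmean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical 4-pixel reference and reconstruction (8-bit values).
reference_pixels = [0, 0, 255, 255]
reconstructed_pixels = [0, 0, 255, 245]
psnr_db = psnr(reference_pixels, reconstructed_pixels)
ssim_value = global_ssim(reference_pixels, reconstructed_pixels)
```

In this toy case a single pixel differs by 10 grey levels, giving an MSE of 25; identical inputs yield an infinite PSNR and an SSIM of 1.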

3 Results

3.1 Super resolution results

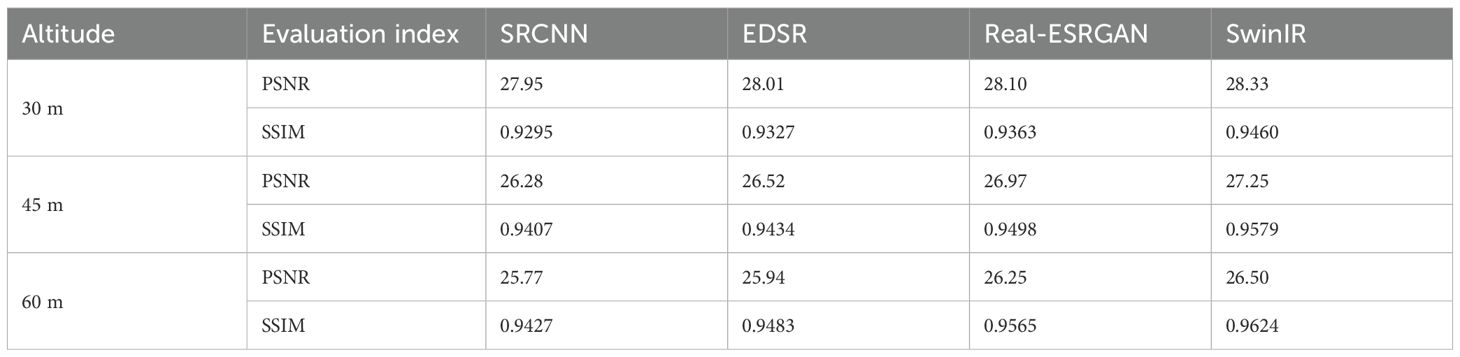

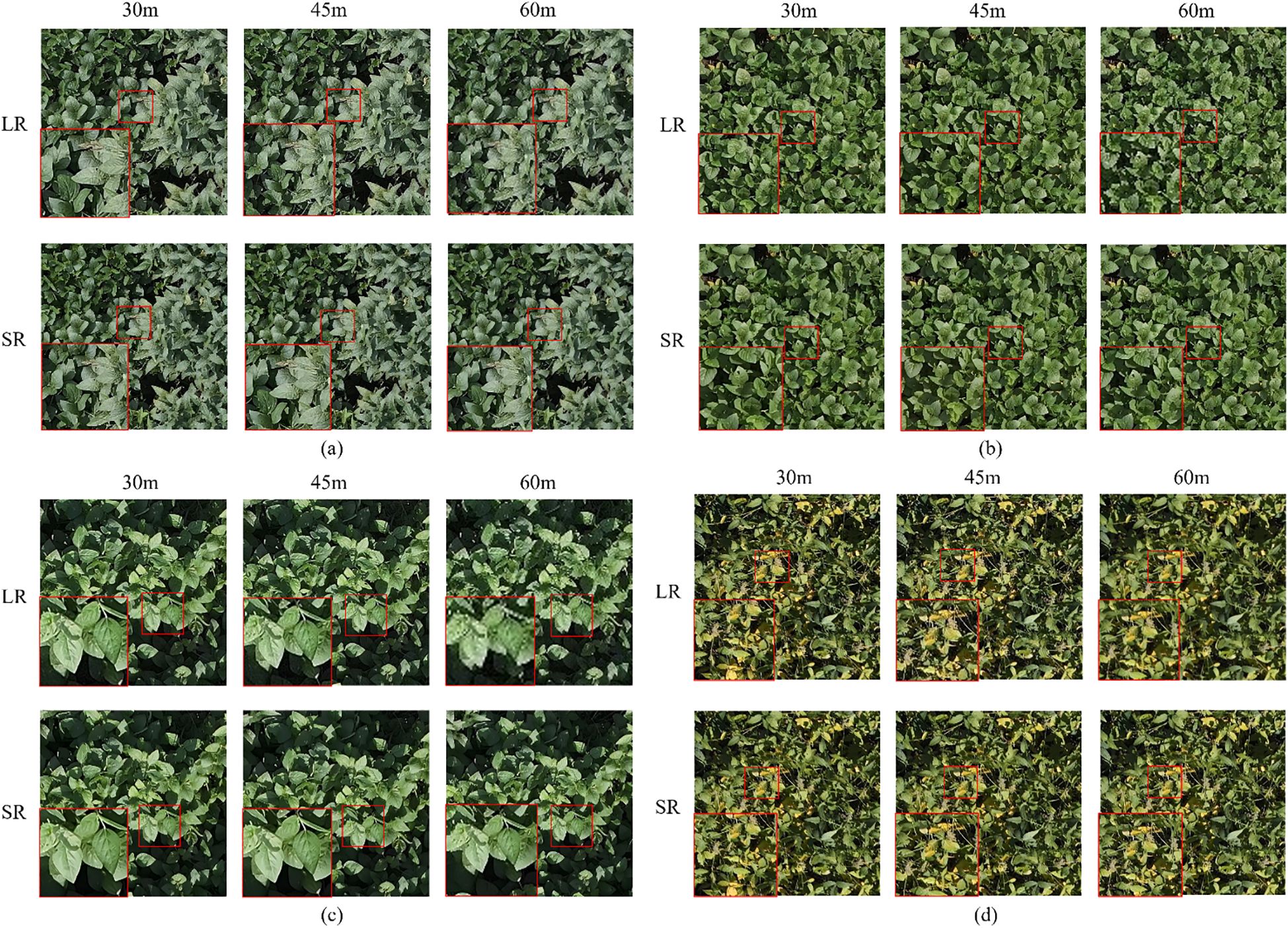

The outcomes of applying four SR techniques to enhance the resolution of RGB images are depicted in Figure 5, whereas Table 4 presents a comparative analysis of the metrics before and after the application of these SR techniques. The data indicate that the best SR effects were achieved at a 30-meter altitude, with an average PSNR of 28.09 dB, surpassing the results at 45-meter (26.76 dB) and 60-meter (26.12 dB) altitudes. This suggests that the effectiveness of SR techniques diminishes with increasing flight altitude, with minimal enhancement in image quality observed at the highest altitude of 60 m. Among the four SR methods evaluated, SwinIR demonstrated superior performance, achieving better results in both PSNR and SSIM than the other three methods. This underscores the advantages of SwinIR’s hierarchical window-based attention mechanisms in processing and restoring the edges of soybean leaves.

Figure 5. Results of resolution enhancement using SRCNN (a), EDSR (b), Real-ESRGAN (c), and SwinIR (d). Each panel presents the reconstructed soybean canopy images after applying the corresponding model. SRCNN (a) and EDSR (b) improve overall clarity and detail definition compared to the original low-resolution input. Real-ESRGAN (c), based on a generative adversarial network (GAN), effectively enhances texture realism and reduces artifacts. SwinIR (d), employing a Transformer architecture, produces visually sharp and natural results with well-preserved canopy structures. Overall, both the GAN- and SwinIR-based methods exhibit superior enhancement quality compared with the other approaches.

In general, a higher PSNR value (typically above 30 dB for high-quality natural images) indicates better reconstruction fidelity, whereas an SSIM value closer to 1.0 reflects greater structural similarity between the reconstructed and reference images. Therefore, the observed PSNR and SSIM values in this study suggest that SwinIR achieved relatively higher image fidelity and structural consistency compared with the other SR methods.

3.2 Feature selection results

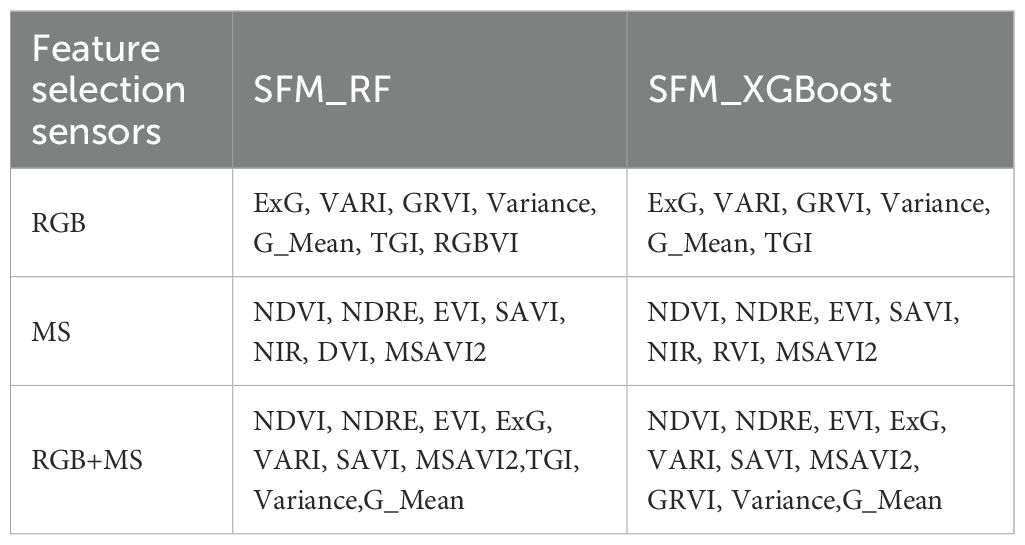

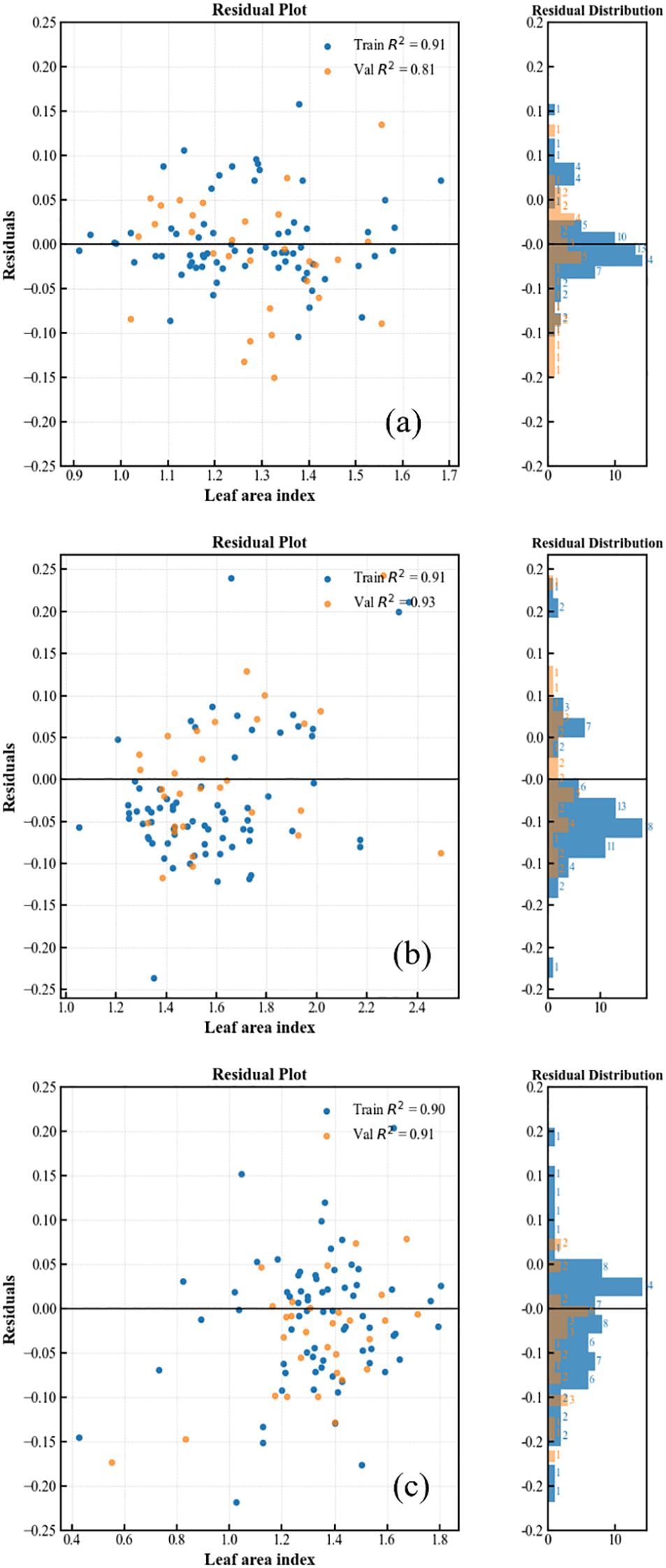

A feature dataset comprising all input features was constructed based on each cropped plot image. To mitigate the impact of inter-feature correlation, feature selection was performed using the SelectFromModel (SFM) method described in Section 2.8. Because two machine learning methods were utilized in this study, both the RF and XGBoost models were employed for feature selection. Table 5 displays the subsets of features selected by the SFM method from three types of input datasets, all chosen from images at a 15-meter altitude without SR.

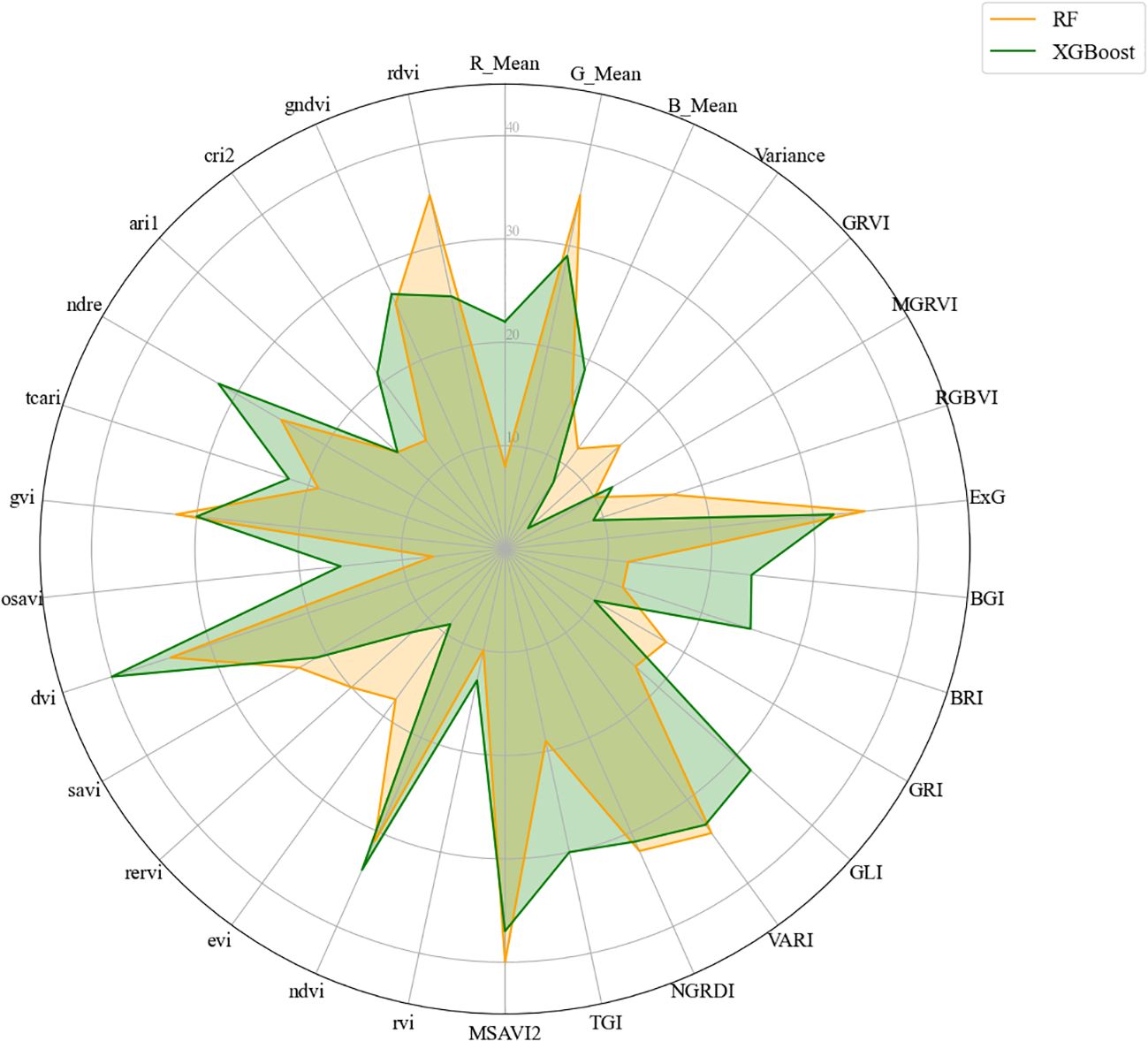

The data in Table 5 reveals that among the two SFM methods, several features such as ExG, Variance, VARI, NIR, and G_Mean were consistently selected across the three input datasets, indicating a strong correlation with the LAI. Additionally, indices such as MSAVI2 and NDVI were prioritized in the modeling workflow due to their established sensitivity to canopy structure and leaf area, which makes them particularly informative for estimating LAI. The relative contribution of each feature to the predictive performance of the RF and XGBoost models is illustrated in Figure 6, where the importance scores reflect the influence of each feature in the modeling process. Overall, it is evident that both the textural and spectral characteristics of soybean leaves are pertinent to the construction of the model.

Table 5. Feature combinations selected from the complete set of input features from three sensors, using different feature selection methods.

Figure 6. Feature importance of 30 selected features evaluated using two tree-based ensemble methods: (a) Random Forest (RF) and (b) XGBoost. The importance scores shown reflect the relative contribution of each feature to the model’s predictive performance. Differences between RF and XGBoost reflect variations in how each method assesses feature relevance.

3.3 Modeling and validation of LAI estimation

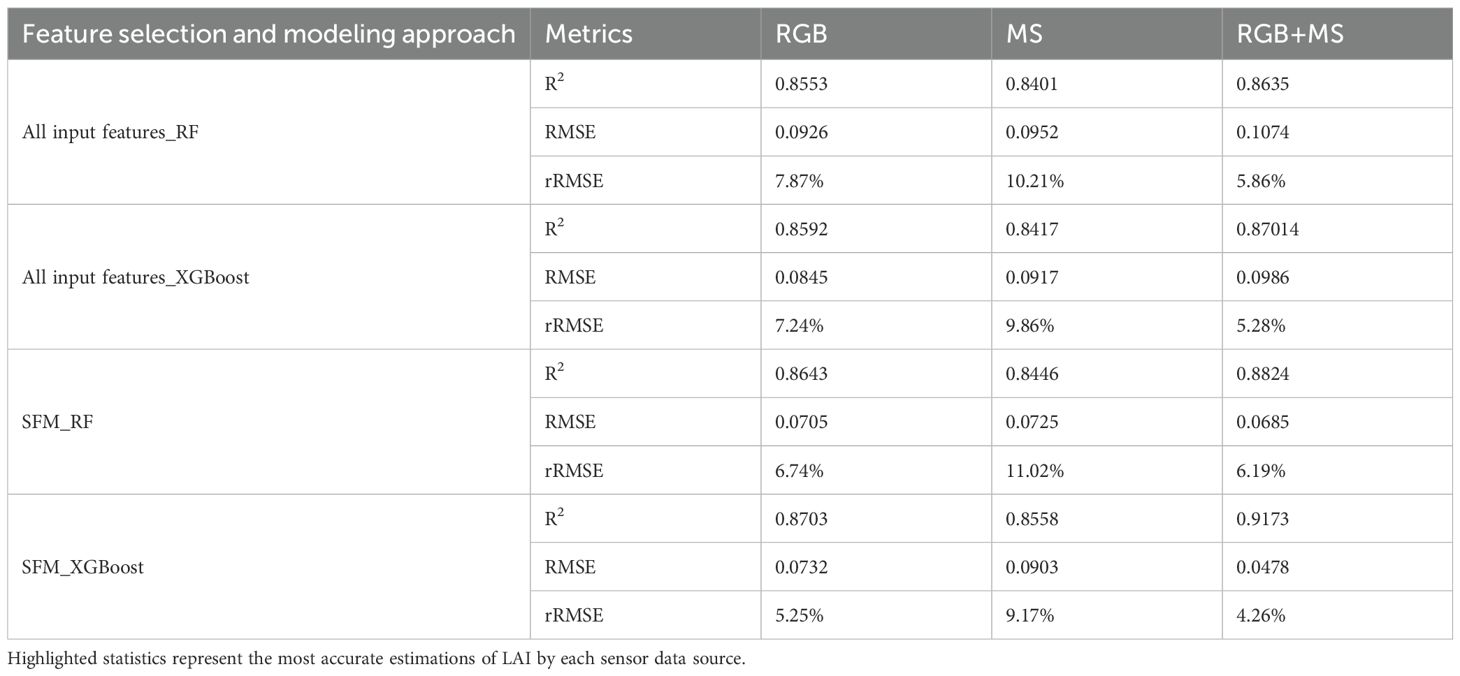

3.3.1 Evaluation of different sensors and regression models

Table 6 presents the validation statistics for various input combinations, specifically the coefficient of determination (R²), RMSE, and relative RMSE (rRMSE). All data within the table were derived from images taken at an altitude of 15 m. It is evident that among the three feature input combinations, the XGBoost model exhibited the most proficient performance in regressions related to leaf area, achieving the highest overall precision. When considering the source of input variables, the combination of RGB and MS sensors provided the most favorable outcomes. For the XGBoost model, the R² varied between 0.8703 and 0.9173, while rRMSE ranged from 4.26% to 6.17%. These metrics confirm the robustness of the models developed in this study. Concurrently, the use of XGBoost for feature selection and model construction, coupled with the fusion of multiple data sources (RGB+MS), emerged as the most precise approach.

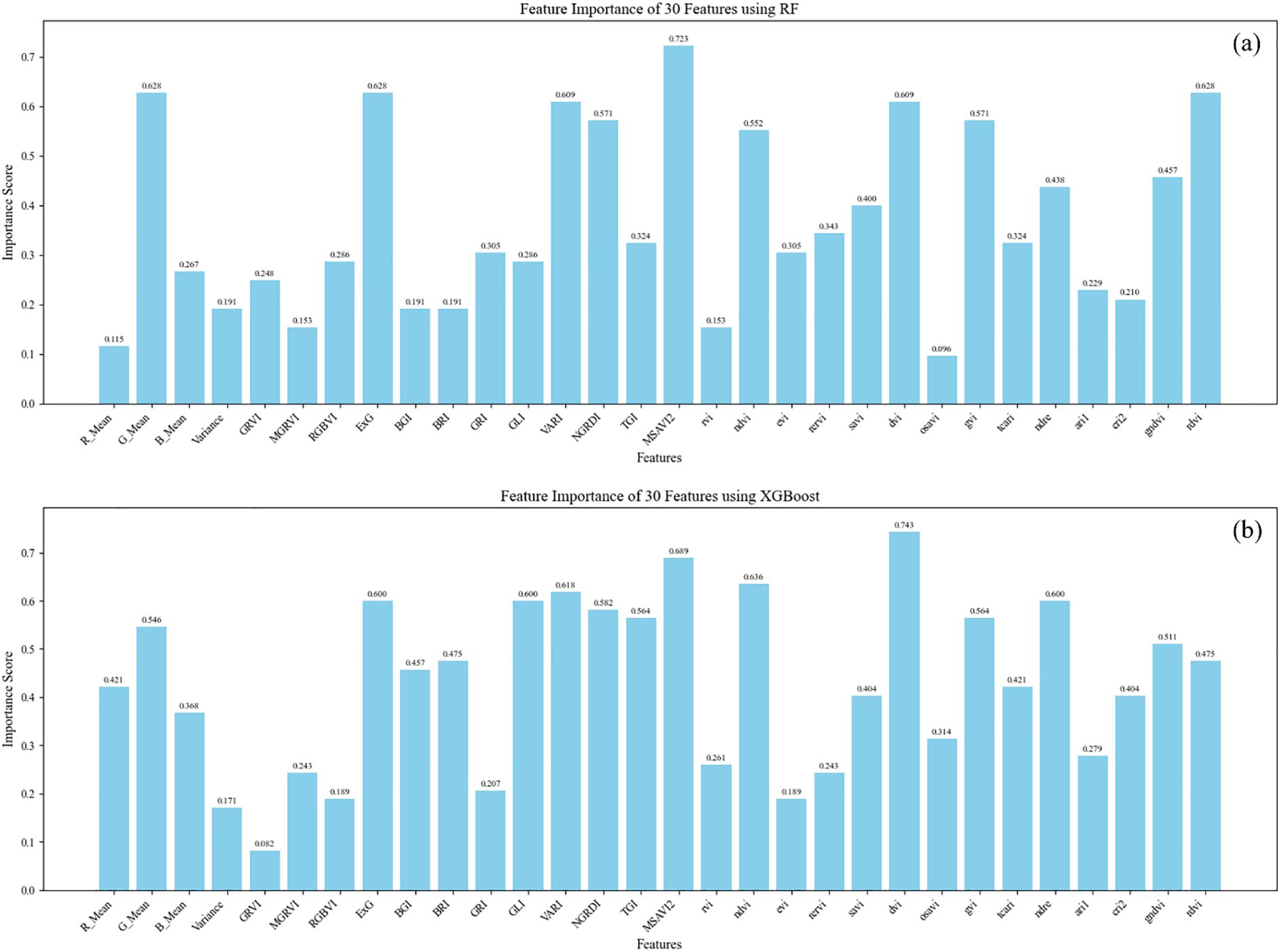

3.3.2 Performance of different LAIs on model accuracy

In this section, the performance of the XGBoost model combined with multisource information fusion (RGB+MS) was evaluated across different periods for leaf area estimation. Figure 7 displays the final evaluation results. In each panel, the scatter plot on the left plots the estimated leaf area (x-axis) against the residuals, which are the differences between the estimated and true leaf areas (y-axis). On the right, a histogram illustrates the distribution of these residuals. The analysis indicates that the model adapts well to leaf area estimation, with absolute residuals less than 0.25, and the residuals across three dates approximating a normal distribution.

Figure 7. Residual distribution of the XGBoost model for RGB sensor (a), MS sensor (b), and RGB+MS sensor (c). Train: Training dataset. Val: Validation dataset.

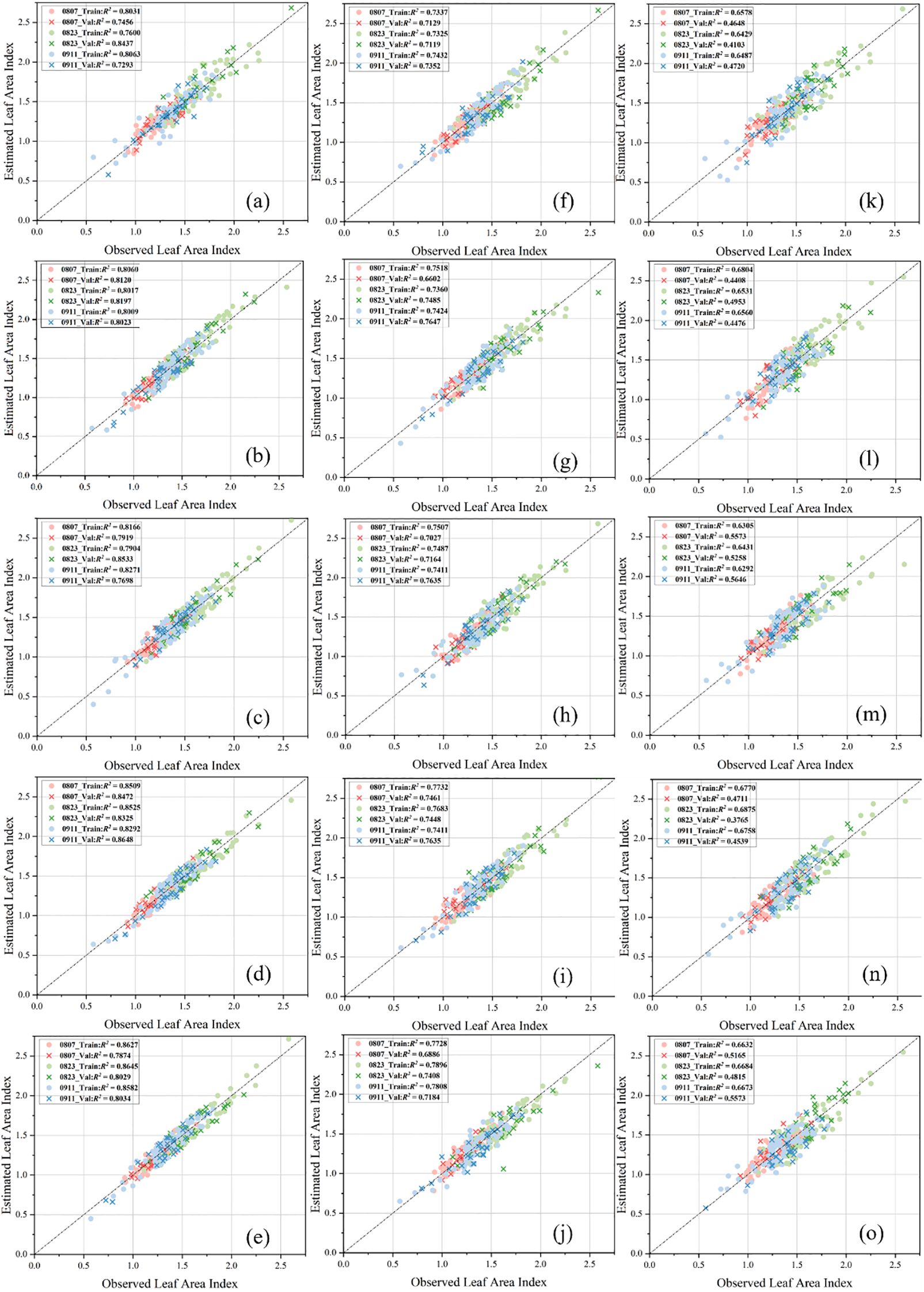

3.3.3 Evaluation of various SR techniques

This study employed four distinct SR methods (SRCNN, EDSR, Real-ESRGAN, and SwinIR) to assess their efficacy in estimating the LAI within a regression model. The original altitudes evaluated were 30 m, 45 m, and 60 m. For each altitude, the images were enhanced using the aforementioned SR techniques, and the input features were kept identical to those used at the baseline altitude of 15 m. Figure 8 illustrates the relationship between the measured LAI and the estimated LAI at an altitude of 30 m, following optimization with the four algorithms. It was observed that the introduction of SR techniques significantly enhanced the overall precision of the model. Specifically, the SRCNN and EDSR methods showed improvements in their R² values compared to the original 30-meter images, although the increases were not substantial. Conversely, the Real-ESRGAN and SwinIR methods demonstrated a noticeable rise from an R² of approximately 0.80 to around 0.86. Figure 8 also depicts the relationships between the LAI values at an altitude of 45 m after optimization with the four algorithms. At this altitude, the application of SR methods continued to positively influence the model’s precision. The performance enhancements with SRCNN and EDSR were similar to those at 30 m, whereas the Real-ESRGAN and SwinIR methods did not show as significant an increase as seen in the 30-meter scenario, indicating a diminishing return in model precision enhancement. Furthermore, Figure 8 displays the relationship between the LAI values at 60 m following the application of the four algorithms. At this altitude, none of the SR methods demonstrated a noticeable change in model precision compared to the unprocessed data, suggesting that SR techniques did not contribute to precision enhancement under these conditions.

Figure 8. Relationship between the estimated and measured LAI across three different altitudes and four SR methods within both training and validation datasets. At 30-meter altitude: (a) without SR, (b) using SRCNN, (c) using EDSR, (d) using Real-ESRGAN, (e) using SwinIR. At 45-meter altitude: (f) without SR, (g) using SRCNN, (h) using EDSR, (i) using Real-ESRGAN, (j) using SwinIR. At 60-meter altitude: (k) without SR, (l) using SRCNN, (m) using EDSR, (n) using Real-ESRGAN, (o) using SwinIR. The color coding indicates the dates of LAI estimation: red for August 7, green for August 23, and blue for September 11. The data points in the figures are differentiated into training and validation datasets, as indicated by distinct shapes.

4 Discussion

4.1 Evaluation metrics for SR algorithms

This study employs the PSNR and SSIM indices to evaluate the SR effect. Despite their widespread use in image quality assessment, PSNR and SSIM have several limitations in agricultural applications. They struggle to reflect the semantic fidelity of key agricultural features within images, respond weakly to changes in critical regions, and are highly sensitive to environmental variations (Hore and Ziou, 2010; Nigam and Jain, 2020). Therefore, in agricultural image processing, a comprehensive evaluation should incorporate perceptual indices or task-related metrics. Accordingly, this study also evaluates the effect of SR through the accuracy of the final LAI estimation.
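As an illustration of the two image-quality indices discussed above, the following is a minimal sketch rather than the evaluation code used in this study. The function names are hypothetical, an 8-bit intensity range is assumed, and `global_ssim` is a deliberate simplification: it treats the whole image as a single window, whereas the standard SSIM averages the same expression over local windows.

```python
import numpy as np


def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two images of equal shape."""
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstructed, dtype=np.float64)
    if ref.shape != rec.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - rec) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


def global_ssim(reference, reconstructed, max_value=255.0):
    """Single-window SSIM over the whole image (simplified, see lead-in)."""
    x = np.asarray(reference, dtype=np.float64)
    y = np.asarray(reconstructed, dtype=np.float64)
    # Stabilizing constants with the conventional K1 = 0.01, K2 = 0.03.
    c1 = (0.01 * max_value) ** 2
    c2 = (0.03 * max_value) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Both functions compare pixel statistics only, which is why neither can indicate whether LAI-relevant texture has been preserved; that has to be judged from the downstream estimation accuracy.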

4.2 Selection of data features for LAI evaluation

In Table 6, the model performance was evaluated using the coefficient of determination (R²), RMSE, and relative RMSE (rRMSE). These three indicators were selected because they collectively assess both the accuracy and relative deviation of model predictions, which are essential for comparing different feature combinations and model configurations. In contrast, the mean absolute error (MAE) was reserved for analyzing LAI estimation performance across different growth stages (Section 4.4), where absolute differences provide more intuitive insights into temporal variations. MAE is often preferred in such analyses because it expresses the average absolute error in the same units as the original data, allowing more straightforward interpretation of deviations over time (Chai and Draxler, 2014).
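The four indicators named above can be computed as in the following minimal sketch. The function name is hypothetical, and rRMSE is assumed here to be RMSE divided by the mean of the measured values, expressed as a percentage; the exact normalization used for Table 6 may differ.

```python
import numpy as np


def regression_metrics(measured, estimated):
    """Return R2, RMSE, rRMSE (%) and MAE for paired LAI values."""
    y = np.asarray(measured, dtype=float)
    y_hat = np.asarray(estimated, dtype=float)
    residuals = y - y_hat
    ss_res = np.sum(residuals ** 2)           # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)      # total sum of squares
    rmse = np.sqrt(np.mean(residuals ** 2))
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": rmse,
        # Assumption: normalized by the mean measured value.
        "rrmse_percent": 100.0 * rmse / y.mean(),
        "mae": np.mean(np.abs(residuals)),
    }
```

RMSE squares the residuals before averaging and therefore weights large errors more heavily than MAE, which is one reason the two are reported for different purposes.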

These results underscore the importance of high spatial resolution in revealing more details and enhancing the accuracy of feature data, thereby confirming the significant role of SR techniques in this study. Previous studies have shown that the fusion of information from different sensors can significantly enhance crop growth monitoring and yield prediction. For example, incorporating both RGB and multispectral (MS) features leads to more accurate estimation of rice LAI (Yang et al., 2021).

Although Table 6 indicates that the results using RGB data alone are better than those using MS data alone, MS data remains of great importance. The introduction of MS provides an additional dimension of information for estimating leaf age from a spectroscopic perspective. MS imagery can detect fine spectral variations related to distinct physiological components of leaves more effectively than RGB data, thanks to its narrowband sensitivity (Reddy and Pawar, 2020). MS information offers critical insights for tracking physiological, biochemical, and structural dynamics across crop growth stages (Zhao et al., 2023). Leaf spectral reflectance is influenced by factors such as chlorophyll concentration, equivalent water thickness, and leaf inclination angle, which vary notably over time (Zeng et al., 2022). As leaves mature, significant changes also occur in water content, pigment levels, and other chemical constituents (Sims and Gamon, 2002). These changes are effectively captured through spectral imaging and feature extraction, reflecting trends over time.

In addition, leaf spectral characteristics play a crucial role in estimating LAI, as they capture both biochemical and structural variations of the canopy. Spectral reflectance in the visible region, particularly in the red and green bands, is primarily influenced by pigment content. In contrast, reflectance in the near-infrared (NIR) region is affected by leaf internal structure and canopy density (Haboudane et al., 2004). A higher LAI typically corresponds to stronger absorption in the visible range and higher reflectance in the NIR range, reflecting the integrated effects of canopy structure and photosynthetic activity.

Therefore, incorporating spectral information from multiple sensors provides a more comprehensive representation of canopy characteristics. In this study, the multi-source data fusion approach (RGB + MS) enabled the joint use of spectral and structural features, effectively improving LAI estimation accuracy. This emphasizes the importance of combining spectral indices with textural and canopy-structural features in the inversion process, demonstrating the advantage of multi-source fusion for capturing LAI-related variability (Delegido et al., 2011).

The study investigated the impact of input image features on model performance by incrementally adding modeling features. As shown in Figure 9, in both the RF and XGBoost models, MSAVI2 and NDVI are the most influential features, underscoring their pivotal roles in predicting target variables. These vegetation indices collectively highlight the importance of spectral features in estimating LAI.
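For reference, the two most influential indices can be computed from band reflectance as sketched below. The formulas are the commonly used definitions of NDVI and MSAVI2; the function names are hypothetical, and the inputs are assumed to be red and NIR reflectance scaled to 0–1.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    return (nir - red) / (nir + red)


def msavi2(nir, red):
    """Second Modified Soil-Adjusted Vegetation Index."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    term = 2.0 * nir + 1.0
    # The square-root term provides the self-adjusting soil correction.
    return (term - np.sqrt(term ** 2 - 8.0 * (nir - red))) / 2.0
```

Both functions accept scalars or whole reflectance rasters, so a plot-level feature can be obtained by averaging the returned array over the plot mask.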

4.3 Analysis of SR algorithms

The preceding analysis, summarized in Table 6, indicates that the combination of the XGBoost model with multi-source information fusion (RGB + MS) achieves the highest precision. Therefore, the subsequent analyses in this study are based on this combination. SR techniques were then introduced during the data preprocessing phase. To investigate the enhancement effect of SR methods, flights were conducted at four altitudes: 15 m, 30 m, 45 m, and 60 m, corresponding to spatial resolutions of 0.1875 cm, 0.375 cm, 0.5625 cm, and 0.75 cm, respectively. The results show that SR methods positively impact model precision. Among the four methods, Real-ESRGAN and SwinIR performed better than SRCNN and EDSR. This advantage is attributed to the intrinsic characteristics of agricultural images, such as fine textures (e.g., leaf details and crop textures) and repetitive leaf structures, which are prevalent in the RGB images used in this study.
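The resolutions listed above scale linearly with altitude, as expected for a fixed camera. The short sketch below reproduces that arithmetic; the names are hypothetical, and the upscaling factor is an inference from the listed resolutions (the factor that would restore the 15 m pixel size), not a setting reported in this section.

```python
BASELINE_ALTITUDE_M = 15.0
BASELINE_GSD_CM = 0.1875  # spatial resolution reported at 15 m


def ground_sample_distance_cm(altitude_m):
    """Spatial resolution at a given altitude, assuming linear scaling."""
    return BASELINE_GSD_CM * altitude_m / BASELINE_ALTITUDE_M


def upscale_factor_to_baseline(altitude_m):
    """Upscaling factor that would restore the 15 m pixel size."""
    return altitude_m / BASELINE_ALTITUDE_M


for altitude in (15, 30, 45, 60):
    gsd = ground_sample_distance_cm(altitude)
    factor = upscale_factor_to_baseline(altitude)
    print(f"{altitude} m: {gsd:.4f} cm, x{factor:g}")
```

Under this assumption, images from 30 m, 45 m, and 60 m would need roughly 2×, 3×, and 4× reconstruction, which is consistent with SR becoming harder as altitude increases.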

The application of CNNs to image SR was initiated by Dong et al. with SRCNN, a three-layer, end-to-end convolutional network (Dong et al., 2015). This architecture outperformed traditional methods based on interpolation or sparse representation. As deep convolutional networks for SR evolved, EDSR, proposed in 2017, incorporated residual modules and deepened the network architecture to further improve SR performance (Lim et al., 2017). Within a CNN, a single convolutional kernel observes only a small local portion of the input image, and the receptive field expands slowly even when multiple convolutional layers are stacked. For a pixel to perceive information from a distant location, that information must traverse numerous layers, which can attenuate or distort it. Furthermore, downsampling operations such as pooling enlarge the receptive field but often cause loss of detail and texture degradation, making them unsuitable for restoring delicate structures in soybean RGB images. Consequently, purely convolutional networks are less well suited to SR of soybean leaf images.
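The slow growth of the receptive field can be made concrete with a small calculation. The sketch below assumes stride-1 convolutions without dilation or pooling, for which each layer with kernel size k widens the receptive field by k − 1 pixels. The kernel sizes 9, 1, and 5 are the commonly cited SRCNN configuration and are an assumption here, since this section does not list them.

```python
def receptive_field(kernel_sizes):
    """Receptive field (pixels per side) of stacked stride-1 convolutions."""
    field = 1
    for kernel in kernel_sizes:
        field += kernel - 1  # each layer adds (kernel - 1) pixels
    return field


# Assumed SRCNN configuration (9-1-5 kernels).
srcnn_field = receptive_field([9, 1, 5])

# Ten stacked 3x3 layers, as an example of a deeper stack.
deep_stack_field = receptive_field([3] * 10)
```

The growth is only linear in depth: ten 3×3 layers still cover just 21 pixels per side, which illustrates why distant leaf structures are difficult for purely local convolutions to relate.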

Real-ESRGAN, introduced in 2021, builds upon ESRGAN by adding a more complex GAN structure and a training data degradation model that supports more realistic low-quality inputs (Wang et al., 2021). SwinIR, also from 2021, is based on the Swin Transformer, a shifted-window Transformer that is well suited to modeling long-distance dependencies and distant texture correlations in images, which is particularly important in crop imaging (e.g., the directionality of leaf veins and textures) (Liang et al., 2021). Figure 10 illustrates the structural differences among the four network architectures. SRCNN and EDSR rely solely on local convolution to interpret images, whereas Real-ESRGAN introduces a GAN structure that establishes an adversarial process between the generator and the discriminator. This process seeks not only numerical closeness to the reference but also output that the discriminator cannot distinguish from real images, thereby generating more natural images with high-frequency details (Zhang et al., 2023). SwinIR employs attention within local windows and a shifted-window mechanism, allowing regional modeling while also capturing information from distant areas. Overall, Real-ESRGAN offers advantages in realistic degradation modeling and detail enhancement, while SwinIR offers advantages in feature modeling capability and distant texture reconstruction. Both methods surpass the earlier CNN approaches in visual perception quality and practical results, which is consistent with the finding of this study that models optimized with Real-ESRGAN and SwinIR are more precise than those using SRCNN or EDSR.
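The window mechanism can be sketched in a few lines. The following is an illustration of window partitioning with a cyclic shift only, not the SwinIR implementation: the attention computation and the masking of wrapped-around pixels are omitted, and the function names are hypothetical.

```python
import numpy as np


def window_partition(feature_map, window_size):
    """Split an (H, W) map into non-overlapping square windows."""
    height, width = feature_map.shape
    if height % window_size or width % window_size:
        raise ValueError("map size must be a multiple of window_size")
    blocks = feature_map.reshape(
        height // window_size, window_size,
        width // window_size, window_size,
    )
    # Reorder to (window row, window column, y, x), then flatten windows.
    return blocks.transpose(0, 2, 1, 3).reshape(-1, window_size, window_size)


def shifted_window_partition(feature_map, window_size):
    """Cyclically shift by half a window, then partition."""
    shift = window_size // 2
    shifted = np.roll(feature_map, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)


grid = np.arange(64).reshape(8, 8)
regular = window_partition(grid, 4)          # 4 windows of 4 x 4
shifted = shifted_window_partition(grid, 4)  # windows straddle old borders
```

In the shifted partition, the first window covers rows and columns 2 to 5 of the original grid, so pixels that belonged to four different windows now share one. Alternating the two partitions across layers is what lets information propagate between windows.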

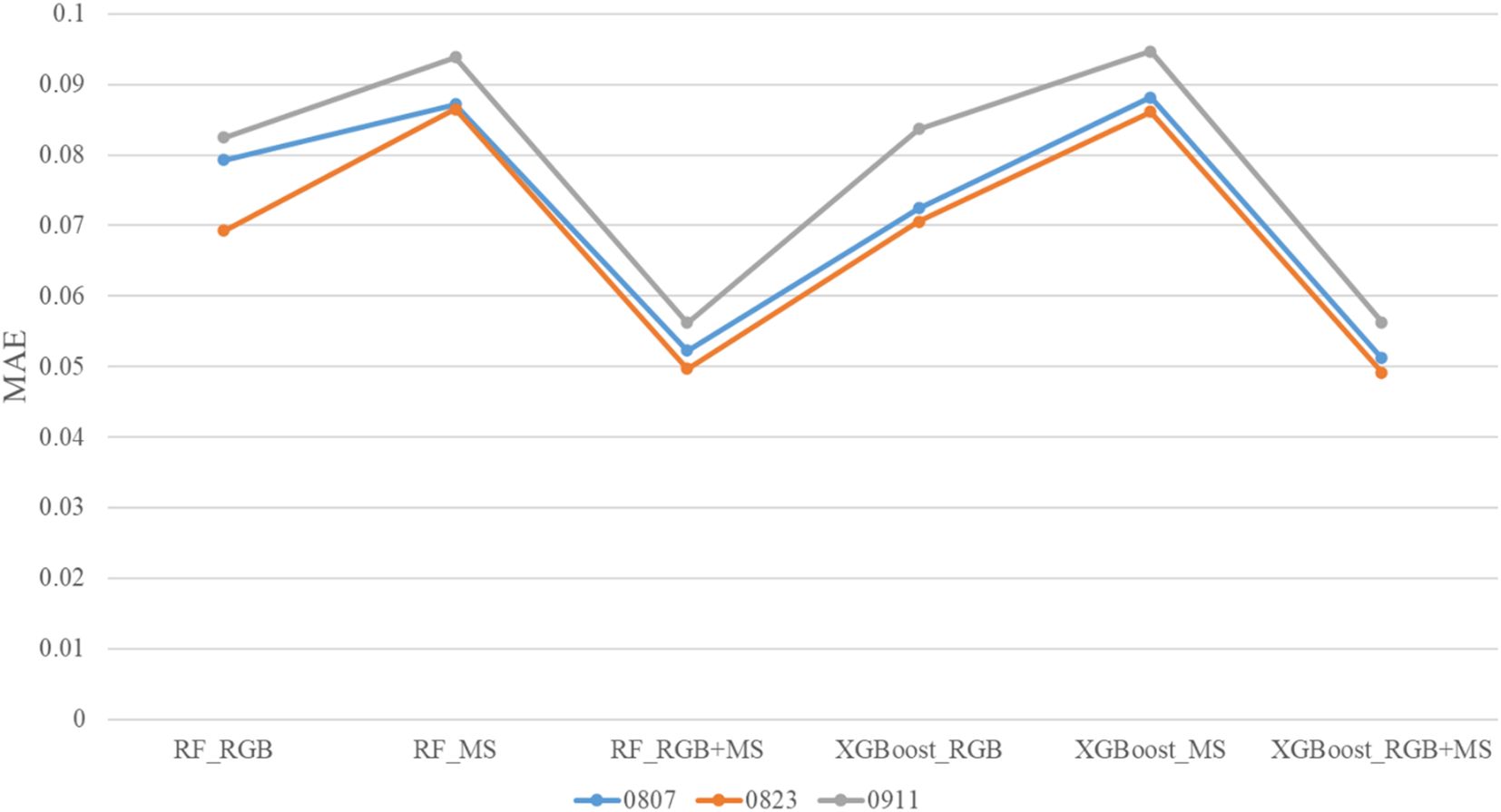

4.4 Comparative analysis of soybean LAI across different time periods

To examine the influence of growth stage on the accuracy of the estimation models, Figure 11 illustrates the distribution of MAE for LAI estimates over three distinct time periods. Statistical analysis of the results revealed that the estimation model reflected LAI more accurately during August, whereas estimation precision noticeably decreased during the late growth stage in September. One plausible explanation for this decline is the reduced sensitivity of vegetation indices, such as NDVI and EVI, to LAI. As the growing season progresses, leaf senescence and thinning occur, so that changes in NDVI and EVI become minimal despite a reduction in LAI, resulting in inaccurate model estimations. Leaf discoloration and the concomitant decrease in chlorophyll content cause vegetation indices to reflect not only leaf area but also physiological aging. With reduced vegetation cover, more of the ground surface (including bare soil and dry grass) is exposed, mixing soil signals with those received by the sensors. Consequently, vegetation indices may decline while substantial leaf area remains, causing the model to overestimate the decrease in LAI. Moreover, spectral information primarily originating from leaves reduces the MAE of MS data used in LAI estimation.
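A per-date breakdown of this kind can be obtained by grouping absolute errors by acquisition date, as in the minimal sketch below. The function name and the date labels are hypothetical, and no values from this study are reproduced.

```python
from collections import defaultdict


def mae_by_date(measured, estimated, dates):
    """Mean absolute error of LAI estimates, grouped by acquisition date."""
    absolute_errors = defaultdict(list)
    for observed, predicted, date in zip(measured, estimated, dates):
        absolute_errors[date].append(abs(observed - predicted))
    return {
        date: sum(errors) / len(errors)
        for date, errors in absolute_errors.items()
    }
```

Because MAE is expressed in LAI units, the resulting values can be compared directly across dates without normalizing by the mean LAI of each period.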

To mitigate this limitation, future studies could incorporate vegetation indices that are more sensitive to senescence and pigment variation, such as the Red Edge Chlorophyll Index (CIred-edge) or the Normalized Difference Senescence Index (NDSI), which may enhance model robustness during late growth stages. In addition, although this study involved multiple soybean varieties, the current model’s generalizability across different genotypes, growth environments, and phenological stages remains to be further validated. Future research should therefore consider multi-environment and multi-temporal datasets to improve the universality and adaptability of the proposed model.
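As an example of such an index, the red-edge chlorophyll index is commonly computed as the ratio of NIR to red-edge reflectance minus one. The sketch below assumes that common definition; the function name is hypothetical, and the band choice would depend on the sensor. No formula is given for NDSI because its band definition is not stated in this section.

```python
import numpy as np


def chlorophyll_index_red_edge(nir, red_edge):
    """Red-edge chlorophyll index: NIR / red-edge reflectance minus one."""
    nir = np.asarray(nir, dtype=float)
    red_edge = np.asarray(red_edge, dtype=float)
    return nir / red_edge - 1.0
```

Because the red-edge band lies on the steep transition between red absorption and NIR reflectance, this ratio tends to keep responding to pigment loss after NDVI has saturated, which is the property of interest for late-season plots.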

4.5 Limitations and future prospects

This study employed multi-source remote sensing imagery to estimate the LAI of soybean crops. The integration of SR techniques during the data preprocessing phase yielded favorable outcomes. However, the models’ ability to account for morphological and phenological variations among soybean crops at different growth stages and across varieties was not fully validated, which may limit the generalizability of the estimation models. Future research should incorporate multi-temporal and multi-varietal datasets to better capture these variations, thereby enhancing the model’s robustness and transferability under diverse field conditions. Compared to traditional destructive methods for collecting soybean LAI data, UAVs equipped with multiple sensors can rapidly and efficiently gather extensive crop information. This approach not only saves considerable labor and costs but also achieves higher data accuracy. Although the study covered several soybean varieties with identical sowing times, noticeable differences in growth conditions were observed. The methods developed here demonstrated strong adaptability in estimating LAI across different soybean varieties, indicating significant practical value and providing robust support for researchers.

The SR technique introduced at the data preprocessing stage was designed to maintain data accuracy despite higher UAV flying altitudes. Within a specific altitude range, the SR method significantly enhanced model precision. After SR, the resolution of RGB images acquired at 30 m was found to be comparable to that of images acquired at the original flying altitude of 15 m. This highlights the considerable potential of deep learning models in agricultural monitoring. In future studies, additional modules could be integrated to further optimize the SR model, improving its ability to handle fine textures and repetitive leaf structures in soybean imagery. Such advancements could inspire new approaches to enhance UAV data collection efficiency and model generalization across phenological stages and varieties.

5 Conclusion

This study aimed to estimate the LAI of soybeans using UAVs equipped with high spatial resolution RGB and MS imagery. We employed appropriate feature selection methods to enhance the efficiency of the modeling process. The study identified the most precise combinations of modeling techniques and datasets and subsequently developed a high-precision model for estimating the LAI.

Additionally, SR techniques were introduced during the data preprocessing stage to enhance the RGB image data before its incorporation into the model, resulting in higher precision outcomes. The key findings of this research are as follows:

1. The combination of features from both RGB and MS imagery was superior to using either type of data alone. Features derived from the MS data significantly enhanced model precision.

2. The use of feature selection methods improved the operational efficiency of the model. Among the models constructed in this study, namely Random Forest (RF) and XGBoost, the latter proved more effective for both feature selection and model construction.

3. The application of SR methods during data preprocessing improved model precision. Specifically, data optimized using the GAN-based Real-ESRGAN method and the Transformer-based SwinIR method yielded better results than those processed by CNN-based methods such as SRCNN and EDSR.

The results of this research offer new insights into efficient UAV crop monitoring. By leveraging the enhancement capabilities of SR technology on UAV RGB images, UAVs can potentially operate at higher altitudes while maintaining high model precision. Combined with machine learning techniques, this provides an efficient method for monitoring soybean growth and managing agricultural fields.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Author contributions

ZZ: Writing – review & editing. HY: Writing – original draft, Writing – review & editing. DZ: Writing – review & editing. ZJ: Writing – review & editing. XZ: Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by the National Key Laboratory of Smart Farm Technologies and Systems, Key R&D Program Project of Heilongjiang Province of China under Grant No. JD2023GJ01-13, the Natural Science Foundation of Heilongjiang Province, China under Grant No. ZL2024C004, the Programs for Science and Technology Development of Heilongjiang Province of China under Grant No. 2024ZX01A07, and the Key R&D Program of Heilongjiang Province of China under Grant No. 2022ZX01A23.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.


Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2025.1700660/full#supplementary-material

References

Adnan, M., Alarood, A. A. S., Uddin, M. I., and Rehman, I. U. (2022). Utilizing grid search cross validation with adaptive boosting for augmenting performance of machine learning models. PeerJ Comput. Sci. 8, e803. doi: 10.7717/peerj-cs.803

Ali, A. M., Benjdira, B., Koubaa, A., El Shafai, W., Khan, Z., and Boulila, W. (2023). Vision transformers in image restoration: A survey. Sensors 23, 2385. doi: 10.3390/s23052385

Araus, J. L. and Cairns, J. E. (2014). Field high-throughput phenotyping: The new crop breeding frontier. Trends Plant Sci. 19, 52–61. doi: 10.1016/j.tplants.2013.09.008

Bashir, S. M. A., Wang, Y., Khan, M., and Niu, Y. (2021). A comprehensive review of deep learning-based single image super-resolution. PeerJ Comput. Sci. 7, e621. doi: 10.7717/peerj-cs.621

Chai, T. and Draxler, R. R. (2014). Root mean square error (RMSE) or mean absolute error (MAE)? – Arguments against avoiding RMSE in the literature. Geosci Model. Dev. 7, 1247–1250. doi: 10.5194/gmd-7-1247-2014

Chapman, S. C., Zheng, B., Potgieter, A. B., Guo, W., Baret, F., Liu, S., et al. (2018). “Visible, near infrared, and thermal spectral radiance on-board UAVs for high-throughput phenotyping of plant breeding trials,” in Biophysical and biochemical characterization and plant species studies (CRC Press, Boca Raton, FL), 275–299.

Chatraei Azizabadi, E., El-Shetehy, M., Cheng, X., Youssef, A., and Badreldin, N. (2025). In-season potato nitrogen prediction using multispectral drone data and machine learning. Remote Sens. 17, 1860. doi: 10.3390/rs17111860

Chen, W., Zhang, B., Kong, X., Wen, L., Liao, Y., and Kong, L. (2022). Soybean production and spatial agglomeration in China from 1949 to 2019. Land 11, 734. doi: 10.3390/land11050734

Confalonieri, R., Foi, M., Casa, R., Aquaro, S., Tona, E., Peterle, M., et al. (2013). Development of an app for estimating leaf area index using a smartphone: Trueness and precision determination and comparison with other indirect methods. Comput. Electron. Agric. 96, 67–74. doi: 10.1016/j.compag.2013.04.019

Delegido, J., Verrelst, J., Alonso, L., and Moreno, J. (2011). Evaluation of Sentinel-2 red-edge bands for empirical estimation of green LAI and chlorophyll content. Sensors 11, 7063–7081. doi: 10.3390/s110707063

Dhakar, R., Sehgal, V. K., Chakraborty, D., Sahoo, R. N., and Mukherjee, J. (2021). Field scale wheat LAI retrieval from multispectral Sentinel-2A MSI and Landsat-8 OLI imagery: Effect of atmospheric correction, image resolutions and inversion techniques. Geocarto Int. 36, 2044–2064. doi: 10.1080/10106049.2019.1655715

Dong, C., Loy, C. C., He, K., and Tang, X. (2015). Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38, 295–307. doi: 10.1109/TPAMI.2015.2439281

Du, X., Zheng, L., Zhu, J., and He, Y. (2024). Enhanced leaf area index estimation in rice by integrating UAV-based multi-source data. Remote Sens. 16, 1138. doi: 10.3390/rs16071138

Fang, H., Baret, F., Plummer, S., and Schaepman-Strub, G. (2019). An overview of global leaf area index (LAI): Methods, products, validation, and applications. Rev. Geophys. 57, 739–799. doi: 10.1029/2018RG000608

Furbank, R. T. and Tester, M. (2011). Phenomics: Technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 16, 635–644. doi: 10.1016/j.tplants.2011.09.005

Haboudane, D., Miller, J. R., Tremblay, N., Zarco-Tejada, P. J., and Dextraze, L. (2004). Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 81, 416–426. doi: 10.1016/j.rse.2002.10.022

Hartman, G. L., West, E. D., and Herman, T. K. (2011). Crops that feed the world 2: Soybean—worldwide production, use, and constraints caused by pathogens and pests. Food Secur. 3, 5–17. doi: 10.1007/s12571-010-0108-x

Hore, A. and Ziou, D. (2010). “Image quality metrics: PSNR vs. SSIM,” in Proceedings of the 20th International Conference on Pattern Recognition (ICPR). 2366–2369 (Istanbul, Turkey: IEEE). doi: 10.1109/ICPR.2010.579

Jay, S., Baret, F., Dutartre, D., Malatesta, G., Héno, S., Comar, A., et al. (2019). Exploiting the centimeter resolution of UAV multispectral imagery to improve remote sensing estimates of canopy structure and biochemistry in sugar beet crops. Remote Sens Environ. 231, 110898. doi: 10.1016/j.rse.2018.11.038

Jonak, M., Mucha, J., Jezek, S., Kovac, D., and Cziria, K. (2024). SPAGRI AI: Smart precision agriculture dataset of aerial images at different heights for crop and weed detection using super resolution. Agric. Syst. 216, 103876. doi: 10.1016/j.agsy.2023.103876

Karthikeyan, L. and Mishra, A. K. (2021). Multi-layer high resolution soil moisture estimation using machine learning over the United States. Remote Sens Environ. 266, 112706. doi: 10.1016/j.rse.2021.112706

Liang, J., Cao, J., Sun, G., Zhang, K., Van Gool, L., and Timofte, R. (2021). “SwinIR: Image restoration using swin transformer,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 1833–1844 (Montreal, QC: IEEE). doi: 10.1109/ICCVW54120.2021.00204

Lim, B., Son, S., Kim, H., Nah, S., and Mu Lee, K. (2017). “Enhanced deep residual networks for single image super-resolution,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). 136–144 (Honolulu, HI: IEEE). doi: 10.1109/CVPRW.2017.151

Natekin, A. and Knoll, A. (2013). Gradient boosting machines, a tutorial. Front. Neurorobot 7. doi: 10.3389/fnbot.2013.00021

National Bureau of Statistics of China (2022). Soybean production in Heilongjiang Province in 2022. Available online at: https://english.www.gov.cn/news/topnews/202212/18/content_WS639e757fc6d0a757729e48f1.html (Accessed October 8, 2025).

Nigam, S. and Jain, R. (2020). Plant disease identification using deep learning: A review. Indian J. Agric. Sci. 90, 249–257. doi: 10.56093/ijas.v90i2.98996

Panigrahi, S. S., Singh, K. D., Balasubramanian, P., Wang, H., Natarajan, M., and Ravichandran, P. (2025). UAV-based liDAR and multispectral imaging for estimating dry bean plant height, lodging and seed yield. Sensors 25, 3535. doi: 10.3390/s25113535

Parida, P. K., Somasundaram, E., Krishnan, R., Radhamani, S., Sivakumar, U., Parameswari, E., et al. (2024). Unmanned aerial vehicle-measured multispectral vegetation indices for predicting LAI, SPAD chlorophyll, and yield of maize. Agriculture 14, 1110. doi: 10.3390/agriculture14071110

Raschka, S. (2018). Model evaluation, model selection, and algorithm selection in machine learning. arXiv. 1811.12808. doi: 10.48550/arXiv.1811.12808

Reddy, P. L. and Pawar, S. (2020). Multispectral image denoising methods: A literature review. Mater. Today Proc. 33, 4666–4670. doi: 10.1016/j.matpr.2020.02.958

Sadeh, Y., Zhu, X., Dunkerley, D., Walker, J. P., Zhang, Y., Rozenstein, O., et al. (2021). Fusion of Sentinel-2 and PlanetScope time series data into daily 3 m surface reflectance and wheat LAI monitoring. Int. J. Appl. Earth Obs Geoinf 96, 102260. doi: 10.1016/j.jag.2020.102260

Shi, M., Du, Q., Fu, S., Yang, X., Zhang, J., Ma, L., et al. (2022). Improved estimation of canopy water status in maize using UAV-based digital and hyperspectral images. Comput. Electron. Agric. 197, 106982. doi: 10.1016/j.compag.2022.106982

Sims, D. A. and Gamon, J. A. (2002). Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens Environ. 81, 337–354. doi: 10.1016/S0034-4257(02)00010-X

Strachan, I. B., Stewart, D. W., and Pattey, E. (2005). Determination of leaf area index in agricultural systems. Micrometeorol. Agric. Syst. 47, 179–198. doi: 10.2134/agronmonogr47.c9

Su, W., Huang, J., Liu, D., and Zhang, M. (2019). Retrieving corn canopy leaf area index from multitemporal Landsat imagery and terrestrial LiDAR data. Remote Sens 11, 572. doi: 10.3390/rs11050572

Wang, X., Xie, L., Dong, C., and Shan, Y. (2021). “Real-ESRGAN: Training real-world blind super-resolution with pure synthetic data,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 1905–1914 (Montreal, QC: IEEE). doi: 10.1109/ICCVW54120.2021.00216

Watson, D. J. (1947). Comparative physiological studies on the growth of field crops: I. Variation in net assimilation rate and leaf area between species and varieties, and within and between years. Ann. Bot. 11, 41–76. doi: 10.1093/oxfordjournals.aob.a083148

Wikipedia contributors (2025). “Heilongjiang,” in Wikipedia. (San Francisco, CA: Wikimedia Foundation). Available online at: https://en.wikipedia.org/wiki/Heilongjiang.

Yang, K., Gong, Y., Fang, S., Duan, B., Yuan, N., Peng, Y., et al. (2021). Combining spectral and texture features of UAV images for the remote estimation of rice LAI throughout the entire growing season. Remote Sens 13, 3001. doi: 10.3390/rs13153001

Zeng, Z., Sun, H., Wang, X., Zhang, S., and Huang, W. (2022). Regulation of leaf angle protects photosystem I under fluctuating light in tobacco young leaves. Cells 11, 252. doi: 10.3390/cells11020252

Zhang, Y., Tian, Y., Kong, Y., Zhong, B., and Fu, Y. (2023). Deep learning for image super-resolution: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 45, 3–39. doi: 10.1109/TPAMI.2022.3147986

Keywords: UAV remote sensing, machine learning, super resolution, leaf area index, multi-source data fusion

Citation: Zhao Z, Yao H, Zeng D, Jiang Z and Zhang X (2025) UAV multi-source data fusion with super-resolution for accurate soybean leaf area index estimation. Front. Plant Sci. 16:1700660. doi: 10.3389/fpls.2025.1700660

Received: 07 September 2025; Revised: 03 November 2025; Accepted: 04 November 2025; Published: 20 November 2025.

Edited by:

Imran Ali Lakhiar, Jiangsu University, China

Reviewed by:

Mohamed Mouafik, Université Mohammed V., Morocco

Xiaowen Wang, Jiangsu University, China

Osman Ilniyaz, Academia Turfanica, China

Copyright © 2025 Zhao, Yao, Zeng, Jiang and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xihai Zhang, xhzhang@neau.edu.cn

†These authors share first authorship
