- 1Department of Plant Pathology, Kansas State University, Manhattan, KS, United States

- 2Interdepartmental Genetics, Kansas State University, Manhattan, KS, United States

- 3Instituto Nacional de Investigación Agropecuaria (INIA), Programa de Cultivos de Secano, Estación Experimental La Estanzuela, Colonia del Sacramento, Uruguay

- 4Department of Statistics, Kansas State University, Manhattan, KS, United States

- 5Global Wheat Program, International Maize and Wheat Improvement Center, Mexico City, Mexico

The development of high-throughput genotyping and phenotyping has provided access to many tools to accelerate plant breeding programs. Unmanned Aerial Systems (UAS)-based remote sensing is being broadly implemented for field-based high-throughput phenotyping due to its low cost and the capacity to rapidly cover large breeding populations. The Structure-from-Motion photogrammetry processes aerial images taken from multiple perspectives over a field to an orthomosaic photo of a complete field experiment, allowing spectral or morphological trait extraction from the canopy surface for each individual field plot. However, some phenotypic information observable in each raw aerial image seems to be lost to the orthomosaic photo, probably due to photogrammetry processes such as pixel merging and blending. To formally assess this, we introduced a set of image processing methods to extract phenotypes from orthorectified raw aerial images and compared them to the negative control of extracting the same traits from processed orthomosaic images. We predict that standard measures of accuracy in terms of the broad-sense heritability of the remote sensing spectral traits will be higher using the orthorectified photos than with the orthomosaic image. Using three case studies, we therefore compared the broad-sense heritability of phenotypes in wheat breeding nurseries including, (1) canopy temperature from thermal imaging, (2) canopy normalized difference vegetation index (NDVI), and (3) early-stage ground cover from multispectral imaging. We evaluated heritability estimates of these phenotypes extracted from multiple orthorectified aerial images via four statistical models and compared the results with heritability estimates of these phenotypes extracted from a single orthomosaic image. Our results indicate that extracting traits directly from multiple orthorectified aerial images yielded increased estimates of heritability for all three phenotypes through proper modeling, compared to estimation using traits extracted from the orthomosaic image. In summary, the image processing methods demonstrated in this study have the potential to improve the quality of the plant trait extracted from high-throughput imaging. This, in turn, can enable breeders to utilize phenomics technologies more effectively for improved selection.

Introduction

In the past 20 years, spectacular advances in “next-generation” DNA sequencing have rapidly reduced the costs of genotyping and provided almost unlimited access to high-density genetic markers, thus allowing genetic improvement of several economically important crops worldwide (Crossa et al., 2017). Accurate plant trait (i.e., phenotypes) observations have long been the key to enhancing genetic gains through classical plant breeding (Eathington et al., 2007) and also to training prediction models and predict the performance of non-phenotyped individuals from their marker scores (Hayes and Goddard, 2001). Thus, phenotyping plays an essential role in the success of standard phenotypic selection as well as genomic selection models. Reflecting this, the lack of methods for rapid and accurate phenotyping on large sets of germplasm under field conditions remains a bottleneck to genomic selection and plant improvement (Cabrera-Bosquet et al., 2012; Araus and Cairns, 2014). High-throughput phenotyping (HTP) platforms are needed to measure plant traits non-invasively (Reynolds and Langridge, 2016), reduce the labor of manual phenotyping (Cabrera-Bosquet et al., 2012; Cobb et al., 2013), and measure multiple traits, plots, or both efficiently and simultaneously (Barker et al., 2016; Wang et al., 2018).

Unmanned Aerial Systems (UAS)-based remote sensing is being broadly implemented for field-based high-throughput phenotyping due to its low cost and the capacity to cover large field trials with thousands or tens-of-thousands of plots (Shi et al., 2016). Recently, multi-rotor UAS in various sizes have been widely deployed at a low altitude (<50 m) in HTP of plant canopy spectrum features (Haghighattalab et al., 2016; Li et al., 2018), plant growth status (Chu et al., 2017; Singh et al., 2019), and crop water use (Thorp et al., 2018). With the rapid development of low-cost consumer-grade sensors and platforms, UAS phenotyping holds great potential to be an integral part of plant genomics and breeding for precise, quantitative assessment of complex traits on large populations.

Structure-from-Motion (SfM) based photogrammetry is a process widely used to quantify plant phenotypes from aerial images (Shi et al., 2016). In SfM, a large number of aerial images taken from multiple perspectives over a field are used to create an orthomosaic image of a complete field experiment. Then plant traits can be extracted from a defined area (i.e., a shapefile of boundary coordinates for individual plots) within the orthomosaic image. However, during the generation of the orthomosaic image through SfM photogrammetry, pixels within the overlapped area from multiple raw images are blended. For instance, there are multiple optional blending modes such as mosaic and average for orthomosaic image generation in Agisoft Photoscan (Agisoft, 2018). The blending of pixel values has the potential to introduce changes in values intrinsic to the raw images.

Here, we use a complex plant trait – canopy temperature (CT) – as an example of a trait difficult to accurately quantify in the field environment. CT is an indicator of plant water stress and is often correlated with grain yield (Balota et al., 2007). Compared to measuring CT by sensors on the ground (Crain et al., 2016), using the aerial vehicles integrated with thermal imaging sensors can rapidly cover the observation area and potentially reduce diurnal temperature variations. Sagan et al. (2019) compared the performance of measuring CT in soybean and energy sorghum using three commercial thermal cameras on a UAS platform. They demonstrated a high correlation (R2 > 0.9) between temperature extracted from orthomosaic images and ground measurements at noon time assuming minor temperature changes in a short period of UAV-based imaging. By contrast, on much larger field trials with many thousands of entries, longer measurement windows (i.e., hours) often give raise to temperature fluctuations. In such cases, a single CT value extracted from an orthomosaic image may not accurately reflect the actual temperature at each imaging time point. Yet, variation in CT during the measurement window could be thoroughly characterized and accounted for by extracting thermal values directly from the series of raw images, rather than the single orthomosaic image.

An additional confounding factor for thermal imaging is more technical, specifically the flat field correction (FFC) from the thermal camera that may negatively impact the thermal image quality. The FFC consists of a re-calibration of the thermal camera core while the camera is working1. FFC is helpful to regulate the thermal data within a defined range of temperature readings but leads to continual recalibration and hence, yields varying values during image acquisition, including temperature differences from the same target in two consecutive images. The error may be compensated using the temperature references on the ground, but the referencing target may not be present in every image. This adds additional complexity to the field operations and image processing. If images with inaccurate thermal measurements are used for mosaicking, the CT values extracted from the orthomosaic image will likely reflect artifacts from both the FFC and the variability of thermal values from changing ambient conditions during the measurement window. To reduce this effect, Deery et al. (2016) proposed an approach to extract CT from images on a frame-by-frame basis. However, this method requires automation if it is to be used for processing data from large nurseries and for genetic studies with thousands or tens-of-thousands of plots.

Based on the issues described above, measurements of CT using thermal cameras are hypothesized to be substantially influenced by the SfM processing required for mosaicking. Meanwhile, other types of remote sensing datasets are expected to have similar issues from the SfM processing pipeline. For example, the canopy normalized difference vegetation index (NDVI) and the leaf ground cover are widely used remote-sensing traits associated with grain yield and agronomic traits (Rutkoski et al., 2016; Duan et al., 2017). During aerial image acquisition, the reflectance measurements from the canopy changes according to the camera position and the solar angle. This reflectance change can be accurately quantified using a goniometer system and the bidirectional reflectance distribution function (BRDF) under natural illumination conditions on the ground (Sandmeier and Itten, 1999). Also, the leaf area may appear in different densities depending on camera view angles at nadir or off-nadir. Therefore, a single orthomosaic image composed of blended pixels can be expected to have similar problems to reflect variability throughout the measurement window.

According to the theory of SfM processing and the issues previously described, the overall objective of this study is to enhance quality of trait extraction from field-based data collection and measurement using high-throughput phenotyping by UAS remote sensing. For this purpose, we developed a set of image processing methods to extract phenotypes from orthorectified aerial images. We then compared these extracted remote-sensed phenotypes with the same phenotypes extracted from orthomosaic images. In addition to evaluation of CT, we investigated applying this trait extraction method to NDVI and the early-stage ground cover (GC). We fitted four competing linear mixed models to each trait and compared the models using estimates of broad-sense heritability and the Bayesian Information Criterion. Broad-sense heritability is a measure of the proportion of phenotypic variance that is due to all genetic effects relative to unaccounted error variance (Holland et al., 2003). The main purpose of estimating heritability is to understand the level of genetic control of a given phenotype which directly relates to the expected gain from different selection strategies, which is the fundamental concept of plant breeding. A high heritability value is indicative of higher precision and less error and is also connected to higher predictive ability for a given trait (Crain et al., 2017). In comparing methods for analysis of a fixed dataset, a higher heritability reflects that a given method accounts for more variance through decreasing the experimental error. We illustrate the proposed approach using data from three wheat breeding nurseries planted at different locations and in different years as case studies, whereby each nursery provided data on one type of phenotype.

Materials and Methods

Plant Material and Field Layout

Spring wheat (Triticum aestivum L.) breeding lines used for CT measurements were from the International Maize and Wheat Improvement Center (CIMMYT) wheat breeding program. The trials were planted on November 21, 2017, at Norman E Borlaug Experiment Station (27°22′57.6″N, 109°55′34.7″W) in Ciudad Obregon, Sonora, Mexico during the 2017–18 season. The experiment consisted of 1800 unique spring wheat entries distributed in 60 trials. Each trial was arranged as an alpha lattice design in two blocks. Plots served as experimental units and were 1.7 m × 3.4 m in size, consisting of raised bed planting on two beds spaced 0.8 m apart with paired rows on each bed at 0.15 m spacing for each plot. Details are in the Supplementary Table 1.

Winter wheat (Triticum aestivum) breeding lines from Kansas State University wheat breeding program were used for canopy NDVI and early-stage ground cover measurements. One trial for canopy NDVI measurements was sown on September 19, 2017 at the KSU Ashland Bottom Agronomy Farm (39°7′54.2″N, 96°37′12.6″W), Manhattan, Kansas, and the other trial for early-stage ground cover measurements was sown on September 17, 2018 at the KSU farm (39°7′56.4″N, 96°37′10.1″W). A total of 146 and 150 winter wheat entries were planted during the 2017–18 and 2018–19 season, respectively. During each season, the entire field experiment was arranged in two blocks. The entries included breeding lines and check varieties. In each block, a breeding line was planted in a single plot, while the checks were planted multiple times. The experimental plot was an individual six-row plot with 20 cm (8″) row spacing with plot dimensions of 1.5 m × 2.4 m. Details of each field experiment are listed in the Supplementary Table 1.

To improve the geospatial accuracy of orthomosaic and orthorectified images, ground control points (G) consisting of bright white/reflective square markers were uniformly distributed in the field experiment before image acquisition and surveyed to cm-level resolution. The GCPs in Obregon, Mexico were surveyed using a Trimble R4 RTK (Trimble Inc., Sunnyvale, California, United States) Global Positioning System (GPS). The GCPs in Kansas were surveyed using the Precis BX305 Real-Time Kinematic (RTK) Global Navigation Satellite System (GNSS) unit (Tersus GNSS Inc., Shanghai, China).

UAS, Sensors, and Image Acquisition

The UAS used for image acquisition was a DJI Matrice 100 (DJI, Shenzhen, China). The flight plans were created using Litchi Android App (VC Technology Ltd., United Kingdom) and CSIRO mission planner application2 for DJI Matrice100. Accordingly, the flight speed, the flight elevation above the ground, and the width between two parallel flight paths were adjusted based on the overlap rate and the camera field of view. Both cameras were automatically triggered with the onboard GNSS unit following a constant interval of distance traveled. A summary of flight settings is listed in the Supplementary Table 2.

To collect the thermal image from the spring wheat nurseries, a FLIR VUE Pro R thermal camera (FLIR Systems, United States) was carried by the DJI Matrice 100. Ten 0.25 m × 0.25 m square white metal sheets mounted on 0.50 m posts were used as GCPs. Two data collections were conducted between 11AM and 1PM on March 2 and March 19, 2018, during the grain filling stage. The aerial image overlap rate between two geospatially adjacent images was set to 80% both sequentially and laterally to ensure optimal orthomosaic photo stitching quality. Both flights were set at 60 m above ground level (AGL) at 5 m/s and could cover the 3600 breeding plots in around 16 min. To preserve the image pixel information, the FLIR camera was set to capture Radiometric JPEG (R-JPEG) images.

A MicaSense RedEdge-M multispectral camera (MicaSense Inc., United States) was used to collect winter wheat canopy images in both the 2017–18 and the 2018–19 seasons. White square tiles with a dimension of 0.30 m × 0.30 m were used as GCPs. Nine and four GCPs were placed and surveyed in the field during the 2017–18 and 2018–19 season, respectively. All UAS flights were conducted between 11AM to 2PM. A total of five UAS flights were made during the grain-filling stage in the 2017–18 season, and four UAS flights were made in the early Fall establishment period for 2018–19 season. Detailed flight dates are listed in the Supplementary Table 2. The aerial image overlap rate between two geospatially adjacent images was set to 80% both sequentially and laterally to ensure optimal orthomosaic photo stitching quality. All UAS flights were set at 20 m AGL at 2 m/s and could cover 360 (2017–18 season) and 336 (2018–19 season) plots in 14 and 11 min, respectively. To preserve the image pixel intensity, the MicaSense RedEdge-M camera was set to capture uncompressed TIFF images.

Orthomosaic and Orthorectified Images Generation

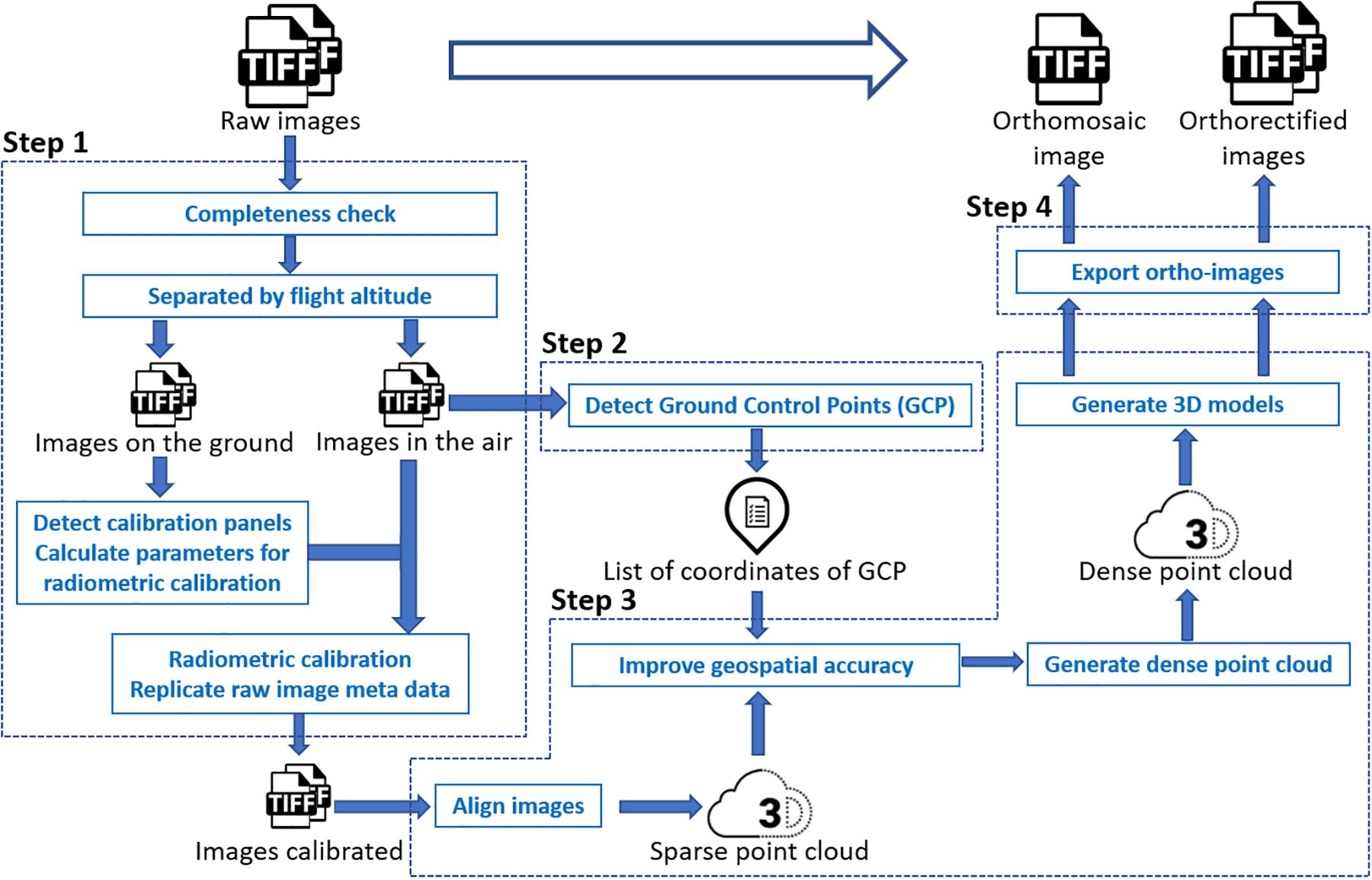

In this study, models fitted to a trait extracted from the orthomosaic image were used as a benchmark control, against which to compare estimates from models fitted to the same traits extracted from multiple individual orthorectified images. Unlike the approach proposed by Deery et al. (2016), in this study we still needed to generate the orthomosaic image of a complete field as a starting point to calculate the position of each individual image. Through the photogrammetry process, pixels in a raw image were projected to their real geographical location. Following this orthorectification, each individual raw image was converted to an orthorectified image. Therefore, there was no need to manually identify field plots in each orthorectified image because the same shapefile with plot boundaries could be used to identify a plot existing in different orthorectified images. Generating orthomosaic and orthorectified images from raw images consisted of (step 1) image preprocessing (including radiometric calibration), (step 2) GCPs detection, (step 3) photogrammetry process, (step 4) and export of orthomosaic image and orthorectified images (as shown in Figure 1), as explained below in detail. The procedure was implemented using Python, and the source code is available online3.

The image preprocessing procedure for the multispectral images converted the pixel value in each raw spectral image to reflectance before the photogrammetry process. Pixel values in raw thermal images, however, were not converted to temperature values in this step. As each trigger of the MicaSense RedEdge-M camera generated five images of every single spectral band (Blue, Red, Green, Near-infrared, and RedEdge), the completeness check removed images having less than five bands. According to the altitude (i.e., the camera height above the mean sea level) embedded in the image properties, images were divided into two groups – images captured on the ground and images captured in the air. The MicaSense radiometric calibration panels were then automatically detected from images captured on the ground if existing. Following the MicaSense radiometric calibration procedure4, calibration factors of all five bands were calculated and then applied to images captured in the air, converting raw images to reflectance images for subsequent photogrammetry process.

The GCPs detection procedure automatically identified the GCP in each image captured in the air if existing and matched the GCP with the surveyed position of the closest GCP from the image position. As white square tiles with the pre-known size were used as GCPs in the wheat field, clear patterns of GCPs could be detected through image processing. According to the image position (i.e., the longitude and latitude) embedded in the image properties, the surveyed GCP, whose coordinates were geographically close to the image position, was matched with the detected GCP in the image. Sufficient space (i.e., > 20 m) was left between every two GCPs during placement in the field to avoid having multiple GCPs in a single image and to enable sufficiently accurate geolocation of the UAS to determine which GCP was being imaged. All image file names and detected GCP coordinates were saved in a list for geospatial optimization in the photogrammetry process. Due to the low resolution of the thermal camera and the unclear pattern of GCPs in thermal images, GCPs were manually detected during the photogrammetry process of thermal images.

The photogrammetric processing of aerial images included sparse point cloud generation, geospatial optimization, dense point cloud generation, and 3-dimensional (3D) model generation. The process was implemented using the Agisoft PhotoScan Python API (Version 1.4.0, Agisoft LLC, Russia). An orthomosaic image of a complete field experiment was exported after the process. All images used to generate the orthomosaic image were exported as orthorectified images with the image boundary (i.e., the northwest and southeast corners) coordinates and the original camera position (i.e., longitude, latitude, and altitude) where the image was captured embedded in the image properties.

Plot-Level Traits Extraction

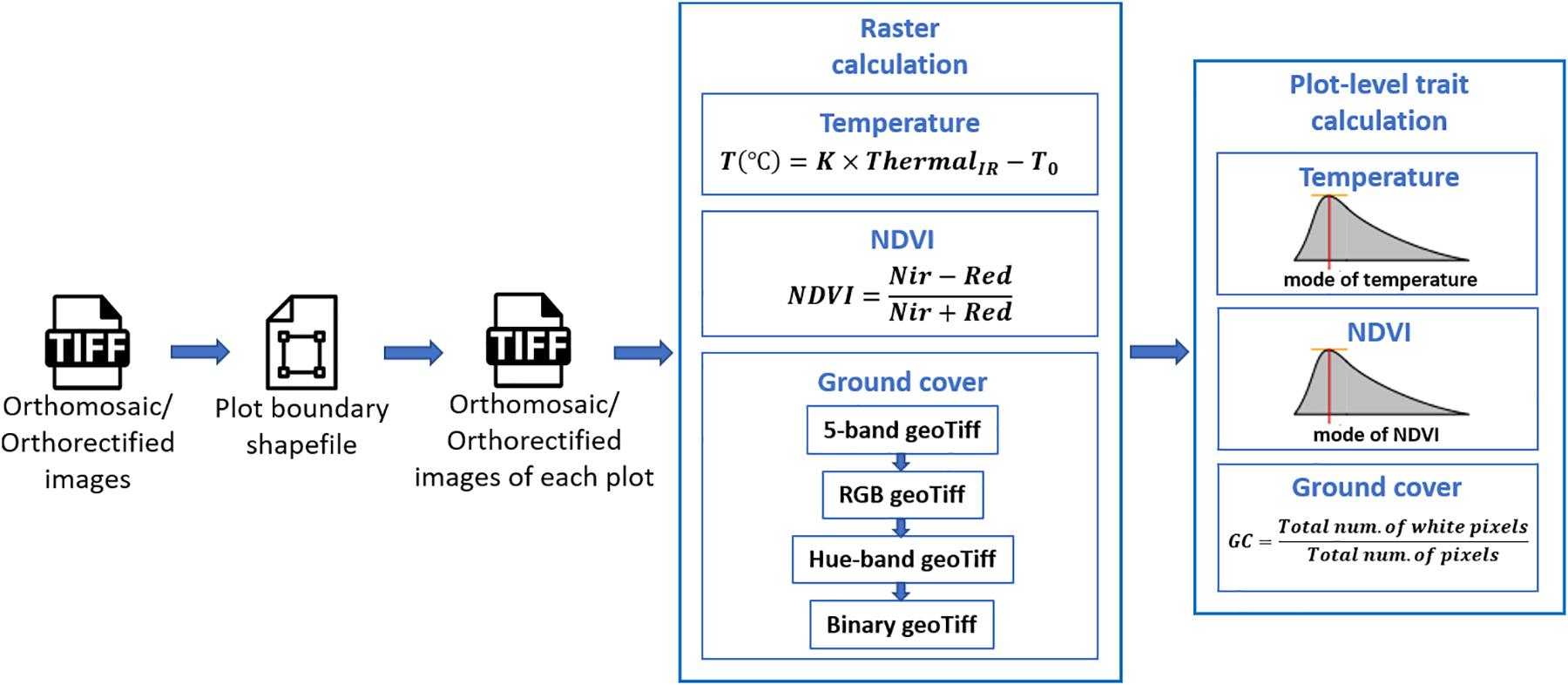

Extraction of plot-level phenotypic values from orthomosaic and orthorectified images consisted of (1) cropping single-plot images from an orthomosaic of the complete field or from multiple orthorectified images, each of which covered a small portion of the entire field, (2) converting pixel values to trait values through raster calculation, and (3) summarizing the plot-level trait in each image (as shown in Figure 2). The procedure was implemented using Python, and the source code is available online5.

Following the generation of the orthomosaic image of an entire field, a field map – a shapefile of polygons delineating the four corners of each plot was generated semi-automatically in Quantum Geographic Information System (QGIS, www.qgis.org) with the HTP Geoprocessor plugin (Wang et al., 2016). Specifically, the four corner points of the entire experiment field were first manually defined in QGIS. Then the coordinates of the four corners of the polygon for each plot were automatically calculated with the pre-known plot geometric size (length and width) using a QGIS Python script. Finally, each plot polygon was assigned a plot ID using the HTP Geoprocessor plugin (Wang et al., 2016). According to the field map, an image of each plot could be cropped from the orthomosaic image of the entire field experiment and saved as a GeoTiff image. Unlike the orthomosaic image of the complete field experiment, each orthorectified image only covered a small portion of the entire field. Therefore, only the plots that were completely included in the orthorectified image were cropped and saved as GeoTiff images. As a result, each plot was represented by a single cropped orthomosaic GeoTiff image and multiple orthorectified GeoTiff images.

To extract the CT trait, the pixel values within each GeoTiff image containing the thermal infrared band were directly used as indicators of absolute temperature measurements, as (1) the R-JPEG images have temperature data embedded in each pixel6 and (2) Sagan et al. (2019) has demonstrated the absolute temperature can be converted from the pixel value following a linear equation:

where ThermalIR is the pixel value within the thermal infrared band of the GeoTiff image, T is the absolute temperature measurement in Celsius degrees, and K and T0 are constant parameters. In this study, K and T0 were set as 0.04 and −273.15 (Flir Systems Inc., 2017; Williamson et al., 2019; Song and Park, 2020).

To generate the NDVI trait from the GeoTiff image from the five-band multispectral GeoTiff image, the following equation was used during raster calculation:

where NIR and Red are the near-infrared and red band of the multispectral GeoTiff images, respectively, and NDVI is the output raster layer.

For the canopy GC calculation, the five-band multispectral GeoTiff image was first converted to an RGB GeoTiff image by rendering the Red, Green, and Blue bands. Then the RGB image was converted to a Hue-Saturation-Value (HSV) GeoTiff image. Finally, a binary image was generated from the Hue band of the HSV image by manually selected threshold values leaving white pixels representing the canopy area in the RGB image. In this study, the threshold value was selected from the first image data set (October 3, 2018) and was applied to the subsequent image data sets.

For extraction of CT and NDVI traits, we used the mode of all non-zero values (Figure 2) in a plot area as the plot-level CT and NDVI, respectively. This was intended to compensate for noise from the non-vegetative pixels within the plot area, although most of the plots were fully covered by canopies during image acquisition. The plot-level early-stage ground cover (GC in Figure 2) was calculated as the overall percentage of white pixels within the binary image. As a result, each type of plot-level trait extracted from the orthomosaic image had only one observation per plot, whereas the same traits extracted from orthorectified images had multiple observations, one per orthorectified image in which that given plot appeared complete.

Orthomosaic and orthorectified images collected on two dates (Supplementary Table 2), were used to extract two independent datasets for the CT trait. Similarly, images collected on five and four dates were used to extract five and four independent datasets for the NDVI and the GC traits, respectively (Supplementary Table 2).

Statistical Analysis

Four general linear mixed models (models I to IV) were specified and fitted to each of the traits extracted, namely CT, NDVI and GC. For each trait, a null model (Model I) was fitted to observations extracted from the orthomosaic image (one observation per plot; Model Ia) and to the average of the multiple observations per plot, as extracted from the orthorectified images (Model Ib). The remaining three models (II, III, and IV) were fitted to the traits extracted from orthorectified images (i.e., multiple observations per plot) and were intended to recognize different aspects of the data collection process. Model fitting was implemented using the ASReml-R (Ver. 4) package in R (Butler et al., 2009; Gilmour et al., 2015), with variance components estimated by residual maximum likelihood (REML) (Butler et al., 2009; Gilmour et al., 2015). Additional details for each model follow.

Model I

Model I was developed to fit a single observation per plot, with this single observation being either extracted from a single orthomosaic image per plot (ym, Model Ia) or by averaging multiple plot-level observations ( extracted from orthorectified images (Model Ib). Specifically,

where superscripts (Ia) and (Ib) indicate the model that each parameter corresponds to. Within each model, μ represents the intercept, Gi is the random effect of the ith entry assumed distributed as iid is the random effect of the jth block assumed distributed as iid is the random effect of the kth row nested within a block and assumed distributed as iid is the random effect of the lth column nested within block and distributed as iid Finally, and are model-specific left-over residuals unique to the ijklth plot.

Model II

Given the multiple observations on each plot that were extracted from orthorectified images (yr), it is possible to assess the variability between observations within a plot (i.e., within-plot variance) by expanding Model I as follows.

where μ, Gi,Bj,Rk(j), and Cl(j) are defined as for Model I. Meanwhile, (R×C)(B)kl(j)(II) is the random effect of an individual plot identified by the combination of the kth row and the lth column within the jth block, assumed iid distributed , and is the leftover residual noise of the observation collected on the mth orthorectified image of the ijklth plot, and assumed as iid distributed Notably, in Model II, left-over residual terms are unique to each ijklmth observation within a given plot and thus represent technical replication (i.e., subsampling) of plots in the data collection process.

Model III

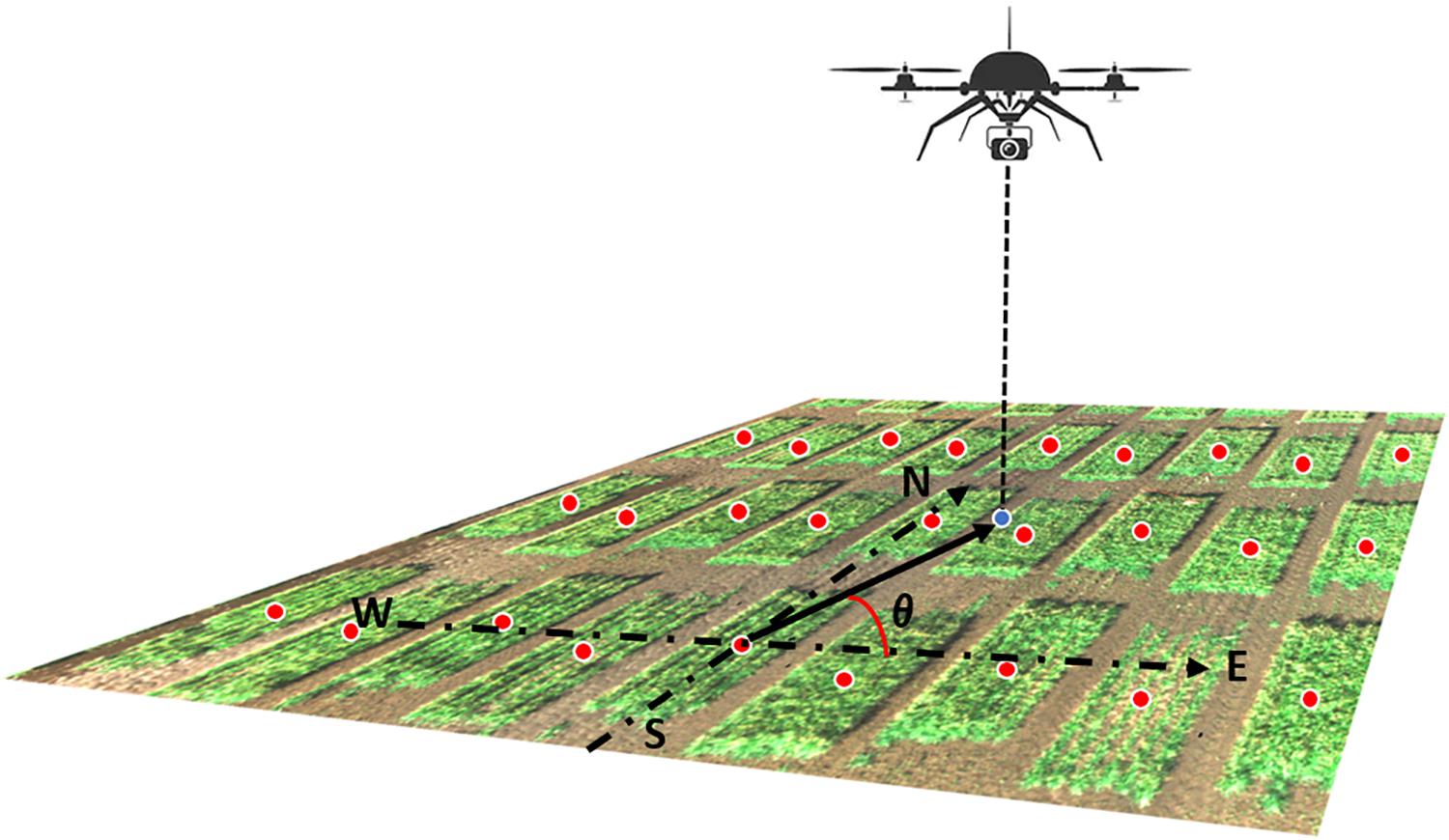

Recall that each orthorectified image includes multiple plots in the camera field of view (Figures 3–5) and that images were captured by the UAS following a serpentine trajectory (moving along the column direction and turning around at the boundary rows) to cover the entire field. Therefore, for Model III, we consider replacing the specification of spatial effects of row and column with a clustering effect of image, as follows:

Figure 3. Illustration of the camera azimuth angle. The RGB image was captured by the UAS showing a small part of the field. The blue dot represented the camera projected position on the ground. Red dots represented the center of each plot. The camera azimuth angle (θ) was the angle between the true east (as 0°) and the vector from the plot center to the camera position.

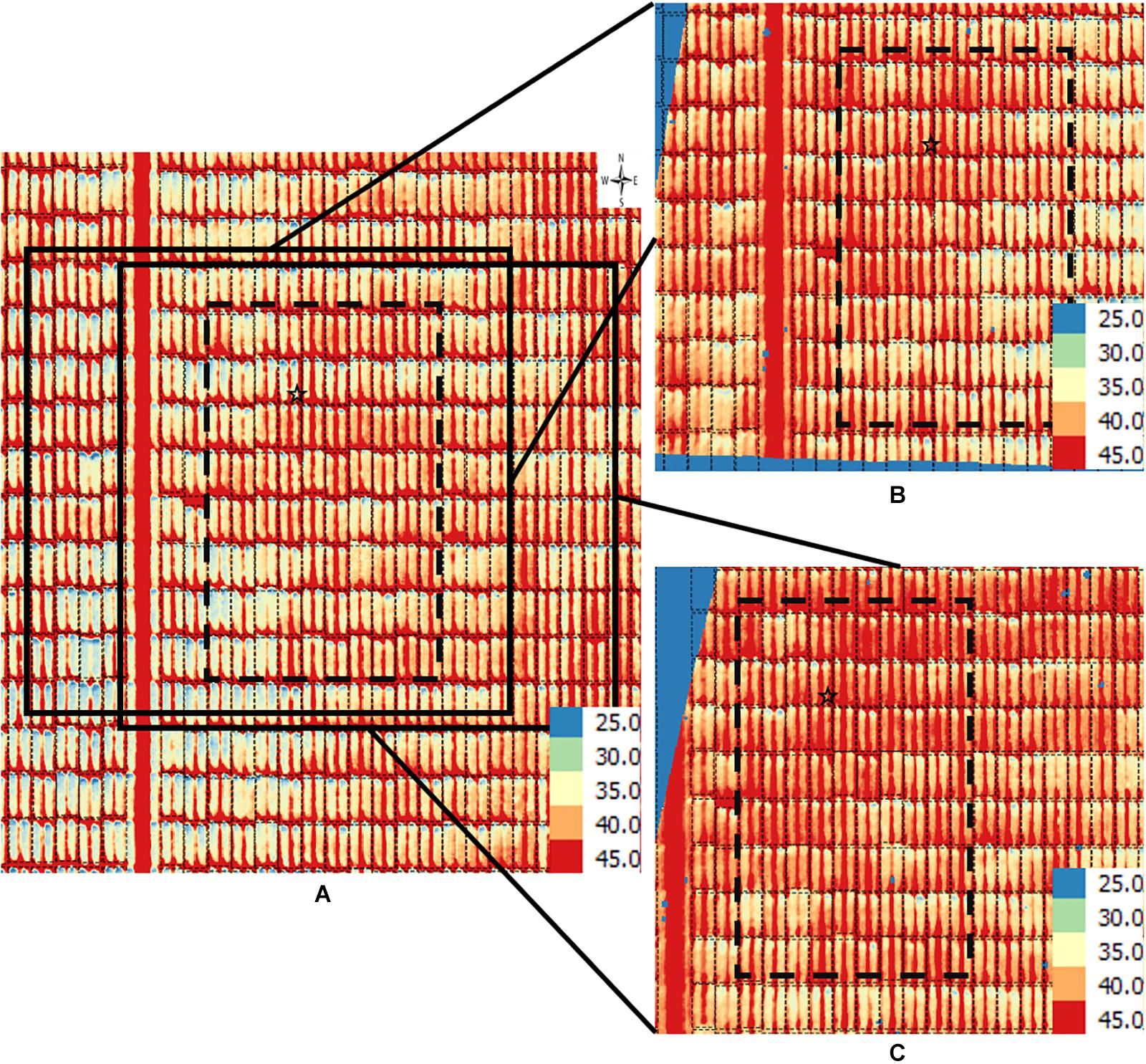

Figure 4. Orthomosaic and orthorectified images of CT. Raw thermal images for CT were captured on March 2, 2018, at 60 m AGL and were processed to generate (A) an orthomosaic image of the partial field and multiple orthorectified images of sections of the field, two of which are depicted here (B,C). Black polygons delimited by thin dotted lines within each image delineate plot boundaries. Black polygons in thick dashed lines highlight a field section of interest common to the three images. In each image, a black star marks the same plot. The range of temperature (in Celsius degree) is marked in each image. The continuous blue areas (B,C) are non-effective pixels due to orthorectification to the raw images.

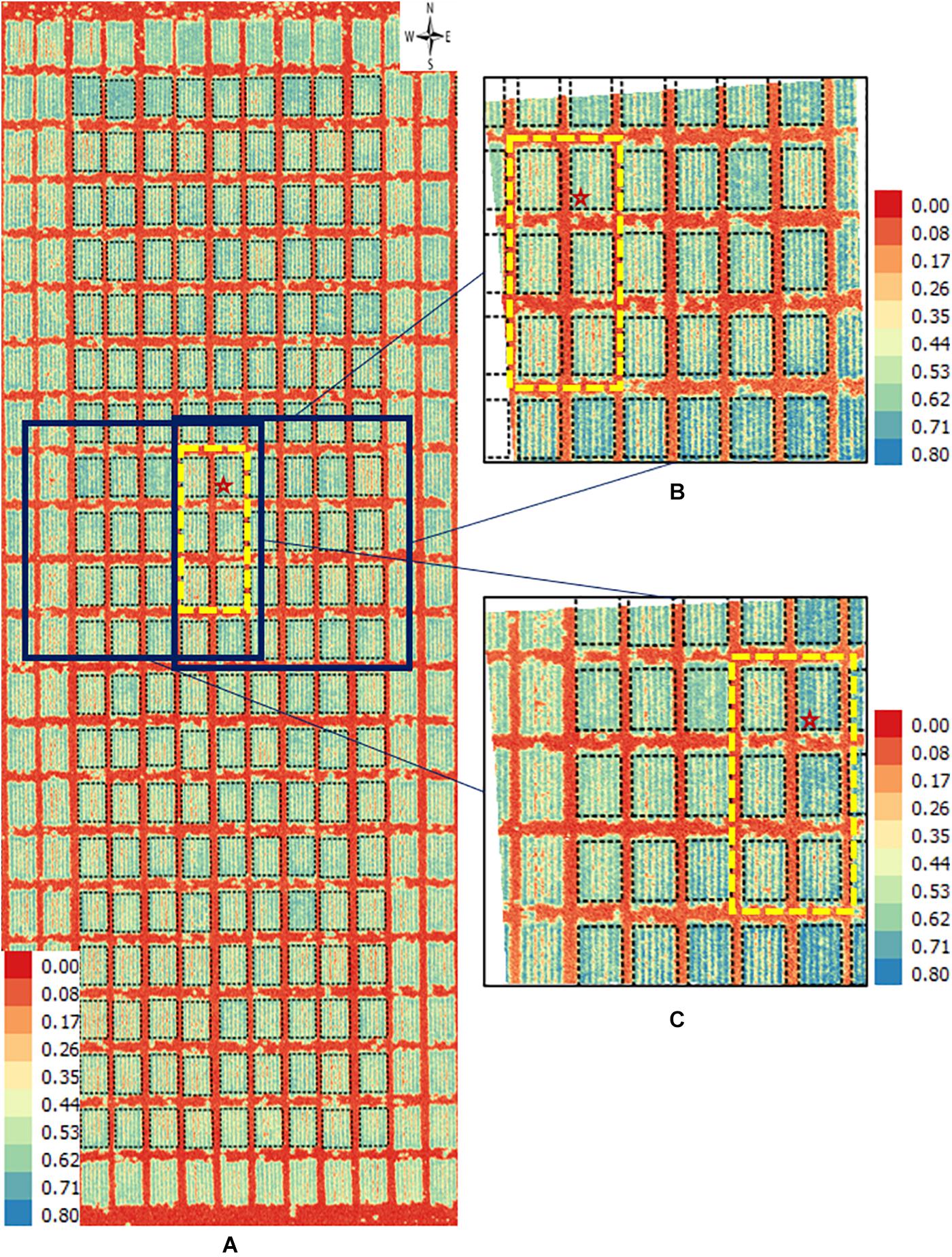

Figure 5. Orthomosaic and orthorectified images of NDVI. Raw images for NDVI were captured on April 4, 2018, at 20 m AGL from the 2017–18 wheat field experiment and were processed to generate (A) an orthomosaic image of a block in the field and multiple orthorectified images of sections of such block, two of which are depicted here (B,C). Black polygons delimited by thin dotted lines within each image delineate plot boundaries. Yellow rectangles in dashed lines delimit the same subset of six plots in all three images. The range of NDVI (unitless) is marked in each image. The continuous white areas (B,C) are non-effective pixels due to orthorectification to the raw images.

where μ, Gi, and Bj are defined as for Model II. In turn, is the random effect of the nth image and is assumed distributed as iid Meanwhile, each plot is identified by the combination of the ith entry in the jth block, namely and assumed iid Finally, is the left-over residual noise of the observation collected on the nth orthorectified image of the ijth plot, assumed distributed as iid Much like in Model II, residual terms in model III are unique to an observation within a plot and thus represent technical replication (i.e., subsampling) in the data collection process.

Model IV

Model IV extends Model III to recognize that orthorectified images on a given plot are captured from different angles. Thus, Model IV incorporated camera view angle as an explanatory covariate in the linear predictor. This angle is defined from the center of the field plot to the camera’s position where the image is captured. As the UAS’s altitude could not be held constant during image acquisition, the absolute camera height above the ground level could not be accurately measured. Therefore, only the latitude and longitude (i.e., y and x coordinates) values of both the plot center and the camera were used to calculate the camera azimuth angle (Figure 3). Model IV was specified as follows:

where Xijn is the camera azimuth angle corresponding to the nth orthorectified image for the ijth plot, β is the associated partial regression coefficient, and all remaining terms are defined as in Equation (6).

Model Comparison

Specific model comparisons were targeted to address questions of interest. Specifically, Model Ib was compared to Model Ia to evaluate the effect of an averaged plot-level observation extracted from multiple orthorectified images compared to a single observation extracted from blended pixels in an orthomosaic image. Next, Model II was compared to Model I to investigate the effect of subsampling on estimation of the additive genetic variance (and functions thereof) based on multiple plot-level observations extracted from orthorectified images (II) compared to a single plot-level observation extracted from an orthomosaic image (Ia) or from the average of multiple orthorectified images (Ib). Furthermore, a comparison between Models II and III were intended to consider alternative ways of accounting for spatial variation, namely through rows and columns (II) vs. image clusters (III). Finally, Model IV expanded Model III to adjust for potential technical effects of the UAS with respect to the camera view angle.

Two metrics were selected for model comparisons, specifically the broad-sense heritability (H2) or repeatability, and the Bayesian Information Criterion (BIC) (Neath and Cavanaugh, 2012).

For all models, variance component estimates were used to compute H2 as follows. Specifically, to Models Ia and Ib (Equations 3 to 4), H2 was calculated as,

Using estimates of the entry-level variance and the plot-level variance from Models Ia and Ib, and r defined as the number of plots per entry (i.e., number of blocks). For Models II, III, and IV (Equation 5 to 7), the calculation of H2 included plot-level variance estimates (i.e., , , , and estimates of characterizing subsampling, weighted by the number of subsamples (n) per plot, calculated as the harmonic mean number of observations across plots. Specifically, for model II

And for each of Models III and IV:

As Models Ia, Ib, and II have different response variables, and BIC is used for model comparison assuming the same set of observations on the response variable, BIC is only used for Models II, III and IV in this study. Values of BIC were obtained from the ASReml-R (Ver. 4) package output. Smaller values of BIC are considered to indicate better fitting models.

Data Availability

Data associated with these experiments, including the cropped, plot-level orthomosaic images and corresponding orthorectified images, can be accessed at the public repository7.

Results and Discussion

Orthomosaic and Orthorectified Image Generation and Gross Description

Using a case-study approach, we illustrate differences in image generation, gross description and corresponding trait extraction from orthomosaic images and orthorectified images. Specifically, for CT we used the March 2, 2018 dataset from spring wheat field (Figure 4), for NDVI we used the April 4, 2018 data from the winter wheat field (Figure 5), and for GC we used the November 3, 2018 data from the winter wheat field (Figure 6).

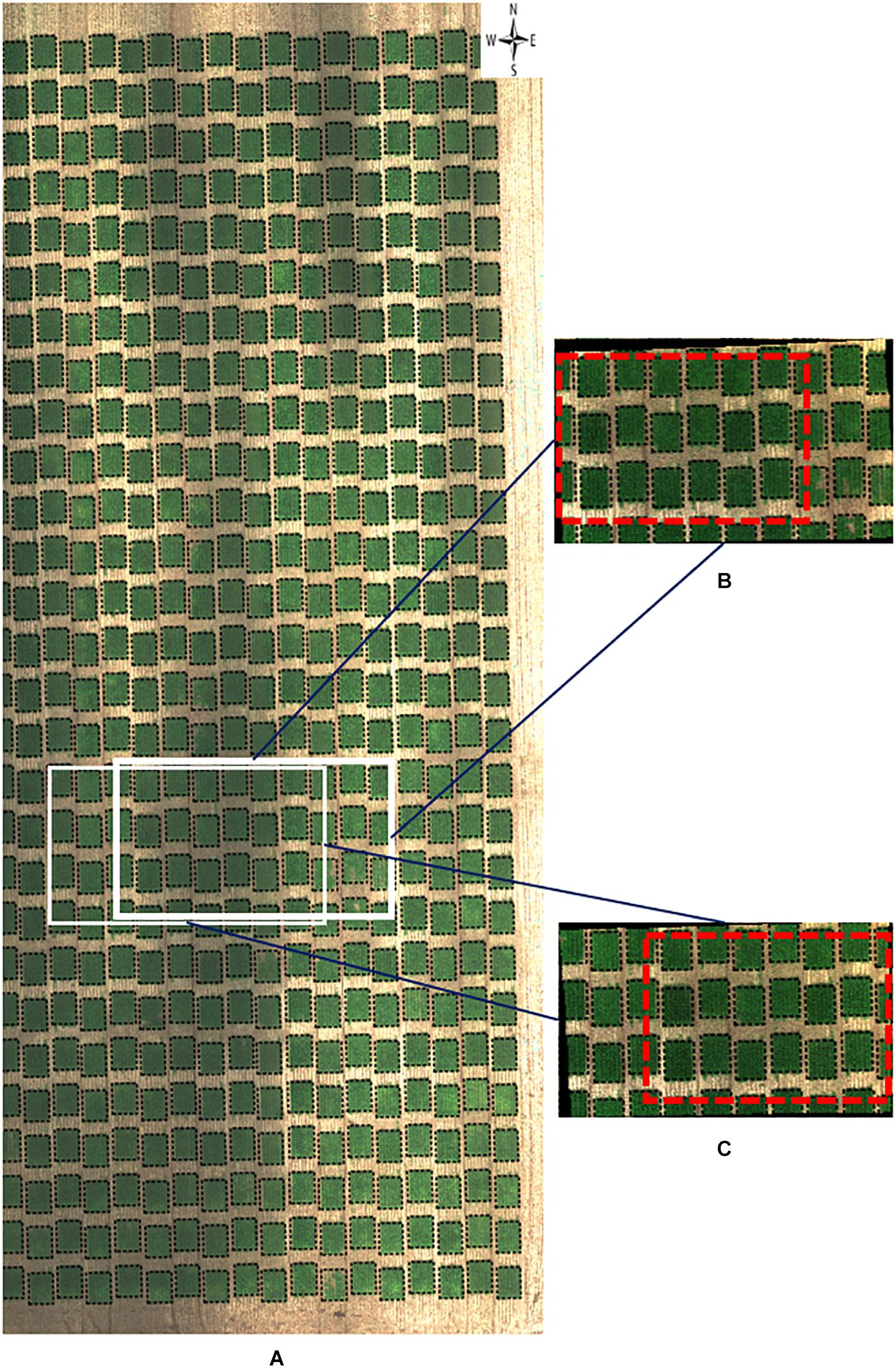

Figure 6. RGB orthomosaic and orthorectified images used for ground cover. Raw images were captured on November 3, 2018, at 20 m AGL from the 2018–19 wheat field experiment and were processed to generate (A) an RGB orthomosaic image of two blocks of the entire field, (B,C) two orthorectified sample RGB images illustrating different parts of the field. Black polygons in dashed lines within each image delineated plot boundaries. Red rectangles in dashed lines represented overlapped areas between two orthorectified RGB images. The continuous black areas (B,C) are non-effective pixels due to orthorectification to the raw images.

For the CT trait, gross differences in trait extraction are directly observable in a side-by-side comparison of the orthomosaic image with two of the orthorectified images (Figures 4A vs. 4B,C). Notably, all three images in Figure 3 show ranges in CT from 25°C (blue pixels) to 45°C (red pixels), as shown in the corresponding scales. Consider the individual plot marked with a star; the orthomosaic image seems to indicate a relatively low plot-level CT, based on more yellow pixels for that plot (Figure 4A). In contrast, the two orthorectified images show relatively high CT for the said star-marked plot, based on more orange and red pixels (Figures 4B,C). As observed, plot-level CT observations extracted from orthorectified images can disagree with the information available from the orthomosaic image, although not all orthorectified images reveal huge difference from the orthomosaic image on a given plot.

Similarly, directly observable differences were apparent between an orthomosaic and two orthorectified images for NDVI in wheat plots (Figure 5). Values of NDVI range from 0 (red pixels) to 0.8 (blue pixels) in all three images (Figure 5). For instance, consider the subset of six plots inside the yellow dashed rectangle in each of the three images. Although the difference is subtle, it is still visually detectable that more blue pixels in one orthorectified image (Figure 5C) than the other (Figure 5B). This perceived difference could be due to variation in reflectance over time due to the change of solar angle and different camera view angles. Another possible explanation may be digital artifacts of the camera, as it seemed that plots located at the east and south sides of both orthorectified images (Figures 5B,C) showed higher NDVI (i.e., more blue pixels) than plots in the remaining area of each image.

As for visual inspection of images of GC, we could not detect obvious GC differences between the orthomosaic image (Figure 6A) and the orthorectified images (Figures 6B,C). As GC is sensitive to the view angle from the camera to the plot, this observation supports that both ortho images (either orthomosaic or orthorectified) have been processed to apply corrections in the position of ground pixels caused by the perspective of the camera view angle. However, it is still unclear if pixel intensity may vary between orthomosaic and orthorectified images.

Taken together, the case studies presented here support potential variation in traits extracted from orthomosaic and orthorectified images. The main interest of a breeder is to quantify the genetic component of such variation; yet other sources of variation need to be considered and accounted for as well, namely environment factors (e.g., spatial effects) and imaging patterns due to the technology used for data collection (i.e., camera view angles and digital processing artifacts). Specifically, the illustrations presented above raised questions about the information contained in plot-level orthomosaic images generated by the photogrammetry process, as it was perceived to fail to accurately reflect trait variation that was directly apparent on plot-level observations originated from orthorectified images. Our concern is that the blending of pixel information that underlies the photogrammetry generation of orthomosaic images could lead to loss of information, thus undermining the quality of phenotypic data.

Plot-Level Traits Extraction

Plot-level observations on CT, NDVI, and GC traits from two, five, and four datasets, each corresponding to a different data collection date (Supplementary Table 2), were extracted from orthomosaic and orthorectified images. For each trait, the total number of observations extracted from orthorectified images and the minimum, maximum, and median number of observations per plot extracted from orthorectified images were summarized (Supplementary Tables 3–5). The number of observations per plot extracted from orthorectified images ranged from 3 to 49 across traits. From an experimental design standpoint, each individual plot was assigned to a given genetic line. Thus, the multiple observations per plot extracted from the orthorectified images may be considered subsamples (i.e., technical replication) for the entry that plot was assigned to. By contrast, only one observation per plot was obtained for each trait from the orthomosaic image for a given field.

Model Comparison

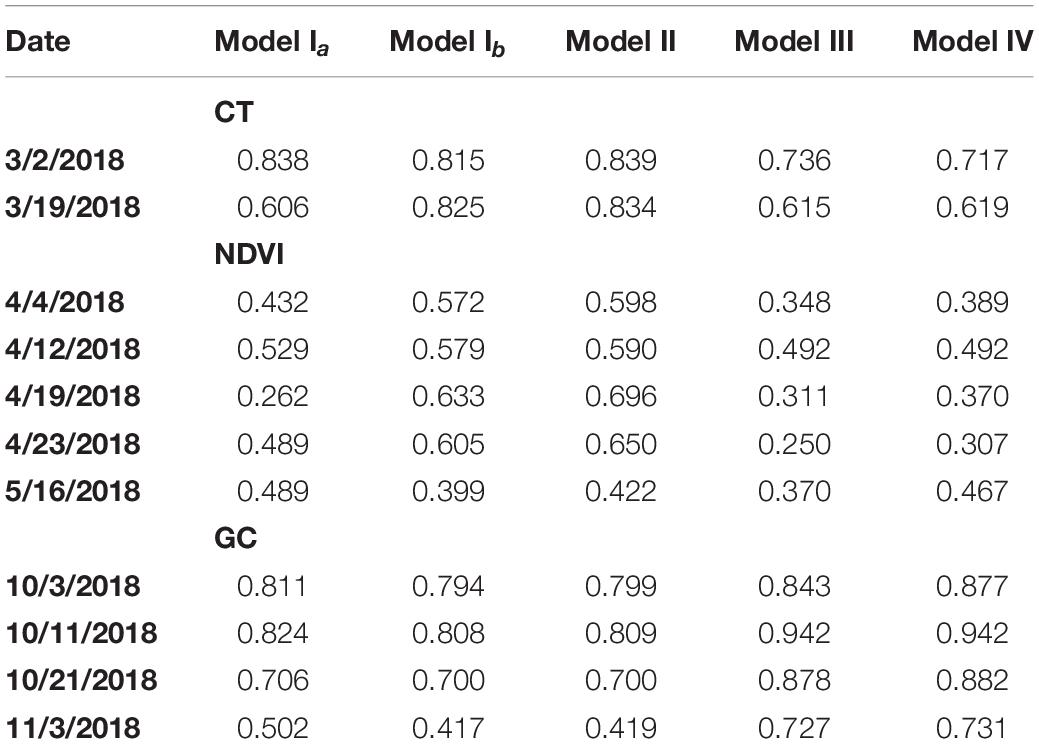

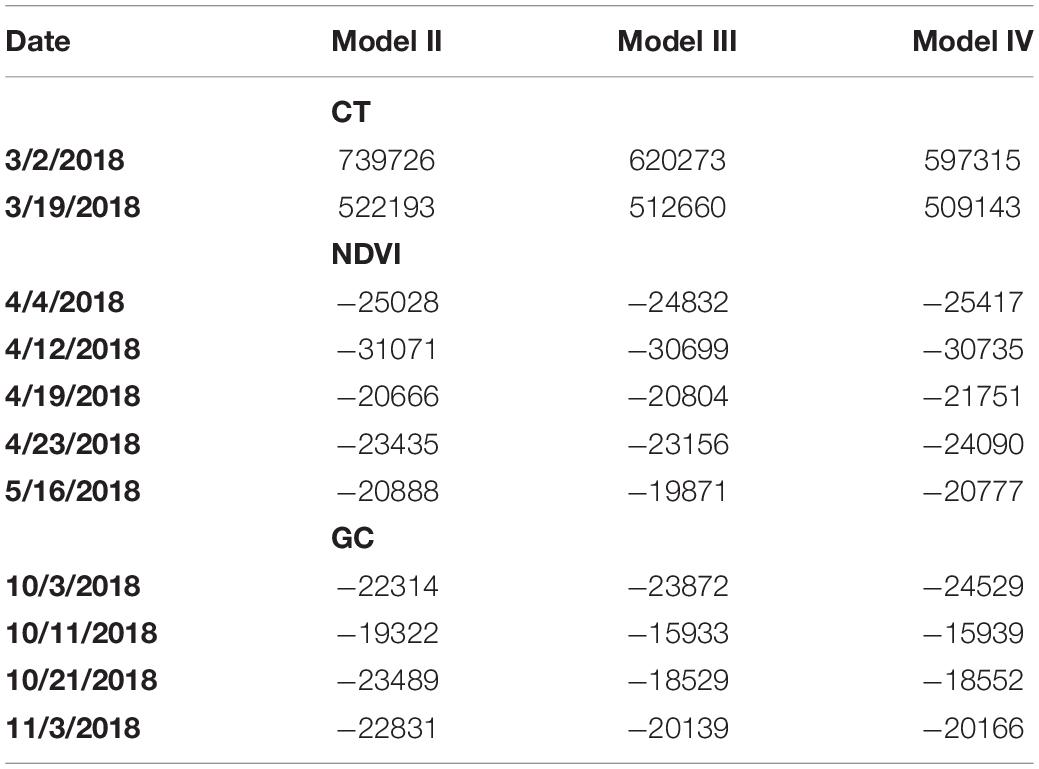

Table 1 shows estimated H2 for models Ia, Ib, II, III, and IV fitted to each of the traits extracted, namely CT, NDVI and GC. Table 2 shows BIC for model comparison between models II, III, and IV fitted to each of the traits extracted, namely CT, NDVI, and GC.

Table 1. Estimated broad-sense heritability (H2) for models Ia, Ib, II, III, and IV fitted to plot-level CT, NDVI, and GC observations on the case studies considered.

Table 2. Bayesian Information Criterion (BIC) for models II, III, and IV fitted to plot-level CT, NDVI, and GC observations on the case studies considered.

Models Ia, Ib, and II

For both CT and NDVI, the magnitude of H2 estimates for Model Ib and Model Ia showed an inconsistent pattern across datasets (Table 1), though estimates seemed to be numerically greater in magnitude more often under Model Ib. In contrast, for the GC trait, estimates of H2 were consistently greater in numerical magnitude under Model Ia than Ib based on the four datasets considered.

Comparing H2 estimates of all three traits between using Model Ia and Model II, we observed that H2 estimates of the CT trait on both two dates were improved by the latter model, as well as all H2 estimates of the NDVI trait except the one on the last date (Table 1). Taken together, for CT and NDVI traits, fitting multiple observations per plot into a hierarchical model that recognizes subsampling can help recover additive genetic variability in the data, as indicated by greater estimates of broad-sense heritability.

Compared to Model Ib, Model II explicitly accommodated technical replication in phenotypic information, causing beneficial H2 estimates in all cases; however, the magnitude of gains ranged from moderate to marginal.

In summary, fitting phenotypic values of some crop traits (e.g., CT and NDVI) extracted from orthorectified images could increase estimates of H2 in some cases, relative to the same phenotypic traits obtained from orthomosaic images (Model Ia). However, the estimation of H2 through fitting the GC trait could not be improved by simply replacing the single observation from the orthomosaic image with the average observations extracted from multiple orthorectified images. This inconsistent pattern was possibly due to the “mosaic” blending mode selected during photogrammetry processing of the orthomosaic photos. According to the Agisoft User Manual (2018), pixels were not simply blended by averaging pixel values from different photos in this blending mode, but through a pixel frequency related selection – a two-step approach. It was likely to be the reason why Model Ib and II could not always improve the trait estimation compared to Model Ia. Further research is required to characterize the extent of the expected benefit in terms of specific crops traits and circumstances of the growth season and data collection technology.

Model II vs. III

For CT and NDVI traits, H2 estimates were consistently decreased based on variance component estimates from Model III relative to Model II (Table 1), though the magnitude of the difference ranges from 12 to 62%. As for BIC-based model fit comparisons, results proved trait-specific. For CT, Model III showed smaller BIC values, and thus, better fit than Model II. However, for NDVI, most datasets showed better BIC-based fit by Model II compared to Model III (Table 2).

As for GC, the H2 estimates were increased for all datasets using Model III compared to Model II (Table 1). However, in all cases, BIC indicated a most prevalent better fit of Model II compared to III (Table 2). According to the result, for CT and NDVI traits estimation, considering the image cluster effect introduced in Model III as a factor could improve the phenotypic data quality; however, the row and column effect was still dominate for all the trait estimation in this study.

Model III vs. IV

In most cases, H2 estimates obtained using variance component estimates from Model IV were either increased or tied with those computed based on Model III. The one exception was for the CT trait based on the datasets from March 2, 2018 (Table 1). In addition, BIC estimates were smaller for model IV relative to III for all traits and in all cases, thereby indicated consistently improved model fit of Model IV relative to III (Table 2). Recall that Model IV included an additional explanatory variable, namely the camera azimuth angle from the plot center to the camera, could be proved to improve model fit. We observed that recent studies have confirmed that the reflectance observed by UAS are affected by multiple solar angles (Assmann et al., 2018) and camera view angles (Cheng et al., 2019). Compared to previous research, the multispectral images were not collected by the UAS at multiple discrete camera view angles intentionally in this study. Instead, the camera azimuth angles in this study were continuously distributed according to the flight route. Therefore, we considered the camera’s azimuth angle as an explanatory covariate in a more general way and aimed to improve the trait estimation.

In general, based on broad-sense heritability, the highest H2 estimates for CT and NDVI traits were most often obtained when Model II was used for estimation, whereas for the GC trait, the highest H2 estimates were obtained from Model IV (Table 1), indicating that the best fitting model may be trait-specific. Specifically, the highest estimates of H2 for CT and NDVI traits were obtained when row and column effects were recognized in the modeling exercise (i.e., Model II), while for the GC trait, accounting for multiple images and the camera view angle yielded higher H2 estimates, as shown by Model IV. Other technical aspects or components of experimental design may also be considered for modeling to further explain leftover noise and enhance genetic signal. Moreover, even within a trait, estimation results were not consistent. For example, for the NDVI trait, the dataset of May 16, 2018 yielded the highest H2 estimates when fitted with Model Ia. This indicates the need for further research to fine-tune processing of UAS imaging technology for efficient and accurate extraction of phenotypes relevant to crop improvement.

Cost Comparison Between the Two Image Processing Methods

During the Agisoft photogrammetry processing in this study, there is no computing cost difference between generation a single orthomosaic photo or generation of multiple orthorectified images. The export of multiple orthorectified images takes a longer time, though marginal relative to the entire analysis. As the number of orthorectified images per plot has increased compared to only one orthomosaic photo per plot, the trait extraction will take longer time than extracting trait values from the orthomosaic photo. Fortunately, the plot-boundary shapefile only needs to be generated once during trait extraction from the orthomosaic photo and the same shapefile can be used for trait extraction from multiple orthorectified images. Therefore, the analysis pipeline for orthophotos presented here does increase computational time, though a relatively marginal increase compared to overall pipeline computational requirements and the data collection time.

Conclusion

In this study, we demonstrated open-source and highly reproducible image processing methods and applied it for processing three crop phenotypes obtained by UAS, namely canopy temperature, canopy NDVI, and early-stage ground coverage, to seek the potential to improve quality of trait extraction from UAS-based remote sensing. We compared plot-level phenotypic traits extracted from the orthomosaic image with those obtained from orthorectified images, and we provided evidence that phenotyping by UAS remote sensing could be improved by extracting observations directly from multiple orthorectified images and through proper statistical models that are used to capture and account for technological sources of variability.

While further research will be needed, this study shows preliminary evidence with important practical implications for plant breeding and genetics. First, we developed image processing pipelines that have the potential to automatically generate the orthomosaic and orthorectified images from aerial images, without any need for manual manipulation. Second, we proposed batch processing pipelines to quantify different types of plot-level phenotypic traits, namely CT, NDVI, and GC. In addition, we illustrate how cropping plot-level images from orthorectified images can highly improve the efficiency to link genotypes to phenotypes. This approach can significantly increase the number of image samples per plot, indicating views of a plot from different angles, and provide huge training datasets for image-based deep learning. Finally, we proposed four statistical linear mixed models to efficiently partition sources of variation in each trait, specifically variation introduced by the UAS technology and accompanying image processing, in addition to experimental design. The models provide breeders multiple options to investigate traits extracted from high-throughput UAS-based imaging. Overall, through this study, it is expected that the future of modern breeding could be further highlighted, where in conjunction with powerful genomics and phenomics tools, UAS remote sensing can accelerate the genetic gains in plant breeding to meet the global demand for food, fiber, and fuel.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: http://people.beocat.ksu.edu/~xuwang/Data_2019_FPS/.

Author Contributions

XW and JP conceived and designed the study. XW, DS, and BE conducted UAS flights. XW performed image analysis. PS, NB, XW, JP, and DS contributed to the statistical analysis. RS, SM, and FE provided experimental lines. XW, PS, NB, and JP wrote the manuscript. JP directed the overall project. All authors edited and reviewed the manuscript.

Funding

This work was supported by the National Science Foundation (NSF) Plant Genome Research Program (PGRP) (Grant No. IOS-1238187), the Kansas Wheat Commission and Kansas Wheat Alliance, the United States Agency for International Development (USAID) Feed the Future Innovation Lab for Applied Wheat Genomics (Cooperative Agreement No. AID-OAA-A-13-00051), and the International Wheat Yield Partnership (Grant No. 2017-67007-25933/project accession no. 1011391) from the United States Department of Agriculture National Institute of Food and Agriculture (USDA NIFA). The opinions expressed herein are those of the author(s) and do not necessarily reflect the views of the USAID, the NSF, or the USDA.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank the assistance of colleagues at Kansas State University, including Mark Lucas, Richard Brown, and Grant Williams and valuable input from Dr. Jose Crossa from CIMMYT, Dr. Atena Haghighattalab from University of Minnesota, and Dr. Scott Chapman from CSIRO, Australia.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpls.2020.587093/full#supplementary-material

Footnotes

- ^ www.flir-vue-pro.com/news

- ^ https://uavmissionplanner.netlify.app/

- ^ github.com/xwangksu/bip

- ^ github.com/micasense/imageprocessing

- ^ github.com/xwangksu/traitExtraction

- ^ www.flir.com/products/vue-pro-r

- ^ http://people.beocat.ksu.edu/~xuwang/Data_2019_FPS/

References

Agisoft, L. L. C. (2018). Agisoft PhotoScan User Manual: Professional Edition, Version 1.4. St Petersburg: Agisoft LLC.

Araus, J. L., and Cairns, J. E. (2014). Field high-throughput phenotyping: the new crop breeding frontier. Trends Plant Sci. 19, 52–61. doi: 10.1016/j.tplants.2013.09.008

Assmann, J. J., Kerby, J. T., Cunliffe, A. M., and Myers-Smith, I. H. (2018). Vegetation monitoring using multispectral sensors—Best practices and lessons learned from high latitudes. J. Unman. Vehicle Syst. 7, 54–75. doi: 10.1139/juvs-2018-0018

Balota, M., Payne, W. A., Evett, S. R., and Lazar, M. D. (2007). Canopy temperature depression sampling to assess grain yield and genotypic differentiation in winter wheat. Crop Sci. 47, 1518–1529. doi: 10.2135/cropsci2006.06.0383

Barker, J., Zhang, N., Sharon, J., Steeves, R., Wang, X., Wei, Y., et al. (2016). Development of a field-based high-throughput mobile phenotyping platform. Comput. Electron. Agricult. 122, 74–85. doi: 10.1016/j.compag.2016.01.017

Butler, D., Cullis, B. R., Gilmour, A., and Gogel, B. (2009). ASReml-R Reference Manual. Brisbane: The State of Queensland, Department of Primary Industries and Fisheries.

Cabrera-Bosquet, L., Crossa, J., Von Zitzewitz, J., Serret, M. D., and Luis, A. J. (2012). High-throughput phenotyping and genomic selection: the frontiers of crop breeding converge F. J. Integrat. Plant Biol. 54, 312–320. doi: 10.1111/j.1744-7909.2012.01116.x

Cheng, T., Lu, N., Wang, W., Zhang, Q., Li, D., Yao, X., et al. (2019). Estimation of nitrogen nutrition status in winter wheat from unmanned aerial vehicle based multi-angular multispectral imagery. Front. Plant Sci. 10:1601. doi: 10.3389/fpls.2019.01601

Chu, T., Starek, M., Brewer, M., Murray, S., and Pruter, L. (2017). Assessing lodging severity over an experimental maize (Zea mays L.) field using UAS images. Remote Sensing 9:923. doi: 10.3390/rs9090923

Cobb, J. N., Declerck, G., Greenberg, A., Clark, R., and Mccouch, S. (2013). Next-generation phenotyping: requirements and strategies for enhancing our understanding of genotype–phenotype relationships and its relevance to crop improvement. Theoret. Appl. Genet. 126, 867–887. doi: 10.1007/s00122-013-2066-0

Crain, J., Reynolds, M., and Poland, J. (2017). Utilizing high-throughput phenotypic data for improved phenotypic selection of stress-adaptive traits in wheat. Crop Sci. 57, 648–659. doi: 10.2135/cropsci2016.02.0135

Crain, J. L., Wei, Y., Barker, J., Thompson, S. M., Alderman, P. D., Reynolds, M., et al. (2016). Development and deployment of a portable field phenotyping platform. Crop Sci. 56, 965–975. doi: 10.2135/cropsci2015.05.0290

Crossa, J., Pérez-Rodríguez, P., Cuevas, J., Montesinos-López, O., Jarquín, D., De Los Campos, G., et al. (2017). Genomic selection in plant breeding: methods. models, and perspectives. Trends Plant Sci. 22, 961–975. doi: 10.1016/j.tplants.2017.08.011

Deery, D. M., Rebetzke, G. J., Jimenez-Berni, J. A., James, R. A., Condon, A. G., Bovill, W. D., et al. (2016). Methodology for high-throughput field phenotyping of canopy temperature using airborne thermography. Front. Plant Sci. 7:1808. doi: 10.3389/fpls.2016.01808

Duan, T., Chapman, S., Guo, Y., and Zheng, B. (2017). Dynamic monitoring of NDVI in wheat agronomy and breeding trials using an unmanned aerial vehicle. Field Crops Res. 210, 71–80. doi: 10.1016/j.fcr.2017.05.025

Eathington, S. R., Crosbie, T. M., Edwards, M. D., Reiter, R. S., and Bull, J. K. (2007). Molecular markers in a commercial breeding program. Crop Sci. 47, S–154. doi: 10.2135/cropsci2007.04.0015IPBS

Gilmour, A., Gogel, B., Cullis, B., Welham, S., and Thompson, R. (2015). ASReml User Guide Release 4.1 Structural Specification. Hemel Hempstead: VSN international ltd.

Haghighattalab, A., Pérez, L. G., Mondal, S., Singh, D., Schinstock, D., Rutkoski, J., et al. (2016). Application of unmanned aerial systems for high throughput phenotyping of large wheat breeding nurseries. Plant Methods 12:35. doi: 10.1186/s13007-016-0134-6

Hayes, B. J., and Goddard, M. E. (2001). Prediction of total genetic value using genome-wide dense marker maps. Genetics 157, 1819–1829.

Holland, J. B., Nyquist, W. E., and Cervantes-Martínez, C. T. (2003). Estimating and interpreting heritability for plant breeding: an update. Plant Breed. Rev. 22, 9–112. doi: 10.1002/9780470650202.ch2

Li, J., Shi, Y., Veeranampalayam-Sivakumar, A.-N., and Schachtman, D. P. (2018). Elucidating sorghum biomass, nitrogen and chlorophyll contents with spectral and morphological traits derived from unmanned aircraft system. Front. Plant Sci. 9:1406. doi: 10.3389/fpls.2018.01406

Neath, A. A., and Cavanaugh, J. E. (2012). The Bayesian information criterion: background, derivation, and applications. Wiley Interdiscip. Rev. 4, 199–203. doi: 10.1002/wics.199

Reynolds, M., and Langridge, P. (2016). Physiological breeding. Curr. Opin. Plant Biol. 31, 162–171. doi: 10.1016/j.pbi.2016.04.005

Rutkoski, J., Poland, J., Mondal, S., Autrique, E., Pérez, L. G., Crossa, J., et al. (2016). Canopy temperature and vegetation indices from high-throughput phenotyping improve accuracy of pedigree and genomic selection for grain yield in wheat. G3 Genes Genomes Genetics 6, 2799–2808. doi: 10.1534/g3.116.032888

Sagan, V., Maimaitijiang, M., Sidike, P., Eblimit, K., Peterson, K. T., Hartling, S., et al. (2019). UAV-based high resolution thermal imaging for vegetation monitoring, and plant phenotyping using ICI 8640 P, FLIR Vue Pro R 640, and thermoMap cameras. Remote Sensing 11:330. doi: 10.3390/rs11030330

Sandmeier, S. R., and Itten, K. I. (1999). A field goniometer system (FIGOS) for acquisition of hyperspectral BRDF data. IEEE Transact. Geosci. Remote Sensing 37, 978–986. doi: 10.1109/36.752216

Shi, Y., Thomasson, J. A., Murray, S. C., Pugh, N. A., Rooney, W. L., Shafian, S., et al. (2016). Unmanned aerial vehicles for high-throughput phenotyping and agronomic research. PLoS One 11:e0159781. doi: 10.1371/journal.pone.0159781

Singh, D., Wang, X., Kumar, U., Gao, L., Noor, M., Imtiaz, M., et al. (2019). High-throughput phenotyping enabled genetic dissection of crop lodging in wheat. Front. Plant Sci. 10:394. doi: 10.3389/fpls.2019.00394

Song, B., and Park, K. (2020). Verification of Accuracy of unmanned aerial vehicle (UAV) land surface temperature images using in-situ data. Remote Sensing 12:288. doi: 10.3390/rs12020288

Thorp, K., Thompson, A., Harders, S., French, A., and Ward, R. (2018). High-throughput phenotyping of crop water use efficiency via multispectral drone imagery and a daily soil water balance model. Remote Sensing 10:1682. doi: 10.3390/rs10111682

Wang, X., Singh, D., Marla, S., Morris, G., and Poland, J. (2018). Field-based high-throughput phenotyping of plant height in sorghum using different sensing technologies. Plant Methods 14:53. doi: 10.1186/s13007-018-0324-5

Wang, X., Thorp, K. R., White, J. W., French, A. N., and Poland, J. A. (2016). Approaches for geospatial processing of field-based high-throughput plant phenomics data from ground vehicle platforms. Transact. ASABE 59, 1053–1067. doi: 10.13031/trans.59.11502

Williamson, T. N., Dobrowolski, E. G., Meyer, S. M., Frey, J. W., and Allred, B. J. (2019). Delineation of tile-drain networks using thermal and multispectral imagery—Implications for water quantity and quality differences from paired edge-of-field sites. J. Soil Water Conserv. 74, 1–11. doi: 10.2489/jswc.74.1.1

Keywords: High-throughput phenotyping, unmanned aerial systems, canopy temperature, normalized difference vegetation index, ground cover, wheat

Citation: Wang X, Silva P, Bello NM, Singh D, Evers B, Mondal S, Espinosa FP, Singh RP and Poland J (2020) Improved Accuracy of High-Throughput Phenotyping From Unmanned Aerial Systems by Extracting Traits Directly From Orthorectified Images. Front. Plant Sci. 11:587093. doi: 10.3389/fpls.2020.587093

Received: 27 July 2020; Accepted: 30 September 2020;

Published: 21 October 2020.

Edited by:

Ankush Prashar, Newcastle University, United KingdomReviewed by:

Lea Hallik, University of Tartu, EstoniaJianfeng Zhou, University of Missouri, United States

Copyright © 2020 Wang, Silva, Bello, Singh, Evers, Mondal, Espinosa, Singh and Poland. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xu Wang, eHV3YW5nQGtzdS5lZHU=; Jesse Poland, anBvbGFuZEBrc3UuZWR1

Xu Wang

Xu Wang Paula Silva

Paula Silva Nora M. Bello4

Nora M. Bello4 Daljit Singh

Daljit Singh Byron Evers

Byron Evers Suchismita Mondal

Suchismita Mondal Francisco P. Espinosa

Francisco P. Espinosa Ravi P. Singh

Ravi P. Singh Jesse Poland

Jesse Poland