- 1Division of Computer Applications, Indian Council of Agricultural Research (ICAR)-Indian Agricultural Statistics Research Institute, New Delhi, India

- 2Division of Technology and Sustainable Agriculture, Indian Council of Agricultural Research (ICAR)-National Institute of Agricultural Economics and Policy Research, New Delhi, India

- 3Division of Plant Pathology, Wheat Pathology Laboratory, Indian Council of Agricultural Research (ICAR)-Indian Agricultural Research Institute, New Delhi, India

- 4Computer Services Centre, Indian Institute of Technology Delhi, New Delhi, India

- 5Division of Genetics, Indian Council of Agricultural Research (ICAR)-Indian Agricultural Research Institute, New Delhi, India

Wheat rust is a severe fungal disease that significantly impacts wheat crops, resulting in substantial losses in quality and quantity, often exceeding 50%. Timely and accurate estimation of disease severity in the field is critical for effective disease management. Early identification of rust at low severity levels can facilitate prompt implementation of control measures, potentially saving crops. This paper introduces an automated wheat rust severity stage estimation model based on the EfficientNet architecture and an attention mechanism. The Convolutional Block Attention Module (CBAM) was integrated into EfficientNet-B0 in place of the SE module to enhance feature extraction by considering channel and spatial information together. The proposed hybrid approach aims to identify rust disease severity accurately. The model is trained on an image dataset covering three major rust types (stripe, stem, and leaf) as well as healthy plants, captured under real-life field conditions. Each image is assigned to one of four severity stages: healthy, low, medium, or high. Experimental results show that the proposed model achieves a training accuracy of 99.51% and a testing accuracy of 96.68%. Moreover, comparative analysis against state-of-the-art CNN models shows that the proposed approach performs better. An Android application was also designed and developed to facilitate real-time classification of plant disease severity. The application incorporates the severity model, which is optimized for classification accuracy and rapid recognition.

1 Introduction

Wheat ranks among the primary staple crops globally, with over half of its production dedicated to human consumption, livestock feed, and processing. However, wheat-producing nations face formidable challenges from plant diseases and pests, jeopardizing agricultural sustainability and profitability. Among these, the rusts (stripe, leaf, and stem rust) stand out as particularly menacing fungal diseases, prevalent across almost all wheat-growing regions (Lu et al., 2017; Nigam and Jain, 2020). Left unchecked, rusts can mutate into virulent strains, leading to catastrophic crop failures. Conventional disease identification and severity assessment methods rely on manual visual inspection, which is inefficient, subjective, and labor-intensive (Bock et al., 2010; Mohanty et al., 2016; Arnal Barbedo, 2019; Atila et al., 2021). Recent advances in computer vision, artificial intelligence (AI), and deep learning offer promising opportunities to automate disease detection and severity assessment through image analysis. Remarkable achievements in AI-based plant disease classification reported in the literature (LeCun et al., 2015; Fuentes et al., 2021; Arnal Barbedo, 2019; Chin et al., 2023; Pavithra et al., 2023; Dheeraj and Chand, 2022, 2024) underscore the potential of these technologies in integrated disease management.

However, while significant progress has been made in plant disease detection, much of the research has primarily focused on disease type classification, leaving a critical gap in the accurate quantification of disease severity. This gap limits experts’ ability to recommend optimal pesticide applications, compromising disease control efficacy and environmental sustainability. Thus, there is a growing demand for automated disease severity classification using AI-driven approaches (Esgario et al., 2020; Wspanialy and Moussa, 2020; Hu et al., 2021; Nigam et al., 2021). Disease severity, a crucial parameter for assessing the intensity of plant diseases, is traditionally quantified by comparing the diseased area of a plant part (such as leaves, fruits, or stems) to the total area of the affected part, based on standardized severity grading systems (Bock et al., 2022; Liu et al., 2020). For wheat stripe rust, severity evaluation is essential for effective monitoring, but it has primarily been carried out through visual observation, a method that requires experienced assessors and is both time-consuming and prone to errors (Jiang et al., 2022). Accurately estimating lesion areas according to severity standards is challenging, further complicating the process.

In contrast, disease incidence, which only requires determining whether a plant part is diseased or not, is easier to assess but does not provide a precise estimate of severity. The relationship between disease incidence and severity is influenced by factors such as lesion distribution, wheat plant resistance to Puccinia striiformis (Pst), and overall incidence levels (Chen et al., 2014), limiting the practical utility of incidence-based severity estimation methods. Recent machine learning advancements have provided some solutions for severity estimation. For instance, Wang et al. (2017) developed a model to predict disease severity at early, medium, and final stages, achieving notable accuracy. Similarly, Liang et al. (2019) introduced the PD2SE-Net model for horticultural crops, while Zhao et al. (2021) proposed SevNet, which uses ResNet and CBAM to classify tomato disease severity with impressive accuracies of 97.59% and 95.37%, respectively.

Despite these advances, research on cereal crop severity estimation remains limited due to the scarcity of image datasets. Notable exceptions include the BLSNet model for rice (Chen et al., 2021) and models for maize common rust severity prediction by Sibiya and Sumbwanyambe (2021) and Haque et al. (2022). The classification of wheat yellow rust severity is particularly underexplored: only one model, Yellow-Rust-Xception, has been proposed for differentiating yellow rust stages, achieving a modest 91% accuracy (Hayit et al., 2021). In addition, Jiang et al. (2022) and Jiang et al. (2023) developed machine learning models for severity assessment of wheat stripe rust. However, no deep learning-based model has been reported in the literature that estimates the severity of all three wheat rusts. This highlights the pressing need for further research and development to enhance the precision and reliability of disease severity assessments, particularly in wheat crops.

Moreover, challenges persist regarding the availability of public image databases, predominantly comprising lab-captured images rather than real-world field scenarios (Mi et al., 2020; Nigam et al., 2023). Hence, addressing these limitations is crucial for robust automated disease severity detection systems trained on datasets collected from natural field conditions.

Therefore, this paper focuses on the critical task of wheat disease severity classification, addressing the challenges associated with identifying and categorizing disease symptoms, understanding their impact on crop health, and exploring effective management strategies. The study emphasizes early detection of low-severity stages to mitigate crop loss and support sustainable agricultural practices. The main contributions of this research are summarized as follows:

● A comprehensive Wheat Disease Severity Dataset (WheatSev) was created, comprising 5,438 real-field images of wheat crops affected by stripe rust, leaf rust, and stem rust across various growth stages.

● A convolutional block attention module (CBAM) was integrated into the EfficientNet B0 architecture to classify wheat disease severity into three levels: low, medium, and high. The CBAM-EfficientNet model demonstrated superior classification performance compared to several established architectures, including VGGNet19, ResNet152, MobileNetV2, DenseNet169, InceptionV3, and the original EfficientNet B0.

● The proposed model significantly improved classification performance in terms of accuracy, recall, precision, and F1 score by leveraging the combined strengths of EfficientNet B0 and CBAM layers. This approach effectively addressed technical challenges such as vanishing gradients and computational complexity while enhancing the robustness of the model.

● Robust data augmentation techniques were employed to increase data diversity, mitigating the risk of overfitting and ensuring the model’s reliability in classifying diverse real-world samples.

● The model’s efficiency was validated through extensive hyperparameter tuning, comparative analyses with state-of-the-art architectures, and Grad-CAM visualizations. Experimental results underscored the effectiveness of the CBAM-EfficientNet B0 model for accurate wheat disease severity estimation.

2 Materials and methods

This section delineates the tools, techniques, and procedures employed in the present study, including the acquisition of the image dataset and the proposed framework incorporating an attention module within the EfficientNet architecture.

2.1 Image dataset

Images depicting wheat rusts at various severity stages were captured in the fields of ICAR-Indian Agricultural Research Institute, New Delhi, India, over three consecutive crop seasons. Image acquisition took place during sunny conditions, typically between 11:00 am and 1:00 pm, at ten-day intervals following the initial onset of disease symptoms. This timing ensured consistent leaf growth stages across all captured images.

A handheld mobile camera with a 20-megapixel resolution and a 25 mm wide-angle lens was used for image acquisition. Mobile devices were deliberately used instead of professional cameras to mirror the tools commonly employed by farmers in similar scenarios. The severity estimation dataset contains images of three major wheat rust diseases, each categorized by plant pathologists into three severity levels (low, medium, and high), together with a healthy class (Figure 1). Images were captured by directing the camera lens toward regions of the leaves exhibiting disease symptoms at various growth stages. Subsequently, pathologists labeled the severity levels based on the percentage of disease symptoms present. Images labeled low severity exhibited symptoms ranging from 0 to 25 percent, those labeled medium severity ranged from 25 to 50 percent, and high severity images displayed more than 50 percent disease symptoms. Images with no disease symptoms were considered healthy, as shown in Figure 1.
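The labeling rule described above can be written down as a small function. This is only a sketch of the thresholds stated in the text; the function name `severity_label` is hypothetical, and the handling of the exact boundary values (25 and 50 percent) is an assumption, since the text does not say which class owns them.

```python
def severity_label(percent_infected: float) -> str:
    """Map the percentage of visible disease symptoms to a severity class.

    Assumption: exactly 25% is labeled "low" and exactly 50% "medium";
    the source gives the ranges 0-25, 25-50 and >50 without boundary rules.
    """
    if not 0.0 <= percent_infected <= 100.0:
        raise ValueError("percent_infected must lie in [0, 100]")
    if percent_infected == 0.0:
        return "healthy"  # no disease symptoms at all
    if percent_infected <= 25.0:
        return "low"
    if percent_infected <= 50.0:
        return "medium"
    return "high"
```

In the study itself the labels were assigned by plant pathologists, so a function like this would serve only as a consistency check on expert annotations.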

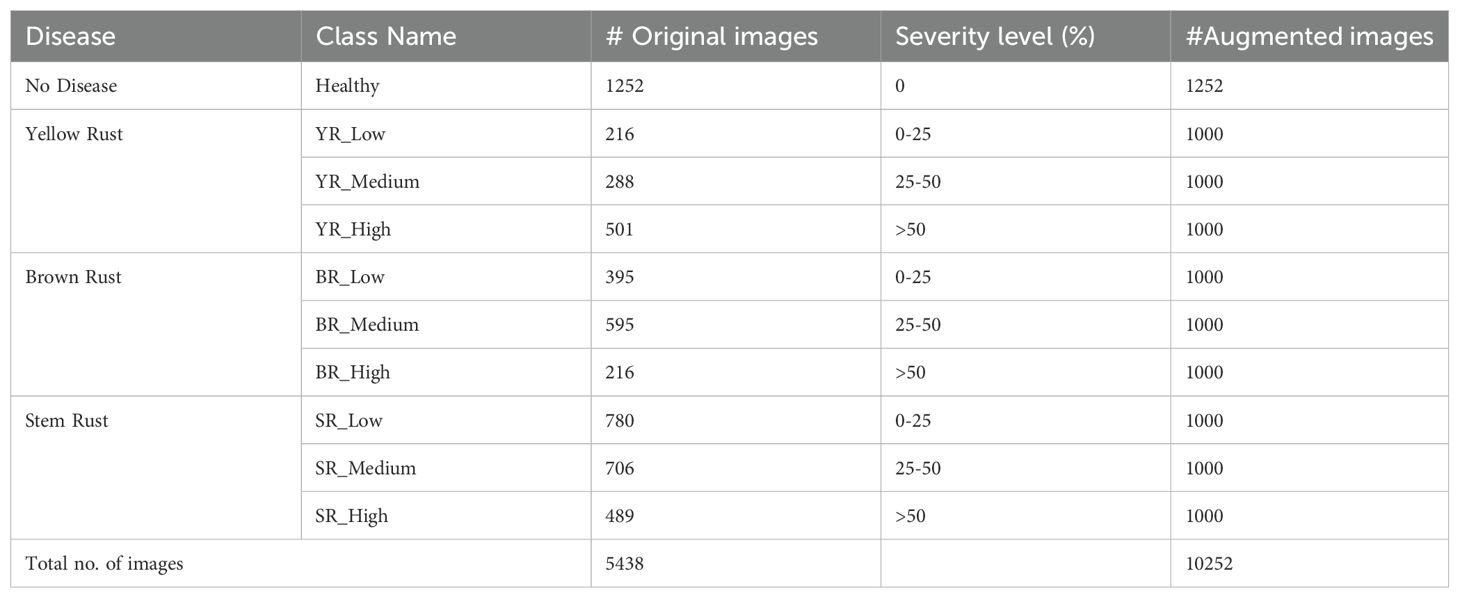

The primary emphasis of this study lies in low-level severity image classification to facilitate timely disease detection and mitigate crop loss. Initially, the severity stage estimation dataset comprised 5438 images distributed across ten classes, including three rust severity stages for each disease class and a class representing healthy leaves (refer to Table 1). To enhance classification performance and achieve balance among disease classes, the original dataset underwent augmentation, resulting in a total of 10252 images, with 1000 images allocated to each disease-infected class.

2.2 Data pre-processing and augmentation

Prior to model training, image pre-processing and augmentation were performed to enhance model performance. First, duplicate, out-of-focus, noisy, or blurry photos were removed from the dataset to ensure data quality. Subsequently, the Augmentor Python package was used to augment the images with techniques such as zooming, flipping, and rotating, which diversified the dataset and supported robust model training. Each severity stage class was augmented to contain 1000 images, ensuring balanced representation across classes (refer to Table 1). Additionally, the images were resized to 256 × 256 pixels to accommodate hardware constraints, optimize computational efficiency, and enhance the model’s generalization and performance. This pre-processing and augmentation pipeline laid the groundwork for model training on the augmented dataset.
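As a rough illustration of the class-balancing arithmetic, the sketch below computes how many augmented images each class would need to reach the 1000-image target. The helper name `augmentation_plan` and the two disease-class counts are hypothetical (the real per-class counts are in Table 1); only the healthy count of 1252 and the total of 10252 come from the text.

```python
def augmentation_plan(class_counts, target=1000):
    """Number of augmented images each class needs to reach `target`.

    Classes that already hold at least `target` images are left untouched.
    """
    return {name: max(0, target - count) for name, count in class_counts.items()}


# Hypothetical counts for illustration only; just the healthy count is from the text.
example_counts = {"stem_rust_low": 430, "stem_rust_medium": 615, "healthy": 1252}
plan = augmentation_plan(example_counts)

# Consistency check on the reported total: 9 disease classes x 1000 + 1252 healthy.
augmented_total = 9 * 1000 + 1252
```

The last line reproduces the reported size of the augmented dataset (10252 images), which suggests that the healthy class was kept at its original size.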

2.3 Framework overview and structure

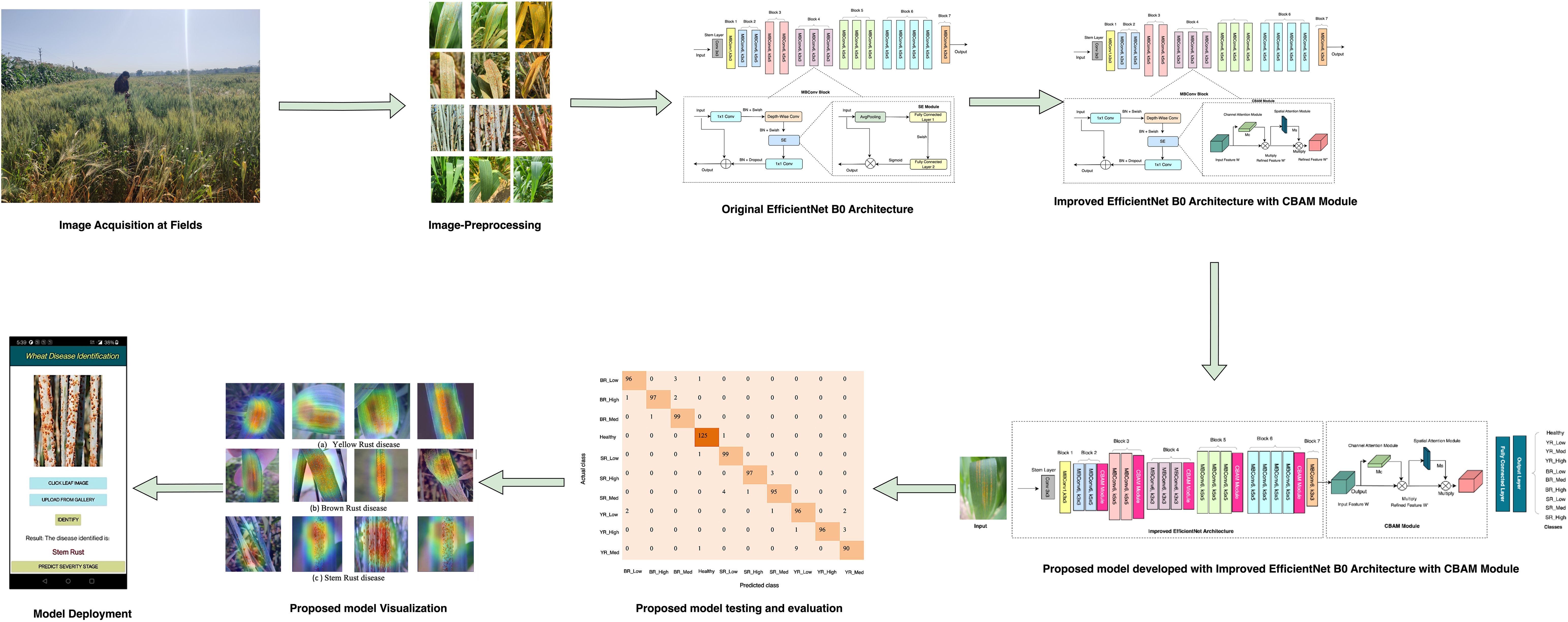

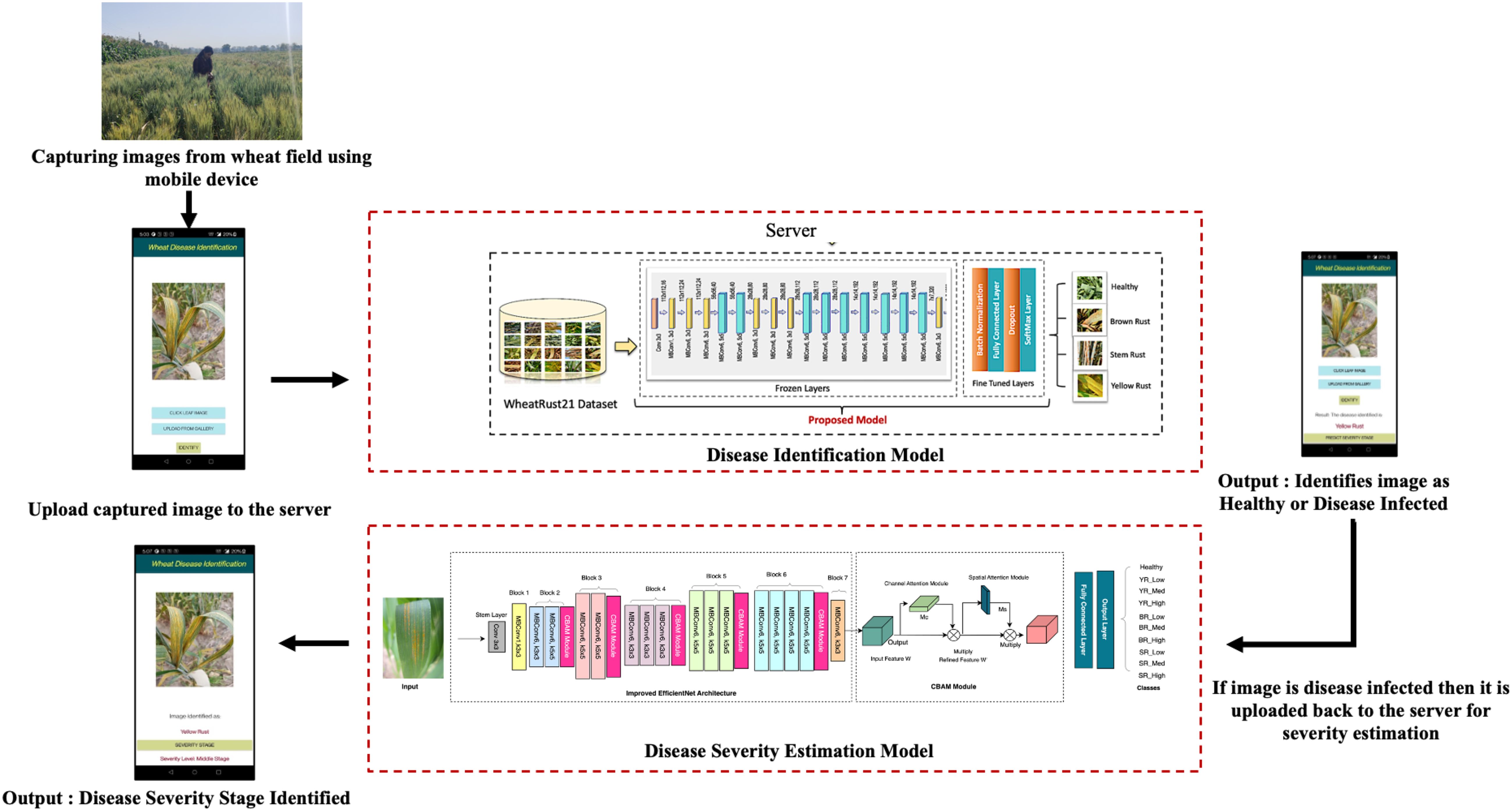

The research methodology is depicted in Figure 2, which shows the flowchart of the wheat disease identification and severity stage estimation framework. Initially, images were captured from real-world wheat crop fields using mobile devices. Domain experts labeled each image with the corresponding type of wheat rust and its severity level, organizing them into distinct folders. Subsequently, image processing techniques, including resizing, filtering, and noise reduction, were applied to refine the raw images. Augmentation techniques, such as random rotation, translation, flipping, and zooming, were employed to diversify the image dataset prior to model training and validation. Two datasets were created: the original dataset containing 5438 images and an augmented dataset comprising 10252 images, both split into train, test, and validation sets in an 80:10:10 ratio. First, the performance of the fine-tuned EfficientNet B0 model (Tan and Le, 2020) was evaluated on both datasets. Then, to enhance performance, the proposed model was developed by integrating the CBAM module (Woo et al., 2018) into the fine-tuned EfficientNet B0 model. The channel and spatial modules of the attention mechanism focus on key disease symptoms, aiding in determining the severity level of wheat rust. The subsequent sections elaborate on each phase of the framework.
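The 80:10:10 partition can be sketched in a few lines. This is a minimal example under assumptions: the function name `split_80_10_10`, the fixed seed, and the simple shuffle-then-slice strategy are illustrative, and the paper does not state whether the split was stratified by class.

```python
import random


def split_80_10_10(items, seed=0):
    """Shuffle `items` and split them into train, validation and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)   # reproducible shuffle
    n_train = len(items) * 8 // 10       # 80% for training
    n_val = len(items) // 10             # 10% for validation
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]       # remainder (about 10%) for testing
    return train, val, test
```

Applying the function to each class folder separately would preserve the class balance in all three subsets.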

2.4 Architectural overview of EfficientNet and attention mechanism integration

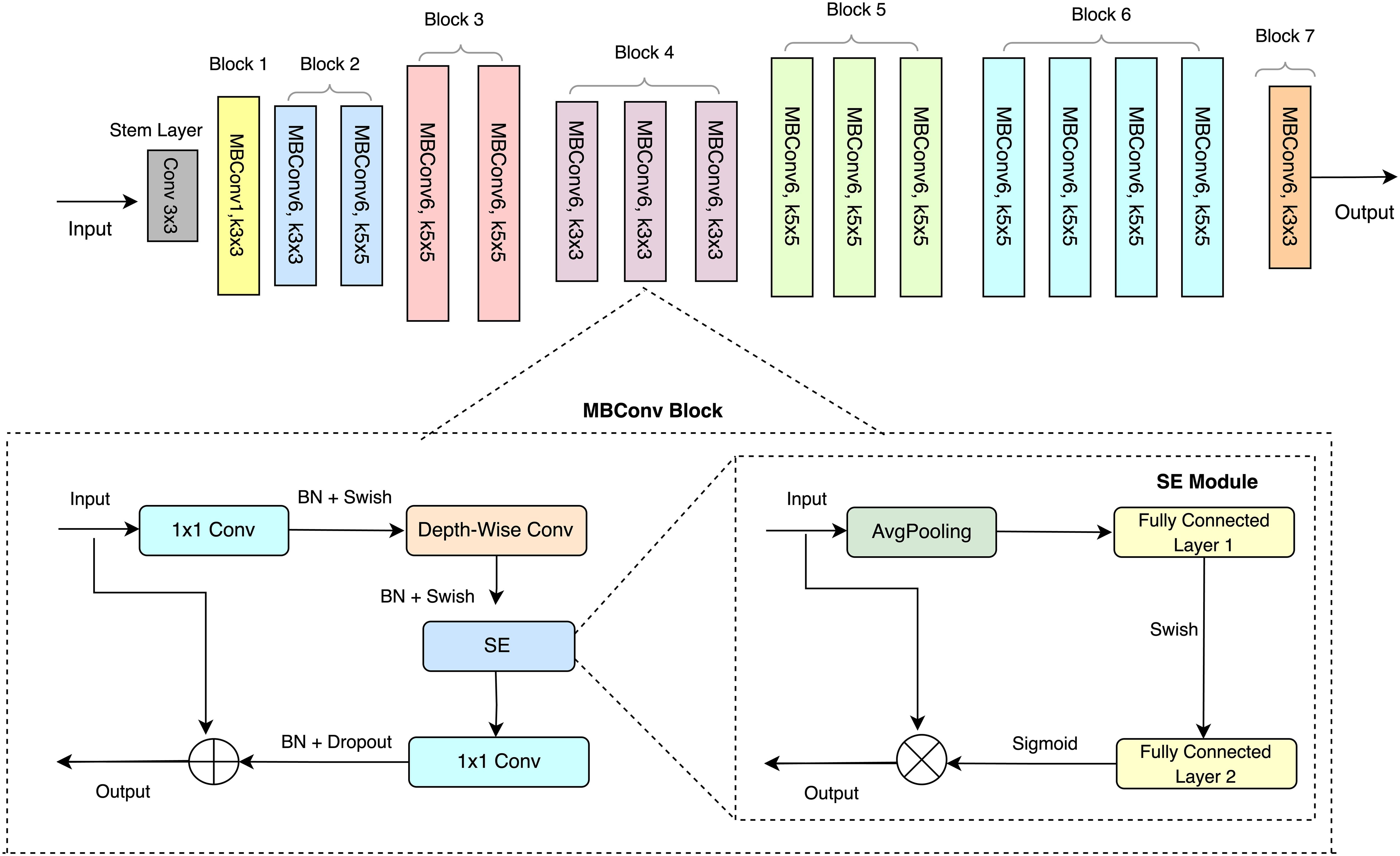

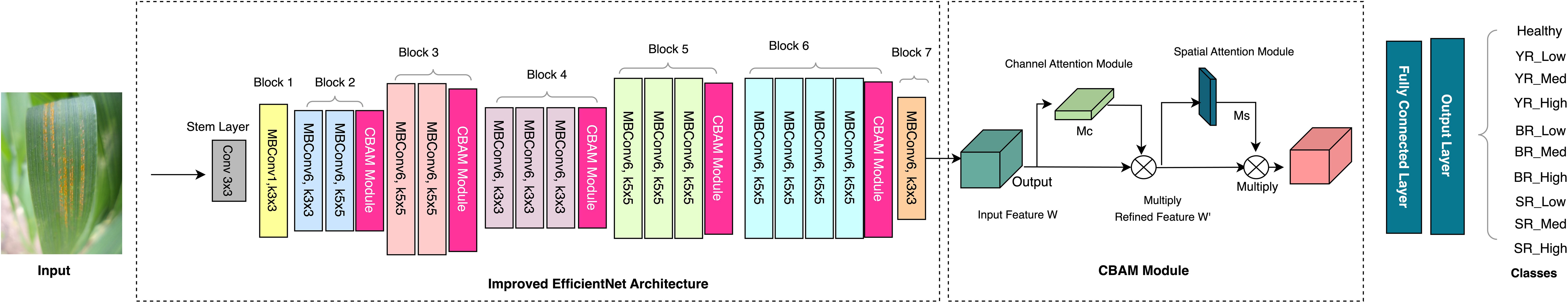

EfficientNet B0 serves as the baseline model of the EfficientNet family, which comprises eight variants (B0-B7) (Figure 3). The EfficientNet B0 architecture achieves high accuracy and computational efficiency through a compound scaling approach, as described by Atila et al. (2021). The EfficientNet architecture employs the Mobile Inverted Bottleneck Convolution (MBConv) as its primary building block, introduced by Sandler et al. (2018) and illustrated in Figure 3. The MBConv block comprises three components: a 1 × 1 convolution (1 × 1 Conv), a depth-wise convolution (Depth-wise Conv), and a Squeeze-and-Excitation (SE) module. The output of the preceding layer is first passed to the MBConv block, where the number of channels is expanded using a 1 × 1 Conv. Subsequently, a 3 × 3 Depth-wise Conv reduces the number of parameters, followed by a projection that compresses the channel count through another 1 × 1 Conv. A residual connection is then introduced between the block input and the output of the projection layer to enhance feature representation. The SE module, as shown in Figure 3, incorporates two key operations: squeeze and excitation. The squeeze operation is performed using global average pooling (AvgPooling), while the excitation operation involves two fully connected layers, the first followed by a Swish activation and the second by a Sigmoid activation. This design facilitates efficient parameter utilization while maintaining high performance. However, the SE module performs only channel-wise feature recalibration, emphasizing informative channels while suppressing less relevant ones. It therefore overlooks spatial context, which is critical for visual recognition tasks such as severity estimation, and this limitation negatively affected the model’s classification accuracy for severity estimation.
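To make the parameter saving of the depth-wise step concrete, the following sketch compares the weight count of a standard convolution with that of a depth-wise convolution followed by a 1 × 1 projection. The function names and the channel counts (96 in, 24 out) are illustrative assumptions rather than values taken from the paper, and bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depth-wise conv followed by a 1 x 1 projection (no bias)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv mixes channels and sets the output width
    return depthwise + pointwise


# Illustrative channel counts, chosen only for the example.
standard = standard_conv_params(3, 96, 24)         # 20736 weights
separable = depthwise_separable_params(3, 96, 24)  # 3168 weights
```

For these example sizes the factorized form needs roughly 6.5 times fewer weights than the standard convolution.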

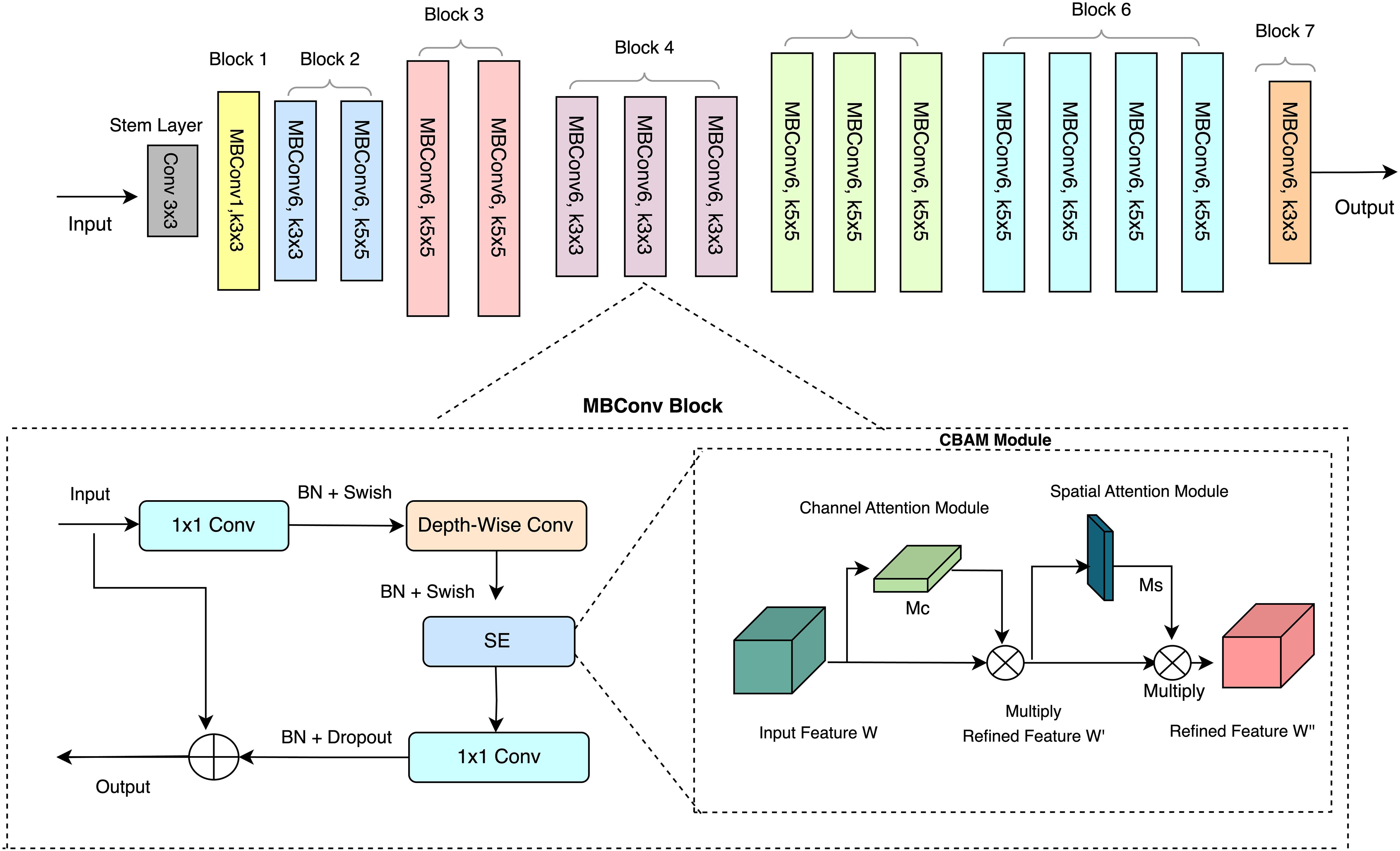

To address this, the Convolutional Block Attention Module (CBAM) was integrated into EfficientNet-B0 in place of the SE module to enhance feature extraction by considering both channel and spatial information. The modified network, referred to as EfficientNet-CBAM, is illustrated in Figure 4. The key modification is the replacement of the SE module in each MBConv layer with a CBAM module, allowing the network to retain vital spatial information alongside channel-specific features, particularly for identifying disease severity symptoms. Additionally, a CBAM module was introduced after the final convolutional layer: the feature maps produced by the final convolutional layer of EfficientNet B0 serve as input to this CBAM module, which refines the extracted features and improves the network’s classification performance (see Figure 4).

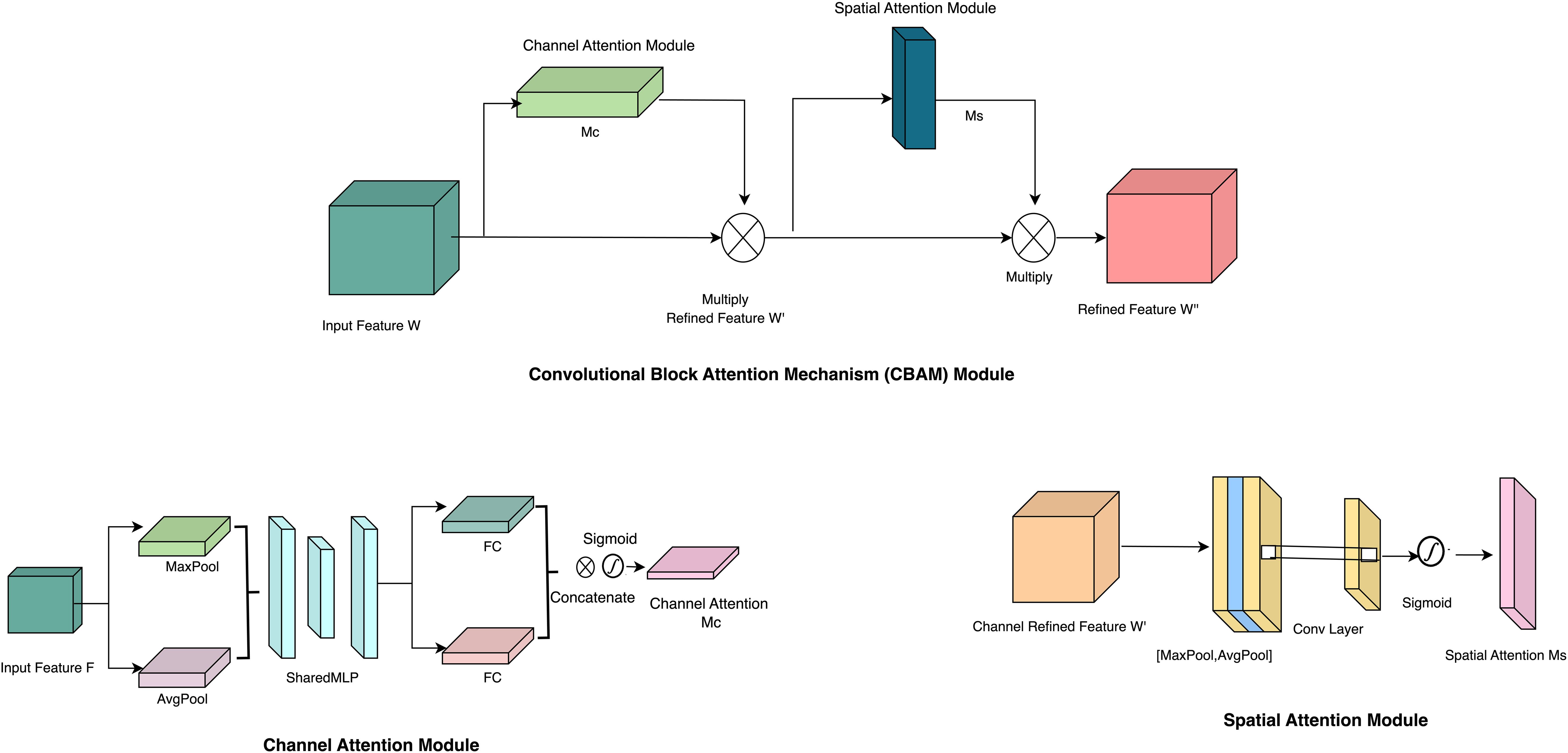

Attention mechanisms are widely used to augment feature extraction and boost model performance in image classification tasks (Woo et al., 2018; Wang et al., 2017). Our architecture incorporates a convolutional block attention module with two components: the Channel Attention Module (CAM) and the Spatial Attention Module (SAM) (Figure 5). These two modules work together to improve feature extraction and representation within the generated feature maps (Woo et al., 2018). The input feature map W, computed from the wheat rust-infected leaf image, is processed by the CAM to produce the channel attention map Mc, which highlights the most informative channels. Element-wise multiplication of Mc and W yields the refined feature map W’. Subsequently, W’ is processed by the SAM to generate the spatial attention map Ms, which selectively emphasizes significant image regions. Element-wise multiplication of Ms and W’ yields the final feature map W’’, which encapsulates the representation of the wheat rust image. These two steps correspond to Equations 1 and 2.
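The equations themselves are not reproduced in this text. Based on the description above and the standard CBAM formulation of Woo et al. (2018), they can be reconstructed as follows, where $\otimes$ denotes element-wise multiplication (with the attention maps broadcast to the shape of the feature map):

```latex
\begin{align}
W'  &= M_c(W) \otimes W   \tag{1} \\
W'' &= M_s(W') \otimes W' \tag{2}
\end{align}
```

Here $M_c$ is the channel attention map produced by the CAM and $M_s$ is the spatial attention map produced by the SAM.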

The channel attention mechanism of the CBAM module uses pooling operations to compress the feature map W along its spatial dimensions, emphasizing the channels that respond to disease symptoms while suppressing extraneous features. The spatial attention mechanism then identifies where the significant features are located after CAM processing. It compresses the feature map W’ along the channel axis and generates the spatial attention map Ms using the sigmoid activation function. This highlights critical features within specific image areas and enhances the intermediate features.
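The two attention steps can be illustrated with a small NumPy forward pass. This is a sketch under stated assumptions, not the authors' implementation: the weights are random rather than learned, the reduction ratio (16 to 4 channels), the 7 × 7 kernel (the size used by Woo et al., 2018), and all function names are illustrative choices.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """feat: (H, W, C). Returns Mc with shape (C,)."""
    avg = feat.mean(axis=(0, 1))   # global average pooling over space
    mx = feat.max(axis=(0, 1))     # global max pooling over space

    def shared_mlp(v):             # same two-layer MLP for both descriptors
        return np.maximum(v @ w1, 0.0) @ w2

    return sigmoid(shared_mlp(avg) + shared_mlp(mx))


def spatial_attention(feat, kernel):
    """feat: (H, W, C); kernel: (k, k, 2) with odd k. Returns Ms with shape (H, W)."""
    pooled = np.stack([feat.mean(axis=2), feat.max(axis=2)], axis=2)  # pool over channels
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(pooled, ((pad, pad), (pad, pad), (0, 0)))  # zero padding keeps H x W
    h, w = pooled.shape[:2]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[i:i + k, j:j + k, :] * kernel)
    return sigmoid(out)


def cbam(feat, w1, w2, kernel):
    refined = feat * channel_attention(feat, w1, w2)               # W' = Mc(W) * W
    return refined * spatial_attention(refined, kernel)[:, :, None]  # W'' = Ms(W') * W'


# Random stand-ins for a feature map and for learned weights (illustration only).
rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 8, 16))
w1 = 0.1 * rng.standard_normal((16, 4))
w2 = 0.1 * rng.standard_normal((4, 16))
kernel = 0.1 * rng.standard_normal((7, 7, 2))
out = cbam(feat, w1, w2, kernel)
```

Because both attention maps pass through a sigmoid, every element of the output is a scaled-down copy of the corresponding input element, which is how CBAM emphasizes some channels and locations relative to others.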

2.5 Proposed severity estimation framework

The proposed methodology employs transfer learning: a new model for disease severity stage identification is trained using the pre-trained EfficientNet B0 model as its foundation. The initial layers of EfficientNet B0 are retained, while the final layer is replaced with new layers. These new layers are then fine-tuned to classify infected leaves into ten distinct classes using the WheatSev dataset developed in this study, following the methodology of Too et al. (2019). The resulting model, WheatSevNet, is designed to classify the severity stages of wheat rust infections accurately by enhancing feature extraction and representation. It builds upon EfficientNet-B0, a widely used deep learning architecture known for its computational efficiency and high performance. However, the Squeeze-and-Excitation (SE) module of EfficientNet-B0, while effective for channel-wise recalibration, lacks spatial feature extraction capabilities. To overcome this limitation, WheatSevNet replaces the SE module within each MBConv block of EfficientNet-B0 with the Convolutional Block Attention Module (CBAM), as depicted in Figure 6, so that both spatial and channel-wise attention are incorporated. The CBAM module consists of two components: the Channel Attention Module (CAM), which selectively enhances significant channels using global average pooling, max pooling, a multi-layer perceptron (MLP), and a sigmoid activation function, and the Spatial Attention Module (SAM), which refines feature extraction by applying average and max pooling across the channel axis, followed by a convolutional layer and a sigmoid activation function. Additionally, a final CBAM layer is introduced after the last convolutional layer to further refine the feature maps before classification.

The model leverages transfer learning and fine-tuning, using pre-trained EfficientNet-B0 weights with the initial layers frozen while the later layers are optimized for severity classification. Further modifications include the addition of normalization layers, a fully connected (FC) layer, a dropout layer, and a convolutional layer, as depicted in Figure 6, for detecting and classifying the different severity stages of wheat rust. By integrating CBAM at multiple levels, WheatSevNet achieves enhanced feature extraction, capturing both disease-specific spatial structures and critical channel-wise characteristics, thereby improving classification performance over traditional EfficientNet-based approaches.

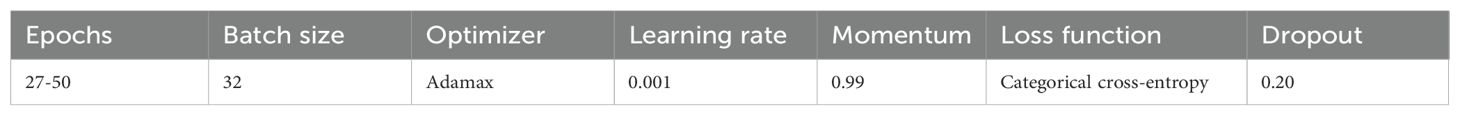

Table 2 presents the hyperparameters employed for the disease severity estimation models. Owing to early stopping, the number of training epochs ranged from 27 to 50, with a fixed learning rate of 0.001. Several measures were incorporated to prevent overfitting. First, data augmentation artificially increased the dataset size and provided more diverse training examples. The augmentation process applied random transformations such as rotation, flipping, scaling, and color adjustments to create new variations of the existing images, simulating real-world variation so that the model does not overfit to the specifics of the training set. Second, class balance was ensured by augmenting each severity stage class to contain 1000 images, so that the severity stage classes were equally represented. This balanced representation prevents the model from being biased towards any particular class and improves its ability to generalize across categories. Third, a dropout rate of 0.20 was used during training. Dropout randomly disables 20% of the units it is applied to during training, which discourages the model from relying too heavily on specific features and encourages it to learn more general patterns. Fourth, L2 regularization was applied to control the complexity of the model. Finally, early stopping with a patience of 3 was incorporated as an additional safeguard: the validation loss is monitored during training, and if it does not improve for three consecutive epochs, training is halted. This prevents the model from continuing to fit noise in the training data while still allowing it enough time to converge. Categorical cross-entropy served as the loss function during model training, and the batch size was fixed at 32. The subsequent subsections address the third objective of the study: validating the developed models and integrating them into mobile applications.
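The early-stopping rule can be illustrated with a short, framework-independent sketch. The function name `early_stopping_epoch` and the validation-loss values are hypothetical; the logic mirrors the usual patience rule (stop once the monitored loss has failed to improve for `patience` consecutive epochs) and is not taken from the authors' code.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the 1-based epoch at which training would stop.

    Training stops once the validation loss has not improved on its best
    value for `patience` consecutive epochs; otherwise it runs to the end.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            wait = 0          # improvement: reset the counter
        else:
            wait += 1         # no improvement this epoch
            if wait >= patience:
                return epoch
    return len(val_losses)


# Hypothetical validation losses: the best value is reached at epoch 3.
stop = early_stopping_epoch([0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50])
```

With these example losses training halts at epoch 6, three epochs after the best value. This kind of rule would explain why the reported number of epochs varies between 27 and 50 across models.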

2.6 Evaluating model performance and efficacy

During the experiment, various pre-trained classical deep-learning models were compared to the proposed model. These models included VGGNet (Simonyan and Zisserman, 2014), ResNet152 (He et al., 2016), InceptionV3 (Szegedy et al., 2016), MobileNetV2 (Sandler et al., 2018), and DenseNet121 (Huang et al., 2017). These models underwent parameter resetting before training, followed by modifications to the bottom layers of the pre-trained networks. The bottom layer was substituted with a new SoftMax and output layers containing ten severity stage classes from the datasets.

2.7 Experimental implementation

The experimentation was conducted on a robust DGX server featuring GPU capabilities, with computations executed using the Keras and TensorFlow frameworks. The system has Ubuntu as the operating system, supported by an Intel® Xeon® CPU. All computationally intensive tasks were handled by the NVIDIA Tesla V100-SXM2 GPU, boasting ample memory resources of 528 GB (refer to Table 3).

2.8 Evaluation metrics

The accuracy of classification predictions is assessed through a confusion matrix, which is used to analyze the correspondence between the predicted and actual labels for the individual classes of a classification model. Precision, accuracy, F1 score, and recall are also used to assess the performance of our model. Precision is the ratio of true positives to all positive predictions, while accuracy is the proportion of correct predictions relative to the total number of predictions. Recall, on the other hand, measures the ratio of true positives to all actual positive cases, that is, true positives plus false negatives (Hossin and Sulaiman, 2015). The F1 score is the harmonic mean of precision and recall, providing a balanced evaluation of model performance (Equations 3–6).
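The equations are not reproduced in this text. The standard definitions are given below, with TP, TN, FP, and FN denoting the true positive, true negative, false positive, and false negative counts for a given class; the numbering (3) to (6) is an assumption about the original order.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \tag{3} \\
\text{Precision} &= \frac{TP}{TP + FP} \tag{4} \\
\text{Recall}    &= \frac{TP}{TP + FN} \tag{5} \\
\text{F1 score}  &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{6}
\end{align}
```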

For a given severity stage class, the “true positive” (TP) count is the number of images of that class that are correctly assigned to it. The “true negative” (TN) count is the number of images from all other classes that are correctly not assigned to it. The “false negative” (FN) count is the number of images of that class that are wrongly assigned to a different class, while the “false positive” (FP) count is the number of images from other classes that are incorrectly assigned to it. Finally, the predictive performance of the proposed model is summarized by the F1 score.
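The per-class counts and the derived metrics can be computed directly from a confusion matrix, as the sketch below shows. The function name `per_class_metrics` and the 3 × 3 matrix are hypothetical and serve only to illustrate the definitions; they are not results from the study.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of images of true class i predicted as class j.

    Returns (report, accuracy), where report[k] holds the TP/FP/FN/TN counts
    and the precision, recall and F1 score of class k.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    report = {}
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # class k images sent elsewhere
        fp = sum(cm[i][k] for i in range(n)) - tp   # other images assigned to k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[k] = {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
                     "precision": precision, "recall": recall, "f1": f1}
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return report, accuracy


# Hypothetical confusion matrix for three classes (rows: actual, columns: predicted).
toy_cm = [[8, 2, 0],
          [1, 7, 2],
          [0, 1, 9]]
report, accuracy = per_class_metrics(toy_cm)
```

For the ten-class problem in this study the same function would be applied to the 10 × 10 confusion matrix, yielding one precision, recall, and F1 value per severity stage class.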

3 Results and discussion

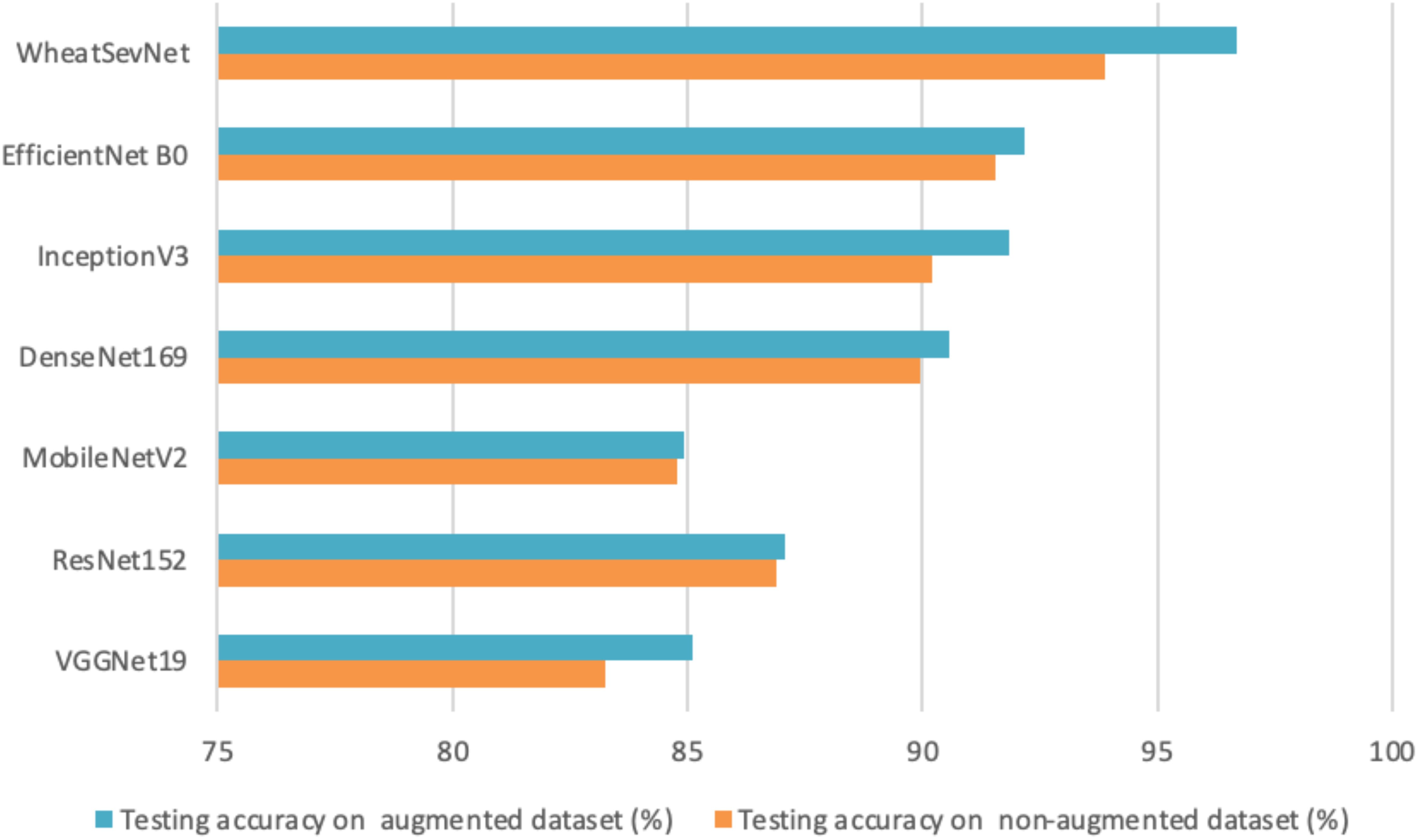

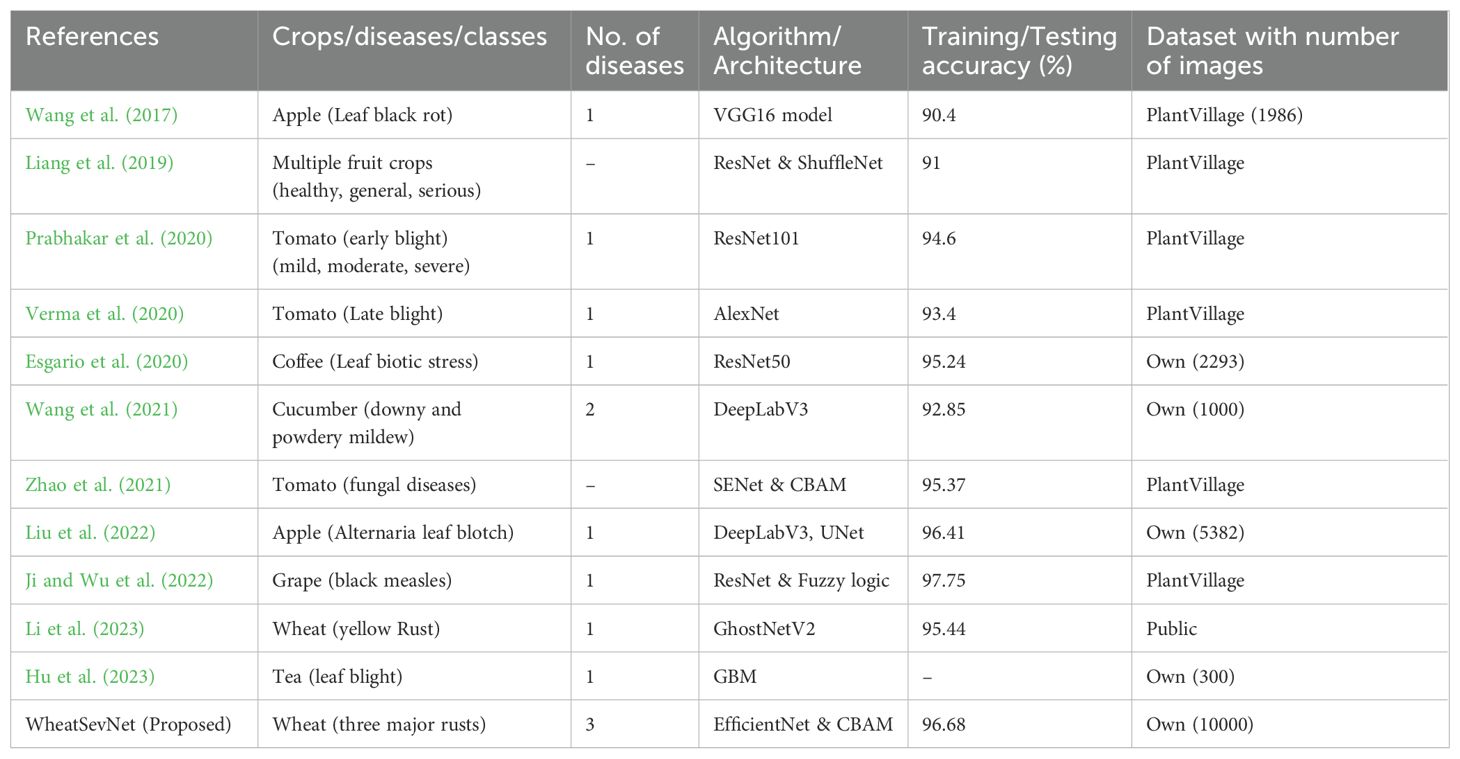

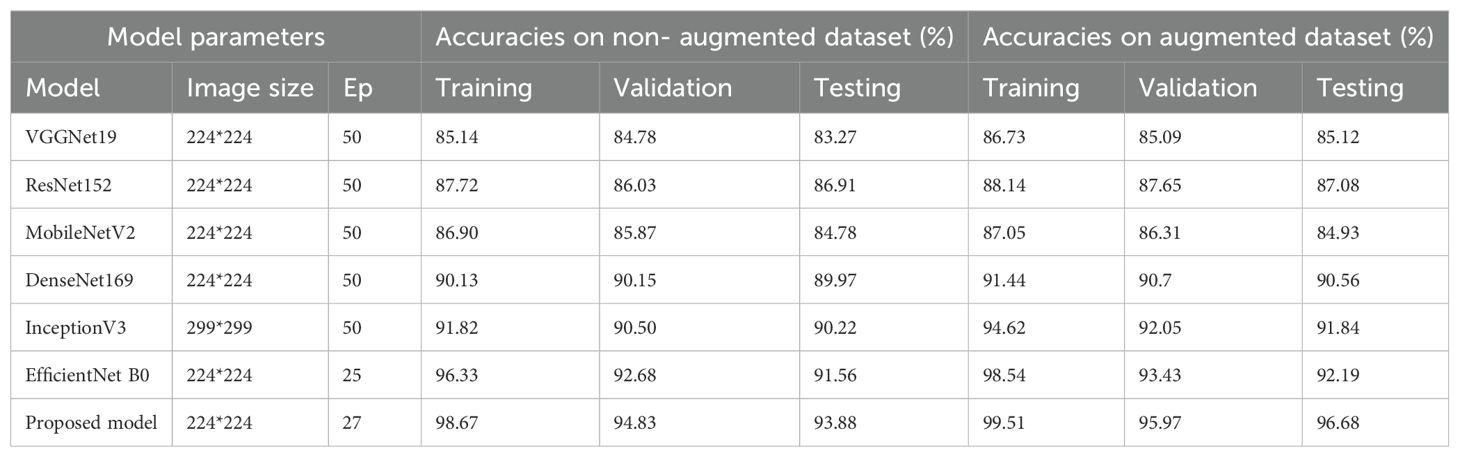

The experiment aimed to estimate the severity stages of all three wheat rusts using the image dataset. The wheat disease severity estimation model was built on the EfficientNet architecture as the base model, with the Convolutional Block Attention Module (CBAM) integrated into the base network. The model was evaluated against state-of-the-art CNN models and a plain fine-tuned EfficientNet B0 model, as outlined in Table 4. The proposed severity model achieved the highest test accuracy, reaching 93.88% on the non-augmented dataset and 96.68% on the augmented dataset. Comparing EfficientNet B0 with EfficientNet B0-CBAM in Table 4 shows a notable improvement in disease severity identification once the attention mechanism is integrated into the model.

Table 4. Performance comparison of State-of-the-art CNN models with proposed severity estimation model.

In the absence of an attention mechanism in EfficientNetB0, the overall testing accuracy on the WheatSev dataset was recorded at 92.19%. However, upon integrating the CBAM module into EfficientNetB0, the overall testing accuracy markedly increased to 96.68%, as illustrated in Figure 7. Analysis of the disease severity stage classification results revealed that the performance enhancement observed in EfficientNetB0, when augmented with the attention mechanism, could be attributed to the spatial attention module of the CBAM module, which adeptly locates key information with greater accuracy. Furthermore, the channel attention module of CBAM exhibits the ability to amplify important features while suppressing irrelevant ones, thereby yielding a more refined feature representation. Consequently, it can be inferred that the incorporation of the CBAM module into the base model effectively contributes to improving the model’s performance in identifying the severity level of wheat rust diseases.

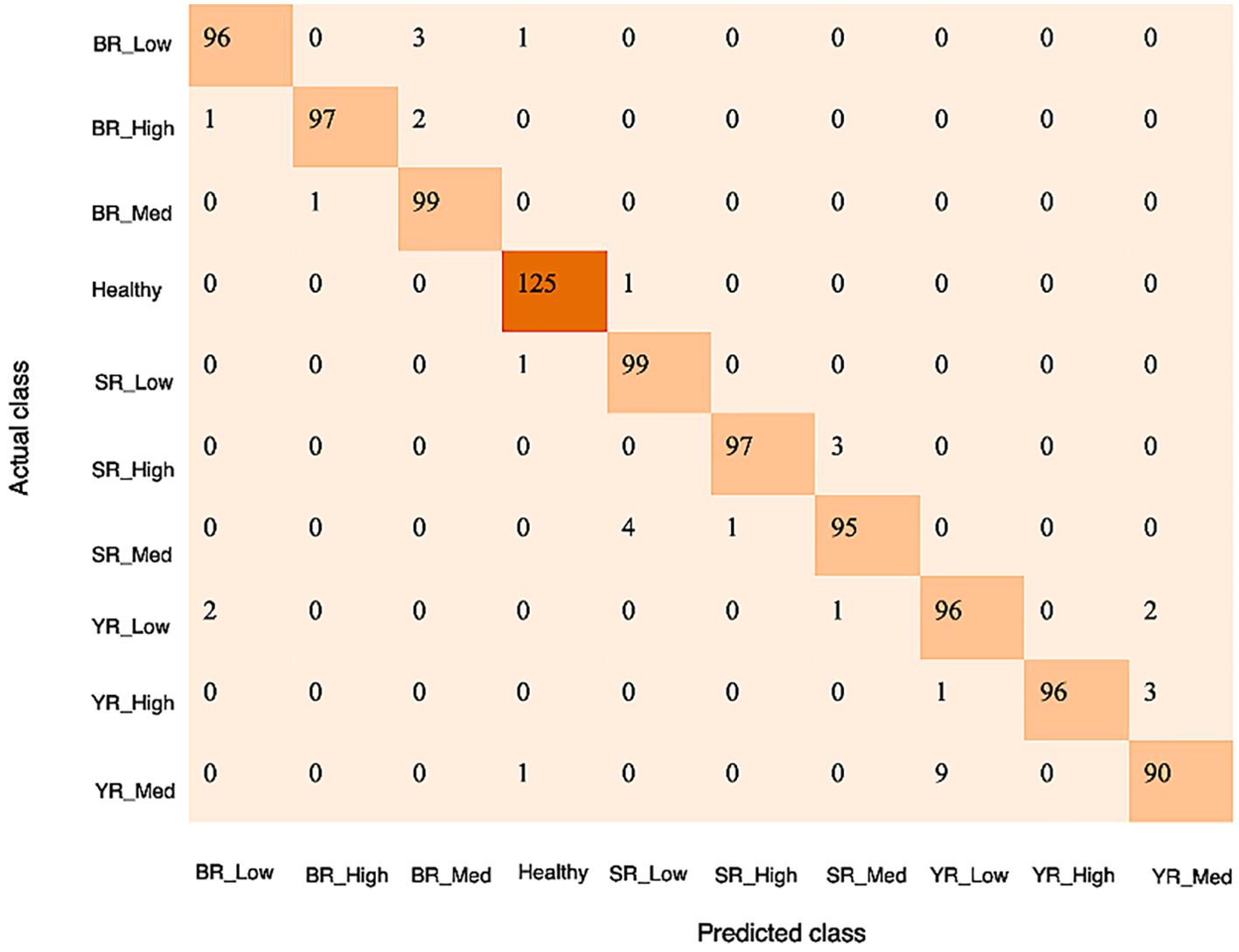

3.1 Confusion matrix and various performance metrics

Figure 8 illustrates the confusion matrix of the severity estimation model, showing actual and predicted labels for the ten classes of the augmented dataset. The numbers along the diagonal are correctly identified images, while those off the diagonal are misclassifications (Ting, 2017). Specifically, among the four low-severity images of brown rust, two were erroneously identified as medium severity and one as healthy (Figure 8). Similar misclassifications occur in the other severity stages of brown rust, with high-severity and medium-severity images misclassified as medium and low, respectively. Misclassifications were also observed among the stem rust and yellow rust severity stages.

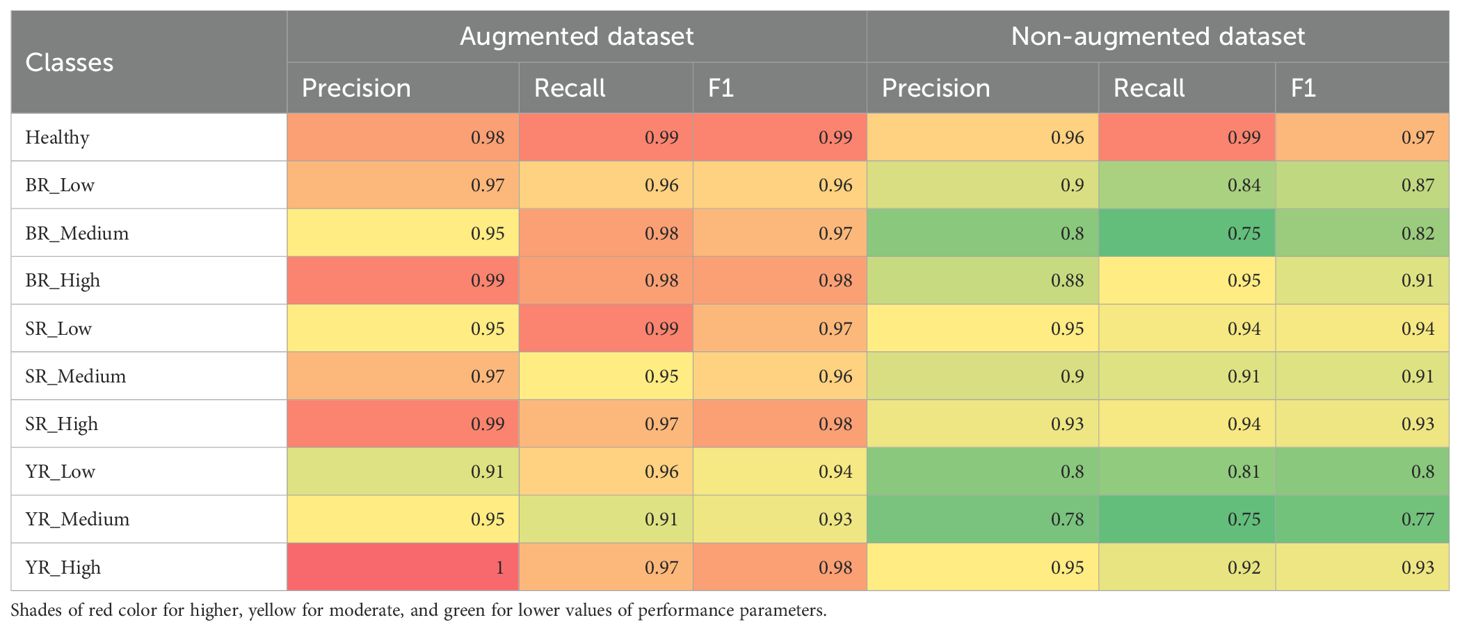

Analysis of the confusion matrix of EfficientNetB0 embedded with CBAM showed that rust spread on the upper surface of the leaf leads to significant confusion between the low and medium severity stages of the disease. Table 5 presents the classification report derived from the confusion matrices, including the precision, recall, and F1 score of the proposed disease severity estimation model. The closer the F1 score is to one, the better the model. On the augmented dataset, the average precision, recall, and F1 score for identifying severity stages are 97% for brown rust and stem rust and 95% for yellow rust.

Table 5 shows that the augmented dataset yields better results than the non-augmented dataset across all diseases and severity categories. However, for stem rust, all performance metrics on the non-augmented dataset exceed 90% across all stages, possibly because stem rust has easily identifiable features. Conversely, for brown and yellow rust, performance on the non-augmented dataset is notably inferior to that on the augmented dataset. Another noteworthy finding is the higher classification accuracy for healthy leaves, which can be attributed to (i) the larger number of images (1252) and (ii) the absence of severity sub-classes for healthy leaves. Although accuracy improves as the disease stage advances, precision exceeds 90% for all rust types even at the low stage. For the augmented dataset, precision reaches 97% for brown rust and 91% for yellow rust.

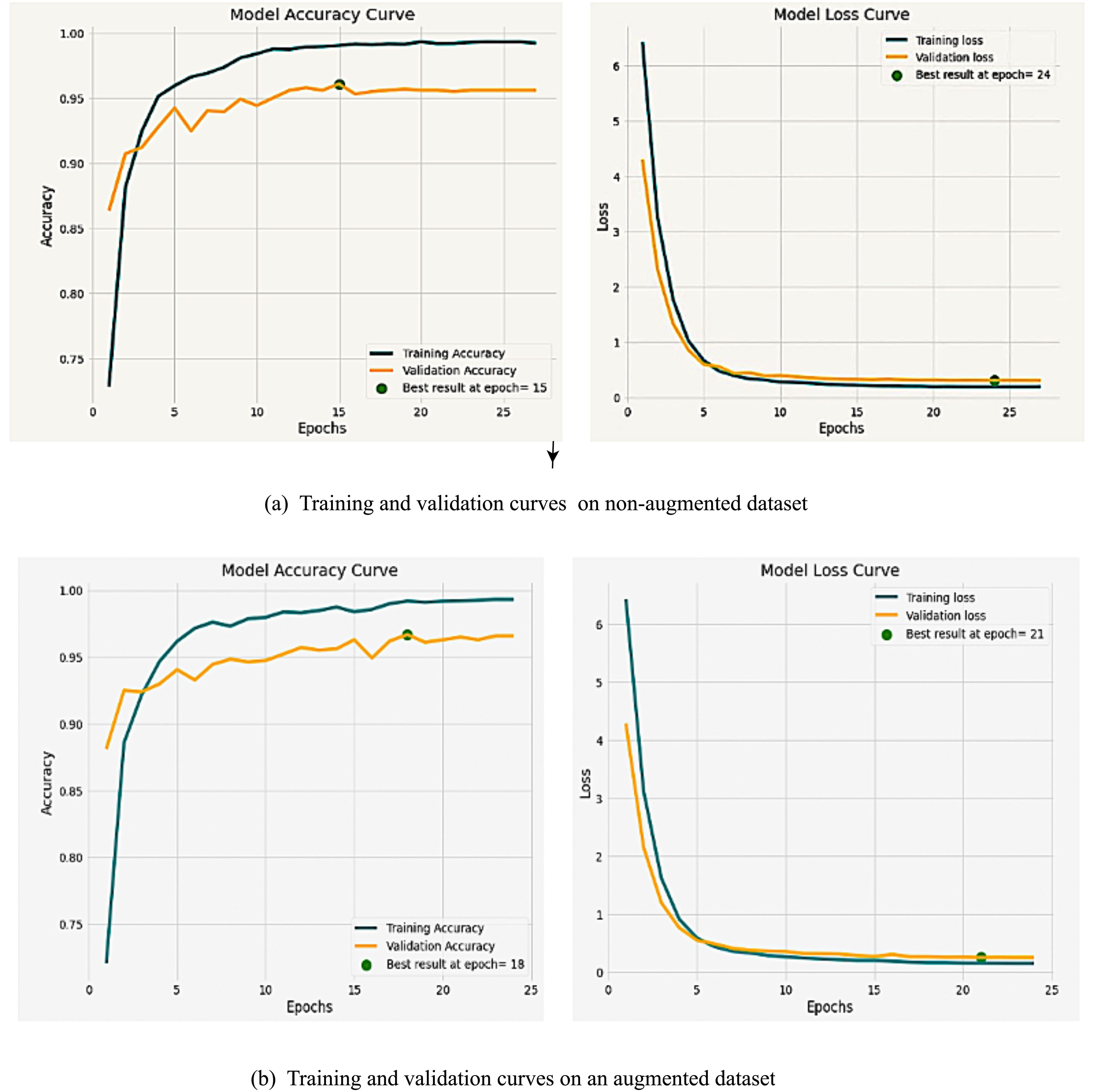

3.2 Model accuracy and loss curves

Figure 9 depicts the training and validation curves for the wheat severity estimation model, offering insight into the learning process. The accuracy curves indicate that the proposed severity model fits the data well (Hossin and Sulaiman, 2015). These findings underscore the model’s efficacy in automatically identifying wheat rust severity stages from images.

Figure 9. Training and validation curves for severity estimation model on (a) non-augmented dataset (b) augmented dataset.

Overall, the proposed models prove useful for identifying diseases at a low severity level, as evidenced by the accurate identification of most images in the low stages of rust. This early severity assessment holds promise for crop preservation and can significantly reduce crop loss.

3.3 Visualization of disease symptoms and model interpretability

Model interpretability was examined using Grad-CAM (Selvaraju et al., 2020). Figure 10 shows that the proposed severity estimation model focuses on the features and symptoms that matter for identifying the severity level of each type of wheat rust. The activation maps highlight the regions of the input test images that drive the severity estimate. They illustrate how the model directs its attention towards the areas where symptoms are most noticeable, which helps identify the disease at a low stage of severity. The attention mechanism was found to improve the model’s ability to locate the relevant symptoms accurately.
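The core Grad-CAM computation can be sketched in a few lines: each feature map of the last convolutional layer is weighted by the spatial average of its gradient with respect to the target class score, the weighted maps are summed, and a ReLU keeps only positive evidence. The sketch below uses plain Python lists and toy numbers. In practice the activations and gradients would come from the trained network through a deep learning framework, so the function name `grad_cam` and all values here are assumptions for illustration only.

```python
def grad_cam(activations, gradients):
    """Combine feature maps into a Grad-CAM heatmap.

    activations, gradients: lists of K feature maps, each an H x W nested
    list. gradients[k][i][j] is the derivative of the target class score
    with respect to activations[k][i][j].
    """
    num_maps = len(activations)
    height = len(activations[0])
    width = len(activations[0][0])

    # Channel weights: global average pooling of the gradients.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]

    # Weighted sum over channels, followed by ReLU.
    cam = [
        [
            max(0.0, sum(weights[k] * activations[k][i][j] for k in range(num_maps)))
            for j in range(width)
        ]
        for i in range(height)
    ]

    # Scale to [0, 1] for display, unless the map is all zeros.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Toy example with two 2 x 2 feature maps (made-up numbers).
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 0 supports the target class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 argues against it
]

heatmap = grad_cam(activations, gradients)
print(heatmap)
```

The resulting low-resolution map is normally upsampled to the input image size and overlaid on the leaf image to produce visualizations like those in Figure 10.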

Figure 10. GradCAM visualization of the (a) Yellow rust (b) Brown rust (c) Stem rust diseases at low severity level.

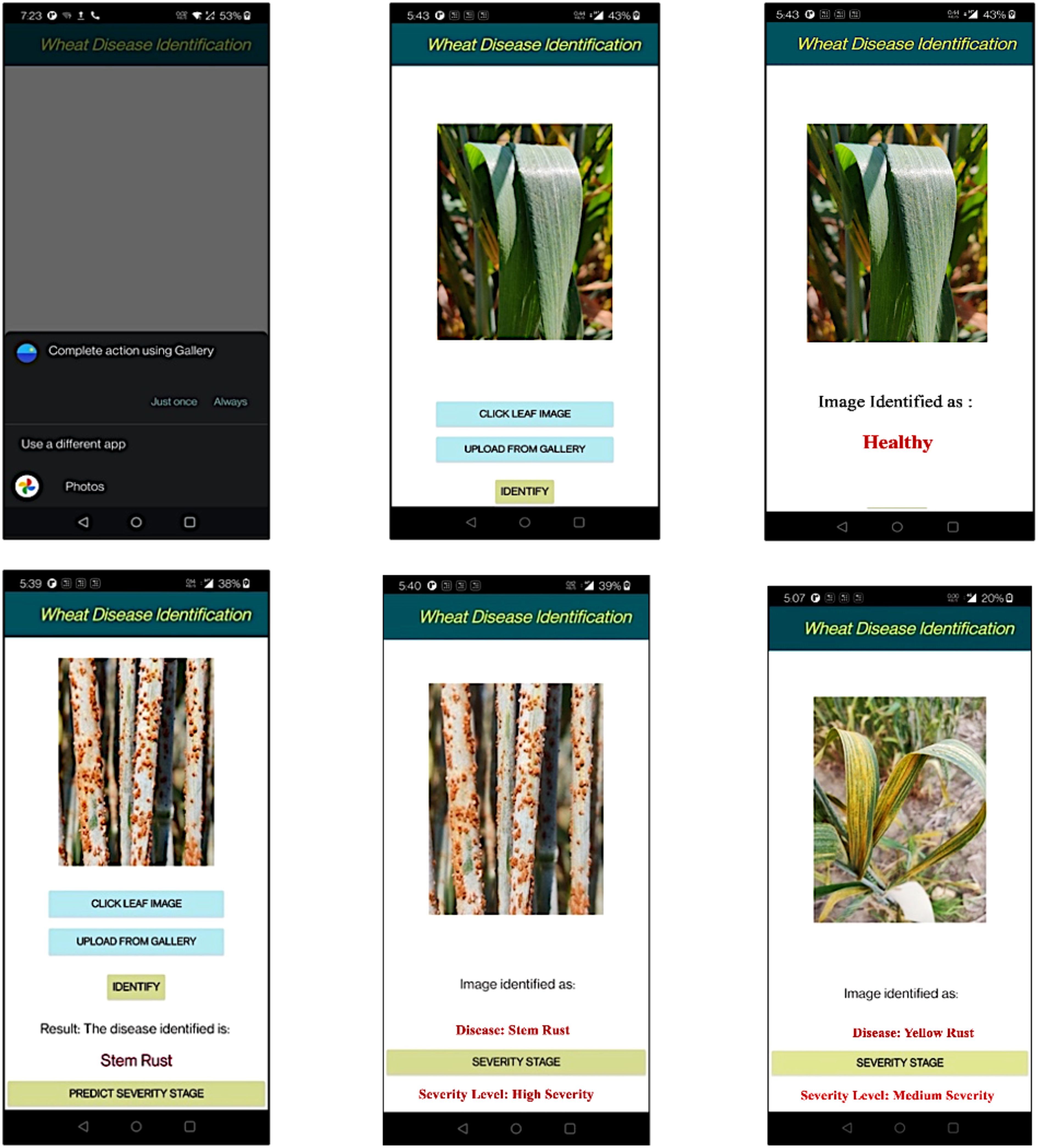

3.4 Development of an Android-based mobile application for wheat disease severity estimation

In this study, we also developed an Android-based mobile application as a practical tool for the automatic identification of wheat diseases and their corresponding severity stages in agricultural fields. The proposed model was integrated into the application’s backend to facilitate this functionality. The mobile application allows users to capture real-time images from agricultural fields or select images stored in their mobile device gallery. These images are subsequently uploaded to a server for analysis. The analytical process begins with the application determining whether the uploaded image depicts a healthy or diseased wheat plant. For images identified as healthy, the result is directly displayed as “healthy”. Conversely, if the image is diagnosed as diseased, the application proceeds to identify the specific type of disease. Following disease identification, the application further evaluates the image to estimate the severity stage of the detected disease. Figure 11 provides a detailed illustration of the application’s process flow, from image acquisition to disease identification and severity stage estimation.

The developed mobile application for wheat disease severity estimation follows a streamlined workflow (Figure 12). Upon launching, a splash screen introduces the app, followed by an interface that allows users to capture or upload a wheat leaf image. Once an image is uploaded, tapping the “Identify” button determines whether the leaf is healthy or diseased. For healthy images, the app displays a message indicating that no further action is required. If a disease is detected, the application identifies the disease type and provides an option to predict its severity stage. The final screen presents the identified disease along with its severity stage, providing a complete diagnostic result for the uploaded image.
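The staged decision flow described in this section (healthy versus diseased, then disease type, then severity stage) can be expressed as a short cascade. The sketch below is a hypothetical illustration in Python: the `diagnose` function, the `Diagnosis` type, and the three stub classifiers are assumptions standing in for the server-side models, and the stub thresholds are arbitrary.

```python
from typing import Any, Callable, NamedTuple, Optional


class Diagnosis(NamedTuple):
    status: str                      # "healthy" or "diseased"
    disease: Optional[str] = None    # set only when status is "diseased"
    severity: Optional[str] = None   # "low", "medium", or "high"


def diagnose(
    image: Any,
    is_diseased: Callable[[Any], bool],
    classify_disease: Callable[[Any], str],
    estimate_severity: Callable[[Any, str], str],
) -> Diagnosis:
    """Run the three stages in order, stopping early for healthy images."""
    if not is_diseased(image):
        return Diagnosis("healthy")
    disease = classify_disease(image)
    severity = estimate_severity(image, disease)
    return Diagnosis("diseased", disease, severity)


# Hypothetical stand-ins for the trained models. Real models would take
# pixel data; these stubs read precomputed fields from a dictionary.
def stub_is_diseased(image):
    return image["lesion_fraction"] > 0.0


def stub_classify_disease(image):
    return image["rust_type"]


def stub_estimate_severity(image, disease):
    fraction = image["lesion_fraction"]
    if fraction < 0.2:       # arbitrary illustrative threshold
        return "low"
    if fraction < 0.5:       # arbitrary illustrative threshold
        return "medium"
    return "high"


models = (stub_is_diseased, stub_classify_disease, stub_estimate_severity)
healthy_result = diagnose({"lesion_fraction": 0.0, "rust_type": None}, *models)
sick_result = diagnose({"lesion_fraction": 0.1, "rust_type": "yellow rust"}, *models)
print(healthy_result)
print(sick_result)
```

Passing the classifiers in as callables keeps the control flow separate from the models, so each stage could be replaced or retrained without touching the application logic.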

3.5 Comparison with existing studies in the literature

Table 6 gives a comparative overview of models used for disease classification across different crops, together with their reported training or testing accuracies. Our proposed model, which diagnoses three major rusts in wheat, achieved a testing accuracy of 96.68%. Existing models developed for crops such as apple, tomato, coffee, cucumber, and grape achieved accuracies ranging from 90.4% to 97.75%, although each focuses on a single disease class. Prior attempts at wheat severity estimation covering three diseases and their severity levels are scarce. Even so, at 96.68% our model sits near the top of this range, underscoring its efficacy in wheat rust severity estimation.

The primary contributions of this study are twofold. First, we curated a dataset for wheat disease classification that includes severity categories. The non-augmented dataset comprises 5438 images, and the augmented dataset contains 10252 images. This dataset provides a foundation for future research in this domain. Second, we introduced WheatSevNet, a model capable of identifying wheat disease categories and assessing their severity levels. Despite the challenges posed by multiple disease classes and multiple severity levels, the model achieved an accuracy of over 96%, which is comparable to algorithms designed for single-disease identification. We could not compare against wheat severity estimation models covering three diseases because, to the best of our knowledge, no such published attempt is available. Our approach not only addresses an immediate need in agricultural research but also opens avenues for future investigation. We hope this study will inspire further work on automated plant disease diagnosis and severity estimation.

4 Conclusion

The major fungal diseases of wheat significantly affect crop quality and quantity, leading to substantial yield losses. In this study, we aimed to diagnose major wheat fungal diseases and their corresponding severity levels using a model based on the EfficientNet architecture and enhanced with the Convolutional Block Attention Module (CBAM). The proposed model achieved a training accuracy of 99.51% and a testing accuracy of 96.68%. In comparative analyses, it outperformed state-of-the-art CNN models and a fine-tuned EfficientNet-B0 model in severity estimation. To test the robustness of the approach across disease categories, we conducted experiments using images captured under real-life field conditions, covering three major types of wheat rust: yellow, brown, and black. The model’s ability to classify severity into low, medium, and high stages provides precise information that facilitates timely intervention. Integrating the CBAM module raised the testing accuracy from 93.21% to 96.68% on the WheatSev dataset. This improvement is largely attributed to the attention module within CBAM, which identifies critical information and enhances the representation of features. Furthermore, the channel attention module amplifies informative features while suppressing less relevant ones, contributing to more accurate identification of the severity level of wheat rust disease. The results confirm that including the CBAM module substantially improves the model’s performance in detecting and assessing the severity of wheat rust disease.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

SN: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. RJ: Conceptualization, Formal analysis, Investigation, Methodology, Supervision, Validation, Visualization, Writing – review & editing. VS: Data curation, Funding acquisition, Investigation, Resources, Supervision, Validation, Writing – review & editing. AS: Conceptualization, Formal analysis, Methodology, Project administration, Software, Visualization, Writing – original draft. HK: Investigation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by Bill and Melinda Gates Foundation under the project ICAR BMGF (Grant Number OPP1194767).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Arnal Barbedo, J. G. (2019). Plant disease identification from individual lesions and spots using deep learning. Biosyst. Eng. 180, 96–107. doi: 10.1016/j.biosystemseng.2019.02.002

Atila, Ü., Uçar, M., Akyol, K., and Uçar, E. (2021). Plant leaf disease classification using EfficientNet deep learning model. Ecol. Inf. 61, 101182. doi: 10.1016/j.ecoinf.2020.101182

Bock, C. H., Pethybridge, S. J., Barbedo, J. G. A., Esker, P. D., Mahlein, A. K., and Del Ponte, E. M. (2022). A phytopathometry glossary for the twenty-first century: Towards consistency and precision in intra- and inter-disciplinary dialogues. Trop. Plant Pathol. 47, 14–24. doi: 10.1007/s40858-021-00454-0

Bock, C. H., Poole, G. H., Parker, P. E., and Gottwald, T. R. (2010). Plant disease severity estimated visually, by digital photography and image analysis, and by hyperspectral imaging. Crit. Rev. Plant Sci. 29, 59–107. doi: 10.1080/07352681003617285

Chen, S., Zhang, K., Zhao, Y., Sun, Y., Ban, W., Chen, Y., et al. (2021). An approach for rice bacterial leaf streak disease segmentation and disease severity estimation. Agriculture 11, 420. doi: 10.3390/agriculture11050420

Chen, W., Wellings, C., Chen, X., Kang, Z., and Liu, T. (2014). Wheat stripe (yellow) rust caused by Puccinia striiformis f. sp. tritici. Mol. Plant Pathol. 15 (5), 433–446.

Chin, R., Catal, C., and Kassahun, A. (2023). Plant disease detection using drones in precision agriculture. Precis. Agric. 24, 1663–1682. doi: 10.1007/s11119-023-10014-y

Dheeraj, A. and Chand, S. (2022). “Deep learning model for automated image based plant disease classification,” in International Conference on Intelligent Vision and Computing (Springer Nature Switzerland, Cham), 21–32.

Dheeraj, A. and Chand, S. (2024). LWDN: lightweight DenseNet model for plant disease diagnosis. J. Plant Dis. Prot. 131 (3), 1043–1059. doi: 10.1007/s41348-024-00915-z

Esgario, J. G., Krohling, R. A., and Ventura, J. A. (2020). Deep learning for classification and severity estimation of coffee leaf biotic stress. Comput. Electron. Agric. 169, 105162. doi: 10.1016/j.compag.2019.105162

Fuentes, A., Yoon, S., Lee, M. H., and Park, D. S. (2021). Improving accuracy of tomato plant disease diagnosis based on deep learning with explicit control of hidden classes. Front. Plant Sci. 12, 682230. doi: 10.3389/fpls.2021.682230

Haque, M. A., Marwaha, S., Arora, A., Deb, C. K., Misra, T., Nigam, S., et al. (2022). A lightweight convolutional neural network for recognition of severity stages of maydis leaf blight disease of maize. Front. Plant Sci. 13, 1077568. doi: 10.3389/fpls.2022.1077568

Hayit, T., Erbay, H., Varçın, F., Hayit, F., and Akci, N. (2021). Determination of the severity level of yellow rust disease in wheat by using convolutional neural networks. J. Plant Pathol. 103, 923–934. doi: 10.1007/s42161-021-00886-2

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Identity mappings in deep residual networks,” in European conference on computer vision (Springer, Cham), 630–645.

Hossin, M. and Sulaiman, M. N. (2015). A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manage. Process 5 (2), 1.

Hu, G., Wan, M., Wei, K., and Ye, R. (2023). Computer vision based method for severity estimation of tea leaf blight in natural scene images. Eur. J. Agron. 144, 126756. doi: 10.1016/j.eja.2023.126756

Hu, G., Wei, K., Zhang, Y., Bao, W., and Liang, D. (2021). Estimation of tea leaf blight severity in natural scene images. Precis. Agric. 22, 1239–1262. doi: 10.1007/s11119-020-09782-8

Huang, G., Liu, Z., van der Maaten, L., and Weinberger, K. Q. (2017). “Densely connected convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition. 4700–4708.

Jiang, Q., Wang, H. L., and Wang, H. G. (2022). Two new methods for severity assessment of wheat stripe rust caused by Puccinia striiformis f. sp. tritici. Front. Plant Sci. 13. doi: 10.3389/fpls.2022.1002627

Jiang, Q., Wang, H., and Wang, H. (2023). Severity assessment of wheat stripe rust based on machine learning. Front. Plant Sci. 14, 1150855. doi: 10.3389/fpls.2023.1150855

Ji, M. and Wu, Z. (2022). Automatic detection and severity analysis of grape black measles disease based on deep learning and fuzzy logic. Comput. Electron. Agric. 193, 106718.

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Li, Z., Fang, X., Zhen, T., and Zhu, Y. (2023). Detection of wheat yellow rust disease severity based on improved GhostNetV2. Appl. Sci. 13 (17), 9987. doi: 10.3390/app13179987

Liang, Q., Xiang, S., Hu, Y., Coppola, G., Zhang, D., and Sun, W. (2019). PD2SE-Net: Computer-assisted plant disease diagnosis and severity estimation network. Comput. Electron. Agric. 157, 518–529. doi: 10.1016/j.compag.2019.01.034

Liu, B., Ding, Z., Tian, L., He, D., Li, S., and Wang, H. (2020). Grape leaf disease identification using improved deep convolutional neural networks. Front. Plant Sci. 11, 1082. doi: 10.3389/fpls.2020.01082

Liu, B. Y., Fan, K. J., Su, W. H., and Peng, Y. (2022). Two-stage convolutional neural networks for diagnosing the severity of alternaria leaf blotch disease of the apple tree. Remote Sens. 14 (11), 2519.

Lu, J., Hu, J., Zhao, G., Mei, F., and Zhang, C. (2017). An in-field automatic wheat disease diagnosis system. Comput. Electron. Agric. 142, 369–379. doi: 10.1016/j.compag.2017.09.012

Mi, Z., Zhang, X., Su, J., Han, D., and Su, B. (2020). Wheat stripe rust grading by deep learning with attention mechanism and images from mobile devices. Front. Plant Sci. 11, 558126. doi: 10.3389/fpls.2020.558126

Mohanty, S. P., Hughes, D. P., and Salathé, M. (2016). Using deep learning for image-based plant disease detection. Front. Plant Sci. 7, 1419. doi: 10.3389/fpls.2016.01419

Nigam, S. and Jain, R. (2020). Plant disease identification using Deep Learning: A review. Indian J. Agric. Sci. 90, 249–257. doi: 10.56093/ijas.v90i2.98996

Nigam, S., Jain, R., Marwaha, S., Arora, A., Haque, M. A., Dheeraj, A., et al. (2023). Deep transfer learning model for disease identification in wheat crop. Ecol. Inf. 75, 102068. doi: 10.1016/j.ecoinf.2023.102068

Nigam, S., Jain, R., Prakash, S., Marwaha, S., Arora, A., Singh, V. K., et al. (2021). “Wheat disease severity estimation: A deep learning approach,” in International Conference on Internet of Things and Connected Technologies (Springer, Cham), 185–193.

Pavithra, A., Kalpana, G., and Vigneswaran, T. (2023). Deep learning-based automated disease detection and classification model for precision agriculture. Soft Computing. 28 (Suppl 2), 463–483. doi: 10.1007/s00500-023-07936-0

Prabhakar, M., Purushothaman, R., and Awasthi, D. P. (2020). Deep learning based assessment of disease severity for early blight in tomato crop. Multimed. Tools Appl. 79, 28773–28784.

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., and Chen, L. C. (2018). “Mobilenetv2: Inverted residuals and linear bottlenecks,” in Proceedings of the IEEE conference on computer vision and pattern recognition. 4510–4520.

Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. (2020). Grad-CAM: Visual explanations from deep networks via gradient-based localization. Int. J. Comput. Vis. 128, 336–359.

Sibiya, M. and Sumbwanyambe, M. (2021). Automatic fuzzy logic-based maize common rust disease severity predictions with thresholding and deep learning. Pathogens 10, 131. doi: 10.3390/pathogens10020131

Simonyan, K. and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wojna, Z. (2016). Rethinking the inception architecture for computer vision. Proc. IEEE Conf. Comput. Vision Pattern Recognition. 2016, 2818–2826. doi: 10.1109/CVPR.2016.308

Tan, M. and Le, Q. (2020). “EfficientNet: Rethinking model scaling for convolutional neural networks,” in International conference on machine learning (PMLR), 6105–6114. Available online at: https://proceedings.mlr.press/v97/tan19a.html

Ting, K. M. (2017). “Confusion matrix,” in Encyclopedia of Machine Learning and Data Mining. Eds. Sammut, C. and Webb, G. I. (Springer, Boston, MA). doi: 10.1007/978-1-4899-7687-1_50

Too, E. C., Yujian, L., Njuki, S., and Yingchun, L. (2019). A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 161, 272–279. doi: 10.1016/j.compag.2018.03.032

Verma, S., Chug, A., and Singh, A. P. (2020). Application of convolutional neural networks for evaluation of disease severity in tomato plant. J. Discrete Math. Sci. Cryptogr. 23 (1), 273–282.

Wang, C., Du, P., Wu, H., Li, J., Zhao, C., and Zhu, H. (2021). A cucumber leaf disease severity classification method based on the fusion of DeepLabV3+ and U-Net. Comput. Electron. Agric. 189, 106373.

Wang, G., Sun, Y., and Wang, J. (2017). Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017. doi: 10.1155/2017/2917536

Woo, S., Park, J., Lee, J. Y., and Kweon, I. S. (2018). “Cbam: Convolutional block attention module,” in Proceedings of the European conference on computer vision (ECCV). 3–19.

Wspanialy, P. and Moussa, M. (2020). A detection and severity estimation system for generic diseases of tomato greenhouse plants. Comput. Electron. Agric. 178, 105701. doi: 10.1016/j.compag.2020.105701

Keywords: wheat rust, EfficientNet architecture, attention mechanism, disease severity estimation, transfer learning

Citation: Nigam S, Jain R, Singh VK, Singh AK and Krishna H (2025) Automated severity level estimation of wheat rust using an EfficientNet-CBAM hybrid model. Front. Plant Sci. 16:1540642. doi: 10.3389/fpls.2025.1540642

Received: 06 December 2024; Accepted: 30 April 2025;

Published: 23 May 2025.

Edited by:

Qinhu Wang, Northwest A&F University, China

Reviewed by:

Peisen Yuan, Nanjing Agricultural University, China

Xinli Zhou, Southwest University of Science and Technology, China

Copyright © 2025 Nigam, Jain, Singh, Singh and Krishna. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rajni Jain; Vaibhav Kumar Singh
