- Research Institute of Pomology, Chongqing Academy of Agricultural Sciences, Chongqing, China

Hyperspectral imaging (HSI) technology has great potential for the efficient and accurate detection of plant diseases. To date, no studies have reported the identification of yellow vein clearing disease (YVCD) in lemon plants using hyperspectral imaging. A major challenge in leveraging HSI for rapid disease diagnosis lies in efficiently processing high-dimensional data without compromising classification accuracy. In this study, hyperspectral feature extraction was optimized by introducing a novel hybrid 3D-2D-LcNet architecture that combines three-dimensional (3D) and two-dimensional (2D) convolutional layers, a methodological advancement over conventional single-mode CNNs. Competitive adaptive reweighted sampling (CARS) and the successive projections algorithm (SPA) were used to reduce the dimensionality of the hyperspectral images and select the feature wavelengths for YVCD diagnosis. The spectra and hyperspectral images retrieved through feature wavelength selection were modeled separately with machine learning algorithms and convolutional neural networks (CNNs). Machine learning algorithms (support vector machine and partial least squares discriminant analysis) and CNNs (including 3D-ShuffleNetV2, 2D-LcNet and 2D-ShuffleNetV2) were compared. The CNN-based models achieved accuracies ranging from 93.90% to 97.35%, significantly outperforming the machine learning approaches (68.83% to 93.52%). Notably, the hybrid 3D-2D-LcNet achieved the highest accuracies of 97.35% (CARS) and 96.86% (SPA), while reducing computational costs compared to 3D-CNNs. These findings suggest that the hybrid 3D-2D-LcNet effectively balances computational complexity with feature extraction efficacy and remains robust when handling spectral data of different wavelengths. Overall, this study offers insights into the rapid processing of hyperspectral images and presents a promising method for YVCD identification.

1 Introduction

Lemon (C. limon), a widely cultivated and popular fruit, faces a significant threat from the citrus yellow vein clearing virus (CYVCV), which has caused substantial losses to the lemon industry, particularly in China and other major producing countries (Liu et al., 2020). The disease severely affects the health and yield of lemon trees, often leading to orchard destruction, and the affected areas are gradually expanding in major production regions such as China, India, and Pakistan (Liu et al., 2020). Moreover, no effective pesticides have been found to combat this viral disease once trees become infected. The primary means of controlling yellow vein clearing disease (YVCD) in lemons is the identification, quarantine, and eradication of diseased trees. Currently, manual field surveys and polymerase chain reaction (PCR)-based molecular techniques (Chen et al., 2016; Afloukou and Önelge, 2021) are the main methods for identifying YVCD in lemons. Both are limited by the time and cost they require and by environmental concerns, which reduces their practical applicability for large-scale early diagnosis (Martinelli et al., 2015). Furthermore, multiple diseases often co-occur on a single lemon tree, so that leaves exhibit the combined symptoms of YVCD and other disorders, making YVCD more difficult to identify. Rapid and accurate identification of a disease from leaves exhibiting complex symptoms is essential for effective disease control and provides valuable guidance for orchard management. There is therefore an urgent need for more effective YVCD detection methods to support the sustainable development of the lemon industry.

In recent years, optical and imaging techniques have been widely applied to the rapid identification of plant diseases (Pereira et al., 2011; Calderón et al., 2013; Poblete et al., 2021; Vallejo-Pérez et al., 2021). Hyperspectral imaging (HSI) technology surpasses the traditional imaging and spectroscopy techniques owing to its superior spectral resolution and broader wavelength ranges. HSI can acquire both spatial and spectral information of the plant tissue (Thomas et al., 2018). These advantages allow HSI to capture spectral changes caused by subtle alterations in plant physiological and metabolic status, which is conducive to more accurate and earlier detection of plant diseases (Mishra et al., 2017). HSI has been proven effective in identifying citrus diseases. For example, Weng et al. (2018) had achieved an accuracy of 93% in detecting Huanglongbing using HSI. In laboratory settings, Abdulridha et al. (2019) achieved accuracies of 94%, 96%, and 100% in detecting asymptomatic, early, and late symptom stages of citrus canker, respectively. Additionally, Guo et al. (2015) had achieved an accuracy of over 97% in detecting citrus tristeza virus. Satisfactory identification results have also been obtained in detecting other crop diseases. For instance, Lu et al. (2018) reported 100% accuracy in detecting yellow leaf curl disease in tomato leaves, and Liu et al. (2024) achieved 99.38% accuracy in identifying apple mosaic virus. Therefore, HSI has the potential to be applied in developing effective and accurate techniques for identifying YVCD in lemons. Despite the advantages, HSI involves complex data processing and calibration, which must be addressed for field-level deployment.

Hyperspectral images encompass both spectral and spatial information. In previous studies, spectral information was applied in developing disease identification technology, with spatial information neglected. Traditional processing of hyperspectral images mainly involves spectral preprocessing, feature extraction and model construction. Machine learning algorithms are widely used and have achieved excellent performance in the field of classification (Guo et al., 2015; Nagasubramanian et al., 2018; Weng et al., 2018; Jiang et al., 2021; Chen et al., 2025). Partial least squares and support vector machines (SVM) are two commonly used methods, which have good performance in data fitting and classification tasks (Xuan et al., 2022; Siripatrawan and Makino, 2024). In recent years, convolutional neural networks (CNNs), which can automatically extract and learn the most significant features in an end-to-end manner, have been extensively used in disease diagnosis and hyperspectral image processing (Lu et al., 2021; Russel and Selvaraj, 2022; Kaya and Gürsoy, 2023). Hyperspectral images can be considered as three-dimensional hypercubes encompassing spectral and spatial information. Both two-dimensional and three-dimensional CNNs can extract and learn features of this three-dimensional cube data. A three-dimensional convolutional neural network (3D-CNN) can extract both spectral and spatial features simultaneously, whereas, a two-dimensional neural network (2D-CNN) only captures spatial features. As a result, the rich spectral information of hyperspectral images is often compressed and not completely extracted (Jia et al., 2023). Ortac and Ozcan (2021) compared the performance of one-dimensional, two-dimensional, and three-dimensional CNNs in hyperspectral image classification. They reported that the 3D-CNN effectively integrated spectral and spatial information, achieving a higher classification rate. Similarly, Nguyen et al. 
(2021) used hyperspectral imaging and deep learning for early detection of grapevine virus. They reported that 3D-CNNs were more effective than 2D-CNNs in extracting features from hyperspectral images. This trend of 3D-CNNs outperforming 1D-CNN and 2D-CNN was further validated by Zhu et al. (2023), who applied HSI and CNNs to identify slightly sprouted wheat kernels. The above results indicate that 3D-CNNs have demonstrated superior performance in HSI classification tasks, although their advantages may depend on dataset characteristics and model configuration. However, compared to 2D convolution, 3D convolution requires computing an additional depth channel in performing the convolution, which increases the number of parameters, FLOPS, and training time in modeling. Therefore, while 3D-CNNs have demonstrated better feature integration, their scalability and field application are limited by high computational cost. Hybrid CNNs combine the representational power of 3D convolutions with the computational efficiency of 2D convolutions, enabling better generalization with fewer parameters. In recent years, studies have shown that hybrid CNNs combining 3D and 2D convolution could further improve classification performance when hyperspectral images are employed. Roy et al. (2020) proposed a hybrid 3D and 2D convolution spectral CNN (HybridSN) for HSI classification, achieving satisfactory performance. Qi et al. (2023) proposed a deep learning architecture (PLB-2D-3D-A) combining 2D and 3D convolutions to identify potato late blight disease. With higher accuracy, this architecture outperformed the random forest and the deep learning-based methods 2D-CNN and 3D-CNN. Chen et al. (2022) constructed three CNNs (2D-CNN, 3D-CNN, and 2D–3D-merged CNN) for inspecting defects in green coffee beans, resulting in the 2D-3D-merged CNN based on both full band and PCA-3 bands achieving a higher accuracy.

Currently, there are no reports on the rapid detection of YVCD in lemons with the use of HSI technology. The primary objective of this study was to investigate how to efficiently process hyperspectral images and achieve accurate identification of YVCD. The specific objectives are: 1) to conduct YVCD identification by selecting characteristic wavelengths; 2) to construct models based on spectral information and traditional machine learning algorithms, and evaluate the performance of traditional hyperspectral image processing methods in identifying YVCD; 3) to construct models on the basis of CNNs and evaluate their performance in identifying YVCD; and 4) to perform a comprehensive comparison to select the most efficient and accurate method for YVCD identification.

The key outcomes of this study represent three advances. First, an HSI-based rapid detection method for YVCD was developed, filling the gap left by the lack of effective and accurate identification techniques for this disease. Second, characteristic wavelengths were screened on the same dataset using CARS and SPA independently, which may support the future development of low-cost, portable detection tools. Third, a new 3D-2D-LcNet architecture was proposed. This architecture combines spatial and spectral feature extraction and balances computational complexity against feature extraction efficiency in hyperspectral image processing, thus achieving high classification accuracy. These advances not only address the dimensionality challenges of hyperspectral image processing but also help build a scalable technical foundation for plant disease monitoring.

2 Materials and methods

2.1 Hyperspectral image acquisition

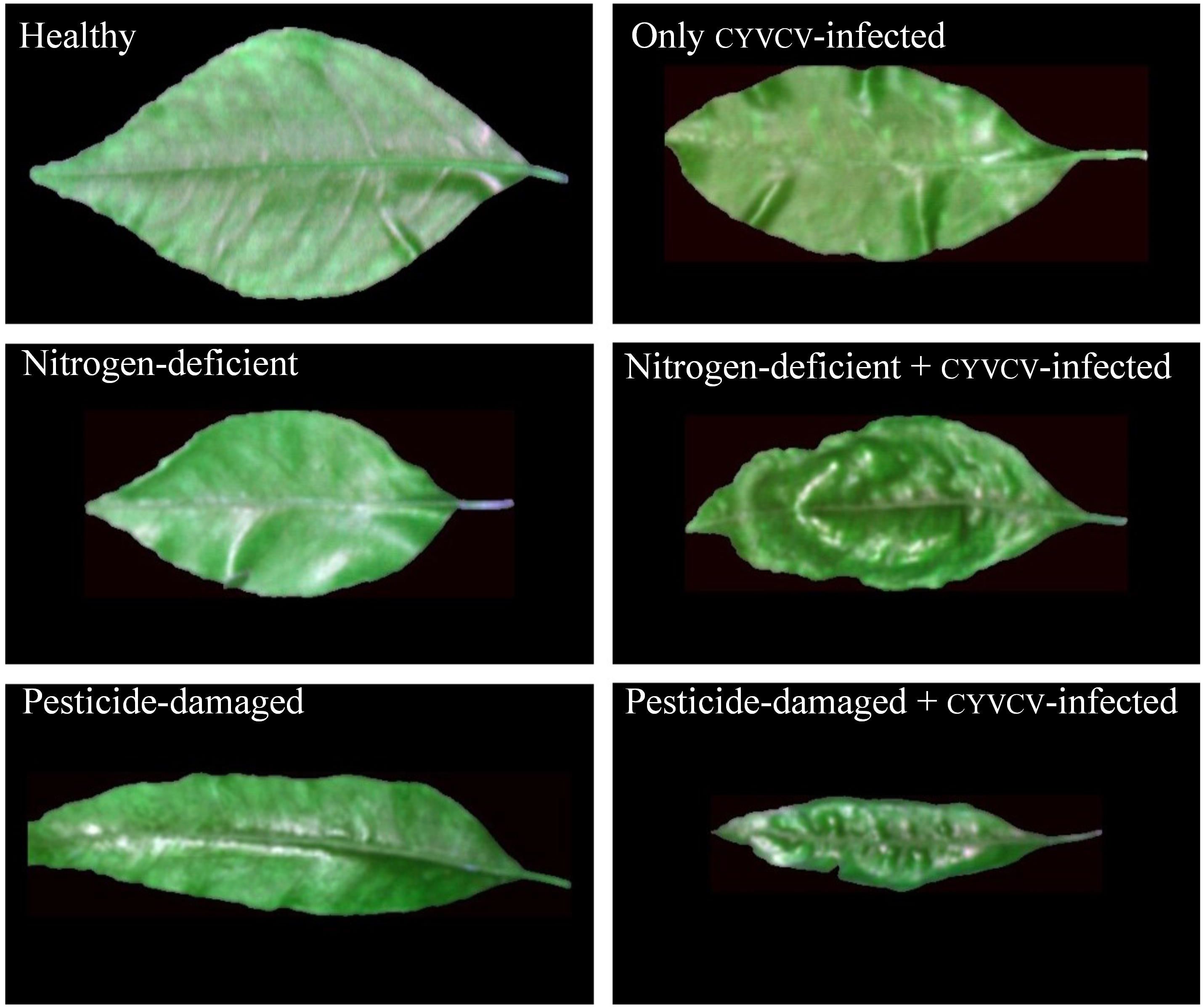

A total of 522 four-month-old leaves were collected from lemon trees grown in an orchard in Tongnan District, Chongqing City, China, in 2022. This orchard is a newly documented spread area for CYVCV, with only a few plants infected. Plant protection experts identified the infected leaves and classified the samples into six groups on the basis of the presence, absence, or combination of symptoms (Figure 1): healthy (90 leaves, no symptoms of disease, nutrient deficiency, or pesticide damage), CYVCV-infected only (90 leaves, exhibiting specific symptoms of CYVCV with no signs of nutrient deficiency or pesticide damage), nitrogen-deficient (79 leaves, displaying nitrogen deficiency symptoms without CYVCV infection or pesticide damage), nitrogen-deficient + CYVCV-infected (81 leaves, showing combined symptoms of nitrogen deficiency and CYVCV infection, including yellowing leaves with viral vein clearing or distortion), pesticide-damaged (90 leaves, with morphological signs of pesticide injury but no CYVCV infection or nutrient deficiency), and pesticide-damaged + CYVCV-infected (92 leaves, presenting mixed symptoms of pesticide damage and CYVCV infection). All CYVCV-free plants were located more than 1000 m from the infected ones. The healthy, nitrogen-deficient only, and pesticide-damaged only groups were collected from the non-infected areas of the orchard. During sample categorization, the leaves of all diseased groups showed mild, early-stage, or indistinct symptoms.

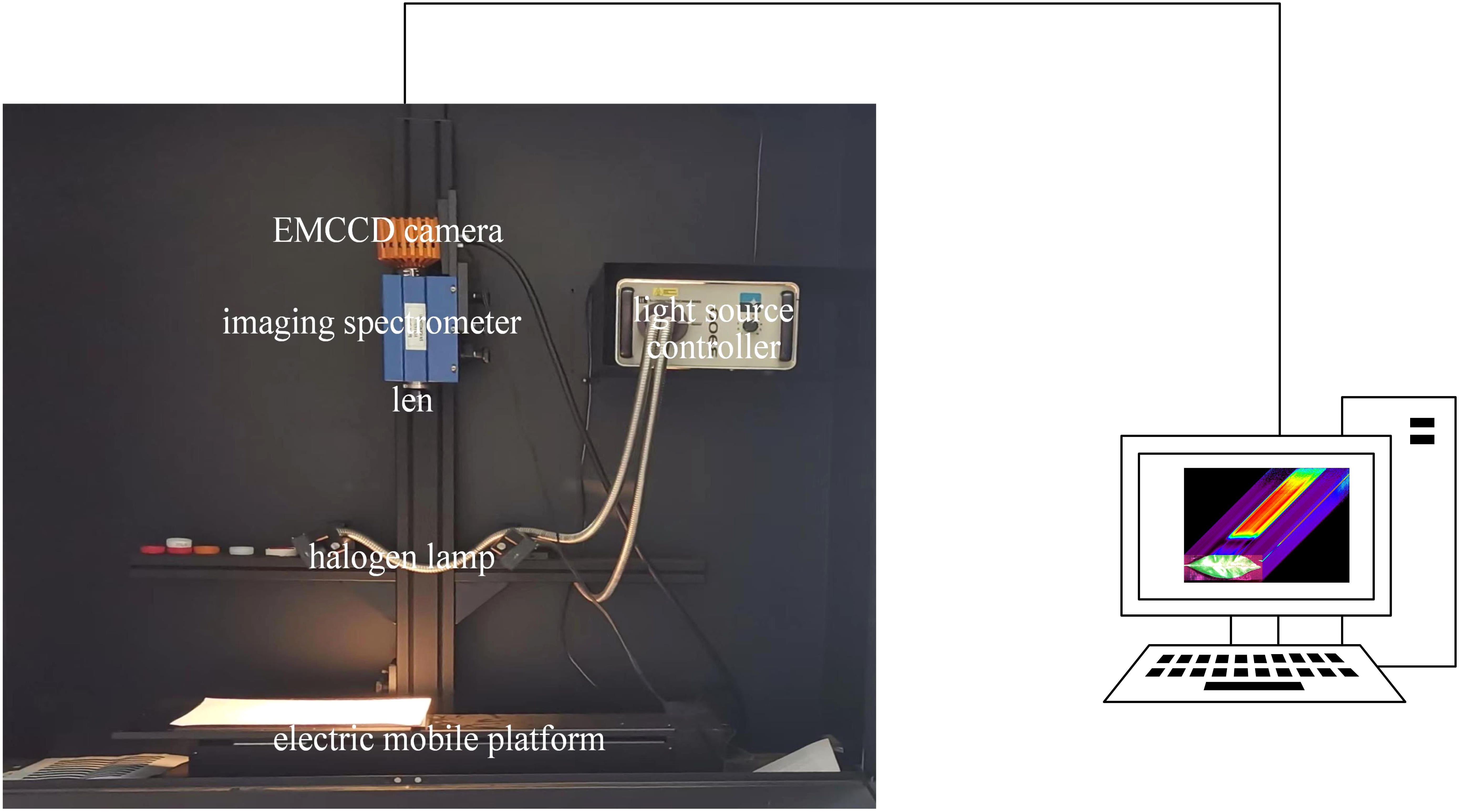

The leaves were washed with clean water, and their hyperspectral images were then acquired with the HSI acquisition system (Figure 2). The system comprised a darkbox (a light-tight enclosure), a hyperspectral imaging spectrometer (ImSpector V10E, Spectral Imaging Oy Ltd, Finland), an electric moving platform and controller (SC30021A, Zolix, China), two halogen light sources (150 W/21 V halogen lamp, Illuminator Technologies, Inc., USA) and a laptop. The spectrometer operated over a spectral range of 305–1090 nm. The halogen lamps were positioned at a 45° angle relative to the sample, with a fixed lens distance of 45 cm. The motorized stage moved at a speed of 1.87 mm/s. Before image acquisition, the system was preheated for 20 minutes to ensure stability, and all measurements were conducted at an ambient temperature of 25°C to maintain consistent environmental conditions.

To eliminate the effects of uneven illumination and dark current noise, a white reference hyperspectral image was obtained by scanning a standard white board with 99% reflectance, and a dark reference hyperspectral image was obtained by scanning with the lens closed. The original hyperspectral image was corrected using Equation 1:

$$I_{c} = \frac{I_{raw} - I_{dark}}{I_{white} - I_{dark}} \tag{1}$$

where $I_{raw}$ represents the raw hyperspectral image, $I_{dark}$ represents the dark reference image, $I_{white}$ represents the white reference hyperspectral image, and $I_{c}$ represents the corrected hyperspectral image.
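
The black-and-white correction of Equation 1 can be illustrated with a minimal NumPy sketch. The function name `calibrate_reflectance`, the `eps` safeguard, and the toy digital numbers are assumptions made for illustration; the acquisition software actually used in the study is not described in the text.

```python
import numpy as np

def calibrate_reflectance(raw, white, dark, eps=1e-8):
    """Apply I_c = (I_raw - I_dark) / (I_white - I_dark) element-wise."""
    raw = np.asarray(raw, dtype=np.float64)
    white = np.asarray(white, dtype=np.float64)
    dark = np.asarray(dark, dtype=np.float64)
    denom = white - dark
    # Assumed safeguard: avoid division by zero where white and dark coincide.
    denom = np.where(np.abs(denom) < eps, eps, denom)
    return (raw - dark) / denom

# Toy cube of 2 x 2 pixels and 3 bands (synthetic numbers, not measured data).
dark = np.full((2, 2, 3), 100.0)
white = np.full((2, 2, 3), 4100.0)
raw = np.full((2, 2, 3), 2100.0)
corrected = calibrate_reflectance(raw, white, dark)
```

In this toy case every pixel sits halfway between the dark and white references, so the corrected reflectance is 0.5 in every band.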

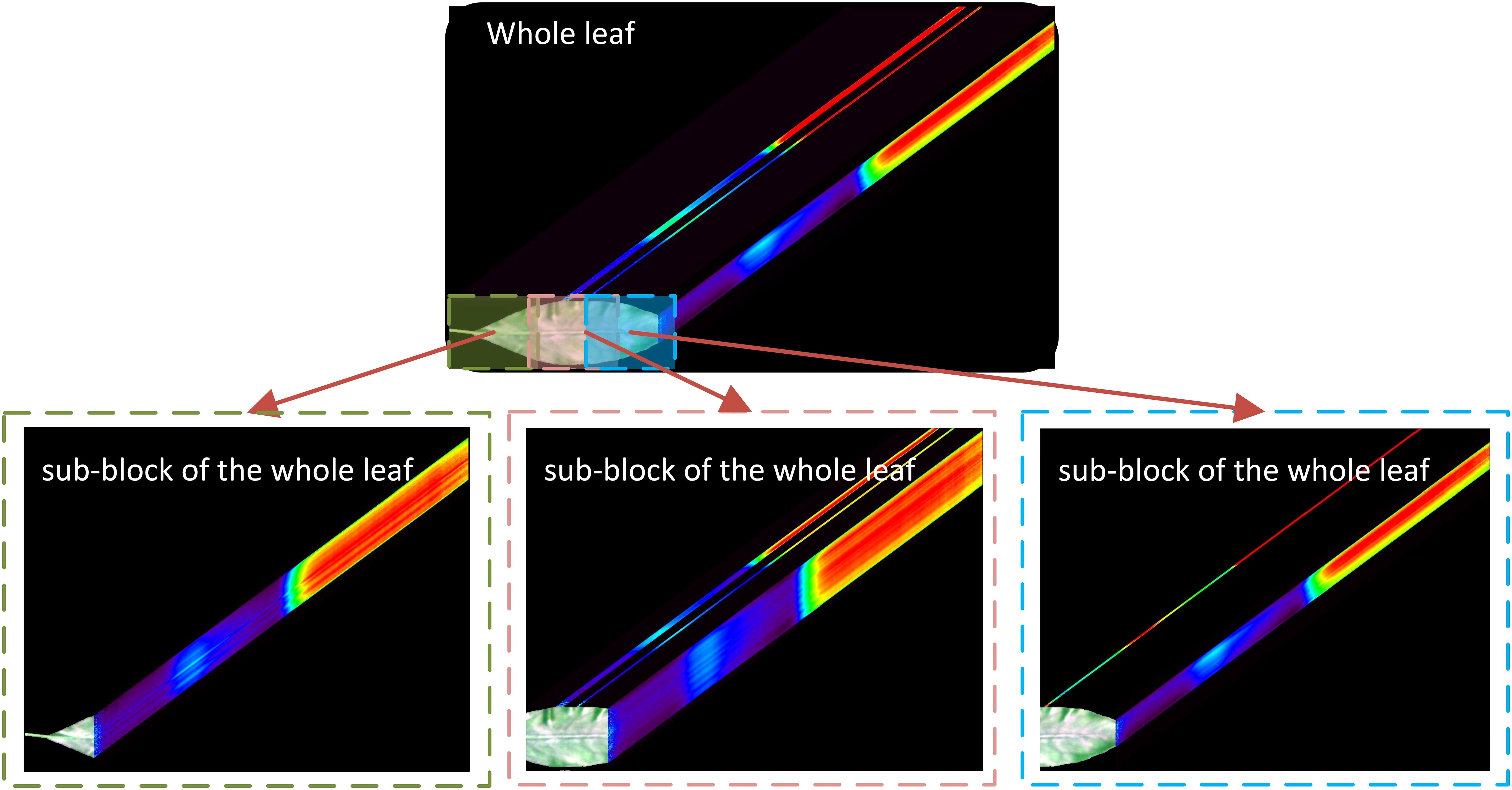

The hyperspectral image dataset was randomly divided into training, validation, and testing sets in a 60:20:20 ratio. Because hyperspectral images are costly to acquire, the dataset available for this study was limited and insufficient for deep learning. To address this limitation, each whole leaf was divided into 2–4 sub-blocks after the initial division into sample sets, following the methods of Park et al. (2018) and Nagasubramanian et al. (2019). The hyperspectral images of the whole leaves and their corresponding sub-blocks were then used for data analysis. The segmentation method is illustrated in Figure 3; the overlap rate between any two sub-blocks is less than 30%. To avoid data leakage, the segmentation was performed only after the dataset had been divided into training, validation, and test sets.

To ensure robustness and reduce variability in CNN model performance, we performed five repeated random splits of the combined training and validation set (80% of the total data). In each repetition, 60% of the total data was randomly selected as the training set with the class distribution preserved, while 20% of the total data served for validation. The independent testing set (20%) remained strictly isolated for final evaluation. Dunn’s test with Bonferroni correction was used to assess the significance of differences.
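
The splitting protocol can be sketched at the leaf level as follows. This is only an illustration under stated assumptions: the helper `stratified_holdout`, the random seed, and the per-class rounding rule are hypothetical, and the sub-block segmentation that follows the split is not reproduced. The per-class leaf counts are those reported in Section 2.1.

```python
import numpy as np

def stratified_holdout(indices, labels, holdout_fraction, rng):
    """Return (kept, held_out) index arrays, preserving class proportions."""
    kept, held = [], []
    subset_labels = labels[indices]
    for cls in np.unique(subset_labels):
        cls_idx = rng.permutation(indices[subset_labels == cls])
        n_held = int(round(holdout_fraction * len(cls_idx)))
        held.extend(cls_idx[:n_held].tolist())
        kept.extend(cls_idx[n_held:].tolist())
    return np.array(sorted(kept)), np.array(sorted(held))

# Leaves per class: healthy, CYVCV only, N-deficient, N-deficient + CYVCV,
# pesticide-damaged, pesticide-damaged + CYVCV.
counts = [90, 90, 79, 81, 90, 92]
labels = np.repeat(np.arange(6), counts)
all_idx = np.arange(labels.size)

rng = np.random.default_rng(0)  # seed chosen arbitrarily for reproducibility

# Fixed test set: 20% of all leaves, isolated before any repetition.
trainval_idx, test_idx = stratified_holdout(all_idx, labels, 0.20, rng)

splits = []
for repeat in range(5):
    # 20% of the total data equals 25% of the remaining 80%.
    train_idx, val_idx = stratified_holdout(trainval_idx, labels, 0.25, rng)
    splits.append((train_idx, val_idx))
```

Splitting whole leaves first, and cutting sub-blocks only afterwards, keeps overlapping sub-blocks of one leaf from landing in different sets.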

2.2 Traditional processing of hyperspectral images

2.2.1 ROI segmentation and spectra extraction

At 800 nm, the spectral reflectance of the background differed significantly from that of the leaf, which facilitates accurate separation of leaves from the background. Specifically, the grayscale image obtained at 800 nm was processed using Otsu’s method (Yin et al., 2022). With the optimal segmentation threshold determined automatically, the grayscale image was partitioned into two classes: leaves (foreground) and background. This process generated a binary mask in which pixel values of zero and one denote the background and the leaf, respectively. The mask was then applied to the full-band hyperspectral data cube, retaining only the spectral information of the leaf region while excluding background noise, thus achieving precise segmentation of leaves from the background. Finally, the spectral data of all pixels within the leaf ROI were averaged, and the mean spectrum was used for subsequent feature wavelength extraction and modeling.
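
The masking and averaging steps can be sketched in NumPy as below. The study performed this step in MATLAB, so this is an illustrative re-implementation rather than the authors' code; the function names, the histogram bin count, and the toy cube (in which the last band stands in for the 800 nm image) are assumptions.

```python
import numpy as np

def otsu_threshold(gray, n_bins=256):
    """Return the threshold that maximizes the between-class variance."""
    hist, edges = np.histogram(gray.ravel(), bins=n_bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    prob = hist / hist.sum()
    w0 = np.cumsum(prob)                  # weight of the class up to each bin
    w1 = 1.0 - w0                         # weight of the class above each bin
    cum_mean = np.cumsum(prob * centers)
    total_mean = cum_mean[-1]
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    between = np.zeros(n_bins)
    between[valid] = (total_mean * w0[valid] - cum_mean[valid]) ** 2 / (
        w0[valid] * w1[valid]
    )
    # Use the upper edge of the best bin so that every pixel counted in the
    # lower class falls at or below the threshold.
    return edges[1:][np.argmax(between)]

def mean_leaf_spectrum(cube, band_index):
    """Mask the leaf using one band, then average the spectra of leaf pixels."""
    gray = cube[:, :, band_index]
    mask = gray > otsu_threshold(gray)    # True = leaf, False = background
    return mask, cube[mask].mean(axis=0)

# Synthetic 20 x 20 x 5 cube: dark background with a brighter square "leaf".
leaf_spectrum = np.linspace(0.2, 0.6, 5)
cube = np.full((20, 20, 5), 0.05)
cube[5:15, 5:15, :] = leaf_spectrum
mask, mean_spectrum = mean_leaf_spectrum(cube, band_index=4)
```

On this synthetic cube the mask recovers the 10 × 10 leaf square, and the mean spectrum equals the leaf spectrum that was planted in it.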

2.2.2 Characteristic wavelength extraction and modeling

To eliminate noise at the extremes of the spectrum and facilitate subsequent analysis, we used the spectral bands from 400 nm to 1000 nm, which comprised 761 wavelengths. Standard normal variate (SNV) transformation (Barnes et al., 1989) was used to mitigate the effects of diffuse reflection, which is induced by uneven particle distributions, varying particle sizes, and differences in path length. SNV was chosen over alternatives (e.g., multiplicative scatter correction, MSC) because it performed better in preliminary tests. Given the high correlation among adjacent spectral bands, the successive projections algorithm (SPA) (Ye et al., 2008) and competitive adaptive reweighted sampling (CARS) (Li et al., 2009) were applied for characteristic wavelength extraction, with the aim of reducing redundancy and interference and thus alleviating the computational load on the hardware. Support vector machine (SVM) (Boser et al., 1992) and partial least squares discriminant analysis (PLS-DA) are well-known traditional supervised learning algorithms, and both were employed for YVCD identification in this study. During modeling, a grid search was carried out to optimize the kernel function parameters for SVM and the number of principal components for PLS-DA.
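
As an illustration of the SNV step, each spectrum is centred on its own mean and scaled by its own standard deviation. The sketch below is a minimal NumPy version; the use of the sample standard deviation (`ddof=1`) and the toy spectra are assumptions, since the text does not give these details.

```python
import numpy as np

def snv(spectra):
    """Standard normal variate: row-wise centring and scaling of spectra."""
    spectra = np.atleast_2d(np.asarray(spectra, dtype=np.float64))
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, ddof=1, keepdims=True)  # assumed: sample std
    return (spectra - mean) / std

# Two toy spectra that differ only by a multiplicative and an additive effect.
a = np.array([0.10, 0.20, 0.40, 0.30])
b = 2.0 * a + 0.05
transformed = snv(np.vstack([a, b]))
```

Because the two toy spectra differ only by a scale factor and an offset, SNV maps them to the same curve, which is the kind of scatter-related variation the transformation is meant to remove.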

2.3 Processing of hyperspectral images via CNNs

2.3.1 Dataset preparation

To standardize input dimensions and preserve central features, zero-value centered padding was applied to resize the ROIs to 112×112 pixels, as this size balances spatial resolution with GPU memory constraints. Subsequently, data augmentation was performed by using flip, rotation, and mirror techniques. Finally, the number of samples per type expanded to approximately 2000. The quality of the dataset significantly affects the training. Directly utilizing high-dimensional hyperspectral data for modeling not only strains computational resources but also degrades prediction accuracy due to the curse of dimensionality and redundant spectral features (Ram et al., 2024). Although CNNs can automatically extract features, it is essential to reduce the dimensionality of hyperspectral images to lower the training cost and enhance the prediction accuracy, especially when high spatial resolution or large spatial dimensions are used. In this study, hyperspectral images composed of extracted feature wavelengths were used for subsequent deep learning processes.
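
The padding and augmentation steps might look like the following NumPy sketch. The function names are hypothetical, and the specific set of transforms (two flips and rotations by multiples of 90°) is an assumption: the text names flip, rotation, and mirror operations but does not give the rotation angles.

```python
import numpy as np

def pad_to_square(cube, size=112):
    """Place an H x W x bands ROI at the centre of a zero-filled size x size cube."""
    h, w, bands = cube.shape
    if h > size or w > size:
        raise ValueError("ROI exceeds the target size; resizing is not covered here")
    top = (size - h) // 2
    left = (size - w) // 2
    out = np.zeros((size, size, bands), dtype=cube.dtype)
    out[top:top + h, left:left + w, :] = cube
    return out

def augment(cube):
    """Return the original plus flipped and rotated copies (spatial axes only)."""
    variants = [cube, np.flip(cube, axis=0), np.flip(cube, axis=1)]
    variants += [np.rot90(cube, k=k, axes=(0, 1)) for k in (1, 2, 3)]
    return variants

# Toy ROI: 80 x 50 pixels with 15 bands (e.g., the SPA wavelength count).
roi = np.ones((80, 50, 15), dtype=np.float32)
padded = pad_to_square(roi)
augmented = augment(padded)
```

All transforms act on the two spatial axes only, so the order of the spectral bands is left untouched.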

2.3.2 CNN framework

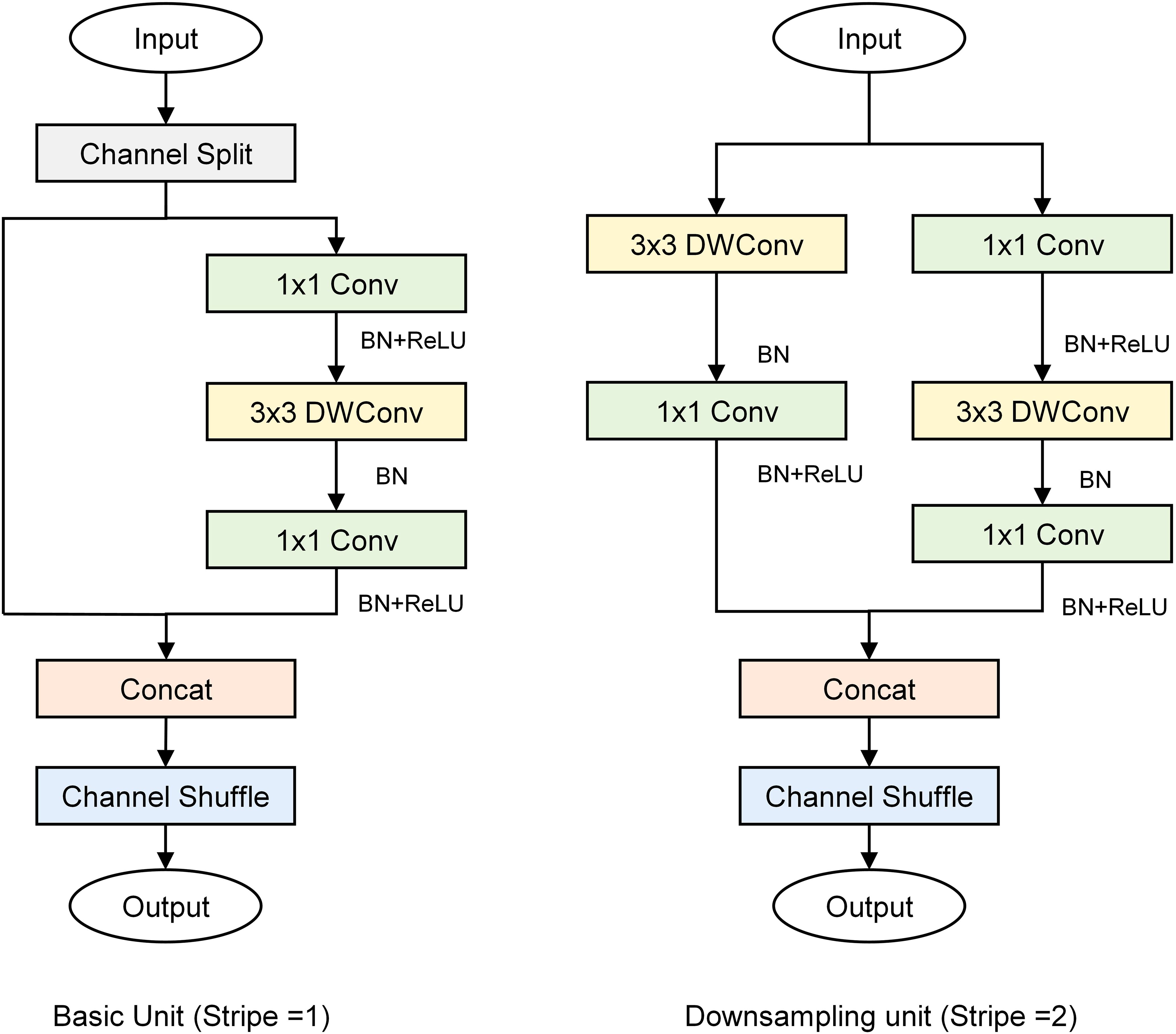

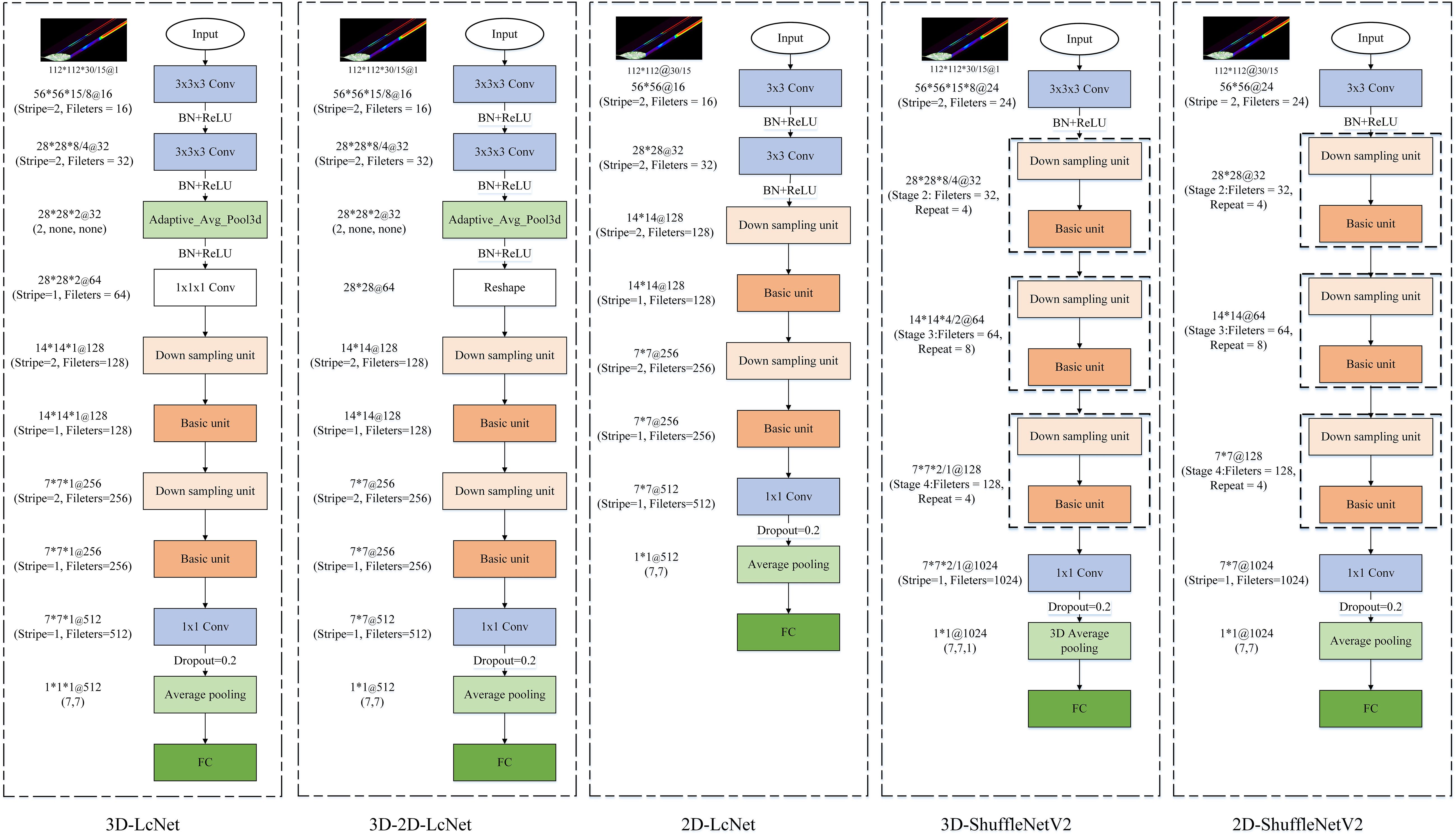

ShuffleNetV2 (Ma et al., 2018) is a lightweight CNN that adopts grouped convolution, depthwise separable convolution, and channel shuffle techniques to achieve both speed and accuracy. In ShuffleNetV2, the basic and downsampling units are the primary building blocks of the network (Figure 4). The basic unit uses channel splitting, pointwise convolution, depthwise convolution, and channel shuffling to balance the number of channels against spatial information processing, enabling the network to maintain high accuracy with low computational complexity. The downsampling unit processes the input through two parallel branches, reducing the feature map size while increasing the number of channels, thereby enhancing feature extraction.
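
The channel shuffle operation itself is a reshape, transpose, and reshape of the channel axis. The NumPy sketch below illustrates it on a tensor in (batch, channels, height, width) layout; the function name and the toy tensor are illustrative and are not taken from the authors' implementation.

```python
import numpy as np

def channel_shuffle(x, groups=2):
    """Interleave channels across groups for an (N, C, H, W) array."""
    n, c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    x = x.reshape(n, groups, c // groups, h, w)   # split channels into groups
    x = x.transpose(0, 2, 1, 3, 4)                # swap group and in-group axes
    return x.reshape(n, c, h, w)                  # flatten back to C channels

# Six channels labelled 0..5, i.e. two groups [0, 1, 2] and [3, 4, 5].
x = np.arange(6, dtype=np.float32).reshape(1, 6, 1, 1)
shuffled = channel_shuffle(x, groups=2)
channel_order = shuffled.ravel().astype(int).tolist()
```

After shuffling, the channel order is 0, 3, 1, 4, 2, 5, so each pair of neighbouring channels mixes information from both groups.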

This study presents 3D-2D-LcNet, a lightweight hybrid CNN architecture. Unlike conventional hybrid CNNs that employ standard 2D convolution blocks, our design integrates optimized ShuffleNetV2 basic units and downsampling units in the post-3D processing stage. Compared with the original ShuffleNetV2 architecture, these units are adapted by reducing layer repetitions and lowering channel dimensions to strike a balance between computational efficiency and feature extraction capability. Specifically, the channel shuffle mechanism in ShuffleNetV2 basic units enables cross-group feature channel mixing to enhance inter-channel information interaction, while its downsampling units minimize information loss during spatial dimension reduction. These improvements maintain the 3D to 2D sequential processing pipeline consistent with traditional models, and enable direct processing of large-scale hyperspectral images (112×112 pixels), effectively addressing the application limitation of conventional models that can only handle small-sized inputs (e.g., ≤15×15 pixels). The network processes input hyperspectral cubes (112×112×N, where N=15 or 30 spectral bands) through two initial 3×3×3 3D convolutional layers, with each followed by batch normalization and ReLU activation function for effective feature extraction and training stabilization. Subsequently, a 3D adaptive average pooling layer is employed to reduce the spectral dimension from 8 (or 4, depending on the preceding convolutional stage) to 2. The output is then reshaped into a 28×28×64 tensor, enabling a seamless transition to 2D convolutional processing for spatial feature extraction. The reshaped feature maps contain an excessive number of channels. 
Modified ShuffleNetV2 units are introduced for these reshaped features: (1) a downsampling unit (stride=2) to shrink spatial resolution to 14×14 and enlarge the channels to 128; (2) a basic unit (stride=1) to keep the 14×14×128 resolution through channel shuffling and pointwise convolutions; (3) a second downsampling unit (stride=2) to further decrease resolution to 7×7 and raise the channels to 256; and (4) a final basic unit (stride=1) to retain the 7×7×256 features. Finally, the network concludes with a 1×1 convolution expanding channels to 512, global average pooling, dropout (p=0.2) for regularization, and a softmax classifier, enabling efficient hyperspectral image analysis through this optimized 3D-2D hybrid architecture.
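
The tensor shapes along this pipeline can be traced with a few lines of plain Python. Several values below are assumptions: a stride of 2 and padding of 1 for both 3D convolutions are inferred because they reproduce the reported spectral depths (8 or 4) and the 28×28 spatial size, 32 output channels in the second 3D convolution are inferred from the 64-channel reshaped tensor, and the 16 channels of the first 3D convolution are purely hypothetical.

```python
def conv_out(size, kernel=3, stride=2, padding=1):
    """Output length of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_shapes(bands, height=112, width=112,
                 conv3d_channels=(16, 32), pooled_depth=2):
    """Return (stage, shape) pairs for the 3D-to-2D pipeline described above."""
    shapes = []
    c, d, h, w = 1, bands, height, width
    shapes.append(("input", (c, d, h, w)))
    for i, out_c in enumerate(conv3d_channels, start=1):
        # Assumed stride 2 / padding 1 on the spectral and spatial axes.
        c, d, h, w = out_c, conv_out(d), conv_out(h), conv_out(w)
        shapes.append((f"conv3d_{i}", (c, d, h, w)))
    d = pooled_depth
    shapes.append(("adaptive_avg_pool3d", (c, d, h, w)))
    c2 = c * d                                   # fold depth into channels
    shapes.append(("reshape_to_2d", (c2, h, w)))
    units = [("down_1", 128, 2), ("basic_1", 128, 1),
             ("down_2", 256, 2), ("basic_2", 256, 1)]
    for name, out_c, stride in units:
        if stride == 2:
            h, w = h // 2, w // 2
        c2 = out_c
        shapes.append((name, (c2, h, w)))
    shapes.append(("conv1x1", (512, h, w)))
    shapes.append(("global_avg_pool", (512, 1, 1)))
    return shapes

cars_shapes = dict(trace_shapes(bands=30))   # 30 CARS wavelengths
spa_shapes = dict(trace_shapes(bands=15))    # 15 SPA wavelengths
```

Under these assumptions the spectral depth before pooling is 8 for the 30-band input and 4 for the 15-band input, and both inputs reach the 2D stage as a 64×28×28 tensor.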

To evaluate the performance of 3D-2D-LcNet in processing hyperspectral images for identifying YVCD, a comparative analysis was performed on the results generated by 3D-2D-LcNet, 3D-LcNet, 3D-ShuffleNetV2, 2D-LcNet, and 2D-ShuffleNetV2. Here, LcNet refers to a lightweight convolutional network designed for efficient spatial-spectral feature fusion. For both 3D-ShuffleNetV2 and 2D-ShuffleNetV2, width_mult was set to 0.25. Specifically, the output channels of the first and last convolutions were configured as 24 and 1024, respectively. The output channels of Stage 2, Stage 3, and Stage 4 were set to 32, 64, and 128, with repetition counts of 4, 8, and 4, respectively. The detailed architectures of the CNNs are shown in Figure 5.

2.4 Experimental environment and evaluation

MATLAB R2020a was used for ROI segmentation, spectral extraction, and feature wavelength extraction. The CNN models were trained in an Anaconda environment with Python 3.9.13, PyTorch 1.11, and CUDA 11.3. The computer ran Ubuntu 20.04 and was equipped with a 16-core Intel(R) Xeon(R) Gold 6430 CPU, an NVIDIA RTX 4090 GPU (24 GB VRAM), 120 GB RAM, and 1 TB storage.

In the CNN model training, the StepLR learning rate decay strategy was implemented to adjust the learning rate in order to enhance convergence and performance. The initial learning rate was set to 0.001, decaying to 50% of its previous value every 20 epochs. The total number of epochs was set to 200. The cross-entropy loss function was used. The optimizer used was the adaptive moment estimation (Adam) optimizer with its default parameters. The batch size was set to 64.
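
The resulting learning-rate schedule can be written in closed form. The plain-Python sketch below assumes the decay is applied at epoch boundaries in the way PyTorch's `torch.optim.lr_scheduler.StepLR` does with `step_size=20` and `gamma=0.5`; the helper `step_lr` is hypothetical and only reproduces the arithmetic.

```python
def step_lr(epoch, base_lr=1e-3, step_size=20, gamma=0.5):
    """Learning rate in a given epoch under a step decay schedule."""
    return base_lr * gamma ** (epoch // step_size)

# Learning rate for each of the 200 training epochs (epochs counted from 0).
schedule = [step_lr(epoch) for epoch in range(200)]
first_lr, final_lr = schedule[0], schedule[-1]
```

Over 200 epochs the rate is halved nine times, so the last 20 epochs run at 0.001 × 0.5⁹, roughly 2 × 10⁻⁶.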

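The confusion matrix, together with precision, recall, F1-score, accuracy, and the Matthews correlation coefficient (MCC), was used to evaluate model performance. These metrics are defined in Equations 2-6:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$$\text{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{6}$$

where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively.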
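
For a six-class problem, TP, TN, FP, and FN have to be derived per class from the confusion matrix. The sketch below shows one common way to do this (one-vs-rest counts followed by macro-averaging); the averaging scheme is an assumption, since the text does not state how the per-class values were combined, and the confusion matrix is a made-up three-class example. Accuracy is computed here as the overall fraction of correctly classified samples.

```python
import numpy as np

def one_vs_rest_metrics(cm):
    """Macro-averaged metrics from a confusion matrix (rows: true, cols: predicted)."""
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp          # predicted as the class, but wrong
    fn = cm.sum(axis=1) - tp          # belonging to the class, but missed
    tn = cm.sum() - tp - fp - fn
    # Note: a class that is never predicted would give a zero denominator here.
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / np.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
        "mcc": mcc.mean(),
    }

# Made-up three-class confusion matrix, for illustration only.
example_cm = [[5, 1, 0],
              [0, 6, 0],
              [1, 0, 7]]
metrics = one_vs_rest_metrics(example_cm)
```

Other conventions exist, for example a single multi-class MCC computed from the whole matrix, and they can give slightly different values from the macro-average used in this sketch.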

To assess practical deployability, we quantified model efficiency with PyTorch-based tools: the parameter count was obtained with torchinfo.summary(); FLOPS were calculated with the flops-counter package for a typical input size of 112×112×bands (bands = 30 or 15); training time was logged using NVIDIA CUDA events on an RTX 4090 GPU; and testing time was the total inference duration for all independent samples in the test set (3,304 samples). Results are reported in millions of parameters (M), giga floating-point operations (G-FLOPS), and seconds (s) for training and testing time.
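
For intuition about why parameter and FLOPS counts differ between 2D and 3D models, the cost of a single convolutional layer can be computed by hand. The sketch below is plain Python; the layer sizes (16 input channels, 32 output channels, a 56×56 output map, an output depth of 15) are hypothetical and are not taken from the networks in this study. It counts multiply-accumulate operations (MACs), and FLOPS counters differ in whether they report MACs or twice that number.

```python
def conv_params(in_ch, out_ch, kernel, bias=True):
    """Number of learnable parameters of a standard convolution layer."""
    n = in_ch * out_ch
    for k in kernel:
        n *= k
    return n + (out_ch if bias else 0)

def conv_macs(in_ch, out_ch, kernel, out_shape):
    """Multiply-accumulate operations of a standard convolution layer."""
    macs = in_ch * out_ch
    for k in kernel:
        macs *= k
    for s in out_shape:
        macs *= s
    return macs

# Hypothetical layer: 16 -> 32 channels, 3x3 versus 3x3x3 kernels.
params_2d = conv_params(16, 32, (3, 3))
params_3d = conv_params(16, 32, (3, 3, 3))
macs_2d = conv_macs(16, 32, (3, 3), (56, 56))
macs_3d = conv_macs(16, 32, (3, 3, 3), (15, 56, 56))
```

For this hypothetical layer, the 3D kernel has about three times as many weights as the 2D kernel, and the MAC count grows further with the output depth, which is the cost that a hybrid 3D-2D design limits by switching to 2D units after the first layers.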

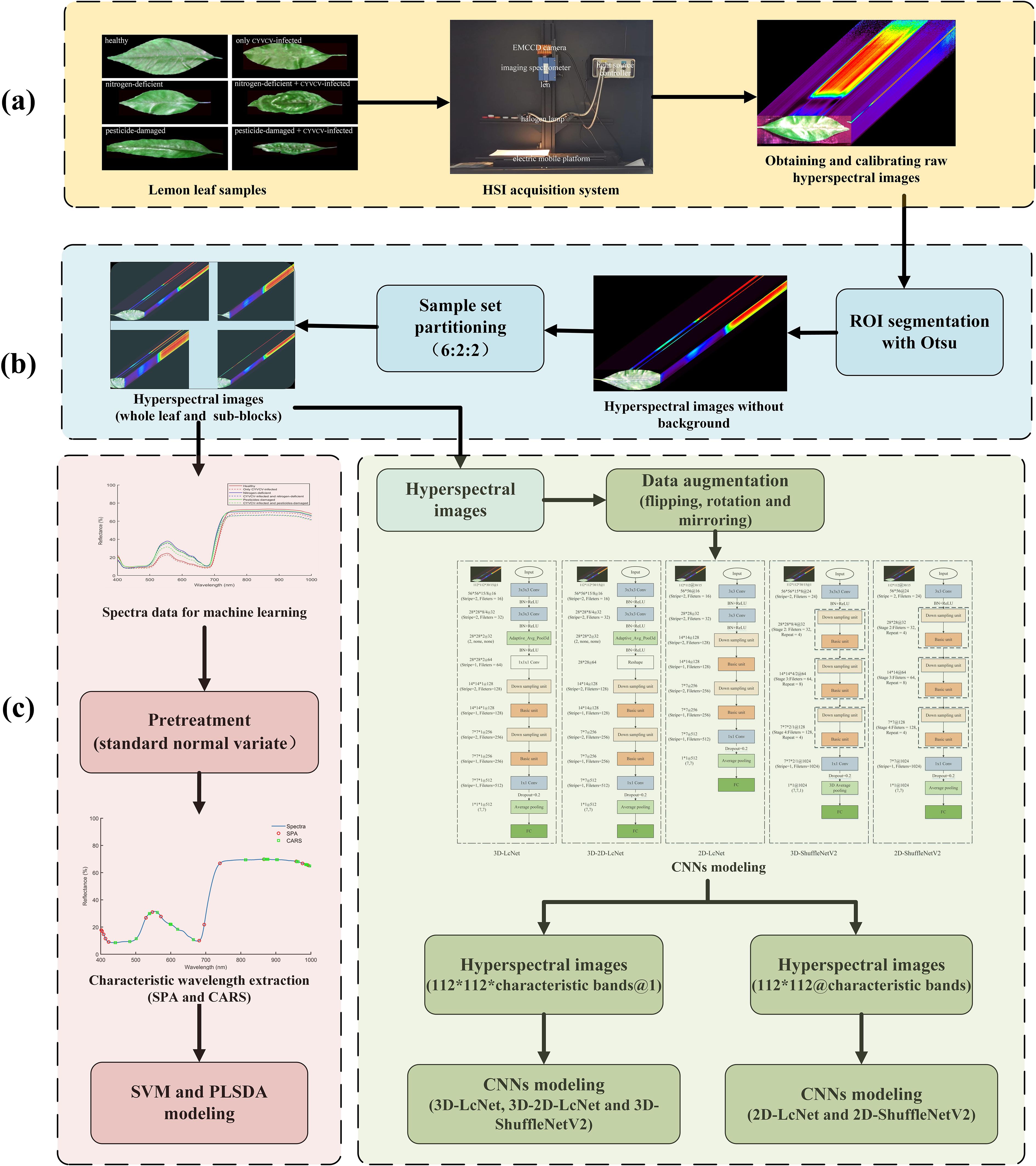

The workflow is shown in Figure 6.

Figure 6. Research workflow. (a) Hyperspectral image acquisition; (b) ROI segmentation and spectra extraction; (c) characteristic wavelength extraction and modeling; (d) processing of hyperspectral images via CNNs.

3 Results and analyses

3.1 Spectral analysis and wavelength selection

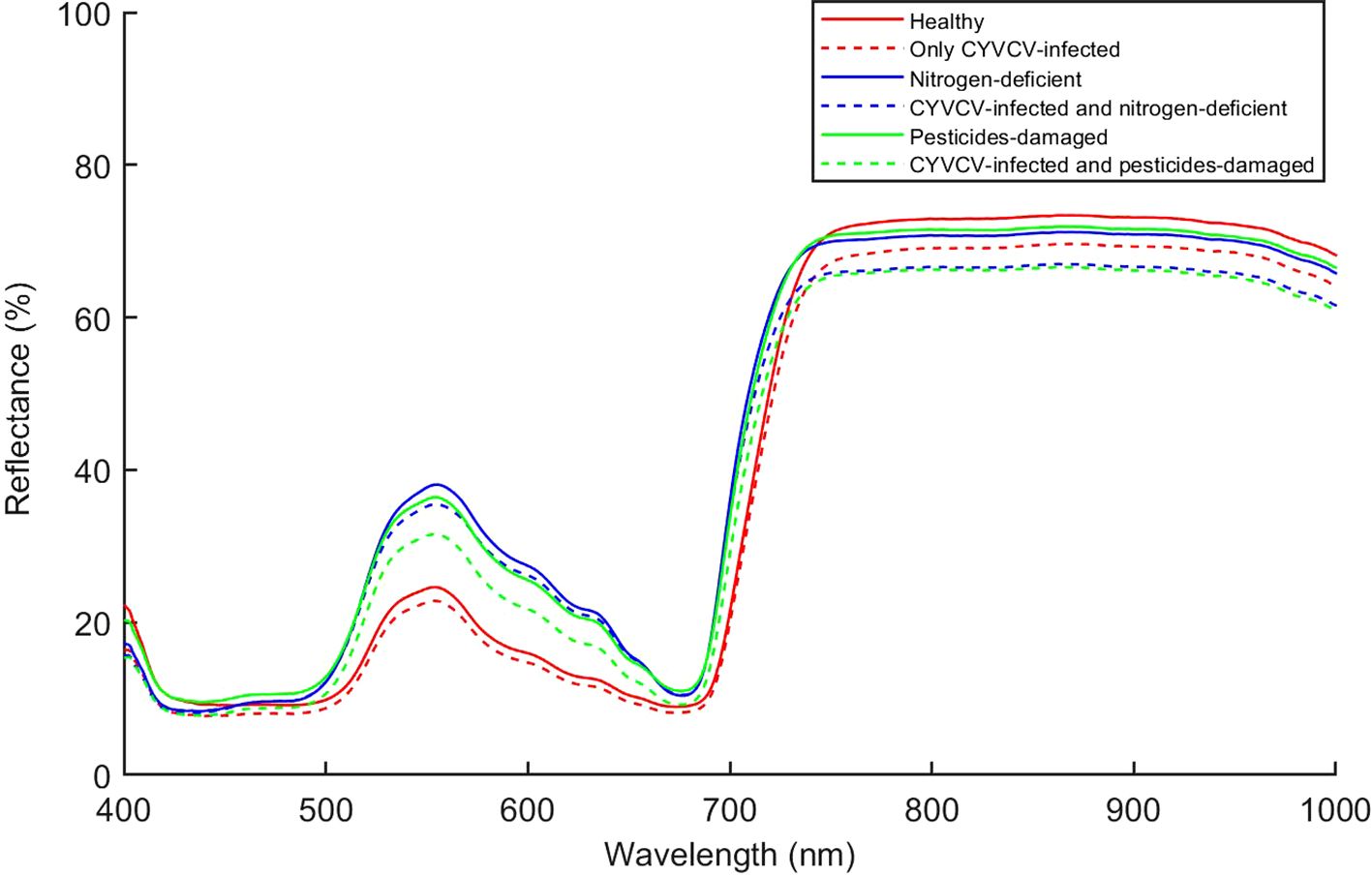

A lemon tree can often be infected with multiple diseases at the same time. In this study, we analyzed lemon leaves affected by composite disorders, including YVCD, in field environments. Figure 7 shows the average spectral reflectance of the different leaf types. The average spectral curves are generally similar and exhibit the typical spectral reflectance characteristics of green plants (Gates et al., 1965; Gitelson and Merzlyak, 1996). Within the 500–680 nm range, the spectral reflectance of healthy leaves and of leaves infected only with CYVCV is significantly lower than that of the other leaf types. This phenomenon is associated with differences in the chlorophyll content of these leaves (Gitelson et al., 2003). Within the 750–1000 nm range, uninfected leaves show significantly higher reflectance than CYVCV-infected leaves, which results from changes in leaf cell structure and water content caused by CYVCV (Peñuelas et al., 1993; Wang et al., 2022). Across the full band range, the spectral reflectance curves of some leaf types are similar and even overlap at certain wavelengths. These findings indicate that the average spectral reflectance differs among leaf types, so identifying YVCD from leaf spectral information is feasible. However, to achieve higher identification accuracy, it is necessary to further extract and effectively utilize spectral features.

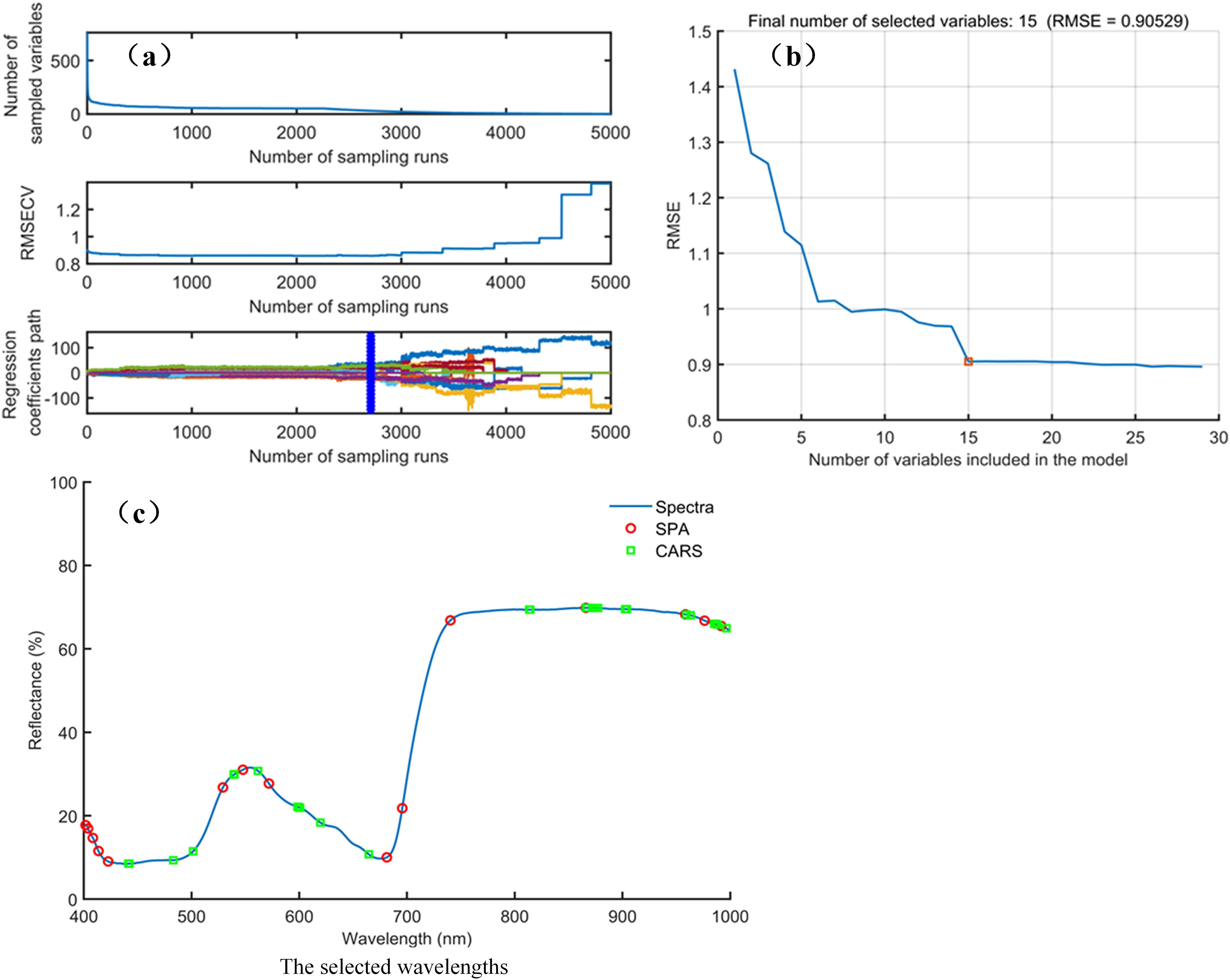

The processes and results of selecting characteristic wavelengths for distinguishing the different types of lemon leaves using SPA and CARS are shown in Figure 8. In the CARS process (Figure 8a), the number of selected wavelengths gradually decreases as the number of sampling runs increases. The lowest root mean square error of cross-validation (RMSECV) was obtained at the 2708th sampling run. CARS selected 30 characteristic wavelengths (441, 442, 483, 501, 539, 540, 562, 598, 599, 600, 601, 620, 665, 813, 814, 866, 867, 872, 875, 877, 902, 904, 959, 963, 985, 986, 987, 988, 996, and 1001 nm), primarily distributed in the 441–665 nm and 813–1001 nm ranges (Figure 8c). In the SPA process (Figure 8b), the root mean square error of prediction (RMSEP) decreases as the number of selected variables increases, and it showed no further significant decrease once 15 wavelengths had been chosen. SPA therefore selected 15 characteristic wavelengths (402, 404, 408, 414, 423, 529, 548, 572, 681, 696, 740, 866, 958, 976, and 992 nm), distributed around the “green peak” (550 nm), the “red edge” (680–750 nm), and the “high reflection platform” (750–1000 nm) (Figure 8c). Because the two algorithms use different selection criteria, the numbers and positions of the characteristic wavelengths they selected were not consistent.
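
The core of SPA, the projection step that favours wavelengths with minimal collinearity, can be sketched in NumPy as follows. This is a simplified illustration, not the MATLAB procedure used in the study: the function name, the fixed starting column, and the synthetic data are assumptions, and the sketch omits the validation stage in which SPA compares candidate subsets by RMSEP to choose the starting wavelength and the number of variables.

```python
import numpy as np

def spa_select(X, n_select, start=0):
    """Projection phase of SPA: greedily pick weakly collinear columns of X."""
    X = np.asarray(X, dtype=np.float64)
    Xp = X - X.mean(axis=0)                 # mean-centre each wavelength
    selected = [start]
    for _ in range(n_select - 1):
        v = Xp[:, selected[-1]]
        # Project every column onto the subspace orthogonal to the last pick.
        Xp = Xp - np.outer(v, v @ Xp) / (v @ v)
        norms = np.linalg.norm(Xp, axis=0)
        norms[selected] = -1.0              # never pick the same column twice
        selected.append(int(np.argmax(norms)))
    return selected

# Synthetic "spectra": column 1 is collinear with column 0, column 2 is new
# information, and column 3 is a weak mixture of the two.
rng = np.random.default_rng(0)
a = rng.normal(size=50)
b = rng.normal(size=50)
X = np.column_stack([a, 2.0 * a, b, 0.1 * b + 0.01 * a])
picked = spa_select(X, n_select=2, start=0)
```

Starting from column 0, the sketch skips column 1, which carries no new information, and picks column 2 instead, mirroring how SPA avoids redundant neighbouring wavelengths.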

Figure 8. The process and results of feature wavelength extraction. (a) The process of CARS; (b) The process of SPA; (c) The selected wavelengths.

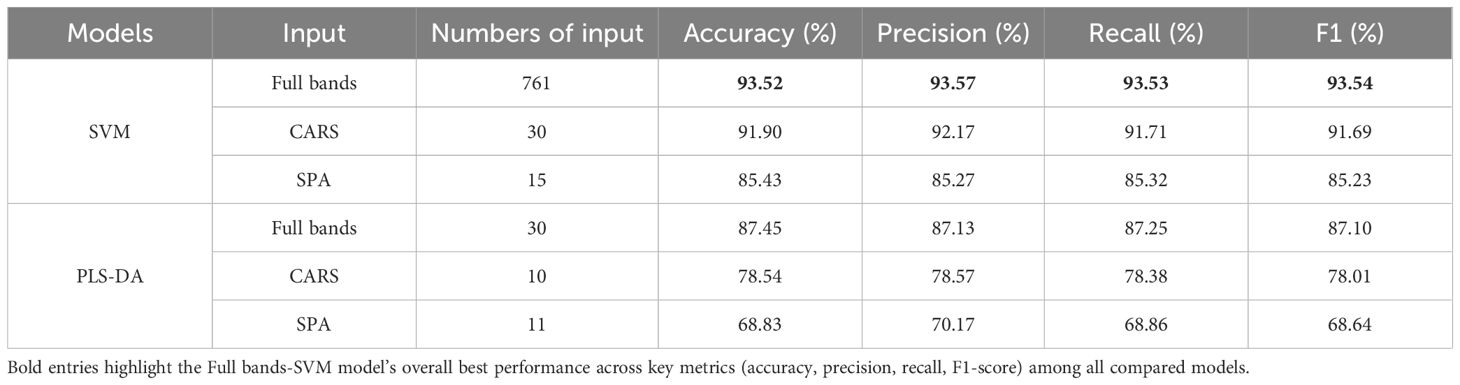

3.2 Results of SVM and PLS-DA

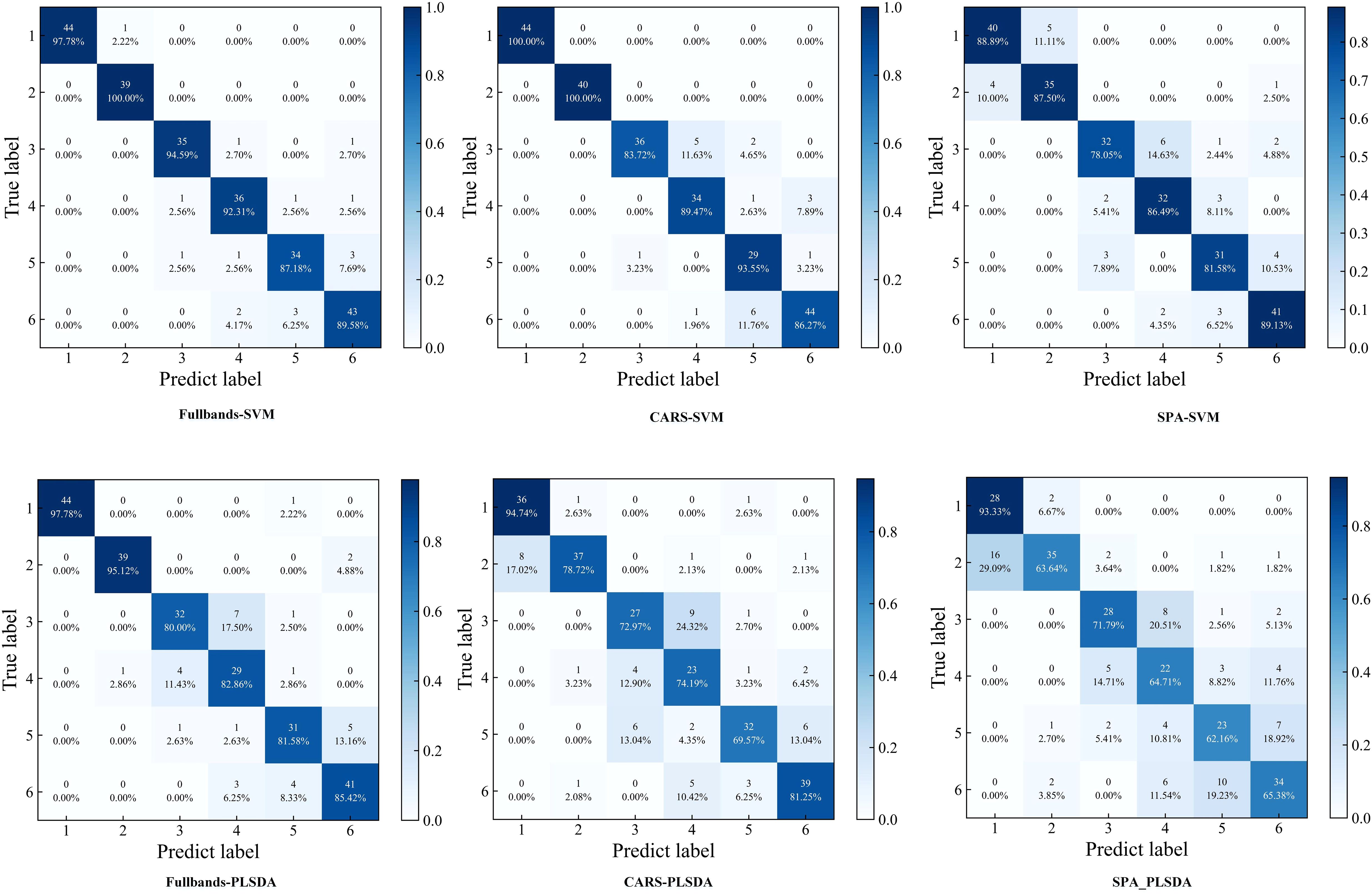

The prediction results of SVM and PLS-DA for identifying YVCD using full-wavelength and characteristic-wavelength spectral information are shown in Table 1 and Figure 9. The SVM and PLS-DA models using the full-band spectral information (761 wavelengths) achieved the best prediction results, with accuracies of 93.52% and 87.45%, respectively, together with high precision (93.57% and 87.13%), recall (93.53% and 87.25%), and F1-scores (93.54% and 87.10%), indicating balanced classification performance. The SPA-SVM and SPA-PLS-DA models built on the 15 characteristic wavelengths extracted by SPA achieved the worst prediction results, with accuracies of only 85.43% and 69.83%, respectively. Their precision (85.27% and 70.17%), recall (85.32% and 68.86%), and F1-scores (85.23% and 68.64%) also declined notably, suggesting poor generalization ability for these simplified models. Extracting characteristic wavelengths with CARS or SPA did not improve identification performance. A likely reason is that selecting only a subset of wavelengths discards some spectral information relevant to disease identification, which lowers model performance (Qiao et al., 2022). The CARS-SVM model built with the 30 wavelengths selected by CARS achieved a prediction accuracy of 91.90%, close to that of the full-band SVM. Because CARS reduced the input from 761 wavelengths to 30, it greatly reduced the complexity of inputs and computations, which is significant for reducing computational costs in practical applications. Therefore, the CARS-SVM model offers the best trade-off between accuracy and input size among the spectral-data models.

Figure 9. Confusion matrices of SVM and PLS-DA models. (1: healthy; 2: CYVCV-infected only; 3: nitrogen-deficient; 4: nitrogen-deficient + CYVCV-infected; 5: pesticide-damaged; 6: pesticide-damaged + CYVCV-infected).

The confusion matrix showed that the models based on full-bands and CARS achieved the highest accuracy in identifying healthy and only CYVCV-infected leaves in the test set. All models exhibited misclassifications among nitrogen-deficient, nitrogen-deficient + CYVCV-infected, pesticide-damaged, and pesticide-damaged + CYVCV-infected leaves. This misclassification is linked to the chlorophyll content of the samples. Studies have demonstrated that the chlorophyll content of healthy and that of only CYVCV-infected leaves are significantly higher than that of other categories, whereas the difference in chlorophyll content among other categories is less pronounced (Wang et al., 2022; Li et al., 2022). As shown by the spectral curves in Figure 7, the spectral reflectance of healthy and only CYVCV-infected leaves within the 400–700 nm wavelength range—closely associated with chlorophyll content—is significantly lower than that of other leaves. In contrast, the differences in spectral reflectance between the other types were not significant within 400–700 nm range. In SPA-SVM, healthy leaves were often confused with only CYVCV-infected leaves. This is attributed to the minimal difference in the chlorophyll content of these two types. Furthermore, the SPA only selected four wavelengths (866, 958, 976, and 992 nm) within the 800–1000 nm range, which is related to the leaf tissue structure. These four wavelengths may be insufficient to fully distinguish between all healthy and only CYVCV-infected leaves. These results suggest that spectral information-based classification models can achieve high accuracy in identifying plant leaves with significantly reduced chlorophyll contents, but are less effective in distinguishing complex diseases.

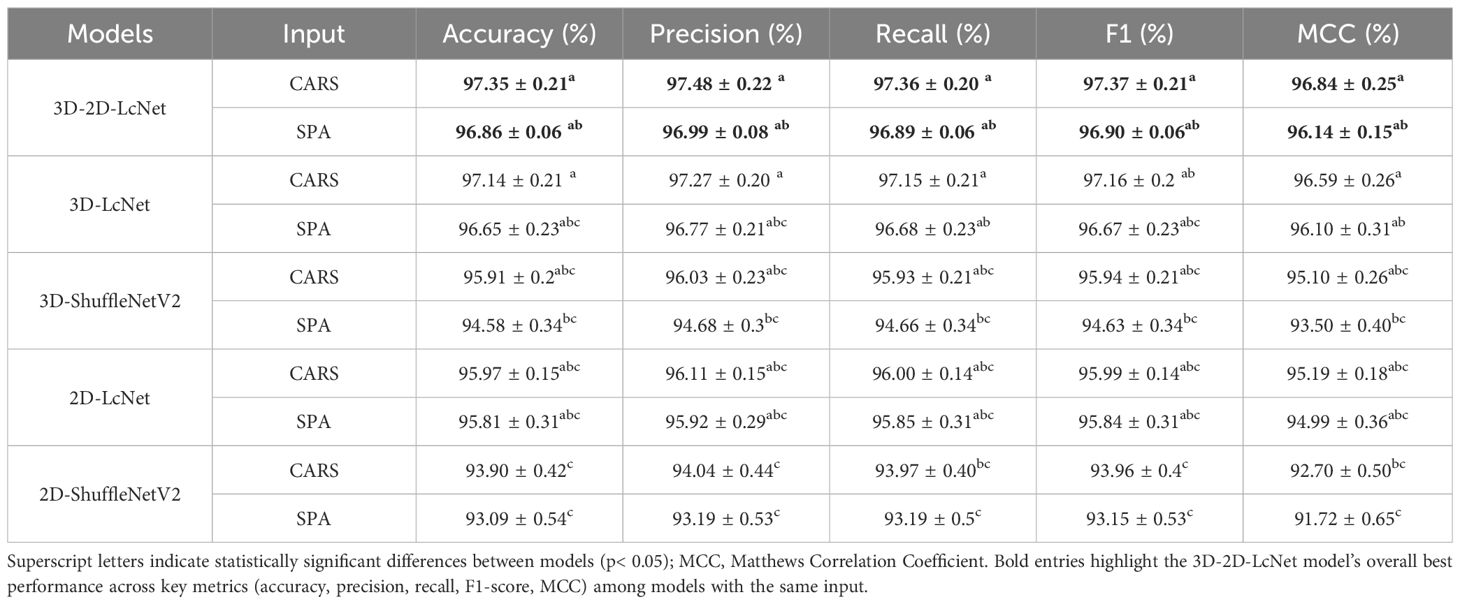

3.3 Results of CNNs

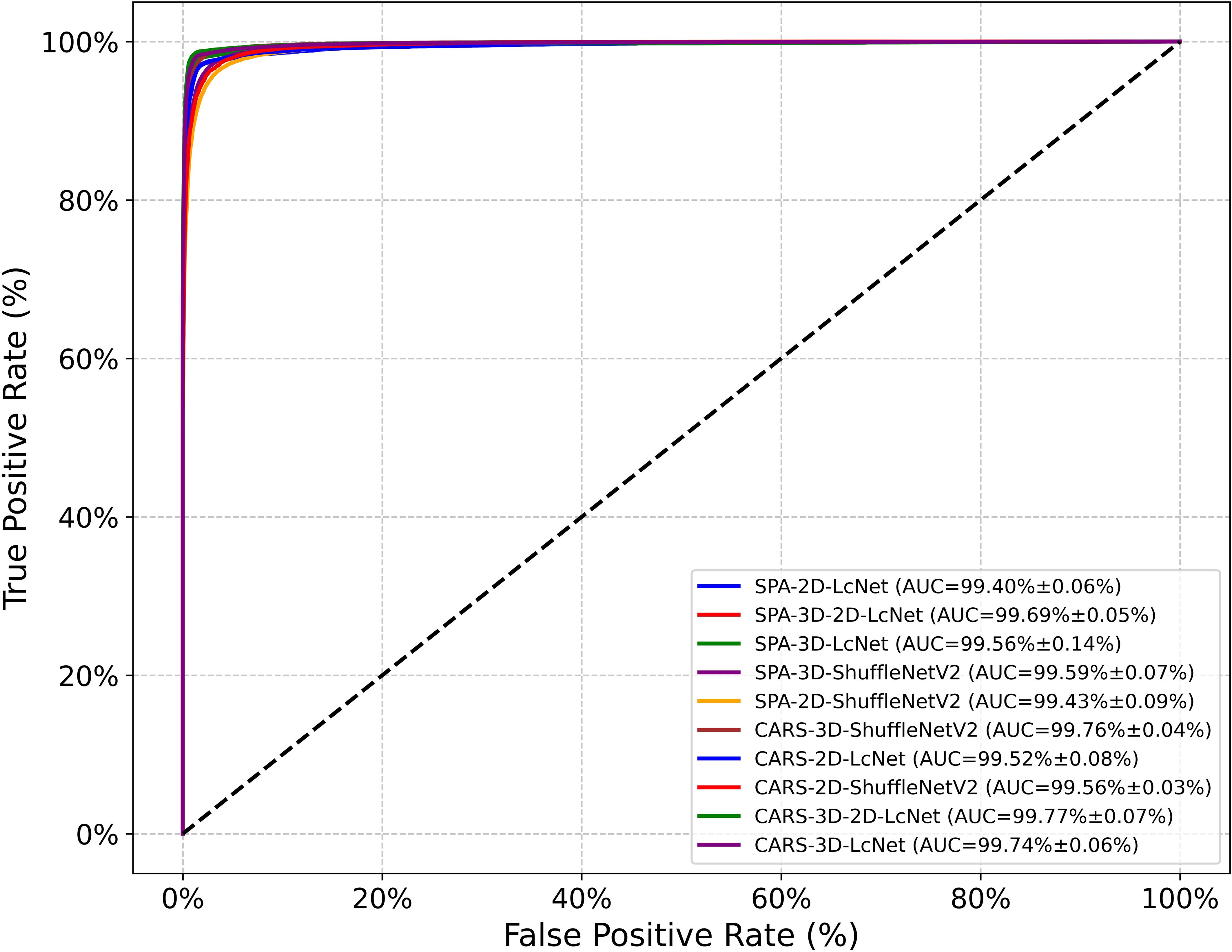

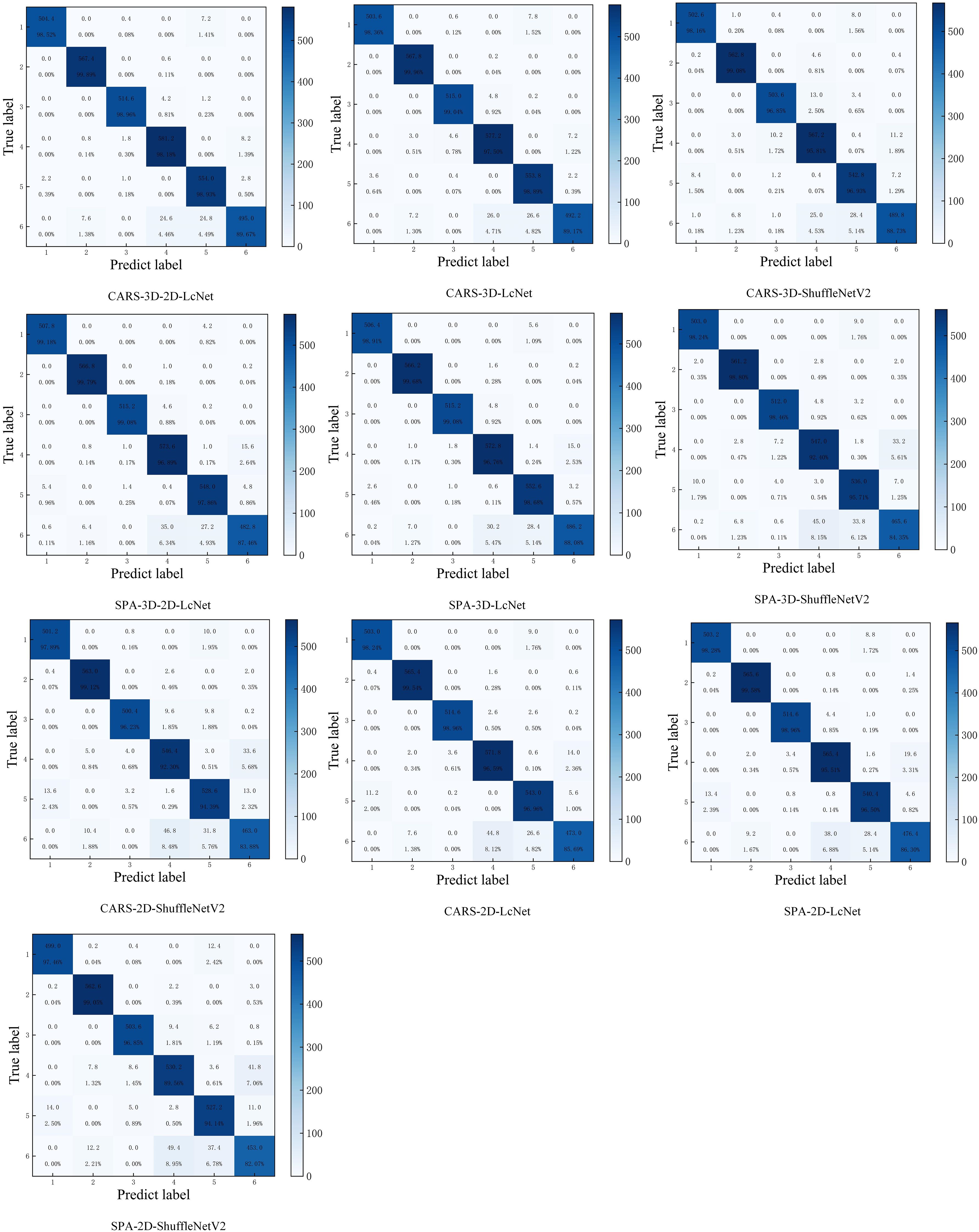

In this study, hyperspectral images composed of gray images at the SPA and CARS feature wavelengths were used as the dataset to train the CNN models. The performance evaluation metrics of the CNN models on the testing set are presented in Tables 2 and 3 and Figure 10. The mean accuracy, precision, recall, F1-score, and MCC values of all CNN-based models exceeded 91.72%, with the 3D-2D-LcNet model demonstrating the highest overall performance. Specifically, for CARS input, 3D-2D-LcNet achieved 97.35 ± 0.21% accuracy, 97.48 ± 0.22% precision, 97.36 ± 0.20% recall, 97.37 ± 0.21% F1-score, and 96.84 ± 0.25% MCC. These values surpass those of both 2D counterparts (2D-LcNet: 95.97 ± 0.15% accuracy; 2D-ShuffleNetV2: 93.90 ± 0.42% accuracy) and other 3D models (3D-ShuffleNetV2: 95.91 ± 0.20% accuracy). For SPA input, the 3D-2D-LcNet outperformed all 2D and most 3D architectures across metrics, with 96.86 ± 0.06% accuracy, 96.99 ± 0.08% precision, 96.89 ± 0.06% recall, 96.90 ± 0.06% F1-score, and 96.14 ± 0.15% MCC. The performance differences among the models are also evident in their ROC curves (Figure 10). The area under the curve (AUC) is a key metric for evaluating the performance of binary classification models based on ROC curves. As shown in the ROC curves of the CNN models, the 3D-2D-LcNet models demonstrated superior classification performance. Specifically, the CARS-3D-2D-LcNet model achieved the highest AUC of 99.77 ± 0.07%, exceeding its 2D counterpart (CARS-2D-LcNet, 99.52 ± 0.08%) and marginally exceeding other 3D models such as CARS-3D-ShuffleNetV2 (99.76 ± 0.04%). The SPA-3D-2D-LcNet model also excelled with an AUC of 99.69 ± 0.05%, surpassing SPA-2D models (e.g., SPA-2D-ShuffleNetV2, 99.43 ± 0.09%) and highlighting the robustness of the 3D-2D-LcNet architecture across different input wavelength sets.
This performance advantage is attributed to the model's ability to use 3D convolution to capture joint spatial-spectral features, which enhances its discriminative power between positive and negative classes compared to traditional 2D models. Collectively, the ROC curve analysis further validates that the 3D-2D-LcNet model outperforms the other architectures, consistent with the superior accuracy, precision, F1-score, and MCC values. These results demonstrate that the hybrid 3D-2D-LcNet effectively extracts both spectral and spatial features from hyperspectral images, thereby making better use of the advantages of hyperspectral imaging.
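Because MCC is reported here for a six-class problem, it may help to see how a multiclass MCC can be computed from a confusion matrix. The sketch below uses a widely used multiclass generalization of the Matthews correlation coefficient; whether the authors used exactly this formulation is an assumption, and both matrices are hypothetical illustration data.

```python
import math


def multiclass_mcc(cm):
    """Multiclass MCC from a confusion matrix (rows = true, columns = predicted)."""
    n = len(cm)
    s = sum(sum(row) for row in cm)                              # total samples
    c = sum(cm[k][k] for k in range(n))                          # correctly classified
    t = [sum(cm[k]) for k in range(n)]                           # true count per class
    p = [sum(cm[i][k] for i in range(n)) for k in range(n)]      # predicted count per class
    numerator = c * s - sum(pk * tk for pk, tk in zip(p, t))
    denominator = math.sqrt(
        (s * s - sum(pk * pk for pk in p)) * (s * s - sum(tk * tk for tk in t))
    )
    return numerator / denominator if denominator else 0.0


# Hypothetical matrices, for illustration only.
perfect_cm = [[5, 0], [0, 5]]
toy_cm = [[9, 1, 0], [1, 8, 1], [0, 2, 8]]

mcc_perfect = multiclass_mcc(perfect_cm)
mcc_toy = multiclass_mcc(toy_cm)
print(f"perfect classifier MCC = {mcc_perfect:.4f}")
print(f"toy classifier MCC     = {mcc_toy:.4f}")
```

Unlike accuracy, this score uses the full row and column totals, so it penalizes a model that systematically over-predicts one class.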

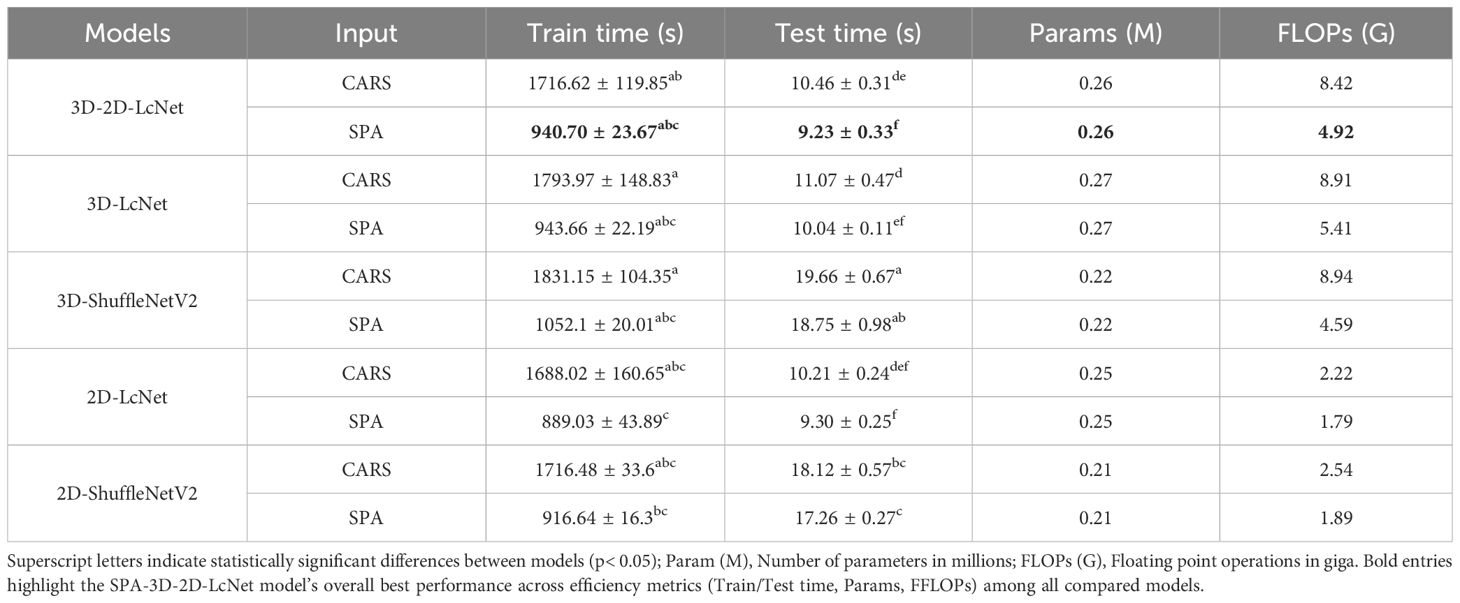

In terms of model complexity, the parameter counts of the 3D convolution models (3D-2D-LcNet, 3D-LcNet, and 3D-ShuffleNetV2) were not significantly higher than those of their 2D counterparts. For instance, the 3D-2D-LcNet and 3D-LcNet have 0.26 million and 0.27 million parameters, respectively, which is comparable to the 0.25 million parameters of 2D-LcNet. Regarding computational cost, the 3D convolution models (3D-LcNet and 3D-ShuffleNetV2) exhibited higher FLOPs, training time, and testing time than the 2D models (2D-LcNet and 2D-ShuffleNetV2). Specifically, the 3D-LcNet required 1,793.97 seconds of training time on the CARS dataset, which is approximately 6.3% longer than the CARS-2D-LcNet (1,688.02 seconds). However, the hybrid 3D-2D-LcNet had a distinct advantage: its training and testing times on both the CARS and SPA datasets were shorter than those of the pure 3D models. It mitigated the cost of 3D convolution by reducing FLOPs by 5.5% (8.42G vs. 8.91G) compared to 3D-LcNet while maintaining comparable accuracy.
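The relative differences quoted in this paragraph can be re-derived from the reported raw values. The short sketch below does that arithmetic; the helper name `percent_change` is introduced here for illustration only, and the inputs are the training times and FLOPs stated in the text.

```python
def percent_change(new, reference):
    """Relative change of `new` with respect to `reference`, in percent."""
    return (new - reference) / reference * 100.0


# Training time on the CARS dataset: 3D-LcNet vs. 2D-LcNet (seconds).
train_overhead = percent_change(1793.97, 1688.02)

# FLOPs: hybrid 3D-2D-LcNet vs. 3D-LcNet (G); the sign is flipped to report a saving.
flops_saving = -percent_change(8.42, 8.91)

print(f"3D-LcNet training-time overhead: {train_overhead:.2f}%")
print(f"3D-2D-LcNet FLOPs saving:        {flops_saving:.2f}%")
```

With these inputs the training-time overhead comes out at roughly 6.3% and the FLOPs saving at roughly 5.5%.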

The dimensionality of hyperspectral images affects both computational load and recognition accuracy. In this study, we compared the effects of hyperspectral image datasets at different characteristic wavelengths on CNN model training and performance. In terms of complexity, CNN models based on CARS required significantly more computational resources than those based on SPA, particularly for CNNs incorporating 3D convolution modules. Regarding predictive performance, the evaluation metrics revealed no significant differences between models with the same CNN architecture but different training datasets. These results demonstrate that the hybrid 3D-2D-LcNet effectively balances computational complexity with feature extraction efficacy. Its robustness to spectral data of different wavelengths and its efficient inference make it a versatile solution for hyperspectral image analysis.
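To make the notion of a wavelength-reduced dataset concrete, the sketch below shows one plausible way to build it: map each selected wavelength to the nearest sensor band, then keep only those bands for every pixel. This is an illustration rather than the authors' pipeline. The evenly spaced 400–1000 nm grid of 761 bands is an assumption (the actual sensor grid may differ), the function names are hypothetical, and the four target wavelengths are the SPA-selected near-infrared wavelengths named earlier in this section.

```python
def nearest_band_indices(wavelengths, targets):
    """For each target wavelength, return the index of the closest sensor band."""
    return [
        min(range(len(wavelengths)), key=lambda i: abs(wavelengths[i] - t))
        for t in targets
    ]


def select_bands(cube, band_indices):
    """cube[row][col][band] -> same layout, keeping only the chosen bands."""
    return [[[pixel[b] for b in band_indices] for pixel in row] for row in cube]


# Assumed sensor grid: 761 evenly spaced bands from 400 to 1000 nm.
N_BANDS = 761
START_NM, END_NM = 400.0, 1000.0
STEP_NM = (END_NM - START_NM) / (N_BANDS - 1)
wavelengths = [START_NM + i * STEP_NM for i in range(N_BANDS)]

# SPA-selected wavelengths in the 800-1000 nm range, as listed in the text.
spa_nir = [866, 958, 976, 992]
idx = nearest_band_indices(wavelengths, spa_nir)

# Tiny placeholder cube (2 x 2 pixels, all zeros) standing in for a real image.
cube = [[[0.0] * N_BANDS for _ in range(2)] for _ in range(2)]
reduced = select_bands(cube, idx)
print(idx, len(reduced[0][0]))
```

The same selection applied with 30 CARS wavelengths or 15 SPA wavelengths yields cubes whose spectral depth differs by a factor of two, which is the source of the cost difference discussed above.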

The confusion matrices (Figure 11) show that all the models achieved classification accuracies ranging from 93.07% to 100% for five sample categories: healthy, CYVCV-infected only, nitrogen-deficient, nitrogen-deficient + CYVCV-infected, and pesticide-damaged. However, the classification accuracy for pesticide-damaged + CYVCV-infected samples ranged from 77.17% to 89.86%, with misclassifications into the CYVCV-infected only, nitrogen-deficient + CYVCV-infected, and pesticide-damaged categories. Overall, both traditional hyperspectral image classification methods and CNNs showed lower accuracy in identifying pesticide-damaged + CYVCV-infected samples, which were easily confused with CYVCV-infected only, nitrogen-deficient + CYVCV-infected, and pesticide-damaged samples. This may be due to overlapping spectral features and insufficient sensitivity to subtle biochemical and structural variations. When the pesticide-damage characteristics of a sample were not obvious, it was misclassified as CYVCV-infected, specifically as CYVCV-infected only or nitrogen-deficient + CYVCV-infected. When the CYVCV-infection characteristics were not obvious, it was misclassified as pesticide-damaged. Only when both the pesticide-damage and CYVCV-infection characteristics were prominent was it correctly classified as pesticide-damaged + CYVCV-infected.

Figure 11. Confusion matrices of CNN models. The confusion matrices were generated by averaging the results of five repeated runs (1: healthy; 2: CYVCV-infected only; 3: nitrogen-deficient; 4: nitrogen-deficient + CYVCV-infected; 5: pesticide-damaged; 6: pesticide-damaged + CYVCV-infected).

In summary, among all CNN models, the CARS-3D-2D-LcNet achieved the highest accuracy of 97.35 ± 0.21%, with no statistically significant difference from the SPA-3D-2D-LcNet. The SPA-3D-2D-LcNet, however, achieved a better balance between accuracy and efficiency by reducing computational cost by 41.6% (4.92G vs. 8.42G FLOPs) and accelerating inference by 11.8%. Acquiring and transmitting high-dimensional hyperspectral images in practical deployment places higher demands on device storage, transmission, and computational capabilities. In resource-constrained scenarios, the SPA-3D-2D-LcNet significantly reduces deployment complexity and cost while maintaining nearly equivalent accuracy. Although the CARS-3D-2D-LcNet demonstrated peak performance under laboratory conditions, the SPA-3D-2D-LcNet better matches real-world requirements for limited resources and real-time operation, offering a practical solution that balances accuracy, efficiency, and cost.

3.4 Comparison between deep learning and machine learning

Traditional hyperspectral image classification methods that use spectral information and machine learning are still widely utilized (Abdulridha et al., 2019; Qiao et al., 2022). Our results indicated a notable performance gap between CNN-based models and traditional machine learning models. The CNN-based models achieved accuracies ranging from 93.90% to 97.35%. Among them, the CARS-3D-2D-LcNet model showed high accuracy across classes, with most samples in each class being correctly classified. It achieved accuracies above 98.13% for healthy, CYVCV-infected only, nitrogen-deficient, nitrogen-deficient + CYVCV-infected, and pesticide-damaged samples, and maintained 89.67% accuracy for pesticide-damaged + CYVCV-infected samples. In contrast, the accuracies of machine learning-based models ranged from 68.83% to 93.52%. Among these, the full-band SVM performed best: it achieved accuracies of 87.18–97.78% for healthy, CYVCV-infected only, nitrogen-deficient, nitrogen-deficient + CYVCV-infected, and pesticide-damaged samples, but only 85.29% of pesticide-damaged + CYVCV-infected samples were correctly classified. This highlights that CNNs, with their ability to automatically extract hierarchical spatial-spectral features, outperform traditional methods in classifying complex composite diseases based on hyperspectral images. Meanwhile, traditional methods, which rely mainly on hand-engineered spectral features, can still deliver acceptable results for single-disease identification.

Although these CNN models achieved good performance in identifying YVCD from hyperspectral images, high-dimensional hyperspectral data requires substantial computational power for training. In 3D-CNNs in particular, the computational complexity increases sharply, because each kernel also slides along the spectral dimension. The high memory consumption and slow computation of CNN models during training and inference also make them difficult to deploy on portable or edge devices (such as field monitoring devices). The proposed 3D-2D-LcNet, which combines lightweight modules and hybrid 3D-2D convolutions, alleviates the computational burden of traditional 3D-CNNs to some extent. However, its computational cost is still relatively high, and a gap remains before it can be deployed on-site. In contrast, machine learning models have clear advantages in field applications owing to their low computational complexity, low memory usage, and fast computation. They do not require large amounts of labeled data and can perform classification using manually designed spectral features (such as spectral indices and band combinations), making them suitable for rapid deployment in resource-limited environments. This may explain why traditional hyperspectral image classification methods are still widely employed. However, the traditional methods do not fully exploit the subtle spectral-spatial associations in hyperspectral data and cannot achieve satisfactory classification accuracy for complex composite diseases. Moreover, the performance of machine learning models can drop significantly when the spectral data distribution shifts due to changes in environmental conditions. Although CNNs demand more computational resources, their end-to-end learning mechanism can adapt automatically to data changes, which makes them competitive for disease identification based on hyperspectral images.
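The cost gap between 2D and 3D convolution can be illustrated by counting multiply-accumulate operations (MACs) for a single layer. The sketch below is a back-of-the-envelope comparison under stated assumptions: stride 1, "same" padding, bias terms ignored, and hypothetical layer sizes (30 bands, a 64 × 64 feature map, 16 filters, kernel size 3) that are not taken from the paper's architecture.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, k):
    """MACs of one 2D convolution layer with a k x k kernel."""
    return h_out * w_out * c_out * c_in * k * k


def conv3d_macs(d_out, h_out, w_out, c_in, c_out, k):
    """MACs of one 3D convolution layer with a k x k x k kernel."""
    return d_out * h_out * w_out * c_out * c_in * k ** 3


# Hypothetical layer configuration, for illustration only.
BANDS, H, W, FILTERS, K = 30, 64, 64, 16, 3

# 2D view: the spectral bands are treated as input channels.
macs_2d = conv2d_macs(H, W, c_in=BANDS, c_out=FILTERS, k=K)

# 3D view: one input channel, the bands form the depth axis the kernel slides along.
macs_3d = conv3d_macs(BANDS, H, W, c_in=1, c_out=FILTERS, k=K)

print(f"2D conv: {macs_2d:,} MACs")
print(f"3D conv: {macs_3d:,} MACs ({macs_3d / macs_2d:.1f}x)")
```

Under these assumptions the 3D layer costs K times the 2D layer, and its output retains a spectral depth of 30 that every following 3D layer must also process. Switching to 2D convolutions after a few 3D layers, as hybrid designs do, removes that depth axis from the later layers.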

4 Conclusion

In this study, we explored efficient and accurate methods for identifying YVCD in lemons using hyperspectral images. The results indicate that the four CNN architectures used in this study significantly outperformed traditional machine learning methods for YVCD identification. Among them, the proposed 3D-2D-LcNet stands out. By integrating spatial and spectral feature extraction, it balances computational complexity and feature extraction efficiency, reducing the computational load relative to pure 3D models while maintaining high accuracy. The CARS-3D-2D-LcNet model demonstrated the best performance in identifying YVCD but faces challenges in practical deployment due to its substantial computational demands and high-dimensional data requirements. In contrast, the SPA-3D-2D-LcNet achieves comparable performance with 41.6% lower FLOPs and 50% lower data dimensionality, which is promising for field application of this hyperspectral image-based disease detection method. In conclusion, leveraging advanced CNN architectures can enhance the performance of hyperspectral imaging-based disease detection models. The 3D-2D-LcNet offers an effective and accurate approach for the identification of YVCD in lemons and shows potential for practical applications in agricultural disease detection.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

XL: Software, Writing – original draft, Methodology. FP: Writing – review & editing, Data curation. ZW: Writing – review & editing, Data curation. GH: Writing – review & editing, Supervision.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Natural Science Foundation of Chongqing (Grant No. CSTB2023NSCQ-MSX1018), the Municipal Financial Scientific Research Project of Chongqing (Grant No. cqaas2023sjczqn001), the Chongqing Performance Incentive and Guidance Project for Scientific Research Institutions (Grant Nos. cstc2020jxjl80008 and cstc2022jxjl80021), the Chongqing Municipal Financial Funds Technology Innovation Project (Grant No. KYLX20240500026), and the Chongqing Modern Agricultural Industry Technology System (Grant No. CQMAITS202305).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdulridha, J., Batuman, O., and Ampatzidis, Y. (2019). UAV-based remote sensing technique to detect citrus canker disease utilizing hyperspectral imaging and machine learning. Remote Sens. 11, 1373. doi: 10.3390/rs11111373

Afloukou, F. and Önelge, N. (2021). Occurrence and molecular characterisation of citrus yellow vein clearing virus isolates in Adana province, Turkey. Physiol. Mol. Plant Pathol. 115, 101655. doi: 10.1016/j.pmpp.2021.101655

Barnes, R. J., Dhanoa, M. S., and Lister, S. J. (1989). Standard normal variate transformation and de-trending of near-infrared diffuse reflectance spectra. Appl. Spectrosc. 43, 772–777. doi: 10.1366/0003702894202201

Boser, B. E., Guyon, I. M., and Vapnik, V. N. (1992). “A training algorithm for optimal margin classifiers,” in Proceedings of the fifth annual workshop on Computational learning theory, 144–152. doi: 10.1145/130385.130401

Calderón, R., Navas-Cortés, J. A., Lucena, C., and Zarco-Tejada, P. J. (2013). High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 139, 231–245. doi: 10.1016/j.rse.2013.07.031

Chen, S.-Y., Chiu, M.-F., and Zou, X.-W. (2022). Real-time defect inspection of green coffee beans using NIR snapshot hyperspectral imaging. Comput. Electron. Agric. 197, 106970. doi: 10.1016/j.compag.2022.106970

Chen, B., Li, N., and Bao, W. (2025). CLPr_in_ML: cleft lip and palate reconstructed features with machine learning. Curr. Bioinform. 20 (2), 179–193. doi: 10.2174/0115748936330499240909082529

Chen, H., Zhou, Y., Wang, X., Zhou, C., Yang, X., and Li, Z. (2016). Detection of Citrus yellow vein clearing virus by Quantitative Real-time RT-PCR. Hortic. Plant J. 2, 188–192. doi: 10.1016/j.hpj.2016.07.001

Gates, D. M., Keegan, H. J., Schleter, J. C., and Weidner, V. R. (1965). Spectral properties of plants. Appl. Optics 4, 11–20. doi: 10.1364/AO.4.000011

Gitelson, A. A., Gritz, Y., and Merzlyak, M. N. (2003). Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 160, 271–282. doi: 10.1078/0176-1617-00887

Gitelson, A. A. and Merzlyak, M. N. (1996). Signature analysis of leaf reflectance spectra: algorithm development for remote sensing of chlorophyll. J. Plant Physiol. 148, 494–500. doi: 10.1016/S0176-1617(96)80284-7

Guo, D., Rangjin, X., Chun, Q., Fangyun, Y., Yan, Z., and Deng, L. (2015). Diagnosis of CTV-infected leaves using hyperspectral imaging. Intelligent Automation Soft Computing 21, 269–283. doi: 10.1080/10798587.2015.1015772

Jia, Y., Shi, Y., Luo, J., and Sun, H. (2023). Y–net: identification of typical diseases of corn leaves using a 3D–2D hybrid CNN model combined with a hyperspectral image band selection module. Sensors 23, 1494. doi: 10.3390/s23031494

Jiang, Q., Wu, G., Tian, C., Li, N., Yang, H., Bai, Y., et al. (2021). Hyperspectral imaging for early identification of strawberry leaves diseases with machine learning and spectral fingerprint features. Infrared Phys. Technol. 118, 103898. doi: 10.1016/j.infrared.2021.103898

Kaya, Y. and Gürsoy, E. (2023). A novel multi-head CNN design to identify plant diseases using the fusion of RGB images. Ecol. Inf. 75, 101998. doi: 10.1016/j.ecoinf.2023.101998

Li, H., Liang, Y., Xu, Q., and Cao, D. (2009). Key wavelengths screening using competitive adaptive reweighted sampling method for multivariate calibration. Analytica Chimica Acta 648, 77–84. doi: 10.1016/j.aca.2009.06.046

Li, X., Wei, Z., Peng, F., Liu, J., and Han, G. (2022). Estimating the distribution of chlorophyll content in CYVCV infected lemon leaf using hyperspectral imaging. Comput. Electron. Agric. 198, 107036. doi: 10.1016/j.compag.2022.107036

Liu, C., Liu, H., Hurst, J., Timko, M. P., and Zhou, C. (2020). Recent Advances on Citrus yellow vein clearing virus in Citrus. Hortic. Plant J. 6, 216–222. doi: 10.1016/j.hpj.2020.05.001

Liu, Y., Zhao, X., Song, Z., Yu, J., Jiang, D., Zhang, Y., et al. (2024). Detection of apple mosaic based on hyperspectral imaging and three-dimensional Gabor. Comput. Electron. Agric. 222, 109051. doi: 10.1016/j.compag.2024.109051

Lu, J., Tan, L., and Jiang, H. (2021). Review on convolutional neural network (CNN) applied to plant leaf disease classification. Agriculture 11 (8), 707. doi: 10.3390/agriculture11080707

Lu, J., Zhou, M., Gao, Y., and Jiang, H. (2018). Using hyperspectral imaging to discriminate yellow leaf curl disease in tomato leaves. Precis. Agric. 19, 379–394. doi: 10.1007/s11119-017-9524-7

Ma, N., Zhang, X., Zheng, H. T., and Sun, J. (2018). “ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design,” in Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science, vol 11218, eds. Ferrari, V., Hebert, M., Sminchisescu, C., and Weiss, Y. (Springer, Cham). doi: 10.1007/978-3-030-01264-9_8

Martinelli, F., Scalenghe, R., Davino, S., Panno, S., Scuderi, G., Ruisi, P., et al. (2015). Advanced methods of plant disease detection. A review. Agron. Sustain. Dev. 35, 1–25. doi: 10.1007/s13593-014-0246-1

Mishra, P., Asaari, M. S. M., Herrero-Langreo, A., Lohumi, S., Diezma, B., and Scheunders, P. (2017). Close range hyperspectral imaging of plants: A review. Biosyst. Eng. 164, 49–67. doi: 10.1016/j.biosystemseng.2017.09.009

Nagasubramanian, K., Jones, S., Sarkar, S., Singh, A. K., Singh, A., and Ganapathysubramanian, B. (2018). Hyperspectral band selection using genetic algorithm and support vector machines for early identification of charcoal rot disease in soybean stems. Plant Methods 14, 86. doi: 10.1186/s13007-018-0349-9

Nagasubramanian, K., Jones, S., Singh, A. K., Sarkar, S., Singh, A., and Ganapathysubramanian, B. (2019). Plant disease identification using explainable 3D deep learning on hyperspectral images. Plant Methods 15, 98. doi: 10.1186/s13007-019-0479-8

Nguyen, C., Sagan, V., Maimaitiyiming, M., Maimaitijiang, M., Bhadra, S., and Kwasniewski, M. T. (2021). Early detection of plant viral disease using hyperspectral imaging and deep learning. Sensors 21 (3), 742. doi: 10.3390/s21030742

Ortac, G. and Ozcan, G. (2021). Comparative study of hyperspectral image classification by multidimensional Convolutional Neural Network approaches to improve accuracy. Expert Syst. Appl. 182, 115280. doi: 10.1016/j.eswa.2021.115280

Park, K., Hong, Y. K., Kim, G. H., and Lee, J. (2018). Classification of apple leaf conditions in hyper-spectral images for diagnosis of Marssonina blotch using mRMR and deep neural network. Comput. Electron. Agric. 148, 179–187. doi: 10.1016/j.compag.2018.02.025

Peñuelas, J., Filella, I., Biel, C., Serrano, L., and Savé, R. (1993). The reflectance at the 950–970 nm region as an indicator of plant water status. Int. J. Remote Sens. 14, 1887–1905. doi: 10.1080/01431169308954010

Pereira, F. M. V., Milori, D. M. B. P., Pereira-Filho, E. R., Venâncio, A. L., Russo, M., Cardinali, M., et al. (2011). Laser-induced fluorescence imaging method to monitor citrus greening disease. Comput. Electron. Agric. 79, 90–93. doi: 10.1016/j.compag.2011.08.002

Poblete, T., Navas-Cortes, J. A., Camino, C., Calderon, R., Hornero, A., Gonzalez-Dugo, V., et al. (2021). Discriminating Xylella fastidiosa from Verticillium dahliae infections in olive trees using thermal- and hyperspectral-based plant traits. ISPRS J. Photogrammetry Remote Sens. 179, 133–144. doi: 10.1016/j.isprsjprs.2021.07.014

Qi, C., Sandroni, M., Cairo Westergaard, J., Høegh Riis Sundmark, E., Bagge, M., Alexandersson, E., et al. (2023). In-field classification of the asymptomatic biotrophic phase of potato late blight based on deep learning and proximal hyperspectral imaging. Comput. Electron. Agric. 205, 107585. doi: 10.1016/j.compag.2022.107585

Qiao, M., Xu, Y., Xia, G., Su, Y., Lu, B., Gao, X., et al. (2022). Determination of hardness for maize kernels based on hyperspectral imaging. Food Chem. 366, 130559. doi: 10.1016/j.foodchem.2021.130559

Ram, B. G., Oduor, P., Igathinathane, C., Howatt, K., and Sun, X. (2024). A systematic review of hyperspectral imaging in precision agriculture: Analysis of its current state and future prospects. Comput. Electron. Agric. 222, 109037. doi: 10.1016/j.compag.2024.109037

Roy, S. K., Krishna, G., Dubey, S. R., and Chaudhuri, B. B. (2020). HybridSN: exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 17, 277–281. doi: 10.1109/LGRS.2019.2918719

Russel, N. S. and Selvaraj, A. (2022). Leaf species and disease classification using multiscale parallel deep CNN architecture. Neural Computing Appl. 34, 19217–19237. doi: 10.1007/s00521-022-07521-w

Siripatrawan, U. and Makino, Y. (2024). Hyperspectral imaging coupled with machine learning for classification of anthracnose infection on mango fruit. Spectrochimica Acta Part A: Mol. Biomolecular Spectrosc. 309, 123825. doi: 10.1016/j.saa.2023.123825

Thomas, S., Kuska, M. T., Bohnenkamp, D., Brugger, A., Alisaac, E., Wahabzada, M., et al. (2018). Benefits of hyperspectral imaging for plant disease detection and plant protection: a technical perspective. J. Plant Dis. Prot. 125, 5–20. doi: 10.1007/s41348-017-0124-6

Vallejo-Pérez, M. R., Sosa-Herrera, J. A., Navarro-Contreras, H. R., Álvarez-Preciado, L. G., Rodríguez-Vázquez, Á.G., and Lara-Ávila, J. P. (2021). Raman spectroscopy and machine-learning for early detection of bacterial canker of tomato: the asymptomatic disease condition. Plants 10 (8), 1542. doi: 10.3390/plants10081542

Wang, Y., Liao, P., Zhao, J. F., Zhang, X. K., Liu, C., Xiao, P. A., et al. (2022). Comparative transcriptome analysis of the Eureka lemon in response to Citrus yellow vein virus infection at different temperatures. Physiol. Mol. Plant Pathol. 119, 101832. doi: 10.1016/j.pmpp.2022.101832

Weng, H., Lv, J., Cen, H., He, M., Zeng, Y., Hua, S., et al. (2018). Hyperspectral reflectance imaging combined with carbohydrate metabolism analysis for diagnosis of citrus Huanglongbing in different seasons and cultivars. Sensors Actuators B: Chem. 275, 50–60. doi: 10.1016/j.snb.2018.08.020

Xuan, G., Li, Q., Shao, Y., and Shi, Y. (2022). Early diagnosis and pathogenesis monitoring of wheat powdery mildew caused by blumeria graminis using hyperspectral imaging. Comput. Electron. Agric. 197, 106921. doi: 10.1016/j.compag.2022.106921

Ye, S., Wang, D., and Min, S. (2008). Successive projections algorithm combined with uninformative variable elimination for spectral variable selection. Chemometrics Intelligent Lab. Syst. 91, 194–199. doi: 10.1016/j.chemolab.2007.11.005

Yin, H., Li, B., Liu, Y.-D., Zhang, F., Su, C.-T., and Ou-yang, A.-G. (2022). Detection of early bruises on loquat using hyperspectral imaging technology coupled with band ratio and improved Otsu method. Spectrochimica Acta Part A: Mol. Biomolecular Spectrosc. 283, 121775. doi: 10.1016/j.saa.2022.121775

Keywords: hyperspectral imaging, disease identification, lemon, machine learning, deep learning

Citation: Li X, Peng F, Wei Z and Han G (2025) Identification of yellow vein clearing disease in lemons based on hyperspectral imaging and deep learning. Front. Plant Sci. 16:1554514. doi: 10.3389/fpls.2025.1554514

Received: 02 January 2025; Accepted: 27 May 2025;

Published: 16 June 2025.

Edited by:

Pei Wang, Southwest University, China

Reviewed by:

Wenzheng Bao, Xuzhou University of Technology, China

Mikyeong Kim, Chungbuk National University, Republic of Korea

Xi Tian, Beijing Academy of Agriculture and Forestry Sciences, China

Firozeh Solimani, Politecnico di Bari, Italy

Copyright © 2025 Li, Peng, Wei and Han. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Guohui Han
