- ¹College of Agriculture, Shihezi University, Shihezi, China

- ²Research Center of Information Technology, Beijing Academy of Agriculture and Forestry Sciences, Beijing, China

- ³International PhD School, University of Almería, Almería, Spain

- ⁴College of Mechanical and Electrical Engineering, Shihezi University, Shihezi, China

Introduction: Existing facility environment prediction models often suffer from low accuracy, poor timeliness, and error accumulation in long-term predictions under multifactor nonlinear coupling conditions. These limitations significantly constrain the effectiveness of precise environmental regulation in agricultural facilities.

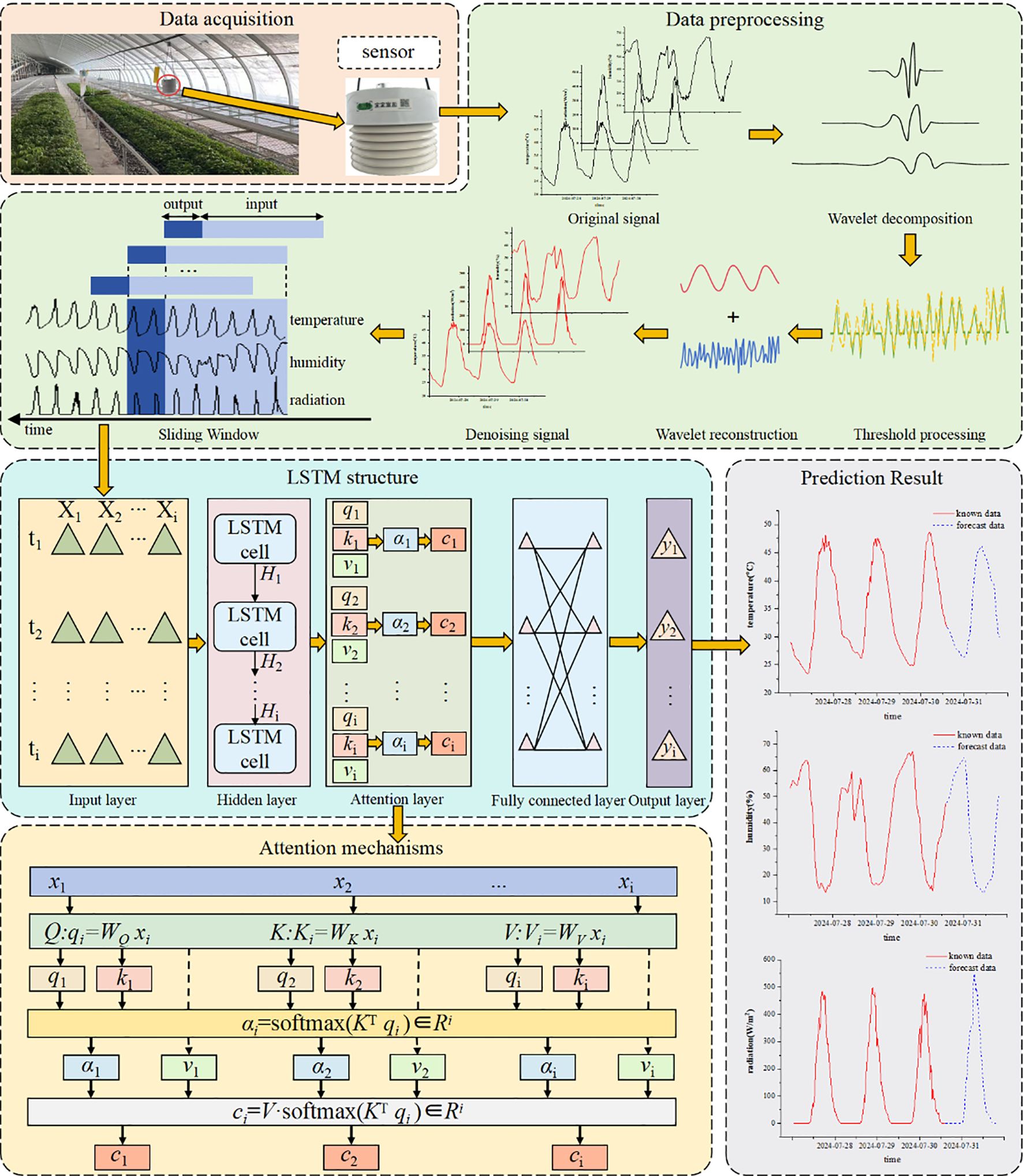

Methods: To address these challenges, this paper proposes a novel facility environment prediction model (LSTM-AT-DP) integrating Long Short-Term Memory networks with attention mechanisms and advanced data preprocessing. The model architecture employs: (1) a Data Preprocessing (DP) module combining Wavelet Threshold Denoising (WTD) for noise elimination and Sliding Window (SW) technique for feature matrix construction; (2) an LSTM core for deep temporal modeling; and (3) an Attention Mechanism (AT) for dynamic feature weighting to enhance critical temporal feature extraction.

Results: In 24-hour prediction tests, the model achieved determination coefficients (R²) of 0.9602 (temperature), 0.9529 (humidity), and 0.9839 (radiation), representing improvements of 3.89%, 5.53%, and 2.84% respectively over baseline LSTM models. Corresponding RMSE reductions were 0.6830, 1.8759, and 12.952 for these parameters.

Discussion: The results demonstrate that the LSTM-AT-DP model significantly enhances prediction accuracy while effectively suppressing error accumulation in long-term forecasts. This advancement provides robust technical support for precise facility environment regulation, with particular improvements observed in humidity prediction. The integrated attention mechanism proves particularly effective in identifying and weighting critical temporal features across all measured environmental parameters.

1 Introduction

Facility agriculture, a vital mode of modern agricultural production, addresses the limitations imposed by external environmental conditions on crop growth by creating controlled environments through engineering and technological interventions (Gao et al., 2022). The microclimate within these facilities—encompassing temperature, relative humidity, and radiation—plays a critical role in determining crop growth, yield, and quality (Tang et al., 2022; Islam et al., 2023). Specifically, temperature fluctuations can disrupt metabolic processes, excessive humidity promotes pest and disease outbreaks, and inadequate or excessive light negatively impacts photosynthesis, leading to stunted growth or leaf damage (Jeong et al., 2020; Cheng et al., 2021). These factors exhibit multifactor nonlinear coupling, significantly complicating environmental regulation. Consequently, developing accurate predictive models for facility environments is essential to enable dynamic and precise control.

Early environmental control systems in agricultural facilities primarily depended on expert knowledge to determine optimal parameters. These systems employed manual adjustments or timed controls based on real-time environmental monitoring to maintain stable growing conditions. While this approach offers operational simplicity and has been widely adopted in facility-based production, its effectiveness is limited by significant variations in facility types and crop requirements. Such limitations often lead to delayed responses and control inaccuracies (Kow et al., 2022), causing undesirable environmental fluctuations that may disrupt plant growth and yield stability.

The rapid development of sensor networks and communication technologies has revolutionized agricultural environmental monitoring and automated control systems. Contemporary methods of controlling facility environments have transitioned from traditional manual and timed operations to setpoint-based regulation and intelligent control strategies (Subahi and Bouazza, 2020; Maraveas and Bartzanas, 2021). Although these modern approaches exhibit significantly improved control precision, better crop growth outcomes, and enhanced resource efficiency relative to traditional methods, they remain incapable of implementing adaptive feedback control in dynamic environmental conditions. A critical research challenge for achieving real-time feedback control involves developing reliable prediction models capable of accurate hourly and daily forecasting of environmental parameters.

Current environmental prediction models can be categorized into three primary types: mechanistic models, computational fluid dynamics (CFD) models, and data-driven models (Zhang et al., 2024). Mechanistic models employ thermodynamic principles to analyze dynamic energy and mass transfer processes within agricultural facilities, characterizing system behavior through fundamental conservation laws (Zhang et al., 2020). For instance, Zhang et al. developed an energy balance-based thermal environment model for glass greenhouses that achieved precise air temperature prediction (Zhang et al., 2020). Similarly, Liu et al. established a transient microclimate model using thermodynamic theory to evaluate temperature and humidity variations in solar greenhouses (Liu et al., 2021). CFD models represent a specialized subset of mechanistic modeling approaches (Bournet and Rojano, 2022). In a notable application, Mao and Su combined CFD simulations with experimental measurements to analyze greenhouse temperature and humidity fields (Mao and Su, 2024).

The rapid advancement of artificial intelligence technologies has catalyzed significant progress in data-driven modeling for environmental prediction. These data-driven approaches establish predictive mapping relationships by extracting latent patterns from historical datasets, with model accuracy depending critically on both data quality and algorithmic architecture (Sansa et al., 2020; Mehdizadeh, 2018). A representative example is the model predictive control framework developed by Mahmood et al., which demonstrates robust performance in facility temperature prediction under uncertain conditions, thereby validating the practical efficacy of data-driven methods in environmental forecasting applications (Mahmood et al., 2023).

In the initial development phase of data-driven approaches, researchers predominantly employed conventional machine learning models for environmental prediction, including regression models, backpropagation (BP) neural networks, recurrent neural networks (RNN), and radial basis function neural networks. These methods typically required manual preprocessing of environmental factor data (e.g., noise filtering and feature selection) before a model could be trained for short-term prediction. However, the predictive performance of these early models was constrained by both the scale of input data and temporal distribution characteristics, making them inadequate for capturing the nonlinear dynamics inherent in complex environmental systems.

Addressing the intricate challenges posed by dynamically evolving environmental systems with multifactorial interactions, deep learning methods have demonstrated significant potential in environmental prediction due to their superior capability in characterizing high-order nonlinear relationships (Torres et al., 2021). Distinguished from conventional approaches, deep learning architectures not only autonomously extract high-level abstract features through multilayer nonlinear networks (Li et al., 2021), but also exhibit enhanced nonlinear approximation capacity, substantially reducing dependence on manual feature engineering. Among various deep learning models, the Long Short-Term Memory (LSTM) neural network (Hochreiter and Schmidhuber, 1997) has proven particularly effective in capturing temporal dependencies among environmental factors owing to its unique gating mechanism (Hou et al., 2023). Consequently, LSTM-based approaches have been extensively adopted for facility environment prediction. A notable application is the GCP_LSTM greenhouse climate prediction model developed by Liu et al., which leverages LSTM networks to model nonlinear interactions among historical environmental parameters (Liu et al., 2022).

Experimental investigations have revealed that current facility environment prediction models often demonstrate inadequate accuracy and poor timeliness when handling complex nonlinear systems. Particularly as prediction time spans increase, traditional models exhibit significant accuracy degradation due to error accumulation effects. This study presents an LSTM-AT-DP based multi-step prediction framework for facility environments, focusing on temperature, humidity, and radiation parameters. Leveraging deep learning’s strengths in temporal modeling, our approach first employs a data preprocessing module to eliminate high-frequency noise from sensor-acquired time-series data, thereby mitigating error propagation risks in long-term forecasting. The denoised temporal data is then transformed into feature matrices for deep temporal pattern extraction via gated LSTM networks. Furthermore, an attention mechanism dynamically enhances feature weights at critical temporal nodes. Through multi-step prediction parameter optimization, we ultimately construct a temporally continuous prediction model. This methodology establishes a high-fidelity mapping between environmental time-series data and multi-step prediction targets, forming an intelligent deep learning-based prediction system. The proposed framework provides reliable technical support for long-cycle precision regulation strategies in controlled agricultural environments.

2 Materials and methods

2.1 Overview of the facility environment prediction model testing process

The facility environment prediction model developed in this study addresses the complex challenge of multivariate time-series forecasting for greenhouse environmental parameters (temperature, humidity, and radiation) through advanced deep learning techniques. The framework operates through five key phases: First, distributed sensors collect real-time raw time-series data encompassing critical environmental variables including air temperature, relative humidity, and solar radiation intensity. Second, a dedicated data preprocessing stage performs noise reduction and outlier removal to enhance data quality, establishing a robust foundation for subsequent analysis. Third, the cleansed temporal data undergoes structural transformation into feature matrices for LSTM network processing, where the gated architecture provides fundamental temporal modeling capacity. Fourth, an integrated attention mechanism dynamically weights the denoised multidimensional features, enabling selective focus on critical temporal patterns while maintaining responsiveness to short-term fluctuations. Finally, the LSTM’s sophisticated gating mechanism performs deep temporal modeling and future-state prediction, complementing the attention module to achieve balanced short- and long-term dependency capture. The system outputs multi-horizon predictions (6h, 12h, and 24h) for all target environmental parameters, providing essential decision-support for precision environmental control (see Figure 1).

2.2 Overview of the experiment

The experimental study was conducted at the Xinjiang Kashgar (Shandong Shuifa) Vegetable Industry Demonstration Park (39.35°N, 76.02°E). The research facility consisted of a north-south oriented double-film double-arch greenhouse measuring 120 m in length and 18 m in width, equipped with comprehensive environmental control systems including:

1. External and internal thermal insulation screens

2. Sidewall and roof ventilation systems

3. Internal circulation fans

4. High-pressure mist cooling systems

The study utilized tomato seedlings grown in a substrate mixture of peat moss and vermiculite (2:1 v/v ratio). Experimental periods spanned from March to August 2024 and March 9 to June 26, 2025. During the early experimental phase (March), when temperatures were low, both insulation curtains were activated synergistically to maintain optimal temperatures. In the later phase (May-July), high temperatures prompted implementation of an integrated cooling strategy combining ventilation (side/top), internal air circulation, and misting systems. Throughout the study period, these precisely controlled environmental conditions were maintained to meet the developmental requirements of tomato seedlings.

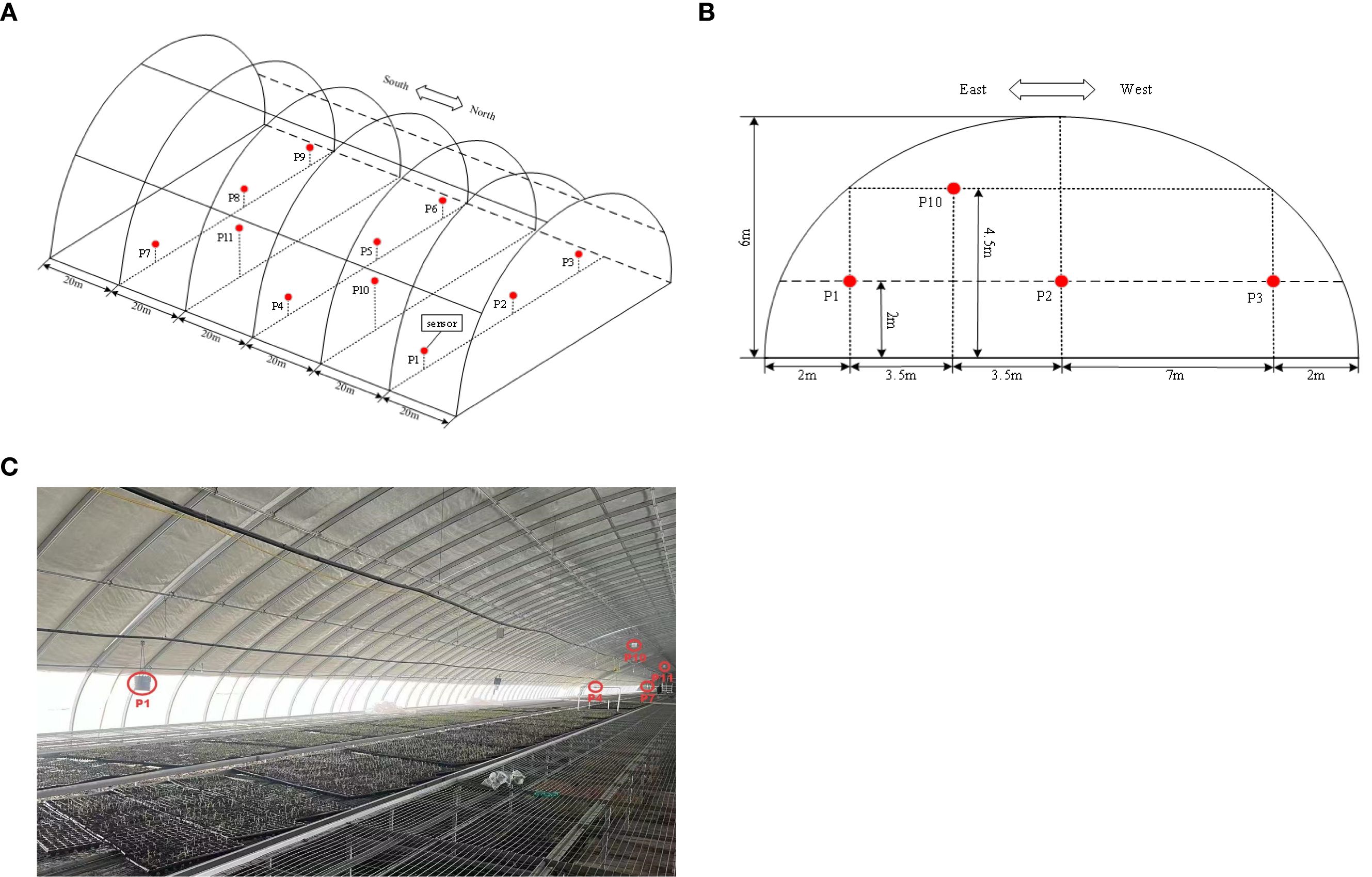

2.3 Data acquisition

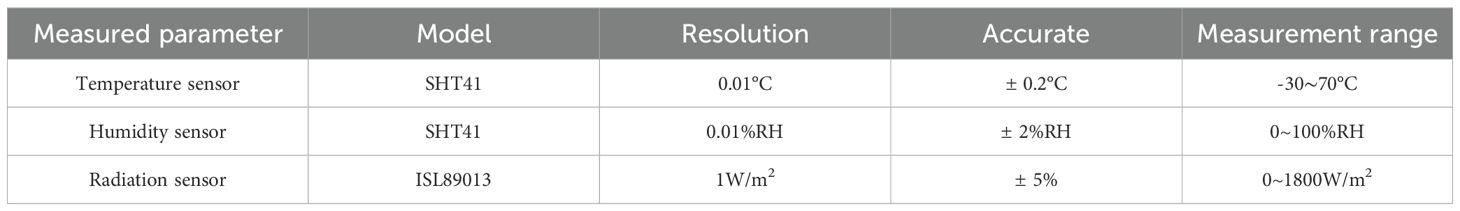

The experimental data acquisition system employed a multi-parameter environmental monitor (Nongxin Technology, Beijing) specifically designed for greenhouse applications. The system continuously recorded three critical parameters: air temperature, relative humidity, and solar radiation intensity. Eleven monitoring nodes were strategically distributed throughout the greenhouse to ensure comprehensive spatial coverage, with their precise locations illustrated in Figure 2. The complete technical specifications of all deployed sensors are systematically presented in Table 1.

Figure 2. (A) Sensor layout diagram (front view). (B) Sensor layout diagram (side view). (C) Sensor test layout diagram.

2.4 Data preprocessing

To address data quality issues arising from sensor acquisition and transmission anomalies, we implemented a rigorous two-step data preprocessing protocol:

(1) Outlier Detection:

Outliers were detected using box-whisker plots with statistically defined thresholds, as shown in Equations 1 and 2:

$$U = P_{75} + 1.5 \times IQR \quad (1)$$

$$L = P_{25} - 1.5 \times IQR \quad (2)$$

where $U$ is the upper bound, $L$ is the lower bound, $P_{75}$ is the 75th percentile, and $P_{25}$ is the 25th percentile. $IQR$ (interquartile range) is the difference between the 75th and 25th percentiles (Ritter, 2023).

All identified outliers were treated as missing values.
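The outlier step can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names, the linearly interpolated percentile, the whisker factor `k = 1.5` (the usual box-plot convention), and the sample readings are all assumptions made for the example.

```python
import math

def percentile(values, q):
    """Linearly interpolated percentile (q in [0, 100]) of a non-empty list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def mask_outliers(values, k=1.5):
    """Replace points outside [P25 - k*IQR, P75 + k*IQR] with NaN.

    k = 1.5 is the conventional whisker factor (an assumption here).
    The input is expected to contain no NaN values yet.
    """
    p25, p75 = percentile(values, 25), percentile(values, 75)
    iqr = p75 - p25
    lower, upper = p25 - k * iqr, p75 + k * iqr
    return [v if lower <= v <= upper else math.nan for v in values]

# Hypothetical temperature readings containing one sensor spike.
readings = [21.0, 21.4, 21.9, 22.3, 85.0, 22.8, 23.1]
cleaned = mask_outliers(readings)
```

Marking outliers as NaN rather than deleting them keeps the 15-minute sampling grid intact, so the imputation step can fill them like any other missing value.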

(2) Missing Data Imputation:

For ≤5 consecutive missing points: Linear interpolation

For >5 consecutive missing points: Historical data-based imputation using nearest neighbors under identical weather conditions

This scheme is expressed by Equation 3 (Ritter, 2023); it avoids the errors caused by long-span interpolation while preserving data continuity.

$$y_t = \begin{cases} y_a + \dfrac{y_b - y_a}{t_b - t_a}\,(t - t_a), & \text{gap} \le 5 \text{ points} \\ y_h, & \text{gap} > 5 \text{ points} \end{cases} \quad (3)$$

where $y_t$ is the imputed value at time $t$; $(t_a, y_a)$ and $(t_b, y_b)$ are the time and value of the last valid point before and the first valid point after the missing segment, respectively; and $y_h$ is the nearest-neighbor value from historical data recorded under the same weather conditions.
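The short-gap branch of this scheme could look like the sketch below. It is illustrative only: `fill_short_gaps` and `max_gap` are hypothetical names, samples are assumed to be equally spaced so that list indices stand in for time, and the historical nearest-neighbor branch is deliberately left out because it depends on weather labels that are not described in enough detail here.

```python
import math

def fill_short_gaps(series, max_gap=5):
    """Linearly interpolate runs of at most `max_gap` NaNs between valid points.

    Longer runs, and runs touching either end of the series, are left as NaN
    so that a separate historical-data step can fill them.
    """
    filled = list(series)
    n = len(filled)
    i = 0
    while i < n:
        if not math.isnan(filled[i]):
            i += 1
            continue
        start = i                      # first NaN of the run
        while i < n and math.isnan(filled[i]):
            i += 1
        end = i                        # first valid index after the run (or n)
        a, b = start - 1, end          # neighbors bracketing the gap
        if a >= 0 and b < n and (end - start) <= max_gap:
            ya, yb = filled[a], filled[b]
            for t in range(start, end):
                filled[t] = ya + (yb - ya) * (t - a) / (b - a)
    return filled

nan = math.nan
series = [1.0, nan, nan, 4.0, nan, nan, nan, nan, nan, nan, 11.0]
result = fill_short_gaps(series)
```

In this example the two-point gap is interpolated to 2.0 and 3.0, while the six-point gap stays missing for the historical imputation step.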

To address scale discrepancies among heterogeneous data types, all input variables were normalized to a [0,1] range using min-max normalization as formalized in (Equation 4) (Wang et al., 2024). The normalized dataset was subsequently partitioned into training, validation, and test subsets following a 7:2:1 ratio, ensuring proper model development and evaluation.

$$y = \frac{d - d_{min}}{d_{max} - d_{min}} \quad (4)$$

where $d$ is the original data, $d_{min}$ and $d_{max}$ are the minimum and maximum values in the original data, respectively, and $y$ is the data after normalization.
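As a rough sketch of the normalization and partitioning step, the snippet below applies min-max scaling and a 7:2:1 split. The function names are hypothetical, and the chronological (unshuffled) split is an assumption: the text gives the ratio but not the ordering.

```python
def min_max_normalize(data):
    """Scale a list of numbers to the [0, 1] range."""
    d_min, d_max = min(data), max(data)
    if d_max == d_min:
        raise ValueError("constant series cannot be min-max normalized")
    return [(d - d_min) / (d_max - d_min) for d in data]

def split_7_2_1(samples):
    """Split samples into train/validation/test parts in a 7:2:1 ratio.

    Assumption: the split is chronological, which is a common choice
    for time series but is not stated in the text.
    """
    n = len(samples)
    n_train = n * 7 // 10   # integer arithmetic avoids float rounding
    n_val = n * 2 // 10
    return (samples[:n_train],
            samples[n_train:n_train + n_val],
            samples[n_train + n_val:])

normalized = min_max_normalize([10.0, 15.0, 20.0])
train, val, test_set = split_7_2_1(list(range(10)))
```

Each variable (temperature, humidity, radiation) would be normalized separately with its own minimum and maximum.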

3 Model construction

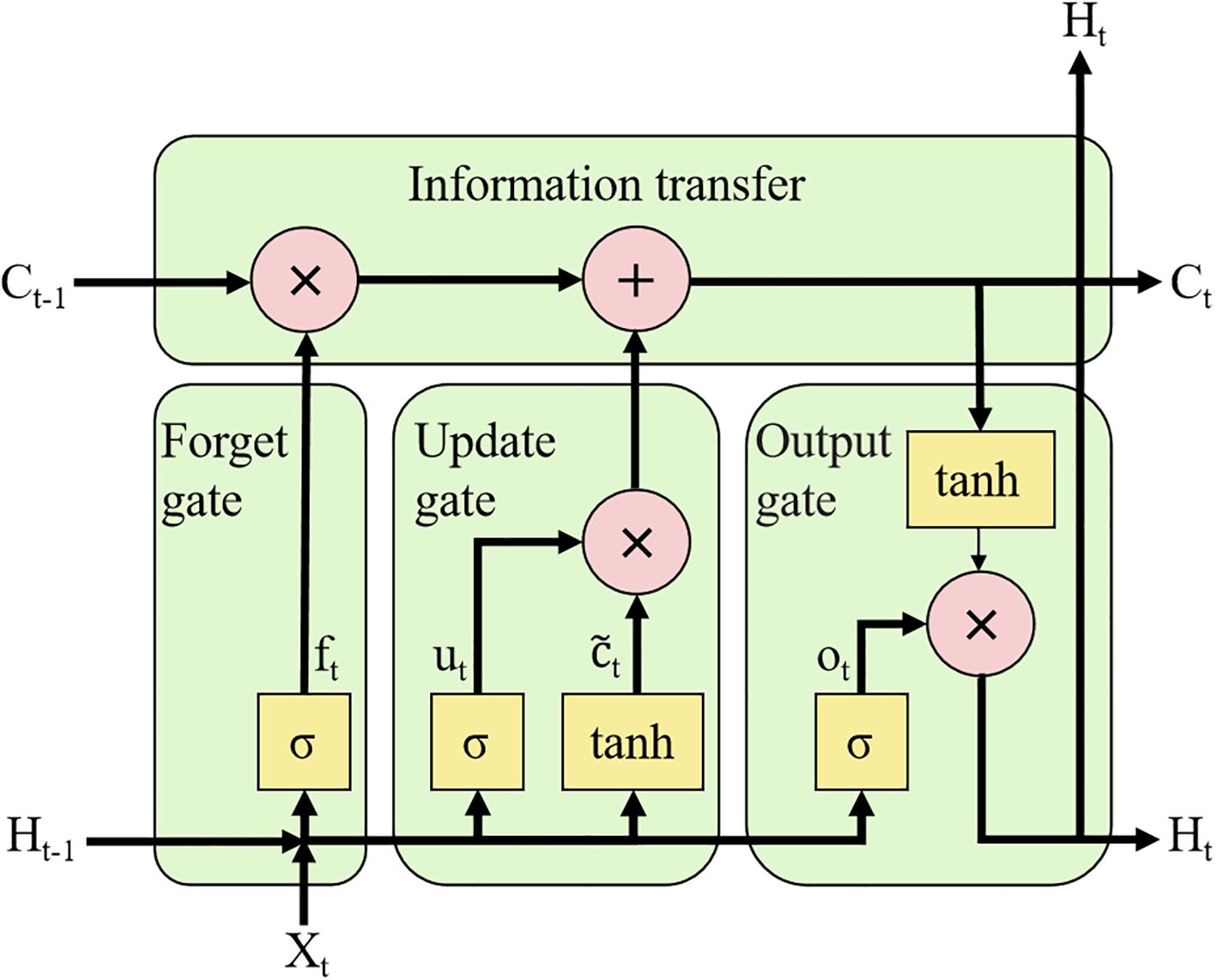

3.1 Long short-term memory

Temperature, humidity, and radiation exhibit strongly nonlinear temporal characteristics (Gao et al., 2023). The LSTM architecture, a specialized variant of the recurrent neural network (RNN), models such nonlinear temporal dependencies and learns long-range patterns effectively (Fan et al., 2021; Hu et al., 2025). It can process long sequences while mitigating the vanishing gradient problem inherent in traditional RNN architectures (Chen et al., 2020; Hong et al., 2025), as illustrated in Figure 3.

The underlying mechanism is described by the following equations (Umutoni et al., 2025):

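$$f_t = \sigma(W_f \cdot [H_{t-1}, x_t] + b_f) \quad (5)$$

$$u_t = \sigma(W_u \cdot [H_{t-1}, x_t] + b_u) \quad (6)$$

$$\tilde{C}_t = \tanh(W_c \cdot [H_{t-1}, x_t] + b_c) \quad (7)$$

$$C_t = f_t \odot C_{t-1} + u_t \odot \tilde{C}_t \quad (8)$$

$$o_t = \sigma(W_o \cdot [H_{t-1}, x_t] + b_o) \quad (9)$$

$$H_t = o_t \odot \tanh(C_t) \quad (10)$$

where $f_t$, $u_t$, $\tilde{C}_t$, $C_t$, $o_t$, and $H_t$ denote the forgetting gate, updating gate, candidate cell state, current cell state, output gate, and hidden layer state, respectively; $x_t$ is the input at moment $t$; $W_f$, $W_u$, $W_c$, and $W_o$ denote the weights of the forgetting gate, updating gate, cell state, and output gate; $b_f$, $b_u$, $b_c$, and $b_o$ denote the corresponding biases; $\tanh$ is the hyperbolic tangent activation function and $\sigma$ is the sigmoid activation function.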

(Note: Ct-1 is the cell state at moment t-1; Ct is the cell state at moment t; Ht-1 is the output at moment t-1; Ht is the output at moment t.)
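To make the gate updates concrete, here is a single LSTM time step written in plain Python. It follows the standard LSTM cell with the gate names used above; the dictionary layout of `params`, the helper names, and the all-zero example weights are assumptions for illustration and are not taken from the authors' PyTorch implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias
            for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step: returns the new hidden state H_t and cell state C_t."""
    z = h_prev + x_t  # concatenation [H_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(params["Wf"], params["bf"], z)]          # forgetting gate
    u = [sigmoid(a) for a in affine(params["Wu"], params["bu"], z)]          # updating gate
    c_tilde = [math.tanh(a) for a in affine(params["Wc"], params["bc"], z)]  # candidate state
    o = [sigmoid(a) for a in affine(params["Wo"], params["bo"], z)]          # output gate
    c_t = [fi * cp + ui * ct for fi, cp, ui, ct in zip(f, c_prev, u, c_tilde)]
    h_t = [oi * math.tanh(ci) for oi, ci in zip(o, c_t)]
    return h_t, c_t

# Toy check: 1 hidden unit, 3 inputs, all-zero weights and biases.
zero_row = [[0.0, 0.0, 0.0, 0.0]]
params = {name: zero_row for name in ("Wf", "Wu", "Wc", "Wo")}
params.update({name: [0.0] for name in ("bf", "bu", "bc", "bo")})
h_t, c_t = lstm_step([0.2, 0.5, 0.1], [0.0], [1.0], params)
```

With zero weights every gate evaluates to 0.5 and the candidate state to 0, so the cell state is simply halved; this makes the role of the forgetting gate easy to see.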

3.2 The LSTM-AT-DP model

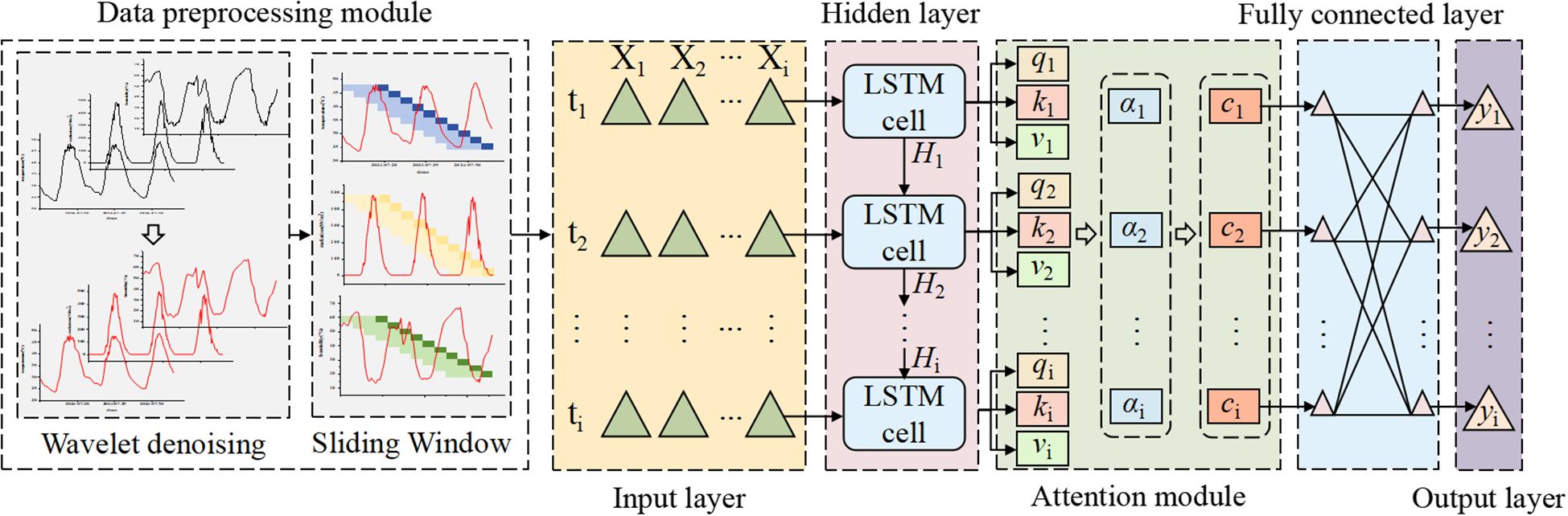

To address the limitations of traditional models in long-term time series forecasting, particularly their declining accuracy and poor timeliness, this study proposes the LSTM-AT-DP model, which combines a Long Short-Term Memory (LSTM) network, an Attention Mechanism (AT) module, and a Data Preprocessing (DP) module. The model operates in four stages: (1) the WTD unit in the DP module applies wavelet denoising to the raw time series data, significantly improving the signal-to-noise ratio; (2) the SW unit uses a sliding window to restructure the denoised data into a feature matrix; (3) the LSTM network extracts long-term temporal dependencies from the processed data; (4) the AT module dynamically focuses on key features and optimizes the weight distribution. The model is specifically designed for facility agriculture and targets high-precision prediction of key greenhouse parameters (temperature, humidity, and radiation). Figure 4 shows the complete architecture of the model.

The proposed LSTM-AT-DP architecture includes: data preprocessing module, input layer, hidden layer, attention mechanism module, fully connected layer, and output layer. The specific description is as follows:

1. Data preprocessing (DP) module: This module consists of two steps. First, the wavelet threshold denoising (WTD) unit performs denoising on the original multidimensional time series features. Then, the sliding window (SW) unit reorganizes the denoised sequence into a feature matrix with optimal time dependency and feeds it into the subsequent network layers.

2. Input Layer: The model takes multi-dimensional time series features as input, formatted as a 3D tensor (S, T, X) for LSTM processing, where:

S (Sample size): Sliding window length, determined by the prediction horizon: 24 steps for 6-hour prediction (1 step = 15-min interval); 48 steps for 12-hour prediction; 96 steps for 24-hour prediction.

T (Time steps): Prediction horizon (6/12/24 hours in this study).

X (Feature dimension): Three environmental parameters (temperature, humidity, and radiation).

3. Hidden Layer: Comprising multiple stacked LSTM units, this layer performs deep feature extraction and temporal dependency modeling. Through (Equations 5–10), the input information is stored in hidden states h1 to h2 and subsequently propagated to the next layer.

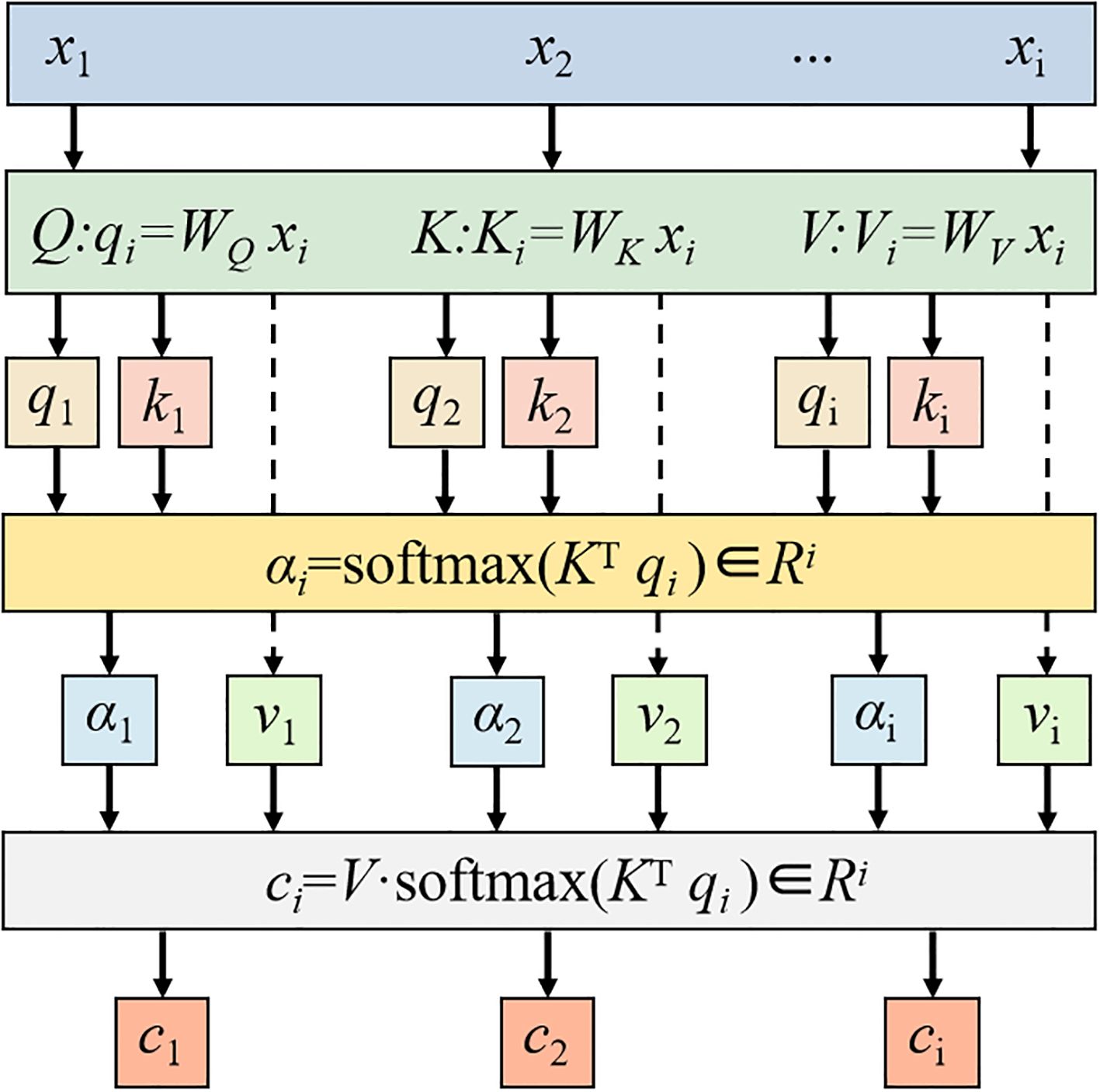

4. Attention Module: This module dynamically computes weight distributions over hidden-layer outputs to amplify the model’s focus on critical timesteps. Specifically: (Equations 14–16) derive the Query (Q), Key (K), and Value (V) vectors for each hidden state; (Equation 17) computes Q-K similarity scores, which are normalized via softmax to generate attention weights; (Equation 18) performs a weighted summation of V using these weights, producing the attention-enhanced output.

5. Fully connected layer: This layer integrates the local features in the output of the attention module and transforms them to the required output dimension.

6. Output Layer: The terminal component generates the model’s final predictions through linear transformation of the processed features, producing time-series forecasts with optimized temporal dependencies.

This study employs three evaluation metrics to assess model performance: the coefficient of determination (R²), mean absolute error (MAE), and root mean square error (RMSE). R² quantifies the proportion of variance in the observed values that the model explains. MAE measures the average magnitude of the prediction errors, with smaller values indicating closer agreement between predicted and actual values. RMSE is particularly sensitive to large errors such as those at peaks, and smaller values correspond to higher accuracy. The metrics are calculated according to Equations 11–13 (Yang et al., 2023):

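$$R^2 = 1 - \frac{\sum_{i=1}^{n}(y_i - y'_i)^2}{\sum_{i=1}^{n}(y_i - \bar{y})^2} \quad (11)$$

$$MAE = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - y'_i\right| \quad (12)$$

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i - y'_i)^2} \quad (13)$$

(Note: where $y'$ is the model predicted value, $y$ is the true value, $\bar{y}$ is the average of the true values, and $n$ is the number of samples.)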
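The three metrics are simple to compute directly. The following sketch uses their standard definitions; the function names and the four sample values are made up for illustration.

```python
import math

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true))

# Hypothetical true and predicted values.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
scores = (r2_score(y_true, y_pred), mae(y_true, y_pred), rmse(y_true, y_pred))
```

For these values R² is 0.98, MAE is 0.15, and RMSE is about 0.158. RMSE exceeds MAE because squaring gives the two larger errors more weight.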

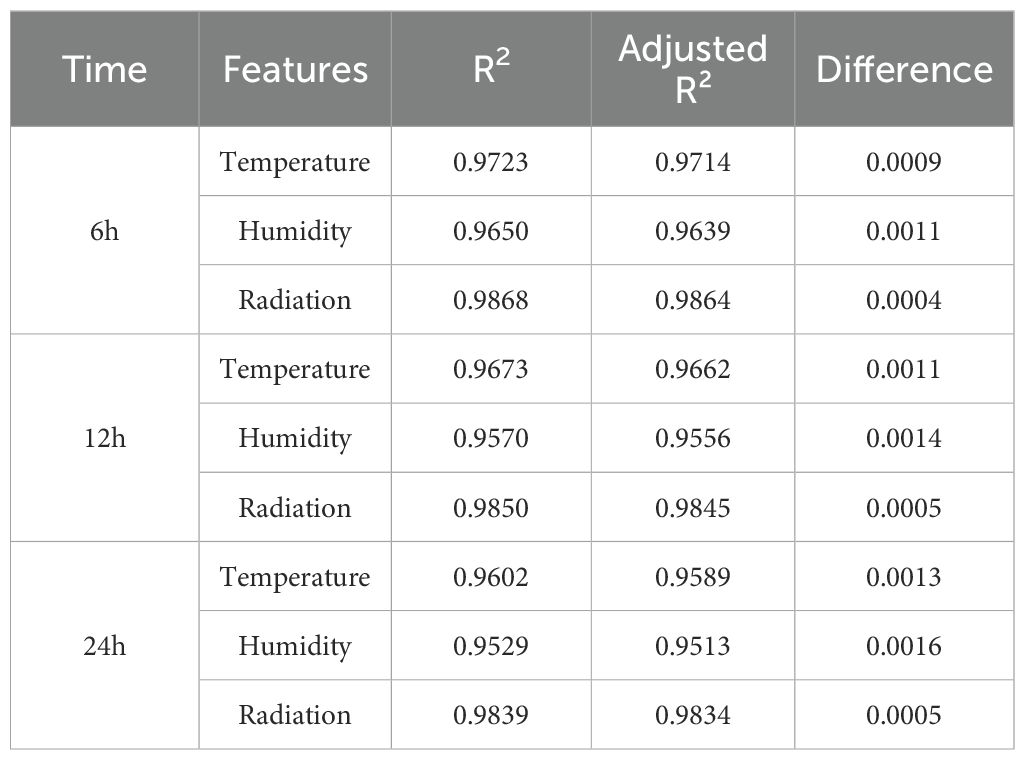

In evaluating the performance of environmental prediction models, R² and adjusted R² are key indicators for assessing model fit. As shown in Table 2, a systematic comparison of temperature, humidity, and radiation prediction results at three time scales (6 hours, 12 hours, and 24 hours) revealed that the Adjusted R² was slightly lower than the R² in all scenarios. This difference stems from the penalty adjustment that Adjusted R² applies to the number of features. In this study, three features were used: temperature, humidity, and radiation. When the number of features is fixed and the sample size is sufficient, the difference between the two values is less than 0.2%, indicating that the impact of model complexity on explanatory power can be ignored. Furthermore, the R² value for radiation predictions consistently exceeds 0.98, with the smallest difference from the adjusted R² value, which confirms the model’s strong explanatory power for radiation changes. Due to the stability of feature engineering, the sufficient sample size, and R²’s more intuitive interpretability in this study, it was ultimately selected as the core evaluation metric. This choice aligns with the numerical stability characteristics presented in Table 2 and facilitates horizontal comparisons with similar studies.
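The small gap between R² and adjusted R² follows from the standard adjustment formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of samples and p the number of features. The sketch below evaluates it for the reported 24-hour temperature R² with p = 3; the sample count `n = 1000` is a hypothetical value chosen only to show the order of magnitude, since the test set size is not given here.

```python
def adjusted_r2(r2, n_samples, n_features):
    """Adjusted R^2 with the usual penalty for the number of features."""
    if n_samples <= n_features + 1:
        raise ValueError("need more samples than features + 1")
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_features - 1)

r2 = 0.9602           # reported 24-hour temperature R^2
n = 1000              # hypothetical sample count (assumption)
adj = adjusted_r2(r2, n, 3)
gap = r2 - adj
```

With three features and a sample count in the thousands, the gap is on the order of 10⁻⁴, which is consistent with the statement that the two values differ by less than 0.2%.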

3.3 Attention mechanism module

The AT Module dynamically weights and aggregates input information through the coordinated interaction of three vectors: Query (Q), Key (K), and Value (V) (Guo et al., 2022; Tian et al., 2022). These vectors are generated by trainable weight matrices (WQ, WK, WV) that project input features into respective latent spaces, rather than using random initialization. The Q vector encapsulates the current task’s informational requirements, actively retrieving relevant sequence elements. The K vector serves as a feature identifier for each input element, computing relevance scores through matching with Q. The V vector contains the actual content information that undergoes weighted aggregation based on Q and K matching results. In short, the attention output is calculated through three consecutive operations: (1) compute the dot-product similarity between the Q vector and the K vector; (2) normalize the similarity scores to obtain the attention weight of each element; (3) use these normalized attention weights to perform weighted aggregation on the V vector to obtain the final result.

The specific flowchart of the attention module is shown in Figure 5:

The main steps in its calculation process are as follows (Zou et al., 2024):

where Q is the query vector, K is the key vector, and V is the value vector; WQ, WK, and WV are the parameter matrices; qi, ki, and vi are the elements of Q, K, and V, respectively; αi is the attention distribution; ci is the final output; and i is the feature index, running from 1 to the number of features.
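The three operations (dot-product similarity, softmax normalization, weighted aggregation) can be sketched as follows. This is a generic dot-product attention over a sequence of hidden states and not necessarily the exact variant used in the paper: using the last hidden state as the query, omitting any score scaling, and the identity projection matrices in the example are all assumptions.

```python
import math

def matvec(W, v):
    """Multiply a list-of-rows matrix W by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(hidden_states, WQ, WK, WV):
    """Return (context vector, attention weights) for a sequence of hidden states."""
    query = matvec(WQ, hidden_states[-1])        # assumption: last state is the query
    keys = [matvec(WK, h) for h in hidden_states]
    values = [matvec(WV, h) for h in hidden_states]
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]  # Q-K similarity
    weights = softmax(scores)                                          # normalization
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return context, weights

identity = [[1.0, 0.0], [0.0, 1.0]]              # toy projection matrices
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # three hypothetical hidden states
context, weights = attention(states, identity, identity, identity)
```

In this toy case the third time step has the largest similarity to the query and therefore receives the largest weight, which is the behavior the attention heatmaps are meant to visualize.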

3.4 Data preprocessing module

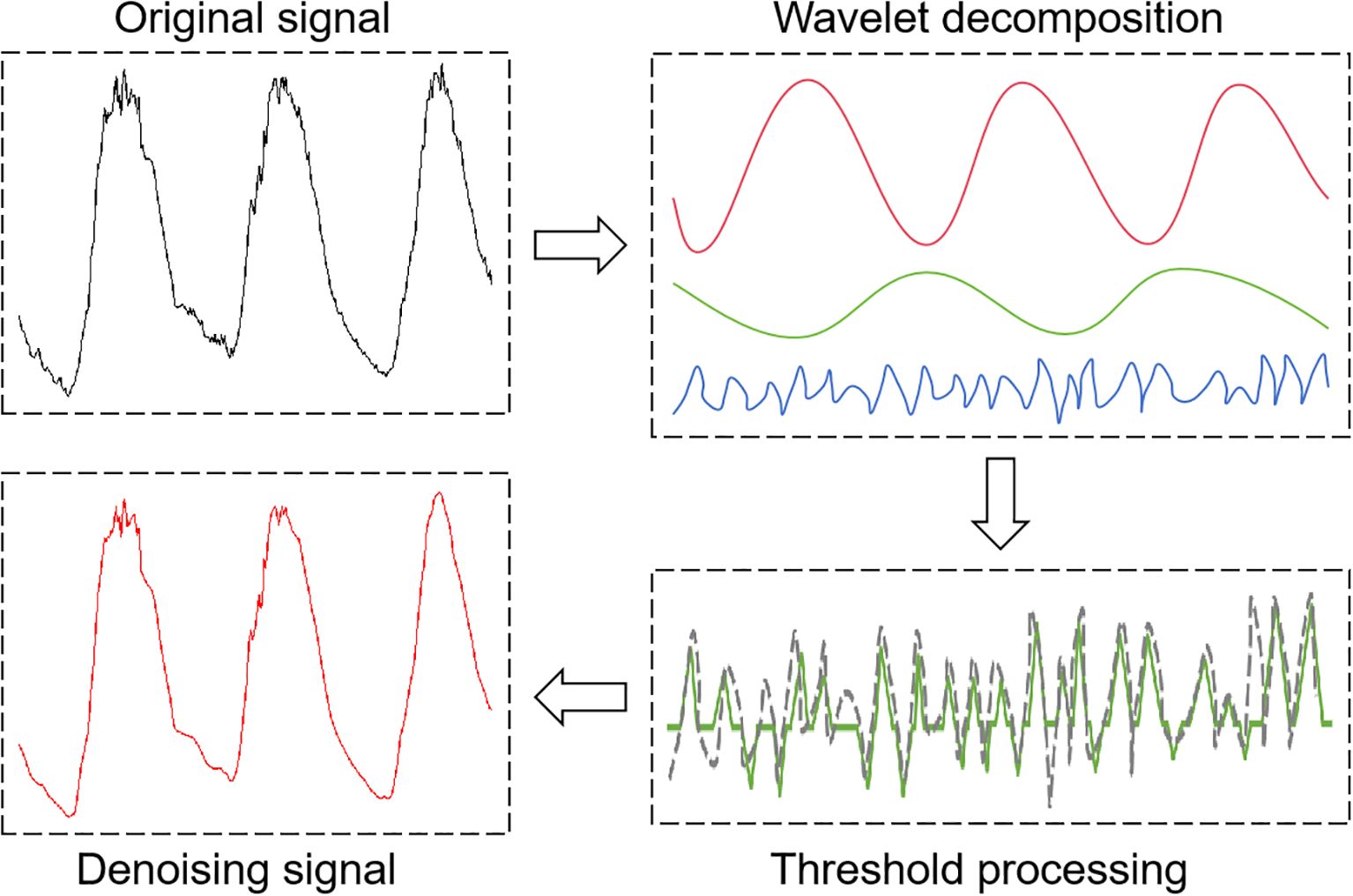

The DP module comprises two core components: (1) the Wavelet Threshold Denoising (WTD) unit that eliminates noise from raw time-series data to enhance signal-to-noise ratio, and (2) the Sliding Window (SW) unit that restructures the denoised data into equal-length continuous sequences through temporal windowing. This processing pipeline ultimately generates high-dimensional feature matrices optimized for deep feature extraction in subsequent prediction models.

3.4.1 Wavelet threshold denoising unit

The WTD unit uses multiscale wavelet analysis to suppress noise through coefficient threshold processing. The process involves decomposing the input signal into multi-band wavelet coefficients, applying threshold processing to the high-frequency (noise-dominated) coefficients, and reconstructing the signal from the processed coefficients (Dong et al., 2024; Li et al., 2025). The WTD unit offers strong denoising capability owing to its computational efficiency and adaptive properties (Jiang et al., 2025). As shown in Figure 6, noise reduction performance critically depends on three fundamental parameters: (i) the choice of wavelet basis function, (ii) the threshold strategy, and (iii) the number of decomposition levels (Wu et al., 2024). These parameters collectively form the core foundation of the entire data preprocessing pipeline.

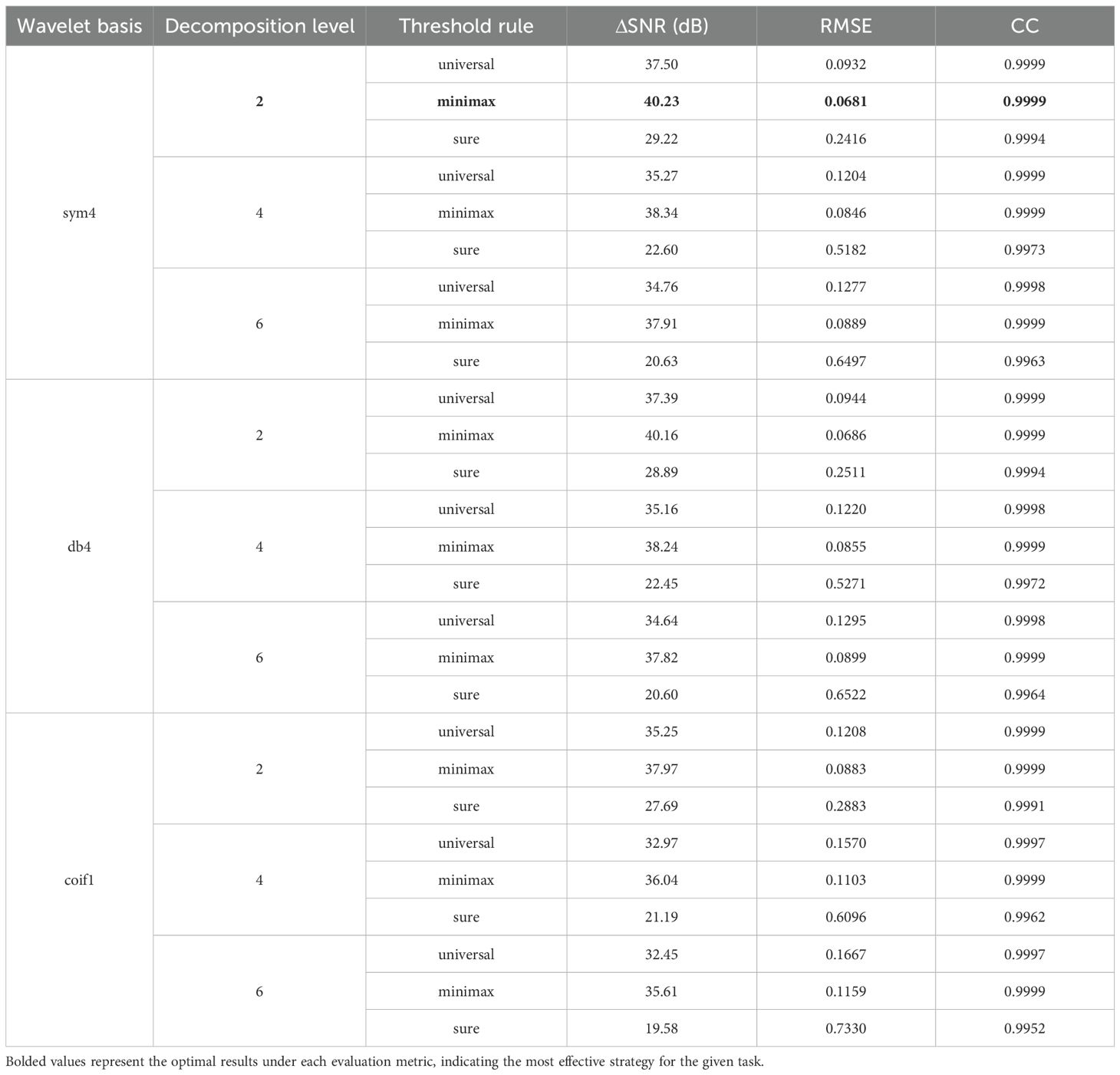

Facility environmental parameters (e.g., temperature, humidity, and radiation) exhibit significant periodicity (e.g., diurnal cycles and seasonal variations), multi-parameter coupling effects, and non-stationarity caused by noise artifacts from control operations (e.g., ventilation and shading). To handle these characteristics, this study selected the sym4 wavelet for analysis and denoising. The sym4 wavelet possesses approximate symmetry and excellent time-frequency locality. Experimental results show that, with a decomposition level of 2 and the minimax threshold strategy, the sym4 wavelet outperforms the other wavelet basis functions in noise reduction performance (ASNR = 40.23 dB, RMSE = 0.0681, and CC = 0.9999). This selection improves the signal-to-noise ratio and preserves more of the original signal’s features, providing a reliable foundation for subsequent signal processing. The wavelet decomposition is expressed by Equations 19 and 20 (Dong et al., 2024):

where $cA_j[n]$ is the $j$th-layer approximation coefficient (low-frequency part) and $cD_j[n]$ is the $j$th-layer detail coefficient (high-frequency part); $x$ is the original signal, $\psi$ is the wavelet function, and $\phi$ is the scale function.

The soft thresholding method was selected for processing facility environmental data due to its superior performance characteristics: continuous shrinkage properties that maintain signal smoothness, effective suppression of low-amplitude noise components, robust preservation of transient features, and optimal output smoothness. These advantages make it particularly suitable for analyzing non-stationary environmental parameters. The mathematical expression for this thresholding method is shown in Equation 21 (Li et al., 2025):

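$$\hat{a} = \begin{cases} \operatorname{sgn}(a)\,(|a| - \lambda), & |a| \ge \lambda \\ 0, & |a| < \lambda \end{cases} \quad (21)$$

where $a$ is the wavelet coefficient, $\hat{a}$ is the thresholded coefficient, and $\lambda$ is the wavelet threshold.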
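Soft thresholding itself takes only a few lines. The sketch below applies the standard soft-threshold rule to a list of detail coefficients; the function name and the sample coefficients are illustrative, and the choice of the threshold value (for example by the minimax rule) is outside this snippet.

```python
import math

def soft_threshold(coeffs, lam):
    """Shrink each coefficient toward zero by lam; zero out those below lam."""
    shrunk = []
    for a in coeffs:
        magnitude = abs(a) - lam
        shrunk.append(math.copysign(magnitude, a) if magnitude > 0 else 0.0)
    return shrunk

# Hypothetical detail coefficients and threshold.
detail = [-3.0, -0.5, 0.2, 2.0]
thresholded = soft_threshold(detail, 1.0)
```

Unlike hard thresholding, the surviving coefficients are also reduced by the threshold, so the mapping is continuous at the threshold boundary. This is the "continuous shrinkage" property mentioned above.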

The signal is reconstructed from the processed wavelet coefficients according to Equation 22 (Jiang et al., 2023):

where xr is the reconstructed signal.

The reconstructed denoised signal xr is used as an input to the sliding window, whereby the sliding window unit generates the input data required for the prediction model.

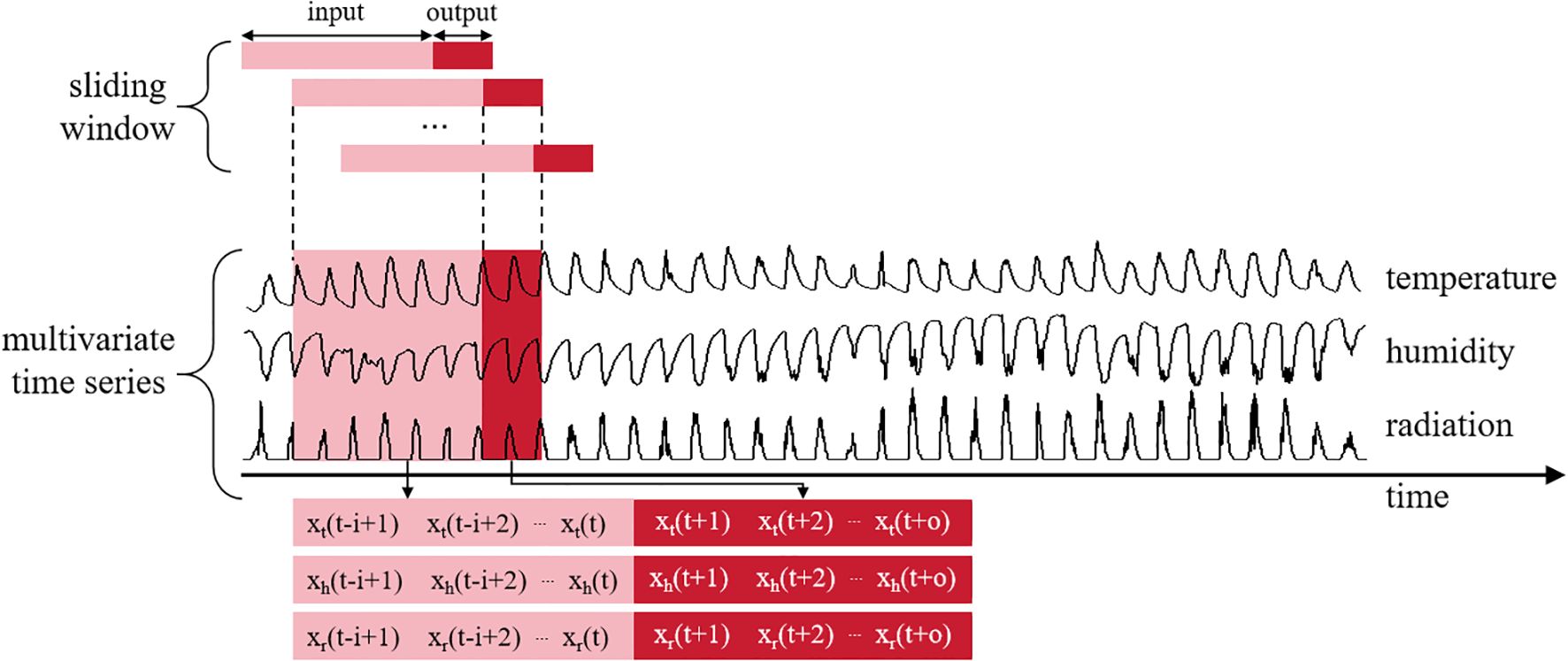

3.4.2 Sliding window unit

The monitoring and prediction of facility environmental parameters (temperature, humidity, solar radiation) constitute a characteristic multivariate time series forecasting problem. This problem presents two fundamental challenges: (1) strong temporal dependencies (e.g., thermal inertia effects, radiative accumulation), and (2) complex cross-variable dynamics (e.g., humidity-temperature coupling, radiation-driven cross-effects). Naive approaches using instantaneous independent observations fail to capture the temporal continuity of environmental evolution, leading to inaccurate predictions of future facility states.

The SW unit systematically segments multivariate time series data to establish temporal correlations between historical observations (e.g., continuous hourly temperature, humidity, and radiation measurements) and future target values (e.g., predicted values for subsequent hours), thereby forming structured input-output pairs (Yang et al., 2023). This methodological framework helps the model explicitly learn complex cross-variable coupling relationships and multi-scale dynamic evolution patterns at different time intervals, as shown in Figure 7.

where xt denotes the environment variable temperature, xh denotes the environment variable humidity, and xr denotes the environment variable radiation; i denotes the length of the input window; o denotes the length of the output window; and t denotes the current time point.
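The windowing described above can be sketched as a function that slides over the multivariate series and emits input-output pairs. The names `make_windows`, `input_len`, and `output_len` are hypothetical, the stride of one step is an assumption, and the toy series below stands in for real (temperature, humidity, radiation) rows.

```python
def make_windows(series, input_len, output_len):
    """Build (input window, target window) pairs from a multivariate series.

    series: list of [temperature, humidity, radiation] rows, oldest first.
    Returns two lists of equal length; windows advance by one step (assumption).
    """
    inputs, targets = [], []
    last_start = len(series) - input_len - output_len
    for start in range(last_start + 1):
        split = start + input_len
        inputs.append(series[start:split])
        targets.append(series[split:split + output_len])
    return inputs, targets

# Toy series of 10 time steps with 3 variables each.
series = [[float(t), 50.0 + t, 100.0 * t] for t in range(10)]
inputs, targets = make_windows(series, input_len=4, output_len=2)
```

With 10 rows, an input window of 4, and an output window of 2, this yields 5 pairs. At the 15-minute sampling interval used in this study, a 24-hour horizon would correspond to windows of 96 steps.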

The DP module uses WTD units for initial noise reduction processing, followed by reconstruction of the noise-reduced time series based on SW units to generate a feature matrix. This architecture enhances the model’s predictive capabilities by extracting underlying patterns in the dynamic facility environment, significantly improving the accuracy and reliability of predictions for future environmental parameters (temperature, humidity, solar radiation).

4 Results and analysis

4.1 Parameterization

The facility environment prediction model was developed and evaluated on a machine with an Intel(R) Core(TM) i5-9300HF CPU and an NVIDIA GeForce GTX 1650 GPU, using Python 3.7, the PyTorch 1.8.1 framework, and the PyCharm 2024.1.2 integrated development environment. After extensive testing, the optimal number of layers and neurons was determined, giving the following parameter settings: 2 LSTM layers, 100 hidden units per layer, a learning rate of 0.001, the Adam optimizer, a batch size of 64, and 100 epochs.

4.2 Explainability and computational overhead analysis of attention mechanisms

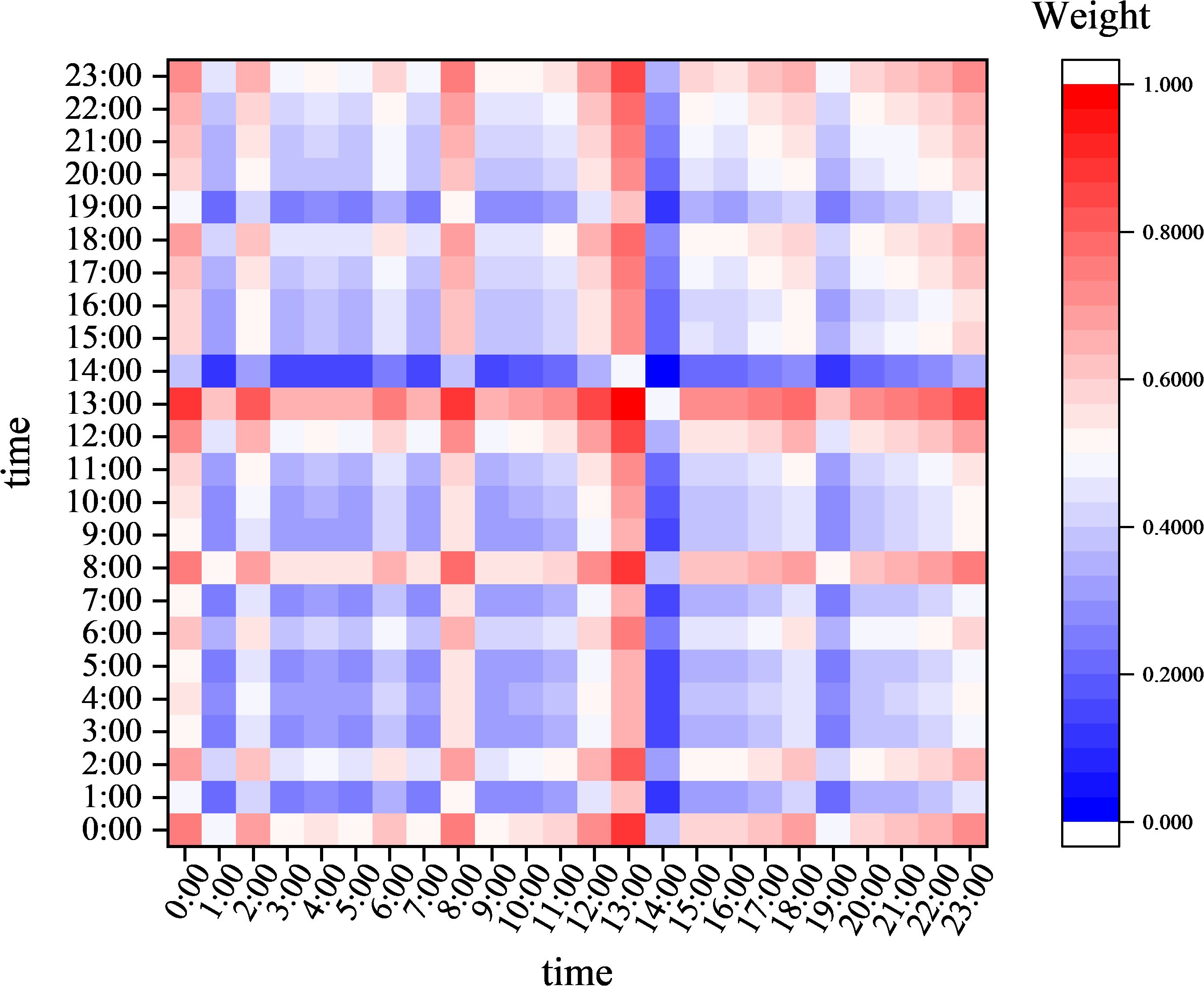

Figure 8 illustrates how visualizing the distribution of attention weights across the time dimension can intuitively present the model’s dynamic attention patterns toward the input sequence. The Y-axis of the heatmap represents a single attention head, the X-axis corresponds to consecutive time steps, and the color gradient (from light yellow to dark red) reflects the model’s attention intensity toward features at each time step. The experimental results show that the prominent red regions align spatially and temporally with key feature changes, such as sudden temperature changes. This indicates that the model can effectively capture sudden temporal events. The light yellow regions correspond to periods of feature stability. Their low attention weights are statistically significantly associated with states of low information content. This visualization validates AT’s capability to dynamically focus on key temporal segments, as there is a quantifiable positive correlation between attention weights and feature importance.

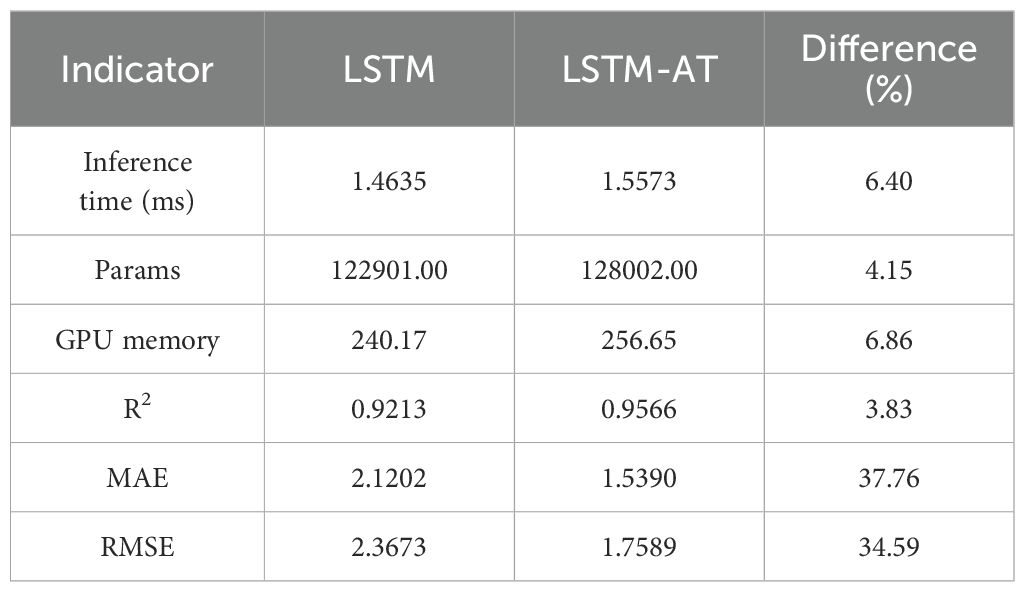

The introduction of the AT module significantly improves the performance of temporal modeling, primarily due to its ability to dynamically model non-local dependencies across time steps in long sequences. While the standard LSTM model has basic temporal feature extraction capabilities, the AT module provides more refined temporal feature selection at the lightweight cost of a 4.15% increase in parameters (122,901 to 128,002). This enhancement enables the model to adaptively focus on discriminative features at critical time steps, showcasing its superior temporal pattern modeling capabilities in complex environmental prediction tasks. As shown in Table 3 using a 24-hour temperature prediction task as an example, experimental results demonstrate that AT significantly improves the model’s accuracy in capturing nonlinear temporal dynamics by establishing global temporal context associations, thereby enhancing the robustness and reliability of prediction results. These characteristics make AT a key component for addressing long-sequence dependency issues, particularly in scenarios requiring precise modeling of temporal dynamics, such as weather forecasting and industrial equipment monitoring.
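The reported parameter counts can be checked with a short calculation. The sketch below assumes the parameter layout used by PyTorch's `nn.LSTM` (four gates per layer, each with input weights, recurrent weights, and two bias vectors), three input features, and a single-output linear head. The input size and the head are assumptions; they are adopted here because, together with the 2 layers and 100 hidden units from Section 4.1, they reproduce the baseline figure of 122,901.

```python
def lstm_param_count(input_size: int, hidden_size: int, num_layers: int) -> int:
    """Trainable parameters of a stacked unidirectional LSTM (nn.LSTM layout)."""
    total = 0
    for layer in range(num_layers):
        in_features = input_size if layer == 0 else hidden_size
        # 4 gates x (input weights + recurrent weights + 2 bias vectors)
        total += 4 * hidden_size * (in_features + hidden_size + 2)
    return total


def linear_param_count(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


# Assumed: 3 input features and 1 output value; 2 layers x 100 units is reported.
baseline = lstm_param_count(3, 100, 2) + linear_param_count(100, 1)
with_attention = 128_002  # figure reported in the text
overhead_pct = 100 * (with_attention - baseline) / baseline
print(baseline, round(overhead_pct, 2))  # 122901 4.15
```

The 5,101 additional parameters are taken directly from the reported totals; the layer sizes inside the AT module are not stated in this section, so they are not decomposed here.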

4.3 Selection and comparison of wavelet functions

In wavelet threshold denoising, the wavelet basis function, the decomposition level, and the threshold strategy are the key factors determining performance. This study selected three representative wavelet basis functions (sym4, db4, and coif1) and three threshold strategies (the universal threshold, the minimax (maximum-minimum) threshold, and Stein's unbiased risk estimate (SURE) threshold) to systematically evaluate the impact of different parameter combinations on denoising performance. Comparative experiments were conducted at three decomposition levels (2, 4, and 6). Denoising performance was assessed quantitatively with three objective metrics: improved signal-to-noise ratio (ASNR), root mean square error (RMSE), and correlation coefficient (CC) (see Table 4). Using temperature data as an example, the best denoising performance is obtained with the sym4 wavelet basis function, decomposition level 2, and the minimax threshold strategy: the ASNR improves to 40.23 dB, the RMSE decreases to 0.0681, and the CC reaches 0.9999. This parameter combination outperforms the other experimental groups on all evaluation metrics and is therefore adopted as the standard configuration for the subsequent experiments. The optimized scheme preserves the feature information of the original signal while improving the signal-to-noise ratio, providing a reliable foundation for subsequent signal processing.
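The decompose, threshold, and reconstruct cycle behind WTD can be illustrated with a deliberately simplified sketch. It is not the configuration selected above: it swaps in a one-level Haar wavelet for sym4 at level 2, and the universal threshold with soft shrinkage for the minimax strategy, purely to keep the example dependency-free. All function names and the synthetic signal are assumptions.

```python
import math
from statistics import median
from typing import List, Sequence, Tuple


def haar_dwt(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One-level Haar transform of an even-length signal."""
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail


def haar_idwt(approx: Sequence[float], detail: Sequence[float]) -> List[float]:
    """Inverse of haar_dwt."""
    s = math.sqrt(2.0)
    out: List[float] = []
    for a, d in zip(approx, detail):
        out.extend(((a + d) / s, (a - d) / s))
    return out


def soft_threshold(c: float, t: float) -> float:
    """Shrink a coefficient toward zero by t; zero it if |c| <= t."""
    return math.copysign(max(abs(c) - t, 0.0), c)


def universal_threshold(detail: Sequence[float], n: int) -> float:
    """sigma * sqrt(2 ln n), with sigma estimated from the median |detail|."""
    sigma = median(abs(d) for d in detail) / 0.6745
    return sigma * math.sqrt(2.0 * math.log(n))


def denoise(x: Sequence[float]) -> List[float]:
    """Threshold the detail coefficients and reconstruct the signal."""
    approx, detail = haar_dwt(x)
    t = universal_threshold(detail, len(x))
    return haar_idwt(approx, [soft_threshold(d, t) for d in detail])


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))


# Synthetic example: a slow ramp plus a small alternating disturbance.
clean = [20.0 + 0.1 * i for i in range(16)]
noisy = [v + (0.3 if i % 2 == 0 else -0.3) for i, v in enumerate(clean)]
smoothed = denoise(noisy)
```

On this synthetic signal the alternating disturbance falls entirely into the detail coefficients, so thresholding removes it and `rmse(smoothed, clean)` is smaller than `rmse(noisy, clean)`. Real sensor data would call for a smoother wavelet and more levels, as in the comparison reported in Table 4.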

4.4 LSTM-AT-DP model ablation test

This study evaluates the effectiveness of model optimization by analyzing three key environmental parameters (temperature, humidity, and radiation). Specifically, it analyzes the individual and synergistic enhancement effects of the AT and DP modules on LSTM performance and compares the differences in prediction accuracy between isolated improvement methods and integrated improvement methods.
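The three metrics used throughout the ablation tables follow their standard definitions, which can be sketched as below. The function names and the four-point example are illustrative and are not drawn from the experimental data.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical observations and predictions (e.g. temperature in deg C).
observed = [20.0, 22.0, 24.0, 26.0]
predicted = [21.0, 22.0, 23.0, 26.0]
scores = (mae(observed, predicted), rmse(observed, predicted), r2(observed, predicted))
```

MAE and RMSE carry the unit of the predicted variable, whereas R² is dimensionless, which is why error reductions are reported separately for each parameter.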

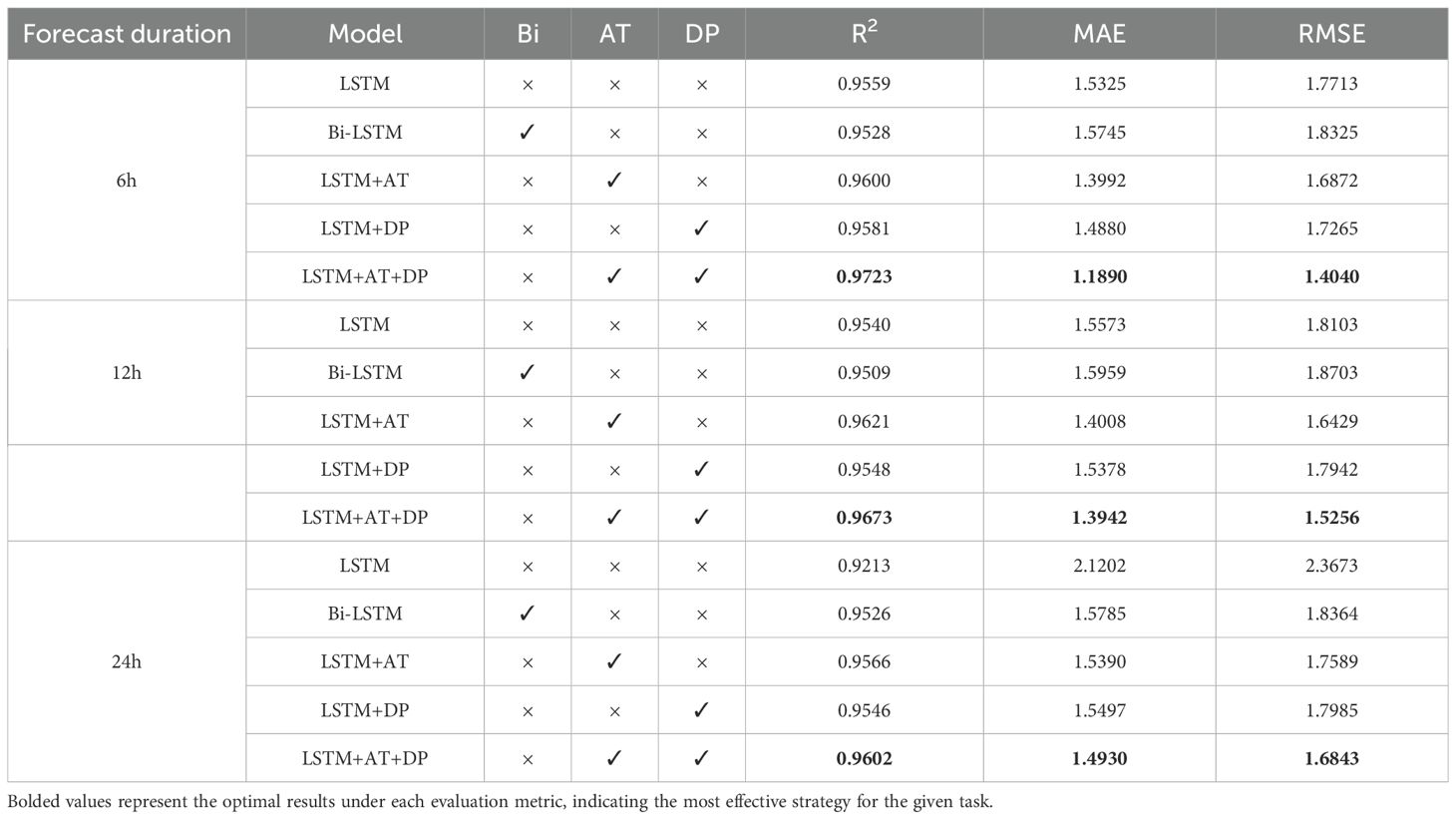

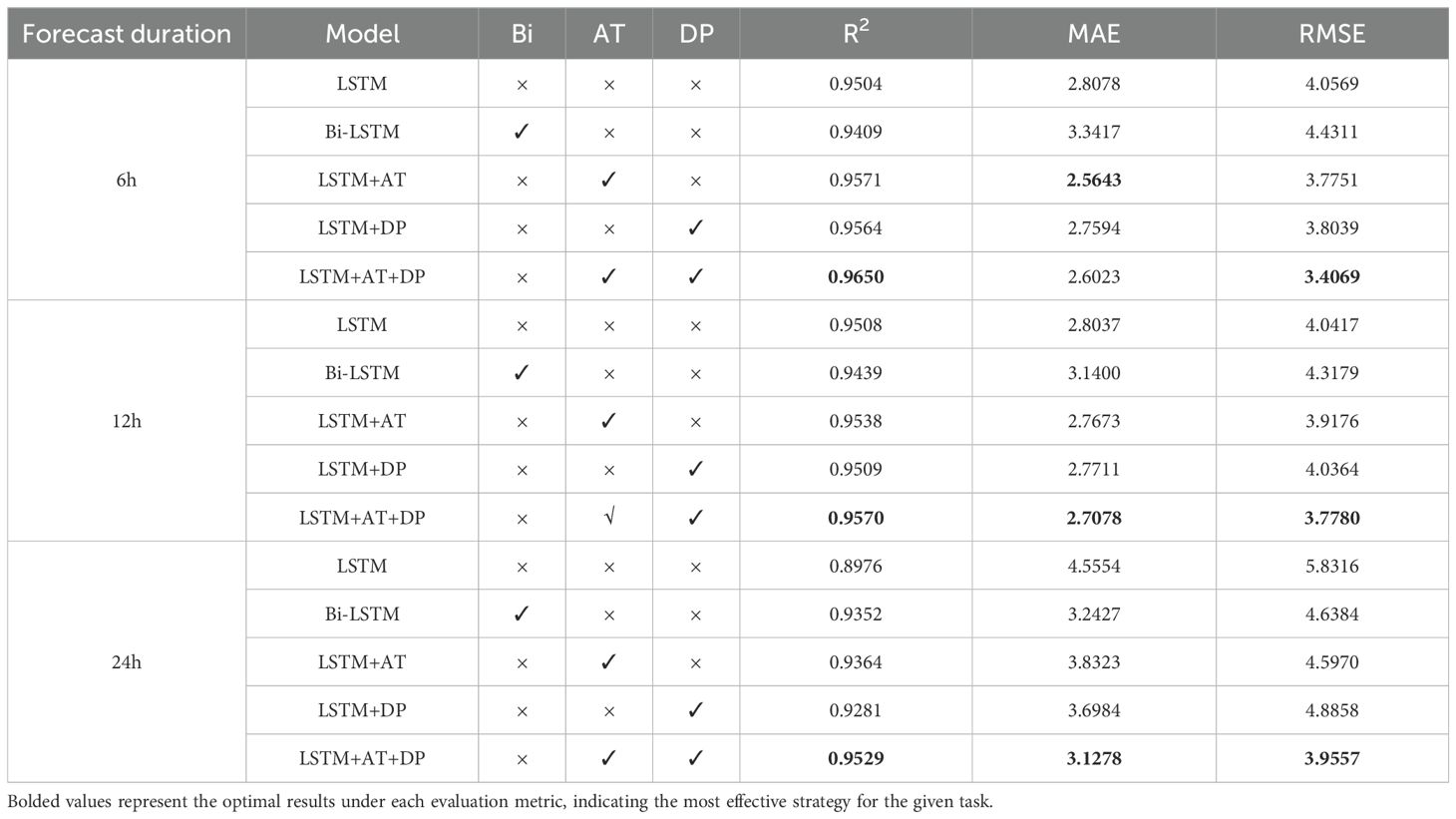

4.4.1 Optimization evaluation of AT and DP modules on temperature prediction performance of LSTM models

As shown in Table 5, the Bi-LSTM model exhibits clear limitations in short-term forecasting. Compared to the original LSTM model, its R² value decreased by 0.31% for both the 6-hour and the 12-hour forecasts. MAE increased by 0.0420 (6-hour) and 0.0386 (12-hour), while RMSE increased by 0.0612 (6-hour) and 0.0600 (12-hour). The bidirectional structure therefore reduces accuracy on short-term forecasting tasks even though it improves long-term performance: for 24-hour forecasts, R² increases by 3.13%, MAE decreases by 0.5417, and RMSE decreases by 0.5309.

Integrating the AT module into the LSTM model resulted in significant improvements across multiple metrics. The R² values for 6-hour, 12-hour, and 24-hour predictions increased by 0.41%, 0.81%, and 3.53%, respectively, while the MAE decreased by 0.1333, 0.1565, and 0.5812, and the RMSE decreased by 0.0841, 0.1674, and 0.6084. These results indicate that AT significantly enhances the stability of long-term predictions. When only the DP module is added, the R² values for the corresponding horizons increased by 0.22%, 0.08%, and 3.33%, MAE decreased by 0.0445, 0.0195, and 0.5705, and RMSE decreased by 0.0448, 0.0161, and 0.5688. The noise reduction provided by the DP module is most beneficial in long-term forecasts.

The combined LSTM-AT-DP model achieved the largest improvements: R² increased by 1.64%, 1.33%, and 3.89%, respectively, while the MAE decreased by 0.3435, 0.1631, and 0.6272, and the RMSE decreased by 0.3673, 0.2847, and 0.6830. The 3.89% increase in R² for the 24-hour forecast exceeds the improvement achieved by the AT module (3.53%) or the DP module (3.33%) alone, confirming the synergistic optimization effect between the two modules.

4.4.2 Optimization evaluation of AT and DP modules on humidity prediction performance of LSTM models

As shown in Table 6, the humidity prediction models differ markedly in their performance characteristics. The predictive capability of the Bi-LSTM model depends on the forecast horizon. It underperforms the base LSTM model for short-term 6-hour and 12-hour forecasts, with R² reductions of 0.95% and 0.69%, respectively, accompanied by MAE increases of 0.5339 and 0.3363 and RMSE increases of 0.3742 and 0.2762. However, its performance improves substantially for 24-hour predictions, with a 3.76% R² improvement and reductions of 1.3127 in MAE and 1.1932 in RMSE. In comparison, the AT-enhanced LSTM model shows consistent performance gains across all time horizons. It achieves R² improvements ranging from 0.30% to 3.88%, with MAE reductions between 0.0364 and 0.7231 and RMSE decreases between 0.1241 and 1.2346. The DP module notably improves long-term accuracy, providing a 3.05% R² increase for 24-hour forecasts while maintaining stable short-term performance. The combined LSTM-AT-DP model is the most effective solution, delivering superior performance across all prediction windows. It achieves R² improvements of 1.46%, 0.62%, and 5.53% for 6-, 12-, and 24-hour forecasts, respectively. The largest gain appears in 24-hour predictions, where it reduces MAE by 1.4276 and RMSE by 1.8759, demonstrating the synergistic benefits of integrating attention mechanisms and denoising. Although Bi-LSTM offers long-term advantages, the LSTM-AT-DP combination provides more reliable and consistent improvements across all prediction horizons.

4.4.3 Optimization evaluation of AT and DP modules on radiation prediction performance of LSTM models

As shown in Table 7, radiation prediction performance varies considerably across model architectures and time horizons. The Bi-LSTM model performs inconsistently. For 6-hour predictions it improves on the baseline, increasing R² by 1.25 percentage points and reducing both MAE and RMSE. However, its accuracy deteriorates across all metrics for 12-hour forecasts. The model regains its advantage for 24-hour forecasts, delivering an improved R² value and substantial reductions in error metrics. In comparison, the AT-enhanced LSTM model demonstrates more stable improvements across all prediction windows. It consistently increases R² values and achieves significant decreases in MAE and RMSE across 6-, 12-, and 24-hour forecasts. The DP module exhibits comparable yet slightly less pronounced enhancement effects, especially in longer-term predictions. The integrated LSTM-AT-DP model is the most robust solution, with improvements that surpass those of its individual components. This combined architecture achieves the highest R² values and the most substantial reductions in error metrics across all time horizons. Its 6-hour prediction performance is particularly noteworthy, as the RMSE reduction clearly exceeds what either module achieves independently. These results demonstrate the complementary nature and synergistic effects of combining attention mechanisms with denoising for radiation prediction tasks.

4.5 Performance comparison of the LSTM-AT-DP model with classical models

To validate the efficacy of the enhanced LSTM-AT-DP model, this study performed comparative experiments against several classical baseline models, including RNN, LSTM, and GRU architectures. The experimental results demonstrate that our proposed model achieves superior performance across all evaluation metrics.

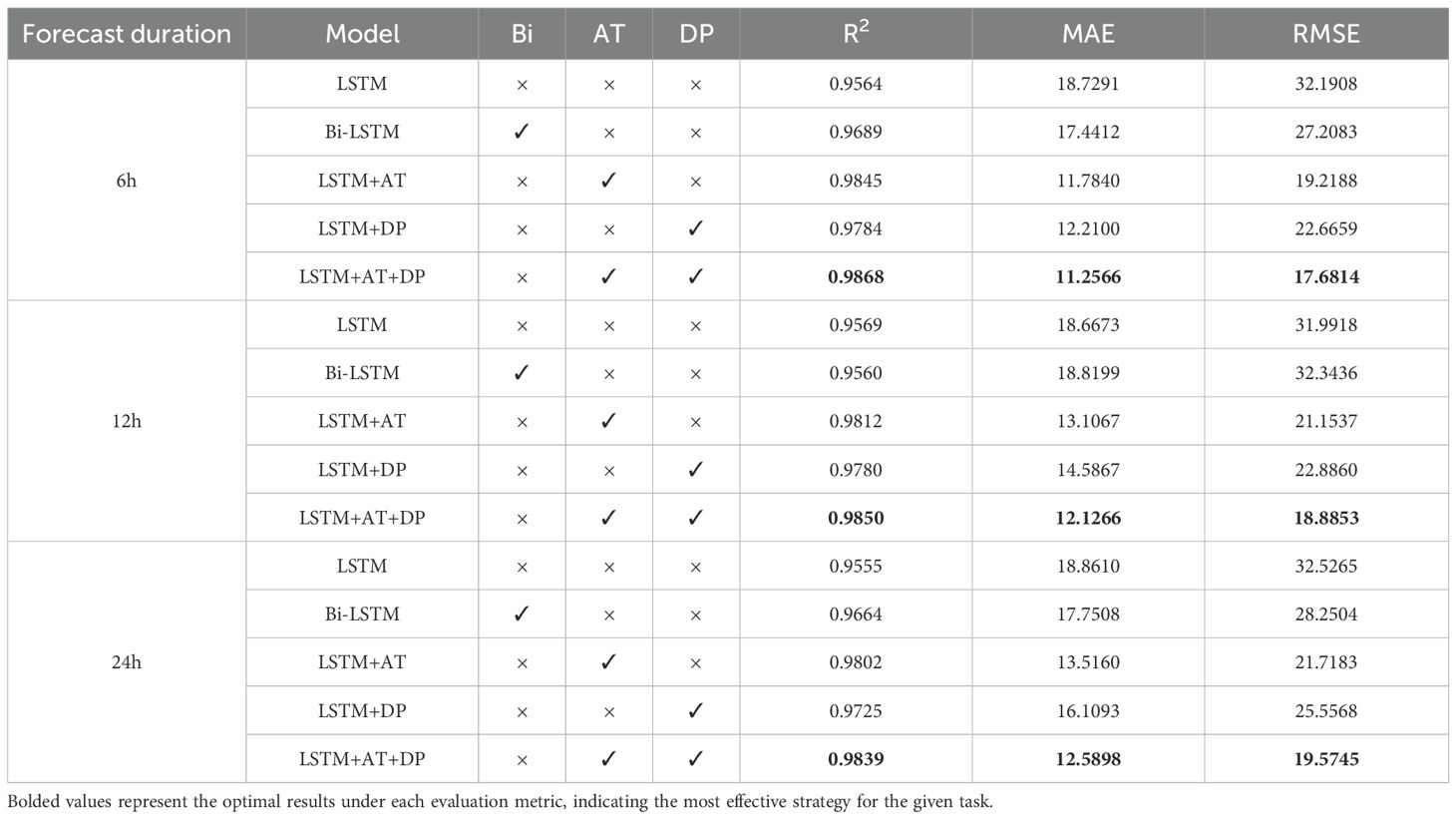

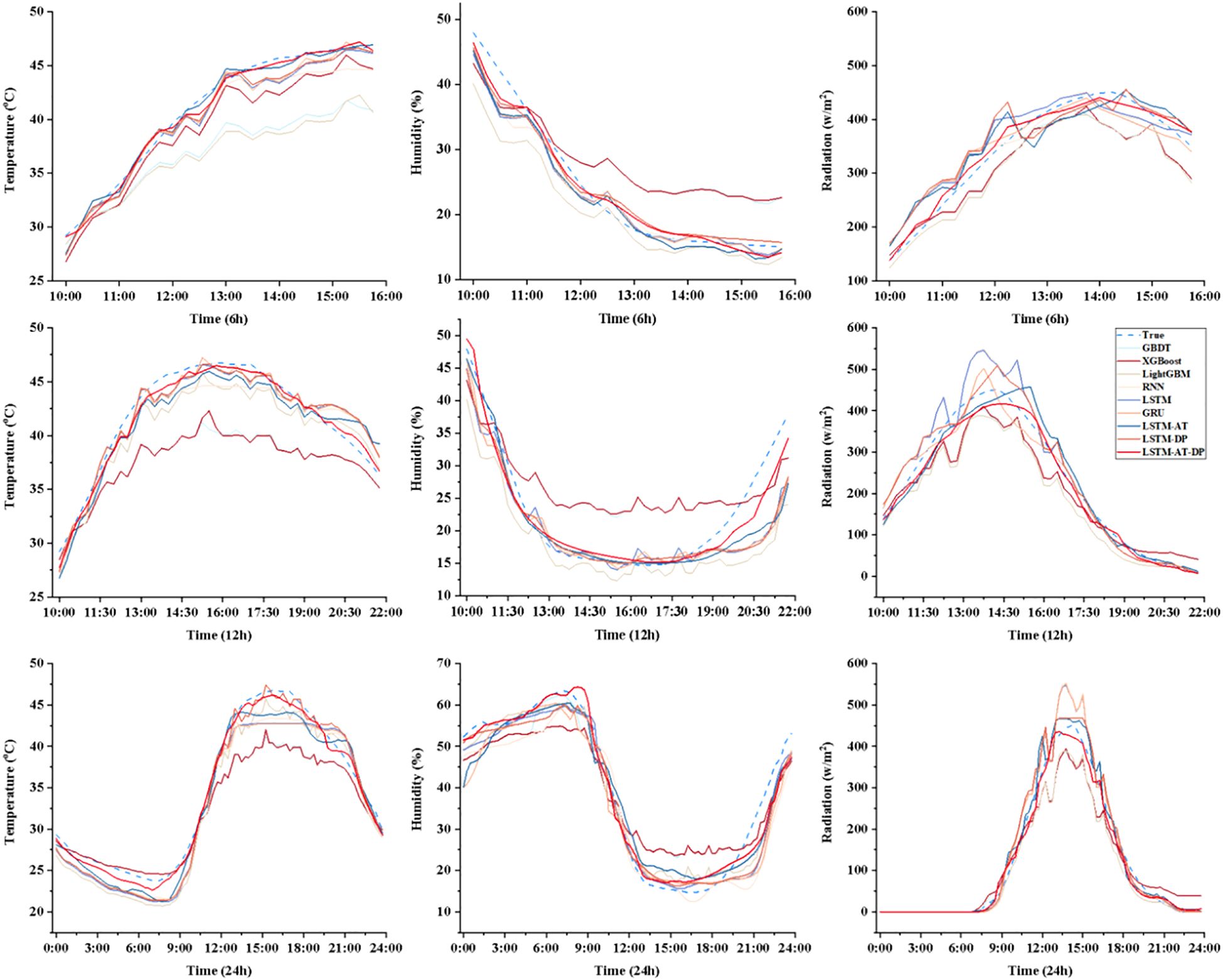

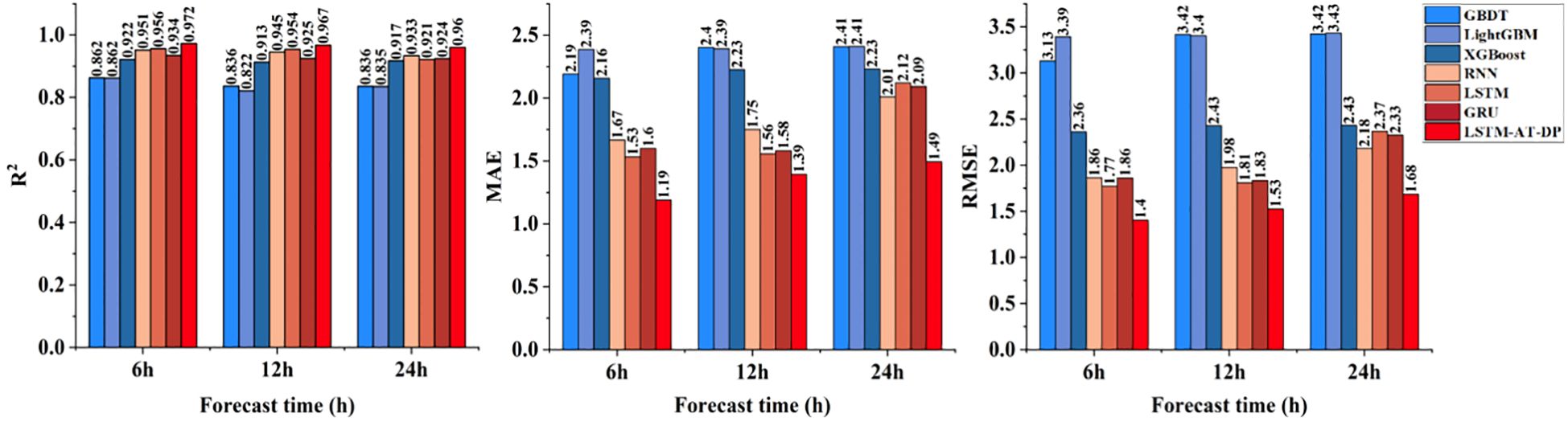

4.5.1 Comparison and evaluation of temperature prediction performance of LSTM-AT-DP model and classical models

As shown in Figure 9, the LSTM-AT-DP model achieved R² values of 0.9723, 0.9673, and 0.9602 for 6-hour, 12-hour, and 24-hour predictions, respectively, representing improvements of 1.7% to 4.2% compared to the traditional LSTM model. MAE and RMSE also fell substantially, by 22.4% to 29.6% and 20.7% to 28.8%, respectively. Notably, for 24-hour prediction the LSTM-AT-DP model achieved an MAE of 1.4930, well below the LSTM (2.1202) and GRU (2.0924) benchmarks. This performance improvement can be attributed to the model’s architecture: the AT module mitigates gradient decay issues in long-term predictions by dynamically focusing on key temporal features, while the DP module provides robust multi-scale signal processing capabilities. The synergistic integration of these components enables the LSTM-AT-DP model to achieve higher accuracy and stability in temperature forecasting tasks.

Figure 9. Comparison of the temperature prediction performance of the LSTM-AT-DP model and the classical model.

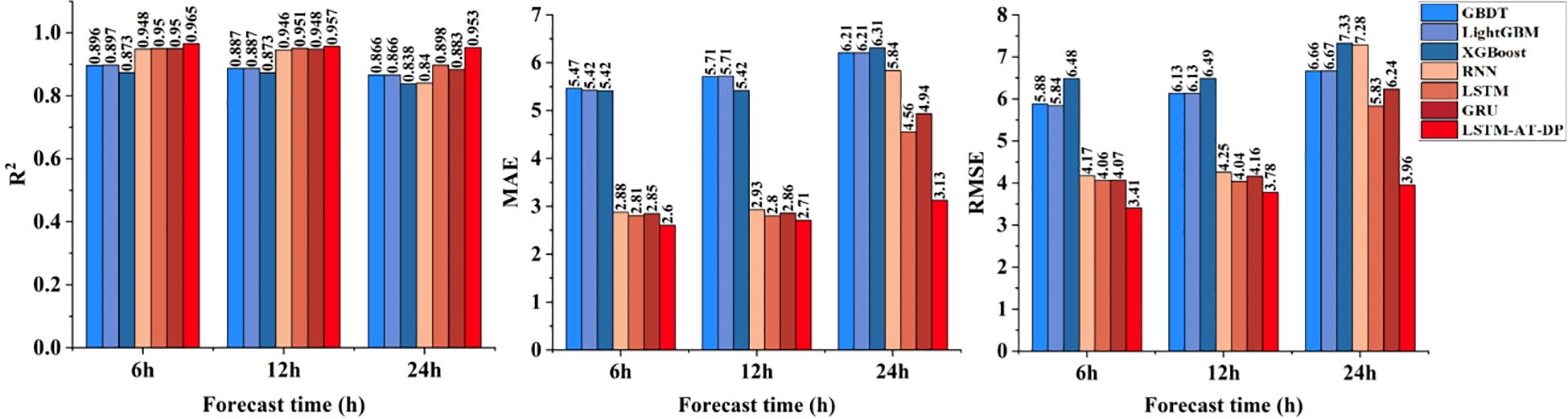

4.5.2 Comparison and evaluation of humidity prediction performance of LSTM-AT-DP model and classical models

Figure 10 shows a comprehensive performance comparison of various models in a humidity prediction task characterized by highly nonlinear dynamics and significant noise interference. The LSTM-AT-DP model performs exceptionally well, achieving an R² value of 0.9529 for 24-hour predictions, representing a 6.2% improvement over the baseline LSTM model’s R² value of 0.8976. Notably, the MAE is reduced to 3.1278, a significant 31.4% decrease compared to the LSTM baseline model. Comparing the prediction curves shows that the LSTM-AT-DP model exhibits significantly reduced prediction fluctuations in low signal-to-noise ratio regions, confirming the DP module’s exceptional noise separation capability. These results collectively indicate that the LSTM-AT-DP model possesses a clear advantage in handling noisy humidity prediction scenarios, achieving more accurate predictions of humidity changes through its effective noise suppression and feature extraction mechanisms.
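The 24-hour humidity R² gain is quoted as 5.53% in the ablation test and as 6.2% here. The two figures are consistent if the former is read as an absolute difference in R² (in percentage points) and the latter as a change relative to the baseline value; this reading is an interpretation based on the reported numbers. A short check, with hypothetical helper names:

```python
def point_gain(new: float, old: float) -> float:
    """Absolute difference, expressed in percentage points."""
    return 100.0 * (new - old)


def relative_gain_pct(new: float, old: float) -> float:
    """Change relative to the baseline value, in percent."""
    return 100.0 * (new - old) / old


r2_model, r2_lstm = 0.9529, 0.8976  # 24-hour humidity R² values from the text
points = point_gain(r2_model, r2_lstm)
relative = relative_gain_pct(r2_model, r2_lstm)
print(round(points, 2), round(relative, 1))  # 5.53 6.2
```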

Figure 10. Comparison of the humidity prediction performance of the LSTM-AT-DP model and the classical model.

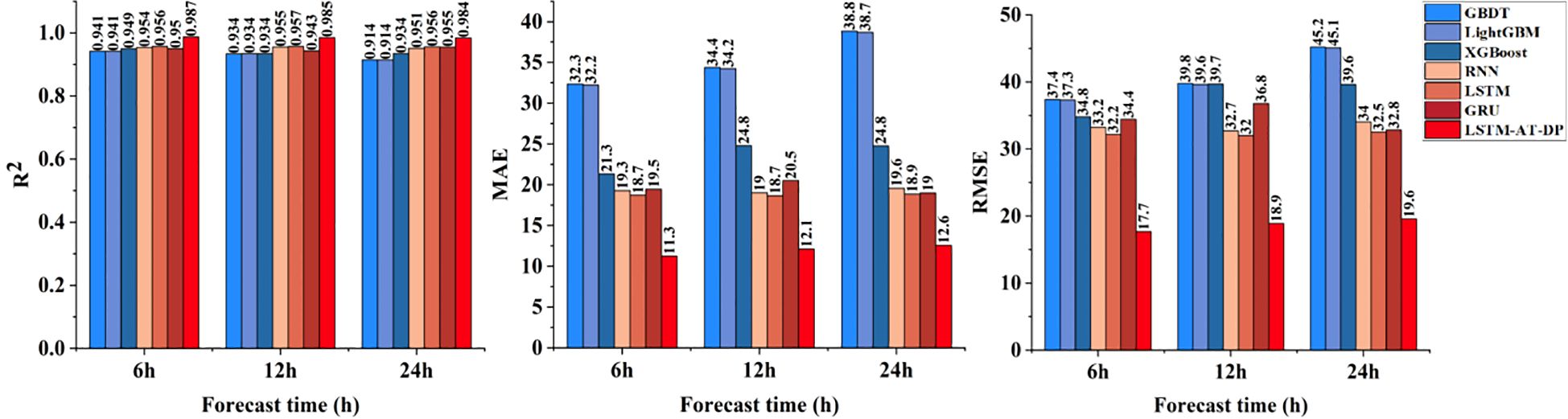

4.5.3 Comparison and evaluation of radiation prediction performance of LSTM-AT-DP model and classical models

Figure 11 demonstrates the outstanding performance of the LSTM-AT-DP model in radiation prediction tasks with frequent mutation characteristics. The model achieved an excellent R² value of 0.9868 in 6-hour predictions, with a corresponding MAE of 11.2566, representing a significant reduction of 39.9% compared to the MAE (18.7291) of the LSTM baseline model. Detailed curve analysis shows that the LSTM-AT-DP model consistently achieves prediction errors below 5% during the midday radiation peak period, significantly outperforming traditional models (error range of 8% to 12%). This performance advantage stems from two core mechanisms: the AT module enhances feature weights during sudden changes, and the DP module improves transient response accuracy through advanced high-frequency signal decomposition techniques. For 24-hour predictions, the model’s MAE is 12.5898, which is 49.2% lower than XGBoost’s MAE (24.7675), and the R² is improved by 5.4%, further validating the superiority of this deep learning architecture in spatio-temporal nonlinear modeling.

Figure 11. Comparison of the radiation prediction performance of the LSTM-AT-DP model and the classical model.

5 Discussion

Figure 12 shows a comprehensive comparison of the predictive performance of the proposed LSTM-AT-DP model and traditional models in facility environments for different environmental parameters (temperature, humidity, radiation) and different forecast lead times (6 hours, 12 hours, 24 hours). A systematic analysis of Figures 8-11 shows that the LSTM-AT-DP model has significant advantages, as follows:

The LSTM-AT-DP model demonstrates excellent temporal stability when the prediction horizon is extended from 6 hours to 24 hours, with only a minimal decline in performance. In temperature prediction, the model exhibits excellent consistency, with an R² fluctuation of only 0.0121, which is 63.5% and 55.8% smaller than that of the LSTM model (0.0346) and the GRU model (0.0274), respectively. In humidity prediction, the model achieves a 24-hour MAE of 3.1278, an improvement of 46.4% over RNN (5.8399), while maintaining an RMSE of 3.9557, which is 32.2% lower than LSTM’s 5.8316. In radiation forecasting, the model showed near-perfect temporal consistency, with R² values for 6-hour (0.9868) and 24-hour (0.9839) predictions differing by less than 0.3%, compared with a 1.6% performance degradation for the XGBoost model. These results collectively validate the model’s stability in multi-variable long-term environmental forecasting.

The LSTM-AT-DP model demonstrates superior cross-variable prediction performance across all three environmental parameters (temperature, humidity, and radiation). For 24-hour predictions, the model achieves MAE values of 1.4930 (temperature) and 3.1278 (humidity), an inter-variable difference of only 1.6348, which is significantly lower than the 2.4352 observed in the baseline LSTM model. This 32.9% reduction in performance variance indicates the model’s enhanced capability to balance the learning of coupling relationships between different environmental factors. In radiation forecasting, the model’s 12-hour MAE of 12.1266 represents a 51.1% improvement over XGBoost (24.7902), while its RMSE of 18.8853 is only 47.6% of that of conventional models. These results provide compelling evidence that the LSTM-AT-DP architecture effectively captures high-frequency transient components while maintaining both high efficiency and accuracy in multi-variable prediction tasks, owing to its feature extraction and noise suppression mechanisms.
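The percentages in this paragraph follow from the reported values by a simple relative-reduction calculation, sketched below. The helper name is hypothetical and all inputs are the figures quoted in the text.

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# 24-hour MAE gap between humidity and temperature for LSTM-AT-DP.
gap_model = 3.1278 - 1.4930
gap_lstm = 2.4352  # corresponding gap reported for the baseline LSTM
gap_reduction = percent_reduction(gap_lstm, gap_model)

# 12-hour radiation MAE: LSTM-AT-DP versus XGBoost.
radiation_reduction = percent_reduction(24.7902, 12.1266)
print(round(gap_model, 4), round(gap_reduction, 1), round(radiation_reduction, 1))
# 1.6348 32.9 51.1
```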

The LSTM-AT-DP model demonstrates significant advantages in modeling different environmental factors. In 24-hour radiation forecasts, the model achieved a MAE of 12.5898, a 49.2% reduction compared to XGBoost (24.7675), while increasing the coefficient of determination R² by 5.4 percentage points. This significant improvement clearly demonstrates the superior ability of deep learning architectures to capture the inherent spatio-temporal nonlinear relationships in complex radiation data. In humidity prediction, the model achieved an R² value of 0.9529 within 24 hours, which is 7.9% higher than GRU (0.8829), while reducing the MAE value by 36.7%. These improvements clearly indicate that the integration of the AT module and DP module effectively enhances feature selection and noise suppression capabilities, enabling more precise identification of key humidity patterns while minimizing interference from noise signals, thereby significantly improving prediction accuracy.

Data analysis indicates that the DP module significantly improves data quality through the synergistic integration of the WTD unit and SW unit. The WTD unit utilizes the time-frequency localization characteristics of the sym4 wavelet basis function to achieve precise separation of signals and noise in facility environmental monitoring data, particularly excelling in suppressing high-frequency noise components. Experimental results show that in radiation prediction tasks, the independent DP module achieved error reductions of 6.5191 W/m² (MAE) and 9.5249 W/m² (RMSE), confirming its dual capabilities in noise elimination and high-frequency signal retention. In 24-hour temperature forecasts, the DP module increased the R² coefficient by 3.33% while reducing MAE and RMSE by 0.5705 and 0.5688, respectively. These metrics collectively validate the module’s exceptional performance in handling non-stationary signals and its robust capability to optimize data quality. The SW unit operates by dividing continuous time series into a structured mapping from historical states to future targets. This architectural approach enables the LSTM network to capture the systematic spatiotemporal dynamics of environmental processes rather than discrete-time features, significantly enhancing the model’s ability to learn complex spatiotemporal dependencies among environmental variables.

By integrating the AT module, the model can dynamically adjust feature weights at critical time points, significantly enhancing its ability to detect sudden environmental events. In experiments verifying humidity forecasts, the AT module reduced the MAE of 24-hour predictions by 36.7% and increased the R² by 5.53%. These metrics confirm the module’s dual functionality: it can both precisely allocate attention to key features and effectively suppress noise, thereby improving prediction accuracy. The AT module automatically enhances feature extraction during crop-sensitive phenological periods by calculating the similarity between Q and K. This architecture ensures that prediction performance remains robust even under low signal-to-noise ratio conditions. Notably, in the 24-hour humidity forecast task, the model incorporating the AT module achieved an MAE of just 3.1278% RH, representing a 36.7% improvement over the traditional GRU model. This comparative advantage fully demonstrates the effectiveness of the AT module in strategic information focusing and noise suppression.
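The weighting by Q-K similarity described above can be illustrated with scaled dot-product attention over a sequence of hidden states. This is one common formulation and is offered only as a sketch; the exact scoring function, dimensions, and names used by the AT module are not restated in this section, so everything below is an assumption.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    query: Vector, keys: Sequence[Vector], values: Sequence[Vector]
) -> Tuple[List[float], List[float]]:
    """Return per-time-step weights and the weighted sum of the values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context


# Hypothetical hidden states at three time steps; the third resembles the query most.
hidden = [(1.0, 0.0), (0.0, 1.0), (3.0, 0.0)]
weights, context = attention(query=(1.0, 0.0), keys=hidden, values=hidden)
```

The time step whose key is most similar to the query receives the largest weight, which is the behavior visualized as the dark red regions of the attention heatmap.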

The synergistic integration of the DP module and the AT module has effectively broken through the bottleneck of declining accuracy in long-term forecasts. The DP module ensures high signal-to-noise ratio input data, while the AT module dynamically enhances feature representation capabilities at critical time points through adaptive weight allocation. This dual optimization strategy significantly alleviates the error propagation issue in sequence prediction. Experimental results show that compared to the baseline LSTM model, the integrated model achieves R² improvements of 3.89%, 5.53%, and 2.84% in 24-hour temperature, humidity, and radiation forecasts, respectively, while reducing MAE by 29.6% to 39.9%. Notably, the model’s ability to detect sudden events has significantly improved: for 6-hour radiation forecast peaks, the MAE percentage has dropped to 3.2%–4.8%, representing a significant improvement over the 8%–12% range observed in traditional models. These findings not only validate the accuracy improvements achieved through the synergistic effects of DP-AT but also highlight the model’s stability and ability to capture sudden events.

The multi-factor time series modeling method combines multivariate reconstruction by the DP module with deep feature extraction by LSTM-AT to establish a unified representation of the coupled relationships among temperature, humidity, and radiation. The model maintains excellent stability, with R² values for all three factors remaining above 0.95 in both 6-hour and 24-hour forecasts. Compared to the baseline model, cross-factor performance variability is significantly reduced (LSTM-AT-DP exhibits 1.46% variability in humidity forecasts, while GRU shows 7.9%). Under low signal-to-noise ratio conditions, the synergistic effect of the DP module and AT module ensures robust performance: the DP module maintains data reliability by effectively suppressing noise, while the AT module dynamically optimizes feature weights. This combination achieves an MAE as low as 3.1278% RH in 24-hour humidity forecasts, representing a 36.7% improvement over GRU. These results validate the model’s ability to balance learning cross-factor coupling relationships and capturing high-frequency mutations. In 6-hour radiation prediction, the LSTM-AT-DP model achieved an R² of 0.9868 and an MAE of 11.2566 W/m², the latter representing a 39.9% improvement over the LSTM model. This indicates that the AT module focuses more on transient events, while the DP module enhances the resolution of high-frequency signal components through advanced decomposition techniques.

6 Conclusion

This study proposes a model based on LSTM-AT-DP, which achieves significant improvements in accuracy and stability in time series prediction of multiple factors (temperature, humidity, and radiation) in controlled agricultural environments through the collaborative optimization of the DP module and the AT module. The DP module effectively removes data noise and improves data quality using WTD and SW units, thereby providing structurally optimized inputs for subsequent modeling. Experimental results show that in the temperature prediction task, the independent DP module improves the 24-hour prediction R² metric by 3.33%, confirming its exceptional adaptability to non-stationary signals. Meanwhile, the AT module amplifies feature representations at critical time points through dynamic weight allocation, significantly enhancing the model’s ability to detect sudden environmental changes. This effect was quantitatively verified in a humidity prediction task, where the integration of the AT module reduced the 24-hour MAE metric by 36.7%, fully demonstrating its accuracy in focusing on time-sensitive information.

Comprehensive experimental evaluations demonstrate that the proposed LSTM-AT-DP architecture outperforms traditional models across all evaluation metrics (R², MAE, and RMSE), particularly in long-term forecasting tasks. Specifically, the model achieved R² values of 0.9602 for temperature, 0.9529 for humidity, and 0.9839 for radiation in 24-hour forecasts, representing improvements of 3.89%, 5.53%, and 2.84%, respectively, compared to the baseline LSTM model. More notably, the model achieved significant error reduction, with the MAE for temperature prediction decreasing from 2.1202 to 1.4930 (a reduction of 29.6%) and the RMSE decreasing from 2.3673 to 1.6843 (a reduction of 28.9%). These quantitative results clearly demonstrate the model’s exceptional ability to handle multi-factor coupling relationships and noise interference in controlled agricultural environments. Especially under harsh conditions with low signal-to-noise ratios, the model still maintains robust prediction accuracy—this finding provides empirical validation for the synergistic optimization between the noise suppression DP module and the feature enhancement AT module.

In summary, the LSTM-AT-DP model provides a solid technical foundation for precise environmental control in facility agriculture. The model’s superior performance in terms of prediction accuracy (parameter-average R² improved by 4.09%), temporal stability (MAE reduced by 39.9%), and transient event detection capability (peak error reduced to 3.2–4.8%) has been rigorously validated through comprehensive experimental data. In the future, the application of this model can be further expanded to different types of facilities and crop varieties to verify its universality and promotional value. At the same time, more advanced deep learning technologies and data processing methods can be explored to further improve the model’s predictive performance and practicality.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

LL: Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing. HS: Formal Analysis, Methodology, Writing – review & editing. ZW: Investigation, Validation, Writing – review & editing. SW: Formal Analysis, Investigation, Writing – review & editing. CL: Data curation, Writing – review & editing. MD: Conceptualization, Funding acquisition, Project administration, Resources, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research and/or publication of this article. This research was supported by: The Seventh Division - Shihezi University Science and Technology Innovation Special Project (QS2023012). The earmarked fund for XJARS (XJARS-07). The Xinjiang Uygur Autonomous Region Key R&D Project (2022B02032-3).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

References

Bournet, P.-E. and Rojano, F. (2022). Advances of computational fluid dynamics (CFD) applications in agricultural building modelling: Research, applications and challenges. Comput. Electron. Agric. 201, 107277. doi: 10.1016/j.compag.2022.107277

Chen, D., Zhang, J., and Jiang, S. (2020). Forecasting the short-term metro ridership with seasonal and trend decomposition using loess and LSTM neural networks. IEEE Access. 8, 91181–91187. doi: 10.1109/ACCESS.2020.2995044

Cheng, W., He, J., and Liu, Z. (2021). Evaluating how the temperature changes in a sunken solar greenhouse. Engenharia Agrícola 41, 279–285. doi: 10.1590/1809–4430Eng.Agric.v41n3P279-285/2021

Dong, H., Liu, S., Liu, D., Tao, Z., Fang, L., Pang, L., et al. (2024). Enhanced infrasound denoising for debris flow analysis: Integrating empirical mode decomposition with an improved wavelet threshold algorithm. Measurement. 235, 114961. doi: 10.1016/j.measurement.2024.114961

Fan, D., Sun, H., Yao, J., Zhang, K., Yan, X., and Sun, Z. (2021). Well production forecasting based on ARIMA-LSTM model considering manual operations. Energy. 220, 119708. doi: 10.1016/j.energy.2020.119708

Gao, M., Wu, Q., Li, J., Wang, B., Zhou, Z., Liu, C., et al. (2023). Temperature prediction of solar greenhouse based on NARX regression neural network. Sci. Rep. 13, 1563. doi: 10.1038/s41598-022-24072-1

Gao, P., Tian, Z., Lu, Y., Lu, M., Zhang, H., Wu, H., et al. (2022). A decision-making model for light environment control of tomato seedlings aiming at the knee point of light-response curves. Comput. Electron. Agric. 198, 107103. doi: 10.1016/j.compag.2022.107103

Guo, M.-H., Xu, T.-X., Liu, J.-J., Liu, Z.-N., Jiang, P.-T., Mu, T.-J., et al. (2022). Attention mechanisms in computer vision: A survey. Comput. Visual Media. 8, 331–368. doi: 10.1007/s41095-022-0271-y

Hochreiter, S. and Schmidhuber, J. (1997). Long short-term memory. Neural Computation. 9, 1735–1780. doi: 10.1162/neco.1997.9.8.1735

Hong, D., Li, F., Ma, J., Man, K. L., Wen, H., and Wong, P. (2025). Temporal environment informed photovoltaic performance prediction framework with multi-spatial attention LSTM. Solar Energy 296, 113550.

Hou, J., Wang, Y., Hou, B., Zhou, J., and Tian, Q. (2023). Spatial simulation and prediction of air temperature based on CNN-LSTM. Appl. Artif. Intelligence. 37, 2166325. doi: 10.1080/08839514.2023.2166235

Hu, F., Yang, Q., Yang, J., Shao, J., and Wang, G. (2025). An adaptive rainfall-runoff model for daily runoff prediction under the changing environment: Stream-LSTM. Environ. Model. Software 191, 106524. doi: 10.1016/j.envsoft.2025.106524

Islam, M. N., Iqbal, M. Z., Ali, M., Gulandaz, M. A., Kabir, M. S. N., Jang, S. H., et al. (2023). Evaluation of a 0.7 kW suspension-type dehumidifier module in a closed chamber and in a small greenhouse. Sustainability. 15, 5236. doi: 10.3390/su15065236

Jeong, J. M., Mo, Y., Hyun, U. J., and Jeung, J. U. (2020). Identification of Quantitative Trait Loci for Spikelet Fertility at the Booting Stage in Rice (Oryza sativa L.) under Different Low-Temperature Conditions. Agronomy. 10, 1225. doi: 10.3390/agronomy10091225

Jiang, T., Yu, C., Huang, K., Zhao, J., and Wang, L. (2023). Bridge signal denoising method combined VMD parameters optimized by Aquila optimizer with wavelet threshold. China J. Highway Transport 36, 158–168. doi: 10.19721/j.cnki.1001-7372.20240910.1038.054

Jiang, S., Zhang, H., Lu, Y., Han, R., Gao, Y., Wang, Y., et al. (2025). SNR enhancement for Raman distributed temperature sensors using intrinsic modal functions with improved adaptive wavelet threshold denoising. Optics Lasers Engineering. 189, 108949. doi: 10.1016/j.optlaseng.2025.108949

Kow, P. Y., Lee, M. H., Sun, W., Yao, M. H., and Chang, F. J. (2022). Integrate deep learning and physically-based models for multi-step-ahead microclimate forecasting. Expert Syst. Appl. 210, 118481. doi: 10.1016/j.eswa.2022.118481

Li, H., Guo, Y., Zhao, H., Wang, Y., and Chow, D. (2021). Towards automated greenhouse: A state of the art review on greenhouse monitoring methods and technologies based on internet of things. Comput. Electron. Agric. 191, 106558. doi: 10.1016/j.compag.2021.106558

Li, S., Zhao, Q., Liu, J., Zhang, X., and Hou, J. (2025). Noise reduction of steam trap based on SSA-VMD improved wavelet threshold function. Sensors. 25, 1573. doi: 10.3390/s25051573

Liu, R., Li, M., Guzmán, J. L., and Rodríguez, F. (2021). A fast and practical one-dimensional transient model for greenhouse temperature and humidity. Comput. Electron. Agriculture. 186, 106186. doi: 10.1016/j.compag.2021.106186

Liu, Y., Zhao, C., and Huang, Y. (2022). A combined model for multivariate time series forecasting based on MLP-feedforward attention-LSTM. IEEE Access. 10, 88644–88654. doi: 10.1109/ACCESS.2022.3192430

Mahmood, F., Govindan, R., Bermak, A., Yang, D., and Al-Ansari, T. (2023). Data-driven robust model predictive control for greenhouse temperature control and energy utilisation assessment. Appl. Energy. 343, 121190. doi: 10.1016/j.apenergy.2023.121190

Mao, C. and Su, Y. (2024). CFD based heat transfer parameter identification of greenhouse and greenhouse climate prediction method. Thermal Sci. Eng. Progress. 49, 102462. doi: 10.1016/j.tsep.2024.102462

Maraveas, C. and Bartzanas, T. (2021). Application of Internet of Things (IoT) for optimized greenhouse environments. AgriEngineering. 3, 954–970. doi: 10.3390/agriengineering3040060

Mehdizadeh, S. (2018). Assessing the potential of data-driven models for estimation of long-term monthly temperatures. Comput. Electron. Agric. 144, 114–125. doi: 10.1016/j.compag.2017.11.038

Ritter, F. (2023). A procedure to clean, decompose, and aggregate time series. Hydrol. Earth Syst. Sci. 27, 349–361. doi: 10.5194/hess-27-349-2023

Sansa, I., Boussaada, Z., Mazigh, M., and Bellaaj, N. M. (2020). “Solar radiation prediction for a winter day using ARMA model,” in 2020 6th IEEE International Energy Conference (ENERGYCon). (Gammarth, Tunisia), 326–330. doi: 10.1109/ENERGYCon48941.2020.9236541

Subahi, A. F. and Bouazza, K. E. (2020). An intelligent IoT-based system design for controlling and monitoring greenhouse temperature. IEEE Access 8, 125488–125500. doi: 10.1109/ACCESS.2020.3007955

Tang, W., Guo, H., Baskin, C. C., Xiong, W., Yang, C., Li, Z., et al. (2022). Effect of light intensity on morphology, photosynthesis and carbon metabolism of alfalfa (Medicago sativa) seedlings. Plants-Basel. 11, 1688. doi: 10.3390/plants11131688

Tian, Y., Xu, Y., and Zhou, J. (2022). Underwater image enhancement method based on feature fusion neural network. IEEE Access. 10, 107536–107548. doi: 10.1109/ACCESS.2022.3210941

Torres, J. F., Hadjout, D., Sebaa, A., Martínez-Álvarez, F., and Troncoso, A. (2021). Deep learning for time series forecasting: A survey. Big Data. 9, 3–21. doi: 10.1089/big.2020.0159

Umutoni, L., Samadi, V., Vellidis, G., Privette, C., III, Payero, J., and Koc, B. (2025). Decoding time: Unraveling the power of N-BEATS and N-HiTS vs. LSTM for accurate soil moisture prediction. Comput. Electron. Agric. 237, 110614. doi: 10.1016/j.compag.2025.110614

Wang, G., Su, H., Mo, L., Yi, X., and Wu, P. (2024). Forecasting of soil respiration time series via clustered ARIMA. Comput. Electron. Agric. 225, 109315. doi: 10.1016/j.compag.2024.109315

Wu, L., Guo, W., Baoquan, W., Yongzhi, M., Jianming, W., Baopeng, L., et al. (2024). High voltage shunt reactor acoustic signal denoising based on the combination of VMD parameters optimized by coati optimization algorithm and wavelet threshold. Measurement. 224, 113854. doi: 10.1016/j.measurement.2023.113854

Yang, Y., Gao, P., Sun, Z., Wang, H., Lu, M., Liu, Y., et al. (2023). Multistep ahead prediction of temperature and humidity in solar greenhouse based on FAM-LSTM model. Comput. Electron. Agric. 213, 108261. doi: 10.1016/j.compag.2023.108261

Zhang, G., Ding, X., He, F., Yin, Y., Li, T., Ren, J., et al. (2024). Predicting greenhouse air temperature using LSTM-AT. Trans. Chin. Soc. Agric. Eng. (Transactions CSAE). 40, 194–201.

Zhang, G., Ding, X., Li, T., Pu, W., Lou, W., and Hou, J. (2020). Dynamic energy balance model of a glass greenhouse: An experimental validation and solar energy analysis. Energy. 198, 117281. doi: 10.1016/j.energy.2020.117281

Keywords: LSTM, attention mechanism, wavelet threshold denoising, multi-factor time series forecasting, environmental prediction

Citation: Liang L, Shi H, Wang Z, Wang S, Li C and Diao M (2025) Research on time series prediction model for multi-factor environmental parameters in facilities based on LSTM-AT-DP model. Front. Plant Sci. 16:1652478. doi: 10.3389/fpls.2025.1652478

Received: 23 June 2025; Accepted: 29 July 2025; Published: 18 August 2025.

Edited by:

Xing Yang, Anhui Science and Technology University, China

Reviewed by:

Nagaraj R., Sri Sairam Engineering College, India

Amrita Sarkar, Birla Institute of Technology, Mesra, India

Copyright © 2025 Liang, Shi, Wang, Wang, Li and Diao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ming Diao, diaoming@shzu.edu.cn
